1. Introduction

Standardization in industries is an important aspect of sharing information and facilitating cooperation. When one talks about safety, security, risk, or performance, everyone understands what is being talked about. Everyone grasps what the words mean in their own perception and how they can be understood. However, a discussion on what these concepts really are and how one should study or deal with them is likely to end up in ontological and semantic debates due to different experiences, views, perceptions, and understanding. Even though much research has been devoted to studies of safety, the concept in itself is undertheorized [1].

Companies in industrial parks are connected not only by mutual interests such as technological similarities and logistics advantages, but also by the shared responsibility of obtaining and sustaining safety and security standards.

For instance, Chemical Industrial Parks are an important element of the development and economic growth in countries worldwide. They benefit from a common infrastructure, minimal utilities costs, and the presence of complementary products and services, and they function as force multipliers for the surrounding region. When properly managed, they deliver economies of scale and bring benefits to the region; the more standardized they are, the greater these benefits.

However, at the same time, these parks are high-risk areas, often showing great vulnerabilities and the potential for domino effects when things go wrong. As such, accident prevention and emergency response are crucial capabilities in securing the economic benefits these clusters generate. Similar to the importance of standardization of products and services for economic growth, it is important to have a common understanding of concepts such as safety and security to maximize efficiency and effectiveness in preventing disaster and facilitating health, safety, and the protection of the environment [2,3,4].

Consequently, cooperation on topics concerning safety and security is highly relevant. In industrial parks, it is therefore important that managers from the different companies belonging to the park have the same understanding of the concepts of risk, safety, and security. Only when a shared understanding of these concepts is present are the organizations belonging to an industrial park able to cooperate truly and optimally in the field of risk, safety, and security [5,6].

Risk and safety are often presented as antonyms, but there is a growing understanding that this is only partially true and not in line with the most modern, more encompassing views on risk and safety. Risk can also be considered as something positive, and the common idea of expressing risk using probabilities is too narrow [1,7,8,9,10,11]. Likewise, safety and security are often seen as completely different and separate fields of expertise and study, while other views underline the similarities between the two concepts and how they can be regarded as synonyms [12].

Science, including the domain of risk and safety, is served by clear and commonly agreed-upon definitions of concepts and by well-defined parameters, since such precise definitions allow for standardization, enhance communication, and allow for an unambiguous sharing of knowledge. As Brazma (2001) says:

“Our ability to combine information from independent experiments depends on the use of standards analogous to manufacturing standards, needed for fitting parts from different manufacturers” [13].

Standardization in science, in its turn, allows for a more exact measurement of observations, and this opens the opportunity of increasing the accuracy of analysis, which then can be used to develop more sound theories and practices. However, when studying in the field of safety science (a relatively young field of science), it is hard to find clear-cut definitions that indisputably link safety, security, performance, and risk. When reviewing the safety science literature, the question “what is safety” is answered in many ways, and it is very hard to find a clear definition of its opposite, which we could also name ‘unsafety’.

Although the field of safety science is relatively novel as a separate and independent domain of study, many theories, models, and metaphors have already been proposed, attempting to describe what safety is and how it can be achieved. Often these theories are drawn from the investigation of, and lessons learned from, catastrophes and disasters. As such, these theories are often justified by explaining how these mishaps came about. Therefore, in general, efforts to improve the safety of systems have mostly been driven by hindsight, both in research and in practice [14]. Most problematic is that safety is mostly defined by its opposite, for instance, as being the antonym of risk.

As a consequence, a look at the concepts of risk, safety, and security in scientific literature shows that safety and security science has no truly commonly accepted and widely used semantic foundation that provides common, unambiguous, clear, and standardized definitions. Likewise, such a survey confirms that there is a lack of standardization when it comes to defining the opposite, the antonyms that indicate a lack of safety or security. Terms like accident, incident, mishap, disaster, and catastrophe are often used, indicating losses. Therefore, these words could be considered to be the opposite of safety. Unfortunately, they all have different and sometimes specific meanings depending on the persons or fields of knowledge that use these commonly employed words. They are often more related to the level of consequences than to the nature or origin of the losses incurred. As such, it is very difficult to benchmark safety performance in industrial parks and compare safety performance in an objective way.

For the antonym of security, it is even harder to find a commonly used word covering the subject. A short survey on the internet to find the antonym for the word security yields the following words: “break”, “disagreement”, “endangerment”, “harm”, “hurt”, “injury”, “danger”, “insecurity”, “peril”, “trouble”, “uncertainty”, “worry”; all words that are equally valid to describe “unsafety”. When looking up the meaning of the word insecurity in the Cambridge dictionary, the first meaning that is proposed is “a feeling of lacking confidence and not being sure of your own abilities or of whether people like you”, “a lack of confidence”. This is hardly what people generally think of when talking about security issues in safety and security science today. A second possible meaning seems to be closer to the subject at hand: “the quality of not being safe or strong”; “a lack of safety”. Here, as well, it is difficult to make a distinction between safety and security.

A perfect word to indicate a lack of safety would be “unsafety”, and for the antonym of security, “unsecurity” is a clear option, although both terms are less used in scientific literature, as is indicated in the following Table 1.

Furthermore, several languages that are used around the globe, e.g., Italian, Spanish, German, Chinese, and Russian, have only one word for both safety and security. It seems that more clarity about both concepts is indeed needed in general and in scientific literature in particular.

So, how do these concepts relate to each other? How can a modern view on risk, safety, and security help in understanding and in dealing with the issues related to these concepts? How can it impact safety and security in industrial parks? These are the questions this article tries to tackle by looking at the historical evolution these concepts have endured. As a consequence, this paper proposes a set of fundamental definitions of the concepts “risk”, “safety” and “security”, where the similarities and differences become immediately apparent. From a scientific perspective, these definitions and how these concepts are linked intend to, at the same time, provide a semantic and also an ontological foundation for safety and security science.

In Section 2, we will discuss and elaborate on the evolving perceptions regarding risk, safety, and security based on a concise etymological and etiological study of these concepts. Building on the findings of this study, we will propose an ontological and semantic foundation for safety science in Section 3, followed by a discussion in Section 4 and our conclusions in Section 5.

2. Evolving Perceptions Regarding Safety (Science), Risk (Management) and Security

Safety, security, and risk, but also performance, are concepts that are becoming increasingly important in our complex and fast-changing society. From a broad perspective, the concepts of risk and safety are tightly coupled and have known similar evolutions in their development and in how people understood these concepts. The evolution of how people have dealt with risk and safety is also very much comparable. Safety and risk are often perceived in a similar way and are regularly used as antonyms. Approached from this limited perspective, risky often means unsafe and safe often means without or protected from risk (as indicated in many dictionary definitions of safety). However, in recent years, different perspectives have emerged, where risk and safety are not necessarily opposing concepts. Moreover, when looking at the past, one can see that ideas about safety and risk have evolved in a very analogous way and for comparable reasons, expanding the view on these concepts. Therefore, it is interesting to have a closer look at these two concepts and discover their etymology and etiology.

2.1. A Historical Perspective on Risk Management, the Etymology of Risk and Its Etiology

2.1.1. Ancient Times

For thousands of years, people considered much of what happened to them as the will and acts of the gods [15]. So, the general idea was that whatever one tried, things finally happened by the will of the gods, and there was nothing to do about it but to accept it.

However, this does not mean that concepts of risk and safety were strange to people. In their article “Risk analysis and risk management: an historical perspective”, Covello and Mumpower [16] describe how, in the Tigris-Euphrates valley, about 3200 B.C., a group of people called the Asipu already offered consultancy services related to risk and safety. The Asipu would analyze and interpret alternative options regarding important decisions to be taken and, “guided by the gods”, would recommend the most favorable options to pursue. They even etched their final reports on clay tablets. As such, these reports are the first recorded form of risk analysis in the history of mankind [17,18].

2.1.2. The Renaissance and Modern Time Period

Essentially, the concept of risk as we generally use it today saw its appearance with the rise of commerce in the colonial era during the Renaissance, a moment in time where science started to challenge the superstitious beliefs associated with religion. It was a time of an expanding world and trade of new and scarce products transported overseas, which created a new reality. Trade overseas to distant destinations and far away countries was a high-risk endeavor. Huge profits were anticipated, but also equally huge losses were possible.

It is this economic factor that made people become more aware of the concept of managing risks, where the uncertainty regarding gain or loss was very high, and the level of consequences could be immense. Soon, the insurance industry emerged as an effort to manage risk in commerce, covering for possible losses and the consequences of unfortunate events. Wealth was no longer the privilege of the happy few but could be earned by investing in trade and making the right decisions [15,16]. Nevertheless, it took until the work of Pascal in the 17th century to see sudden progress in the understanding of risk and decision making based on numbers.

2.1.3. Twentieth Century

Although the etymological roots of the term risk, in the way that many people understand it today, can be traced back as far as the late Middle Ages, and despite the fact that its practical use emerged during the colonial age, the more modern concepts of risk appeared only gradually, with the transition from a traditional to a modern society. With larger and ever more complex technology systems emerging after the Second World War (e.g., nuclear installations and general aviation), the focus on probability and risk increased and supported a scientific, mathematically-based approach toward risk and risk assessment [19].

Later in the twentieth century, with standards of living quickly rising after World War II, other objectives also became important, and the concept of managing risk expanded from a mathematically-based approach to include more qualitative methods, in order to deal with the achievement of non-financial objectives and to cope with uncertainties that were less easy to quantify. Hence, the origins of operational risk management can be traced back to the discipline of safety engineering, which, in turn, is mainly concerned with the physical harm that may occur as a result of improper equipment or operator performance [20]. Furthermore, the 1970s were a period of heightened public concern about the effects of technology on the environment, mainly regarding health hazards, such as the effects of asbestos or other chemical substances. This concern further increased government attention for risk assessment and risk management regarding health and safety issues [21].

Continuing losses, injuries, and casualties in peacetime operations and exercises, due to accidents, also triggered the US Armed Forces and NASA to develop risk management towards a more comprehensive approach, called Operational Risk Management (ORM) [22,23,24]. They proposed a set of principles, a process, and specific guidelines on how to deal with risk in operations, adapting the world of risk management to the human factor involved in day-to-day operations. However, by the end of the century, further development of the concept of operational risk management expanded the view on risk from a purely loss-and-probability perspective to a more systemic view, shifting attention from the probability of loss to the likelihood of achieving goals. As such, risk management no longer focused solely on the prevention of loss.

In the same period of time, due to scandals such as Barings Bank (1995), the dot-com bubble (1997–2001), and the ENRON Corporation (2001), people became more and more concerned with the management of risk and with good ethical practices in managing organizations, complying with legal and legislative requirements. The idea of risk is closely connected with the human aspiration to control the future, and the idea of ‘risk society’ [25] might suggest a world becoming more hazardous, but this is not necessarily so. Rather, it is a society increasingly preoccupied with the future (and also with the safety of that future), which generates risk awareness [26,27]. However, at that time, the focus was still exclusively on the negative impact of risk at an organizational level, and operational risk scarcely existed as a category of practitioner thinking at the beginning of the 1990s. Nevertheless, by the end of that decade, regulators, financial institutions, and practitioners could talk of little else [28].

As such, it is to be noted that the first risk-related national standard, the Norsk Standard NS5814:1991 concerning risk analysis, was only published in 1991. It was soon followed by other risk-related standards, for instance IEC 300-3-9:1995 regarding the risk analysis of technology systems, or BSI PD 6668:2000 regarding the risk elements of corporate governance [20,29,30,31]. In this timeframe, risk management standards were also developed by the United States Armed Forces, providing a more comprehensive approach to managing (operational) risks, also aimed at achieving objectives safely (e.g., Air Force Pamphlet 91-215, Operational Risk Management (ORM) Guidelines and Tools, 1 July 1998) [32].

2.1.4. Twenty-First Century

The changes that gradually emerged during the last quarter of the 20th century persisted, and an increased understanding of the concept of risk started to grow as modern risk management evolved substantially due to a number of factors, such as the rise of knowledge-intensive work, an expanding view on stakeholders, a growing importance of project management, the expanded use of technology, increased competitive pressure, increased complexity, globalization, and continuing change [20].

This growing concern and increasing awareness regarding risk management at the turn of this century led to the development of a whole range of additional risk management standards. These standards were issued by governments (Canada in 1997, United Kingdom 2000, Japan 2001, and Australia/New Zealand 2004), international institutions (IEEE-USA 2001, CEI/IEC-CH 2001), or professional organizations (IRM/ALARM/AIRMIC-UK 2002, APM-UK 2004, PMI-USA 2004) [33,34,35,36,37,38,39,40,41]. Each of these standards, coming from different perspectives and fields of knowledge, reflects an increased understanding of risk and risk management, proposing different definitions of risk and comparable processes to manage risks. At that moment in time, a shift occurred from a purely negative view on risk, still expressed in the definitions of some of those (older) standards (CAN/CSA-Q850-97:1997 and IEEE 1540:2001), to more neutral or even very broad definitions of risk in the more modern standards [32,36]. Another remarkable aspect of the “newer” definitions of risk is that risk is more explicitly linked to objectives and that the effects of uncertainties on objectives (consequences) can be positive, negative, or both [20].

The beginning of this century is also characterized by international legislation putting a greater emphasis on transparency (e.g., Seveso III), collaboration, inspection, and moral values. All of this is driven by societal pressure due to increased connections between citizens.

Also, in the first decade of this century, and due to a number of scandals similar to ENRON, there was ever-increasing attention for corporate governance and the role of operational risk management. This resulted in the first internationally used comprehensive corporate standard on risk management, the COSO Enterprise Risk Management Integrated Framework (2004) [42,43]. Enterprise Risk Management (ERM), similar to ORM in the military and aviation sectors, is the more organization-wide approach that is needed to cope with the complex realities and awareness of risks for the corporate world in the 21st century. This methodology stands in stark contrast to the segregated silo approach, which is mainly occupied with the assessment of some well-defined risks, for instance in engineering, or which only looks at the financial aspects of risk in corporations, focusing on probabilities and a limited range of consequences, and hence possibly under- or overestimating risks to the entity as a whole [44].

However, the COSO ERM framework, which was mainly developed as an auditing tool to check compliance, failed during the 2008 financial crisis because organizations implementing ERM would still follow the reductionist approach of treating complex matters, such as Collateralized Debt Obligations (CDOs), as if they were the simple stocks and bonds these organizations were used to. This caused the financial institutions to completely lose their ability to assess the risks involved, and the failure showed the need for a truly system-dynamics approach to ERM [45]. Hence, the International Organization for Standardization (ISO) set out to establish a working group of risk management professionals to achieve consistency and reliability in risk management, by creating a standard that would be applicable to all forms of risk and to all kinds of organizations, creating a standardized foundation for risk management [46,47,48,49].

Purdy (2010) states that “Little real progress could be made with the ISO standard until all agreed on a definition of risk that arose from a clear and common understanding of what risk is and how it occurs.” The working group arrived at: “risk is the effect of uncertainty on objectives”. This definition of risk reveals more clearly that managing risk is merely a process of optimization that facilitates the achievement of objectives. Risk treatment is then about changing the magnitude and likelihood of consequences (effects), both positive and negative, to achieve a net growth of gains (value creation) and the maintaining or achievement of objectives, with objectives understood in the broadest sense of the word. Controls are then the outcomes of risk treatment decisions, the purpose of which is to modify risk [49].

The specific way in which risk is regarded by the ISO 31000 standard also broadens the understanding and attention of risk management towards performance instead of solely focusing on compliance or the prevention of loss. Lalonde and Boiral (2012) state that “although this approach may seem relatively conventional, the standard does succeed in integrating into a single concise and practical model a considerable amount of knowledge, accumulated from research on multiple aspects of the field, which is widely scattered in the literature and thus difficult to consider” [50].

The latest changes in the practical understanding of risk and risk management in different sectors and industries have been issued in updates of the COSO and ISO standards. In 2017, the revised COSO framework, called “COSO Enterprise Risk Management: Integrating with Strategy and Performance”, was issued, putting emphasis on the alignment of objectives with the corporate mission, vision, and core values. In 2018, ISO issued a revised version of the ISO 31000 standard. However, to the best of the authors’ knowledge and understanding, these new versions do not add any insight to the understanding of the concept of risk that was not already found in the first version of the ISO standard [51,52].

This overview of the evolution of the concept of risk and risk management is far from complete. Other interesting overviews and reflections on the concept of “risk” can, for instance, be found in Rechard (1999) and Aven (2012) [9,53]. Nevertheless, this and other overviews of historical and recent development trends on risk and risk management indicate a tendency towards more overall, general, holistic concepts, capable of assessing and managing decision problems, crossing traditional scientific disciplines and areas and opening up for new ways of describing/measuring uncertainties other than probability [9].

2.2. Evolving Awareness in Safety Science

Surprisingly little attention has been given to the history of safety science, compared to risk and the other sciences. The oversight may stem from a common assumption that safety is a composite of engineering, bio-medicine, public health, and, more recently, environmental studies [54]. As such, the following sections only try to give an indication of how and why the perceptions regarding safety, and the insights in safety through science, changed over time.

2.2.1. Time Period from the Industrial Revolution Till World War II

Safety science, similarly to risk management, originated from a need to cope with uncertain profit, the failure to maintain possession of valuable assets, and the injury or loss of workforce, particularly losses due to accidents. Therefore, in safety science, scholars have always been searching for a fundamental understanding of why and how accidents happen. In the same way that expanding views impacted the etymology of the concepts of risk and risk management, an ever-increasing awareness and knowledge regarding the concepts of safety and safety management has also impacted the etymology of safety and thinking in safety science.

The industrial revolution and the appearance and use of new technologies, such as steam engines and weaving machines, provoked recurring and severe accidents, damaging valuable assets and causing severe casualties and injuries to workers. In the beginning, these accidents were just seen as setbacks, caused by workers’ behavior and part of the business. However, during the second industrial revolution, which mainly took place in Europe and North America starting at the end of the 19th century, ongoing mechanization and new technological developments were used to develop new industries. With the advent of mass production and production engineering, productivity substantially increased. As a result, life was getting better, incomes were rising, and mortality was declining [55]. These rapid economic, technological, and social changes also triggered the dawn of safety as a science, when occupational safety was developing into a professional field.

Accidents have always been a problem. Yet they did not appear as a major economic and health issue until the early 1800s, when the declining death rate from infectious diseases shifted attention to other causes of mortality [54]. Because accidents in a production line are costly, not only due to the casualties and lost workforce but also because of the loss in production and production capacity, they were a real burden on the profitability of these new factories. At that time, these accidents were responsible for high mortality in the industrial world, leading to a bad reputation. Due to the rising prosperity, this was no longer acceptable or taken for granted. Accidents were no longer considered to be acts of (the) God(s), but were seen as man-made and preventable [56].

So, from the start, awareness about risk and safety and ideas governing risk management, safety management, and safety science were triggered by the likelihood of bad things happening, impacting on the profitability of endeavors related to new emerging sectors such as worldwide trade and mass production. Both approaches (managing risk and managing safety) tried to accommodate for losses that impacted profitability. Risk, as such, became the domain of insurers and the start of a whole financial industry to cope with possible financial losses. Likewise, safety science started with focusing on accidents, injuries, and casualties, how they came about, and what could be done to prevent these mishaps from happening. In fact, in both illustrations of risk and safety, the attention of practitioners drifted away from what people really wanted or needed, which was safeguarding and achieving the objectives of higher financial profit and increased production figures.

One of the first theories concerning safety is about accident proneness. The term accident proneness was coined by psychological research workers in 1926 [57]. According to this theory, some people were considered to be more likely to have accidents than others [58]. Kerr (1957) [59] defines it as follows: “Accident proneness is the constitutional (i.e., permanent) tendency within the organism to engage in unsafe behavior within some stated field of vocational activity”. However, the accident proneness theory only looks at one possible cause of accidents and, therefore, cannot explain accidents in a general manner. It has, therefore, been abandoned.

In the same timeframe, Heinrich observed production facilities to discover trends and patterns in occupational accidents, resulting in Heinrich’s pyramid or triangle [60]. Even today, his conclusions are used as a basis to measure and predict safety in organizations, through parameters such as the Lost Time Injury Rate (LTIR) or time without mishaps. Heinrich also proposed his domino theory on accident causation when studying the cost of accidents and the impact of safety on efficiency, opening up the perspective to the role of management in accident prevention [61]. Heinrich’s domino theory became a basis for many other studies on accident causation and the role of management in accident prevention, dominating the world of safety practitioners well beyond World War II [62].

2.2.2. Time Period of the Sixties, Seventies, and Eighties

Heinrich’s research and work were the foundation for many other researchers, who also incorporated the role of management in their models. For instance, Petersen (1971) [63] developed a model based on “unsafe acts” and “unsafe conditions”, and Weaver (1971) and Bird (1974) [64,65] updated the domino model with more emphasis on the role of management [62,66].

At the beginning of the second half of the twentieth century, Gibson (1961) and Haddon (1970) focused on the causation of injuries, discovering and proposing a formula for injury prevention [67,68]. This shift in focus caused safety science to look at engineering as a way to reduce injuries, leading to safety belts, bumpers, and many other devices capable of absorbing or deflecting energy [54]. This period also saw the introduction of the “hazard”–“barrier”–“target” model and of analysis tools such as Failure Mode and Effect Analysis (FMEA), Hazard and Operability Analysis (HAZOP), and the Energy Analysis approach [66].

Similar to the evolutions in risk management, safety science further evolved as a result of unfortunate events, such as a series of accidents that had a huge impact on society. Names such as Flixborough (1 June 1974), Seveso (10 July 1976), and Three Mile Island (28 March 1979) are ingrained in the history of safety science, resulting in a broader perspective on safety and the advent of more safety regulations. The increased awareness about safety is reflected in increasing political attention for safety-related issues and an increase in associated regulations. It is also clearly demonstrated by the advent of a number of safety-related scientific journals in the last quarter of the twentieth century. As a result of the investigations of these accidents, the awareness of safety practitioners expanded from the role of management to interactions in the entire socio-technical system.

2.2.3. More Major Accidents and Disasters in the 1980s

The socio-technical concept arose in 1949 [69]. However, at that time and in the early fifties, the societal climate was negative towards socio-technical innovation. This climate would only become positive thirty years later [70]. Again, similar to the development of risk management and operational risk, safety science took up this wider organizational perspective on safety issues from the early eighties onwards. For example, Rasmussen’s taxonomy of human error in terms of skill-, rule-, and knowledge-based mistakes, in conjunction with the interaction with technology and its signals, signs, and symbols, expanded the ideas on Human Factors, behavior, and performance. This was exemplified by what happened at Three Mile Island [71]. At the same time, further advances in technology also made safety engineering an indispensable part of safety science, with the development of safety equipment such as safety belts and air bags.

Another result of analyzing, amongst others, the Three Mile Island accident is Charles Perrow’s book, Normal Accidents (1984) [72], in which the “normal accident theory” (NAT) is proposed. It has been particularly influential among researchers concerned with understanding the organizational origins of disasters and the strategies that might be used to make organizations safer [68].

Safety science further developed in the past thirty years as a result of another series of significant accidents and important disasters, such as the disasters of Mexico City (20 November 1984), Bhopal (2–3 December 1984), Challenger (28 January 1986), Chernobyl (26 April 1986), and The Herald of Free Enterprise (6 March 1987), to name some of the most important ones. Each of these accidents shows the complexity of socio-technical systems. As a result, scholars try to model systems in order to predict their behavior. Building on the work of Rasmussen (1983), Reason (1990) proposes the Generic Error Modeling System (GEMS), later to become known as the Swiss Cheese model (of defenses) [73,74,75,76]. Other models that build on the human factor approach are, for instance, the Software-Hardware-Environment-Liveware-Liveware (SHELL) model [77] and the Human Factors Analysis and Classification System (HFACS) [78], the latter building on the work of Reason.

2.2.4. The Last Thirty Years

By the end of the disastrous decade of the eighties, people also looked at human factors and behavior by introducing the notion of safety culture. According to Cooper (2002) [79], “the term safety culture first appeared in the 1987 OECD Nuclear Agency Report on the 1986 Chernobyl disaster [80]. It is loosely used to describe the corporate atmosphere or culture in which safety is understood to be, and is accepted as, top priority” [81]. A more specific approach is the concept of Just Culture, coined by Dekker [82,83]. Furthermore, the concepts of ‘High Reliability Organizations’ [84,85,86] and ‘Resilience Engineering’ [14,87] were introduced, looking at the whole organization.

Recent years have seen a whole range of models that try to model the taxonomy and structure of accidents. Examples are the Systems Theoretic Accident Modelling and Processes model (STAMP) by Leveson [88] and the Functional Resonance Analysis Method (FRAM) by Hollnagel [89]. The most remarkable distinction is that FRAM is focused on safety instead of unsafety, going beyond the failure concept and the concepts of barriers and controls, and aiming at day-to-day performance [89]. This is remarkable because the idea is a result of finding ways to achieve safety proactively. In his article, ‘Is safety a subject for science’, Hollnagel indicates the difficulty in changing the mindset of the safety science community from what is going wrong to what is going right. This idea was further developed with the advent of the concepts of Safety-I and Safety-II [90,91].

In summary, in the new millennium, safety science, in the same way as risk management, expanded into a more systemic/holistic view with the advent of concepts such as Resilience Engineering [12], High Reliability Organizations [84], Safety-I and Safety-II [91], and Total Respect Management [92,93,94,95]. Ever more, these modern concepts in safety focus on what people want, what their objectives are, and how to achieve them, instead of solely trying to prevent bad things from happening. Likewise, scientists are increasingly looking for significant leading indicators in order to be more proactive in avoiding accidents and achieving what is planned for. The concepts, therefore, also evolved from a purely negative view on risk and safety towards a more encompassing, expanded view that also considers the positive sides of risk and safety. Regarding safety, one could even say that the focus is now more on safety instead of exclusively on unsafety.

2.2.5. A Drastic Change in the Perception of Security

Until the last quarter of the twentieth century, security had been the realm of the protection of valuable assets and persons, guarding these assets and individuals from damage, injury, or being taken away by unauthorized persons. In essence, security was a matter of the national security services protecting national assets and VIPs against actions of foreign nations, of large corporations protecting themselves against actions of competitors, or of wealthy individuals protecting themselves against the actions of criminals. It was not a main concern for the public at large.

This started to change in the second half of the twentieth century, when security was no longer solely needed to protect national interests or the assets of wealthy people but also to counter deliberate and sometimes indiscriminate violence against randomly chosen targets, resulting from actions related to local or global ethnic, social, and political movements and conditions. Actions of these movements range from guerrilla warfare, via selective and individualized acts of violence that can be qualified as terrorism, to categorical, indiscriminate terrorism, mainly targeted at local, mostly government-related institutions and individuals [96].

A key event that changed the world's perception of terrorism and security was the so-called terrorist attacks of September 11th, 2001. Before 9/11, terrorism research was the exclusive domain of non-academic “security experts” and political scientists, of which only a limited number were interested in social-science theory [96]. Up until 9/11, one could say that terrorism was a more or less confined, local, and regional phenomenon of social and political differences, and that, in general, people had a choice whether or not to go to those countries or areas that suffered from these acts of violence. The economic impact of these so-called terrorist actions remained local or regional at best, mainly having an adverse effect on tourism and the local or regional economy. However, the message of 9/11 was that terrorism could happen anywhere and to anyone, and as such, security also became everyone’s concern worldwide. The impact was immediate, affecting travel and commerce worldwide and resulting in global economic and psychological effects.

Another aspect that generates increased interest in security is the invention of the world wide web and the increasing interconnectedness of information technology. Together with the advent of global connectedness and the increasing use of information technology touching on all facets of human life, the illicit use of information technology became more and more of an issue, leading to the specific field of cybersecurity. At first, this was mainly a concern for large institutions and states; in recent years, for instance, there has been an increase in both the frequency and seriousness of cyberattacks targeting critical infrastructures [97]. Nowadays, cybersecurity is also becoming ever more important for individuals, with the arrival of malware, ransomware, and phishing aimed at individuals with criminal intent.

Today, virtually no one is secure against acts of violence, terrorism, and cybercrime. In a way, one could say that international terrorism and cybercrime have lifted the importance of security in society in the past decades, not only because events related to unsecurity became a global risk and everyone’s concern but also because of the immense economic impact terrorism and cybercrime potentially have on society.

2.3. Advanced Perspectives on Risk and Safety

Looking at this historical overview of risk, safety, and security, one can see that these concepts and their understanding have evolved over time, with awareness expanding in response to periods of perceived hardship. These concepts are culminating in more advanced and more holistic perspectives that are very similar, as both risk and safety include positive and negative outcomes to be managed in order to reach an optimum situation. As such, taking risk is aimed at increasing value, and safety is reached when it is likely that this value will be secured by excellent performance (Safety-II thinking). Likewise, running risk is the possibility of losing value, and unsafety is to be expected when it is likely this value will be lost due to all kinds of hazards and threats (Safety-I thinking). Both parts are important and connected in a holistic view on risk, safety, security, and performance.

2.4. Quality of Perception

Risk, safety, and security are complex matters because, even for just one individual, it is very difficult, if not impossible, to discover or take into account all concerned objectives, all effects, and all uncertainties occurring at a given moment. Furthermore, irrespective of the actual conditions and possible future outcomes, risk, safety, and security will always be a construct in people’s minds. Every individual has different sets of objectives, or values the same objectives differently, creating different perceptions of the same reality. This also impacts people in an emotional way and determines the mental models with which they perceive reality. Hence, what is considered safe and secure for one individual, organization, or society can be very unsafe for another when objectives are different or when they are valued differently in equal circumstances. This is also the case in industrial parks, where levels of awareness and importance of safety vary from one organization to another.

Reality, in itself, will always need an interpretation, and therefore, can only be perceived according to the mental models present in the minds of those assessing the situation. So, there will always be a remaining level of uncertainty and residual lack of understanding related to risk, safety, and security. Consequently, the perceptions and mental models of the moment are also to be taken into account when studying risk, safety, and security. As such, organizations with a lower sense of awareness regarding safety and security and with less attention for risk and risk management could present an additional hazard to other organizations within the same industrial park.

Safety science should, therefore, aim for the highest possible quality of perception and methods to develop this quality of perception, where the deviation between reality itself, as it is, and the perception of that reality is the lowest possible. It is the ever-continuing difference and discussion between constructivism and positivism, studying reality or the perception of that reality when socio-technical systems are concerned. However, both approaches can start from the same fundamental ontological and semantic foundation.

3. Understanding and Defining Risk, (Un)Safety and (Un)Security, Proposing an Ontological and Semantic Foundation for Safety and Security Science

As Möller, Hansson, and Peterson [1] mention, the concepts we need to define come in clusters of closely related concepts. Any serious work on definitions should start with a careful investigation of the relevant cluster, in order to determine if and how the concepts can be defined in terms of each other, and on the basis of that, which concept should be chosen as the primary definiendum.

3.1. The Importance of Standardization and Commonly Agreed-Upon Definitions of Concepts

As indicated earlier, science is served with clear and commonly agreed-upon definitions of concepts and well-defined parameters. Having these precise definitions of concepts and parameters allows for standardization, enhancing communication, and allowing for an unambiguous sharing of knowledge and comparison of scientific results. Brazma, a life science researcher, formulates it as follows:

“To obtain new insights and knowledge, huge amounts of information from experiments need to be transformed into executive summaries. To be able to do this, the information needs to meet certain criteria. First, it should include the elements that are essential to understand the phenomena that are investigated. One will need to know what units are used to express measurements. Second, the information should be presented in a way it can be parsed by a computer program correctly, pulling out the relevant descriptions in the correct semantic fields and standard names should be used to describe common properties. Finally, the information should meet with high quality standards to be usable in new contexts. This needs a formal language, and therefore controlled vocabularies and ontologies should be used.” [13]

When concepts can be defined in different ways, giving different meanings to the same concepts, it is much harder to share knowledge and handle large amounts of data because, each time they are used, the concepts and their interpretation need to be explained over and over again. Consequently, these different explanations can also lead to misunderstanding and flawed conclusions when the concepts are changing, not clear, and/or ambiguously defined. As such, it is very likely that a lack of standardization hampers progress in science.

3.2. Foundations and Philosophies of Science

As the purpose is to provide a fundamental way of looking at the concepts of risk and safety, it should not matter from which perspective this foundation is regarded; it should therefore cater to whatever viewpoint one has on science. The proposed foundation should, as such, be equally available to any scholar or academic, independent of the scientific approach or philosophy one adheres to. This is why this article does not wish to expand on the differing viewpoints on science or take any position in this debate. Any true foundation should be inclusive in that regard, and we believe that the proposed foundation remains valid irrespective of the chosen scientific philosophy, as it can be used for either a qualitative or a quantitative approach. Also, the observations concerning the historical evolution of the understanding of the concepts of risk and safety can be seen as an inductive way of reasoning to arrive at the ontological and semantic foundation. At the same time, it is also possible to regard the proposed foundation as the result of deductive reasoning, starting from the etymological overview of risk and safety and the chosen definition of risk.

3.3. A Semantic Foundation for Risk

Semantics is the linguistic and philosophical study of meaning, in language, programming languages, formal logic, and semiotics. It is concerned with the relationship between signifiers—like words, phrases, signs, and symbols—and what they stand for, their denotation.

While standard definitions for safety and security are lacking, this is not so for the concept of risk. Many opinions and definitions of “risk” exist; for instance, the Society for Risk Analysis Glossary alone offers at least seven different qualitative definitions, expressing different views on the concept. Unfortunately, they are not always aligned with, nor do they always fit, the proposed ontology.

For instance:

“Risk is the possibility of an unfortunate occurrence”.

It indicates a limited and exclusive negative view on risk, a view that is not consistent with the recent development and understanding of the concept “risk” in the twenty-first century, as demonstrated in the etymological and etiological overview.

Or also:

“Risk is uncertainty about and severity of the consequences of an activity with respect to something that humans value”.

While this definition is already more aligned with the proposed ontology, it is still incomplete because uncertainty in the concept of risk is not restricted to the severity of the consequences. The uncertainties regarding possible events, the nature of the consequences, and even the objectives themselves are all elements that matter in understanding risk. Furthermore, effects (consequences) can also result from situations and are not limited to the consequences of activities.

However, an encompassing internationally agreed upon and standardized definition is available that fits with the proposed ontology, providing a solution to the non-inclusivity of many available definitions of risk. The International Organization for Standardization (ISO) is an independent, non-governmental international organization with a membership of 161 national standards bodies. Through its members, it brings together experts from all over the world (from both industry and the academic world) to share knowledge and develop voluntary, consensus-based, market-relevant International Standards that support innovation and provide solutions to global challenges (ISO). Due to the way ISO standards are developed, it can be considered as a very stringent way to develop knowledge that also has a high level of acceptance worldwide.

ISO 31000 is the current standard on risk management, and it is adopted by an ever-increasing number of nations (via their national standardization bodies), making it a truly worldwide used and known standard. ISO 31000 defines risk as follows:

“Risk is the Effect of Uncertainty on Objectives”.

This definition is arguably the only worldwide officially accepted, known, and used standardized definition of risk. It is challenging, concise, but, at the same time, also covering all possible types of risk when each part of the definition is understood in its most encompassing way. It would be conceivable to find an easier wording for this definition (e.g., risk is an uncertain effect on objectives), but this would not necessarily be a better one.

The ISO 31000 definition of risk has the merit that it fits with the proposed ontological foundation. The effect of uncertainty stands for the uncertain future where anything can happen (e.g., events and consequences) and links this notion with the essential element of value represented by the concepts “effect” (positive, negative or both) and “objectives”. As such, it embraces the three essential elements that are needed for risk to exist (conditio sine qua non).

Furthermore, it incorporates these elements in the most concise yet encompassing form. As indicated before, these essential elements are “objectives”, “uncertainty”, and “effect”. All three elements need to be present and are indispensable. Leave one of these elements out and the word risk no longer has meaning. Risk will always be some sort of function in relation to these three elements. One could compare it with the same way in which fire needs “fuel” (objectives), “heat” (effects), and “oxygen” (uncertainty) for fire (risk) to exist. No fuel, not enough heat, or no oxygen, and it is impossible to have a fire. Also similar to risk, the presence of fire can have an effect that is positive, negative, or both. The same way as with fire, risk needs to be managed carefully to reach an optimum effect on objectives.

Taking the proposed ontology and definition of risk as a reference allows us to define safety and security and their antonyms in an analogous, unambiguous, and encompassing way. Risk and safety (where safety needs to be understood in a broad perspective that includes security) are tightly related, and the meaning and understanding of these two concepts have evolved in similar ways. The understanding expanded from a purely negative loss perspective towards a more encompassing and inclusive perspective, including the actual performance related to objectives. Today, according to ISO 31000:2018, it is clear that the effect of uncertainty can be negative (loss), positive (gain), or both. Also, in safety science, it becomes increasingly clear that the domain of safety does not only cover the situation of being protected against loss (Safety-I) but that it also includes the condition where a positive/excellent performance makes achieving and maintaining objectives more certain (Safety-II) [89].

As such, safety and security are no longer concepts that are solely described in negative terms, such as being vulnerable to or protected against negative things happening. Today, risk, safety, and security can also be linked to what one actually wants or needs and how to get it, instead of solely being concerned with what one does not want. It is this most obvious part, “the objectives”, that is often forgotten in many definitions and concepts.

It has to be stressed again that none of the earlier developed concepts and theories regarding safety, security, and risk are to be dismissed, because each of them holds the truth of the perspective and awareness of its timeframe and field of knowledge. However, today, a holistic view on risk and safety exists. Hence, the proposed foundation is semantically based on the definitions used in the inclusive ISO 31000 (2009/2018) standard. Consequently, it is meant to expand the vision on the concepts of risk, safety, and security and to tie them together in a semantic and ontological way to form a basic theory from which these concepts can be studied, which is expected to lead to new insights and methods to deal with safety, security, and risk in ever more proactive ways.

3.4. Ontological Foundation of Risk, Safety, and Security

Ontology is the philosophical study of the nature of being, becoming, existence, or reality, as well as the basic categories of being and their relations. Ontology often deals with questions concerning what entities exist and how such entities may be grouped, related within a hierarchy, and subdivided according to similarities and differences. There is an extensive amount of literature available regarding the different perspectives on ontologies and how to engineer them. However, this is beyond the scope of this paper. The way we intend it to be is that an ontology is a set of concepts and categories in a subject area or domain that shows their properties and the relations between them. It is a particular theory about the nature of being or the kinds of things that have existence. Keet (2018) formulates it as follows:

“ontologies provide an application-independent representation of a specific subject domain, i.e., in principle, regardless the particular application. Or, phrased positively: (re)usable by multiple applications.” [98]

The definitions of the terms risk, safety, and security vary widely in different contexts and technical communities. As indicated before, many scholars have discussed this topic and provided their input on how to understand these constructs. All of these attempts to come to a standardized understanding are valid when consistent with the perspective from which they were drafted. However, most of these definitions are related to the sector or subject domain they belong to, and it is questionable whether a universal and general definition is already available that is valid in any domain, circumstance, or situation. Furthermore, as was already mentioned above, in some languages, the same word is used for both safety and security. However, even in languages that offer two distinct words, the meaning of each term varies considerably from one context to another [95]. Moreover, when talking about safety and security, people actually mean their opposites, which could be named ‘unsafety’ and ‘unsecurity’.

This lack of a consistent and unified perspective on the concepts of risk, safety, and security makes measurement or comparison very difficult. When there is no commonly accepted way to define risk, safety, or security, and their opposites, it becomes very difficult to measure and compare the level of (un)safety/(un)security of situations and organizations in an unambiguous or objective manner. This holds all the more across different industries, sectors, or societies, or across the different organizations that belong to the same industrial park.

Also, it is more difficult to think of proactive solutions that generate safety and/or security instead of solely developing reactive methods that prevent unsafety and unsecurity. As such, one misses the opportunity to improve safety and security performance proactively and by design, by acting before anything bad has happened. While safety and security both deal with risk [97], the question remains how all of these concepts are linked.

Risk, safety, and security are concerned with things that matter and that have significance or certain value to people and mankind. As such, this is a commonality between these concepts, and, therefore, the primary definiendum for this ontology is the concept “objective”, which can be defined as follows:

“Objectives are those matters, tangible and intangible, what individuals, organizations and societies (as groups of individuals) want, need, pursue, try to obtain or aim for. Objectives can also be conditions, situations or possessions that have already been established or acquired and that are, or have been, maintained as a purpose, wanted state or needed condition, whether consciously and deliberately expressed or unconsciously and un-deliberately present”.

What one “wants or needs”, anything that can be considered as some sort of value, can be considered to be one’s “objectives”, with the concept “objective” understood in its most encompassing way. As such, this idea is in line with the more modern ideas on risk, as referenced in the introduction, where risk is also linked to what humans value [11]. This is why the ISO 31000 definition of risk is a good starting point for our ontological and semantic foundation.

At the heart of this proposed foundation and the definition of risk lies the concept of objectives. This is in contrast with a more traditional view, in which risk is considered mainly the domain of uncertainty. That view holds, of course, when a limited perspective on risk is adopted, where only a very limited range of objectives is considered in isolation, such as in finance or engineering. However, it is a view that no longer fits with today’s complex reality and its multitude of intertwined objectives that play a role in Enterprise Risk Management or the safety and security of industrial parks. Risk only exists when objectives are linked and exposed to a defined reality, with the concept of objectives understood in its most encompassing way, as expressed in the proposed definition. As such, at the core of risk, the principal part of its definiens is the encompassing concept of objectives.

However, risk is always concerned with the future, and it is always, to a certain degree, uncertain what will happen in the future. Often, it is also uncertain how this uncertainty (uncertain events) can affect the objectives that are linked and exposed to a certain reality. This combination of uncertainties and effects is what can be called the effect of uncertainty, which in the end can be positive, negative, or both, because both good and bad things can happen (even simultaneously), creating effects that affect the involved objectives. As such, risk can be seen as being the effect of uncertainty on objectives.

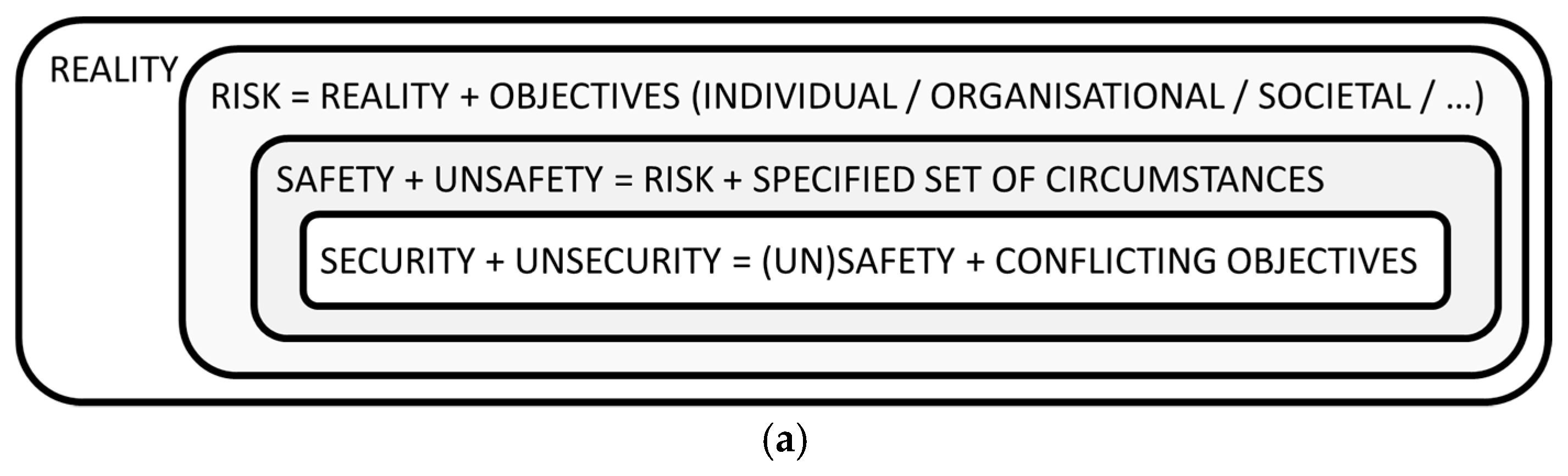

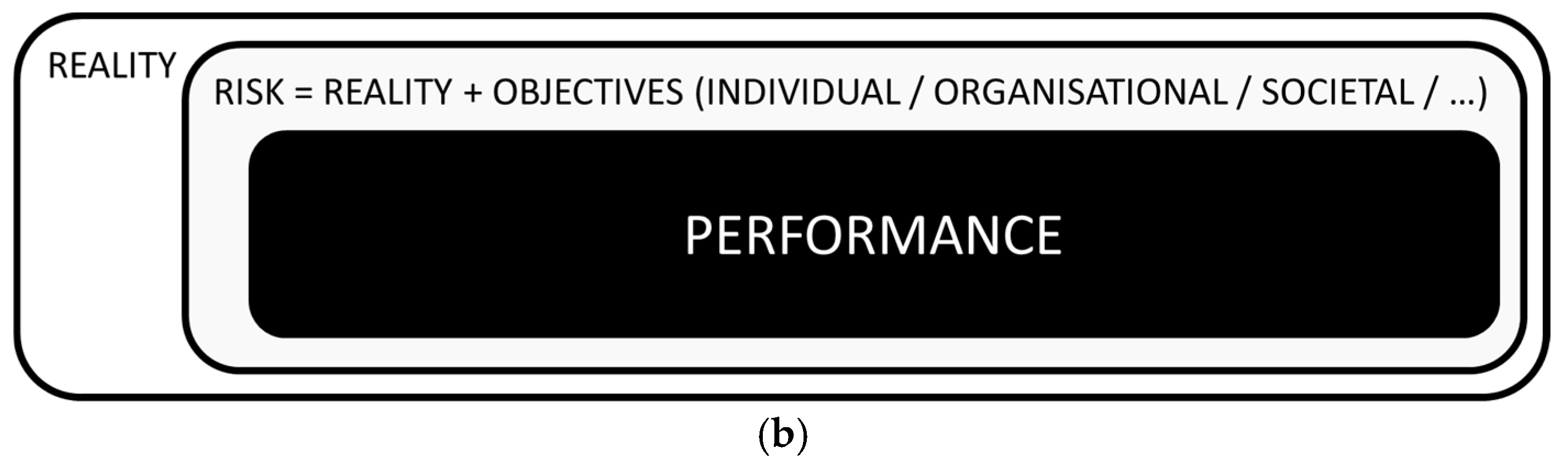

Figure 1 attempts to capture this central ontological base, from which a semantic foundation for safety and security is also drafted. Figure 1a represents a defined reality (say the environment of an industrial park) at a given moment (T), including all of its future possibilities, where Figure 1b is the reflection of the result of that same reality when action or a lack of action has led to an actual result in time (same industrial park at time T+1).

The proposed ontology tries to give an overview of how risk, safety, security, and performance can be regarded and how they fit together in a timeline. It starts from a very broad perspective on reality as it is (to be seen as possible sets of circumstances) at a certain moment in time (T), including all of the possible future states of that reality. This reality is to be seen as the cosmos where everything is possible and where the future is less than 100% certain. When objectives are linked and exposed to that reality, risk arises.

3.5. Linking and Differentiating Risk and Safety

The link between risk and safety can be seen as follows: risk, in order to exist, requires the presence of a possible reality (set of circumstances) that can affect involved objectives. As such, all three of the following elements: “objectives”, “effects” that can affect those objectives, and “uncertainty”, related to both the objectives and the effects, need to exist to have risk.

In industrial parks, for instance, industrial processes often work with hazardous substances. When these substances escape the intended environment of the process, this can cause (substantial) loss and damage within or even beyond the industrial park. Therefore, the containment of the hazardous substance is an important objective. As a result, there’s also the risk of the loss of containment of hazardous substances due to uncertain events, causing uncertain effects, where such loss would immediately interfere with a number of other objectives, such as the health and wellbeing of personnel, preservation of assets, and many more.

Safety (including security), although in a way related to uncertainty, mainly concerns the objectives and the effects that can affect the concerned objectives when a specified set of circumstances is known or determined. By itself, safety (including unsafety) is the same as risk, but with less emphasis on uncertainty and more attention to the possible effects that can be linked with an actual situation. When conditions are such that the likelihood of loss of containment is very low, it is obvious that, regarding the hazardous substance, the condition is safe. But when the conditions are such that loss of containment is imminent, the situation is unsafe. Understanding risk and safety (including security) then both require an understanding of the objectives involved, the possible effects that can affect these objectives, the likelihood of occurrence of these effects, and the level of impact (and its associated likelihood) of these effects. The only difference is that risk deals with a possible future state of reality, while safety is more concerned with the actual conditions and effects of a specific set of circumstances. In other words, one could say that the (level of) risk at a certain moment is the (level of) safety of the next moment, when the related uncertainty has transpired into the certainty of actual conditions, linking the objectives with the actual effects of a present situation or set of circumstances. When these effects are mainly positive, they enhance safety, providing support for the objectives involved. Negative effects, in contrast, degrade safety, or increase unsafety, as they subtract value from present and future objectives.

As such, the ontological relationship and hierarchy between reality and risk is clear. When no (subjective or objective) value can be attributed to reality, there is nothing at risk, and questions on safety and security are irrelevant because objectives are absent. There is no risk. Anything that happens is just an event.

REALITY + OBJECTIVES → RISK

Risk is always related to a future state, hence the presence of uncertainty. When risk is considered in a concrete and specified set of circumstances with its specific objectives (value) at a given time (T), “risk” becomes the related “safety”, including “unsafety”. The specific objectives that are likely to be obtained and safeguarded (likelihood of value obtained and kept) can be considered (being) safe(ty). Unsafe(ty) is to be used for those specific objectives that are likely not to be safeguarded or obtained (likelihood of value lost). As such, the relationship and hierarchy between risk and safety is established.

RISK + SPECIFIED SET OF CIRCUMSTANCES → SAFETY + UNSAFETY
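As a purely illustrative sketch of this relationship, the partition of objectives into "safe" and "unsafe" for one specified set of circumstances could be written as follows. The `Objective` class, the `likelihood_secured` field, the example values, and the 0.5 threshold are all assumptions introduced here for illustration; the text itself only speaks of objectives that are "likely" or "likely not" to be obtained and safeguarded and gives no numeric cutoff.

```python
from dataclasses import dataclass


@dataclass
class Objective:
    name: str
    # Hypothetical estimate in [0, 1] that the objective is obtained and
    # safeguarded under the specified set of circumstances.
    likelihood_secured: float


def split_safety(objectives, threshold=0.5):
    """Partition objective names into (safe, unsafe).

    The threshold is an assumed stand-in for "likely"; it is not a value
    taken from the proposed foundation.
    """
    safe = [o.name for o in objectives if o.likelihood_secured >= threshold]
    unsafe = [o.name for o in objectives if o.likelihood_secured < threshold]
    return safe, unsafe


# Hypothetical objectives for one plant in an industrial park at time T.
park = [
    Objective("containment of hazardous substance", 0.98),
    Objective("on-time delivery", 0.40),
]
safe, unsafe = split_safety(park)
print(safe)    # ['containment of hazardous substance']
print(unsafe)  # ['on-time delivery']
```

The same set of circumstances thus yields both safety and unsafety at once, depending on which objective is considered.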

3.6. Linking and Differentiating Safety and Security

So far, safety and security have been regarded in the same way. However, what is the distinction between these two similar yet different concepts? What are the common elements that make security the same as safety, and what is the distinction between these two words that possibly separates them? In essence, as indicated in several languages all over the world, security can be regarded as a subset of safety. As such, security requires additional elements to be distinguished from safety when safety is regarded in a narrow perspective (i.e., excluding security issues).

3.6.1. A Distinction on the Level of “Objectives”

A way to look at the difference between safety and security, on a fundamental level, is to have a look at the concerned objectives because a typical aspect of a security setting is the involvement of multiple parties (with a minimum of two). By itself, different perceptions come into play when more than one party is present. Accordingly, different objectives also become involved. One of the parties will try to maintain and protect a set of objectives, where one or more opposing parties will have different opinions on those objectives, as they intentionally will try to affect these objectives in a negative way (which is a positive effect for the opposing party). When looking at security situations from this perspective, it becomes clear that security issues can be regarded as situations or sets of circumstances where different, non-aligned objectives of stakeholders conflict with each other. (A stakeholder is a person or organization that can affect, be affected by, or perceive themselves to be affected by a decision or activity—ISO 31000 definition).

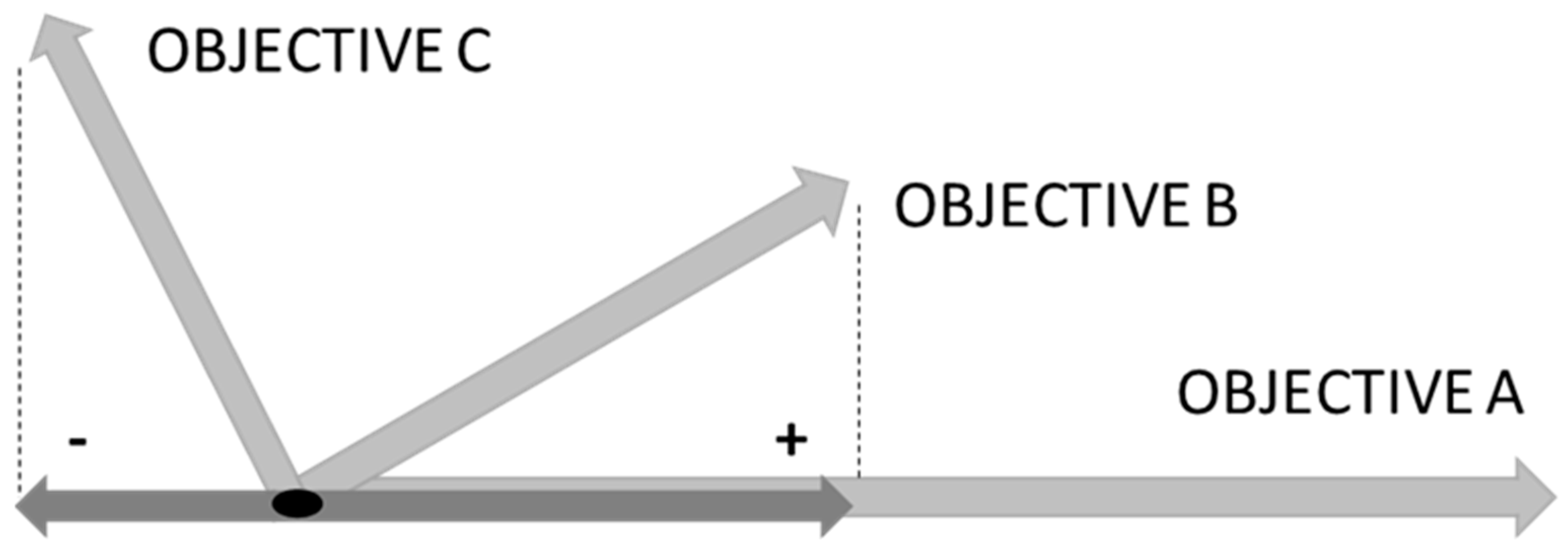

Supposing that objectives can point in a defined direction (e.g., objective A in Figure 2) and that the (non-)alignment of objectives could be determined in a geometrical way, the difference between safety and security can then be determined by measuring the level of non-alignment of the objectives of the different parties involved. Once the non-alignment of objectives becomes more than 90° (supposing fully aligned objectives are at a 0° deviation from each other), it is apparent that these objectives are conflicting (e.g., objective C in Figure 2), and achieving the objective of one party (C) could cause negative effects on the objectives of the other party (A). Therefore, one could argue that in security management, discovering the presence of different, opposing, or non-aligned objectives is crucial.

This is conceptually clear; however, it might be very difficult to operationalize. For instance, someone who wants to work faster for their organization, but thereby acts unsafely, might perceive these actions as aligned with the operational objectives of the organization, but they are not, as they are not aligned with the organization’s safety objectives. In such a case, it would be hard to determine the “degree of deflection” presented by the situation. In any case, it can be argued that even when the deviation is more than 90° (meaning that an opposing component between the objectives exists), since there is no intention to cause a loss, this kind of situation is to be considered as belonging to the domain of safety instead of security. As such, it seems that intentionality is also a governing factor in security, but the presence of conflicting objectives is the distinguishing factor.

A level of distinction between safety and security, therefore, also can be found in the level of alignment of objectives of individuals, organizations, or societies.
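The geometric reading above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: objectives are represented as plain direction vectors, and the function names `alignment_angle` and `classify_domain` are hypothetical and do not come from the source. The 90° threshold and the role of intentionality follow the text.

```python
import math


def alignment_angle(a, b):
    """Angle in degrees between two objective vectors (0 means fully aligned)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("objective vectors must be non-zero")
    # Clamp to [-1, 1] to guard against floating-point rounding before acos.
    cos_theta = max(-1.0, min(1.0, dot / (norm_a * norm_b)))
    return math.degrees(math.acos(cos_theta))


def classify_domain(angle_deg, intentional):
    """Return 'security' only when objectives conflict (> 90 degrees)
    AND the negative effect is intended; otherwise 'safety'."""
    conflicting = angle_deg > 90.0
    return "security" if conflicting and intentional else "safety"


# Hypothetical vectors standing in for objectives A and C.
objective_a = (1.0, 0.0)
objective_c = (-1.0, 1.0)
angle = alignment_angle(objective_a, objective_c)  # roughly 135 degrees
print(classify_domain(angle, intentional=True))
print(classify_domain(angle, intentional=False))
```

As the text notes, the hard part in practice is obtaining the vectors and the intent at all; the sketch only makes the decision rule explicit.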

3.6.2. A Distinction on the Level of “Effect”

In managing risk, risk professionals mainly try to determine the level of risk once risks have been identified. However, assessing the nature of risk is also a very important element to take into account in managing risk and, therefore, also in determining and managing safety.

The level of risk can be understood as being the level of the impact of effects on objectives (negative and positive) in combination with their related level of uncertainty. It is often expressed in the form of a combination of probabilities and consequences. The nature of risk, on the other hand, is more linked to the sources of risk and how these risks emerge and develop. In the ISO Guide 73, a risk source is defined as being an element that, alone or in combination, can give rise to risk [99]. It is in the understanding of possible risk sources that the difference between safety and security can be found.
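To make the notion of "level of risk" concrete, the sketch below combines probabilities and signed consequences into one number. The probability-weighted sum is only one common convention and is an assumption here, not something the source prescribes. The `Scenario` type, the `risk_level` function, and the example figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    name: str
    probability: float  # likelihood of the effect, in [0, 1]
    consequence: float  # signed effect on the objective; negative means loss


def risk_level(scenarios):
    """Probability-weighted sum of consequences (an assumed convention)."""
    for s in scenarios:
        if not 0.0 <= s.probability <= 1.0:
            raise ValueError(f"probability out of range for {s.name!r}")
    return sum(s.probability * s.consequence for s in scenarios)


# Illustrative numbers only; they do not come from the source.
scenarios = [
    Scenario("loss of containment", probability=0.01, consequence=-1000.0),
    Scenario("throughput gain", probability=0.5, consequence=10.0),
]
print(risk_level(scenarios))
```

Both negative and positive effects enter the same sum, which mirrors the remark that the level of risk covers effects in both directions.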

When continuing on the proposed ontology, the necessary elements of risk and the definition of risk source in ISO Guide 73, safety can be seen as “a condition or set of circumstances, where the combination of likelihood and negative effects of uncertainty on objectives is low”. When safety is regarded in a very general way, security then is just a sub-set of safety because when the likelihood of negative effects of uncertainty on objectives is low, this also means that a secure(d) condition or set of circumstances exists.

As such, a distinction between safety and security can also be revealed when looking at the “effects” on objectives, introducing the idea that effects can be regarded as being “intentional” or “unintentional” (accidental). When negative effects on objectives are “intentional”, it is appropriate and correct to use the term (un)security instead of speaking of (un)safety. Consequently, it would also be inappropriate to use the term “security” when the effects involved are “unintentional”.

As an example, one could consider the situation in an industrial park where people are not supposed to trespass on one of the compounds. While an intentional, but unauthorized ingress on the compound to cause damage or get unauthorized information (conflicting and opposing objectives) can be seen as a security issue, the ingress by a person aiming for a shortcut, without the explicit intention to enter any of the installations (not aligned objective at +/−90°), can be seen as a safety issue.

3.6.3. A Distinction on the Level of “Uncertainty”

Also, a distinction on the level of uncertainty can be made. Safety science and safety management often depend on statistical data in order to develop theories and decide upon safety measures. The nature of unintentional effects makes it so that the same events repeat themselves in different situations and circumstances. Furthermore, any individual can be taken into account for objectives that are very much aligned, such as keeping one’s physical integrity. This provides for a vast amount of data that can be used to build theories and consider measures.

Unfortunately, in security issues, the intentional nature and the non-alignment of objectives mean that new tactics and techniques are repeatedly invented to achieve the non-aligned objectives, making it much more difficult to build on statistical data to determine specific uncertainties.

To conclude, when a specified set of circumstances also contains conflicting objectives, safety and unsafety can also be regarded as being “security” and “unsecurity” for those risks related to the conflicting objectives. Hence, also the relationship and hierarchy between safety (including unsafety) and security (including unsecurity) can be established.

SAFETY + UNSAFETY + CONFLICTING OBJECTIVES → SECURITY + UNSECURITY
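The three relationships established so far (reality with objectives yields risk, risk in specified circumstances yields safety and unsafety, and conflicting objectives add security and unsecurity) can be read as nested conditions. The following sketch expresses that reading; the function name and the boolean parameters are hypothetical simplifications.

```python
def applicable_concepts(has_objectives, circumstances_specified, conflicting_objectives):
    """Return the concepts that apply to a situation under the proposed hierarchy."""
    if not has_objectives:
        # Without objectives nothing is at risk; what happens is just an event.
        return ["event"]
    concepts = ["risk"]
    if circumstances_specified:
        concepts += ["safety", "unsafety"]
        if conflicting_objectives:
            concepts += ["security", "unsecurity"]
    return concepts


print(applicable_concepts(False, False, False))
print(applicable_concepts(True, True, False))
print(applicable_concepts(True, True, True))
```

Each level only becomes meaningful once the one above it holds, which is the hierarchy the arrows describe.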

3.7. Linking and Differentiating Safety and Performance

Finally, when time has expired and an actual situation (T+1) exists, safety and unsafety are mirrored and expressed by the results of an actual performance. Hence, any performance indicator can be seen as being a lagging safety or security indicator for the objective(s) it covers.

Surely, the same performance indicators can also be leading (safety) indicators, as they correspondingly reflect a certain situation at a defined moment. As such, they are also risk indicators that can give warnings or signs of possible future levels of safety. It all depends on the position on the timeline from which one considers the risk–safety–performance continuum, as these three elements are always simultaneously present regarding the objectives one holds.

For instance, a well-designed and adequately maintained installation, operating with an experienced and well-trained crew (performance), will certainly have a positive effect on the situation regarding the objective of containment of hazardous substances (risk), therefore also safeguarding other objectives and increasing the level of safety (safety). Likewise, suitable protective gear and training offer the potential to limit the effects of a loss of containment, adding to the level of safety. Both “risk controls” influence the risk, safety, and ultimately also performance when everything functions as intended and no losses are to be noticed.

When societal objectives are taken into account in addition to individual and corporate goals, this will lead to a more sustainable performance and social responsibility. As such, risk is a leading indicator for safety, safety is a leading indicator for performance, and finally, performance becomes a leading indicator for sustainability and corporate social responsibility (CSR) when individual, corporate, and societal objectives are taken into account in a balanced way.

3.8. An Inclusive Ontology

Due to the fact that this proposed ontological foundation is based on an encompassing definition of objectives, it is independent of any category of objectives and, therefore, covers any type of risk or safety domain.

3.9. Definitions for Safety and Security Science Based on the Definition of Risk Proposed in the ISO 31000 Guidance Standard

Let us look back at the definition of risk according to ISO 31000:

“Risk is the effect of uncertainty on objectives”

It fits with the three aspects that are needed to have risk: objectives, uncertainty, and effects.

The semantic foundation can be seen as follows: risk is an uncertain effect on objectives, while safety is a possible and likely result of that uncertain effect in specified circumstances. It is why both concepts have evolved over time in similar ways and at a comparable timing. People want to take risks and be safe at the same time. They do not want to run risks, and they also want to avoid unsafety. Both are important and possible at the same time.

Risk management, in a way, originated in close relation to gambling activities. Professional poker players know that they do not win by chance or as a result of acts from the gods, but through carefully gathering information and analyzing and considering options based on that knowledge. It allows them to increase the quality of their perception and the probability that they make the right decision to support their aim of winning the game. They do it by taking more risk (aiming for higher gain) when it is appropriate to do so and limiting the risks they take and run (limiting the amount of possible gain and loss) when that is the wiser decision, each time counting on the fact that the risk run for the decisions they take is low. However, they will only be safe when the game is over and the profit has been paid. In the same way, an industrial installation will only be safe when the right decisions have been made, over and over again. As such, safety and risk are the same, where risk, and how it is managed, determine the future of one’s safety and performance.

Based on the definition of “objectives” proposed in Section 3.4, the following distinguishing definitions regarding safety and security can be presented:

3.9.1. Safety/Unsafety

Safe(ty) (broad perspective, including security) = “the condition/set of circumstances where the combination of likelihood and negative effects on objectives is Low”

Safe(ty) (narrow perspective) = “the condition/set of circumstances where the combination of likelihood and unintentional negative effects on objectives is Low”

Unsafe(ty) = “the condition/set of circumstances where the combination of likelihood and negative effects on objectives is High”

3.9.2. Security/Unsecurity

Secur(e)(ity) = “the condition/set of circumstances where the combination of likelihood and intentional negative effects on objectives is Low”

Unsecur(e)(ity) = “the condition/set of circumstances where the combination of likelihood and intentional negative effects on objectives is High”

When the alignment perspective is incorporated into the definitions proposed above for security and unsecurity, they could also be envisaged as follows:

Secur(e)(ity) = “the condition/set of circumstances where the alignment of objectives is high and where the combination of likelihood and intentional negative effects on objectives is Low”

Unsecur(e)(ity) = “the condition/set of circumstances where the alignment of objectives is low and where the combination of likelihood and intentional negative effects on objectives is High”
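The definitions in Sections 3.9.1 and 3.9.2 can be illustrated with a small classification sketch. The source does not say where "Low" ends and "High" begins, so the normalised `combined_level` in [0, 1] and the `threshold` of 0.5 are pure assumptions, as is the function name `describe_condition`.

```python
def describe_condition(combined_level, intentional, threshold=0.5):
    """Label a condition in the broad and the specific (narrow) sense.

    combined_level: assumed normalised combination of likelihood and
        negative effects on objectives, in [0, 1].
    intentional: whether the negative effects considered are intentional.
    threshold: assumed boundary between "Low" and "High".
    """
    if not 0.0 <= combined_level <= 1.0:
        raise ValueError("combined_level must lie in [0, 1]")
    low = combined_level < threshold
    # Broad perspective: safety includes security.
    broad = "safe" if low else "unsafe"
    # Specific perspective: intentional effects fall under security.
    if intentional:
        specific = "secure" if low else "unsecure"
    else:
        specific = "safe (narrow)" if low else "unsafe"
    return broad, specific


print(describe_condition(0.1, intentional=True))
print(describe_condition(0.9, intentional=True))
print(describe_condition(0.1, intentional=False))
```

The alignment-based variants of the definitions would add a second input for the level of alignment of objectives; that is left out here to keep the sketch minimal.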

3.9.3. Alternative Formulation (Safety II Perspective)

Safety (broad perspective) = “the condition/set of circumstances where the combination of likelihood and positive effects on objectives is High”

Unsafe(ty) = “the condition/set of circumstances where the combination of likelihood and positive effects on objectives is Low”

Unsecur(e)(ity) = “the condition/set of circumstances where the alignment of objectives is low and where the combination of likelihood and intentional positive effects on objectives is Low”

Security = “the condition/set of circumstances where the alignment of objectives is high and where the combination of likelihood and intentional positive effects on objectives is High”

3.9.4. Other Related Definitions

Performance = the condition or set of circumstances resulting from positive and negative effects on objectives.

Excellence = the condition or set of circumstances that improves the positive effects and reduces the negative effects on objectives.

Failure = the condition or set of circumstances where the negative effects on objectives are high.

Success = the condition or set of circumstances where the positive effects on objectives are high.

4. Discussion