Effect of an Instructor-Centered Tool for Automatic Assessment of Programming Assignments on Students’ Perceptions and Performance

Abstract

1. Introduction

2. Related Work

3. Description of the Case Study

3.1. Course Context

3.2. Automated Assessment Tool

- IAPAGS is instructor-centered, and its use is transparent to students. These features made it possible to incorporate the automated assessment tool into the course without modifying the course methodology or syllabus.

- IAPAGS can automatically generate feedback and grades for student programming assignments uploaded through Moodle 2.6 (the version of Moodle used in the course at the time of the study) without modifying the Moodle instance.

- IAPAGS can assess client-side web applications that students submit either as a single file in a common archive format (ZIP, RAR, TAR, …) or as a URL pointing to a public web server.

- IAPAGS can assess Node.js applications submitted as a single file in one of these common formats.

- IAPAGS allows teachers to define their own test cases and to specify both the feedback that is generated and how assignment grades are calculated.

- IAPAGS makes it possible to execute the students' source code in a secure environment.
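As a rough illustration of how teacher-defined test cases can yield both a grade and feedback, the following Python sketch adds up the scores of the passing tests and collects a failure message for each failing one. The `WeightedTest` type, the `assess` function, the dictionary-of-functions submission format and the 0–10 grade scale are all hypothetical; they are not IAPAGS's actual test format, which this sketch does not attempt to reproduce.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

# Hypothetical submission format: a mapping from exported names to callables.
Submission = Dict[str, Callable]


@dataclass
class WeightedTest:
    """Hypothetical teacher-defined test: a check, its weight and its feedback."""
    name: str
    score: float                          # points awarded when the check passes
    check: Callable[[Submission], bool]   # inspects or calls the student's code
    failure_feedback: str                 # message shown to the student on failure


def assess(submission: Submission, tests: List[WeightedTest]) -> Tuple[float, str]:
    """Run every test, add up the scores of the passing ones and gather feedback."""
    total = sum(t.score for t in tests)
    earned = 0.0
    messages = []
    for t in tests:
        try:
            passed = bool(t.check(submission))
        except Exception as exc:          # a crash in student code counts as a failure
            messages.append(f"{t.name}: error while testing ({exc})")
            continue
        if passed:
            earned += t.score
        else:
            messages.append(f"{t.name}: {t.failure_feedback}")
    grade = round(10 * earned / total, 2) if total else 0.0   # scale to 0-10
    feedback = " ".join(messages) if messages else "All tests passed."
    return grade, feedback


tests = [
    WeightedTest("sum", 6, lambda s: s["total"]([1, 2, 3]) == 6,
                 "total([1, 2, 3]) should return 6."),
    WeightedTest("empty", 4, lambda s: s["total"]([]) == 0,
                 "total([]) should return 0."),
]

# A buggy student implementation that fails on an empty list.
buggy = {"total": lambda xs: xs[0] + sum(xs[1:])}
print(assess(buggy, tests))
# -> (6.0, 'empty: error while testing (list index out of range)')
```

Catching exceptions per test keeps a single crashing check from aborting the assessment of the whole submission, while the per-test scores let a teacher make critical requirements weigh more in the final grade.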

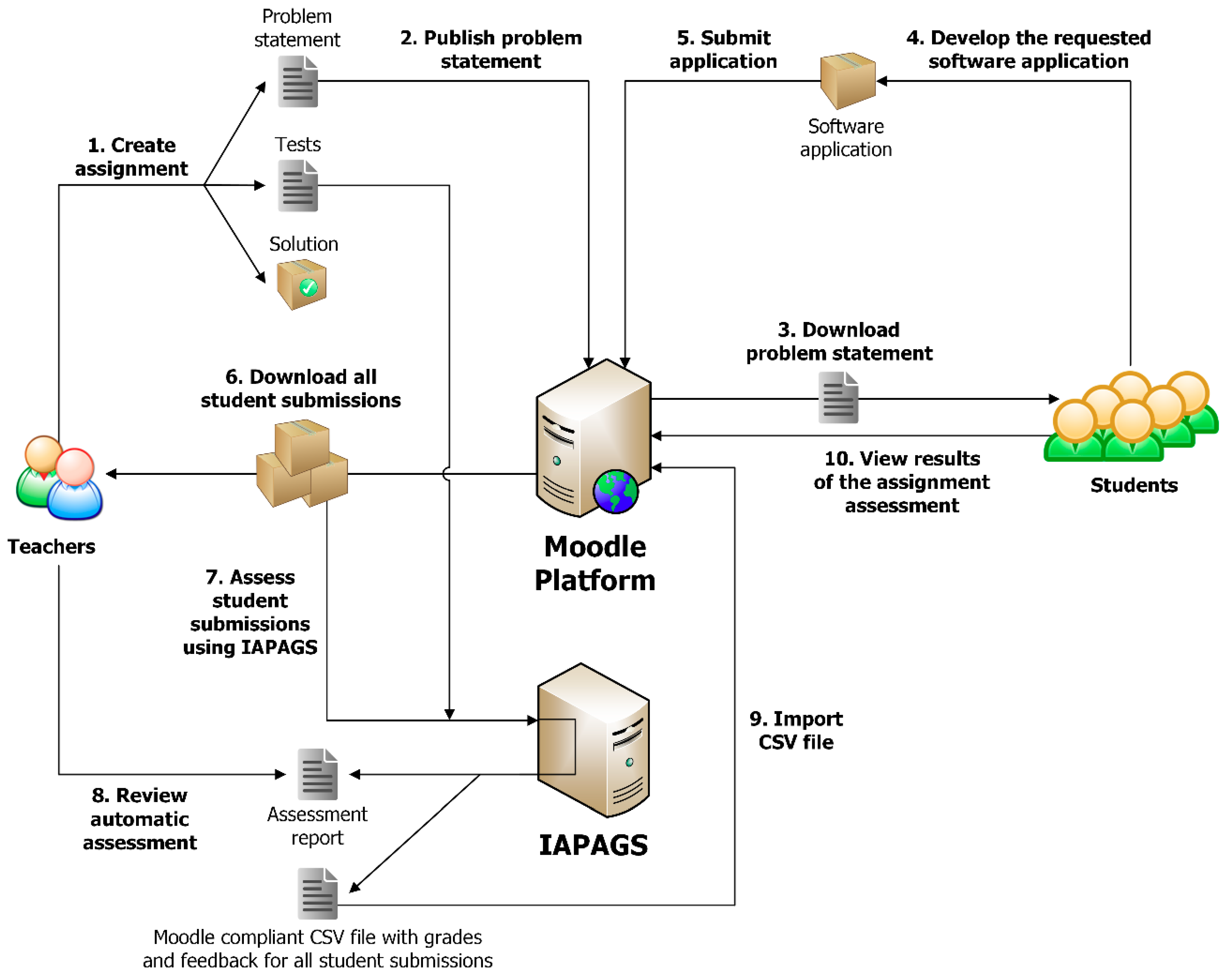

1) First, the course teachers create the programming assignment for the students. On the one hand, they must write the problem statement of the assignment, which describes in detail the requirements of the software application that students will have to develop. On the other hand, they must also develop a battery of tests for the IAPAGS tool, which will be used to automatically assess the software applications submitted by the students. Details about how these tests have to be implemented are provided later in this section. Besides the problem statement and the battery of tests, it is also recommended that the teachers produce a solution for the assignment and run it against the battery of tests.

2) The teachers publish the problem statement of the assignment on the Moodle platform of the course. The battery of tests can remain private.

3) The students download the problem statement of the assignment. They are given a certain period of time to develop the software application described in this statement and submit that application to the Moodle platform.

4) The students develop the requested software application. For this task, they are free to use any development tool they want (code editor, integrated development environment (IDE), debugging tool, …).

5) The students submit the application they have developed for the assignment to the Moodle platform.

6) Once the assignment deadline is reached, the teachers download from the Moodle platform all of the software applications submitted by the students in the previous step.

- 7)

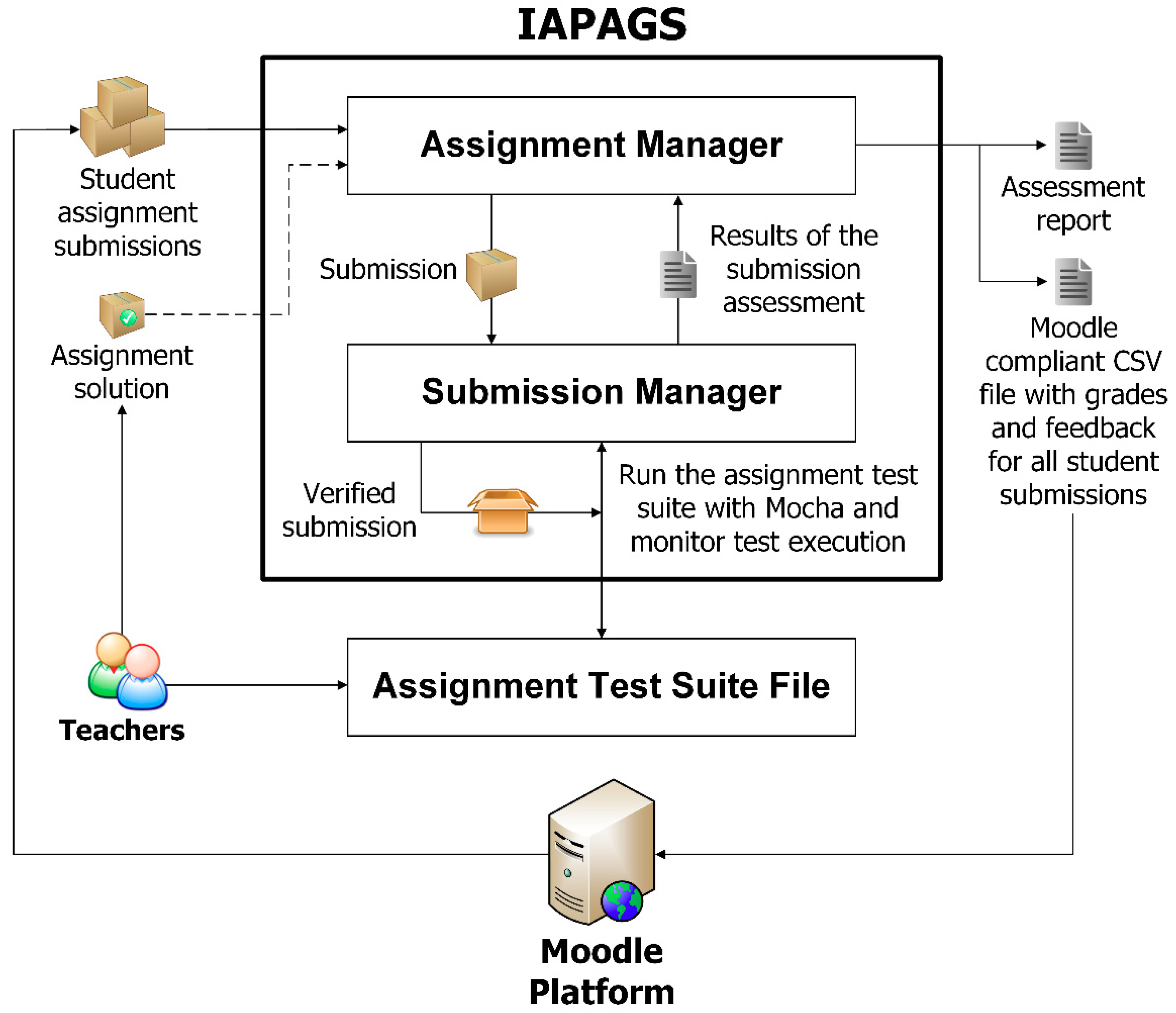

- The course teachers use the IAPAGS tool to generate a grade and an instance of feedback for each student submission. The battery of tests developed for the assignment in the first step is used in order to conduct this automatic assessment with IAPAGS. The output of the automatic assessment that is conducted by IAPAGS consists of two files: a text file containing a report with details about the assessment (errors occurred during the process, grades and feedback for each submission, the result of each individual test and the score given by these tests, messages for instructors, paths of the files and URLs assessed, …), and a Moodle compliant CSV file that contains all of the grades and feedback for the assessed submissions.

8) The teachers review the results of the automatic assessment performed by the IAPAGS tool. At this point, they can fix errors or improve the assessment (e.g., by providing more detailed feedback for certain issues or by generating more accurate grades). To do so, the teachers modify the assignment's battery of tests and then run a new automatic assessment. In this phase of the assessment process, the teachers can also compare students' submissions to detect plagiarism.

9) After verifying the results of the automatic assessment, the course teachers import the CSV file generated by the IAPAGS tool (in step 7) into the Moodle platform, which adds the generated grades and feedback to the course gradebook and makes this information available to the students.

10) The students can see the results of the automatic assessment: the grade of their assignment and the feedback on their submission. Based on this feedback, the students can improve their application and, if the course teachers allow it, resubmit it to get new feedback and a better grade.
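Step 7 produces two outputs: a plain-text report for the teachers and a CSV file that Moodle can import. The following Python sketch writes both from a dictionary of per-student results. The column names (`Email address`, `Grade`, `Feedback comments`), the use of the email address as the student identifier and the report layout are assumptions for illustration, not the exact format of the IAPAGS files; in Moodle's CSV grade import, the teacher maps the identifier column to a user field and the remaining columns to a grade item and its feedback.

```python
import csv
import io
from typing import Dict, TextIO, Tuple

# Hypothetical per-student results: email -> (grade on a 0-10 scale, feedback text).
Results = Dict[str, Tuple[float, str]]


def write_grades_csv(results: Results, out: TextIO) -> None:
    """Write one row per student; the csv module quotes feedback containing commas."""
    writer = csv.writer(out)
    writer.writerow(["Email address", "Grade", "Feedback comments"])  # assumed headers
    for email, (grade, feedback) in sorted(results.items()):
        writer.writerow([email, f"{grade:.2f}", feedback])


def write_report(results: Results, out: TextIO) -> None:
    """Write a tab-separated, human-readable summary for the teachers."""
    for email, (grade, feedback) in sorted(results.items()):
        out.write(f"{email}\t{grade:.2f}\t{feedback}\n")


results = {
    "student2@example.com": (10.0, "All tests passed."),
    "student1@example.com": (6.0, "empty: total([]) should return 0, not fail."),
}

csv_buffer = io.StringIO()
write_grades_csv(results, csv_buffer)
print(csv_buffer.getvalue())

report_buffer = io.StringIO()
write_report(results, report_buffer)
print(report_buffer.getvalue())
```

When writing to a real file rather than an in-memory buffer, open it with `newline=""`, as the documentation of Python's `csv` module recommends, so that line endings are not translated twice.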
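How submissions are compared for plagiarism in step 8 is not described here. One simple approach, shown purely as an illustrative assumption, is to tokenize each submission after stripping comments and to flag pairs whose token sequences are highly similar according to Python's `difflib.SequenceMatcher`. The `normalize` and `similar_pairs` helpers and the 0.8 threshold are hypothetical.

```python
import difflib
import re
from itertools import combinations
from typing import Dict, List, Tuple


def normalize(source: str) -> List[str]:
    """Drop JavaScript-style comments and split the code into tokens.

    Tokenizing ignores whitespace and layout, so reformatting the code or
    deleting comments alone does not change the result. The regexes are crude:
    they would also remove '//' inside string literals such as URLs.
    """
    source = re.sub(r"/\*.*?\*/", "", source, flags=re.S)   # block comments
    source = re.sub(r"//[^\n]*", "", source)                  # line comments
    return re.findall(r"\w+|[^\w\s]", source)                 # words and symbols


def similar_pairs(submissions: Dict[str, str],
                  threshold: float = 0.8) -> List[Tuple[str, str, float]]:
    """Return (id_a, id_b, similarity) for every pair at or above the threshold."""
    tokens = {sid: normalize(code) for sid, code in submissions.items()}
    flagged = []
    for a, b in combinations(sorted(tokens), 2):
        # autojunk=False stops the ratio from depending on the heuristic that
        # ignores very frequent tokens in long sequences.
        ratio = difflib.SequenceMatcher(None, tokens[a], tokens[b],
                                        autojunk=False).ratio()
        if ratio >= threshold:
            flagged.append((a, b, round(ratio, 3)))
    return sorted(flagged, key=lambda item: item[2], reverse=True)


submissions = {
    "s1": "function f(a){ return a+1; } // my own work",
    "s2": "function f(a) {\n  return a + 1;\n}",
    "s3": "const g = x => x * x * x;",
}
print(similar_pairs(submissions))  # -> [('s1', 's2', 1.0)]
```

The number of comparisons grows quadratically with the number of submissions, and the sketch does not normalize renamed identifiers, so it would only serve as a first filter before a teacher inspects the flagged pairs.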

4. Evaluation Methodology

5. Results and Discussion

5.1. Student Survey

5.2. Student Assignment Submissions

5.3. Exam Grades

6. Conclusions and Future Work

Funding

Conflicts of Interest


Please state your level of agreement with the following statements (1 = Strongly disagree, 5 = Strongly agree):

| Question | M | SD |
|---|---|---|
| My overall opinion of the automated assessment tool is positive. | 3.1 | 1.2 |
| I prefer the reports (i.e., feedback and grades) generated by the automated assessment tool rather than not receiving any feedback at all | 4.3 | 1.0 |
| I complete the programming assignments with greater motivation if I know that I am going to receive feedback and a grade generated by the automated assessment tool | 3.7 | 1.2 |
| The reports generated by the automated assessment tool were easily understandable | 2.7 | 1.2 |
| The reports generated by the automated assessment tool were useful | 3.0 | 1.3 |
| I have improved my software applications thanks to the reports generated by the automated assessment tool | 3.0 | 1.2 |
| The feedback generated by the automated assessment tool allowed me to discover bugs in my software applications that otherwise would have remained unnoticed | 3.0 | 1.3 |
| I believe that the reports generated by the automated assessment tool were provided quickly | 3.0 | 1.2 |
| The grades generated by the automated assessment tool were fair | 2.5 | 1.2 |
| I would like this type of automated assessment system to be used in other programming subjects | 3.2 | 1.4 |

| Assignment | Students who submitted: N | Students who submitted: grade M | Students who submitted: grade SD | First submissions: N | First submissions: grade M | First submissions: grade SD | Second submissions: N (resubmissions) | Second submissions: grade M | Second submissions: grade SD |
|---|---|---|---|---|---|---|---|---|---|
| #1 | 236 | 81 | 28 | 230 | 74 | 34 | 44 (38) | 80 | 30 |
| #2 | 230 | 62 | 35 | 229 | 55 | 36 | 57 (55) | 67 | 34 |
| #3 | 235 | 52 | 28 | 223 | 46 | 27 | 77 (63) | 57 | 32 |
| #4 | 177 | 31 | 35 | 18 | 49 | 37 | 177 (18) | 31 | 35 |

| Assignment | N | First submissions: grade M | First submissions: grade SD | Resubmissions: grade M | Resubmissions: grade SD | Paired-samples t-test p-value (2-tailed) | Cohen's d effect size |
|---|---|---|---|---|---|---|---|
| #1 | 38 | 40 | 43 | 80 | 29 | < 0.001 | 0.97 |
| #2 | 55 | 38 | 32 | 68 | 33 | < 0.001 | 0.89 |
| #3 | 63 | 40 | 27 | 66 | 28 | < 0.001 | 0.96 |
| #4 | 18 | 49 | 37 | 63 | 30 | 0.03 | 0.57 |
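The table reports paired-samples t-tests and Cohen's d, but not which variant of d was used for the paired comparisons. The following Python sketch shows one common paired formulation, d_z, which divides the mean within-student difference by the standard deviation of those differences; with it, the t statistic equals d_z·√n. The function name and the four grade pairs are made-up illustration data, not values from the study. A d based on the pooled SD of the two columns (for assignment #1, 40 / 36.7 ≈ 1.09) does not match the reported 0.97, which suggests a paired variant, although the source does not say which.

```python
import math
import statistics
from typing import Sequence, Tuple


def paired_t_and_dz(before: Sequence[float], after: Sequence[float]) -> Tuple[float, float]:
    """Return (t statistic, Cohen's d_z) for two paired samples.

    d_z = mean(diff) / sd(diff) and t = d_z * sqrt(n), with n - 1 degrees of freedom.
    """
    if len(before) != len(after) or len(before) < 2:
        raise ValueError("need two samples of equal length with at least two pairs")
    diffs = [b - a for a, b in zip(before, after)]
    sd_diff = statistics.stdev(diffs)            # sample SD (n - 1 denominator)
    if sd_diff == 0:
        raise ValueError("all differences are identical; d_z is undefined")
    d_z = statistics.mean(diffs) / sd_diff
    t = d_z * math.sqrt(len(diffs))
    return t, d_z


# Made-up grades (0-100) of four students before and after resubmitting.
first = [20, 40, 50, 60]
resubmitted = [60, 70, 80, 70]
t, d_z = paired_t_and_dz(first, resubmitted)
print(f"t = {t:.2f}, d_z = {d_z:.2f}")  # -> t = 4.37, d_z = 2.19
```

The two-tailed p-value then comes from Student's t distribution with n − 1 degrees of freedom; for example, `scipy.stats.ttest_rel(resubmitted, first)` returns the same t statistic together with its p-value.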

| Students who did not use the automated feedback (N = 129): M | Students who did not use the automated feedback (N = 129): SD | Students who used the automated feedback (N = 111): M | Students who used the automated feedback (N = 111): SD | Independent-samples t-test p-value (2-tailed) | Cohen's d effect size |
|---|---|---|---|---|---|
| 5.1 | 2.1 | 5.9 | 2.1 | 0.003 | 0.4 |
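For the independent-samples comparison, Cohen's d with a pooled standard deviation can be recomputed from the summary statistics in the table. The following Python sketch does so, together with the equal-variance (Student) t statistic; the source does not state which t-test variant was used, and because the means and SDs in the table are rounded, the results only approximate the underlying values. With equal SDs of 2.1, the pooled SD is also 2.1, so d = (5.9 − 5.1) / 2.1 ≈ 0.38, which rounds to the reported 0.4.

```python
import math


def pooled_sd(sd1: float, n1: int, sd2: float, n2: int) -> float:
    """Pooled standard deviation of two independent groups."""
    return math.sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2))


def cohens_d(m1: float, sd1: float, n1: int, m2: float, sd2: float, n2: int) -> float:
    """Standardized mean difference (group 2 minus group 1) using the pooled SD."""
    return (m2 - m1) / pooled_sd(sd1, n1, sd2, n2)


def student_t(m1: float, sd1: float, n1: int, m2: float, sd2: float, n2: int) -> float:
    """Equal-variance two-sample t statistic with n1 + n2 - 2 degrees of freedom."""
    se = pooled_sd(sd1, n1, sd2, n2) * math.sqrt(1 / n1 + 1 / n2)
    return (m2 - m1) / se


# Summary statistics from the table: did not use (group 1) vs. used (group 2) the feedback.
args = (5.1, 2.1, 129, 5.9, 2.1, 111)
print(f"d = {cohens_d(*args):.2f}, t = {student_t(*args):.2f}")  # -> d = 0.38, t = 2.94
```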

© 2019 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Gordillo, A. Effect of an Instructor-Centered Tool for Automatic Assessment of Programming Assignments on Students’ Perceptions and Performance. Sustainability 2019, 11, 5568. https://doi.org/10.3390/su11205568
