Monitoring Potato Waste in Food Manufacturing Using Image Processing and Internet of Things Approach

Abstract

1. Introduction

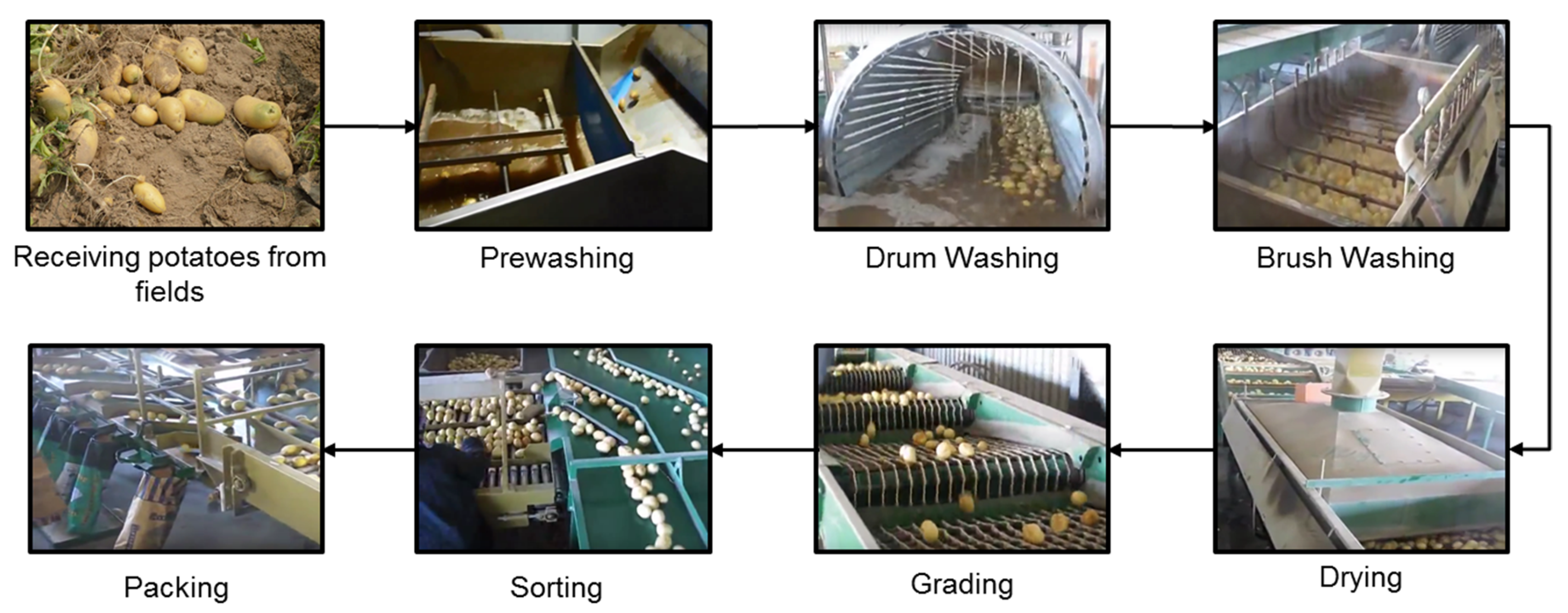

2. A Brief Overview of the Potato Packing Process

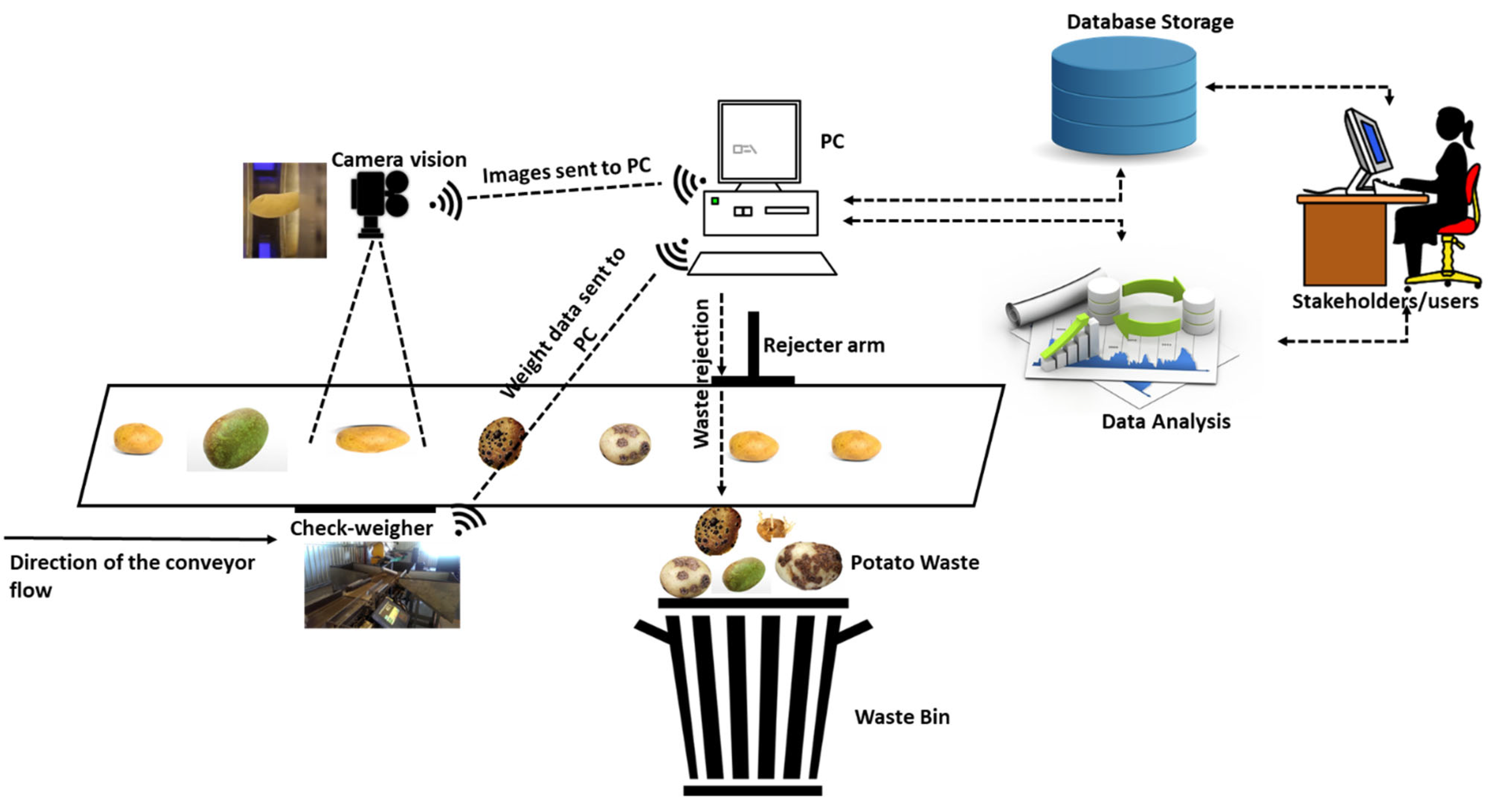

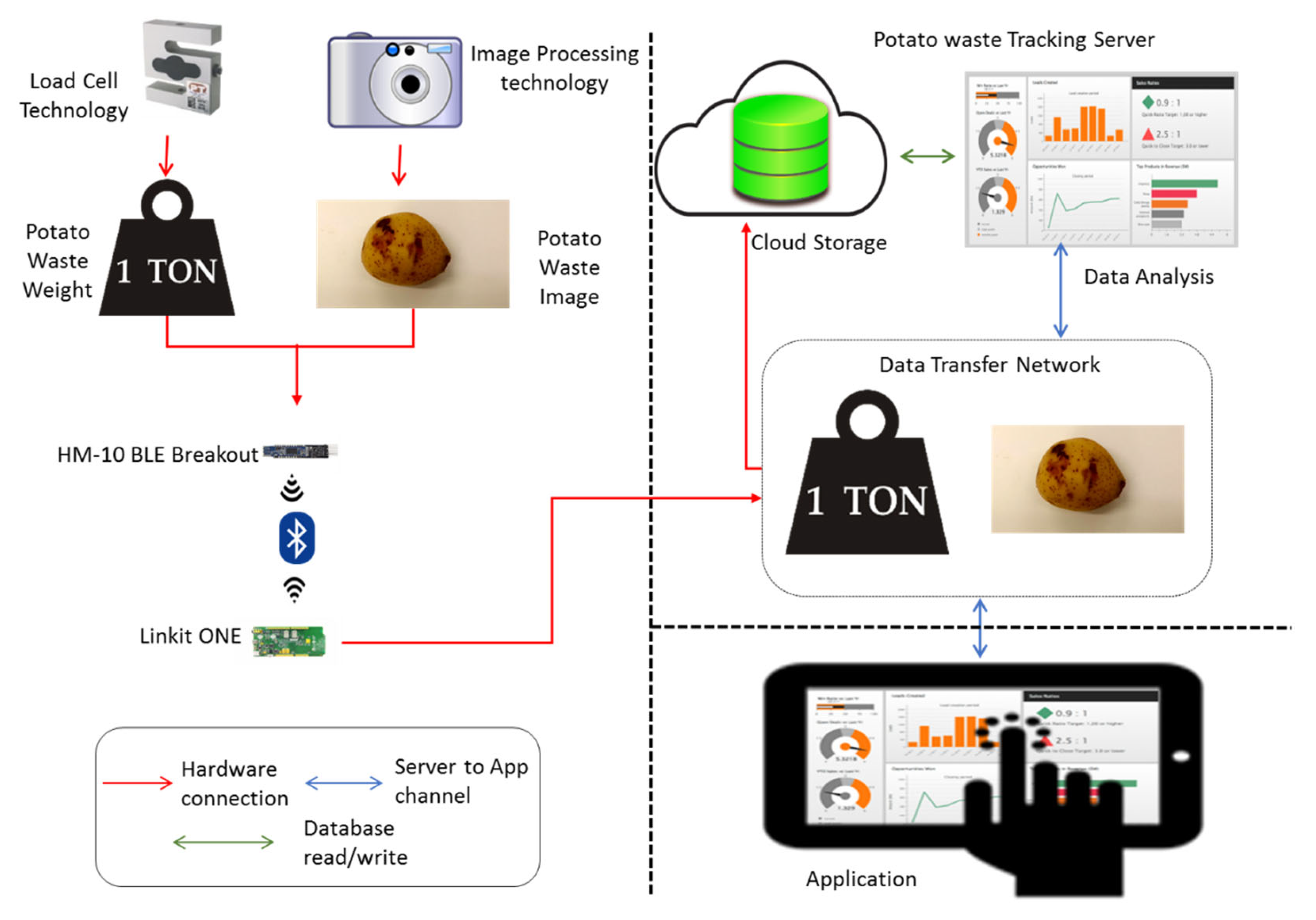

3. Methodology

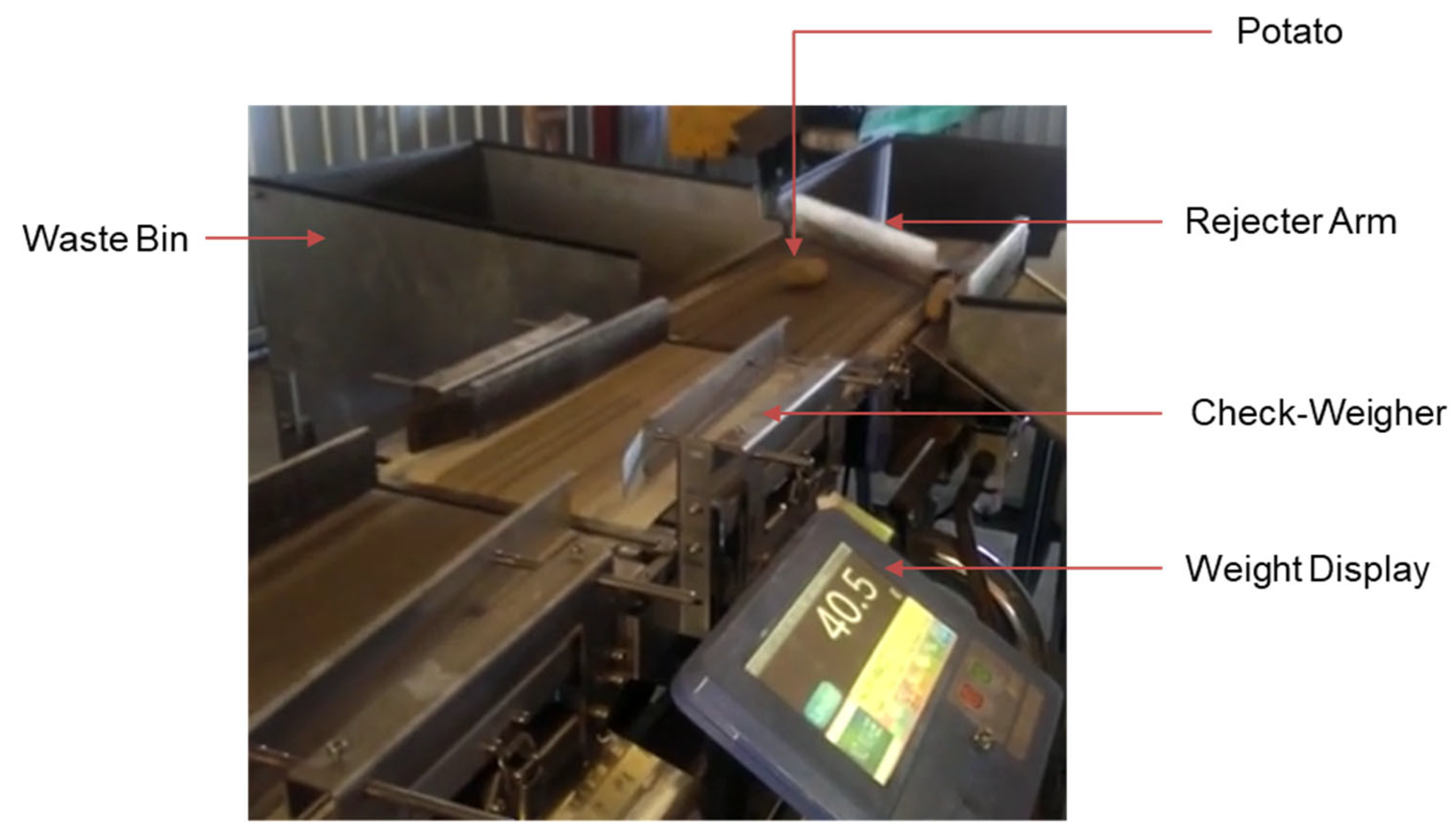

Hardware and Software System Setups

4. Image Processing and Weight Recording

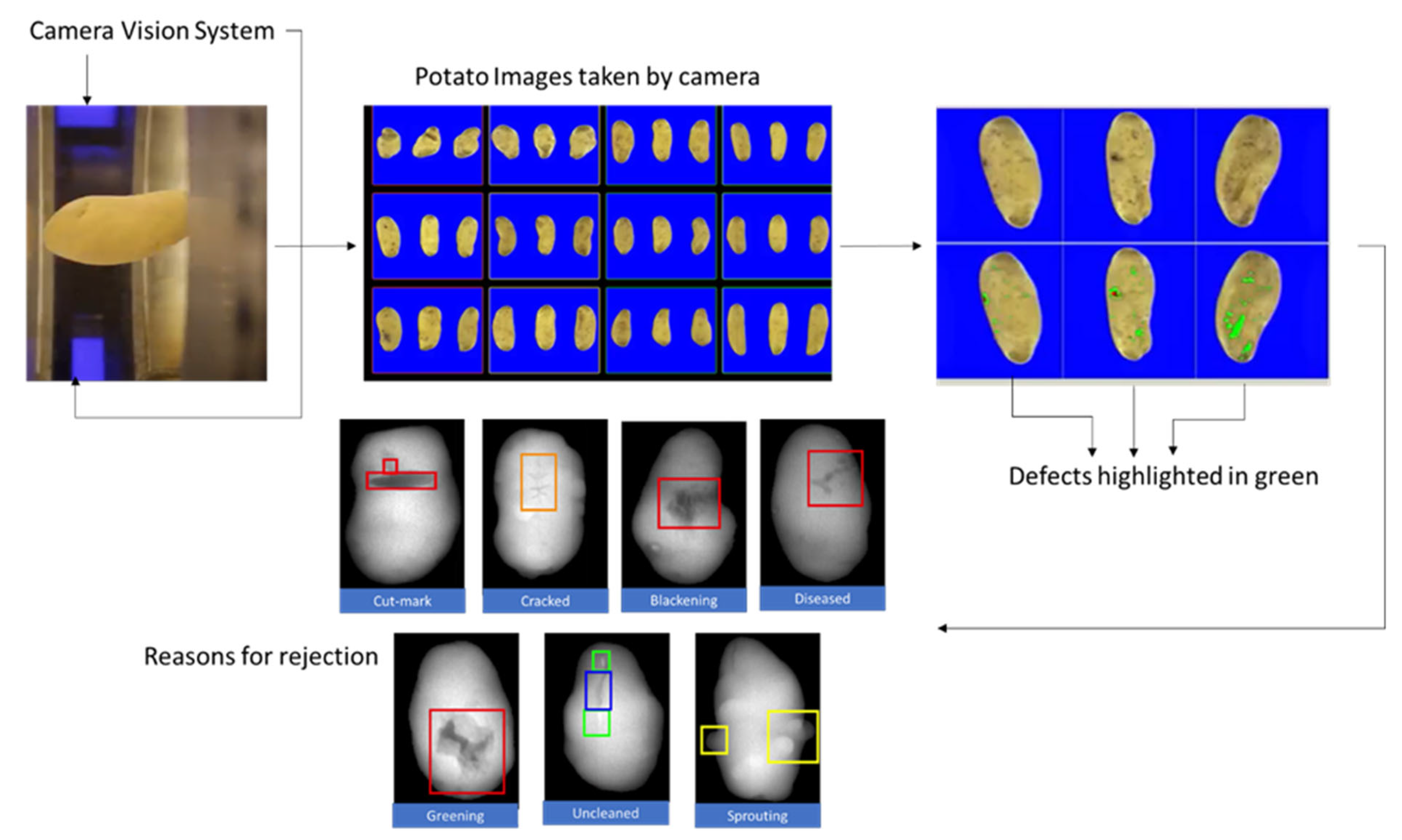

4.1. Image Processing

4.1.1. Segmentation

4.1.2. Data

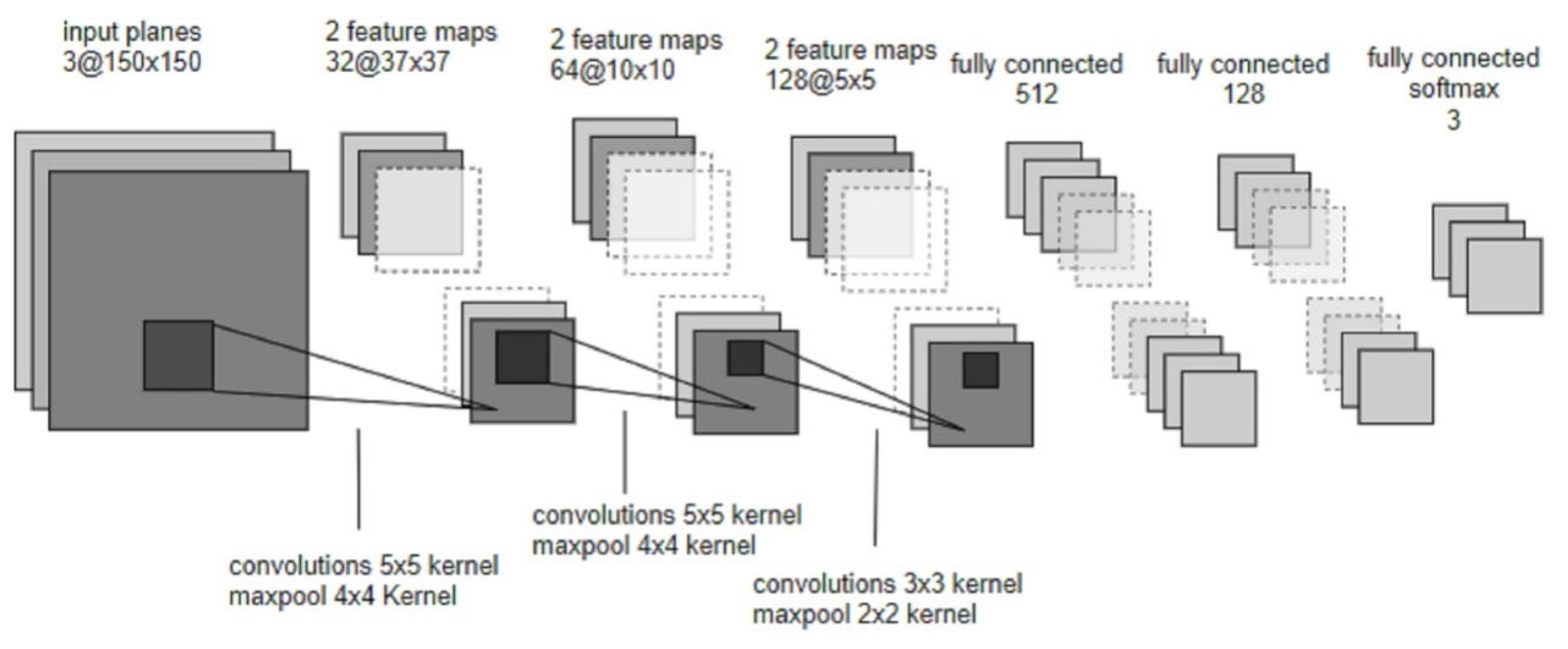

4.1.3. CNN Architecture

4.1.4. Choosing the Activation

4.1.5. Gradient Descent Optimiser

4.2. Weight Recording

4.3. Experimental Parameters

5. Results and Discussion

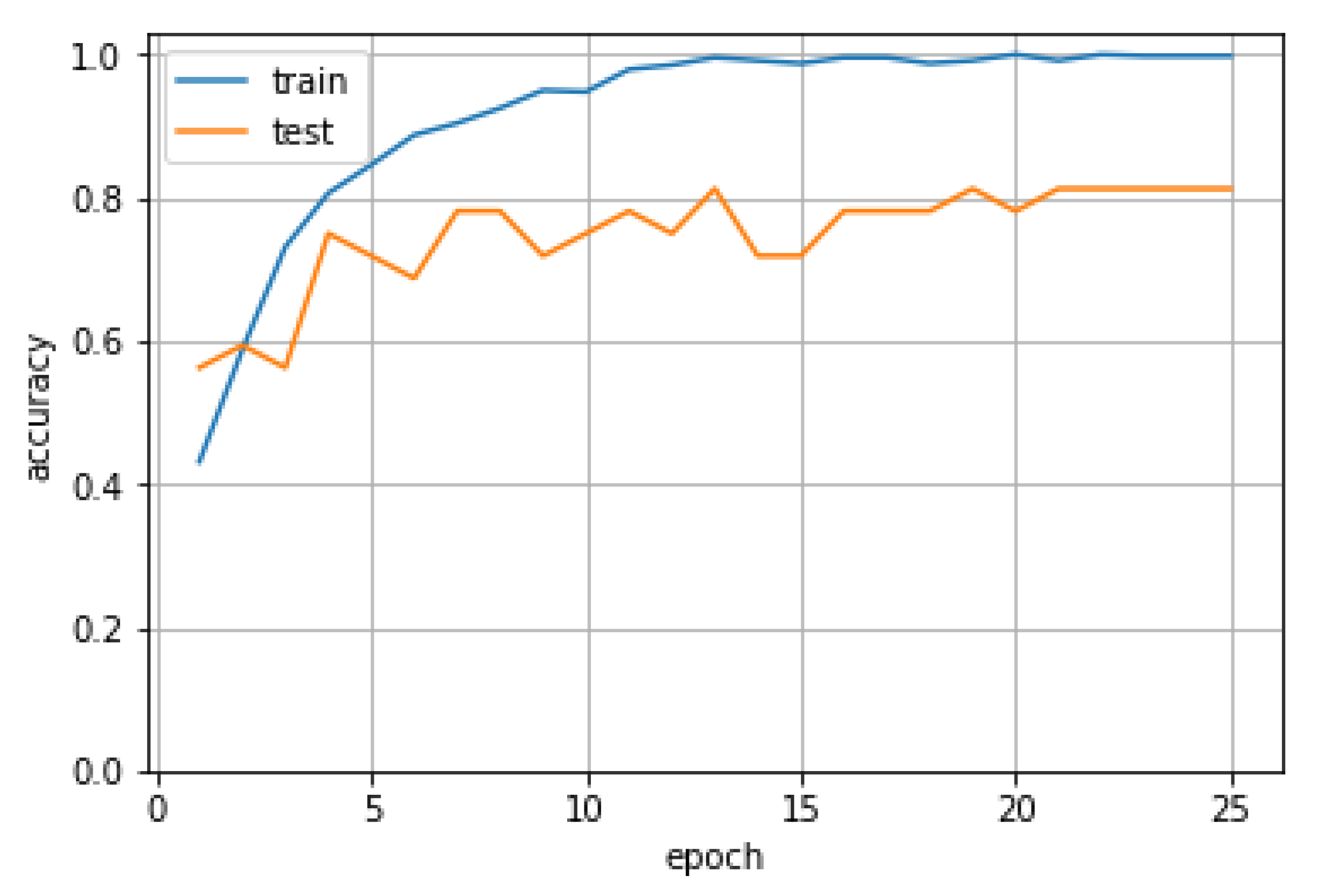

5.1. Image Processing Results

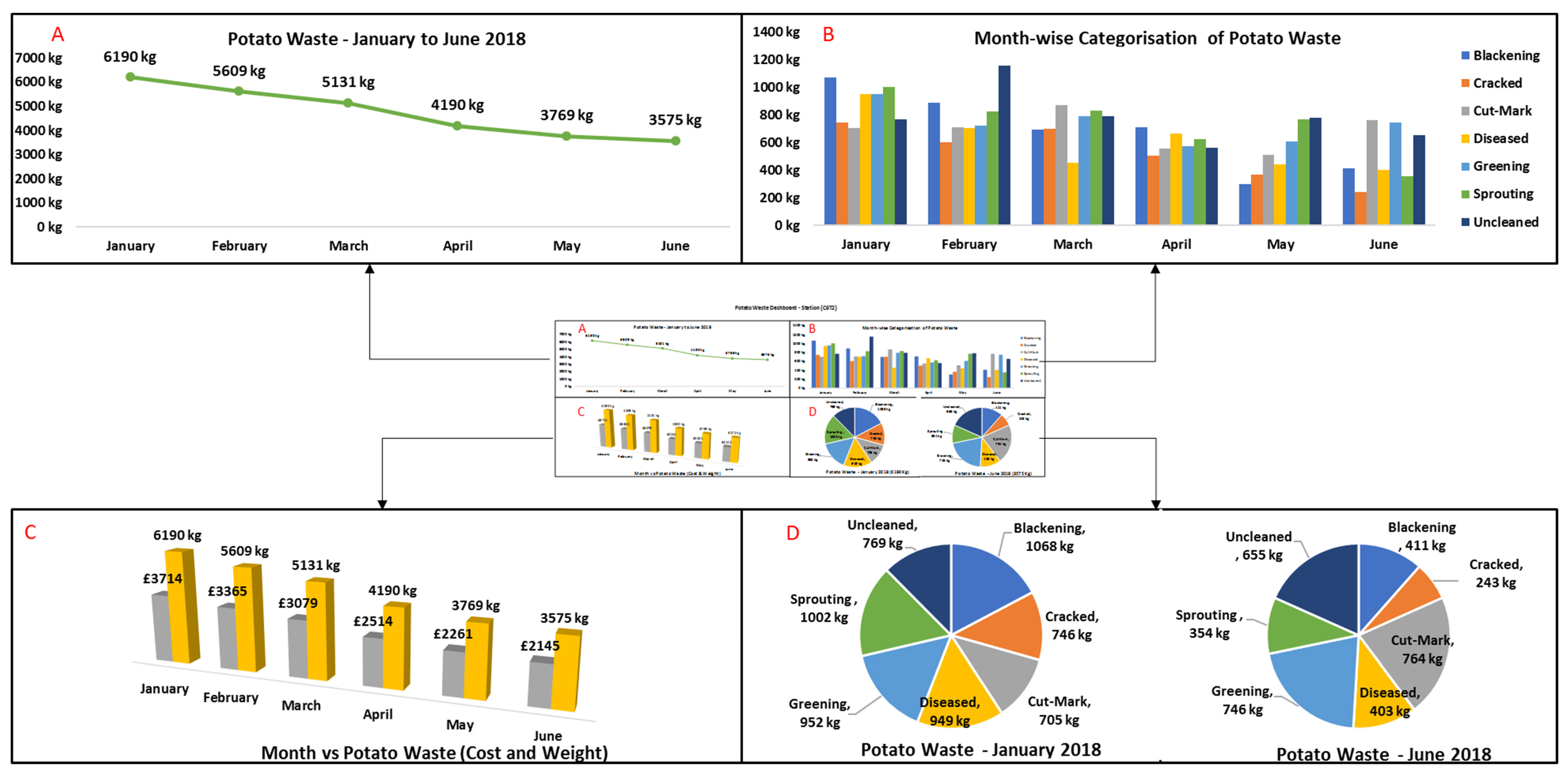

5.2. Food Waste Tracker Results

5.3. Analysis of Company Case-Study Results

6. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest


| Layer (type) | Output Shape | Parameters |
|---|---|---|
| conv2d_1 (Conv2D) | (None, 150, 150, 32) | 2432 |
| conv2d_2 (Conv2D) | (None, 150, 150, 32) | 25,632 |
| max_pooling2d_1 (MaxPooling2D) | (None, 37, 37, 32) | 0 |
| conv2d_3 (Conv2D) | (None, 37, 37, 64) | 51,264 |
| conv2d_4 (Conv2D) | (None, 37, 37, 64) | 102,464 |
| max_pooling2d_2 (MaxPooling2D) | (None, 10, 10, 64) | 0 |
| dropout_1 (Dropout) | (None, 10, 10, 64) | 0 |
| conv2d_5 (Conv2D) | (None, 10, 10, 128) | 73,856 |
| conv2d_6 (Conv2D) | (None, 10, 10, 128) | 147,584 |
| max_pooling2d_3 (MaxPooling2D) | (None, 5, 5, 128) | 0 |
| dropout_2 (Dropout) | (None, 5, 5, 128) | 0 |
| flatten_1 (Flatten) | (None, 3200) | 0 |
| dense_1 (Dense) | (None, 512) | 1,638,912 |
| dropout_3 (Dropout) | (None, 512) | 0 |
| dense_2 (Dense) | (None, 128) | 65,664 |
| dense_3 (Dense) | (None, 3) | 387 |

Total params: 2,108,195; trainable params: 2,108,195; non-trainable params: 0.
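The parameter counts in the table can be checked by hand: a Conv2D layer holds k × k × C_in × C_out weights plus C_out biases, and a Dense layer holds N_in × N_out weights plus N_out biases; pooling, dropout and flatten layers hold none. The sketch below re-derives every count under stated assumptions that are inferred from the numbers rather than given in the text: an RGB (3-channel) input, 5 × 5 kernels in conv2d_1 to conv2d_4, 3 × 3 kernels in conv2d_5 and conv2d_6, and a bias term in every layer. The helper names are hypothetical.

```python
"""Re-derive the parameter counts of the CNN summary table.

Assumptions (inferred from the counts, not stated in the paper):
RGB input (3 channels), 5x5 kernels in conv2d_1..conv2d_4,
3x3 kernels in conv2d_5..conv2d_6, and a bias term in every layer.
"""


def conv2d_params(kernel: int, in_ch: int, out_ch: int) -> int:
    # One kernel x kernel x in_ch filter per output channel, plus one bias each.
    return kernel * kernel * in_ch * out_ch + out_ch


def dense_params(in_units: int, out_units: int) -> int:
    # Full weight matrix plus one bias per output unit.
    return in_units * out_units + out_units


# (layer name, parameter count); pooling, dropout and flatten layers add none.
LAYERS = [
    ("conv2d_1", conv2d_params(5, 3, 32)),
    ("conv2d_2", conv2d_params(5, 32, 32)),
    ("conv2d_3", conv2d_params(5, 32, 64)),
    ("conv2d_4", conv2d_params(5, 64, 64)),
    ("conv2d_5", conv2d_params(3, 64, 128)),
    ("conv2d_6", conv2d_params(3, 128, 128)),
    # flatten_1 turns the 5 x 5 x 128 feature map into 3200 inputs.
    ("dense_1", dense_params(5 * 5 * 128, 512)),
    ("dense_2", dense_params(512, 128)),
    ("dense_3", dense_params(128, 3)),
]

if __name__ == "__main__":
    for name, count in LAYERS:
        print(f"{name:10s} {count:>10,d}")
    total = sum(count for _, count in LAYERS)
    print(f"{'total':10s} {total:>10,d}")
```

Every per-layer count matches the table, and the total comes to 2,108,195. Almost 78% of the parameters sit in dense_1, which is typical when a flattened feature map feeds a wide fully connected layer.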

| Batch | Sample | Terminal | Operator | Date | Time | Weight (g) | Image | Vision Status | Reason |
|---|---|---|---|---|---|---|---|---|---|
| 35,882 | 13,412 | C6T2 | Packing | 01/01/18 | 02:13:37 | 40.4 |  | FAIL | Diseased |
| 35,882 | 13,713 | C6T2 | Packing | 01/01/18 | 02:14:23 | 39.7 |  | FAIL | Blackening |
| 35,882 | 13,847 | C6T2 | Packing | 01/01/18 | 02:14:59 | 39.9 |  | FAIL | Cracked |
| 35,882 | 14,517 | C6T2 | Packing | 01/01/18 | 02:17:06 | 40.3 |  | FAIL | Sprouting |
| 35,882 | 14,729 | C6T2 | Packing | 01/01/18 | 02:18:09 | 41.6 |  | FAIL | Greening |
| 35,882 | 15,007 | C6T2 | Packing | 01/01/18 | 02:19:12 | 40.1 |  | FAIL | Uncleaned |
| 35,882 | 15,089 | C6T2 | Packing | 01/01/18 | 02:19:38 | 40.8 |  | FAIL | Cut-mark |
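Each tracker record pairs a recorded weight with the vision system's verdict, so wasted mass can be totalled per defect type. The sketch below does this for the seven records in the table. The class and function names are hypothetical, the date, time, operator and image columns are omitted for brevity, and treating "PASS" as the other possible status is an assumption (only "FAIL" appears in the table).

```python
"""Total the rejected potato weight per defect reason (hypothetical sketch)."""
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class TrackerRecord:
    batch: int
    sample: int
    terminal: str
    weight_g: float
    vision_status: str  # "FAIL" in the table; "PASS" is assumed as the alternative
    reason: str         # defect label reported by the vision check


# The seven records from the table (date, time, operator and image omitted).
RECORDS = [
    TrackerRecord(35882, 13412, "C6T2", 40.4, "FAIL", "Diseased"),
    TrackerRecord(35882, 13713, "C6T2", 39.7, "FAIL", "Blackening"),
    TrackerRecord(35882, 13847, "C6T2", 39.9, "FAIL", "Cracked"),
    TrackerRecord(35882, 14517, "C6T2", 40.3, "FAIL", "Sprouting"),
    TrackerRecord(35882, 14729, "C6T2", 41.6, "FAIL", "Greening"),
    TrackerRecord(35882, 15007, "C6T2", 40.1, "FAIL", "Uncleaned"),
    TrackerRecord(35882, 15089, "C6T2", 40.8, "FAIL", "Cut-mark"),
]


def waste_by_reason(records):
    """Sum the weight of failed samples, grouped by defect reason."""
    totals = defaultdict(float)
    for record in records:
        if record.vision_status == "FAIL":
            totals[record.reason] += record.weight_g
    return dict(totals)


if __name__ == "__main__":
    by_reason = waste_by_reason(RECORDS)
    for reason, grams in sorted(by_reason.items()):
        print(f"{reason:12s} {grams:6.1f} g")
    print(f"{'total':12s} {sum(by_reason.values()):6.1f} g")
```

For these seven samples the rejected mass totals 282.8 g. Over a full shift the same grouping would show which defect accounts for most of the wasted weight.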

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Jagtap, S.; Bhatt, C.; Thik, J.; Rahimifard, S. Monitoring Potato Waste in Food Manufacturing Using Image Processing and Internet of Things Approach. Sustainability 2019, 11, 3173. https://doi.org/10.3390/su11113173
