Extractive Article Summarization Using Integrated TextRank and BM25+ Algorithm

Abstract

1. Introduction

2. Literature Review

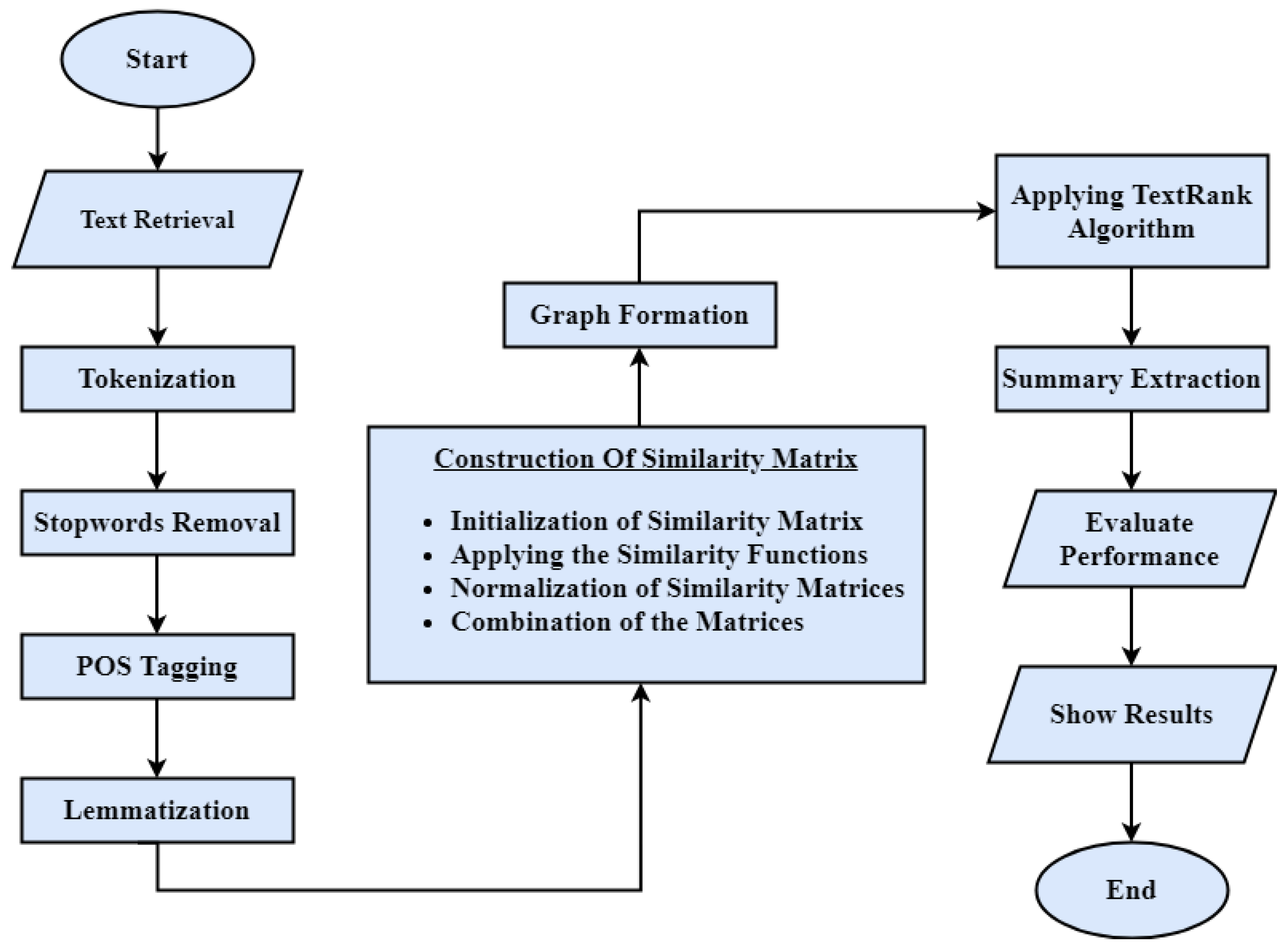

3. Materials and Methods

- A

- Data Collection

- B

- Dataset Description

4. Proposed Methodology

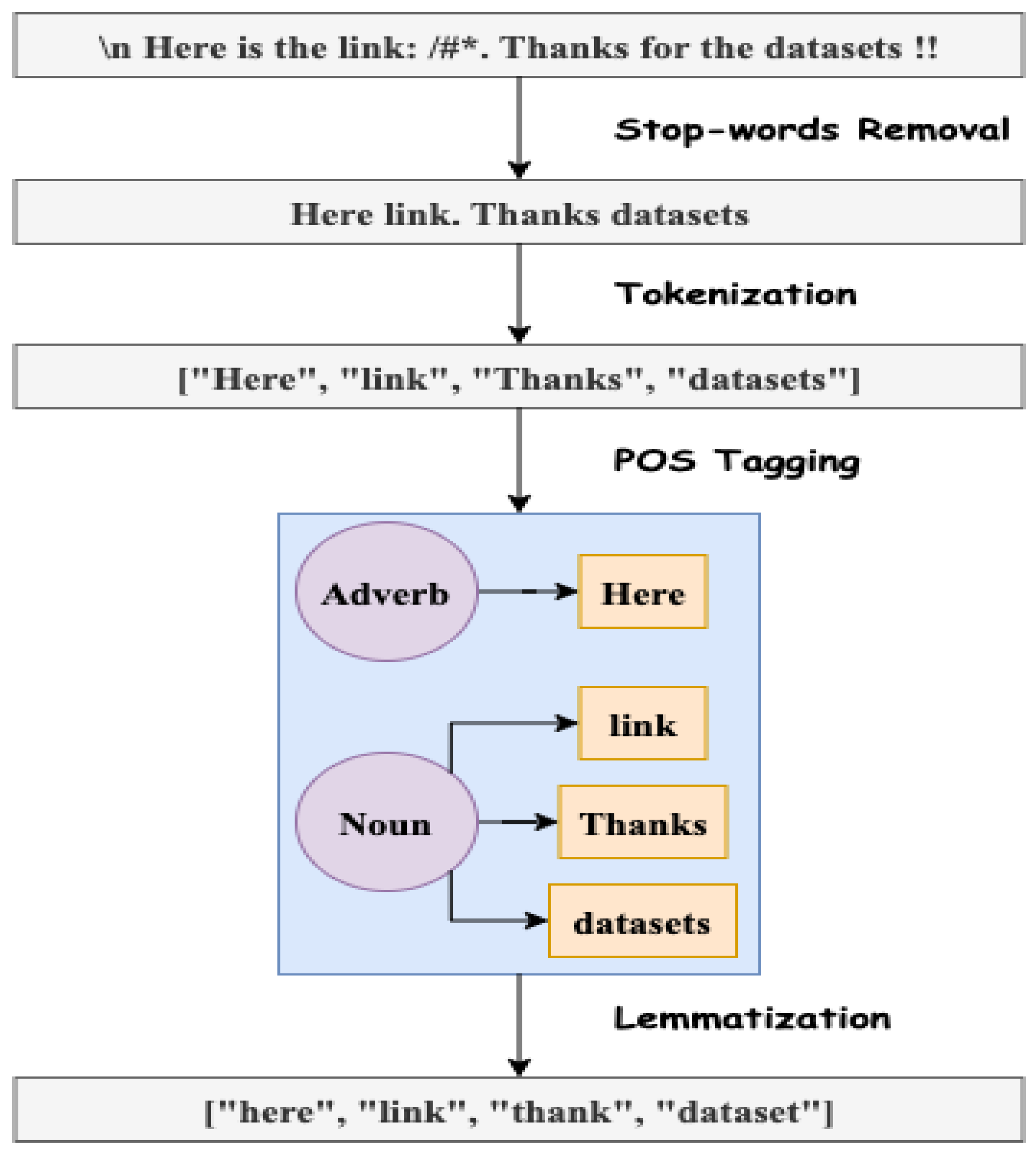

4.1. Text Pre-Processing

4.1.1. Sentence Tokenization

4.1.2. Stopwords Removal

4.1.3. POS Tagging

4.1.4. Lemmatization

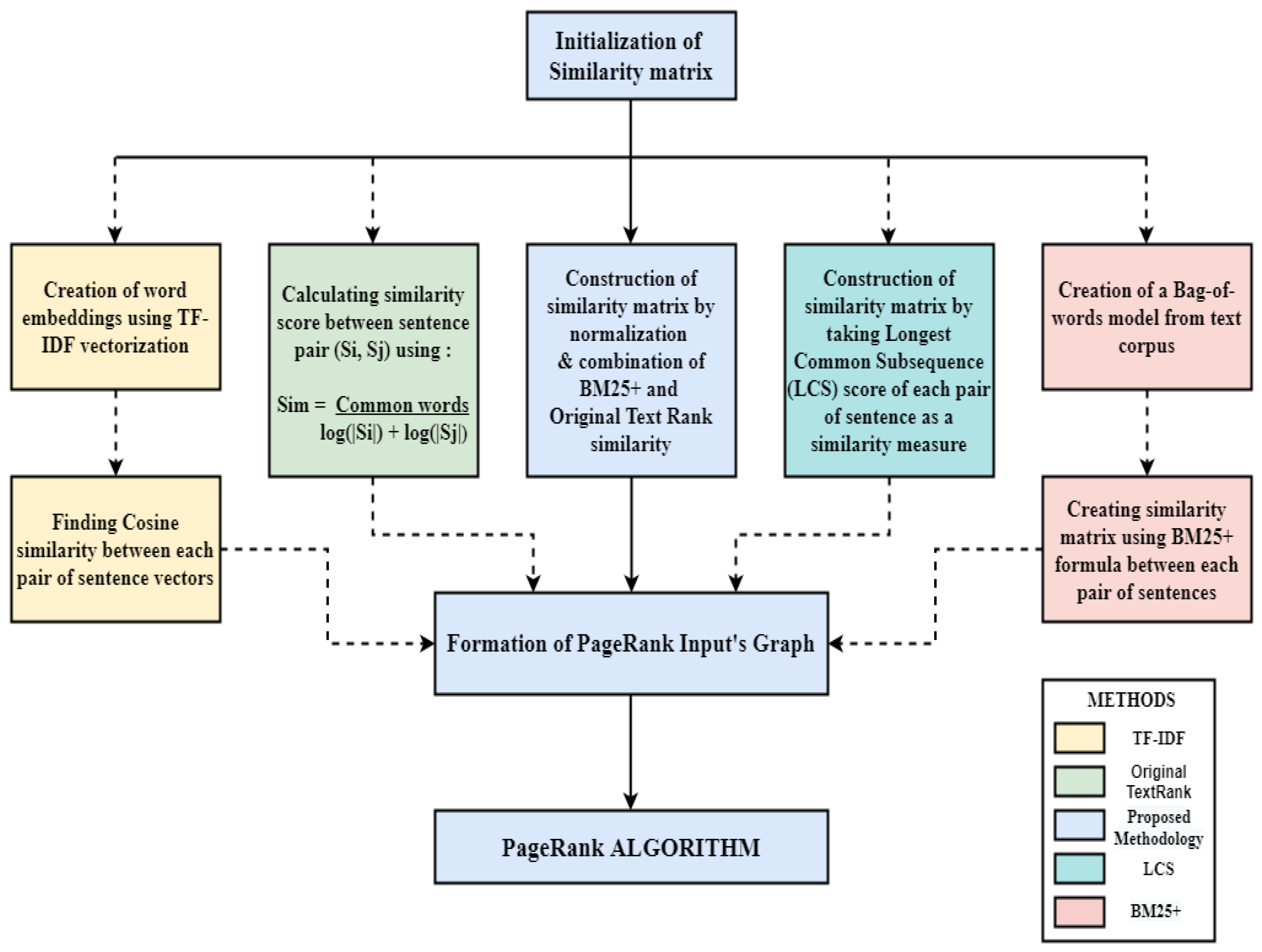

4.2. Formation of the Similarity Matrix

4.2.1. Initialization of Similarity Matrix

4.2.2. Determining the Sentence Similarity Scores Using a Similarity Function

- BM25+ Similarity Function

- Original TextRank Similarity Function.

4.2.3. Normalization and Combination

4.3. Summary Extraction Using TextRank

4.3.1. Graph Formation

4.3.2. PageRank Algorithm

4.3.3. TextRank Algorithm

4.3.4. Summary Extraction

4.4. Notable TextRank Similarity Matrix Models

4.4.1. Original TextRank Similarity Function

4.4.2. TF-IDF Cosine Similarity Function

4.4.3. TF-ISF Cosine Similarity Function

4.4.4. LCS Similarity Function

4.4.5. BM25+ Similarity Function

4.4.6. Karcı Summarization

4.4.7. Word Mover’s Distance

5. Results

- A

- Evaluation Metrics

- B

- Comparison with state-of-the-art methods

- C

- Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Vlainic, M.; Preradovic, N.M. A comparative study of automatic text summarization system performance. In Proceedings of the 7th European Computing Conference (ECC 13), Dubrovnik, Croatie, 25–27 June 2013. [Google Scholar]

- Lloret, E.; Palomar, M. Text summarisation in progress: A literature review. Artif. Intell. Rev. 2011, 37, 1–41. [Google Scholar] [CrossRef]

- Gambhir, M.; Gupta, V. Recent automatic text summarization techniques: A survey. Artif. Intell. Rev. 2016, 47, 1–66. [Google Scholar] [CrossRef]

- Gong, Y.; Liu, X. Creating generic text summaries. In Proceedings of the Sixth International Conference on Document Analysis and Recognition, Seattle, WA, USA, 10–13 September 2001. [Google Scholar]

- Chopra, S.; Auli, M.; Rush, A.M. Abstractive sentence summarization with attentive recurrent neural networks. In Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, San Diego, CA, USA, 12–17 June 2016. [Google Scholar]

- Bhargava, R.; Sharma, Y.; Sharma, G. Atssi: Abstractive text summarization using sentiment infusion. Procedia Comput. Sci. 2016, 89, 404–411. [Google Scholar] [CrossRef]

- Kan, M.-Y.; McKeown, K.R.; Klavans, J.L. Applying natural language generation to indicative summarization. arXiv 2001, arXiv:cs/0107019. [Google Scholar]

- García-Hernández, R.A.; Montiel, R.; Ledeneva, Y.; Rendón, E.; Gelbukh, A.; Cruz, R. Text Summarization by Sentence Extraction Using Unsupervised Learning. In Proceedings of the Mexican International Conference on Artificial Intelligence, Atizapán de Zaragoza, Mexico, 27–31 October 2008; Springer: Berlin/Heidelberg, Germany, 2008. [Google Scholar]

- Mihalcea, R.; Tarau, P. Textrank: Bringing order into text. In Proceedings of the 2004 Conference on Empirical Methods in Natural Language Processing, Barcelona, Spain, 25–26 July 2004. [Google Scholar]

- Zha, P.; Xu, X.; Zuo, M. An Efficient Improved Strategy for the PageRank Algorithm. In Proceedings of the 2011 International Conference on Management and Service Science, Bangkok, Thailand, 7–9 May 2011. [Google Scholar]

- Moratanch, N.; Chitrakala, S. A survey on extractive text summarization. In Proceedings of the 2017 International Conference on Computer, Communication and Signal Processing (ICCCSP), Chennai, India, 10–11 January 2017. [Google Scholar]

- Ghodratnama, S.; Beheshti, A.; Zakershahrak, M.; Sobhanmanesh, F. Extractive document summarization based on dynamic feature space mapping. IEEE Access 2020, 8, 139084–139095. [Google Scholar] [CrossRef]

- Luhn, H.P. A statistical approach to mechanized encoding and searching of literary information. IBM J. Res. Dev. 1957, 1, 309–317. [Google Scholar] [CrossRef]

- Onwutalobi, A.C. Using Lexical Chains for Efficient Text Summarization. Available online: https://ssrn.com/abstract=3378072 (accessed on 16 May 2009).

- Neto, J.L.; Freitas, A.A.; Kaestner, C.A.A. Automatic text summarization using a machine learning approach. In Proceedings of the Brazilian Symposium on Artificial Intelligence, Porto de Galinhas, Recife, Brazil, 11–14 November 2002; Springer: Berlin/Heidelberg, Germany, 2002. [Google Scholar]

- Jain, H.J.; Bewoor, M.S.; Patil, S.H. Context sensitive text summarization using k means clustering algorithm. Int. J. Soft Comput. Eng 2012, 2, 301–304. [Google Scholar]

- El-Kilany, A.; Saleh, I. Unsupervised document summarization using clusters of dependency graph nodes. In Proceedings of the 2012 12th International Conference on Intelligent Systems Design and Applications (ISDA), Kochi, India, 27–29 November 2012. [Google Scholar]

- Sharaff, A.; Shrawgi, H.; Arora, P.; Verma, A. Document Summarization by Agglomerative nested clustering approach. In Proceedings of the 2016 IEEE International Conference on Advances in Electronics, Communication and Computer Technology (ICAECCT), New York, NY, USA, 2–3 December 2016. [Google Scholar]

- Nallapati, R.; Zhai, F.; Zhou, B. Summarunner: A recurrent neural network based sequence model for extractive summarization of documents. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017. [Google Scholar]

- Brin, S.; Page, L. The anatomy of a large-scale hypertextual web search engine. Comput. Netw. ISDN Syst. 1998, 30, 107–117. [Google Scholar] [CrossRef]

- Erkan, G.; Radev, D.R. Lexrank: Graph-based lexical centrality as salience in text summarization. J. Artif. Intell. Res. 2004, 22, 457–479. [Google Scholar] [CrossRef]

- Wan, X.; Xiao, J. Towards a unified approach based on affinity graph to various multi-document summarizations. In Proceedings of the International Conference on Theory and Practice of Digital Libraries, Budapest, Hungary, 16–21 September 2007; Springer: Berlin/Heidelberg, Germany, 2007. [Google Scholar]

- Baralis, E.; Cagliero, L.; Mahoto, N.; Fiori, A. GRAPHSUM: Discovering correlations among multiple terms for graph-based summarization. Inf. Sci. 2013, 249, 96–109. [Google Scholar] [CrossRef]

- Ravinuthala, V.V.M.K.; Chinnam, S.R. A keyword extraction approach for single document extractive summarization based on topic centrality. Int. J. Intell. Eng. Syst. 2017, 10, 5. [Google Scholar] [CrossRef]

- Binwahlan, M.S.; Salim, N.; Suanmali, L. Swarm based text summarization. In Proceedings of the 2009 International Association of Computer Science and Information Technology-Spring Conference, Singapore, 17–20 April 2009. [Google Scholar]

- Babar, S.A.; Patil, P.D. Improving performance of text summarization. Procedia Comput. Sci. 2015, 46, 354–363. [Google Scholar] [CrossRef]

- Wongchaisuwat, P. Automatic keyword extraction using textrank. In Proceedings of the 2019 IEEE 6th International Conference on Industrial Engineering and Applications (ICIEA), Tokyo, Japan, 26–29 April 2019. [Google Scholar]

- Mitchell, R. Web Scraping with Python: Collecting More Data from the Modern Web; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2018. [Google Scholar]

- Nenkova, A. Automatic text summarization of newswire: Lessons learned from the document understanding conference. In Proceedings of the Twentieth National Conference on Artificial Intelligence and the Seventeenth Innovative Applications of Artificial Intelligence Conference, Pittsburgh, PA, USA, 9–13 July 2005. [Google Scholar]

- Anandarajan, M.; Hill, C.; Nolan, T. Text preprocessing. Practical Text Analytics; Springer: Cham, Switzerland, 2019; pp. 45–59. [Google Scholar]

- Loper, E.; Bird, S. Nltk: The natural language toolkit. arXiv 2002, arXiv:cs/0205028. [Google Scholar]

- Mikolov, T.; Chen, K.; Corrado, G.; Dean, J. Efficient estimation of word representations in vector space. arXiv 2013, arXiv:1301.3781. [Google Scholar]

- Oliphant, T.E. A Guide to NumPy; Trelgol Publishing: Austin, TX, USA, 2006; Volume 1. [Google Scholar]

- Robertson, S.; Zaragoza, H. The probabilistic relevance framework: BM25 and beyond. Found. Trends® Inf. Retr. 2009, 3, 333–389. [Google Scholar] [CrossRef]

- Rehurek, R.; Sojka, P. Software framework for topic modelling with large corpora. In Proceedings of the LREC 2010 workshop on new challenges for NLP frameworks, Valletta, Malta, 22 May 2010. [Google Scholar]

- Zhang, Y.; Jin, R.; Zhou, Z.H. Understanding bag-of-words model: A statistical framework. Int. J. Mach. Learn. Cybern. 2010, 1, 43–52. [Google Scholar] [CrossRef]

- Barrios, F.; López, F.; Argerich, L.; Wachenchauzer, R. Variations of the similarity function of textrank for automated summarization. arXiv 2016, arXiv:1602.03606. [Google Scholar]

- Lee, D.; Verma, R.; Das, A.; Mukherjee, A. Experiments in Extractive Summarization: Integer Linear Programming, Term/Sentence Scoring, and Title-driven Models. arXiv 2020, arXiv:2008.00140. [Google Scholar]

- Hagberg, A.; Swart, P.; S Chult, D. Exploring network structure, dynamics, and function using NetworkX. In Proceedings of the 7th Python in Science Conference, Pasadena, CA, USA, 19–24 August 2008; No. LA-UR-08-05495; LA-UR-08-5495. Los Alamos National Lab.(LANL): Los Alamos, NM, USA, 2008. [Google Scholar]

- Page, L.; Brin, S.; Motwani, R.; Winograd, T. The PageRank Citation Ranking: Bringing Order to the Web; Stanford InfoLab: Stanford, CA, USA, 1999. [Google Scholar]

- Christian, H.; Agus, M.P.; Suhartono, D. Single document automatic text summarization using term frequency-inverse document frequency (TF-IDF). ComTech Comput. Math. Eng. Appl. 2016, 7, 285–294. [Google Scholar] [CrossRef]

- Meena, Y.K.; Gopalani, D. Feature priority based sentence filtering method for extractive automatic text summarization. Procedia Comput. Sci. 2015, 48, 728–734. [Google Scholar] [CrossRef]

- Hark, C.; Karcı, A. Karcı summarization: A simple and effective approach for automatic text summarization using Karcı entropy. Inf. Process. Manag. 2019, 57, 102187. [Google Scholar] [CrossRef]

- Wang, H.-C.; Hsiao, W.-C.; Chang, S.-H. Automatic paper writing based on a RNN and the TextRank algorithm. Appl. Soft Comput. 2020, 97, 106767. [Google Scholar] [CrossRef]

- Lin, C.-Y. Rouge: A package for automatic evaluation of summaries. In Proceedings of the Workshop on Text Summarization Branches Out, Barcelona, Spain, 25–26 July 2004; pp. 74–81. [Google Scholar]

- El-Kassas, W.S.; Salama, C.R.; Rafea, A.A.; Mohamed, H.K. EdgeSumm: Graph-based framework for automatic text summarization. Inf. Process. Manag. 2020, 57, 102264. [Google Scholar] [CrossRef]

- Mallick, C.; Das, A.K.; Dutta, M.; Das, A.K.; Sarkar, A. Graph-based text summarization using modified TextRank. In Soft Computing in Data Analytics; Springer: Singapore, 2019; pp. 137–146. [Google Scholar]

- Liu, Y. Fine-tune BERT for extractive summarization. arXiv 2019, arXiv:1903.10318. [Google Scholar]

- Al-Sabahi, K.; Zuping, Z.; Nadher, M. A hierarchical structured self-attentive model for extractive document summarization (HSSAS). IEEE Access 2018, 6, 24205–24212. [Google Scholar] [CrossRef]

- Jadhav, A.; Rajan, V. Extractive summarization with swap-net: Sentences and words from alternating pointer networks. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics, Melbourne, Australia, 15–20 July 2018; Volume 1. [Google Scholar]

- Ge, N.; Hale, J.; Charniak, E. A statistical approach to anaphora resolution. In Proceedings of the Sixth Workshop on Very Large Corpora, Montreal, QC, Canada, 15–16 August 1998. [Google Scholar]

- Mitkov, R. Multilingual anaphora resolution. Mach. Transl. 1999, 14, 281–299. [Google Scholar] [CrossRef]

| Dataset Parameters | Value |

|---|---|

| Number of articles | 75 |

| Categories | 25 |

| No. of sentences per article (average) | 89.5 |

| No. of sentences per article (maximum) | 229 |

| No. of sentences per article (minimum) | 30 |

| Summary length (%) | 20 |

| Model | ROUGE-1 | ROUGE-2 | ROUGE-L | |

|---|---|---|---|---|

| Original TextRank | ||||

| Recall | 0.757743 | 0.652379 | 0.726952 | |

| Precision | 0.739719 | 0.637702 | 0.714006 | |

| F-score | 0.744394 | 0.641341 | 0.716935 | |

| TF-IDF Cosine Similarity | ||||

| Recall | 0.670118 | 0.546507 | 0.623722 | |

| Precision | 0.733197 | 0.598681 | 0.696064 | |

| F-score | 0.695501 | 0.567516 | 0.653939 | |

| LCS Similarity Function | ||||

| Recall | 0.733842 | 0.557659 | 0.696512 | |

| Precision | 0.579289 | 0.442102 | 0.524561 | |

| F-score | 0.644777 | 0.491148 | 0.596118 | |

| BM25+ Similarity Function | ||||

| Recall | 0.804444 | 0.699020 | 0.779963 | |

| Precision | 0.709480 | 0.618667 | 0.682280 | |

| F-score | 0.750457 | 0.653352 | 0.724864 | |

| Proposed Approach | ||||

| Recall | 0.776544 | 0.674904 | 0.748617 | |

| Precision | 0.739773 | 0.643668 | 0.715275 | |

| F-score | 0.753685 | 0.655442 | 0.728332 | |

| Source | Author | Methodology | F-Score * (in %) | ||

|---|---|---|---|---|---|

| ROUGE-1 | ROUGE-2 | ROUGE-L | |||

| [19] | Ramesh Nallapati, et al. | SummaRuNNer (two-layer RNN-based sequence classifier) | 46.60 | 23.10 | 43.03 |

| [43] | Cengiz Harka, Ali Karcı | Karcı entropy-based summarization | 49.41 | 22.47 | 46.13 |

| [46] | Wafaa S. El-Kassas, et al. | EdgeSumm (unsupervised graph-based framework) | 53.80 | 28.58 | 49.79 |

| [47] | Chirantana Mallick, et al. | Modified TextRank (inverse sentence frequency-cosine similarity) | 73.53 | 67.33 | 67.65 |

| [48] | Yang Liu | BERT-SUM (BERT with interval segment embeddings and inter-sentence transformer) | 43.25 | 20.24 | 39.63 |

| [49] | Kamal Al-Sabahi, et al. | HSSAS (hierarchical structured LSTM with self-attention mechanism) | 52.10 | 24.50 | 48.80 |

| [50] | Aishwarya Jadhav, et al. | SWAP-NET (Seq2Seq Model with switching mechanism) | 41.60 | 18.30 | 37.70 |

| Proposed Methodology | Integrated TextRank | 75.37 | 65.54 | 72.83 | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gulati, V.; Kumar, D.; Popescu, D.E.; Hemanth, J.D. Extractive Article Summarization Using Integrated TextRank and BM25+ Algorithm. Electronics 2023, 12, 372. https://doi.org/10.3390/electronics12020372

Gulati V, Kumar D, Popescu DE, Hemanth JD. Extractive Article Summarization Using Integrated TextRank and BM25+ Algorithm. Electronics. 2023; 12(2):372. https://doi.org/10.3390/electronics12020372

Chicago/Turabian StyleGulati, Vaibhav, Deepika Kumar, Daniela Elena Popescu, and Jude D. Hemanth. 2023. "Extractive Article Summarization Using Integrated TextRank and BM25+ Algorithm" Electronics 12, no. 2: 372. https://doi.org/10.3390/electronics12020372

APA StyleGulati, V., Kumar, D., Popescu, D. E., & Hemanth, J. D. (2023). Extractive Article Summarization Using Integrated TextRank and BM25+ Algorithm. Electronics, 12(2), 372. https://doi.org/10.3390/electronics12020372