1. Introduction

Easier access to multimodal content, i.e., digital images and audiovisual data, has boosted the development of applications in the area of computer vision (CV). Well-known CV applications include object identification and tracking [1] and the computer-aided analysis of several medical imaging techniques [2]. CV techniques help professionals analyze medical images quickly and correctly, and chest X-ray (CXR) examination is one such application [3]. Chest X-ray imaging is the most frequently used modality worldwide for diagnosing various respiratory anomalies, including pneumonia, COVID-19, bronchiectasis, and lung lesions [4]. Because the modality is simple and practical, many important clinical examinations are performed with it every day [5]. However, manual review of these samples depends on the availability of subject-matter experts. Manual inspection of CXR studies is also a time-consuming, challenging task with a significant likelihood of inaccurate findings. In contrast, a computerized recognition model can speed up the procedure while also improving its effectiveness.

Around 65 million individuals worldwide are affected by one or more types of chest disease, and 3 million people die each year as a result of such illnesses. Early detection of these abnormalities can therefore save lives and spare patients invasive surgical treatment [6]. Consequently, researchers have concentrated their efforts on proposing trustworthy computerized alternatives that address the issues with manual CXR inspection. Initially, CXR anomalies were classified using hand-crafted feature-extraction techniques. These procedures are straightforward and effective with small amounts of data. However, hand-crafted feature extraction takes a long time and requires considerable domain knowledge to produce correct results. In addition, such algorithms always face a trade-off between classification accuracy and computational cost: larger keypoint sets improve their ability to recognize objects, but at the expense of extra processing, whereas smaller keypoint sets make them more efficient but leave out important visual information, which lowers classification precision. These factors prevent such approaches from being effective for CXR evaluation [7].

AI-based solutions for the automated identification of medical problems are currently progressing at an astounding pace. In medicine, AI aids with patient management, diagnosis, and treatment, which relieves physicians' workload. Such frameworks also assist the administration of healthcare units by providing automated management solutions [8]. The scientific community has become progressively more engaged in using deep learning (DL) methods for digital image processing, including CXR analysis. A variety of well-studied DL models, such as convolutional neural networks (CNNs) [9] and recurrent neural networks (RNNs) [10], are used for the segmentation and classification tasks involved in many medical problems. Because most tasks in the health sector involve the classification and segmentation of diseases, deep learning is becoming an extremely powerful solution in this area. DL techniques are well suited to analyzing medical images, since they can compute a more discriminative set of image features without the need for subject-matter specialists. CNN architectures are inspired by the way in which the human brain perceives and remembers different objects. Frameworks such as VGG [11], GoogleNet [12], ResNet [13], XceptionNet [14], DenseNet [15], and EfficientNet [16] are being thoroughly explored in the field of medical image analysis and can deliver consistent results with relatively little computation. The key rationale for employing DL-based algorithms in the computer-aided diagnosis of medical images is that they can extract the essential information from the input and can handle challenging sample distortions, e.g., luminance and chrominance changes, clutter, blurring, and size changes.

Extensive research has been carried out on the recognition of chest abnormalities, and this section analyzes existing work in detail. One method was discussed in [3], in which the DL models VGG-16 and XceptionNet were employed to locate pneumonia-affected areas in images. In the first step, data augmentation with operations such as zooming, rotation, and flipping was performed to increase the diversity of the database. In the next phase, the DL approaches were applied to compute a set of deep features. The work achieved its highest results with the XceptionNet model; however, its classification performance requires further improvement. Bhandary et al. [17] also introduced a model to recognize pneumonia-affected areas in lung samples by employing DL approaches. Initially, a customized AlexNet model was introduced for pneumonia detection. In the second step, an ensemble approach was used to combine handcrafted features computed with the Haralick and Hu algorithms [18] with the deep features calculated by the first model. The combined feature set was used to train support vector machine (SVM) and softmax classifiers. The work in [17] achieved a classification accuracy of 97.27% on CT samples from the LIDC-IDRI repository. In [19], the researchers used various image dimensions and transfer learning to assess the effectiveness of several pre-trained deep networks, including GoogleNet, InceptionNet, and ResNet. Additionally, the properties learned by these models were analyzed using network visualization. According to the findings, shallower networks such as GoogleNet perform better than deeper networks at differentiating between healthy and diseased chest X-rays. Rajpurkar et al. [20] developed a DL-based approach called CheXNet to diagnose various chest disorders. The network had 121 layers with batch normalization and dense connections. The CheXNet classifier, pre-trained on ImageNet samples, was fine-tuned on the ChestX-ray14 database. This method achieved an F1 score of 0.435 and an AUC of 0.801. The researchers in [21] put forth a DL approach for identifying coronavirus disease among a variety of other chest ailments using chest X-rays. To address skewed class distributions, the authors used a GAN-based method to produce synthetic images. They analyzed the model's behavior under a variety of scenarios, including data augmentation, transfer learning, and imbalanced class samples. The outcomes demonstrated that, with balanced data, the ResNet model achieved a high performance of 87%. Ho et al. [22] developed a two-phase method to identify 14 distinct disorders from chest X-ray samples. First, the anomalous area was located using activation weights taken from the final convolutional layer of a trained DenseNet121 network. Next, classification was carried out by fusing handcrafted and deep features. The fused features were classified using a number of supervised learning algorithms, including SVM, KNN, AdaBoost, and others. The experiments revealed that the extreme learning machine (ELM) predictor outperformed the other learners, with an accuracy of 84.62%. Using chest X-ray scans, the researchers in [23] created a CNN with three convolutional layers to identify 12 distinct disorders. They compared its performance with that of a backpropagation neural network and a competitive neural network. The outcomes showed that the strong feature learning of the proposed CNN enabled it to achieve a high classification rate and greater generalization capability; however, it required more convergence iterations and a somewhat longer computation time.
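The augmentation step described for [3] (zooming, rotation, and flipping) can be sketched as follows. This is a minimal NumPy illustration under our own assumptions, not the implementation used in [3]: rotation is restricted to multiples of 90 degrees to keep the code dependency-free, zoom is a center crop resized back with nearest-neighbour sampling, and all function names are hypothetical.

```python
import numpy as np


def horizontal_flip(img: np.ndarray) -> np.ndarray:
    """Mirror the image left to right."""
    return img[:, ::-1]


def rotate_90(img: np.ndarray, k: int = 1) -> np.ndarray:
    """Rotate by k * 90 degrees counter-clockwise (simplified stand-in
    for the arbitrary-angle rotation mentioned in the text)."""
    return np.rot90(img, k)


def center_zoom(img: np.ndarray, factor: float) -> np.ndarray:
    """Zoom in by cropping the central 1/factor region and resizing it back
    to the original size with nearest-neighbour sampling."""
    if factor < 1.0:
        raise ValueError("factor must be >= 1.0 (zoom-in only)")
    h, w = img.shape[:2]
    ch = max(1, int(round(h / factor)))
    cw = max(1, int(round(w / factor)))
    top, left = (h - ch) // 2, (w - cw) // 2
    crop = img[top:top + ch, left:left + cw]
    # Map every output pixel back to its nearest source pixel in the crop.
    rows = np.arange(h) * ch // h
    cols = np.arange(w) * cw // w
    return crop[rows[:, None], cols[None, :]]


def augment(img: np.ndarray, rng: np.random.Generator) -> list:
    """Return the original image plus one flipped, one rotated and one zoomed copy."""
    return [
        img,
        horizontal_flip(img),
        rotate_90(img, k=int(rng.integers(1, 4))),
        center_zoom(img, float(rng.uniform(1.0, 1.3))),
    ]
```

Restricting rotation to 90-degree steps avoids interpolation; the small arbitrary angles that are more realistic for radiographs would require an interpolating resampler.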

Another work [24] created a multi-scale attention network to improve the efficiency of identifying multiple classes of chest disease. The network used DenseNet-169 as its backbone and employed a multi-stage attention block that combined local features obtained at various scales with global features. To address the problem of imbalanced image samples, a novel loss combining perceptual and multi-label balancing terms was also devised. The proposed method achieved an AUC of 85 on the CheXpert database and 81.50 on the ChestX-ray14 repository. Ma et al. [25] proposed an end-to-end cross-attention framework to overcome class imbalance in multi-label chest X-ray disease classification. To obtain improved feature representations via mutual attention, the model computed features with DenseNet-121 and DenseNet-169 and used a loss function combining attention and multi-label balancing terms. On the ChestX-ray14 samples, the model achieved an improved AUC of 81.70. To increase the precision of multi-class respiratory disease detection from chest radiographs, Wang et al. [26] introduced the ChestNet framework, which consisted of two sub-networks: a classification network and an attention network. A pre-trained ResNet152 model was used to extract the features on which the classification network was built, and the attention network used these features to examine the relationship between class labels and abnormal regions. On the ChestX-ray14 database, the framework achieved better classification performance. Ouyang et al. [27] suggested a strategy that performs multi-label chest disease classification and abnormality localization concurrently. The approach included both activation-based and gradient-based attention mechanisms (AMs), organized as a hierarchical visual AM with three levels: foreground, positive/negative, and abnormal attention. Because only a few bounding-box annotations of diseased areas were available, the framework was trained with a weakly supervised learning approach. The average AUC of this method on the ChestX-ray14 database was 81.90.
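Several of the works above report a single average AUC over the disease labels of ChestX-ray14 (for example, 81.90 for [27], i.e., 0.819 on a 0 to 1 scale). A minimal sketch of such a macro-averaged metric, assuming one binary label and one predicted score per disease and per image, computes each per-disease AUC as the probability that a positive image outscores a negative one (the Mann-Whitney formulation). The function names are hypothetical, and the quadratic loop is chosen for clarity rather than speed.

```python
from typing import List, Sequence, Tuple


def binary_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """ROC AUC as the probability that a randomly chosen positive sample
    receives a higher score than a randomly chosen negative one (ties count 0.5).
    This equals the Mann-Whitney U statistic divided by n_pos * n_neg."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC is undefined when a label has only one class")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def mean_auc(label_rows: Sequence[Sequence[int]],
             score_rows: Sequence[Sequence[float]]) -> Tuple[float, List[float]]:
    """Macro-average of per-disease AUCs; rows are images, columns are diseases."""
    n_diseases = len(label_rows[0])
    per_disease = []
    for j in range(n_diseases):
        y = [row[j] for row in label_rows]
        s = [row[j] for row in score_rows]
        per_disease.append(binary_auc(y, s))
    return sum(per_disease) / n_diseases, per_disease
```

Averaging per-disease AUCs weights every disease equally, so a rare label influences the reported figure as much as a common one.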

Pan et al. [28] employed the well-known DL networks DenseNet and MobileNet-V2 to differentiate diseased chest X-ray samples from normal images, focusing on 14 types of chest disease. The approach was trained and tested on two different datasets to assess the model's cross-corpus behavior, and the work in [28] attained its best results with the MobileNet-V2 model. Another technique [29] focused on classifying both coronavirus and other chest diseases from X-ray images using DL. In the first phase, samples were categorized as healthy, coronavirus-affected, or other; the latter were then divided into 14 classes of chest abnormalities. The work evaluated various deep networks, and ResNet-50 attained the best results in recognizing COVID-19 and other chest-disease samples. Alqudah et al. [30] developed a technique for identifying both bacterial and viral pneumonia from chest images. First, a customized CNN, pre-trained on other medical samples, was tailored to learn features specific to pneumonia. Next, classification was carried out with various predictors, including KNN, SVM, and softmax. The findings demonstrated that the SVM significantly improved results compared to the other classifiers, but its effectiveness was assessed on a small sample set. An end-to-end training method for multi-class respiratory disease classification was described in [31]. A preprocessing step using cropping and resizing was first applied to eliminate irrelevant information from the samples. The pre-trained EfficientNetV2 network was then applied to construct a discriminative feature set and classify the images into the appropriate categories. This strategy performed well for three-class classification, but the model suffers from over-fitting and its performance degrades as the number of classes increases. Baltruschat et al. [32] examined the effectiveness of various ResNet-based methods for multi-label chest X-ray classification. For better classification, they extended the design by feeding non-image information, including the patient's age, gender, and image acquisition type, into the network. According to the findings, the 38-layer ResNet integrating metadata outperformed the other networks, with an AUC of 72.70. A DL approach for multi-class identification of chest diseases from X-ray and CT samples was proposed in [33]. The researchers evaluated four distinct customized architectures built on VGG-19, ResNet-152V2, and the gated recurrent unit (GRU). The customized VGG-19 model outperformed the other algorithms, achieving an accuracy of 98.05% on the X-ray and CT samples; however, the method suffered from over-fitting. Ge et al. [34] developed a multi-class technique for diagnosing diseases from chest X-rays that exploits label dependencies among illnesses and healthy states. The network consisted of two separate sub-CNNs trained with multiple loss pairings, including correlation, multi-label softmax, and binary cross-entropy losses. The researchers additionally applied bilinear pooling to compute informative features for effective classification. With ResNet as the backbone, this technique [34] achieved an AUC of 83.98, but at a high computational cost. Albahli et al. [35] proposed an approach for classifying chest diseases from X-ray images. Samples were first preprocessed to improve their visual appearance. Features were then computed with the histogram of oriented gradients (HOG) descriptor together with several DL frameworks, such as ResNet101 and DenseNet201, and an SVM was used for classification. The approach achieved its best results with the ResNet101 model, but at the expense of increased computational cost.
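Bilinear pooling, used by Ge et al. [34], combines two feature maps through the outer product of their channel vectors at every spatial location. The sketch below follows the generic formulation (average of location-wise outer products, signed square root, then L2 normalization); it is an assumption about the general technique, not a reproduction of the exact layer in [34], and the function name is hypothetical.

```python
import numpy as np


def bilinear_pool(feat_a: np.ndarray, feat_b: np.ndarray) -> np.ndarray:
    """Bilinear pooling of two feature maps shaped (channels, height, width).

    Returns a vector of length channels_a * channels_b.
    """
    ca, h, w = feat_a.shape
    cb, hb, wb = feat_b.shape
    if (h, w) != (hb, wb):
        raise ValueError("feature maps must share the same spatial size")
    a = feat_a.reshape(ca, h * w)  # one column per spatial location
    b = feat_b.reshape(cb, h * w)
    # Average over locations of the outer products a_l b_l^T, flattened.
    x = (a @ b.T / (h * w)).reshape(-1)
    # Signed square root dampens large activations.
    x = np.sign(x) * np.sqrt(np.abs(x))
    # L2 normalisation makes the descriptor scale-invariant.
    norm = np.linalg.norm(x)
    return x / norm if norm > 0 else x
```

The output dimension grows with the product of the two channel counts, which is one reason bilinear features raise the computational cost noted for [34].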

The studies mentioned above have produced impressive results. However, these approaches can classify only a small number of chest disorders because they do not generalize well.

Table 1 summarizes the methods for identifying chest illnesses from the literature. As can be observed, there is still room for improvement in classification accuracy, computational overhead, and generalization ability for multi-label chest diseases.

Although current methods have achieved impressive CXR recognition accuracy, progress is still needed in terms of precision and computational burden. A deeper analysis of classical machine learning (ML) and DL methods is therefore needed to improve the classification of chest disorders from X-ray images. The main issue with ML approaches for classifying CXR abnormalities is their reduced efficacy combined with longer computation times [36]. DL techniques, by contrast, show an astounding, brain-like capacity to solve challenging real-world problems. However, even though DL methods overcome the issues of ML techniques, they also make models more complex. Consequently, a more reliable method of classifying CXR diseases is required.

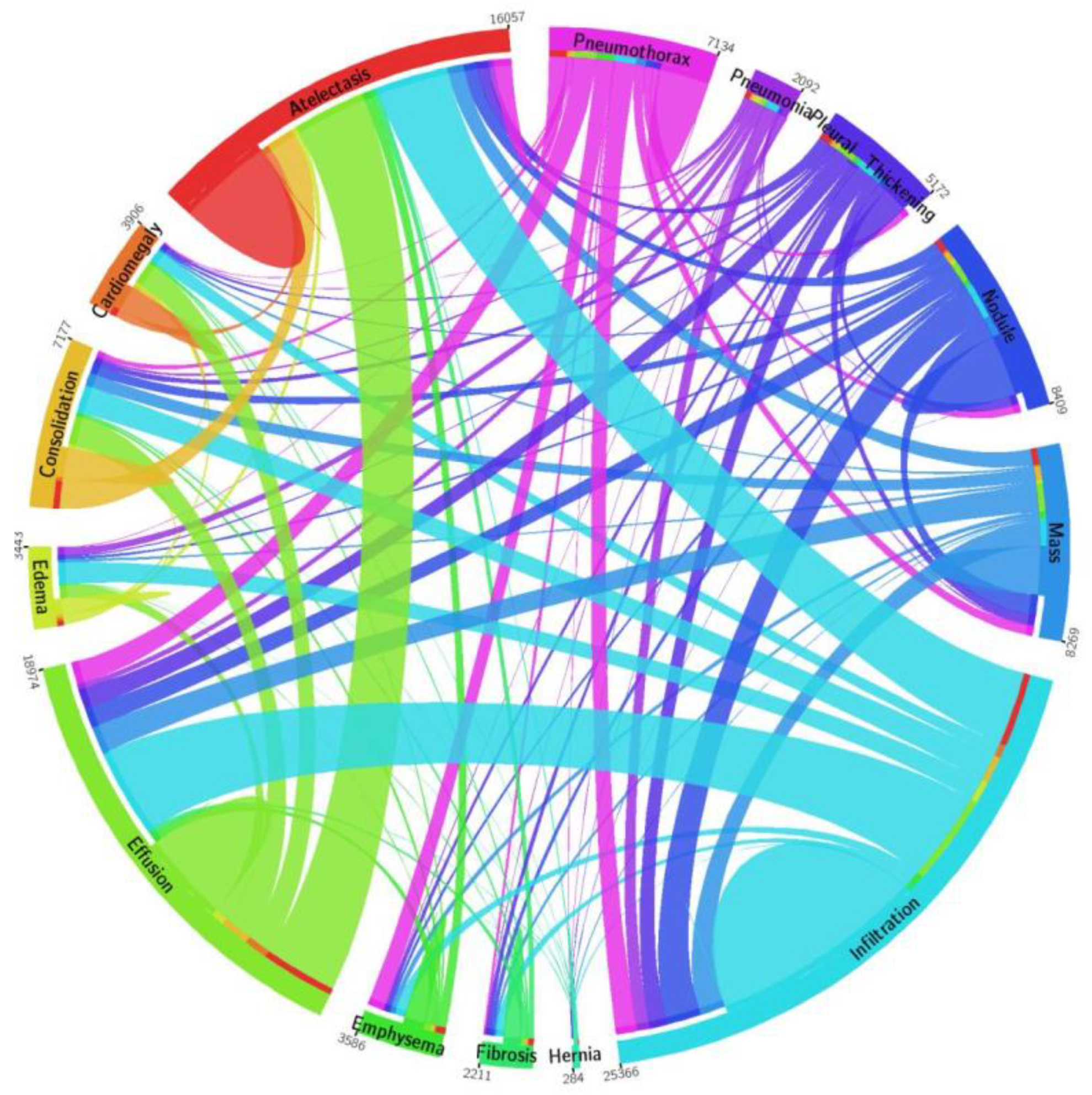

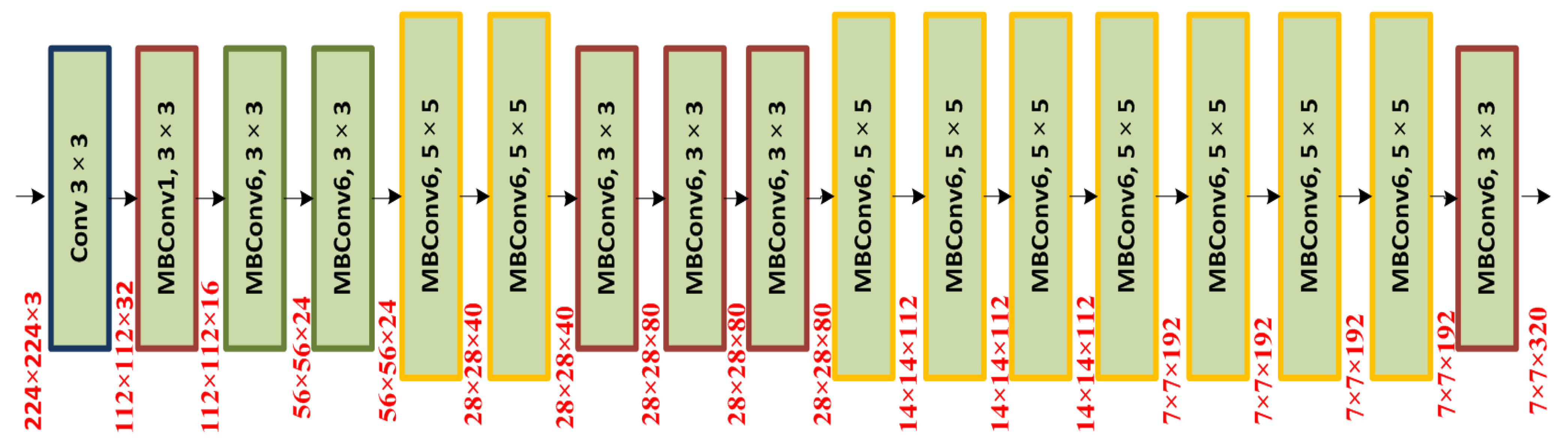

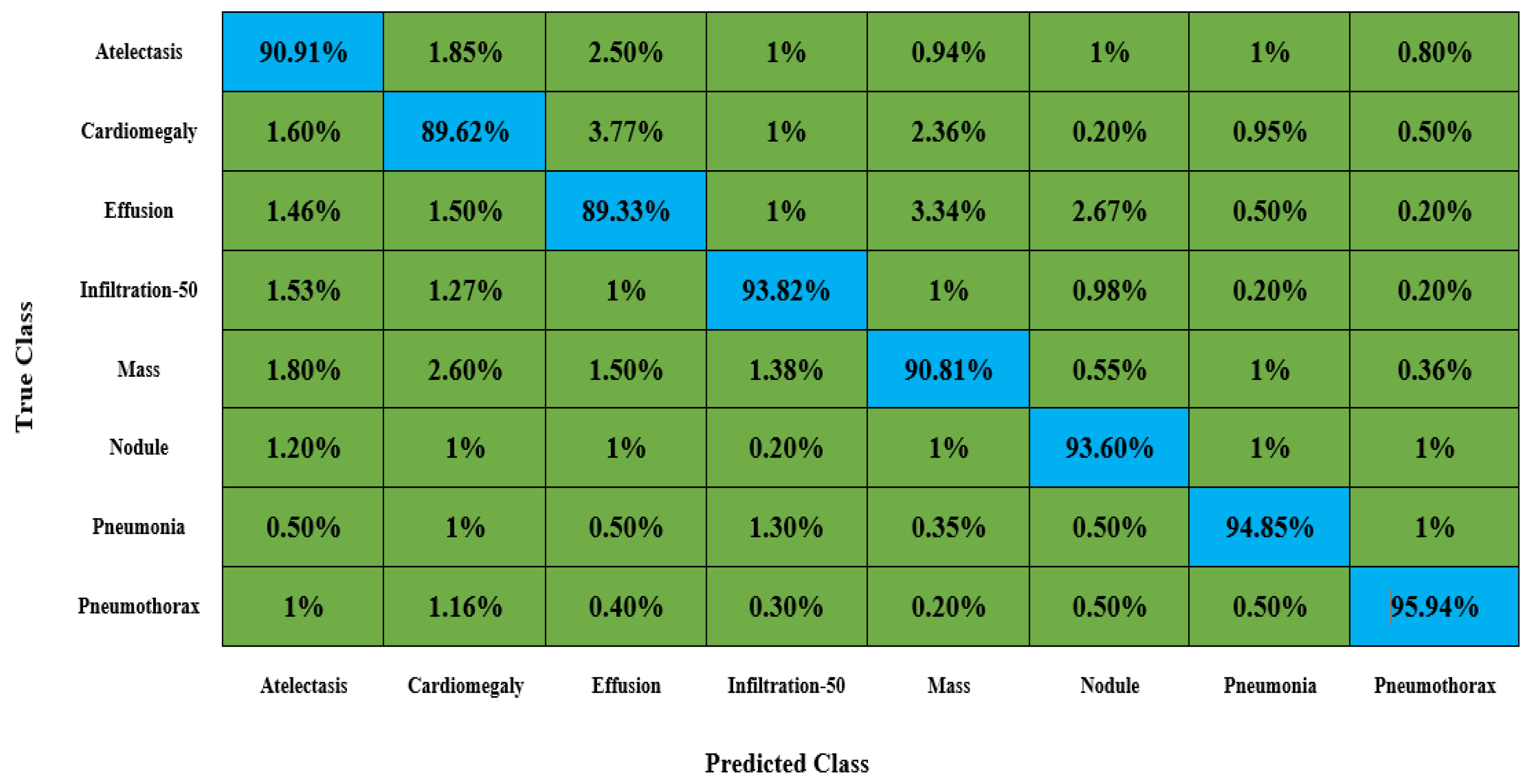

Because various chest disorders share many commonalities, it can be challenging to classify CXR disorders accurately and in a timely manner. Additionally, the presence of distortion, fading, illumination variation, and brightness shifts in the input samples makes classification even more challenging. To address the shortcomings of previous approaches, we therefore developed a new framework, chest abnormality detection from the X-ray modality using EfficientDet (CXray-EffDet), to locate and classify eight categories of chest anomalies. Specifically, we utilized the EfficientNet-B0-based EfficientDet-D0 model to compute a reliable set of sample features and to classify X-ray images into the eight categories of chest illness. The experimental findings demonstrate that our method can differentiate between the different kinds of chest disorders even in the presence of diverse image distortions. The primary contributions of the proposed work are as follows:

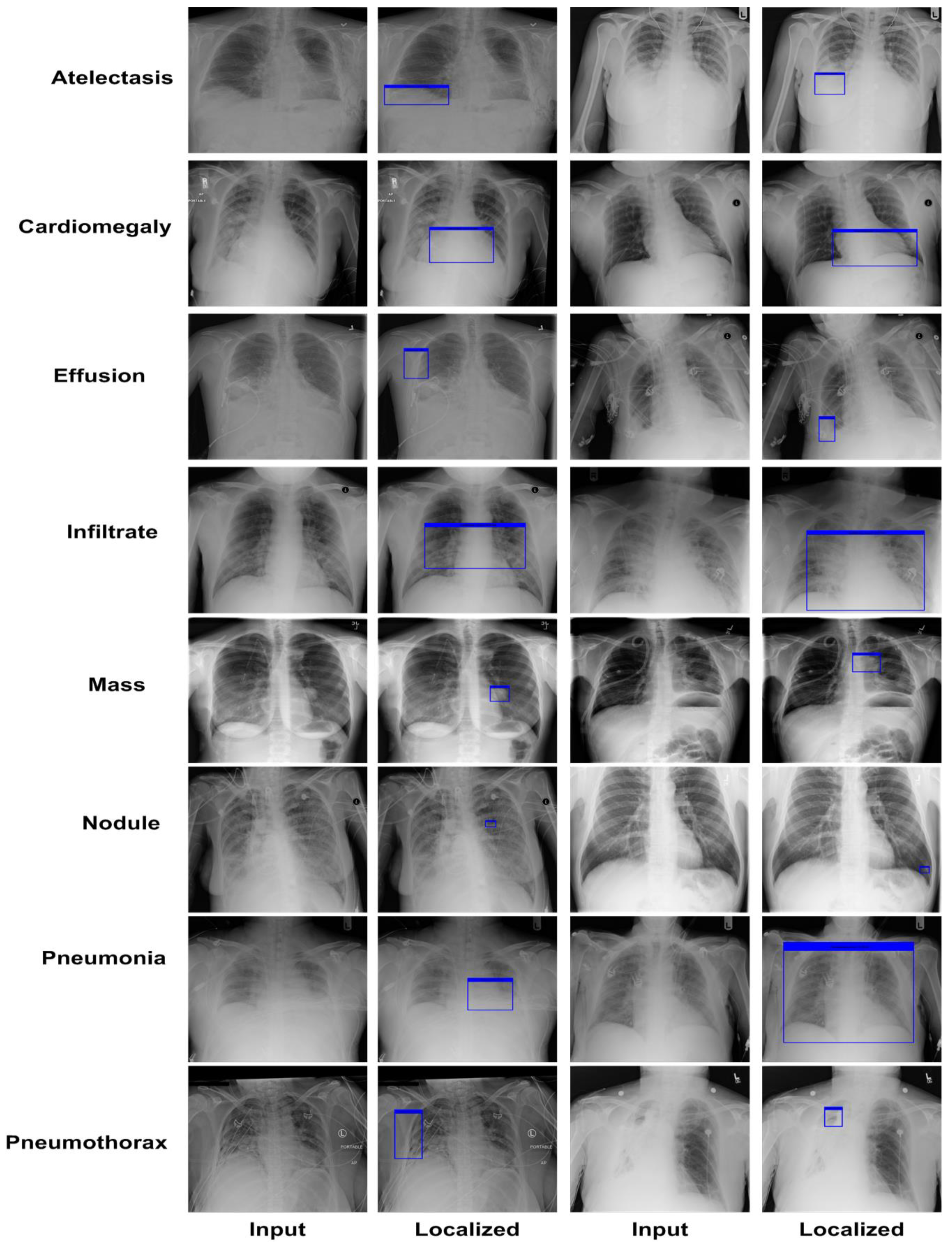

A model named CXray-EffDet is proposed to recognize chest diseases from X-ray images.

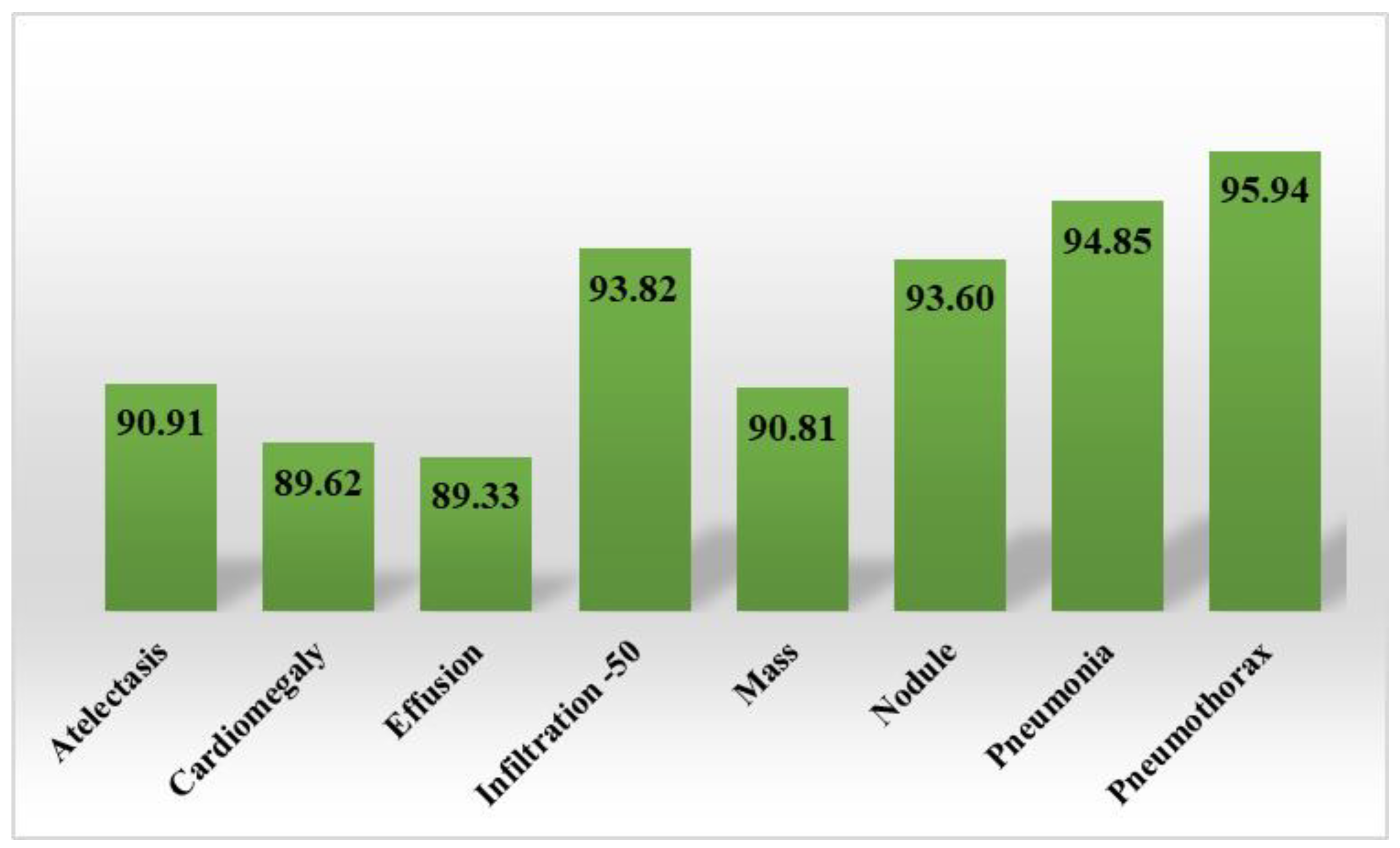

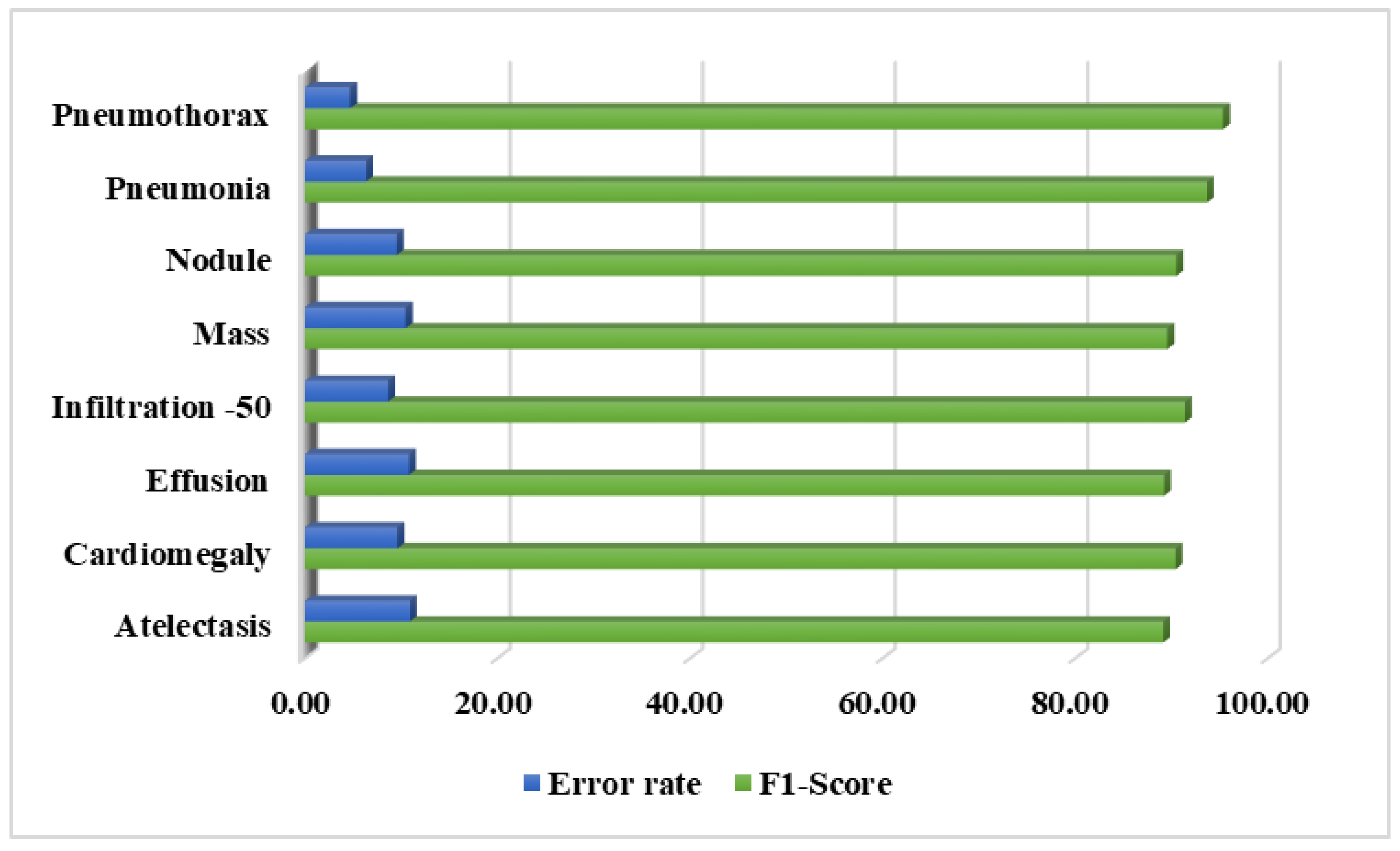

Owing to the robustness of the CXray-EffDet approach, the introduced framework correctly identifies and classifies eight kinds of chest abnormalities from X-ray images.

The proposed model improves classification performance owing to its enhanced ability to handle complex sample transformations.

The CXray-EffDet approach classifies chest disorders from X-ray samples in a computationally efficient manner because it employs a one-stage object detector.

The proposed work can both detect the locations of diseased regions and identify their associated classes.

To demonstrate the accuracy of our method, we provide a detailed comparison with recent methods for the classification of CXR diseases and perform extensive experiments on a challenging database, NIH Chest X-ray.
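For reference, the original EfficientDet design scales the BiFPN width $W_{\text{bifpn}}$ and depth $D_{\text{bifpn}}$, the depth of the box and class prediction heads, and the input resolution $R_{\text{input}}$ jointly through a compound coefficient $\phi$. Assuming the default scaling rules from the original EfficientDet paper (this article may adapt them), the D0 variant built on the EfficientNet-B0 backbone corresponds to $\phi = 0$:

$$
W_{\text{bifpn}} = 64 \cdot 1.35^{\phi}, \qquad D_{\text{bifpn}} = 3 + \phi, \qquad D_{\text{box}} = D_{\text{class}} = 3 + \left\lfloor \phi / 3 \right\rfloor, \qquad R_{\text{input}} = 512 + 128\,\phi
$$

$$
\phi = 0 \;\Rightarrow\; W_{\text{bifpn}} = 64, \quad D_{\text{bifpn}} = 3, \quad D_{\text{box}} = D_{\text{class}} = 3, \quad R_{\text{input}} = 512
$$

These minimal width, depth, and resolution settings make D0 the lightest member of the family, which is consistent with the computational-efficiency argument made for the one-stage design.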

The rest of the article is organized as follows. Section 2 explains the presented work and the employed dataset.

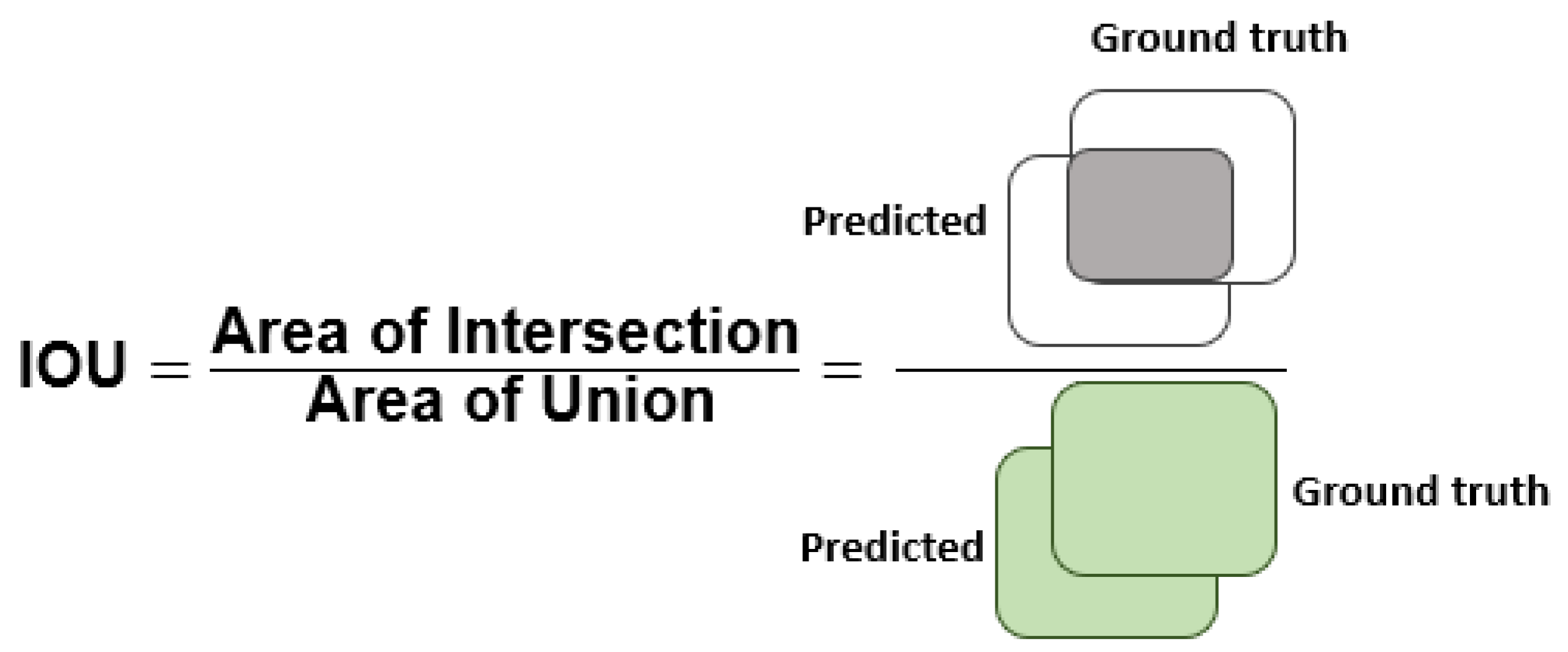

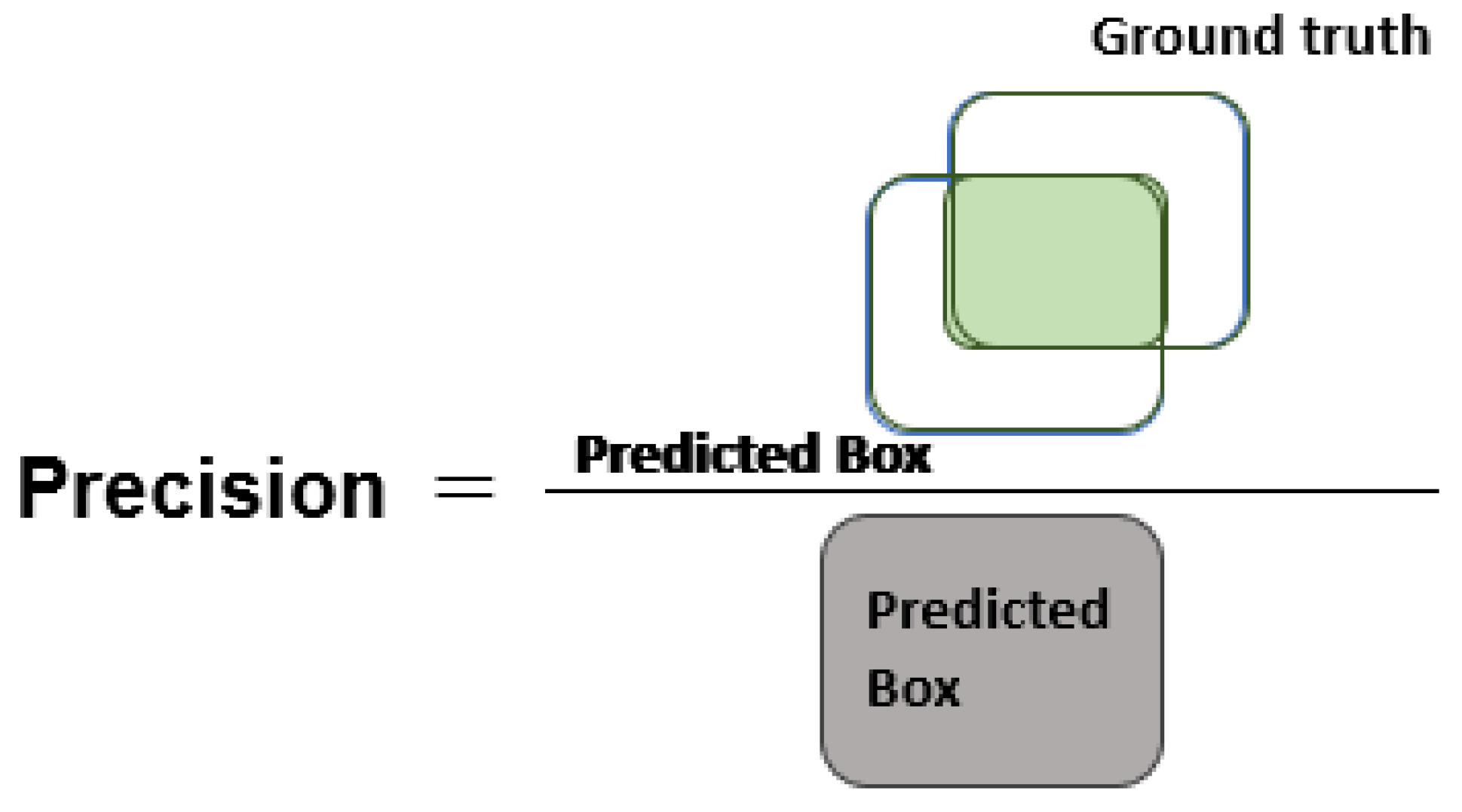

Section 3 describes the performance metrics and the experiments used to assess the classification results of the presented work.

Section 4 presents the discussion, and Section 5 concludes the article.