Abstract

Measurement speed is a critical factor in reducing motion artifacts when capturing dynamic scenes. Phase-shifting methods have the advantage of providing high-accuracy, dense 3D point clouds, but the phase unwrapping process affects the measurement speed. This paper presents an absolute phase unwrapping method that uses only three speckle-embedded phase-shifted patterns for high-speed three-dimensional (3D) shape measurement on a single-camera, single-projector structured light system. The proposed method obtains the wrapped phase of the object from the speckle-embedded three-step phase-shifted patterns. Next, it uses the Semi-Global Matching (SGM) algorithm to establish the coarse correspondence between the image of the object with the embedded speckle pattern and the pre-obtained image of a flat surface with the same embedded speckle pattern. Then, a computational framework uses the coarse correspondence information to determine the fringe order pixel by pixel. The experimental results demonstrate that the proposed method can achieve high-speed, high-quality 3D measurements of complex scenes.

1. Introduction

Three-dimensional (3D) shape measurement has applications in many fields, such as forensic science, medical surgery, and robotics. In fields such as robotics, the measured scene is usually dynamic; therefore, in addition to measurement accuracy, measurement speed is a critical factor for alleviating motion artifacts. Widely adopted 3D measurement techniques include stereo vision, time-of-flight, and phase-based methods. Although stereo vision and time-of-flight methods usually have higher capture speeds, phase-based methods have the advantages of robustness and accuracy, and they can provide dense 3D point clouds with high spatial resolution. Over the years, numerous phase-based methods have been introduced, including the Fourier method [1], the windowed Fourier method [2], and phase-shifting methods [3]. These methods can retrieve phase information; however, the retrieved phase is wrapped between $-\pi$ and $\pi$ with $2\pi$ discontinuities. Therefore, a phase unwrapping algorithm has to be employed to eliminate these discontinuities.

Phase unwrapping algorithms essentially find the integer multiple of $2\pi$ to add to or subtract from the wrapped phase at each pixel. This integer is usually called the fringe order, K. There are roughly two types of phase unwrapping algorithms: spatial and temporal. Spatial phase unwrapping algorithms detect the discontinuities and determine the fringe order K of each pixel by analyzing the phase values of neighboring pixels on the wrapped phase map itself. Examples include scan-line unwrapping methods, quality-guided methods [4,5,6], and multi-anchor unwrapping methods [7]. However fast they are, spatial phase unwrapping methods usually generate relative phase maps because the phase is unwrapped with respect to a starting point on the wrapped phase map; therefore, the phase and the reconstructed 3D points are relative instead of absolute. Temporal phase unwrapping algorithms, on the other hand, determine the fringe order by referring to information from additional captured images. Since each pixel is unwrapped independently, temporal phase unwrapping can generate absolute phase maps. Over the years, numerous temporal phase unwrapping methods have been developed, including the binary-coding method [8], the gray-coding method [9], the multi-wavelength method [10,11,12], and the phase-coding method [13]. All of these methods can retrieve absolute phase maps, yet they require capturing additional images, which slows down the measurement and is undesirable for high-speed applications.
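To illustrate why spatial unwrapping yields only a relative phase, the following minimal NumPy sketch implements a basic scan-line unwrapper (the function name `scanline_unwrap` is our own). Each pixel is unwrapped with respect to its left neighbor, so the output differs from the true phase by a constant multiple of $2\pi$ fixed by the unknown fringe order of the starting pixel.

```python
import numpy as np

def scanline_unwrap(wrapped_row):
    """Basic scan-line spatial unwrapping of a 1D wrapped phase profile.

    Each pixel is unwrapped relative to its left neighbor, so the result is
    only defined up to the (unknown) fringe order of the first pixel.
    """
    wrapped_row = np.asarray(wrapped_row, dtype=float)
    out = np.empty_like(wrapped_row)
    out[0] = wrapped_row[0]
    for i in range(1, wrapped_row.size):
        step = wrapped_row[i] - wrapped_row[i - 1]
        # Remove the multiple of 2*pi that makes the neighbor step smallest.
        out[i] = out[i - 1] + step - 2 * np.pi * np.round(step / (2 * np.pi))
    return out

# Synthetic absolute phase: 18-pixel fringe period plus a constant offset.
x = np.arange(100)
true_phase = 2 * np.pi * x / 18 + 10.0
wrapped = np.angle(np.exp(1j * true_phase))      # wrapped to (-pi, pi]
relative = scanline_unwrap(wrapped)
offset_in_periods = (true_phase - relative) / (2 * np.pi)
print(np.round(offset_in_periods[:3], 6))        # the same integer at every pixel
```

The result matches NumPy's `np.unwrap`, which applies the same neighbor-difference rule; neither can recover the missing integer offset without extra information.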

To address the reduction in measurement speed, An et al. [14] developed a phase unwrapping algorithm that utilizes the geometric constraints between the camera and the projector of a structured light system. An artificial absolute phase map is generated to assist absolute phase unwrapping, and no additional images are required. However, to correctly acquire the absolute phase, the minimum depth of the measured object has to be known. Additionally, the measurement range is limited, depending on the spatial span of the fringe period and the angle between the projector and the camera. An and Zhang [15] combined binary statistical pattern matching with the phase-shifting method. They matched the binarized statistical pattern captured by the camera with the ideal computer-generated projector image to generate a disparity map, which was further refined and used to obtain the final unwrapped phase. This method requires the projection of only one additional image and does not have the limitations of the previous method. However, the projector and the camera usually have different sensor sizes and lenses, and the computer-generated images do not undergo the lens effects that the camera-captured images do. Hence, the projector or camera images have to be cropped and down-sampled (or up-sampled) before matching so that their fields of view and resolutions agree, and the matching result requires extensive hole filling and refinement to correct the phase value. Zhang et al. [16] captured speckle-embedded fringe patterns of reference planes at different distances to generate a wrapped phase-to-height lookup table (LUT). The absolute height of the measured object can then be reconstructed by looking up the LUT with the help of speckle correlation. This method needs only four speckle-embedded fringe patterns, and the wrapped phase constraint improves the computational efficiency of the LUT search. However, it requires capturing reference planes at many different heights, and the measurement range is limited by the LUT.

Researchers have also attempted to add extra cameras to the standard single-camera, single-projector structured light system, and stereo-assisted phase-shifting profilometry [17,18,19,20,21,22,23] has been proposed in the past few years. With extra cameras, conventional stereo vision techniques can be used along with the phase information to help establish image correspondence. However, adding extra cameras increases the cost and complexity of both hardware and algorithm development [24]. In addition, only the region that can be observed simultaneously by all cameras and the projector can be measured, which further limits the field of view. Therefore, in our previous work [25], we incorporated digital image correlation (DIC) with the phase-shifting method on a single-camera, single-projector structured light system. Three phase-shifted fringe images and one speckle pattern are projected. The inverse-compositional Gauss–Newton (IC-GN) algorithm [26] is used to establish the correspondence between the pre-captured speckle image of a white surface and the captured speckle image of the measured object, and a computational framework then uses this correspondence to assist high-accuracy absolute phase retrieval. This method has proven successful. However, although we significantly improved the efficiency of IC-GN by using the wrapped phase and the epipolar constraint, the algorithm is still time-consuming. For this reason, we performed IC-GN only on pixels at regular grid intervals and used the resulting deformation vectors to complete the correspondence for the remaining pixels.

In this research, we propose a phase unwrapping method for a single-camera, single-projector structured light system that combines Semi-Global Matching (SGM) [27] with the phase-shifting method to perform high-accuracy 3D measurements. As in our previous method, the wrapped phase map is obtained from the phase-shifted patterns. Instead of the IC-GN algorithm, however, SGM is used to establish the coarse correspondence between the camera coordinate and the projector coordinate. The coarse correspondence is then used to unwrap the wrapped phase. Finally, spatial phase unwrapping is applied locally to the pixels with SGM correspondence errors to generate the final absolute phase map. The experiments verified the success of the proposed method. Because the computation is significantly faster than with IC-GN, we can conduct SGM on every pixel instead of on interval pixels, which increases the number of pixels with correspondence results. In addition, because SGM improved the correspondence search results in our experiments, the proposed method can further reduce the number of projected patterns to three by embedding the speckle pattern into the three-step phase-shifted patterns, which is desirable for high-speed absolute 3D shape measurement.

2. Materials and Methods

2.1. Speckle-Embedded Three-Step Phase-Shifting Algorithm

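The three-step phase-shifted fringe images with equal phase shifts can be mathematically written as

$$
\begin{aligned}
I_1(x,y) &= I'(x,y) + I''(x,y)\cos\left[\phi(x,y) - 2\pi/3\right],\\
I_2(x,y) &= I'(x,y) + I''(x,y)\cos\left[\phi(x,y)\right],\\
I_3(x,y) &= I'(x,y) + I''(x,y)\cos\left[\phi(x,y) + 2\pi/3\right],
\end{aligned}
\tag{1}
$$

where $(x,y)$ is the pixel coordinate, $I'(x,y)$ is the average intensity, $I''(x,y)$ is the intensity modulation, and $\phi(x,y)$ is the phase to be solved. Speckle patterns can be embedded into the phase-shifted fringe patterns using Lohry and Zhang’s method [21]. The speckle-embedded fringe images can be written as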

where is randomly generated intensity ranging from to 1. The embedded speckle pattern can be recovered by

and the phase can be solved by

The speckle-embedded fringe images are 8-bit grayscale images. We converted the 8-bit grayscale patterns to 1-bit binary patterns using MATLAB’s dithering function (the Floyd–Steinberg error-diffusion dithering algorithm [28]). Although dithering lowers the fringe pattern quality, it can significantly increase the pattern projection rate of a digital light processing (DLP) projector, and thus the 3D measurement speed.
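The error-diffusion step can be sketched in NumPy as follows. This is a plain re-implementation of the classic Floyd–Steinberg weights (7/16, 3/16, 5/16, 1/16) applied in raster order, written for illustration only and not MATLAB's `dither` itself; the [0, 1] intensity normalization, the 0.5 threshold, and the helper names are assumptions. The 18-pixel period in the example matches the three-step fringe period given in Section 3.

```python
import numpy as np

def floyd_steinberg(image):
    """Binarize a [0, 1] grayscale image with Floyd-Steinberg error diffusion."""
    img = np.array(image, dtype=float)     # working copy that accumulates diffused error
    h, w = img.shape
    out = np.zeros((h, w), dtype=np.uint8)
    for y in range(h):
        for x in range(w):
            old = img[y, x]
            new = 1.0 if old >= 0.5 else 0.0
            out[y, x] = int(new)
            err = old - new
            # Push the quantization error onto neighbors not yet visited.
            if x + 1 < w:
                img[y, x + 1] += err * 7 / 16
            if y + 1 < h:
                if x > 0:
                    img[y + 1, x - 1] += err * 3 / 16
                img[y + 1, x] += err * 5 / 16
                if x + 1 < w:
                    img[y + 1, x + 1] += err * 1 / 16
    return out

def fringe_pattern(height, width, period, shift):
    """Ideal sinusoidal fringe with intensities normalized to [0, 1]."""
    x = np.arange(width)
    row = 0.5 + 0.5 * np.cos(2 * np.pi * x / period + shift)
    return np.tile(row, (height, 1))

fringe = fringe_pattern(64, 72, period=18, shift=-2 * np.pi / 3)
binary = floyd_steinberg(fringe)   # 1-bit pattern suitable for fast binary projection
```

Because the quantization error is carried to neighboring pixels rather than discarded, the local density of ones tracks the gray level, which is why a defocused or temporally integrated binary pattern can approximate the sinusoid.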

The phase map solved from Equation (4) ranges from $-\pi$ to $\pi$ and has $2\pi$ discontinuities between adjacent fringe periods because of the arctangent operation; we call it the wrapped phase. Conventionally, a temporal or spatial phase unwrapping algorithm is applied to eliminate the discontinuities. The mathematical relationship between the wrapped phase $\phi(x,y)$ and the unwrapped phase $\Phi(x,y)$ is

$$
\Phi(x,y) = \phi(x,y) + 2\pi K(x,y),
\tag{5}
$$

where $K(x,y)$ is called the fringe order. Phase unwrapping essentially amounts to finding the fringe order K of each pixel such that the discontinuities are removed. In this research, we developed a phase unwrapping algorithm based on the pipeline of our previous work [25]. The proposed algorithm automatically finds a low-accuracy absolute phase for each pixel in the scene and uses it to unwrap the wrapped phase, yielding the high-accuracy absolute phase from which the high-accuracy absolute 3D geometry can be reconstructed. Instead of the IC-GN algorithm, we employed the SGM algorithm in this work. In our experiments, SGM improved the matching result and significantly sped up the computation, which allowed us to conduct a pixel-wise, instead of a grid-point, correspondence search.
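As a numerical check of the wrapped-phase computation and of the relation between wrapped phase, fringe order, and absolute phase, the NumPy sketch below generates ideal three-step fringes, assuming the common phase shifts of $-2\pi/3$, $0$, and $2\pi/3$, and applies the standard three-step formulas $\phi = \tan^{-1}[\sqrt{3}(I_1-I_3)/(2I_2-I_1-I_3)]$ (evaluated with a two-argument arctangent), $I' = (I_1+I_2+I_3)/3$, and $I'' = \sqrt{3(I_1-I_3)^2+(2I_2-I_1-I_3)^2}/3$. The speckle embedding is deliberately left out, and the function names are hypothetical.

```python
import numpy as np

SHIFTS = (-2 * np.pi / 3, 0.0, 2 * np.pi / 3)

def three_step_fringes(phase, avg=0.5, mod=0.5):
    """Ideal fringes I_k = avg + mod * cos(phase + shift_k) for the three shifts."""
    return [avg + mod * np.cos(phase + s) for s in SHIFTS]

def three_step_phase(i1, i2, i3):
    """Standard three-step retrieval: wrapped phase, average intensity, modulation."""
    wrapped = np.arctan2(np.sqrt(3.0) * (i1 - i3), 2.0 * i2 - i1 - i3)  # in (-pi, pi]
    average = (i1 + i2 + i3) / 3.0
    modulation = np.sqrt(3.0 * (i1 - i3) ** 2 + (2.0 * i2 - i1 - i3) ** 2) / 3.0
    return wrapped, average, modulation

# Synthetic absolute phase along one row with an 18-pixel fringe period.
x = np.arange(200)
abs_phase = 2 * np.pi * x / 18
phi, avg, mod = three_step_phase(*three_step_fringes(abs_phase))

# With a known fringe order K, Phi = phi + 2*pi*K recovers the absolute phase.
K = np.round((abs_phase - phi) / (2 * np.pi))
recovered = phi + 2 * np.pi * K
```

Here $K$ is computed from the known ground truth; in the proposed method it must instead be estimated from the SGM-based low-accuracy absolute phase.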

2.2. Low-Accuracy Absolute Phase Extraction Using SGM

Prior to any 3D measurement, we projected and captured speckle-embedded three-step phase-shifted fringe patterns on a white flat surface. Then, we recovered the speckle pattern from the fringe images reflected by the white flat surface using Equation (3). We denote the retrieved from images of the white flat surface as

The hat symbol indicates that the image is retrieved from the speckle-embedded three-step phase-shifted fringe patterns. We also projected two sets of fifteen phase-shifted fringe patterns onto the same white flat surface to obtain the horizontal and vertical absolute phase maps using the multi-wavelength phase-shifting algorithm. A unique mapping exists between the flat-surface speckle image and these two absolute phase maps because they are obtained from the same object with the same camera and projector positions. We saved them for future use. Note that these images do not need to be recaptured for subsequent 3D measurements.
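The multi-wavelength algorithm is not detailed here. One common variant, hierarchical temporal unwrapping, is sketched below under the assumption that the longest fringe period spans the whole projector, so that its phase needs no unwrapping; each shorter-period phase is then unwrapped with the scaled absolute phase of the next longer period. The periods of 1140, 216, and 36 pixels are the horizontal ones listed in Section 3, the function names are hypothetical, and the last step converts absolute phase into a projector coordinate, which is what lets the stored phase maps act as a camera-to-projector mapping.

```python
import numpy as np

TWO_PI = 2.0 * np.pi

def wrap(phase):
    """Wrap phase to (-pi, pi], mimicking the arctangent output."""
    return np.arctan2(np.sin(phase), np.cos(phase))

def hierarchical_unwrap(wrapped_maps, periods):
    """Temporal unwrapping from the longest to the shortest fringe period.

    wrapped_maps: wrapped phase maps ordered to match `periods`.
    periods: fringe periods in projector pixels, longest first; the longest
             period is assumed to cover the whole projector (no ambiguity).
    Returns the absolute phase of the shortest period.
    """
    absolute = np.mod(wrapped_maps[0], TWO_PI)   # single fringe: shift to [0, 2*pi)
    for k in range(1, len(periods)):
        # Predict the finer absolute phase by scaling the coarser one.
        predicted = absolute * periods[k - 1] / periods[k]
        order = np.round((predicted - wrapped_maps[k]) / TWO_PI)
        absolute = wrapped_maps[k] + TWO_PI * order
    return absolute

def phase_to_projector_coordinate(absolute_phase, period):
    """Projector pixel coordinate encoded by an absolute phase map."""
    return absolute_phase * period / TWO_PI

# Synthetic projector coordinates and the horizontal periods from Section 3.
u_true = np.arange(1140, dtype=float)
periods = [1140.0, 216.0, 36.0]
wrapped_maps = [wrap(TWO_PI * u_true / T) for T in periods]
phi_abs = hierarchical_unwrap(wrapped_maps, periods)
u_est = phase_to_projector_coordinate(phi_abs, periods[-1])
```

With real data, the period ratios bound how much phase noise each stage tolerates before a rounding error occurs.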

For a 3D measurement, we projected the same speckle-embedded three-step phase-shifted fringe patterns onto the object. Again, we recovered the speckle pattern from the fringe images reflected by the object using Equation (3). We denote the retrieved from images of the object as

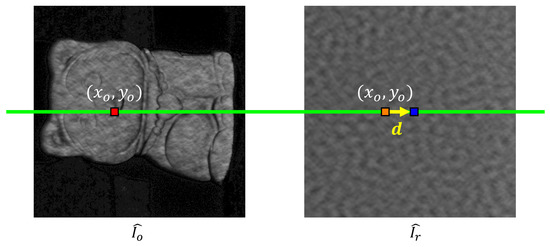

The wrapped phase of the object was calculated using Equation (4). Next, we used SGM [27] to obtain the correspondences between the speckle images of the object and of the flat surface. SGM obtains the disparity map by finding the disparity that leads to the lowest total cost aggregated from multiple directions. Conventional stereo SGM searches between rectified stereo images within a predefined disparity range on the epipolar line. In our case, however, there is only one image from one camera at a time. Therefore, we have to find the epipolar points of each object pixel on the flat-surface speckle image using the phase information. From the camera and projector calibration data, we can find the epipolar line in the projector coordinate for each pixel of the object image. When we projected the multi-wavelength phase-shifted patterns, each projector pixel was assigned a unique pair of horizontal and vertical absolute phase values. Therefore, we can locate the points of the flat-surface speckle image that lie on the epipolar line by finding, on each column (the direction with small phase variation), the pixel whose projector coordinates, derived from its horizontal and vertical absolute phases, are closest to the epipolar line. In this research, the disparity vector is the pixel coordinate difference between the point of interest (POI) on the object speckle image and its corresponding point on the flat-surface speckle image found using SGM. Figure 1 shows this definition of the disparity for the SGM algorithm.
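A minimal sketch of the per-column epipolar search follows, assuming a fundamental matrix `F` that maps a camera pixel to its epipolar line in projector coordinates (so that $\mathbf{x}_p^\top F \mathbf{x}_c = 0$), and assuming the projector coordinates `u_map` and `v_map` of the flat-surface pixels have already been computed from the horizontal and vertical absolute phase maps. `F`, `u_map`, `v_map`, and both functions are hypothetical names; how the epipolar geometry is derived from the calibration data is not reproduced here.

```python
import numpy as np

def epipolar_line(F, x, y):
    """Line coefficients (a, b, c) with a*u + b*v + c = 0 in projector coordinates."""
    return F @ np.array([x, y, 1.0])

def epipolar_points_per_column(u_map, v_map, line):
    """For each column of the flat-surface image, return the row whose projector
    coordinate (u, v) lies closest to the epipolar line, plus that distance."""
    a, b, c = line
    dist = np.abs(a * u_map + b * v_map + c) / np.hypot(a, b)
    rows = np.argmin(dist, axis=0)            # one candidate per column
    cols = np.arange(u_map.shape[1])
    return rows, dist[rows, cols]

# Toy setup: identity camera-to-projector mapping on the flat surface and a
# fundamental matrix for a pure horizontal baseline (epipolar lines are rows).
rows_idx, cols_idx = np.mgrid[0:20, 0:12]
u_map = cols_idx.astype(float)
v_map = rows_idx.astype(float)
F = np.array([[0.0, 0.0, 0.0],
              [0.0, 0.0, -1.0],
              [0.0, 1.0, 0.0]])
line = epipolar_line(F, 5.0, 3.0)             # camera pixel (x=5, y=3)
rows, dist = epipolar_points_per_column(u_map, v_map, line)
```

Taking one candidate per column mirrors the search along the direction with small phase variation described above.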

Figure 1.

The definition of the disparity in this research. The disparity is the pixel coordinate difference between the point of interest (POI) on and its corresponding point on found using SGM. (Red point: POI, blue point: corresponding point, green line: pre-computed epipolar line through absolute phase constraints, orange point: point on with the same pixel coordinate as POI, yellow arrow: disparity vector ).

The cost of pixel aggregate along eight directions at disparity vector can be recursively defined as

where and are two constant parameters, with . is the disparity vector change tolerance for allowing smooth surface geometry changes. The cost function C we used is the Zero-mean Normalized Sum of Squared Differences (ZNSSD) criterion [29], which is insensitive to the potential scale and offset changes of the subset intensity. The ZNSSD coefficient can be expressed as

where and are the grayscale values at of and . We only considered the valid pixels filtered by and . is the local coordinates of the valid pixels in each subset and N is the total number of valid pixels in each subset. and . and .
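In the form commonly used in DIC (e.g., by Pan et al. [29]), the ZNSSD coefficient is $C = \sum_i \left[(f_i-\bar f)/\Delta f - (g_i-\bar g)/\Delta g\right]^2$, where $\bar f$ and $\bar g$ are subset means and $\Delta f = \sqrt{\sum_i (f_i-\bar f)^2}$ (likewise for $\Delta g$), with all sums taken over valid pixels only. The NumPy sketch below evaluates it for one pair of subsets; the function name, the optional validity mask argument, and returning infinity for a texture-less subset are our own choices. Because both subsets are normalized, ZNSSD equals $2(1-\text{ZNCC})$ and lies in $[0, 4]$.

```python
import numpy as np

def znssd(f, g, valid=None):
    """Zero-mean normalized sum of squared differences between two subsets."""
    f = np.asarray(f, dtype=float)
    g = np.asarray(g, dtype=float)
    if valid is None:
        valid = np.ones(f.shape, dtype=bool)
    fz = f[valid] - f[valid].mean()          # remove intensity offset
    gz = g[valid] - g[valid].mean()
    df = np.sqrt(np.sum(fz ** 2))            # normalize away intensity scale
    dg = np.sqrt(np.sum(gz ** 2))
    if df == 0.0 or dg == 0.0:
        return np.inf                        # texture-less subset: cost undefined
    return float(np.sum((fz / df - gz / dg) ** 2))

rng = np.random.default_rng(0)
f = rng.random((21, 21))
valid = rng.random((21, 21)) > 0.2           # stand-in mask of usable pixels
cost_same = znssd(f, 3.0 * f + 10.0, valid)  # scale and offset change only
cost_inverted = znssd(f, -f, valid)          # contrast-inverted subset
```

The scale-and-offset case gives a cost of essentially zero, which is the insensitivity to intensity changes mentioned above.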

We also incorporated the wrapped phase constraint into SGM. Theoretically, corresponding points in the object and flat-surface speckle images should have the same wrapped phase. The relation between the unwrapped phase map of the flat surface and its wrapped phase is

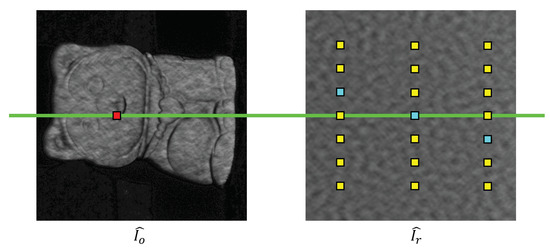

By comparing the wrapped phase of the object with that of the flat surface, we can further narrow down the epipolar points to those that have the same wrapped phase value. To reduce the impact of the system calibration error and phase noise, we calculated the seven times, 0, offset pixels on the same column for all epipolar points after applying wrapped phase constraint, and chose the pixel with the smallest to apply SGM search to determine coarse correspondence. Figure 2 shows the schematic process of generating candidate corresponding points for the SGM algorithm. Once the correspondences between the object and flat-surface speckle images are established, the low-accuracy absolute phase map of the object can be extracted using the mapping between the flat-surface speckle image and its absolute phase maps.
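The SGM recursion itself is easiest to see in its standard scalar-disparity form [27]: $L_r(\mathbf{p}, d) = C(\mathbf{p}, d) + \min\{L_r(\mathbf{p}-\mathbf{r}, d),\ L_r(\mathbf{p}-\mathbf{r}, d-1)+P_1,\ L_r(\mathbf{p}-\mathbf{r}, d+1)+P_1,\ \min_i L_r(\mathbf{p}-\mathbf{r}, i)+P_2\} - \min_k L_r(\mathbf{p}-\mathbf{r}, k)$. The NumPy sketch below aggregates a toy cost volume along one scanline in two opposite directions and takes the disparity with the smallest summed cost. It is a simplification of the method described in this subsection, which aggregates along eight directions over disparity vectors with a change tolerance; the values of `p1` and `p2` are arbitrary.

```python
import numpy as np

def aggregate_path(cost, p1, p2):
    """Standard SGM recursion along one path; cost has shape (n_pixels, n_disparities)."""
    cost = np.asarray(cost, dtype=float)
    n, n_disp = cost.shape
    agg = np.empty_like(cost)
    agg[0] = cost[0]
    for i in range(1, n):
        prev = agg[i - 1]
        prev_min = prev.min()
        minus_one = np.full(n_disp, np.inf)
        minus_one[1:] = prev[:-1] + p1       # arrive from disparity d - 1
        plus_one = np.full(n_disp, np.inf)
        plus_one[:-1] = prev[1:] + p1        # arrive from disparity d + 1
        candidates = np.minimum.reduce([prev, minus_one, plus_one,
                                        np.full(n_disp, prev_min + p2)])
        # Subtracting prev_min keeps values bounded without changing the argmin.
        agg[i] = cost[i] + candidates - prev_min
    return agg

def sgm_scanline(cost, p1, p2):
    """Sum left-to-right and right-to-left path costs, then pick the winner per pixel."""
    total = aggregate_path(cost, p1, p2) + aggregate_path(cost[::-1], p1, p2)[::-1]
    return np.argmin(total, axis=1)

# Toy cost volume: true disparity 3 everywhere, one ambiguous pixel favoring 7.
d = np.arange(10)
cost = np.tile(np.abs(d - 3).astype(float), (9, 1))
cost[4] = 0.2 * np.abs(d - 7)
wta = np.argmin(cost, axis=1)                # winner-takes-all, no smoothness
sgm = sgm_scanline(cost, p1=0.5, p2=2.0)
```

Winner-takes-all picks disparity 7 at the ambiguous pixel, while the smoothness penalties pull it back to 3; this is the behavior that makes SGM robust to isolated matching failures.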

Figure 2.

The schematic process of generating candidate corresponding points for the SGM algorithm. (Red point: POI, yellow points: offset pixels from epipolar points with wrapped phase constraint, light blue points: offset pixels with the smallest on their column, green line: pre-computed epipolar line through absolute phase constraints).

2.3. High-Accuracy Absolute Phase Unwrapping

The high-accuracy absolute phase will be obtained by unwrapping the wrapped phase in the following steps. First, we determine the fringe order using the following equation,

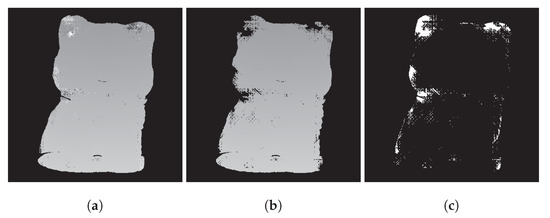

where is the rounding operator that obtains the closest integer to the value. We used to generate unwrapped phase . Second, we removed the errors in due to false SGM results at low signal-to-noise ratio areas and the abrupt surfaces by masking out the connected regions that had an area size lower than a threshold and obtained . Finally, we performed local spatial phase unwrapping [7] with respect to on the pixels we masked out in the previous step to obtain the final high-accuracy absolute phase, . Figure 3 shows examples of , , and the pixels we performed local spatial phase unwrapping on.
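The first two steps can be sketched as follows, assuming $K = \mathrm{round}[(\Phi_c - \phi)/(2\pi)]$, where $\Phi_c$ is the low-accuracy absolute phase and $\phi$ is the wrapped phase (our notation). The connected-region rule is also an assumption: two 4-neighbors are treated as connected when their unwrapped phases differ by less than $\pi$, so a patch with a wrong fringe order becomes an isolated small region. All names and the `min_area` value are hypothetical.

```python
import numpy as np
from collections import deque

TWO_PI = 2.0 * np.pi

def fringe_order(coarse_abs, wrapped):
    """K = round((coarse absolute phase - wrapped phase) / 2*pi), per pixel."""
    return np.round((coarse_abs - wrapped) / TWO_PI).astype(int)

def small_region_mask(unwrapped, valid, min_area, jump=np.pi):
    """Mark valid pixels in phase-continuous 4-connected regions smaller than min_area."""
    h, w = unwrapped.shape
    labels = np.full((h, w), -1, dtype=int)
    sizes = []
    for r0 in range(h):
        for c0 in range(w):
            if not valid[r0, c0] or labels[r0, c0] >= 0:
                continue
            label = len(sizes)
            labels[r0, c0] = label
            queue, count = deque([(r0, c0)]), 0
            while queue:                     # breadth-first region growing
                r, c = queue.popleft()
                count += 1
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    rr, cc = r + dr, c + dc
                    if (0 <= rr < h and 0 <= cc < w and valid[rr, cc]
                            and labels[rr, cc] < 0
                            and abs(unwrapped[rr, cc] - unwrapped[r, c]) < jump):
                        labels[rr, cc] = label
                        queue.append((rr, cc))
            sizes.append(count)
    sizes = np.asarray(sizes, dtype=int)
    small = np.zeros((h, w), dtype=bool)
    labelled = labels >= 0
    small[labelled] = sizes[labels[labelled]] < min_area
    return small

# Synthetic test: 18-pixel fringe ramp, coarse phase with bounded error,
# and a 2x2 patch of simulated false correspondences (off by one fringe).
h, w = 20, 40
true_abs = np.tile(TWO_PI * np.arange(w) / 18.0, (h, 1))
wrapped = np.angle(np.exp(1j * true_abs))
coarse = true_abs + 0.3
coarse[5:7, 10:12] += TWO_PI
K = fringe_order(coarse, wrapped)
unwrapped = wrapped + TWO_PI * K
small = small_region_mask(unwrapped, np.ones((h, w), dtype=bool), min_area=10)
```

The rounding tolerates any coarse-phase error smaller than $\pi$, so only pixels whose SGM match is off by at least half a fringe end up in the masked set.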

Figure 3.

Example of determining the pixels to perform local spatial phase unwrapping on. (a) . (b) . (c) Pixels (white) that will be unwrapped by local spatial phase unwrapping.

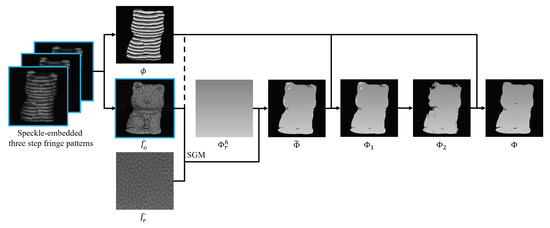

The overall framework of our proposed method is summarized in Figure 4. In total, only three patterns are projected. We obtained the wrapped phase and the speckle image of the object from the speckle-embedded three-step phase-shifted fringe patterns. Then we used the correspondence between the object and flat-surface speckle images found by SGM to extract the low-accuracy absolute phase, which was used to directly unwrap the wrapped phase. Next, pixels in small isolated regions were masked out. Finally, local spatial phase unwrapping unwrapped the phase at the masked pixels with respect to the retained phase. The resulting high-accuracy absolute phase can be used for high-accuracy 3D reconstruction [30].
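As a simplified stand-in for the local spatial phase unwrapping step (the multi-anchor method of [7] is not reproduced here), the following sketch unwraps the masked pixels by breadth-first flood fill from the retained pixels: each masked pixel takes the fringe order that brings it closest to an already-resolved 4-neighbor. The names are hypothetical, and masked regions with no retained neighbor are reported as unresolved rather than guessed.

```python
import numpy as np
from collections import deque

TWO_PI = 2.0 * np.pi

def local_spatial_unwrap(wrapped, unwrapped, masked):
    """Unwrap masked pixels from retained 4-neighbors by breadth-first flood fill.

    wrapped:   wrapped phase map in (-pi, pi]
    unwrapped: absolute phase map; only values at unmasked pixels are trusted
    masked:    boolean map of pixels to re-unwrap
    Returns (final phase map, boolean map of resolved pixels).
    """
    out = np.array(unwrapped, dtype=float)
    resolved = ~np.asarray(masked, dtype=bool)
    h, w = out.shape
    # Every trusted pixel can serve as an anchor for its masked neighbors.
    queue = deque((int(r), int(c)) for r, c in zip(*np.nonzero(resolved)))
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            rr, cc = r + dr, c + dc
            if 0 <= rr < h and 0 <= cc < w and not resolved[rr, cc]:
                # Fringe order that places the neighbor closest to the anchor.
                k = np.round((out[r, c] - wrapped[rr, cc]) / TWO_PI)
                out[rr, cc] = wrapped[rr, cc] + TWO_PI * k
                resolved[rr, cc] = True
                queue.append((rr, cc))
    return out, resolved

# Synthetic test: 18-pixel fringe ramp with a 2x2 patch off by one fringe order.
h, w = 20, 40
true_abs = np.tile(TWO_PI * np.arange(w) / 18.0, (h, 1))
wrapped = np.angle(np.exp(1j * true_abs))
unwrapped = true_abs.copy()
unwrapped[5:7, 10:12] += TWO_PI
masked = np.zeros((h, w), dtype=bool)
masked[5:7, 10:12] = True
final, resolved = local_spatial_unwrap(wrapped, unwrapped, masked)
```

Because every masked pixel is anchored to absolute phase from its surroundings, the result stays absolute, unlike a global spatial unwrap started from an arbitrary pixel.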

Figure 4.

The overall computational framework of our proposed method.

3. Results and Discussions

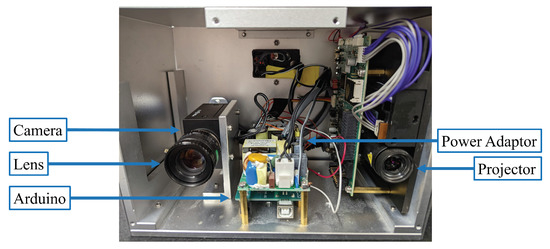

We verified our proposed method by developing a structured light prototype system, shown in Figure 5. The system comprised one camera (FLIR Grasshopper3 GS3-U3-23S6M) fitted with a 16 mm focal length lens (Computar M1614-MP2) and one projector (Texas Instruments LightCrafter 4500). The full resolution of the camera was pixels and the full resolution of the projector was pixels. The fringe period of the three-step phase-shifted fringe patterns was 18 pixels. The fringe periods of the multi-wavelength phase-shifting algorithm were 36, 216, and 1140 pixels for the horizontal direction and 18, 114, and 912 pixels for the vertical direction. The system was calibrated using Zhang and Huang’s method [30], and the camera coordinate system was chosen as the world coordinate system. The subset size for calculating in the SGM algorithm was pixels, and the and thresholds were 0.1 and 3, respectively. The minimum area for a connected region was the same as the number of pixels of a subset, pixels.

Figure 5.

Photograph of our prototype system.

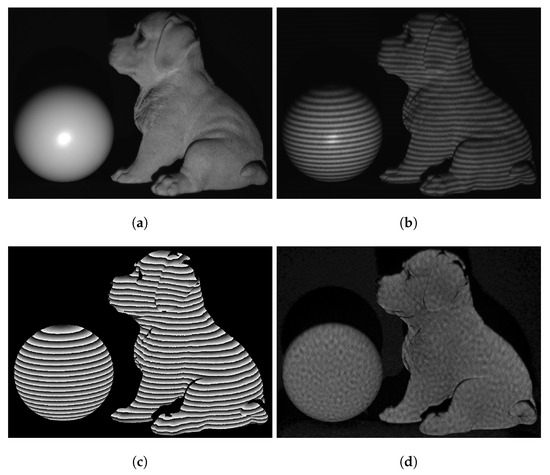

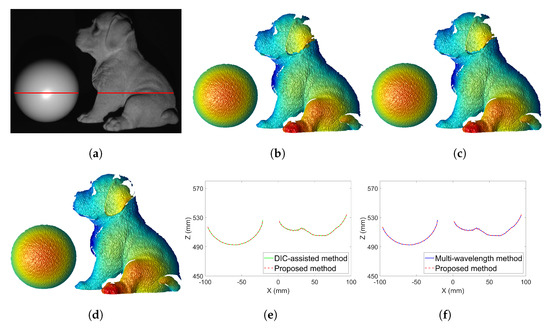

We compared the computational framework in this work with our previously developed DIC-assisted method [25] and with the multi-wavelength phase unwrapping method. The grid step in the DIC-assisted method was set to 1 pixel in order to perform pixel-wise searches. We measured two isolated 3D objects, a sphere and a complex sculpture, shown in Figure 6a. Prior to any 3D measurement, we obtained the flat-surface speckle image and its corresponding horizontal and vertical absolute phase maps. We then measured the 3D objects by projecting and capturing speckle-embedded three-step phase-shifted fringe patterns. Figure 6b shows one of the fringe images. The wrapped phase and the speckle image retrieved from the fringe images are shown in Figure 6c and Figure 6d, respectively.

Figure 6.

Measurement of two isolated 3D objects. (a) Photograph of the objects. (b) One of the fringe images. (c) Wrapped phase. (d) Recovered speckle image.

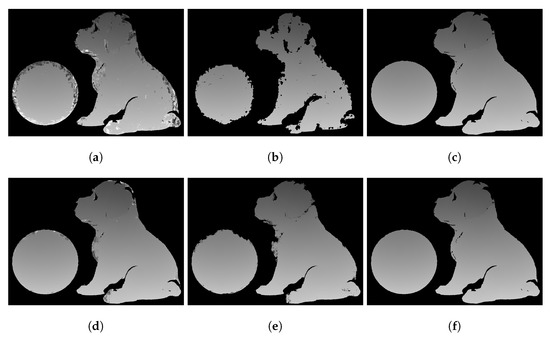

The wrapped phase was then unwrapped by the three aforementioned methods. The results are shown in Figure 7 and Figure 8. Figure 7a shows the low-accuracy absolute phase map generated by the DIC-assisted method, Figure 7b shows the partially unwrapped phase map after error removal using Figure 7a, and Figure 7c shows the final high-accuracy absolute phase map from the DIC-assisted method. Figure 7d shows the low-accuracy absolute phase map generated by the proposed SGM-based method, Figure 7e shows the partially unwrapped phase map using Figure 7d, and Figure 7f shows the final high-accuracy absolute phase map from the proposed method. Figure 7a,d show that the SGM algorithm produces a much better correspondence map, especially near the edges. Therefore, the edges do not need to be masked out, so more pixel correspondences are preserved in Figure 7e than in Figure 7b, and the local spatial phase unwrapping algorithm needs to be applied to far fewer points. Furthermore, DIC finds correspondences at an average rate of 10 pixels/s, whereas SGM finds correspondences at an average rate of 320 pixels/s, which is over 30 times faster.

Figure 7.

Phase unwrapping process of the 3D objects. (a) Low-accuracy absolute phase map generated from the DIC-assisted method. (b) Partially unwrapped phase map using (a) after removing error points. (c) Final high-accuracy absolute phase map from the DIC-assisted method. (d) Low-accuracy absolute phase map generated from the proposed method. (e) Partially unwrapped phase map using (d) after removing error points. (f) Final high-accuracy absolute phase map using the proposed method.

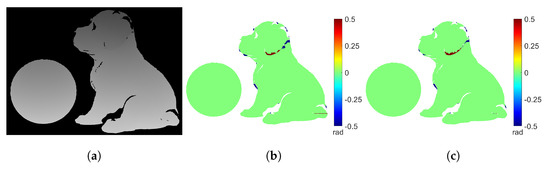

Figure 8.

Comparison of the absolute phase maps obtained from unwrapping with the DIC-assisted method, our proposed method, and the multi-wavelength method. (a) Absolute phase map from the multi-wavelength method. (b) Difference map between Figure 7c and (a) (3615 error points). (c) Difference map between Figure 7f and (a) (2997 error points).

For comparison, the absolute phase map obtained from the multi-wavelength method is shown in Figure 8a. Figure 8b shows the difference map between Figure 7c and Figure 8a, and Figure 8c shows the difference map between Figure 7f and Figure 8a. There are 3615 error points in Figure 8b and 2997 error points in Figure 8c; that is, the proposed method produces a similar or slightly smaller number of error points than the DIC-assisted method. Figure 8c also shows that our proposed method and the multi-wavelength method produce identical results in smooth areas. However, there are phase unwrapping errors near abrupt surface changes (e.g., around the ear of the dog sculpture). This is because, in these areas, the speckle pattern within a matching subset comes from surfaces at different heights, which makes it difficult for the SGM algorithm to determine the correct correspondence. Nevertheless, only a small number of pixels are unwrapped incorrectly in such a complex scene, which demonstrates the success of our proposed method.

Figure 9 shows the 3D reconstructions from the phase unwrapped with the DIC-assisted method, the proposed method, and the multi-wavelength method. Our proposed method reconstructed the details of the dog sculpture, including the edges, as well as the other two methods did. Figure 9e,f show the overlapping cross-sections of the reconstructed 3D shapes. As expected, the results from the proposed method and the other two methods are identical in smooth areas. One may notice that the 3D reconstructions contain large random noise caused by the embedded speckle pattern.

Figure 9.

Three-dimensional (3D) reconstructed shapes with the absolute unwrapped phase using different phase unwrapping methods. (a) Photograph of the 3D objects with the red line being the cross-section we examined. (b) 3D reconstruction using the unwrapped phase map shown in Figure 7c. (c) 3D reconstruction using the unwrapped phase map shown in Figure 7f. (d) 3D reconstruction using the unwrapped phase map shown in Figure 8a. (e) Overlap of the same cross-section of the reconstructed 3D shapes in (b,c). (f) Overlap of the same cross-section of the reconstructed 3D shapes in (c,d).

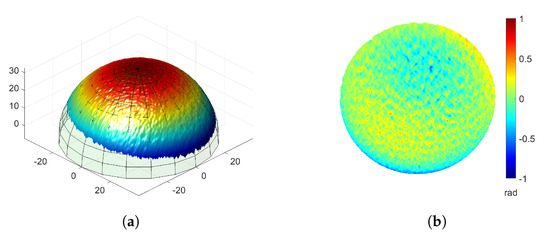

We further evaluated the measurement accuracy of our proposed method. An ideal sphere was fitted to the point cloud of the measured sphere shown in Figure 9c. A Gaussian filter with a size of pixels and a standard deviation of pixels was applied to the measured data to reduce the most significant random noise. The function of the ideal sphere is

$$
(x-x_0)^2 + (y-y_0)^2 + (z-z_0)^2 = r^2,
$$

where $(x_0, y_0, z_0)$ is the center of the sphere and $r$ is the radius of the sphere. The error map was created by taking the difference between the measured data and the ideal sphere. Figure 10a shows the overlap of the measured data and the ideal sphere. Figure 10b shows the error map. The root mean square (RMS) error is approximately and the mean of the error is with a standard deviation of . These are quite small compared to the radius of the sphere (approximately ).
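The sphere can be fitted as a linear least-squares problem because $(x-x_0)^2+(y-y_0)^2+(z-z_0)^2=r^2$ rearranges to $x^2+y^2+z^2 = 2x_0x+2y_0y+2z_0z+(r^2-x_0^2-y_0^2-z_0^2)$, which is linear in $x_0$, $y_0$, $z_0$, and $r^2-x_0^2-y_0^2-z_0^2$. The NumPy sketch below is one possible implementation. The fitting method the authors used is not stated, measuring the error radially (rather than, e.g., along $z$) is an assumption, and the synthetic sphere is arbitrary test data, not the measured sphere.

```python
import numpy as np

def fit_sphere(points):
    """Algebraic least-squares sphere fit to an (N, 3) point cloud."""
    p = np.asarray(points, dtype=float)
    A = np.column_stack([2.0 * p, np.ones(len(p))])
    b = np.sum(p ** 2, axis=1)
    sol, *_ = np.linalg.lstsq(A, b, rcond=None)
    center = sol[:3]
    radius = np.sqrt(sol[3] + center @ center)   # sol[3] = r^2 - |center|^2
    return center, radius

def radial_errors(points, center, radius):
    """Signed distance of each point from the fitted sphere surface."""
    return np.linalg.norm(np.asarray(points, dtype=float) - center, axis=1) - radius

# Synthetic camera-facing cap of a sphere (arbitrary units) with radial noise.
rng = np.random.default_rng(1)
n = 2000
theta = rng.uniform(0.0, 1.0, n)                 # polar angle from the -z axis
azim = rng.uniform(0.0, 2 * np.pi, n)
true_center, true_radius = np.array([5.0, -3.0, 120.0]), 25.0
dirs = np.column_stack([np.sin(theta) * np.cos(azim),
                        np.sin(theta) * np.sin(azim),
                        -np.cos(theta)])
pts = true_center + (true_radius + rng.normal(0.0, 0.01, n))[:, None] * dirs
center, radius = fit_sphere(pts)
err = radial_errors(pts, center, radius)
rms, mean, std = np.sqrt(np.mean(err ** 2)), err.mean(), err.std()
```

Only a partial cap is visible to the camera, which the algebraic fit handles without an initial guess; a geometric (iterative) refinement could follow if a less biased estimate is needed.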

Figure 10.

Accuracy evaluation of the measuring result of a sphere. (a) Overlap 3D measured data of the sphere shown in Figure 9c with an ideal sphere. (b) Error map of (a) (RMS: , Mean: , : ).

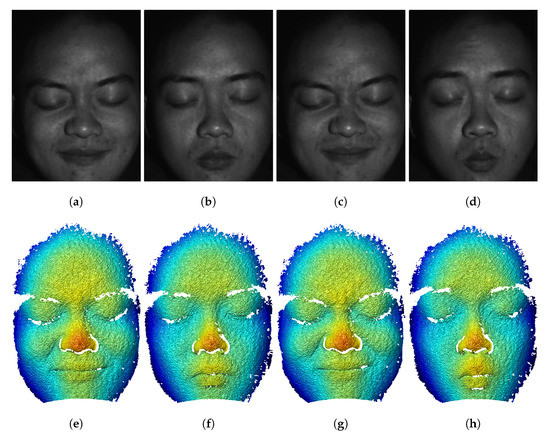

Since only three patterns are required for each 3D reconstruction, we also demonstrated the high-speed ability of the proposed method by capturing a dynamic human face. We used the same structured light system and changed the camera resolution to pixels in order to increase the camera capture rate to 300 Hz. The projector’s refresh rate was also set to 300 Hz. The threshold is increased to 0.18. Since we only need three patterns to reconstruct one 3D frame, the achieved 3D measurement speed is 100 Hz. Figure 11 shows a few typical frames of 3D reconstructions shown in Video S1. This experiment demonstrated the success of our proposed method for measuring dynamic scenes with complex surface geometry and texture.

Figure 11.

Typical 3D frames of capturing a moving face at 100 Hz (Video S1). (a–d) Texture images of the face. (e–h) 3D geometry of (a–d).

4. Conclusions

In this research, we proposed an SGM-assisted absolute phase unwrapping method on a single-camera and single-projector structured light system. The proposed method can measure the absolute depth of multiple isolated 3D objects with complex geometries without prior knowledge of the scene. Compared to our previously developed DIC-assisted method, the SGM-assisted method is more than 30 times faster in pixel-wise correspondence search and generates many fewer correspondence errors. This enabled us to successfully reconstruct one 3D frame using only three speckle-embedded phase-shifted patterns. The proposed method achieves a high measurement accuracy: an RMS error of with a mean of and a standard deviation of for a sphere with a radius of approximately using the speckle-embedded fringe pattern. Since only three patterns are required for one 3D reconstruction, the proposed method can achieve a high speed. We developed a high-speed prototype system that achieved a 100 Hz 3D measurement rate.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/s23010411/s1, Video S1: Captured 3D video of a moving face at 100 Hz.

Author Contributions

Conceptualization, Y.-H.L. and S.Z.; methodology, Y.-H.L. and S.Z.; software, Y.-H.L.; validation, Y.-H.L. and S.Z.; formal analysis, Y.-H.L. and S.Z.; writing—original draft preparation, Y.-H.L.; writing—review and editing, Y.-H.L. and S.Z.; visualization, Y.-H.L.; supervision, S.Z.; project administration, Y.-H.L. and S.Z.; funding acquisition, S.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by National Science Foundation grant number IIS-1763689.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Data underlying the results presented in this paper are not publicly available at this time but may be obtained from the authors upon reasonable request.

Acknowledgments

The authors would like to thank Wang Xiang for serving as the model to test our system. This work was sponsored by the National Science Foundation (NSF) under grant No. IIS-1763689. The views expressed here are those of the authors and not necessarily those of the NSF.

Conflicts of Interest

YHL: The author declares no conflict of interest. SZ: ORI LLC (C), Orbbec 3D (C), Vision Express Optics Inc (I). The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

1. Takeda, M.; Mutoh, K. Fourier transform profilometry for the automatic measurement of 3-D object shapes. Appl. Opt. 1983, 22, 3977–3982.

2. Kemao, Q. Windowed Fourier transform for fringe pattern analysis. Appl. Opt. 2004, 43, 2695–2702.

3. Schreiber, H.; Bruning, J.H. Optical Shop Testing, 3rd ed.; John Wiley & Sons, Ltd.: Hoboken, NJ, USA, 2006; pp. 547–666.

4. Zhang, S.; Li, X.; Yau, S.T. Multilevel quality-guided phase unwrapping algorithm for real-time three-dimensional shape reconstruction. Appl. Opt. 2007, 46, 50–57.

5. Zhao, M.; Huang, L.; Zhang, Q.; Su, X.; Asundi, A.; Kemao, Q. Quality-guided phase unwrapping technique: Comparison of quality maps and guiding strategies. Appl. Opt. 2011, 50, 6214–6224.

6. Chen, K.; Xi, J.; Yu, Y. Quality-guided spatial phase unwrapping algorithm for fast three-dimensional measurement. Opt. Commun. 2013, 294, 139–147.

7. Xiang, S.; Yang, Y.; Deng, H.; Wu, J.; Yu, L. Multi-anchor spatial phase unwrapping for fringe projection profilometry. Opt. Express 2019, 27, 33488–33503.

8. Zhang, S. High-Speed 3D Imaging with Digital Fringe Projection Techniques, 1st ed.; CRC Press: Boca Raton, FL, USA, 2016; pp. 50–52.

9. Sansoni, G.; Carocci, M.; Rodella, R. Three-dimensional vision based on a combination of gray-code and phase-shift light projection: Analysis and compensation of the systematic errors. Appl. Opt. 1999, 38, 6565–6573.

10. Zuo, C.; Huang, L.; Zhang, M.; Chen, Q.; Asundi, A. Temporal phase unwrapping algorithms for fringe projection profilometry: A comparative review. Opt. Lasers Eng. 2016, 85, 84–103.

11. Cheng, Y.Y.; Wyant, J.C. Two-wavelength phase shifting interferometry. Appl. Opt. 1984, 23, 4539–4543.

12. Cheng, Y.Y.; Wyant, J.C. Multiple-wavelength phase-shifting interferometry. Appl. Opt. 1985, 24, 804–807.

13. Wang, Y.; Zhang, S. Novel phase-coding method for absolute phase retrieval. Opt. Lett. 2012, 37, 2067–2069.

14. An, Y.; Hyun, J.S.; Zhang, S. Pixel-wise absolute phase unwrapping using geometric constraints of structured light system. Opt. Express 2016, 24, 18445–18459.

15. An, Y.; Zhang, S. Three-dimensional absolute shape measurement by combining binary statistical pattern matching with phase-shifting methods. Appl. Opt. 2017, 56, 5418–5426.

16. Zhang, J.; Guo, W.; Wu, Z.; Zhang, Q. Three-dimensional shape measurement based on speckle-embedded fringe patterns and wrapped phase-to-height lookup table. Opt. Rev. 2021, 28, 227–238.

17. Hu, P.; Yang, S.; Zhang, G.; Deng, H. High-speed and accurate 3D shape measurement using DIC-assisted phase matching and triple-scanning. Opt. Lasers Eng. 2021, 147, 106725.

18. Yin, W.; Feng, S.; Tao, T.; Huang, L.; Trusiak, M.; Chen, Q.; Zuo, C. High-speed 3D shape measurement using the optimized composite fringe patterns and stereo-assisted structured light system. Opt. Express 2019, 27, 2411–2431.

19. Gai, S.; Da, F.; Dai, X. Novel 3D measurement system based on speckle and fringe pattern projection. Opt. Express 2016, 24, 17686–17697.

20. Lohry, W.; Chen, V.; Zhang, S. Absolute three-dimensional shape measurement using coded fringe patterns without phase unwrapping or projector calibration. Opt. Express 2014, 22, 1287–1301.

21. Lohry, W.; Zhang, S. High-speed absolute three-dimensional shape measurement using three binary dithered patterns. Opt. Express 2014, 22, 26752–26762.

22. Zhong, K.; Li, Z.; Shi, Y.; Wang, C.; Lei, Y. Fast phase measurement profilometry for arbitrary shape objects without phase unwrapping. Opt. Lasers Eng. 2013, 51, 1213–1222.

23. Garcia, R.R.; Zakhor, A. Consistent Stereo-Assisted Absolute Phase Unwrapping Methods for Structured Light Systems. IEEE J. Sel. Top. Signal Process. 2012, 6, 411–424.

24. Pankow, M.; Justusson, B.; Waas, A.M. Three-dimensional digital image correlation technique using single high-speed camera for measuring large out-of-plane displacements at high framing rates. Appl. Opt. 2010, 49, 3418–3427.

25. Liao, Y.H.; Xu, M.; Zhang, S. Digital image correlation assisted absolute phase unwrapping. Opt. Express 2022, 30, 33022–33034.

26. Baker, S.; Matthews, I. Lucas-Kanade 20 Years On: A Unifying Framework. Int. J. Comput. Vis. 2004, 56, 221–255.

27. Hirschmuller, H. Stereo Processing by Semiglobal Matching and Mutual Information. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 328–341.

28. Floyd, R.W.; Steinberg, L. An Adaptive Algorithm for Spatial Grayscale. Proc. Soc. Inf. Disp. 1976, 17, 75–77.

29. Pan, B.; Wang, Z.; Lu, Z. Genuine full-field deformation measurement of an object with complex shape using reliability-guided digital image correlation. Opt. Express 2010, 18, 1011–1023.

30. Zhang, S.; Huang, P.S. Novel method for structured light system calibration. Opt. Eng. 2006, 45, 083601.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).