Music Sound Quality Assessment in Bimodal Cochlear Implant Users—Toward Improved Hearing Aid Fitting

Abstract

1. Introduction

2. Materials and Methods

2.1. Subjects

2.2. Setup

2.3. Music Stimuli

2.4. Sound Quality Assessment

2.5. Procedures

2.6. Statistical Analysis

3. Results

3.1. General Condition

3.2. Fitting Condition

4. Discussion

4.1. General Condition

4.2. Fitting Condition

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

| ID | Gender | Age Range [yrs] | CI Experience [yrs] | HA Experience [yrs] | LF PTA [dB HL] | LF Gain Step Size [dB] | Reliable: Classical | Reliable: Pop with Vocals | Reliable: Pop No Vocals |
|---|---|---|---|---|---|---|---|---|---|
| S01 | f | 71–75 | 5 | 20 | 70.0 | 5.1 | yes | yes | yes |
| S02 | m | 56–60 | 4 | 4 | 63.0 | 7.1 | no | yes | yes |
| S03 | m | 51–55 | 1.5 | 3 | 43.7 | 6.0 | yes | yes | yes |
| S04 | m | 66–70 | 1.6 | 55 | 82.3 | 7.2 | yes | yes | no |
| S05 | m | 51–55 | 1.1 | 27 | 39.3 | 4.5 | yes | yes | yes |
| S06 | m | 26–30 | 1.1 | 20 | 83.7 | 5.8 | yes | yes | yes |
| S07 | f | 76–80 | 1.0 | 11 | 38.3 | 12.6 | no | no | no |
| S08 | m | 26–30 | 6.0 | 16 | 76.0 | 7.0 | yes | yes | yes |
| S09 | m | 66–70 | 1.0 | 6 | 81.0 | 11.3 | yes | no | yes |
| S10 | m | 66–70 | 0.3 | 16 | 44.7 | 6.3 | yes | yes | yes |
| S11 | f | 36–40 | 0.6 | 16 | 55.3 | 8.0 | yes | yes | yes |
| S12 | m | 36–40 | 0.3 | 31 | 80.0 | 6.2 | yes | no | yes |
| S13 | m | 61–65 | 2 | 26 | 53.0 | 10.7 | yes | no | yes |

General Condition

| Condition | Description |
|---|---|
| DSL | Standard fitting based on DSL v5.0 |
| No overlap | Electric and acoustic stimulation do not share a common frequency range |
| Only CI | Stimulation via MHA turned off |
| Only HA | Stimulation via CI turned off |
| Linear gain | Compression ratio of the MHA set to 1:1 |
| Anchor | Noise-vocoded signal as a reference for poor sound quality |

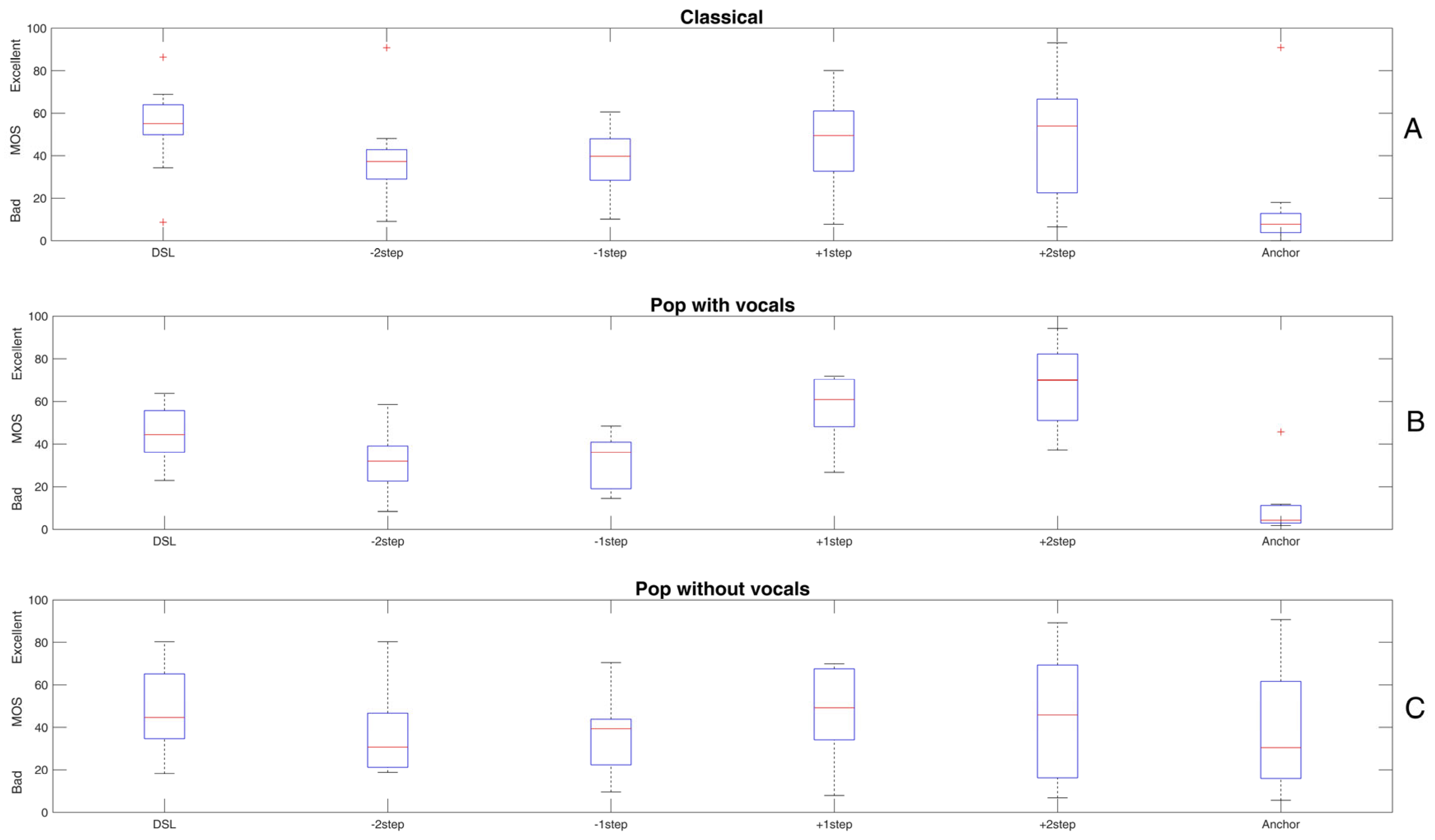

Fitting Condition

| Condition | Description |
|---|---|
| DSL | Standard fitting based on DSL v5.0 |
| −2 step | Low-frequency gain reduced by 2 individual steps |
| −1 step | Low-frequency gain reduced by 1 individual step |
| +1 step | Low-frequency gain increased by 1 individual step |
| +2 step | Low-frequency gain increased by 2 individual steps |
| Anchor | Noise-vocoded signal as a reference for poor sound quality |
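To make the fitting conditions concrete, the following minimal Python sketch converts each condition into a low-frequency gain offset in dB for a given subject. It assumes that an "individual step" equals the subject's LF gain step size from the subject table and that the offset is simply the step count times that step size, relative to the DSL v5.0 fitting; the source tables do not state how the offset is applied across frequency. The names `LF_STEP_DB`, `FITTING_STEPS`, and `lf_gain_offsets` are hypothetical, and the anchor condition is left out because it is a vocoded reference rather than a gain change.

```python
# Individual low-frequency gain step sizes in dB, copied from the subject table.
LF_STEP_DB = {
    "S01": 5.1, "S02": 7.1, "S03": 6.0, "S04": 7.2, "S05": 4.5,
    "S06": 5.8, "S07": 12.6, "S08": 7.0, "S09": 11.3, "S10": 6.3,
    "S11": 8.0, "S12": 6.2, "S13": 10.7,
}

# Number of individual steps per fitting condition (0 = DSL reference).
FITTING_STEPS = {
    "-2 step": -2,
    "-1 step": -1,
    "DSL": 0,
    "+1 step": 1,
    "+2 step": 2,
}


def lf_gain_offsets(subject_id: str) -> dict[str, float]:
    """Return the assumed low-frequency gain offset (dB re. DSL) per condition."""
    step_db = LF_STEP_DB[subject_id]
    # Assumption: the offset scales linearly with the number of steps.
    return {cond: round(n * step_db, 1) for cond, n in FITTING_STEPS.items()}


if __name__ == "__main__":
    for cond, offset in lf_gain_offsets("S07").items():
        print(f"{cond:>8}: {offset:+.1f} dB")
```

Under these assumptions the span between the −2 step and +2 step conditions differs considerably between subjects, from 18 dB for S05 (step size 4.5 dB) to about 50 dB for S07 (step size 12.6 dB).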

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Abdellatif, K.H.A.; Hessel, H.; Wächtler, M.; Müller, V.; Walger, M.; Meister, H. Music Sound Quality Assessment in Bimodal Cochlear Implant Users—Toward Improved Hearing Aid Fitting. Audiol. Res. 2025, 15, 151. https://doi.org/10.3390/audiolres15060151