Lip-Reading: Advances and Unresolved Questions in a Key Communication Skill

Abstract

1. Introduction

2. Tests to Assess Lip-Reading Skills in Children, Adults, and Hearing-Impaired Individuals

Practical Considerations, Limitations, and Future Directions

| Test Name | Target Group | Strengths | Weaknesses | Ecological Validity |
|---|---|---|---|---|
| Utley Lip-reading Test | General (historically used for children) | First attempt to standardize lip-reading evaluation; raised awareness of the need for objective assessment tools | Outdated; subjective scoring; lacks developmental sensitivity | Low: relies on isolated words and sentences |
| Tri-BAS (Build-a-Sentence) | Children | Structured closed-set format; uses picture aids; reduces cognitive demand; well suited to younger children | Limited in assessing spontaneous or contextual comprehension | Moderate: images provide contextual clues, but natural dialogue is absent |
| Illustrated Sentence Test (IST) | Children | Open-set format with context-rich illustrations; encourages naturalistic sentence processing | Requires more verbal output; may be harder for very young or language-delayed children | Moderate to High: contextual imagery enhances realism |
| Gist Test | Children | Focuses on overall sentence meaning; useful for assessing higher-level comprehension | Less precise for word-level analysis; performance may vary owing to the interpretive nature of the task | Moderate: emulates real-life gist-based understanding |
| CAVET (Children’s Audio-visual Enhancement Test) | Children | Simple carrier-phrase format; good for basic visual word recognition | Narrow focus on final-word repetition; limited sentence context | Low: lacks conversational or contextual elements |
| CUNY Sentence Test | Adults with hearing loss or cochlear implants | Validated with clinical populations; used in research and clinical tracking; compatible with neuroimaging | Sensitive to subject fatigue; concentration-dependent; low realism | Low to Moderate: uses full sentences but lacks conversational flow and speaker variability |

3. The Development of Lip-Reading Abilities

3.1. Infant Sensitivity to Visual Speech and Perceptual Narrowing

3.2. Language-Specific Tuning in Childhood

3.3. Declining Lip-Reading Ability with Aging

4. Culture and Lip-Reading

4.1. Developmental and Cultural Variations in Lip-Reading Ability

4.2. Tonal Languages and Reduced Visual Influences

5. Lip-Reading Ability in Atypical Development

5.1. Lip-Reading in Hearing-Impaired and Cochlear-Implanted Individuals

5.2. Lip-Reading Abilities in Individuals with Dyslexia

5.3. Visual Speech Processing in Children with Specific Language Impairments (SLI)

6. Lip-Reading in Noisy Environments

7. The Neural Basis of Lip-Reading

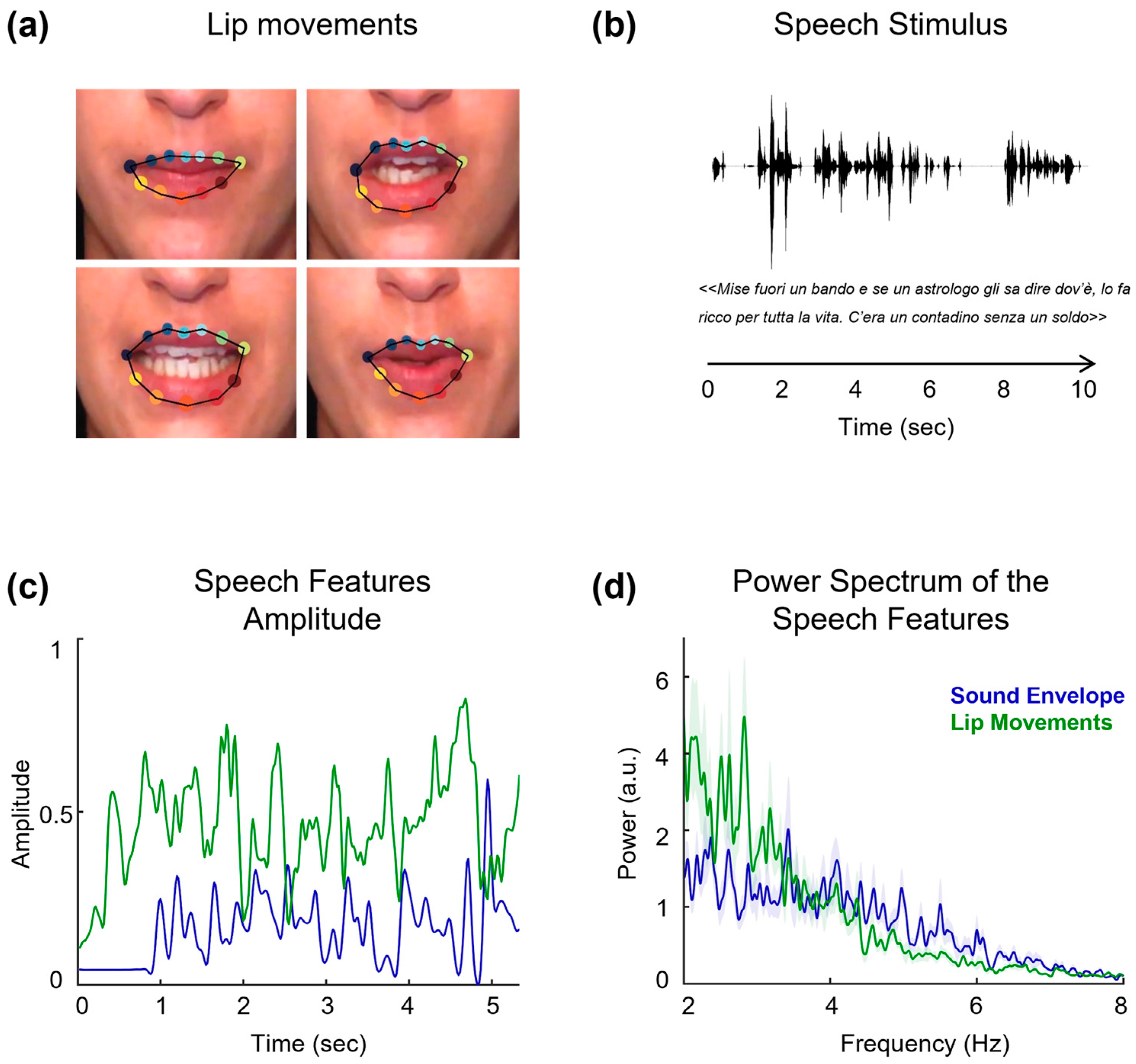

7.1. Neural Tracking and Integration of Visual Speech

7.2. Impact of Face Masks on Neural Processing

7.3. Developmental Cortical Mapping of Visual Speech

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AV | Audiovisual |
| IST | Illustrated Sentence Test |
| WM | Working memory |
| PS | Processing speed |
| CAVET | Children’s Audio-visual Enhancement Test |
| Tri-BAS | 3 × 3 Build-A-Sentence |
| CUNY | City University of New York |
| ESs | English speakers |
| JSs | Japanese speakers |
| SLI | Specific language impairments |
| SNRs | Signal-to-noise ratios |
| SSN | Speech-shaped noise |
| MEG | Magnetoencephalography |
| EEG | Electroencephalography |
| fNIRS | Functional near-infrared spectroscopy |
| TVSA | Temporal visual speech area |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Battista, M.; Collesei, F.; Orzan, E.; Fantoni, M.; Bottari, D. Lip-Reading: Advances and Unresolved Questions in a Key Communication Skill. Audiol. Res. 2025, 15, 89. https://doi.org/10.3390/audiolres15040089
