Prediction of Auditory Performance in Cochlear Implants Using Machine Learning Methods: A Systematic Review

Abstract

1. Introduction

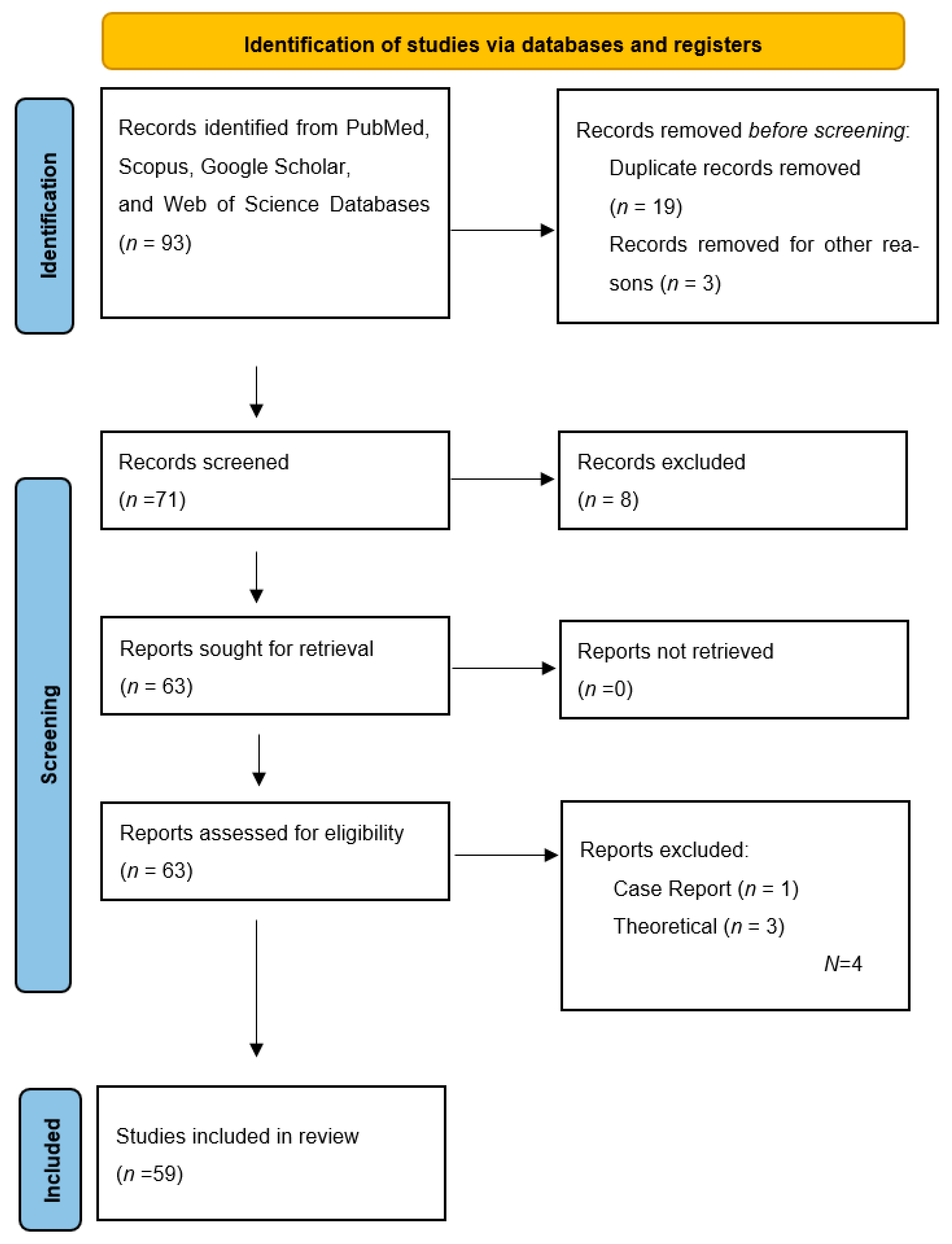

2. Materials and Methods

2.1. Data Resources and Search Strategy

2.2. Inclusion and Exclusion Criteria

Inclusion criteria:

- Cochlear implantation studies using machine learning models;

- International full-text studies published in peer-reviewed journals.

Exclusion criteria:

- Audiological studies using machine learning models in areas other than cochlear implantation;

- Theoretical studies;

- Abstracts published at conferences;

- Case reports.

2.3. Selection of Studies

2.4. Data Analysis

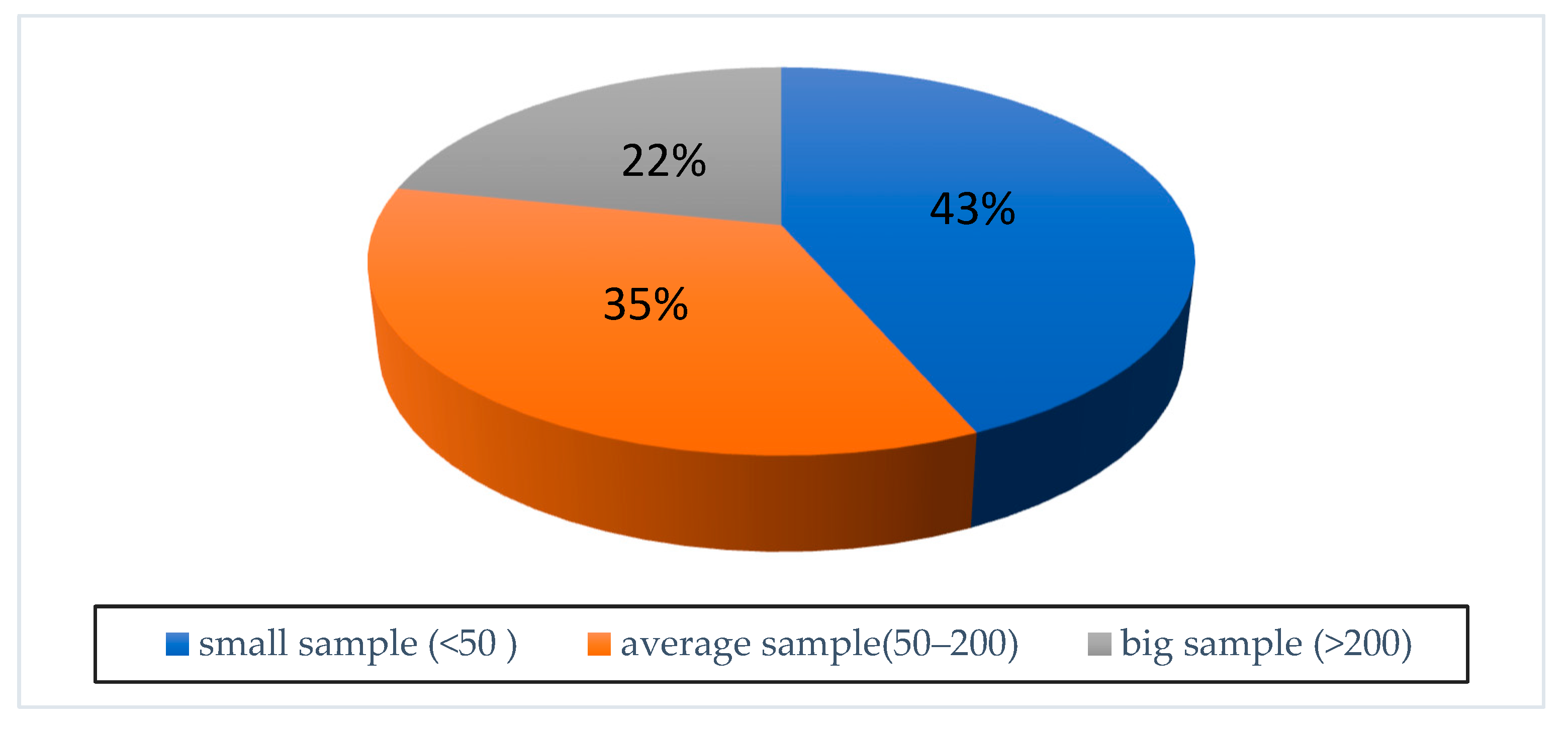

3. Results

4. Discussion

4.1. Preoperative Candidacy

4.2. Intraoperative–Postoperative Measurements

4.3. Speech Perception

4.4. Speech Perception in Noise

4.5. Other Studies

4.6. Evaluation in Terms of Clinical Practice

5. Conclusions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Chadha, S.; Kamenov, K.; Cieza, A. The world report on hearing, 2021. Bull. World Health Organ. 2021, 99, 242–242A. Available online: https://pmc.ncbi.nlm.nih.gov/articles/PMC8085630/ (accessed on 3 May 2025).

2. Deep, N.; Dowling, E.; Jethanamest, D.; Carlson, M. Cochlear Implantation: An Overview. J. Neurol. Surg. B Skull Base 2019, 80, 169–177.

3. Goudey, B.; Plant, K.; Kiral, I.; Jimeno-Yepes, A.; Swan, A.; Gambhir, M.; Büchner, A.; Kludt, E.; Eikelboom, R.H.; Sucher, C.; et al. A MultiCenter Analysis of Factors Associated with Hearing Outcome for 2735 Adults with Cochlear Implants. Trends Hear. 2021, 25, 23312165211037525.

4. Pisoni, D.B.; Kronenberger, W.G.; Harris, M.S.; Moberly, A.C. Three challenges for future research on cochlear implants. World J. Otorhinolaryngol. Head Neck Surg. 2017, 3, 240–254.

5. Boisvert, I.; Reis, M.; Au, A.; Cowan, R.; Dowell, R.C. Cochlear implantation outcomes in adults: A scoping review. PLoS ONE 2020, 15, e0232421.

6. Lazard, D.S.; Vincent, C.; Venail, F.; Van de Heyning, P.; Truy, E.; Sterkers, O.; Skarzynski, P.H.; Skarzynski, H.; Schauwers, K.; O’Leary, S.; et al. Pre-, Per- and Postoperative Factors Affecting Performance of Postlinguistically Deaf Adults Using Cochlear Implants: A New Conceptual Model over Time. PLoS ONE 2012, 7, e48739.

7. Blamey, P.; Artieres, F.; Başkent, D.; Bergeron, F.; Beynon, A.; Burke, E.; Dillier, N.; Dowell, R.; Fraysse, B.; Gallégo, S.; et al. Factors Affecting Auditory Performance of Postlinguistically Deaf Adults Using Cochlear Implants: An Update with 2251 Patients. Audiol. Neurotol. 2013, 18, 36–47.

8. Roditi, R.E.; Poissant, S.F.; Bero, E.M.; Lee, D.J. A Predictive Model of Cochlear Implant Performance in Postlingually Deafened Adults. Otol. Neurotol. 2009, 30, 449–454.

9. Crowson, M.G.; Lin, V.; Chen, J.M.; Chan, T.C.Y. Machine Learning and Cochlear Implantation—A Structured Review of Opportunities and Challenges. Otol. Neurotol. 2020, 41, E36–E45.

10. Crowson, M.G.; Dixon, P.; Mahmood, R.; Lee, J.W.; Shipp, D.; Le, T.; Lin, V.; Chen, J.; Chan, T.C.Y. Predicting Postoperative Cochlear Implant Performance Using Supervised Machine Learning. Otol. Neurotol. 2020, 41, E1013–E1023.

11. Mo, J.T.; Chong, D.S.; Sun, C.; Mohapatra, N.; Jiam, N.T. Machine-Learning Predictions of Cochlear Implant Functional Outcomes: A Systematic Review. Ear Hear. 2025. Available online: https://pubmed.ncbi.nlm.nih.gov/39876044/ (accessed on 3 May 2025).

12. Weng, J.; Xue, S.; Wei, X.; Lu, S.; Xie, J.; Kong, Y.; Shen, M.; Chen, B.; Chen, J.; Zou, X.; et al. Machine learning-based prediction of the outcomes of cochlear implantation in patients with inner ear malformation. Eur. Arch. Oto-Rhino-Laryngol. 2024, 281, 3535–3545.

13. Shafieibavani, E.; Goudey, B.; Kiral, I.; Zhong, P.; Jimeno-Yepes, A.; Swan, A.; Gambhir, M.; Buechner, A.; Kludt, E.; Eikelboom, R.H.; et al. Predictive models for cochlear implant outcomes: Performance, generalizability, and the impact of cohort size. Trends Hear. 2021, 25, 23312165211066174.

14. Lu, S.; Xie, J.; Wei, X.; Kong, Y.; Chen, B.; Chen, J.; Zhang, L.; Yang, M.; Xue, S.; Shi, Y.; et al. Machine Learning-Based Prediction of the Outcomes of Cochlear Implantation in Patients with Cochlear Nerve Deficiency and Normal Cochlea: A 2-Year Follow-Up of 70 Children. Front. Neurosci. 2022, 16, 895560.

15. Jiang, F.; Jiang, Y.; Zhi, H.; Dong, Y.; Li, H.; Ma, S.; Wang, Y.; Dong, Q.; Shen, H.; Wang, Y. Artificial intelligence in healthcare: Past, present and future. Stroke Vasc. Neurol. 2017, 2, 230–243.

16. Hamet, P.; Tremblay, J. Artificial intelligence in medicine. Metabolism 2017, 69, S36–S40.

17. Miller, T. Explanation in artificial intelligence: Insights from the social sciences. Artif. Intell. 2019, 267, 1–38.

18. Saeed, H.S.; Stivaros, S.M.; Saeed, S.R. The potential for machine learning to improve precision medicine in cochlear implantation. Cochlear Implant. Int. 2019, 20, 229–230.

19. Erickson, B.J.; Korfiatis, P.; Akkus, Z.; Kline, T.L. Machine Learning for Medical Imaging. RadioGraphics 2017, 37, 505–515.

20. Le, Q.V. Building high-level features using large scale unsupervised learning. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 8595–8598.

21. Botros, A.; van Dijk, B.; Killian, M. AutoNRT™: An automated system that measures ECAP thresholds with the Nucleus® Freedom™ cochlear implant via machine intelligence. Artif. Intell. Med. 2007, 40, 15–28.

22. Heman-Ackah, Y.D. Diagnostic tools in laryngology. Curr. Opin. Otolaryngol. Head Neck Surg. 2004, 12, 549–552.

23. Thaler, E.R.; Hanson, C.W. Use of an electronic nose to diagnose bacterial sinusitis. Am. J. Rhinol. 2006, 20, 170–172.

24. Burgansky-Eliash, Z.; Wollstein, G.; Chu, T.; Ramsey, J.D.; Glymour, C.; Noecker, R.J.; Ishikawa, H.; Schuman, J.S. Optical Coherence Tomography Machine Learning Classifiers for Glaucoma Detection: A Preliminary Study. Investig. Opthalmology Vis. Sci. 2005, 46, 4147.

25. McCullagh, P.; Wang, H.; Zheng, H.; Lightbody, G.; McAllister, G. A comparison of supervised classification methods for auditory brainstem response determination. Stud. Health Technol. Inform. 2007, 129 Pt 2, 1289–1293.

26. Holmes, A.E.; Shrivastav, R.; Krause, L.; Siburt, H.W.; Schwartz, E. Speech based optimization of cochlear implants. Int. J. Audiol. 2012, 51, 806–816.

27. Francart, T.; McDermott, H.J. Psychophysics, Fitting, and Signal Processing for Combined Hearing Aid and Cochlear Implant Stimulation. Ear Hear. 2013, 34, 685–700.

28. Konrad-Martin, D.; Reavis, K.M.; McMillan, G.P.; Dille, M.F. Multivariate DPOAE metrics for identifying changes in hearing: Perspectives from ototoxicity monitoring. Int. J. Audiol. 2012, 51, S51–S62.

29. Song, X.D.; Wallace, B.M.; Gardner, J.R.; Ledbetter, N.M.; Weinberger, K.Q.; Barbour, D.L. Fast, Continuous Audiogram Estimation Using Machine Learning. Ear Hear. 2015, 36, e326–e335.

30. Kong, Y.Y.; Mullangi, A.; Kokkinakis, K. Classification of Fricative Consonants for Speech Enhancement in Hearing Devices. PLoS ONE 2014, 9, e95001.

31. Tan, L.; Holland, S.K.; Deshpande, A.K.; Chen, Y.; Choo, D.I.; Lu, L.J. A semi-supervised Support Vector Machine model for predicting the language outcomes following cochlear implantation based on pre-implant brain fMRI imaging. Brain Behav. 2015, 5, e00391.

32. Goehring, T.; Bolner, F.; Monaghan, J.J.M.; van Dijk, B.; Zarowski, A.; Bleeck, S. Speech enhancement based on neural networks improves speech intelligibility in noise for cochlear implant users. Hear Res. 2017, 344, 183–194.

33. Wathour, J.; Govaerts, P.J.; Lacroix, E.; Naïma, D. Effect of a CI Programming Fitting Tool with Artificial Intelligence in Experienced Cochlear Implant Patients. Otol. Neurotol. 2023, 44, 209–215.

34. Borjigin, A.; Kokkinakis, K.; Bharadwaj, H.M.; Stohl, J.S. Deep learning restores speech intelligibility in multi-talker interference for cochlear implant users. Sci. Rep. 2024, 14, 13241.

35. Koyama, H. Machine learning application in otology. Auris Nasus Larynx 2024, 51, 666–673.

36. Alohali, Y.A.; Fayed, M.S.; Abdelsamad, Y.; Almuhawas, F.; Alahmadi, A.; Mesallam, T.; Hagr, A. Machine Learning and Cochlear Implantation: Predicting the Post-Operative Electrode Impedances. Electronics 2023, 12, 2720.

37. Schuerch, K.; Wimmer, W.; Dalbert, A.; Rummel, C.; Caversaccio, M.; Mantokoudis, G.; Gawliczek, T.; Weder, S. An intracochlear electrocochleography dataset—From raw data to objective analysis using deep learning. Sci. Data 2023, 10, 157.

38. Waltzman, S.B.; Kelsall, D.C. The Use of Artificial Intelligence to Program Cochlear Implants. Otol. Neurotol. 2020, 41, 452–457.

39. Hafeez, N.; Du, X.; Boulgouris, N.; Begg, P.; Irving, R.; Coulson, C.; Tourrel, G. Electrical impedance guides electrode array in cochlear implantation using machine learning and robotic feeder. Hear Res. 2021, 412, 108371.

40. Lai, Y.H.; Tsao, Y.; Lu, X.; Chen, F.; Su, Y.T.; Chen, K.C.; Chen, Y.H.; Chen, L.C.; Li, L.P.H.; Lee, C.H. Deep learning-based noise reduction approach to improve speech intelligibility for cochlear implant recipients. Ear Hear. 2018, 39, 795–809.

41. Gajecki, T.; Nogueira, W. A Fused Deep Denoising Sound Coding Strategy for Bilateral Cochlear Implants. IEEE Trans. Biomed. Eng. 2024, 71, 2232–2242.

42. Gajecki, T.; Zhang, Y.; Nogueira, W. A Deep Denoising Sound Coding Strategy for Cochlear Implants. IEEE Trans. Biomed. Eng. 2023, 70, 2700–2709.

43. de Nobel, J.; Kononova, A.V.; Briaire, J.J.; Frijns, J.H.M.; Bäck, T.H.W. Optimizing stimulus energy for cochlear implants with a machine learning model of the auditory nerve. Hear Res. 2023, 432, 108741.

44. Mamun, N.; Khorram, S.; Hansen, J.H.L. Convolutional Neural Network-Based Speech Enhancement for Cochlear Implant Recipients. In Proceedings of the Interspeech 2019, Graz, Austria, 15–19 September 2019; ISCA: Graz, Austria, 2019; pp. 4265–4269.

45. Henry, F.; Parsi, A.; Glavin, M.; Jones, E. Experimental Investigation of Acoustic Features to Optimize Intelligibility in Cochlear Implants. Sensors 2023, 23, 7553.

46. Chu, K.; Throckmorton, C.; Collins, L.; Mainsah, B. Using machine learning to mitigate the effects of reverberation and noise in cochlear implants. Proc. Mtgs. Acoust. 2018, 33, 050003.

47. Sinha, R.; Azadpour, M. Employing deep learning model to evaluate speech information in acoustic simulations of Cochlear implants. Sci. Rep. 2024, 14, 24056.

48. Erfanian Saeedi, N.; Blamey, P.J.; Burkitt, A.N.; Grayden, D.B. An integrated model of pitch perception incorporating place and temporal pitch codes with application to cochlear implant research. Hear Res. 2017, 344, 135–147.

49. Hajiaghababa, F.; Marateb, H.R.; Kermani, S. The design and validation of a hybrid digital-signal-processing plug-in for traditional cochlear implant speech processors. Comput. Methods Programs Biomed. 2018, 159, 103–109.

50. Hazrati, O.; Sadjadi, S.O.; Hansen, J.H.L. Robust and efficient environment detection for adaptive speech enhancement in cochlear implants. In Proceedings of the 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Florence, Italy, 4–9 May 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 900–904.

51. Desmond, J.M.; Collins, L.M.; Throckmorton, C.S. Using channel-specific statistical models to detect reverberation in cochlear implant stimuli. J. Acoust. Soc. Am. 2013, 134, 1112–1120.

52. Zheng, Q.; Wu, Y.; Zhu, J.; Cao, L.; Bai, Y.; Ni, G. Cochlear Implant Artifacts Removal in EEG-Based Objective Auditory Rehabilitation Assessment. IEEE Trans. Neural Syst. Rehabil. Eng. 2024, 32, 2854–2863.

53. Kyong, J.S.; Suh, M.W.; Han, J.J.; Park, M.K.; Noh, T.S.; Oh, S.H.; Lee, J.H. Cross-Modal Cortical Activity in the Brain Can Predict Cochlear Implantation Outcome in Adults: A Machine Learning Study. J. Int. Adv. Otol. 2021, 17, 380–386.

54. Kang, Y.; Zheng, N.; Meng, Q. Deep Learning-Based Speech Enhancement With a Loss Trading Off the Speech Distortion and the Noise Residue for Cochlear Implants. Front. Med. 2021, 8, 740123.

55. Pavelchek, C.; Michelson, A.P.; Walia, A.; Ortmann, A.; Herzog, J.; Buchman, C.A.; Shew, M.A. Imputation of missing values for cochlear implant candidate audiometric data and potential applications. PLoS ONE 2023, 18, e0281337.

56. Ashihara, T.; Furukawa, S.; Kashino, M. Estimating Pitch Information From Simulated Cochlear Implant Signals With Deep Neural Networks. Trends Hear. 2024, 28, 23312165241298606.

57. Prentiss, S.; Snapp, H.; Zwolan, T. Audiology Practices in the Preoperative Evaluation and Management of Adult Cochlear Implant Candidates. JAMA Otolaryngol.–Head Neck Surg. 2020, 146, 136.

58. Holder, J.T.; Reynolds, S.M.; Sunderhaus, L.W.; Gifford, R.H. Current Profile of Adults Presenting for Preoperative Cochlear Implant Evaluation. Trends Hear. 2018, 22, 2331216518755288.

59. Sennaroglu, L.; Saatci, I.; Aralasmak, A.; Gursel, B.; Turan, E. Magnetic resonance imaging versus computed tomography in pre-operative evaluation of cochlear implant candidates with congenital hearing loss. J. Laryngol. Otol. 2002, 116, 804–810.

60. Verschuur, C.; Hellier, W.; Teo, C. An evaluation of hearing preservation outcomes in routine cochlear implant care: Implications for candidacy. Cochlear Implants Int. 2016, 17 (Suppl. 1), 62–65.

61. Cinar, B.C.; Özses, M. How differ eCAP types in cochlear implants users with and without inner ear malformations: Amplitude growth function, spread of excitation, refractory recovery function. Eur. Arch. Oto-Rhino-Laryngol. 2025, 282, 731–742.

62. Patro, A.; Perkins, E.L.; Ortega, C.A.; Lindquist, N.R.; Dawant, B.M.; Gifford, R.; Haynes, D.S.; Chowdhury, N. Machine Learning Approach for Screening Cochlear Implant Candidates: Comparing with the 60/60 Guideline. Otol. Neurotol. 2023, 44, E486–E491.

63. Zeitler, D.M.; Buchlak, Q.D.; Ramasundara, S.; Farrokhi, F.; Esmaili, N. Predicting Acoustic Hearing Preservation Following Cochlear Implant Surgery Using Machine Learning. Laryngoscope 2024, 134, 926–936.

64. Carlson, M.L.; Carducci, V.; Deep, N.L.; DeJong, M.D.; Poling, G.L.; Brufau, S.R. AI model for predicting adult cochlear implant candidacy using routine behavioral audiometry. Am. J. Otolaryngol.—Head Neck Med. Surg. 2024, 45, 104337.

65. İkiz Bozsoy, M.; Parlak Kocabay, A.; Koska, B.; Demirtaş Yılmaz, B.; Özses, M.; Avcı, N.B.; Akkaplan, S.; Ateş, Z.B.; Çınar, B.Ç.; Yaralı, M.; et al. Intraoperative impedance and ECAP results in cochlear implant recipients with inner ear malformations and normal cochlear anatomy: A retrospective analysis. Acta Otolaryngol. 2025, 145, 222–228.

66. Yıldırım Gökay, N.; Demirtaş, B.; Özbal Batuk, M.; Yücel, E.; Sennaroğlu, G. Auditory performance and language skills in children with auditory brainstem implants and cochlear implants. Eur. Arch. Oto-Rhino-Laryngol. 2024, 281, 4153–4159.

67. Budak, Z.; Batuk, M.O.; D’Alessandro, H.D.; Sennaroglu, G. Hearing-related quality of life assessment of pediatric cochlear implant users with inner ear malformations. Int. J. Pediatr. Otorhinolaryngol. 2022, 160, 111243.

68. Hawthorne, G.; Hogan, A.; Giles, E.; Stewart, M.; Kethel, L.; White, K.; Plaith, B.; Pedley, K.; Rushbrooke, E.; Taylor, A. Evaluating the health-related quality of life effects of cochlear implants: A prospective study of an adult cochlear implant program. Int. J. Audiol. 2004, 43, 183–192.

69. Vaerenberg, B.; Smits, C.; De Ceulaer, G.; Zir, E.; Harman, S.; Jaspers, N.; D’Hondt, C.; Frijns, J.H.M.; De Beukelaer, C.; Govaerts, P.J. Cochlear implant programming: A global survey on the state of the art. Sci. World J. 2014, 2014, 501738.

70. Wolfe, J.; Schafer, E.C. Programming Cochlear Implants, 2nd ed.; Plural Publishing: San Diego, CA, USA, 2015; 408p.

71. Thangavelu, K.; Nitzge, M.; Weiß, R.M.; Mueller-Mazzotta, J.; Stuck, B.A.; Reimann, K. Role of cochlear reserve in adults with cochlear implants following post-lingual hearing loss. Eur. Arch. Oto-Rhino-Laryngol. 2023, 280, 1063–1071.

72. Sawaf, T.; Vovos, R.; Hadford, S.; Woodson, E.; Anne, S. Utility of intraoperative neural response telemetry in pediatric cochlear implants. Int. J. Pediatr. Otorhinolaryngol. 2022, 162, 111298.

73. Demirtaş, B.; Özbal Batuk, M.; Dinçer D’Alessandro, H.; Sennaroğlu, G. The Audiological Profile and Rehabilitation of Patients with Incomplete Partition Type II and Large Vestibular Aqueducts. J. Int. Adv. Otol. 2024, 20, 196–202.

74. Schraivogel, S.; Weder, S.; Mantokoudis, G.; Caversaccio, M.; Wimmer, W. Predictive Models for Radiation-Free Localization of Cochlear Implants’ Most Basal Electrode using Impedance Telemetry. IEEE Trans. Biomed. Eng. 2024, 72, 1453–1464.

75. Skidmore, J.; Xu, L.; Chao, X.; Riggs, W.J.; Pellittieri, A.; Vaughan, C.; Ning, X.; Wang, R.; Luo, J.; He, S. Prediction of the Functional Status of the Cochlear Nerve in Individual Cochlear Implant Users Using Machine Learning and Electrophysiological Measures. Ear Hear. 2021, 42, 180–192.

76. Calmels, M.N.; Saliba, I.; Wanna, G.; Cochard, N.; Fillaux, J.; Deguine, O.; Fraysse, B. Speech perception and speech intelligibility in children after cochlear implantation. Int. J. Pediatr. Otorhinolaryngol. 2004, 68, 347–351.

77. Dillon, M.T.; Buss, E.; Adunka, M.C.; King, E.R.; Pillsbury, H.C.; Adunka, O.F.; Buchman, C.A. Long-term Speech Perception in Elderly Cochlear Implant Users. JAMA Otolaryngol.–Head Neck Surg. 2013, 139, 279.

78. Yildirim Gökay, N.; Gündüz, B.; Karamert, R.; Tutar, H. Postoperative Auditory Progress in Cochlear-Implanted Children with Auditory Neuropathy. Am. J. Audiol. 2025, 34, 29–36.

79. Yıldırım Gökay, N.; Yücel, E. Bilateral cochlear implantation: An assessment of language sub-skills and phoneme recognition in school-aged children. Eur. Arch. Oto-Rhino-Laryngol. 2021, 278, 2093–2100.

80. Gajęcki, T.; Nogueira, W. Deep learning models to remix music for cochlear implant users. J. Acoust. Soc. Am. 2018, 143, 3602–3615.

81. Chang, Y.J.; Han, J.Y.; Chu, W.C.; Li, L.P.H.; Lai, Y.H. Enhancing music recognition using deep learning-powered source separation technology for cochlear implant users. J. Acoust. Soc. Am. 2024, 155, 1694–1703.

82. Yüksel, M.; Taşdemir, İ.; Çiprut, A. Listening Effort in Prelingual Cochlear Implant Recipients: Effects of Spectral and Temporal Auditory Processing and Contralateral Acoustic Hearing. Otol. Neurotol. 2022, 43, e1077–e1084.

83. Caldwell, A.; Nittrouer, S. Speech Perception in Noise by Children With Cochlear Implants. J. Speech Lang. Hear. Res. 2013, 56, 13–30.

84. Dunn, C.C.; Noble, W.; Tyler, R.S.; Kordus, M.; Gantz, B.J.; Ji, H. Bilateral and Unilateral Cochlear Implant Users Compared on Speech Perception in Noise. Ear Hear. 2010, 31, 296–298.

85. Oxenham, A.J.; Kreft, H.A. Speech Perception in Tones and Noise via Cochlear Implants Reveals Influence of Spectral Resolution on Temporal Processing. Trends Hear. 2014, 18, 2331216514553783.

86. Srinivasan, A.G.; Padilla, M.; Shannon, R.V.; Landsberger, D.M. Improving speech perception in noise with current focusing in cochlear implant users. Hear Res. 2013, 299, 29–36.

87. Atay, G.; Tellioğlu, B.; Tellioğlu, H.T.; Avcı, N.B.; Çınar, B.Ç.; Şekeroğlu, H.T. Evaluation of auditory pathways and comorbid inner ear malformations in pediatric patients with Duane retraction syndrome. Int. J. Pediatr. Otorhinolaryngol. 2025, 188, 112207.

88. Gaultier, C.; Goehring, T. Recovering speech intelligibility with deep learning and multiple microphones in noisy-reverberant situations for people using cochlear implants. J. Acoust. Soc. Am. 2024, 155, 3833–3847.

89. Ocak, E.; Kocaoz, D.; Acar, B.; Topcuoglu, M. Radiological Evaluation of Inner Ear with Computed Tomography in Patients with Unilateral Non-Pulsatile Tinnitus. J. Int. Adv. Otol. 2018, 14, 273–277.

90. Paquette, S.; Gouin, S.; Lehmann, A. Improving emotion perception in cochlear implant users: Insights from machine learning analysis of EEG signals. BMC Neurol. 2024, 24, 115.

91. Patro, A.; Lawrence, P.J.; Tamati, T.N.; Ning, X.; Moberly, A.C. Using Machine Learning and Multifaceted Preoperative Measures to Predict Adult Cochlear Implant Outcomes: A Prospective Pilot Study. Ear Hear. 2025, 46, 543–549.

92. Saeed, H.S.; Fergie, M.; Mey, K.; West, N.; Bille, M.; Caye-Thomasen, P.; Nash, R.; Saeed, S.R.; Stivaros, S.M.; Black, G.; et al. Enlarged Vestibular Aqueduct and Associated Inner Ear Malformations: Hearing Loss Prognostic Factors and Data Modeling from an International Cohort. J. Int. Adv. Otol. 2023, 19, 454–460.

93. Patro, A.; Freeman, M.H.; Haynes, D.S. Machine Learning to Predict Adult Cochlear Implant Candidacy. Curr. Otorhinolaryngol. Rep. 2024, 12, 45–49.

94. Zhang, M.; Tang, E.; Ding, H.; Zhang, Y. Artificial Intelligence and the Future of Communication Sciences and Disorders: A Bibliometric and Visualization Analysis. J. Speech Lang. Hear. Res. 2024, 67, 4369–4390.

| Years | Number of Articles | Featured Topics and Methods |
|---|---|---|
| 2013–2017 | 12 | Early machine learning applications; early deep learning approaches, experimental data analysis |
| 2018–2022 | 22 | Predictive models, dataset optimizations; increasing use of “machine learning”, basic AI applications |
| 2023–2025 | 25 | Integration of multiple machine learning methods, innovative techniques; transition to broad-based deep learning applications, advanced algorithms |
| Total | 59 | |

| Algorithm | Field of Use | Evaluation Method | Regularization | Performance Metrics |
|---|---|---|---|---|
| MAP | Speech in Noise | 10-fold CV | - | Accuracy |
| RVM | Speech in Noise | Train-Test Split | L2 | Accuracy |
| GMM | Speech in Noise | 5-fold CV | - | Accuracy |
| SVM | Post-Op Speech Perception | 10-fold CV | L1/L2 | Accuracy, F1-score |
| ANN | Electrode Design | 10-fold CV | Dropout | Accuracy |
| DNN | Speech in Noise | Train-Test Split | Dropout, L2 | Accuracy, MSE |
| KNN | Speech Perception | 5-fold CV | - | Accuracy |
| Random Forest | Electrophysiological Measurements | 10-fold CV | - | Accuracy, ROC-AUC |
| LSTM | Speech in Noise | 10-fold CV | Dropout | Accuracy, F1-score |
| CNN | Electrode Placement | Train-Test Split | Batch Norm, Dropout | Accuracy |
| DDAE | Speech in Noise | 5-fold CV | - | MSE, Accuracy |
| MLP | Signal Processing | Train-Test Split | Dropout | Accuracy |
| SepFormer | Speech in Noise | 10-fold CV | - | Accuracy |
| ELM | Electrode Design | Train-Test Split | - | Accuracy |
| Bayesian LR | Electrode Impedance | Bayesian prior | - | MSE, Accuracy |
| GBM | Candidacy | 10-fold CV | - | Accuracy, AUC |
| RNN | Speech in Noise | 5-fold CV | Dropout | Accuracy |
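Table 2 contrasts k-fold cross-validation (CV) with a single train-test split and reports accuracy and F1-score. As a minimal, dependency-free sketch of what 5-fold CV involves, the Python below shuffles the samples once, holds out each fold in turn, and averages the per-fold metrics. The nearest-centroid classifier, the helper names and the toy data are hypothetical stand-ins, not the models or datasets of the reviewed studies.

```python
import math
import random
from typing import List, Sequence, Tuple


def k_fold_indices(n: int, k: int, seed: int = 0) -> List[Tuple[List[int], List[int]]]:
    """Shuffle indices 0..n-1 once, then build k (train, test) index splits."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]  # round-robin keeps fold sizes within one of each other
    splits = []
    for i, test in enumerate(folds):
        train = [j for m, fold in enumerate(folds) if m != i for j in fold]
        splits.append((train, test))
    return splits


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def f1_score(y_true: Sequence[int], y_pred: Sequence[int], positive: int = 1) -> float:
    """Harmonic mean of precision and recall for the positive class."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    if tp == 0:
        return 0.0
    precision, recall = tp / (tp + fp), tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


class NearestCentroid:
    """Hypothetical toy model: predict the class whose mean feature vector is closest."""

    def fit(self, X, y):
        self.centroids = {}
        for label in set(y):
            rows = [x for x, t in zip(X, y) if t == label]
            self.centroids[label] = [sum(col) / len(rows) for col in zip(*rows)]
        return self

    def predict(self, X):
        return [min(self.centroids, key=lambda c: math.dist(x, self.centroids[c])) for x in X]


def cross_validate(X, y, k: int = 5, seed: int = 0) -> Tuple[float, float]:
    """Train on k-1 folds, test on the held-out fold, and average accuracy and F1."""
    accs, f1s = [], []
    for train, test in k_fold_indices(len(y), k, seed):
        model = NearestCentroid().fit([X[i] for i in train], [y[i] for i in train])
        pred = model.predict([X[i] for i in test])
        truth = [y[i] for i in test]
        accs.append(accuracy(truth, pred))
        f1s.append(f1_score(truth, pred))
    return sum(accs) / k, sum(f1s) / k


# Hypothetical toy data: two well-separated 2-D clusters labelled 0 and 1.
rng = random.Random(1)
X = ([[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(20)]
     + [[rng.gauss(4, 1), rng.gauss(4, 1)] for _ in range(20)])
y = [0] * 20 + [1] * 20
mean_acc, mean_f1 = cross_validate(X, y, k=5)
print(f"5-fold CV: accuracy={mean_acc:.2f}, F1={mean_f1:.2f}")
```

Averaging over folds uses every sample for testing exactly once, whereas a single train-test split scores the model on one held-out subset; with small cohorts the single-split estimate can vary considerably depending on which samples land in the test set.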

| Method | Accuracy Range | Field of Use |
|---|---|---|
| Decision Trees | 91–96% | Intra-/Postoperative Measurement |
| Maximum A Posteriori (MAP) | 91.7–96.2% | Speech in Noise |
| Relevance Vector Machine (RVM) | 91.7–96.2% | Speech in Noise |
| Gaussian Mixture Model (GMM) | 95.13–97.79% | Speech in Noise |
| Support Vector Machine (SVM) | 76–97.79% | Speech in Noise, Post-Op Speech Perception |
| Artificial Neural Networks (ANN) | 65–89% | Electrode Design, Speech Perception |
| Deep Neural Networks (DNN) | 18.2–44.4% (improvement in speech intelligibility) | Speech in Noise |
| K-Nearest Neighbors (KNN) | 80.7–96.52% | Speech Perception, Quality of Life |
| Random Forest | 73.3–96.2% | Electrophysiological Measurements |
| Linear Regression | 78.9–96.52% | Programming, Speech Detection |
| Long Short-Term Memory (LSTM) | 71.1–82.9% | Speech in Noise |
| Convolutional Neural Networks (CNN) | 54–99% | Electrode Placement, Speech Perception |
| Deep Denoising Autoencoder (DDAE) | 46.8–77% | Speech in Noise |
| Multilayer Perceptron (MLP) | 75–80% | Music Perception, Signal Processing |
| Separation Transformer (SepFormer) | 59.5–74.7% | Speech in Noise |
| Extreme Learning Machine (ELM) | 90–99% | Electrode Design |
| Bayesian Linear Regression | 83–99% | Electrode Impedance Prediction |
| Gradient Boosting Machines (GBM) | 87–93% | Preoperative Candidacy |
| Recurrent Neural Network (RNN) | 59.5–74.7% | Speech in Noise |
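Table 3 lists Maximum A Posteriori (MAP) classification among the speech-in-noise methods; Desmond et al. [51] applied statistical models of this kind to detect reverberation. The sketch below shows only the generic MAP decision rule, assuming a single scalar feature per signal and one Gaussian likelihood per class fitted from labelled examples. The feature values and class labels are invented for illustration and do not reproduce the channel-specific models of the cited study.

```python
import math
from statistics import fmean, pstdev


def gaussian_log_pdf(x: float, mean: float, std: float) -> float:
    """Log density of a univariate normal distribution."""
    return -0.5 * math.log(2 * math.pi * std ** 2) - (x - mean) ** 2 / (2 * std ** 2)


class GaussianMAPClassifier:
    """Predict argmax_c [log P(c) + log p(x | c)] with one Gaussian likelihood per class."""

    def fit(self, xs, labels):
        n = len(labels)
        self.params = {}
        for c in sorted(set(labels)):
            vals = [x for x, lab in zip(xs, labels) if lab == c]
            std = max(pstdev(vals), 1e-6)  # guard against a zero-variance class
            # Store (log prior, mean, standard deviation) for each class.
            self.params[c] = (math.log(len(vals) / n), fmean(vals), std)
        return self

    def log_posteriors(self, x: float):
        """Unnormalised log posteriors: log prior plus log likelihood for each class."""
        return {c: log_prior + gaussian_log_pdf(x, mu, sd)
                for c, (log_prior, mu, sd) in self.params.items()}

    def predict(self, x: float):
        scores = self.log_posteriors(x)
        return max(scores, key=scores.get)


# Hypothetical one-dimensional feature values for two signal classes.
train_x = [0.9, 1.1, 1.0, 1.2, 0.8, 3.0, 3.2, 2.9, 3.1, 2.8]
train_y = ["noise"] * 5 + ["reverberation"] * 5
clf = GaussianMAPClassifier().fit(train_x, train_y)
print(clf.predict(1.05), clf.predict(2.9))
```

Because the evidence term p(x) is the same for every class, comparing unnormalised log posteriors is enough to select the MAP class; with equal class priors, as in this toy data, the rule reduces to maximum likelihood, and unequal priors would shift the decision boundary toward the rarer class.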

| Period | Machine Learning Highlights and Practices | Areas of Use in Audiology |
|---|---|---|
| Early Machine Learning Approaches | Use of basic machine learning methods such as decision trees and linear regression. | First experimental applications in cochlear implantation, especially postoperative measurements. |
| Early Deep Learning Approaches, Experimental Data Analyses | Introduction of more complex models, such as artificial neural networks (ANN) and support vector machines (SVM). | Early studies to improve cochlear implant performance by analysing experimental data. |
| Predictive Models, Data Set Optimizations | Use of predictive models (e.g., Random Forest, Gradient Boosting); data set optimizations and studies to improve model accuracy. | Prediction models in areas such as language development and speech perception after cochlear implantation. |
| Rising Use of ‘Machine Learning’, Basic Artificial Intelligence Applications | Expansion of machine learning methods into areas such as CI programming, electrode design and speech in noise. | Use of basic AI applications (e.g., the FOX system) to improve speech intelligibility of cochlear implant users. |
| Increasing Emphasis on ‘Artificial Intelligence’, Model Comparisons | Increased emphasis on artificial intelligence (AI) and comparison of different models (SVM, ANN, Random Forest). | Use of various AI models to predict hearing and speech performance after cochlear implantation. |
| Integration of Multiple Machine Learning Methods, Innovative Techniques | Integration of multiple machine learning methods (e.g., LSTM, CNN, RNN). | Addressing comprehension problems in noise with innovative techniques (e.g., deep learning-based noise reduction) to improve speech intelligibility of cochlear implant users. |
| Transition to Comprehensive Deep Learning Approaches, Advanced Algorithms | Common use of deep learning models (e.g., Transformer, SepFormer) in CI; work with advanced algorithms (e.g., Multi-Task Learning, Deep ACE). | Use of deep learning models to analyse EEG signals and other biomedical data; improving cochlear implant users’ music perception and speech understanding in noise. |

| No. | Authors | Year | Machine Learning Model | Area of Use | Dataset Size | Number of Participants | Reported Performance | Explanatory Statement |
|---|---|---|---|---|---|---|---|---|
| 1 | Desmond, J.M. et al. [51] | 2013 | Maximum A Posteriori (MAP), Relevance Vector Machine (RVM) | Speech in Noise | Simulation | - | 91.7–96.2% | The models distinguished reverberation from other types of noise and were robust to different room and cochlear implant parameters. |
| 2 | Hazrati, O. et al. [50] | 2014 | Gaussian Mixture Model (GMM), Support Vector Machine (SVM), Neural Network (NN) | Speech in Noise | 720 | - | 95.13–97.79% | The SVM model showed the highest success, with 97.79% accuracy. |
| 3 | Saeedi, N.E. et al. [48] | 2017 | Artificial Neural Network (ANN), Spiking Neural Network (SNN) | Speech Perception | 116 | 29 | 65–89% | The ANN achieved the best pitch-ranking performance when it used spatial (place) and temporal information together; models using only spatial or only temporal codes performed worse. |
| 4 | Chu, K. et al. [46] | 2018 | Relevance Vector Machine (RVM) | Speech in Noise | - | 15 | 10% improvement in reverberant environments; deterioration when noise and reverberation were combined | The machine learning application was partially successful. |
| 5 | Lai, Y.H. et al. [40] | 2018 | Deep learning: NC + DDAE (Noise Classifier + Deep Denoising Autoencoder) | Speech in Noise | 320 | 9 | Noise classification: 99.6%; noise reduction: 67.3% | NC + DDAE performed at least two and up to four times better than classical noise reduction methods. |
| 6 | Hajiaghababa, F. et al. [49] | 2018 | Wavelet Neural Networks (WNNs), Infinite Impulse Response Filter Banks (IIR FBs), Dual Resonance Nonlinear (DRNL), Simple Dual Path Nonlinear (SDPN) | Speech Intelligibility | 120 | - | - | WNNs showed the highest performance on both the training and test sets. |
| 7 | Waltzman, S.B. & Kelsall, D.C. [38] | 2020 | FOX | Electrophysiology, Programming, Speech Perception | - | 55 | No statistically significant difference between manual programming and FOX (p = 0.65 and p = 0.47) | FOX provided standardised rehabilitation and equivalent performance, with an improved patient experience. |
| 8 | Kang, Y. et al. [54] | 2021 | LSTM | - | - | 19 | - | Deep learning-based speech enhancement to improve speech understanding in noise. |
| 9 | Hafeez, N. et al. [39] | 2021 | Support Vector Machine (SVM), Shallow Neural Network (SNN), k-Nearest Neighbors (KNN) | Electrode Insertion Depth (Intraoperative) | 137 | - | 86.1–97.1% | Electrode array (EA) insertion was classified with high accuracy from different insertion measurements taken during placement. |
| 10 | Gajecki, T. et al. [42] | 2023 | Deep Neural Networks (DNN), Deep ACE, Fully-Convolutional Time-Domain Audio Separation Network, Adam, Binary Cross-Entropy | Speech in Noise | - | 8 | SRT speech discrimination: 63.1 | Deep ACE was the best model for noise reduction. |
| 11 | Pavelchek, C. et al. [55] | 2023 | Univariate Imputation (UI), Interpolation (INT), Multiple Imputation by Chained Equations (MICE), k-Nearest Neighbors (KNN), Gradient Boosted Trees (XGB), Neural Networks (NN) | Cochlear Implant Candidacy, Behavioural Tests | - | 1304 | 93% | Missing values in real-world audiometric data could be imputed safely: an RMSE of 7.83 dB was achieved, below the clinically significant error threshold of 10 dB. |
| 12 | de Nobel, J. et al. [43] | 2023 | Convolutional Neural Network (CNN), Evolutionary Algorithm (EA), Polynomial Elastic Net (PEN), Random Forest (RF), Gradient Boosting (GB), Multilayer Perceptron (MLP) | - | 1,466,189 simulation samples; 12,441,600 different excitation waveforms | - | 54–99% | The energy savings of the new waveforms may allow CI devices to operate longer with smaller batteries. |
| 13 | Zheng, Q. et al. [52] | 2024 | Support Vector Machine (SVM), EEMD-ICA | EEG Optimisation | 8448 | 91 | 95.44% | The SVM-based classification algorithm automatically identified channels containing cochlear implant artifacts with 95.44% accuracy. |
| 14 | Gajecki, T. & Nogueira, W. [41] | 2024 | Bilateral ACE, Bilateral Deep ACE, Fused Deep ACE | Speech in Noise | - | 168 | Speech intelligibility: 45–82%; noise reduction: 72–90% | Fused Deep ACE was the most successful model, with 20–30% better speech understanding. |
| 15 | Ashihara, T. et al. [56] | 2024 | Deep Neural Network (DNN) | Speech Perception | 1024 | - | - | Machine learning to improve speech perception in cochlear implant users. |
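Pavelchek et al. [55] report imputing missing audiometric values with an RMSE of 7.83 dB, below a 10 dB threshold for clinically significant error. The sketch below is a simplified illustration of one of the listed approaches, k-nearest-neighbour (KNN) imputation, together with the RMSE calculation. The audiogram layout (thresholds in dB HL at five fixed frequencies), the distance computed over co-measured frequencies, the helper names and the toy data are all assumptions for illustration, not the study's pipeline.

```python
import math
from typing import List, Optional

Audiogram = List[Optional[float]]  # thresholds in dB HL per frequency; None = not measured


def shared_rms_distance(a: Audiogram, b: Audiogram) -> float:
    """RMS difference over frequencies measured in both audiograms."""
    pairs = [(x, y) for x, y in zip(a, b) if x is not None and y is not None]
    if not pairs:
        return math.inf
    return math.sqrt(sum((x - y) ** 2 for x, y in pairs) / len(pairs))


def knn_impute(target: Audiogram, pool: List[Audiogram], k: int = 3) -> List[float]:
    """Replace each missing threshold with the mean of the k closest donors that measured it."""
    filled: List[float] = []
    for f, value in enumerate(target):
        if value is not None:
            filled.append(value)
            continue
        donors = sorted((p for p in pool if p[f] is not None),
                        key=lambda p: shared_rms_distance(target, p))[:k]
        if not donors:
            raise ValueError(f"no donor audiogram has a value at index {f}")
        filled.append(sum(p[f] for p in donors) / len(donors))
    return filled


def rmse(predicted: List[float], actual: List[float]) -> float:
    """Root-mean-square error in the same unit as the inputs (dB here)."""
    return math.sqrt(sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(actual))


# Hypothetical complete audiograms (dB HL at five frequencies) used as donors.
pool = [
    [20, 30, 45, 60, 75],
    [25, 35, 50, 65, 80],
    [15, 25, 40, 55, 70],
    [60, 70, 85, 95, 100],
    [55, 65, 80, 90, 105],
]
true_target = [22, 32, 47, 62, 78]
observed = [22, 32, None, 62, 78]  # the third frequency is treated as missing

imputed = knn_impute(observed, pool, k=3)
error = rmse([imputed[2]], [true_target[2]])
print(imputed, f"RMSE={error:.2f} dB", "within 10 dB" if error < 10 else "above 10 dB")
```

The RMSE is computed only on the entries that were actually imputed, since including the observed values would trivially shrink the error. The other imputers listed for this study (e.g., MICE, XGB) are not shown.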

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Demirtaş Yılmaz, B. Prediction of Auditory Performance in Cochlear Implants Using Machine Learning Methods: A Systematic Review. Audiol. Res. 2025, 15, 56. https://doi.org/10.3390/audiolres15030056