Applying a Deep Neural Network and Feature Engineering to Assess the Impact of Attacks on Autonomous Vehicles

Abstract

1. Introduction

2. Materials and Methods

2.1. Impact Variables

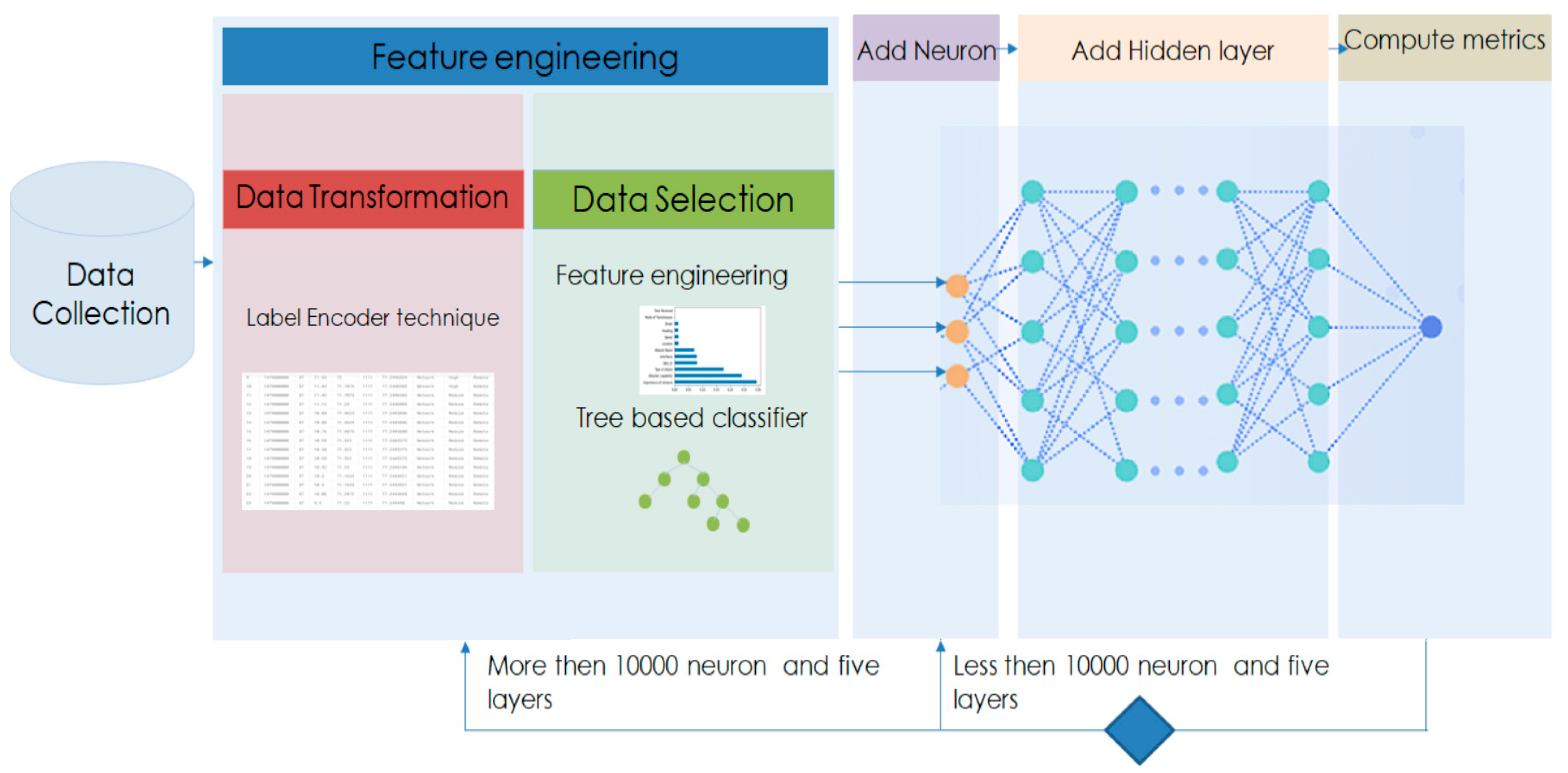

2.2. Method

2.2.1. Data Transformation

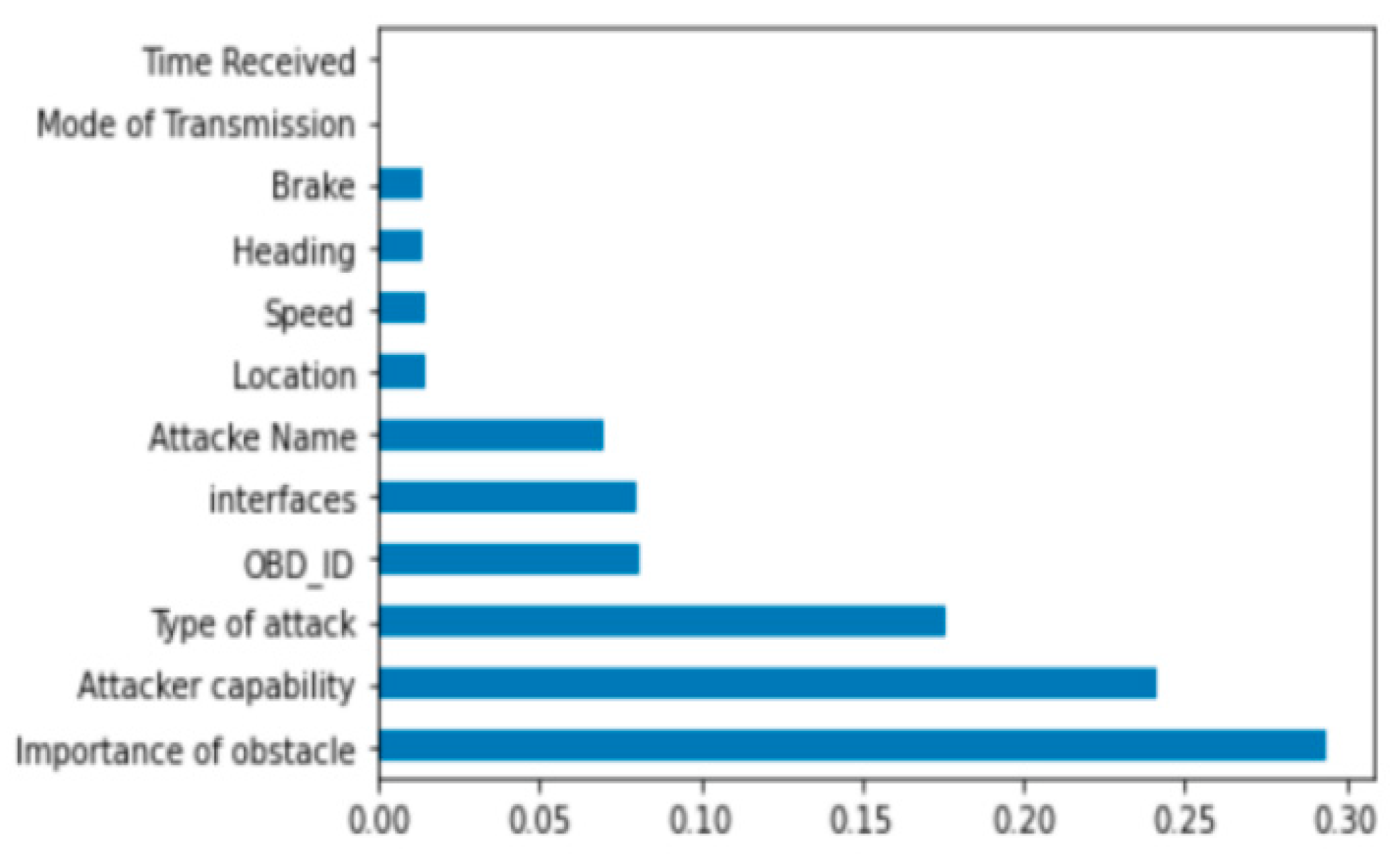

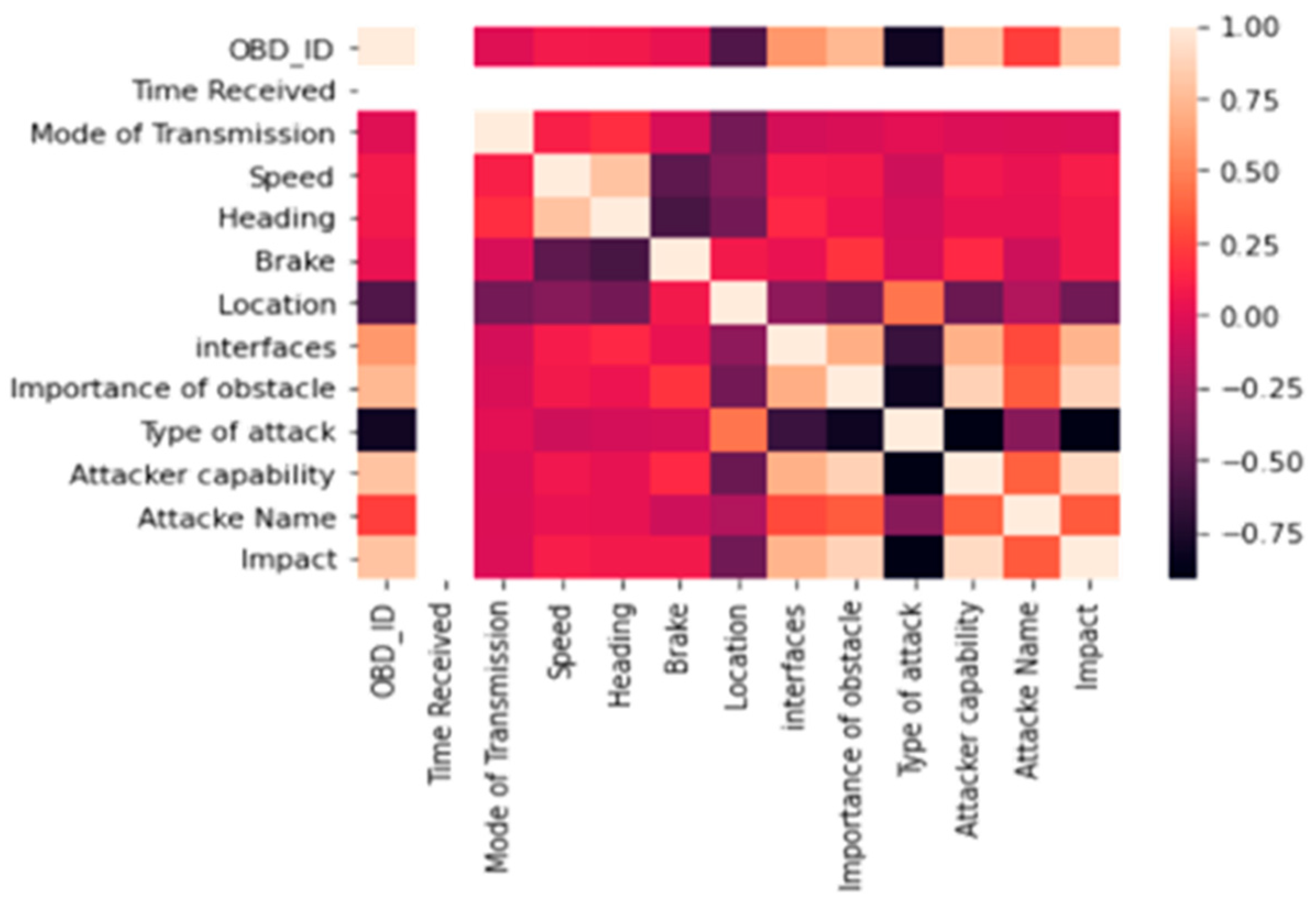

2.2.2. Feature Selection

- ➢

- Initial Feature Evaluation:

- ○

- We initially considered all available features in the dataset.

- ○

- The Gini index was computed for each feature to measure its contribution to reducing uncertainty in classification.

- ➢

- Feature Ranking and Selection:

- ○

- The Extra Trees Classifier assigned importance scores to each feature by calculating the total normalized reduction of the Gini index.

- ○

- Features with higher importance scores were prioritized, while those with minimal contribution were eliminated.

- ○

- A cutoff threshold was applied, and the top 12 features were retained for model training.

- ➢

- Results of Feature Selection:

- ○

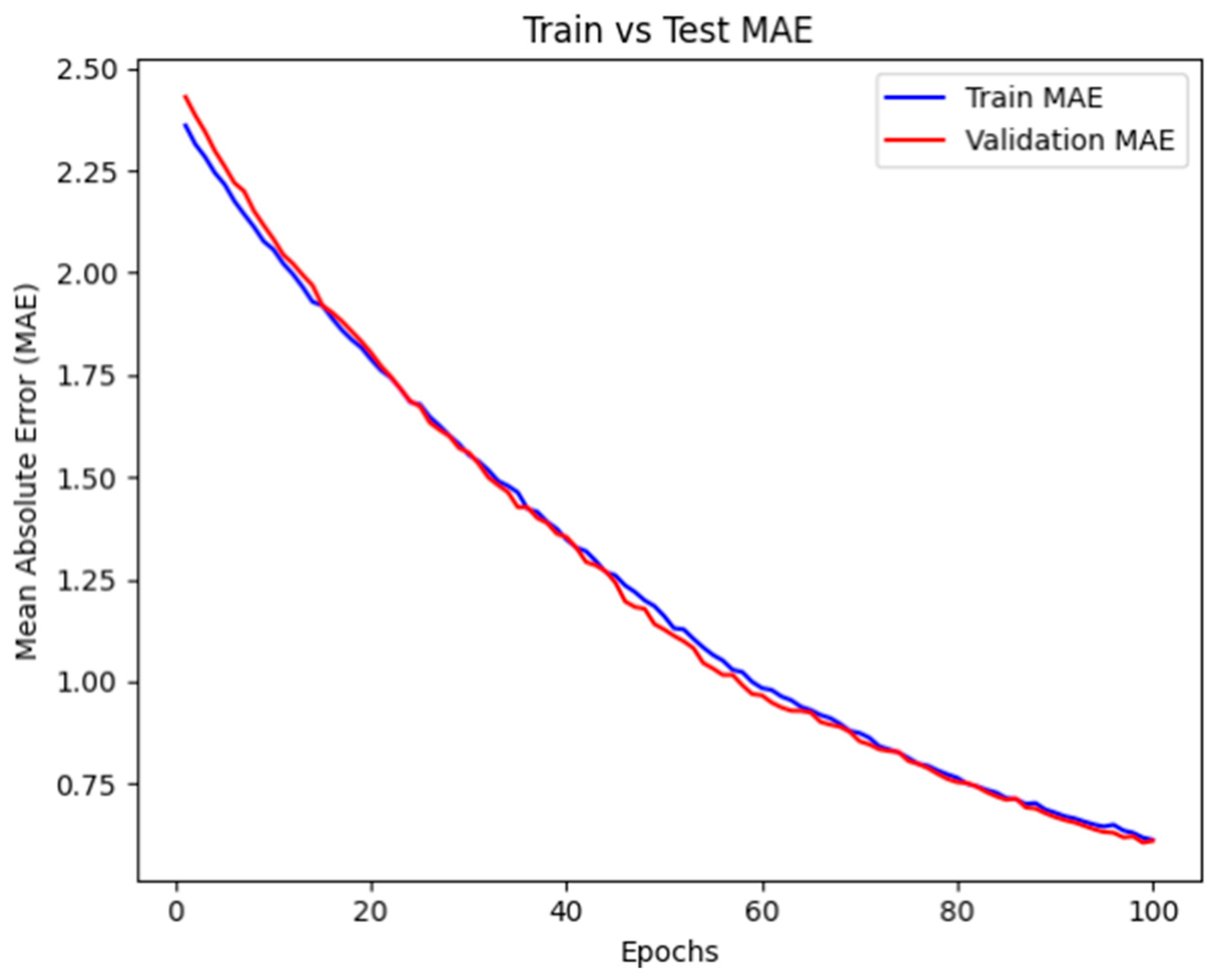

- Reducing the feature set improved training efficiency, leading to a faster convergence of the deep neural network.

- ○

- The final selected features preserved the model’s predictive performance, as verified through Spearman’s correlation and model validation metrics (MAE and loss function).

- ○

- The trade-off between model complexity and interpretability was optimized, ensuring that only the most critical features influencing attack impact were considered.
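The ranking idea behind the feature selection steps can be illustrated with a minimal sketch. It computes the Gini impurity of a label set, the impurity reduction produced by one split, and a top-k selection over normalized importance scores. This is not the authors' pipeline: the function names, the example labels, and the raw scores are hypothetical, and in the paper the scores come from an Extra Trees Classifier rather than from hand-written values.

```python
from collections import Counter


def gini_impurity(labels):
    """Gini impurity of a list of class labels: 1 - sum(p_c ** 2)."""
    n = len(labels)
    if n == 0:
        return 0.0
    counts = Counter(labels)
    return 1.0 - sum((c / n) ** 2 for c in counts.values())


def gini_decrease(parent, left, right):
    """Impurity reduction obtained by splitting `parent` into `left` and `right`."""
    n = len(parent)
    weighted_children = (
        (len(left) / n) * gini_impurity(left)
        + (len(right) / n) * gini_impurity(right)
    )
    return gini_impurity(parent) - weighted_children


def top_k_features(raw_scores, k=12):
    """Normalize scores so they sum to 1, then keep the k highest-ranked names."""
    total = sum(raw_scores.values())
    normalized = {name: score / total for name, score in raw_scores.items()}
    ranked = sorted(normalized.items(), key=lambda item: item[1], reverse=True)
    return [name for name, _ in ranked[:k]]


# Hypothetical labels: a split that separates the two classes perfectly.
parent = ["low", "low", "high", "high"]
print(gini_impurity(parent))                                    # 0.5
print(gini_decrease(parent, ["low", "low"], ["high", "high"]))  # 0.5

# Hypothetical raw importance scores (illustrative values only).
scores = {"attack_type": 3, "obstacle_importance": 5, "attacker_capability": 2}
print(top_k_features(scores, k=2))  # ['obstacle_importance', 'attack_type']
```

A tree ensemble accumulates such impurity reductions over every split that uses a given feature and averages them across trees; the cutoff `k=12` mirrors the number of features retained in the text.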

2.2.3. Designing a Neural Network

- Vehicle status parameters (e.g., speed, braking status, acceleration);

- Sensor data (e.g., LiDAR or camera readings);

- Environmental conditions (e.g., weather, road surface state);

- System vulnerabilities (e.g., attack type, affected system component).

- Human factors (e.g., injuries or fatalities);

- Material and ecological damage (e.g., vehicle damage, infrastructure impact);

- Moral impact (e.g., ethical concerns, trust in autonomous systems).

- If the model performance was poor, we added a second hidden layer with a similar number of neurons and re-evaluated the results.

- If further improvements were needed, we increased the number of neurons and adjusted the hidden layers.

- If the model showed persistent overfitting or underfitting, or if performance metrics remained low, we reconsidered feature engineering before exceeding five layers or 1000 nodes.

- The number of input and output nodes.

- The amount of available training data.

- The complexity of the function to be learned.
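The iterative sizing procedure described above (add a second hidden layer of similar width, then widen, and stop before five layers or 1000 nodes) can be sketched as a small helper. Several details are assumptions made for illustration: the helper name `next_architecture`, the doubling factor, the starting width of 64, and the reading of "1000 nodes" as the total number of hidden neurons.

```python
MAX_HIDDEN_LAYERS = 5   # cap stated in the text
MAX_TOTAL_NODES = 1000  # assumed here to mean total hidden neurons


def next_architecture(hidden_layers, widen_factor=2):
    """Propose the next hidden-layer layout, or None when a cap would be exceeded.

    `hidden_layers` is a list of neuron counts, e.g. [64] for one hidden layer.
    Returning None signals that feature engineering should be revisited instead
    of growing the network further.
    """
    if len(hidden_layers) == 1:
        # First adjustment: add a second hidden layer of the same width.
        candidate = hidden_layers + [hidden_layers[0]]
    else:
        # Later adjustments: widen every hidden layer (assumed strategy).
        candidate = [n * widen_factor for n in hidden_layers]

    if len(candidate) > MAX_HIDDEN_LAYERS or sum(candidate) > MAX_TOTAL_NODES:
        return None
    return candidate


# Walk through the candidate layouts from a hypothetical starting point.
architecture = [64]
while architecture is not None:
    print(architecture)
    architecture = next_architecture(architecture)
```

In practice each candidate would be trained and validated before the next one is proposed; the loop here only lists the layouts that stay within the caps.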

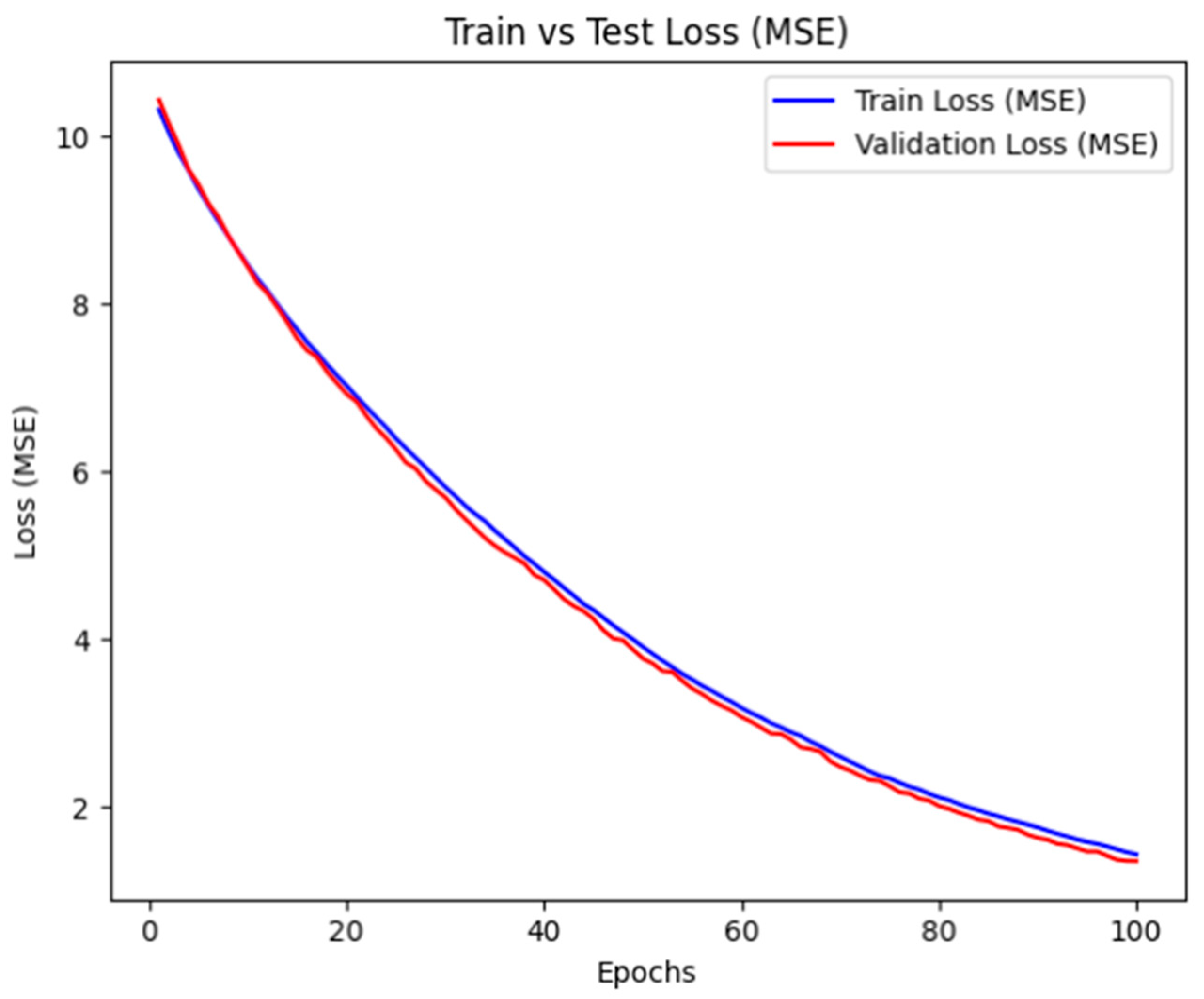

3. Results

- The importance of obstacles;

- The attacker’s capability;

- The type of attack.

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. United Nations Department of Economic and Social Affairs. World Urbanization Prospects: The 2018 Revision; UN: New York, NY, USA, 2019.

2. Fagnant, D.J.; Kockelman, K. Preparing a nation for autonomous vehicles: Opportunities, barriers and policy recommendations. Transp. Res. Part A Policy Pract. 2015, 77, 167–181.

3. Dingus, T.A.; Guo, F.; Lee, S.; Antin, J.F.; Perez, M.; Buchanan-King, M.; Hankey, J. Driver crash risk factors and prevalence evaluation using naturalistic driving data. Proc. Natl. Acad. Sci. USA 2016, 113, 2636–2641.

4. Fu, X.; Meng, H.; Wang, X.; Yang, H.; Wang, J. A hybrid neural network for driving behavior risk prediction based on distracted driving behavior data. PLoS ONE 2022, 17, e0263030.

5. Petit, J.; Shladover, S.E. Potential Cyberattacks on Automated Vehicles. IEEE Trans. Intell. Transport. Syst. 2014, 16, 546–556.

6. Malik, S.; Sun, W. Analysis and Simulation of Cyber Attacks Against Connected and Autonomous Vehicles. In Proceedings of the 2020 International Conference on Connected and Autonomous Driving (MetroCAD), Detroit, MI, USA, 27–28 February 2020; pp. 62–70.

7. Chellapandi, V.P.; Yuan, L.; Brinton, C.G.; Zak, S.H.; Wang, Z. Federated Learning for Connected and Automated Vehicles: A Survey of Existing Approaches and Challenges. arXiv 2023.

8. Seetharaman, A.; Patwa, N.; Jadhav, V.; Saravanan, A.S.; Sangeeth, D. Impact of Factors Influencing Cyber Threats on Autonomous Vehicles. Appl. Artif. Intell. 2021, 35, 105–132.

9. Parkinson, S.; Ward, P.; Wilson, K.; Miller, J. Cyber Threats Facing Autonomous and Connected Vehicles: Future Challenges. IEEE Trans. Intell. Transport. Syst. 2017, 18, 2898–2915.

10. Thing, V.L.L.; Wu, J. Autonomous Vehicle Security: A Taxonomy of Attacks and Defences. In Proceedings of the 2016 IEEE International Conference on Internet of Things (iThings) and IEEE Green Computing and Communications (GreenCom) and IEEE Cyber, Physical and Social Computing (CPSCom) and IEEE Smart Data (SmartData), Chengdu, China, 15–18 December 2016; pp. 164–170.

11. Li, Z.; Li, S.; Zhang, H. Overview of Sensing Attacks on Autonomous Vehicle Technologies and Impact on Traffic Flow. arXiv 2024, arXiv:2401.15193.

12. Yan, C. Can You Trust Autonomous Vehicles: Contactless Attacks Against Sensors of Self-Driving Vehicle. 2016. Available online: https://av.tib.eu/media/36252 (accessed on 14 January 2025).

13. Giannaros, A.; Karras, A.; Theodorakopoulos, L.; Karras, C.; Kranias, P.; Schizas, N.; Kalogeratos, G.; Tsolis, D. Autonomous Vehicles: Sophisticated Attacks, Safety Issues, Challenges, Open Topics, Blockchain, and Future Directions. J. Cybersecur. Priv. 2023, 3, 493–543.

14. Gasimova, A.; Khoei, T.T.; Kaabouch, N. A Comparative Analysis of the Ensemble Models for Detecting GPS Spoofing attacks on UAVs. In Proceedings of the 2022 IEEE 12th Annual Computing and Communication Workshop and Conference (CCWC), Las Vegas, NV, USA, 26–29 January 2022; pp. 310–315.

15. Tzoannos, Z.-R.; Kosmanos, D.; Xenakis, A.; Chaikalis, C. The Impact of Spoofing Attacks in Connected Autonomous Vehicles under Traffic Congestion Conditions. Telecom 2024, 5, 747–759.

16. Lothes, R.N.; Szymanski, M.B.; Wiley, R.G. Radar Vulnerability to Jamming; The Artech House Radar Library; Artech House: Boston, MA, USA, 1990; ISBN 978-0-89006-388-0.

17. Pham, M.; Xiong, K. A Survey on Security Attacks and Defense Techniques for Connected and Autonomous Vehicles. arXiv 2020, arXiv:2007.08041.

18. Roome, S.J. Digital radio frequency memory. Electron. Commun. Eng. J. 1990, 2, 147.

19. Harris, M. Researcher Hacks Self-driving Car Sensors. IEEE Spectrum 2015, 9. Available online: http://spectrum.ieee.org/cars-that-think/transportation/self-driving/researcher-hacks-selfdriving-car-sensors (accessed on 14 January 2025).

20. Stottelaar, B.G.B. Practical Cyber-Attacks on Autonomous Vehicles. Master’s Thesis, University of Twente, Enschede, The Netherlands, 2015.

21. Rouf, I.; Miller, R.; Mustafa, H.; Taylor, T.; Oh, S.; Xu, W.; Gruteser, M.; Trappe, W.; Seskar, I. Security and Privacy Vulnerabilities of In-Car Wireless Networks: A Tire Pressure Monitoring System Case Study. p. 17. Available online: https://www.usenix.org/legacy/events/sec10/tech/full_papers/Rouf.pdf (accessed on 14 January 2025).

22. El-Rewini, Z.; Sadatsharan, K.; Sugunaraj, N.; Selvaraj, D.F.; Plathottam, S.J.; Ranganathan, P. Cybersecurity Attacks in Vehicular Sensors. IEEE Sensors J. 2020, 20, 13752–13767.

23. Chowdhury, M.A.; Apon, A.; Dey, K. (Eds.) Data Analytics for Intelligent Transportation Systems; Elsevier: Amsterdam, The Netherlands, 2017; ISBN 978-0-12-809851-6.

24. Kumar, A.D.; Chebrolu, K.N.R. A Brief Survey on Autonomous Vehicle Possible Attacks, Exploits and Vulnerabilities. arXiv 2018.

25. El-Rewini, Z.; Sadatsharan, K.; Selvaraj, D.F.; Plathottam, S.J.; Ranganathan, P. Cybersecurity challenges in vehicular communications. Veh. Commun. 2020, 23, 100214.

26. Hoppe, T.; Dittman, J. Sniffing/replay attacks on CAN buses: A simulated attack on the electric window lift classified using an adapted CERT taxonomy. In Proceedings of the 2nd Workshop on Embedded Systems Security (WESS), Salzburg, Austria, 4 October 2007; pp. 66–72.

27. Koscher, K.; Czeskis, A.; Roesner, F.; Patel, S.; Kohno, T.; Checkoway, S.; McCoy, D.; Kantor, B.; Anderson, D.; Shacham, H.; et al. Experimental Security Analysis of a Modern Automobile. In Proceedings of the 2010 IEEE Symposium on Security and Privacy, Oakland, CA, USA, 16–19 May 2010; pp. 447–462.

28. Liu, J.; Zhang, S.; Sun, W.; Shi, Y. In-Vehicle Network Attacks and Countermeasures: Challenges and Future Directions. IEEE Netw. 2017, 31, 50–58.

29. do Nascimento, R.M.; Oening, A.P.; Marcilio, D.C.; Aoki, A.R.; de Paula Rocha, E.; Schiochet, J.M. Outliers’ detection and filling algorithms for smart metering centers. In Proceedings of the PES T&D 2012, Orlando, FL, USA, 7–10 May 2012; pp. 1–6.

30. Rao, R.; Akella, S.; Guley, G. Power line carrier (PLC) signal analysis of smart meters for outlier detection. In Proceedings of the 2011 IEEE International Conference on Smart Grid Communications (SmartGridComm), Brussels, Belgium, 17–20 October 2011; pp. 291–296.

31. Begum, M.; Raja, G.; Guizani, M. AI-Based Sensor Attack Detection and Classification for Autonomous Vehicles in 6G-V2X Environment. IEEE Trans. Veh. Technol. 2024, 73, 5054–5063.

| Interface | Attack | Capability of Attackers | Physical/Remote Access | Damages |
|---|---|---|---|---|
| Camera | Blind the camera [5,9,10,11] | High | Remote | The vehicle cannot detect obstacles |
| GPS | Spoofing, jamming [9,10,12,13,14] | High | Remote | Wrong positioning; disable vehicle’s navigation mechanism; redirect vehicles [15] |
| Radar | Jamming, ghost vehicle [11,12,16,17,18] | High | Remote | Turn off radar/degrade mode; false detection |
| LiDAR | Spoofing, jamming [5,9,10] | High | Remote | Force the vehicle to stop [19,20] |
| TPMS | TPMS-based attack [5,9,10,21,22] | Medium | Remote | Incorrect information |
| ECU | CarShark, fuzzing, reverse engineering [23] | High | Physical | Depending on the malware’s capability, control the vehicle |
| OBU | Code modification, code injection, packet sniffing, packet fuzzing [10] | High | Physical | Control the vehicle; inject code to the ECU; modification of code [24] |
| CAN | Replay attack, DoS, eavesdropping attack, injection attack [25,26,27,28] | High; Low (for eavesdropping attack) | Physical and Remote | Control ECU |
| V2V/V2I | Blackhole, Sybil attack, DDoS [23,29,30] | Medium | Remote | Redirect traffic; flood the network; track vehicles; falsify information |
| V2X [7] | Inject malware [23,31] | Medium | Remote | Control vehicle; depends on the malware’s capability |
| In-vehicle devices | Inject malware [24] | Medium | Physical and Remote | Depends on the malware’s capability |

| Obstacle | Degree of Importance |
|---|---|
| Pedestrian | Very High |
| Tree | Medium |
| Building | Medium |
| RSU | High |
| Vehicle | Very High |
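Qualitative levels such as those in the obstacle table have to become numbers before a neural network can use them. The sketch below shows one plausible ordinal encoding. The numeric scale, the `LEVELS` mapping, and the helper `encode_level` are assumptions made for illustration; the source does not state which encoding was actually applied.

```python
# Hypothetical ordinal scale: the integer values are illustrative assumptions.
LEVELS = {"low": 1, "medium": 2, "high": 3, "very high": 4}


def encode_level(label):
    """Map a qualitative level to an integer, ignoring case and outer spaces."""
    return LEVELS[label.strip().lower()]


# Degrees of importance as listed in the obstacle table.
obstacles = {
    "Pedestrian": "Very High",
    "Tree": "Medium",
    "Building": "Medium",
    "RSU": "High",
    "Vehicle": "Very high",
}

encoded = {name: encode_level(level) for name, level in obstacles.items()}
print(encoded)
# {'Pedestrian': 4, 'Tree': 2, 'Building': 2, 'RSU': 3, 'Vehicle': 4}
```

An ordinal scale keeps the ordering between levels, which a one-hot encoding would discard; whether equal spacing between levels is appropriate is a modeling choice.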

| Learning Rate | Batch Size | Epochs | Optimizer |
|---|---|---|---|
| 0.001 | 32 | 50 | Adam with L2 regularization |
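To make the optimizer settings concrete, here is a minimal sketch of a single Adam update on one scalar parameter, with an L2 penalty folded into the gradient. The learning rate, batch size, and epoch count come from the table; everything else is an assumption: the decay rates and epsilon are the defaults from the original Adam formulation, the penalty strength `l2` is a hypothetical value (the table does not report it), and `n_samples` is an invented dataset size.

```python
import math


def adam_step(theta, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999,
              eps=1e-8, l2=0.0):
    """One Adam update for a scalar parameter; returns (theta, m, v).

    `t` is the 1-based step counter. An L2 penalty of (l2 / 2) * theta ** 2
    contributes l2 * theta to the gradient.
    """
    g = grad + l2 * theta
    m = beta1 * m + (1 - beta1) * g        # first-moment (mean) estimate
    v = beta2 * v + (1 - beta2) * g * g    # second-moment estimate
    m_hat = m / (1 - beta1 ** t)           # bias correction
    v_hat = v / (1 - beta2 ** t)
    theta = theta - lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta, m, v


# First step from theta = 1.0 with gradient 2.0 and no penalty:
# the parameter moves by roughly the learning rate.
theta, m, v = adam_step(1.0, 2.0, m=0.0, v=0.0, t=1)
print(round(theta, 6))  # 0.999

# Number of parameter updates for a hypothetical dataset of 1000 samples.
n_samples, batch_size, epochs = 1000, 32, 50
print(epochs * math.ceil(n_samples / batch_size))  # 1600
```

On the first step the bias-corrected ratio reduces to the sign of the gradient, which is why the parameter changes by about 0.001 regardless of the gradient's magnitude.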

| Optimizer | Loss Function | Activation Function | Metrics | TensorFlow Version | Operating System |
|---|---|---|---|---|---|
| Adaptive Moment Estimation (Adam) | MAE | and ReLU function | MAE | 2.4.1 | Windows 11 |
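The loss and activation named in the table can be illustrated with a tiny forward pass written in plain Python. This is a sketch under stated assumptions, not the authors' model: the layer sizes, weights, and inputs are invented, and the output layer is assumed to be linear because the table's activation entry is incomplete.

```python
def relu(x):
    """Rectified linear unit: max(0, x)."""
    return x if x > 0 else 0.0


def dense(inputs, weights, biases, activation=None):
    """Fully connected layer; weights[j] holds the input weights of neuron j."""
    outputs = []
    for w_row, b in zip(weights, biases):
        z = sum(w * x for w, x in zip(w_row, inputs)) + b
        outputs.append(activation(z) if activation else z)
    return outputs


def mae(y_true, y_pred):
    """Mean absolute error between two equally long sequences."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical 2-2-1 network with hand-picked weights.
x = [1.0, 2.0]
hidden = dense(x, [[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0], activation=relu)
output = dense(hidden, [[2.0, 1.0]], [0.5])  # linear output (assumption)

print(hidden)              # [0.0, 1.5]
print(output)              # [2.0]
print(mae([3.0], output))  # 1.0
```

MAE penalizes every unit of error equally, so a few large mispredictions affect it less than they would affect a squared-error loss.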

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the World Electric Vehicle Association. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ftaimi, S.; Mazri, T. Applying a Deep Neural Network and Feature Engineering to Assess the Impact of Attacks on Autonomous Vehicles. World Electr. Veh. J. 2025, 16, 388. https://doi.org/10.3390/wevj16070388
