Abstract

To address the problem that parking spaces and obstacles are easily missed or misdetected in the automatic parking perception task under low-illumination conditions, this paper proposes a low-illumination parking space and obstacle detection algorithm based on image adaptive enhancement. The algorithm comprises an image adaptive enhancement module, which uses a CNN to predict adaptive parameters and processes the image by fusing the low-light image enhancement via illumination map estimation (LIME) and contrast-limited adaptive histogram equalization (CLAHE) algorithms. The parking space and obstacle detection module adopts parking space corner detection based on image gradient matching, together with obstacle detection based on YOLOv5s, whose feature pyramid network structure is optimized. The two modules are cascaded so that the prediction parameters of the image adaptive enhancement module are optimized using both the similarity loss of parking space corner matching and the obstacle detection loss. Experiments show that the algorithm makes the image pixel value distribution more balanced in low-light scenarios; the parking space recognition accuracy reaches 95.46% and the mean average precision of obstacle detection reaches 90.4%, both better than the baseline algorithms, which is of great significance for the development of automatic parking perception technology.

1. Introduction

In recent years, with the continuous development of intelligent driving technology, automatic parking has become one of the most active research directions in autonomous driving [1]. Automatic parking relies on three key technologies: environment perception, path planning [2], and path tracking control [3,4]. In the perception task, accurately detecting and identifying parking spaces and obstacles is crucial, and lighting conditions are one of the most important factors affecting detection accuracy. Under natural light, target features are clearly visible and the foreground and background are easy to distinguish, which strongly favors detection. However, in common and unavoidable low-illumination scenarios, such as cloudy days, nighttime, or poorly lit underground garages, the acquired parking images are of poor quality and parking space and obstacle information is unclear. This impairs parking space recognition and can disable the entire automatic parking function. Research on parking space and obstacle detection under low-illumination conditions is therefore particularly important and has attracted the interest of many researchers.

To improve the quality of images captured in low-illumination scenarios, many researchers have investigated image enhancement methods from various perspectives. Li et al. [5] developed a light enhancement network (LE-net) based on convolutional neural networks and proposed a low-illumination image generation pipeline, using the generated images to train and validate LE-net. Liu et al. [6] proposed a low-illumination image enhancement method based on multi-scale network fusion, which fuses features from different resolution levels of the network, improves the details of low-illumination regions, and mitigates the feature loss caused by excessively deep networks. Huang et al. [7] proposed a deep learning-based visual detection and image processing method for parking spaces, which builds a Faster R-CNN parking detection model and addresses uneven lighting and complex backgrounds by removing background light from the image. However, in these approaches image enhancement serves only as an independent preprocessing step that improves the visual quality of the input image; it is not closely coupled with the downstream detection task. In low-illumination parking space and obstacle detection, the ultimate goal of enhancement is to improve detection accuracy, and these algorithms lack a parameter-adaptive strategy that is effectively linked to the detection task.

Besides improving image quality, many researchers have improved the detection methods themselves to achieve higher parking space or obstacle detection accuracy. Li et al. [8] proposed an improved computer vision-based method for parking space state recognition, which preprocesses the video, introduces texture feature extraction based on the local binary pattern (LBP) operator, and improves the background subtraction method to raise overall recognition accuracy. With this approach, the authors detected whether a parking space is occupied under three environmental conditions: with direct light, without direct light, and in rainy or snowy weather. Ma et al. [9] proposed a parking space and obstacle detection method based on vision sensors and laser devices, in which a laser transmitter projects a checkerboard-shaped laser grid that deforms according to what it encounters on the ground; a camera captures the grid, which is then processed as a region of interest. The method effectively identifies obstacles and parking spaces whose color is similar to the background. However, in these methods the parking space and obstacle detection networks are independent, with no information sharing or joint training between the models.

Compared with the above methods, the advantages of the low-illumination parking space and obstacle detection method based on image adaptive enhancement proposed in this paper are as follows:

- (1) When facing different low-illumination conditions, the parameters of the image enhancement algorithm are adjusted adaptively to achieve different degrees of enhancement, enabling the downstream parking space and obstacle detection network to achieve higher detection accuracy;

- (2) The original feature pyramid network (FPN) structure of YOLOv5s is improved to a bidirectional feature pyramid network (BiFPN) structure, which significantly improves the detection of targets at different scales; its weighted feature fusion mechanism dynamically adjusts the contribution of each feature level according to the input image, further optimizing feature fusion and working better with the image adaptive enhancement module;

- (3) The image adaptive enhancement module and the detection module are cascaded so that the prediction parameters of the enhancement module are optimized with a weighted loss comprising both the parking space corner matching similarity loss and the obstacle detection loss, achieving adaptive enhancement and making the enhanced images increasingly conducive to parking space corner and obstacle detection.

The rest of this paper is organized as follows. Section 2 reviews related methods for low-illumination image enhancement, parking space corner detection, and obstacle detection. Section 3 describes the proposed method for detecting available parking spaces in low-illumination complex parking scenarios. Section 4 presents the experimental results and demonstrates the advantages of the proposed method. Finally, Section 5 gives the main conclusions.

2. Related Work

2.1. Low-Illumination Image Enhancement

Low-illumination image processing techniques mainly comprise traditional algorithms and deep learning-based algorithms. Traditional low-illumination enhancement methods include histogram equalization methods and Retinex model-based methods. Histogram Equalization (HE) enhances contrast by redistributing the gray levels of image pixels. Although it improves global contrast, it has a limited effect on overly bright or dark areas and may amplify noise in dark areas [10]. Adaptive Histogram Equalization (AHE) is better suited to improving local contrast: it divides the image into multiple regions and equalizes the histogram of each region separately. However, AHE also amplifies noise in smooth regions and produces obvious block artifacts [11]. Contrast-Limited Adaptive Histogram Equalization (CLAHE) improves on AHE mainly by limiting contrast amplification, which effectively reduces noise amplification [12]. Because these methods lack guidance on how strongly to enhance an image, they can over- or under-enhance it and also amplify its noise, making the desired effect difficult to achieve. Retinex-based methods, including Single-Scale Retinex (SSR) and Multi-Scale Retinex (MSR), estimate the illumination component of the image; however, they often introduce noise and are slow to run [13,14,15].
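As a concrete reference for the HE baseline described above, the following NumPy sketch implements classic global histogram equalization for an 8-bit grayscale image through a cumulative-distribution lookup table. It is an illustrative sketch rather than the implementation used in this paper, and the function name `histogram_equalize` is hypothetical.

```python
import numpy as np

def histogram_equalize(img: np.ndarray) -> np.ndarray:
    """Global histogram equalization of an 8-bit (uint8) grayscale image."""
    hist = np.bincount(img.ravel(), minlength=256)
    cdf = np.cumsum(hist)
    cdf_min = cdf[cdf > 0][0]  # CDF value at the darkest occupied gray level
    total = img.size
    if total == cdf_min:       # constant image: nothing to stretch
        return img.copy()
    # Classic mapping: round((cdf - cdf_min) / (N - cdf_min) * 255)
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[img]            # apply the lookup table to every pixel
```

Because one lookup table is shared by the whole image, dark and bright regions cannot be treated differently, which is the limitation that AHE and CLAHE address by computing a table per region.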

Traditional enhancement methods have significant limitations for low-contrast images or images whose local information must be emphasized. Compared with traditional algorithms, deep learning-based methods are faster and offer better accuracy and robustness [16,17]. Li et al. proposed the CNN-based Zero-DCE algorithm, which reformulates low-light enhancement as image-specific curve estimation and can enhance low-light images without requiring normal-light reference images [18]. Pan et al. [19] proposed an end-to-end system based on a fully convolutional network (FCN) that quickly produces clear images even in the dark; it operates directly on raw sensor data and replaces much of the traditional image processing pipeline, but performs well only on the dataset constructed for it. Li et al. [20] proposed Retinex-Net, which combines Retinex theory with a deep neural network and enhances the low-illumination image by processing its illumination component. Although this method fully recovers color and brightness, the resulting images are noisy. Currently, deep learning-based low-illumination enhancement algorithms still suffer from noise, artifacts, and distortion.
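To make the curve-estimation idea concrete, the sketch below applies the quadratic light-enhancement curve from the Zero-DCE paper, LE(x) = x + α·x·(1 − x), iteratively to an image normalized to [0, 1]. In Zero-DCE the per-iteration α maps are predicted by a CNN; here they are simply passed in, and the helper names are hypothetical.

```python
import numpy as np

def le_curve(x: np.ndarray, alpha) -> np.ndarray:
    """One quadratic light-enhancement step: LE(x) = x + alpha * x * (1 - x).

    For x in [0, 1] and alpha in [-1, 1] the output stays in [0, 1] and the
    curve is monotonic, so the ordering of pixel intensities is preserved.
    """
    return x + alpha * x * (1.0 - x)

def apply_curves(img: np.ndarray, alphas) -> np.ndarray:
    """Apply the curve iteratively; each alpha may be a scalar or a per-pixel map."""
    out = img.astype(np.float64)
    for alpha in alphas:
        out = le_curve(out, alpha)
    return out
```

Positive α brightens mid-tones while leaving 0 and 1 fixed, so repeated application gives a strong but bounded enhancement without any reference image.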

To address these shortcomings, we combine traditional algorithms with deep learning. First, we design a CNN-based adaptive parameter prediction module so that the parameters of the enhancement process can be adjusted adaptively. Second, given the specific nature of parking space corners, it is necessary not only to improve the quality of the entire image but also to highlight corner details so that corner information is clearer. This paper therefore proposes an image adaptive enhancement algorithm that fuses low-light image enhancement via illumination map estimation (LIME) with contrast-limited adaptive histogram equalization (CLAHE), exploiting the advantages of both algorithms to improve the enhancement effect.

2.2. Parking Space Detection

For vision-based parking space detection, most current algorithms rely on two kinds of visual features: line features and corner features.

In terms of research based on line features, Shih et al. [21] proposed a parking space line recognition method based on an improved Hough transform, which utilizes the probabilistic Hough transform method to recognize the parking space lines in the image. Suhr et al. [22] proposed a color-based image segmentation method to extract parking space lines based on the difference between the color of parking marking lines and the ground. Additionally, they determined the location of parking space corners by identifying the intersection of two perpendicularly intersecting straight lines. However, at present, many urban parking spaces do not include complete rectangular parking space lines, but simply mark the position of the parking space with the parking space corner. Therefore, in this case, the identification of the parking space cannot be realized with the above method based on line features.
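The corner localization step described for [22], intersecting two perpendicular marking lines, reduces to solving a 2 × 2 linear system when each line is written in the normal form ρ = x·cos θ + y·sin θ used by the Hough transform. The following Python sketch shows this computation; the function names and the 5° perpendicularity tolerance are illustrative assumptions, not values taken from [22].

```python
import math
from typing import Optional, Tuple

def intersect_lines(rho1: float, theta1: float, rho2: float, theta2: float,
                    eps: float = 1e-9) -> Optional[Tuple[float, float]]:
    """Intersect x*cos(t) + y*sin(t) = rho for two lines using Cramer's rule."""
    a, b = math.cos(theta1), math.sin(theta1)
    c, d = math.cos(theta2), math.sin(theta2)
    det = a * d - b * c  # equals sin(theta2 - theta1); zero for parallel lines
    if abs(det) < eps:
        return None
    x = (rho1 * d - b * rho2) / det
    y = (a * rho2 - c * rho1) / det
    return x, y

def is_perpendicular(theta1: float, theta2: float, tol_deg: float = 5.0) -> bool:
    """True if the two line directions differ by 90 degrees within tol_deg."""
    diff = abs(theta1 - theta2) % math.pi
    return abs(diff - math.pi / 2) <= math.radians(tol_deg)

def corner_from_lines(line1, line2):
    """Return the corner (x, y) of two (rho, theta) lines if they are near-perpendicular."""
    if not is_perpendicular(line1[1], line2[1]):
        return None
    return intersect_lines(line1[0], line1[1], line2[0], line2[1])
```

For example, the vertical line x = 100 (θ = 0) and the horizontal line y = 50 (θ = π/2) meet at the corner (100, 50). When one of the two marking lines is missing, no corner can be formed, which is the failure case discussed above.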

In research based on corner features, Suhr et al. [23] proposed a parking space recognition algorithm based on a hierarchical tree structure, which uses different parking space corner models to build the tree and recognize parking spaces. Yang et al. [24] proposed a learning-based method that uses a 360° surround-view system to collect a large number of parking space corner images as training samples, and then recognizes parking space corners in the image to identify the target parking space. This method does not rely on complete straight parking space lines; however, learning-based methods require many training samples and long training times, and their processing speed is still slow compared with traditional image processing algorithms.

Since the detection of available parking spaces studied in this paper starts with the detection of the parking space, followed by the detection of obstacles to determine whether the parking space is occupied or not, the detection of parking space corners has the highest priority. In order to improve the detection speed of parking space corners, this paper uses an image gradient matching-based parking space corner detection method, which identifies and localizes the parking space corners in the image to be tested by matching the pixel gradient template of the parking space corner image with the image to be tested. The method is relatively simple, does not rely on complex deep learning models, and has a fast processing speed and low computational cost.

2.3. Parking Scenario Obstacle Detection

Target detection under normal lighting conditions has made great progress. Depending on whether region proposals (candidate boxes) are generated, deep learning-based target detection algorithms can be categorized into two-stage and one-stage algorithms.

Two-stage algorithms first generate candidate regions that may contain objects and then further classify and refine these regions to obtain the final detection result. The most representative algorithms of this type belong to the Region-based Convolutional Neural Network (R-CNN) family. R-CNN is a milestone in deep learning-based target detection. It first generates many candidate regions on the input image, extracts features from each region, classifies them with a Support Vector Machine (SVM), and finally refines the bounding box positions to output the predictions. Following R-CNN, the same team proposed Fast R-CNN, which extracts features once from the whole input image and thereby avoids R-CNN's repeated feature extraction for each candidate region. Faster R-CNN, designed by Ren et al., was the first to use a Region Proposal Network (RPN) to generate candidate boxes, which greatly improved both detection speed and accuracy [25].

In contrast, one-stage algorithms skip candidate region generation and directly output detection results. Such algorithms include the Single Shot MultiBox Detector (SSD) and the You Only Look Once (YOLO) series. SSD generates anchor boxes of different sizes at each position of the feature maps output by different convolutional layers and then performs detection [26]. The YOLO series treats target detection as a regression problem and uses the final output feature map of the convolutional neural network to predict both the category and the location of each object. Each input image passes through the YOLO network only once to obtain the positions, categories, and corresponding confidence scores of all objects in the image [27].
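The regression view of YOLO can be illustrated with the box decoding of the YOLOv5 detection head, as we understand it from the public Ultralytics implementation: the raw outputs for one anchor at one grid cell pass through a sigmoid, the centre offset is added to the cell index, the size is scaled relative to the anchor, and each class confidence is the objectness multiplied by the class probability. The NumPy sketch below is illustrative; the function name and argument layout are assumptions.

```python
import numpy as np

def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))

def decode_yolov5(raw: np.ndarray, grid_xy, anchor_wh, stride: float):
    """Decode raw logits of shape (..., 5 + num_classes) for one anchor.

    grid_xy: (col, row) index of the grid cell; anchor_wh: anchor size in pixels.
    Returns the box centre (pixels), box size (pixels) and per-class confidence.
    """
    p = sigmoid(raw)
    xy = (p[..., 0:2] * 2.0 - 0.5 + np.asarray(grid_xy, dtype=np.float64)) * stride
    wh = (p[..., 2:4] * 2.0) ** 2 * np.asarray(anchor_wh, dtype=np.float64)
    conf = p[..., 4:5] * p[..., 5:]  # objectness x class probability
    return xy, wh, conf
```

Because of the sigmoid, the centre offset is bounded to (−0.5, 1.5) cells and the size to (0, 4) times the anchor, so a single forward pass yields bounded boxes for every cell and anchor.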

The above target detection algorithms can achieve good detection accuracy in detection tasks under normal lighting conditions. However, under low-illumination conditions, degradation phenomena will occur in the captured images, such as the presence of background noise and motion blur, making it difficult to distinguish between the foreground and background, which makes it difficult for the target detection network to extract effective image features and positional information and thus reduces the target detection accuracy. Therefore, in this paper, image enhancement and detection algorithms will be used jointly to improve detection accuracy. In addition, for the more complex parking scenarios, there may be a variety of sizes and multiple categories of detection targets (such as vehicles, cones, electric bicycles, etc.). For these cases, this paper improves the structure of the original model to improve the detection of different scales of the target, in order to better cope with the real-time target detection task in complex parking scenarios.

3. Detection Methods for Parking Spaces and Obstacles

3.1. Detection Process

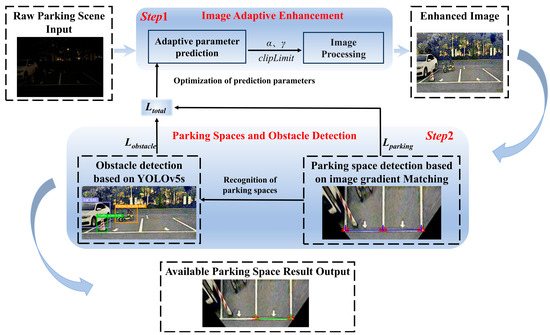

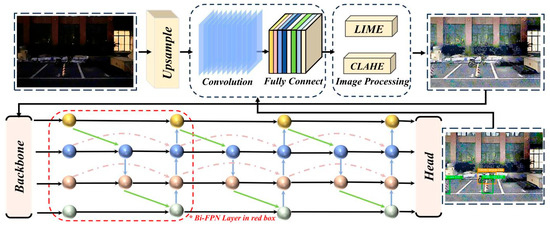

Considering the characteristics of low-illumination complex parking scenarios, the available parking space detection flow proposed in this paper is shown in Figure 1. It consists of two main parts: the image adaptive enhancement module and the parking space and obstacle detection module. The two modules are cascaded: the detection module feeds back a weighted loss Ltotal = a·Lparking + b·Lobstacle, combining the parking space detection loss Lparking and the obstacle detection loss Lobstacle with weighting coefficients a and b, and this loss is used to optimize the prediction parameters of the image adaptive enhancement module. These parameters are the positive coefficient α that adjusts the enhancement intensity, the gamma transform parameter γ that adjusts the luminance distribution, and the contrast clipping threshold clipLimit. Optimizing them achieves adaptive enhancement and makes the enhanced images increasingly favorable for parking space and obstacle detection.

Figure 1. Schematic diagram of detection method.

Specifically, the first step, the image adaptive enhancement module, consists of two parts: a CNN-based adaptive parameter prediction module and an image processing module that fuses the LIME and CLAHE algorithms, which together produce a preliminary enhanced image. The second step, the parking space and obstacle detection module, also consists of two parts: parking space corner detection based on image gradient matching and an obstacle detection network based on YOLOv5s. Obstacle detection is carried out once a parking space has been recognized; the YOLOv5s-based network replaces the original FPN structure with a BiFPN structure to improve its feature fusion capability and detection performance. After the second step, the algorithm determines whether the recognized parking space is occupied by an obstacle; if it is not occupied, the available parking space is output.

To express the steps and logical structure of the algorithm more clearly, Algorithm 1 gives the pseudo-code of the available parking space detection algorithm.

| | Algorithm 1: Available parking space detection |
| --- | --- |
| 1: | Inputs: Low-illumination image L, adjustment coefficient α, brightness parameter γ, contrast threshold clipLimit |
| 2: | Output: Coordinates of available parking space C_out |
| 3: | Initialization: |
| 4: | P_out ← None # Coordinates of detected parking space |
| 5: | O_out ← None # Coordinates of detected obstacle |
| 6: | C_out ← None # Coordinates of available parking space |
| 7: | H ← None # Initial enhanced image |
| 8: | Step 1: Adaptive image enhancement |
| 9: | H, α, γ, clipLimit ← Adaptive_Enhancement(L, α, γ, clipLimit) |
| 10: | Step 2: Parking space detection and loss feedback |
| 11: | P_out, L_parking ← Detect_Parking_Spaces(H) |
| 12: | if P_out is None: |
| 13: | P_out ← "NO" |
| 14: | Step 3: Obstacle detection and loss feedback |
| 15: | O_out, L_obstacle ← Detect_Obstacles(H) |
| 16: | if O_out is None: |
| 17: | O_out ← "safe" |
| 18: | Step 4: Loss function minimization check |
| 19: | while not Is_Minimized(L_total = a·L_parking + b·L_obstacle): |
| 20: | H, α, γ, clipLimit ← Adaptive_Enhancement(L, α, γ, clipLimit) |
| 21: | P_out, L_parking ← Detect_Parking_Spaces(H) |
| 22: | O_out, L_obstacle ← Detect_Obstacles(H) |
| 23: | Step 5: Decision logic |
| 24: | if P_out is None or P_out == "NO": |
| 25: | C_out ← None |
| 26: | Print("NO parking") |
| 27: | elif O_out is None or O_out == "safe": |
| 28: | C_out ← P_out |
| 29: | Print("Available parking space:", C_out) |
| 30: | elif Is_Obstacle_In_Parking_Space(O_out, P_out): |
| 31: | C_out ← None |
| 32: | Print("occupied") |
| 33: | else: |
| 34: | C_out ← P_out |
| 35: | Print("Available parking space:", C_out) |
| 36: | Return C_out |
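Step 5 of Algorithm 1 leaves Is_Obstacle_In_Parking_Space unspecified. The following Python sketch shows one way the decision logic could be written, assuming a parking space is given by its four corner coordinates and each obstacle by an axis-aligned bounding box. The occupancy criterion (an obstacle's box centre lies inside the parking space polygon) and all function names are hypothetical, not taken from the paper.

```python
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)

def point_in_polygon(pt: Point, poly: Sequence[Point]) -> bool:
    """Ray-casting test: count crossings of a horizontal ray starting at pt."""
    x, y = pt
    inside = False
    for i in range(len(poly)):
        (x1, y1), (x2, y2) = poly[i], poly[(i + 1) % len(poly)]
        if (y1 > y) != (y2 > y):  # edge straddles the ray's height
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside

def is_obstacle_in_space(obstacles: List[Box], space: Sequence[Point]) -> bool:
    """Hypothetical criterion: occupied if any box centre falls inside the space."""
    return any(point_in_polygon(((x1 + x2) / 2, (y1 + y2) / 2), space)
               for x1, y1, x2, y2 in obstacles)

def decide(space: Optional[Sequence[Point]],
           obstacles: Optional[List[Box]]) -> Optional[Sequence[Point]]:
    """Mirror of Step 5: return the space's corners if it is available, else None."""
    if space is None:        # no parking space detected ("NO")
        return None
    if not obstacles:        # no obstacle detected ("safe")
        return space
    if is_obstacle_in_space(obstacles, space):
        return None          # occupied
    return space
```

A box-overlap criterion, for example requiring a minimum fraction of the obstacle box to lie inside the space, would be more robust for obstacles near the space boundary; the centre test only keeps the sketch short.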

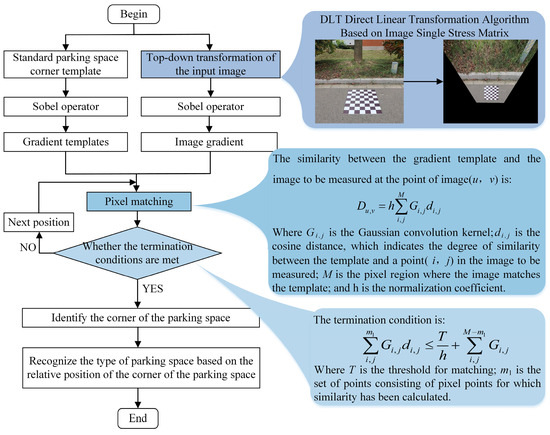

In this paper, the parking space detection module adopts a well-established method based on image pixel gradient matching [28] to recognize parking space corners; the detection process is shown in Figure 2. First, a region of interest (ROI), namely the region containing the parking space corner, is selected in a standard parking space image, and its pixel gradients are extracted with the Sobel operator to build the template. Meanwhile, the image output by the image adaptive enhancement module is transformed into a top-down view, and its gradients are likewise extracted with the Sobel operator [29]. The pixel gradient template of the parking space corner is then matched against the image under test, using the Gaussian-weighted cosine distance as the similarity metric, to locate the parking space corners. Finally, the parking space type is recognized from the relative positions of the corners.

Figure 2. Parking space corner detection flow.
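The matching step described above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the implementation of [28]: the top-down transformation is omitted, the search is exhaustive, the Gaussian weighting of the cosine similarity between gradient vectors and the default σ (a quarter of the template size) are assumptions, and all function names are hypothetical.

```python
import numpy as np

SOBEL_X = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=np.float64)
SOBEL_Y = SOBEL_X.T

def correlate3x3(img: np.ndarray, k: np.ndarray) -> np.ndarray:
    """3x3 cross-correlation with edge padding; output has the input's shape."""
    p = np.pad(img, 1, mode="edge")
    h, w = img.shape
    out = np.zeros((h, w))
    for dy in range(3):
        for dx in range(3):
            out += k[dy, dx] * p[dy:dy + h, dx:dx + w]
    return out

def sobel_gradients(img: np.ndarray) -> np.ndarray:
    """Stack of (gx, gy) Sobel responses, shape (H, W, 2)."""
    img = img.astype(np.float64)
    return np.stack([correlate3x3(img, SOBEL_X), correlate3x3(img, SOBEL_Y)], axis=-1)

def gaussian_window(h: int, w: int, sigma: float) -> np.ndarray:
    """2-D Gaussian weights centred on the template, emphasising the corner."""
    ys = np.arange(h) - (h - 1) / 2
    xs = np.arange(w) - (w - 1) / 2
    return np.exp(-(ys[:, None] ** 2 + xs[None, :] ** 2) / (2 * sigma ** 2))

def weighted_cosine(a: np.ndarray, b: np.ndarray, wts: np.ndarray) -> float:
    """sum(w * <a, b>) / sqrt(sum(w * |a|^2) * sum(w * |b|^2)) over gradient vectors."""
    num = np.sum(wts * np.sum(a * b, axis=-1))
    den = np.sqrt(np.sum(wts * np.sum(a * a, axis=-1)) * np.sum(wts * np.sum(b * b, axis=-1)))
    return float(num / den) if den > 0 else 0.0

def match_corner(image: np.ndarray, template: np.ndarray, sigma: float = 0.0):
    """Exhaustive search; returns (best_score, (row, col)) of the template's top-left."""
    g_img, g_tpl = sobel_gradients(image), sobel_gradients(template)
    th, tw = template.shape
    wts = gaussian_window(th, tw, sigma if sigma > 0 else max(th, tw) / 4)
    best = (-1.0, (0, 0))
    for r in range(image.shape[0] - th + 1):
        for c in range(image.shape[1] - tw + 1):
            score = weighted_cosine(g_img[r:r + th, c:c + tw], g_tpl, wts)
            if score > best[0]:
                best = (score, (r, c))
    return best
```

Cosine similarity compares gradient directions rather than raw intensities, so a uniform change in marking brightness between the template and the enhanced image does not change the score, which suits matching after variable enhancement.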

3.2. Image Adaptive Enhancement Module

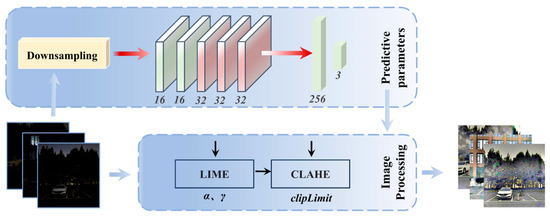

The image adaptive enhancement module consists of two parts: an adaptive parameter prediction module designed based on CNN and an image processing module that incorporates the LIME algorithm and CLAHE algorithm, as shown in Figure 3.

Figure 3. Image adaptive enhancement module.

In the parameter prediction stage, to handle input images of different scales and to reduce computational cost, we downsample each input image to a uniform resolution of 640 × 640. When the original image is smaller than 640 × 640, we use the original image size as the input to the parameter prediction module so that enough image information is available for parameter prediction. The processed image is fed into a parameter prediction network consisting of five convolutional layers and two fully connected layers, and the fully connected layers output the parameters required by the image processing module. As shown in Figure 3, each convolutional block consists of a 3 × 3 convolutional layer with a stride of 2 followed by a Leaky ReLU activation. The five convolutional layers have 16, 16, 32, 32, and 32 output channels, and the two fully connected layers have 256 and 3 outputs, respectively (the image processing module requires three prediction parameters).
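The feature-map sizes implied by this architecture can be checked with simple arithmetic. The Python sketch below assumes a padding of 1 for each 3 × 3, stride-2 convolution (the padding is not stated in the text) and maps unconstrained fully connected outputs into the α and γ ranges stated later in this section ([0.5, 1.5] and [0.5, 2.5]) with a sigmoid. The sigmoid mapping is likewise an assumption, no range is assumed for clipLimit because none is given, and the function names are hypothetical.

```python
import math

def conv_out(size: int, kernel: int = 3, stride: int = 2, padding: int = 1) -> int:
    """Spatial output size of a convolution: floor((n + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1

def feature_shapes(size: int = 640, channels=(16, 16, 32, 32, 32)):
    """(channels, height, width) after each of the five convolutional blocks."""
    shapes = []
    for c in channels:
        size = conv_out(size)
        shapes.append((c, size, size))
    return shapes

def to_range(raw: float, low: float, high: float) -> float:
    """Squash an unconstrained network output into [low, high] with a sigmoid."""
    return low + (high - low) / (1.0 + math.exp(-raw))

shapes = feature_shapes()
flat = shapes[-1][0] * shapes[-1][1] * shapes[-1][2]  # inputs to the 256-unit FC layer
```

Under these assumptions a 640 × 640 input shrinks to 320, 160, 80, 40, and finally 20 × 20, so flattening the last 32-channel map gives 12,800 inputs to the 256-unit layer; a design that applies global pooling before the fully connected layers would feed only 32 inputs instead.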

In the image enhancement stage, given the particular characteristics of parking space markings, we need not only to enhance the quality of the whole image but also to highlight the details of the markings so that their information is clearer. This paper therefore proposes an image enhancement algorithm that integrates the LIME and CLAHE algorithms.

The LIME algorithm enhances low-light images by estimating the illumination map of the image. It analyzes the brightness distribution of the image and generates an illumination map that reflects the brightness levels of different regions in the image. Using this illumination map, we can adjust the brightness of the image to improve the visibility of the image under low light conditions. The advantage of LIME is that it can enhance the brightness while preserving the edges and details of the image.

The CLAHE algorithm is an improved histogram equalization technique that avoids image distortion by limiting the excessive enhancement of contrast. Compared to global histogram equalization, CLAHE applies histogram equalization to local areas of the image, and is, therefore, better able to deal with uneven lighting. This approach significantly improves the local contrast of the image, resulting in sharper details.

The reason for integrating LIME and CLAHE is that their respective strengths complement each other; LIME improves the brightness level of the image through illumination map estimation but may result in insufficient contrast in certain areas; conversely, CLAHE enhances the local contrast of the image, but may lead to over-enhancement of brightness or noise amplification if left unchecked. Therefore, we combine LIME and CLAHE by first using LIME for initial brightness adjustment and then using CLAHE to further enhance the local contrast of the image. This integrated approach preserves the details and edge information of the image while improving the overall brightness and contrast, resulting in better image enhancement.

The specific steps are as follows:

The LIME algorithm [30] first estimates the illumination of each pixel individually as the maximum value over the R, G, and B channels, and then refines this initial illumination map by imposing a structure prior to obtain the final illumination map. A preliminary enhanced intermediate image M is then generated from this illumination map. Among the input parameters, the positive coefficient α controls the intensity of the enhancement and is typically set within [0.5, 1.5], while γ adjusts the luminance distribution of the image and is typically set within [0.5, 2.5]; both values are adjusted dynamically by the CNN. Algorithm 2 shows the pseudo-code of LIME.

| | Algorithm 2: LIME |
| --- | --- |
| 1: | Input: Low-illumination image L, adjustment coefficient α, brightness parameter γ |
| 2: | Output: Enhanced result M |
| 3: | Initialization: |
| 4: | W ← Construct_Weight_Matrix() |
| 5: | Step 1: Estimate initial luminance map T_i |
| 6: | $T_i(x) \leftarrow \max_{c \in \{R,G,B\}} L^c(x)$ |
| 7: | Step 2: Refine luminance map T using an exact solver algorithm |
| 8: | T ← Refine_Luminance_Map(T_i, W, α) |
| 9: | Step 3: Apply gamma correction to T |
| 10: | $T \leftarrow T^{\gamma}$ |
| 11: | Step 4: Enhance L using T |
| 12: | M ← Enhance_Image(L, T) |
| 13: | Return M |
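The four steps of Algorithm 2 can be sketched in NumPy as follows. This is a deliberately simplified stand-in, not LIME's refinement: the structure-aware optimization of Step 2 is replaced by a weighted blend of the initial map with a box-filtered copy, in which a larger α yields a smoother map (loosely mirroring α's role as the smoothness weight in the LIME objective). The function names, the blur radius, and the small floor eps are assumptions.

```python
import numpy as np

def box_blur(x: np.ndarray, r: int) -> np.ndarray:
    """Mean filter of radius r with edge padding (stand-in smoother, not LIME's solver)."""
    p = np.pad(x, r, mode="edge")
    h, w = x.shape
    k = 2 * r + 1
    out = np.zeros_like(x)
    for dy in range(k):
        for dx in range(k):
            out += p[dy:dy + h, dx:dx + w]
    return out / (k * k)

def lime_sketch(img: np.ndarray, alpha: float = 1.0, gamma: float = 0.8,
                radius: int = 2, eps: float = 1e-3) -> np.ndarray:
    """Simplified LIME for a float RGB image in [0, 1] with shape (H, W, 3)."""
    t_init = img.max(axis=2)  # Step 1: per-pixel maximum over R, G, B
    # Step 2 (stand-in): balance fidelity to t_init against smoothness via alpha.
    t = (t_init + alpha * box_blur(t_init, radius)) / (1.0 + alpha)
    t = np.power(np.clip(t, eps, 1.0), gamma)  # Step 3: gamma correction
    m = img / t[..., None]                      # Step 4: Retinex-style L / T
    return np.clip(m, 0.0, 1.0)
```

Since T is at most 1, dividing by T never darkens a pixel; a γ below 1 raises T and therefore tempers the brightening, which is why γ acts as a control on the luminance distribution.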

Starting from the image M produced by the LIME algorithm, the CLAHE algorithm then applies histogram equalization with contrast limiting so that the enhancement looks natural rather than excessive.

The image M is first divided into multiple sub-blocks of equal size, and histogram equalization is performed on each sub-block to make its pixel value distribution more uniform, thereby enhancing image contrast. During equalization, the histogram of each sub-block is clipped: bins whose counts exceed the preset threshold clipLimit are cut off at the threshold, and the excess counts are redistributed uniformly across all bins of that histogram. This limits contrast amplification over the whole image and prevents excessive local contrast. Finally, the processed sub-blocks are combined into the final enhanced image H; standard CLAHE implementations interpolate bilinearly between the mappings of neighboring sub-blocks to avoid visible block boundaries.
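The clipping and redistribution applied to each sub-block can be sketched for a single tile as follows. This NumPy sketch computes the equalization lookup table of one tile only and omits the tiling and inter-tile interpolation; the function names are hypothetical, and `clip_limit` here is an absolute bin count.

```python
import numpy as np

def clip_histogram(hist: np.ndarray, clip_limit: float) -> np.ndarray:
    """Cut bins at clip_limit and spread the total excess uniformly over all bins."""
    hist = hist.astype(np.float64)
    excess = np.maximum(hist - clip_limit, 0.0).sum()
    return np.minimum(hist, clip_limit) + excess / hist.size

def tile_lut(tile: np.ndarray, clip_limit: float, bins: int = 256) -> np.ndarray:
    """Equalization lookup table for one 8-bit tile after histogram clipping."""
    hist = clip_histogram(np.bincount(tile.ravel(), minlength=bins), clip_limit)
    cdf = np.cumsum(hist)
    return np.round(cdf / cdf[-1] * (bins - 1)).astype(np.uint8)
```

For a constant 8 × 8 tile at gray level 100, plain equalization would map level 100 to 255, whereas with `clip_limit=4` it maps to 110, which shows the contrast limiting at work. The single uniform redistribution can push bins slightly above the limit again. Libraries such as OpenCV take a normalized clipLimit rather than an absolute count, so values are not directly interchangeable with this sketch.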

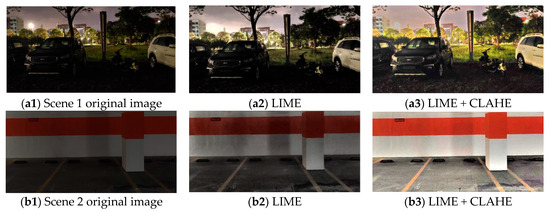

Compared with the original image, the image processed by the fused LIME and CLAHE algorithms (Figure 4) shows enhanced fine details and textures: its feature regions are clearer and more prominent, textures, edges, and other features are strengthened, and the image is richer and has higher contrast.

Figure 4. Comparison between the original image and the image visualization processed by the LIME + CLAHE algorithms.

For images of parking environments, evaluating an enhancement algorithm should consider not only visual perceptual quality but also the structural information of the image. Therefore, Peak Signal-to-Noise Ratio (PSNR) and Structural Similarity Index (SSIM) are chosen as two complementary evaluation metrics. PSNR is usually measured in decibels (dB) and is computed from the Mean-Square Error (MSE), i.e., the mean of the squared differences between corresponding pixel values of the original and processed images; for 8-bit images, PSNR = 10·log10(255²/MSE). A larger PSNR indicates better image quality, i.e., lower distortion. SSIM is commonly used to evaluate the similarity between the enhanced image and the original image; the closer the SSIM value is to 1, the more similar the two images are. As Table 1 shows, the method of fusing LIME and CLAHE used in this paper outperforms the pre-improvement method.

Table 1. Comparison of the effectiveness of the method before and after improvement.
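The PSNR definition above can be written directly in NumPy. This sketch is illustrative and the function name is hypothetical; scikit-image also provides `skimage.metrics.peak_signal_noise_ratio` and `skimage.metrics.structural_similarity` for PSNR and SSIM.

```python
import numpy as np

def psnr(reference: np.ndarray, processed: np.ndarray, max_val: float = 255.0) -> float:
    """PSNR in dB: 10 * log10(MAX^2 / MSE), with MSE the mean squared pixel difference."""
    # Cast to float first: subtracting uint8 arrays would wrap around.
    diff = reference.astype(np.float64) - processed.astype(np.float64)
    mse = float(np.mean(diff ** 2))
    if mse == 0.0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_val ** 2 / mse))
```

For instance, two 8-bit images that differ by exactly one gray level everywhere have MSE = 1 and therefore PSNR = 20·log10(255) ≈ 48.13 dB.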

In summary, the workflow of the image adaptive enhancement module is as follows: firstly, the parameter prediction module predicts the parameters required in the image enhancement module based on the global information of the input image. Then, the parameters are imported into the image processing module to enhance the input image. Next, the parameters are optimized and updated using the loss Ltotal returned by the parking space and obstacle detection module. Finally, the updated parameters are imported into the image adaptive enhancement module to enhance the input image again.

3.3. Improvement of Multi-Scale Feature Fusion

In this paper, the original FPN structure of YOLOv5s is improved into a BiFPN structure, and the improved detector is applied to the images after the parking system has enhanced them.

BiFPN is an improvement of FPN, aiming at more efficient feature fusion [31]. BiFPN not only retains the traditional bottom-up and top-down feature fusion paths but also adds direct connections across scales for better fusion of feature information at different scales. For the automatic parking system in this study, the main improvements introduced are as follows: Firstly, a bidirectional flow of information is realized by establishing a bidirectional connection between the up-sampled and down-sampled feature maps. In this way, feature maps at different levels can complement each other to improve the accuracy of target detection.

Secondly, a weighted feature fusion mechanism is introduced. By fusing the feature maps of neighboring layers, the features of different scales can complement each other and further enhance the feature expression ability. In the process of feature fusion, adaptive weights are used, which can be weighted and fused according to the importance of different features to improve the fusion effect.

Figure 5 shows an overview of obstacle detection based on the improved YOLOv5s.

Figure 5.

Overview of obstacle detection in this paper.

The specific improvement details are as follows:

- (a)

- Adding bi-directional paths: By introducing bi-directional connections in feature pyramid networks at different scales, information exchange and fusion across layers are realized. This bi-directional connectivity is not limited to simple top-down and bottom-up paths but is more flexible and efficient in that each bi-directional path is considered as a feature network layer and can be repeated many times to achieve higher-level feature fusion [32].

- (b)

- Adding a weight learning module: A weight learning module is added at each feature fusion node. This module learns the weight of each input feature layer through convolutional operations and fuses the features according to these weights, so that the network pays more attention to the more informative features. Because the contribution of each level can be adjusted dynamically according to its importance, feature fusion becomes more flexible and effective, which further improves the accuracy of target detection.

- (c)

- Simplifying feature fusion operations: Cross-scale connectivity is optimized by removing nodes that have only one input edge and by adding an extra edge from the input node to the output node at the same level. In addition, each bi-directional path is treated as one feature network layer that is repeated multiple times. This simplified structure allows BiFPN to reduce computational complexity and increase detection speed while maintaining accuracy.

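Next, BiFPN is formulated. Given a list of multi-scale input features $\vec{P}^{in} = (P^{in}_{l_1}, P^{in}_{l_2}, \ldots)$, where $P^{in}_{l_i}$ is the feature at level $l_i$, the goal is to find a fusion function $f$ that produces the desired output feature list:

$$\vec{P}^{out} = f(\vec{P}^{in})$$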

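Taking input features at levels 3 to 7 ($P^{in}_3, \ldots, P^{in}_7$) as an example, the traditional FPN aggregates multi-scale features in a top-down manner:

$$P^{out}_7 = \mathrm{Conv}(P^{in}_7)$$
$$P^{out}_6 = \mathrm{Conv}\left(P^{in}_6 + \mathrm{Resize}(P^{out}_7)\right)$$
$$\cdots$$
$$P^{out}_3 = \mathrm{Conv}\left(P^{in}_3 + \mathrm{Resize}(P^{out}_4)\right)$$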

Next, bi-directional top-down and bottom-up paths are designed. Each path contains multi-scale feature fusion operations, and every fusion node weights its input features by learnable weights. For level 6, the intermediate top-down feature is:

$$P^{td}_6 = \mathrm{Conv}\left(\frac{w_1 \cdot P^{in}_6 + w_2 \cdot \mathrm{Resize}(P^{in}_7)}{w_1 + w_2 + \epsilon}\right)$$

where $P^{td}_6$ is the intermediate feature at level 6 on the top-down path. Resize denotes the resolution-rescaling function, implemented with bilinear interpolation, which provides good spatial accuracy while remaining computationally efficient. Conv denotes the convolution operation, and $w$ denotes a learnable weight. $\epsilon$ is a fixed constant, set to $\epsilon = 0.0001$, that adds a small offset to the denominator to avoid division by zero or numerically unstable values. It is small enough not to distort the weight assignment while keeping the computation stable.

The output feature at level 6 on the bottom-up path is:

$$P^{out}_6 = \mathrm{Conv}\left(\frac{w'_1 \cdot P^{in}_6 + w'_2 \cdot P^{td}_6 + w'_3 \cdot \mathrm{Resize}(P^{out}_5)}{w'_1 + w'_2 + w'_3 + \epsilon}\right)$$

where $P^{out}_6$ and $P^{out}_5$ denote the output features of the sixth and fifth layers on the bottom-up path. Each weight is passed through a ReLU so that $w_i \ge 0$, and the inputs are combined by fast normalized fusion:

$$O = \sum_i \frac{w_i}{\epsilon + \sum_j w_j} \cdot I_i$$

where $I_i$ is the $i$-th input feature and $O$ is the fused output.
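The fast normalized fusion described above can be sketched in NumPy, together with the way one BiFPN layer chains it along the top-down and bottom-up paths. The sketch makes four simplifying assumptions. Only three levels are used (P3 to P5, each at half the resolution of the level below), `Conv` is replaced by the identity so the data flow stays visible, nearest-neighbour upsampling and stride-2 subsampling stand in for the bilinear `Resize`, and the weights are fixed arrays rather than trained parameters.

```python
import numpy as np

EPS = 1e-4  # the epsilon used in the fusion formula


def fast_normalized_fusion(inputs, weights, eps=EPS):
    """O = sum_i w_i / (eps + sum_j w_j) * I_i, with a ReLU keeping w_i >= 0."""
    w = np.maximum(np.asarray(weights, dtype=np.float64), 0.0)  # ReLU
    w = w / (eps + w.sum())
    return sum(wi * x for wi, x in zip(w, inputs))


def upsample2(x):
    """Nearest-neighbour 2x upsampling of a (C, H, W) map (stand-in for bilinear Resize)."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def downsample2(x):
    """Stride-2 subsampling of a (C, H, W) map."""
    return x[:, ::2, ::2]


def bifpn_layer(p3, p4, p5, w):
    """One BiFPN layer over three levels, with Conv taken as the identity.

    `w` maps node names to weight lists; in a real network these are learned.
    """
    p4_td = fast_normalized_fusion([p4, upsample2(p5)], w["p4_td"])            # top-down
    p3_out = fast_normalized_fusion([p3, upsample2(p4_td)], w["p3_out"])
    p4_out = fast_normalized_fusion([p4, p4_td, downsample2(p3_out)], w["p4_out"])  # bottom-up
    p5_out = fast_normalized_fusion([p5, downsample2(p4_out)], w["p5_out"])
    return p3_out, p4_out, p5_out


if __name__ == "__main__":
    c = 8
    p3, p4, p5 = np.ones((c, 32, 32)), np.ones((c, 16, 16)), np.ones((c, 8, 8))
    weights = {"p4_td": [1, 1], "p3_out": [1, 1], "p4_out": [1, 1, 1], "p5_out": [1, 1]}
    print([o.shape for o in bifpn_layer(p3, p4, p5, weights)])  # each level keeps its resolution
```

The middle level mirrors the $P^{out}_6$ node above: it fuses its own input, its top-down intermediate feature, and the resized output from the level below. The top level has no intermediate node, which matches the removal of single-input nodes.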

With the improvements described above, BiFPN strengthens the feature fusion capability and target detection performance of YOLOv5s. Complex parking scenarios may contain detection targets of multiple sizes and classes (e.g., vehicles, cones, and electric bicycles). The bi-directional fusion paths ensure sufficient exchange between multi-scale features, which improves the detection of targets at different scales. To complement the image adaptive enhancement module, the weighted feature fusion mechanism dynamically adjusts the contribution of each feature layer according to the input image, further optimizing feature fusion. By simplifying the fusion operations, BiFPN handles real-time target detection in complex parking scenarios better and improves detection accuracy and robustness for targets of different scales against complex backgrounds.

3.4. Joint Training

In this paper, the image adaptive enhancement module and the parking space and obstacle detection module are cascaded to form a network for detecting available parking spaces under low illumination. The enhancement is considered effective as long as it improves the performance of the subsequent detection. Therefore, no separate loss function is designed for the image adaptive enhancement process, and the whole network is optimized using only the weighted loss $L_{total}$ of the parking space and obstacle detection module. In this way, the adaptive enhancement module learns to produce results that improve subsequent parking space and obstacle detection, rather than results that merely look better to a human observer. $L_{total}$ is the weighted combination of the parking space detection loss $L_{parking}$ and the obstacle detection loss $L_{obstacle}$. The detection module runs two independent tasks: parking space corner detection based on image gradient matching and parking scenario obstacle detection based on YOLOv5s. Designing the weighted loss $L_{total}$ is therefore an important step, because it allows the performance of the model to be adjusted and optimized flexibly in subsequent research.

The following are the specific details of the weighted loss function Ltotal designed in this paper:

First, the parking space corner detection loss function $L_{parking}$ is designed based on the matching similarity $D_{u,v}$ between the parking corner gradient template and the image to be tested. The similarity between the gradient template and the image to be tested at image point $(u, v)$ is:

where $G_{i,j}$ is the Gaussian convolution kernel and $d_{i,j}$ is the cosine distance, which measures how closely the template matches the image to be tested at point $(i, j)$. $M$ is the pixel region over which the image is matched with the template, and $h$ is the normalization coefficient.

The loss function uses the square of the cosine distance, Gaussian-weighted and normalized, as follows:

This loss reflects the overall dissimilarity between the template and the image to be tested over the matching region. Minimizing it optimizes the parameters of the image adaptive enhancement module and thus improves the accuracy of parking space corner recognition.
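The following NumPy sketch illustrates one plausible reading of the gradient-matching description. The exact forms of $D_{u,v}$ and $L_{parking}$ used in this paper may differ, so every formula in it is an assumption. The sketch takes $d_{i,j}$ as the cosine distance $1-\cos\theta$ between the image-gradient vectors of the template and the image patch. It uses an isotropic Gaussian centred on the template for $G_{i,j}$ and sets $h=\sum G_{i,j}$. The similarity is then the Gaussian-weighted mean cosine similarity, and the loss is the Gaussian-weighted mean of $d_{i,j}^2$. The function names are hypothetical.

```python
import numpy as np


def gradient_field(img):
    """Per-pixel (gy, gx) gradients of a 2-D float image."""
    gy, gx = np.gradient(np.asarray(img, dtype=np.float64))
    return gy, gx


def gaussian_weights(shape, sigma):
    """Isotropic Gaussian weights G[i, j] centred on the template (assumed kernel)."""
    rows, cols = shape
    i = np.arange(rows) - (rows - 1) / 2.0
    j = np.arange(cols) - (cols - 1) / 2.0
    return np.exp(-(i[:, None] ** 2 + j[None, :] ** 2) / (2.0 * sigma ** 2))


def match_template_gradients(template, patch, sigma=2.0, eps=1e-8):
    """Return (similarity, loss) between a corner template and an equally sized patch."""
    ty, tx = gradient_field(template)
    py, px = gradient_field(patch)
    dot = ty * py + tx * px
    norms = np.hypot(ty, tx) * np.hypot(py, px)
    cos_sim = dot / (norms + eps)        # cosine similarity of gradient vectors
    d = 1.0 - cos_sim                    # cosine distance d[i, j]
    g = gaussian_weights(np.shape(template), sigma)
    h = g.sum()                          # normalization coefficient (assumed)
    similarity = float((g * cos_sim).sum() / h)
    loss = float((g * d ** 2).sum() / h)  # Gaussian-weighted, normalized squared distance
    return similarity, loss


if __name__ == "__main__":
    template = np.add.outer(np.arange(7.0), 2.0 * np.arange(7.0))  # simple ramp
    print(match_template_gradients(template, template))   # similarity near 1, loss near 0
    print(match_template_gradients(template, -template))  # similarity near -1, loss near 4
```

Because the loss depends on the enhanced image only through its gradients, a differentiable version of the same computation is what would let it drive the enhancement parameters during joint training.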

Second, the obstacle detection loss function $L_{obstacle}$ is designed:

$$L_{obstacle} = L_r + L_o + L_c$$

where $L_r$ is the bounding box position loss, computed with CIoU, and $L_o$ and $L_c$ are the bounding box confidence loss and the category loss, respectively, both computed with cross-entropy loss.
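The CIoU box loss named above can be written out explicitly. This plain-Python sketch follows the standard CIoU definition, $1 - \mathrm{IoU} + \rho^2/c^2 + \alpha v$, for a single pair of boxes in $(x_1, y_1, x_2, y_2)$ corner format. Framework implementations evaluate the same terms over tensors of boxes, so this is illustrative rather than the training code.

```python
import math


def ciou_loss(pred, target, eps=1e-9):
    """CIoU loss for two boxes given as (x1, y1, x2, y2) with x2 > x1 and y2 > y1."""
    px1, py1, px2, py2 = pred
    tx1, ty1, tx2, ty2 = target
    # Intersection over union.
    iw = max(0.0, min(px2, tx2) - max(px1, tx1))
    ih = max(0.0, min(py2, ty2) - max(py1, ty1))
    inter = iw * ih
    pw, ph = px2 - px1, py2 - py1
    tw, th = tx2 - tx1, ty2 - ty1
    iou = inter / (pw * ph + tw * th - inter + eps)
    # Squared distance between box centres (rho^2).
    rho2 = ((px1 + px2 - tx1 - tx2) ** 2 + (py1 + py2 - ty1 - ty2) ** 2) / 4.0
    # Squared diagonal of the smallest enclosing box (c^2).
    cw = max(px2, tx2) - min(px1, tx1)
    ch = max(py2, ty2) - min(py1, ty1)
    c2 = cw ** 2 + ch ** 2 + eps
    # Aspect-ratio consistency term v and its trade-off weight alpha.
    v = (4.0 / math.pi ** 2) * (math.atan(tw / (th + eps)) - math.atan(pw / (ph + eps))) ** 2
    alpha = v / (1.0 - iou + v + eps)
    return 1.0 - iou + rho2 / c2 + alpha * v


if __name__ == "__main__":
    print(ciou_loss((0, 0, 2, 2), (0, 0, 2, 2)))  # close to 0 for identical boxes
    print(ciou_loss((0, 0, 2, 2), (2, 0, 4, 2)))  # adjacent boxes: 1 - 0 + 4/20, about 1.2
```

Unlike plain IoU loss, the centre-distance term still provides a useful signal when the boxes do not overlap, as in the second example.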

Finally, the losses of the two tasks are combined by weighting to form the overall loss function:

$$L_{total} = a \cdot L_{parking} + b \cdot L_{obstacle}$$

where $a$ and $b$ are hyperparameters that adjust the relative weights of the two task losses. The proposed system prioritizes the parking space detection task, because obstacle detection is meaningful only once a parking space has been found. A higher weight is therefore assigned to the corner detection loss ($a = 0.7$) and a lower weight to the obstacle detection loss ($b = 0.3$).

4. Tests and Analysis

4.1. Introduction to the Dataset and Test Environment

The obstacle detection module of the available parking space detection network must handle complex parking scenarios in which obstacles such as traffic cones, ground locks, and illegally parked electric bicycles may appear in the parking space. For this purpose, a large number of images of low-illumination complex parking scenarios were collected by photographing real parking environments. When constructing the dataset, special attention was paid to the lighting conditions and to the variability of the parking scenes. The dataset covers different degrees of low illumination (dusk under natural light, weak street lighting at night, and completely dark parking environments without any lighting). It also covers different types of parking spaces (parallel and perpendicular) and different parking lot layouts (open surface lots, crowded on-street parking, composite parking in residential areas, and underground parking). The resulting self-constructed dataset contains four main object categories: automobiles, electric bicycles, traffic cones, and ground locks.

Although a self-built dataset covering a wide range of low-illumination parking scenes is highly relevant to the task, its scale and diversity may still be limited. In particular, the number and quality of low-light images may not be sufficient to train a high-performance deep learning model. To increase the number of samples, improve the generalization ability of the model, and cover more diverse scenes for greater robustness, this paper adds images from ExDark [33]. ExDark is a real-world dataset focused on image recognition under low-light conditions and contains a large number of low-light images. Only its images of bicycles and automobiles, two common obstacle types in parking environments, are selected, which enlarges the dataset while keeping it relevant to parking obstacles.

The merged dataset therefore contains five object categories (the four self-collected categories plus bicycles from ExDark), and each image contains at least one instance of one of these categories. The location and category of every object are explicitly labeled in the annotations so that the objects can be learned accurately during training. The final merged dataset contains 5840 images, split 8:2 into a training set of 4672 images and a test set of 1168 images. The split is random to avoid potential bias.
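A random 8:2 split of this kind can be sketched with the Python standard library. The fixed seed, the function name, and the file names are illustrative assumptions. With 5840 images, the split yields the 4672/1168 partition reported above.

```python
import random


def split_dataset(items, train_ratio=0.8, seed=0):
    """Shuffle a copy of `items` and split it into (train, test) lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is reproducible
    n_train = round(len(shuffled) * train_ratio)
    return shuffled[:n_train], shuffled[n_train:]


if __name__ == "__main__":
    image_ids = [f"img_{k:04d}.jpg" for k in range(5840)]  # hypothetical file names
    train, test = split_dataset(image_ids)
    print(len(train), len(test))  # 4672 1168
```

A fixed seed keeps the split reproducible across runs. Without stratification, however, the class balance in the test set follows the shuffle rather than being guaranteed.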

All experiments were conducted on the same hardware platform with an i9-11900KF CPU, an RTX 3060 GPU, and the Windows 11 operating system. The algorithms in this paper are based on the Ubuntu 18.04 system with the PyTorch 1.10.0 deep learning framework, developed in Python 3.8, and accelerated with CUDA 10.2.

4.2. Parking Space Detection Experiment

To verify the effectiveness of the proposed adaptive image enhancement method for parking space detection in low-illumination parking scenarios, the detection results obtained with this paper’s Adaptive Image Enhancement Algorithm Fusing the LIME and CLAHE Algorithms (ALC) are compared with those obtained with single image enhancement algorithms. All methods use the same parking space recognition approach based on image gradient matching. Average elapsed time and recognition accuracy are used as evaluation metrics, and the results are shown in Table 2.

Table 2.

Results of the parking space detection comparison experiment.

From Table 2, the following can be seen:

- (a)

- With the adaptive image enhancement method proposed in this paper, the parameters of the enhancement algorithm are adjusted adaptively for different low-illumination conditions, yielding different degrees of enhancement. As a result, the downstream parking space detection network achieves the highest parking space detection accuracy among the compared methods.

- (b)

- After fusing the two algorithms for image enhancement, the parking space recognition accuracy is higher than that obtained by using a single image enhancement algorithm.

- (c)

- Although the average time consumed by the optimized algorithm during detection increases, real-time performance can still be guaranteed in low-speed scenarios of automatic parking.

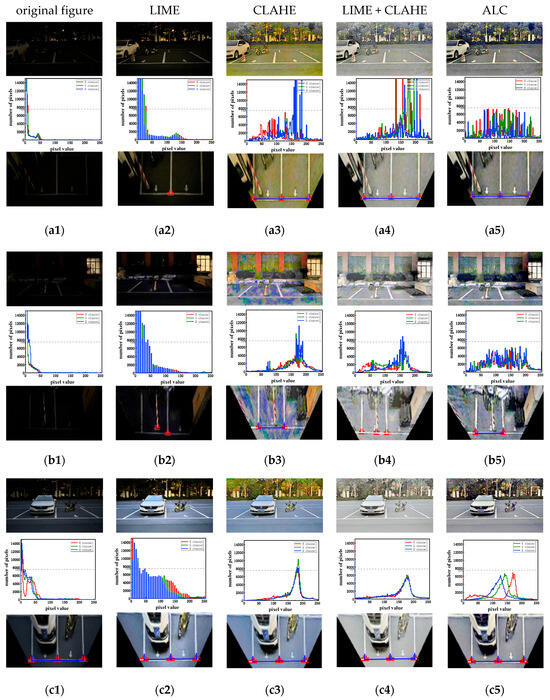

To verify the system’s ability to adapt to environmental changes, images with different illumination levels and different parking environments were collected for experiments. Figure 6 compares the parking space detection results obtained with the image enhancement algorithm of this paper and with other image enhancement algorithms. Figure 6a–c show three images with different luminance and different parking lot views, together with their RGB histograms and parking space detection results. The second to fifth columns show the parking space detection results obtained with the different image enhancement algorithms used in the experiments, which are, from left to right, LIME, CLAHE, LIME + CLAHE, and ALC (the algorithm of this paper).

Figure 6.

Comparative experimental results of parking space detection.

Scenarios a and b both have very low illumination. The pixels of the original images are mainly concentrated in 0~20, the images are dark overall, and the parking spaces cannot be recognized. The comparison below uses a pixel count of 7500 as the reference line and reports the intensity ranges whose counts exceed it. With the LIME algorithm, the pixels in Figure 6(a2) are mainly concentrated in 0~30 and those in Figure 6(b2) in 0~50. With the CLAHE algorithm, the pixels in Figure 6(a3) are mainly concentrated in 160~185 and those in Figure 6(b3) in 170~190. With the LIME + CLAHE algorithm, the pixels in Figure 6(a4) are mainly concentrated in 130~210 and those in Figure 6(b4) in 150~160. Compared with these algorithms, the algorithm of this paper yields a more balanced pixel value distribution, with the count at every pixel value below 7500. It clearly improves image brightness and contrast while avoiding excessive local contrast.
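The reference-line reading of the RGB histograms can be reproduced with a short NumPy helper. This sketch assumes that the image is an 8-bit RGB array and checks each channel's 256-bin histogram separately. The function reports only the lowest and highest intensity whose count exceeds the reference line (7500 pixels in the comparison above), so gaps inside that range are not shown. The function name is hypothetical.

```python
import numpy as np


def ranges_above_line(img, line=7500):
    """Per channel, the (min, max) intensity whose pixel count exceeds `line`, or None."""
    img = np.asarray(img)
    if img.dtype != np.uint8 or img.ndim != 3:
        raise ValueError("expected an HxWxC uint8 image")
    result = []
    for ch in range(img.shape[2]):
        counts = np.bincount(img[..., ch].ravel(), minlength=256)
        above = np.flatnonzero(counts > line)
        result.append((int(above[0]), int(above[-1])) if above.size else None)
    return result


if __name__ == "__main__":
    img = np.zeros((100, 100, 3), dtype=np.uint8)
    img[:80, :, 0] = 10  # 8000 red-channel pixels at intensity 10
    print(ranges_above_line(img))  # [(10, 10), (0, 0), (0, 0)]
```

A result of `None` for every channel corresponds to the situation described for ALC, where no intensity value's count reaches the reference line.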

Scenario b is also more complex. The other enhancement algorithms did not alleviate the interference caused by the complex scene, and both false and missed detections occurred. The image processed with the algorithm of this paper gives the most accurate parking space recognition results.

In the third, brighter parking scenario c, our algorithm also performs well and detects the parking space accurately. The experimental results show that the adaptive enhancement algorithm used in this paper adapts to changing environments and maintains stable performance under different conditions. This reflects its robustness to environmental variability, which benefits the detection performance of the overall system.

4.3. Obstacle Detection Experiment

In this experiment, the number of training epochs is set to 200, the batch size to 16, and the initial learning rate to 0.01. All other training hyperparameters are kept consistent with the base model YOLOv5s. Precision (P), Recall (R), and mean Average Precision (mAP) are used to analyze the effectiveness and detection accuracy of the detection algorithms quantitatively. The effectiveness of obstacle detection is analyzed and validated below.
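A small sketch makes these metrics concrete. Precision is TP/(TP+FP), recall is TP/(TP+FN), AP is the area under the precision–recall curve for one class, and mAP is the mean of AP over classes. The version below uses all-point interpolation of the precision envelope, which is one common convention. Evaluation toolkits differ in interpolation details and in how detections are matched to ground truth at an IoU threshold, so this sketch will not necessarily reproduce the reported numbers. Detections are assumed to be already labelled as true or false positives.

```python
def precision_recall(tp, fp, fn):
    """Precision TP/(TP+FP) and recall TP/(TP+FN), with 0.0 for empty denominators."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def average_precision(scores, is_tp, num_gt):
    """All-point interpolated AP for one class from scored, pre-matched detections."""
    order = sorted(range(len(scores)), key=lambda k: scores[k], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for k in order:  # walk detections from highest to lowest confidence
        if is_tp[k]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    # Make precision non-increasing from right to left (the precision envelope).
    for k in range(len(mpre) - 2, -1, -1):
        mpre[k] = max(mpre[k], mpre[k + 1])
    # Sum the rectangle areas wherever recall changes.
    return sum((mrec[k + 1] - mrec[k]) * mpre[k + 1]
               for k in range(len(mrec) - 1) if mrec[k + 1] != mrec[k])


def mean_average_precision(per_class_ap):
    """mAP as the unweighted mean of per-class AP values."""
    return sum(per_class_ap) / len(per_class_ap)


if __name__ == "__main__":
    # Three detections for one class with two ground-truth objects: TP, FP, TP.
    print(average_precision([0.9, 0.8, 0.7], [True, False, True], num_gt=2))  # about 0.8333
```

In the example, recall reaches 0.5 at precision 1 and then 1.0 at precision 2/3, so the AP is 0.5 × 1 + 0.5 × 2/3.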

To verify the effectiveness of this paper’s algorithm for obstacle detection in low-illumination parking scenarios, the improved algorithm ALC-BiFPN is compared with other algorithms using the same experimental equipment and experimental strategy. The number of parameters, the average elapsed time, and the mean Average Precision (mAP) serve as quantitative metrics. The experimental results are shown in Table 3.

Table 3.

Comparative experimental results of obstacle detection.

Two classes of algorithms are selected for the comparison experiments. The first class consists of basic target detection algorithms, such as YOLOv5s and SSD, which perform target detection directly without any additional enhancement of the images. The second class consists of improved target detection algorithms proposed by other researchers, such as YOLOv5-Fog [34], FISNet [35], and Dark-YOLO [36], which first process the low-quality captured images and then perform detection.

For the first class of algorithms, experiments were conducted on our self-constructed dataset. Although their average elapsed time is shorter than that of the ALC-BiFPN algorithm in this paper, their obstacle detection mAP is lower. For the second class, the experimental data reported by the original authors are cited. The YOLOv5-Fog algorithm targets foggy images and is evaluated on the Real-world Task-Driven Testing Set (RTTS), which covers traffic and driving scenes. FISNet and Dark-YOLO target low-light images, as this paper does, and use the ExDark dataset, which contains a large number of low-light images; as in the dataset of this paper, the bicycle and automobile categories are selected from it. These results are cited because these methods are very similar to ours: they also aim to improve image quality first and thereby improve detection accuracy. According to the comparative results, the obstacle detection mAP obtained with the algorithm of this paper is 90.46%, which is better than that of the other algorithms.

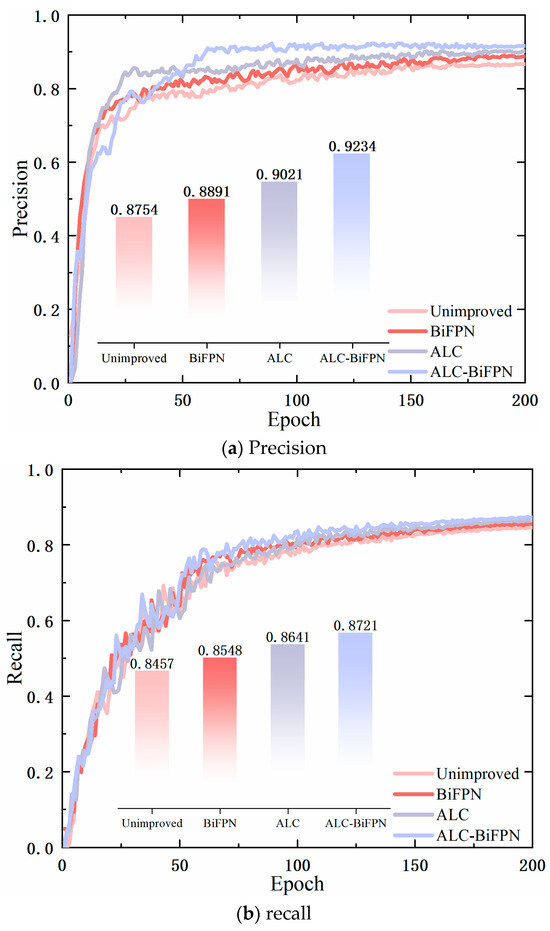

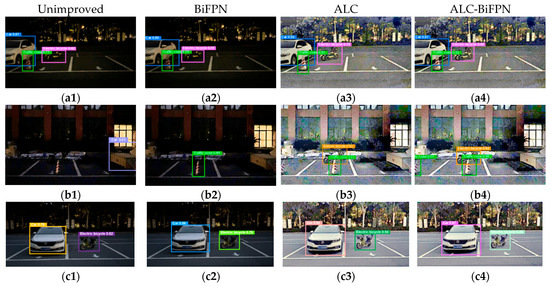

Ablation experiments are conducted to test how the proposed image adaptive enhancement method ALC and the BiFPN multi-scale feature fusion strategy affect the accuracy and detection rate of obstacle detection in low-illumination complex scenarios. Precision (P), Recall (R), and mean Average Precision (mAP) are used for quantitative analysis, and their values are listed in Table 4. YOLOv5s is the base model in all experiments. A “√” in the table indicates that the corresponding structure was added to the network.

Table 4.

Obstacle detection ablation experiment results.

The results of the ablation experiments demonstrate the following:

- (a)

- In Experiment 2, the improved multi-scale feature fusion fuses feature information more comprehensively and improves the network’s ability to capture obstacle features, raising the obstacle detection mAP by 1.25%.

- (b)

- In Experiment 3, the proposed image adaptive enhancement method adjusts the parameters of the enhancement algorithm for different low-illumination conditions and enhances the low-illumination image accordingly. Relative to the original network, precision improves by 2.67%, recall by 1.87%, and obstacle detection mAP by 2.95%.

- (c)

- In Experiment 4, both the image adaptive enhancement method and the multi-scale feature fusion strategy are integrated into the network. Precision improves by 4.8%, recall by 2.64%, and obstacle detection mAP by 4.14%. These gains are clearly higher than those of Experiment 3 (image adaptive enhancement alone) and Experiment 2 (multi-scale feature fusion alone), which demonstrates the effectiveness of the network improvements.

However, introducing the image adaptive enhancement method and the improved multi-scale feature fusion strategy slows the network down: the average detection time increases from 9.8 ms to 18.18 ms. The available parking space detection studied in this paper has two main steps, and obstacle detection is performed only if the parking space detection module detects a parking space. The time consumed by the whole model is therefore the sum of the parking space detection time and the obstacle detection time. As noted in [37], automatic parking operates under low-speed conditions, so its real-time requirements are relatively low. The results of Section 4.2 and Section 4.3 give a total time of 33.322 ms for the whole model, which is sufficient for real-time operation in the low-speed environment of automatic parking. Figure 7 shows the results of the ablation experiment.
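Because the total time is stated to be the sum of the two stages, the figures above also imply the parking space detection time and the overall frame rate:

$$t_{parking} = t_{total} - t_{obstacle} = 33.322\ \text{ms} - 18.18\ \text{ms} = 15.142\ \text{ms}$$

$$f = \frac{1000\ \text{ms/s}}{33.322\ \text{ms}} \approx 30.0\ \text{frames per second}$$

This calculation assumes that both stages run on every frame. When no parking space is found, the obstacle stage is skipped, and the per-frame time is correspondingly lower.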

Figure 7.

Results of ablation experiments.

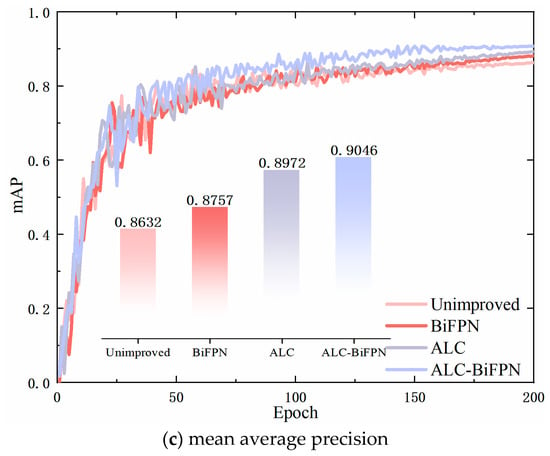

To verify the system’s ability to adapt to environmental changes, images with different illumination levels and different parking environments were collected for testing. Figure 8 displays a set of test results from the ablation experiments. In the two darker scenarios, the proposed ALC-BiFPN algorithm produces no missed or false detections, unlike the other methods, and its results are more accurate. In the brighter parking scenario, our algorithm also detects obstacles accurately. These results show that ALC-BiFPN adapts to changing environments and maintains stable performance under different environmental conditions. This reflects its robustness to environmental variability, which benefits the detection performance of the overall system.

Figure 8.

Demonstration of ablation experiment results.

5. Conclusions

In this study, a low-illumination parking space and obstacle detection algorithm based on image adaptive enhancement is proposed for low-illumination complex parking scenarios. The main contributions of this paper can be summarized as follows:

- (a)

- The method designs an image adaptive enhancement module, which consists of two parts: an adaptive parameter prediction module designed based on CNN and an image processing module fusing the LIME algorithm and the CLAHE algorithm, which enhances the image to different degrees by learning the global information of the input image.

- (b)

- The parking space and obstacle detection module of this method consists of two parts: parking space corner detection based on image gradient matching and parking scenario obstacle detection based on YOLOv5s. The obstacle detection network is improved by replacing the original FPN structure with a BiFPN structure, which enhances the feature fusion capability of the detection network in complex scenarios that contain multiple classes of detection targets.

- (c)

- The method cascades the image adaptive enhancement module with the parking space and obstacle detection module. It optimizes the prediction parameters of the image adaptive enhancement module using a weighted loss that combines the parking space corner matching similarity loss and the obstacle detection loss. This achieves adaptive enhancement and makes the enhanced images more conducive to the subsequent detection tasks.

- (d)

- For detecting parking spaces and obstacles under low-illumination conditions, the proposed method shows stronger robustness and higher detection accuracy than the other detection methods compared. Experimental results on a merged parking scenario dataset show that the algorithm achieves 95.46% recognition accuracy for parking spaces and 90.4% mean average precision for obstacles, which is better than the baseline algorithms. These results are of significance for the development of automatic parking perception technology. In future work, we plan to add sensors such as LiDAR to provide 3D spatial perception, which should improve the model’s performance in complex environments and allow it to generalize to a wider range of unstructured environments.

Author Contributions

Conceptualization, M.Z. and X.X.; methodology, M.Z. and H.T.; software, W.X.; validation, H.T.; formal analysis, X.X.; investigation, X.X.; resources, W.X. and Z.Z.; data curation, X.X.; writing—original draft preparation, X.X.; writing—review and editing, M.Z.; visualization, H.T.; supervision, M.Z.; project administration, M.Z.; funding acquisition, B.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Acknowledgments

Thanks to the teachers and students who provided help and suggestions for this study.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Khalid, M.; Wang, K.; Aslam, N.; Cao, Y.; Ahmad, N.; Khan, M.K. From smart parking towards autonomous valet parking: A survey, challenges and future works. J. Netw. Comput. Appl. 2021, 175, 102935.

- Ayalew, W.; Menebo, M.; Merga, C.; Negash, L. Optimal path planning using bidirectional rapidly-exploring random tree star-dynamic window approach (BRRT*-DWA) with adaptive Monte Carlo localization (AMCL) for mobile robot. Eng. Res. Express 2024, 6, 035212.

- Kedir, C.A.; Abdissa, C.M. PSO based linear parameter varying-model predictive control for trajectory tracking of autonomous vehicles. Eng. Res. Express 2024, 6, 035229.

- Wendemagegn, Y.A.; Asfaw, W.A.; Abdissa, C.M.; Lemma, L.N. Enhancing trajectory tracking accuracy in three-wheeled mobile robots using backstepping fuzzy sliding mode control. Eng. Res. Express 2024, 6, 045204.

- Li, G.; Yang, Y.; Qu, X.; Cao, D.; Li, K. A deep learning based image enhancement approach for autonomous driving at night. Knowl.-Based Syst. 2021, 213, 106617.

- Liu, X.; Zhang, C.; Wang, Y.; Ding, K.; Han, T.; Liu, H.; Tian, Y.; Xu, B.; Ju, M. Low Light Image Enhancement Based on Multi-Scale Network Fusion. IEEE Access 2022, 10, 127853–127862.

- Huang, C.; Yang, S.; Luo, Y.; Wang, Y.; Liu, Z. Visual Detection and Image Processing of Parking Space Based on Deep Learning. Sensors 2022, 22, 6672.

- Li, Y.; Mao, H.; Yang, W.; Guo, S.; Zhang, X. Research on Parking Space Status Recognition Method Based on Computer Vision. Sustainability 2023, 15, 107.

- Ma, S.; Jiang, Z.; Jiang, H.; Han, M.; Li, C. Parking Space and Obstacle Detection Based on a Vision Sensor and Checkerboard Grid Laser. Appl. Sci. 2020, 10, 2582.

- Mousania, Y.; Karimi, S.; Farmani, A. Optical remote sensing, brightness preserving and contrast enhancement of medical images using histogram equalization with minimum cross-entropy-Otsu algorithm. Opt. Quantum Electron. 2023, 55, 22.

- Xiang, D.; Wang, H.H.; He, D.Y.; Zhai, C.K. Research on Histogram Equalization Algorithm Based on Optimized Adaptive Quadruple Segmentation and Cropping of Underwater Image (AQSCHE). IEEE Access 2023, 11, 69356–69365.

- Yuan, Z.; Zeng, J.; Wei, Z.; Jin, L.; Zhao, S.; Liu, X.; Zhang, Y.; Zhou, G. CLAHE-Based Low-Light Image Enhancement for Robust Object Detection in Overhead Power Transmission System. IEEE Trans. Power Deliv. 2023, 38, 2240–2243.

- Oishi, S.; Fukushima, N. Retinex-Based Relighting for Night Photography. Appl. Sci. 2023, 13, 19.

- Zhou, F.; Sun, X.; Dong, J.Y.; Zhu, X.X. SurroundNet: Towards effective low-light image enhancement. Pattern Recognit. 2023, 141, 12.

- Li, J.F.; Hao, S.; Li, T.S.; Zhuo, L.; Zhang, J. RDMA: Low-light image enhancement based on retinex decomposition and multi-scale adjustment. Int. J. Mach. Learn. Cybern. 2024, 15, 1693–1709.

- Zhao, Z.J.; Xiong, B.S.; Wang, L.; Ou, Q.F.; Yu, L.; Kuang, F. RetinexDIP: A Unified Deep Framework for Low-Light Image Enhancement. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 1076–1088.

- Liu, J.Y.; Xu, D.J.; Yang, W.H.; Fan, M.H.; Huang, H.F. Benchmarking Low-Light Image Enhancement and Beyond. Int. J. Comput. Vis. 2021, 129, 1153–1184.

- Li, C.Y.; Guo, C.L.; Loy, C.C. Learning to Enhance Low-Light Image via Zero-Reference Deep Curve Estimation. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 4225–4238.

- Pan, Y.N.; Li, Y.; Jin, J. Design and Implementation of Fully Convolutional Network Algorithm in Landscape Image Processing. Wirel. Commun. Mob. Comput. 2022, 2022, 9.

- Li, X.F.; Wang, W.W.; Feng, X.C.; Li, M. Deep parametric Retinex decomposition model for low-light image enhancement. Comput. Vis. Image Underst. 2024, 241, 14.

- Shih, S.E.; Tsai, W.H. A Convenient Vision-Based System for Automatic Detection of Parking Spaces in Indoor Parking Lots Using Wide-Angle Cameras. IEEE Trans. Veh. Technol. 2014, 63, 2521–2532.

- Suhr, J.K.; Jung, H.G. Automatic Parking Space Detection and Tracking for Underground and Indoor Environments. IEEE Trans. Ind. Electron. 2016, 63, 5687–5698.

- Suhr, J.K.; Jung, H.G. Full-automatic recognition of various parking slot markings using a hierarchical tree structure. Opt. Eng. 2013, 52, 14.

- Yang, C.F.; Ju, Y.H.; Hsieh, C.Y.; Lin, C.Y.; Tsai, M.H.; Chang, H.L. iParking—A real-time parking space monitoring and guiding system. Veh. Commun. 2017, 9, 301–305.

- Ren, S.Q.; He, K.M.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Zhao, K.; Ren, X.X.; Kong, Z.Z.; Liu, M. Object detection on remote sensing images using deep learning: An improved single shot multibox detector method. J. Electron. Imaging 2019, 28, 7.

- Hussain, M. YOLO-v1 to YOLO-v8, the Rise of YOLO and Its Complementary Nature toward Digital Manufacturing and Industrial Defect Detection. Machines 2023, 11, 25.

- Huang, J.; Yang, F.; Chai, L. Multimodal Remote Sensing Image Registration Based on Adaptive Spectrum Congruency. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 14965–14981.

- Zhang, J.; Liu, T.; Yin, X.; Wang, X.; Zhang, K.; Xu, J.; Wang, D. An improved parking space recognition algorithm based on panoramic vision. Multimed. Tools Appl. 2021, 80, 18181–18209.

- Guo, X.J.; Li, Y.; Ling, H.B. LIME: Low-Light Image Enhancement via Illumination Map Estimation. IEEE Trans. Image Process. 2017, 26, 982–993.

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020.

- Tang, H.; Xu, X.; Xu, H.; Liu, S.; Ji, J.; Qiu, C.; Shen, Y. Lightweight ViT with Multiscale Feature Fusion for Driving Risk Rating Warning System. Adv. Theory Simul. 2024, 7, 2400586.

- Loh, Y.P.; Chan, C.S. Getting to know low-light images with the exclusively dark dataset. Comput. Vis. Image Underst. 2019, 178, 30–42.

- Wang, H.; Xu, Y.; He, Y.; Cai, Y.; Chen, L.; Li, Y.; Sotelo, M.A.; Li, Z. YOLOv5-Fog: A Multiobjective Visual Detection Algorithm for Fog Driving Scenes Based on Improved YOLOv5. IEEE Trans. Instrum. Meas. 2022, 71, 2515612.

- Mai, J.W.; Li, H.; Kang, Y. Low-Light Object Detection Based on Feature Interaction Structure. Comput. Eng. Appl. 2024, 60, 224–232.

- Jiang, Z.; Xiao, Y.; Zhang, S.; Zhu, L.; He, Y.; Zhai, F. Low-Illumination Object Detection Method Based on Dark-YOLO. J. Comput. Aided Des. Comput. Grap 2023, 35, 441–451.

- Zhang, L.; Huang, J.; Li, X.; Xiong, L. Vision-based parking-slot detection: A DCNN-based approach and a large-scale benchmark dataset. IEEE Trans. Image Process. 2018, 27, 5350–5364.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the World Electric Vehicle Association. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).