Supporting Elderly People by Ad Hoc Generated Mobile Applications Based on Vocal Interaction

Abstract

1. Introduction

2. Related Work


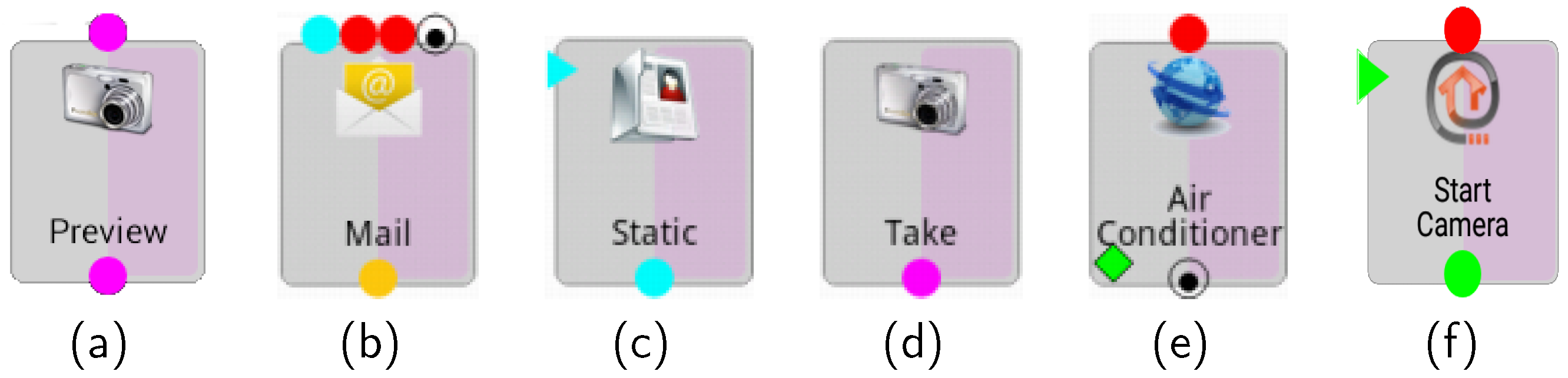

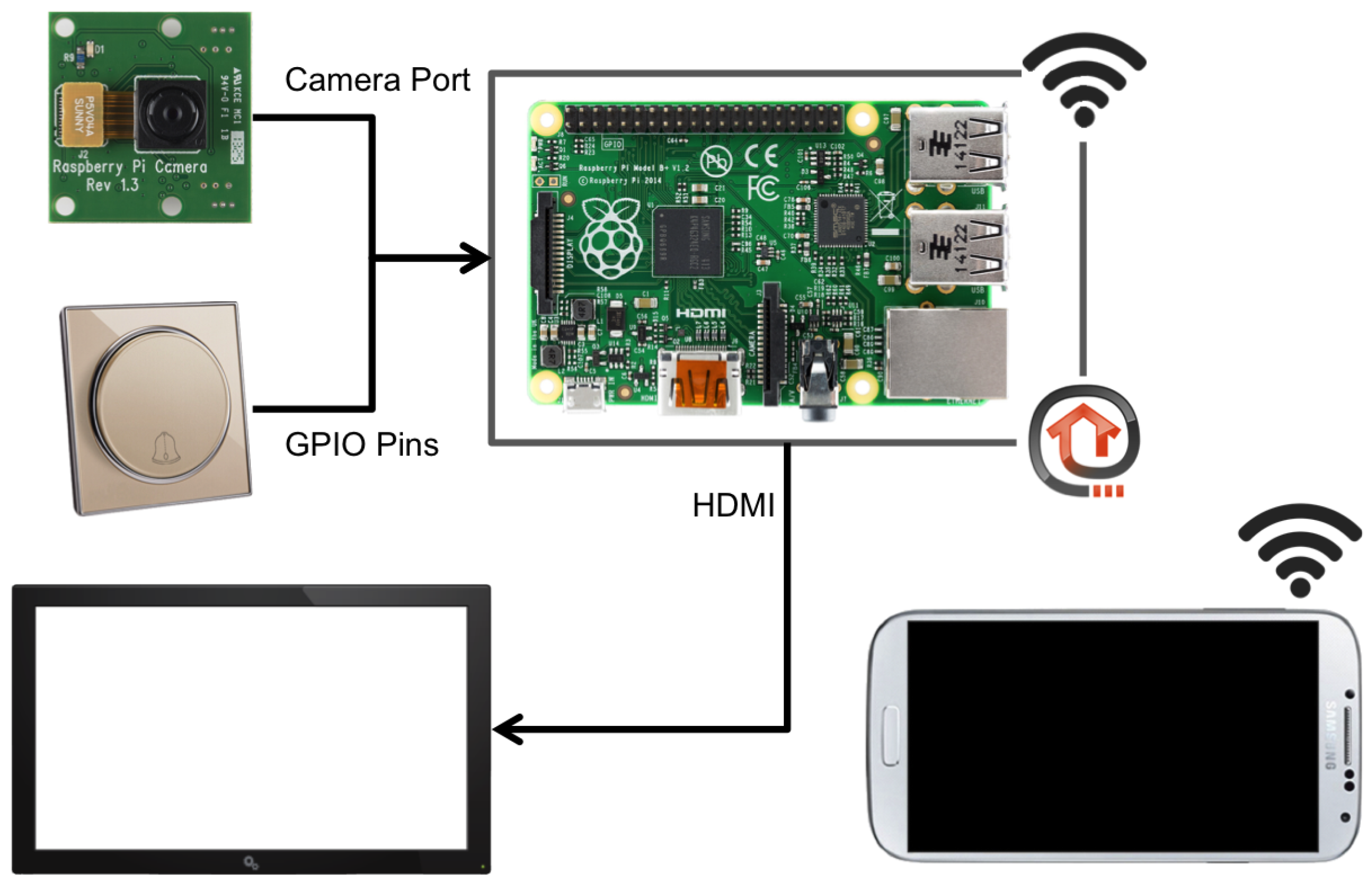

- Components. The components that a tool can use to generate apps are classified as follows: Service Components (SC), which access Web and Internet services; Sensor and Domotics Components (SDC), which handle sensor data and networks; and Native Components (NC), which exploit the functionalities available on the mobile device (e.g., phone calls, camera).


- Language. The tool uses one of the following interaction metaphors to specify applications: Visual (Vis), where the user interacts through a visual/graphical language; Template-Based (TB), where the user interacts through predefined forms; Template-Based and Textual (TBT), where only simple apps can be programmed with the template-based metaphor, while the others require textual programming.


- Target Users. Whether the tool is End-User (EU) oriented (i.e., no programming skills are required) or Developer (D) oriented (i.e., programming skills are required).


- Target Device. The final execution device on which the generated application will run: Smartphone (Sm); Personal Computer (PC).


- Vocal Interaction. The support the tool provides for vocal interaction: Text-To-Speech (TTS) and/or Speech-To-Text (STT) modules; Screen (Sc), where the application is able to speak the text present on the screen and enables the user to interact with the interface widgets.
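The classification dimensions listed above can be captured in a small data model. The following Python sketch is only an illustration: the `Tool` class and the `supports_full_vocal_interaction` helper are hypothetical names introduced here, and the two example entries are copied from the comparison table in this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tool:
    """One row of the comparison, using the abbreviations defined above."""
    name: str
    components: frozenset   # subset of {"SC", "SDC", "NC"}
    language: str           # "Vis", "TB" or "TBT"
    target_users: str       # "EU" or "D"
    target_device: str      # "Sm" or "PC"
    vocal: frozenset        # subset of {"TTS", "STT", "Sc"}


def supports_full_vocal_interaction(tool):
    """True if the tool offers TTS, STT and screen (Sc) interaction."""
    return {"TTS", "STT", "Sc"} <= tool.vocal


# Two entries taken from the comparison table of this article.
TOOLS = [
    Tool("App Inventor", frozenset({"SC", "NC"}), "Vis", "EU", "Sm",
         frozenset({"TTS", "STT"})),
    Tool("MicroApp Generator", frozenset({"SC", "SDC", "NC"}), "Vis", "EU", "Sm",
         frozenset({"TTS", "STT", "Sc"})),
]

full = [t.name for t in TOOLS if supports_full_vocal_interaction(t)]
```

Encoding each dimension as a set or a short code keeps queries such as "which tools cover all three vocal interaction modes" to a single subset test.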

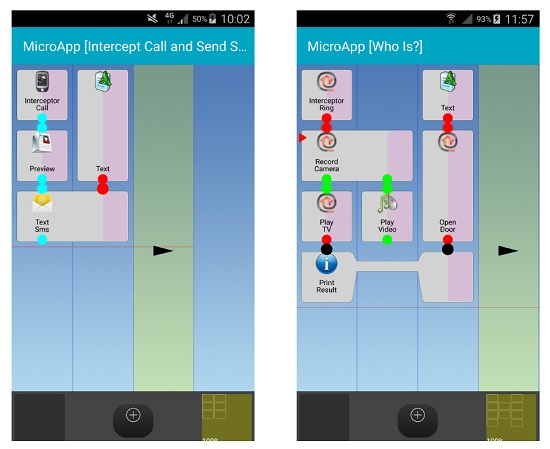

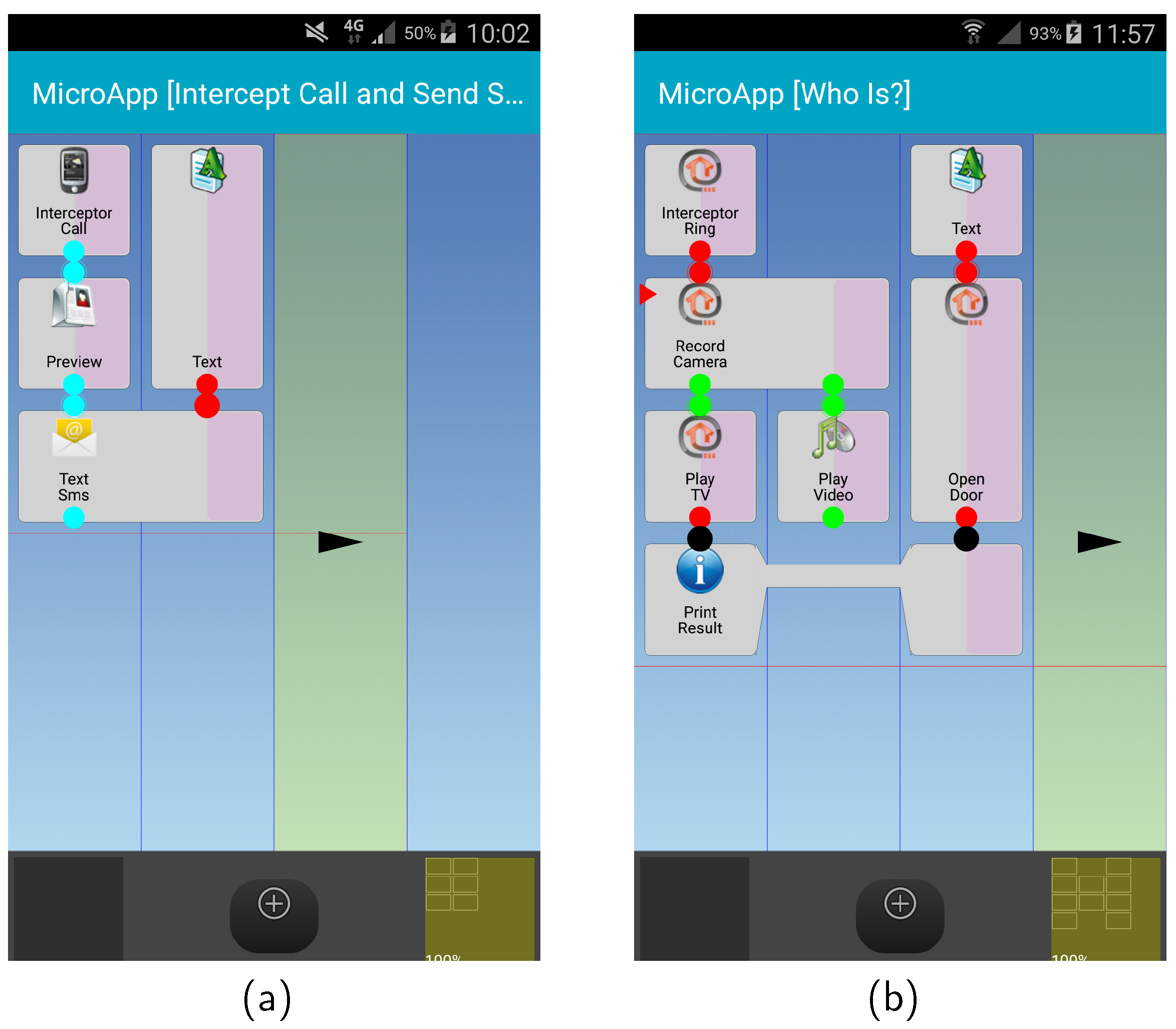

3. The MicroApp Generator

4. Generating Apps with Vocal Interaction

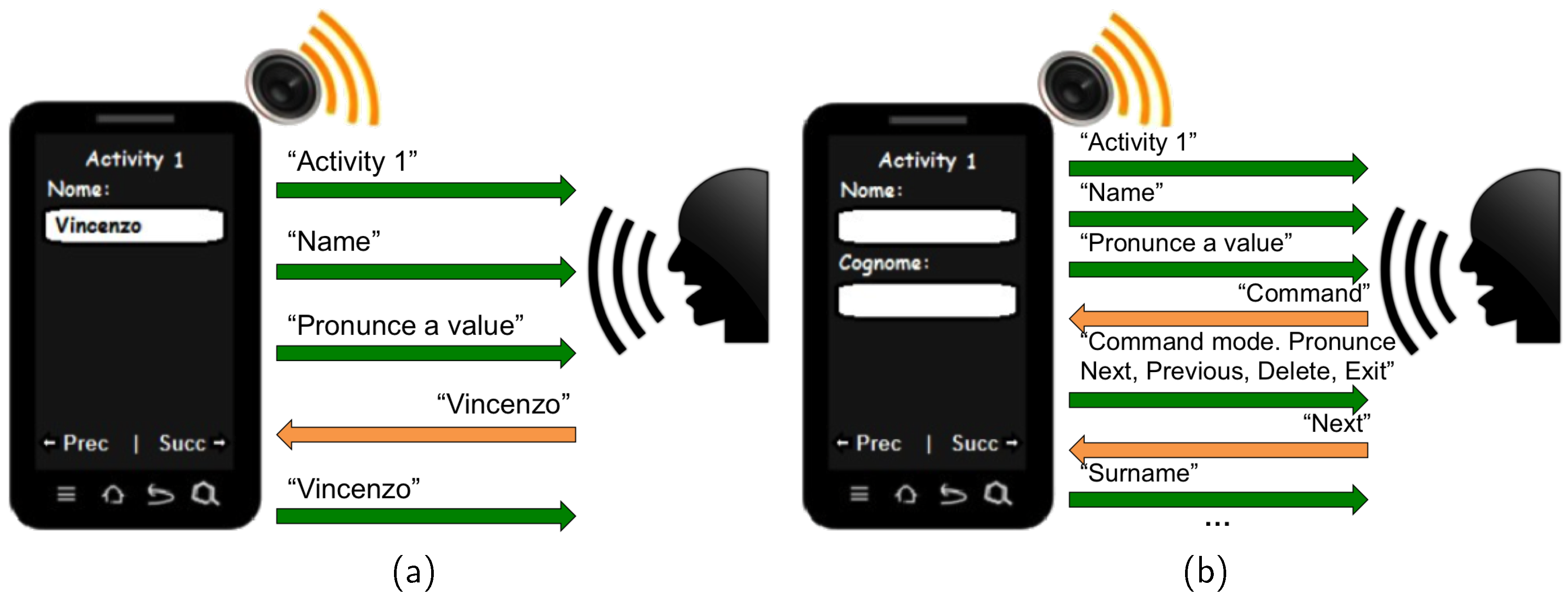

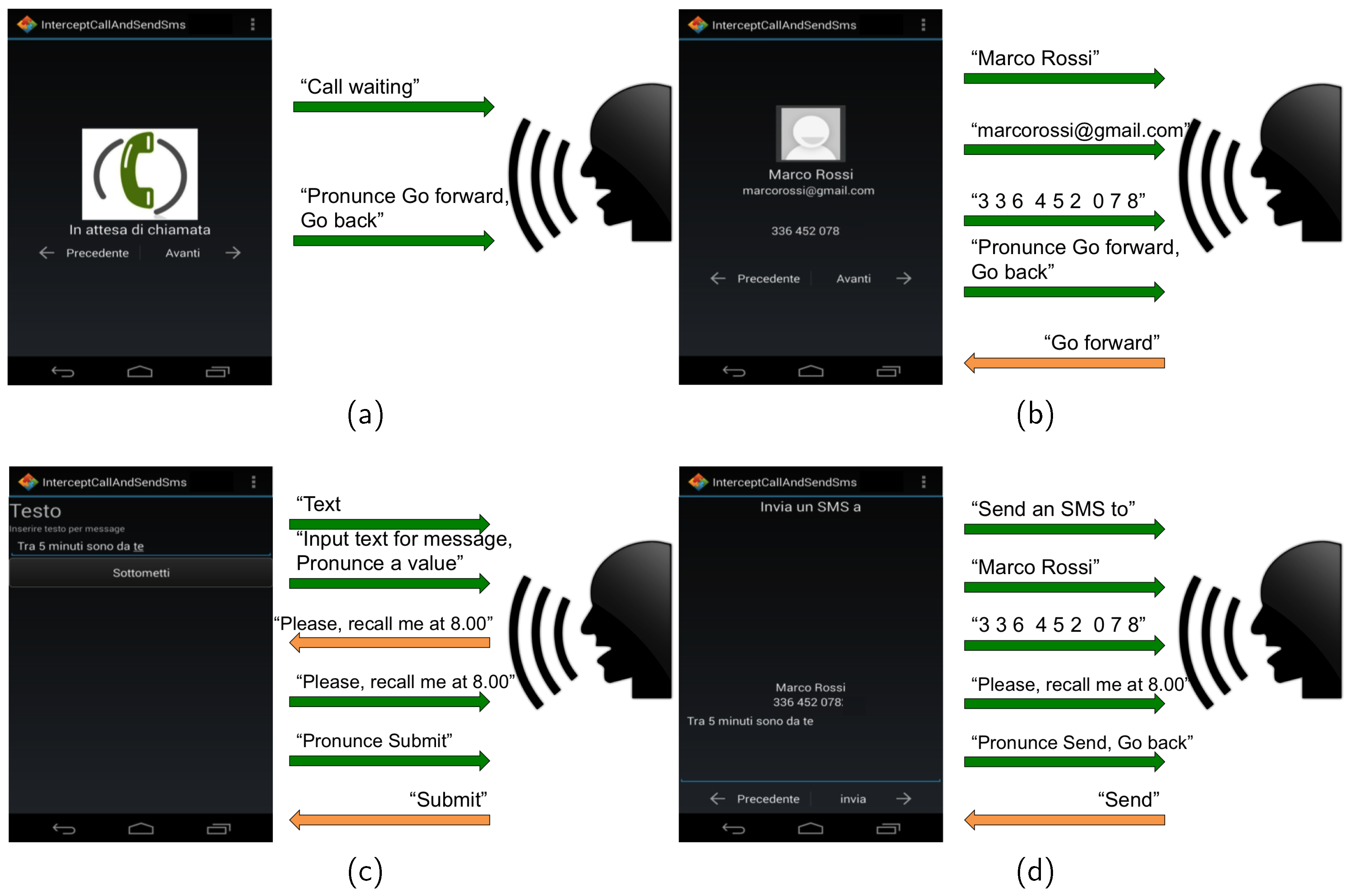

4.1. Interaction Design

- Vocal inputs, from the user. They correspond to the user interface events that the user needs to trigger, such as pressing the “Next” button;

- Vocal outputs, from the MicroApp. They correspond to the description of the application interface, such as the availability of the “Next” button to be pressed or the possibility of inserting textual content into a text field with a given label.
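As a rough illustration of the two kinds of vocal messages above, the Python sketch below builds the sentence that would be read for a widget and checks whether an utterance corresponds to pressing a button. The function names and the exact wording of the spoken sentences are assumptions made for this example; they are not taken from the MicroApp implementation.

```python
def describe_widget(kind, label):
    """Vocal output: the sentence read to the user for one widget.

    `kind` uses Android view names (e.g., "Button", "EditText", "TextView");
    the phrasing of each sentence is a hypothetical choice.
    """
    if kind == "Button":
        return f"Button {label} is available. Say {label} to press it."
    if kind == "EditText":
        return f"Text field {label}. Say the text to insert."
    # TextView and any other widget: only the text is read.
    return label


def is_press_command(utterance, button_label):
    """Vocal input: True if the utterance names the given button."""
    return utterance.strip().lower() == button_label.strip().lower()


prompt = describe_widget("Button", "Next")
pressed = is_press_command(" next ", "Next")
```

Here a vocal output is just a string handed to a text-to-speech engine, and a vocal input is the recognized text compared, case-insensitively, with the label of the widget.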


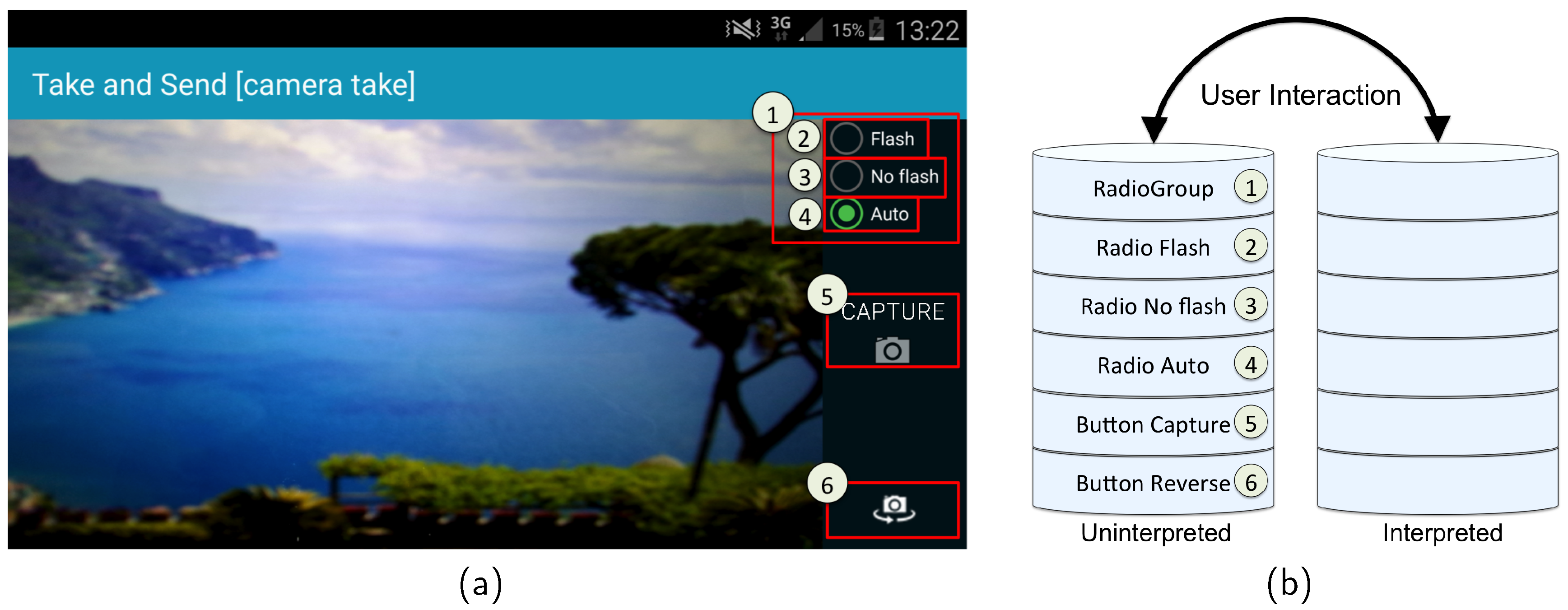

- Screen widget acquisition. To enable the interaction between the user and the MicroApp, all the widgets composing the screen must be acquired. The widgets are inserted into two stacks: the first is the stack of uninterpreted widgets, which have not yet been read to the user; the second is the stack of widgets that have already been read. Each time a widget is read, it is moved from the first stack to the second. In the case of a command interaction, the user can go back to previously read widgets, which are accordingly moved back into the stack of uninterpreted widgets. An example of the ordered acquisition of the Camera.Take service is shown in Figure 4, where widgets are ordered from top to bottom and from left to right.


- Screen reading. The vocal interface takes the stack of uninterpreted widgets and reads them sequentially, including any text they contain. When a widget requires user interaction, the interface tells the user which action to perform (e.g., insert text into a text field) and starts the voice recognition phase.


- Voice recognition. Once the required interaction has been communicated, the vocal interface subsystem waits for the vocal input. It decides when to stop recording by evaluating both the time during which the user does not speak and a fixed maximum waiting time.


- Command detection. The user pronounces one of the available commands. Among them, commands for navigating the widgets sequentially are always available: going to the next or previous widget, or restarting the reading of the screen from the beginning. When the user input is not recognized, the system asks the user to repeat it.
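The two-stack bookkeeping and the sequential navigation commands described in the steps above can be sketched as follows. This is a minimal Python illustration under stated assumptions: the `ScreenReader` class, its method names, and the command words "next", "previous" and "restart" are hypothetical, and widgets are represented by plain strings rather than Android views.

```python
class ScreenReader:
    """Keeps the unread and the already-read widgets in two stacks."""

    def __init__(self, widgets):
        # `widgets` is given in reading order (top to bottom, left to right).
        # The first widget to read must be on top, so push in reverse order.
        self.unread = list(reversed(widgets))
        self.read = []

    def read_next(self):
        """Read the next widget: move it from the unread to the read stack."""
        if not self.unread:
            return None
        widget = self.unread.pop()
        self.read.append(widget)
        return widget

    def read_previous(self):
        """Go back one widget and read it again."""
        # Move the current widget and the one before it back to the
        # unread stack, then read the top of that stack.
        for _ in range(2):
            if self.read:
                self.unread.append(self.read.pop())
        return self.read_next()

    def restart(self):
        """Start reading the screen again from the beginning."""
        while self.read:
            self.unread.append(self.read.pop())
        return self.read_next()

    def handle(self, utterance):
        """Return (recognized, widget) for a spoken navigation command."""
        actions = {
            "next": self.read_next,
            "previous": self.read_previous,
            "restart": self.restart,
        }
        action = actions.get(utterance.strip().lower())
        if action is None:
            # Not recognized: the caller should ask the user to repeat.
            return (False, None)
        return (True, action())


reader = ScreenReader(["title", "name field", "Next button"])
first = reader.read_next()
second = reader.read_next()
back = reader.read_previous()
```

Because a widget only ever moves between the tops of the two stacks, going backward and restarting preserve the original reading order without re-acquiring the screen.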

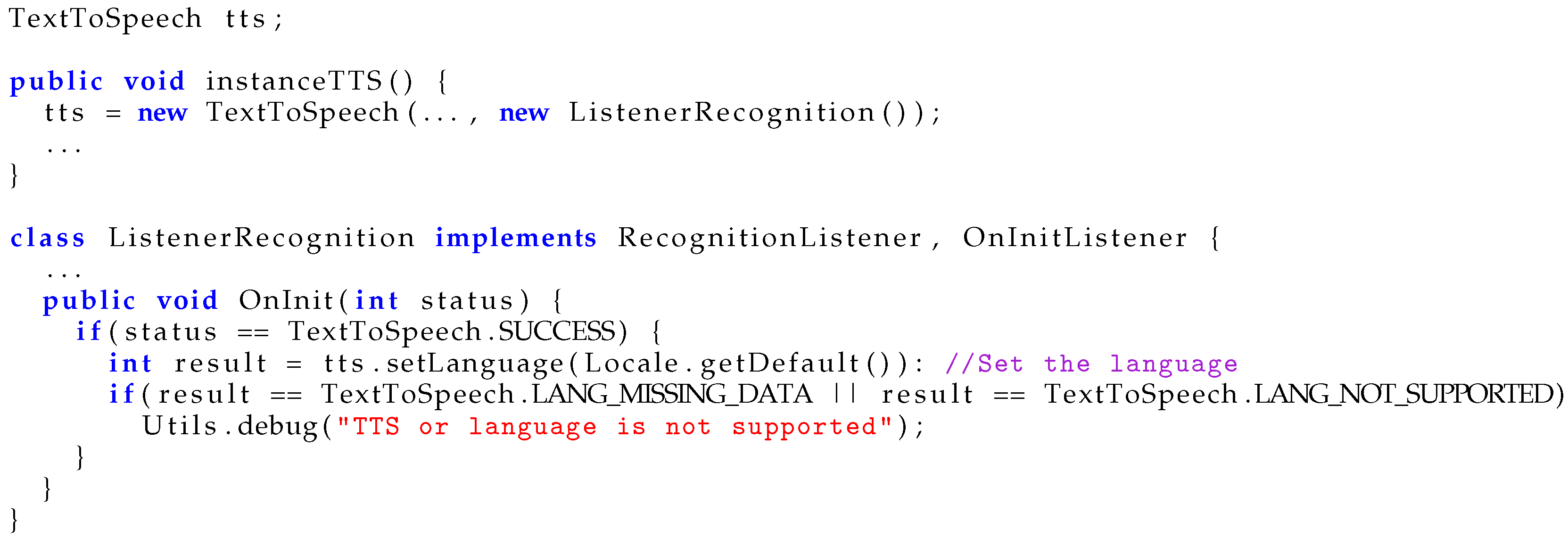
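The stop condition of the voice recognition step, which combines the time the user stays silent with a fixed maximum waiting time, can be sketched as below. The function name, the frame-based representation of the audio, and all threshold values are assumptions chosen for illustration; the article does not state the actual values used.

```python
def recording_stop_time(speech_flags, frame_ms=100,
                        max_silence_ms=1500, max_wait_ms=10000):
    """Return the elapsed time (ms) at which recording is interrupted.

    `speech_flags` holds one boolean per audio frame: True if voice was
    detected in that frame. Recording stops when the user has been silent
    for `max_silence_ms` or when `max_wait_ms` has elapsed overall.
    """
    elapsed = 0
    silence = 0
    for is_speech in speech_flags:
        elapsed += frame_ms
        # Any detected speech resets the silence counter.
        silence = 0 if is_speech else silence + frame_ms
        if silence >= max_silence_ms or elapsed >= max_wait_ms:
            return elapsed
    # Input ended before either limit was reached.
    return elapsed


# 0.5 s of speech followed by silence: stops 1.5 s after the last speech.
short_answer = recording_stop_time([True] * 5 + [False] * 20)
# Continuous speech: stops at the maximum waiting time.
long_answer = recording_stop_time([True] * 200)
```

In this sketch, silence before the user starts speaking also counts toward the silence limit; a real implementation might treat the initial wait differently.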

4.2. Implementation

4.3. The SpeakAndSpeech Class

- null: the view provides only text;

- ListenerRecognitionEdit: the view is a text field requiring the user to insert text;

- ListenerRecognitionRadioGroup: the view is a radio group containing a set of radio buttons requiring the user to select one of them;

- ListenerRecognitionButton: the view is a button that the user is required to press.
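The association between views and listeners listed above can be pictured as a simple lookup. The Python sketch below is hypothetical: the `listener_for` function is introduced here for illustration, the keys assume the Android view class names `EditText`, `RadioGroup` and `Button`, and the listeners are represented only by their names. Python's `None` plays the role of `null`.

```python
def listener_for(view_kind):
    """Return the listener name for a view, or None if it only provides text."""
    listeners = {
        "EditText": "ListenerRecognitionEdit",          # text to insert
        "RadioGroup": "ListenerRecognitionRadioGroup",  # one option to select
        "Button": "ListenerRecognitionButton",          # button to activate
    }
    # Views without an entry (e.g., TextView) need no vocal input.
    return listeners.get(view_kind)


text_only = listener_for("TextView")
edit = listener_for("EditText")
```

A view with no listener is simply read aloud, while any other view triggers the voice recognition phase after being read.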

4.4. An Example of Interaction

5. Evaluation

5.1. Context and Procedure

5.2. Results

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Tool | Components | Language | Target Users | Target Device | Vocal Interaction |
|---|---|---|---|---|---|
| App Inventor | SC, NC | Vis | EU | Sm | TTS, STT |
| Microsoft TouchDevelop | SC, NC | TBT | D | Sm | TTS, STT |
| IFTTT | SC, SDC, NC | Vis | EU | Sm | TTS |
| Atooma | SC, NC | TB | EU | Sm | TTS |
| Vocal User Interface | - | - | EU | PC | Sc |
| MicroApp Generator | SC, SDC, NC | Vis | EU | Sm | TTS, STT, Sc |

| View Name | Description | Vocal Output | Vocal Input |
|---|---|---|---|
| TextView | Displays text. | Y | N |
| EditText | Displays text and allows the user to modify it. | Y | Y (not always) |
| Button | Button widget. It can be pressed to perform an action. | Y | Y |
| RadioButton | Two-state button. It can be selected or unselected. | Y | Y (not always) |
| RadioGroup | Multiple-exclusion scope for a set of radio buttons. | Y | Y |
| CheckBox | Two-state button. It can be selected or unselected. | Y | Y (not always) |
| Spinner | Displays one child at a time and lets the user pick among them. | Y | Y |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Francese, R.; Risi, M. Supporting Elderly People by Ad Hoc Generated Mobile Applications Based on Vocal Interaction. Future Internet 2016, 8, 42. https://doi.org/10.3390/fi8030042
