Windows Based Data Sets for Evaluation of Robustness of Host Based Intrusion Detection Systems (IDS) to Zero-Day and Stealth Attacks

Abstract

1. Introduction

- (a) Evaluating the complexity of the data sets through the frequency distribution method (see Section 5.1), which identifies the frequency distributions of audit data (i.e., DLL calls) in normal and attack data. Its purpose is to identify the natural similarity between attack and normal data, which is likely to assist in the design of an HIDS decision engine.

- (b)

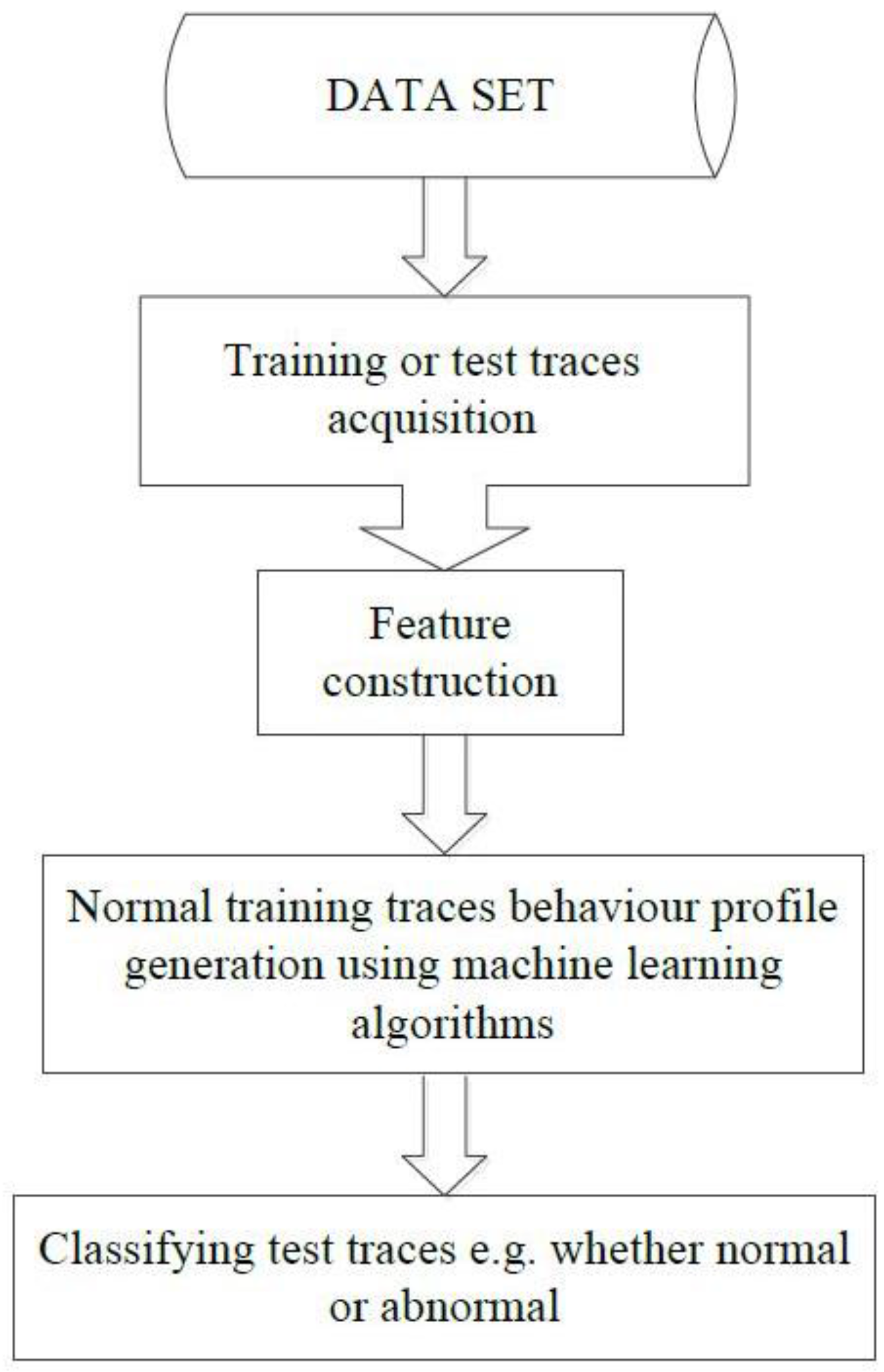

- Evaluating the complexity of the data sets through anomaly detection frame work by the use of several machine learning algorithms integrated with the novel feature selection scheme (see Section 5.2).
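The frequency-distribution comparison described in item (a) can be illustrated with a minimal sketch. It assumes each trace is a list of DLL names and uses cosine similarity as the comparison measure; both the toy traces and the choice of cosine similarity are assumptions made for illustration, not the method or data of the paper.

```python
from collections import Counter
from math import sqrt


def frequency_distribution(traces):
    """Return the relative frequency of each DLL call across a set of traces."""
    counts = Counter(call for trace in traces for call in trace)
    total = sum(counts.values())
    return {call: n / total for call, n in counts.items()} if total else {}


def cosine_similarity(p, q):
    """Cosine similarity between two sparse frequency distributions."""
    dot = sum(value * q.get(call, 0.0) for call, value in p.items())
    norm_p = sqrt(sum(v * v for v in p.values()))
    norm_q = sqrt(sum(v * v for v in q.values()))
    if norm_p == 0 or norm_q == 0:
        return 0.0
    return dot / (norm_p * norm_q)


# Hypothetical toy traces (assumption); real traces are far longer.
normal_traces = [
    ["kernel32.dll", "ntdll.dll", "user32.dll"],
    ["kernel32.dll", "ntdll.dll", "ntdll.dll"],
]
attack_traces = [
    ["kernel32.dll", "ntdll.dll", "ws2_32.dll"],
    ["ntdll.dll", "ws2_32.dll", "ws2_32.dll"],
]

normal_dist = frequency_distribution(normal_traces)
attack_dist = frequency_distribution(attack_traces)
similarity = cosine_similarity(normal_dist, attack_dist)
print(f"similarity between normal and attack distributions: {similarity:.3f}")
```

A similarity close to 1 would suggest that attack traces are hard to separate from normal traces by call frequency alone, which is the kind of natural similarity this analysis is meant to expose.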

2. ADFA-WD

3. ADFA-WD: SAA

4. Structures, Formats and Utilization of Data Sets

5. Data Analysis

5.1. Complexity Analysis through Frequency Distribution Method

5.2. Complexity Analysis through Anomaly Detection Framework

| Algorithm 1 Building Profile and Classification of Traces |
|---|
| Require: FM && FM |
| 1: I ← [(FM, label)] |
| 2: Build_Profile{I} && {SVM \|\| KNN \|\| ANN \|\| ELM \|\| NB} |
| 3: for i = 1 to N do |
| 4: Learn[Build_Profile] ← Trace(FM) is normal or abnormal |
| 5: end for |
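The steps of Algorithm 1 can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the two FM inputs are read as a training and a test feature matrix, KNN stands in for the list of classifiers, and the names `build_profile`, `classify_trace`, and the toy feature vectors are hypothetical rather than taken from the paper.

```python
from collections import Counter
from math import dist


def build_profile(feature_matrix, labels):
    """Steps 1-2: pair each feature vector with its label to form the profile I."""
    if len(feature_matrix) != len(labels):
        raise ValueError("feature_matrix and labels must have the same length")
    return list(zip(feature_matrix, labels))


def classify_trace(profile, features, k=3):
    """Step 4: label one trace by majority vote among its k nearest profile entries."""
    nearest = sorted(profile, key=lambda item: dist(item[0], features))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical 2-D feature vectors (assumption), e.g. frequencies of two DLL calls.
train_fm = [(0.9, 0.1), (0.8, 0.2), (0.85, 0.15),
            (0.2, 0.8), (0.1, 0.9), (0.15, 0.85)]
train_labels = ["normal"] * 3 + ["abnormal"] * 3
test_fm = [(0.88, 0.12), (0.12, 0.88)]

profile = build_profile(train_fm, train_labels)
# Steps 3-5: loop over the N test traces and classify each one.
predictions = [classify_trace(profile, features) for features in test_fm]
print(predictions)  # ['normal', 'abnormal']
```

Swapping KNN for SVM, ANN, ELM, or NB would change only `classify_trace`; the profile-building step and the loop over traces stay the same.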

6. Conclusion

Author Contributions

Conflicts of Interest

| Data Sets | Normal Training | Normal Validation | Attack |
|---|---|---|---|
| ADFA-WD | 356 traces | 1828 traces | 5773 traces |
| ADFA-WD:SAA | same as above | same as above | 863 traces |

| Algorithms | ADFA-WD DR% | ADFA-WD FAR% | ADFA-WD Processing Time | ADFA-WD:SAA DR% | ADFA-WD:SAA FAR% | ADFA-WD:SAA Processing Time |
|---|---|---|---|---|---|---|
| SVM | 64 | 13 | 55 | 61 | 18 | 52 |
| KNN | 59 | 16 | 12 | 53 | 23 | 12 |
| ANN | 48 | 10 | 32 | 42 | 12 | 34 |
| ELM | 69.3 | 15 | 72 | 65 | 21 | 72 |
| NB | 72 | 12 | 55 | 68 | 14 | 48 |
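The DR% and FAR% columns are not defined in this section. Assuming the conventional definitions (detection rate as the share of attack traces flagged as abnormal, false alarm rate as the share of normal traces flagged as abnormal), they could be computed as in the sketch below. The counts are hypothetical and are not taken from the experiments reported above.

```python
def detection_rate(true_positives, false_negatives):
    """DR%: attack traces flagged as abnormal, as a percentage of all attack traces."""
    total_attacks = true_positives + false_negatives
    return 100.0 * true_positives / total_attacks if total_attacks else 0.0


def false_alarm_rate(false_positives, true_negatives):
    """FAR%: normal traces flagged as abnormal, as a percentage of all normal traces."""
    total_normal = false_positives + true_negatives
    return 100.0 * false_positives / total_normal if total_normal else 0.0


# Hypothetical confusion counts (assumption): 60 attack traces, 50 normal traces.
dr = detection_rate(true_positives=45, false_negatives=15)
far = false_alarm_rate(false_positives=5, true_negatives=45)
print(f"DR = {dr:.1f}%, FAR = {far:.1f}%")  # DR = 75.0%, FAR = 10.0%
```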

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Haider, W.; Creech, G.; Xie, Y.; Hu, J. Windows Based Data Sets for Evaluation of Robustness of Host Based Intrusion Detection Systems (IDS) to Zero-Day and Stealth Attacks. Future Internet 2016, 8, 29. https://doi.org/10.3390/fi8030029