1. Introduction

Mobile learning (m-learning) and the learning theories for eLearning, web-based learning and mobile learning have been widely discussed and researched [1,2,3,4,5,6]. The first research pilots on mobile learning were based on transferring electronic content to mobile phones. It was soon realized that mobile learning should not just copy the “old” practices of eLearning, but that the strengths of the mobile device should be harnessed in other ways for learning purposes. With the technology developing at a rapid pace, mobile phones were equipped with digital cameras. The mobile phone then became a tool for creating digital recordings of learning activities, for example, on field trips [7].

The next innovations in mobile devices are sensors [8]. Many mobile phones already have a GPS (Global Positioning System) feature that can be used to add location information for mobile applications. Some devices have motion sensors that monitor the acceleration and movements of the device in 3D space. It is also possible to use a heart rate monitor with a mobile phone for monitoring physical exercise. In addition, mobile phones can observe ambient light or sound levels in their surroundings.

In general, a sensor is a small device that observes its environment and reports back to a remote base station [9]. The remote base station takes care of the manipulation of the sensor data. Sometimes, a mobile device (such as a phone or a tablet device) acts both as a sensor platform and a base station. The sensor data is then processed locally. However, the mobile device can also send the sensor data, for example, to a cloud service.

By their nature, mobile phones have good connectivity, and in addition to voice, there can be Internet data traffic between the phone and a remote service. As such, mobile phones with sensors form ad hoc sensor networks [8]. In this article, a sensor network is defined as a group of sensors that observe their environment and send data to a server, where it is processed and combined with other data. It should be noted that this definition differs from what is commonly defined as a Wireless Sensor Network, which is a fully autonomous self-configuring ad hoc network [10].

The data collected from the surroundings of the device can be used to determine something about the context where the device and, hence, the user is. The context information can then be used in personalizing the user experience. For example, there are already applications that can alert users to restaurants or stores nearby based on the user’s location. Could the context information and sensor data also be used in creating new kinds of mobile learning applications?

Sharples [11] argues that “the main barriers to developing these new modes of mobile learning are not technical, but social” (p. 4). According to Sharples [11], we need a better understanding of context, of learning outside the classroom and of the role of new mobile technologies in the new learning process. In this article, contexts, technology-mediated experiences and shared felt experiences will be used as building blocks for sensor-based mobile learning environments. It is claimed that in future mobile learning environments, learning will be based on sensor data and collective experiences. This kind of learning will be described as learning experiences augmented with collective sensor data. Finally, the article reflects on examples of learning experiences augmented with collective sensor data and on future skills, i.e., the skills that educational policy makers in many countries think will be needed in the information society [12].

2. Context in Mobile Learning

Lonsdale, Baber, Sharples and Arvanitis [13] have suggested that contextual information should be used in mobile learning to deliver the right content and services to users. This could help to overcome the user interface (UI) and bandwidth-related limitations of mobile devices. A context in Lonsdale et al. [13] is understood as a combination of an awareness of current technical capabilities and limitations and the needs of learners in the learning situation. For example, based on information about the learner’s device, the learning environment could adapt the delivered content to fit the learner’s smaller screen size.

The context can be understood using a more holistic viewpoint. It is not only a technological set of capabilities, but also a combination of personal, technological and social variables. The concept of context is also related to the concepts of being adaptive, pervasive and ubiquitous. For example, Syvänen, Beale, Sharples, Ahonen and Lonsdale [14] presented their experiences of developing an adaptive and context-aware mobile learning system that they also describe as a pervasive learning environment. They define a pervasive learning environment to be a single entity formed by the overlapping of “mental (e.g., needs, preferences, prior knowledge), physical (e.g., objects, other learners close by) and virtual (e.g., content accessible with mobile devices, artefacts) contexts” (p. 2).

Sharples et al. [5] explain that computer technology and learning are both ubiquitous. The concept of mobile learning is closely related to ubiquitous learning and context-aware ubiquitous learning. A context-aware ubiquitous learning system integrates authentic learning environments and digital (virtual) learning environments and, as a result, enables the learning system to interact more actively with the learners. This is possible because of current mobile devices and sensors [15]. Learning in a workplace or outside the classroom on a field trip can be described as a ubiquitous learning experience. An example of a ubiquitous and context-sensitive learning service is one that supports a technician repairing copying machines. She could automatically receive the correct maintenance manuals on her mobile device based on the location where she is doing her maintenance work.

Yau and Joy [16] have presented three different types of context-aware mobile learning applications: location-dependent, location-independent and situated learning. They also present a theoretical framework for mobile and context-aware adaptive learning. In the framework, they use contexts, such as the learner’s schedule, learning styles, knowledge level, concentration level and frequency of interruption. In this article, the idea is to define the context in relation to context data provided by sensors available in mobile devices. It is claimed that the contexts that support sensor-based learning environments are the local, collaborative and collective contexts. Compared to Yau and Joy [16], these contexts can support location-dependent, location-independent or situated learning. The contexts described in this paper can be seen as a pervasive learning environment (Syvänen et al. [14]) or as ubiquitous (Sharples et al. [5]). The main difference is that the concept of context is discussed here together with the concepts of technology-mediated experiences, shared felt experiences and experiential learning theory in order to describe sensor-based mobile learning environments.

3. Experience, Technology and Learning

In this article, technology-mediated experiences and shared felt experiences will be used as the building blocks of learning environments and extensions to Kolb’s concept of experiential learning. In addition, learning experiences augmented with collective sensor data will be defined.

An experience can be characterized by two principles, which are continuity and interaction [17]. Continuity describes our experiences as a continuum in which each experience will influence our future experiences. It can be said that the continuum of experiences defines a mental context.

Interaction refers to the current situation (physical or virtual contexts) and its influence on one's experience. In Dewey’s terminology, interaction can also modify the context. Each experience affects the human mind and, as such, a continuum of related experiences can lead to learning. Thinking of technological environments, it can be said that technologies can mediate and enrich our experiences. Expanding the meaning of Dewey’s concept of continuum, technology-mediated experiences also contribute to the continuum of the experiences and to learning.

An important characteristic of technology-mediated experiences is sharing in our social media rich Internet. According to Multisilta, sharing an experience with a family, friends or communities using technology is called a

shared felt experience [

18]. In general, a

felt experience is one’s interpretation of the experience [

19]. Sharing the felt experience means that the experience is described by telling a story or showing a picture or a video from the event where the experience happened. If the sharing involves technology, it can also be described as a technology-mediated experience (

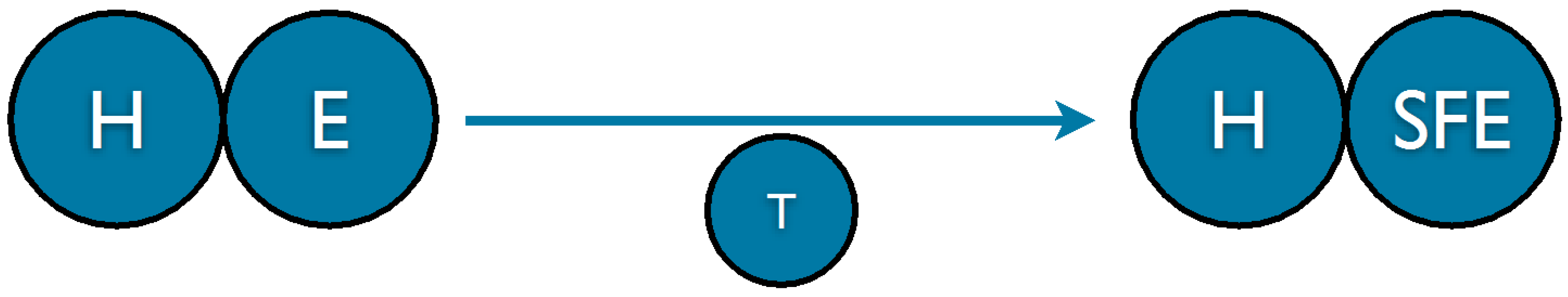

Figure 1). In addition, while technology mediates our experiences, the use of the technology is an experience in itself. In this sense, the technology-mediated experience depends on both the experience and the technology used to mediate it to others.

Figure 1.

Technology mediated experience, shared felt experience, SFE (H = human, E = experience, T = technology).

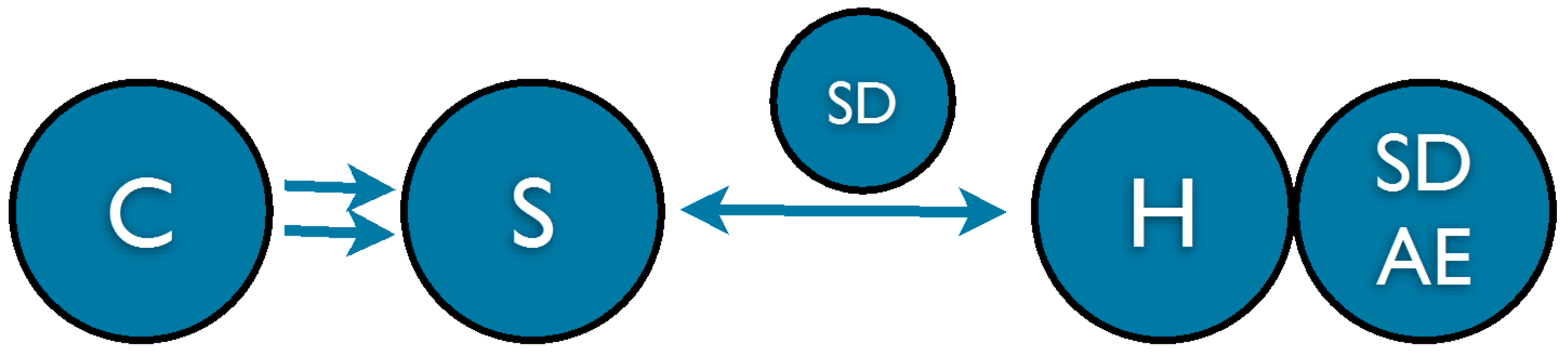

The data produced by a sensor can add meaning to the experience. For example, if a person is running, she may realize her heart rate goes up and down, depending on her pace. However, when she is using a heart rate monitor, she can immediately check that she is running at the pace that best supports her training. This kind of experience is defined as a sensor data augmented experience (Figure 2).

Figure 2.

Sensor data augmented experience, SDAE (C = context, S = sensor, SD = sensor data, H = human).

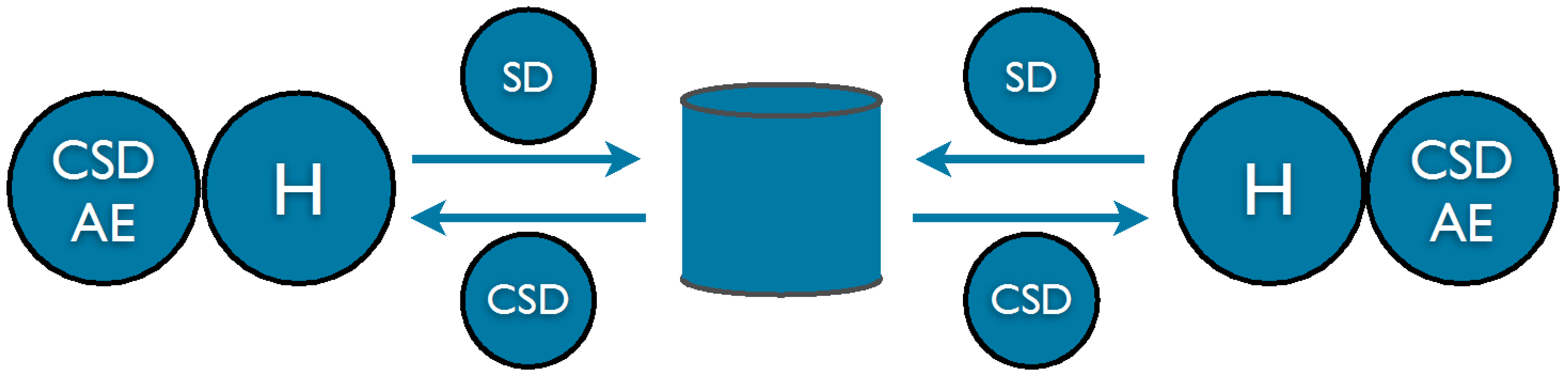

In some cases, the sensor data may be much more meaningful when it is based on several sensors. For example, the sensors of a mobile device could be collecting data on the shaking of the device. If several hundreds or thousands of mobile devices in the same area suddenly start reporting strong shaking, this might be evidence of an earthquake. This kind of experience is defined as a collective sensor data augmented experience (Figure 3). The experience is collective, because several people are experiencing it at the same time. The experience is augmented in the sense that users who have an earthquake warning service active on their mobile devices could see a summary of the sensor data (earthquake magnitude and the area) on their phones, for example, on a map.
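The earthquake example can be sketched in a few lines of code. The following is only an illustration under stated assumptions, not a description of any existing warning service: the `ShakeReport` fields, the area labels, the intensity scale and all thresholds are hypothetical. The point is that a single report means little, whereas many distinct devices reporting strong shaking in the same area within a short time window form a collective signal.

```python
from dataclasses import dataclass


@dataclass
class ShakeReport:
    device_id: str
    area: str         # hypothetical coarse area label, e.g. a map grid cell
    timestamp: float  # seconds on a clock shared by all devices (assumed)
    intensity: float  # hypothetical shaking magnitude from the accelerometer


def areas_with_collective_shaking(reports, window_start, window_length=10.0,
                                  intensity_threshold=2.0, min_devices=100):
    """Return the areas where at least `min_devices` distinct devices
    reported strong shaking inside the time window."""
    window_end = window_start + window_length
    devices_by_area = {}
    for report in reports:
        in_window = window_start <= report.timestamp < window_end
        if in_window and report.intensity >= intensity_threshold:
            # A set counts each device once, however often it reports.
            devices_by_area.setdefault(report.area, set()).add(report.device_id)
    return sorted(area for area, devices in devices_by_area.items()
                  if len(devices) >= min_devices)
```

Counting distinct devices rather than raw reports is a deliberate choice in this sketch, so that one phone being shaken repeatedly cannot trigger the collective signal on its own.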

Figure 3.

Collective sensor data augmented experience, CSDAE (H = human, SD = sensor data, CSD = collective sensor data).

From the point of view of learning, an important role of the technology is to mediate learning experiences in a learning community [18]. Based on Kolb [20], the experiential learning theory defines learning as “the process whereby knowledge is created through the transformation of experience. Knowledge results from the combination of grasping and transforming experience”. The experiential learning theory presents the learning process as a cycle. The process can be divided into four stages: concrete experience (CE), reflective observation (RO), abstract conceptualization (AC) and active experimentation (AE). The learning process can begin from any stage.

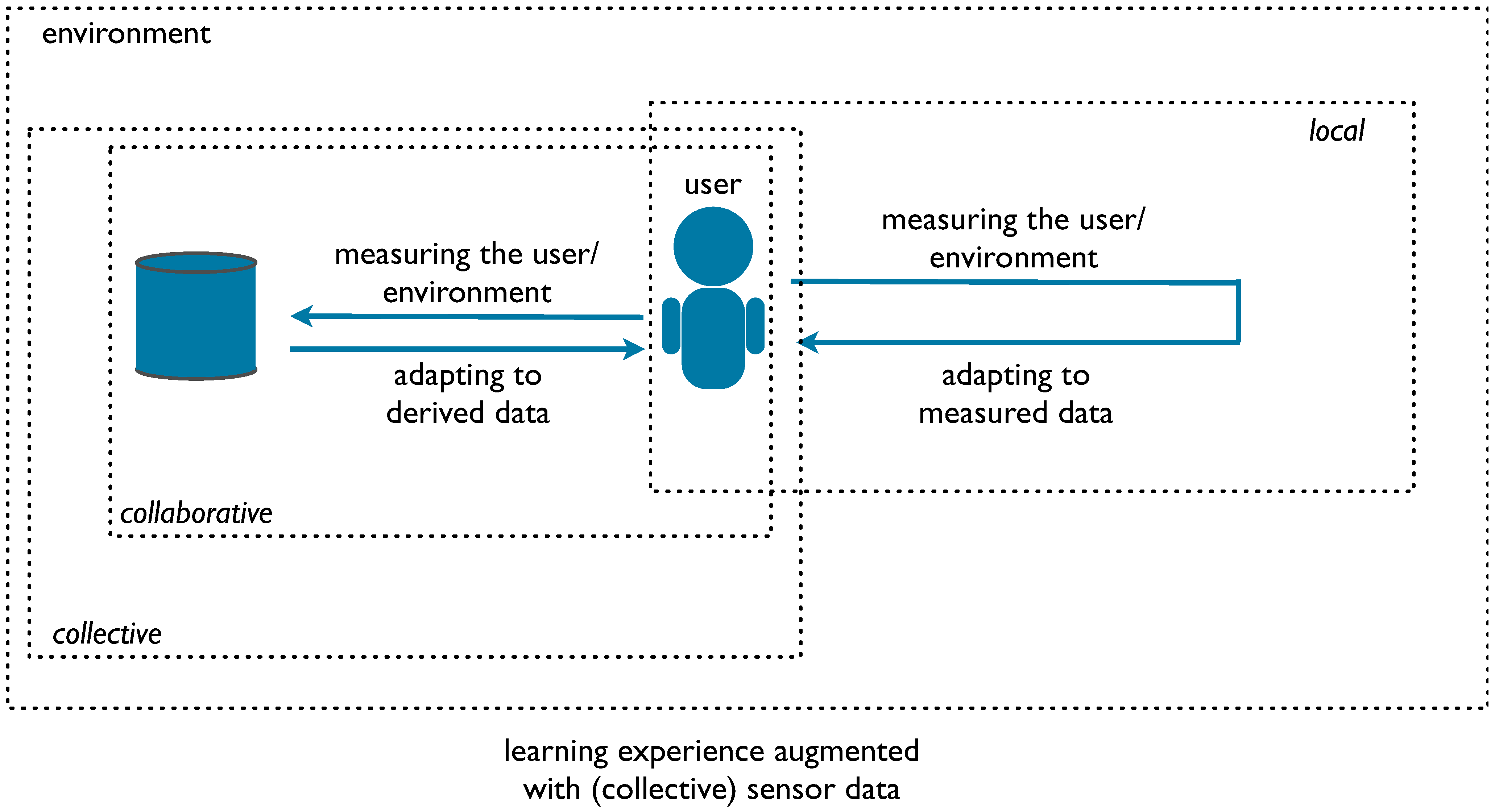

Experiential learning theory can also be used to describe learning in environments where sensors and sensor data are present. In these environments, the learning experience could be (a) a technology-mediated learning experience; (b) a sensor data augmented learning experience or (c) a collective sensor data augmented learning experience.

The sensor data in learning environments enrich or augment the learning experience. For example, a learner wearing a heart-rate monitor becomes aware of her heart rate in a physical training session and, thus, can control her pace of training to fit the goals of the training session.

Individual learners can also control their activities based on collective sensor data, i.e., data collected from several participants. For example, a game that teaches physical activity and its relation to reducing obesity could collect heart rate data from all the players. Based on the heart rate sensor data, the game computes the mean of their heart rates, i.e., the collective heart rate, at a certain time. The team may have a goal to keep the team’s collective heart rate at a certain level. In order to succeed in their mission, the team members have to constantly monitor their collective heart rate and communicate with each other on how to share physical activities between the team members. In this case, the heart rate data adds an important dimension to learning. This kind of technology-mediated learning experience is called a learning experience augmented with collective sensor data (Figure 4).

Figure 4.

Learning experience augmented with sensor data in three contexts: local, collaborative and collective context.

4. Learning Scenarios Using Sensors

In this section, we present scenarios of technology-mediated learning experiences and learning experiences augmented with (collective) sensor data. We especially discuss location, heart rate, ambient sound, orientation, cameras and humans used as sensors. In these examples, either the sensors provide information that can be used to construct a context for learning (such as location) or the learning topic is itself based on the sensor data (such as measuring sound levels in an environment).

4.1. Location

Brown [21] states that “if learning becomes mobile, location becomes an important context, both in terms of the physical whereabouts of the learner and also the opportunities for learning to become location-sensitive” (p. 7). Many mobile phones can already locate the device using satellite navigation systems and present its location on a map. The location data can also be sent to a service so that, for example, enquiries can be made about the nearest restaurant. In any case, all mobile devices can be located based on cell tower location, but this method may not be as accurate as GPS positioning. The drawback of GPS is that it cannot be used inside a building.

In mobile devices, it is also possible to use other location methods that do not require GPS [22]. W3C has a standard API for web applications to use different kinds of location technologies. The Geolocation API defines a high-level interface for location information associated with the user’s device. In addition to GPS data, the API can use location data based on network signals, such as IP address, RFID, Wi-Fi and Bluetooth MAC addresses and GSM/CDMA cell IDs [23]. Google has been scanning WLAN networks in several countries and has a database of the locations of WLAN networks in areas where they have street view images [24]. Although this has led to concerns about privacy and security issues, the database enables Geolocation API-based applications to obtain location data quite accurately, even inside buildings.

GPS positioning has been used in outdoor games to generate location-based game plots. The players have GPS-enabled devices, and the game is designed so that the location affects the game logic. For example, in order to get to the next level in the game, the player has to go to a certain building or find another player based on her location information.

Researchers from the University of Zurich have developed a mobile game for an introductory university course [25]. The game contains collaborative elements, and it is based on location technology. Studies have shown that the players considered the game to be motivating. The game world was a mixed reality experience for the players, in which the real-world game space was integrated with the virtual game world, and virtual and real social spaces were combined into an interactive experience. Falk, Ljungstrand, Björk and Hansson [26] have similar research results. In the future, mobile mixed reality games that support video-mixed see-through displays will generate more immersive gaming environments. This will also be an important future research topic [27]. Mixed reality learning games are clearly sensor data augmented environments. They also support the collective sensor data augmented experience, if the activities of several players affect the events in the gaming world.

The location-based social networking applications Gowalla [28] (the service was closed in 2012) and Foursquare [29] add gaming aspects to social networking for mobile devices [30]. The idea behind these applications is simply to check in at the places where the user is. The application locates the user and displays a map showing places where she can check in. By checking in, the users collect badges or pins that are displayed on their profiles; a user can typically also see where her friends have checked in and who else has checked in at the same place. The user can also send images from the places to the service and collect new stamps for this activity.

Location-based social media applications could also be used for learning purposes. For example, a university could create a gaming environment where new students have to check in at certain locations on the campus using their mobile devices. Each check-in would earn points. There could also be certain tasks that the students would have to do while visiting a place. For example, while checking in at the library, they should get access to the library’s resources and take an image with a mobile phone as proof of the activity. This kind of gaming environment could replace tutoring tours around campuses, because while playing the game, the students learn how to navigate around their campus. These learning experiences can be described as sensor data augmented experiences.
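The campus check-in game could be prototyped along the following lines. This is a minimal sketch: the place names, coordinates, point values and the 50 m radius are all hypothetical. A check-in is accepted only if the reported position lies within a given radius of the place, using the haversine great-circle distance, and each place scores once per student.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres

# Hypothetical campus places: name -> (latitude, longitude, points)
CAMPUS_PLACES = {
    "library": (60.0000, 25.0000, 10),
    "cafeteria": (60.0010, 25.0000, 5),
}


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def check_in(scores, student, place, lat, lon, radius_m=50.0):
    """Award the place's points if the student is close enough and has not
    checked in there before. Returns True when the check-in is accepted."""
    place_lat, place_lon, points = CAMPUS_PLACES[place]
    visited = scores.setdefault(student, {})
    if place in visited:
        return False
    if haversine_m(lat, lon, place_lat, place_lon) > radius_m:
        return False
    visited[place] = points
    return True


def total_points(scores, student):
    """Sum of the points a student has collected so far."""
    return sum(scores.get(student, {}).values())
```

The tasks mentioned above, such as uploading a photo as proof of the activity, would need an additional verification step that this sketch leaves out.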

Geocaching [31] is another example of using GPS positioning in a game-like application. In geocaching, users try to find geocaches other users have hidden. The geocaches can be in historically important places, and they can also reveal facts about the place.

Geocaching does not actually use sensor networks as such. It can be played by only one player and it is not necessarily a social activity. However, geocachers use websites to share their experiences of finding a geocache, and this adds a social dimension to geocaching. Geocaching can be described as a collective sensor data augmented experience, and the sharing of the experience in a geocaching website is a technology-mediated experience.

An example of a social activity and the use of GPS data is a pilot done with high school students in Pori, Finland [32]. The students were studying different forest types, and to do that, they made a field trip to a forest near the city. The students were divided into groups that were sent to different parts of the forest. The groups reported from their areas about the forest types they observed using their mobile phones. The data included the location and the heights and thicknesses of the trees. The students could visualize the collective data on a map using their mobile phones while they were still on the trip. The experience was clearly a collective sensor data augmented experience.

Based on the location information, the current weather information can be fetched from a free weather service on the Internet. This could be used to enhance content production. For example, the MoViE mobile social media platform for mobile video sharing creates automatic geotags and weather tags when users upload video files to the service [33,34,35,36]. The weather tags can then be used as search criteria, e.g., all the videos that were recorded in a certain location on a sunny day could be searched.
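A tag-based search of this kind can be illustrated with a short sketch. It does not reflect how MoViE is actually implemented; the `VideoClip` type, the tag vocabulary and the sample clips are hypothetical and serve only to show how automatic location and weather tags can be combined as search criteria.

```python
from dataclasses import dataclass, field


@dataclass
class VideoClip:
    title: str
    tags: set = field(default_factory=set)  # e.g. automatic geotags and weather tags


def search_by_tags(clips, *required_tags):
    """Return the clips that carry every required tag (case-insensitive)."""
    wanted = {tag.lower() for tag in required_tags}
    return [clip for clip in clips
            if wanted <= {tag.lower() for tag in clip.tags}]


# Hypothetical sample data
clips = [
    VideoClip("Harbour walk", {"Pori", "sunny"}),
    VideoClip("Forest trip", {"Pori", "rain"}),
    VideoClip("City centre", {"Helsinki", "sunny"}),
]
sunny_in_pori = search_by_tags(clips, "pori", "sunny")
print([clip.title for clip in sunny_in_pori])  # prints ['Harbour walk']
```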

MoViE supports tag creation by automatically collecting as much context data as possible. A custom-made mobile client application serves as a video capturing, tagging and uploading tool. It uses GPS and GSM cell information as automatic context for videos. This information is used to find the most appropriate words for tag suggestions. GPS and GSM cell information are stored by background processes during video capture. With this information, queries can be performed to determine where the video was captured. The MoViE server also tries to suggest some tags that could be appropriate for a particular video [34,35].

MoViE was used as a part of teaching biology and geography for the eighth and ninth grades in Finland [36]. According to Tuomi and Multisilta [36], the MoViE service and mobile social media in general were useful tools for school projects. Learning occurred in several phases during the project. For example, the students had to decide what kinds of video clips they needed in their story and how each clip should be shot so that it supported the goals of the story. Students had to decide the shooting locations, the shooting angles and the content of each clip. While composing their story, they had to select the best clips produced by their team, the order and the length of the clips, etc. Making mobile videos using social media services, and thus telling stories, is an example of the twenty-first-century skills that will be needed by society in the future. It can be seen that the making of mobile video stories supports creativity and digital literacy skills. Sharing the mobile video stories with MoViE can be considered to be a technology-mediated experience.

4.2. Heart Rate

Heart rate monitors have been used for several years for monitoring optimal heart rate during and after exercise. The devices have typically consisted of a watch with a heart rate belt. The heart rate has been monitored from the wristwatch in real-time. Optionally, the belt or the watch saved the data, which could later be transferred to a computer and analyzed there. Nokia SportsTracker and the Nokia N79 mobile phone enabled users to upload their heart rate and GPS data online to the SportsTracker service [37]. Using the service, exercise sessions can be studied by plotting the data on a map and making use of several graphical summaries. Similar services are available for almost all smartphones.

Heart rate monitors can surely be used in physical education (PE) classes, as such. In addition, using services, such as SportsTracker, students can compare their exercise sessions with others and learn from the analysis.

These kinds of data are important not only in running or bicycling exercises, but also in team sports, such as soccer and football. During the games, the coach could monitor in real-time, for example, who has run the longest distance. This information could then be used to select the best players and to plan changes on the field. In this case, sensor data is augmenting the sports experience, and the data give opportunities to reflect on one’s activities versus the measured heart rate during the game.

Monitoring collective heart rate makes it possible for us to create new types of educational games. We define collective heart rate to be the arithmetic mean of the heart rates measured in a group at a certain time. For example, a group of students wear heart rate monitors that send the data in real-time to the game server. The group should keep their collective heart rate at a certain level, for example, at 120 beats/min for a certain period, for example, one minute. After that, they have to raise their collective heart rate to the level of 130 beats/min and sustain it for a minute. The group can then decide how they reach the target heart rate and how they can sustain it (e.g., by running or jumping). Groups compete against each other to see how well they can succeed in meeting the aims of the mission. A game involving a collective heart rate is an example of a collective sensor data augmented experience.
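The game rule described above can be written down compactly. The sketch below follows the definition of collective heart rate as the mean of the group's heart rates at one moment; the tolerance of ±5 beats/min and the assumption of one group sample per second are illustrative choices that are not specified in the scenario.

```python
from statistics import mean


def collective_heart_rate(rates):
    """Arithmetic mean of the heart rates (beats/min) measured in a group
    at one moment."""
    return mean(rates)


def sustained(samples, target, tolerance=5.0, duration=60):
    """Check whether the collective heart rate stayed within `tolerance`
    of `target` for `duration` consecutive samples.

    `samples` is a sequence of per-moment lists of heart rates; one sample
    per second is assumed, so duration=60 corresponds to one minute.
    """
    streak = 0
    for rates in samples:
        if abs(collective_heart_rate(rates) - target) <= tolerance:
            streak += 1
            if streak >= duration:
                return True
        else:
            streak = 0  # leaving the target band restarts the count
    return False
```

For instance, `collective_heart_rate([110, 120, 130])` is 120, so three players with quite different individual heart rates can still meet a shared target of 120 beats/min, which is what makes the negotiation between team members necessary.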

4.3. Sound

Sound can easily be observed using the microphone found on every phone. Phones could therefore be used to create a worldwide sound sensor network for observing the kinds of sound environments we live in. For example, students in classrooms could compare their sound environments with those of schools in other countries. Ambient noise may also be an important issue when we think about the neighborhoods we would like to live in. Sound pollution is an environmental issue, and it could be visualized using data from mobile phone microphones. For example, student teams could go around a selected area or city and measure sound levels with their phones. The data could then be uploaded to a server, and a colored layer could be overlaid on a map based on the measurements. One of the existing systems is the WideNoise mobile application and webpage [38]. Again, this is an example of a collective sensor data augmented experience.
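As an illustration of the server side of such a noise map, the sketch below groups measurements into grid cells and assigns each cell a color. It is not based on WideNoise; the grid size and the color bands are assumptions. Because decibels are logarithmic, the levels in a cell are averaged on the energy scale rather than arithmetically, which is one common way to combine sound levels.

```python
import math
from collections import defaultdict


def cell_of(lat, lon, cell_deg=0.001):
    """Map a coordinate to a grid cell index (cell size in degrees is assumed)."""
    return (math.floor(lat / cell_deg), math.floor(lon / cell_deg))


def mean_level_db(levels_db):
    """Energy-average a list of sound levels given in decibels."""
    energy = sum(10 ** (level / 10) for level in levels_db) / len(levels_db)
    return 10 * math.log10(energy)


def noise_map(measurements, cell_deg=0.001):
    """measurements: iterable of (lat, lon, level_db) tuples.
    Returns a dict mapping each grid cell to its mean level in dB."""
    by_cell = defaultdict(list)
    for lat, lon, level_db in measurements:
        by_cell[cell_of(lat, lon, cell_deg)].append(level_db)
    return {cell: mean_level_db(levels) for cell, levels in by_cell.items()}


def colour_for(level_db):
    """Illustrative colour bands for the map layer (thresholds are assumptions)."""
    if level_db < 55:
        return "green"
    if level_db < 70:
        return "yellow"
    return "red"
```

With energy averaging, a single loud measurement dominates a cell: one reading of 60 dB and one of 80 dB average to about 77 dB, not 70 dB.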

Another application could be the automatic recognition of different sounds. Selin, Turunen and Tanttu [39] have studied how inharmonic and transient bird sounds can be recognized efficiently. The results indicate that it was possible to recognize bird sounds of the test species using neural networks with only four features calculated from the wavelet packet decomposition coefficients. A modified version of this kind of automatic sound recognition system could also be used in learning environments. In this case, technology would be used to augment the learning experience: without the technology, it would be difficult to learn the names of the birds singing.

Furthermore, voice can even be used to control gaming applications. For example, Uplause Ltd. [40] has developed social games for big crowds. The games are designed for large events, where the audience can collectively participate in playing interactive mini-games, in real-time, on location. The game controllers are the sounds made by the audience, i.e., clapping and shouts. Although these games are not yet used in learning, they represent collective sensor data augmented experiences.

4.4. Accelerometer and Orientation

Some phones have orientation sensors or accelerometers that can monitor the movements of the phone. These are similar to those used in Nintendo Wii remote controllers. For example, Apple’s iPhone has accelerometers that are used in gaming applications for controlling a game object. The iPhone could be a steering wheel for a car in a game environment, and by turning the phone, the player controls the movements of the car in the game.

The Apple iPad has demonstrated the use of location and compass data in several augmented reality-type applications, such as Plane Finder AR or the Go Sky Watch Planetarium application. In Plane Finder AR, the user can see in real-time the data of the airplanes flying over the area where the user is located. The software uses location and compass sensor data and the camera in the device and overlays the real-time camera image with flight data available on the Internet. Go Sky Watch is a simple planetarium and astronomy star guide application for educational purposes. When the device is pointed at the sky, it utilizes the date, time, location and compass in the iPad for displaying the sky view in the correct orientation. The use of the application helps in the identification of objects in the sky and, thus, supports learning. In addition, the application includes detailed information about the selected object, which can be displayed on the screen with the sky image. This kind of user interface is very natural for us, and it raises the usability and learnability of a game or application to a new level. Clearly, these are examples of sensor data augmented experiences.

The orientation sensors could also be used for monitoring the movement of a person. In a simple application, a device with an accelerometer can be used as a pedometer. In more complex applications, the device could record, for example, movements in a dance practice. The data could be used to analyze the dancers, and their movement data could then be compared to data available from a teacher doing the same movements. Making full use of this kind of motion detection requires several motion sensors on the legs, arms and body. However, the usefulness of this concept could already be validated using current technology.
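The pedometer case is simple enough to sketch. The following is a naive threshold-crossing step counter, given as an illustration rather than as the method used by any particular device; the threshold, the minimum gap between steps and the assumption that samples arrive as (x, y, z) accelerations in m/s² are all hypothetical.

```python
import math

GRAVITY = 9.81  # m/s^2, subtracted so that a resting phone reads about zero


def count_steps(samples, threshold=1.5, min_gap=3):
    """Count steps in a sequence of (x, y, z) accelerometer samples in m/s^2.

    A step is counted when the acceleration magnitude (minus gravity) rises
    above `threshold` after having been below it, and at least `min_gap`
    samples have passed since the previous step.
    """
    steps = 0
    above = False
    last_step = -min_gap
    for i, (x, y, z) in enumerate(samples):
        excess = math.sqrt(x * x + y * y + z * z) - GRAVITY
        rising = excess > threshold and not above
        if rising and i - last_step >= min_gap:
            steps += 1
            last_step = i
        above = excess > threshold
    return steps
```

Using the magnitude of the acceleration vector makes the count independent of how the phone is oriented in a pocket, which is why no orientation data is needed for this simple case.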

For deaf people, a phone with a rhythm counter that converts tapping rhythms, for example, to colors could be used to teach musical rhythms. Another way to convey rhythms is to use the vibration feature of mobile devices. This kind of feedback and guidance can be contrasted with the use of dance movement recording. Here, the phone provides directions instead of storing data.

Novel interaction solutions provide new possibilities to design appealing game experiences for wider demographics. The development of motion-based controllers has facilitated the emergence of an exercise game genre that involves physical activity as a means of interacting with a game. Furthermore, the major reason for increased interest in exergaming is concern about the high levels of obesity in Western society. One of the biggest challenges is the need to make games attractive collective experiences for players, while at the same time providing effective exercise [41,42,43]. In the games developed by Kiili et al. [41,42,43], the players control the game by moving their bodies. An accelerometer in the player’s mobile phone transmits the body movements to the game. Typically, in the exergame, there are two teams with several players in each team. The team members have to discuss problem-solving tasks during the game in order to achieve the game goals. For example, the team controls a car on the screen, each player represents a different tire and the rotation speed of the tire depends on the jumping pace of the player representing that tire. The goal could be, for example, to drive the car collectively to the correct garage that represents the right solution to the problem the game provided.
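The car example can be made concrete with a small sketch. The mapping below is an assumption made for illustration and is not taken from the games of Kiili et al.: each player's jumping pace over a short time window sets the speed of one wheel, the car's forward speed is the mean of the wheel speeds, and a difference between the left and right wheels turns the car.

```python
def wheel_speed(jump_times, now, window=5.0, gain=0.5):
    """Convert the jumps detected in the last `window` seconds into a wheel
    speed in arbitrary units. `gain` is a hypothetical tuning constant."""
    recent = [t for t in jump_times if now - window < t <= now]
    jumps_per_second = len(recent) / window
    return gain * jumps_per_second


def car_motion(wheel_speeds):
    """wheel_speeds: dict with the keys 'front_left', 'front_right',
    'rear_left' and 'rear_right'.

    Returns (forward_speed, turn); a positive turn means the left wheels
    are faster, so the car veers to the right.
    """
    left = (wheel_speeds["front_left"] + wheel_speeds["rear_left"]) / 2
    right = (wheel_speeds["front_right"] + wheel_speeds["rear_right"]) / 2
    return (left + right) / 2, left - right
```

Under this mapping, the car only drives straight when all four players keep the same jumping pace, so steering toward the correct garage requires the team to coordinate.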

4.5. Camera

Obviously, the camera in the mobile device is a sensor. Belhumeur et al. [44] have presented an interesting application that aids the identification of plant species using a computer vision system. The system is in use by botanists at the Smithsonian Institution National Museum of Natural History. NatureGate by Lehmuskallio, E., Lehmuskallio, J., Kaasinen and Åhlberg [45] is another tool that can be used for identifying plants, but, currently, without computer recognition. The camera in mobile phones has already been used to identify a poisonous plant [46]. In this case, an image of the suspected plant taken by a mobile phone was emailed to a specialist who identified the plant.

Collaboration between the Department of Botany at the Smithsonian’s National Museum of Natural History and the computer science departments of Columbia University and the University of Maryland has produced the Image Identification System (IIS) for this purpose [47]. IIS matches a silhouette of a leaf from the plant against a database of leaf shapes and returns species names and detailed botanical information to the screen of the user’s phone. It would be easy to add an automatic collection of other sensor data to these kinds of applications, for example, date, time, location and amount of light. Again, students could use these applications on field trips as an interactive encyclopedia with a plant recognition system. It is also an example of a sensor data augmented experience.

4.6. Other Sensors

Some phones have an ambient light sensor. The sensor observes the lighting conditions of the environment, and the phone adjusts its screen brightness accordingly. The ambient light sensor could provide meaningful data, for example, for in situ applications where students take images with their phones. The ambient light data could be added as metadata for the image. Together with other metadata (such as the date and time), the information could be used to decide, for example, whether the image was taken at night or whether it was taken inside or outside. In biology experiments, the amount of light could be measured at various intervals. The device could take an image of a plant every hour and record the light conditions, as well as other data. The data could then be viewed with the images and the results compared with other classes doing the same experiment (but on the other side of the country).
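The idea of combining ambient light data with the date and time can be sketched in a few lines of Python. The example below is only a minimal illustration: the `ImageMetadata` record, the lux thresholds and the fixed night hours are all assumptions chosen for the sketch, and a real application would need to calibrate them for the device, the season and the location.

```python
from dataclasses import dataclass
from datetime import datetime

# Assumed thresholds in lux; real values depend on the sensor and setting.
DARK_LUX = 10.0
OUTDOOR_DAYLIGHT_LUX = 1000.0


@dataclass
class ImageMetadata:
    """Hypothetical metadata stored with each image a student takes."""
    taken_at: datetime
    ambient_lux: float


def is_night_hour(taken_at: datetime, night_starts: int = 21, night_ends: int = 6) -> bool:
    """Treat a fixed range of hours as night (an assumption, not sunrise data)."""
    return taken_at.hour >= night_starts or taken_at.hour < night_ends


def describe_context(meta: ImageMetadata) -> str:
    """Guess where and when an image was taken from light level and time."""
    night = is_night_hour(meta.taken_at)
    if meta.ambient_lux < DARK_LUX:
        return "dark, at night" if night else "dark, during the day"
    if meta.ambient_lux >= OUTDOOR_DAYLIGHT_LUX and not night:
        return "probably outdoors in daylight"
    return "probably indoors or under artificial light"
```

The same record could be extended with location or temperature, so that hourly plant images from different classes can be compared under similar conditions.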

4.7. Human Activity and Sensors

So far, we have discussed sensors that are a part of a device itself, i.e., pieces of technology. However, in some cases, where technology is mediating the experience and the experience is also collective, we could have the users performing the task of a sensor. For example, when users are sending tweets using Twitter, they could write something they have observed in their surroundings. The users can also express their feelings in the tweets. The students could use Twitter for creating a school-wide or nation-wide emotion channel. Students could be tweeting their emotions to a certain Twitter channel by marking their tweets with a hashtag, for example, #emotionNYC. The tweets could automatically be monitored, and a “heat-map” could be generated based on the time and the location of the tweets. Although not a sensor data augmented experience, the heat-map is a visualization of a collective experience that is mediated by technology.
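A heat-map of this kind amounts to counting tagged tweets per place and time. The Python sketch below shows one possible way to do that, assuming that each tweet already carries a location and a timestamp. The `Tweet` record, the grid cell size and the hourly time bucket are hypothetical choices for illustration; no real Twitter API is used.

```python
import math
from collections import Counter
from dataclasses import dataclass
from datetime import datetime
from typing import Iterable, Tuple


@dataclass
class Tweet:
    """Hypothetical, already-collected tweet with location and time."""
    text: str
    lat: float
    lon: float
    sent_at: datetime


def heat_map(tweets: Iterable[Tweet], hashtag: str, cell_deg: float = 0.01) -> Counter:
    """Count tagged tweets per (lat cell, lon cell, hour of day).

    Simplification: the hashtag must appear as a whitespace-separated word,
    so a tag followed directly by punctuation is not matched.
    """
    counts: Counter = Counter()
    wanted = hashtag.lower()
    for tweet in tweets:
        if wanted not in tweet.text.lower().split():
            continue
        cell: Tuple[int, int, int] = (
            math.floor(tweet.lat / cell_deg),
            math.floor(tweet.lon / cell_deg),
            tweet.sent_at.hour,
        )
        counts[cell] += 1
    return counts
```

Each key of the resulting counter identifies one cell of the map at one hour of the day, and its count could be drawn as the intensity of that cell.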

Nokia’s Internet Pulse [48] is a web service for monitoring public opinion by tracking social network status updates and grouping the results based on positive or negative sentiment. Nokia’s Internet Pulse presents the aggregated status updates in a timeline format as an interactive graph that allows users to drill down into the various search terms that correlate with the hot topics in Twitter feeds at selected time periods.

Perttula et al. [49] presented a pilot study in which a collective heart rate was visualized in an indoor ice rink to make intensity visible to the audience. The aim of the study was to explore the usefulness and effect of the collective heart rate and to evaluate it as a new feature that could enhance the user experience of audiences at large public events. In particular, the study focuses on the significance of the technological equipment in creating a sense of collectiveness and togetherness for the audience. The results indicate that it is possible to enrich the user experience in public events with user-generated data.
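How the collective heart rate was computed is not described here, so the following Python sketch only shows one plausible reading: the average of the most recent heart rate reported by each participant, ignoring values that are clearly sensor errors. The function name, the input format and the plausibility limits are assumptions for illustration.

```python
from statistics import mean
from typing import Dict, Optional


def collective_heart_rate(
    latest_bpm: Dict[str, float],
    lowest_valid: float = 30.0,
    highest_valid: float = 220.0,
) -> Optional[float]:
    """Average the latest heart rate of each participant.

    Readings outside the assumed plausible range are treated as sensor
    errors and skipped. Returns None when no valid reading is available.
    """
    valid = [bpm for bpm in latest_bpm.values() if lowest_valid <= bpm <= highest_valid]
    return mean(valid) if valid else None
```

A single number like this is easy to show on a large screen, which is one reason an average is a natural first choice for a shared visualization.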

In Perttula, Koivisto, Mäkelä, Suominen and Multisilta [50], the audience is seen as an active part of large-scale public events, and one of the intentions of their study was to increase and enhance the interaction between the audience and the happenings in the event area. Audience members can be described as sensors: they are not just consumers, but also producers and content creators. With the concept of a collective emotion tracker, it is possible to invite the audience to participate in the event and give advice to other participants. In this study, participants sent location-based mood information to the server by using a custom-made mobile application or mobile web page. The moods formed a heat map of emotions. Furthermore, participants were able to pick out interesting venues from the mood heat map.

Navigating unfamiliar, eventful environments, such as a festival or conference, can be challenging. It can be hard to decide what to see, and once you have decided where to go, it can be difficult to know how to get there. While several research projects have investigated the mobile navigation of physical environments, past work has focused on extracting general landmarks from relatively static sources, such as structured maps. Perttula, Carter and Denoue [51] presented a system that derives points-of-interest and associated landmarks from user-generated content captured onsite. This approach helps users navigate standard environments, as well as temporary events, such as festivals and fairs. Using social networks and preference information, the system also gives access to more personalized sets of points-of-interest. This kind of collective experience system could enhance learning events, for example, in large camps or campus-wide events.

5. Discussion and Conclusions

The main research questions in this article are how to conceptualize experiential learning involving sensors and what kinds of learning applications involving sensors already exist or can be designed. In previous chapters, a framework for sensor-based mobile learning environments has been formulated and used to characterize learning activities in several examples involving sensors. In general, Kolb’s Experiential Learning Theory presents the learning process as a circle that is divided into four stages: concrete experience (CE), reflective observation (RO), abstract conceptualization (AC) and active experimentation (AE). In a learning process where technology and sensors are involved, the experience was defined to be (a) a technology-mediated learning experience; (b) a sensor data augmented learning experience; or (c) a collective sensor data augmented learning experience. The examples and scenarios presented in previous chapters show that this classification can be used to describe learning experiences in cases where technology and sensors are involved.

There has been a lot of discussion about which skills and competences will be needed in our society in the future. The core skills and competences mentioned in the literature include thinking and problem-solving skills, learning and innovation skills, communication skills, digital literacy skills and skills for life-management (for example, [52]). Sometimes, these competences are also referred to as twenty first century skills. It is claimed that experiential learning, context information- and sensor data-based learning environments support these core skills and competences. In order to support the claim, the examples of learning experiences augmented with collective sensor data presented above are described using the following skills and competences: learning and innovation skills, digital literacy skills and life-management skills.

In Table 1, the examples presented in Chapter 4 are characterized using the selected skills and competences. The set of skills and competences used in Table 1 is neither a complete set of twenty first century skills nor a complete synthesis of competences presented in the literature under this title. However, it presents a substantial share of skills and competences commonly referenced in twenty first century learning.

According to the present study, sensors and sensor networks provide new possibilities for a variety of learning applications. Some of these applications promote twenty first century learning, as such. In addition, the understanding of sensor-based contextual data and the skill to apply these data to everyday activities in learning and life could also be considered an important part of digital literacy and personal life-management in the future.

The core concepts for analyzing learning in environments involving sensors are based on Kolb’s experiential learning and on the concept of a technology-mediated experience. When an experience is influenced by sensor data, it is considered to be a sensor data augmented experience. Finally, collective sensor data augmented experiences involve several individuals and their sensor data, which are combined to produce collective data. The role of technology is to mediate the experience to the community. However, technology-mediated experiences also contribute to the continuum of the experiences and to learning. Learning experiences always have a context, and sensor data may reveal the context to the learner and to the community in a way that enriches the learning experience or, for example, leads the learner to what-if problem-solving situations, thus contributing to the skills needed in our future societies.

Table 1.

Learning experiences augmented with (collective) sensor data and twenty first century skills.

| Skill | An Example of a Learning Experience |
|---|---|
| Learning and innovation skills: creativity | |
| Learning and innovation skills: critical thinking | collective data, sensor data visualization (collective sensor data augmented learning experience); visualizing ambient sound with a graphical indicator and adjusting one’s own activity based on the observation (sensor data augmented learning experience); understanding the results of one’s own actions (technology-mediated learning experience); effects-to-cause reasoning (sensor data augmented learning experience) |
| Learning and innovation skills: problem solving | mixed reality gaming environments (collective sensor data augmented learning experience); geocaching (sensor data augmented learning experience; technology-mediated learning experience); automatic recognition of bird sounds or recognition of a plant from an image (sensor data augmented learning experience) |
| Decision making | sharing experiences with social media; social media augmented with sensor data (technology-mediated learning experience; collective sensor data augmented learning experience); collective and collaborative games based on sensor data (technology-mediated learning experience; collective sensor data augmented learning experience) |
| Communication and collaboration | location-based collaborative games (technology-mediated learning experience; collective sensor data augmented learning experience); location-based social networking (technology-mediated learning experience; sensor data augmented learning experience) |
| Digital literacy skills | video storytelling (technology-mediated learning experience); sensor data visualization (sensor data augmented learning experience); understanding collective data (collective sensor data augmented learning experience) |
| Career and life skills: personal and social responsibility | exercise games as a means to control the quality of life (collective sensor data augmented learning experience); collective sound level measurement in a classroom and one’s actions that contribute to a peaceful and supportive learning environment (collective sensor data augmented learning experience) |