Abstract

Agentic AI systems are a recently emerged and important approach that goes beyond traditional AI, generative AI, and autonomous systems by focusing on autonomy, adaptability, and goal-driven reasoning. This study provides a structured review of agentic AI systems that brings together their definitions, frameworks, and architectures and compares them with related areas such as generative AI, autonomic computing, and multi-agent systems. To do this, we reviewed 143 primary studies on current LLM-based and non-LLM-driven agentic systems and examined how they support planning, memory, reflection, and goal pursuit. We further classified architectural models, input–output mechanisms, and applications by task domain, using tabular summaries that highlight real-world case studies. We classified evaluation metrics as qualitative or quantitative and summarized the testing methods available for checking the performance and reliability of agentic AI systems. This study also highlights the main challenges and limitations of agentic AI, covering technical, architectural, coordination, ethical, and security issues. By organizing the conceptual foundations, available tools, architectures, and evaluation metrics, this research establishes a structured foundation for understanding and advancing agentic AI. These findings aim to help researchers and developers build better, clearer, and more adaptable systems that support responsible deployment in different domains.

1. Introduction

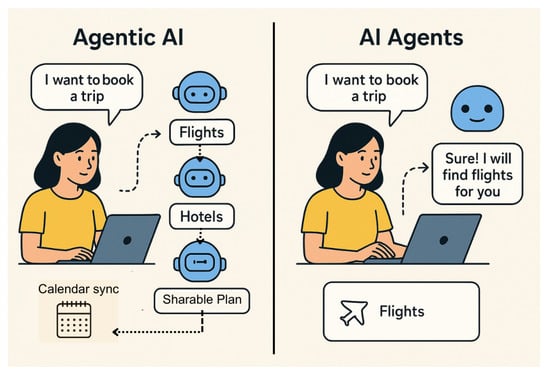

Agentic AI refers to AI systems that do not just answer prompts; they set sub-goals, choose tools, and take multi-step actions to achieve a user’s objective with limited supervision. In practice, these systems consist of multiple agents, each handling part of the job, coordinated by an orchestration layer that keeps them aligned with the goal [1]. An agent is a system that senses its environment, decides what to do, and takes action to achieve a goal. A simple example is a travel planner agent: given ‘plan a 3-day trip to Chicago under USD 1500’, it breaks the task into subtasks such as flights, hotel, and itinerary; queries real-time sources; compares options; books reservations; and then produces a shareable plan, asking for confirmation only at key steps. This goes beyond traditional chatbots by planning, acting, and verifying outcomes in a loop [2]. The term AI agent refers to an autonomous software entity that perceives, reasons, and acts to complete specific tasks adaptively. The term agentic AI refers to a multi-agent system in which specialized agents collaborate, coordinate, and plan to achieve complex, high-level objectives. Figure 1 illustrates the difference between these terms using a flight booking example.
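The plan-act-verify loop in the travel example can be made concrete with a small Python sketch. It is illustrative only: the subtask list is hard-coded, the tool functions (`find_flight`, `find_hotel`, `book`, `make_itinerary`) are hypothetical stand-ins for real-time search and booking services, and the prices are invented. It shows decomposition into subtasks, a verification check after each action, and a confirmation gate only at the booking step.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    name: str
    action: Callable[[dict], dict]    # reads the shared state, returns updates
    needs_confirmation: bool = False  # a "key step" that pauses for the user


# Hypothetical stand-ins for real-time search and booking tools (invented prices).
def find_flight(s):
    return {"flight": "flight option A", "spent": s["spent"] + 420}


def find_hotel(s):
    return {"hotel": "hotel option B", "spent": s["spent"] + 3 * 250}


def book(s):
    return {"booked": True}


def make_itinerary(s):
    return {"plan": f"3 days in {s['city']}: {s['flight']}, {s['hotel']}"}


def plan_trip():
    """Decompose the goal into ordered subtasks (hard-coded here;
    an LLM-based planner would generate them from the request)."""
    return [Step("flights", find_flight), Step("hotel", find_hotel),
            Step("book", book, needs_confirmation=True),
            Step("itinerary", make_itinerary)]


def run_agent(steps, state, confirm):
    """Plan-act-verify loop: perform each subtask, verify the budget
    after every action, and ask the user only at key steps."""
    log = []
    for step in steps:
        if step.needs_confirmation and not confirm(step.name, state):
            log.append(f"skipped {step.name}")
            continue
        state.update(step.action(state))
        if state["spent"] > state["budget"]:  # verify against the user's constraint
            log.append(f"over budget after {step.name}")
            break
        log.append(f"done {step.name}")
    return state, log


state, log = run_agent(plan_trip(),
                       {"city": "Chicago", "budget": 1500, "spent": 0},
                       confirm=lambda name, s: True)
print(log)  # ['done flights', 'done hotel', 'done book', 'done itinerary']
```

Passing a `confirm` callback that returns `False` skips the booking but still produces an itinerary, which mirrors asking the user only at key steps.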

Figure 1.

Agentic AI vs. AI agents.

Interest in agentic AI is rising quickly as organizations look for automation that affects real work, not just content generation. Industry analysts now track “AI agents” as a distinct market, estimating ∼USD 5.3–5.4B in 2024 and projecting ∼USD 50–52B by 2030 (≈41–46% CAGR), reflecting strong enterprise demand for agents that can reason, use tools, and execute workflows [3,4]. Academic research reflects the same trend. A study from Stanford HAI [5] describes AI agents that exhibited human-like behavior and maintained consistent personas. Industry guidance, such as that from AWS [2], describes increasing levels of autonomy in which an agent iteratively plans, acts, checks outcomes, and adjusts at each step on its own rather than waiting for step-by-step prompts from a human. Together, these advances move AI from reactive assistants to proactive collaborators in various fields [6,7,8].

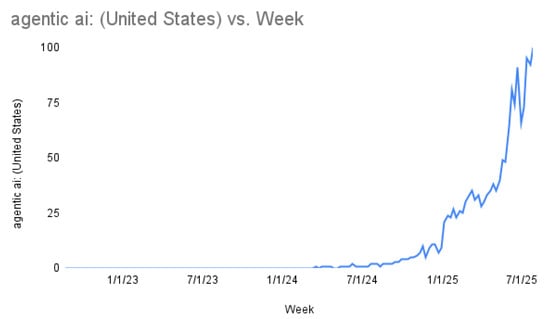

According to Google Trends, interest in “agentic AI” remained minimal for years, then spiked beginning in April 2024, reaching its peak in July 2025. A value of 100 represents the term’s highest recorded popularity, as shown in Figure 2. This sharp rise reflects a global shift toward building AI that can plan, act, and adapt with minimal human guidance.

Figure 2.

Google Trends shows the popularity of agentic AI.

Traditional systems automated individual steps that followed limited rules; agentic AI connects these steps, tracks progress, recovers from errors, and can automate end-to-end processes such as month-end close, claims adjudication, sales outreach, and even research workflows that were previously limited to human effort. Early enterprise reporting highlights this architectural shift from smarter models to agentic, process-embedded systems [9]. This review synthesizes how the field defines agentic AI, the architectures that enable it, where it is being applied, how it is measured, and the open challenges, such as reliability, coordination, safety, and governance, that must be addressed for widespread deployment.

1.1. Research Purpose

The purpose of this research is to provide a comprehensive review of agentic AI by synthesizing its definitions, frameworks, and architectures and by comparing agentic AI with related paradigms. This study aims to identify current tools and frameworks that support agentic capabilities, describe available architectural models of such systems, and explore their applications across domains. Furthermore, it examines the input–output mechanisms of agentic AI, existing testing methods, and the metrics used to assess agentic performance, and it addresses the key challenges and limitations. By doing so, the paper establishes a structured foundation for understanding, assessing, and advancing the field of agentic AI.

1.2. Research Questions

The motivation for this research stems from the growing importance of agentic AI and the need to better understand what it is and how it works. This study aims to provide researchers and developers with a clear overview of agentic AI by examining its definitions, how it differs from related technologies, the tools and frameworks that support it, its system design, the types of tasks it can perform, how it handles inputs and outputs, methods for evaluating it, and the challenges it faces. The objective is to support responsible development and deployment while also helping to improve the design, use, and evaluation of agentic systems across various domains.

- RQ1

- How is agentic AI conceptually defined, and how does it differ from related paradigms?

- Ans:

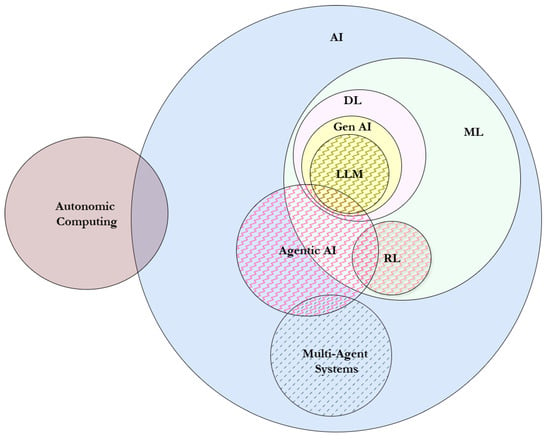

- We define agentic AI’s core characteristics, distinguishing it from generative AI, autonomic computing, and multi-agent systems. A Venn diagram-based taxonomy illustrates overlaps and distinctions, clarifying terminology and providing a structured foundation.

- RQ2

- To what extent do current LLM-based and non-LLM-driven agentic systems, tools, and frameworks enable agentic capabilities?

- Ans:

- We analyze frameworks such as LangChain, AutoGPT, BabyAGI, OpenAgents, AutoGen, CAMEL, MetaGPT, SuperAGI, and TB-CSPN, as well as non-LLM-driven agentic systems. We compare their support for planning, memory, reflection, and goal pursuit to evaluate how effectively current tools enable agentic capabilities and how closely they approach true autonomy.

- RQ3

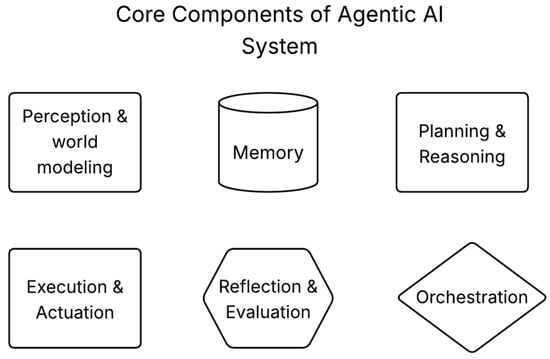

- What are the core components or architectural models used to build agentic AI systems?

- Ans:

- We define the core architectural components of agentic AI, emphasizing planning modules, memory systems, and reasoning engines.

- RQ4

- What types of goals and tasks are currently being solved using agentic AI across domains?

- Ans:

- We provide a comprehensive classification of goals and tasks being addressed by agentic AI across multiple domains, along with their application contexts and corresponding systems used in the literature, presented in a tabular format.

- RQ5

- What kinds of input and output formats do agentic AI systems handle in comparison to traditional AI systems?

- Ans:

- We provide a detailed overview of the input and output formats handled by agentic AI systems, along with the specific tasks and corresponding applications, summarized in a tabular format, and compare this with the input–output mechanisms of traditional AI systems.

- RQ6

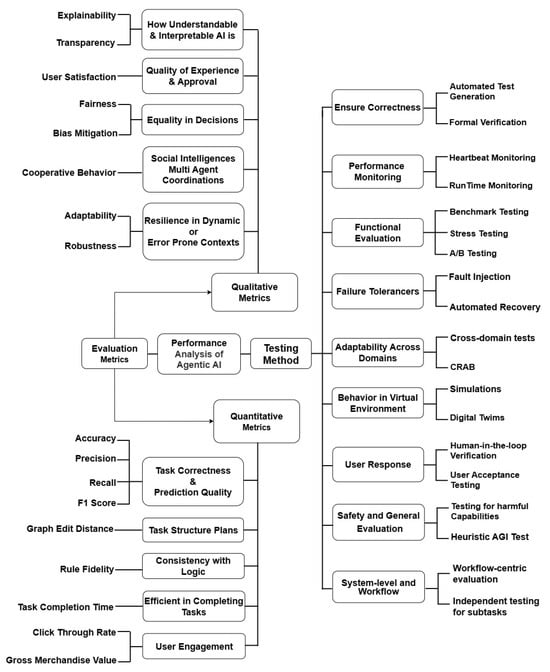

- What evaluation methods and metrics are used to assess the performance of agentic AI systems?

- Ans:

- We present a classification of both the testing methods and the evaluation metrics used to evaluate agentic AI systems, with the evaluation metrics further divided into qualitative and quantitative measures.

- RQ7

- What are the key challenges and limitations in designing and deploying agentic AI systems?

- Ans:

- We present the challenges of agentic AI, including architectural and technical challenges, performance and tool integration issues, coordination among multiple agents, user experience issues, and ethical and security challenges.

1.3. Contributions and Research Significance

This survey provides detailed answers to the research questions, showing the importance and impact of agentic AI systems in the real world. Exploring the definitions, frameworks, and architectural models of agentic AI provides important guidance to researchers and developers. Understanding these foundational elements is useful for designing agentic AI systems capable of autonomous planning, decision-making, and adaptive behavior. This study also reviews current tools and frameworks for agentic AI systems and their input and output mechanisms, and it describes how agentic capabilities are implemented in real-world applications. Organizing architectures, applications, and evaluation methods into a clear overview helps readers understand and choose appropriate approaches and tools for different tasks and domains.

Additionally, this research examines the key challenges and limitations in developing and deploying agentic AI, including reliability, safety, interpretability, and governance concerns. By highlighting these challenges and discussing robust evaluation methods, it contributes to establishing reliable assessment frameworks that improve the credibility and practical application of agentic AI systems. These insights facilitate informed decision-making in both academic and industrial contexts and support the development of more advanced, dependable, and context-aware agentic AI solutions. Overall, this study offers critical knowledge on conceptual, architectural, and practical aspects of agentic AI, advancing understanding and guiding future research and implementation efforts.

1.4. Organization of the Paper

This paper is organized as follows. Section 1 introduces the motivation and background for studying agentic AI, states the research purpose and research questions, and outlines the organization of the paper. Section 2 describes the methodology used to conduct the review and analysis. Section 3 presents the results, beginning with the conceptual definition of agentic AI and its differences from related paradigms. It then examines the extent to which current LLM-based frameworks enable agentic capabilities, followed by a discussion of core components and architectural models, including a comparison between architectures and components. The section further explores the types of goals and tasks addressed by agentic AI across domains, the input–output mechanisms in comparison to generative AI systems, and the evaluation methods and metrics used to assess performance. It also highlights the challenges and limitations in designing and deploying agentic AI systems. Section 4 concludes the paper by summarizing the findings and offering directions for future research. Appendix A presents tables summarizing the bibliographic search results of primary studies, including publication venues, years, and paper types, while Appendix B lists the abbreviations used throughout the paper.

2. Methodology

To carry out this study, we searched several academic databases, including Library Search, ACM Digital Library, ScienceDirect, Google Scholar, and Semantic Scholar. We created a precise search query (“agentic AI” OR “agent” OR “autonomous AI” OR “multi-agent systems” OR “autonomic computing” OR “generative AI” OR “AI planning” OR “AI memory systems” OR “AI feedback loops”) AND (“survey” OR “overview” OR “review” OR “summary” OR “literature review”) to find studies relevant to our research. After collecting the results, we removed duplicates and examined abstracts and conclusions to determine relevance. We included papers that discussed agentic AI concepts, systems, or challenges, using case studies, surveys, and other research methods. We also looked at the references of these papers to find additional useful studies. Finally, we extracted key information from all selected studies to summarize current knowledge on agentic AI.
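The query construction and de-duplication steps can be expressed in a few lines of Python. This is an illustrative sketch, not the exact screening procedure used in this review; it assumes each database export is a list of records with a `title` and an optional `doi` field (a hypothetical format).

```python
import re

TOPIC_TERMS = ["agentic AI", "agent", "autonomous AI", "multi-agent systems",
               "autonomic computing", "generative AI", "AI planning",
               "AI memory systems", "AI feedback loops"]
TYPE_TERMS = ["survey", "overview", "review", "summary", "literature review"]


def or_group(terms):
    """Quote each term and join the group with OR inside parentheses."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"


QUERY = f"{or_group(TOPIC_TERMS)} AND {or_group(TYPE_TERMS)}"


def normalize(title):
    """Lowercase and collapse punctuation so near-identical titles compare equal."""
    return " ".join(re.sub(r"[^a-z0-9]+", " ", title.lower()).split())


def deduplicate(records):
    """Keep the first record seen for each DOI or normalized title."""
    seen, unique = set(), []
    for rec in records:
        keys = {"title:" + normalize(rec["title"])}
        if rec.get("doi"):
            keys.add("doi:" + rec["doi"].lower())
        if keys & seen:
            continue  # duplicate exported by another database
        seen |= keys
        unique.append(rec)
    return unique


print(QUERY)
```

Matching on either the DOI or the normalized title catches the common case where the same paper is exported by two databases with slightly different capitalization or punctuation.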

The search process yielded 143 primary studies, published from 2005 to the present, from which we extracted data. More than 90% of these papers were published in 2024 and 2025, indicating that most research on agentic AI systems is very recent. Table A1 shows the year-wise distribution of these publications. Of these papers, 17% are conference proceedings, 46% are peer-reviewed journal articles, and 28% are preprints; the remainder are dissertations, articles, workshop papers, and other types. Table A2 shows the distribution of papers by publication type.

In terms of publication platform, 32 of the selected papers are published on arXiv, followed by 27 papers from conference proceedings, including ACM international conferences on Autonomous Agents and Multi-agent Systems, Interactive Media Experiences, Information and Knowledge Management, and Human Factors in Computing Systems. Additionally, 43 papers are published in journal venues such as IEEE Access and Engineering Applications of Artificial Intelligence. One of the selected papers is a PhD dissertation, and the remaining papers come from venues such as Procedia CIRP and MethodsX. Table A3 provides the complete list of venues. We observed that most of the publications are multidisciplinary, which is expected in the context of agentic AI, as many disciplines are applying agentic AI to their own problems.

Along with the main research papers, we also studied 21 survey and review articles that provide useful perspectives on agentic AI. Each of these surveys was examined based on our chosen dimensions, and their contributions were marked as L, M, H, or NA to represent low, medium, high, and not applicable. As shown in Table 1, many of the surveys emphasize specific aspects such as taxonomy, system architecture, or domain-focused applications of LLM-based agents, while giving little attention to evaluation methods or input–output classifications. Some papers discuss specialized areas like planning, reasoning, integration with 6G networks, or retail systems, while others examine broader themes including trust, risk, operational practices, and public attitudes toward AI.

Table 1.

Summary of important surveys on agentic AI (L—Low, M—Medium, H—High, NA—Not Applicable).

From this analysis, it is clear that most existing surveys cover only selected dimensions, leaving important areas such as standardized benchmarks, performance evaluation, and input–output modeling underdeveloped. In contrast, our paper covers all of these dimensions: definitions and concepts, taxonomy, architecture, applications, input–output classifications, evaluation metrics, and challenges. By taking this broader, unified approach, our research addresses gaps in prior surveys and provides a fuller understanding of agentic AI to inform future research and practice.

3. Results

3.1. How Is Agentic AI Conceptually Defined, and How Does It Differ from Related Paradigms?

Agentic artificial intelligence (agentic AI) marks a major change in how AI systems are designed. Moving beyond passive and reactive tools, agentic AI refers to autonomous, goal-driven systems that can operate on their own for long periods, requiring minimal human supervision [27,31,32,33]. Unlike traditional AI or standard large language models (LLMs) that respond only to single prompts [34], these systems can understand broad objectives, break them down into smaller tasks, and carry out multi-step plans while adapting to feedback from changing environments [35,36,37]. Their “agentic” nature also highlights their ability to take on responsibilities from humans [32], act purposefully, and be accountable for the results they produce.

What makes agentic AI stand out is its combination of key abilities in one system. These include strategic planning, memory that preserves context over time, the use of external tools to extend capabilities, and collaboration with other agents [38,39,40]. By combining foundation models with planners, knowledge bases, sensors, actuators, and feedback mechanisms, these systems can understand complex environments, adjust strategies in real time, and achieve their goals independently [37,41,42]. This makes them highly valuable in areas such as scientific research, industrial automation, and compliance management [43,44,45], where precision, continuous learning, and smart decision-making are essential. In practice, autonomy can be defined as a system’s capacity to establish sub-goals, finish tasks without human involvement, and adjust to unexpected events [22]. Although existing frameworks show some autonomy, they are often still constrained by problems such as reliance on human-defined goals, vulnerability to mistakes in long-term planning, and difficulties with accountability.

At the heart of agentic AI is self-directed, goal-focused intelligence. These systems operate with a “degree of agenticness,” meaning they can work proactively, plan ahead, and shape outcomes over time [14,46]. As research and industry continue to adopt these systems, agentic AI has the potential to become a reliable partner for humans, extending our abilities while raising new questions about trust, oversight, and collaboration [36].

Agentic AI goes beyond simple automation by enabling systems to understand high-level intent, create actionable plans, and carry them out with minimal human input [27,31,38,47]. These systems combine goal-driven behavior, flexible decision-making, and adaptive reasoning to work effectively in dynamic and evolving situations [48,49,50]. They can pursue multiple goals at once, plan for the long term, and collaborate across several agents or components [37,38,51]. Architecturally, they often integrate large AI models with knowledge bases, planners, access to external tools, and persistent memory to improve understanding and independence [21,42,52]. By continuously learning from experience and adjusting to complex, unpredictable environments [53], agentic AI shifts from simple automation toward reflective, autonomous systems capable of achieving complex objectives.

Table 2 presents the similarities and differences between multi-agent systems (MAS), generative AI (GenAI), and agentic AI in a structured format. By organizing the paradigms across key aspects such as decision-making, adaptability, learning approach, and workflow management, it highlights their distinctive features. The side-by-side format illustrates the progression from distributed coordination in MAS and pattern-based generation in GenAI toward the autonomy and goal-driven reasoning of agentic AI.

Table 2.

Comparative analysis of AI paradigms: multi-agent systems, generative AI, and agentic AI.

The Venn diagram in Figure 3 visually represents how multiple fields of artificial intelligence overlap and intersect, with emphasis on agentic AI. It shows how agentic AI overlaps with reinforcement learning and multi-agent systems and places it within the broader universe of AI, making the dependencies and shared characteristics between agentic AI and related fields easier to see. In general, the diagram complements the written discussion by providing a concise, accessible picture of where agentic AI sits and how it interacts within the broader ecosystem of AI technologies.

Figure 3.

A Venn diagram illustrating the conceptual foundations of agentic AI.

Figure 3 shows the relationships between different areas of artificial intelligence. At the broadest level, everything falls under AI, with machine learning (ML) as a major subset. Inside ML, deep learning (DL), generative AI (Gen AI), and LLMs are shown as nested areas, reflecting their strong connection. Reinforcement learning (RL) also sits within ML, but as a separate region. Agentic AI overlaps with these technologies, as it draws on both LLMs and RL, while also sharing ideas with MAS [77,78]. Autonomic computing is placed outside, showing that it is related to but not fully inside the AI family; it focuses on self-managing systems that can configure, heal, optimize, and protect themselves, which overlaps with AI but also extends into broader fields such as distributed systems and IT infrastructure.

Agentic AI builds heavily on LLMs and RL. LLMs give agents the ability to understand and generate natural language, enabling them to reason, plan, and interact in ways that feel human-like. RL, meanwhile, allows agents to learn from their actions and continually improve their decisions, making them adaptive and focused on achieving goals [31,79]. Together, LLMs provide intelligence in communication and knowledge, while RL contributes the ability to learn from experience. This combination is at the heart of how agentic AI systems can plan tasks, solve problems, and act independently.

The overlap between MAS and agentic AI is also important. MAS focus on how multiple autonomous agents interact, cooperate, or compete within a shared environment. These skills are essential for agentic AI, where several agents often need to coordinate to achieve complex objectives. Beyond using LLMs and RL, agentic AI depends on planning algorithms and memory systems, which help agents remember past experiences and develop long-term strategies. Altogether, these components form the foundation of agentic AI, allowing it to act not just as a tool but as an intelligent, goal-driven partner.

3.2. To What Extent Do Current LLM-Based and Non-LLM-Driven Agentic Systems, Tools, and Frameworks Enable Agentic Capabilities?

3.2.1. LLM-Based Agentic Systems

The development of LLM-based frameworks like LangChain [26,31,78,80], AutoGPT [26,31,78], BabyAGI [31], AutoGen [80], and OpenAgents [26] shows a clear trend toward building smarter, more independent AI systems. These frameworks give AI agents the ability to plan, remember past actions, reflect on their performance, pursue goals, and use external tools. By equipping agents with these cognitive skills, they become more adaptable and capable of handling complex tasks with minimal human guidance.

Table 3 presents an organized overview of major LLM-based frameworks, highlighting each framework’s primary purpose, key agentic capabilities, and underlying LLM. Structuring this information in tabular form makes complex details easier to navigate and facilitates side-by-side comparison, enabling the identification of unique features as well as areas of overlap in agentic capabilities.

Table 3.

Agentic capabilities across current LLM-based frameworks.

LangChain is a widely used open-source framework that connects LLMs to external tools, data sources, and APIs, simplifying the construction of complex multi-step workflows [26,31,78,79]. It allows developers to build sequences of reasoning steps (i.e., chains) that help the model reach an objective. LangChain provides basic memory for storing and recalling information and can integrate external knowledge through Retrieval-Augmented Generation (RAG), which gives agents access to documents, databases, or search results while they reason [77,80]. While it facilitates task planning and chaining, it does not natively include self-reflection or optimization, so its intelligence is largely guided by the developer. LangChain is well suited to structured, goal-oriented workflows in which models interact with external systems and perform contextual reasoning.
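The chaining and RAG pattern described above can be illustrated in plain Python. This is a framework-agnostic sketch and does not use LangChain’s actual API; the keyword-overlap retriever, the documents, and `fake_llm` are hypothetical stand-ins for an embedding index and a model call.

```python
import re

# Hypothetical knowledge base that the retriever searches.
DOCS = [
    "Refund requests over USD 500 require manager approval.",
    "Standard shipping takes 3-5 business days.",
    "Refunds are issued to the original payment method.",
]


def tokens(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, docs, k=2):
    """Toy retriever: rank documents by word overlap with the query
    (a production RAG pipeline would typically use embeddings)."""
    q = tokens(query)
    return sorted(docs, key=lambda d: len(q & tokens(d)), reverse=True)[:k]


def chain(*steps):
    """Compose steps left to right; each step's output feeds the next."""
    def run(x):
        for step in steps:
            x = step(x)
        return x
    return run


def add_context(query):
    return {"query": query, "context": retrieve(query, DOCS)}


def build_prompt(inputs):
    ctx = "\n".join(f"- {c}" for c in inputs["context"])
    return f"Answer using only this context:\n{ctx}\nQuestion: {inputs['query']}"


def fake_llm(prompt):
    """Hypothetical stand-in for a model call: returns the top-ranked context line."""
    return prompt.splitlines()[1].removeprefix("- ")


rag = chain(add_context, build_prompt, fake_llm)
print(rag("How are refunds issued?"))  # Refunds are issued to the original payment method.
```

The retrieval step only changes what the model sees in its prompt; the chain itself is a fixed sequence chosen by the developer, which matches the point that such workflows are largely developer-guided.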

AutoGPT is built as a fully autonomous agent capable of completing complex tasks without needing constant human guidance [43,45,47]. It can take high-level goals, break them down into smaller actionable steps, and adjust its plan dynamically as circumstances change. With both short-term and long-term memory, AutoGPT keeps track of context and can handle tasks that go beyond a single interaction. It also leverages tools like web search, code execution, and file management to operate effectively in real-world settings [29,44]. Through its iterative process, AutoGPT can analyze outcomes and refine its actions, combining planning, memory, reflection, and goal-oriented execution to manage tasks autonomously.

BabyAGI takes a slightly different approach to autonomous agents, emphasizing simplicity and accessibility for task generation [49,81]. It can decompose high-level goals into smaller, actionable tasks and uses memory to track progress on a multi-step task. BabyAGI has extensions for working with external tools, such as those for code generation and robotics, so it can act in both digital and physical environments. Although it lacks an explicit reflection ability like AutoGPT’s, it is iterative: it can execute a task, receive feedback, and refine or adopt new actions over time, which gives it a practical level of self-correction for goal achievement [82]. BabyAGI enables developers to implement autonomous problem-solving agents of moderate complexity.
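A minimal sketch of this task-list loop, loosely modeled on BabyAGI’s cycle of executing a task, creating follow-up tasks, and reprioritizing the queue. The three helper functions are hypothetical stand-ins for LLM calls, and the decomposition table and priorities are invented for illustration.

```python
from collections import deque

# Hypothetical decomposition table standing in for the task-creation LLM call.
FOLLOW_UPS = {
    "research topic": ["collect sources", "summarize sources"],
    "summarize sources": ["write report"],
}
PRIORITY = {"collect sources": 0, "summarize sources": 1, "write report": 2}


def execute(task, objective, memory):
    """Stand-in for the execution call; a real agent would prompt an LLM
    with the objective, the task, and relevant memory."""
    return f"result of {task!r} for {objective!r}"


def create_tasks(task, result, done):
    """Stand-in for task creation: propose follow-ups not yet completed."""
    return [t for t in FOLLOW_UPS.get(task, []) if t not in done]


def prioritize(tasks):
    """Stand-in for prioritization: reorder the pending tasks."""
    return deque(sorted(tasks, key=lambda t: PRIORITY.get(t, 99)))


def run(objective, first_task, max_iters=10):
    queue, memory, done = deque([first_task]), [], set()
    while queue and len(done) < max_iters:
        task = queue.popleft()
        result = execute(task, objective, memory)
        memory.append((task, result))  # progress record for the multi-step task
        done.add(task)
        new = [t for t in create_tasks(task, result, done) if t not in queue]
        queue = prioritize(list(queue) + new)
    return memory


for task, _ in run("write a short report", "research topic"):
    print(task)  # research topic, collect sources, summarize sources, write report
```

The `max_iters` cap is a simple safeguard against runaway task generation, which is a known practical concern for self-expanding task queues.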

AutoGen is designed for multi-agent systems, allowing several AI agents to communicate, collaborate, and work together to solve tasks [41]. Each agent can create its own plan while coordinating with others, using memory to track past actions and shared context. The framework supports reflection and optimization, so agents can evaluate their plans and improve them over time. By combining tool use with teamwork, AutoGen reduces errors, boosts performance, and can tackle complex, distributed goals more effectively. This makes it especially useful for research or applications that rely on multi-agent reasoning and collaborative workflows.
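The reflection pattern described for AutoGen, where one agent proposes, another evaluates, and the first revises using shared context, can be sketched without any framework. This is not AutoGen’s API; both agents are hypothetical rule-based stand-ins for LLM-backed agents, and the word-limit check is an invented acceptance criterion.

```python
from dataclasses import dataclass


@dataclass
class Message:
    sender: str
    content: str


def writer(history, task):
    """Hypothetical writer agent: drafts, then revises once the critic objects."""
    draft = task["draft"]
    if any(m.sender == "critic" for m in history):
        draft = " ".join(draft.split()[: task["max_words"]])  # crude revision
    return Message("writer", draft)


def critic(history, task):
    """Hypothetical critic agent: approves drafts within the word limit."""
    n = len(history[-1].content.split())
    if n <= task["max_words"]:
        return Message("critic", "APPROVED")
    return Message("critic", f"Too long: {n} words (limit {task['max_words']}).")


def converse(task, max_rounds=3):
    history = []  # shared context visible to both agents
    for _ in range(max_rounds):
        history.append(writer(history, task))
        history.append(critic(history, task))
        if history[-1].content == "APPROVED":
            break
    return history


task = {"draft": "Agents plan act and reflect to reach goals with little supervision today",
        "max_words": 8}
for m in converse(task):
    print(f"{m.sender}: {m.content}")
```

The round limit bounds the conversation even if the agents never agree, a simple guard for multi-agent loops.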

OpenAgents focuses on developing LLM-based agents that can work together in a shared ecosystem to accomplish complex goals [26,78,79]. The framework uses contextual memory to keep interactions coherent over multiple steps, and it supports iterative planning and reasoning so agents can refine their decisions over time. By integrating external tools, agents can carry out tasks effectively while combining internal reasoning with knowledge from outside sources [31,77,80]. OpenAgents also lets agents improve themselves through repeated reasoning cycles and supports collaboration among multiple agents on tasks that require continuous decision-making, dynamic problem-solving, or integrating diverse sources of knowledge.

Communicative Agents for “Mind” Exploration of Large-Scale Language Model Society (CAMEL) is a multi-agent role-playing framework in which LLM agents converse with each other so that researchers can observe the behaviors that emerge. To solve tasks, it assigns complementary roles (such as “User” and “Assistant”) and promotes dialogue-based collaboration [83,87]. CAMEL facilitates autonomous reasoning, negotiation, and iterative solution refinement by breaking objectives down into conversational exchanges. In contrast to conventional single-agent systems, CAMEL uses the interactions among multiple agents to produce more complex results. Most implementations use GPT-4 and Large Language Model Meta AI (LLaMA) as the underlying models, though it can operate on other LLMs. In constrained or open-ended environments, CAMEL is especially helpful for studying agent coordination, cooperation, and emergent problem-solving.

MetaGPT is an advanced framework for multi-agent collaborative problem-solving that leverages GPT-4 as a central intelligent agent. It automates tasks that can be represented as a series of smaller sub-problems by breaking each problem into sub-problems, automatically assigning them to agents based on their specialization, and coordinating the agents’ interactions to solve the overall problem [84,87]. By managing an organized “society” of agents, MetaGPT can achieve efficiency and scalability in solving challenging problems. MetaGPT can be used to automate workflows or research or to support decision-making and reasoning tasks that draw on diverse expertise and collaborative reasoning. It is designed for seamless communication and the management of dynamically complex tasks among multiple agents, illustrating an advanced form of AI-enabled collaboration.
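The decompose-then-assign-by-specialization idea described for MetaGPT can be sketched as a small routing step. This is not MetaGPT’s code or API; the role names, sub-problem types, and fixed decomposition are illustrative assumptions, and a real system would generate the sub-problems with an LLM.

```python
# Illustrative role registry: each agent lists the sub-problem types it specializes in.
AGENTS = {
    "product_manager": {"requirements"},
    "architect": {"design"},
    "engineer": {"code"},
    "qa_engineer": {"test"},
}


def decompose(problem):
    """Hypothetical decomposition into typed sub-problems; an LLM would produce these."""
    return [("requirements", f"write requirements for {problem}"),
            ("design", "design the modules"),
            ("code", "implement the modules"),
            ("test", "write unit tests")]


def assign(subproblems, agents):
    """Route each sub-problem to the first agent specialized in its type."""
    plan = []
    for kind, description in subproblems:
        owner = next((name for name, skills in agents.items() if kind in skills), None)
        if owner is None:
            raise ValueError(f"no agent specializes in {kind!r}")
        plan.append((owner, description))
    return plan


def execute_plan(plan):
    """Run assignments in order; each agent appends to a shared artifact that
    later agents can read (a simple stand-in for coordinated hand-offs)."""
    artifact = []
    for owner, description in plan:
        artifact.append(f"[{owner}] {description}")
    return "\n".join(artifact)


print(execute_plan(assign(decompose("a to-do app"), AGENTS)))
```

Failing loudly when no agent matches a sub-problem type keeps gaps in the agent roster visible instead of silently dropping work.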

SuperAGI is an open-source framework for autonomous agents that lets developers quickly define, deploy, and manage LLM-powered agents with considerable flexibility. The software is largely model-agnostic and supports LLMs from many providers (e.g., LLaMA, Anthropic, and OpenAI models). SuperAGI agents support goal-directed execution, memory management, multi-step planning, and tool use, among other capabilities [85]. In addition, SuperAGI includes resource controls and monitoring to constrain autonomous behavior. Unlike lighter-weight orchestration tools, SuperAGI is oriented toward production readiness, allowing agents to be scaled and monitored within real-world workflows. Its modularity and extensibility make it a strong candidate for enterprise-level autonomous agent applications.
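The general idea of resource controls can be illustrated with a small guard object that charges every agent action against step and token budgets. SuperAGI’s own configuration options are not reproduced here; `ResourceGuard`, `run_with_guard`, and the per-action token estimates are hypothetical.

```python
class BudgetExceeded(RuntimeError):
    """Raised when an agent run would exceed its resource limits."""


class ResourceGuard:
    """Hypothetical resource controller: caps the steps and token spend of an
    autonomous run and logs every charged action for monitoring."""

    def __init__(self, max_steps, max_tokens):
        self.max_steps, self.max_tokens = max_steps, max_tokens
        self.steps = 0
        self.tokens = 0
        self.log = []

    def charge(self, action, tokens):
        if self.steps + 1 > self.max_steps:
            raise BudgetExceeded(f"step limit {self.max_steps} reached before {action!r}")
        if self.tokens + tokens > self.max_tokens:
            raise BudgetExceeded(f"token limit {self.max_tokens} would be exceeded by {action!r}")
        self.steps += 1
        self.tokens += tokens
        self.log.append((action, tokens))


def run_with_guard(actions, guard):
    """Charge each (action, estimated_tokens) pair before running it; halt on violation."""
    try:
        for action, cost in actions:
            guard.charge(action, cost)
            # ... the action itself would execute here ...
        return "completed"
    except BudgetExceeded as err:
        return f"halted: {err}"


guard = ResourceGuard(max_steps=5, max_tokens=1000)
print(run_with_guard([("search", 300), ("summarize", 500), ("draft", 400)], guard))
# halted: token limit 1000 would be exceeded by 'draft'
```

Checking the budget before an action runs, rather than after, means an over-budget step is refused instead of being discovered once its cost has already been incurred.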

A hybrid framework called Task-Based Cognitive Sequential Planning Network (TB-CSPN) blends selective LLM use with formal rule-based coordination [86]. Its architecture limits LLM involvement to semantic topic extraction and uses Colored Petri Nets for deterministic task planning and execution. This design keeps LLM hallucinations and non-determinism out of task planning and execution, ensuring that reasoning and sequencing are logically consistent and verifiable. Instead of relying on unrestricted LLM reasoning, TB-CSPN agents communicate through structured tokens, and their interactions are guided by formal cognitive models. In contrast to frameworks that rely mainly on language models for planning, TB-CSPN demonstrates a more cautious integration of AI, balancing LLM flexibility with symbolic rigor. It works particularly well in fields that require topic-driven task coordination, auditability, and dependability.
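To illustrate why Petri-net-based coordination is deterministic and auditable, here is a minimal colored Petri net interpreter in Python. It is a simplified sketch (first-match bindings, a fixed transition priority order), not TB-CSPN’s implementation; the place names, topic tokens, and agents are hypothetical, with the topic tokens standing in for the output of an upstream LLM extraction step.

```python
from dataclasses import dataclass
from itertools import product
from typing import Callable


@dataclass
class Transition:
    name: str
    inputs: list        # places that each contribute one token
    outputs: list       # places that each receive one produced token
    guard: Callable     # predicate over the bound input tokens (their "colors")
    produce: Callable   # maps bound tokens to one token per output place


def find_binding(t, marking):
    """Return the first combination of input tokens that satisfies the guard."""
    for combo in product(*(marking[p] for p in t.inputs)):
        if t.guard(combo):
            return combo
    return None


def fire(t, marking, combo):
    for place, token in zip(t.inputs, combo):
        marking[place].remove(token)
    for place, token in zip(t.outputs, t.produce(combo)):
        marking[place].append(token)


def simulate(transitions, marking, max_steps=100):
    """Fire the first enabled transition in a fixed priority order until none is
    enabled, so the same initial marking always yields the same trace."""
    trace = []
    for _ in range(max_steps):
        for t in transitions:
            combo = find_binding(t, marking)
            if combo is not None:
                fire(t, marking, combo)
                trace.append(t.name)
                break
        else:
            break  # no transition enabled
    return trace


marking = {
    "topics": [("billing", "t1"), ("shipping", "t2")],  # (topic color, task id)
    "billing_agents": ["agentA"],
    "assigned": [],
    "done": [],
}
net = [
    Transition("complete", ["assigned"], ["done", "billing_agents"],
               guard=lambda c: True,
               produce=lambda c: [c[0][0], c[0][1]]),   # finish task, release agent
    Transition("assign_billing", ["topics", "billing_agents"], ["assigned"],
               guard=lambda c: c[0][0] == "billing",     # color check on the topic
               produce=lambda c: [(c[0][1], c[1])]),
]
print(simulate(net, marking))  # ['assign_billing', 'complete']
```

The shipping topic is never consumed because no transition’s guard accepts it; the resulting trace and final marking make that gap explicit, which is the kind of auditability the formal approach aims for.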

3.2.2. Non-LLM-Driven Agentic Systems

Intelligent agents can act and make decisions without large language models. Non-LLM agentic systems achieve autonomy through rules, learning, planning, or reactive behaviors and have been used extensively in robotics, simulations, and automated decision-making. These systems show that AI can be effective and adaptive without natural language understanding.

One important type is rule-based multi-agent systems, in which agents follow predefined rules or logic to interact and achieve coordinated outcomes. Examples include CLIPS, Drools, and JADE [88], which are used for simulations, workflow automation, and decision-support tasks. Another key category is RL agents [89,90], which learn through trial and error. Using algorithms such as Q-learning, Deep Q-Networks (DQN) [91], and policy gradient methods, RL agents improve their performance over time by interacting with their environment. These agents are commonly used in robotics, autonomous vehicles, and dynamic systems.
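As a minimal illustration of trial-and-error learning, the sketch below runs tabular Q-learning on a hypothetical five-cell corridor in which the agent is rewarded only for reaching the rightmost cell. The environment and hyperparameters are assumptions chosen for readability; DQN replaces the table with a neural network, and policy gradient methods optimize a parameterized policy directly instead of learning action values.

```python
import random

N_STATES, GOAL = 5, 4
MOVES = (-1, +1)  # action 0 = left, action 1 = right along a 1-D corridor


def step(state, action):
    """Environment: move, clamp to the corridor, reward 1 on reaching the goal."""
    nxt = min(max(state + MOVES[action], 0), N_STATES - 1)
    return nxt, (1.0 if nxt == GOAL else 0.0), nxt == GOAL


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        s, done = 0, False
        while not done:
            # epsilon-greedy selection; ties (e.g. untried states) are broken at random
            if rng.random() < epsilon or q[s][0] == q[s][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[s][0] > q[s][1] else 1
            s2, r, done = step(s, a)
            target = r if done else r + gamma * max(q[s2])
            q[s][a] += alpha * (target - q[s][a])  # temporal-difference update
            s = s2
    return q


q = q_learning()
policy = ["right" if qs[1] > qs[0] else "left" for qs in q[:GOAL]]
print(policy)  # ['right', 'right', 'right', 'right']
```

The learned values for moving right approach 1.0, 0.9, 0.81, and 0.729 from the goal backward, which is the discount factor applied once per remaining step.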

Other non-LLM agentic systems include planning agents, which use formalisms such as STRIPS or PDDL [92] to represent actions and search for plans that achieve goals; agent-based simulation frameworks like NetLogo [93] and MASON [94], which model complex social, ecological, or economic behaviors; and behavior-based robotic agents, such as those using subsumption architectures, which respond to environmental stimuli through simple sensorimotor rules. Together, these systems demonstrate that intelligence, adaptability, and autonomous behavior can emerge through rules, learning, and planning, highlighting the broad potential of agentic AI beyond language-based models.
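STRIPS describes each action by its preconditions, add list, and delete list, and a planning agent searches the resulting state space for an action sequence that satisfies the goal. The sketch below uses plain breadth-first forward search over a hypothetical two-room delivery domain. `make_action`, `plan`, and the fact names are illustrative. It does not parse PDDL, and practical planners use heuristic search rather than exhaustive BFS.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    name: str
    pre: frozenset      # facts that must hold before the action
    add: frozenset      # facts the action makes true
    delete: frozenset   # facts the action makes false


def make_action(name, pre, add, delete=()):
    return Action(name, frozenset(pre), frozenset(add), frozenset(delete))


def plan(init, goal, actions):
    """Breadth-first forward search over STRIPS states; returns a shortest plan or None."""
    start, goal = frozenset(init), frozenset(goal)
    frontier = deque([(start, [])])
    seen = {start}
    while frontier:
        state, path = frontier.popleft()
        if goal <= state:                        # every goal fact holds
            return path
        for act in actions:
            if act.pre <= state:                 # action is applicable in this state
                nxt = (state - act.delete) | act.add
                if nxt not in seen:
                    seen.add(nxt)
                    frontier.append((nxt, path + [act.name]))
    return None                                  # goal unreachable


# Hypothetical domain: a robot carries a package from room A to room B.
ACTIONS = [
    make_action("move_A_B", {"robot_at_A"}, {"robot_at_B"}, {"robot_at_A"}),
    make_action("move_B_A", {"robot_at_B"}, {"robot_at_A"}, {"robot_at_B"}),
    make_action("pick_A", {"robot_at_A", "pkg_at_A"}, {"holding"}, {"pkg_at_A"}),
    make_action("drop_B", {"robot_at_B", "holding"}, {"pkg_at_B"}, {"holding"}),
]
steps = plan({"robot_at_A", "pkg_at_A"}, {"pkg_at_B"}, ACTIONS)
# steps == ["pick_A", "move_A_B", "drop_B"]
```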

3.3. Core Components and Architectural Models for Agentic AI

This section defines a set of agent components (perception, world/state, memory, planning, execution/tooling, reflection/evaluation, orchestration, and interaction) and uses them to compare architectures by control flow and module wiring rather than by terminology. We then outline five architectural models, namely ReAct (Reasoning + Acting) single-agent, supervisor/hierarchical, hybrid reactive–deliberative, BDI, and layered neuro-symbolic, highlighting when to use each.

3.3.1. Core Functional Components of Agentic AI Systems

Core components are the reusable building blocks of agentic AI, wired together by architectural choices. A common set includes the following:

- Perception and world modeling—Ingests and structures external inputs (e.g., text, sensors, APIs) into internal representations [41,95].

- Memory (Short-Term, Long-Term, Episodic)—Stores short- and long-term knowledge; retrieval/promotion rules connect past to present reasoning [39,95].

- Planning, Reasoning, and Goal Decomposition—Transforms goals into actionable steps, evaluates alternatives, and selects next actions [95].

- Execution and Actuation—Carries out actions via APIs or actuators, with monitoring and dynamic replanning [41].

- Reflection and Evaluation—Enables self-critique, verification, and refinement of actions and plans [50].

- Communication, Orchestration, and Autonomy—Coordinates task flow, retries, and timeouts, either centrally (e.g., LLM-based supervisor) or via decentralized protocols [50,96].

This component stack recurs across both academic and industry implementations, including high-stakes domains like finance [39].

The model, typically an LLM, large agent model (LAM), or foundation model (FM), provides the system’s core intelligence. It often serves as the reasoning engine, perceptual front-end, and, in many cases, the orchestrator. In Multi-Agent Artificial Intelligence (MAAI) frameworks, the model forms a base layer for perception, action, orchestration, workflows, and user interaction [96]. Tutorials increasingly integrate LLMs/LAMs with planners, memory, tools, and knowledge bases [42].

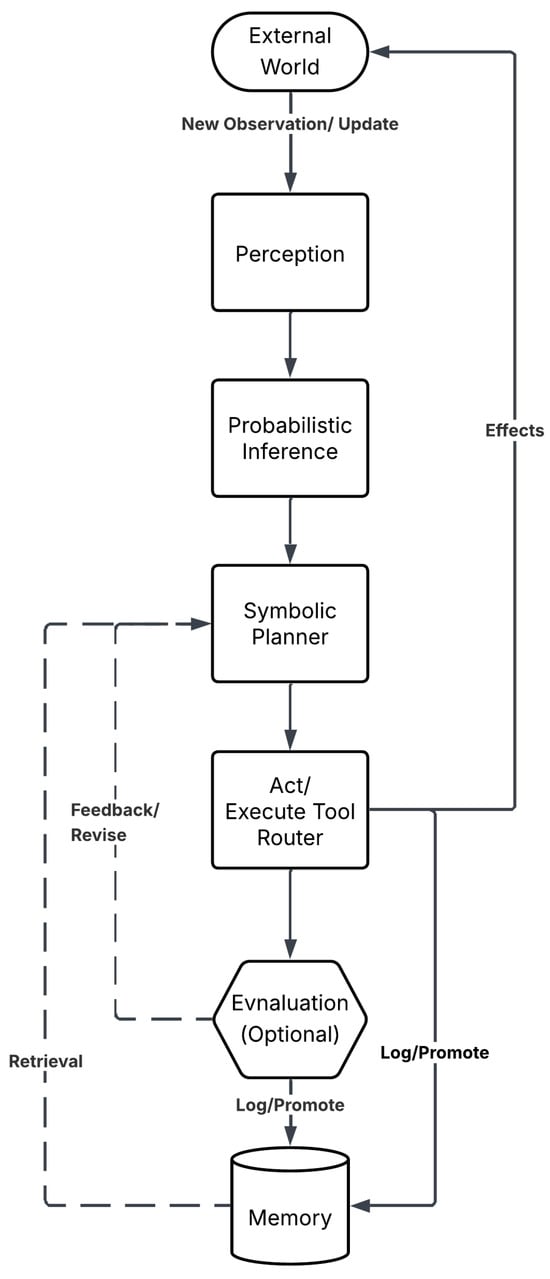

Agentic AI behavior arises from a coordinated set of reusable components rather than from a single model, as shown in Figure 4. In the following, we synthesize the modules and how they interact.

Figure 4.

Core components of an agentic AI system.

Perception and World Modeling: Perception ingests external inputs (text, events, sensors/APIs) and normalizes them into structured observations. World/state modeling maintains an internal representation used for prediction, consistency checks, and counterfactual simulation. In embodied or data-rich settings, perception is multimodal and frequently layered with probabilistic inference to manage uncertainty before symbolic planning or execution [41,42,95].

Memory (Short-Term, Long-Term, Episodic): Memory provides temporal continuity. Short-term memory (STM) maintains episode context (e.g., current plan, recent exchanges); long-term memory (LTM) stores episodic/semantic knowledge (e.g., preferences, histories, artifacts). Retrieval and promotion rules connect STM and LTM so that prior outcomes inform future decisions, reflection, and personalization [47,50,95].
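The STM/LTM split and a promotion rule can be sketched with a small class. `AgentMemory`, the importance threshold, and the word-overlap retrieval are illustrative assumptions. Production systems typically rank long-term records by embedding similarity rather than keyword overlap.

```python
from collections import deque


class AgentMemory:
    """Toy short-term/long-term memory with an importance-based promotion rule (hypothetical)."""

    def __init__(self, stm_size=3, promote_threshold=0.7):
        self.stm = deque(maxlen=stm_size)   # recent episode context; oldest items fall off
        self.ltm = []                       # persistent episodic/semantic records
        self.promote_threshold = promote_threshold

    def record(self, text, importance):
        self.stm.append((text, importance))
        # Promotion rule: sufficiently important items are copied into long-term memory
        if importance >= self.promote_threshold and text not in self.ltm:
            self.ltm.append(text)

    def retrieve(self, query, k=2):
        # Rank LTM entries by word overlap with the query (stand-in for vector search)
        q = set(query.lower().split())
        scored = [(len(q & set(t.lower().split())), t) for t in self.ltm]
        scored = [pair for pair in scored if pair[0] > 0]
        scored.sort(key=lambda pair: -pair[0])
        return [t for _, t in scored[:k]]

    def context(self):
        return [t for t, _ in self.stm]


mem = AgentMemory(stm_size=2)
mem.record("user prefers aisle seats", importance=0.9)
mem.record("weather is sunny", importance=0.2)
mem.record("budget is 1500 USD", importance=0.8)
# STM now holds only the two latest items; the aisle-seat preference survives in LTM
```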

Planning, Reasoning, and Goal Decomposition: The planning/reasoning module transforms goals into actionable steps, evaluates alternatives, and selects next actions across short and long horizons. Granularity varies by paradigm: BDI filters desires into intentions; hierarchical reinforcement learning (HRL) decomposes abstract goals into sub-tasks; single-agent ReAct interleaves reasoning with action (often with tool calls) [41,47,95].

Execution and Actuation: Execution bridges cognition to impact. It invokes tools/APIs, actuators, or workflow steps; validates outcomes against expectations; and triggers retries or replanning on deviation. Production-oriented variants emphasize schema checks, budget/latency limits, and robust error handling to support closed-loop operation in dynamic environments [41,42,95].
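A minimal sketch of closed-loop execution with a schema check, bounded retries, and a wall-clock budget follows. `execute_with_checks`, `ExecutionError`, and the `quote_flight` tool are hypothetical. The design choice illustrated is that a persistent deviation is raised to the caller, where a planner can replan or escalate, instead of being silently ignored.

```python
import time


class ExecutionError(Exception):
    """Raised when a tool call cannot be completed; the planner should replan or escalate."""


def execute_with_checks(tool, args, required_keys, max_retries=2, budget_s=1.0):
    """Call tool(**args), validate the result's keys, and retry within a time budget."""
    deadline = time.monotonic() + budget_s
    tries, last_error = 0, None
    while tries <= max_retries and time.monotonic() < deadline:
        tries += 1
        try:
            result = tool(**args)
        except Exception as exc:          # tool failure: remember it and retry
            last_error = exc
            continue
        missing = [k for k in required_keys if k not in result]
        if not missing:                   # schema check passed
            return result
        last_error = ExecutionError(f"missing keys: {missing}")
    raise ExecutionError(f"gave up after {tries} attempt(s): {last_error!r}")


# Hypothetical flaky tool: times out once, then returns a well-formed quote.
calls = {"n": 0}


def quote_flight(destination):
    calls["n"] += 1
    if calls["n"] == 1:
        raise TimeoutError("upstream timeout")
    return {"destination": destination, "price_usd": 420}


quote = execute_with_checks(quote_flight, {"destination": "Chicago"},
                            ["destination", "price_usd"])

# A tool whose output never matches the schema exhausts its retries and raises.
try:
    execute_with_checks(lambda: {}, {}, ["price_usd"])
    failed = False
except ExecutionError:
    failed = True
```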

Reflection and Evaluation: Reflection/evaluation modules verify intermediate or final outputs, critique candidate plans, and trigger selective replanning. Practical patterns include self-critique, external tool-assisted verification, and nested “critic” roles that reduce hallucination and improve reliability while adding computational overhead [50,95].
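The self-critique pattern reduces to a loop of critique and targeted revision. In the sketch below, `reflect_and_refine`, the budget critic, and the reviser are hypothetical stand-ins. In an LLM system the critic and reviser would usually be model calls or external verifiers, each adding the inference cost noted above. The walrus operator requires Python 3.8 or later.

```python
def reflect_and_refine(draft, critics, revise, max_rounds=3):
    """Critique a draft with every critic; revise while issues remain, up to max_rounds."""
    history = []                                   # issues found per round, kept for the trace
    for round_no in range(max_rounds + 1):
        issues = [msg for critic in critics if (msg := critic(draft))]
        history.append(issues)
        if not issues or round_no == max_rounds:   # verified, or revision budget spent
            return draft, history
        draft = revise(draft, issues)              # targeted fix driven by the critique


BUDGET_USD = 1500  # hypothetical constraint from the travel example


def budget_critic(plan):
    total = sum(plan.values())
    return f"over budget by {total - BUDGET_USD} USD" if total > BUDGET_USD else None


def drop_costliest_optional(plan, issues):
    # A real reviser would read `issues`; this toy simply drops the priciest optional item.
    optional = [k for k in plan if k not in ("flight", "hotel")]
    if not optional:
        return plan
    costliest = max(optional, key=plan.get)
    return {k: v for k, v in plan.items() if k != costliest}


final_plan, rounds = reflect_and_refine({"flight": 600, "hotel": 700, "tour": 400},
                                        [budget_critic], drop_costliest_optional)
```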

Communication, Orchestration, and Autonomy: Interaction modules support human–agent and agent–agent dialogue (clarification, negotiation, oversight) and surface trace information (e.g., actions, tools, sources) for transparency. Autonomy emerges when perception, planning, execution, memory, and reflection are orchestrated over time; in many systems, an LLM-based supervisor coordinates sub-agents, invokes memory or tools, and maintains coherence across steps [41,50,97].

3.3.2. Architectural Models in Agentic AI

Agentic AI systems are not monolithic; their capabilities emerge from architectures that coordinate planning, execution, memory, reasoning, and interaction. Below, we outline five commonly used models and their control flows.

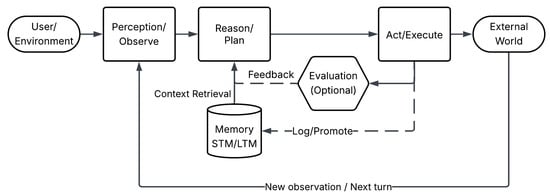

- ReAct Single-Agent:

Figure 5 shows the architecture flow of a ReAct single agent. ReAct instantiates a single agent that interleaves stepwise reasoning and acting, optionally inserting a lightweight evaluator before committing an output [98]. The core components are perception/world-state, planning/reasoning, execution and actuation, memory (STM/LTM), and reflection/evaluation; the architecture wires these into a minimal, locally orchestrated pipeline with no external supervisor. The agent observes via perception, reasons in plan/reason to select the next step, acts in execute/tools to affect the world or produce a user reply, may evaluate the result, and then updates memory before repeating. The goal is fast iteration on well-bounded tasks, where a compact loop can outperform heavier orchestration.

Figure 5.

ReAct single-agent loop.

Components and flow: Data and control proceed left to right: the agent observes (perception), optionally retrieves salient context from memory, reasons in plan/reason to select the next step with success criteria, acts in act/execute via a tool/API, and may evaluate the outcome before logging/promoting state and repeating. In the diagram, rounded rectangles denote processing modules (perception → reason/plan → act/execute) connected by solid arrows (primary progression); memory is shown as a cylinder with an upward arrow to reason/plan (retrieval) and dashed downward arrows from act/execute (and, if present, the evaluator) for logging/promotions; evaluation appears as a hexagon branching by a solid arrow from act/execute and returning dashed feedback to reason/plan and/or memory. User-visible responses are produced in act/execute; the loop closes at the environment boundary when the updated world or next user turn re-enters perception as a new observation.

Use and trade-offs: Interleaving reasoning with action tends to improve multi-step task success, and brief critique/verification further suppresses hallucinations; both, however, introduce extra inference steps and wall-clock latency. ReAct is therefore a strong baseline for bounded, single-persona workloads and rapid prototyping. Its simplicity does not scale gracefully: the loop affords little parallelism, weak long-horizon coordination, and increasing tool-selection brittleness as the tool surface and dependency depth grow. For tasks requiring persistent goals, specialization, or oversight, escalation to supervisor/hierarchical (delegation and parallel execution) or Hybrid reactive–deliberative (real-time reflexes with planning) is appropriate despite added coordination overhead [98].
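The ReAct loop in Figure 5 can be sketched in a few lines, assuming a `policy` function that stands in for the LLM and returns a (thought, action, argument) triple. `react_loop`, `scripted_policy`, and the `calc` tool are hypothetical, and the optional evaluator is omitted for brevity. A real agent would prompt a model with the accumulated trace at each step.

```python
def react_loop(task, policy, tools, max_steps=5):
    """Interleave reason -> act -> observe until the policy emits a 'finish' action."""
    trace = []                               # memory of (thought, action, arg, observation)
    observation = task
    for _ in range(max_steps):
        thought, action, arg = policy(observation, trace)   # reason/plan the next step
        if action == "finish":
            return arg, trace                # user-visible answer
        result = tools[action](arg)          # act/execute through a tool
        trace.append((thought, action, arg, result))        # log the step to memory
        observation = result                 # the outcome re-enters as the next observation
    return None, trace                       # step budget exhausted without an answer


def scripted_policy(observation, trace):
    """Stand-in for an LLM: chooses the next step from the trace so far."""
    if not trace:
        return ("Compute 17 * 3 first", "calc", "17 * 3")
    if len(trace) == 1:
        return ("Now add 4", "calc", f"{observation} + 4")
    return ("Answer is ready", "finish", observation)


def calc(expr):
    """Tiny calculator tool for 'a op b' with op in {+, *}."""
    a, op, b = expr.split()
    return int(a) + int(b) if op == "+" else int(a) * int(b)


answer, trace = react_loop("What is 17 * 3 + 4?", scripted_policy, {"calc": calc})
```

Each pass through the loop costs one policy call, which is where the added latency discussed above comes from.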

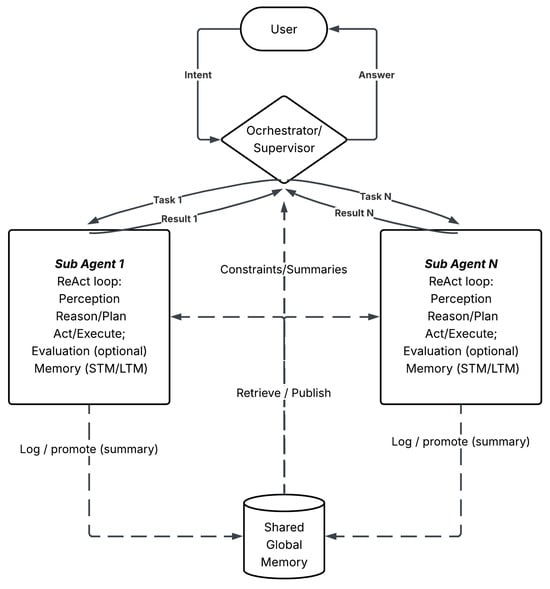

- Supervisor/Hierarchical:

A supervisor/orchestrator, as shown in Figure 6, decomposes high-level goals into subtasks and delegates them to role-specialized sub-agents. Each sub-agent executes locally using a compact ReAct-style loop (perception → reason/plan → act/execute, with optional evaluation and memory), while an optional shared/federated state maintains coherence across agents [47,50,95].

Figure 6.

Supervisor/hierarchical architecture.

Components and flow: Operationally, control proceeds as planning → delegation → execution → reporting → possible re-planning. The supervisor (diamond) issues assignments (solid progression) and receives reports (dashed) that trigger retries or re-planning when outcomes deviate. Within each sub-agent, memory supports retrieval (solid) into planning and accepts logging/promotions (dashed) from execution/evaluation; evaluation appears as a hexagon branching from act/execute, feeding feedback into planning/memory.

Use and trade-offs: This architecture is well-suited to decomposable, multi-stage tasks that benefit from parallel specialization and clear ownership; it improves scalability via hierarchical delegation but incurs coordination overhead and risks a supervisor bottleneck/single point of failure if the controller is not engineered for throughput, fault-tolerance, and observability [47,50,95].
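The supervisor's planning → delegation → execution → reporting → re-planning cycle can be sketched as follows. `supervise`, `plan_trip`, and the stub sub-agents are hypothetical. Each stub returns an `(ok, output)` pair in place of a full ReAct loop, and a subtask that still fails after its retries is escalated for re-planning or human review.

```python
def supervise(goal, decompose, agents, max_retries=1):
    """Supervisor loop: plan, delegate, collect reports, retry, then escalate."""
    reports = {}
    for subtask, role in decompose(goal):            # planning: split goal into owned subtasks
        for _ in range(max_retries + 1):
            ok, output = agents[role](subtask)      # delegation: sub-agent executes locally
            if ok:
                reports[subtask] = output           # report back to the supervisor
                break
        else:                                       # loop ended without break: all attempts failed
            reports[subtask] = f"ESCALATE: {output}"
    return reports


def plan_trip(goal):
    """Hypothetical decomposition of the travel goal into (subtask, role) pairs."""
    return [("book flight", "flights"), ("book hotel", "hotels")]


attempts = {"flights": 0}


def flight_agent(task):
    attempts["flights"] += 1
    if attempts["flights"] == 1:
        return False, "timeout"                     # first attempt fails, retry succeeds
    return True, "flight-A 420 USD"


def hotel_agent(task):
    return False, "no rooms under budget"           # always fails, so it is escalated


reports = supervise("plan a 3-day trip to Chicago", plan_trip,
                    {"flights": flight_agent, "hotels": hotel_agent})
```

Because every subtask passes through `supervise`, this function is also the bottleneck and single point of failure described above.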

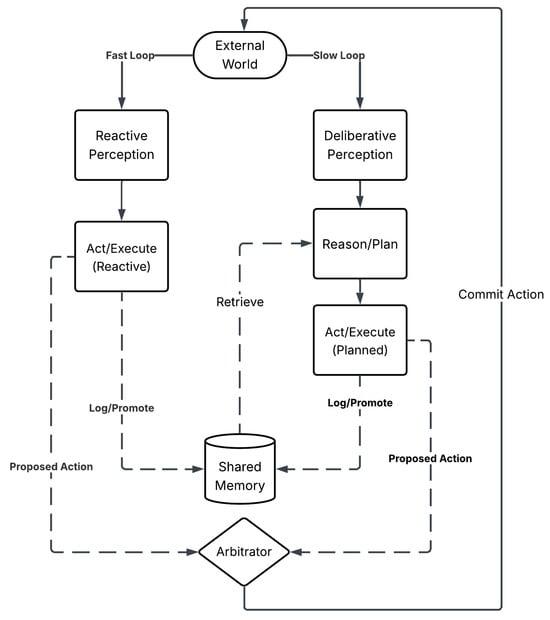

- Hybrid reactive–deliberative:

A hybrid architecture, shown in Figure 7, runs two coordinated loops in parallel: a fast reactive loop that handles time-critical events and a slower deliberative loop that maintains goals and plans. The loops share a common memory and are overseen by an Arbitrator (diamond) that resolves conflicts when immediate reactions and long-horizon plans disagree [38,47,95].

Figure 7.

Hybrid reactive–deliberative.

Components and flow: The reactive path couples perception of events to actions/actuation with minimal latency; safety/guard checks are typically embedded here. The deliberative path performs planning/reasoning over longer horizons, updating goals and selecting action sequences; it reads from and writes to memory (cylinder) to maintain temporal coherence. A shared memory module synchronizes state between the loops. The Arbitrator (diamond) mediates which loop has control when their recommendations diverge, using task/goal priorities and policy constraints. Flow proceeds: perceive (reactive and abstract inputs) → (branch) fast react vs. slower plan → arbitrate (diamond) → execute → evaluate (hexagon) → update memory → possibly re-plan.

Use and trade-offs: The hybrid model balances speed with strategic reasoning, but this comes with arbitration complexity and a requirement for reliable state synchronization across loops to avoid instability or “thrash.” Empirically and in surveyed practice, it is preferred for real-time operations with long-horizon objectives; risks concentrate in arbitration design and consistency across loops [38,47,95].
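The sketch below simulates both loops and the Arbitrator in a single tick-based loop, assuming a simple priority policy in which any matching safety reflex preempts the plan. `run_hybrid`, `arbitrate`, and the event and action names are hypothetical. A real system would run the loops concurrently at different rates and synchronize them through the shared memory.

```python
def arbitrate(reflex, plan_step):
    """Arbitrator: safety reflexes preempt the deliberative plan; otherwise follow the plan."""
    if reflex is not None:
        return reflex, False
    if plan_step is not None:
        return plan_step, True
    return "idle", False


def run_hybrid(events, plan, reflexes):
    shared = {"plan_index": 0, "log": []}           # shared memory used by both loops
    for event in events:
        reflex = reflexes.get(event)                # reactive loop: constant-time lookup
        i = shared["plan_index"]
        plan_step = plan[i] if i < len(plan) else None   # deliberative loop: next planned step
        action, advanced = arbitrate(reflex, plan_step)
        if advanced:
            shared["plan_index"] += 1               # the plan progresses only when its step runs
        shared["log"].append(action)
    return shared["log"]


log = run_hybrid(
    events=["clear", "obstacle", "clear", "clear", "clear"],
    plan=["move_to_shelf", "pick_item", "return_to_base"],
    reflexes={"obstacle": "emergency_stop"},
)
```

The plan index lives in shared memory so that a preempted step is resumed rather than skipped, which is the state-synchronization concern raised above.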

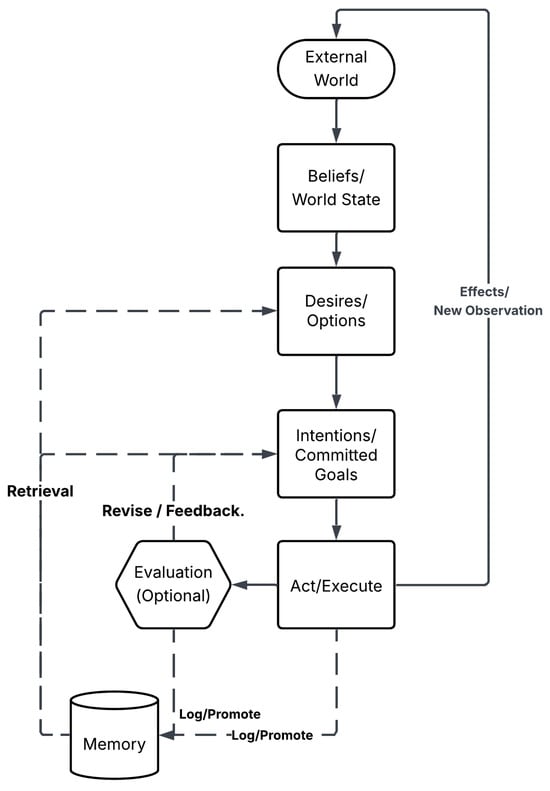

- Belief–Desire–Intention (BDI):

The BDI architecture, shown in Figure 8, models deliberation with explicit mental states: beliefs (world information/state), desires (candidate goals), and intentions (the subset of goals the agent commits to pursue). A canonical cycle is observe → update beliefs → generate desires → filter to intentions → act → repeat; commitment rules maintain intentions until a goal is achieved, becomes impossible, or is superseded [38,95].

Figure 8.

Belief–desire–intention (BDI) architecture.

Components and flow: External world inputs are observed and update beliefs/world state. The agent derives desires and filters them into intentions according to domain rules and commitment policies. Act/execute realizes the current intention via tools/APIs/actuators, producing effects on the external world. An optional evaluation gate verifies outcomes or constraints and can feed revisions back to the desire set or commitment filter. Memory supplies retrieval to the desire/intention stage (schemas, facts, episodic traces) and receives logs/promotions from act/execute and evaluation (summaries, artifacts, outcomes). Control proceeds top→down, world → beliefs → desires → intentions → act/execute, followed by evaluation and memory update; the loop closes when the changed world yields the next observation back into beliefs [38,95].

Use and trade-offs: BDI is a strong fit for tasks where explainability, goal discipline, and traceability matter (e.g., auditability or safety cases). It trades some adaptability for clarity: commitment rules and symbolic state make reasoning legible but can become brittle in open-world settings without robust belief-revision and exception policies [47,50,95].
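The following is a minimal sketch of the BDI cycle with the three commitment rules: an intention is dropped when it is achieved, when it becomes impossible, or when a higher-priority desire supersedes it. `BDIAgent` and its goal table, where each goal is a (priority, applicable, achieved, action) tuple, are hypothetical. BDI platforms express the same ideas through plan libraries and event handlers.

```python
class BDIAgent:
    """Minimal BDI loop: update beliefs -> generate desires -> filter to an intention -> act."""

    def __init__(self, goals):
        # goals: name -> (priority, applicable(beliefs), achieved(beliefs), action)
        self.goals = goals
        self.beliefs = {}
        self.intention = None                        # the goal currently committed to

    def step(self, percept):
        self.beliefs.update(percept)                 # 1. belief revision
        b = self.beliefs
        if self.intention is not None:
            _, applicable, achieved, _ = self.goals[self.intention]
            if achieved(b) or not applicable(b):     # commitment ends: achieved or impossible
                self.intention = None
        desires = [name for name, (_, applicable, achieved, _) in self.goals.items()
                   if applicable(b) and not achieved(b)]        # 2. candidate goals
        if desires:
            best = max(desires, key=lambda name: self.goals[name][0])
            current = (self.goals[self.intention][0]
                       if self.intention is not None else float("-inf"))
            if self.goals[best][0] > current:        # 3. commit, or supersede a lower priority
                self.intention = best
        if self.intention is None:
            return "idle"
        return self.goals[self.intention][3]         # 4. act on the current intention


agent = BDIAgent({
    "keep_battery_safe": (2, lambda b: True,
                          lambda b: b.get("battery", 100) >= 20, "go_to_dock"),
    "deliver_parcel": (1, lambda b: b.get("has_parcel", False),
                       lambda b: b.get("delivered", False), "drive_to_customer"),
})
percepts = [
    {"battery": 80, "has_parcel": True, "delivered": False},
    {"battery": 15},        # low battery: the recharge goal supersedes delivery
    {"battery": 95},        # recharged: resume delivery
    {"delivered": True},    # delivery achieved: nothing left to pursue
]
actions = [agent.step(p) for p in percepts]
```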

- Layered Decision (Neuro-Symbolic):

Layered decision architectures, shown in Figure 9, integrate neural perception and probabilistic inference with a symbolic planner and an execution layer, typically followed by evaluation and memory update. The aim is to handle uncertainty via statistical inference while preserving interpretability and traceable decisions through symbolic planning [38,39,41,95].

Figure 9.

Layered decision (neuro-symbolic) architecture.

Components and flow: Perception ingests signals (text, images, telemetry) and encodes features. Probabilistic inference converts these into calibrated state estimates and event hypotheses, exposing uncertainty to discrete reasoning. The symbolic planner operates on this structured state with explicit rules, goals, and constraints; it retrieves schemas, facts, and episodic traces from memory. Act/execute materializes the chosen step via tools/APIs/actuators, producing effects on the external world. An optional evaluation stage performs verification (e.g., constraint checks, cross-model critics), returning feedback to the planner and logging/promoting artifacts to memory; act/execute likewise logs/promotes to memory. Control is top→down, external world → perception → probabilistic inference → symbolic planner → act/execute, followed by evaluation and memory updates; the loop closes as the updated world re-enters perception [38,95].

Use and trade-offs: Layered neuro-symbolic designs are well suited to open-world decision-making under uncertainty and to domains needing transparent, auditable reasoning (e.g., public-sector workflows). The principal cost is integration and representation alignment: bridging neural encodings with symbolic state/rules and evaluation/feedback increases design and maintenance overhead. These strengths and costs are summarized in Table 4 and the accompanying discussion.
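The layering can be sketched with a naive-Bayes update as the probabilistic inference layer and an ordered rule list as the symbolic planner. The fraud-screening hypotheses, likelihoods, thresholds, and function names are hypothetical, and the discrete observations stand in for features that a neural perception model would produce. Returning the label of the rule that fired is what keeps each decision auditable.

```python
def infer(prior, likelihoods, observations):
    """Probabilistic layer: naive-Bayes posterior over hypotheses from discrete observations."""
    post = dict(prior)
    for obs in observations:
        for h in post:
            post[h] *= likelihoods[h].get(obs, 1e-6)   # small floor for unseen observations
    z = sum(post.values())
    return {h: p / z for h, p in post.items()}


# Symbolic layer: ordered, human-readable rules over the calibrated state.
RULES = [
    ("fraud >= 0.9", lambda s: s["fraud"] >= 0.9, "block_transaction"),
    ("fraud >= 0.5", lambda s: s["fraud"] >= 0.5, "request_human_review"),
    ("default", lambda s: True, "approve"),
]


def symbolic_plan(state):
    for label, condition, action in RULES:
        if condition(state):
            return action, label          # the rule label makes the decision traceable
    raise ValueError("no rule matched")   # unreachable while a default rule exists


PRIOR = {"fraud": 0.1, "legit": 0.9}
LIKELIHOODS = {
    "fraud": {"new_device": 0.6, "foreign_ip": 0.5},
    "legit": {"new_device": 0.1, "foreign_ip": 0.05},
}
state = infer(PRIOR, LIKELIHOODS, ["new_device", "foreign_ip"])   # fraud is about 0.87
decision = symbolic_plan(state)
```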

3.3.3. Coordination and Modularity in Agentic Architectures

Agentic AI systems succeed or fail not only by which components they contain but by how those components are modularized and coordinated at runtime. This section summarizes dominant coordination mechanisms and their trade-offs.

- Modular Composition:

Architectures commonly encapsulate perception, planning/reasoning, memory, execution/tooling, and reflection into independently deployable subsystems with clear interfaces. This promotes scalability (scale out specific modules), fault isolation (contain failures), and parallelism (concurrent role execution). Modularity also enables “plug-and-play” evolution, e.g., swapping a planner or adding a compliance checker, without redesigning the full system. Design guidance emphasizes tight control of interfaces and state contracts between modules to support predictable orchestration and observability [42,47,50,96].

- Orchestration and Supervisory Control:

Centralized orchestration: Many systems employ a supervisor (often LLM-based) to route tasks, maintain shared context, trigger reflection or replanning, and arbitrate conflicts between competing goals. The supervisor coordinates specialist agents (planner, retriever, executor, critic), aggregates results, and enforces guardrails and approval gates where required [50,95].

Decentralized orchestration: In multi-agent settings, decision-making is distributed via message-passing and shared-state protocols; roles negotiate or vote to coordinate actions. This improves fault tolerance and throughput but increases testing and observability complexity and requires robust protocols for consensus and backoff [42,47,96].

When to prefer which: Centralized control suits tightly regulated or safety-critical workflows with clear accountability; distributed control fits large, decomposable workloads with dynamic specialization and resilience needs.

- Communication Protocols and Workflow Graphs:

Production systems typically (i) exchange structured messages (e.g., JSON) including task intents, state snapshots, and tool results, enabling replay and lineage; (ii) use workflow graphs to represent control flow (branching, retries, compensations); and (iii) attach evaluation hooks (critics, policy checks) at key transitions to gate risky actions and trigger replanning or escalation. Graph-based orchestration frameworks and multi-agent planning protocols are frequently reported to improve robustness under non-determinism while preserving transparency for operators [41,42,50,96].
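Structured messages with parent links make replay and lineage straightforward. The message fields and the `make_message` and `lineage` helpers below are illustrative assumptions, not a standard protocol. The log is serialized as JSON Lines so that it can be stored and replayed.

```python
import json
import uuid


def make_message(sender, recipient, intent, payload, parent_id=None):
    """Structured agent message; parent_id links it to the message that caused it."""
    return {
        "id": str(uuid.uuid4()),
        "parent_id": parent_id,
        "sender": sender,
        "recipient": recipient,
        "intent": intent,
        "payload": payload,
    }


def lineage(log, message_id):
    """Follow parent links back to the root to show who decided what, in order."""
    by_id = {m["id"]: m for m in log}
    chain = []
    while message_id is not None:
        m = by_id[message_id]
        chain.append(f'{m["sender"]}->{m["recipient"]}:{m["intent"]}')
        message_id = m["parent_id"]
    return chain[::-1]


m1 = make_message("supervisor", "retriever", "search_flights", {"to": "Chicago"})
m2 = make_message("retriever", "supervisor", "tool_result", {"options": 3}, m1["id"])
m3 = make_message("supervisor", "critic", "verify_budget", {"limit_usd": 1500}, m2["id"])
log = [m1, m2, m3]

wire = "\n".join(json.dumps(m) for m in log)            # JSON Lines: one message per line
replayed = [json.loads(line) for line in wire.splitlines()]
chain = lineage(replayed, m3["id"])
```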

3.3.4. Integration of Components into Architecture

Agentic AI systems differ from conventional pipelines in that components are integrated into continuous decision loops that sense, plan, act, evaluate, and learn.

- Layered and Modular Pipelines:

A common integration pattern is a layered flow that couples components into an end-to-end decision loop: perception → world/state modeling → planning/reasoning → execution/tooling → evaluation/feedback → memory → (back to) planning. Although often depicted linearly, these flows are event-driven and cyclic: evaluation and feedback can branch to retries or replanning; memory promotion/demotion changes subsequent retrieval; and perception can interrupt with high-priority events. Effective designs define explicit interfaces (inputs/outputs, schemas, confidence) at each boundary and attach gates (e.g., policy checks, critics) before risky actions [41,42,95]. This organization supports persistent goal pursuit (long horizon), real-time adaptation (short horizon), and safe recovery from deviations [41,95].

- Orchestration Mechanisms:

Centralized orchestration: Many systems elevate an LLM to a supervisor role that maintains shared context, routes tasks to specialist modules/agents (planner, retriever, executor, critic), triggers reflection or replanning, and arbitrates conflicts among goals. The supervisor executes a graph (DAG/state machine) with branching, retries, compensations, and evaluator hooks at critical transitions to keep lineage auditable and side effects idempotent [42,50,97].

- Graph-based execution:

Regardless of centralization, integrating components with an explicit workflow graph improves robustness under non-determinism. Nodes encapsulate component calls (with contracts for inputs/outputs and budgets), and edges encode control logic (success/failure, timeouts, escalation). Evaluators or policy guards can be placed at ingress/egress of high-risk nodes (e.g., external tools) [42,50].

Trade-offs: Centralized supervisors simplify accountability and debugging but may bottleneck or introduce single-point-of-failure risk; decentralized flows increase resilience and throughput but require stronger state contracts, message protocols, and observability [42,47,50].
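Graph-based execution can be sketched as nodes that return an outcome label and edges that map each label to the next node. `run_graph`, the node functions, and the budget guard are hypothetical. The guard on `book` illustrates an ingress policy check on a high-risk external call, `None` marks a terminal edge, and an unmapped outcome raises `KeyError` so that gaps in the graph surface instead of passing silently.

```python
def run_graph(nodes, edges, start, state, guards=None, max_steps=20):
    """Execute a workflow graph: each node returns an outcome label that selects the next edge."""
    guards = guards or {}
    trace, current, steps = [], start, 0
    while current is not None:                      # None marks a terminal edge
        if steps >= max_steps:
            raise RuntimeError("step limit reached; possible retry cycle")
        steps += 1
        guard = guards.get(current)
        if guard is not None and not guard(state):  # ingress policy check before a risky node
            outcome = "blocked"
        else:
            outcome = nodes[current](state)         # e.g. "ok", "fail", "timeout"
        trace.append((current, outcome))
        current = edges[current][outcome]           # unmapped outcome -> KeyError
    return state, trace


def search(state):
    state["price_usd"] = 1700                       # hypothetical tool result
    return "ok"


def book(state):
    state["booked"] = True                          # the side effect the guard protects
    return "ok"


def escalate(state):
    state["needs_human"] = True
    return "ok"


nodes = {"search": search, "book": book, "escalate": escalate}
edges = {
    "search": {"ok": "book", "fail": "escalate", "timeout": "escalate"},
    "book": {"ok": None, "blocked": "escalate", "fail": "escalate", "timeout": "escalate"},
    "escalate": {"ok": None},
}
guards = {"book": lambda s: s["price_usd"] <= s["budget_usd"]}
final_state, trace = run_graph(nodes, edges, "search", {"budget_usd": 1500}, guards)
```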

- Multi-Agent Integration and Decentralization:

In decentralized designs, agents each own a subset of components (e.g., local planner + executor + memory) and coordinate via shared context and message passing. Common patterns include role-based teams (planner/solver/critic), peer networks with negotiation/voting, and hybrid hierarchies that mix supervisor(s) with collaborating peers. Integration hinges on (i) shared state (what is global vs. local), (ii) protocols for task handoff and conflict resolution, and (iii) placement of evaluators/approvals for safety or compliance. This model yields fault tolerance and parallel specialization but increases testing/monitoring complexity and demands clear traceability of who decided what and why [39,47,97].
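One of the simplest decentralized coordination rules is a quorum vote among peers. The sketch assumes that each peer has already produced a proposal. `peer_vote` and the agent names are hypothetical, and the function returns the supporting voters so that the decision stays traceable. Real negotiation protocols add multiple rounds, weighting, and backoff.

```python
from collections import Counter


def peer_vote(proposals, quorum=0.5):
    """Adopt the most common proposal only if its vote share exceeds the quorum."""
    counts = Counter(proposals.values())
    choice, votes = counts.most_common(1)[0]
    if votes / len(proposals) > quorum:
        supporters = {agent: p for agent, p in proposals.items() if p == choice}
        return choice, supporters              # who decided what, for traceability
    return None, {}                            # no quorum: defer, renegotiate, or escalate


decision = peer_vote({"planner": "reroute", "solver": "reroute", "critic": "wait"})
deadlock = peer_vote({"agent_a": "x", "agent_b": "y"})   # a 1-1 split does not clear 0.5
```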

3.3.5. Architectural Models vs. Core Components

Table 4 synthesizes how the major architectural families connect the core components introduced earlier, highlighting their typical uses and associated risks. It shows how different designs emphasize particular strengths: BDI architectures rely on explicit beliefs, desires, and intentions to support explainable decision systems, but require extensive symbolic modeling and can be brittle under uncertainty [38,41]; hierarchical/HRL models scale well for decomposable tasks through multi-level goal delegation, though they risk supervisor bottlenecks [50,96]; ReAct single agents offer lightweight reasoning–action loops for rapid iteration, but struggle with parallelism and reliable tool selection [47]; hybrid reactive–deliberative systems balance fast reflexes with long-horizon planning, yet require careful arbitration design [38]; and layered neuro-symbolic approaches integrate neural inference with symbolic reasoning to manage uncertainty and provide traceability, though at the cost of added integration complexity [39,41,95]. Together, the table shows how architectural choices map to trade-offs in scalability, transparency, and robustness, guiding when each model is most appropriate.

Table 4.

Components and risks across architecture families.

3.4. What Types of Goals and Tasks Are Currently Being Solved Using Agentic AI Across Domains?

Agentic AI is used across a wide range of domains and applications to accomplish a variety of goals and tasks. Agentic AI systems can take a high-level objective, break it down into smaller tasks, and accomplish those tasks efficiently, even in environments that evolve over time or are unpredictable and complex. Agentic AI can reason, build plans, act autonomously to adapt to new scenarios, work as part of a team, and produce results that may be difficult or impractical to achieve with other types of AI.

Agentic AI systems are used to complete routine activities, support real-time decision-making, handle complex tasks or problems, and provide personalized assistance by adapting to context. Their strength lies in combining data from many sources, making ethical and informed decisions, and coordinating multiple agents to work together in real time.

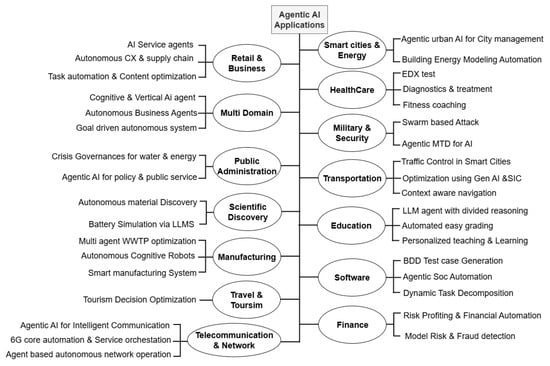

We categorize agentic AI applications by domain to clarify how these systems are shaped by the specific needs, constraints, and objectives of each field in which they are deployed. The domain-based framework presents agentic capabilities across multiple domains, highlighting both the capabilities that domains share and those unique to specific agents. It also allows the reader to identify patterns within a specific domain while supporting tabular comparison across domains. An overview of the application categories is shown in Figure 10.

Figure 10.

Overview of agentic AI applications across multiple domains.

Table 5 has three columns: the domain, the application, and the tasks or considerations associated with the agents' work, which gives quick access to key information without reading full paragraphs. Figure 10 serves as a companion to the table, adding a layer that relates each domain to its applications and shows where domains share characteristics. Read together, the table and figure provide both an overview and enough detail to assess whether agentic AI systems act as genuine agents in real and experimental contexts.

Table 5.

Agentic AI applications and tasks across multiple domains.

3.4.1. Healthcare

In healthcare, agentic AI applications include diagnostics, treatment planning, and personalized care. Agentic AI enables precise risk stratification, rapid segmentation of medical images, generation of personalized diets from scratch, real-time seizure prediction, and robust human–robot interaction [59]. Fitness and wellness agents provide adaptive coaching, improved posture analysis, and multimodal feedback while tracking progress and coordinating recommendations [60]. In electrodiagnostic (EDX) testing, agents can organize EMG/NCS data in a standardized form, incorporate relevant diagnostic context through RAG, and produce accurate physician-level reports [99]. Genomic assessment agents can predict disease risk, guide treatment selection, indicate disease progression, and personalize care while accounting for cost and maintaining security safeguards [35]. Agents have also been applied to ethical frameworks for generative AI [44], to cleaning and automating medical computer vision pipelines [100], to bioethical decision support [101], to enhancing clinical decision-making in use cases such as surgical and drug discovery workflows [56], and to streamlining real-time differential diagnosis of drug-induced liver injury (DILI) from clinical notes [102].

3.4.2. Military

In military and cybersecurity settings, agentic AI enables groups of autonomous agents to carry out both offensive and defensive actions through coordinated collective decisions. Each agent can act independently according to its own preferences: agents can defend their positions, attack enemy positions, move as needed, adapt their movements based on performance metrics, and choose among a variety of effective formations [119]. In cloud-based AI platforms, for example, agentic AI systems can provide continuous protection by rotating active services to reduce risk, preemptively defending against known threats, limiting vulnerabilities associated with repeated attacks, applying strict security rules, reporting unusual activity, and responding automatically with system changes [120].

3.4.3. Transportation

Intelligent agentic traffic control systems simulate traffic situations, control dynamic routing, implement on-demand signal operations, and coordinate agents over distributed transport networks to improve safety, efficiency, and sustainability [103]. Real-time process optimization that combines generative models with soft-sensor inputs in SIC architectures predicts quality variables and performs closed-loop control, helping to scale biomanufacturing, personalize product design, and minimize energy use and emissions [43]. Context-aware navigation reduces collision risk in dynamic, complex environments by combining multimodal perception with semantic understanding to inform decision-making [74]. Multi-agent routing and scheduling coordinate agents across multiple traffic channels to reduce delays, avoid deadlock, and let agents reach their destinations under occupancy constraints [61]. Multi-phase transportation lifecycle management is human-centered and adaptable; it strives to address barriers to inclusion and persistent issues of congestion, collisions, environmental impact, and equity while optimizing travel outcomes for individuals and communities [104].

3.4.4. Software

Agents independently decompose complex queries, determine which tools are relevant, and execute subtasks while providing adjustable feedback in real time [105]. Behavior-based development agents take a natural language query as input and generate structured test cases that support collaboration across teams [106], whereas autonomous visual intelligence agents fuse spatial context with multimodal sensory data to perceive the environment, adapt, and collaborate in real time for tasks such as robotic surgery, disaster response, and augmented/mixed reality applications [107]. Software engineering and business automation agents decompose a goal, assign roles, and automate workflows, including inventory management, customer relationship management, invoicing, and demand forecasting [30]. Self-directed AI pipelines autonomously select models, handle class-imbalanced data, and support knowledge-aware, interpretable decision-making [54]. Agentic search, review, and personalization systems are capable of multi-step reasoning, cross-lingual retrieval, and bias mitigation [108]. Multi-agent orchestration systems can automate computer vision tasks and manage complex, fault-tolerant, high-risk systems [62,109].

End-user programming agents are democratizing software development [110]. Near real-time multi-agent frameworks can simultaneously support software development, operational IT workflow control, and strategic decision-making [111]. Autonomous software development and multi-domain digital task agents plan, assign, execute, and self-correct the development, browsing, coding, and file operations associated with a release [50,113]. Human-algorithmic agency modeling also theorizes an evolving model of collective human–AI agency [114]. Conversational agents are increasingly being integrated into software development to improve test case development, ML workflows, and SOC automation through natural language interpretation, test case generation, pipeline and incident monitoring and detection, zero-trust rule enforcement, and multi-agent coordination [115,116,117]. For fully autonomous software development, predictive incident detection, and IT automation, agents decompose prompts, write and test code, integrate third-party APIs, optimize resource use and performance, manage Git workflows, and handle incidents through coordinated multi-agent planning [49,51,118].

3.4.5. Finance, Banking and Insurance

Agents operating in finance, banking, and insurance have improved customer risk profiling, automated loan forecasting, treasury management, and fraud detection by examining real-time data and behavioral patterns [80]. Agents also strengthen model risk management through automated ML workflows, compliance checks, and agent-to-agent cooperation [39]. Customer service benefits from 24/7 support, investment and insurance recommendations, and automated transactions, due diligence, and KYC [128]. Risk and fraud detection agents can spot risky actions, break down complicated processes, and apply explainable AI [129]. Personalized banking agents automate budgeting and product recommendations and can provide instant behavioral interventions that also support inclusion through quasi-real-time feedback mechanisms [130]. Agents for insurance claims evaluation use multimodal inputs, decompose tasks into assigned subtasks, and respond in a policy-compliant way [96]. Finally, customer-centric chatbots improve sales, diagnostic ability, and predictive analytics, and can automate IoT-driven processes for better interactive engagement across organizational partners [131].

3.4.6. Manufacturing and Industrial

In manufacturing and industrial contexts, agents improve efficiency, safety, and decision-making across production and supply chains. Multi-agent systems help optimize tasks spanning wastewater treatment, factory operations, predictive maintenance, and energy-aware task scheduling, among others [33,63]. Supply chain agents can forecast demand, monitor for contamination, and recommend actions regarding quality and pricing [55]. Cognitive robots and collaborative human–robot agent systems can predict possible scenario states, learn from social interactions with humans, adapt their movements based on learning, and collaborate safely with humans on shared tasks [122,124]. Workflow agents and autonomic computing agents can increase efficiency in robotic assembly and automate compliance, scheduling, and resource planning [121,123]. Multi-agent pickup and delivery systems address coordination, collision avoidance, and delay reduction [69].

3.4.7. Tourism and Traveling

In tourism and travel, agents optimize pricing, planning, and customer service through human–AI teams. They provide ethical and transparent real-time decision support through retrieval-augmented generation (RAG), Interactive Cognitive Agents (ICAs), and other management support systems [127].

3.4.8. Multi-Domain

Agents model user behavior, provide recommendations and context, coordinate processes across domains, automate business processes, break down goals, use external knowledge, and operate safely and ethically [53,132,133]. Agents also make real-time decisions, minimize costs, use resources more effectively, produce intelligible outputs, and plan and execute tasks under uncertainty [36,135]. Multi-domain agents interact with tools and APIs, manage complex workflows in areas such as coding, healthcare, finance, and crime analysis, and use memory-based learning to continue a task by incorporating adaptive information from external cues [31,67,75,76,77,136,139]. Agents also enhance personal assistants, cybersecurity, and autonomous knowledge management, while reducing computation cost and enabling smarter cross-domain problem solving [32,46,66,134,137,138].
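The memory-based continuation described above can be illustrated with a minimal sketch. It assumes a hypothetical `TaskMemory` class with a sliding short-term window for recent external cues and a persisted record of completed subtasks; the class, the `resume_plan` helper, and the JSON persistence format are illustrative assumptions, not the design of any cited system.

```python
import json
from typing import Callable, Dict, List


class TaskMemory:
    """Hypothetical two-part memory for a long-running agent task.

    short_term keeps only the most recent external cues (a sliding window);
    completed persists subtask results so an interrupted task can resume.
    """

    def __init__(self, short_term_size: int = 5) -> None:
        self.short_term_size = short_term_size
        self.short_term: List[str] = []
        self.completed: Dict[str, str] = {}

    def observe(self, cue: str) -> None:
        # Append the new cue and drop the oldest cues beyond the window size.
        self.short_term.append(cue)
        self.short_term = self.short_term[-self.short_term_size:]

    def record(self, subtask: str, result: str) -> None:
        self.completed[subtask] = result

    def dump(self) -> str:
        # Only the long-term part is persisted; short-term context is rebuilt.
        return json.dumps({"completed": self.completed})

    @classmethod
    def load(cls, blob: str) -> "TaskMemory":
        memory = cls()
        memory.completed = json.loads(blob)["completed"]
        return memory


def resume_plan(plan: List[str], memory: TaskMemory,
                execute: Callable[[str], str]) -> Dict[str, str]:
    """Run each subtask in order, skipping any already recorded in memory."""
    for subtask in plan:
        if subtask in memory.completed:
            continue
        memory.record(subtask, execute(subtask))
    return memory.completed
```

Usage: if a travel-planning run saved `{"flights": "booked"}` before stopping, calling `resume_plan(["flights", "hotel", "itinerary"], TaskMemory.load(saved_blob), execute)` executes only the hotel and itinerary subtasks. Keeping the persisted part small and explicit makes the resume point easy to inspect.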

3.4.9. Scientific Discovery and Research

Autonomous systems aid scientific inquiry by generating hypotheses, automating literature review and analysis, and designing experiments. They can physically perform the majority of robotic chemical and biological experiments, analyze data across domains, and iteratively refine experiments to make experimentation more efficient in drug discovery, materials science, and energy applications [64,65,150,151]. LLM-based multi-agent systems can combine physical models with domain knowledge to plan simulations, predict material properties, and produce multimodal outputs (numerical data, charts, text reports) for engineers [64,65]. Agents can also support automated writing of research papers, analyze textual or hypertext content, organize qualitative interviews or conversations, and build knowledge graphs, while embedding interpretability, bias detection, and collaborative reasoning throughout scientific workflows [45,152,153].

3.4.10. Retail, Business, and E-Commerce

Autonomous systems personalize customer interactions and coordinate supply chain management. They offer personalized shopping experiences, situation-aware support through chatbots and virtual assistants, and navigation inside buildings or stores, while multi-agent systems coordinate inventory management, logistics, and fulfillment [78]. They also automate task scheduling, digital content management, and multi-step workflows such as travel booking or e-commerce tasks [141,144]. These systems rely on natural language processing (NLP) and situational reasoning algorithms to adapt to changing situations [144]. Autonomous decision-making agents help organizations and teams with strategic and tactical planning, rapid data analytics, error detection, and forecasting to improve productivity and customer satisfaction [143]. Increasingly capable AI service agents and chatbots support sustained personalized interactions, provide financial advice, handle reservations, activate smart devices, and reduce human workload [140,145]. Workflow automation is applied in law and retail and to Kubernetes tasks with minimal supervision, while e-commerce process optimization reduces costs and increases customer satisfaction [27,142]. Humanoid robots and agentic LLMs extend human–machine collaboration to claims handling, smart marketing, HR, and trust building in customer service [68,146]. Interactive recommender systems provide conversational, personalized recommendations in entertainment (e.g., movies) and retail [79].
GenAI agents demonstrate reasoning, planning, and task execution across multiple domains, including finance and banking, cybersecurity, healthcare and remote healthcare, and software operations and administrative tasks [40].

3.4.11. Smart Cities and Energy

Autonomous systems support city services such as transport, health, and emergency management, and can proactively address problems such as pollution and traffic in real time [125]. Agents can take on building energy work, which now involves data processing, coding, and simulation [58]. Multi-agent systems reduce energy use in smart buildings while maintaining comfort, lowering cost, and preventing energy spikes [70]. Digital twin agents support building monitoring, fault detection, preventive maintenance, and energy-efficient operation [126].

3.4.12. Public Administration

Autonomous systems help public administration manage resources such as water and energy efficiently, anticipate demand accurately, simulate policies with digital twins, and make adjustments in real time. They also enhance services to citizens, automate administrative workflows, support fraud detection, and inform decision-making for smart cities and population health [37,41].

3.4.13. Education

In education, autonomous systems personalize learning by tracking student performance, adjusting content, giving timely feedback, handling administrative tasks, identifying signs of disengagement, and promoting collaborative learning [57]. They can also help automate objective marking of essay questions, offering a consistent, fair, and explainable marking process through the collaboration of multiple agents [148]. Adaptive AI agents provide metacognitive support by diagnosing learning gaps, scaffolding complex content, reducing learner burden, acknowledging remote participation, and automating marking [147]. Multi-agent LLM systems deliver personalized and consistent results for both subjective and objective tasks, combining coordinated agent reasoning, validation, and high-quality data coding and generation [97]. Beyond supporting learners, agents assist with cognitive workflows in research, decision-making, report writing, travel organization, and many other complex academic workloads [149].
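One simple way for multiple agents to produce consistent marks is to score the same essay independently, combine the scores with a robust statistic, and escalate large disagreements. The sketch below assumes each grader agent is a plain function returning a `Mark`; the median rule and the `max_spread` review threshold are illustrative assumptions, not the aggregation method of the cited works.

```python
from dataclasses import dataclass
from statistics import median
from typing import Callable, List, Tuple


@dataclass
class Mark:
    grader: str      # which (hypothetical) grader agent produced the mark
    score: float     # mark on the rubric scale, e.g. 0 to 10
    rationale: str   # short explanation kept for transparency


def consensus_mark(essay: str, graders: List[Callable[[str], Mark]],
                   max_spread: float = 1.0) -> Tuple[float, bool, List[Mark]]:
    """Score an essay with several independent grader agents.

    The median is robust to a single outlying grader; needs_review is set when
    the highest and lowest scores differ by more than max_spread points.
    """
    marks = [grade(essay) for grade in graders]
    scores = [m.score for m in marks]
    needs_review = max(scores) - min(scores) > max_spread
    return median(scores), needs_review, marks
```

With three graders scoring 7.0, 8.0, and 7.5, the consensus is 7.5 and no review is requested; with 4.0, 8.0, and 7.0, the consensus is 7.0 but the essay is flagged for a human. Returning every `Mark` with its rationale keeps the final grade explainable.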

3.5. What Kinds of Input and Output Formats Do Agentic AI Systems Handle in Comparison to Traditional AI Systems?

Agentic AI systems designed to operate in complex environments use flexible input–output structures to support independent decision-making. Different agentic AI models use input–output schemes suited to their tasks, and these schemes differ in structural design and in how users interact with them. This section presents the ways of interacting with agentic AI systems, including how they process different forms of input and produce appropriate outputs. Table 6 is organized by the type of input given to the system, along with the task performed and the technologies used to produce the output.

Table 6.

Agentic AI models for various input–output transformations.

Table 6 shows how agentic AI systems are designed by mapping input modalities directly to their outputs, the tasks performed, and the technologies used. Its layout makes it easy to compare how a single input can produce multiple types of output and support different tasks, which clarifies system flow in real-world agentic AI applications. The table also highlights the diversity and specialization of agentic AI systems, helping researchers and practitioners identify models or technologies suited to particular applications.

3.5.1. Text to Actions

Text input is free-form language passed from the user to the system, usually natural language that may be structured or unstructured. In agentic AI models, action output means executing an operation in the system to complete a task [120]. More advanced models, such as Vectara-agentic [27] and AutoGPT [34], can take text input, interpret the user's prompt to generate outputs or take actions, and coordinate with other agents without human assistance.
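The text-to-action path can be sketched as intent parsing followed by dispatch to a registered tool. In the minimal example below, a keyword-and-regex parser stands in for the LLM call that a system such as AutoGPT would make; the tool names, the `TOOLS` registry, and `parse_intent` are hypothetical and only illustrate the control flow.

```python
import re
from typing import Callable, Dict, Optional, Tuple

# Hypothetical tool registry: each tool is an ordinary function the agent may call.
TOOLS: Dict[str, Callable[..., str]] = {}


def tool(name: str):
    """Decorator that registers a function under a tool name."""
    def register(fn: Callable[..., str]) -> Callable[..., str]:
        TOOLS[name] = fn
        return fn
    return register


@tool("search_flights")
def search_flights(destination: str) -> str:
    return f"found 3 flights to {destination}"


@tool("set_reminder")
def set_reminder(when: str) -> str:
    return f"reminder set for {when}"


def parse_intent(prompt: str) -> Tuple[Optional[str], dict]:
    """Stand-in for an LLM: map free text to (tool_name, keyword arguments)."""
    m = re.search(r"flights? to (\w+)", prompt, re.IGNORECASE)
    if m:
        return "search_flights", {"destination": m.group(1)}
    m = re.search(r"remind me (?:at|on) (.+)$", prompt, re.IGNORECASE)
    if m:
        return "set_reminder", {"when": m.group(1).strip()}
    return None, {}


def act(prompt: str) -> str:
    """Turn a text prompt into an executed action, or ask for clarification."""
    name, kwargs = parse_intent(prompt)
    if name not in TOOLS:
        return "no matching action; ask the user to clarify"
    return TOOLS[name](**kwargs)
```

For example, `act("Find flights to Chicago next week")` dispatches to `search_flights` with `destination="Chicago"`, while an unrecognized prompt falls back to a clarification request instead of guessing an action.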

Beyond task execution, agentic AI systems also contribute to robotic process automation. An agentic AI model can analyze manufacturing data and automatically execute optimized robotic assembly sequences using a structured framework such as MAPE-K (Monitor, Analyze, Plan, Execute over shared Knowledge) [121]. Similarly, in network operations [71], an agentic AI can interpret control commands and respond to failures independently by evaluating and executing candidate actions in real time [82]. In doing so, these models improve system fault tolerance and resilience [62]. Moreover, in smart environments, agentic AI can drive energy savings by managing the power supply across home appliances [38], with multiple LLM-enhanced AI agents collaborating in a coordination process.
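The MAPE-K pattern mentioned above can be sketched as four small functions that share one knowledge object. The managed element here is a toy service whose latency is assumed to equal demand divided by the number of workers; this model, the 200 ms target, and the scale-down band are illustrative assumptions rather than the robotic-assembly setup of the cited work.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Knowledge:
    """Shared knowledge base read and written by every MAPE-K phase."""
    target_latency_ms: float = 200.0
    history: List[float] = field(default_factory=list)


@dataclass
class ManagedSystem:
    """Toy managed element: latency falls as workers are added (assumed model)."""
    workers: int = 2
    demand: float = 1000.0

    def latency_ms(self) -> float:
        return self.demand / self.workers


def monitor(system: ManagedSystem, k: Knowledge) -> float:
    latency = system.latency_ms()
    k.history.append(latency)  # record observations in shared knowledge
    return latency


def analyze(latency: float, k: Knowledge) -> Optional[str]:
    if latency > k.target_latency_ms:
        return "overloaded"
    if latency < 0.5 * k.target_latency_ms:
        return "underused"
    return None  # within the acceptable band: no adaptation needed


def plan(symptom: str, system: ManagedSystem) -> int:
    if symptom == "overloaded":
        return system.workers + 1
    if symptom == "underused" and system.workers > 1:
        return system.workers - 1
    return system.workers


def execute(new_workers: int, system: ManagedSystem) -> None:
    system.workers = new_workers


def mape_k_loop(system: ManagedSystem, k: Knowledge, iterations: int = 10) -> int:
    """Run the adaptation loop until the system is in band or iterations run out."""
    for _ in range(iterations):
        symptom = analyze(monitor(system, k), k)
        if symptom is None:
            break
        execute(plan(symptom, system), system)
    return system.workers
```

Starting from two workers with a demand of 1000, the loop observes latencies of 500, 333.3, 250, and 200 ms and settles at five workers. The gap between the scale-up threshold and the scale-down band keeps the loop from oscillating between two configurations.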

Agentic AI models can also improve themselves. BrainBody-LLM and RASC [75] use self-reinforcing feedback to keep learning and optimizing over time. When past outputs were wrong, self-reflective models such as SELF-REFINE [50] can review their own actions and improve their behavior without relying on external parties. Agentic AI systems can also resolve ambiguity, apply user preferences [154], and derive next actions from signals generated through self-reflection [144].
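The generate, feedback, and refine cycle behind self-reflective models such as SELF-REFINE can be sketched as a loop with a stopping rule. In the sketch below, the three callables stand in for prompts to a single LLM; the toy stubs (a word-limit critic and a refiner that trims words) are hypothetical placeholders used only to make the loop runnable.

```python
from typing import Callable, List, Optional, Tuple


def self_refine(
    task: str,
    generate: Callable[[str], str],
    feedback: Callable[[str, str], Optional[str]],
    refine: Callable[[str, str, str], str],
    max_iters: int = 3,
) -> Tuple[str, List[str]]:
    """Generate, then alternate feedback and refinement in a loop.

    feedback returns None when the output is judged acceptable (stopping rule);
    max_iters bounds the cost if the critic is never satisfied.
    """
    output = generate(task)
    notes: List[str] = []
    for _ in range(max_iters):
        note = feedback(task, output)
        if note is None:
            break
        notes.append(note)
        output = refine(task, output, note)
    return output, notes


# Hypothetical stubs standing in for LLM calls.
WORD_LIMIT = 5


def toy_generate(task: str) -> str:
    return "agents plan act and verify outcomes in a loop"


def toy_feedback(task: str, output: str) -> Optional[str]:
    n = len(output.split())
    return None if n <= WORD_LIMIT else f"too long: {n} words, limit {WORD_LIMIT}"


def toy_refine(task: str, output: str, note: str) -> str:
    return " ".join(output.split()[:-2])  # drop two words per round
```

Calling `self_refine("summarize", toy_generate, toy_feedback, toy_refine)` goes through two rounds of feedback (9 words, then 7) before the critic accepts a five-word output. If `max_iters` is exhausted first, the last refinement is returned even though the critic has not accepted it, so callers may want to check the final feedback.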

3.5.2. Text to Text

Text output is human-readable language, a combination of formatted and structured text intended to be read and understood by people. Recent LLMs can share their knowledge with other agents, forming a multi-agent ecosystem capable of collective learning [143]. LLaMA-3 models [40] can help users retrieve information by parsing their prompts [77], searching for responses, and aggregating information from other sources [52]. LLMs support problem-solving [137] and programming [155] by completing or correcting code and explaining runtime errors [110]. GPT models can also help automate customer interaction workflows [140], logic-based processing in automated support [137], and responses to inquiries [41].

3.5.3. Text to Multimodal Data

Agentic AI systems can produce structured outputs, such as JSON or YAML files, when completing complex tasks [105], performing orchestration [100], or documenting research [151]. AgentGPT models can also produce multimodal outputs such as data visualizations, for example, projecting future growth on an interactive timeline [138] or charting current and historical data and actions [64]. LLMs can also populate logs and audit trails by processing textual input into structured records of activity [40].
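Producing structured records of activity can be as simple as serializing an agent's action trace into JSON. The sketch below assumes a hypothetical `AuditRecord` schema with step, agent, action, status, and timestamp fields; these field names are illustrative and do not follow any particular logging standard.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import List, Tuple


@dataclass
class AuditRecord:
    """One structured log entry; field names are illustrative, not a standard."""
    step: int
    agent: str
    action: str
    status: str
    timestamp: str


def to_audit_log(trace: List[Tuple[str, str]], agent: str) -> str:
    """Turn an (action, status) trace into a JSON array of audit records."""
    now = datetime.now(timezone.utc).isoformat()  # one UTC timestamp per export
    records = [
        AuditRecord(step=i, agent=agent, action=action, status=status, timestamp=now)
        for i, (action, status) in enumerate(trace, start=1)
    ]
    return json.dumps([asdict(r) for r in records], indent=2)
```

For instance, `to_audit_log([("search_flights", "ok"), ("book_hotel", "failed")], agent="travel-planner")` yields a two-element JSON array that downstream tools can parse with `json.loads`. A fixed schema makes failed steps easy to filter during an audit.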

3.5.4. Audio Files to Text/Audio

Audio input to agentic AI systems can be speech, music, or other signals that the system processes to understand the user's goal, enabling more natural, context-rich interactions. Audio output is sound-based content returned as a response for the user to hear. Agentic AI systems use technologies including Automatic Speech Recognition (ASR), short-term and long-term memory, and advanced language models such as GPT-4 or Llama 3.2 to process spoken language and turn it into actionable insights. Audio input can support ethical advisory scenarios, in which agentic AI parses spoken queries to generate fairness-aware decisions [101]. Similarly, interactive feedback-generating systems can use audio to parse goals and produce context-aware outputs [60]. Empathetic interviewing is a more advanced use of audio input: multimodal agentic AI captures and parses the spoken input and then responds within a structured, emotionally intelligent discussion [153]. Audio input thus extends agentic AI to real-time communication with human-like attributes.

3.5.5. Real-Time Data to Actions

Agentic AI can receive continuous real-time data from sensors or cameras, giving it access to live information. For example, sensor data from a building's power grid [73] lets an agent analyze how power is being used and instruct the building to save energy [70]. Agentic AI can also suggest optimal traffic routes [103]. ML-based agentic systems can detect issues [131] and optimize operations [123]. During operational tasks, the agent also measures and detects threats, assesses its environment, decides on immediate actions [156], and responds to disruptions [30]. Using a trial RT learning approach, the system learns to recognize and stabilize its performance as its strategy develops over time [36]. Real-time data can also include coordinates, that is, the precise location of a person, a car, or an agent in a game, allowing the system to respond immediately and act in a timely manner [72,119].
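Turning a live sensor stream into actions usually means smoothing the readings and applying a decision rule on every new sample. The sketch below assumes a stream of building power readings in kilowatts, a hypothetical 50 kW limit, a three-sample moving average, and a restore threshold at 90% of the limit; the action names are placeholders rather than commands of any real building-management API.

```python
from collections import deque
from typing import Iterable, List


def energy_agent(readings_kw: Iterable[float], limit_kw: float = 50.0,
                 window: int = 3) -> List[str]:
    """React to a stream of power readings with a smoothed threshold rule.

    A moving average over the last `window` readings filters single-sample noise.
    When it exceeds `limit_kw`, the agent sheds deferrable load; it restores the
    load once the average falls below 90% of the limit (hysteresis).
    """
    recent = deque(maxlen=window)   # sliding window of the latest readings
    restore_below = 0.9 * limit_kw
    shedding = False
    actions: List[str] = []
    for kw in readings_kw:
        recent.append(kw)
        avg = sum(recent) / len(recent)
        if not shedding and avg > limit_kw:
            shedding = True
            actions.append("shed_deferrable_load")
        elif shedding and avg < restore_below:
            shedding = False
            actions.append("restore_load")
        else:
            actions.append("hold")
    return actions
```

For the readings 40, 45, 60, 70, 65, 35, and 30 kW, the agent holds until the fourth sample, sheds load when the average first exceeds 50 kW, and restores it on the last sample when the average drops to about 43 kW. The gap between the shed and restore thresholds prevents the agent from toggling loads on every small fluctuation.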

3.5.6. Datasets to Text