A Novel Framework Leveraging Large Language Models to Enhance Cold-Start Advertising Systems

Abstract

1. Introduction

2. Related Work and Contribution

2.1. CTR Prediction State-of-the-Art Models

2.2. Cold-Start-Related Approaches

- Content-based initialization uses ad metadata, visual descriptors, or textual content to seed embeddings for unseen entities. This allows models to assign a “best guess” representation even before interaction data accumulates. For example, meta-learning approaches such as RGMeta and Graph Meta Embedding create pseudo-cold-start episodes during training, enabling the model to infer embeddings based on feature similarities or graph neighborhoods [7]. Nevertheless, content-based initialization depends heavily on high-quality metadata or descriptors, which are often incomplete or uninformative for novel entities, limiting embedding quality. Also, meta-learning methods require artificially constructed training episodes that may not reflect real-world cold-start dynamics, risking poor generalization.

- Exploration-driven approaches treat the early recommendation phase as a contextual bandit problem, allocating impressions to gather information while minimizing immediate revenue loss. Large-scale video platforms have reported over 60% improvement in new-ad performance by applying such strategies in production deployments. Contextual bandits improve new-ad performance but incur exploration costs and require careful reward balancing to mitigate short-term revenue loss.

- Graph neural networks (GNNs) represent features or users as nodes and propagate relational signals through edges, making them robust to sparse data. Fi-GNN and other graph-enhanced CTR models improve representation learning by capturing inter-feature and inter-entity structures [20]. However, graph neural networks suffer from computational inefficiency at scale.

- Two-tower architectures have gained popularity by separating user and item encoders, enabling efficient candidate retrieval via approximate nearest neighbors. The shortlisted candidates are then re-ranked using richer single-tower or cross-attention-based models, balancing efficiency and accuracy [21]. Nevertheless, two-tower architectures trade interaction modeling for speed, potentially missing key cross-feature signals.

2.3. LLM-Based Systems

2.4. Contribution

- Transformer-Enhanced Feature Extraction: We validate that replacing conventional embedding mechanisms with transformer-based architectures significantly improves feature representation for frozen-start users, yielding superior performance on standard CTR prediction benchmarks. Unlike conventional CTR prediction models that rely on static embeddings and struggle with sparse user data, this approach enables dynamic semantic relationship modeling between user attributes, advertisement content, and contextual information.

- Ensemble Learning Framework: We develop and validate a novel ensemble approach that effectively combines multiple transformer-enhanced models to produce more accurate CTR predictions than any individual model, addressing the variability inherent in frozen-start scenarios. This ensemble approach differs from traditional cold-start solutions by leveraging diverse modeling perspectives simultaneously rather than relying on individual techniques such as content-based initialization or exploration-driven approaches. The learnable weighted aggregation strategy ensures that model contributions are dynamically balanced based on performance rather than fixed predetermined weights. This adaptive weighting mechanism addresses the limitation of static ensemble approaches that cannot adjust to varying data distributions in cold-start scenarios.

- LLM-Powered Reranking and Refinement: This LLM-powered enhancement system addresses critical limitations observed in previous studies by combining the computational efficiency of traditional CTR models with the semantic understanding capabilities of large language models. Unlike existing LLM-based systems that suffer from latency constraints and hallucination issues, this framework employs the LLM only for post-processing the top five candidates, significantly reducing computational overhead while maintaining the benefits of semantic reasoning. Moreover, unlike conventional approaches that either fully replace traditional models with LLMs or use them in isolation, the proposed solution leverages the complementary strengths of both paradigms and systematically addresses their individual weaknesses through built-in fairness constraints, sentiment analysis, and real-time adaptation capabilities. This integrated approach not only enhances performance but also provides an ethically grounded solution to persistent cold-start challenges that are frequently neglected in existing systems. Finally, the integration of explainability features and real-time message refinement enhances recommendation transparency and interpretability, enabling dynamic content adaptation that goes beyond conventional static recommendation approaches.

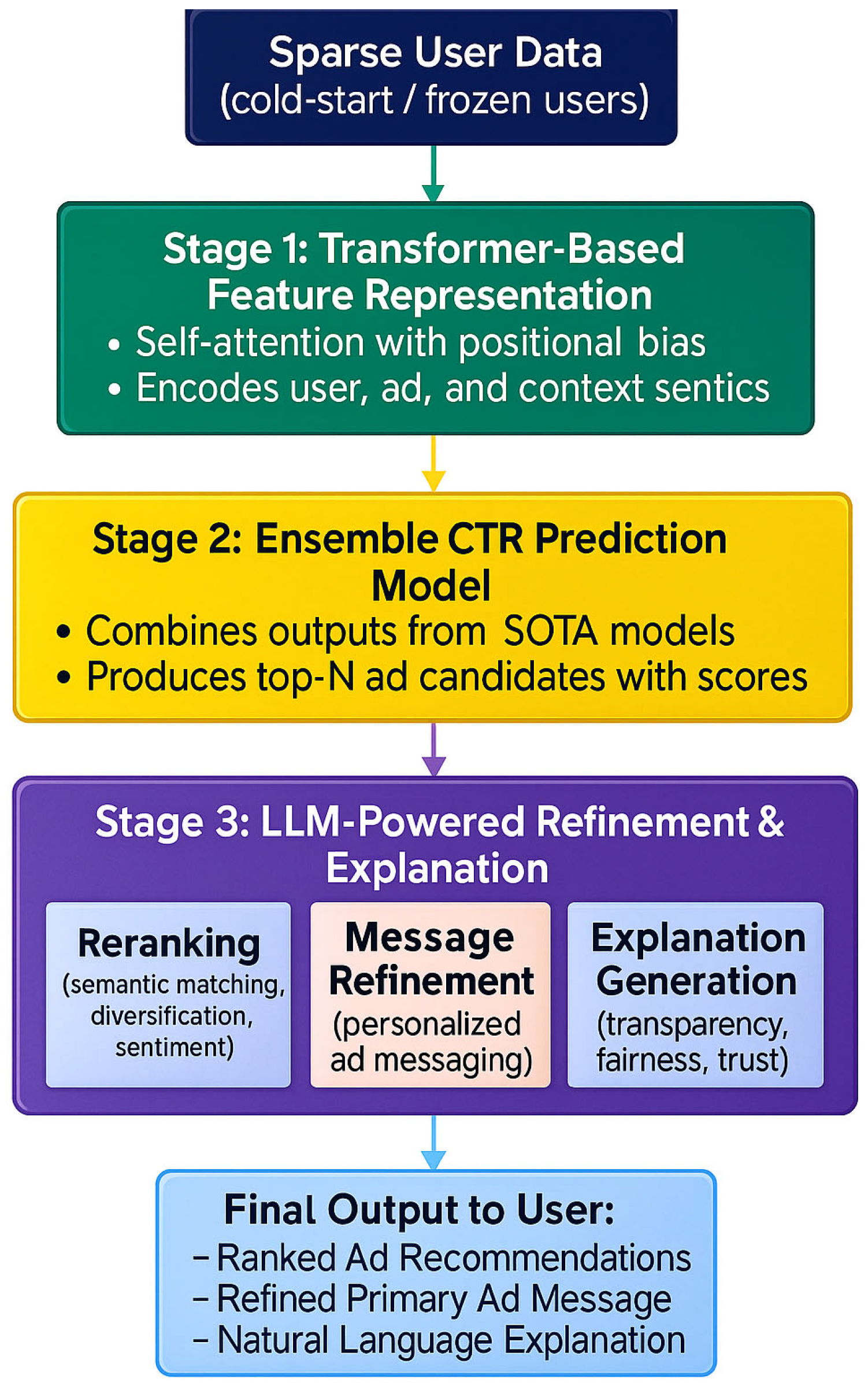

3. Materials and Methods—The Proposed Framework

3.1. Stage 1: Transformer-Enhanced Feature Representation

3.2. Stage 2: Ensemble Model Integration

- Leverages diverse modeling perspectives to improve prediction robustness;

- Employs a learnable weighted aggregation strategy optimized through fine-tuning;

- Outputs calibrated probability scores for candidate advertisements;

- Selects top-performing candidates for further refinement.
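The learnable weighted aggregation listed above can be illustrated with a minimal sketch. It assumes the ensemble output is a convex combination of per-model click probabilities, with weights parameterized by a softmax and fitted by gradient descent on binary cross-entropy. The function names, the synthetic data, and the optimizer settings are illustrative assumptions, not the authors' implementation.

```python
import numpy as np


def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()


def bce(p, y, eps=1e-7):
    """Mean binary cross-entropy between probabilities p and 0/1 labels y."""
    p = np.clip(p, eps, 1 - eps)
    return float(-np.mean(y * np.log(p) + (1 - y) * np.log(1 - p)))


def fit_ensemble_weights(preds, labels, lr=0.5, steps=500, eps=1e-7):
    """Learn convex combination weights over model outputs.

    preds:  (n_samples, n_models) per-model click probabilities.
    labels: (n_samples,) 0/1 click labels.
    """
    logits = np.zeros(preds.shape[1])            # start from uniform weights
    for _ in range(steps):
        w = softmax(logits)
        p = np.clip(preds @ w, eps, 1 - eps)
        dl_dp = (p - labels) / (p * (1 - p)) / len(labels)   # d(BCE)/dp
        grad_w = preds.T @ dl_dp                              # d(BCE)/dw
        # Chain rule through the softmax parameterization.
        logits -= lr * w * (grad_w - w @ grad_w)
    return softmax(logits)


def top_k(scores, k=5):
    """Indices of the k highest-scoring candidates, best first."""
    return np.argsort(-scores)[:k]


# Synthetic stand-ins for three base models (hypothetical data).
rng = np.random.default_rng(0)
n = 2000
p_true = rng.uniform(0.05, 0.95, size=n)
labels = (rng.random(n) < p_true).astype(float)
preds = np.column_stack([
    p_true,                                                    # well-calibrated model
    np.clip(p_true + rng.normal(0, 0.3, size=n), 0.01, 0.99),  # noisy model
    rng.uniform(0.01, 0.99, size=n),                           # uninformative model
])

weights = fit_ensemble_weights(preds, labels)
scores = preds @ weights
top5 = top_k(scores, k=5)
```

Because the weights stay on the probability simplex, the aggregated score remains a valid probability, and the top five indices can be handed to the next stage.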

3.3. Stage 3: LLM-Powered Enhancement System

- Semantic reranking and best-match advertisement. The LLM evaluates the top five advertisements to select the one best aligned with the user’s profile, considering contextual relevance, category diversity, sentiment alignment, fairness, and available feedback. The LLM processes a prompt with the user profile (e.g., location, time, inferred preferences) and ad metadata (e.g., description, category). This process incorporates:

- Contextual Relevance: The LLM matches user attributes to ad metadata more effectively by processing sparse user data alongside rich ad descriptions and categories [31]. We include in the prompt a directive to prioritize ads whose metadata aligns closely with user attributes.

- Recommendation Diversification: Using techniques inspired by Maximal Marginal Relevance [32], the LLM ensures the top recommendations span varied categories rather than redundant offerings. We include a prompt instruction to favor ads from distinct categories, reducing redundancy among the top selections.

- Sentiment-Aware Ranking: The LLM incorporates sentiment analysis on the content to prioritize ads that align with positive user preferences inferred from available data [33]. We include a directive to prioritize ads with positive sentiment that matches user preferences, based on the LLM’s natural language-processing capabilities.

- Bias Mitigation: The system implements fairness constraints to prevent overrepresentation of certain ad categories and ensure balanced recommendations [34]. An instruction is added to ensure balanced category representation among the selected ads, using metadata to identify categories.

- Real-Time Adaptation: The LLM framework can dynamically update rankings based on user feedback signals [35].

- Message Refinement: For the top-ranked advertisement, the LLM generates refined messaging that better aligns with the user’s profile characteristics while maintaining the core business proposition. This personalization process leverages:

- Explanation Generation: The system produces natural language explanations that articulate the reasoning behind the recommendation, increasing transparency and helping users understand why particular advertisements were selected or eliminated. These explanations:

- Reveal the factors influencing the recommendation decision;

- Build user trust by making the recommendation process transparent [38];

- Address the “black box” nature of traditional recommendation systems.

- Cold-Start Problem Mitigation: The LLM leverages its pre-trained knowledge to generate recommendations even in the absence of user interaction history. By employing transfer learning techniques [39], the system can:

- Use pre-trained embeddings to fill gaps in sparse data scenarios;

- Infer ad relevance based on content descriptions and categories;

- Generalize patterns from similar users or products to new entities.
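The prompt-based reranking described in this stage can be sketched as a small prompt builder. The opening sentence is taken from the prompt excerpt in this article, and the directives paraphrase the criteria listed above. The `Ad` class, the field names, the example profile, and the JSON reply format are hypothetical choices for illustration only.

```python
from dataclasses import dataclass


@dataclass
class Ad:
    ad_id: str          # hypothetical identifier
    category: str
    description: str


# Directives paraphrased from the reranking criteria described above.
DIRECTIVES = [
    "Prioritize ads whose metadata aligns closely with the user attributes.",
    "Favor ads from distinct categories to reduce redundancy.",
    "Prioritize ads with positive sentiment that matches user preferences.",
    "Ensure balanced category representation among the selected ads.",
]


def build_rerank_prompt(user_profile: dict, ads: list) -> str:
    """Assemble one prompt covering reranking, refinement, and explanation."""
    profile_lines = [f"- {key}: {value}" for key, value in user_profile.items()]
    ad_lines = [
        f"{i}. [{ad.ad_id}] category={ad.category}; description={ad.description}"
        for i, ad in enumerate(ads, start=1)
    ]
    return "\n".join(
        [
            "You are an expert copywriter and evaluator for targeted advertising.",
            "User profile:",
            *profile_lines,
            "Candidate advertisements (top five from the ensemble):",
            *ad_lines,
            "Ranking directives:",
            *[f"- {d}" for d in DIRECTIVES],
            "Tasks: select the best-matching ad, refine its message for this user,",
            "and briefly explain why it was selected over the others.",
            # Hypothetical output contract so the reply can be parsed downstream.
            'Reply as JSON with keys "best_ad_id", "refined_message", "explanation".',
        ]
    )


profile = {"location": "Thessaloniki", "time": "evening", "website_type": "sports news"}
candidates = [
    Ad("ad-1", "sportswear", "Lightweight running shoes for daily training."),
    Ad("ad-2", "travel", "Weekend city breaks with flexible booking."),
    Ad("ad-3", "electronics", "Wireless earbuds with long battery life."),
    Ad("ad-4", "food delivery", "Dinner delivered in under thirty minutes."),
    Ad("ad-5", "fitness", "Monthly gym membership with no joining fee."),
]
prompt = build_rerank_prompt(profile, candidates)
```

Keeping the directives in a single list makes it straightforward to add, remove, or reword one criterion without touching the rest of the prompt.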

4. Validation Methodology

4.1. Dataset Selection for Validation of Stages 1 and 2

- Temporal attributes: Time period for capturing temporal patterns;

- Contextual information: Application and site category and domain, application name and ID for content understanding;

- Placement characteristics: Advertisement position indicating display location;

- Device specifications: Device type and model for user profiling;

- Network conditions: Connection type for contextual awareness;

- Temporal dimensions: Time period for temporal pattern recognition;

- Application categorization: Application and site category for content classification;

- Advertisement characteristics: Display form of ad material, app level 1 and 2 categories, application ID, tag, and score/rating for comprehensive ad profiling;

- Device metadata: Device name, size, and model release time for user device understanding;

- Network specifications: Connection type for contextual adaptation.

4.2. Validation of Stages 1 and 2

- Stage 1 Validation: Several state-of-the-art recommendation models (DCN-V2, DIFM, FiBiNET, and MMOE) were used as strong baselines for comparison. These models were evaluated on the two benchmark datasets using standard performance metrics, including AUC and accuracy. Subsequently, we modified their embedding mechanisms by integrating transformer-based enhancements, replacing the traditional shallow embeddings. Comparative experiments were then conducted to systematically assess the impact of these transformer-enhanced representations against the original architectures in frozen-start scenarios.

- Stage 2 Validation: To evaluate the effectiveness of ensemble integration, we implemented the weighted ensemble model that combines the outputs of the individually enhanced models (DCN-V2, DIFM, FiBiNET, and MMOE). The ensemble was tested on the same datasets, and its performance was compared directly against each individual model. This allowed us to assess whether combining transformer-enhanced architectures could yield further gains in AUC and accuracy beyond what each model achieved independently.
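As a rough illustration of what replacing shallow embeddings with a transformer-based representation can look like, the sketch below passes per-field embeddings through one single-head self-attention layer with a residual connection. The field names are drawn from the dataset attributes listed earlier, and the embedding size of 32 follows the hyperparameter table. The vocabulary sizes, the single-layer design, and the random weights are assumptions, not the architecture evaluated in the paper.

```python
import numpy as np

rng = np.random.default_rng(0)
EMBED_DIM = 32  # embedding size listed in the hyperparameter table

# Hypothetical vocabulary sizes for four categorical fields.
VOCAB = {"device_type": 5, "connection_type": 4, "site_category": 26, "ad_position": 7}
tables = {
    name: rng.normal(0.0, 0.1, size=(size, EMBED_DIM)) for name, size in VOCAB.items()
}


def embed(sample: dict) -> np.ndarray:
    """Shallow lookup: one embedding row per field -> (n_fields, EMBED_DIM)."""
    return np.stack([tables[name][idx] for name, idx in sample.items()])


def self_attention(x, wq, wk, wv):
    """Single-head scaled dot-product self-attention across feature fields."""
    q, k, v = x @ wq, x @ wk, x @ wv
    scores = q @ k.T / np.sqrt(k.shape[-1])
    scores = scores - scores.max(axis=-1, keepdims=True)   # numerical stability
    attn = np.exp(scores)
    attn = attn / attn.sum(axis=-1, keepdims=True)
    return x + attn @ v, attn                              # residual connection


wq, wk, wv = (
    rng.normal(0.0, EMBED_DIM ** -0.5, size=(EMBED_DIM, EMBED_DIM)) for _ in range(3)
)

sample = {"device_type": 1, "connection_type": 0, "site_category": 12, "ad_position": 3}
shallow = embed(sample)
contextual, attn = self_attention(shallow, wq, wk, wv)
```

Each row of `contextual` now depends on every other field of the same impression, which is the property that lets a downstream CTR model draw on cross-field context when individual fields are sparse.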

4.3. Stage 3 and Integrated Framework Validation Through User Study

- Condition 1: 54% male (n = 12), 46% female (n = 11);

- Condition 2: 58% male (n = 13), 42% female (n = 10);

- Chi-square test: χ²(1, N = 46) = 0.089, p = 0.778.

- 18–24 years: Condition 1 (n = 5), Condition 2 (n = 4);

- 25–34 years: Condition 1 (n = 6), Condition 2 (n = 5);

- 35–44 years: Condition 1 (n = 5), Condition 2 (n = 6);

- 45–54 years: Condition 1 (n = 4), Condition 2 (n = 5);

- 55+ years: Condition 1 (n = 3), Condition 2 (n = 3);

- Chi-square test: χ²(4, N = 46) = 0.542, p = 0.969.

- Advertisement Relevance (3 items): α = 0.89, 7%;

- Behavioral Intention (3 items): α = 0.81, 95%;

- Explanation Effectiveness (3 items): α = 0.87, 9%;

- Comparative Relevance (3 items): α = 0.85, 8%;

- Message Quality (3 items): α = 0.88, 3%.

- Condition 1: AI-enhanced Recommendation System. Advertisements were initially selected from an experimentally curated ad pool using the ensemble prediction model, which estimated the likelihood of ad engagement based on both user-profile data and the assigned website category. The top five ads with the highest predicted click-through probabilities were passed to a large language model (LLM) API for further personalization. The LLM dynamically refined the content of the most relevant ad message and provided a brief explanation based on the user profile and context. It also evaluated whether a reranking of the selected ads was necessary. Participants in this condition were shown the final recommended advertisement (post-refinement), a system-generated explanation message, and the four excluded ads that were not selected as optimal.

- Condition 2: Baseline (Random Selection). Participants were shown five randomly selected advertisements from the same ad pool. One was randomly labeled as the recommended ad, and the remaining four were presented as excluded. No personalization or explanation was provided.

- Advertisement Relevance: A set of three questions assessing how relevant participants perceived the ad to be.

- Behavioral Intention: A three-question group evaluating the likelihood of participants engaging with the ad (e.g., clicking on it).

- Explanation Effectiveness:

  - Condition 1: Questions focused on whether the provided explanation helped participants understand why the ad was shown and whether it increased their likelihood of engaging with the ad.

  - Condition 2: Questions asked participants whether an explanation (hypothetically) would increase their receptiveness to the ad.

- Comparative Relevance: Three questions assessing the perceived relevance of the displayed ad compared to potential alternatives that were not shown.

- Message Quality: A question group evaluating the clarity and communicative effectiveness of the ad’s message.

5. Results and Discussion

5.1. Transformer-Based Feature Representation and Ensembler Model Evaluation Results

5.2. Integrated Framework Evaluation Results

- Advertisement Relevance: Participants in Survey 1 rated advertisements as significantly more relevant (M = 4.15) than those in Survey 2 (M = 3.25), U = 435.0, p = 0.014.

- Behavioral Intention: Survey 1 participants reported significantly greater likelihood of clicking on the ad (M = 4.15) compared to Survey 2 (M = 2.69), U = 542.0, p < 0.001.

- Explanation Effectiveness: Participants in Survey 1, who were shown an explanation, reported higher scores on explanation effectiveness (M = 4.15) than Survey 2 participants (M = 3.23), U = 492.0, p = 0.0017.

- Comparative Relevance: Survey 1 participants rated the displayed ad as more relevant compared to unseen alternatives (M = 4.00) than Survey 2 participants did (M = 2.69), U = 472.0, p = 0.0015. This difference shows that LLM-enhanced reranking produces ad selections users perceive as substantially more relevant than unrefined alternatives. A limitation in evaluating the complete ensembler + LLM system versus the ensembler is that traditional AUC metrics cannot be directly applied, as the LLM operates as a post-processing reranking layer that selects advertisements based on semantic relevance rather than click probability prediction. Since ground-truth click labels correspond to the original dataset interactions and not to the LLM’s semantic reranking decisions, the system’s final output represents a qualitatively different recommendation paradigm that requires alternative evaluation methodologies beyond standard CTR prediction metrics.

- Message Quality: Survey 1 participants rated the clarity of the ad’s message higher (M = 4.23) than Survey 2 participants (M = 3.38), U = 548.0, p < 0.001.
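The U statistics reported above are presumably Mann–Whitney U values, a rank-based test suited to ordinal Likert ratings. The sketch below shows how the statistic is computed with average ranks for ties. The two rating lists are made-up examples, not the study data, and the function names are illustrative.

```python
def rank_with_ties(values):
    """Return 1-based ranks, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1      # mean of ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    return ranks


def mann_whitney_u(a, b):
    """Return (U_a, U_b); the two values always sum to len(a) * len(b)."""
    ranks = rank_with_ties(list(a) + list(b))
    rank_sum_a = sum(ranks[: len(a)])
    u_a = rank_sum_a - len(a) * (len(a) + 1) / 2
    u_b = len(a) * len(b) - u_a
    return u_a, u_b


# Hypothetical 5-point ratings for two independent groups.
group_1 = [5, 4, 4, 3, 5]
group_2 = [3, 2, 4, 1, 2]
u_1, u_2 = mann_whitney_u(group_1, group_2)
```

A U value near the product of the two group sizes means almost every rating in one group exceeds almost every rating in the other. A value near half that product indicates no systematic difference.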

- Relevance/interest: 92.3%;

- Advertisement message: 84.6%;

- Brand: 61.5%;

- Design: 53.8%;

- Explanation: 46.2%;

- Transparency: 38.4%.

- Knowledge distillation—Training a lightweight student model to replace the current ensemble approach, preserving most accuracy while significantly improving inference speed.

- Simplified architectures—Evaluating whether slightly less accurate but more efficient models could provide suitable performance for certain use cases [43].
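The knowledge distillation direction above can be sketched as follows, assuming a logistic-regression student trained on a blend of the teacher's predicted probabilities and the observed click labels. The synthetic teacher, the blending weight `alpha`, and the training settings are illustrative assumptions. In practice the teacher scores would come from the ensemble.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def distill_student(x, y, teacher_p, alpha=0.5, lr=0.5, epochs=500):
    """Fit a logistic-regression student on teacher scores blended with labels.

    Binary cross-entropy is linear in its target, so weighting the soft
    (teacher) and hard (label) losses by alpha equals training on the
    blended target computed below.
    """
    target = alpha * teacher_p + (1.0 - alpha) * y
    w = np.zeros(x.shape[1])
    for _ in range(epochs):
        p = sigmoid(x @ w)
        w -= lr * x.T @ (p - target) / len(y)   # gradient of mean BCE w.r.t. w
    return w


rng = np.random.default_rng(0)
x = rng.normal(size=(2000, 5))
# Stand-in teacher: a fixed linear scorer replaces the real ensemble here.
teacher_p = sigmoid(x @ np.array([1.0, -0.5, 0.8, 0.0, -1.2]))
y = (rng.random(2000) < teacher_p).astype(float)

student_w = distill_student(x, y, teacher_p)
gap = float(np.mean(np.abs(sigmoid(x @ student_w) - teacher_p)))
```

The value `gap` measures how closely the student reproduces the teacher's scores. A production student would be a compact neural model rather than a linear one, but the training target is built the same way.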

6. Conclusions

- Versus Traditional CTR Models: Compared to classic approaches, this framework adds semantic understanding and explainability, addressing fundamental limitations of these models.

- Versus Pure LLM Approaches: Unlike recent work that uses LLMs as end-to-end recommendation engines, this hybrid approach leverages the strengths of both statistical modeling and natural language processing while mitigating their respective weaknesses.

- Versus Other Cold-Start Solutions: The framework offers advantages over traditional cold-start techniques such as meta-learning and content-based filtering by incorporating dynamic contextual understanding and transparent explanations.

- Versus Explainable Recommendation Systems: While explainable recommendation systems have gained attention, this framework goes beyond post hoc explanations by integrating explanation generation directly into the recommendation process.

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| ML | Machine Learning |
| ANN | Artificial Neural Networks |
| CTR | Click-Through Rate |

Appendix A

- Questionnaire

Demographic Information

References

1. Zhang, W.; Qin, J.; Guo, W.; Tang, R.; He, X. Deep Learning for Click-Through Rate Estimation. arXiv 2021, arXiv:2104.10584.

2. Reddy, S.; Beg, H.; Overwijk, A.; O’Byrne, S. Sequence Learning: A Paradigm Shift for Personalized Ads Recommendations. 2024. Available online: https://engineering.fb.com/2024/11/19/data-infrastructure/sequence-learning-personalized-ads-recommendations/ (accessed on 19 November 2024).

3. Viktoratos, I.; Tsadiras, A. A Machine Learning Approach for Solving the Frozen User Cold-Start Problem in Personalized Mobile Advertising Systems. Algorithms 2022, 15, 72.

4. Wang, R.; Shivanna, R.; Cheng, D.; Jain, S.; Lin, D.; Hong, L.; Chi, E. DCN V2: Improved Deep & Cross Network and Practical Lessons for Web-scale Learning to Rank Systems. In Proceedings of the WWW ’21: The Web Conference 2021, Ljubljana, Slovenia, 19–23 April 2021; Volume 2, pp. 1785–1797.

5. Zhao, F.; Huang, C.; Xu, H.; Yang, W.; Han, W. RGMeta: Enhancing Cold-Start Recommendations with a Residual Graph Meta-Embedding Model. Electronics 2024, 13, 3473.

6. Ye, Z.; Zhang, D.J.; Zhang, H.; Zhang, R.; Chen, X.; Xu, Z. Cold Start to Improve Market Thickness on Online Advertising Platforms: Data-Driven Algorithms and Field Experiments. Manag. Sci. 2022, 69, 3838–3860.

7. Ouyang, W.; Zhang, X.; Ren, S.; Li, L.; Zhang, K.; Luo, J.; Liu, Z.; Du, Y. Learning Graph Meta Embeddings for Cold-Start Ads in Click-Through Rate Prediction. In Proceedings of the SIGIR ’21: The 44th International ACM SIGIR Conference on Research and Development in Information Retrieval, Online, 11–15 July 2021; Volume 1, pp. 1157–1166.

8. Liu, Y.; Ma, L.; Wang, M. GAIN: A Gated Adaptive Feature Interaction Network for Click-Through Rate Prediction. Sensors 2022, 22, 7280.

9. Wang, Z.; She, Q.; Zhang, P.; Zhang, J. ContextNet: A Click-Through Rate Prediction Framework Using Contextual information to Refine Feature Embedding. arXiv 2017.

10. Dilbaz, S.; Saribas, H. STEC: See-Through Transformer-based Encoder for CTR Prediction. arXiv 2023.

11. Wang, D.; Salamatian, K.; Xia, Y.; Deng, W.; Zhang, Q. BERT4CTR: An Efficient Framework to Combine Pre-trained Language Model with Non-textual Features for CTR Prediction. In Proceedings of the KDD ’23: The 29th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Long Beach, CA, USA, 6–10 August 2023; Volume 1, pp. 5039–5050.

12. Muhamed, A.; Keivanloo, I.; Perera, S.; Mracek, J.; Xu, Y.; Cui, Q.J.; Rajagopalan, S.; Zeng, B.; Chilimbi, T. CTR-BERT: Cost-effective knowledge distillation for billion-parameter teacher models. In Proceedings of the 35th Conference on Neural Information Processing Systems, Online, 6–14 December 2021; Available online: https://neurips2021-nlp.github.io/accepted_papers.html (accessed on 5 August 2025).

13. Huang, J.; Qu, M.; Li, L.; Wei, Y. AdGPT: Explore Meaningful Advertising with ChatGPT. ACM Trans. Multimedia Comput. Commun. Appl. 2025, 21, 1–23.

14. Cheng, H.-T.; Koc, L.; Harmsen, J.; Shaked, T.; Chandra, T.; Aradhye, H.; Anderson, G.; Corrado, G.; Chai, W.; Ispir, M.; et al. Wide & Deep Learning for Recommender Systems. In Proceedings of the 1st Workshop on Deep Learning for Recommender Systems (DLRS 2016), Boston, MA, USA, 15 September 2016; pp. 7–10.

15. Wang, R.; Fu, B.; Fu, G.; Wang, M. Deep & Cross Network for Ad Click Predictions. In Proceedings of the ADKDD’17: The 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Halifax, NS, Canada, 13–17 August 2017; Volume 1, pp. 1–7.

16. Guo, H.; Tang, R.; Ye, Y.; Li, Z.; He, X. DeepFM: A Factorization-Machine based Neural Network for CTR Prediction. arXiv 2017, arXiv:1703.04247.

17. Xi, X.; Leng, S.; Gong, Y.; Li, D. An accuracy improving method for advertising click through rate prediction based on enhanced xDeepFM model. arXiv 2022.

18. Song, W.; Shi, C.; Xiao, Z.; Duan, Z.; Xu, Y.; Zhang, M.; Tang, J. AutoInt. In Proceedings of the CIKM ’19: The 28th ACM International Conference on Information and Knowledge Management, Beijing, China, 3–7 November 2019; pp. 1161–1170.

19. Li, Y.; Wang, J.; Dai, T.; Zhu, J.; Yuan, J.; Zhang, R.; Xia, S.-T. RAT: Retrieval-Augmented Transformer for Click-Through Rate Prediction. In Proceedings of the WWW ’24: The ACM Web Conference 2024, Singapore, 13–17 May 2024; pp. 867–870.

20. Li, Z.; Cui, Z.; Wu, S.; Zhang, X.; Wang, L. Fi-GNN: Modeling Feature Interactions via Graph Neural Networks for CTR Prediction. In Proceedings of the CIKM ’19: The 28th ACM International Conference on Information and Knowledge Management, Beijing, China, 3–7 November 2019; pp. 539–548.

21. Schifferer, B. Solving the Cold-Start Problem using Two-Tower Neural Networks for NVIDIA’s E-Mail Recommender Systems. Available online: https://medium.com/nvidia-merlin/solving-the-cold-start-problem-using-two-tower-neural-networks-for-nvidias-e-mail-recommender-2d5b30a071a4 (accessed on 4 February 2025).

22. Sanner, S.; Balog, K.; Radlinski, F.; Wedin, B.; Dixon, L. Large Language Models are Competitive Near Cold-start Recommenders for Language- and Item-based Preferences. In Proceedings of the RecSys ’23: Seventeenth ACM Conference on Recommender Systems, Singapore, 18–22 September 2023; pp. 890–896.

23. Zhang, W.; Wu, C.; Li, X.; Wang, Y.; Dong, K.; Wang, Y.; Dai, X.; Zhao, X.; Guo, H.; Tang, R. LLMTreeRec: Unleashing the Power of Large Language Models for Cold-Start Recommendations. arXiv 2024.

24. Liu, R.; Chen, H.; Bei, Y.; Zhou, Z.; Chen, L.; Shen, Q.; Huang, F.; Karray, F.; Wang, S. FilterLLM: Text-To-Distribution LLM for Billion-Scale Cold-Start Recommendation. arXiv 2025.

25. Ramel, D. Google Expands AI Portfolio with Gemini 2.0, Enhancing Multimodal Capabilities. Available online: https://pureai.com/articles/2025/02/10/google-unveils-gemini-2-0.aspx (accessed on 6 February 2025).

26. Shu, D.; Zhao, H.; Liu, X.; Demeter, D.; Du, M.; Zhang, Y. LawLLM: Law Large Language Model for the US Legal System. In Proceedings of the CIKM ’24: The 33rd ACM International Conference on Information and Knowledge Management, Boise, ID, USA, 21–25 October 2024; pp. 4882–4889.

27. Choi, I.; Kim, J.; Kim, W.C. An explainable prediction for dietary-related diseases via language models. Nutrients 2024, 16, 686.

28. Zhang, W.; Bei, Y.; Yang, L.; Zou, H.P.; Zhou, P.; Liu, A.; Li, Y.; Chen, H.; Wang, J.; Wang, Y.; et al. Cold-Start Recommendation towards the Era of Large Language Models (LLMs): A Comprehensive Survey and Roadmap. arXiv 2025.

29. Han, W.; Zhang, Z.; Zhang, Y.; Yu, J.; Chiu, C.-C.; Qin, J.; Gulati, A.; Pang, R.; Wu, Y. ContextNet: Improving Convolutional Neural Networks for Automatic Speech Recognition with Global Context. arXiv 2020.

30. Ma, J.; Zhao, Z.; Yi, X.; Chen, J.; Hong, L.; Chi, E.H. Modeling Task Relationships in Multi-task Learning with Multi-gate Mixture-of-Experts. In Proceedings of the KDD ’18: The 24th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, London, UK, 19–23 August 2018; pp. 1930–1939.

31. Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv 2019, arXiv:1810.04805.

32. Carbonell, J.; Goldstein, J. The use of MMR, diversity-based reranking for reordering documents and producing summaries. In Proceedings of the SIGIR98: 21st Annual ACM/SIGIR International Conference on Research and Development in Information Retrieval, Melbourne, Australia, 24–28 August 1998; pp. 335–336.

33. Li, X.; Zhang, Z.; Stefanidis, K. Sentiment-aware Analysis of Mobile Apps User Reviews Regarding Particular Updates. In Proceedings of the Thirteenth International Conference on Software Engineering Advances (ICSEA), Nice, France, 14–18 October 2018.

34. Mehrabi, N.; Morstatter, F.; Saxena, N.; Lerman, K.; Galstyan, A. A survey on bias and fairness in machine learning. ACM Comput. Surv. 2021, 54, 1–35.

35. Grbovic, M.; Cheng, H. Real-time Personalization using Embeddings for Search Ranking at Airbnb. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, London, UK, 19–23 August 2018; pp. 311–320.

36. Viktoratos, I.; Tsadiras, A.; Bassiliades, N. Combining community-based knowledge with association rule mining to alleviate the cold start problem in context-aware recommender systems. Expert Syst. Appl. 2018, 101, 78–90.

37. Covington, P.; Adams, J.; Sargin, E. Deep Neural Networks for YouTube Recommendations. In Proceedings of the RecSys’16: 10th ACM Conference on Recommender Systems, Boston, MA, USA, 15–19 September 2016; pp. 191–198.

38. Ribeiro, M.T.; Singh, S.; Guestrin, C. “Why Should I Trust You?” Explaining the Predictions of Any Classifier. In Proceedings of the KDD’16: 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 1135–1144.

39. Pan, S.J.; Yang, Q. A Survey on Transfer Learning. IEEE Trans. Knowl. Data Eng. 2010, 22, 1345–1359.

40. Tam, K.Y.; Shuk, Y.H. Understanding the Impact of Web Personalization on User Information Processing and Decision Outcomes. MIS Q. 2006, 30, 865–890.

41. Petty, R.E.; Cacioppo, J.T. The Elaboration Likelihood Model of Persuasion. In Advances in Experimental Social Psychology; Berkowitz, L., Ed.; Academic Press: Cambridge, MA, USA, 1986; Volume 19, pp. 123–205.

42. Doshi-Velez, F.; Kim, B. Towards A Rigorous Science of Interpretable Machine Learning. arXiv 2017.

43. Uruqi, A.; Viktoratos, I. Exploiting Spiking Neural Networks for Click-Through Rate Prediction in Personalized Online Advertising Systems. Forecasting 2025, 7, 38.

You are an expert copywriter and evaluator for targeted advertising. Given a user profile [attributes: location, time, website type etc.] and a list of five advertisements [categories, descriptions], perform the following:

Output format:

Constraints:

| Parameter | DigiX | Avazu |
|---|---|---|
| Learning rate | 0.001 | 0.001 |
| Optimizer | Adam | Adam |
| Batch size | 2086 | 2086 |
| Embedding size | 32 | 32 |
| Activation functions | ReLU in hidden layers, Sigmoid in output | ReLU in hidden layers, Sigmoid in output |
| Loss function | BCE | BCE |
| Epochs | 20 with early stopping | 20 with early stopping |
| Weight initialization | Xavier | Xavier |

| Model Name | AUC ROC | Accuracy | AUC PI (%) |
|---|---|---|---|
| DIFM | 0.7179 | 0.8255 | 0.69 |
| DIFM-TR | 0.7229 | 0.8256 | |
| DCN | 0.7192 | 0.8256 | 0.45 |
| DCN-TR | 0.7224 | 0.8273 | |
| MMOE | 0.7188 | 0.8254 | 0.19 |
| MMOE-TR | 0.7202 | 0.8283 | |
| FiBiNET | 0.7203 | 0.8271 | 0.15 |
| FiBiNET-TR | 0.7214 | 0.8272 | |
| ENSEMBLER | 0.7251 | 0.8286 | 0.3 |
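The PI (%) column is not defined in the surviving text. The reported values appear consistent with the relative AUC improvement of each transformer-enhanced (-TR) model over its baseline, and of the ensemble over the best single model. Assuming that definition, the calculation can be sketched as follows, using the AUC values from the table above; the function and variable names are illustrative.

```python
def relative_improvement(baseline_auc: float, improved_auc: float) -> float:
    """Percentage improvement of improved_auc over baseline_auc."""
    return (improved_auc - baseline_auc) / baseline_auc * 100.0


# (baseline AUC, transformer-enhanced AUC) pairs taken from the table above.
auc_pairs = {
    "DIFM": (0.7179, 0.7229),
    "DCN": (0.7192, 0.7224),
    "MMOE": (0.7188, 0.7202),
    "FiBiNET": (0.7203, 0.7214),
}

pi = {name: relative_improvement(base, tr) for name, (base, tr) in auc_pairs.items()}

# Assumption: the ensemble is compared against the best single enhanced model.
best_single_auc = max(tr for _, tr in auc_pairs.values())
ensemble_pi = relative_improvement(best_single_auc, 0.7251)

for name, value in pi.items():
    print(f"{name}: {value:.2f}%")
print(f"ENSEMBLER vs. best single model: {ensemble_pi:.2f}%")
```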

| Model Name | AUC ROC | Accuracy | PI (%) |
|---|---|---|---|
| DIFM | 0.6581 | 0.9497 | 3.45 |
| DIFM-TR | 0.6808 | 0.9626 | |
| DCN | 0.6598 | 0.9614 | 0.33 |
| DCN-TR | 0.6619 | 0.9623 | |
| MMOE | 0.6777 | 0.9630 | 1.36 |
| MMOE-TR | 0.6870 | 0.9630 | |
| FiBiNET | 0.6087 | 0.9267 | 1.78 |
| FiBiNET-TR | 0.6178 | 0.9462 | |
| ENSEMBLER | 0.6893 | 0.9684 | 0.33 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Uruqi, A.; Viktoratos, I.; Tsadiras, A. A Novel Framework Leveraging Large Language Models to Enhance Cold-Start Advertising Systems. Future Internet 2025, 17, 360. https://doi.org/10.3390/fi17080360