Abstract

The integration of digital twin technology with robotic automation holds significant promise for advancing sustainable horticulture in controlled environment agriculture. This article presents DigiHortiRobot, a novel AI-driven digital twin architecture tailored for hydroponic greenhouse systems. The proposed framework integrates real-time sensing, predictive modeling, task planning, and dual-arm robotic execution within a modular, IoT-enabled infrastructure. DigiHortiRobot is structured into three progressive implementation phases: (i) monitoring and data acquisition through a multimodal perception system; (ii) decision support and virtual simulation for scenario analysis and intervention planning; and (iii) autonomous execution with feedback-based model refinement. The Physical Layer encompasses crops, infrastructure, and a mobile dual-arm robot; the virtual layer incorporates semantic modeling and simulation environments; and the synchronization layer enables continuous bi-directional communication via a nine-tier IoT architecture inspired by FIWARE standards. A robot task assignment algorithm is introduced to support operational autonomy while maintaining human oversight. The system is designed to optimize horticultural workflows such as seeding and harvesting while allowing farmers to interact remotely through cloud-based interfaces. Compared to previous digital agriculture approaches, DigiHortiRobot enables closed-loop coordination among perception, simulation, and action, supporting real-time task adaptation in dynamic environments. Experimental validation in a hydroponic greenhouse confirmed robust performance in both seeding and harvesting operations, achieving over 90% accuracy in localizing target elements and successfully executing planned tasks. The platform thus provides a strong foundation for future research in predictive control, semantic environment modeling, and scalable deployment of autonomous systems for high-value crop production.

1. Introduction

The COVID-19 pandemic has underscored the critical role of digital technologies in sustaining and enhancing operations across sectors. Among them, agriculture, an essential pillar of the primary economy, stands to benefit significantly from this digital transition. Embracing emerging technologies is likely to prove pivotal for improving competitiveness, addressing structural inefficiencies, and mitigating risks associated with future food supply crises.

Simultaneously, demographic projections by the United Nations anticipate a global population of 8.5 billion by 2030 and approximately 9.7 billion by 2050 [1]. These projections intensify the demand for sustainable agricultural intensification, particularly under constraints such as declining arable land, labor scarcity, and the low profitability of small- to medium-scale farms.

To respond to these challenges, researchers have proposed a wide range of innovative approaches. Controlled environment agriculture systems, such as hydroponics and aeroponics, have demonstrated the potential to reduce water usage, eliminate pesticide dependence, and lower greenhouse gas emissions [2,3]. In parallel, the integration of sensory systems with intelligent analytics and machine learning has enabled enhanced crop monitoring, precision interventions, and yield optimization [4]. Recently, explainable AI approaches have also been applied to greenhouse decision-making; for example, Abekoon et al. (2025) demonstrated the use of interpretable machine learning models for irrigation scheduling and yield prediction in controlled environments [5]. Additionally, robotics platforms equipped with advanced perception and learning algorithms have proven effective in automating repetitive and labor-intensive horticultural tasks [6].

Despite these technological advancements, real-world deployments remain fragmented, with limited interoperability between sensing, control, and actuation components. Unlocking the full potential of smart farming technologies requires integrative frameworks that bridge the physical and digital domains. In this context, digital twins offer a promising paradigm for enabling seamless synchronization, predictive intelligence, and closed-loop control in precision agriculture.

This article introduces DigiHortiRobot, a novel digital twin architecture designed for hydroponic greenhouse horticulture using a dual-arm mobile robotic platform. The system integrates sensing, control, predictive modeling, and autonomous task execution within an IoT-enabled infrastructure. DigiHortiRobot provides the following:

- Real-time monitoring of crop status, environmental variables (e.g., CO2, temperature, humidity, pH), and robotic actions;

- Simulation-based inference for seeding and harvesting optimization;

- Self-diagnosis and predictive maintenance based on robot telemetry;

- Closed-loop refinement of decision policies via interaction-aware feedback.

DigiHortiRobot explicitly places the farmer at the center of the decision loop, enabling remote monitoring, scenario simulation, planning, and validation via a virtual representation of the greenhouse. By integrating robotics, artificial intelligence, Big Data analytics, and cloud computing, DigiHortiRobot offers a robust framework for sustainable, high-efficiency greenhouse horticulture.

The main contributions of this article are as follows: (i) the design of a unified and modular digital twin architecture tailored for smart greenhouses; (ii) the implementation of AI-driven decision-making layers within the digital twin framework; and (iii) a demonstration of a three-phase execution model encompassing monitoring, virtual planning, and physical deployment for key horticultural tasks. Furthermore, the proposed system introduces a unified virtualization approach capable of representing biological, infrastructural, and robotic elements, overcoming current limitations in agricultural digital twin prototypes.

The rest of the article is organized as follows. Section 2 reviews related work on digital twins and their applications in agriculture. Section 3 introduces the conceptual framework and system architecture of DigiHortiRobot. Section 4 describes the three-phase implementation strategy. Section 5 discusses the main contributions, potential applications, and current limitations. Finally, Section 6 concludes the article by summarizing the key findings and proposing directions for future research and practical deployment.

2. Background and Related Work

Digital twin technology has emerged as a powerful paradigm for modeling and managing complex systems by maintaining a real-time, synchronized representation between physical entities and their virtual counterparts. Although the concept was formalized by Michael Grieves in the early 2000s [7,8], its roots trace back to NASA’s use of mirrored simulation systems for spacecraft monitoring in the 1970s [9]. NASA later defined a digital twin as “an integrated multi-physics, multi-scale, probabilistic simulation of a system that uses the best available models, sensor data, and fleet history to mirror its physical counterpart” [10]. This foundational concept has since been adopted across sectors such as aerospace, automotive, manufacturing, and energy [11,12,13].

In the domain of Product Lifecycle Management, digital twins have demonstrated value in enabling continuous, data-informed decision-making throughout the life cycle of engineered assets. These implementations typically rely on structured environments with well-characterized sensor integration, standardized control systems, and repeatable operating conditions [12], particularly within industrial and engineering contexts.

By contrast, the application of digital twins in agriculture presents distinctive challenges. Agricultural environments are unstructured, biologically heterogeneous, and subject to temporal and climatic variability. Furthermore, they involve complex interactions with living systems—including plants, insects, and microorganisms—that require perceptual adaptability, semantic reasoning, and robust real-time control strategies [14,15]. These characteristics complicate the development of accurate and responsive virtual representations. Several recent reviews emphasize this complexity, highlighting the need for ontological frameworks, AIoT-driven architectures, and integration strategies capable of supporting interoperability and reasoning [16,17,18].

Initial explorations of digital twins in agriculture have primarily focused on isolated functions, such as field monitoring, crop yield estimation, and irrigation control. For example, Verdouw et al. proposed a conceptual classification of agricultural digital twins based on the IoT-mediated integration of field data with simulation models [14]. Pylianidis et al. emphasized the need to transition from conceptual prototypes to operational frameworks that tightly couple sensing, modeling, decision-making, and actuation [15]. More recently, comprehensive analyses by Purcell and Neubauer, as well as Gong et al., have documented that most digital twin implementations in agriculture remain at early stages of development and that current approaches are often fragmented and lacking integration [19,20].

Parallel advances in agricultural robotics have led to the development of mobile systems capable of performing targeted tasks such as fruit detection, harvesting, and environmental monitoring. Notable examples include cucumber-picking robots [4] and dual-arm platforms for automated aubergine harvesting [6]. However, these systems are typically deployed in isolation, without integration into a digital twin feedback loop. Consequently, they lack predictive modeling, real-time simulation, and semantic feedback, core components of the digital twin concept.

To date, few research studies have attempted to bridge robotic automation with digital twin architectures in agriculture. Existing approaches often rely on simplified robotic models and remain limited to passive data acquisition or remote supervision. Architectures capable of active learning, semantic environment modeling, or hybrid edge–cloud control remain rare. Furthermore, current systems seldom exploit feedback mechanisms that combine task planning, execution, and policy refinement in closed-loop form. Recent contributions, such as those by Rahman et al. and Bua et al., have begun to address this gap by integrating machine learning and reinforcement learning into digital twin systems for smart greenhouse management and predictive synchronization [21,22]. However, these efforts are still in their early stages and often focus on specific functionalities or simulation layers, lacking full integration with robotic platforms capable of executing tasks autonomously within real greenhouse environments.

Building on these initial advances, this work proposes DigiHortiRobot, a novel digital twin architecture that unifies the virtual and physical representations of crops, greenhouse infrastructure, and robotic systems. The proposed system advances the state of the art by embedding prediction, task optimization, semantic modeling, and autonomous execution within a modular, IoT-enabled framework. By integrating perception, simulation, control, and decision-making, DigiHortiRobot transcends monitoring-centric approaches, enabling real-time self-adaptation in dynamic horticultural environments.

3. Concept and Architecture of DigiHortiRobot

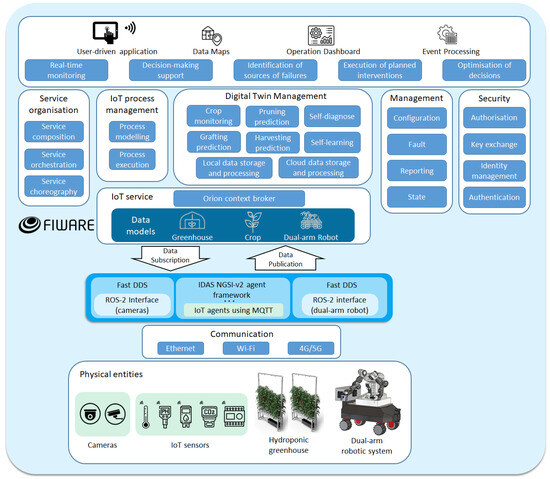

The DigiHortiRobot architecture is designed to support intelligent and sustainable horticulture in hydroponic greenhouse environments through a fully integrated digital twin framework. The system establishes a synchronized loop between physical components, comprising biological, robotic, and infrastructural elements, and their digital counterparts, modeled using artificial intelligence, semantic representations, and simulation techniques. An IoT-enabled communication infrastructure ensures real-time data exchange, facilitating continuous alignment between virtual models and physical processes. The architecture adheres to the foundational structure of a digital twin: (i) a physical system, (ii) its virtual representation, and (iii) a bidirectional data interface that enables convergence and interaction between the two domains [8,23].

To enable the practical deployment of the proposed architecture, DigiHortiRobot is structured into three interdependent components: (1) the Physical Layer, comprising all real-world entities and sensing devices; (2) the Virtual Layer, encompassing AI-driven models and synchronization infrastructure; and (3) the Implementation Framework, which governs task execution and learning across multiple operational phases.

Figure 1 illustrates the core structure of DigiHortiRobot, organized into three primary layers: the Physical Layer (bottom), the Virtual Layer (top), and the Implementation Framework (center). The Physical Layer encompasses biological systems, sensors, and robotic components operating in the greenhouse. Data collected from this layer is transmitted upward through the Communication and IoT Service layers using protocols such as ROS 2 and MQTT. The Virtual Layer contains dynamic models of crops, environment, and robot behavior, supported by AI-based reasoning and semantic representations. Bidirectional arrows indicate the synchronization flow: sensor data is ingested and used for simulation, while planned actions and task policies are sent back to the physical actuators. The central Implementation Framework governs decision-making, workflow orchestration, and task execution across phases. This modular structure ensures scalability, interoperability, and continuous adaptation between real-world conditions and digital reasoning. These components are described in more detail in the following subsections.

Figure 1. Overview of the DigiHortiRobot architecture, showing the Physical Layer, Virtual Layer, and Implementation Framework.

3.1. Physical Layer Components

The Physical Layer comprises all tangible elements involved in horticultural production and autonomous robotic manipulation:

- Biological components: Crops such as aubergines and tomatoes, modeled from seedling to harvest, incorporating phenotypic traits and growth stages.

- Greenhouse infrastructure: A hydroponic cultivation setup equipped with environmental sensors for CO2 concentration, temperature, relative humidity, and pH. QR tags are embedded within the planters to facilitate spatial identification and traceability.

- Robotic system: A mobile dual-arm platform capable of executing horticultural operations such as seeding [24] and harvesting [6]. These tasks are both time-critical and labor-intensive, representing key phases in the crop production process.

The robotic platform integrates an advanced multimodal perception system, combining RGB and Time-of-Flight (ToF) vision to enable a 3D reconstruction of the environment and semantic mapping. This enables detection of relevant plant structures and facilitates complex manipulation in confined and delicate environments. The robot also incorporates learning-from-demonstration capabilities to generalize manipulation strategies across tasks with varying plant geometries and operational contexts, enhancing adaptability and reducing the need for manual reprogramming.
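As an illustration of how a ToF depth reading contributes to 3D reconstruction, the sketch below back-projects a single pixel into a camera-frame point using the standard pinhole model. The `Intrinsics` class, the `backproject` function, and the numeric values are assumptions introduced here for illustration; the article does not specify the camera model or calibration used on the robot.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Intrinsics:
    """Pinhole camera intrinsics in pixels (hypothetical values, not from the article)."""
    fx: float
    fy: float
    cx: float
    cy: float


def backproject(u: float, v: float, depth_m: float,
                k: Intrinsics) -> Optional[Tuple[float, float, float]]:
    """Map pixel (u, v) with depth along the optical axis (metres) to camera-frame XYZ."""
    if depth_m <= 0.0:
        # Treat non-positive depth as an invalid ToF return (assumed convention).
        return None
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return (x, y, depth_m)


# Example: a pixel 100 columns right of the principal point, 2 m away.
camera = Intrinsics(fx=500.0, fy=500.0, cx=320.0, cy=240.0)
point = backproject(420.0, 240.0, 2.0, camera)
```

Applying the same mapping to every valid depth pixel yields a point cloud that can then be colored with the registered RGB image and labeled for semantic mapping.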

3.2. Virtual Layer and Synchronization

The Virtual Layer consists of dynamic, AI-enhanced models of the crops, greenhouse environment, and robotic system. These models are continuously updated with real-time sensor data, enabling the predictive simulation of future states, fault diagnostics, and virtual testing of robotic actions. The robot’s virtual agent mirrors its kinematic chain, task states, and interaction constraints, supporting anticipatory planning and online decision refinement.

Synchronization between the physical and virtual domains is structured through a nine-layer IoT architecture inspired by FIWARE-compliant systems [14]:

- Device Layer: Incorporates all edge-level sensors and actuators embedded within the greenhouse and the robotic system.

- Communication Layer: Utilizes heterogeneous networking protocols (e.g., Wi-Fi, Ethernet, 4G/5G) for resilient and low-latency data transmission. Communication between the physical entities (e.g., dual-arm robot, cameras, and sensors) and higher-level services is facilitated by ROS 2 interfaces for perception and control, as well as IoT agents using MQTT for structured context data exchange.

- IoT Service Layer: Employs middleware (e.g., Orion Context Broker) for context-aware data aggregation and control dispatch.

- Digital Twin Management Layer: Handles the instantiation, storage, and synchronization of digital counterparts, including environmental models and plant lifecycle representations.

- IoT Process Management Layer: Specifies task execution logic, procedural dependencies, and event-based triggers through workflow engines.

- Service Organization Layer: Performs the orchestration and composition of services across abstraction levels, enabling scalability and modularity.

- Security Layer: Ensures system integrity and data privacy through identity management, authorization, and authentication.

- Management Layer: Oversees configuration, resource allocation, fault detection, and system status monitoring.

- Application Layer: Provides a user-friendly interface for farmers and stakeholders to interact with the system.
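To make the context-data exchange between the Device and IoT Service layers more concrete, the following sketch builds an entity in the NGSI-v2 normalized form accepted by the Orion Context Broker, where each attribute carries a `value` and a `type`. The identifier scheme, the entity type `Planter`, and the attribute names are assumptions made for this example and are not taken from the article.

```python
import json
from typing import Dict


def to_ngsi_entity(planter_id: str, readings: Dict[str, float]) -> dict:
    """Build an NGSI-v2 style entity from a flat dict of numeric sensor readings."""
    entity = {
        "id": f"urn:greenhouse:planter:{planter_id}",  # hypothetical id scheme
        "type": "Planter",                             # hypothetical entity type
    }
    for name, value in readings.items():
        # Normalized NGSI-v2 attribute: a value plus its declared type.
        entity[name] = {"value": value, "type": "Number"}
    return entity


# Serialize one set of environmental readings for publication.
payload = json.dumps(
    to_ngsi_entity("P-07", {"temperature": 24.5, "relativeHumidity": 61.0,
                            "co2": 420.0, "pH": 6.1})
)
```

A payload of this shape could be forwarded by an IoT agent or posted to the broker directly; the transport details depend on the deployment and are left out here.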

3.3. Functional Capabilities and Digital Execution Cycle

The initial deployment of DigiHortiRobot focused on demonstrating the system’s ability to autonomously execute core horticultural tasks, specifically seeding and harvesting, by leveraging predictive simulation, real-time data integration, and bidirectional synchronization between the Physical and Virtual Layers. These capabilities are supported by a unified architecture that combines multimodal perception, semantic mapping, and AI-based decision support.

Rather than functioning as a passive monitoring system, DigiHortiRobot transforms collected data, such as plant imagery and environmental sensor streams, into structured digital representations that drive action planning and validation. These representations are synchronized with the virtual map of the greenhouse environment, allowing a simulation-based assessment of interventions prior to execution. The Virtual Layer not only models geometric layouts and plant attributes but also encodes operational constraints and expected outcomes for planned actions.

This architecture enables the system to autonomously propose and evaluate alternative intervention strategies. Farmers can specify desired crop attributes (e.g., ripeness index or harvest diameter), and the system will simulate candidate operations, rank outcomes using predictive metrics, and suggest optimal plans. Once validated by the user, the robotic platform executes the selected strategy. Execution results are logged and used to incrementally update the decision models, ensuring adaptive behavior over time.
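The ranking step described above can be sketched as a weighted score over simulated outcome metrics. This is a minimal illustration under stated assumptions: the `CandidatePlan` structure, the metric names, the normalization to [0, 1], and the linear weighting are hypothetical choices, since the article does not specify which predictive metrics or ranking rule the system uses.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class CandidatePlan:
    name: str
    predicted: Dict[str, float]  # metric name -> simulated value, assumed in [0, 1]


def rank_plans(plans: List[CandidatePlan],
               weights: Dict[str, float]) -> List[CandidatePlan]:
    """Order candidate plans from best to worst by weighted sum of predicted metrics."""
    def score(plan: CandidatePlan) -> float:
        # Metrics missing from a plan's prediction contribute zero.
        return sum(w * plan.predicted.get(metric, 0.0)
                   for metric, w in weights.items())
    return sorted(plans, key=score, reverse=True)


plans = [
    CandidatePlan("harvest_today", {"yield": 0.8, "resource_saving": 0.2}),
    CandidatePlan("harvest_in_3_days", {"yield": 0.6, "resource_saving": 0.9}),
]
ranked = rank_plans(plans, {"yield": 0.5, "resource_saving": 0.5})
```

The top-ranked plan would be the one presented to the farmer for validation; changing the weights is one simple way to express different agronomic priorities.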

4. Implementation Strategy

The implementation of DigiHortiRobot is structured into three progressive phases, each building on the previous to incrementally increase the system’s intelligence, autonomy, and sustainability impact. These stages represent a transition from data acquisition to simulation-based decision support, and finally to closed-loop robotic execution. This section details the operational objectives and enabling technologies of each phase.

4.1. Phase I—Monitoring and Data Acquisition

The initial phase establishes the foundational capabilities of the digital twin: real-time monitoring of crop development and environmental conditions within the greenhouse. The robotic platform acquires data through its multimodal perception system, enabling spatial understanding and a high-resolution 3D reconstruction of the cultivation environment. Key tasks in this phase include the following:

- Seeding assessment and scheduling, based on tray analysis and current environmental conditions;

- Fruit detection and tracking, including estimation of key phenotypic attributes such as diameter, color, maturity stage, and spatial coordinates;

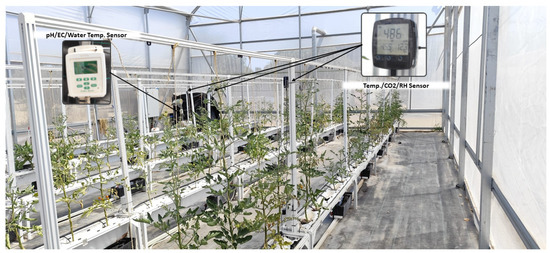

- Environmental monitoring, via embedded sensors measuring CO2, relative humidity, temperature, and pH—see Figure 2;

Figure 2. Hydroponic greenhouse sensors installed for monitoring.

Figure 2. Hydroponic greenhouse sensors installed for monitoring. - Planter identification and localization, achieved using QR markers to establish contextual links between plant data and specific hydroponic units; Figure 3 shows this labeling strategy based on QR markers.

Figure 3. QR-based crop identification system.

Figure 3. QR-based crop identification system.

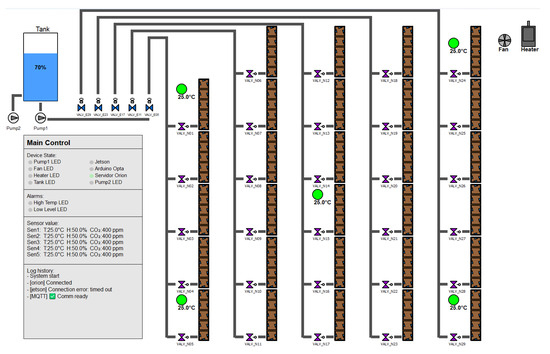

All data streams are semantically structured and synchronized with the Virtual Layer, forming a digital representation of crop lifecycles and environmental dynamics. This representation is accessible through a real-time dashboard, which integrates irrigation layout, environmental sensor data, historical trends, forecasting tools, and system status indicators. Figure 4 illustrates the interface used to monitor the irrigation and environmental conditions within the hydroponic greenhouse in real time.

Figure 4. Real-time monitoring dashboard for the hydroponic greenhouse, showing irrigation layout, environmental sensor readings, and system status.
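One way to picture the semantic structuring of Phase I data is a registry keyed by planter QR code that links fruit observations and environmental readings to a specific hydroponic unit. The class and field names below are hypothetical and serve only to illustrate the idea; they do not reflect the data model actually implemented in DigiHortiRobot.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class FruitObservation:
    diameter_mm: float
    maturity_stage: str                      # e.g. "unripe", "ripe" (assumed labels)
    position_m: Tuple[float, float, float]   # coordinates in the greenhouse frame


@dataclass
class PlanterRecord:
    qr_code: str
    fruits: List[FruitObservation] = field(default_factory=list)
    environment: Dict[str, float] = field(default_factory=dict)


class GreenhouseRegistry:
    """Links perception and sensor data to planters identified by QR code."""

    def __init__(self) -> None:
        self._planters: Dict[str, PlanterRecord] = {}

    def _get(self, qr_code: str) -> PlanterRecord:
        # Create the planter record on first sight of its QR code.
        return self._planters.setdefault(qr_code, PlanterRecord(qr_code))

    def record_fruit(self, qr_code: str, obs: FruitObservation) -> None:
        self._get(qr_code).fruits.append(obs)

    def record_environment(self, qr_code: str, readings: Dict[str, float]) -> None:
        self._get(qr_code).environment.update(readings)

    def harvest_candidates(self, min_diameter_mm: float,
                           stage: str) -> List[Tuple[str, FruitObservation]]:
        """Return (qr_code, observation) pairs meeting the size and maturity targets."""
        return [(rec.qr_code, f)
                for rec in self._planters.values()
                for f in rec.fruits
                if f.diameter_mm >= min_diameter_mm and f.maturity_stage == stage]
```

Keeping the QR code as the primary key means that any later query, such as listing fruits ready for harvest, can be traced back to a physical planter.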

To contextualize the capabilities of DigiHortiRobot, Table 1 provides a comparative overview with respect to conventional smart farming monitoring tools, based on technical information commonly reported in the literature [20,25].

Table 1. Comparison of monitoring functionalities supported by DigiHortiRobot and those available in conventional greenhouse systems [20,25].

While conventional systems rely primarily on environmental sensors and dashboard-level visualization, DigiHortiRobot introduces semantic spatial mapping, real-time synchronization, and closed-loop integration with robotic agents. This foundational layer enables predictive task planning and execution, surpassing the passive role of traditional monitoring systems.

4.2. Phase II—Decision Support and Virtual Simulation

In this phase, the digital twin evolves from a monitoring tool to an active decision-support system. Farmers define desired agronomic outcomes, such as harvest timing or target fruit size, and the system simulates candidate interventions using AI-based models. Core activities include the following:

- Virtual simulation of robotic operations, such as seeding and harvesting, under varying crop and climate conditions (see Figures 5 and 6);

- Outcome prediction, based on models trained with historical crop data and external agronomic knowledge;

- Comparative evaluation of strategies, enabling users to analyze projected impacts on productivity, resource usage, and crop health;

- Farmer validation, using a cloud interface where proposed interventions can be reviewed, adjusted, and approved prior to physical deployment.

Figure 5. IsaacSim simulation: robotic platform.

Figure 6. IsaacSim simulation: hydroponic greenhouse environment.

4.3. Phase III—Autonomous Execution

The third phase closes the loop between planning and physical action. The robotic platform autonomously executes validated interventions while the digital twin monitors outcomes and refines its models through feedback. This enables the continuous improvement of decision-making accuracy and operational reliability.

Major functionalities include the following:

- Autonomous task execution, governed by a human-in-the-loop scheduling system, as detailed in Algorithm 1. The user initiates the process via a graphical interface, selecting the target robot, defining the action type (e.g., harvesting), and specifying timing parameters such as date, time, and repetition frequency. The system then assigns a navigation route within the greenhouse and activates execution controls for pausing, stopping, or resuming operations in real time. Throughout task execution, the robot’s status is continuously monitored via a feedback loop that checks for failure states or completion events. In the case of anomalies, alerts are triggered to inform the operator, ensuring safe and robust operation. Upon successful task completion, a notification is issued. This logic closes the control loop between virtual planning and physical action, and supports the high-level orchestration of horticultural interventions. While predictive planning, strategy ranking, and decision-making are handled in the preceding layers of the DigiHortiRobot architecture, the task assignment algorithm is dedicated to runtime scheduling and actuator coordination. Its logic consists of fixed-branch operations and condition checks, so each monitoring cycle executes in constant time, and the total runtime is dominated by the physical duration of robotic task execution. As a result, the algorithm imposes minimal computational load on the central node and ensures responsive operation under standard greenhouse operating conditions.

- Response monitoring, measuring crop reactions (e.g., growth rate, fruit development) to specific control actions.

- Predictive maintenance, achieved through an analysis of sensor logs and robotic performance data to detect early signs of failure or degradation.
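As a simple illustration of how early signs of degradation might be flagged from robot telemetry, the sketch below marks samples that deviate strongly from a rolling baseline. The rolling z-score rule, the window length, and the threshold are assumptions chosen for this example; the article does not state which analysis method is used for predictive maintenance.

```python
from statistics import mean, stdev
from typing import List, Sequence


def drift_alerts(samples: Sequence[float], window: int = 20,
                 z_threshold: float = 3.0) -> List[int]:
    """Return indices of samples deviating from the preceding window by more than z_threshold sigmas."""
    alerts: List[int] = []
    for i in range(window, len(samples)):
        history = samples[i - window:i]
        mu, sigma = mean(history), stdev(history)
        # Skip perfectly flat baselines to avoid division by zero.
        if sigma > 0 and abs(samples[i] - mu) / sigma > z_threshold:
            alerts.append(i)
    return alerts


# Example: a joint-current trace (hypothetical units) with a sudden jump at the end.
trace = [1.0, 1.1] * 10 + [2.5]
flagged = drift_alerts(trace)
```

In practice such a check would run per joint or per sensor channel, with alerts surfaced to the operator alongside the task-level notifications.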

By integrating perception, simulation, planning, and actuation into a unified feedback system, DigiHortiRobot evolves from a modeling framework into a fully adaptive, autonomous horticultural assistant. The digital twin not only maintains a synchronized representation of the greenhouse and robotic system, but also actively contributes to optimizing the coordinated behavior through data-driven reasoning and continuous interaction with the physical environment.

Algorithm 1. Robot task assignment algorithm for greenhouse operations.
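The scheduling logic described in Section 4.3 can be sketched as follows. This is an illustrative reading of the prose description, not the authors' listing: the `TaskRequest` fields, the `Status` values, the callback-based `poll_status` and `notify` interfaces, and the `max_cycles` guard are all assumptions introduced here.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, List


class Status(Enum):
    RUNNING = "running"
    PAUSED = "paused"
    FAILED = "failed"
    DONE = "done"


@dataclass(frozen=True)
class TaskRequest:
    robot_id: str        # target robot selected by the operator
    action: str          # e.g. "harvesting"
    start: str           # operator-chosen date and time
    repeat_every_h: int  # repetition frequency in hours; 0 means run once


def run_task(request: TaskRequest, route: List[str],
             poll_status: Callable[[], Status],
             notify: Callable[[str], None],
             max_cycles: int = 1000) -> Status:
    """Monitor one assigned task until it completes or fails."""
    notify(f"{request.robot_id}: {request.action} assigned to route {' > '.join(route)}")
    for _ in range(max_cycles):
        status = poll_status()  # one constant-time check per monitoring cycle
        if status is Status.FAILED:
            notify(f"ALERT: {request.robot_id} reported a failure during {request.action}")
            return status
        if status is Status.DONE:
            notify(f"{request.robot_id}: {request.action} completed")
            return status
        # RUNNING or PAUSED: keep monitoring until the state changes.
    notify(f"ALERT: {request.robot_id} exceeded {max_cycles} monitoring cycles")
    return Status.FAILED
```

Each loop iteration performs a fixed number of comparisons, which is consistent with the constant per-cycle cost noted in the text; the wall-clock duration is set by how long the robot takes to finish the physical task.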

5. Discussion

The DigiHortiRobot architecture demonstrates a structured and scalable integration of digital twin technology with real robotic systems in greenhouse horticulture. Its three-phase implementation strategy enables a progressive transition from environmental monitoring to autonomous task execution, ensuring technological readiness and deployment feasibility. A core contribution of the system lies in its closed-loop execution cycle, which links perception, simulation, decision-making, and actuation into a continuous and adaptive pipeline.

In contrast to many existing digital twin approaches in agriculture, typically limited to passive data monitoring, visualization, or off-line simulations, DigiHortiRobot enables bidirectional synchronization with a physical robotic agent, capable of real-time intervention. For example, while Purcell and Neubauer (2023) provided a detailed review of agricultural digital twins, they emphasized that most implementations remain conceptual and rarely progress beyond simulation or dashboard-level integration [19]. Similarly, Gong et al. (2025) highlighted that current solutions are often fragmented, focusing on specific components such as sensing or visualization without closing the perception–action loop [20]. DigiHortiRobot addresses this gap by embedding semantic reasoning and predictive simulation into a platform that supports robotic execution under real-world constraints.

Technically, the inclusion of a dual-arm robotic platform distinguishes our system from prior work. For instance, Rahman et al. (2024) proposed a digital twin framework for greenhouse management that integrates machine learning and IoT communication layers but does not interface with any real robotic hardware [21]. Barbie et al. (2024) explored automated software testing for agricultural digital twins, but their prototype remains virtual and lacks a physical execution layer [26]. By contrast, our platform not only maintains a synchronized digital representation of the greenhouse but also enables the physical execution of complex tasks such as seeding and harvesting. The use of 3D perception, semantic mapping, and goal-driven planning further allows the system to operate under dynamic spatial and phenotypic variability.

The contribution of DigiHortiRobot also resides in its decision-making cycle. Unlike systems focused solely on visual feedback or statistical modeling, our approach uses the simulation-based evaluation of action policies that are generated in response to user-defined agronomic targets. This mechanism improves operational robustness by validating decisions in a risk-free virtual environment before physical execution. Although similar ideas have been proposed in the industrial digital twin literature, their adaptation to agriculture, where biological uncertainty and unstructured environments prevail, remains rare. Our implementation validates this approach using ToF-based perception, real-time mapping, and policy refinement grounded in actual feedback.

Nonetheless, several limitations affect the system’s current applicability and must be acknowledged. First, the generalization of the perception and planning models remains limited due to the morphological diversity across crop types. Although DigiHortiRobot has been validated with tomato and aubergine plants, their distinct structural characteristics, such as fruit arrangement, stem rigidity, and leaf density, mean that deployment to other crops would require additional perception tuning, the retraining of detection models, and the possible adaptation of manipulation strategies. This poses a challenge for short-term scalability in heterogeneous greenhouse settings. Second, the use of edge–cloud communication introduces latency, especially in phases involving large-scale image processing or task rescheduling. This latency, although tolerable in the current task pipeline, may hinder responsiveness in more time-critical scenarios such as dynamic obstacle avoidance, real-time multi-robot coordination, or high-speed harvesting in dense foliage. Third, the task scheduling mechanism is based on single-agent execution and does not yet account for distributed coordination or competition for shared resources, which could become critical in larger or multi-robot setups.

These limitations highlight avenues for future improvement. A major priority is the integration of reinforcement learning techniques to enable dynamic policy adaptation across environments. Likewise, a hierarchical scheduling engine incorporating resource constraints and multi-robot coordination would expand the applicability to commercial-scale deployments. From an architectural standpoint, efforts are underway to reduce latency through edge computation and to optimize model synchronization rates based on task criticality.

In summary, DigiHortiRobot contributes a significant advance in the design of agricultural digital twins by demonstrating a real-world implementation that closes the loop between digital planning and robotic execution. Its architecture supports semantic interoperability, predictive reasoning, and autonomous operation in structured greenhouse environments. Comparative analysis confirms that the system addresses core limitations identified in recent surveys and prototyping efforts. While challenges remain, the results presented lay a strong foundation for extending the approach to other crop types, operational contexts, and decision frameworks, thus advancing the vision of truly intelligent and sustainable horticultural automation.

6. Conclusions

This paper presented DigiHortiRobot, a digital twin-based architecture that advances the state of the art by enabling closed-loop coordination between virtual models and robotic actions in hydroponic greenhouse horticulture. Unlike many prior efforts limited to simulation or monitoring, our system closes the loop between perception and action through predictive planning and real-world task execution. This methodological integration, bridging AI, robotics, and IoT, is central to advancing automation in structured agricultural domains.

Beyond its initial deployment on aubergine and tomato crops in hydroponic greenhouses, DigiHortiRobot establishes foundational principles applicable to a broader range of agri-tech scenarios. These include automated nursery management, vertical farming control, and adaptive resource allocation in smart agriculture infrastructures. The system’s modularity and compliance with semantic interoperability standards facilitate its adaptation across heterogeneous production environments.

Future research will focus on multi-robot coordination, dynamic policy refinement through reinforcement learning, and edge–cloud architectures with adaptive synchronization strategies. In particular, we aim to pursue hypothesis-driven evaluations of how semantic feedback loops influence task efficiency, fault tolerance, and predictive reliability under biological uncertainty. These studies will also explore broader questions of economic viability and integration into farm-level decision support systems. Furthermore, we recognize the importance of promoting reproducibility across digital agriculture initiatives. As such, we intend to contribute to the development of standardized benchmarks, simulation testbeds, and structured evaluation protocols to support scientific transparency and comparability.

Author Contributions

Conceptualization, R.F.; methodology, R.F. and E.N.; validation, D.R.-N. and L.E.; formal analysis, E.N. and L.E.; investigation, D.R.-N., A.A.R.-G. and L.E.; data curation, D.R.-N. and A.A.R.-G.; writing—original draft preparation, R.F.; writing—review and editing, R.F. and E.N.; visualization, D.R.-N. and A.A.R.-G.; supervision, R.F.; principal investigator, R.F. All authors have read and agreed to the published version of the manuscript.

Funding

The research leading to these results was supported in part by the following: (i) Grant TED2021-132710B-I00, funded by MICIU/AEI/10.13039/501100011033 and by the “European Union NextGenerationEU/PRTR”; (ii) Grant PID2023-151493OB-I00, funded by MICIU/AEI/10.13039/501100011033 and by FEDER, EU; (iii) the R&D Activities Programme with reference TEC-2024/TEC-62 and acronym iRoboCity2030-CM, granted by the Comunidad de Madrid through the Dirección General de Investigación e Innovación Tecnológica under Orden 5696/2024; and (iv) CSIC under Grant 202350E072, Proyecto Intramural IAMC-ROBI-II (Inteligencia Artificial y Mecatrónica Cognitiva para la Manipulación Robótica Bimanual – 2° Fase).

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- United Nations Department of Economic and Social Affairs, Population Division. World Population Prospects 2022. 2022. Available online: https://population.un.org/wpp/ (accessed on 27 June 2025).

- Walters, K.J.; Behe, B.K.; Currey, C.J.; Lopez, R.G. Historical, Current, and Future Perspectives for Controlled Environment Hydroponic Food Crop Production in the United States. HortSci. Horts 2020, 55, 758–767. [Google Scholar] [CrossRef]

- Winker, M.; Fischer, M.; Bliedung, A.; Bürgow, G.; Germer, J.; Mohr, M.; Nink, A.; Schmitt, B.; Wieland, A.; Dockhorn, T. Water reuse in hydroponic systems: A realistic future scenario for Germany? Facts and evidence gained during a transdisciplinary research project. J. Water Reuse Desalin. 2020, 10, 363–379. Available online: https://iwaponline.com/jwrd/article-pdf/10/4/363/831355/jwrd0100363.pdf (accessed on 27 June 2025). [CrossRef]

- Fernández, R.; Montes, H.; Surdilovic, J.; Surdilovic, D.; Gonzalez-De-Santos, P.; Armada, M. Automatic Detection of Field-Grown Cucumbers for Robotic Harvesting. IEEE Access 2018, 6, 35512–35527. [Google Scholar] [CrossRef]

- Abekoon, T.; Sajindra, H.; Rathnayake, N.; Ekanayake, I.U.; Jayakody, A.; Rathnayake, U. A novel application with explainable machine learning (SHAP and LIME) to predict soil N, P, and K nutrient content in cabbage cultivation. Smart Agric. Technol. 2025, 11, 100879. [Google Scholar] [CrossRef]

- SepúLveda, D.; Fernández, R.; Navas, E.; Armada, M.; González-De-Santos, P. Robotic Aubergine Harvesting Using Dual-Arm Manipulation. IEEE Access 2020, 8, 121889–121904. [Google Scholar] [CrossRef]

- Grieves, M. Digital Twin: Manufacturing Excellence through Virtual Factory Replication, 2014. White Paper. Executive Summary. Available online: https://www.researchgate.net/publication/275211047_Digital_Twin_Manufacturing_Excellence_through_Virtual_Factory_Replication (accessed on 27 June 2025).

- Grieves, M.; Vickers, J. Digital Twin: Mitigating Unpredictable, Undesirable Emergent Behavior in Complex Systems. In Transdisciplinary Perspectives on Complex Systems: New Findings and Approaches; Kahlen, F.J., Flumerfelt, S., Alves, A., Eds.; Springer International Publishing: Cham, Switzerland, 2017; pp. 85–113. [Google Scholar] [CrossRef]

- Shafto, M.; Conroy, M.; Doyle, R.; Glaessgen, E.; Kemp, C.; LeMoigne, J.; Wang, L. Modeling, Simulation, Information Technology & Processing Roadmap. Technical Report, NASA, 2010. Available online: https://www.researchgate.net/publication/280310295_Modeling_Simulation_Information_Technology_and_Processing_Roadmap (accessed on 27 June 2025).

- Glaessgen, E.; Stargel, D. The Digital Twin Paradigm for Future NASA and U.S. Air Force Vehicles. In Proceedings of the 53rd AIAA/ASME/ASCE/AHS/ASC Structures, Structural Dynamics and Materials Conference, Honolulu, HI, USA, 23–26 April 2012; Available online: https://arc.aiaa.org/doi/pdf/10.2514/6.2012-1818 (accessed on 27 June 2025). [CrossRef]

- Guerra-Zubiaga, D.; Kuts, V.; Mahmood, K.; Bondar, A.; Nasajpour-Esfahani, N.; Otto, T. An approach to develop a digital twin for industry 4.0 systems: Manufacturing automation case studies. Int. J. Comput. Integr. Manuf. 2021, 34, 933–949. [Google Scholar] [CrossRef]

- Qi, Q.; Tao, F.; Zuo, Y.; Zhao, D. Digital Twin Service towards Smart Manufacturing. Procedia CIRP 2018, 72, 237–242. [Google Scholar] [CrossRef]

- Meske, C.; Osmundsen, K.S.; Junglas, I. Designing and Implementing Digital Twins in the Energy Grid Sector. MIS Q. Exec. 2021, 20, 183–198. Available online: https://aisel.aisnet.org/misqe/vol20/iss3/3 (accessed on 27 June 2025).

- Verdouw, C.; Tekinerdogan, B.; Beulens, A.; Wolfert, S. Digital twins in smart farming. Agric. Syst. 2021, 189, 103046. [Google Scholar] [CrossRef]

- Pylianidis, C.; Osinga, S.; Athanasiadis, I.N. Introducing digital twins to agriculture. Comput. Electron. Agric. 2021, 184, 105942. [Google Scholar] [CrossRef]

- Karabulut, E.; Pileggi, S.F.; Groth, P.; Degeler, V. Ontologies in digital twins: A systematic literature review. Future Gener. Comput. Syst. 2024, 153, 442–456. [Google Scholar] [CrossRef]

- Muhammed, D.; Ahvar, E.; Ahvar, S.; Trocan, M.; Montpetit, M.J.; Ehsani, R. Artificial Intelligence of Things (AIoT) for smart agriculture: A review of architectures, technologies and solutions. J. Netw. Comput. Appl. 2024, 228, 103905. [Google Scholar] [CrossRef]

- Zhang, R.; Zhu, H.; Chang, Q.; Mao, Q. A Comprehensive Review of Digital Twins Technology in Agriculture. Agriculture 2025, 15, 903. [Google Scholar] [CrossRef]

- Purcell, W.; Neubauer, T. Digital Twins in Agriculture: A State-of-the-art review. Smart Agric. Technol. 2023, 3, 100094. [Google Scholar] [CrossRef]

- Gong, J.; Chen, J.; Chen, Z.; Chi, H.; Liu, J. Overview of the Application Progress of Digital Twins in Agriculture. In Proceedings of the 2025 International Conference on Digital Management and Information Technology. Association for Computing Machinery, New York, NY, USA, 14–16 March 2025; DMIT ’25. pp. 295–303. [Google Scholar] [CrossRef]

- Rahman, H.; Shah, U.M.; Riaz, S.M.; Kifayat, K.; Moqurrab, S.A.; Yoo, J. Digital twin framework for smart greenhouse management using next-gen mobile networks and machine learning. Future Gener. Comput. Syst. 2024, 156, 285–300. [Google Scholar] [CrossRef]

- Bua, C.; Borgianni, L.; Adami, D.; Giordano, S. Reinforcement Learning-Driven Digital Twin for Zero-Delay Communication in Smart Greenhouse Robotics. Agriculture 2025, 15, 1290. [Google Scholar] [CrossRef]

- Redelinghuys, A.; Basson, A.; Kruger, K. A Six-Layer Digital Twin Architecture for a Manufacturing Cell. In Service Orientation in Holonic and Multi-Agent Manufacturing; Borangiu, T., Trentesaux, D., Thomas, A., Cavalieri, S., Eds.; Springer: Cham, Switzerland, 2019; pp. 412–423. [Google Scholar]

- Rodríguez-Nieto, D.; Navas, E.; Fernández, R. Automated Seeding in Hydroponic Greenhouse with a Dual-Arm Robotic System. IEEE Access 2025, 13, 30745–30761. [Google Scholar] [CrossRef]

- Shamshiri, R.; Weltzien, C.; Hameed, I.; Yule, I.; Grift, T.; Balasundram, S.; Pitonakova, L.; Ahmad, D.; Chowdhary, G. Research and development in agricultural robotics: A perspective of digital farming. Int. J. Agric. Biol. Eng. 2018, 11, 1–14. [Google Scholar] [CrossRef]

- Barbie, A.; Hasselbring, W.; Hansen, M. Digital Twin Prototypes for Supporting Automated Integration Testing of Smart Farming Applications. Symmetry 2024, 16, 221. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).