3.1.1. A Systematic Literature Review (SLR) of the Analyzed Concepts

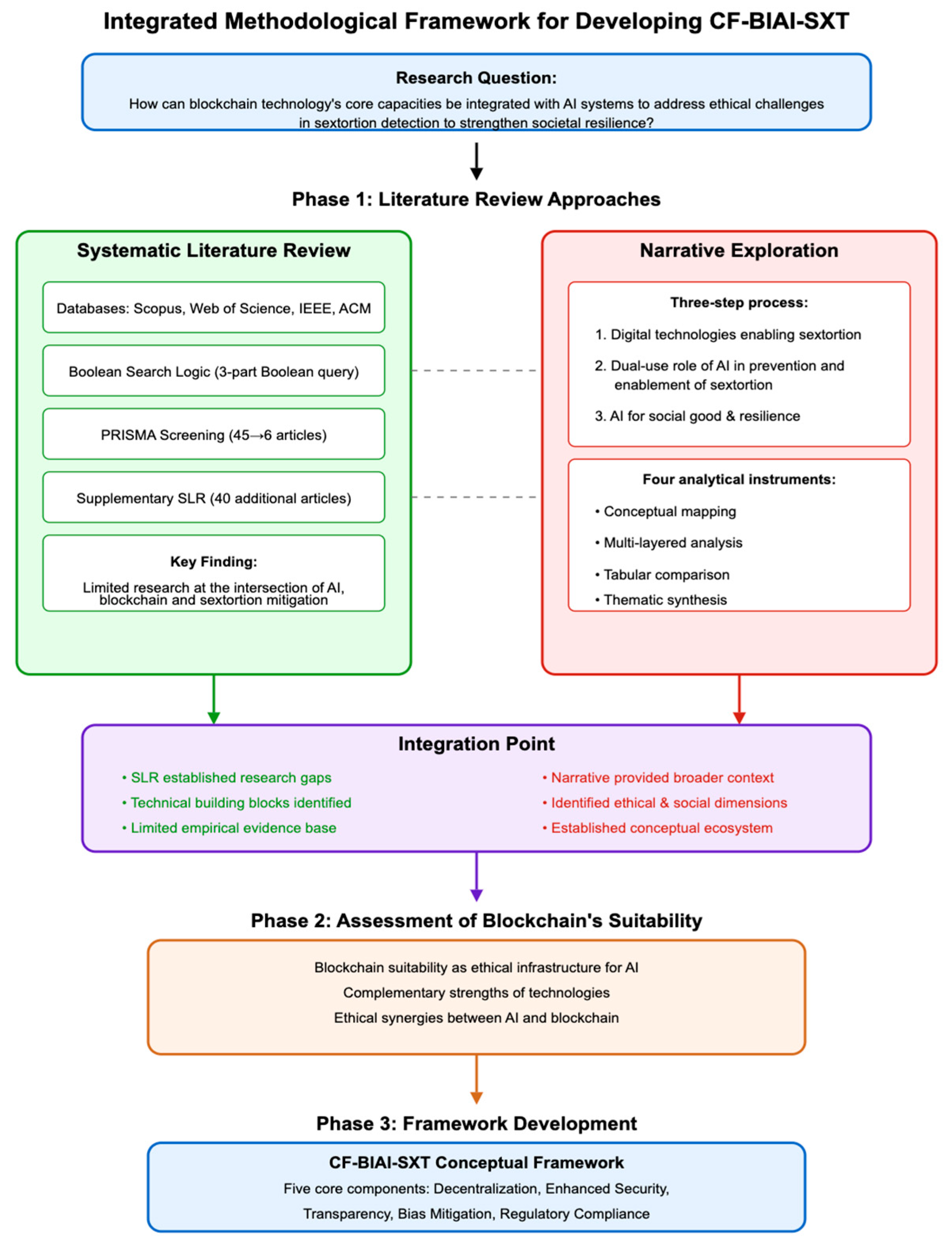

To carry out the SLR part of the exploratory investigation, we searched Scopus, Web of Science, IEEE Xplore, and the ACM Digital Library using keywords spanning AI, blockchain, and sextortion (2017–2025) and identified 45 candidate publications. We then applied the PRISMA 2020 guidelines to our screening process, as illustrated in Figure A2 in Appendix A, which shows the flow of information through identification, screening, and inclusion. As described in the methodology, to enhance the transparency and reproducibility of our literature review and to ensure unbiased selection, we used a rigorous, multi-reviewer screening approach. Each author of this paper independently screened the records using Rayyan, a web tool for systematic review management. We included only studies that met all criteria (addressing AI and blockchain in the context of digital sexual exploitation with an ethics focus) and excluded all others (see Appendix A for detailed criteria).

To keep the SLR focused, as intended, on the intersection of AI, blockchain, ethics, and sextortion, we combined the search terms with Boolean logic; the search terms are presented in Appendix A. Thus, our systematic review included only sources that: (1) integrate AI and blockchain (technologies relevant to our research question); (2) focus on digital sexual exploitation (e.g., sextortion contexts); and (3) address ethical considerations (such as fairness, transparency, or privacy in AI use). Studies that failed to meet any of these criteria were excluded. For instance, we omitted works with no AI or no blockchain component (16 articles), those unrelated to sextortion or digital abuse (12 articles), and those lacking any AI ethics dimension (11 articles).

Using the defined search for the period 2017–2025, we obtained the following results:

- Scopus returned 6 papers, all published from 2019 onwards, 1 of which was a false positive, leaving 5 articles;
- Web of Science returned 0 results (even without a date range);
- IEEE Xplore returned 0 results (even without a date range);
- ACM Digital Library returned 55 results; even without restricting the search by timeframe, all of them fell within 2017–2025. We therefore draw two brief conclusions: these are all the articles on the defined topic in the database, and the chosen timeframe is valid.

Although we had initially excluded arXiv from the search, we nonetheless ran the query on this database as well and obtained 0 results. Similarly, Semantic Scholar returned 0 results for the same Boolean logic.

The limited number of relevant results may reflect a disciplinary and dissemination skew: these databases tend to emphasize either highly technical implementations or formal computer science publications, with less coverage of interdisciplinary studies addressing ethics, governance, or social impact. It also points to a lack of holistic treatment of macro issues with societal impact, as publication incentives push researchers toward narrow issues that are easier to publish. Moreover, the tight Boolean logic, which combines concepts with AND operators, may have narrowed retrieval too far on platforms with broader indexing. We mitigate this limitation with the supplementary SLR described later in this section.

We screened the 60 records for duplicates and found that, when a paper matches the query, ACM returns both the proceedings volume and the individual article; in such cases, we retained only the article. This left 40 references from the ACM Digital Library. We then applied the PRISMA methodology to the 45 articles found in total (5 from Scopus and 40 from ACM, all in English and peer-reviewed; see the full list in Appendix B) and screened them against the inclusion/exclusion criteria detailed in Appendix A. The screening identified only 6 of the 45 articles that fit the Boolean logic [14,15,16,17,18,19], an outcome that reflects a significant research gap at the intersection of AI, blockchain, and sextortion rather than an inadequacy of the search. We acknowledge this limitation explicitly; it justifies our dual review approach: a targeted SLR to identify what little work exists, combined with a broader narrative exploration to capture adjacent insights, as presented in Section 3.1.2. In emerging or interdisciplinary fields where empirical studies are few, a narrative review informing a position paper is recognized as a suitable means to synthesize and contextualize knowledge, as mentioned previously. Thus, although the quantitative scope of the SLR is limited, our study remains comprehensive through the integrative strategy described in the methodology section.

The main reasons for excluding the remaining 39 articles were:

- Lack of AI or blockchain relevance (16 articles);
- No focus on digital abuse or sexual exploitation (12 articles);
- Absence of ethical considerations such as fairness, transparency, or data privacy (11 articles).
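The screening counts reported above can be cross-checked arithmetically. The short Python sketch below recomputes each stage of the flow from the numbers given in the text; the only derived figure is the number of ACM duplicates (15), obtained as 55 minus 40, which the text does not state explicitly. The function name `prisma_flow` is our own, for illustration only.

```python
def prisma_flow() -> dict:
    """Recompute each stage of the SLR screening flow from the reported counts."""
    scopus_articles = 6 - 1          # 6 Scopus hits, 1 false positive
    acm_hits, acm_articles = 55, 40  # ACM lists proceedings + article per match
    # Web of Science and IEEE Xplore contributed 0 records.
    before_dedup = scopus_articles + acm_hits  # records checked for duplicates
    duplicates = acm_hits - acm_articles       # derived; not stated in the text
    screened = before_dedup - duplicates       # records screened against criteria
    exclusions = {
        "no AI or blockchain relevance": 16,
        "no digital abuse / sexual exploitation focus": 12,
        "no ethical considerations": 11,
    }
    excluded = sum(exclusions.values())
    return {
        "before_dedup": before_dedup,
        "duplicates": duplicates,
        "screened": screened,
        "excluded": excluded,
        "included": screened - excluded,
    }


if __name__ == "__main__":
    for stage, count in prisma_flow().items():
        print(f"{stage:>12}: {count}")
```

Running it reproduces the figures in the text: 60 records before de-duplication, 45 screened, 39 excluded, and 6 included.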

We then coded the articles in Rayyan along the following dimensions:

- AI function (mitigation, surveillance, moderation, etc.);
- Ethical focus (e.g., bias, explainability, misuse of data);
- Governance model (centralized vs. decentralized);
- Framework alignment (AI4SG, resilience, digital trust).
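To make these coding dimensions concrete, the following minimal Python sketch represents the codebook as a typed record that rejects codes outside a closed vocabulary. It is an illustration under assumptions, not the Rayyan configuration used in the study: the class and field names are hypothetical, the vocabularies for ethical focus and frameworks are limited to the examples listed above, and `OTHER` stands in for the open-ended "etc.".

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class AIFunction(Enum):
    """Role of AI in a study (first coding dimension)."""
    MITIGATION = "mitigation"
    SURVEILLANCE = "surveillance"
    MODERATION = "moderation"
    DETECTION = "detection"
    OTHER = "other"  # placeholder for the open-ended "etc."


class Governance(Enum):
    """Governance model (third coding dimension)."""
    CENTRALIZED = "centralized"
    DECENTRALIZED = "decentralized"


# Closed vocabularies for the multi-valued dimensions (assumed, not exhaustive).
ETHICAL_FOCI = frozenset({"bias", "explainability", "misuse of data",
                          "fairness", "privacy", "transparency"})
FRAMEWORKS = frozenset({"AI4SG", "resilience", "digital trust"})


@dataclass(frozen=True)
class CodedStudy:
    study_id: str
    ai_function: AIFunction
    ethical_focus: frozenset
    governance: Governance
    frameworks: frozenset

    def __post_init__(self) -> None:
        # Reject any code that is not part of the codebook.
        unknown = (self.ethical_focus - ETHICAL_FOCI) | (self.frameworks - FRAMEWORKS)
        if unknown:
            raise ValueError(f"codes not in codebook: {sorted(unknown)}")


def tally_governance(studies: list) -> Counter:
    """Count studies per governance model, as a summary table would."""
    return Counter(s.governance.value for s in studies)


if __name__ == "__main__":
    # Hypothetical records, not the actual coding of any included study.
    studies = [
        CodedStudy("S1", AIFunction.DETECTION, frozenset({"privacy"}),
                   Governance.CENTRALIZED, frozenset({"digital trust"})),
        CodedStudy("S2", AIFunction.MITIGATION, frozenset({"bias", "fairness"}),
                   Governance.DECENTRALIZED, frozenset({"AI4SG"})),
    ]
    print(tally_governance(studies))
```

Using enums and closed sets means that a typo or an ad hoc code fails loudly at entry time instead of silently creating a new category in the summary.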

Because our review is exploratory and conceptual in nature, and our aim was to synthesize themes and inform a conceptual framework (rather than to evaluate the effectiveness of an intervention), we did not assign formal “quality scores” to the 6 included articles. As per the initial requirement, all selected works are peer-reviewed and drawn from reputable databases, and the authors' inclusion/exclusion process deemed them directly relevant to our research question. This inherently sets a baseline of credibility, in line with guidance on integrative reviews, which relies more on thematic insight than on formal quality scoring. Moreover, given that this study is a position paper, a quantitative risk-of-bias appraisal would add limited value.

However, for transparency, we have documented the qualitative coding protocol used in our review. Each of the 6 included studies was systematically coded along predefined dimensions: the role of AI (e.g., mitigation, detection), the ethical focus (fairness, privacy, etc.), the governance model (centralized vs. decentralized), and alignment with broader frameworks (AI for Social Good, societal resilience). The authors independently applied these codes to each article, then compared and reconciled any differences to ensure reliability, both for the SLR and the narrative review. In

Appendix A, we included the tables (

Table A1 and

Table A2) for the coding protocols for each stage. We also included a summary table (

Table A3) to how each study in the SLR seed 6 maps to these categories, providing a clear audit trail from literature to topics. This coding scheme, in line with established thematic analysis techniques for building conceptual models, connects to the cluster map below (

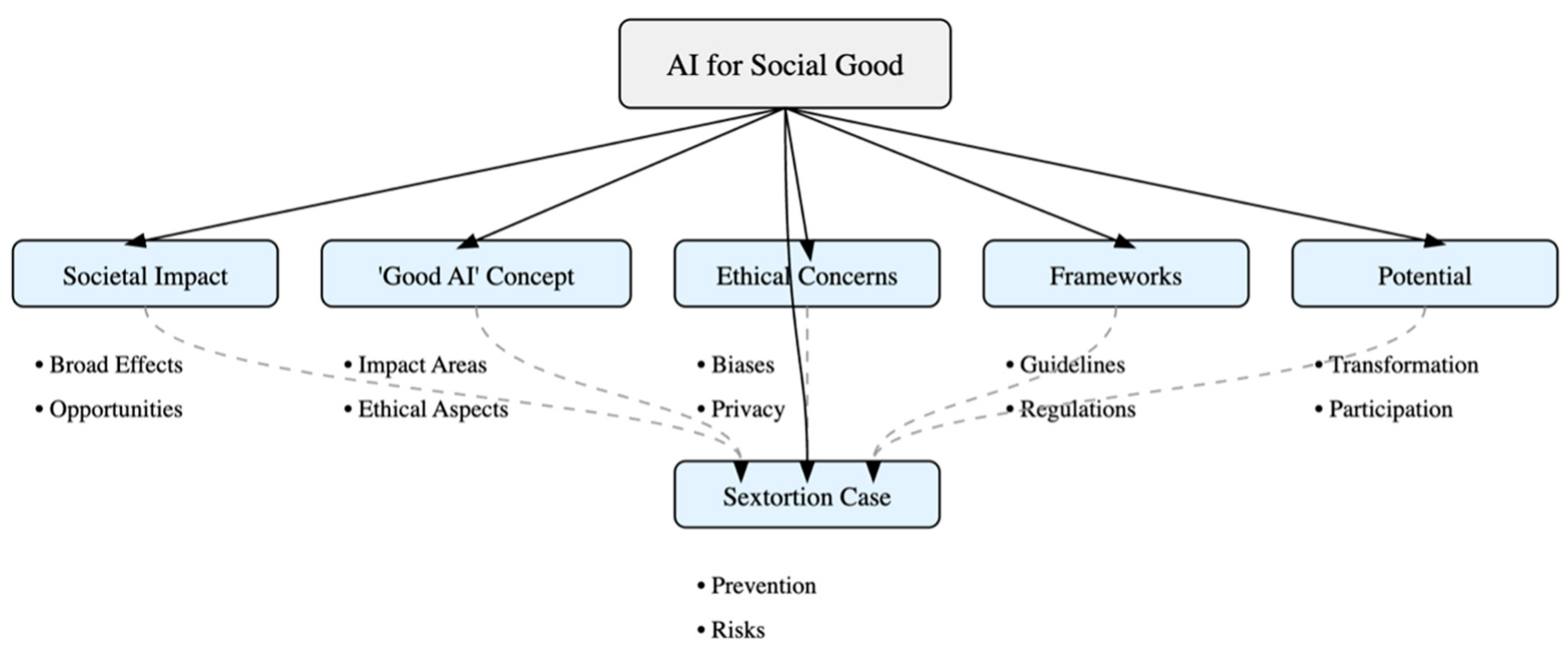

Figure 3) and ensures that the analytic process is traceable.
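The independent coding and reconciliation step can be sketched as follows. This is a hypothetical Python illustration: the text does not report which reliability statistic, if any, was computed, so simple percent agreement is shown only as one possible indicator, and the coder data below are invented placeholders rather than codes assigned to the included studies.

```python
def disagreements(coder_a: dict, coder_b: dict) -> list:
    """List (study, dimension, code_a, code_b) wherever two coders differ."""
    diffs = []
    for study in sorted(coder_a.keys() & coder_b.keys()):
        a_codes, b_codes = coder_a[study], coder_b[study]
        for dim in sorted(a_codes.keys() & b_codes.keys()):
            if a_codes[dim] != b_codes[dim]:
                diffs.append((study, dim, a_codes[dim], b_codes[dim]))
    return diffs


def percent_agreement(coder_a: dict, coder_b: dict) -> float:
    """Share of (study, dimension) pairs coded identically by both coders."""
    pairs = [
        (coder_a[s][d], coder_b[s][d])
        for s in coder_a.keys() & coder_b.keys()
        for d in coder_a[s].keys() & coder_b[s].keys()
    ]
    if not pairs:
        raise ValueError("no overlapping codes to compare")
    return sum(a == b for a, b in pairs) / len(pairs)


def reconcile(coder_a: dict, resolutions: dict) -> dict:
    """Apply consensus decisions {(study, dimension): code} on top of coder A."""
    final = {study: dict(codes) for study, codes in coder_a.items()}
    for (study, dim), code in resolutions.items():
        final[study][dim] = code
    return final


if __name__ == "__main__":
    # Invented codes for two placeholder studies.
    coder_a = {"S1": {"ai_function": "detection", "governance": "centralized"},
               "S2": {"ai_function": "mitigation", "governance": "decentralized"}}
    coder_b = {"S1": {"ai_function": "detection", "governance": "decentralized"},
               "S2": {"ai_function": "mitigation", "governance": "decentralized"}}
    print(disagreements(coder_a, coder_b))
    print(f"agreement: {percent_agreement(coder_a, coder_b):.0%}")
    print(reconcile(coder_a, {("S1", "governance"): "decentralized"})["S1"])
```

Listing disagreements explicitly, rather than only reporting a score, gives the reviewers a concrete agenda for the discussion in which differences are reconciled.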

A cluster map of the 6 articles [14,15,16,17,18,19] included in the systematic literature review is presented in Figure 3. However, the small number of articles, together with the fact that the intersection criteria exclude a significant part of the overall picture of sextortion as a threat to societal resilience in the context of AI and blockchain, calls for a more encompassing presentation of the concepts at hand. This supports our methodological choice to include a narrative exploration, which allows the issue to be covered more comprehensively.

Before presenting the narrative exploration, we chose to test whether the literature gap holds across a broader research landscape. For this, we extended the Boolean logic by using OR operators instead of AND operations to include studies that addressed AI-blockchain integration with explicit emphasis on ethics, privacy, transparency, and sextortion-related contexts. This expansion led to additional papers that offered insights into adjacent topics, hence were reviewed thematically and also included in the narrative exploration in

Section 3.1.2. We use Semantic Scholar and Rayyan as the main screening tools. It is to be noted here that this extra systematic review is meant to offer quantitative and thematic insights complementary to the SLR, not as a substitute. We screened 40 articles, and the findings of this supplementary SLR are structured in

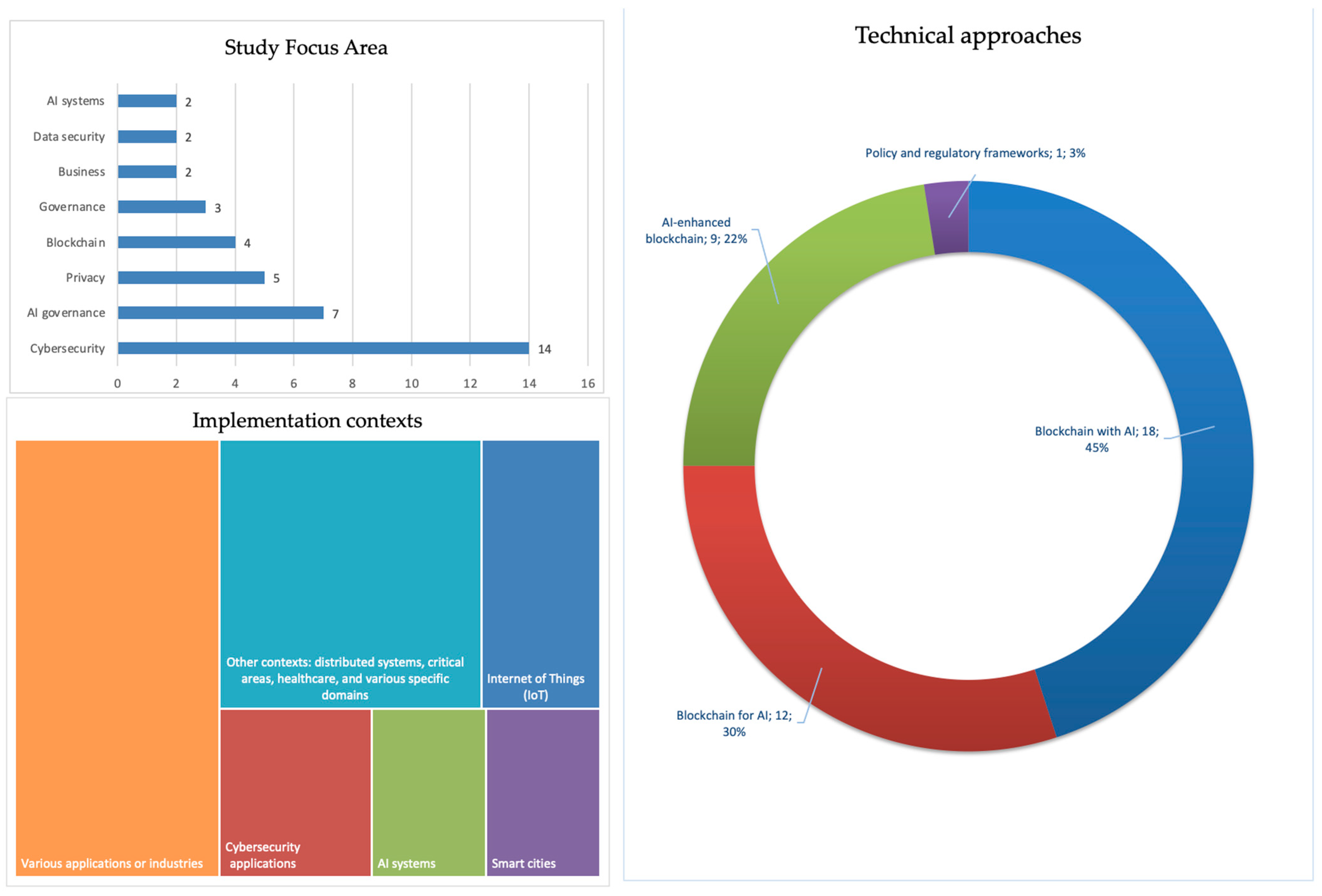

Figure 4.
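The effect of switching from AND to OR operators can be illustrated with a toy example. The Python sketch below uses hypothetical concept groups and invented record titles (the actual search strings are given in Appendix A) and, as a simplification, swaps AND for OR across all groups, whereas the supplementary search only broadened part of the logic.

```python
import re

# Hypothetical concept groups; the study's actual search strings are in Appendix A.
CONCEPT_GROUPS = {
    "ai": ["artificial intelligence", "machine learning", "federated learning", "AI"],
    "blockchain": ["blockchain", "smart contract", "distributed ledger"],
    "sextortion": ["sextortion", "image-based abuse", "sexual exploitation"],
}


def mentions(text: str, terms: list) -> bool:
    """True if any term occurs as a whole word or phrase (case-insensitive)."""
    return any(re.search(rf"\b{re.escape(t)}\b", text, re.IGNORECASE) for t in terms)


def search(records: list, operator: str) -> list:
    """Return records matching all concept groups (AND) or at least one (OR)."""
    if operator not in ("AND", "OR"):
        raise ValueError("operator must be 'AND' or 'OR'")
    combine = all if operator == "AND" else any
    return [r for r in records
            if combine(mentions(r, terms) for terms in CONCEPT_GROUPS.values())]


if __name__ == "__main__":
    titles = [  # invented titles, for illustration only
        "Blockchain and AI for detecting sextortion",
        "Smart contract design for federated learning",
        "Support services for victims of sextortion",
        "Crop yield forecasting with weather data",
    ]
    print("AND:", search(titles, "AND"))
    print("OR: ", search(titles, "OR"))
```

With these records, the AND query returns only the first title, while the OR query also returns the second and third, mirroring how the supplementary search widened retrieval at the cost of topical precision. Word boundaries (`\b`) keep the short term "AI" from matching inside words such as "blockchain".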

This supplementary SLR confirms the rarity of full-stack frameworks but points to building blocks (e.g., federated learning, explainable AI, smart contracts) that inform the conceptual model developed further on.

From the thematic analysis of this supplementary SLR, we identify four areas (shown in Table 1), of which blockchain-AI integration is the most developed.

While several studies propose concrete architectures combining federated learning, secure multiparty computation, and smart contracts, ethical frameworks appear less consistently and concentrate on privacy, consent, and bias, with fewer studies addressing explainability or transparency. In governance terms, centralized architectures dominate, although several studies point towards decentralization through smart contracts or identity management. Most articles address AI functionality in the contexts of moderation, mitigation, or detection, and only a minority engage directly with sextortion as a phenomenon. Sextortion mitigation per se is rare, whereas interest in societal resilience is growing, especially regarding trust and stakeholder coordination, although these insights are largely extrapolated from other domains. Finally, alignment with frameworks such as AI4SG, digital trust, or societal resilience is often implicit, confirming the gap that CF-BIAI-SXT seeks to address. Building on the list of articles analyzed at this stage (both excluded and included) and adding articles on regulatory aspects, AI for Good, AI governance, digital trust frameworks, and the role of blockchain in ethical infrastructures, we construct the narrative exploration, which explains these concepts in detail and links them to the conceptual framework proposed later.

3.1.2. A Narrative Conceptual Exploration

The SLR in the previous section maps the limited empirical footprint at the intersection of AI, blockchain, and sextortion. Building on those findings, this section adopts a broader epistemic lens, engaging with adjacent topics to build towards the conceptual framework. This shift connects technical architectures with ethical governance and societal resilience perspectives, which are critical to the design logic of CF-BIAI-SXT.

To ensure the traceability of this narrative review, we used a modified coding sheet, similar to the one used for the SLR, to map every narrative source considered and referenced. This coding sheet is presented in Table A2 in Appendix A so that external researchers can follow, replicate, or extend our thematic decisions, although it was not meant to eliminate subjectivity. The snowball method, with additional reference points based on the concepts, yielded a total of 157 sources, of which 118 are peer-reviewed and 39 are non-peer-reviewed (news reports, policy briefs, arXiv/working papers, or other gray literature, such as the FBI web brief and the OECD framework). These 157 sources include the 6 seed sources from the SLR and are listed in full in the reference list. The corpus breaks down as follows: 27% of sources refer to digital-abuse technology, 19% to AI dual use and risk, 22% to ethical AI frameworks, 20% to blockchain capacity, and 12% to societal resilience. How these sources directly inform the framework is detailed in this section, mostly in tabular format; sources linked indirectly are mentioned in other parts of the article. The corpus also includes survivor-voice studies, such as [1,35], which provide first-person evidence on coercion dynamics and thus inform the data-wallet consent logic of CF-BIAI-SXT.
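The corpus shares above are reported only as percentages. As an illustration under stated assumptions, the following Python sketch back-calculates approximate source counts for the 157-source corpus using largest-remainder rounding, so that the counts sum exactly to 157; the resulting numbers are estimates, not figures reported by the authors, and the function name `apportion` is hypothetical.

```python
def apportion(total: int, percentages: dict) -> dict:
    """Largest-remainder rounding: integer counts that sum exactly to total."""
    if sum(percentages.values()) != 100:
        raise ValueError("percentages must sum to 100")
    # Integer arithmetic (quotient, remainder) avoids floating-point surprises.
    quotas = {k: divmod(p * total, 100) for k, p in percentages.items()}
    counts = {k: q for k, (q, _) in quotas.items()}
    shortfall = total - sum(counts.values())
    # Hand the leftover units to the categories with the largest remainders.
    by_remainder = sorted(quotas, key=lambda k: quotas[k][1], reverse=True)
    for k in by_remainder[:shortfall]:
        counts[k] += 1
    return counts


CORPUS_SHARES = {  # percentages as reported in the text
    "digital-abuse technology": 27,
    "AI dual use and risk": 19,
    "ethical AI frameworks": 22,
    "blockchain capacity": 20,
    "societal resilience": 12,
}

if __name__ == "__main__":
    for category, count in apportion(157, CORPUS_SHARES).items():
        print(f"{category}: ~{count}")
```

Under this rounding rule the shares correspond to roughly 42, 30, 35, 31, and 19 sources, respectively; other rounding choices could shift individual categories by one.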

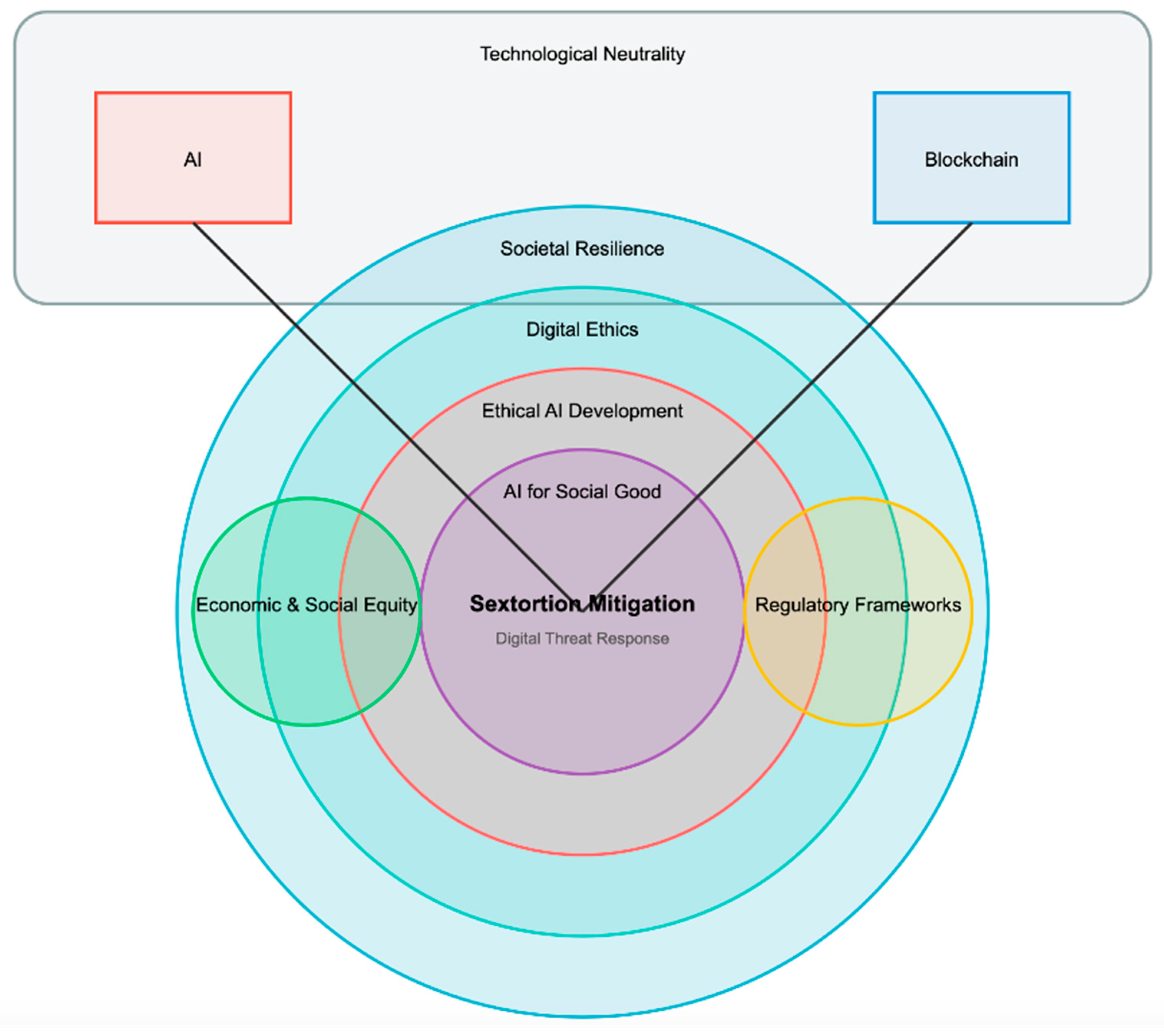

We conceptualize sextortion mitigation as embedded in a multilayered ethical ecosystem that includes AI for social good, blockchain-enabled governance, societal resilience, and supporting regulatory and equity frameworks, and we develop this conceptualization in a three-step process.

Firstly, we identify the digital technologies that provide the environment for the sexual exploitation that leads to sextortion. Sextortion, a form of sexual exploitation in which victims are extorted, for example, with their sexual images [36], erodes societal resilience. It exploits vulnerabilities in social and economic structures and corrodes the integrity of society by breaking down structures meant to protect privacy and security. Its harms affect individuals' dignity, well-being, and safety and extend to privacy, security, and wider societal consequences. Globally, it affects all social strata, although some groups may be more vulnerable than others.

Statistics on the topic are often missing; however, recent statistics from jurisdictions that collect such data, such as those from the US Federal Bureau of Investigation (FBI) [37], indicate “more than 13,000 reports of online financial sextortion of minors [with] at least 12,600 victims and led to at least 20 suicides” between October 2021 and March 2023. The victims are minors, typically males aged 14 to 17; however, the issue affects all genders. In a 2024 study [37], the authors report that, of close to 17,000 respondents, “14.5% reported victimization and 4.8% reported perpetration”, with men, LGBTQ+ respondents, and younger respondents more likely to report victimization than other groups. The same study states that “experiencing threats to distribute intimate content is a relatively common event, affecting 1 in 7 adults”. A report by the Korean Digital Sex Crime Victim Support Center [2] mentions 10,305 confirmed cases of digital sex crimes, with “teenagers and people in their 20s [accounting] for 78.7 percent of reported victims” and “1384 cases involving image manipulation using AI and advanced technology. This marks a sharp increase from the 423 cases reported in 2023—more than triple the previous year’s figure”. The landscape is dire and worsening. Moreover, this digital threat thrives in environments that are fertile for manipulation, grooming, and blackmail, such as social media, messaging, and virtual reality platforms [38,39], which exacerbate this form of abuse and its significant emotional toll on victims [40].
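The figures quoted above can be sanity-checked with simple arithmetic. The Python sketch below, with hypothetical helper names, confirms that 1,384 cases against 423 is more than a threefold increase and converts the survey percentages into approximate head counts, treating "close to 17,000" respondents as exactly 17,000 (an assumption, so the counts are rough).

```python
def growth_factor(current: int, previous: int) -> float:
    """How many times larger the current count is than the previous one."""
    return current / previous


def approx_count(share_pct: float, population: int) -> int:
    """Approximate head count behind a reported percentage."""
    return round(share_pct / 100 * population)


if __name__ == "__main__":
    # Korean Digital Sex Crime Victim Support Center: AI image-manipulation cases.
    print(f"2024 vs 2023: x{growth_factor(1384, 423):.2f}")
    # 2024 survey: "close to 17,000" respondents, treated here as exactly 17,000.
    print("victimization ~", approx_count(14.5, 17_000))
    print("perpetration ~", approx_count(4.8, 17_000))
    # "1 in 7 adults" expressed as a percentage, for comparison with 14.5%.
    print(f"1 in 7 = {100 / 7:.1f}%")
```

The check shows that the quoted "1 in 7" (about 14.3%) is consistent with the 14.5% victimization rate reported by the same study.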

Although sextortion is not legally defined as a distinct crime in most countries, authorities prosecute related offenses in ways that vary between jurisdictions, categorizing them as child pornography, harassment, extortion, stalking, hacking, or violations of personal privacy [24]. For instance, in the USA, sextortion is defined in two primary ways: as a threat to share a victim’s private sexual images in order to extort something from them, or as coercion of the victim, under threat, into sending sexual material. Federal law typically prosecutes sextortion as extortion or child pornography, depending on the victim’s age [35]. The member states of the European Union also prosecute sextortion in a variety of ways according to domestic legislation, often through extortion, privacy violation, and sexual harassment charges that account for individual and broad social impacts, from defamation and integrity infringements to media manipulation and gender inequalities [41]. For example, in France, illicit coercion of sexual favors faces civil and criminal charges such as sexual assault, extortion, blackmail, or corruption [41]. In addition, France's Digital Republic Law covers issues related to the unauthorized use of personal data, including non-consensual sexual imagery and deepfakes [42]. Similarly, data protection laws are used to protect against sextortion in Germany, Spain, and Hungary; Hungarian legislation specifically includes provisions on sexual exploitation, defined as coercing someone into sexual activities through threats, and, since 2013, on sexual blackmail and extortion [41]. While the US approach of prosecution through existing extortion laws provides flexibility, it fails to address the specific psychological harms of sextortion that specialized legislation like Germany’s might better target. This legal fragmentation creates significant challenges for cross-border enforcement and technology-based solutions.

Digital technologies enable sextortion by providing environments for sexual exploitation. Hence, it is necessary to understand these technologies, the roles they play in sexual exploitation, and their impacts on victims. We examined the articles [38,39,40,43,44] summarized in Table 2 to identify the social media platforms, chat platforms, dating websites, and virtual reality platforms where sexual exploitation activities are prevalent. The findings of [38] on mobile apps emphasize psychological impacts but do not differentiate between platform-specific risks, a critical gap when designing technological countermeasures. The broader categorization of platforms in [43] offers more nuanced insights into how different technological environments create distinct exploitation opportunities, suggesting that a one-size-fits-all mitigation approach is insufficient. These platforms enable criminals to stalk, coerce, threaten, and harass victims into sharing sexually explicit images and videos. Social media platforms also provide grooming channels to lure victims into various romance scams. The explicit images are then stored on cloud services, web hosting platforms, and other file hosting services and used to extort the victims, resulting in sextortion cases. The extortion of victims has psychological, emotional, and financial consequences that can lead to depression, self-harm, and, in the worst cases, suicide.

In this context, sextortion, as a form of cyber abuse and a growing societal concern, particularly for young people and minors, offers a focused case study of the ethical challenges of AI. Efforts to combat this cybercrime may benefit from the use of AI [3,45], and some countries (Indonesia, for example) are putting regulatory frameworks in place to deal with sexual violence crimes, including sextortion (the TPKS law of 2022 [46]). At the opposite end of the spectrum, perpetrators can use AI to amplify sextortion through dating apps [47] or deepfakes [4]. Despite modest advances on the topic, both in the literature and in regulatory and legal frameworks, the significant gap in empirical studies demonstrating the effectiveness of technological solutions (AI or other) in preventing and mitigating sextortion has yet to be addressed. This empirical gap stems both from the methodological challenges of studying sensitive populations and from the rapid evolution of digital technologies, which outpaces traditional research timelines. Furthermore, existing studies often focus on technical detection without adequately addressing the ethical dimensions of AI deployment in these contexts.

Table 2.

Digital technologies’ roles and impact in enabling sextortion.


| Digital Technologies | Roles in Enabling Sextortion | Impact | Referenced Studies |
|---|---|---|---|
| Mobile apps, virtual reality platforms, and social media | Non-consensual taking or sharing of, or threats to share, personal intimate images or videos | Psychological and emotional, social, financial, and behavioral impacts, and physical harm | [38] |
| Social media platforms, messaging apps, online dating sites, camera- and video-enabled devices, email, and online communication channels | Grooming, harassment, non-consensual sharing of intimate images, cyberstalking, romance scams, revenge porn and sextortion, coercive messages | Violence, digital harassment, image-based abuse, sexual aggression and/or coercion, and gender-/sexuality-based harassment | [43] |
| Social networking, online hosting services, and advanced encryption techniques | Recruiting victims, advertising services, storing and sharing illicit content, and protecting communications and data from detection | Mental health risks, psychological terrorism | [40] |
| Social media, messaging apps, GPS tracking apps, online video-sharing platforms, cloud storage, and digital media sharing | Coercion, harassment, and dissemination of sexually explicit content; coerced sexting and sextortion; cyberstalking and monitoring of victims; recording and distribution of sexual assaults; storing and disseminating explicit content | Sexual violence and exploitation | [44] |
| Internet, online platforms, mobile phones, messaging apps, and live-streaming technology | Advertising victims and connecting with potential clients, communicating covertly, coordinating logistics, and broadcasting exploitative and non-consensual explicit content | Fear, anxiety, anger, humiliation, shame, self-blame, suicidal ideation and suicide, depression, financial scams | [39] |
| Educational platforms, poor digital literacy infrastructure | Lack of early cybersafety education enables grooming and manipulation | Psychological and emotional harm, especially to minors | [48] |
| IoT devices and infrastructure | Potential for remote access to cameras, microphones, and private data, enabling covert monitoring and exploitation | Surveillance-driven coercion, data exposure, and non-consensual recordings | [49] |
| Socio-technical platforms (architecture-level vulnerabilities rather than just app-level usage) | Hosting sensitive user data that can be exploited; potential detection gaps | Privacy violations, data exploitation | [50] |

These technological enablers of sextortion show how centralized data storage and insufficient user control over intimate content create exploitation vulnerabilities; this observation directly informs our framework’s focus on decentralized control and enhanced privacy mechanisms.
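To illustrate what decentralized, user-controlled consent over intimate content might look like, the following is a minimal Python sketch of a hash-chained, append-only consent log, in the spirit of the data-wallet consent logic mentioned earlier. It is not the CF-BIAI-SXT specification: all names are hypothetical, and a real deployment would additionally need digital signatures, a distributed ledger with a consensus mechanism, and key management. The log stores only a SHA-256 fingerprint of the content, never the content itself; such a fingerprint matches exact copies only, unlike perceptual hashing.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    """SHA-256 over a canonical JSON encoding of an entry (without its own hash)."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class ConsentLog:
    """Append-only, hash-chained log of one owner's consent decisions."""

    def __init__(self, owner: str) -> None:
        self.owner = owner
        self.entries: list = []

    def record(self, content: bytes, grantee: str, action: str) -> dict:
        if action not in ("grant", "revoke"):
            raise ValueError("action must be 'grant' or 'revoke'")
        body = {
            "owner": self.owner,
            "content_sha256": hashlib.sha256(content).hexdigest(),  # fingerprint only
            "grantee": grantee,
            "action": action,
            "prev": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every link; any edited or reordered entry breaks the chain."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True

    def has_consent(self, content: bytes, grantee: str) -> bool:
        """The latest decision wins; no entry at all means no consent."""
        fingerprint = hashlib.sha256(content).hexdigest()
        allowed = False
        for entry in self.entries:
            if entry["content_sha256"] == fingerprint and entry["grantee"] == grantee:
                allowed = entry["action"] == "grant"
        return allowed


if __name__ == "__main__":
    log = ConsentLog("user-123")
    image = b"example image bytes"
    log.record(image, "platform-A", "grant")
    log.record(image, "platform-A", "revoke")
    print(log.has_consent(image, "platform-A"), log.verify())
```

Defaulting to "no consent" and letting the latest decision win keeps revocation effective, while the hash chain makes any retroactive edit of the history detectable.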

Secondly, we identify the dual-use roles of AI in preventing and countering sexual exploitation on digital platforms, as well as the potential ethical issues of automated AI systems. AI plays a role both in enabling and in mitigating the sexual exploitation that leads to sextortion cases. Even when AI is applied to help prevent sexual exploitation through various automated algorithms, there are ethical concerns about the use and misuse of personal data. In Table 3, we analyzed the related articles [3,4,14,17,18,19,49,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70] to identify the roles of AI in enabling and mitigating sexual exploitation and to collect the ethical concerns raised when AI is applied to address sexual exploitation problems. The role of AI in enabling sexual exploitation mostly involves altering images and video recordings so that victims’ faces or voices replace those of the original actors in sexually explicit content. Such alterations are commonly referred to as deepfakes. These digitally altered images and videos are then used to blackmail and extort victims on social media and chat platforms, resulting in sextortion. Other examples of AI use in sexual exploitation include the sexualization of female AI agents.

According to the studies analyzed in Table 3, AI can be used in many ways to limit, combat, and prevent sexual exploitation on digital platforms. Table 3 also highlights the ethical issues that arise, such as privacy issues due to data misuse and sensitivity, limited detection accuracy due to false positives, bias due to incomplete training datasets, and security and data confidentiality. Other ethical issues include the transparency, trustworthiness, and accountability of AI systems. There are also problems with the accessibility of AI tools, as well as legal issues related to regulatory jurisdiction and to differing interpretations of sexual exploitation; we explain these issues in detail in the following paragraphs of this narrative exploration.
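The false-positive concern can be made concrete with Bayes' rule: when abusive content is rare among the items screened, even a seemingly accurate detector produces mostly false alarms. The Python sketch below uses hypothetical figures (95% recall, a 1% false-positive rate, and a prevalence of 1 in 1,000) that do not come from the studies in Table 3.

```python
def precision(tpr: float, fpr: float, prevalence: float) -> float:
    """Positive predictive value of a detector, via Bayes' rule."""
    true_pos = tpr * prevalence
    false_pos = fpr * (1 - prevalence)
    return true_pos / (true_pos + false_pos)


def expected_flags(n_items: int, tpr: float, fpr: float, prevalence: float) -> tuple:
    """Expected (true positives, false positives) when screening n_items."""
    abusive = n_items * prevalence
    return tpr * abusive, fpr * (n_items - abusive)


if __name__ == "__main__":
    # Hypothetical detector: 95% recall, 1% false-positive rate,
    # screening content in which 1 item in 1,000 is actually abusive.
    print(f"precision = {precision(0.95, 0.01, 0.001):.3f}")
    tp, fp = expected_flags(1_000_000, 0.95, 0.01, 0.001)
    print(f"per million items: {tp:.0f} correct flags, {fp:.0f} false alarms")
```

Under these assumed figures, fewer than one in ten flagged items would actually be abusive, which illustrates why human review of automated flags, and attention to the privacy of wrongly flagged users, matter in this context.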

Thirdly, we clarify the emergence of AI for social good and societal resilience as anchors for ethical AI development.

AI for Social Good: With its ability to both help and harm, AI holds immense promise for producing multidimensional impacts, and its abilities should be properly harnessed, especially to address social issues. This potential to address societal challenges is illustrated by solutions such as conversational AI tools deployed for mental health awareness. For example, Ref. [71] shows how an AI-based emotional chatbot can be used to detect mental health issues such as depression by analyzing users' facial expressions and textual content, while studies [72,73] describe AI language models for identifying depression and supporting suicide prevention. However, there is a gap in understanding how AI may support the mitigation of long-term psychological effects and how it may be integrated into mental health frameworks. Because these solutions are often proposed individually, they fail to consider how each component might work synergistically as part of a holistic support system. A balanced and holistic approach to embedding AI within existing complex support networks can maximize its capacity to benefit individuals and society at large.

Societal Impact and Ethical Complexity: The societal impact of AI extends beyond the micro-level of individuals interacting with technology, and the literature analyzing its potential uses for social good is booming. Before 2021, Google Scholar returned fewer than 450,000 results on AI and social good; as of March 2025, it returned close to 6 million, and this number is likely to keep increasing (see also the analysis by [74]). For example, Ref. [75] explores the “potential economic, political, and social costs”, while [76] investigates the ethical applications of AI in social systems, paving the way for a nuanced discussion of AI use in addressing social challenges. Similar issues are addressed in [76], which asserts that the wide implementation of AI systems goes beyond engineering to the intersection of technology and society and illustrates the concept of “ethically designed social computing”. Beyond the positive aspects of “accuracy, efficiency, and cost savings” [77], issues such as privacy, trust, accountability, and bias must be considered [78]. Disparities in education and critical thinking can lead to unequal deployment of such technology and, ultimately, to greater societal polarization [79], an exacerbation of social inequality [80], and the erosion of societal resilience. As with the previous point, the critical analysis of this wide range of insights lacks clarity on how the biases and inequities resulting from AI may be mitigated to enhance societal resilience. So far, the literature remains at the level of describing and assessing the status quo, without addressing the next steps of a risk management process: risk interconnection and risk mitigation or reduction.

Resilient societies and “Good AI”: The idea of a ‘good’ AI society is not new. The work of [81] starts a conversation on ten potential areas of social impact: “crisis response, economic empowerment, educational challenges, environmental challenges, equality and inclusion, health and hunger, information verification and validation, infrastructure management, public and social sector management, security, and justice”. Along the same lines, the work of [82] maps 14 ethical implications for AI in digital technologies: “dignity and well-being, safety, sustainability, intelligibility, accountability, fairness, promotion of prosperity, solidarity, autonomy, privacy, security, regulatory impact, financial and economic impact and individual and societal impact.” All these implications and potential impacts are woven into a highly complex ontological system: a society that exists dually (physically and digitally), in which AI represents a new layer. Arising from the interplay of individual, institutional, and community capacities, societal resilience is based on coping, adaptation, and transformation and relies on holistic approaches, inter- and multidisciplinary frameworks, and normative epistemological questions [83,84,85]. Although AI is a potential tool for improving individual resilience (particularly in light of conservation of resources theory, as discussed by [86]), its role in community resilience is only beginning to be acknowledged. Firstly, societal resilience is promoted through adaptable and flexible systems and structures and through innovation spaces [87], with AI supporting operational cyber resilience [88]. Secondly, AI’s own resilience must be assessed, and one way to do so is through agent-based modeling [89]. However, more empirical research is needed on how AI can be integrated to reliably enhance social resilience without introducing new risks and vulnerabilities.

Ethical Risks and Governance Challenges: Conversely, AI is considered to pose significant risks to humans and societies if it is not developed and used ethically. Researchers, corporations, and NGOs, along with policymakers, are exploring how to maximize AI’s benefits and capabilities. Yet the rapid development and implementation of AI solutions may outpace our understanding of their unintended effects. The challenges posed by AI are diverse [78], ranging from algorithmic biases to the potential for humans to inherit AI errors. Fundamentally, AI must be fair, transparent, explainable, responsible, trustworthy, and reliable. Without these attributes, it remains a ‘black box’ whose developers may themselves struggle to comprehend how it generates its responses. It is of utmost importance that society (including all stakeholders) take immediate steps to prevent AI tools from engaging in unpredictable behaviors and to establish the credibility and trustworthiness essential to society.

Frameworks for Ethical AI, Guidelines and Ethical Principles, Regulatory and Legal Frameworks: As early as 2007, the authors of [90] emphasized that evaluating technologies in isolation is futile because of their social implications. In line with this view, a series of multi-layered frameworks have been developed to assess AI’s potential impact on societal good. In [91], AI solutions were analyzed using four criteria: the breadth and depth of impact, the potential implementation of the solution, the risks of the solution (“Bias/Fairness/Transparency Concerns” and “Need for Human Involvement”), and synergies in the area of opportunity. Although [92] claims that, even before the widespread use of large language models (LLMs), a unified vision of the future of AI was needed, the proposed guidelines fail to find a common thread. In their investigation of 84 guidelines, Refs. [93,94] highlight a consensus on fundamental AI ethics principles while noting high variation in how the principles are implemented. Building on [95] on the ethics of algorithms, Ref. [96] proposes seven essential factors for AI for Good: “(1) falsifiability and incremental deployment; (2) safeguards against the manipulation of predictors; (3) receiver contextualized intervention; (4) receiver-contextualized explanation and transparent purposes; (5) privacy protection and data subject consent; (6) situational fairness; and (7) human-friendly semanticisation”. Similarly, Refs. [97,98,99] expand on the topic and refer to the need for value-sensitive design, defined as a method for integrating values into technological solutions, while [100] advocates for a socially responsible algorithm and [101] proposes ethics penetration testing for AI solutions.

Various countries are implementing AI regulations to address the black-box complexity of AI, particularly the need to balance its benefits against its potential harms. In the United States, the Biden administration introduced an executive order advocating for ‘Safe, Secure, and Trustworthy AI’; Canada proposed an Algorithmic Impact Assessment; the World Economic Forum, an AI Procurement in a Box; and the OECD, a Framework on AI Strategies [102]. The European Union is enacting its first AI regulations, although their implementation requires a more integrated approach across member states and governments [103,104,105]. The EU AI Act establishes four categories of prohibited AI practices: “(1) AI systems deploying subliminal techniques; (2) AI practices exploiting vulnerabilities; (3) social scoring systems; and (4) ‘real-time’ remote biometric identification systems” [105]. Still, existing frameworks, such as the Assessment List for Trustworthy AI (ALTAI), play a key role in guiding the development of fair and ethical AI [106]. ALTAI, in particular, is designed to safeguard people’s fundamental rights [107], while concurrent regulations, such as the General Data Protection Regulation (GDPR), protect user privacy [108]. The efficacy of these frameworks has yet to be fully determined. For example, Ref. [107] argues that AI frameworks such as ALTAI must be interpreted from a systems-theory standpoint so that they can be applied across disciplines, allowing “the integration of a rich set of tools, legislation, and approaches”. The scalability of ethical AI frameworks and their practical application in highly sensitive areas, such as sextortion, also remain areas where current research can improve. Beyond ethical frameworks, using data privacy laws as an instrument for regulating AI presents several challenges. These include controlling personal data, ensuring the right of access to personal data, adhering to the purpose limitation principle, and addressing the lack of transparency in AI decision-making processes. Furthermore, AI systems often introduce privacy risks by obscuring algorithmic biases, complicating the enforcement of the right to be forgotten, and undermining the right to object to automated decision-making [109]. Regardless of the scope, relevance, or enforcement of these regulations, AI applications pose significant risks that extend beyond the reach of data privacy laws.

Toward Blockchain-Enabled Ethical AI and Its Transformative Potential for a Resilient Society: The social good of AI goes beyond technological fixes; it calls for societal transformation. Moreover, ethical AI requires the participation of the communities it seeks to benefit [110]. Although significant progress has been made in investigating the potential of AI for societal resilience, two gaps stand out. The first is a critical gap in understanding how biases are perpetuated, especially in the context of sextortion, where social, anthropological, and technological concerns intersect. The second is a lack of critical analysis of potential risks and consequences, as most of the literature is either polarized or presents narrowly targeted solutions. Moreover, the current literature offers little insight into the scalability and practical implementation of ethical AI frameworks, particularly in combating sextortion. This status quo underscores the need both for an ethical framework such as the one proposed in this position document and for comprehensive studies that critically assess its limitations.

Although blockchain characteristics and capabilities are referenced as part of the ethical ecosystem (Figure 1), Section 3.2, the second phase of the research, offers a dedicated assessment of this technology’s suitability as an ethical infrastructure, specifically for the case of sextortion.