Abstract

Federated learning (FL) is an advanced distributed machine learning method that effectively solves the data silo problem. With the increasing popularity of federated learning and the growing importance of privacy protection, federated learning methods that can securely aggregate models have received widespread attention. Federated learning enables clients to train models locally and share their model updates with the server. While this approach allows collaborative model training without exposing raw data, it still risks leaking sensitive information. To enhance privacy protection in federated learning, secure aggregation is considered a key enabling technology that requires further in-depth investigation. This paper summarizes the definition, classification, and applications of federated learning; reviews secure aggregation protocols proposed to address privacy and security issues in federated learning; extensively analyzes the selected protocols; and concludes by highlighting the significant challenges and future research directions in applying secure aggregation in federated learning. The purpose of this paper is to review and analyze prior research, evaluate the advantages and disadvantages of various secure aggregation schemes, and propose potential future research directions. This work aims to serve as a valuable reference for researchers studying secure aggregation in federated learning.

1. Introduction

With the explosive growth of data, neural networks have achieved important results in computer vision, speech recognition, agricultural science, and other fields [1,2,3,4,5]. However, building joint datasets across organizations and individuals faces the dual challenges of data silos and privacy protection. Traditional data processing methods usually adopt centralized collection and unified processing, but they are prone to data leakage, especially in scenarios where multiple users share data [6]. In order to protect user privacy, countries have introduced relevant laws and regulations; for example, the Cyber Security Law of the People’s Republic of China clearly stipulates that network operators shall not disclose or provide personal information without authorization. Although these regulations effectively protect privacy, they also make data collection and model training more difficult.

In order to protect privacy and solve the data silo problem, federated learning has emerged. In 2016, Google first proposed federated learning [7,8], the core idea of which is to train models through local devices and send model parameters to a central server for global aggregation, thus realizing localized training of data and privacy protection. Compared with traditional centralized machine learning methods, federated learning avoids the risk of privacy leakage caused by centralized data storage and, at the same time, reduces communication overhead and improves computational efficiency.

However, federated learning has vulnerabilities that attackers can exploit while model data is transmitted during training. For instance, a malicious central server may reconstruct a user’s private data from the updates it receives, and a malicious participant may degrade the training of the global model. Therefore, designing secure and efficient aggregation algorithms is crucial to safeguarding the privacy and performance of federated learning. Current research focuses on combining privacy-preserving mechanisms with aggregation algorithms to address more complex threat models. In addition, since the participants are usually resource-constrained devices, such as mobile terminals, aggregation schemes also need high communication efficiency and fault tolerance. Given the growing demand for privacy protection, it is crucial to systematically analyze the strengths and limitations of existing secure aggregation techniques. In this paper, we categorize and discuss mainstream schemes from the perspectives of security and efficiency, and evaluate the scenarios to which they apply.

Existing surveys of federated learning mainly focus on its definition, applications, classification, and system security, and seldom address secure aggregation itself. In summarizing the existing literature, we identify the following four deficiencies:

- There is no detailed introduction of privacy-preserving mechanisms applied to federated learning, and the discussion of security is limited to describing the development process of integrating privacy-preserving mechanisms with federated learning [9,10,11].

- In the discussion of security, only the means of attack and the defense methods that can be used are analyzed, and there is no systematic categorization of defense methods based on privacy-preserving mechanisms [12].

- The literature only analyzes the possibility of combining individual privacy-preserving mechanisms with federated learning, lacking a side-by-side comparison with other secure aggregation schemes [13,14].

- Existing work compares secure aggregation algorithms with other aggregation algorithms, focusing on their differences, but lacks a longitudinal comparison among secure aggregation algorithms [15,16].

Therefore, this paper primarily introduces the classification of federated learning from the perspective of secure aggregation, details the privacy protection mechanisms currently employed in federated learning, and categorizes the secure aggregation schemes based on privacy protection mechanisms. The main contributions of this work are as follows:

- This work classifies federated learning from the perspective of secure aggregation, provides a detailed overview of privacy-preserving mechanisms currently applied in federated learning, and categorizes existing secure aggregation schemes based on these mechanisms.

- This work evaluates the resource consumption, protected models, accuracy, and network structures of different schemes, with vertical comparisons of secure aggregation algorithms under the same privacy-preserving mechanism and horizontal comparisons across distinct mechanisms.

- This work examines the future research directions of secure aggregation and the associated challenges.

This paper is organized as follows: Section 2 introduces the definition, classification, and application scenarios of federated learning; Section 3 introduces the privacy-preserving mechanisms used in federated learning; Section 4 introduces the original aggregation algorithms for federated learning and analyzes and summarizes existing research on secure aggregation algorithms; Section 5 analyzes the challenges faced by secure aggregation in federated learning and the future research directions, and finally concludes the paper.

2. Federated Learning

2.1. Definition of Federated Learning

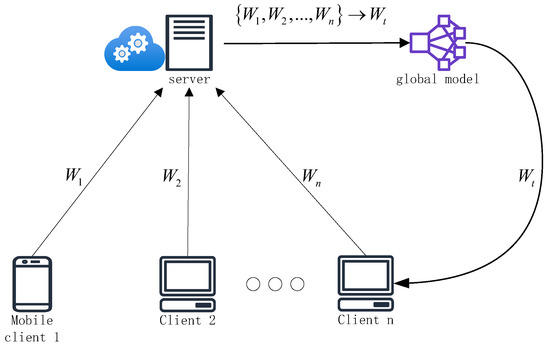

Federated learning is the process of distributed machine learning training deployed across multiple clients. To ensure data privacy, federated learning only allows clients to exchange model gradients with a central server. In this process, each client trains its own model using local data and then uploads the local model to the central server. After aggregating all received models, the server returns the new global model to each client, as shown in Figure 1.

Figure 1. A schematic diagram of federated learning.

In practice, it is assumed that each of the n clients holds a private dataset and has no direct access to the data of other clients. A typical training flow consists of the following three steps [8]:

- Initialization: At the t-th round of communication, each client downloads the latest global model, $w_t$, from the server for initialization.

- Local training: Each client, $k$, iteratively trains on its own local dataset, $D_k$, with hyperparameters such as the learning rate, $\eta$. The local model, $w_{t+1}^{k}$, is updated according to $w_{t+1}^{k} = w_t - \eta \nabla F_k(w_t)$, where $F_k$ is the local loss on $D_k$, and sent to the server.

- Model aggregation: The server aggregates the collected local models into a new global model, $w_{t+1}$, which serves as the starting point for the next round.
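The three steps above can be sketched in a few lines of Python. This is a minimal illustration rather than the procedure of any cited work: the one-parameter least-squares model, the helper names (`local_train`, `aggregate`, `federated_round`, `make_client`), the learning rate, and the choice of weighting by dataset size are all assumptions made for the example.

```python
import random

def local_train(w, data, lr=0.1, epochs=5):
    """Local training: a few gradient steps on the 1-D model y ≈ w * x."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

def aggregate(local_models, sizes):
    """Model aggregation: average local models, weighted by dataset size (assumed rule)."""
    total = sum(sizes)
    return sum(w * n for w, n in zip(local_models, sizes)) / total

def federated_round(w_global, client_datasets):
    # Initialization: every client starts from the current global model.
    local_models = [local_train(w_global, d) for d in client_datasets]
    # The server only sees the local models, never the raw datasets.
    return aggregate(local_models, [len(d) for d in client_datasets])

def make_client(n, true_w=3.0):
    """Hypothetical private dataset: noise-free samples of y = true_w * x."""
    xs = [random.uniform(-1, 1) for _ in range(n)]
    return [(x, true_w * x) for x in xs]

random.seed(0)
clients = [make_client(n) for n in (20, 50, 30)]
w = 0.0
for t in range(50):
    w = federated_round(w, clients)
print("global model after 50 rounds:", w)
```

Because every synthetic client draws from the same underlying relation, the global model approaches the shared slope; with heterogeneous client data the behaviour can differ considerably.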

2.2. Classification of Federated Learning

2.2.1. Data Partition

According to the difference between feature space and sample space [17], federated learning can be categorized into three types, which are horizontal federated learning, vertical federated learning, and federated transfer learning. Horizontal federated learning is suitable for scenarios that have the same feature space but different sample spaces. Vertical federated learning applies to scenarios that have the same sample space but with different feature spaces. Federated transfer learning applies to scenarios where the data comes from different participants that are very different in terms of features and samples.

Horizontal federated learning applies when participants’ datasets share the same feature space but cover different samples. It brings together multiple data holders and trains on data with the same features, which improves the accuracy and generalization ability of the model; it is widely used in healthcare and finance.

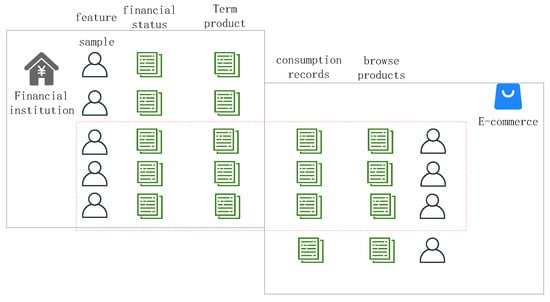

As shown in Figure 2, samples with the same features from different healthcare organizations are collected through federated learning, and the shared features are used to train the model. Using horizontal federated learning to train the model not only increases the total number of training samples but also improves the accuracy of the model.

Figure 2. A schematic diagram of horizontal federated learning.

Vertical federated learning applies when participants’ datasets cover the same samples but hold different features. It aligns the overlapping samples across participants and thereby increases the feature dimension of the sample data; it is mainly applied between non-competing companies or organizations.

As shown in Figure 3, financial institutions cooperate with e-commerce platforms to use different feature space data of the same users for model training to improve the feature dimension of the samples, better predict user consumption, and facilitate the rating of their service levels afterward.

Figure 3. A schematic diagram of vertical federated learning.
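The difference between the horizontal and vertical settings can be made concrete with a toy table. The sketch below is purely illustrative: the user IDs, feature names, values, and party names are hypothetical and do not come from any cited dataset.

```python
# Toy table: rows are samples (user IDs), columns are features. All values are made up.
table = {
    "u1": {"age": 34, "income": 50, "purchases": 7},
    "u2": {"age": 28, "income": 42, "purchases": 3},
    "u3": {"age": 45, "income": 80, "purchases": 9},
    "u4": {"age": 51, "income": 65, "purchases": 1},
}

def horizontal_split(table, sample_groups):
    """Horizontal setting: each party holds different samples with all features."""
    return [{uid: dict(table[uid]) for uid in group} for group in sample_groups]

def vertical_split(table, feature_groups):
    """Vertical setting: each party holds the same samples with different features."""
    return [
        {uid: {f: row[f] for f in features} for uid, row in table.items()}
        for features in feature_groups
    ]

hospital_a, hospital_b = horizontal_split(table, [["u1", "u2"], ["u3", "u4"]])
bank, shop = vertical_split(table, [["age", "income"], ["purchases"]])

print(sorted(hospital_a), sorted(hospital_b))  # disjoint sample IDs, same columns
print(bank["u1"], shop["u1"])                  # same sample ID, disjoint columns
```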

Federated transfer learning derives common representations from different feature spaces and a limited set of common samples. Transfer learning is applied to scenarios where the relevant dataset is small, but more data is needed to optimize the model performance [18,19,20].

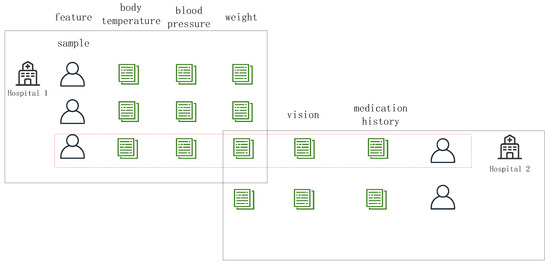

As shown in Figure 4, federated transfer learning can be used to transfer treatment and diagnosis information from different healthcare organizations to make a more comprehensive diagnosis of a disease. It can make training more flexible under different data structures, but the current research in federated transfer learning is not mature enough, so there is still much room for development.

Figure 4. A schematic diagram of federated transfer learning.

2.2.2. Client Size

Based on the number of clients, federated learning can be categorized into two types: cross-device federated learning and cross-silo federated learning. Cross-device federated learning refers to federated learning involving a large number of widely dispersed devices. Cross-silo federated learning involves a small number of organizations that store large amounts of sensitive data.

Cross-device federated learning is a federated learning approach trained using a large number of clients that have similar domains to the global model, such as IoT-based systems [21], where each device independently collects its usage data. The advantage of this method is that it maximizes the use of decentralized data sources while protecting user privacy.

Cross-silo federated learning typically involves large organizations or companies as clients. Different companies collaborate to build a federated system, usually with between 2 and 100 clients, and each company maintains its local data and performs model training on its own database. Cross-silo federated learning is more flexible in its implementation, and each organization can train on its data as needed.

2.2.3. Network Structure

Distinguished by network architecture, federated learning consists of centralized federated learning and decentralized federated learning. Centralized federated learning uses a central server to guide aggregation and synchronization. Decentralized federated learning allows clients to communicate directly with each other in a peer-to-peer (P2P) manner.

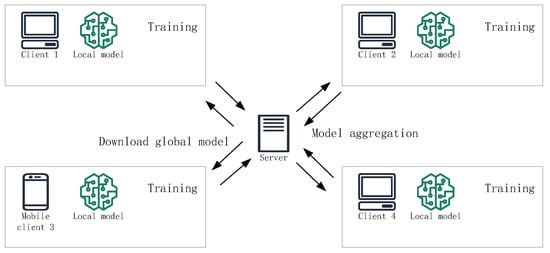

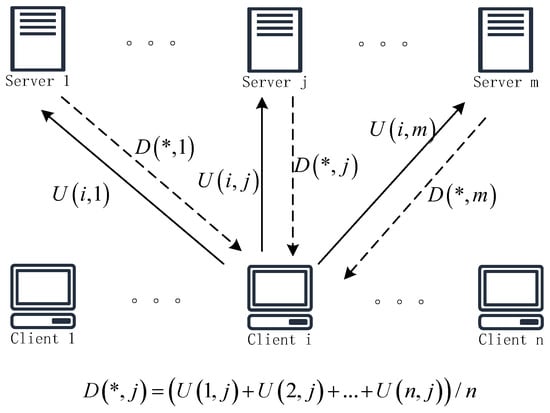

In centralized federated learning, each client trains a local model with its data, and the central server is responsible for model aggregation. Centralized federated learning is mainly for multi-user federated learning scenarios, where the enterprise acts as a server and coordinates the global model. The network topology of this model is shown in Figure 5.

Figure 5. The network structures for centralized federated learning.

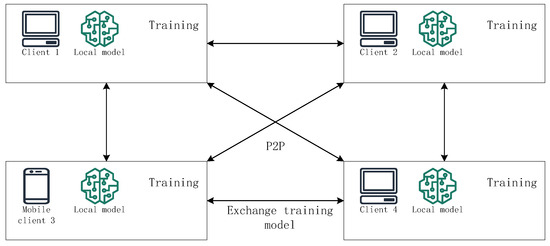

In decentralized federated learning, each client trains a local model based on its private data. After training, a client may choose to communicate with other clients to exchange or fuse local models. The network topology adopted for decentralized federated learning is shown in Figure 6. Decentralized federated learning eliminates the dependence on a central server for model aggregation and replaces it with algorithms that build trust and reliability among clients, thus reducing reliance on potentially untrusted servers and increasing resilience to network failures.

Figure 6. The network structures for decentralized federated learning.
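One simple way in which clients can fuse local models without a server is repeated neighbor averaging. The sketch below is an assumption-laden illustration, not a protocol from the cited literature: the ring topology, the uniform averaging rule, the scalar "models", and the function name `gossip_round` are all chosen for brevity.

```python
def gossip_round(models, neighbors):
    """Each client replaces its model with the mean of its own and its neighbors' models."""
    updated = []
    for i, w in enumerate(models):
        group = [w] + [models[j] for j in neighbors[i]]
        updated.append(sum(group) / len(group))
    return updated

# Hypothetical ring of four clients; each talks only to its two neighbors.
neighbors = {0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [2, 0]}
models = [1.0, 2.0, 3.0, 6.0]   # scalar stand-ins for local model parameters
initial_mean = sum(models) / len(models)

for _ in range(50):
    models = gossip_round(models, neighbors)

print(models, initial_mean)
```

With this symmetric rule every client drifts toward the network-wide average, so the peer-to-peer exchange reaches the same consensus a central averaging server would compute, at the cost of several communication rounds.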

2.3. Applications

Federated learning is currently exhibiting its great potential in various fields, and applying federated learning to real-life applications can protect user data, improve the accuracy of models, and provide higher-quality services.

The main application areas of federated learning demand robust privacy protection, the ability to handle data heterogeneity, suitability for real-time scenarios, and efficient management of widely distributed data.

In the field of mobile devices, mobile applications are typically latency-sensitive. Federated learning supports model updates directly on local devices, reducing communication with central servers and enabling low-latency responses. Given the heterogeneity of mobile applications due to diverse user behaviors, federated learning can aggregate individualized device models to deliver personalized services.

In agriculture, data is widely distributed across various sources such as sensors and drones. Federated learning minimizes the communication overhead associated with centralized data transmission, leverages data diversity, and enhances model generalization. Agricultural applications often require real-time predictive analytics, such as yield forecasting and disease classification. Federated learning supports edge device-based model updates, improving real-time performance and alleviating cloud computing burdens.

In healthcare, sensitive data (e.g., medical records, imaging data, genetic information) poses significant privacy risks if exposed. Federated learning provides robust privacy protection by enabling multi-institutional collaborative modeling without sharing raw data. It addresses the challenges of data ownership and privacy in clinical research, drug development, and other fields by facilitating the integration of multi-center data while safeguarding patient confidentiality.

In the field of renewable energy, it is challenging for a single model to adapt to complex environments due to significant regional variations in meteorological conditions, such as wind speed and solar irradiance. Therefore, improving model generalization capability is essential. Federated learning has been widely adopted as it enables the sharing of modeling experience and enhances both the generalization and prediction accuracy of models across diverse scenarios.

Federated learning is more frequently applied in these fields due to its inherent advantages in privacy protection, real-time processing, support for training on distributed data, and the maturity of its technologies and ecosystem.

2.3.1. Mobile Device

Federated learning is applied to smartphones to predict human behavioral trajectories while preserving user privacy. Google used federated learning to train language models among Android mobile users [22], improving the accuracy of keyboard keystroke prediction over the previous approach while protecting privacy. The use of federated learning to train word- and character-level recurrent neural network models for predicting keystrokes and emoticons [23] enables server-based data collection and training in a commercial environment.

Mobile devices also include devices in IoT environments, and federated learning has also been applied to IoT. In [24], federated learning is combined with smart home IoT to build a federated multi-task learning framework that learns customized, context-aware policies from multiple smart homes while preserving privacy.

Applying federated learning to wireless communications, such as edge computing and 5G networks, can solve the energy, bandwidth, latency, and data privacy problems in wireless communications. In [25], federated learning is applied to wireless networks and edge mobile device computing, where a distributed stochastic gradient descent scheme over a shared noisy wireless channel enables faster model convergence, higher accuracy, and more efficient utilization of limited channel bandwidth resources. In [26], federated learning is applied to heterogeneous mobile edge devices to address three key challenges: communication efficiency, statistical heterogeneity, and system heterogeneity.

2.3.2. Agriculture

Currently, in agriculture, there is a growing demand for data decentralization and privacy protection, such as farm sensor data [27,28] and yield monitoring data [29,30]. Federated learning enables smart agriculture to efficiently collect specific environmental variables, optimize planting strategies, and predict the impact of environmental changes on crop yield and quality, thereby outperforming traditional data collection methods [31,32,33].

In the agricultural supply chain, federated learning facilitates the integration of data across various stages, enhancing transparency and efficiency [34]. For instance, an intelligent agricultural machinery collaboration model based on federated learning [35,36] can coordinate agricultural machinery systems with external supply chains, storage, and transportation networks. This integration significantly improves operational efficiency, task quality, and land utilization.

In precision agriculture, federated learning enables efficient management of agricultural resources, ensuring sustainable agricultural development. For example, it supports precise irrigation and water-saving systems [37], accurate crop growth predictions [38], and effective crop monitoring [39].

Using federated learning for smart agriculture enables disease classification and detection. In [40], federated learning combined with convolutional neural networks was utilized to detect watermelon leaf diseases. The scheme was evaluated across five different clients and five disease categories, as follows: healthy, anthracnose, downy mildew, powdery mildew, and bacterial blight mosaic. The results demonstrated a detection accuracy of 97% for these specific diseases.

2.3.3. Healthcare

Federated learning has a promising application in the healthcare domain because of its data privacy-preserving properties. For individual healthcare organizations, the amount of patient data may not be sufficient to train predictive models that can be put into use [41]; therefore, the use of federated learning techniques can build federated networks for cross-regional hospitals with similar medical information. The emergence of federated learning breaks down the barriers that prevent data from being shared between different healthcare organizations for disease analysis.

In [42], federated learning is applied to the collection and analysis of electronic health records, enabling the development of secure data harmonization and federated computational procedures to transform extensive electronic health records into meaningful phenotypic clinical concepts for analysis.

In [43], federated learning is applied to address the problem of searching for similar patient matches. The similarity between patients is efficiently computed using their hash codes, enabling healthcare organizations to summarize the general characteristics of similar patients.

Federated learning can also support the automatic extraction of medical information from unstructured text. Liu et al. [44] combined federated learning with natural language processing for the first time, making full use of clinical records from different hospitals to train a machine learning model specific to a medical task and improve the quality of a particular clinical task.

2.3.4. Renewable Energy

In the field of renewable energy, wind and solar power generation heavily rely on sensitive data such as geographical and climatic conditions. Traditional centralized model training often suffers from privacy leakage and data silo issues. Thus, federated learning has emerged as a key approach to preserving data privacy while enabling collaborative model development across multiple parties.

In [45], deep reinforcement learning is integrated with federated learning to develop an ultrashort-term wind power forecasting method, which effectively protects data privacy while maintaining forecasting accuracy compared to traditional approaches.

In [46], a hybrid prediction model based on federated learning is proposed to address the limitations of traditional photovoltaic (PV) power prediction methods, particularly their inability to share data and their poor generalization performance. Experimental results show that the prediction accuracy of the proposed model improves by more than 20%.

3. Privacy-Preserving Mechanisms

This section introduces four privacy-preserving mechanisms, namely homomorphic encryption, secure multi-party computation, differential privacy, and blockchain, along with the specific techniques from each mechanism that are used in federated learning.

3.1. Homomorphic Encryption

Homomorphic encryption (HE) is a form of encryption that allows computations to be performed on encrypted data, and the results of these computations remain valid after decryption. Encryption and decryption in a homomorphic scheme satisfy the equation $Dec_{sk}(Enc_{pk}(m)) = m$. When the ciphertext $c$ is obtained by evaluating $f$ homomorphically on the ciphertexts $Enc_{pk}(m_1), \ldots, Enc_{pk}(m_n)$, it follows that $Dec_{sk}(c) = f(m_1, \ldots, m_n)$. Here, $m_i$ denotes the data to be encrypted by each user, $Dec$ is the decryption function, $Enc$ is the encryption function, $pk$ and $sk$ refer to the public key used for encryption and the private key used for decryption, respectively, $c$ is the value of the encrypted data after the execution of the function, and $f$ is the function to be executed; in federated learning, $f$ is generally the aggregation algorithm.

Homomorphic encryption can be classified according to the number of arithmetic operations allowed on the encrypted data. The three classes of homomorphic encryption are as follows:

- Partially homomorphic encryption (PHE): Permits an unlimited number of operations, but restricts them to a single type, such as addition or multiplication.

- Somewhat homomorphic encryption (SWHE): Permits more than one type of operation but limits the number of times they can be applied, such as allowing only one multiplication.

- Fully homomorphic encryption (FHE): Allows arbitrary combinations of additions and multiplications, applied an unlimited number of times.

The homomorphic encryption algorithms applied in federated learning are described as follows:

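- Paillier partially homomorphic encryption: The Paillier encryption algorithm is an asymmetric encryption algorithm with the additive homomorphic property and consists of three main steps:

  1. Key generation: (1) Randomly select two large primes $p$ and $q$ such that $p \neq q$. (2) Calculate $n = pq$ and $\lambda = \mathrm{lcm}(p-1, q-1)$, where $\mathrm{lcm}$ is the least common multiple. (3) Randomly select $g \in \mathbb{Z}_{n^2}^{*}$ and calculate the auxiliary value $\mu = \left(L(g^{\lambda} \bmod n^2)\right)^{-1} \bmod n$. Here, $L(x) = (x-1)/n$, and $\mathbb{Z}_{n^2}^{*}$ is the multiplicative group modulo $n^2$. (4) Use $(n, g)$ as the public key and $(\lambda, \mu)$ as the private key.

  2. Encryption: For plaintext $m \in \mathbb{Z}_n$, randomly select $r \in \mathbb{Z}_n^{*}$ and compute the ciphertext $c = g^{m} \cdot r^{n} \bmod n^2$.

  3. Decryption: For ciphertext $c$, compute the intermediate value $L(c^{\lambda} \bmod n^2)$ required to restore the plaintext $m = L(c^{\lambda} \bmod n^2) \cdot \mu \bmod n$.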

- ElGamal partially homomorphic encryption: The ElGamal encryption algorithm is a public key encryption scheme with the multiplicative homomorphic property, comprising three main steps:

  1. Key generation: (1) Choose a large prime $p$ and a generator $g$ of the multiplicative group $\mathbb{Z}_p^{*}$. (2) Select a private key $x \in \{1, \ldots, p-2\}$. (3) Calculate $h = g^{x} \bmod p$ and use $(p, g, h)$ as the public key.

  2. Encryption: For plaintext $m \in \mathbb{Z}_p^{*}$, randomly select an ephemeral key $y \in \{1, \ldots, p-2\}$, compute $c_1 = g^{y} \bmod p$ and $c_2 = m \cdot h^{y} \bmod p$, and represent the ciphertext as the pair $(c_1, c_2)$.

  3. Decryption: Using the private key $x$, compute the shared secret $s = c_1^{x} \bmod p$, and then restore the plaintext $m = c_2 \cdot s^{-1} \bmod p$, where $s^{-1}$ is the modular inverse of $s$ modulo $p$.

- CKKS fully homomorphic encryption: The CKKS encryption algorithm is a fully homomorphic encryption algorithm designed for approximate computation, consisting of the following five main steps:

  1. Key generation: (1) Parameter selection: Define the polynomial ring $R = \mathbb{Z}[X]/(X^{N} + 1)$, where $X^{N} + 1$ serves as the polynomial modulus in the CKKS encryption scheme, $N$ is a power of 2, and $X$ is a formal variable. Choose a set of prime moduli $q_0, q_1, \ldots, q_L$ to control noise growth. Define the scaling factor $\Delta$ to map floating-point numbers to integer polynomials. Select a noise distribution, such as a discrete Gaussian distribution, to generate a small noise polynomial $e$. (2) Generating keys: Generate the private key $sk = s$, where $s$ is a small polynomial randomly selected from the ring. Generate the public key $pk = (b, a)$ with $b = -a \cdot s + e \bmod q$, where $a$ is a random polynomial and $e$ is noise.

  2. Data encoding: (1) Data vectorization: Encode the plaintext data $z$ to be encrypted by the user as a polynomial $m(X)$. (2) Scaling polynomials: Scale the polynomial by a factor of $\Delta$ to obtain $\Delta \cdot m(X)$.

  3. Encryption: Generate the ciphertext $c = (c_0, c_1)$, with $c_0 = b \cdot r + e_0 + \Delta \cdot m(X) \bmod q$ and $c_1 = a \cdot r + e_1 \bmod q$, where $r$ is a random polynomial and $e_0$, $e_1$ are noise polynomials.

  4. Decryption: Decrypt the ciphertext using the private key $s$: $m'(X) = c_0 + c_1 \cdot s \bmod q$.

  5. Plaintext recovery: $m(X) \approx m'(X)/\Delta$, after which it is converted to plaintext data $z$.
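As a concrete illustration of the Paillier steps listed above, the following sketch follows the standard textbook construction and shows why the scheme suits additive aggregation: multiplying ciphertexts adds the underlying plaintexts. The primes are toy values chosen for readability (far too small for real use), the choice $g = n + 1$ is one common valid option rather than a requirement, and the integer "updates" are hypothetical stand-ins for quantized model parameters.

```python
import math
import random

def L(x, n):
    """The function L(x) = (x - 1) / n used in key generation and decryption."""
    return (x - 1) // n

def keygen(p, q):
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)   # lcm(p - 1, q - 1)
    g = n + 1                                           # assumed choice of g
    mu = pow(L(pow(g, lam, n * n), n), -1, n)           # modular inverse (Python 3.8+)
    return (n, g), (lam, mu)

def encrypt(pk, m):
    n, g = pk
    while True:                                         # pick r in Z_n^*
        r = random.randrange(1, n)
        if math.gcd(r, n) == 1:
            break
    return (pow(g, m, n * n) * pow(r, n, n * n)) % (n * n)

def decrypt(pk, sk, c):
    n, _ = pk
    lam, mu = sk
    return (L(pow(c, lam, n * n), n) * mu) % n

pk, sk = keygen(1009, 1013)          # toy primes, for illustration only
updates = [12, 30, 7]                # hypothetical integer-encoded client updates
ciphertexts = [encrypt(pk, m) for m in updates]

# Server side: multiply ciphertexts modulo n^2 without seeing any plaintext.
aggregate = 1
for c in ciphertexts:
    aggregate = (aggregate * c) % (pk[0] ** 2)

print(decrypt(pk, sk, aggregate) == sum(updates))
```

In a federated setting the party that multiplies the ciphertexts would not hold the private key; who holds it is a design decision that differs between schemes.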

The above three homomorphic encryption schemes are relatively mature and have distinct application scenarios. Paillier and ElGamal are PHE schemes, and they are generally more efficient than the CKKS scheme. Paillier supports additive homomorphism properties, offering higher precision in additive aggregation and lower algorithmic complexity. ElGamal supports multiplicative homomorphism properties, making it suitable for weighted multiplications and key exchange scenarios. Unlike Paillier and ElGamal, CKKS supports the direct encryption of real numbers, making it well-suited for high-precision model training tasks.
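The multiplicative homomorphism attributed to ElGamal above can be checked numerically. The sketch below uses the textbook scheme with a toy prime ($p = 467$, for which $2$ is a generator); these parameters and the function names are assumptions for illustration and offer no real security.

```python
import random

p = 467   # toy prime, for illustration only
g = 2     # a generator of the multiplicative group modulo 467

def keygen():
    x = random.randrange(1, p - 1)        # private key in {1, ..., p - 2}
    h = pow(g, x, p)                      # public component h = g^x mod p
    return x, h

def encrypt(h, m):
    y = random.randrange(1, p - 1)        # fresh ephemeral key per message
    return pow(g, y, p), (m * pow(h, y, p)) % p

def decrypt(x, c):
    c1, c2 = c
    s = pow(c1, x, p)                     # shared secret s = c1^x mod p
    return (c2 * pow(s, -1, p)) % p       # multiply by the inverse of s (Python 3.8+)

x, h = keygen()
ca, cb = encrypt(h, 6), encrypt(h, 7)

# Component-wise product of ciphertexts encrypts the product of the plaintexts.
product = (ca[0] * cb[0] % p, ca[1] * cb[1] % p)
print(decrypt(x, product))
```

The product is recovered correctly only while it stays below $p$; larger values wrap around modulo $p$.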

3.2. Secure Multi-Party Computation

Secure multi-party computation (SMPC) enables multiple participants to collaboratively perform a computational task while preserving the confidentiality of their individual private data. That is, there are n participants, $P_1, \ldots, P_n$, in a mutually untrusting network, each holding a private input $x_i$. These participants aim to keep their data private from others while collaboratively computing a function to obtain the result $y = f(x_1, \ldots, x_n)$.

SMPC encompasses cryptographic techniques with privacy-preserving properties for multiple parties, including but not limited to secret sharing, homomorphic encryption, bit commitment, zero-knowledge proofs [47], mix networks [48], and oblivious transfer [49]. Current applications of SMPC in federated learning primarily involve integrating fundamental secret-sharing schemes into federated learning systems. Secret sharing is a cryptographic technique where a secret holder $S$ divides a secret $m$ into $n$ parts and distributes them to a group of participants $P = \{P_1, \ldots, P_n\}$; each participant $P_i$ receives a corresponding share $m_i$. Only an authorized subset of participants can collaboratively recover $m$, while unauthorized participants cannot gain any information about the secret. The secret-sharing schemes applied to federated learning are described as follows:

- Shamir’s secret sharing: In 1979, Adi Shamir proposed a secret sharing scheme rooted in the Lagrange interpolation theorem. The scheme leverages polynomial operations over finite fields to securely distribute a secret among multiple parties. Specifically, the Shamir secret sharing scheme for a $(t, n)$ threshold works as follows: To share the secret, $s$, a trusted dealer first selects a large prime number, $p$, such that $p > \max(s, n)$. Then, $t-1$ random elements $a_1, \ldots, a_{t-1}$ are chosen from the finite field $\mathbb{F}_p$ to construct a polynomial $f(x) = s + a_1 x + \cdots + a_{t-1} x^{t-1} \bmod p$, so that $f(0) = s$. Then, the trusted dealer selects $n$ distinct non-zero elements $x_1, \ldots, x_n$ from the finite field $\mathbb{F}_p$, computes $s_i = f(x_i)$, and assigns the share $(x_i, s_i)$ to $P_i$. In the secret reconstruction phase, at least $t$ shares from the participants are required to reconstruct the secret. The reconstruction formula is as follows:

  $$s = f(0) = \sum_{i=1}^{t} s_i \prod_{j=1,\, j \neq i}^{t} \frac{x_j}{x_j - x_i} \bmod p$$

- Verifiable secret sharing: A verifiable secret-sharing scheme is an enhancement of the traditional secret-sharing scheme, addressing the issue of malicious or deceptive behavior that may occur among participants in the traditional approach. This improvement makes the scheme more suitable for federated learning environments, where malicious clients and servers may exist. In scenarios where not all participants are necessarily honest, verifiable secret sharing allows honest participants to use cryptographic tools, such as commitment schemes or zero-knowledge proofs, to validate the consistency of secret shares provided by others. When dishonest participants are present, a verifiable secret-sharing scheme with fault-tolerance mechanisms enables the remaining participants to recover the secret value by adhering to the protocol rules.

- Additive secret sharing: In additive secret sharing, all secret sharing processes are implemented using additive methods, where the secret s is represented as . The secret distributor assigns the secret shares m and d to the participants. Each participant receives their respective secret shares as follows: Participants calculate as follows: The secret can be recovered by using the reconstruction formula for any t participants.
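Shamir's $(t, n)$ scheme described in the list above can be sketched as follows. This is a minimal illustration of the standard construction: the field modulus (the Mersenne prime $2^{61} - 1$), the evaluation points $x_i = i$, and the function names `share` and `reconstruct` are assumptions made for the example.

```python
import random

P = 2**61 - 1   # a Mersenne prime used as the field modulus (assumed parameter)

def share(secret, t, n):
    """Split `secret` into n shares such that any t of them reconstruct it."""
    coeffs = [secret] + [random.randrange(P) for _ in range(t - 1)]

    def f(x):
        # Evaluate the degree-(t-1) polynomial with f(0) = secret.
        return sum(a * pow(x, k, P) for k, a in enumerate(coeffs)) % P

    return [(x, f(x)) for x in range(1, n + 1)]

def reconstruct(shares):
    """Lagrange interpolation at x = 0 over the field of integers modulo P."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = num * xj % P
                den = den * (xj - xi) % P
        secret = (secret + yi * num * pow(den, -1, P)) % P
    return secret

shares = share(12345, t=3, n=5)
print(reconstruct(shares[:3]) == 12345)   # any three shares suffice
print(reconstruct(shares[2:]) == 12345)
```

This threshold property is what lets a protocol tolerate clients that drop out: the secret remains recoverable as long as at least t share holders stay online.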

The above secret sharing schemes each present specific advantages and limitations when applied in federated learning. Verifiable secret sharing schemes can detect malicious clients, whereas Shamir’s secret sharing and additive secret sharing lack this capability. Shamir’s secret sharing supports both addition and multiplication operations and generally provides acceptable computational efficiency. Additive secret sharing is generally more efficient but typically supports only addition; implementing multiplication requires additional mechanisms. Verifiable secret sharing offers strong security against malicious clients, but it typically incurs significantly higher computational and communication overhead.
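The efficiency of additive sharing for summation can be seen in a small secure-sum sketch. It assumes the common textbook formulation in which a value is split into random shares that sum to it modulo a fixed modulus; the modulus `Q`, the all-to-all share exchange, and the function names are assumptions for illustration and are not taken from a specific protocol in the literature.

```python
import random

Q = 2**32   # modulus for all share arithmetic (assumed parameter)

def split(value, n):
    """Split `value` into n random shares that sum to it modulo Q."""
    shares = [random.randrange(Q) for _ in range(n - 1)]
    shares.append((value - sum(shares)) % Q)
    return shares

def secure_sum(values):
    n = len(values)
    # Row i holds the n shares that client i sends out (one per client).
    sent = [split(v, n) for v in values]
    # Client j adds the shares it received; a single share reveals nothing about a value.
    partial = [sum(sent[i][j] for i in range(n)) % Q for j in range(n)]
    # Publishing only the partial sums reveals the total and nothing else.
    return sum(partial) % Q

client_updates = [5, 17, 20]   # hypothetical integer-encoded updates
print(secure_sum(client_updates))
```

Only additions and random number generation are needed, which is why this style of sharing is cheap; in this simple form every share is required, so a dropped client must be handled by additional mechanisms.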

3.3. Differential Privacy

Differential privacy (DP) [50], introduced by Dwork, is a mathematical framework for quantifying privacy, specifically designed to address the issue of information leakage in statistical databases. DP assumes a randomized algorithm $\mathcal{M}$ and two neighboring datasets, $D$ and $D'$, differing by only one record. Algorithm $\mathcal{M}$ satisfies $(\epsilon, \delta)$-DP if it adheres to the following inequality:

$$\Pr[\mathcal{M}(D) \in S] \le e^{\epsilon} \Pr[\mathcal{M}(D') \in S] + \delta$$

where $\epsilon$ is the privacy budget, $\Pr[\cdot]$ denotes the probability, $S$ is any subset of the possible outputs of the algorithm $\mathcal{M}$, and $\delta$ is a relaxation term that bounds the probability with which the $\epsilon$ guarantee may fail. The smaller $\epsilon$ is, the more indistinguishable the outputs of $\mathcal{M}$ on $D$ and $D'$ are, resulting in a higher privacy level but lower utility.

The primary method for achieving DP involves adding noise, which is randomized based on the input or output to obscure the actual data and ensure privacy. Sensitivity plays an important role in determining the appropriate noise level to add. It is formally defined as follows:

$$\Delta f = \max_{D, D'} \lVert f(D) - f(D') \rVert_p$$

where $f$ is the query function, and $p$ represents the norm type of the vector. The noise generation mechanisms applied in federated learning are elaborated as follows:

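- Laplace mechanism [51]: DP is achieved by adding noise drawn from the Laplace distribution to the input or output. The probability density of the Laplace distribution with scale $b$ is as follows: $$\mathrm{Lap}(x \mid b) = \frac{1}{2b} \exp\left(-\frac{|x|}{b}\right)$$ The sensitivity of the query function in the Laplace mechanism is defined as follows: $$\Delta_1 f = \max_{D, D'} \| f(D) - f(D') \|_1$$ Based on the above, the definition of Laplace DP can be stated as follows: Assume a query function $f: D \to \mathbb{R}^d$ and let $\mathcal{M}(D) = f(D) + \mathrm{Lap}(\Delta_1 f / \epsilon)$, where $\mathrm{Lap}(\Delta_1 f / \epsilon)$ represents Laplace random noise with scale $\Delta_1 f / \epsilon$ added independently to each coordinate. Then, the randomized algorithm $\mathcal{M}$ satisfies $\epsilon$-differential privacy.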

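- Gaussian mechanism [52]: The Gaussian mechanism is used to achieve DP by adding Gaussian noise to the input or output. The Gaussian distribution is mathematically defined as follows: $$\mathcal{N}(x \mid \mu, \sigma^2) = \frac{1}{\sqrt{2\pi}\,\sigma} \exp\left(-\frac{(x - \mu)^2}{2\sigma^2}\right)$$ The sensitivity of the query function in the Gaussian mechanism is defined as follows: $$\Delta_2 f = \max_{D, D'} \| f(D) - f(D') \|_2$$ Based on the above, the definition of Gaussian differential privacy can be stated as follows: Assume a query function $f: D \to \mathbb{R}^d$ and let $\mathcal{M}(D) = f(D) + \mathcal{N}(0, \sigma^2 I)$, where, for any $\epsilon \in (0, 1)$ and $\delta \in (0, 1)$, $\sigma \ge \sqrt{2 \ln(1.25/\delta)}\, \Delta_2 f / \epsilon$. Then, the randomized algorithm $\mathcal{M}$ satisfies $(\epsilon, \delta)$-differential privacy.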

- Exponential mechanism [53]: The exponential mechanism is designed for non-numerical DP, and its sensitivity is defined as follows: $$\Delta q = \max_{D, D', r} | q(D, r) - q(D', r) |$$ where $D$ is the dataset, $D'$ is a neighboring dataset, $q$ is the quality function that evaluates the quality score of each output result on the dataset, and $r$ is the output result. When the randomized algorithm $\mathcal{M}$ outputs $r$ with a probability proportional to $\exp\left(\frac{\epsilon\, q(D, r)}{2 \Delta q}\right)$, then $\mathcal{M}$ satisfies $\epsilon$-differential privacy.

Each of the aforementioned noise mechanisms applied in federated learning has a distinct focus. The Laplace mechanism allows for direct noise control and is suitable for parameter updates and gradient uploads; however, it introduces relatively high noise, which can significantly degrade model accuracy. The Gaussian mechanism produces smoother noise compared to the Laplace mechanism, but requires careful calibration of the sensitivity parameter. The exponential mechanism is suitable for discrete selection problems and is often applied in scenarios involving categorical outputs or limited candidate sets. However, it requires the design of a well-defined quality function to guide the selection process.
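The three mechanisms can be sketched in a few lines of standard-library Python. This is a minimal illustration under stated assumptions, not a vetted DP library: the function names are hypothetical, the Gaussian calibration uses the common bound $\sigma = \sqrt{2 \ln(1.25/\delta)}\,\Delta f / \epsilon$ (usually stated for $\epsilon < 1$), and the pseudo-random generator is not suitable for production privacy guarantees.

```python
import math
import random


def laplace_mechanism(value, sensitivity, epsilon, rng=random):
    """Add Laplace noise with scale sensitivity / epsilon to a scalar."""
    scale = sensitivity / epsilon
    # The difference of two i.i.d. exponentials with mean `scale`
    # is Laplace-distributed with location 0 and scale `scale`.
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return value + noise


def gaussian_sigma(sensitivity, epsilon, delta):
    """Noise standard deviation from the classical (epsilon, delta) bound."""
    return math.sqrt(2.0 * math.log(1.25 / delta)) * sensitivity / epsilon


def gaussian_mechanism(value, sensitivity, epsilon, delta, rng=random):
    """Add zero-mean Gaussian noise calibrated by gaussian_sigma."""
    return value + rng.gauss(0.0, gaussian_sigma(sensitivity, epsilon, delta))


def exponential_mechanism(candidates, quality, sensitivity, epsilon, rng=random):
    """Pick a candidate with probability proportional to
    exp(epsilon * quality / (2 * sensitivity))."""
    scores = [quality(r) for r in candidates]
    best = max(scores)  # subtract the maximum for numerical stability
    weights = [math.exp(epsilon * (s - best) / (2.0 * sensitivity)) for s in scores]
    return rng.choices(candidates, weights=weights, k=1)[0]
```

In a federated setting, `value` would typically be one coordinate of a clipped gradient or model update, with the clipping bound determining `sensitivity`.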

3.4. Blockchain

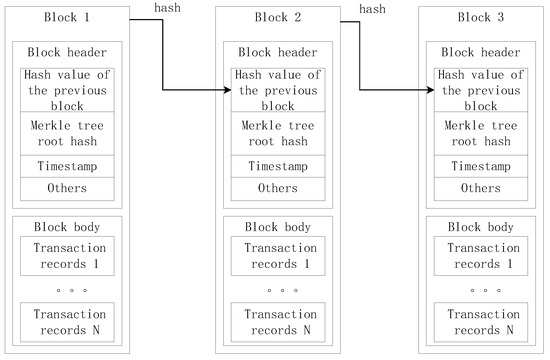

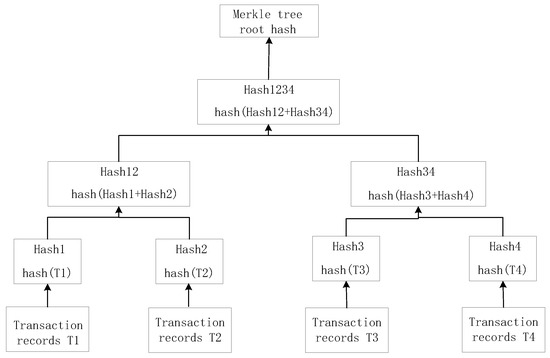

A blockchain is an append-only distributed ledger designed to record transactions among participants, ensuring immutability and resistance to tampering. The blockchain structure is viewed as a linear chain, with transactions appended in batches of blocks. Each block contains transaction data and block header information, and is linked to its immediate predecessor to form the chain. The block header comprises the previous block hash, the Merkle tree root hash, and a timestamp. The structure of the block is illustrated in Figure 7, and the Merkle tree structure is illustrated in Figure 8, using four transactions as examples.

Figure 7.

The structure of the block.

Figure 8.

The structure of a Merkle tree is illustrated using four transaction records as examples.
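The block header fields and the four-transaction Merkle tree described above can be sketched as follows. This is an illustrative Python sketch, not the format of any particular blockchain: the use of SHA-256, the rule of duplicating the last node on odd levels, and the names `merkle_root` and `block_header` are assumptions.

```python
import hashlib


def sha256(data):
    """Return the SHA-256 digest of a byte string."""
    return hashlib.sha256(data).digest()


def merkle_root(transactions):
    """Hash the transactions pairwise, level by level, until one root remains."""
    if not transactions:
        raise ValueError("at least one transaction is required")
    level = [sha256(tx) for tx in transactions]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])  # assumed convention for odd-sized levels
        level = [sha256(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0]


def block_header(prev_hash, transactions, timestamp):
    """Assemble the three header fields named in the text."""
    return {
        "prev_hash": prev_hash.hex(),
        "merkle_root": merkle_root(transactions).hex(),
        "timestamp": timestamp,
    }
```

Because the root depends on every leaf, changing any single transaction changes the Merkle root and therefore the header, which is what makes tampering detectable.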

Blockchain provides a unified quantitative benchmark for federated learning, including evaluation metrics for model contribution (accuracy gain), data quality (label accuracy), and training behavior (convergence curve). These standards are enforced through smart contracts, ensuring that all participants collaborate on a fair and transparent basis, thereby enhancing both system credibility and model performance.

The consensus mechanism of the blockchain is the core technology that ensures data consistency among network nodes. It enables agreement on the blockchain state through a set of protocols. The consensus algorithm, as the core mathematical method of the mechanism, ensures that all nodes agree on each new block added to the blockchain. The consensus algorithms used in federated learning are as follows:

- Proof-of-work (PoW): Originally implemented in Bitcoin, the PoW algorithm addresses the issue of consensus through a computational puzzle that is difficult to solve but easy for others to verify. The core idea is to demonstrate validity through computational effort. PoW uses the process of solving puzzles to determine member selection. While PoW enables blockchain membership selection, it demands significant computation, leading to high resource consumption and low transaction throughput [54,55,56].

- Proof-of-stake (PoS): The core idea of PoS [57] is that a node demonstrates ownership of virtual resources by staking assets. This ownership determines its eligibility to participate in the consensus process and its level of influence. However, the security of PoS depends on the distribution of assets and places nodes with more assets in a more influential position in system operations.

- Delegated proof-of-stake (DPoS): DPoS [58] is a variant of proof-of-stake that introduces a democratic, voting-based membership selection process. Nodes holding stakes vote to select delegate nodes, which then verify and produce blocks on their behalf.

- Practical Byzantine fault tolerance (PBFT): PBFT [59] is a mechanism that remains operational even in the presence of faulty nodes. The PBFT algorithm consists of three primary phases, as follows: the pre-prepare phase, the prepare phase, and the commit phase. The pre-prepare and prepare phases establish a total order of messages, while the prepare and commit phases guarantee consistent request ordering across all nodes.

The above consensus algorithms are applied to federated learning primarily to enhance decentralization, auditability, participant management, and fault tolerance against malicious clients. The proof-of-work consensus algorithm provides strong tamper-resistance and ensures the integrity of model uploads, offering high security. However, its high latency and computational energy consumption make it unsuitable for real-time federated learning tasks. The proof-of-stake and delegated proof-of-stake algorithms improve training and consensus efficiency compared to proof-of-work, making them more suitable for large-scale federated learning scenarios. However, they offer relatively limited decentralization. The practical Byzantine fault tolerance algorithm can resist Byzantine attacks and is suitable for scenarios involving a small number of trusted nodes. However, it incurs high communication overhead, which limits its scalability.
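The asymmetry behind proof-of-work, where a puzzle is costly to solve but cheap to verify, can be illustrated with a small hash puzzle. The sketch below is a toy in Python: the puzzle format (SHA-256 over the block data plus an 8-byte nonce, compared against a target with `difficulty_bits` leading zero bits) and the names `mine` and `verify` are assumptions, not the puzzle of any specific blockchain.

```python
import hashlib


def _digest_value(block_data, nonce):
    """Interpret SHA-256(block_data || nonce) as a big-endian integer."""
    digest = hashlib.sha256(block_data + nonce.to_bytes(8, "big")).digest()
    return int.from_bytes(digest, "big")


def mine(block_data, difficulty_bits):
    """Search for the first nonce whose digest falls below the target."""
    target = 1 << (256 - difficulty_bits)
    nonce = 0
    while _digest_value(block_data, nonce) >= target:
        nonce += 1
    return nonce


def verify(block_data, nonce, difficulty_bits):
    """Check a claimed solution with a single hash evaluation."""
    return _digest_value(block_data, nonce) < (1 << (256 - difficulty_bits))
```

Each additional difficulty bit doubles the expected mining work while verification stays at one hash, which is the source of the high latency and energy cost noted above for federated learning tasks.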

4. Aggregation Techniques for Federated Learning

Federated learning is a distributed machine learning approach that generates a global model by aggregating local models trained by multiple clients while protecting client data privacy.

In centralized federated learning, model aggregation is performed by a server. In this process, each client trains the model on its dataset and uploads the training results to the server. The exchanged information may include model parameters, gradients, or the model itself. Finally, the server integrates all models to generate the global model, and this information is used for subsequent model updates.

In decentralized federated learning, model aggregation occurs through client-to-client communication, where one client initiates cooperation with its neighbors to aggregate models. The process of merging locally generated models into a global model in federated learning is called aggregation.

The aggregation technique in federated learning involves more than simply merging models for updates; other metrics, such as dataset size, model performance, and loss function, are also considered in aggregation algorithms. During federated learning, the aggregation algorithm plays a crucial role in integrating results and enhancing training efficiency.

4.1. Fundamental Aggregation Algorithms

Traditional aggregation algorithms have been proposed to address communication efficiency and privacy preservation issues in federated learning. These traditional aggregation algorithms do not optimize the aggregation mechanism itself; instead, they enhance the efficiency and accuracy of model training by employing mathematical techniques and tuning various parameters. These algorithms are usually integrated into the federated learning framework as foundational algorithms. The commonly used traditional aggregation algorithms are as follows:

- FedAvg: The FedAvg [60] algorithm is a classic federated learning aggregation algorithm, where a subset of clients is randomly selected for aggregation in each training round. During aggregation, client parameters are weighted and averaged, with weights determined by the client’s data volume relative to the total data volume. FedAvg is communication-efficient, tolerates non-independent and identically distributed (non-IID) data, and achieves high accuracy. However, when the data distribution is highly non-IID, model convergence slows down. Furthermore, FedAvg lacks security mechanisms and cannot ensure participant trustworthiness. FedAvg updates the global model using the following equation: $$w_{t+1} = \sum_{k \in S_t} p_k\, w_{t+1}^{k}, \qquad p_k = \frac{n_k}{\sum_{j \in S_t} n_j}$$ where $w_{t+1}$ is the aggregated global model, $S_t$ represents the set of selected clients, $w_{t+1}^{k}$ denotes the locally updated model for client $k$ after local training, and $p_k$ is the weight factor, with $n_k$ the number of samples held by client $k$.

- FedProx: FedProx [61] generalizes and reparameterizes FedAvg to address local optimization challenges in stochastic gradient descent (SGD)-based algorithms. It introduces a correction term to the client-side loss function, improving model performance and enhancing convergence speed. The correction term is defined as $\frac{\mu}{2} \| w - w_t \|^2$, the squared L2 norm of the difference between the local model $w$ and the global model $w_t$, where $\mu$ is the penalty constant for the regularization term, designed to penalize clients with large deviations from the global model, thereby constraining the behavior of clients participating in training.

- Scaffold: Scaffold [62] is an update process that corrects both the global and local models by calculating the differences between the server-side and client-side control variables. This method effectively addresses the client drift problem caused by data heterogeneity in FedAvg. The model update in Scaffold consists of the following four steps:

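- The client-side local model is updated as follows: $$y_i \leftarrow y_i - \eta_l \left( g_i(y_i) - c_i + c \right)$$

- The client-side control variable is updated as follows: $$c_i^{+} \leftarrow c_i - c + \frac{1}{K \eta_l} (x - y_i)$$

- The server global model is updated as follows: $$x \leftarrow x + \frac{\eta_g}{|S|} \sum_{i \in S} (y_i - x)$$

- The server control variable is updated as follows: $$c \leftarrow c + \frac{1}{N} \sum_{i \in S} (c_i^{+} - c_i)$$ where $x$ is the global model, $y_i$ is the local model of client $i$, $c$ and $c_i$ are the server-side and client-side control variables, $\eta_l$ and $\eta_g$ represent the local and global learning rates, $K$ denotes the number of local update steps, $g_i$ is the gradient computation function of client $i$, $S$ stands for the selected client set, and $N$ is the total number of clients.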

- FedBN: FedBN [63] trains a local model on each client and incorporates a batch normalization (BN) layer for normalization, whose parameters are kept local and excluded from aggregation. FedBN is designed to address the heterogeneity of federated learning data, particularly the feature drift issue. For every layer $l$ that is not a BN layer, the FedBN aggregation is as follows: $$w_{t+1,k}^{(l)} \leftarrow \frac{1}{|P|} \sum_{j \in P} w_{t+1,j}^{(l)}$$ where $t$ is the training round, $k$ is the client index, $l$ is the layer index of the neural network, and $P$ is the set of clients.

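- FedDF: FedDF [64] applies the concept of distillation to model fusion. Each client trains a local model, which is subsequently fused through distillation. FedDF is designed to address model heterogeneity and data heterogeneity with a certain level of robustness. The FedDF distillation algorithm is as follows: $$x_{t,j} = x_{t,j-1} - \eta\, \frac{\partial\, \mathrm{KL}\left( \sigma\left( \frac{1}{|S_t|} \sum_{k \in S_t} f(x_t^k, d) \right),\ \sigma\left( f(x_{t,j-1}, d) \right) \right)}{\partial x_{t,j-1}}$$ where $\mathrm{KL}$ represents the Kullback–Leibler divergence, $\sigma$ is the softmax function, $\eta$ is the step size, $x_{t,j}$ is the server model after $j$ distillation steps in round $t$, $S_t$ is the set of selected clients, and $f(x_t^k, d)$ denotes the output of the $k$-th client model in the $t$-th round at the $d$-th batch.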
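As a rough illustration of two of the algorithms in the list above, the following Python sketch computes the FedAvg data-size-weighted average and the FedProx correction term on plain lists of floats. The function names are hypothetical, and the penalty uses the commonly stated form $\frac{\mu}{2}\|w - w_t\|^2$, which is an assumption about the exact expression intended here.

```python
def fedavg(client_models, client_sizes):
    """Average client parameter vectors, weighted by local dataset size."""
    total = sum(client_sizes)
    dim = len(client_models[0])
    return [
        sum(n * w[j] for w, n in zip(client_models, client_sizes)) / total
        for j in range(dim)
    ]


def fedprox_penalty(local_model, global_model, mu):
    """Proximal term (mu / 2) * ||w - w_t||^2 added to the client loss."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(local_model, global_model))
    return 0.5 * mu * squared_distance


def fedprox_loss(task_loss, local_model, global_model, mu):
    """Client objective: task loss plus the proximal penalty."""
    return task_loss + fedprox_penalty(local_model, global_model, mu)
```

With two clients holding 1 and 3 samples, `fedavg([[1.0, 2.0], [3.0, 4.0]], [1, 3])` pulls the result toward the larger client. Setting `mu` to 0 reduces the FedProx objective to the plain task loss used by FedAvg.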

4.2. Secure Aggregation Algorithms

Although federated learning does not disclose local data, sensitive information may still leak during model exchanges. Secure aggregation algorithms integrate privacy-preserving mechanisms with aggregation to ensure the confidentiality of model information. This section introduces some of the commonly used secure aggregation algorithms.

4.2.1. Secure Aggregation Based on Homomorphic Encryption

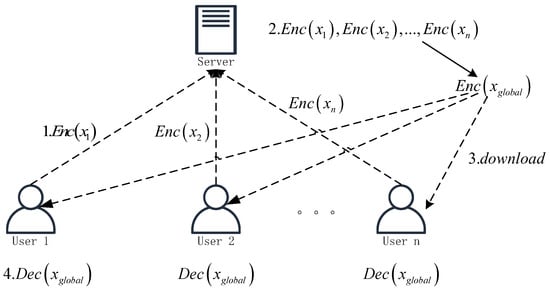

In federated learning, honest-but-curious servers may attempt to infer private data from the model information uploaded by users. Homomorphic encryption allows servers to perform aggregation without decryption, preventing access to the original data. Secure aggregation based on homomorphic encryption typically involves a four-step process:

- Each user locally encrypts the trained model using homomorphic encryption to obtain $E(w_i)$, where $w_i$ represents the trained model data of user $i$. The encrypted data is then uploaded to the server.

- The server performs the aggregation operation directly on the encrypted data to obtain the global model.

- The user downloads the global model.

- The user decrypts the global model locally using their private key.

The schematic diagram of the aggregation process is shown in Figure 9.

Figure 9.

Schematic diagram of the homomorphic encryption-based secure aggregation process.
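The four-step process can be sketched with a toy additively homomorphic Paillier cryptosystem written in plain Python. This is a didactic sketch under loud assumptions: the fixed primes give a key far too small to be secure, the generator is fixed to $g = n + 1$, updates are encoded as fixed-point integers with an assumed `SCALE`, all users are assumed to share one key pair that the server does not hold, and every function name is hypothetical.

```python
import math
import secrets

SCALE = 1000  # assumed fixed-point precision for encoding float updates


def keygen(p=2**31 - 1, q=2**61 - 1):
    """Toy key generation from two fixed Mersenne primes (insecure key size)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)
    mu = pow(lam, -1, n)  # valid because g = n + 1 (requires Python 3.8+)
    return n, (lam, mu)


def encrypt(n, m):
    """Encrypt integer m as (1 + m*n) * r^n mod n^2."""
    n2 = n * n
    r = secrets.randbelow(n - 1) + 1
    while math.gcd(r, n) != 1:
        r = secrets.randbelow(n - 1) + 1
    return (1 + (m % n) * n) % n2 * pow(r, n, n2) % n2


def decrypt(n, priv, c):
    """Decrypt and map the result back to a signed integer."""
    lam, mu = priv
    m = (pow(c, lam, n * n) - 1) // n * mu % n
    return m - n if m > n // 2 else m


def encrypt_update(n, update):
    """Step 1: a user encrypts each coordinate of its model update."""
    return [encrypt(n, round(x * SCALE)) for x in update]


def aggregate(n, encrypted_updates):
    """Step 2: the server multiplies ciphertexts, which adds the plaintexts."""
    total = encrypted_updates[0]
    for enc in encrypted_updates[1:]:
        total = [a * b % (n * n) for a, b in zip(total, enc)]
    return total


def decrypt_average(n, priv, encrypted_sum, num_clients):
    """Steps 3 and 4: a user downloads the encrypted sum and decrypts it."""
    return [decrypt(n, priv, c) / SCALE / num_clients for c in encrypted_sum]
```

The server only ever handles ciphertexts, so it learns nothing about individual updates in this sketch. It could still drop or alter ciphertexts, which is the gap that verifiable schemes aim to close.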

Secure aggregation based on homomorphic encryption in federated learning is realized through underlying cryptosystems. Currently, there are three main types of encryption schemes based on cryptosystems, as follows:

Partially homomorphic encryption. Partially homomorphic encryption is now widely used in secure aggregation for federated learning [65,66,67,68,69,70,71,72,73,74,75,76,77,78,79,80,81,82]. Among these, Paillier cryptosystems are employed in studies [65,66,67,68,69,70,71,72], where various schemes process data differently to improve model performance and reduce communication overhead. For instance, in [71], gradients are selected and quantized using batch cropping to reduce communication overhead. Experiments on the MNIST and CIFAR-10 datasets with 50 edge nodes demonstrate that the proposed scheme achieves higher accuracy than plaintext models, with the single aggregation time on the MNIST dataset ranging from 0.01 s to 0.10 s, indicating a significant improvement in communication efficiency. In [72], a federated learning scheme that integrates gradient compression and homomorphic encryption techniques is proposed to reduce the communication overhead on the client side through gradient compression, while preventing gradient privacy leakage. Security is enhanced by introducing noise, encrypting, and signing the aggregated model before uploading it to the aggregation server. Experimental results on the CIFAR-10 dataset show that gradient compression reduces data transmission and helps preserve privacy, albeit at the cost of slightly reduced accuracy. When the compression rate reaches 50%, the model achieves an accuracy of 89.12%, which is comparable to the uncompressed model’s accuracy of 89.15%.

A lightweight secure aggregation scheme based on ElGamal homomorphic encryption is proposed in [73]. This scheme integrates federated learning into the deep learning of medical models for IoT-based healthcare systems. Employing cryptographic primitives such as masking and homomorphic encryption enhances local model protection, preventing attackers from inferring private medical data through model reconstruction or inversion attacks. Experimental results show that this approach improves communication efficiency by over twofold compared to baseline algorithms and achieves 99.1% accuracy on MNIST after 506 training rounds.

The scheme presented in [76] employs the Joye–Libert partially homomorphic encryption system to implement an additive homomorphic encryption scheme. Its core involves secure multi-party global gradient computation based on active user data, demonstrating low communication overhead and robustness against user dropouts.

To mitigate risks posed by malicious participants colluding with the server to compromise other participants, a federated learning framework combining the Rivest–Shamir–Adleman (RSA) algorithm with Paillier encryption is proposed in [77]. In terms of accuracy, this scheme achieves performance equivalent to unencrypted models after 30 training rounds on the MNIST dataset. Regarding computational overhead, it introduces only a linear increase proportional to the number of parameters.

Fully Homomorphic Encryption. In [83,84,85,86,87], fully homomorphic encryption is also performed on the global model for federated learning to further protect the global model rather than just the local model. In this case, the global model is encrypted for the user, who needs to train their model on the ciphertext, which requires multiplicative homomorphic operations in addition to additive homomorphic operations. This necessitates fully homomorphic encryption systems capable of supporting these operations.

Hybrid Scheme. Refs. [81,82] propose combining homomorphic encryption with other privacy-preserving mechanisms to make federated learning more adaptable to various scenarios. For example, Ref. [81] combines differential privacy with homomorphic encryption, proposing a new multi-party learning framework for privacy preservation in vertically partitioned environments. The core idea leverages the functional mechanism, addressing the challenge of applying differential privacy in vertically partitioned scenarios. This is achieved by adding noise to the objective function. Experiments demonstrate that for linear regression, the scheme achieves a mean square error of 0.007, which is very close to the 0.0044 of privacy-preserving linear regression. For logistic regression, the scheme’s utility deteriorates as the privacy budget decreases, achieving an accuracy of 0.8132 ± 0.0231 when the privacy budget is 10, approximating privacy-preserving logistic regression.

In [82], secure multiparty computation is combined with homomorphic encryption to implement the scheme in a cloud-based federated learning service scenario. However, the appropriate number of clients must be carefully determined, as the communication overhead increases when the server and clients use encrypted model vectors to protect model parameters. Experiments show that the computational overhead of the baseline algorithm in the same scenario is 2.3 times higher than this scheme. The key size of homomorphic encryption significantly impacts runtime. Combining it with other privacy-preserving mechanisms can result in high computational overhead, highlighting the need to balance security and computational efficiency.

Partially homomorphic encryption offers faster encryption and decryption, low communication overhead, and minimal impact on model accuracy. For example, Refs. [73,76] demonstrate lower communication costs and computational overhead compared to [83], while achieving higher model accuracy. However, Ref. [76] does not support multiplication operations and is, therefore, unsuitable for complex model updates.

Generally, fully homomorphic encryption incurs longer training times per round and higher communication costs, making it unsuitable for edge deployments. Nevertheless, it provides strong security guarantees, supports complex computations, and ensures controllable accuracy degradation. For example, Ref. [83] defends against N-1 attacker inference, reconstruction attacks, and membership inference, whereas Ref. [73] fails to defend against malicious clients tampering with model updates.

Hybrid encryption schemes can mitigate accuracy loss through mechanisms such as differentially private balancing. They typically incur moderate communication costs, which can be further reduced using compression or pruning techniques. For example, Ref. [81] incurs lower communication costs and computational overhead than [76], but achieves lower model accuracy. However, these schemes tend to be more complex to implement and less practical in real-world deployment scenarios.

In this paper, secure aggregation schemes based on homomorphic encryption are categorized into partially and fully homomorphic encryption, as summarized in Table 1.

Table 1.

Secure aggregation schemes based on homomorphic encryption.

4.2.2. Secure Aggregation Based on Secure Multi-Party Computation

Secure multi-party computation addresses the collaborative computation challenge by splitting each local model into multiple secret shares and distributing them among participants. The aggregated model is then computed using these secret shares, ensuring privacy throughout the process. A schematic diagram of secure aggregation based on secure multi-party computation is shown in Figure 10.

Figure 10.

Schematic of secure aggregation based on secure multi-party computation.

Some previous works have investigated the privacy preservation of secure multi-party computation for machine learning. Bonawitz et al. [88] first introduced the concept of secure aggregation into federated learning by analyzing the security of prior methods and constructing SecAgg, a secure aggregation protocol based on Shamir’s secret sharing. Currently, secure aggregation for multi-party computation can be categorized into three types depending on the secret sharing scheme:
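The masking idea at the core of SecAgg-style protocols can be illustrated as follows: every pair of clients shares a seed, one client adds the mask expanded from that seed and the other subtracts it, so all masks cancel in the server's sum. This Python sketch is a simplification under stated assumptions: seeds are handed out by a hypothetical `setup_pair_seeds` helper rather than by key agreement, `random.Random` stands in for a cryptographic pseudo-random generator, updates are already quantized to integers modulo `MOD`, and the Shamir-based dropout recovery of the full protocol is omitted.

```python
import random

MOD = 2**32  # assumed modulus for quantized model updates


def setup_pair_seeds(client_ids, rng):
    """Give every pair of clients a shared seed (stand-in for key agreement)."""
    seeds = {i: {} for i in client_ids}
    for a in client_ids:
        for b in client_ids:
            if a < b:
                seed = rng.getrandbits(64)
                seeds[a][b] = seed
                seeds[b][a] = seed
    return seeds


def prg(seed, dim):
    """Expand a seed into a mask vector (not cryptographically secure)."""
    rng = random.Random(seed)
    return [rng.randrange(MOD) for _ in range(dim)]


def mask_update(client_id, update, pair_seeds):
    """Add the pairwise mask if client_id is the smaller id, else subtract it."""
    masked = list(update)
    for other, seed in pair_seeds[client_id].items():
        sign = 1 if client_id < other else -1
        mask = prg(seed, len(update))
        masked = [(m + sign * r) % MOD for m, r in zip(masked, mask)]
    return masked


def aggregate(masked_updates):
    """Server-side sum; the pairwise masks cancel modulo MOD."""
    dim = len(masked_updates[0])
    return [sum(u[j] for u in masked_updates) % MOD for j in range(dim)]
```

Sending short seeds instead of full random vectors is also what keeps the communication cost of such protocols manageable for high-dimensional models.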

Shamir’s secret sharing. Since Shamir’s secret sharing was first applied to federated learning, several studies [89,90,91,92,93,94] have focused on improving aggregation schemes based on it. In [89], a variant of the SecAgg secure aggregation protocol was proposed. The core idea is to model node sharing as a sparse random graph instead of the complete graph used in SecAgg, so that secret sharing is performed with only a subset of clients. Experiments show that this protocol achieves comparable reliability in learning algorithms and data confidentiality while requiring only 20–30% of the resources needed by SecAgg.

To address user dropout and mitigate attacks by semi-honest and active malicious adversaries, Ref. [90] proposed a scalable privacy-preserving aggregation scheme. Theoretical and experimental analyses demonstrate that the scheme tolerates participant dropout at any time and defends against such attacks through appropriate system parameter configurations. Moreover, the runtime improves by a factor of 6.37 compared to existing schemes.

In [91], a scheme was introduced for dynamically designing the content and objects of secret sharing. This approach enables participants to incorporate secret gradient sharing within their respective groups and significantly reduces communication costs by replacing high-dimensional random vectors with pseudo-random seeds. Experiments indicate that the scheme ensures robust privacy preservation even with honest-but-curious servers and participant collusion. Regarding communication costs, training a LeNet network with 136,886 parameters on the MNIST dataset incurs a communication cost of , compared to for the baseline algorithm.

To protect decentralized learning models from inference attacks and privacy leakage, Ref. [92] proposed a secure aggregation scheme based on secret sharing for decentralized learning. This work provides valuable insights for designing more efficient decentralized learning architectures. Experimental results reveal that this scheme requires only 0.21 s of computation time per iteration on the MNIST dataset, compared to 59.54 s for traditional schemes.

For long-term privacy preservation in federated learning, Ref. [93] proposed a privacy-preserving aggregation protocol designed for multiple training rounds. Experimental results show that the protocol enhances privacy preservation while increasing the communication cost by only 0.04 times in a system with 100,000 users compared to previous schemes.

In [94], federated learning is applied to the healthcare domain to predict patient severity. Shamir’s secret sharing is employed to ensure secure communication between clients and the edge aggregator. A dynamic edge thresholding mechanism is integrated, enabling an adaptive update strategy that can accept or reject model updates in real time. Experiments conducted on real-world ICU datasets (MIMIC-III) show that encryption of model updates increases computation time by approximately 50–60% per round and memory consumption by 35–40%. However, these overheads remain within a feasible range for real-world deployment. The accuracy degradation compared to the baseline scenario without secure multi-party computation is limited to 1–2 percentage points.

Verifiable secret sharing. Most aggregation schemes based on secure multi-party computation focus on protecting local models, without considering the security of the global model. This limitation allows malicious servers to tamper with the global model, potentially reducing training efficiency and increasing the risk of exposing users’ sensitive information. To verify the correctness of aggregation results returned by servers, several studies [95,96,97,98,99] have proposed methods based on verifiable secret sharing schemes.

The first privacy-preserving and verifiable federated learning framework, VerifyNet, was introduced in [95]. It ensures the confidentiality of users’ local gradients during the federated learning process through a double-masking protocol. Additionally, it allows the cloud server to provide proof of the correctness of aggregation results, ensuring they are not tampered with. Experimental results show that training a convolutional neural network on the MNIST dataset, with 500 users and a total of 500,000 gradients, requires 70 MB per user for a single parameter update iteration.

In [96], a non-interactive, verifiable, and decentralized federated learning scheme was proposed. This scheme decentralizes the process by splitting users’ secret inputs and distributing them to multiple servers. A subset of these servers collaborates to correctly reconstruct the outputs, ensuring the results are not tampered with. Experiments demonstrate that this approach enables distributed aggregation of secret inputs across multiple untrusted servers and outperforms prior methods.

To enhance the efficiency of federated learning while reducing communication, computational, and storage costs for low-resource devices, Refs. [97,98] proposed optimizations that maintain the confidentiality of users’ local gradients and verify aggregation results. Experimental results indicate that the schemes reduce device power consumption due to lightweight computation.

In [99], a verifiable federated learning scheme was designed to protect sensitive training data in industrial IoT environments and prevent malicious servers from returning falsified aggregated gradients. This scheme employs Lagrangian interpolation, carefully setting interpolation points to verify the correctness of aggregated gradients. By combining Lagrangian interpolation with blinding techniques, it achieves secure gradient aggregation. Notably, the scheme maintains a constant validation overhead compared to existing methods, regardless of the number of participants.

Additive secret sharing. The traditional secret sharing scheme uses Shamir’s secret sharing, which splits the secret into multiple shares based on polynomial interpolation and recovers the secret through Lagrange polynomials. However, this algorithm has high computational and communication costs, so some research efforts have focused on lightweight improvements [100,101,102,103,104,105]. Among them, Refs. [100,101] focus on preventing information leakage in centralized federated learning systems and adopt encryption methods that differ from traditional schemes.
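For reference, Shamir’s scheme as summarized above (polynomial-based splitting and Lagrange reconstruction) can be sketched in Python as follows. The field modulus `PRIME` and the names `split` and `reconstruct` are assumptions for illustration. The sketch evaluates a random polynomial of degree t - 1 whose constant term is the secret, so any t shares suffice for recovery.

```python
import secrets

PRIME = 2**61 - 1  # assumed prime field modulus for the sketch


def split(secret, n, t):
    """Create n shares; any t of them reconstruct the secret."""
    coeffs = [secret % PRIME] + [secrets.randbelow(PRIME) for _ in range(t - 1)]

    def evaluate(x):
        acc = 0
        for c in reversed(coeffs):  # Horner's rule
            acc = (acc * x + c) % PRIME
        return acc

    return [(x, evaluate(x)) for x in range(1, n + 1)]


def reconstruct(shares):
    """Lagrange interpolation at x = 0 over the prime field."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = num * (-xj) % PRIME
                den = den * (xi - xj) % PRIME
        # Modular inverse via three-argument pow (requires Python 3.8+).
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret
```

The interpolation step costs more than the single modular sum used in additive sharing, which is consistent with the overhead comparison that motivates the lightweight schemes.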

In [100], a partial encryption method is used, encrypting only the first layer of the local model with additive secret sharing, while the rest is sent directly to the central server. Experiments show that the secret share generation time decreases from 2.7081 s to 0.6951 s and the secret share aggregation time decreases from 0.0019 s to 0.0008 s compared to applying secret sharing to the entire local model.

Ref. [101] focuses on training split models in a vertical federated learning scenario. To address performance degradation caused by straggling clients and privacy leakage from client-embedded information, the FedVS framework for synchronous split vertical federated learning is proposed, which reconstructs the aggregate of user embeddings losslessly by designing an additive secret sharing scheme. Experiments show that this framework guarantees user privacy and improves performance in scenarios with straggling clients compared to baseline methods.

Refs. [102,103] study decentralized federated learning systems based on secure multi-party computation, exploring optimizations from different perspectives to achieve high accuracy, low communication costs, and scalability without compromising privacy.

In [102], the optimization of the additive secret sharing algorithm is considered by replacing the secure summation building block from [106] with the secure summation algorithm from [107], simplifying the protocol while eliminating the need for public key encryption.

Considering client selection prior to aggregation, Ref. [103] proposes a hierarchical mechanism based on secure multi-party computation that restricts model aggregation to a small number of aggregation committee members. Experiments demonstrate a 90% reduction in exchanged messages on the MNIST dataset and a 22% accuracy improvement compared to locally trained models.

In [104], to reduce the computational and communication costs of existing secure aggregation protocols and improve robustness to client dropout, a secure aggregation protocol is proposed with a novel multi-secret sharing scheme based on the Fast Fourier Transform. Experimental results show that this protocol achieves significantly lower computational costs than existing schemes while maintaining comparable communication costs.

In [105], a scheme combining secure multi-party computation and differential privacy techniques is proposed to prevent local and global models from leaking user data information, protecting the privacy of both the computation process and the results. Experimental results show that the scheme is scalable and robust to client dropouts, and when the number of clients reaches 1000, its efficiency is comparable to that of FedAvg.

Shamir’s secret sharing, verifiable secret sharing, and additive secret sharing do not theoretically affect the model training process or the numerical representation of gradients. For example, Refs. [92,97,100] report model accuracies consistent with FedAvg.

In terms of security, verifiable secret sharing offers the highest level of protection, as it guards against malicious clients. Shamir’s secret sharing provides a moderate level of security, while additive secret sharing offers the most basic protection. For example, Ref. [97] can verify aggregation integrity and protect against server tampering, while Ref. [92] tolerates client dropout. By comparison, Ref. [100] offers only basic protection against server–client collusion.

Regarding communication and computational overhead, the order from highest to lowest is as follows: verifiable secret sharing, Shamir’s secret sharing, and additive secret sharing.

In this paper, secure aggregation schemes based on secure multi-party computation are categorized according to the secret sharing schemes they employ, as summarized in Table 2.

Table 2.

Secure aggregation schemes based on secure multi-party computation.

4.2.3. Secure Aggregation Based on Differential Privacy

Differential privacy protects individual records from inferential attacks by introducing random noise to the model data. Typically, the added noise ensures that the risk of privacy leakage remains minimal and acceptable. Unlike cryptographic approaches, differential privacy-based methods generally incur low computational overhead. However, the introduction of noise during the learning process can lead to a reduction in the accuracy of the trained model.

Differential privacy frameworks in federated learning can be classified into three categories, as follows:

- Local differential privacy (LDP): Local differential privacy is designed for scenarios with untrusted servers, where each client adds perturbation noise to its data before sending it to the central server. By ensuring that the added noise satisfies the client’s differential privacy requirements, the client’s privacy remains protected regardless of the actions or behaviors of other clients or the server.

- Global differential privacy (GDP): Global differential privacy is typically employed in scenarios involving a trusted server, where the server adds differential privacy-compliant noise during the aggregation process. This approach protects user privacy while enabling the construction of a more practical model by introducing controlled noise into the global model maintained on the server.

- Distributed differential privacy (DDP): Distributed differential privacy does not require a trusted server and aims to achieve strong privacy guarantees with minimal noise addition. The system must pre-determine the noise budget required for each training round to ensure sufficient privacy protection. In each round, the noise addition task is allocated evenly among clients, each of which adds the minimum necessary noise to perturb its model update. Subsequently, the client’s update is masked before transmission to ensure that the server learns only the aggregated result. Distributed differential privacy typically incurs substantial communication overhead.
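The three frameworks differ mainly in where the noise is added. The sketch below contrasts them for a Gaussian noise target with standard deviation `sigma`; the function name `noisy_sum` and the mode labels are assumptions for illustration, and the masking step that distributed differential privacy requires is deliberately omitted.

```python
import numpy as np

def noisy_sum(updates, mode, sigma, rng):
    """Sum client updates, placing Gaussian noise according to `mode`."""
    n = len(updates)
    shape = updates[0].shape
    if mode == "ldp":
        # Each client adds full-strength noise before upload.
        noisy = [u + rng.normal(0.0, sigma, shape) for u in updates]
        return np.sum(noisy, axis=0)
    if mode == "gdp":
        # A trusted server adds noise once, to the aggregate.
        return np.sum(updates, axis=0) + rng.normal(0.0, sigma, shape)
    if mode == "ddp":
        # Clients split the noise evenly: n shares of variance sigma^2 / n
        # sum to variance sigma^2 in the aggregate.
        local_sigma = sigma / np.sqrt(n)
        noisy = [u + rng.normal(0.0, local_sigma, shape) for u in updates]
        return np.sum(noisy, axis=0)
    raise ValueError(f"unknown mode: {mode}")
```

With `n` clients, the aggregate noise has standard deviation `sigma * sqrt(n)` in the local setting but only `sigma` in the global and distributed settings. This is the sense in which the distributed variant achieves its guarantee with minimal noise, provided the server sees only the masked aggregate.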

Secure aggregation schemes based on differential privacy can be categorized according to their noise generation mechanisms. Existing studies are typically divided into the following three categories:

Laplace mechanism. The schemes in [108,109,110] protect federated learning models with differential privacy based on the Laplace mechanism.

In [108], to prevent attackers from partially reconstructing the original training samples using model parameters, a federated learning scheme that combines model segmentation techniques with differential privacy methods is proposed for mobile edge computing. Experimental results demonstrate that the scheme provides strong privacy guarantees while maintaining high model accuracy. Specifically, training a convolutional neural network on the MNIST dataset with 300 users, 50 communication rounds, and a high perturbation strength still achieves over 85% accuracy.

In [109], recognizing that existing protection methods cannot fully safeguard user privacy during iterative global model updates in federated learning, a scheme combining differential privacy with lightweight encryption is proposed. This scheme perturbs the privacy-sensitive parameters and transmits them in ciphertext form. Experiments training a convolutional neural network on the MNIST dataset show that the scheme ensures strong security in client–server interactions and achieves a model accuracy of 97.8% when the privacy budget and number of users are set to 10 and 100, respectively.

In [110], federated learning is applied in an IoT environment to implement an adaptive gradient Laplace noise addition mechanism. The noise level in each round is determined by a dynamically allocated privacy budget. Experiments are conducted on the Tiny ImageNet and DeepFashion datasets, comparing the proposed method with both an equal privacy budget allocation strategy and a baseline model without privacy protection. The proposed method consistently outperforms the baseline, with the accuracy gap reaching its maximum at 50 iterations.
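As a generic illustration of the Laplace mechanism used by these schemes (not the specific algorithm of [108], [109], or [110]), the sketch below clips a client update in L1 norm and adds Laplace noise with scale equal to the sensitivity divided by the privacy budget. The function name, the clipping strategy, and the replace-one sensitivity bound of `2 * clip_l1` are assumptions.

```python
import numpy as np

def laplace_perturb(update, clip_l1, epsilon, rng):
    """Clip an update to L1 norm `clip_l1`, then add Laplace noise."""
    l1_norm = np.sum(np.abs(update))
    clipped = update * min(1.0, clip_l1 / max(l1_norm, 1e-12))
    # Two clipped updates differ by at most 2 * clip_l1 in L1 norm.
    sensitivity = 2.0 * clip_l1
    scale = sensitivity / epsilon
    return clipped + rng.laplace(0.0, scale, size=update.shape)
```

A smaller `epsilon` gives a larger noise scale, which is the privacy–accuracy trade-off the experiments above report. An adaptive scheme in the spirit of [110] would vary `epsilon` from round to round rather than keep it fixed.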

Gaussian mechanism. The Laplace and Gaussian mechanisms are the two most commonly used mechanisms in differential privacy. However, the mean squared error of the Laplace mechanism is significantly higher than that of other mechanisms, which makes the Gaussian mechanism the most common choice in differential privacy-based federated learning, e.g., [111,112,113,114].

In [111], the privacy budget and communication rounds under convergence constraints are analyzed using an adaptive algorithm. Theoretical analysis is conducted to determine the optimal number of local differentially private stochastic gradient descent iterations between any two consecutive global updates. The scheme dynamically searches for the optimal performance of differentially private federated learning. Experiments on the MNIST, FMNIST, and CIFAR-10 datasets show that, with a privacy budget of 5.25, accuracies of 98.82%, 84.58%, and 57.25% are achieved, respectively.

In [112], the local dataset is pre-partitioned into multiple subsets for parameter updates. The issue of increased parameter sensitivity is addressed through data splitting and parameter averaging operations. Experiments on the FEMNIST dataset, with a training duration of 180,000 s, demonstrate that this approach improves accuracy by 8% compared to the baseline scheme.

In [113], the heterogeneous privacy requirements of different clients are explicitly modeled and exploited. The work investigates optimizing the utility of the federated model while minimizing communication costs. The proposed scheme significantly improves model utility, achieving better noise reduction and performance compared to FedAvg.

In [114], to address privacy leakage caused by model parameters encoding sensitive information during training, a training scheme using global differential privacy is proposed. A dynamic privacy budget allocator is implemented to enhance model accuracy. Experimental results demonstrate that the scheme effectively improves training efficiency and model quality for a given privacy budget.
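For reference, a generic Gaussian mechanism can be sketched as follows. This is not the method of any cited scheme: it uses the classical calibration of the noise standard deviation, which holds for a privacy budget between 0 and 1, and it assumes replace-one adjacency so that the L2 sensitivity of a clipped update is `2 * clip_norm`. All names are hypothetical.

```python
import math
import numpy as np

def gaussian_sigma(l2_sensitivity, epsilon, delta):
    """Classical (epsilon, delta) calibration, valid for 0 < epsilon < 1."""
    return l2_sensitivity * math.sqrt(2.0 * math.log(1.25 / delta)) / epsilon

def gaussian_perturb(update, clip_norm, epsilon, delta, rng):
    """Clip an update to L2 norm `clip_norm`, then add Gaussian noise."""
    norm = np.linalg.norm(update)
    clipped = update * min(1.0, clip_norm / max(norm, 1e-12))
    # Assumed replace-one adjacency: sensitivity is twice the clipping norm.
    sigma = gaussian_sigma(2.0 * clip_norm, epsilon, delta)
    return clipped + rng.normal(0.0, sigma, size=update.shape)
```

Dynamic budget allocation, as in [114], would amount to choosing a different `epsilon` per round. Tighter accounting methods than this classical bound exist and are typically used in practice.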

Exponential mechanism. To select the optimal term among multiple candidate output terms and handle high-dimensional feature parameters, the application of exponential mechanisms in federated learning has been proposed, as demonstrated in [115,116,117].

In [115], a federated learning framework based on joint differential privacy is introduced to incentivize client participation in model training and prevent malicious participants from poisoning the model. The framework employs two game-theoretic mechanisms, formulating client selection as an auction game. Clients report their costs as bids, and the server adopts a payment strategy to maximize its return. Experimental results indicate that, under dirty-label attacks, the traditional scheme suffers a 4–5% accuracy degradation, whereas this framework limits the degradation to 1–2%.

In [116], the authors focus on model parameter updates and observe that these updates in federated learning involve high-dimensional, continuous values with high precision, rendering existing local differential privacy protocols unsuitable. To address this, the LDP-Fed algorithm is proposed, leveraging the exponential mechanism to handle high-dimensional, continuous, and large-scale parameter updates. Experiments on the FashionMNIST dataset demonstrate that this scheme achieves a final accuracy of 86.85%, outperforming the baseline algorithm.

In [117], the impact of parameter dimensions on privacy budgets is analyzed. Increasing the parameter dimension is found to inflate the privacy budget significantly, and the larger variance induced by the perturbation mechanism degrades model performance. To mitigate this, a filtering and screening mechanism based on the exponential mechanism is proposed. This mechanism selects better parameters by evaluating their contribution to the neural network. Experiments on the MNIST dataset, with five edge nodes holding non-independent and identically distributed (non-IID) data, show that each participant reduces communication costs by at least 60%, while achieving an accuracy of up to 94.63%.
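The selection step common to these schemes can be sketched with a generic exponential mechanism, which samples one candidate with probability proportional to the exponential of its utility score. The sketch is illustrative only: the utility scores, their sensitivity, and the function name are assumptions, and the quality functions of [115,116,117] are not reproduced here.

```python
import numpy as np

def exponential_mechanism(scores, epsilon, sensitivity, rng):
    """Sample an index with probability proportional to
    exp(epsilon * score / (2 * sensitivity))."""
    scores = np.asarray(scores, dtype=float)
    logits = epsilon * scores / (2.0 * sensitivity)
    logits -= logits.max()  # shift for numerical stability
    probs = np.exp(logits)
    probs /= probs.sum()
    index = int(rng.choice(len(scores), p=probs))
    return index, probs
```

For example, if `scores` holds a per-parameter contribution measure, the mechanism favors high-contribution parameters while still giving every candidate a nonzero chance, so the selection itself satisfies differential privacy.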

To balance privacy guarantees with model accuracy and practicality in differential privacy-based federated learning systems, distributed differential privacy mechanisms have been proposed in studies such as [118,119,120]. For instance, the work of [119] introduces a distributed differential privacy federated learning framework named Dordis, which implements a novel “add-delete” scheme to precisely enforce the desired noise level in each training round, even when some sampled clients drop out. Experimental results indicate that this framework achieves an optimal balance between privacy protection and model utility, while enhancing training speed by a factor of 2.4 compared to existing methods addressing client dropouts.

The differential privacy schemes described above are commonly used in federated learning. In terms of accuracy, the exponential mechanism has minimal impact when its quality function is well-designed. The introduction of the Laplace mechanism may reduce accuracy by approximately 3–5%, while the Gaussian mechanism typically leads to a smaller accuracy drop of around 1–2%.

Regarding communication cost, the exponential mechanism incurs extremely low overhead. The Gaussian mechanism introduces only mild noise, which allows for the use of pruning, quantization, and compression techniques, keeping communication cost controllable. In contrast, the Laplace mechanism introduces noise of higher amplitude, making it unsuitable for compression; maintaining sufficient precision during transmission leads to increased communication cost. For example, Ref. [117], which employs the exponential mechanism, has the same communication cost as FedAvg. Ref. [108], which adopts the Laplace mechanism, incurs a slight increase in communication cost, whereas Ref. [111], which applies the Gaussian mechanism, achieves a reduction of approximately 30%.

As for computational overhead, both the Laplace and Gaussian mechanisms are relatively lightweight, while the exponential mechanism imposes a moderate computational burden.

Secure aggregation schemes based on differential privacy are categorized in this paper according to their noise generation mechanisms, as shown in Table 3.

Table 3.

Secure aggregation schemes based on differential privacy.

4.2.4. Secure Aggregation Based on Blockchain

In terms of security, blockchain is characterized by decentralization, minimizing reliance on a central server. This architecture distributes trust from a single entity to selected aggregators, thereby reducing the risk of a single point of failure. In the context of federated learning, this property is particularly beneficial for secure aggregation, as it prevents any single aggregator from becoming a privacy bottleneck or an attack target. Blockchain’s authentication and traceability features ensure that data is both tamper-proof and auditable, which can be directly leveraged to verify the integrity of model updates during the aggregation process. Additionally, the open ledger functionality allows all transactions to be validated by other nodes, thereby enhancing system transparency and providing verifiable guarantees of aggregation correctness.

Every model update uploaded to the blockchain must satisfy consensus conditions, and the combination of consensus mechanisms and cryptographic techniques (e.g., digital signatures, zero-knowledge proofs) provides strong resistance against Byzantine attacks, thereby improving the robustness of secure aggregation against malicious participants. Furthermore, interactions between users in a blockchain system are conducted anonymously, with no central server maintaining user information, thereby preserving user privacy during aggregation—a key requirement in federated learning.

In terms of efficiency, blockchain can offer incentive mechanisms to ensure that high-performance edge devices are adequately motivated to contribute to the federated learning system. These incentives can also promote honest participation in secure aggregation, encouraging nodes to follow protocols that uphold data privacy. Moreover, participants in the blockchain network can dynamically join or leave while maintaining high availability, which is essential to ensure the continuity of secure aggregation in real-world federated settings.

The inherent characteristics of blockchain make its integration with federated learning a promising research direction, and numerous studies have begun to explore this synergy [121,122]. Unlike traditional federated learning systems, this approach enables fully decentralized federated learning, offering greater flexibility. It can be tailored to diverse scenarios using dynamic aggregation schemes governed by smart contracts, thus enabling secure, automated aggregation without relying on a trusted third party. These smart contracts can enforce privacy-preserving rules and detect anomalies during the aggregation phase, providing an effective solution for handling non-IID data while ensuring security and trust.

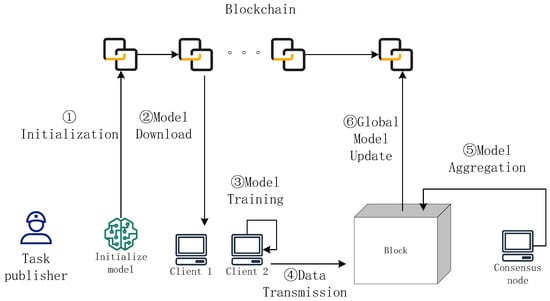

Figure 11 shows a schematic diagram of secure aggregation based on blockchain. The specific process is as follows:

Figure 11.

Schematic of secure aggregation based on blockchain.

- Initialization: The task publisher initializes the parameters of the global model and publishes them to the blockchain.

- Model download: Each client obtains the global model and determines whether to proceed to the next training round.

- Model training: Each client trains local models on its local data.

- Data transmission: All trained local models are uploaded and recorded in a blockchain block.

- Model aggregation: The selected consensus node uses the consensus algorithm to aggregate the local models and generate the global model.

- Global model update: The resulting global model is updated and recorded in a block, which is then appended to the blockchain.
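The process above can be sketched with a toy hash-chained ledger. This is a simplified illustration under stated assumptions: plain averaging stands in for whatever aggregation the selected consensus node runs, no consensus protocol or network is modeled, and all function names and payload fields are hypothetical.

```python
import hashlib
import json

def block_hash(prev_hash, payload):
    """Hash a block's contents together with its predecessor's hash."""
    body = json.dumps({"prev": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(body.encode()).hexdigest()

def make_block(prev_hash, payload):
    return {"prev": prev_hash, "payload": payload,
            "hash": block_hash(prev_hash, payload)}

def verify_chain(chain):
    """Check every block's hash and its link to the previous block."""
    for i, block in enumerate(chain):
        if block_hash(block["prev"], block["payload"]) != block["hash"]:
            return False
        if i > 0 and block["prev"] != chain[i - 1]["hash"]:
            return False
    return True

def run_round(chain, local_models):
    """Record local models, aggregate them, and append the global model."""
    # Data transmission: local models are recorded in a block.
    chain.append(make_block(chain[-1]["hash"],
                            {"type": "local", "models": local_models}))
    # Model aggregation: plain averaging as a stand-in.
    n, dim = len(local_models), len(local_models[0])
    global_model = [sum(m[k] for m in local_models) / n for k in range(dim)]
    # Global model update: the result is appended as a new block.
    chain.append(make_block(chain[-1]["hash"],
                            {"type": "global", "model": global_model}))
    return global_model
```

A chain would start from an initialization block such as `make_block("0" * 64, {"type": "init", "model": [0.0, 0.0]})`. Because each block's hash covers its payload and the previous hash, altering a recorded local model afterwards makes `verify_chain` fail, which is the tamper-evidence property that the aggregation relies on.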

The framework for applying blockchain technology in federated learning can be classified into two categories:

- Full decentralization: In fully decentralized federated learning, each node can act as a consensus node, playing the role of a central server to lead a training round, with a probability proportional to its available resources.

- Partial decentralization: In partially decentralized federated learning, the system balances computational cost and security by algorithmically selecting candidate blocks or designating nodes responsible for selecting candidate blocks to achieve an optimal trade-off.
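In the fully decentralized case, choosing the node that leads a round with probability proportional to its resources can be sketched as a weighted random draw. The resource scores and the function name below are hypothetical, and a real system would make this draw verifiable through its consensus protocol rather than a local random number generator.

```python
import random

def select_consensus_node(resources, rng):
    """Pick one node with probability proportional to its resource score."""
    nodes = list(resources)
    weights = [resources[node] for node in nodes]
    return rng.choices(nodes, weights=weights, k=1)[0]
```

For instance, with `resources = {"a": 1, "b": 3}`, node `"b"` leads roughly three rounds out of four over many draws.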

Secure aggregation based on blockchain can be categorized according to the consensus algorithms used, with existing approaches divided into the following four categories: