1. Introduction

In recent years, with the rapid development of science and technology, the railway industry, a traditional industry closely tied to people’s livelihoods, has undergone comprehensive informatization and digitization. The safety and robustness of the railway system are crucial to the survival and development of the nation, society, and the people. Railway data, in particular, are a crucial operational resource and a key driver of development in the railway industry, and their security has become an indispensable part of the stable operation of railway systems. The railway industry has now moved away from traditional paper-based storage to digital formats, which increases the risk of data theft by malicious actors [1]. A significant portion of the large volume of railway data is sensitive data related to national security and citizens’ privacy, and identifying and effectively protecting these data has long been a difficult problem. After investigating a large amount of railway data, we found that most of it is structured. We therefore need a set of methods for sensitive data identification and automatic classification of structured data to help the railway domain identify and protect sensitive data.

A number of sensitive information identification methods have been proposed for structured data. Wang [2] classified structured data into three forms: single-attribute columns, multi-attribute columns, and the entire dataset, and proposed a double Bloom filter-based identification method for single-attribute columns and an infrequent attribute mining algorithm for multi-attribute columns. Xie et al. [3] proposed a privacy and data utility metric model for structured data. Their approach transforms structured data into matrices numerically and introduces a privacy preference function to describe and analyze the impact of privacy-preserving mechanisms on sensitive data matrices. The works in [4,5,6] identify and classify structured sensitive data by using information entropy to assess attribute sensitivity and by employing machine learning techniques such as clustering and association rule mining to classify sensitive attributes.

Furthermore, a number of recent studies have explored hybrid approaches that integrate machine learning with topic modeling and related techniques to improve the identification and grading of sensitive data. Cong et al. [7] proposed a method that combines a knowledge graph and BERT for document-level sensitive information classification. Specifically, they trained a Chinese entity encoding model based on a knowledge graph to capture domain-specific semantics and, in parallel, used BERT to represent sensitive information at the contextual level, enabling more accurate classification. Vjeko Kužina et al. [8] developed a machine learning-driven framework for sensitive data identification and classification. The method uses BERT for multi-label classification, with the column data as context. In addition, it combines regular expressions and keyword lists, using the match rate (i.e., the percentage of data matched by patterns) to estimate the probability that an attribute belongs to a specific sensitivity category. Li Meng et al. [9] proposed a sensitive data classification method tailored to the financial domain. Their approach combines thematic analysis and cluster analysis to construct thematic word sets, with sensitivity levels assigned based on domain expert knowledge. Zu et al. [10] proposed an automated sensitive data categorization approach that classifies entities into three categories (no rules, weak rules, and strong rules) depending on whether they exhibit identifiable fixed patterns. To recognize sensitive data, the method integrates regular expressions and keyword lists, with the latter enhanced through a synonym recognition model. Jiang et al. [11] proposed a hierarchical topic analysis-based approach for identifying and classifying sensitive data, in which the sensitivity of each attribute is computed as a weighted sum over segmented attribute names and topic terms. To further improve classification accuracy, the authors applied an agglomerative hierarchical clustering algorithm to adjust the hierarchical structure of attributes.

Several of the aforementioned studies perform sensitivity classification at the individual attribute level without considering the inter-attribute correlations. This limitation may result in the omission of certain sensitive attributes that can only be identified through their relationships with other attributes. In addition, most of the aforementioned literature focuses on generic or domain-specific identification and classification methods, such as those in the financial domain. In contrast, our approach is specifically tailored to the characteristics and requirements of the railway domain.

In addition, existing studies have not adequately considered the characteristics of different data types within structured data during sensitive data identification. In particular, traditional identification methods are less effective on Chinese-character text. Moreover, traditional data mining methods typically examine an attribute’s association relationships within a single scenario and fail to capture association relationships across the entire system. The resulting association mining is shallow and incomplete, which may cause certain sensitive attributes to be overlooked and ultimately degrade identification and classification performance. Finally, in the classification stage, existing approaches generally adopt threshold-based static classification, which suffers from subjective threshold selection and a lack of flexibility in adapting to varying data characteristics.

To address these issues, we divide structured data into canonical data and semi-canonical data according to their data patterns and identify each type separately to improve identification accuracy. Semi-canonical data refers to textual content, such as Chinese or English character strings, that exceeds a specified length threshold and does not conform to any specific data format; examples include equipment descriptions, railway section descriptions, and similar free-text fields. In contrast, canonical data refers to data with well-defined structural formats, such as ID numbers, dates, and cell phone numbers. In the classification stage, we introduce a clustering-based dynamic classification method that considers the relative sensitivity of attributes across different scenarios to further improve classification accuracy. We have also built a rule base for identifying and classifying sensitive data in the railway domain, which helps adjust the sensitivity levels of attributes and manages the expiry of sensitive attribute labels.

Our main contributions are as follows:

In the identification stage, we propose to further subdivide structured data into canonical data and semi-canonical data and to identify each type separately. This improves the identification accuracy for structured data that contain free text.

In the classification stage, we propose a clustering-based dynamic classification method that classifies attributes based on the relative degree of their sensitivity across different scenarios. This enhances classification accuracy and flexibility by adapting to the relative distribution of attribute sensitivities.

We develop a rule base specifically for data in the railway domain. The rule base assists in the identification of semi-canonical data and provides classification mechanisms to enhance the accuracy of both identification and classification. At the same time, the rule base can be integrated with real-world business processes to enable expiry management of sensitive attribute labels.

We develop an automated system for identifying and classifying sensitive data in the railway domain. To evaluate its effectiveness, we conducted an empirical study on a real-world railway dataset, assessing the impact of our proposed fine-grained division, dynamic classification strategy, and rule base on the identification and classification of structured sensitive data. The results validate the performance and practicality of the proposed system.

2. Methods

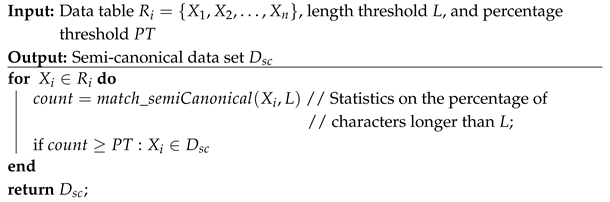

As shown in Figure 1, the overall framework consists of three modules: sensitive data identification, sensitive data classification, and the rule base.

Sensitive data identification. Based on different data characteristics, we further divide structured data into canonical data and semi-canonical data to improve identification accuracy. Canonical sensitive data identification comprises four steps: computing information entropy and maximum discrete entropy to obtain the initial sensitivity; cluster analysis to obtain the sensitive and suspicious sensitive attribute sets; association rule mining to obtain strong association relationships between attributes across the entire system; and sensitivity updating to obtain the final sensitivity. Semi-canonical sensitive data identification consists of two collaborative parts: regular expression identification and keyword identification.

Sensitive data classification. To improve the flexibility and accuracy of classification, we adopt a dynamic, multi-granularity classification strategy that classifies railway data into three levels. It consists of three steps: clustering-based dynamic classification, threshold-based static classification, and rule-based classification adjustment. Dynamicity refers to the use of clustering-based classification for attributes, which allows the model to adapt to changes in business data without manual threshold adjustment. Multi-granularity reflects the classification requirements specific to the railway domain, encompassing attribute-level, table-level, and system-level classification to support fine-grained, multi-layered sensitivity management. Rule-based adjustment serves as a supplementary mechanism for attribute classification.

Rule base. With the support of the rule base, we can identify semi-canonical data and adjust the classification of attributes to improve the accuracy of identification and classification. The rule base consists of two parts, identification rules and classification rules, where the identification rules include regular expressions and keyword lists. The rule base is constructed by experts in the relevant fields; when constructing regular expressions, however, experts can use large language models for assisted generation to improve the accuracy and efficiency of the process.

2.1. Canonical Data Identification

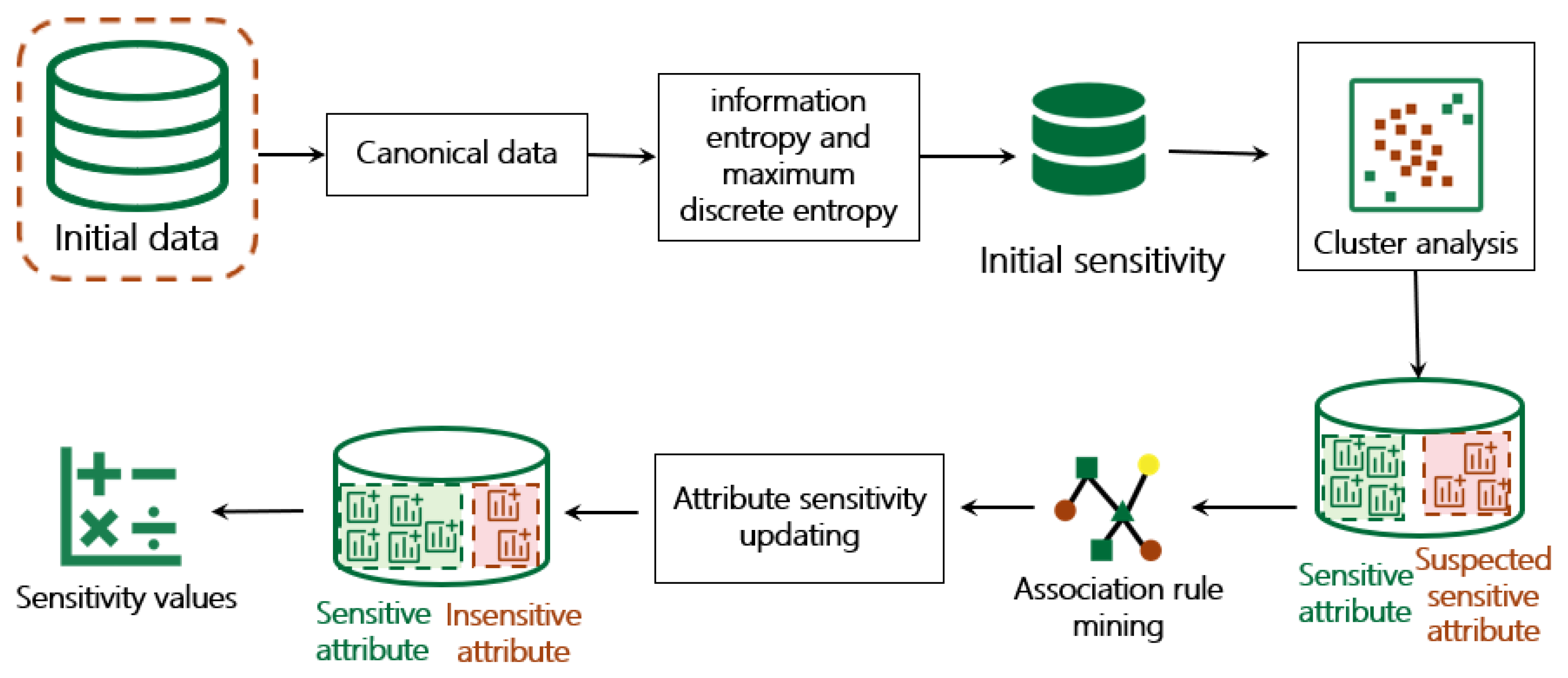

As shown in Figure 2, we use information entropy and maximum discrete entropy to obtain the initial sensitivity of attributes, and then divide the attributes into sensitive and suspicious sensitive attributes by cluster analysis. Moreover, in contrast to traditional approaches that mine association relationships within a single scenario, our method explores attribute correlations across the entire railway system to update both the set of sensitive attributes and their sensitivity values.

Algorithm 1 outlines the procedure for calculating the sensitivity of canonical data.

| Algorithm 1: Canonical data sensitivity calculations |
| --- |
| **Input:** n data tables R. **Output:** the sensitivity of each attribute X. |
| 1: Encode the data. |
| 2: Calculate the initial sensitivity of each attribute X. |
| 3: Cluster the initial sensitivities to obtain the sensitive attribute set and the suspicious sensitive attribute set. |
| 4: Mine association relationships between attributes. |
| 5: Update the two attribute sets and the attribute sensitivities using the strong association rules. |
| 6: **return** the sensitivity of each attribute. |

2.1.1. Sensitivity Measure Based on Information Entropy and Maximum Discrete Entropy

Information entropy [12] quantifies the average information content per message and reflects the uncertainty of a data source. It is therefore a practical quantitative measure of information content for large volumes of data. Denoting information entropy by H, the information entropy of a random variable X that takes values $x_1, \dots, x_n$ with probabilities $p(x_i)$ can be expressed as:

$$H(X) = -\sum_{i=1}^{n} p(x_i)\log p(x_i) \tag{1}$$

The maximum discrete entropy is the largest value that the information entropy can take; it is attained when the random variable X takes n different values with equal probability. The maximum discrete entropy is calculated as follows:

$$H_{\max}(X) = \log n \tag{2}$$

The information entropy of an attribute indicates the amount of information the attribute carries, and its maximum discrete entropy gives the highest possible uncertainty. According to information theory, data with higher uncertainty carry more information and are considered more sensitive. We therefore measure the sensitivity of an attribute by the distance between its information entropy and its maximum discrete entropy. Because $H(X) \le H_{\max}(X)$, this distance is non-negative, and the attribute sensitivity is calculated as follows:

$$S(X) = H_{\max}(X) - H(X) \tag{3}$$

According to Equation (3), we obtain the initial sensitivity $S(X)$ of each attribute X. The smaller the value of $S(X)$, the closer the entropy of X is to its maximum and the more information attribute X carries, which indicates a higher level of sensitivity for X. This completes the quantification of attribute sensitivity for large-scale data and lays the foundation for the subsequent identification of sensitive attributes.
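As a minimal sketch of Equations (1) to (3), the Python below computes the empirical entropy of a column, its maximum discrete entropy, and the initial sensitivity. It makes three assumptions that the text does not fix: base-2 logarithms, n taken as the number of records in the column (so the maximum is reached when every record is distinct), and the distance form $S(X) = H_{\max}(X) - H(X)$. The table and column names are hypothetical.

```python
import math
from collections import Counter


def entropy(values):
    """Shannon entropy H(X) in bits of the empirical value distribution (Equation (1))."""
    n = len(values)
    return -sum((c / n) * math.log2(c / n) for c in Counter(values).values())


def max_discrete_entropy(n):
    """H_max = log2(n): the entropy of n equally likely distinct values (Equation (2))."""
    return math.log2(n)


def initial_sensitivity(values):
    """Assumed form of Equation (3): S(X) = H_max(X) - H(X).

    Assumption: n is the number of records, so a column whose values are all
    distinct gets S = 0, i.e., the highest sensitivity (smaller S = more sensitive).
    """
    return max_discrete_entropy(len(values)) - entropy(values)


# Hypothetical toy table: one column per attribute.
table = {
    "id_number": ["110101", "110102", "110103", "110104"],
    "gender": ["M", "F", "M", "F"],
    "status": ["ok", "ok", "ok", "ok"],
}
scores = {name: initial_sensitivity(col) for name, col in table.items()}
```

Here the unique identifier scores 0.0, the balanced binary column 1.0, and the constant column 2.0, so the ordering matches the statement that smaller values indicate more sensitive attributes.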

2.1.2. Sensitive Attribute Division Based on Cluster Analysis

Equation (3) provides only the initial sensitivity of attributes, which is insufficient for accurate classification. We therefore employ clustering to further divide the attributes into two groups: a suspicious sensitive attribute set and a sensitive attribute set.

Cluster analysis is an unsupervised learning method commonly used to group objects in a dataset into distinct clusters based on their similarity. As a result, data points within the same cluster exhibit high intra-cluster similarity, while those in different clusters exhibit significant inter-cluster dissimilarity [13].

Clustering algorithms are generally categorized into four major types: partitioning, hierarchical, density-based, and grid-based clustering [14]. The choice of clustering algorithm typically depends on the nature of the data, the purpose of clustering, and the application scenario. Our investigation found partitioning clustering and hierarchical clustering to be the most suitable for our method.

Partition clustering. This divides the samples in a dataset into distinct groups, each represented by a prototype, such as the center or a core sample of the group. Representative algorithms include K-means [15] and k-medoids [16].

Hierarchical clustering. Unlike partition-based methods, hierarchical clustering does not require the number of clusters to be specified in advance. Instead, it builds a nested tree-like structure from similarity or distance measures between samples to construct a hierarchy of clusters. Representative algorithms include AGNES [17] and BIRCH [18].

We apply partition clustering and hierarchical clustering to divide the attribute sensitivities $S(X)$ into two classes. This produces a set of sensitive attributes and a set of suspicious sensitive attributes for each data table, which forms the basis for the subsequent mining of association relationships among attributes across the system.
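The two-way split can be illustrated with a small, dependency-free K-means (k = 2) over scalar sensitivity scores. This is a sketch, not the authors' implementation: the centre initialisation at the minimum and maximum is our choice, and treating the low-score cluster as "sensitive" is an assumption drawn from the statement in Section 2.1.1 that a smaller $S(X)$ means higher sensitivity. The attribute names and scores are hypothetical.

```python
def two_means_1d(values, iters=100):
    """Lloyd's k-means with k = 2 on scalar scores; centres start at the min and max."""
    lo, hi = min(values), max(values)
    for _ in range(iters):
        low = [v for v in values if abs(v - lo) <= abs(v - hi)]
        high = [v for v in values if abs(v - lo) > abs(v - hi)]
        new_lo = sum(low) / len(low)  # low is never empty: it always holds the minimum
        new_hi = sum(high) / len(high) if high else hi
        if (new_lo, new_hi) == (lo, hi):  # converged
            break
        lo, hi = new_lo, new_hi
    return lo, hi


def split_attributes(sensitivity):
    """Split {attribute: S(X)} into (sensitive, suspicious) attribute sets.

    Assumption: the low-score cluster is the sensitive one, because a smaller
    S(X) indicates higher sensitivity.
    """
    lo, hi = two_means_1d(list(sensitivity.values()))
    sensitive = {a for a, s in sensitivity.items() if abs(s - lo) <= abs(s - hi)}
    return sensitive, set(sensitivity) - sensitive


sensitive, suspicious = split_attributes(
    {"id_number": 0.0, "phone": 0.1, "gender": 3.0, "status": 2.8}
)
```

A hierarchical alternative (for example, agglomerative clustering cut at two clusters) would slot in at the same place; with one-dimensional scores both approaches reduce to choosing a split point.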

2.1.3. Sensitivity Update Based on Association Rule Analysis

At this point, attributes have been preliminarily divided into sensitive and suspicious sensitive attribute sets. In practice, however, complex dependencies often exist among attributes within each data table, and data thieves may exploit strong correlations between non-sensitive and sensitive attributes to infer or steal sensitive information.

Therefore, it is necessary to further mine the strong association relationship between sensitive and suspicious sensitive attributes. By extracting association relationships among attributes in the system, we can recalculate the informativeness of each attribute based on the entropy change of the rule conditions. This enables us to update the sensitivity values accordingly and improve the overall effectiveness of sensitive attribute identification.

Association rule mining [6] captures association relationships between items and can be used to discover meaningful patterns in large datasets. Several widely used association rule mining algorithms are as follows:

Apriori [19] is a widely used association rule mining algorithm that identifies strong association rules in a target dataset through an iterative candidate generation and pruning process.

FP-Growth [20] compresses the dataset into a data structure known as an FP-tree and generates frequent itemsets by traversing this tree. Strong association rules are then derived from the discovered frequent itemsets.

Eclat [21] adopts a vertical data format and employs intersection operations to efficiently discover frequent itemsets, from which strong association rules are subsequently derived.

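Support and confidence are two fundamental metrics in association rule mining. Support measures how frequently an association occurs in the dataset, while confidence evaluates its reliability or predictive strength. For a rule $X \Rightarrow Y$ over a dataset of $N$ records, where $\sigma(\cdot)$ denotes the number of records containing the given items, support and confidence are calculated as follows:

$$\mathrm{Support}(X \Rightarrow Y) = \frac{\sigma(X \cup Y)}{N} \tag{4}$$

$$\mathrm{Confidence}(X \Rightarrow Y) = \frac{\sigma(X \cup Y)}{\sigma(X)} \tag{5}$$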

In practice, thresholds must be set for support and confidence: rules that fall below either threshold are filtered out, and the remaining strong association rules are retained. Strong association rules allow us to move a subset of suspicious sensitive attributes into the sensitive attribute set, and attributes promoted in this way should have their sensitivity increased accordingly. We update attribute sensitivity using the mutual information between attributes and the changed conditional entropy.
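To show how support, confidence, and the two thresholds interact, the sketch below enumerates single-item rules $x \Rightarrow y$ by brute force. It is deliberately not Apriori, FP-Growth, or Eclat; it only illustrates the filtering step. Encoding each record as a set of hypothetical `"attribute=value"` items is our assumption about how table rows become transactions.

```python
from itertools import permutations


def support(transactions, itemset):
    """Fraction of transactions that contain every item in itemset (Equation (4))."""
    return sum(1 for t in transactions if itemset <= t) / len(transactions)


def strong_rules(transactions, min_support, min_confidence):
    """Return single-item rules (x, y, support, confidence) that pass both thresholds.

    Illustrative only: enumerates all ordered item pairs instead of running
    Apriori, FP-Growth, or Eclat.
    """
    items = sorted(set().union(*transactions))
    rules = []
    for x, y in permutations(items, 2):
        s_xy = support(transactions, {x, y})
        if s_xy < min_support:
            continue
        confidence = s_xy / support(transactions, {x})  # Equation (5)
        if confidence >= min_confidence:
            rules.append((x, y, s_xy, confidence))
    return rules


# Hypothetical encoding: each record becomes a set of "attribute=value" items.
records = [
    {"station=A", "line=L1"},
    {"station=A", "line=L1"},
    {"station=A", "line=L2"},
    {"line=L1"},
]
rules = strong_rules(records, min_support=0.5, min_confidence=0.6)
```

With these thresholds only the pair `line=L1` and `station=A` survives, in both directions, each with support 0.5 and confidence 2/3.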

Mutual information [12], a fundamental concept in information theory, measures the degree of dependence between two random variables; a higher value indicates a stronger correlation. The mutual information between attributes X and Y is defined as follows:

$$I(X;Y) = \sum_{x}\sum_{y} p(x,y)\log\frac{p(x,y)}{p(x)\,p(y)} \tag{6}$$
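The mutual information defined above can be estimated from two aligned columns with empirical frequencies. The sketch assumes base-2 logarithms (bits) and treats each row as one joint observation $(x, y)$; the column values are hypothetical.

```python
import math
from collections import Counter


def mutual_information(xs, ys):
    """I(X;Y) in bits, estimated from the empirical joint distribution of two aligned columns."""
    n = len(xs)
    joint = Counter(zip(xs, ys))
    px, py = Counter(xs), Counter(ys)
    return sum(
        (c / n) * math.log2((c / n) / ((px[x] / n) * (py[y] / n)))
        for (x, y), c in joint.items()
    )


# A column that fully determines another shares all of its information with it,
# while an independent column shares none.
dependent = mutual_information(["a", "a", "b", "b"], ["a", "a", "b", "b"])
independent = mutual_information(["a", "a", "b", "b"], ["c", "d", "c", "d"])
```

Here `dependent` is 1.0 bit and `independent` is 0.0, which illustrates why a high value flags a pair of attributes whose correlation could be exploited for inference.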

The changed conditional entropy [4] is defined as the sum of the original attribute’s information entropy and the conditional entropy of the attributes with which it is correlated. The changed conditional entropy of attribute X is calculated as Equation (7), where attribute Y is an attribute strongly associated with attribute X.

Based on the maximum discrete entropy and the changed conditional entropy, the sensitivity update formula for attribute X is defined as Equation (8). The resulting value, denoted $S'(X)$, is the final sensitivity of attribute X and serves as the basis for the subsequent clustering-based classification.

2.2. Semi-Canonical Data Identification

Unlike canonical data, semi-canonical data are identified by combining regular expressions and keyword lists. Regular expressions detect data that follow specific patterns, whereas keyword lists detect explicit sensitive terms or phrases. Combining the two improves both the accuracy and efficiency of sensitive data identification.

2.2.1. Filter of Semi-Canonical Data

In this paper, we define data containing Chinese and English characters with a length exceeding a specified threshold as semi-canonical data. When the proportion of semi-canonical data within a given attribute surpasses a certain threshold, and the length of individual data entries within the attribute exhibits significant variance, we categorize the attribute as a semi-canonical attribute. To distinguish between canonical and semi-canonical data in the dataset, we devised a semi-canonical data filter algorithm, as described in Algorithm 2.

| Algorithm 2: Filter of semi-canonical data |

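The filtering criterion described above (long text values making up a large share of an attribute, together with high variance in value length) can be sketched in Python as follows. This is not a transcription of Algorithm 2: the thresholds `min_len`, `min_ratio`, and `min_var` are hypothetical placeholders because their values are not given, and "contains Chinese or English characters" is approximated by a regular expression over Latin letters and the basic CJK Unified Ideographs block.

```python
import re
from statistics import pvariance

# Latin letters or CJK Unified Ideographs (basic block U+4E00 to U+9FFF).
TEXT_RE = re.compile(r"[A-Za-z\u4e00-\u9fff]")


def is_semi_canonical_value(value, min_len):
    """A value is semi-canonical if it contains letters or Chinese characters and is long."""
    return len(value) > min_len and TEXT_RE.search(value) is not None


def is_semi_canonical_attribute(values, min_len=10, min_ratio=0.5, min_var=4.0):
    """Apply both conditions to a column; all thresholds are hypothetical defaults."""
    if not values:
        return False
    ratio = sum(is_semi_canonical_value(v, min_len) for v in values) / len(values)
    length_var = pvariance([len(v) for v in values])  # spread of value lengths
    return ratio > min_ratio and length_var > min_var
```

A free-text equipment remark column would pass both tests, whereas a column of phone numbers fails the first one because its values contain no letters or Chinese characters.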

2.2.2. Sensitive Data Identification Based on Regular Expressions

A regular expression is a sequence of characters, including both literals and special symbols, that concisely defines a text pattern to be matched [22]. Regular expressions are typically crafted manually by domain experts with deep knowledge of the specific field.

When identifying semi-canonical data, we use regular expressions to detect specific types of sensitive information, such as equipment descriptions, railway section descriptions, and similar free-text fields. Each data value is matched against a set of regular expressions to determine whether or not it contains any pattern-compliant information. If a match is found, the data are identified as sensitive; otherwise, they are considered non-sensitive.

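Finally, the sensitivity of an attribute is determined by the proportion of its values identified as sensitive. Specifically, the sensitivity is defined as $S_{\mathrm{semi}}(X) = n_s / n$, where $n_s$ is the number of values of attribute X identified as sensitive and $n$ is the total number of values of X.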
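The proportion-based score can be sketched with Python's `re` module. Both patterns are hypothetical stand-ins for rule-base entries: a railway mileage marker of the form `K123+456` and an 11-digit mainland mobile number. In the described system, the actual patterns come from the expert-maintained rule base.

```python
import re

# Hypothetical identification rules; real patterns would come from the rule base.
PATTERNS = [
    re.compile(r"K\d+\+\d{3}"),               # mileage marker such as K123+456 (illustrative)
    re.compile(r"(?<!\d)1[3-9]\d{9}(?!\d)"),  # 11-digit mobile number (illustrative)
]


def regex_sensitivity(values, patterns=PATTERNS):
    """Share of values in which at least one pattern matches: n_s / n."""
    if not values:
        return 0.0
    hits = sum(1 for v in values if any(p.search(v) for p in patterns))
    return hits / len(values)


column = ["Signal at K123+456 failed", "Routine inspection", "Contact 13800138000", "Normal"]
score = regex_sensitivity(column)
```

Two of the four values match a pattern, so the attribute scores 0.5.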

2.2.3. Sensitive Data Identification Based on Keyword Lists

To improve the accuracy of semi-canonical sensitive data identification, we also adopt a keyword-based approach. Using the keyword lists that domain experts provide in the rule base, each semi-canonical data value is examined to determine whether it contains any predefined sensitive term. If a match is found, the value is identified as sensitive; otherwise, it is considered non-sensitive.

The method for calculating attribute sensitivity using the keyword lists is consistent with the approach used for regular expression-based sensitivity calculation.

If both the regular expressions and the keyword list apply to the same attribute and produce conflicting results, the final sensitivity value is the average of the two.
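The keyword score and the combination rule can be sketched as follows. The keyword is hypothetical, and using `None` to mark "this rule type does not apply to the attribute" is our own convention. Since averaging two equal scores returns the same value, averaging only on disagreement is equivalent to always averaging when both rule types apply.

```python
def keyword_sensitivity(values, keywords):
    """Share of values containing at least one keyword (same form as the regex score)."""
    if not values:
        return 0.0
    hits = sum(1 for v in values if any(k in v for k in keywords))
    return hits / len(values)


def combine(regex_score, keyword_score):
    """Final semi-canonical sensitivity; None means the rule type does not apply."""
    if regex_score is None:
        return keyword_score
    if keyword_score is None or regex_score == keyword_score:
        return regex_score
    return (regex_score + keyword_score) / 2  # conflicting results: average the two


kw = keyword_sensitivity(["classified route plan", "routine", "classified"], ["classified"])
final = combine(0.5, kw)
```

Here the keyword score is 2/3, and combining it with a regular expression score of 0.5 gives 7/12.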

In summary, the detailed steps of the semi-canonical data sensitivity calculation method are shown in Algorithm 3.

| Algorithm 3: Semi-canonical data sensitivity calculations |


2.3. Classification Based on Clustering and Thresholding

In the railway domain, structured data can be organized hierarchically from fine to coarse granularity as attributes, tables, and systems. Our work first focuses on the identification and classification of sensitive data at the attribute level. Then, based on the attribute-level results, sensitivity classification is carried out at the table and system levels, enabling multi-granularity classification of data within the railway domain.

Specifically, we adopt a hybrid classification strategy that combines dynamic and static methods. First, a clustering-based method dynamically classifies attributes according to their computed sensitivity values, determining the sensitivity level of each attribute. Building on the attribute-level classification, a static weighting-based approach then computes the sensitivity of each data table, which is classified against predefined threshold values. Finally, system-level classification is conducted statically, based on the aggregated sensitivity of the associated data tables.

2.3.1. Clustering-Based Dynamic Classification

Data classification is the process of categorizing data into levels based on their importance, sensitivity, scope of use, and potential impact on the organization. It enables targeted security measures during data storage, transmission, access, and processing [23]. By applying protection according to classification level, differentiated security strategies can be employed for data of varying sensitivity, improving the effectiveness of data leakage prevention.

In current data classification practice, most methods rely primarily on static classification, in which sensitivity levels are determined by manually set thresholds. Although such methods are intuitive and easy to operate, they have several limitations in practical application. First, static classification is inefficient. For large-scale, multi-format railway data in particular, static classification is not only time-consuming and laborious but also prone to errors caused by differences in subjective judgment. Second, static classification lacks flexibility. Even automated approaches based on sensitivity calculation typically require predefined sensitivity thresholds to separate classification levels. These thresholds often depend on domain expertise, making them difficult to standardize and to adjust dynamically in real-world scenarios.

To address these issues, we propose a clustering-based dynamic classification strategy. It takes the sensitivity values of the attributes within a data table and applies an unsupervised clustering algorithm, such as K-means, k-medoids, or hierarchical clustering, to group them, thereby automatically assigning attributes to multiple sensitivity levels. Its main advantage is that attributes are classified without preset thresholds, which makes the method adaptable while improving classification efficiency.
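A minimal illustration of threshold-free levelling is a one-dimensional K-means with k = 3 whose clusters are ranked by centre. This sketch is not the authors' code: the initialisation at evenly spaced order statistics is our choice, and mapping the lowest-centre cluster to level 1 is an assumption. Under the convention of Section 2.1.1 (a smaller value means more sensitive), level 1 would hold the most sensitive attributes, but the direction would need to be flipped for scores where larger means more sensitive.

```python
def classify_levels(sensitivity, k=3, iters=100):
    """Assign each attribute a level 1..k by 1-D k-means on its sensitivity score.

    Level 1 = cluster with the lowest centre (a labelling assumption).
    """
    if k < 2:
        raise ValueError("k must be at least 2")
    values = sorted(sensitivity.values())
    # Initial centres: evenly spaced order statistics of the sorted scores.
    centers = [values[round(i * (len(values) - 1) / (k - 1))] for i in range(k)]
    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda c: abs(v - centers[c]))
            groups[nearest].append(v)
        new = [sum(g) / len(g) if g else centers[j] for j, g in enumerate(groups)]
        if new == centers:  # assignments are stable
            break
        centers = new
    rank = {j: r + 1 for r, j in enumerate(sorted(range(k), key=lambda j: centers[j]))}
    return {
        attr: rank[min(range(k), key=lambda c: abs(s - centers[c]))]
        for attr, s in sensitivity.items()
    }


levels = classify_levels(
    {"id": 0.05, "phone": 0.1, "name": 0.9, "address": 1.0, "gender": 2.9, "status": 3.0}
)
```

No level boundary is specified anywhere. The boundaries emerge from the gaps in the score distribution, which is the adaptability the strategy aims for.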

Additionally, to ensure that the results of sensitive attribute classification better align with practical requirements, we developed a set of sensitive attribute classification rules based on domain expertise (see Section 2.4.2 for more information). These expert-defined rules serve as a supplementary mechanism that refines the clustering-based classification results and makes classification more accurate.

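Algorithm 4 presents the clustering-based dynamic classification procedure.

| Algorithm 4: Attribute dynamic classification algorithm |
| --- |
| **Input:** the sensitivity values of the attributes in a data table; the number of levels k. **Output:** the sensitivity level of each attribute. |
| 1: Cluster the set of attribute sensitivities into k subclusters. |
| 2: **return** the sensitivity level of each attribute. |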

2.3.2. Threshold-Based Static Classification

As described in Section 2.3.1, the sensitivity level of each attribute is obtained through a combination of clustering-based dynamic classification and expert-driven rule-based adjustment. To determine the overall sensitivity of a data table, we compute a weighted aggregate sensitivity value from the sensitivity levels of all its constituent attributes, and the table is then assigned a sensitivity level using a predefined static threshold. Similarly, for system-level classification, a weighted aggregation of table-level sensitivity scores yields the overall system sensitivity, which is then classified statically.

This classification takes into account sensitivities at the attribute, table, and system levels, enabling more accurate and structured protection strategies for sensitive data across varying granularities.

Based on Equation (9), we calculate the sensitivity of each data table and classify the tables according to a predefined threshold. Similarly, system classification calculates the weighted sensitivity of each system from its associated data tables and then applies static thresholds.

The sensitivity weighting formula is as follows:

$$S_T = \sum_{i=1}^{k} w_i\,p_i \tag{9}$$

where $p_i$ denotes the percentage of data with sensitivity level $i$, $w_i$ represents the corresponding weight of data with sensitivity level $i$, and $k$ is the number of sensitivity levels.
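Equation (9) and the static threshold step can be sketched as two small functions. The weights and cut points below are hypothetical, because their values are not reported here, and the numbering direction of the output levels is likewise illustrative. The same two functions apply unchanged at the system level when the inputs are table levels instead of attribute levels.

```python
from collections import Counter


def weighted_sensitivity(levels, weights):
    """S_T = sum_i w_i * p_i, where p_i is the share of items at level i (Equation (9))."""
    counts = Counter(levels)
    n = len(levels)
    return sum(weights[lvl] * counts[lvl] / n for lvl in weights)


def static_level(score, thresholds):
    """Map a score to a level using ascending cut points (hypothetical thresholds)."""
    for level, cut in enumerate(thresholds, start=1):
        if score < cut:
            return level
    return len(thresholds) + 1


# Hypothetical table whose four attributes received levels 3, 3, 1, and 2.
WEIGHTS = {1: 1.0, 2: 2.0, 3: 3.0}
s_table = weighted_sensitivity([3, 3, 1, 2], WEIGHTS)
table_level = static_level(s_table, thresholds=(1.5, 2.5))
```

With p = (0.25, 0.25, 0.5), the table score is 0.25 + 0.5 + 1.5 = 2.25, which falls between the two cut points and therefore maps to level 2.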

2.4. Rule Base Construction

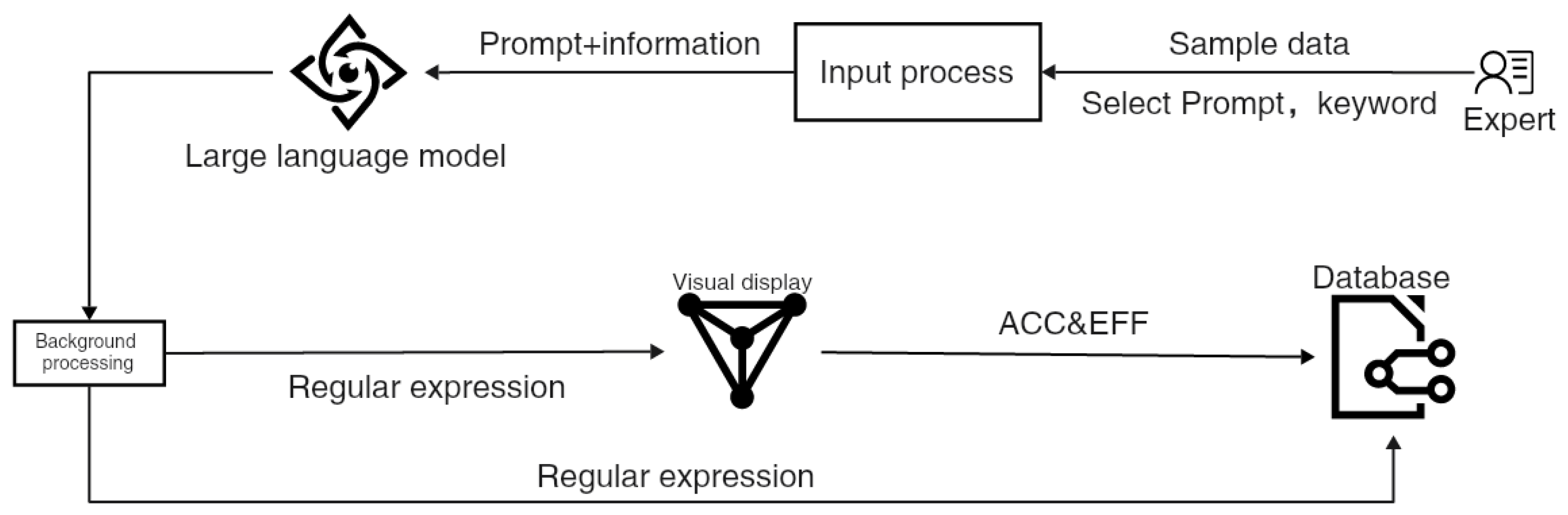

Railway data are highly domain-specific, so a representative rule base tailored to typical railway business scenarios is essential. This rule base aids in the identification of sensitive data and keeps the final classification results consistent with expert judgment. As shown in Figure 3, the rule base consists of two components: identification rules and classification rules. In addition, to ensure the security of railway data and account for specific usage scenarios, the rules incorporate expiry constraints. These constraints define a validity period for each rule so that sensitive attribute labels are managed appropriately within designated time intervals.
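The expiry constraint amounts to attaching a validity window to every rule and consulting only the rules that are active on a given date. The record layout below is a hypothetical illustration, not the schema of Table 1 or Table 2, and treating the end date as inclusive is an assumption.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Rule:
    """Hypothetical rule record; field names are illustrative, not the paper's schema."""
    target: str          # e.g. "system.table.attribute", a regex, or a keyword
    level: int           # sensitivity level the rule assigns
    valid_from: date
    valid_until: date    # inclusive end of the validity period (assumption)

    def is_active(self, today: date) -> bool:
        return self.valid_from <= today <= self.valid_until


def active_rules(rules, today):
    """Only rules inside their validity window take part in identification or classification."""
    return [r for r in rules if r.is_active(today)]


r1 = Rule("railway.passenger.id_number", 1, date(2024, 1, 1), date(2024, 12, 31))
r2 = Rule("railway.dispatch.route", 2, date(2025, 1, 1), date(2025, 6, 30))
current = active_rules([r1, r2], date(2024, 6, 1))
```

Once a rule's window closes, the label it produced can be re-evaluated rather than persisting indefinitely, which is how expiry ties the rule base to business processes.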

2.4.1. Identification Rules

The identification rules comprise two components, regular expressions and keyword lists, which are used to identify semi-canonical sensitive data. Both are created by domain experts. To improve the efficiency and accuracy of regular expression generation, however, experts can use our regular expression generation tool, which assists them in generating regular expressions with a large language model. Given either sample data or a description of the data to be identified, the tool generates regular expressions using a well-designed prompt. More importantly, the tool allows users to produce regular expressions quickly and accurately even without prior knowledge of regular expression syntax.

(1) Large Language Model-Assisted Regular Expression Generation.

The framework of the regular expression generation tool is illustrated in Figure 4. Because most existing large language models are not specifically tailored for regular expression generation, well-crafted prompts are key to steering them toward this task.

Prompt engineering refers to the methodologies and practices involved in utilizing large-scale language models (e.g., GPT) to generate, refine, or evaluate various types of prompts. Prompts are instructional texts that guide the model to produce specific outputs [24]. Prompt engineering focuses on the design and optimization of these prompts to fully leverage the model’s capabilities and achieve the desired outcomes.

To improve the efficiency and accuracy of large language models (LLMs) in generating regular expressions, we propose the RICIOE prompting framework. This framework builds on the ICIO prompting framework summarized by Elvis Saravia [25] and the CRISPE prompting framework proposed by Matt Nigh [26], and incorporates elements of the few-shot [27] prompting approach. The RICIOE framework is composed of six key components: Role, Instruction, Context, Input Data, Output Indicator, and Examples.

R: Role. Clarifies the role that the LLM should fill when performing a task.

I: Instruction. Specifies the specific task or instruction that the LLM performs.

C: Context. Provides background information about the instruction to help the LLM understand the task.

I: Input Data. Specifies the specific data that the LLM needs to process.

O: Output Indicator. Specifies the type or format of the LLM output.

E: Example. Provides a complete sample prompt together with an example LLM response.
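The six RICIOE components can be assembled into a single prompt string in a fixed order. The sketch below only builds the text; it does not call any LLM API. The section markers such as `[Role]` and all of the component wording are hypothetical examples, not the tool's actual prompt.

```python
from typing import Mapping

RICIOE_ORDER = ("Role", "Instruction", "Context", "Input Data", "Output Indicator", "Example")


def build_ricioe_prompt(parts: Mapping[str, str]) -> str:
    """Join the six RICIOE components, in order, into one prompt string."""
    missing = [k for k in RICIOE_ORDER if k not in parts]
    if missing:
        raise ValueError(f"missing RICIOE components: {missing}")
    return "\n\n".join(f"[{k}]\n{parts[k].strip()}" for k in RICIOE_ORDER)


# Hypothetical component texts for a mileage-marker pattern request.
prompt = build_ricioe_prompt({
    "Role": "You are an expert in writing regular expressions.",
    "Instruction": "Write one regular expression that matches the values described below.",
    "Context": "The values come from railway equipment maintenance records.",
    "Input Data": "Samples: K123+456, K7+089",
    "Output Indicator": "Return only the regular expression, with no explanation.",
    "Example": "Input: 2024-01-31 -> Output: \\d{4}-\\d{2}-\\d{2}",
})
```

Keeping the components as separate fields lets experts swap in new sample data (Input Data) or new few-shot demonstrations (Example) without rewriting the rest of the prompt.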

(2) Keyword Generation.

The keyword lists are primarily populated manually by experts. They play a crucial role in identifying potential sensitive terms that may not be fully captured by canonical or semi-canonical data identification methods. These expert-curated keywords supplement sensitive data identification, ensuring that terms potentially associated with privacy risks or security concerns are flagged.

The formal representation of the identification rules is provided in Table 1.
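As an illustration of how regular-expression and keyword rules of the kind listed in Table 1 might be applied to individual values, here is a minimal Python sketch. The list `IDENTIFICATION_RULES`, the function `identify`, the full-match requirement for regex rules, and the case-insensitive substring test for keywords are all assumptions made for this example; the paper’s semi-canonical scoring (with sample_N, L, and PT) is not reproduced.

```python
import re
from typing import Optional

# Hypothetical encoding of the Table 1 rules: (pattern, type, is_regex).
IDENTIFICATION_RULES = [
    (r"\d{17}(\d|x|X)", "I.D. number", True),
    (r"1[3-9]\d{9}", "Telephone number", True),
    (r"\b(?:[0-9]{1,3}\.){3}[0-9]{1,3}\b", "IP address", True),
    (r"[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}", "E-mail address", True),
    ("risk", "Sensitive data", False),
]


def identify(value: str) -> Optional[str]:
    """Return the type of the first rule that matches value, or None."""
    for pattern, data_type, is_regex in IDENTIFICATION_RULES:
        if is_regex:
            # Assumption: a regex rule must cover the whole cell value.
            if re.fullmatch(pattern, value):
                return data_type
        elif pattern in value.lower():
            # Keyword rules are plain case-insensitive substring checks.
            return data_type
    return None
```

Rule order matters in this sketch because the first match wins; a real rule base would also need to skip rules whose expiration date has passed.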

2.4.2. Classification Rules

Classification rules, encompassing attributes, tables, and systems along with their respective sensitivity levels, are manually input by experts to complement the structured identification and classification of sensitive data, thereby enhancing the accuracy of sensitive information classification.

An example of a hierarchical classification rule base is shown in Table 2.

3. Experiment

In this section, we conduct three experiments to evaluate the effectiveness of the three core modules proposed for structured sensitive data identification and classification. In addition, we perform experiments on the regular expression assisted generation tool to validate its accuracy and efficiency.

3.1. Experimental Setup

3.1.1. Deployment Method

Based on the technical architecture of the data service platform proposed by Liu et al. [28], we integrated our automated sensitive data identification and classification system as a back-end module within the railway domain’s data platform. In addition, we developed corresponding interfaces and a front-end application that allow the railway department to track and analyze the accuracy of the entire sensitive data identification and classification process and to visualize the final sensitivity grading results as a heat map.

3.1.2. Experimental Environment

All of our experiments were conducted in a consistent environment, as detailed in Table 3.

3.1.3. Experimental Dataset

Two distinct datasets were used for the experiment: one for sensitive data identification and classification, and the other for regular expression generation.

The sensitive data identification and classification dataset used in our experiments is derived from a desensitized real-world dataset of the railway system. It consists of four independent systems with a total of 35 tables and 571 attributes. The number of tables and attributes in each system is presented in Table 4.

The regular expression generation dataset was downloaded from public datasets available on Kaggle. After careful screening, we selected 15 files containing only structured data to evaluate the efficiency and accuracy of our regular expression generation method. Details of the dataset are provided in Table 5.

3.1.4. Parameter Setting

We apply sequential number encoding to the raw data to facilitate the subsequent calculation of information entropy and maximum discrete entropy. Based on a review of the literature and a series of experiments, we set different support and confidence thresholds for each of the four systems, as listed in Table 6. Additional parameter settings are presented in Table 7.
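The encoding and entropy steps can be sketched in Python as follows. Sequential encoding is shown as mapping each distinct value to an integer in order of first appearance, and entropy as Shannon entropy of the empirical distribution. Two points are assumptions for illustration only: the maximum discrete entropy is taken here as log2(N) for N records (the value reached when every record is distinct), and the ratio H / H_max is shown as one possible normalized score; the paper’s own definitions appear in its method section and may differ.

```python
import math
from collections import Counter
from typing import Dict, List, Sequence


def sequential_encode(values: Sequence[str]) -> List[int]:
    """Map each distinct value to an integer in order of first appearance."""
    codes: Dict[str, int] = {}
    return [codes.setdefault(v, len(codes)) for v in values]


def shannon_entropy(codes: Sequence[int]) -> float:
    """H = -sum(p * log2 p) over the empirical distribution of the codes."""
    n = len(codes)
    return -sum((c / n) * math.log2(c / n) for c in Counter(codes).values())


def max_discrete_entropy(codes: Sequence[int]) -> float:
    """Assumed upper bound log2(N): every one of the N records is distinct."""
    return math.log2(len(codes)) if len(codes) > 1 else 0.0


column = ["A", "B", "A", "B"]
codes = sequential_encode(column)      # [0, 1, 0, 1]
h = shannon_entropy(codes)             # 1.0
h_max = max_discrete_entropy(codes)    # 2.0
ratio = h / h_max if h_max else 0.0    # 0.5 (illustrative normalized score)
```

Encoding before computing entropy means the calculation depends only on how often values repeat, not on their content, which is why the same routine can be applied to every attribute type.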

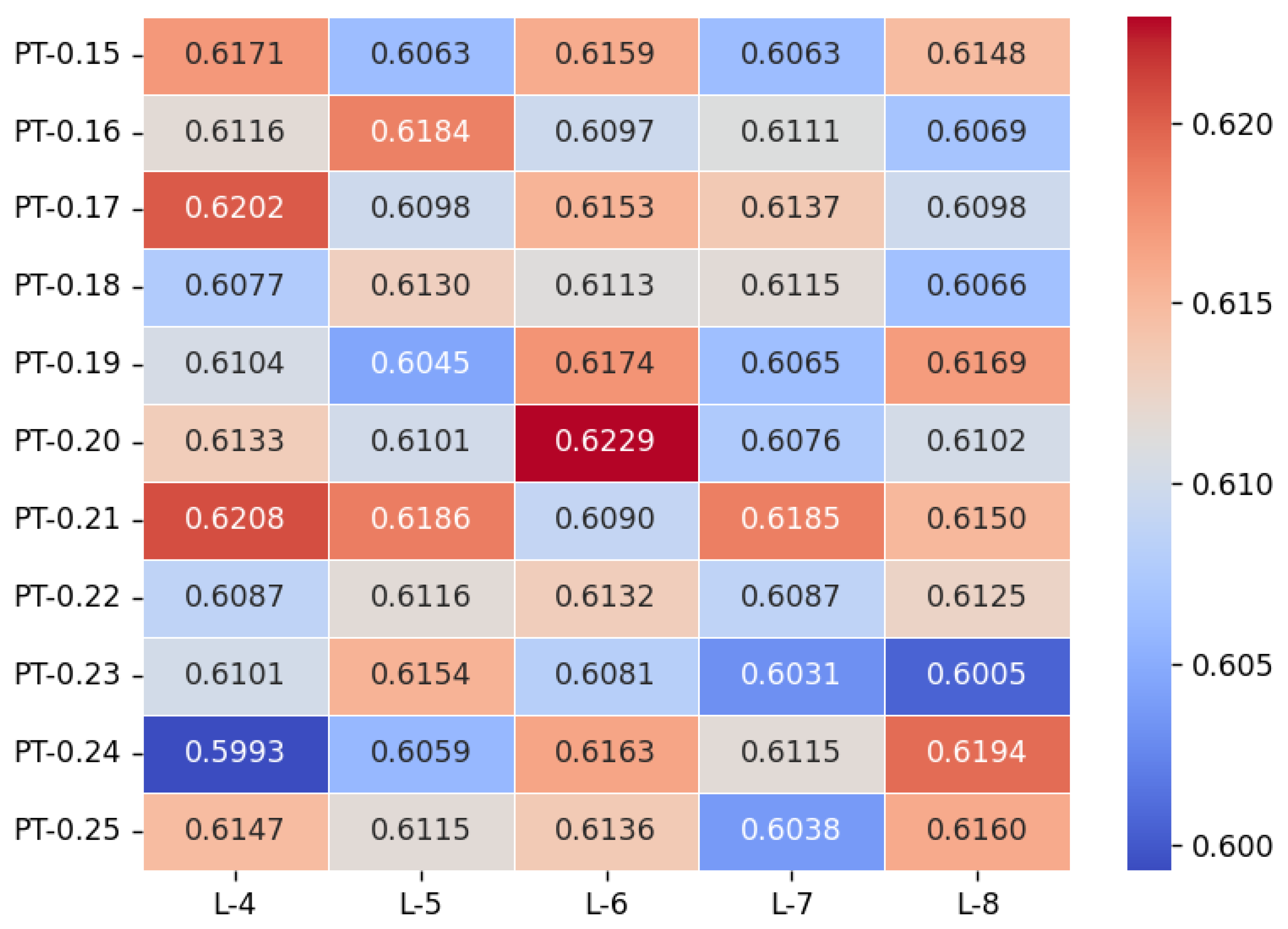

To determine appropriate values for L and PT, we evaluated several threshold combinations on real-world datasets from the railway domain, using the average identification accuracy across the four systems as the evaluation metric. As shown in Figure 5, accuracy reaches its maximum when L = 6 and PT = 0.20; we therefore set L = 6 and PT = 0.20 for the subsequent experiments.
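The threshold search amounts to an exhaustive grid search. A minimal Python sketch follows; `evaluate` is a hypothetical callback standing in for a full pipeline run that returns the average accuracy over the four systems, and the toy scoring function below is invented solely to exercise the code (it is not the Figure 5 data).

```python
from itertools import product
from typing import Callable, Iterable, Tuple


def select_thresholds(
    evaluate: Callable[[int, float], float],
    l_values: Iterable[int],
    pt_values: Iterable[float],
) -> Tuple[int, float, float]:
    """Score every (L, PT) pair and return the best pair with its score."""
    best = None
    for l, pt in product(l_values, pt_values):
        score = evaluate(l, pt)
        if best is None or score > best[2]:
            best = (l, pt, score)
    if best is None:
        raise ValueError("empty search grid")
    return best


def toy_evaluate(l: int, pt: float) -> float:
    # Hypothetical stand-in for "average accuracy over the four systems".
    return -abs(l - 6) - abs(pt - 0.20)


best_l, best_pt, best_score = select_thresholds(toy_evaluate, range(2, 11), [0.10, 0.15, 0.20, 0.25])
```

Because each evaluation reruns the whole identification pipeline, the grid is kept small in practice; ties are resolved here in favour of the first pair encountered.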

For the remaining parameters, cluster_k is set to 2 for two-class clustering, while cluster_radius and sample_N are set empirically (0.3 and 100, respectively; see Table 7). The parameter sample_N specifies the number of samples used in the semi-canonical data filtering process.
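Two-class clustering of the initial sensitivity values (cluster_k = 2) can be illustrated with plain one-dimensional k-means (Lloyd’s algorithm), used here only as a stand-in for the four clustering algorithms the paper compares. Treating the higher-centroid cluster as the sensitive attribute set and the lower one as the suspicious set is an assumption of this sketch, and cluster_radius is not modelled.

```python
from typing import List, Sequence, Tuple


def kmeans_1d(values: Sequence[float], k: int = 2,
              max_iter: int = 100) -> Tuple[List[int], List[float]]:
    """Lloyd's k-means on scalar values; cluster 0 has the lowest centroid."""
    if len(values) < k:
        raise ValueError("need at least k values")
    ordered = sorted(values)
    # Deterministic start: spread the initial centroids across the sorted values.
    centroids = [ordered[round(i * (len(ordered) - 1) / max(k - 1, 1))] for i in range(k)]
    labels: List[int] = []
    for _ in range(max_iter):
        # Assignment step: each value joins its nearest centroid.
        labels = [min(range(k), key=lambda j: abs(v - centroids[j])) for v in values]
        # Update step: move each centroid to the mean of its members.
        updated = []
        for j in range(k):
            members = [v for v, lab in zip(values, labels) if lab == j]
            updated.append(sum(members) / len(members) if members else centroids[j])
        if updated == centroids:
            break
        centroids = updated
    # Relabel clusters so that label order follows centroid order.
    order = sorted(range(k), key=lambda j: centroids[j])
    rank = {old: new for new, old in enumerate(order)}
    return [rank[lab] for lab in labels], [centroids[j] for j in order]


sensitivity = [0.05, 0.10, 0.12, 0.70, 0.80, 0.95]  # illustrative values
labels, centroids = kmeans_1d(sensitivity, k=2)
sensitive = [s for s, lab in zip(sensitivity, labels) if lab == 1]
suspicious = [s for s, lab in zip(sensitivity, labels) if lab == 0]
```

The same function with k = 3 would give one possible form of dynamic three-level classification (labels 0, 1, 2 mapped to Levels 1 to 3), again as an assumption rather than the paper’s exact procedure. Because cluster boundaries come from the data, no manual threshold is needed.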

3.1.5. Assessment Indicators

(1) Sensitive data identification and classification.

ACC. Accuracy is the percentage of sensitive attributes that are successfully identified and correctly classified, compared against a reference set of sensitivity grades manually labeled by experts in the railway domain.

(2) Regular expression generation.

We use accuracy (ACC) and efficiency (EFF) as evaluation metrics.

ACC. Accuracy is the percentage of valid regular expressions successfully generated by the regular expression generation tool.

EFF. Efficiency is the average time our tool takes to generate each regular expression.
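The two regular-expression metrics can be computed with a small Python harness. The text does not define what makes a generated expression “valid”, so this sketch assumes it must compile and fully match every sample value of its column; `generate` is a hypothetical stand-in for the LLM call, and `digits_only_stub` is a toy generator used only to exercise the code.

```python
import re
import time
from typing import Callable, List, Sequence, Tuple


def is_valid_regex(pattern: str, samples: Sequence[str]) -> bool:
    """Assumed validity test: the pattern compiles and fully matches every sample."""
    try:
        compiled = re.compile(pattern)
    except re.error:
        return False
    return all(compiled.fullmatch(s) for s in samples)


def evaluate_generator(generate: Callable[[Sequence[str]], str],
                       columns: List[Sequence[str]]) -> Tuple[float, float]:
    """Return (ACC, EFF): share of valid patterns and mean seconds per pattern."""
    if not columns:
        raise ValueError("no columns to evaluate")
    valid, elapsed = 0, 0.0
    for samples in columns:
        start = time.perf_counter()
        pattern = generate(samples)          # e.g., one LLM request per column
        elapsed += time.perf_counter() - start
        valid += is_valid_regex(pattern, samples)
    return valid / len(columns), elapsed / len(columns)


def digits_only_stub(samples: Sequence[str]) -> str:
    # Toy generator: a correct pattern for digit columns, a broken one otherwise.
    return r"\d+" if all(s.isdigit() for s in samples) else "("


acc, eff = evaluate_generator(digits_only_stub, [["1", "22"], ["a", "b"]])
```

Timing only the `generate` call keeps validation cost out of EFF, so the efficiency figure reflects generation latency alone.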

3.2. Experimental Design and Result Analysis

We conducted five distinct experiments to systematically evaluate the effectiveness of the proposed approach. Each experiment targeted a specific component to ensure a comprehensive assessment of its performance and overall effectiveness.

3.2.1. Experimental Comparison of Clustering and Association Rule Mining Algorithm Combinations

In the canonical data identification stage, we cluster the initial sensitivity values to obtain the sensitive attribute set and the suspicious sensitive attribute set. Subsequently, we apply association rule mining algorithms to uncover strong association relationships among system attributes, which are then used to update both the sensitive attribute set and the corresponding sensitivity values.
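The support and confidence thresholds of Table 6 filter candidate rules in the usual way: support(A → B) is the fraction of transactions containing both A and B, and confidence is support(A ∪ B) / support(A). The Python sketch below is a brute-force enumeration of single-item rules, not Apriori or FP-growth, and how the paper turns attributes into transactions and items is not specified here, so the toy transactions are hypothetical. The thresholds are the System 1 values from Table 6.

```python
from itertools import permutations
from typing import FrozenSet, List, Sequence, Tuple

Rule = Tuple[str, str, float, float]  # (antecedent, consequent, support, confidence)


def mine_pairwise_rules(transactions: Sequence[FrozenSet[str]],
                        min_support: float, min_confidence: float) -> List[Rule]:
    """Brute-force single-item rules A -> B filtered by support and confidence."""
    n = len(transactions)
    items = sorted(set().union(*transactions))
    rules: List[Rule] = []
    for a, b in permutations(items, 2):
        count_a = sum(1 for t in transactions if a in t)
        count_ab = sum(1 for t in transactions if a in t and b in t)
        if count_a == 0:
            continue
        support = count_ab / n
        confidence = count_ab / count_a
        if support >= min_support and confidence >= min_confidence:
            rules.append((a, b, support, confidence))
    return rules


# Hypothetical transactions; thresholds are the System 1 values from Table 6.
transactions = [frozenset({"name", "phone"}), frozenset({"name", "phone", "addr"}),
                frozenset({"name"}), frozenset({"addr"})]
rules = mine_pairwise_rules(transactions, min_support=0.33, min_confidence=0.50)
```

Here only name → phone (support 0.5, confidence 2/3) and phone → name (support 0.5, confidence 1.0) survive; every rule involving addr has support 0.25, below the 0.33 threshold.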

Given the wide variety of clustering and association rule mining algorithms, we selected four clustering algorithms and three association rule mining algorithms for our experiments, aiming to identify the combination that yields the best identification performance.

To control variables, all experiments were conducted on the real-world railway dataset listed in Table 4, using fine-grained data division and clustering-based dynamic classification.

Figure 6 presents a heat map illustrating the results of combined experiments involving different clustering and association rule mining algorithms across the four systems. As shown in the figure, the primary variation in identification accuracy among the systems is attributed to the choice of clustering algorithm, while the impact of the association rule mining algorithm on overall accuracy is comparatively minor.

However, no single combination is consistently best, since each of the four clustering algorithms performs well in at least one of the four systems. To keep our method generalizable, we therefore adopt a voting-based strategy that mitigates the variation introduced by different algorithm combinations and improves the overall stability of the identification process.

Specifically, the voting mechanism operates on the classification results of the 12 algorithm combinations, and the final classification is the outcome that receives the most votes. The results of the voting strategy are shown in the first row of Figure 6. Although the voting-based results are not the best in every system, they balance the performance differences among algorithm combinations and yield more stable and reliable results overall. The next two experiments were therefore conducted with the voting-based strategy as the foundation.
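A minimal Python sketch of per-attribute majority voting over the results of several algorithm combinations follows. The text does not say how ties are broken; this sketch assumes ties go to the higher (more conservative) sensitivity level. The function names and the dictionary-per-combination representation are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Sequence


def majority_vote(levels: Sequence[int]) -> int:
    """Most frequent level; ties go to the higher level (an assumption)."""
    counts = Counter(levels)
    return max(counts, key=lambda level: (counts[level], level))


def vote_per_attribute(results: List[Dict[str, int]]) -> Dict[str, int]:
    """Combine per-combination results (one dict per algorithm pair) attribute by attribute."""
    if not results:
        raise ValueError("no results to vote on")
    attributes = results[0].keys()
    return {attr: majority_vote([r[attr] for r in results]) for attr in attributes}


# Three hypothetical combinations; the paper votes over 12.
combined = vote_per_attribute([{"a": 1, "b": 3}, {"a": 1, "b": 2}, {"a": 2, "b": 2}])
```

Sorting by the pair (count, level) keeps the tie rule explicit in one place, so switching to a different tie policy only requires changing the key.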

3.2.2. Experimental Validation of the Effectiveness of Fine-Grained Division

To validate the effectiveness of fine-grained division, we conducted comparative experiments using the dataset described in Table 4. The experiments compare the identification accuracy achieved without division with that achieved after fine-grained data division. Both experiments employed the clustering-based dynamic classification approach and the voting strategy.

Table 8 compares the experimental results obtained with and without fine-grained division of the structured data. After fine-grained division, accuracy improves in every system except System 4. On average, the proposed method improves accuracy by 2.05 percentage points across all systems, with the largest gain in System 2, where accuracy increases by 4.08 percentage points. These results indicate that our fine-grained division strategy improves the accuracy of structured sensitive data identification and classification. In System 4, both methods achieve the same accuracy, indicating that they correctly identify the same number of sensitive attributes. Analysis of the original data shows that, because our method is non-incremental, it replaces rather than builds upon the entropy-based results. Although it identifies some semi-canonical attributes that information entropy alone cannot, it also loses some attributes that the entropy-based method had originally identified correctly. These two effects offset each other, leaving the overall accuracy unchanged.
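The averages quoted above can be rechecked directly from Table 8. The short Python computation below uses only the Table 8 values and confirms that 2.05 is the mean per-system difference in percentage points and that 62.03% is the mean accuracy with fine-grained division, the figure later reported as the overall average accuracy.

```python
# Accuracies from Table 8 (percent): with vs. without fine-grained division.
with_division = {"System1": 58.33, "System2": 73.47, "System3": 58.51, "System4": 57.80}
without_division = {"System1": 57.41, "System2": 69.39, "System3": 55.32, "System4": 57.80}

gains = {s: round(with_division[s] - without_division[s], 2) for s in with_division}
mean_gain = round(sum(gains.values()) / len(gains), 2)
mean_accuracy = round(sum(with_division.values()) / len(with_division), 2)
print(gains)          # {'System1': 0.92, 'System2': 4.08, 'System3': 3.19, 'System4': 0.0}
print(mean_gain)      # 2.05
print(mean_accuracy)  # 62.03
```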

3.2.3. Experimental Validation of the Effectiveness of Clustering-Based Dynamic Classification

Similarly, to assess the effectiveness of our proposed clustering-based dynamic classification strategy, we carried out a comparative experiment on the dataset in Table 4. To ensure a controlled single-variable comparison, all experiments were conducted using the same voting strategy and fine-grained division approach.

Table 9 compares the dynamic and static classification strategies across the four systems. Each strategy has advantages depending on the system: the static strategy performs better in Systems 1 and 3, whereas the dynamic strategy performs better in Systems 2 and 4. Note, however, that the static results rely on carefully tuned threshold values, whereas the dynamic strategy requires no manual adjustment, which makes it more adaptable and scalable in practice. Moreover, in System 2 the static strategy achieves an accuracy of only 45.41%, whereas the dynamic strategy reaches 73.47%, an improvement of 28.06 percentage points. Although the dynamic strategy is less accurate than the static strategy in Systems 1 and 3, it still exceeds 58% accuracy in both, demonstrating its robustness across systems. Overall, the dynamic classification strategy offers greater advantages and is better suited to practical applications.

To illustrate how attribute sensitivity is used for classification, we take the data table “big_view_szoptimizeintersect” from System 3 as a representative example. It contains 11 attributes, which were finally classified as six Level 1 (non-sensitive), three Level 2 (weakly sensitive), and two Level 3 (strongly sensitive) attributes. The detailed sensitivity values and their associated classification results are provided in Table 10.

Note that the attribute “insectgdtype”, which denotes the type of intersecting work point, was classified as non-sensitive (Level 1) based on clustering analysis and association rule mining. This agrees with its status in the railway domain, where it is considered non-sensitive. Therefore, only the remaining 10 attributes were subjected to clustering-based dynamic classification and majority voting to determine their sensitivity levels. Together with “insectgdtype”, this step yielded seven Level 3, three Level 2, and one Level 1 attribute.

Subsequently, according to the expert-provided classification rules, all attributes whose names contain “id” or “date” were reclassified as Level 1. The rationale is that attributes in the “id” category are typically primary or foreign keys, which carry no sensitive content, while attributes in the “date” category are time-related and likewise do not involve sensitive information.
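The override step can be sketched in Python as a pass over the computed levels. The dictionary `CLASSIFICATION_RULES` mirrors the example entries of Table 2, but its representation and the function `apply_classification_rules` are hypothetical. The plain substring test follows the wording above (“attributes containing ‘id’”); note that it would also match names such as “width” or “valid”, so a stricter, token-based match (for example, splitting on “_”) may be preferable in practice.

```python
from typing import Dict, Mapping

# Hypothetical encoding of Table 2: field-name pattern -> forced sensitivity level.
CLASSIFICATION_RULES: Dict[str, int] = {"rock_level": 3, "date": 1, "id": 1}


def apply_classification_rules(levels: Mapping[str, int],
                               rules: Mapping[str, int] = CLASSIFICATION_RULES) -> Dict[str, int]:
    """Override computed levels for attributes whose name contains a rule pattern."""
    adjusted = dict(levels)
    for attr in adjusted:
        for pattern, level in rules.items():
            # Substring match, as described in the text; the first matching rule wins.
            if pattern in attr.lower():
                adjusted[attr] = level
                break
    return adjusted


computed = {"id": 3, "create_date": 3, "line_name": 2, "rock_level": 1}  # illustrative
final = apply_classification_rules(computed)
```

Applying the rules after clustering and voting keeps expert knowledge separate from the data-driven scores, so rules can be edited without rerunning the earlier stages.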

The labeling results are the expert-defined classifications used as the reference standard for evaluating the accuracy of the proposed method. As shown in Table 10, six of the 11 attributes were classified correctly, yielding an overall accuracy of 54.55%. Although this accuracy is not high, Table 10 shows that our method consistently assigns higher sensitivity levels than the expert annotations. This conservative classification strategy offers stronger protection for sensitive attributes.
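The accuracy metric used here is the share of attributes whose predicted level equals the expert label. A minimal Python sketch, assuming both label sets are dictionaries keyed by attribute name (a representation chosen for illustration), is:

```python
from typing import Mapping


def classification_accuracy(predicted: Mapping[str, int], expert: Mapping[str, int]) -> float:
    """Share of expert-labelled attributes whose predicted level equals the expert level."""
    if not expert:
        raise ValueError("no expert labels")
    # Attributes missing from `predicted` count as misclassified.
    correct = sum(1 for attr, level in expert.items() if predicted.get(attr) == level)
    return correct / len(expert)


# Toy labels: "a" and "c" agree, "b" disagrees, "d" has no prediction.
acc = classification_accuracy({"a": 1, "b": 3, "c": 3}, {"a": 1, "b": 2, "c": 3, "d": 1})
```

For the 11 attributes of Table 10, six matches give 6/11 ≈ 54.55%.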

Furthermore, in addition to the attribute-level classification described above, we have also implemented sensitivity classification at both the table and system levels to meet the requirements of multi-granularity classification in the railway domain. Specifically, we employ a threshold-based static classification method, in which table-level sensitivity is determined by weighting the classification results of its constituent attributes. Similarly, system-level sensitivity is calculated by aggregating and weighting the sensitivity scores derived from table-level classifications. Taking System 3 as an example, the results of multi-granularity sensitivity classification under this system are presented in Table 11. In the absence of expert-provided classification results at the table and system levels, these results can serve as a useful reference for domain experts.
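The text does not specify the weights or the static thresholds used for table- and system-level classification, so the following Python sketch uses equal weights and hypothetical cut-offs (1.5 and 2.5) purely for illustration. It shows the two steps named above: a weighted aggregate of child levels, followed by a fixed-threshold mapping to Levels 1 to 3.

```python
from typing import Sequence, Tuple

# Hypothetical cut-offs: score < 1.5 -> Level 1, < 2.5 -> Level 2, otherwise Level 3.
DEFAULT_THRESHOLDS: Tuple[float, float] = (1.5, 2.5)


def weighted_score(levels: Sequence[int], weights: Sequence[float]) -> float:
    """Weighted mean of child sensitivity levels (attributes or tables)."""
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(l * w for l, w in zip(levels, weights)) / total


def static_level(score: float, thresholds: Tuple[float, float] = DEFAULT_THRESHOLDS) -> int:
    """Map an aggregate score to Level 1-3 using fixed thresholds."""
    low, high = thresholds
    return 1 if score < low else 2 if score < high else 3


# Final attribute levels of the 11-attribute example table (six L1, three L2, two L3).
table_levels = [1] * 6 + [2] * 3 + [3] * 2
table_score = weighted_score(table_levels, [1.0] * len(table_levels))
table_level = static_level(table_score)
```

Under these assumed settings the example table scores 18/11 ≈ 1.64 and is placed at Level 2; this is an illustration, not the Table 11 result. A system-level score can be obtained by calling the same two functions on the table levels, for instance weighting each table by its number of attributes (another assumption).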

3.2.4. Experimental Validation of Generating Regular Expressions with Our Tool

To validate the effectiveness of our proposed RICIOE prompting framework, we designed five versions of the prompt. From Prompt 1 to Prompt 5, the prompt content becomes progressively more comprehensive, with Prompt 5 covering the complete RICIOE framework. The details of Prompt 5 are presented in Table 12. The results of the comparative experiments conducted on the dataset described in Table 5 are presented in Figure 7.

We observe that, as the prompt content becomes more detailed, the accuracy of the generated regular expressions improves; however, the generation efficiency tends to decrease. This trade-off aligns with our expectations. Based on the experimental results, we selected Prompt 5 as the optimal prompt for regular expression generation due to its superior accuracy and overall performance. Achieving an accuracy of 98.98%, the approach sufficiently satisfies the practical demands of regular expression generation in our context.

3.2.5. Time Complexity Analysis of Clustering and Association Rule Mining Algorithms

To assess the time cost of the clustering and association rule mining algorithms used in our framework, we performed additional analyses covering closure-based association rule mining and attribute sensitivity updating. The corresponding results are shown in Table 13.

In Table 13, “Cluster” denotes the configuration in which association rule mining is disabled. “Cluster-rules” denotes the experiments that incorporate association rule mining and attribute sensitivity updating. “Δ” indicates the resulting change in execution time and accuracy. “Time” denotes the total execution time from the calculation of initial attribute sensitivity based on information entropy to the final system-level classification.

Including association rule mining increases the average system execution time by 2.084 s. Classification accuracy declines only in System 3, while the other three systems show some improvement, and the average accuracy improves by 4.81%. Therefore, although association rule mining increases processing time, the increase remains within an acceptable range, and it contributes to higher accuracy.

Among all systems, System 3 incurs the highest additional time cost because it mines the largest number of association rules (22), compared with 10, 14, and 14 rules for Systems 1, 2, and 4, respectively. The slight decrease in accuracy for System 3 may be because experts labeled most of its attributes as non-sensitive: the association rules increase the number of attributes identified as sensitive, which in turn reduces accuracy.

4. Discussion

In Section 3.2, we conducted five experiments. Their results validate the effectiveness of each identification and classification module proposed for structured sensitive data, as well as that of the regular expression generation tool. The proposed method achieves an average accuracy of 62.03% across the four systems.

In addition, we have developed a domain-specific rule base for the railway domain to assist in identifying and classifying sensitive attributes. The rule base consists of two components: identification rules and classification rules.

Identification rules are employed to facilitate the identification of semi-canonical sensitive data. As shown in Experiment 2 (Section 3.2.2), combining identification rules with fine-grained division to identify semi-canonical data improves accuracy by 2.05 percentage points on average, validating the effectiveness of the rule base. The modest size of this improvement is attributed to the current incompleteness of the identification rule base. As the rule base continues to be refined and expanded, its effectiveness in identifying and classifying sensitive attributes is expected to improve accordingly.

For example, in System 4, the attribute “risk_info” in the table “major_risk” was labeled by the expert as Level 3 (strongly sensitive). However, because the data had undergone desensitization, the entries for this attribute in the dataset were empty, so it could not be identified using the identification rules. Based on the attribute name, we infer that it likely falls into the category of semi-canonical data. To verify the effectiveness of the identification rule, we manually constructed test data by randomly generating 800 entries containing the keyword “risk”. The calculated sensitivity score was 0.2, corresponding to sensitivity Level 3 (strongly sensitive). This suggests that classification accuracy can be further improved by allowing experts to add identification rules based on the characteristics of the data.

The primary role of classification rules is not to improve the accuracy of our method, but to adapt the identification and classification process to the practical requirements of sensitive data protection in the railway domain. For example, as shown in Table 10, our method assigns the attribute “id” in the table “big_view_szoptimizeintersect” of System 3 to Level 3. However, according to experts in the railway domain, “id” and field names containing “_id” serve as primary or foreign keys in data tables. These are not considered sensitive information and should therefore be assigned to Level 1. Accordingly, we configured the classification rules to set the sensitivity level of attributes containing “id” or “_id” to Level 1. Similarly, fields containing “date” are time-related and generally do not include sensitive information, so these attributes can also be reclassified by applying appropriate classification rules.

The rule base also supports expiry management for sensitive attribute labels, enabling expired sensitive attributes to be revoked in a timely manner. For example, the sensitive data “risk” is designated as sensitive until 31 December 2027; after that date, its sensitivity status is revoked. This time-based adjustment mechanism meets the practical need for time-limited protection of railway data. Furthermore, a front-end interface enables domain experts to manage the rule base by adding or modifying entries, supporting ongoing rule maintenance and refinement. The intermediate steps of sensitive data identification and classification can also be monitored in real time through this interface, allowing the classification results to be effectively controlled and verified.
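Expiry management reduces to filtering rule entries by date before they are applied. In the Python sketch below, the `RuleEntry` field names are hypothetical, and treating a rule as still valid on its expiration day (for example, through 31 December 2027) is an assumption about the boundary.

```python
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass(frozen=True)
class RuleEntry:
    """One rule-base entry; the field names are hypothetical."""
    pattern: str
    label: str
    expires: date


def active_rules(rules: List[RuleEntry], today: date) -> List[RuleEntry]:
    """Keep rules whose expiration date has not passed (valid through the expiry day)."""
    return [r for r in rules if today <= r.expires]


rules = [RuleEntry("risk", "Sensitive data", date(2027, 12, 31))]
```

Filtering at lookup time, rather than deleting entries, keeps expired rules available for auditing while ensuring they no longer affect classification.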

In summary, the rule base is a system module designed based on the practical needs of the railway domain to facilitate more effective management of sensitive railway data.

5. Conclusions

In this paper, we propose a sensitive data identification and automatic classification method for structured data in the railway domain. We classify structured data into canonical and semi-canonical types and compute attribute sensitivity using different methods tailored to each type. In addition, we propose a hybrid classification approach that combines dynamic and static methods. Specifically, a clustering-based dynamic classification strategy is employed to classify attribute-level sensitivity, while a threshold-based static classification is used to determine the sensitivity levels of data tables and systems. This integrated approach mitigates the subjectivity associated with manual threshold setting and provides greater flexibility and adaptability to diverse data scenarios. To enhance the accuracy of sensitive data identification and classification, we also developed a rule base specifically tailored to the railway domain, incorporating domain knowledge to guide the identification and classification processes more effectively. We conducted experiments on a real-world dataset from the railway domain, and the results confirm the effectiveness of the individual modules. Our method achieved an average accuracy of 62.03% in identifying and classifying sensitive data. Furthermore, we evaluated the performance of our designed prompts for generating regular expressions. Among them, Prompt 5 achieved an accuracy of 98.98%, underscoring the effectiveness of our prompt design in supporting precise and reliable regular expression generation.

Nevertheless, our approach has several limitations. The main one lies in the discrepancy between information entropy-based sensitivity calculations and expert-based sensitivity judgments. While entropy measures sensitivity based on the amount of information contained in an attribute, experts often assess sensitivity based on the attribute’s semantic meaning. Consequently, accurately identifying and classifying sensitive attributes remains a significant challenge. Additionally, the construction of the rule base depends heavily on the expertise of professionals in the railway domain, and the current rule base remains incomplete. Addressing these limitations is a direction for future work.

6. Patents

Building on the contributions of this work, we have filed a patent application entitled “Method and Apparatus for Identifying and Automatically Classifying Sensitive Data in Structured Data”, which is currently pending approval.

Author Contributions

Conceptualization, Y.J. and H.C.; methodology, Y.J. and H.C.; software: Y.J. and H.C.; validation: Y.J. and H.C.; formal analysis, Y.J. and H.C.; data curation, Y.W. and Q.L.; writing—original draft preparation, Y.J. and H.C.; writing—review and editing, Y.J.; project administration, R.M. and Q.L.; funding acquisition, Y.W. and Q.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the China Academy of Railway Sciences Corporation Limited (Grant No. 2023YJ356).

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors upon request due to privacy concerns.

Acknowledgments

We are grateful for the financial support from the China Academy of Railway Sciences Corporation Limited. We would also like to thank all practitioners who participated in the focus group discussions, and we thank the anonymous reviewers for their constructive comments to improve the paper.

Conflicts of Interest

Authors Yanhua Wu and Qingxin Li were employed by the China Academy of Railway Sciences Corporation Limited. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest. Authors Yage Jin, Hongming Chen, and Rui Ma were partially funded through this project.

Correction Statement

This article has been republished with a minor correction to the Funding statement. This change does not affect the scientific content of the article.

References

- Wei, C.; Yang, Y.; Li, Q. Risk analysis and countermeasures of railway network data security. Railw. Comput. Appl. 2022, 31, 33–36.

- Wang, Y. Research and Implementation of Structured Privacy Data Desensitization System. Master’s Thesis, Harbin Institute of Technology, Harbin, China, 2019.

- Xie, M.; Peng, C.; Wu, R.; Ding, H.; Liu, B. Privacy and data utility metric model for structured data. Appl. Res. Comput. 2020, 37, 1465–1469, 1473.

- He, W. Research on Intelligent Recognition Algorithm and Adaptive Protection Model of Sensitive Data. Master’s Thesis, Guizhou University, Guiyang, China, 2020.

- Li, Q. Railway Data Security Governance System and Privacy Computing Technology Research. Master’s Thesis, China Academy of Railway Sciences, Beijing, China, 2023.

- Chen, J. Research and Application of Sensitive Attribute Recognition Method for Data Publishing. Master’s Thesis, Chengdu University of Technology, Chengdu, China, 2020.

- Cong, K.; Li, T.; Li, B.; Gao, Z.; Xu, Y.; Gao, F. KGDetector: Detecting Chinese Sensitive Information via Knowledge Graph-Enhanced BERT. Secur. Commun. Netw. 2022, 2022, 4656837.

- Kužina, V.; Petric, A.M.; Barišić, M.; Jović, A. CASSED: Context-based Approach for Structured Sensitive Data Detection. Expert Syst. Appl. 2023, 223, 119924.

- Li, M.; Liu, J.; Yang, Y. Automated Identification of Sensitive Financial Data Based on the Topic Analysis. Future Internet 2024, 16, 55.

- Zu, L.; Qi, W.; Li, H.; Men, X.; Lu, Z.; Ye, J.; Zhang, L. UP-SDCG: A Method of Sensitive Data Classification for Collaborative Edge Computing in Financial Cloud Environment. Future Internet 2024, 16, 102.

- Jiang, W.; Liu, Z.; Xie, S.; Fu, Y.; Li, Q. Intelligent identification and classification and grading method of railway sensitive data based on hierarchical topic analysis. Railw. Comput. Appl. 2024, 33, 7–12.

- Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423.

- Jaeger, A.; Banks, D. Cluster analysis: A modern statistical review. Wiley Interdiscip. Rev. Comput. Stat. 2023, 15, e1597.

- Han, J.; Kamber, M. Data Mining: Concepts and Techniques, 2nd ed.; Morgan Kaufmann: San Francisco, CA, USA, 2006.

- MacQueen, J. Some methods for classification and analysis of multivariate observations. In Proceedings of the Fifth Berkeley Symposium on Mathematical Statistics and Probability, Volume 1: Statistics; University of California Press: Berkeley, CA, USA, 1967; Volume 5, pp. 281–298.

- Song, H.; Lee, J.G.; Han, W.S. PAMAE: Parallel k-medoids clustering with high accuracy and efficiency. In Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Halifax, NS, Canada, 13–17 August 2017; pp. 1087–1096.

- Kaufman, L.; Rousseeuw, P.J. Finding Groups in Data: An Introduction to Cluster Analysis; John Wiley & Sons: Hoboken, NJ, USA, 1990.

- Zhang, T.; Ramakrishnan, R.; Livny, M. BIRCH: An efficient data clustering method for very large databases. ACM SIGMOD Rec. 1996, 25, 103–114.

- Huang, L.; Chen, H.; Wang, X.; Chen, G. A fast algorithm for mining association rules. J. Comput. Sci. Technol. 2000, 15, 619–624.

- Borgelt, C. An Implementation of the FP-growth Algorithm. In Proceedings of the 1st International Workshop on Open Source Data Mining: Frequent Pattern Mining Implementations, Chicago, IL, USA, 21 August 2005; pp. 1–5.

- Han, J.; Pei, J.; Kamber, M. Data Mining: Concepts and Techniques; China Machine Press: Beijing, China, 2012; Volume 2.

- Kužina, V.; Vušak, E.; Jović, A. Methods for automatic sensitive data detection in large datasets: A review. In Proceedings of the 2021 44th International Convention on Information, Communication and Electronic Technology (MIPRO), Opatija, Croatia, 27 September–1 October 2021; pp. 187–192.

- Gao, L.; Zhao, Z.; Lin, Y.; Zhai, Z. Research on Data Classification and Grading Methods Based on the Data Security Law. Inf. Secur. Res. 2021, 7, 933–940.

- White, J.; Fu, Q.; Hays, S.; Sandborn, M.; Olea, C.; Gilbert, H.; Elnashar, A.; Spencer-Smith, J.; Schmidt, D.C. A prompt pattern catalog to enhance prompt engineering with ChatGPT. arXiv 2023, arXiv:2302.11382.

- DAIR.AI. Prompt Engineering Guide. 2024. Available online: https://www.promptingguide.ai/introduction/elements (accessed on 27 June 2025).

- Nigh, M. ChatGPT3-Free-Prompt-List: A Free Guide for Learning to Create ChatGPT3 Prompts. 2023. Available online: https://github.com/mattnigh/ChatGPT-Free-Prompt-List/blob/main/dist/markdown/prompting-guide.md (accessed on 27 June 2025).

- Wang, Y.; Yao, Q.; Kwok, J.T.; Ni, L.M. Generalizing from a few examples: A survey on few-shot learning. ACM Comput. Surv. 2020, 53, 1–34.

- Liu, M.; Ma, X.; Liu, Y.; Wang, J.; Yang, M. Data service platform oriented to enhance capabilities of digital railway infrastructure. Railw. Comput. Appl. 2025, 34, 56–62.

Figure 1.

Structured sensitive data identification and classification framework diagram.


Figure 2.

Canonical data identification diagram.


Figure 3.

Rule Base construction.


Figure 4.

The framework of the regular expression generation tool.


Figure 5.

Heat map of experimental results for L and PT threshold combinations.


Figure 6.

Comparison of different methods.


Figure 7.

The experimental results of GPT-3.5 generating regular expressions under different prompts.


Table 1.

Illustrative examples of identification rule base.


| Regular Expression or Keyword | Type | Expiration Date |
|---|---|---|
| `\d{17}(\d\|x\|X)` | I.D. number | 31 December 2027 |
| `1[3-9]\d{9}` | Telephone number | 31 December 2027 |
| `\b(?:[0-9]{1,3}\.){3}[0-9]{1,3}\b` | IP address | 31 December 2027 |
| `[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}` | E-mail address | 31 December 2027 |
| `risk` | Sensitive data | 31 December 2027 |

Table 2.

Illustrative examples of classification rule base.


| Field Name | Sensitivity Level | Expiration Date |
|---|---|---|
| rock_level | 3 | 31 December 2027 |
| date | 1 | 31 December 2027 |
| id | 1 | 31 December 2027 |

Table 3.

Experimental environment.


| Component | Specification |
|---|---|
| CPU | Intel Xeon W-2255 |
| OS | Ubuntu 22.04 |
| GPU | NVIDIA GeForce RTX 3090 |
| RAM | 64 GB |
| Software environment | Python 3.12.1, PyTorch 2.0 |

Table 4.

Sensitive data identification and classification dataset.

| System Name | Number of Tables | Number of Attributes |
|---|---|---|
| System1 | 8 | 108 |
| System2 | 8 | 196 |
| System3 | 5 | 94 |
| System4 | 14 | 173 |

Table 5.

Regular expression generation dataset.

| Table | Number of Attributes |
|---|---|
| 2018-2019_Daily_Attendance_2024 | 6 |
| abnb_stock_data | 7 |
| heart_failure_clinical_records_ | 13 |
| liver_cirrhosis | 19 |
| melb_data | 19 |
| nvda_stock_data | 7 |
| salaries | 11 |
| Student_Satisfaction_Survey | 9 |
| cancer_reg | 33 |
| DataScience_salaries_2024 | 11 |
| Housing | 21 |
| loan_data | 14 |
| shipping | 12 |
| Student Attitude and Behavior | 19 |
| supermarket_sales | 17 |

Table 6.

Support and confidence threshold settings.

| Threshold | System1 | System2 | System3 | System4 |
|---|---|---|---|---|
| Support | 0.33 | 0.62 | 0.66 | 0.33 |
| Confidence | 0.50 | 0.75 | 0.75 | 0.50 |
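Table 6 lists per-system thresholds for support and confidence. The sketch below applies the standard definitions of these measures: support(X) is the fraction of transactions containing itemset X, and confidence(X → Y) = support(X ∪ Y) / support(X). It only enumerates single-item rules, and the transactions and item names are hypothetical; this section does not say what the items are in the authors' setting, so the sketch should not be read as their mining procedure.

```python
from itertools import combinations


def support(itemset, transactions):
    """Fraction of transactions that contain every item in `itemset`."""
    itemset = frozenset(itemset)
    return sum(itemset <= t for t in transactions) / len(transactions)


def confidence(antecedent, consequent, transactions):
    """support(antecedent ∪ consequent) / support(antecedent)."""
    base = support(antecedent, transactions)
    if base == 0:
        return 0.0
    return support(set(antecedent) | set(consequent), transactions) / base


def mine_pair_rules(transactions, min_support, min_confidence):
    """Return rules x -> y (single items) that meet both thresholds."""
    transactions = [frozenset(t) for t in transactions]
    items = sorted(set().union(*transactions))
    rules = []
    for a, b in combinations(items, 2):
        pair_support = support({a, b}, transactions)
        if pair_support < min_support:
            continue  # prune infrequent pairs before computing confidence
        for x, y in ((a, b), (b, a)):
            c = confidence({x}, {y}, transactions)
            if c >= min_confidence:
                rules.append((x, y, pair_support, c))
    return rules


# Hypothetical transactions, mined with the System1 thresholds from Table 6.
transactions = [{"id", "date"}, {"id", "date"}, {"id", "name"}, {"level"}]
for x, y, s, c in mine_pair_rules(transactions, min_support=0.33, min_confidence=0.50):
    print(f"{x} -> {y}: support={s:.2f}, confidence={c:.2f}")
```

With these inputs, only `date -> id` (confidence 1.00) and `id -> date` (confidence 0.67) are kept; the pair {id, name} is pruned because its support, 0.25, falls below 0.33.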

Table 7.

Additional parameter settings: cluster_k is the number of clusters, chosen so that the clustering algorithm performs binary classification; cluster_radius is the clustering radius; sample_N, L, and PT are the parameters of the semi-canonical data filter.

| Parameter | cluster_k | cluster_radius | sample_N | L | PT |
|---|---|---|---|---|---|
| Value | 2 | 0.3 | 100 | 6 | 0.20 |

Table 8.

Experimental results of fine-grained data division.

| | System1 | System2 | System3 | System4 |
|---|---|---|---|---|
| Canonical and semi-canonical data | 58.33% | 73.47% | 58.51% | 57.80% |
| Structured data | 57.41% | 69.39% | 55.32% | 57.80% |
| Effect | | | | 0.00% |

Table 9.

Effectiveness of combined dynamic and static classification strategies.

| | System1 | System2 | System3 | System4 |
|---|---|---|---|---|
| Dynamic classification strategy | 58.33% | 73.47% | 58.51% | 57.80% |
| Static classification strategy | 66.67% | 45.41% | 72.34% | 49.13% |
| Effect | | | | |

Table 10.

Illustrative example of table classification.

| Attribute Name | Final Sensitivity | Cluster+Voting Classification | Rules Adjustments | Labeling Results |
|---|---|---|---|---|
| insectgdtype | 0.9509 | 1 | 1 | 1 |
| useflag | 0.9435 | 2 | 2 | 1 |
| intersectmsprefix | 0.8775 | 2 | 2 | 1 |
| categoryline | 0.7835 | 2 | 2 | 1 |
| intersectms | 0.0000 | 3 | 3 | 2 |
| intersectlxms | 0.2568 | 3 | 3 | 2 |
| operate_date | 0.0000 | 3 | 1 | 1 |
| delete_date | 0.06119 | 3 | 1 | 1 |
| htdrgd_id | 0.06119 | 3 | 1 | 1 |
| intersectrgd_id | 0.06119 | 3 | 1 | 1 |
| id | 0.06119 | 3 | 1 | 1 |
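In Table 10, the attributes named `id` or ending in `_date` or `_id` move from level 3 after clustering and voting to level 1 after rule adjustment, which agrees with the `date` and `id` entries of Table 2. The Python sketch below reproduces that column under an assumed matching policy: a Table 2 rule fires when its field name equals the whole attribute name or one of its underscore-separated tokens, and an unexpired rule overrides the clustering result. This policy is not stated in this section, and the function name `adjust_level` is hypothetical.

```python
from datetime import date

# Classification rules transcribed from Table 2: field name -> (level, expiration date).
CLASSIFICATION_RULES = {
    "rock_level": (3, date(2027, 12, 31)),
    "date": (1, date(2027, 12, 31)),
    "id": (1, date(2027, 12, 31)),
}


def adjust_level(attribute, cluster_level, today, rules=CLASSIFICATION_RULES):
    """Return the rule level for `attribute` if an unexpired rule matches,
    otherwise keep the level produced by clustering and voting.

    Assumed (hypothetical) matching policy: the whole name is tried first,
    then each underscore-separated token.
    """
    for name in [attribute, *attribute.split("_")]:
        if name in rules:
            level, expires = rules[name]
            if expires >= today:
                return level
    return cluster_level


# (attribute, Cluster+Voting Classification) pairs from Table 10.
TABLE_10 = [
    ("insectgdtype", 1), ("useflag", 2), ("intersectmsprefix", 2),
    ("categoryline", 2), ("intersectms", 3), ("intersectlxms", 3),
    ("operate_date", 3), ("delete_date", 3), ("htdrgd_id", 3),
    ("intersectrgd_id", 3), ("id", 3),
]

adjusted = [adjust_level(a, lvl, today=date(2025, 6, 1)) for a, lvl in TABLE_10]
print(adjusted)
```

Token matching rather than plain substring matching is a deliberate choice in this sketch: a substring test for `id` would also fire on unrelated names such as `valid_flag`.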

Table 11.

Classification results for System3 and its tables. Sensitivity levels decrease from 3 to 1. The last column gives the number of attributes (for each table) or tables (for the system) assigned to each sensitivity level.

| Name | Level | Level1:Level2:Level3 |
|---|---|---|
| big_view_szoptimizedayreport | 2 | 8:1:1 |
| big_view_szoptimizegongdian | 2 | 23:14:5 |
| big_view_szoptimizeintersect | 2 | 6:3:2 |
| big_view_szoptimizetunnel | 3 | 6:6:6 |
| big_view_szoptimizeweiyan | 2 | 5:6:2 |
| System3 | 3 | 0:4:1 |
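The last column of Table 11 is a count of items per sensitivity level. The short Python check below rebuilds the System3 row from the five table-level labels and confirms that the per-table attribute counts add up to 94, the attribute total reported for System3 in Table 4. It does not try to reproduce how a table's own level is derived from its attribute counts, which this section does not specify; `level_ratio` is a hypothetical helper.

```python
from collections import Counter


def level_ratio(levels, max_level=3):
    """Format per-level counts as 'Level1:Level2:Level3'."""
    counts = Counter(levels)
    return ":".join(str(counts[k]) for k in range(1, max_level + 1))


# Table levels and attribute ratios for System3, transcribed from Table 11.
TABLES = {
    "big_view_szoptimizedayreport": (2, "8:1:1"),
    "big_view_szoptimizegongdian": (2, "23:14:5"),
    "big_view_szoptimizeintersect": (2, "6:3:2"),
    "big_view_szoptimizetunnel": (3, "6:6:6"),
    "big_view_szoptimizeweiyan": (2, "5:6:2"),
}

system_ratio = level_ratio(level for level, _ in TABLES.values())
attribute_total = sum(
    int(n) for _, ratio in TABLES.values() for n in ratio.split(":")
)
print(system_ratio, attribute_total)
```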

Table 12.

Example of RICIOE Prompt 5.

| Component | Prompt Text |
|---|---|
| Role | You are a regular expression generation tool. |
| Instruction | Please generate a regular expression that identifies the following keywords and outputs them in the specified output format. |
| Context | These keywords are used to identify example data. |
| Input Data | The keywords and sample data are as follows: |
| | Keyword: Time |
| | Example data: |
| | 2023-01-12 06:00:01 |
| | 2019-11-01 16:24:00 |
| | 1999-10-07 12:31:04 |
| | 2022-01-12 19:21:59 |
| | 2035-10-01 00:00:00 |
| Output Indicator | The output is formatted as: |
| | Keyword: |
| | Regular expression: [ Starting with ^ and ending with $ ] |
| Example | You are a regular expression generation tool. Please generate a regular expression that identifies the following keywords and outputs them in the specified output format. These keywords are used to identify example data. The keywords and sample data are as follows: |
| | Keyword: Gender |
| | Example data: |
| | Male |
| | Female |
| | Male |
| | Female |
| | Female |
| | Output: |
| | Keyword: Gender |
| | Regular expression: |
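The prompt in Table 12 is assembled from six components (Role, Instruction, Context, Input Data, Output Indicator, Example). The Python sketch below shows one way such a prompt could be built and how a model's answer might be checked against the sample data before the expression enters the rule base. The validation step, the helper names `build_prompt` and `validate_regex`, and the candidate expression are assumptions for illustration: this section does not describe how generated expressions are checked, and no model is called here. The Example component is omitted for brevity.

```python
import re

ROLE = "You are a regular expression generation tool."
INSTRUCTION = ("Please generate a regular expression that identifies the following "
               "keywords and outputs them in the specified output format.")
CONTEXT = "These keywords are used to identify example data."


def build_prompt(keyword, samples):
    """Join the Role, Instruction, Context, Input Data and Output Indicator parts."""
    parts = [
        ROLE, INSTRUCTION, CONTEXT,
        "The keywords and sample data are as follows:",
        f"Keyword: {keyword}",
        "Example data:",
        *samples,
        "The output is formatted as:",
        "Keyword:",
        "Regular expression: [ Starting with ^ and ending with $ ]",
    ]
    return "\n".join(parts)


def validate_regex(candidate, samples):
    """Accept a generated pattern only if it is anchored, compiles,
    and fully matches every sample value (hypothetical acceptance test)."""
    if not (candidate.startswith("^") and candidate.endswith("$")):
        return False
    try:
        pattern = re.compile(candidate)
    except re.error:
        return False
    return all(pattern.fullmatch(s) is not None for s in samples)


samples = ["2023-01-12 06:00:01", "2019-11-01 16:24:00", "1999-10-07 12:31:04",
           "2022-01-12 19:21:59", "2035-10-01 00:00:00"]
prompt = build_prompt("Time", samples)
candidate = r"^\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}$"  # hypothetical model answer
print(validate_regex(candidate, samples))
```

Checking a candidate against the very samples it was generated from only rules out obviously wrong answers; it does not show that the expression generalizes to unseen values.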

Table 13.

Time complexity analysis of cluster analysis, association rule mining, and mutual information computation. ACC denotes accuracy; Δ is the difference between the Cluster-Rules and Cluster results.

| System Name | Cluster Time | Cluster ACC | Cluster-Rules Time | Cluster-Rules ACC | Δ Time | Δ ACC |
|---|---|---|---|---|---|---|
| System1 | 2.7596 s | 57.40% | 3.5124 s | 58.33% | 0.7528 s | 0.93% |
| System2 | 3.4349 s | 58.16% | 4.2741 s | 73.47% | 0.8392 s | 15.31% |
| System3 | 2.6426 s | 59.57% | 8.1265 s | 58.51% | 5.4839 s | −1.06% |
| System4 | 4.1840 s | 53.75% | 5.4448 s | 57.80% | 1.2608 s | 4.05% |
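The Δ columns of Table 13 are the Cluster-Rules values minus the Cluster values. The Python check below recomputes them from the other columns and reproduces every Δ entry, including the accuracy drop of 1.06 percentage points for System3; the function name `deltas` is illustrative only.

```python
# (Cluster time [s], Cluster ACC [%], Cluster-Rules time [s], Cluster-Rules ACC [%])
TABLE_13 = {
    "System1": (2.7596, 57.40, 3.5124, 58.33),
    "System2": (3.4349, 58.16, 4.2741, 73.47),
    "System3": (2.6426, 59.57, 8.1265, 58.51),
    "System4": (4.1840, 53.75, 5.4448, 57.80),
}


def deltas(row):
    """Return (Δ time in s, Δ ACC in percentage points), rounded as in the table."""
    t_cluster, acc_cluster, t_rules, acc_rules = row
    return round(t_rules - t_cluster, 4), round(acc_rules - acc_cluster, 2)


for system, row in TABLE_13.items():
    dt, dacc = deltas(row)
    print(f"{system}: Δ time = {dt:.4f} s, Δ ACC = {dacc:+.2f}%")
```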

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).