A Systematic Review of Cyber Range Taxonomies: Trends, Gaps, and a Proposed Taxonomy

Abstract

1. Introduction

1.1. Contextualizing Cyber Ranges

1.2. Overview and Limitations of Existing Taxonomies

1.3. Contribution of This Paper

1.4. Outline of This Paper

2. Materials and Methods

2.1. Research Objectives and Research Questions

- RO1: Systematically identify and analyze existing taxonomies and systematic literature reviews (SLRs) on cyber ranges published between 2014 and 2024.

- RO2: Evaluate convergences and divergences within existing cyber range taxonomies.

- RO3: Assess the impact of the recent literature (2019 to 2024) on existing cyber range taxonomies, determining whether an updated taxonomy is needed.

- RQ1: What existing taxonomies and systematic literature reviews (SLRs) on cyber ranges were conducted between 2014 and 2024?

- RQ2: Where do existing cyber range taxonomies converge and diverge?

- RQ3: What influence have recent papers (2019 to 2024) had on current cyber range taxonomies, and is there a need for an updated taxonomy?

2.2. Methodological Framework

- Mutually exclusive: No single entity could have two different characteristics within the same dimension;

- Collectively exhaustive: Every entity needed to fit at least one characteristic in each dimension.
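The two conditions above can be checked mechanically once entities have been mapped to characteristics. The following Python sketch is illustrative only: the function name `check_dimension`, the input format, and the example entities are assumptions introduced here, not part of the method the paper describes.

```python
def check_dimension(assignments, characteristics):
    """Return {entity: problem} for entities that break either condition.

    assignments: dict mapping each entity to the set of characteristics
    assigned to it within ONE dimension (hypothetical input format).
    characteristics: set of characteristics the dimension defines.
    """
    problems = {}
    for entity, chosen in assignments.items():
        unknown = chosen - characteristics
        if unknown:
            problems[entity] = "unknown characteristic(s): " + ", ".join(sorted(unknown))
        elif not chosen:
            # No characteristic fits: the dimension is not exhaustive.
            problems[entity] = "not collectively exhaustive: no characteristic fits"
        elif len(chosen) > 1:
            # Several characteristics fit: they are not exclusive.
            problems[entity] = "not mutually exclusive: " + ", ".join(sorted(chosen))
    return problems


# Hypothetical example data for a single dimension.
environment_types = {"Simulation", "Emulation", "Hybrid"}
assignments = {
    "range_a": {"Emulation"},
    "range_b": {"Simulation", "Emulation"},
    "range_c": set(),
}
problems = check_dimension(assignments, environment_types)
for entity, problem in sorted(problems.items()):
    print(entity, "->", problem)
```

An entity that appears in the result signals that the dimension's characteristics may need to be split, merged, or extended before the taxonomy satisfies both conditions.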

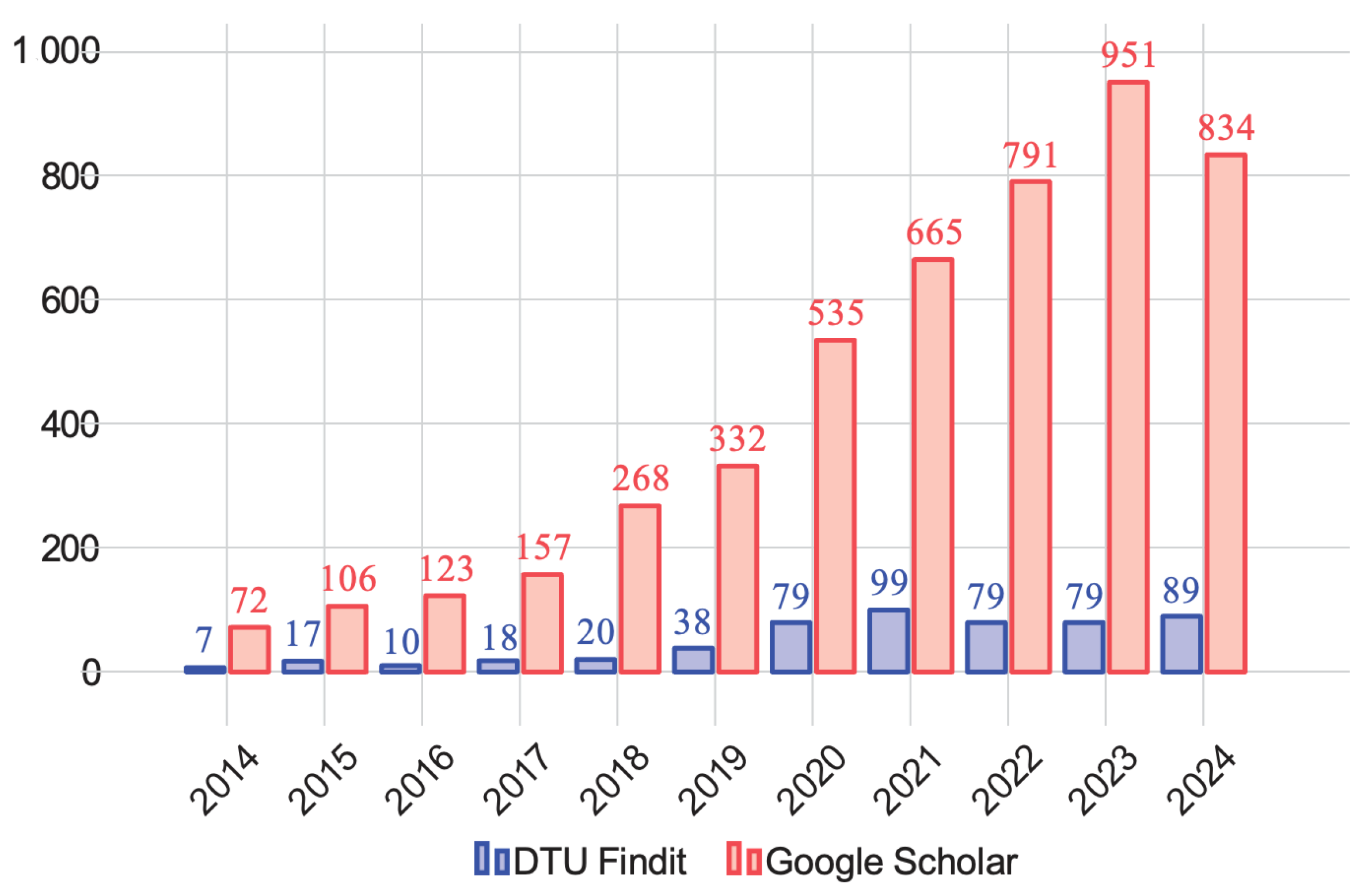

2.3. Search Strategy and Information Sources

2.4. Eligibility Criteria

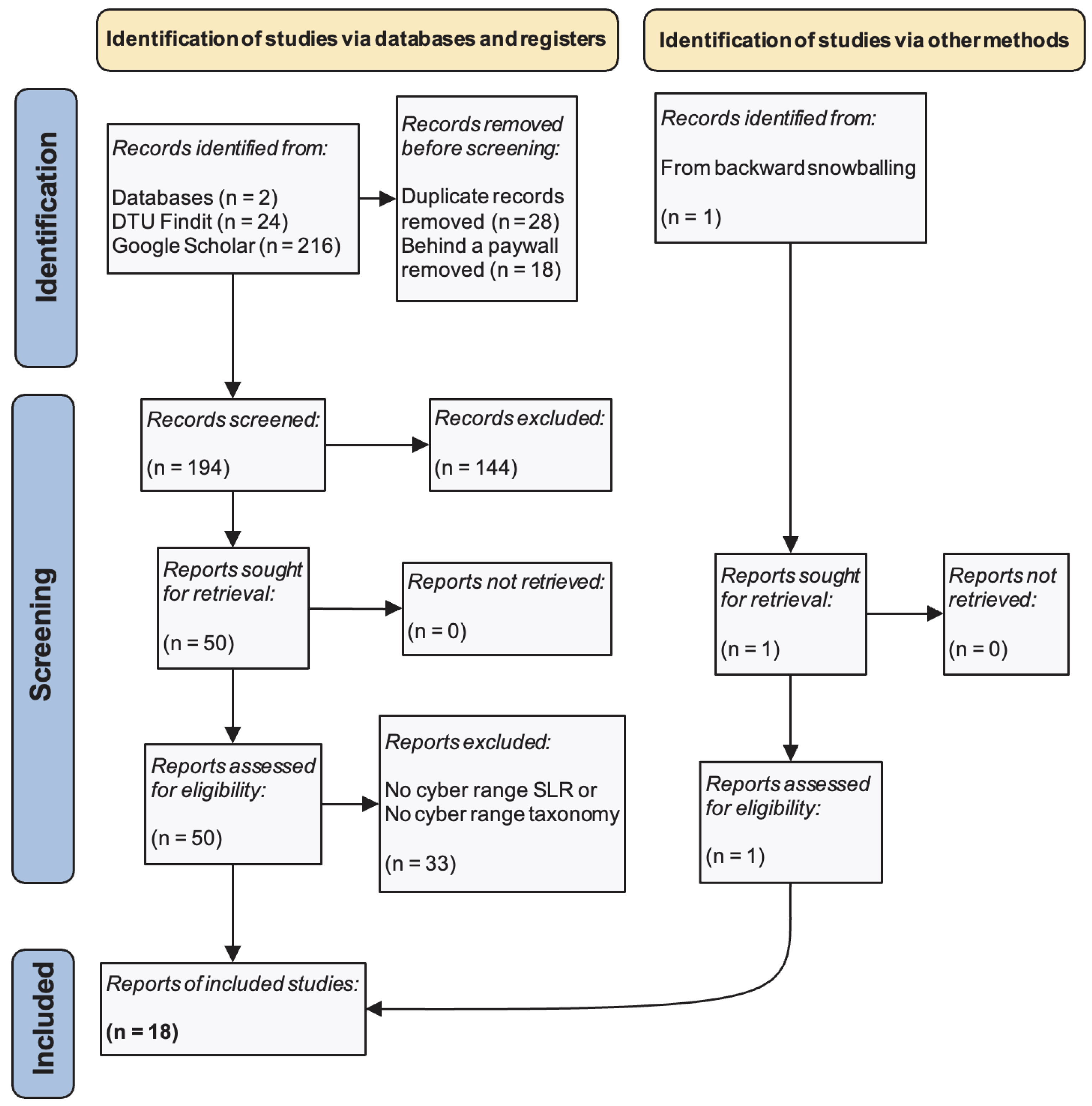

2.5. Selection Process

2.6. Risk and Bias Assessment

2.7. Use of Generative AI

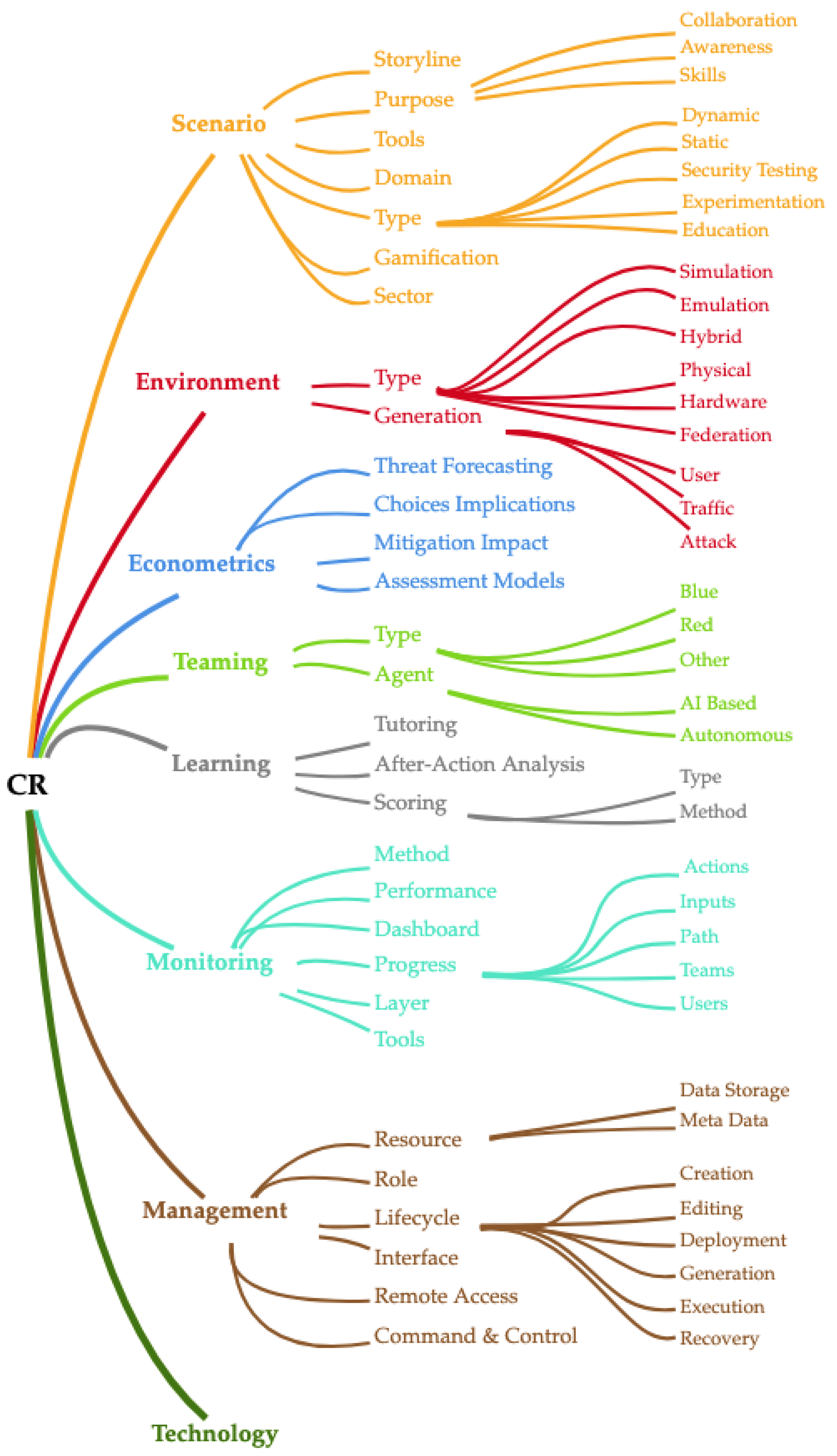

3. Proposed Taxonomy

3.1. Phase I: Taxonomy Reviews, Systematic Literature Reviews, and Baseline Taxonomy

3.1.1. Taxonomy Reviews

- Scenario: Describes the life-cycle of the cybersecurity scenario (creation, generation, editing, and execution).

- Monitoring: Covers the methods, dashboards, and tools used to track cyber range data or overall system health.

- Learning: Defines how a cyber range supports tutoring and after-action analysis (including scoring).

- Management: Concerns range management roles, interfaces, command and control, etc.

- Teaming: Describes different teams (e.g., Red, Blue) that might participate in an exercise.

- Environment: Focuses on the type of environment (e.g., physical, virtual) in which a cyber range is run, along with any event-generation tools.

- Type: Elevated to a dimension (Yamin et al. [11] treated it as a characteristic of Environment).

- Econometrics: Added as a new dimension, focusing on the economic consequences of participant actions (e.g., cost impacts during scenarios).

- Recovery: Introduced as its own dimension, ensuring that policies, patches, and post-incident measures were up to date.

- Testbeds: Added as a distinct dimension, effectively creating a mini-taxonomy within the broader taxonomy to capture how testbeds fit into a cyber range.

- Audience: Split into Sector, Purpose, Proficiency Level, and Target Audience.

- Management

- User Interface/API Gateway: Interfaces or APIs for easier interaction with management functions.

- Exercise Design: Tools that facilitate building complex, heterogeneous infrastructures and produce key scenario events.

- Competency Management: Conducts skill-gap analyses and user profiling and designs learning paths.

- Training Environment

- Scenario: Emphasizes the software/hardware needed to run a scenario; includes physical systems like IoT devices.

- Support Services: Enhances realism by interacting directly with the Scenario dimension.

- Toolset: Attack and defense tools for both Red and Blue teams.

3.1.2. Systematic Literature Reviews

3.1.3. Observed Trends and Gaps

3.1.4. Baseline Taxonomy

- Scenario;

- Environment;

- Econometrics;

- Teaming;

- Learning;

- Monitoring;

- Management;

- Technology.

Scenario

Environment

Econometrics

Teaming

Learning

Monitoring

Management

Technology

3.2. Phase II: Refined Taxonomy

Scenario

Environment

Monitoring

Management

Technology

- Connection Technologies: Technologies such as VPN, TCP/IP, and peer-to-peer (P2P) links enable secure communication between components and serve as foundational infrastructure for both stand-alone and federated cyber ranges.

- Virtualization Layer: This characteristic encompasses the different methods used to simulate or emulate computing environments [4,84]. We distinguished between Traditional Virtual Machines (VMs), Containerization, and Cloud Virtualization (including public, private, and hybrid models):

- Hybrid Cloud: Combines the scalability of public infrastructure with the control and security constraints of a private cloud [35].

- Traditional Virtualization: Involves hypervisor-based virtual machines that allow multiple isolated operating systems to run concurrently on a single physical machine [79].

- System Architecture: This characteristic reflects how the components of a cyber range are structurally organized. Across the literature, three primary architectural models were discussed: Monolithic, Microservice-based, and Federation-based:

- Federation: Federation serves both as an architectural style and a technical configuration. In the context of cyber ranges, it typically involves linking multiple geographically or organizationally distinct systems. Virág et al. [92] outlined four connectivity models: (1) Layer 1 physical interconnection, (2) Layer 2 data-link interconnection, (3) Software-Defined WAN (SD-WAN), and (4) Layer 3 logical interconnection. The ECHO H2020 project identified the last of these, specifically a client-to-server VPN model, as the most scalable and practical solution for federated cyber ranges.

- Monolithic: This design consolidates all services into a single deployable unit. While this simplifies maintenance for small-scale deployments, it becomes less efficient at scale [95].

- Artificial Intelligence: AI technologies are gradually being integrated into cyber ranges, particularly in the domains of automation, threat detection, and adaptive learning.

- Large Language Models (LLMs): As part of a rapidly evolving field, LLMs are beginning to be used for log summarization and scenario narration.

- Security Tools: These include both offensive and defensive tools, such as intrusion detection systems (e.g., Snort), deep packet inspection modules, and honeypots. These tools are typically made available to participants as part of exercise scenarios [97].

- Monitoring Tools: This characteristic was moved from Monitoring to Technology because these technologies track both the technical health of the cyber range and user activity during exercises. Tools such as Zeek and ELK provide visibility into network traffic, system logs, and user behavior, supporting real-time scoring and after-action analysis [94].

- Database: This characteristic encompasses the storage solutions used for scenario data, telemetry, vulnerability records, and user performance logs. Depending on the use case, relational, document-based, or vector databases may be employed [99].

- Orchestration Tools: Orchestration tools automate the deployment, configuration, and tear-down of cyber-range scenarios. TOSCA and CRACK support declarative infrastructure-as-code approaches, while Kubernetes orchestrates container-based environments. These tools are crucial for enabling repeatability and scalability in complex training scenarios [96,98].

3.3. Phase III: Proposed Taxonomy

- Conceptual necessity: A characteristic was retained only if it captured variability demonstrably discussed in the literature.

- Non-redundancy: Overlap between dimensions was eliminated wherever possible.

- Longevity: Items that risked rapid obsolescence (e.g., specific operating systems) were omitted in favor of higher-level abstractions.

| Dimension | Characteristic | Sub-characteristics |
|---|---|---|
| | Type | Static; Dynamic (AI Based, Pre-defined) |
| | Storyline | |
| | Purpose | Collaboration; Awareness; Skills; Experimentation; Security Testing |
| | CRX | Marketplace; Level; Lifecycle (Creation; Editing; Deployment; Generation; Recovery; Execution) |
| | Domain | |
| Scenario | Gamification | |
| | Sector | |
| Environment | Type | Simulation; Emulation; Hybrid; Cyber–Physical; Hardware; Federation |
| | Generation | User; Traffic; Attack; Vulnerability Injection |
| Teaming | Type | Blue; Red; Other |
| | Agent | AI-Based; Autonomous |
| Learning | Tutoring | |
| | After-Action Analysis | |
| | Scoring | |
| | Type | |
| | Method | |
| | Method | |
| | Performance | |
| | Dashboard | |
| Monitoring | Progress | Actions; Inputs; Path; Teams; Users |
| | Layer | Network; Application |
| | Resource | |
| | Role | |
| | Interface | |
| Management | Remote Access | |
| | Command and Control | |
| | Data Storage | |
| | Meta Data | |
| | Tools | Security; Monitoring; Orchestration |
| | Virtualization | Traditional: Virtual Machine (Hypervisor); Conventional: Containerization; Cloud: SaaS, Public Cloud, Private Cloud, Hybrid |
| Technology | Federation | |
| | On-Premise | |
| | AI | Conventional ML; Deep Learning; LLM |

Key Modifications

4. Taxonomy Mapping Toolkit

4.1. Taxonomy Mapping Tool (TMT)

- Mapping literature to taxonomy elements: To allow for the seamless linking of records to specific elements in a taxonomy, ensuring that the taxonomy could be verified against existing research.

- Gathering and organizing data: To provide a structured way to capture data for each taxonomy element and allow for easy navigation and visualization.

- Collaboration and consistency: To create a uniform platform where team members could collaboratively map records to the taxonomy in a standardized way, avoiding discrepancies and ensuring consistency.

- Exposing the taxonomy to the research community: To make the taxonomy and the mapped records accessible to the cybersecurity community, enabling other researchers to inspect the records, validate the study group's findings and contributions, and create customized versions of a taxonomy for their own research purposes.

- Exporting the taxonomy and literature to spreadsheet file format: To allow tool users to export the taxonomy and its associated literature into a structured spreadsheet file format, ensuring that the taxonomy could be shared, analyzed offline, and integrated with other workflows while maintaining a clear and structured format for the data.

- Expand/collapse nodes: Parent nodes can be expanded to reveal their child elements or collapsed to simplify the view. For example, the root node Cyber Range has multiple child nodes such as Scenario, Environment, and Teaming.

- Interactive controls: Each node includes interactive icons:

- (i): The information icon is a clickable button that can be used to view detailed descriptions and map records.

- (+): The plus icon is a clickable button that can be used to add child elements.

- (M): The modal icon is a clickable button that can be used to manage literature references.

- Color coding: Each node is assigned a distinct color to differentiate categories visually. For instance, in Figure 6, the dimension Scenario is colored orange, Environment is colored red, and Teaming is colored green. Using colors enhances readability and helps users quickly identify and group related elements.

- Dynamic sizing: Nodes dynamically adjust their size based on the length of their labels, ensuring that the text remains legible.
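The tree behavior listed above (expand/collapse, adding child elements, mapping records, and spreadsheet export) can be illustrated with a small data structure. This is a minimal Python sketch under stated assumptions: the class `TaxonomyNode`, the function `export_csv`, the CSV column names, and the record identifier are all hypothetical and do not describe how the TMT itself is implemented.

```python
from __future__ import annotations

import csv
import io
from dataclasses import dataclass, field


@dataclass
class TaxonomyNode:
    name: str
    children: list[TaxonomyNode] = field(default_factory=list)
    records: list[str] = field(default_factory=list)  # mapped literature records
    expanded: bool = True

    def add_child(self, name: str) -> TaxonomyNode:
        """Counterpart of the (+) control: attach a new child element."""
        child = TaxonomyNode(name)
        self.children.append(child)
        return child

    def visible(self, depth: int = 0):
        """Yield (depth, node) pairs, hiding children of collapsed nodes."""
        yield depth, self
        if self.expanded:
            for child in self.children:
                yield from child.visible(depth + 1)

    def walk(self, path: tuple[str, ...] = ()):
        """Yield (path, node) for every node, ignoring the expand state."""
        here = path + (self.name,)
        yield here, self
        for child in self.children:
            yield from child.walk(here)


def export_csv(root: TaxonomyNode) -> str:
    """Serialize the whole taxonomy and its mapped records as CSV text."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["path", "records"])
    for path, node in root.walk():
        writer.writerow([" > ".join(path), "; ".join(node.records)])
    return buffer.getvalue()


root = TaxonomyNode("Cyber Range")
scenario = root.add_child("Scenario")
scenario.add_child("Storyline")
environment = root.add_child("Environment")
root.add_child("Teaming")
environment.records.append("record-001")  # placeholder record identifier

scenario.expanded = False  # collapse Scenario to simplify the view
visible_names = [node.name for _, node in root.visible()]
csv_text = export_csv(root)
print(visible_names)
print(csv_text)
```

Keeping the view state (`expanded`) separate from the export path means that collapsing a node only affects what is displayed, while the exported file still contains every element and its mapped records.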

4.2. Automated Literature Analysis Tool (ALAT)

- Automating term identification: To streamline the process of identifying relevant terms in an extensive collection of PDF documents based on a set of pre-defined search terms.

- Summarizing occurrences: To provide a structured and concise summary of the frequency and distribution of these terms across all analyzed documents, enabling better insights into their relevance.

- Guiding manual review: To serve as a guideline for researchers by highlighting key terms within each record, allowing for faster and more focused manual validation.

- Literature list: Lists analyzed records with their reference numbers.

- Summary: Summarizes main term and sub-term occurrences across all records.

- Details: Provides detailed term counts for each record.

- Word references: Maps terms to the records they appeared in.
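The term counting and the outputs listed above (details per record, an overall summary, and word references) can be sketched in a few lines. This Python example is an assumption-laden illustration: it works on plain text that is presumed to have been extracted from the PDFs already, and the function names `count_terms` and `analyze`, the record identifiers, and the sample texts are hypothetical rather than taken from the ALAT.

```python
import re
from collections import Counter


def count_terms(text, terms):
    """Count case-insensitive whole-word occurrences of each term."""
    lowered = text.lower()
    counts = Counter()
    for term in terms:
        pattern = r"\b" + re.escape(term.lower()) + r"\b"
        counts[term] = len(re.findall(pattern, lowered))
    return counts


def analyze(records, terms):
    """Build per-record details, an overall summary, and word references."""
    details = {ref: count_terms(text, terms) for ref, text in records.items()}
    summary = Counter()
    for counts in details.values():
        summary.update(counts)
    word_refs = {
        term: sorted(ref for ref, counts in details.items() if counts[term] > 0)
        for term in terms
    }
    return details, summary, word_refs


# Hypothetical records: identifier -> already extracted text.
records = {
    "R1": "The cyber range uses a federation of ranges. Federation improves scale.",
    "R2": "Scoring and gamification support learning in the cyber range.",
}
terms = ["federation", "gamification", "scoring"]

details, summary, word_refs = analyze(records, terms)
print(dict(summary))
print(word_refs)
```

Counts of this kind only point reviewers to candidate passages; whether a record actually supports a taxonomy element still has to be confirmed by manual review.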

5. Discussion

5.1. Key Insights

5.2. Design Decisions

5.3. Emerging Trends

5.4. Organizational and Training Implications

5.5. Addressing the Research Questions

5.6. Limitations and Unresolved Issues

6. Conclusions and Future Work

Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| AI | Artificial Intelligence |

| ALAT | Automated Literature Analysis Tool |

| CI/CD | Continuous Integration/Continuous Delivery |

| CPS | Cyber–Physical Systems |

| CR | Cyber Range |

| CRATE | Cyber Range and Training Environment (Sweden’s national cyber-training facility) |

| CRF | Cyber Range Federation |

| CRX | Cyber Range Exercise Design |

| CRUD | Create, Read, Update, and Delete |

| ECHO | European Network of Cybersecurity Centres and Competence Hub for Innovation and Operations |

| IoT | Internet of Things |

| LLM | Large Language Model |

| ML | Machine Learning |

| OS | Operating System |

| PRISMA | Preferred Reporting Items for Systematic Reviews and Meta-Analyses |

| RQ | Research Question |

| SaaS | Software as a Service |

| SCADA | Supervisory Control and Data Acquisition |

| SLR | Systematic Literature Review |

| SS | Search String |

| TCP/IP | Transmission Control Protocol/Internet Protocol |

| TMT | Taxonomy Mapping Tool |

| URL | Uniform Resource Locator |

| VPN | Virtual Private Network |

References

- National Institute of Standards and Technology. Cyber Ranges; Technical Report; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2018. [Google Scholar]

- Chouliaras, N.; Kittes, G.; Kantzavelou, I.; Maglaras, L.; Pantziou, G.; Ferrag, M.A. Cyber Ranges and TestBeds for Education, Training, and Research. Appl. Sci. 2021, 11, 1809. [Google Scholar] [CrossRef]

- Hallaq, B.; Nicholson, A.; Smith, R.; Maglaras, L.; Janicke, H.; Jones, K. CYRAN: A Hybrid Cyber Range for Testing Security on ICS/SCADA Systems, 1; IGI Global Scientific Publishing: Pennsylvania, PA, USA, 2018; ISBN 9781522556343. [Google Scholar] [CrossRef]

- Noponen, S.; Parssinen, J.; Salonen, J. Cybersecurity of Cyber Ranges: Threats and Mitigations. Int. J. Inf. Secur. Res. (IJISR) 2022, 12, 1032–1040. [Google Scholar] [CrossRef]

- Kampourakis, V.; Gkioulos, V.; Katsikas, S. A systematic literature review on wireless security testbeds in the cyber-physical realm. Comput. Secur. 2023, 133, 103383. [Google Scholar] [CrossRef]

- Kampourakis, V. Secure Infrastructure for Cyber-Physical Ranges. In Proceedings of the Research Challenges in Information Science: Information Science and the Connected World, Corfu, Greece, 23–26 May 2023; Nurcan, S., Opdahl, A.L., Mouratidis, H., Tsohou, A., Eds.; Springer: Cham, Switzerland, 2023; pp. 622–631. [Google Scholar] [CrossRef]

- Kampourakis, V.; Gkioulos, V.; Katsikas, S. A step-by-step definition of a reference architecture for cyber ranges. J. Inf. Secur. Appl. 2025, 88, 103917. [Google Scholar] [CrossRef]

- Davis, J.; Magrath, S. A Survey of Cyber Ranges and Testbeds; Technical Report; Cyber Electronic Warfare Division DSTO Defence Science and Technology Organisation: Edinburgh, SA, Australia, 2013. [Google Scholar]

- Nickerson, R.C.; Varshney, U.; Muntermann, J. A method for taxonomy development and its application in information systems. Eur. J. Inf. Syst. 2013, 22, 336–359. [Google Scholar] [CrossRef]

- Bailey, K.D. Typologies and Taxonomies: An Introduction to Classification Techniques, reprint ed.; Number 102 in Sage University Papers: Quantitative Applications in the Social Sciences; Sage Publications: Thousand Oaks, CA, USA, 2003. [Google Scholar]

- Yamin, M.M.; Katt, B.; Gkioulos, V. Cyber ranges and security testbeds: Scenarios, functions, tools and architecture. Comput. Secur. 2020, 88, 101636. [Google Scholar] [CrossRef]

- Ukwandu, E.; Farah, M.A.B.; Hindy, H.; Brosset, D.; Kavallieros, D.; Atkinson, R.; Tachtatzis, C.; Bures, M.; Andonovic, I.; Bellekens, X. A Review of Cyber-Ranges and Test-Beds: Current and Future Trends. Sensors 2020, 20, 7148. [Google Scholar] [CrossRef]

- Russo, E.; Verderame, L.; Merlo, A. Enabling Next-Generation Cyber Ranges with Mobile Security Components. In Proceedings of the Testing Software and Systems, Naples, Italy, 9–11 December 2020; Casola, V., De Benedictis, A., Rak, M., Eds.; Springer: Cham, Switzerland, 2020; pp. 150–165. [Google Scholar] [CrossRef]

- Rouquette, R.; Beau, S.; Yamin, M.M.; Mohib, U.; Katt, B. Automatic and Realistic Traffic Generation In A Cyber Range. In Proceedings of the 2023 10th International Conference on Future Internet of Things and Cloud (FiCloud), Marrakesh, Morocco, 14–16 August 2023; pp. 352–358. [Google Scholar] [CrossRef]

- Du, L.; He, J.; Li, T.; Wang, Y.; Lan, X.; Huang, Y. DBWE-Corbat: Background network traffic generation using dynamic word embedding and contrastive learning for cyber range. Comput. Secur. 2023, 129, 103202. [Google Scholar] [CrossRef]

- Doussau, A.; Souyris, C.C.P.; Yamin, M.M.; Katt, B.; Ullah, M. Intelligent Contextualized Network Traffic Generator in a Cyber Range. In Proceedings of the 2023 17th International Conference on Signal-Image Technology & Internet-Based Systems (SITIS), Bangkok, Thailand, 8–10 November 2023; pp. 9–13. [Google Scholar] [CrossRef]

- Saito, T.; Takahashi, S.; Sato, J.; Matsunoki, M.; Kanmachi, T.; Yamada, S.; Yajima, K. Development of Cyber Ranges for Operational Technology. In Proceedings of the 2023 8th International Conference on Business and Industrial Research (ICBIR), Bangkok, Thailand, 18–19 May 2023; pp. 1031–1034. [Google Scholar] [CrossRef]

- Kombate, Y.; Houngue, P.; Ouya, S. Securing MQTT: Unveiling vulnerabilities and innovating cyber range solutions. Procedia Comput. Sci. 2024, 241, 69–76. [Google Scholar] [CrossRef]

- Shin, Y.; Kwon, H.; Jeong, J.; Shin, D. A Study on Designing Cyber Training and Cyber Range to Effectively Respond to Cyber Threats. Electronics 2024, 13, 3867. [Google Scholar] [CrossRef]

- Kucek, S.; Leitner, M. Training the Human-in-the-Loop in Industrial Cyber Ranges. In Proceedings of the Digital Transformation in Semiconductor Manufacturing; Keil, S., Lasch, R., Lindner, F., Lohmer, J., Eds.; Springer: Cham, Switzerland, 2020; pp. 107–118. [Google Scholar] [CrossRef]

- Sharkov, G.; Todorova, C.; Koykov, G.; Nikolov, I. Towards a Robust and Scalable Cyber Range Federation for Sectoral Cyber/Hybrid Exercising: The Red Ranger and ECHO Collaborative Experience. Inf. Secur. 2022, 53, 287–302. [Google Scholar] [CrossRef]

- Braghin, C.; Cimato, S.; Damiani, E.; Frati, F.; Riccobene, E.; Astaneh, S. Towards the Monitoring and Evaluation of Trainees’ Activities in Cyber Ranges. In Proceedings of the Model-Driven Simulation and Training Environments for Cybersecurity (MSTEC), Guildford, UK, 14–18 September 2020; Hatzivasilis, G., Ioannidis, S., Eds.; Springer: Cham, Switzerland, 2020; pp. 79–91. [Google Scholar] [CrossRef]

- Ciuperca, E.; Stanciu, A.; Cîrnu, C. Postmodern Education and Technological Development. Cyber Range as a Tool for Developing Cyber Security Skills. In Proceedings of the INTED2021 Proceedings, 15th International Technology, Education and Development Conference 2021, Online Conference, 8–9 March 2021; pp. 8241–8246, ISBN 9788409276660. [Google Scholar] [CrossRef]

- Oh, S.K.; Stickney, N.; Hawthorne, D.; Matthews, S.J. Teaching Web-Attacks on a Raspberry Pi Cyber Range. In Proceedings of the 21st Annual Conference on Information Technology Education (SIGITE’20), Virtual, 7–9 October 2020; ACM: New York, NY, USA, 2020; pp. 324–329. [Google Scholar] [CrossRef]

- Shangting, M.; Quan, P. Industrial cyber range based on QEMU-IOL. In Proceedings of the 2021 IEEE International Conference on Power Electronics, Computer Applications (ICPECA), Shenyang, China, 22–24 January 2021; pp. 671–674. [Google Scholar] [CrossRef]

- Page, M.J.; Moher, D.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. PRISMA 2020 explanation and elaboration: Updated guidance and exemplars for reporting systematic reviews. BMJ 2021, 372, n160. [Google Scholar] [CrossRef]

- Okoli, C. A Guide to Conducting a Standalone Systematic Literature Review. Commun. Assoc. Inf. Syst. 2015, 37. [Google Scholar] [CrossRef]

- Bowker, G.C.; Star, S.L. Invisible Mediators of Action: Classification and the Ubiquity of Standards. Mind Cult. Act. 2000, 7, 147–163. [Google Scholar] [CrossRef]

- Wohlin, C. Guidelines for snowballing in systematic literature studies and a replication in software engineering. In Proceedings of the 18th International Conference on Evaluation and Assessment in Software Engineering (EASE’14), London, UK, 13–14 May 2014; pp. 1–10. [Google Scholar] [CrossRef]

- Knüpfer, M.; Bierwirth, T.; Stiemert, L.; Schopp, M.; Seeber, S.; Pöhn, D.; Hillmann, P. Cyber Taxi: A Taxonomy of Interactive Cyber Training and Education Systems. In Proceedings of the Model-Driven Simulation and Training Environments for Cybersecurity, Second International Workshop (MSTEC 2020), Guildford, UK, 14–18 September 2020; Hatzivasilis, G., Ioannidis, S., Eds.; Springer: Cham, Switzerland, 2020; pp. 3–21. [Google Scholar] [CrossRef]

- Glas, M.; Vielberth, M.; Pernul, G. Train as you Fight: Evaluating Authentic Cybersecurity Training in Cyber Ranges. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems (CHI’23), Hamburg, Germany, 23–28 April 2023; ACM: New York, NY, USA, 2023; pp. 1–19. [Google Scholar] [CrossRef]

- Bergan, S.; Ruud, E.M. Otnetic: A Cyber Range Training Platform Developed for the Norwegian Energy Sector. Master’s Thesis, University of Agder, Kristiansand, Norway, 2021. [Google Scholar]

- Pavlova, E. Implementation of Federated Cyber Ranges in Bulgarian Universities: Challenges, Requirements, and Opportunities. Inf. Secur. 2021, 50, 149–159. [Google Scholar] [CrossRef]

- Oruc, A.; Gkioulos, V.; Katsikas, S. Towards a Cyber-Physical Range for the Integrated Navigation System (INS). J. Mar. Sci. Eng. 2022, 10, 107. [Google Scholar] [CrossRef]

- Ear, E.; Remy, J.L.C.; Xu, S. Towards Automated Cyber Range Design: Characterizing and Matching Demands to Supplies. In Proceedings of the 2023 IEEE International Conference on Cyber Security and Resilience (CSR), Venice, Italy, 31 July–2 August 2023; pp. 329–334. [Google Scholar] [CrossRef]

- Evans, M.; Purdy, G. Architectural development of a cyber-physical manufacturing range. Manuf. Lett. 2023, 35, 1173–1178. [Google Scholar] [CrossRef]

- Päijänen, J. Pre-assessing Cyber Range-Based Event Participants’ Needs and Objectives. Master’s Thesis, JAMK University of Applied Sciences, Jyväskylä, Finland, 2023. Available online: http://www.theseus.fi/handle/10024/816339 (accessed on 8 June 2025).

- Bistene, J.V.; Chagas, C.E.d.; Santos, A.F.P.d.; Salles, R.M. Modeling Network Traffic Generators for Cyber Ranges: A Systematic Literature Review; ACM: New York, NY, USA, 2024. [Google Scholar] [CrossRef]

- Shamaya, N.; Tarcheh, G. Strengthening Cyber Defense: A Comparative Study of Smart Home Infrastructure for Penetration Testing and National Cyber Ranges; KTH School of Engineering Sciences in Chemistry, Biotechnology and Health: Stockholm, Sweden, 2024. [Google Scholar]

- Boström, A.; Hylander, A. Selecting Better Attacks for Cyber Defense Exercises: Criteria to Enhance Cyber Range Content; Linköping University, Department of Computer and Information Science: Linköping, Sweden, 2024. [Google Scholar]

- Stamatopoulos, D.; Katsantonis, M.; Fouliras, P.; Mavridis, I. Exploring the Architectural Composition of Cyber Ranges: A Systematic Review. Future Internet 2024, 16, 231. [Google Scholar] [CrossRef]

- Ni, T.; Zhang, X.; Zuo, C.; Li, J.; Yan, Z.; Wang, W.; Xu, W.; Luo, X.; Zhao, Q. Uncovering User Interactions on Smartphones via Contactless Wireless Charging Side Channels. In Proceedings of the 2023 IEEE Symposium on Security and Privacy (S&P), San Francisco, CA, USA, 22–24 May 2023; pp. 3399–3415. [Google Scholar]

- Huang, W.; Chen, H.; Cao, H.; Ren, J.; Jiang, H.; Fu, Z.; Zhang, Y. Manipulating Voice Assistants Eavesdropping via Inherent Vulnerability Unveiling in Mobile Systems. IEEE Trans. Mob. Comput. 2024, 23, 11549–11563. [Google Scholar] [CrossRef]

- Ni, T.; Lan, G.; Wang, J.; Zhao, Q.; Xu, W. Eavesdropping Mobile App Activity via Radio-Frequency Energy Harvesting. In Proceedings of the 32nd USENIX Security Symposium, Anaheim, CA, USA, 9–11 August 2023; pp. 3511–3528. [Google Scholar]

- Ni, T.; Zhang, X.; Zhao, Q. Recovering Fingerprints from In-Display Fingerprint Sensors via Electromagnetic Side Channel. In Proceedings of the 2023 ACM SIGSAC Conference on Computer and Communications Security (CCS), Copenhagen, Denmark, 26–30 November 2023; pp. 1–14. [Google Scholar]

- Cao, H.; Liu, D.; Jiang, H.; Luo, J. MagSign: Harnessing Dynamic Magnetism for User Authentication on IoT Devices. IEEE Trans. Mob. Comput. 2024, 23, 597–611. [Google Scholar] [CrossRef]

- Cao, H.; Xu, G.; He, Z.; Shi, S.; Xu, S.; Wu, C.; Ning, J. Unveiling the Superiority of Unsupervised Learning on GPU Cryptojacking Detection: Practice on Magnetic Side Channel-Based Mechanism. IEEE Trans. Inf. Forensics Secur. 2025, 20, 4874–4889. [Google Scholar] [CrossRef]

- Cao, H.; Liu, D.; Jiang, H.; Cai, C.; Zheng, T.; Lui, J.C.S.; Luo, J. HandKey: Knocking-Triggered Robust Vibration Signature for Keyless Unlocking. IEEE Trans. Mob. Comput. 2024, 23, 520–534. [Google Scholar] [CrossRef]

- Cao, H.; Jiang, H.; Liu, D.; Wang, R.; Min, G.; Liu, J.; Dustdar, S.; Lui, J.C.S. LiveProbe: Exploring Continuous Voice Liveness Detection via Phonemic Energy Response Patterns. IEEE Internet Things J. 2023, 10, 7215–7228. [Google Scholar] [CrossRef]

- Katsantonis, M.N.; Manikas, A.; Mavridis, I.; Gritzalis, D. Cyber range design framework for cyber security education and training. Int. J. Inf. Secur. 2023, 22, 1005–1027. [Google Scholar] [CrossRef]

- Lieskovan, T.; Hajný, J. Building Open Source Cyber Range To Teach Cyber Security. In Proceedings of the 16th International Conference on Availability, Reliability and Security (ARES’21), Vienna, Austria, 17–20 August 2021; ACM: New York, NY, USA, 2021; pp. 1–11. [Google Scholar] [CrossRef]

- Yamin, M.M.; Katt, B. Modeling and executing cyber security exercise scenarios in cyber ranges. Comput. Secur. 2022, 116, 102635. [Google Scholar] [CrossRef]

- Friedl, S.; Glas, M.; Englbrecht, L.; Böhm, F.; Pernul, G. ForCyRange: An Educational IoT Cyber Range for Live Digital Forensics. In Information Security Education—Adapting to the Fourth Industrial Revolution; Drevin, L., Miloslavskaya, N., Leung, W.S., von Solms, S., Eds.; WISE 2022; Springer: Cham, Switzerland, 2022; pp. 77–91. [Google Scholar] [CrossRef]

- Balto, K.E.; Yamin, M.M.; Shalaginov, A.; Katt, B. Hybrid IoT Cyber Range. Sensors 2023, 23, 3071. [Google Scholar] [CrossRef]

- Priyadarshini, I. Features and Architecture of The Modern Cyber Range: A Qualitative Analysis and Survey. Ph.D. Thesis, Cornell University, Ithaca, NY, USA, 2018. [Google Scholar]

- Yang, H.; Chen, T.; Bai, Y.; Li, F.; Li, M.; Yang, R. Research and Implementation of User Behavior Simulation Technology Based on Power Industry Cyber Range. In Proceedings of the 2021 IEEE 2nd International Conference on Information Technology, Big Data and Artificial Intelligence (ICIBA), Chongqing, China, 17–19 December 2021; Volume 2, pp. 284–287. [Google Scholar] [CrossRef]

- Smyrlis, M.; Somarakis, I.; Spanoudakis, G.; Hatzivasilis, G.; Ioannidis, S. CYRA: A Model-Driven CYber Range Assurance Platform. Appl. Sci. 2021, 11, 5165. [Google Scholar] [CrossRef]

- Tagarev, T.; Stoianov, N.; Sharkov, G.; Yanakiev, Y. AI-driven Cybersecurity Solutions, Cyber Ranges for Education & Training, and ICT Applications for Military Purposes. Inf. Secur. 2021, 50, 5–8. [Google Scholar] [CrossRef]

- Russo, E.; Ribaudo, M.; Orlich, A.; Longo, G.; Armando, A. Cyber Range and Cyber Defense Exercises: Gamification Meets University Students. In Proceedings of the 2nd International Workshop on Gamification in Software Development, Verification, and Validation (Gamify 2023), San Francisco, CA, USA, 4 December 2023; ACM: New York, NY, USA, 2023; pp. 29–37. [Google Scholar] [CrossRef]

- Diakoumakos, J.; Chaskos, E.; Kolokotronis, N.; Lepouras, G. Cyber-Range Federation and Cyber-Security Games: A Gamification Scoring Model. In Proceedings of the 2021 IEEE International Conference on Cyber Security and Resilience (CSR), Rhodes, Greece, 26–28 July 2021; pp. 186–191. [Google Scholar] [CrossRef]

- Chaskos, E.; Diakoumakos, J.; Kolokotronis, N.; Lepouras, G. Gamification Mechanisms in Cyber Range and Cyber Security Training Environments: A Review, 1; IGI Global Scientific Publishing: Pennsylvania, PA, USA, 2022; ISBN 9781668442913. [Google Scholar] [CrossRef]

- Tizio, G.D.; Massacci, F.; Allodi, L.; Dashevskyi, S.; Mirkovic, J. An Experimental Approach for Estimating Cyber Risk: A Proposal Building upon Cyber Ranges and Capture the Flags. In Proceedings of the 2020 IEEE European Symposium on Security and Privacy Workshops (EuroS&PW), Genoa, Italy, 7–11 September 2020; pp. 56–65. [Google Scholar] [CrossRef]

- Hannay, J.E.; Stolpe, A.; Yamin, M.M. Toward AI-Based Scenario Management for Cyber Range Training. In Proceedings of the HCI International 2021—Late Breaking Papers: Multimodality, eXtended Reality, and Artificial Intelligence, Gothenburg, Sweden, 22–27 June 2021; Stephanidis, C., Kurosu, M., Chen, J.Y.C., Fragomeni, G., Streitz, N., Konomi, S., Degen, H., Ntoa, S., Eds.; Springer: Cham, Switzerland, 2021; pp. 423–436. [Google Scholar] [CrossRef]

- Sánchez, P.M.; Nespoli, P.; Alfaro, J.G.; Mármol, F.G. Methodology for Automating Attacking Agents in Cyber Range Training Platforms. In Proceedings of the Secure and Resilient Digital Transformation of Healthcare, Bergen, Norway, 25 November 2024; Abie, H., Gkioulos, V., Katsikas, S., Pirbhulal, S., Eds.; Springer: Cham, Switzerland, 2024; pp. 90–109.

- Lieskovan, T.; Kohout, D.; Frolka, J. Cyber range scenario for smart grid security training. Elektrotech. Inftech. 2023, 140, 452–459.

- Srinivas, K.S.; Suhas, M.; Srinath, P.; Sneha, K.C.; Narayan, D.G.; Somashekhar, P. CRaaS: Cyber Range as a Service. In Proceedings of the Innovations in Electrical and Electronic Engineering, New Delhi, India, 8–9 January 2022; Mekhilef, S., Shaw, R.N., Siano, P., Eds.; Springer: Singapore, 2022; pp. 565–576.

- Damianou, A.; Mazi, M.S.; Rizos, G.; Voulgaridis, A.; Votis, K. Situational Awareness Scoring System in Cyber Range Platforms. In Proceedings of the 2024 IEEE International Conference on Cyber Security and Resilience (CSR), London, UK, 2–4 September 2024; pp. 520–525.

- Lazarov, W.; Janek, S.; Martinasek, Z.; Fujdiak, R. Event-based Data Collection and Analysis in the Cyber Range Environment. In Proceedings of the 19th International Conference on Availability, Reliability and Security (ARES’24), Vienna, Austria, 30 July–2 August 2024; ACM: New York, NY, USA, 2024; pp. 1–8.

- Park, M.; Lee, H.; Kim, Y.; Kim, K.; Shin, D. Design and Implementation of Multi-Cyber Range for Cyber Training and Testing. Appl. Sci. 2022, 12, 12546.

- Xie, J.; Zhang, C.; Lou, F.; Cui, Y.; An, L.; Wang, L. High-Speed File Transferring Over Linux Bridge for QGA Enhancement in Cyber Range. In Artificial Intelligence and Security; Sun, X., Pan, Z., Bertino, E., Eds.; Springer International Publishing: Cham, Switzerland, 2019; Volume 11635, pp. 452–462.

- Vekaria, K.B.; Calyam, P.; Wang, S.; Payyavula, R.; Rockey, M.; Ahmed, N. Cyber Range for Research-Inspired Learning of “Attack Defense by Pretense” Principle and Practice. IEEE Trans. Learn. Technol. 2021, 14, 322–337.

- Hatzivasilis, G.; Ioannidis, S.; Smyrlis, M.; Spanoudakis, G.; Frati, F.; Braghin, C.; Damiani, E.; Koshutanski, H.; Tsakirakis, G.; Hildebrandt, T.; et al. The THREAT-ARREST Cyber Range Platform. In Proceedings of the 2021 IEEE International Conference on Cyber Security and Resilience (CSR), Rhodes, Greece, 26–28 July 2021; pp. 422–427.

- Albaladejo-González, M.; Strukova, S.; Ruipérez-Valiente, J.A.; Gómez Mármol, F.L. Exploring the Affordances of Multimodal Data to Improve Cybersecurity Training with Cyber Range Environments. In Colección Jornadas y Congresos; Serrano, M.A., Fernández-Medina, E., Alcaraz, C., Castro, N.D., Calvo, G., Eds.; Ediciones de la Universidad de Castilla-La Mancha: Cuenca, Spain, 2021.

- Karjalainen, M.; Kokkonen, T. Comprehensive Cyber Arena; The Next Generation Cyber Range. In Proceedings of the 2020 IEEE European Symposium on Security and Privacy Workshops (EuroS&PW), Genoa, Italy, 16–18 June 2020; pp. 11–16.

- Sharkov, G.; Todorova, C.; Koykov, G.; Zahariev, G. A System-of-Systems Approach for the Creation of a Composite Cyber Range for Cyber/Hybrid Exercising. Inf. Secur. 2021, 50, 129–148.

- Linardos, V. Development of a Cyber Range Platform. Master’s Thesis, University of Piraeus, Piraeus, Greece, 2021.

- Hätty, N. Representing Attacks in a Cyber Range. Master’s Thesis, Linköping University, Linköping, Sweden, 2019.

- Wang, Y. An Attribution Method for Alerts in an Educational Cyber Range Based on Graph Database. Master’s Thesis, KTH Royal Institute of Technology, Stockholm, Sweden, 2023.

- Orbinato, V. A next-generation platform for Cyber Range-as-a-Service. In Proceedings of the 2021 IEEE International Symposium on Software Reliability Engineering Workshops (ISSREW), Wuhan, China, 25–28 October 2021; pp. 314–318.

- Ficco, M.; Palmieri, F. Leaf: An open-source cybersecurity training platform for realistic edge-IoT scenarios. J. Syst. Archit. 2019, 97, 107–129.

- Lateș, I. Automating Cyber-Range Virtual Networks Deployment Using Open-Source Technologies (Chef Software). Econ. Inform. 2020, 20, 36–43.

- Kim, I.; Park, M.; Lee, H.J.; Jang, J.; Lee, S.; Shin, D. A Study on the Multi-Cyber Range Application of Mission-Based Cybersecurity Testing and Evaluation in Association with the Risk Management Framework. Information 2024, 15, 18.

- Gustafsson, T.; Almroth, J. Cyber Range Automation Overview with a Case Study of CRATE. In Secure IT Systems; Asplund, M., Nadjm-Tehrani, S., Eds.; Springer International Publishing: Cham, Switzerland, 2021; Volume 12556, pp. 192–209.

- Luise, A.; Perrone, G.; Perrotta, C.; Romano, S.P. On-demand deployment and orchestration of Cyber Ranges in the Cloud. In Proceedings of the ITASEC 2021: Italian Conference on Cyber Security, Online, 7–9 April 2022.

- Guerrero, G.; Betarte, G.; Campo, J.D. Tectonic: An Academic Cyber Range. In Proceedings of the 2024 IEEE Biennial Congress of Argentina (ARGENCON), San Nicolás de los Arroyos, Argentina, 18–20 September 2024; pp. 1–8.

- Costa, G.; Russo, E.; Armando, A. Automating the Generation of Cyber Range Virtual Scenarios with VSDL. JOWUA 2022, 13, 61–80.

- Jaduš, B. Web Interface for Adaptive Training at the KYPO Cyber Range Platform. Master’s Thesis, Masaryk University, Brno, Czech Republic, 2021.

- Mahmoud, R.V.; Anagnostopoulos, M.; Pedersen, J.M. Detecting Cyber Attacks through Measurements: Learnings from a Cyber Range. IEEE Instrum. Meas. Mag. 2022, 25, 31–36.

- Oikonomou, N.; Mengidis, N.; Spanopoulos-Karalexidis, M.; Voulgaridis, A.; Merialdo, M.; Raisr, I.; Hanson, K.; de La Vallee, P.; Tsikrika, T.; Vrochidis, S.; et al. ECHO Federated Cyber Range: Towards Next-Generation Scalable Cyber Ranges. In Proceedings of the 2021 IEEE International Conference on Cyber Security and Resilience (CSR), Rhodes, Greece, 26–28 July 2021; pp. 403–408.

- Russo, E.; Costa, G.; Armando, A. Building next generation Cyber Ranges with CRACK. Comput. Secur. 2020, 95, 101837.

- Jiang, H.; Choi, T.; Ko, R.K.L. Pandora: A Cyber Range Environment for the Safe Testing and Deployment of Autonomous Cyber Attack Tools. In Proceedings of the Security in Computing and Communications, Online, 14–17 October 2020; Thampi, S.M., Wang, G., Rawat, D.B., Ko, R., Fan, C.I., Eds.; Springer: Singapore, 2021; pp. 1–20.

- Virág, C.; Čegan, J.; Lieskovan, T.; Merialdo, M. The Current State of The Art and Future of European Cyber Range Ecosystem. In Proceedings of the 2021 IEEE International Conference on Cyber Security and Resilience (CSR), Rhodes, Greece, 26–28 July 2021; pp. 390–395.

- He, Y.; Yan, L.; Liu, J.; Bai, D.; Chen, Z.; Yu, X.; Gao, D.; Zhu, J. Design of Information System Cyber Security Range Test System for Power Industry. In Proceedings of the 2019 IEEE Innovative Smart Grid Technologies - Asia (ISGT Asia), Chengdu, China, 21–24 May 2019; pp. 1024–1028.

- Capone, D.; Caturano, F.; Delicato, A.; Perrone, G.; Romano, S.P. Dockerized Android: A container-based platform to build mobile Android scenarios for Cyber Ranges. In Proceedings of the 2022 International Conference on Electrical, Computer and Energy Technologies (ICECET), Prague, Czech Republic, 20–22 July 2022; pp. 1–9.

- Grimaldi, A.; Ribiollet, J.; Nespoli, P.; Garcia-Alfaro, J. Toward Next-Generation Cyber Range: A Comparative Study of Training Platforms. In Proceedings of the Computer Security. ESORICS 2023 International Workshops, The Hague, The Netherlands, 25–29 September 2023; Katsikas, S., Abie, H., Ranise, S., Verderame, L., Cambiaso, E., Ugarelli, R., Praça, I., Li, W., Meng, W., Furnell, S., et al., Eds.; Springer: Cham, Switzerland, 2024; pp. 271–290.

- Farhat, H. Design and Development of the Back-End Software Architecture for a Hybrid Cyber Range. Master’s Thesis, Politecnico di Torino, Torino, Italy, 2021.

- Beuran, R.; Zhang, Z.; Tan, Y. AWS EC2 Public Cloud Cyber Range Deployment. In Proceedings of the 2022 IEEE European Symposium on Security and Privacy Workshops (EuroS&PW), Genoa, Italy, 6–10 June 2022; pp. 433–441.

- Fu, Y.; Han, W.; Yuan, D. Orchestrating Heterogeneous Cyber-range Event Chains With Serverless-container Workflow. In Proceedings of the 2022 30th International Symposium on Modeling, Analysis, and Simulation of Computer and Telecommunication Systems (MASCOTS), Nice, France, 18–20 October 2022; pp. 97–104.

- Grigoriadis, A.; Darra, E.; Kavallieros, D.; Chaskos, E.; Kolokotronis, N.; Bellekens, X. Cyber Ranges: The New Training Era in the Cybersecurity and Digital Forensics World. In Technology Development for Security Practitioners; Akhgar, B., Kavallieros, D., Sdongos, E., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 97–117.

- Lateș, I.; Boja, C. Cyber Range Technology Stack Review. In Proceedings of the Education, Research and Business Technologies, Bucharest, Romania, 26–27 May 2022; Ciurea, C., Pocatilu, P., Filip, F.G., Eds.; Springer: Singapore, 2023; pp. 25–40.

- Chng, B.; Ng, B.; Roomi, M.M.; Mashima, D.; Lou, X. CRaaS: Cloud-based Smart Grid Cyber Range for Scalable Cybersecurity Experiments and Training. In Proceedings of the 2024 IEEE International Conference on Communications, Control, and Computing Technologies for Smart Grids (SmartGridComm), Oslo, Norway, 17–20 September 2024; pp. 333–339.

- Dracotus, X. Automated Cloud Cyber Range Deployments. Master’s Thesis, University of Aegean, Department of Information and Communication Systems Engineering, Mitilini, Greece, 2021.

- Seem, J.A. Towards a Scenario Ontology for the Norwegian Cyber Range. Master’s Thesis, NTNU, Trondheim, Norway, 2020.

| Phase | Criterion | Description |
|---|---|---|
| Phase I | IC1 | Papers on cyber ranges (CRs) that conducted a systematic literature review (SLR) |
| Phase I | IC2 | Papers on CRs that proposed or analyzed a taxonomy |
| Phase I | EC1 | Publications written in languages other than English |
| Phase I | EC2 | Papers published before 2014 |
| Phase I | EC3 | Papers inaccessible due to a paywall |
| Phase I | EC4 | Opinion pieces, editorials, and other non-academic articles |
| Phase II + III | IC1 | Papers on CR architectures, scenarios, functions, and tools |
| Phase II + III | EC1 | Publications written in languages other than English |
| Phase II + III | EC2 | Papers published before 2019 |
| Phase II + III | EC3 | Papers inaccessible due to a paywall |
| Phase II + III | EC4 | Papers that discussed testbeds outside the CR context |
| Phase II + III | EC5 | Opinion pieces, editorials, and other non-academic articles |
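
The eligibility criteria above can be read as a pair of screening predicates. The following is a minimal sketch, not the authors' screening tooling: the `Paper` record, its field names, and the function names are hypothetical, and it assumes that satisfying either IC1 or IC2 is sufficient for Phase I inclusion and that every candidate record already concerns cyber ranges.

```python
from dataclasses import dataclass


@dataclass
class Paper:
    """Hypothetical screening record; all field names are illustrative."""
    title: str
    year: int
    language: str
    accessible: bool                  # False if behind a paywall (EC3)
    academic: bool                    # False for opinion pieces, editorials, etc.
    is_slr: bool = False              # Phase I, IC1
    has_taxonomy: bool = False        # Phase I, IC2
    covers_cr_design: bool = False    # Phase II + III, IC1
    outside_cr_testbed: bool = False  # Phase II + III, EC4


def passes_shared_exclusions(paper: Paper, earliest_year: int) -> bool:
    """Language, publication-year, access, and publication-type checks."""
    return (
        paper.language == "English"
        and paper.year >= earliest_year
        and paper.accessible
        and paper.academic
    )


def eligible_phase_1(paper: Paper) -> bool:
    # Assumption: meeting either IC1 or IC2 is enough for inclusion.
    return (paper.is_slr or paper.has_taxonomy) and passes_shared_exclusions(paper, 2014)


def eligible_phase_2_3(paper: Paper) -> bool:
    return (
        paper.covers_cr_design
        and not paper.outside_cr_testbed
        and passes_shared_exclusions(paper, 2019)
    )


if __name__ == "__main__":
    # Made-up records, used only to exercise the predicates.
    candidates = [
        Paper("Hypothetical taxonomy survey", 2020, "English", True, True, has_taxonomy=True),
        Paper("Hypothetical platform paper", 2016, "English", True, True, covers_cr_design=True),
    ]
    for paper in candidates:
        print(paper.title, eligible_phase_1(paper), eligible_phase_2_3(paper))
```

Keeping the shared exclusion checks in one helper makes the only differences between the phases explicit: the inclusion criterion, the publication-year cutoff, and the additional testbed exclusion in Phases II and III.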

| Dimension | Yamin et al. [11] | Ukwandu et al. [12] | Knüpfer et al. [30] | Russo et al. [13] | Glas et al. [31] |
|---|---|---|---|---|---|
| Scenario | Included | Included | Under Training Environment | Under Training Environment | Included |
| Monitoring | Included | Included | Not explicitly specified | Under Management | Included |
| Learning | Included | Included | Under Audience (Proficiency Level) | Not explicitly specified | Included |
| Management | Included | Included | Not explicitly specified | Included | Included |
| Teaming | Included | Included | Not explicitly specified | Not explicitly specified | Included implicitly |
| Environment | Included | Included | Under Training Environment | Under Training Environment (Mobile-specific) | Included implicitly |
| Technology | Not included explicitly | Not included explicitly | Not explicitly specified | Under Mobile-specific Technology | Explicitly included |
| Type | Not included | Included | Not explicitly specified | Not explicitly specified | Included |
| Econometrics | Not included | Included | Not explicitly specified | Not explicitly specified | Not explicitly specified |
| Recovery | Not included | Included | Not explicitly specified | Not explicitly specified | Included |
| Audience | Not explicitly specified | Not explicitly specified | Explicitly included | Not explicitly specified | Not explicitly specified |
| Testbeds | Integrated within Environment | Explicitly included | Not explicitly specified | Explicitly included (Mobile-specific) | Explicitly included |
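
One way to read the comparison above is to tally, for each dimension, how many of the five taxonomies list it explicitly. The sketch below is illustrative rather than part of the review's method: the cell values are transcribed from the table, while the `is_explicit` rule (only "Included" or entries beginning with "Explicitly included" count) and all identifiers are assumptions introduced here.

```python
# Column order: Yamin et al. [11], Ukwandu et al. [12], Knüpfer et al. [30],
# Russo et al. [13], Glas et al. [31]. Values are transcribed from the table.
INC = "Included"
EXP = "Explicitly included"
IMP = "Included implicitly"
NS = "Not explicitly specified"
NI = "Not included"
NIE = "Not included explicitly"
UTE = "Under Training Environment"

COVERAGE = {
    "Scenario": [INC, INC, UTE, UTE, INC],
    "Monitoring": [INC, INC, NS, "Under Management", INC],
    "Learning": [INC, INC, "Under Audience (Proficiency Level)", NS, INC],
    "Management": [INC, INC, NS, INC, INC],
    "Teaming": [INC, INC, NS, NS, IMP],
    "Environment": [INC, INC, UTE, UTE + " (Mobile-specific)", IMP],
    "Technology": [NIE, NIE, NS, "Under Mobile-specific Technology", EXP],
    "Type": [NI, INC, NS, NS, INC],
    "Econometrics": [NI, INC, NS, NS, NS],
    "Recovery": [NI, INC, NS, NS, INC],
    "Audience": [NS, NS, EXP, NS, NS],
    "Testbeds": ["Integrated within Environment", EXP, NS, EXP + " (Mobile-specific)", EXP],
}


def is_explicit(cell: str) -> bool:
    """Assumed rule: count a cell only when the dimension is listed outright."""
    text = cell.strip().lower()
    return text == "included" or text.startswith("explicitly included")


def explicit_counts(coverage: dict[str, list[str]]) -> dict[str, int]:
    """Number of taxonomies that explicitly include each dimension."""
    return {dim: sum(is_explicit(cell) for cell in cells) for dim, cells in coverage.items()}


counts = explicit_counts(COVERAGE)

if __name__ == "__main__":
    # Print dimensions from most to least frequently listed.
    for dim, n in sorted(counts.items(), key=lambda item: -item[1]):
        print(f"{dim:<13}{n}/5")
```

Under this rule, Management is the dimension most often listed explicitly (four of the five taxonomies). A looser rule that also counts "Under ..." or "Included implicitly" entries would give different totals, so the tally depends on how partial coverage is treated.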

| Authors | Title | Focus/Contribution |
|---|---|---|
| Chouliaras et al. [2] (2021) | Cyber Ranges and TestBeds for Education, Training, and Research | Explored the rising demand for cybersecurity professionals; surveyed ten cyber ranges (2011–2021) to examine design, implementation, and operations. |
| Bergan and Ruud [32] (2021) | Otnetic: A Cyber-Range Training Platform Developed for the Norwegian Energy Sector | SLR reinforced exercise design and gamification practices for IT/OT staff in the energy sector; collaboration with NC-Spectrum. |
| Pavlova [33] (2021) | Implementation of Federated Cyber Ranges in Bulgarian Universities: Challenges, Requirements, and Opportunities | Assessed the EU regulatory context; proposed how federated ranges integrate into academic curricula. |
| Oruc et al. [34] (2022) | Towards a Cyber-Physical Range for the Integrated Navigation System (INS) | Marine-system focus: evaluated cyber–physical testbeds for navigation hardware and emerging security solutions. |
| Ear et al. [35] (2022) | Towards Automated Cyber Range Design: Characterising and Matching Demands to Supplies | Analyzed 45 architectures; introduced a three-dimension requirements framework (Purpose, Scope, Constraints) to match designs to organizational needs. |
| Evans and Purdy [36] (2022) | Architectural Development of a Cyber-Physical Manufacturing Range | Concluded that manufacturing contexts need dedicated cyber–physical ranges; contrasted with the broader taxonomy of [11]. |
| Päijänen [37] (2023) | Pre-Assessing Cyber-Range-Based Event Participants’ Needs and Objectives | Showed that pre-assessment of participant goals improves exercise design and learning outcomes. |
| Bistene et al. [38] (2023) | Modelling Network Traffic Generators for Cyber Ranges: A Systematic Literature Review | Classified traffic generators (model, trace, hybrid) and critiqued validation practices across 30 studies. |
| Shamaya and Tarcheh [39] (2023) | Strengthening Cyber Defence: A Comparative Study of Smart-Home Infrastructure for Penetration Testing and National Cyber Ranges | Built an IoT penetration-testing environment and conducted an SLR on national cyber range infrastructures; offered building guidance. |
| Boström and Hylander [40] (2023) | Selecting Better Attacks for Cyber Defence Exercises | Conducted an SLR plus expert interviews; proposed a five-step process (selection, implementation, automation, deployment, evaluation) for realistic attack scenarios. |
| Stamatopoulos et al. [41] (2023) | Exploring the Architectural Composition of Cyber Ranges: A Systematic Review | Focused on cyber–physical range architectures; identified design challenges and research gaps. |

| Characteristic | Sub-Characteristic | Description | Refs |
|---|---|---|---|
| Storyline | - | Sequence of incidents (e.g., data disclosure, DDoS, system manipulation) that structure the exercise narrative. | [12,53] |
| Purpose | Collaboration, Awareness, Skill Development | High-level intent of the scenario; collaboration is rarely mentioned, while awareness and skill development are common. | Own analysis |
| Scenario Type | Dynamic, Static, Security Testing, Experimentation, Education | Typology of scenarios based on structure and objectives. | Own analysis |
| Tools | - | Collection of hardware and software components used to simulate real-world environments. | [11,13,54] |
| Domain | - | Technological scope of the scenario, such as IoT, SCADA, forensics, or web security. | [55] |
| Sector | - | Industry context in which the scenario is applied, for example, energy, maritime, or defence. | [56,57,58] |
| Gamification | - | Competitive and game-like elements embedded into scenario design. | [59,60,61] |

| Characteristic | Sub-Characteristic | Description | Refs |
|---|---|---|---|
| Environment Type | Simulation | A simplified representation of a system designed to mimic general behaviors. | [22] |
| Environment Type | Emulation | A more detailed replication including real software or hardware to reflect operational systems. | [4,22] |
| Environment Type | Hybrid | A combined setup using both simulated and emulated components. | [22] |
| Environment Type | Physical | A tangible and often lab-based infrastructure used to mimic real-world systems. | [20,23,24] |
| Environment Type | Hardware | Uses physical devices such as routers and switches for realism. | [25] |
| Environment Type | Federation | Links multiple cyber ranges to create distributed or large-scale testbeds. | [21] |
| Generation Tools | Traffic Generation | Produces background or simulated user activity (e.g., HTTP requests, clicks, logs). | [14,15,16] |
| Generation Tools | Attack Generation | Automates or simulates known real-world attacks such as Stuxnet or Havex. | [17,18] |
| Generation Tools | User Emulation | Simulates typical user activity to produce background noise or interaction patterns. | [19,20,21] |

| Characteristic | Sub-Characteristic | Description | Refs |
|---|---|---|---|
| Threat Forecasting | - | Predicting the likelihood, timing, and financial consequences of future cyber-attacks. | [12,62] |
| Choices and Implications | - | Evaluating cost–benefit trade-offs, such as investing in cyber defence tools versus accepting residual risk. | [12,62] |
| Mitigation Impact | - | Analyzing the cost-efficiency of different defensive strategies under various threat conditions. | [12,62] |
| Assessment Models | - | Combining data from risk assessments, threat forecasts, and incidents to justify investment or gauge resilience. | [12,62] |

| Characteristic | Sub-Characteristic | Description | Refs |
|---|---|---|---|
| Team Type | Red Team | Offensive actors who simulate attacks. Can be human participants or system-generated. | [11,12,13,30] |
| Team Type | Blue Team | Defensive participants, typically the main trainees, focused on detecting and mitigating attacks. | [11,12,13,30] |
| Team Type | Yellow Team | Roles that increase scenario realism (e.g., simulated users, IT staff). May be played or emulated. | [11] |
| Team Type | White Team | Exercise facilitators, observers, and evaluators responsible for control and oversight. | [11] |
| Team Type | Green Team | Technical support team maintaining infrastructure during the exercise. | [11] |
| Team Type | Purple Team | Integrates Red and Blue team insights to improve tactical and strategic collaboration. | [11] |
| Agent Type | AI-Based Agents | Machine learning-driven systems that dynamically plan, adapt, or execute scenarios. | [63] |
| Agent Type | Autonomous Agents | Rule- or script-based agents that simulate behavior using deterministic logic (e.g., APT generators). | [64] |

| Characteristic | Sub-Characteristic | Description | Refs |
|---|---|---|---|
| After-Action Analysis | - | Structured post-exercise review of participant activity, often tied to debriefing and performance evaluation. | [11,51,54,65] |
| Tutoring | - | Educational support including documents, interactive hints, and training portals to scaffold participant learning. | [66] |
| Scoring | Type, Method | Performance evaluation based on impacts on confidentiality, integrity, and availability. May include live or dynamic scoring dashboards. Scoring systems are often implied but rarely defined; existing models include penalty-based and mathematical evaluations. This represents a gap in the current literature. | [57,60,67] |

| Characteristic | Sub-Characteristic | Description | Refs |
|---|---|---|---|
| Method | - | Describes how systems or participants are monitored, including event-driven alerts, system resource tracking, and file system changes (e.g., event-based, CPU, filesystem). | [68,69] |
| Dashboard | - | Interfaces used to visualize metrics such as attack indicators, participant scores, and resource usage (e.g., Kibana, custom UI). | [31,70] |
| Layer | - | Specifies the layer of the system or user activity being monitored, from low-level infrastructure to social/behavioral layers (e.g., network, application, hardware, social). | [11] |
| Performance | - | Includes monitoring of CPU/memory performance and user progress or behaviors within the scenario. | [71,72] |
| Progress | Actions, Inputs, Path, Teams, Users | Tracks participants’ journey through exercises, including their discrete actions, inputs, decision paths, team dynamics, and scoring. | [59,73] |
| Tools | - | Range from frameworks such as OpenStack’s dashboard and the ELK stack to custom-built solutions. Some implementations rely on bespoke components designed to capture data from complex system interactions. | [74] |

| Characteristic | Sub-Characteristic | Description | Refs |
|---|---|---|---|
| Resource | Data Storage | Storing scenario models, logs, and backups. | [35,76] |
| Resource | Metadata | Configuration details about environments and exercises. | [77,78] |
| Lifecycle Management | Creation | Building or cloning infrastructures, including digital twins. | [79] |
| Lifecycle Management | Editing | Modifying existing scenarios, though less commonly detailed in the literature. | [80] |
| Lifecycle Management | Deployment | Automating the rollout of scenarios or network nodes. | [12,81] |
| Lifecycle Management | Execution | Starting, pausing, and stopping exercises. | [82] |
| Lifecycle Management | Recovery | Cleaning up or resetting systems post-exercise. | [12,83] |
| Lifecycle Management | Generation | Orchestrating attacks, traffic, and events. Emphasizes when and how these are triggered. | [11] |
| Remote Access | - | Enables administrative or authorized user control of the range infrastructure remotely. | [11,12,84] |
| Role | - | Defines user types and permissions (e.g., Instructor, Student, Admin). | [57,81,85,86] |
| Management Interface | - | Dashboards and portals used to manage and monitor the range. | [87,88] |
| Command and Control | - | Low-level engines or interfaces that allow direct command execution within the range. | [11,75] |

| Characteristic | Sub-Characteristic | Description | Refs |
|---|---|---|---|
| Connection Technologies | - | Secure data links between cyber range components (e.g., VPN tunnels, encrypted TCP/IP stacks, peer-to-peer meshes). | [92] |
| Virtualization Layer | Virtual Machines | Hypervisor-based VMs that isolate multiple OS instances on shared hardware. | [93] |
| Virtualization Layer | Containerization | OS-level virtualization such as Docker or LXC enabling rapid, lightweight instantiation. | [94] |
| Virtualization Layer | Cloud Virtualization | Public, private, or hybrid IaaS provisioning on platforms like AWS or Azure; includes SaaS access to pre-built ranges. | [93,94] |
| System Architecture | Monolithic | All services deployed as a single unit; suitable for small deployments but less scalable. | [95] |
| System Architecture | Microservice | Disaggregated services offering elasticity at the cost of orchestration overhead. | [95] |
| System Architecture | Federation | Linking geo-distributed ranges via VPN or SD-WAN for joint exercises. | [92] |
| Artificial Intelligence | ML/DL | Machine and deep learning pipelines for threat detection and digital twin generation. | [96] |
| Artificial Intelligence | Large Language Models | Emerging use of LLMs for log summarization and scenario narration. | [62] |
| Tools | Security | Offensive/defensive toolsets such as IDS (Snort), DPI modules, and honeypots. | [97] |
| Tools | Monitoring | Telemetry and scoring frameworks (Zeek, ELK, Grafana) providing runtime visibility. | [94] |
| Tools | Orchestration | Infrastructure-as-Code and lifecycle-automation frameworks (TOSCA, CRACK, Kubernetes). | [98] |
| Database | - | Persistent or in-memory stores for scenario artifacts and telemetry (relational, document, vector, Redis). | [99] |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lillemets, P.; Bashir Jawad, N.; Kashi, J.; Sabah, A.; Dragoni, N. A Systematic Review of Cyber Range Taxonomies: Trends, Gaps, and a Proposed Taxonomy. Future Internet 2025, 17, 259. https://doi.org/10.3390/fi17060259
