1. Introduction

In healthcare emergency response systems, the set of activities to be performed must be promptly recorded for potential further investigations. In such cases, external authorities can challenge healthcare service providers regarding the timing of critical events, such as the arrival time of an ambulance at the designated location, the starting time of a required analysis, etc. Generally, the activities to be performed follow a well-established practice and, thus, they are not at the discretion of the intervening personnel; however, the timing of such activities can be subject to scrutiny. Disagreements about the proper timing of some events can escalate into disputes, and the healthcare service provider should demonstrate that the appropriate sequence of actions was implemented at the correct time.

A general practice is that the healthcare provider records events and their timestamps, as they occur, on a dedicated server. However, the integrity of such timestamps may be questioned when the authority responsible for recording the data is itself one of the disputing parties. For example, the challenging authority could argue that, in certain circumstances, the data were tampered with to make it appear that the ambulance arrived on time. In such cases, the defendant would not be able to demonstrate otherwise.

A centralized database is necessarily under the control of some authority. When no authority can be considered trustworthy, blockchain technology is well suited to ensuring data integrity and access control, thanks to mechanisms based on cryptographic signatures and hashes that confirm that the recorded data have not been modified [1].

Several blockchain applications have been proposed for healthcare systems, including the management of electronic health records (EHR) and patient data [2,3,4], emergency medical services [5,6], patient-centric data sharing [7,8], integration with IoT devices [9], clinical research [10,11], healthcare security and audit processes [12,13,14,15], and medical data sharing [15,16,17]. Certainly, patient confidentiality and secure data exchanges should be ensured [18]. Unlike methods that depend on trusted third parties or centralized timestamping servers, our framework leverages a blockchain model to eliminate such dependencies. In our solution, blockchain-stored hashes either provide a certification of data kept unaltered in the database or make alteration evident and easily detected. Previous blockchain-based timestamping approaches have not solved issues due to transaction delays, high costs, and privacy. Our dual-layered design, which combines real-time cloud storage with blockchain recording, resolves these issues by ensuring both efficiency and robust security even under high data loads. Importantly, our approach uniquely ensures non-repudiation of recorded events, a critical capability in healthcare incident response that, to the best of our knowledge, has not been addressed by previous solutions.

We propose a blockchain-based framework designed to certify the integrity and authenticity of data and their timestamps for events occurring in healthcare emergency response systems. The proposed system includes a health emergency aggregator (HEA), a software component that collects incident data, records such timestamped data on a cloud server, and hashes them using SHA-256, as soon as they occur. Then, data certification is ensured by storing hashes on an EVM-compatible blockchain via smart contracts. This dual-layered approach, which combines blockchain and cloud storage, is known as off-chain data storage, a well-known technique that provides scalability, security, and transparency, while reducing the costs related to the blockchain [19,20,21]. Later, the authenticity of recorded events can be checked by comparing the hashes computed on the data stored on a cloud server with those stored on the blockchain [22].

To assess the practicality of our solution, we performed a comprehensive evaluation of our prototype software system using a substantial data set extracted from the incident dispatch dataset provided by the NYC OpenData initiative [23]. Our findings demonstrate the efficacy of our approach, making it a cost-effective strategy for aggregating the numerous data points and timestamps that would otherwise necessitate more recordings per day.

The main contribution of this paper is the development of a decentralized framework that leverages blockchain technology to certify the integrity and authenticity of incident data. By employing a client-server and blockchain-based software architecture, our framework guarantees that all recorded events become certified; thus, it holds a proof of the recorded timing. This solution facilitates secure and transparent data handling and enhances trust among actors. Additionally, we performed a thorough cost assessment to demonstrate the framework’s economic viability, highlighting its cost-effectiveness in real-world scenarios. This combination of features provides emergency system authorities and patients with a trusted third-party mechanism to certify incident-related data efficiently and effectively.

The remainder of the paper is structured as follows. Section 2 reviews the most relevant approaches in the literature and compares them with our proposed method. Section 3 describes the architecture and methodology for preserving data integrity using blockchain. Section 4 presents experimental results from real-world simulations. Section 5 discusses the results and limitations of our approach. Finally, Section 6 draws our conclusion.

2. Related Works

Several approaches have been proposed to ensure the integrity and authenticity of long-term time-stamping (LTTS) systems, which leverage cryptographic techniques, trusted third parties, and decentralized architectures [24]. While some focus on formal security models, others explore blockchain-based solutions to eliminate central authorities.

Meng et al. [25] introduced a formal security model for LTTS schemes based on Message Authentication Codes (MACs), archives, and transient keys, addressing the lack of cryptographic analysis in alternative approaches. Despite enhancing robustness against cryptographic advancements, their solution depends on trusted third parties (e.g., archives and TSAs), incurs storage overhead, and requires frequent cryptographic updates. Similarly, Vigil et al. [26] explored decentralized timestamping for long-term digital archives but still relied on timestamping servers, making their approach vulnerable to centralization risks. Unlike these methods, our approach leverages blockchain to eliminate the need for trusted third parties while ensuring verifiable timestamps and built-in dispute resolution.

Blockchain-based timestamping has gained traction as an alternative to centralized solutions. Meng and Chen [27] proposed a scheme that records hash digests on a private blockchain, ensuring long-term verifiability without relying on external authorities. However, a private blockchain cannot provide the same guarantees as a public one, and the transaction delays introduced by the consensus mechanism, together with the risk that lost keys prevent verification, pose significant challenges. Wilson [28] extended this concept by implementing a permissioned blockchain for secure document timestamping, integrating off-chain storage and a quarantine mechanism. Although document management was enhanced, centralized control over the blockchain raises concerns regarding governance. Similarly, Zhang et al. [29] used transactions on the Ethereum blockchain for timestamping, benefitting from its immutability but introducing privacy risks and high operational costs. To address these limitations, our approach adopts a blockchain model that stores only data hashes, thus eliminating privacy risks and significantly reducing operational costs.

Scalability and computational efficiency remain key concerns in LTTS. Bin et al. [30] introduced IoETTS, a decentralized scheme for the Internet of Energy (IoE), leveraging a public blockchain to improve data integrity. However, the computational and energy overhead associated with blockchain operations, particularly those relying on Proof of Work consensus, conflicts with the efficiency goals of IoE. Similarly, Zhang et al. [31] proposed a scalable LTTS scheme using commitment signatures and bilinear pairing accumulators to reduce transaction costs, but their approach lacks analysis of real-world deployment.

In addition to system implementations, theoretical analyses of LTTS security and efficiency have emerged. Meyer [32] formalized timestamping mechanisms using transitive prefix authentication graphs, providing a structured comparison of efficiency and security trade-offs. However, maintaining authenticated prefix structures introduces storage and computational overhead, limiting large-scale applicability. Meng et al. [33] examined client-side security risks, identifying vulnerabilities in key management and cryptographic resilience, but did not offer concrete mitigation strategies. Existing solutions often involve trade-offs between security, decentralization, scalability, and efficiency. In contrast, our approach leverages a public blockchain (Ethereum) to ensure decentralization and scalability, while maintaining the integrity of the stored hash and reducing operational costs.

Blockchain technology has been used in several contexts where it is fundamental that data remain unchanged, tamper-proof, and provable [34,35,36]. In the healthcare domain, the need for advanced technologies is rapidly increasing, with blockchain playing a key role in the transformation of the sector [37,38,39].

Odeh et al. [3] provided an overview of blockchain-based applications in healthcare, focusing on blockchain’s ability to improve electronic health records (EHR), enhance data security, and support patient monitoring and drug traceability. They emphasized blockchain’s ability to ensure that only authorized parties can access sensitive medical data. Le et al. [4] proposed a permissioned blockchain system that uses the Hyperledger Fabric platform to allow quick and secure access to health records during emergencies. The system enables patients to define access rules for their data, which are enforced through smart contracts.

Suthaputchakun et al. [5] explored blockchain’s potential to improve communication and security in emergency rescue operations, particularly in ambulance-to-everything communications. Their system provides secure data exchange between ambulances, hospitals, and other emergency services, preventing tampering and ensuring authenticity. Ksibi et al. [6] designed a blockchain-based system that integrates the Internet of Vehicles and the Internet of Medical Things to enhance emergency medical responses in smart cities, allowing the real-time transmission of accident victim data to nearby healthcare services.

Yue et al. [7] introduced a public blockchain architecture that empowers patients to control their healthcare data while ensuring privacy and enabling secure sharing among different healthcare providers. This decentralized approach improves data access and prevents unauthorized access and tampering. Griggs et al. [9] proposed a system that integrates a private blockchain with IoT medical devices, using smart contracts to automate notifications for medical interventions and ensure HIPAA-compliant data handling.

Lin et al. [13] presented a framework designed to address security concerns by ensuring secure mutual authentication and fine-grained access control. This framework leverages blockchain’s capabilities to provide confidentiality, auditability, and scalability, making it suitable for dynamic environments such as smart factories. Fernandez-Aleman et al. [12] introduced a blockchain-based system for managing audit logs among healthcare organizations, enabling interoperability and ensuring the integrity of log data. Nugent et al. [11] leveraged blockchain’s tamper-resistant features to secure clinical trial data, using Ethereum smart contracts on a permissioned blockchain to automate updates and ensure data transparency.

Benchoufi et al. [10] discussed how blockchain can address challenges in clinical research, such as data reproducibility, sharing, privacy, and patient enrollment. They emphasized blockchain’s decentralized, transparent, and tamper-resistant nature, which is crucial for maintaining data integrity in clinical trials. Zheng et al. [8] proposed a hybrid blockchain-cloud system for secure sharing of personal health data, using blockchain for transaction management and validation, while cloud storage handles large datasets.

Shi et al. [14] reviewed how blockchain technology was used to enhance the security and privacy of electronic health record (EHR) systems. They highlighted critical limitations of traditional EHR systems, such as data breaches, unauthorized access, and lack of transparency, and proposed that blockchain decentralization, immutability, and access control capabilities address these challenges. Practical use cases include secure patient data sharing, tamper-proof audit trails, and privacy enforcement through smart contracts. However, the study acknowledges significant barriers to adoption, including scalability, high computational costs, integration complexity, and regulatory constraints.

Xia et al. [16] proposed MeDShare, a blockchain-based system designed to facilitate medical data sharing in a trust-less environment. MeDShare ensures data provenance, auditing, and control for shared medical data in cloud repositories among big data entities. The system monitors entities accessing data for malicious use and records all data transitions and sharing activities in a tamper-proof manner. It employs smart contracts and an access control mechanism to effectively track data behavior and revoke access to offending entities upon detecting permission violations.

Similarly, Xia et al. [15] addressed the challenges of securely sharing electronic medical records (EMRs) in cloud environments, particularly concerning patient privacy and data breaches. The authors proposed a permissioned blockchain-based data-sharing framework that leverages the immutability and autonomy properties of blockchain technology to improve access control for sensitive data stored in the cloud. Their system utilizes a permissioned blockchain, granting access exclusively to invited and verified users, thus ensuring accountability by maintaining a log of all user actions.

Azaria et al. [17] introduced MedRec, a private blockchain-based system designed to streamline access management and permissions for medical data. It addresses the fragmentation and inaccessibility of medical records by enabling patients to retain control over their data while providing secure, auditable access to healthcare providers. However, MedRec faces several challenges, including scalability limitations, high transaction costs, privacy risks from metadata exposure, and the complexity of patient and provider adoption. Additionally, regulatory compliance, energy consumption due to Ethereum’s Proof of Work, and integration with existing EHR systems pose significant barriers to real-world deployment.

Patientory [40] is a blockchain-based peer-to-peer network for securely storing and sharing electronic medical records (EMRs). It integrates with existing EHR systems, allowing patients and healthcare providers to access medical data while ensuring security and compliance through smart contracts and the token economy. However, the approach faces several challenges, including scalability limitations, high transaction costs, privacy risks from off-chain storage, and regulatory compliance issues, particularly with data immutability.

Previous studies, reported above, have explored a wide range of blockchain-based applications in healthcare, primarily focusing on authentication, data privacy, access control, and data integrity. However, privacy risks and scalability limitations remain significant challenges, restricting the real-world applicability of these approaches. In contrast, our approach innovatively leverages blockchain technology to ensure the authenticity and integrity of event timestamps by storing only their hashes. This design choice significantly reduces blockchain costs while enhancing system maintainability, addressing a key limitation of existing research, where escalating costs hinder scalability [21]. Furthermore, our framework introduces event filtering mechanisms, allowing the selection and storage of only relevant events to optimize resource usage. It also incorporates data aggregation techniques to efficiently group multiple event records, further minimizing blockchain transaction costs. To validate its practicality, we conducted extensive experiments on real-world datasets, analyzing both transaction costs and the time required to securely store hashes on the blockchain. These strategies ensure that our solution is effective, cost-efficient, and feasible for the targeted medical domain.

3. System Architecture of the Decentralized Framework

3.1. Overview of the Main Components

Current limitations in healthcare emergency response systems highlight a critical need for effective solutions to certify the authenticity and integrity of events throughout the incident lifecycle. To address this shortcoming, we propose an architecture that integrates blockchain technology to provide a secure and transparent mechanism for event certification in emergency response systems. This architecture ensures that critical data related to each incident are certified, fostering trust and accountability in the system.

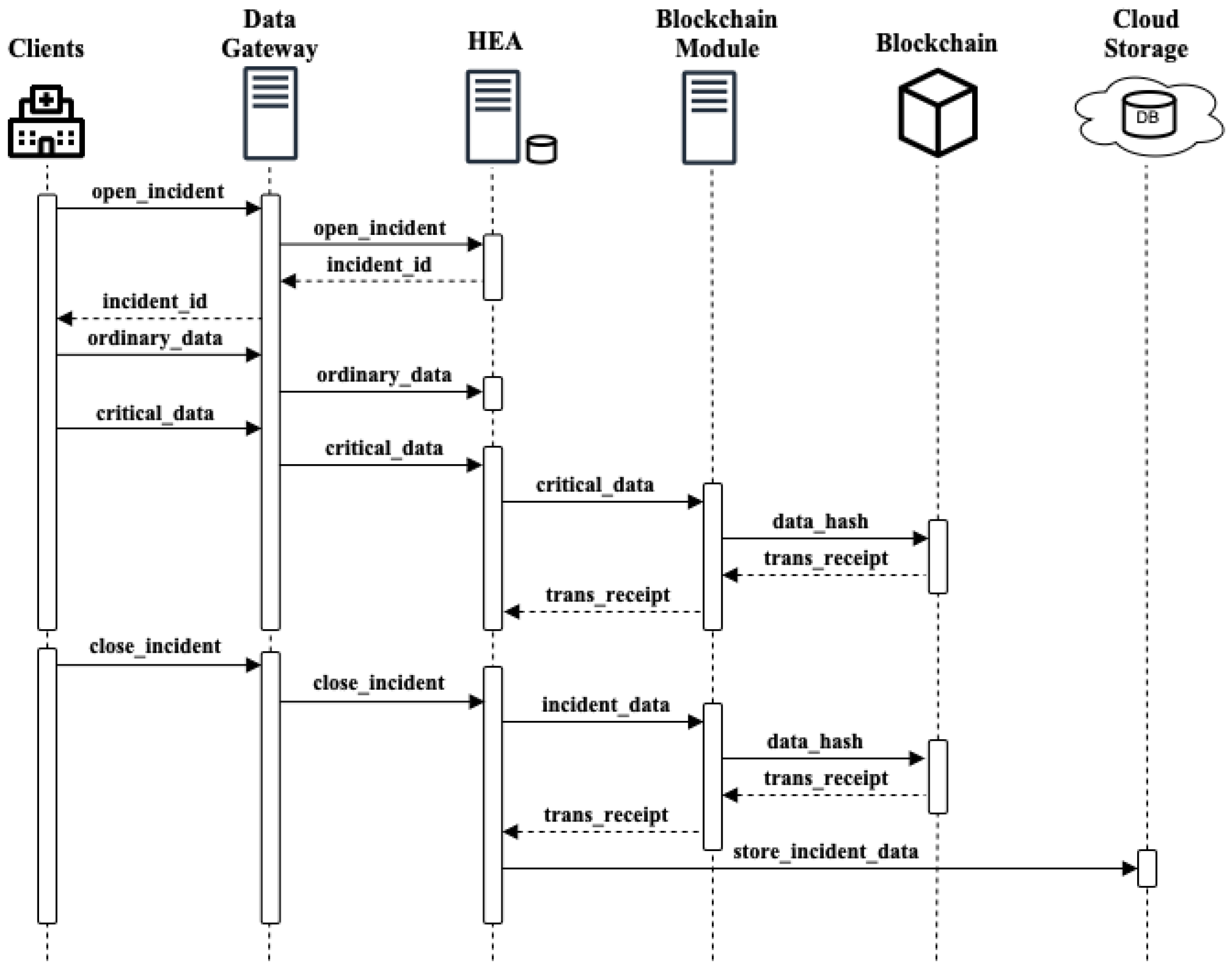

Figure 1 presents a high-level overview of the proposed architecture, outlining the key actors involved and the technologies employed.

At the heart of the architecture is the health emergency aggregator (HEA), the main component responsible for managing incidents and triggering the activities necessary to safeguard data integrity. The lifecycle of an incident begins with the emergency call, which leads to the creation of a new incident record. This call goes to the data gateway, a component that acts as a facade: it handles all the data coming from the clients, filters them, and forwards the incident data to the HEA, which stores them in a local database. The HEA collects all the data related to an incident until it is resolved and can be archived in the cloud for long-term storage. The HEA functions as a reliable storage system, ensuring that all incident-related data are securely held without undergoing further processing or analysis. This capability provides an accurate historical record of emergency events.

To further enhance security, a mechanism that can demonstrate the authenticity of incident data has been put in place. Critical events identified by the HEA are submitted to the blockchain module, where their hashes are permanently recorded. This blockchain integration allows checking whether stored data were kept unaltered and renders any tampering worthless, since it would be easily exposed in case of disputes. The benefit of this approach is twofold: the data are protected from potential tampering, and a transparent audit trail is kept for future reference. Timestamped events are periodically sent to the blockchain as they occur, whereas the analysis of data is performed when needed, long after the incident has been handled, e.g., when further analyses are started to gather statistics on the healthcare service, or to check whether proper activities were performed in due time.

The workflow followed by the HEA is presented in Algorithms 1 and 2, which outline systematic data handling and aggregation. Algorithm 1 shows that payloads received from the data gateway are categorized into one of four types (open, close, ordinary, and critical), each of which triggers specific operations to manage incidents and their associated data. For open payloads, a new incident record with a unique identifier is created and then stored. For close payloads, data related to the specific incident are retrieved and aggregated; then, the results are appended to a list of ordinary data. When processing ordinary and critical payloads, received data are stored; for critical payloads, data are immediately aggregated and the results are added to a dedicated critical data list.

Algorithm 1 Healthcare Emergency Aggregator (HEA) workflow when receiving data

- …
- while isListening do
  - …
  - switch (data_payload.type)
    - case open: … break
    - case close: … break
    - case ordinary: … break
    - case critical: … break
- end while
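The receive-side behavior described in the text for Algorithm 1 can be illustrated with a minimal Python sketch. It is not the authors' implementation: the class name `Aggregator`, the payload field names, the use of canonical JSON as the aggregation format, and the choice to include the close event itself in the closing batch are all assumptions made for illustration.

```python
import hashlib
import json
import uuid


class Aggregator:
    """Toy in-memory stand-in for the HEA receive workflow (hypothetical)."""

    def __init__(self):
        self.store = {}          # incident_id -> list of stored payloads
        self.ordinary_list = []  # aggregated batches awaiting the ordinary timeout
        self.critical_list = []  # aggregated batches awaiting the critical timeout

    @staticmethod
    def aggregate(payloads):
        # Assumed aggregation: canonical JSON of the batch plus its SHA-256 digest.
        blob = json.dumps(payloads, sort_keys=True).encode("utf-8")
        return {"data": list(payloads), "hash": hashlib.sha256(blob).hexdigest()}

    def handle(self, payload):
        kind = payload["type"]
        if kind == "open":
            # New incident record with a unique identifier, then stored.
            incident_id = str(uuid.uuid4())
            self.store[incident_id] = [dict(payload, incident_id=incident_id)]
            return incident_id
        incident_id = payload["incident_id"]
        if kind == "close":
            # Assumption: the close event is stored with the incident before
            # all of its data are retrieved and aggregated.
            self.store[incident_id].append(payload)
            self.ordinary_list.append(self.aggregate(self.store[incident_id]))
        elif kind == "ordinary":
            self.store[incident_id].append(payload)
        elif kind == "critical":
            # Critical data are stored and aggregated immediately.
            self.store[incident_id].append(payload)
            self.critical_list.append(self.aggregate([payload]))
        else:
            raise ValueError(f"unknown payload type: {kind!r}")
        return incident_id
```

In this sketch an ordinary payload only grows the incident record, while critical and close payloads each produce an aggregated batch whose hash is later sent to the blockchain by the timeout workflow.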

Algorithm 2 shows a timeout mechanism allowing us to periodically aggregate and secure both ordinary and critical data. Hashes of aggregated data are sent to a blockchain to ensure integrity and traceability, with transaction receipts stored for verification purposes. This workflow ensures efficient data management, secure storage, and streamlined incident handling in dynamic and high-stakes healthcare environments.

Algorithm 2 Healthcare Emergency Aggregator (HEA) workflow when timeouts occur

- while isUp do
  - switch (timeout)
    - case critical: … break
    - case ordinary: … break
- end while
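As a rough illustration of the timeout workflow, the following Python sketch flushes a pending list when its timer fires, hashes the aggregated batch, submits the hash, and keeps the receipt. The function names, the `send_hash` callable standing in for the blockchain module, and the JSON-based aggregation are assumptions, not details taken from the paper.

```python
import hashlib
import json


def flush(pending, send_hash):
    """Aggregate the pending batches, submit one hash, and return the receipt."""
    if not pending:
        return None
    blob = json.dumps(pending, sort_keys=True).encode("utf-8")
    digest = hashlib.sha256(blob).hexdigest()
    receipt = send_hash(digest)  # hypothetical call into the blockchain module
    pending.clear()              # reached only if send_hash did not raise
    return {"hash": digest, "receipt": receipt}


def on_timeout(kind, queues, receipts, send_hash):
    """Handle a 'critical' or 'ordinary' timeout; each kind has its own queue."""
    result = flush(queues[kind], send_hash)
    if result is not None:
        receipts.append(result)  # stored for later verification
    return result
```

Using one queue per timeout kind would let critical data be certified on a shorter period than ordinary data, while each flush still costs a single transaction regardless of how many events it covers.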

3.2. Healthcare Emergency Aggregator

The healthcare emergency aggregator (HEA) component serves as a central hub for managing all incidents and their associated data, ensuring seamless coordination and integration within the emergency response system. For each incident, the aggregator receives comprehensive data from the data gateway, which initiates the incident record. This record holds the time of the occurrence, the situational context, and detailed information about the stakeholders assigned to the incident. Such stakeholders include ambulances; medical professionals, such as doctors and paramedics; healthcare facilities, such as hospitals; and other emergency response units.

The data gateway abstracts system complexity by providing a unified interface. Clients interact exclusively with the gateway, which consolidates incident data and forwards them to the HEA for processing and storage. Centralizing incident data through the HEA facilitates efficient resource allocation and real-time decision making. This approach is critical for prompt emergency responses and for maintaining a comprehensive repository for post-incident analysis and system optimization. Emergency assistance requests typically begin when a patient contacts the designated emergency telephone number. Upon receiving a call, the operator collects relevant information about the patient and their situation. These data are used to create a standardized incident record in the system, which the HEA manages. Each incident is assigned a unique identifier to ensure seamless tracking and management throughout its lifecycle.

Figure 2 presents a sequence diagram to show the interactions among the proposed components throughout the lifecycle of an incident. By showing the interactions among components of our proposed solution, we provide details of the communication handling (requests and answers), data filtering (separating ordinary and critical events), data transformation (aggregation and computing hashes) and permanent storage of data (hashes on the blockchain and data on the cloud server).

The lifecycle begins with the formal creation of the incident (open_incident), initiated by a starting event that triggers the sequence of actions required for resolution. HEA assigns a unique identifier (incident_id) to each incident and communicates this ID to clients, enabling consistent reference during subsequent data transmissions related to the incident. As the incident unfolds, updates on status changes, resource allocation, and priority adjustments are recorded, along with communication traces arising from coordination within emergency response teams. The lifecycle ends with a final event (close_incident) marking the resolution or closure of the incident. This process provides a comprehensive chronological record of the incident’s progression, offering valuable insights for accountability and continuous improvement.

The data collected during the lifecycle are categorized into two priority levels: ordinary and critical. Ordinary data encompass ancillary information such as the geographical dispatch area, postal code, and descriptive details of the situation. Although these data provide contextual understanding, they do not directly influence real-time decision making.

Critical data, in contrast, consist of timestamped events that document pivotal moments in the incident’s lifecycle, such as dispatch times, arrival times at the scene, and specific interventions performed. These data are essential for real-time coordination and post-incident analysis. To ensure operational efficiency, legal compliance, and process improvement, the integrity and accuracy of critical data must be maintained rigorously.

The HEA employs a multi-layered approach to incident data management that ensures both system responsiveness and data integrity. A local database serves as a temporary cache for incident data, enabling rapid access and updates during an incident’s active phase. When an incident is closed, the HEA consolidates all related information, including timestamps, resource allocations, stakeholder actions, and blockchain transaction receipts, into a coherent record. These data are then transferred to remote cloud storage, which serves as the permanent repository for historical data analysis and reporting, offering scalability, redundancy, and accessibility.

To further enhance data security and trust, the HEA uses blockchain technology to save hashes of incident data. Such hashes, being immutable, prove whether the exhibited data are the original data or, if the hashes do not match, that the data were altered. After critical data are received, they are stored in the local database and then passed to the blockchain module for subsequent aggregation and submission. Likewise, when an incident is closed, all associated data are aggregated and their corresponding hashes are submitted to the blockchain. If a blockchain transaction does not receive confirmation, the affected data batch and its hash remain securely stored in the local database, and the HEA subsequently reissues the hash-writing request to the blockchain module. This mechanism leverages the inherent resilience of blockchain technology, ensuring that, despite any transient failures, the hash writing is ultimately and reliably completed. Once a blockchain transaction is completed, a receipt is generated and attached to the incident record to facilitate later verification.
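The reissue behavior for unconfirmed transactions can be sketched as a bounded retry loop. This is only an illustration under stated assumptions: `send_hash` is a hypothetical callable that returns a receipt, or `None` when no confirmation arrives, and the attempt limit and delay are arbitrary parameters that the paper does not specify.

```python
import time


def submit_with_retry(data_hash, send_hash, max_attempts=5, delay_s=0.0):
    """Re-issue a hash-writing request until a receipt is obtained.

    send_hash is a hypothetical callable that returns a receipt, or None
    when the transaction was not confirmed.
    """
    for attempt in range(1, max_attempts + 1):
        receipt = send_hash(data_hash)
        if receipt is not None:
            return receipt, attempt
        if attempt < max_attempts:
            time.sleep(delay_s)  # wait before asking the blockchain module again
    return None, max_attempts
```

A loop of this kind is safe only if writing the same hash twice is harmless, for example when an earlier transaction is confirmed late; whether the deployed contract behaves this way is not stated in the text and is assumed here.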

This dual-layered storage strategy, combining cloud storage with blockchain solutions, balances scalability with security. It reinforces the reliability and integrity of the emergency response system, fostering stakeholder confidence and ensuring compliance with legal and regulatory requirements.

3.3. Blockchain Module

Blockchain has emerged as an innovative technology in secure and decentralized data management, offering properties that are particularly relevant to sensitive domains such as the healthcare sector. At its core, blockchain is a distributed ledger system that records transactions in a series of cryptographically linked blocks. These blocks are designed to be immutable, ensuring that data, once added, cannot be modified or deleted, thus providing a high level of security and trust. Ethereum and other Ethereum Virtual Machine (EVM)-compatible blockchains have further advanced this technology by enabling programmable smart contracts. These contracts operate as self-executing code triggered by predefined conditions, eliminating the need for intermediaries while ensuring transparency.

In ambulance dispatch and patient data management, blockchain provides a secure and reliable framework to validate critical events while safeguarding sensitive information. The proposed system uses an Ethereum Virtual Machine (EVM)-compatible blockchain to certify the incident data records kept in cloud storage.

For enhanced security, incident data are hashed using the SHA-256 algorithm, generating a digital fingerprint that makes any later modification of the data detectable. This hash is then immutably recorded on the blockchain, establishing a transparent and unalterable record of all system transactions. This mechanism secures data and facilitates independent verification, as the blockchain serves as a reliable audit trail that can be referenced to confirm that the recorded data have not been tampered with.

The proof of the existence of an event generated by the client (mobile application) is represented by the concatenated hash of three components: a description of the event (e.g., a patient requesting an ambulance), its timestamp, and an optional document associated with the event (e.g., a picture of a wound or a patient’s ID card). The resulting hash is stored on the blockchain to guarantee the proof of existence of the event while preserving its integrity and validity. Hash writings and retrievals are performed by the DataHashStorage smart contract deployed on an EVM-compatible blockchain. The contract provides two core functions: (i) storeDataHash(bytes32 _dataHash), and (ii) getBlockTimestampFromHash(bytes32 _dataHash).
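One way to obtain a 32-byte value suitable for a `bytes32` argument from the three components is sketched below in Python. The text does not specify how the components are encoded or combined, so the scheme here (hash each component with SHA-256, concatenate the three digests, and hash the result) and the function name `event_hash` are assumptions chosen for illustration.

```python
import hashlib


def event_hash(description: str, timestamp: int, document: bytes = b"") -> bytes:
    """Return a 32-byte proof-of-existence hash for one event (assumed scheme)."""
    parts = (
        hashlib.sha256(description.encode("utf-8")).digest(),
        hashlib.sha256(str(timestamp).encode("ascii")).digest(),
        hashlib.sha256(document).digest(),  # empty bytes when no document is attached
    )
    # Hashing fixed-length digests avoids ambiguity about where one
    # component ends and the next begins.
    return hashlib.sha256(b"".join(parts)).digest()


h = event_hash("patient requests ambulance", 1700000000)
print("0x" + h.hex())  # hex form, as it could be passed to storeDataHash
```

Because the timestamp is one of the hashed components, changing it by even one second yields a different hash, which is what makes a disputed timestamp checkable later.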

The storeDataHash() function takes as input the hash sent by the blockchain module and records it on the blockchain using an internal mapping. This mapping associates each hash with the timestamp of the block in which it was recorded. The block timestamp, represented as a uint256 type in Solidity, is measured in seconds since the Unix epoch. The HEA retrieves the block timestamp from the transaction receipt and associates it with the corresponding data batch in its local database.

The getBlockTimestampFromHash() function confirms the presence of a specific hash in the blockchain storage. It takes the hash as input and uses it to query the internal mapping, retrieving the corresponding block timestamp if the hash is found. This timestamp serves as proof of the hash’s existence on the blockchain, confirming the associated data’s registration. From the block timestamp, the existence of the block and the hash writing transaction can be verified. Conversely, if the function returns the default value of zero, it indicates that the provided hash is not present in the blockchain storage, showing it has not been recorded.
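The semantics of the two contract functions can be mimicked with a small in-memory Python model. This is not the Solidity contract: the class and method names are hypothetical, the block timestamp is passed in explicitly because there is no chain, and keeping the first timestamp when a hash is stored twice is an assumption that the text does not confirm.

```python
class DataHashStorageModel:
    """In-memory model of the hash -> block-timestamp mapping (hypothetical)."""

    def __init__(self):
        self._timestamps = {}  # 32-byte hash -> seconds since the Unix epoch

    def store_data_hash(self, data_hash: bytes, block_timestamp: int) -> None:
        if len(data_hash) != 32:
            raise ValueError("expected a 32-byte hash (bytes32)")
        # Assumption: a hash that is already present keeps its first timestamp.
        self._timestamps.setdefault(data_hash, block_timestamp)

    def get_block_timestamp_from_hash(self, data_hash: bytes) -> int:
        # Mirrors the described behavior: zero means the hash was never recorded.
        return self._timestamps.get(data_hash, 0)
```

Returning zero for an unknown hash matches the default value of an unset Solidity mapping entry, which is why a zero result can be read as "not recorded".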

Disputes regarding data accuracy may arise when external parties (e.g., patients) report timestamps for critical events (e.g., the arrival time of an ambulance) that differ from those recorded by the system. In these cases, blockchain-stored data hashes are employed to verify the integrity and accuracy of the timestamps. The process involves retrieving the complete incident record from remote cloud storage and computing two hashes: (i) the system-recorded data hash, and (ii) the hash of the data with the disputed timestamp substituted. The smart contract function getBlockTimestampFromHash() is then called to verify the existence of these hashes on the distributed ledger. A matching hash confirms the data’s integrity and the correct timestamp, whereas a mismatch indicates invalid data, thereby resolving the dispute.

Table 1 illustrates an example using SHA-256 hashes computed from incident data.

In Table 1, the first row shows the hash computed from the system-recorded incident data (including its timestamp), while the second row displays the hash computed with the external, disputed timestamp. The presence of only the first hash on the blockchain confirms the integrity of the stored data and the accuracy of its timestamp.
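The dispute check described above can be sketched as follows. This is a minimal illustration under several assumptions: a single record is treated as the hashed unit, records are serialized as canonical JSON before hashing, and the `lookup` callable stands in for a call to getBlockTimestampFromHash(). The record contents and the helper names are hypothetical.

```python
import hashlib
import json


def record_hash(record: dict) -> str:
    # Assumption: canonical JSON (sorted keys, compact separators) so that
    # the same record always serializes to the same bytes.
    payload = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def resolve_dispute(record: dict, field: str, disputed_value: str, lookup) -> dict:
    """lookup(hash_hex) returns a block timestamp, or 0 if the hash is absent."""
    recorded = record_hash(record)
    substituted = record_hash({**record, field: disputed_value})
    return {
        "recorded_on_chain": lookup(recorded) != 0,
        "disputed_on_chain": lookup(substituted) != 0,
    }


# Hypothetical record and a dictionary standing in for the ledger.
record = {
    "incident_id": "demo-001",
    "First_Hosp_Arrival_Datetime": "2023-10-03T10:15:00",
}
ledger = {record_hash(record): 1_696_328_400}

result = resolve_dispute(
    record,
    "First_Hosp_Arrival_Datetime",
    "2023-10-03T10:45:00",
    lambda h: ledger.get(h, 0),
)
```

Here only the hash of the system-recorded data is found, which corresponds to the situation shown in Table 1.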

It is important to note that although the HEA manages incident data, our design incorporating a public blockchain provides independent external verification. In situations such as legal disputes or regulatory audits, external stakeholders require assurance that recorded event timestamps and data integrity have not been subject to unilateral modification. By utilizing a public blockchain rather than an Ethereum sidechain or a private blockchain under HEA’s control, the audit trail remains decentralized, trustless, and immune to centralized tampering. The immutability of the blockchain guarantees that once a hash is recorded, it remains unalterable, establishing a robust record that is time-stamped.

Storing only the data’s hash on-chain offers several advantages. The hash serves as an exact digital fingerprint of the original data, so any change to the data can be detected without exposing the data themselves. When data are retrieved from secure off-chain (cloud-based) storage, the system recomputes the hash and compares it with the corresponding blockchain entry, ensuring that the data remain unaltered. Moreover, because the hash is generated using a one-way cryptographic function, no sensitive information is revealed, which supports compliance with privacy requirements. The blockchain’s immutability further guarantees that once a hash is recorded, it cannot be modified, creating a reliable audit trail that verifies the data’s existence at a specific time. Finally, storing only the hash, rather than the complete dataset, keeps the solution cost-effective and scalable by avoiding the substantial expenses associated with on-chain storage of large data volumes.

To efficiently manage the storage of critical and ordinary data, the system employs two strategies: time-based and threshold-based hash storage. The time-based strategy ensures that blockchain updates occur at regular intervals, aggregating critical data every 10 min while appending ordinary data less frequently, such as every 60 min. This strategy provides predictability and consistency, ensuring that data are recorded regardless of fluctuations in event frequency. In contrast, the threshold-based strategy dynamically adapts the frequency of blockchain writings based on real-time data flow. A new hash is computed only when the number of accumulated critical records surpasses a predefined threshold, allowing this method to adaptively respond to variations in event occurrence. Unlike the time-based approach, which maintains a fixed schedule, the threshold-based method optimizes resource usage by writing data only when necessary, reducing suboptimal transaction requests.
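The difference between the two triggers can be made concrete with a short simulation. The sketch below is an illustration, not the system's implementation: the function names are hypothetical, event times are plain seconds, and a write is assumed to fire as soon as the pending count reaches the threshold.

```python
def time_based_write_times(duration_s: int, interval_s: int = 600) -> list:
    # One write at the end of every fixed interval, regardless of event volume.
    return list(range(interval_s, duration_s + 1, interval_s))


def threshold_write_times(event_times, threshold: int) -> list:
    # One write each time `threshold` critical records have accumulated.
    write_times, pending = [], 0
    for t in sorted(event_times):
        pending += 1
        if pending >= threshold:
            write_times.append(t)
            pending = 0
    return write_times


# A full day on a 10-minute schedule.
daily_schedule = time_based_write_times(24 * 3600)

# Synthetic burst: 300 events, one every 10 s, with a threshold of 100.
burst = [i * 10 for i in range(300)]
burst_writes = threshold_write_times(burst, 100)
```

The fixed schedule yields the same number of writes every day, whereas the threshold trigger produces writes only while events keep arriving.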

The choice between these strategies depends on the specific system requirements: the time-based strategy is suitable when regular and predictable updates are required, while the threshold-based approach is more effective in scenarios where event frequency is highly variable and periodic writing may lead to inefficiencies. For enhanced flexibility and efficiency, the system can also adopt a hybrid strategy that dynamically combines time-based and threshold-based approaches. This combined strategy allows the system to remain cost-effective as the frequency of events varies, providing configurable parameters to specify the lower and upper bounds for event aggregation before writing. The experimental evaluation of these three strategies is provided in the following section.

4. Experiments and Results

To perform our experiments, we employed the Incident Dispatch Data publicly available on the NYC OpenData website [23]. Each entry in the dataset contains detailed information about a specific incident, spanning from the time it was opened in the dispatch system to the moment it was marked as closed. At the time of writing, the dataset includes entries up until 3 October 2024.

To model critical data in our proposed approach, we extracted all dataset fields containing timestamps, which were generated and recorded by the dispatch system whenever significant events occurred. A complete list of these fields (7 out of 31 columns in the dataset), along with their descriptions, is provided in Table 2.

The dataset contains 24 additional fields that provide supplementary details about each incident, such as the borough name, dispatch location zip code, and police precinct code. These fields, categorized as ordinary data in our system, are collected by HEA until the corresponding incident is officially closed. Due to space constraints, Table 3 presents a representative subset of these fields, including example values to illustrate the data format. The complete list of fields and their descriptions is available in Appendix A and on the dataset’s homepage [23]. The salient characteristics of the dataset are summarized in Appendix B.

HEA operated continuously on a central server, receiving and storing incoming incident data in its local database. The data gateway was deployed on a cloud node, handling client interaction and forwarding incident data to HEA. The blockchain module, operating on a separate node, processed the incident data by generating a hash and recording it on the blockchain according to a predefined storage strategy. Once the transaction was successfully written on the blockchain, a transaction receipt was generated and returned to HEA to be logged in the local database. Subsequently, both the receipt and the corresponding incident data, along with the computed hash, were transmitted to the cloud database. In our experiments, we evaluated three storage strategies: (i) time-based hash storage, (ii) threshold-based hash storage, and (iii) combined hash storage.

4.1. Time-Based Hash Storage

In the time-based strategy, the blockchain module selected data at fixed time intervals to form a transaction batch. We set a time interval of 10 min to select critical data, and a time interval of 60 min to select the complete record of closed incidents, which comprises all the ordinary data. Subsequently, the transaction batch was processed using the SHA-256 hash function, and the resulting data hash was submitted to the DataHashStorage smart contract for permanent storage on the blockchain. The contract was deployed on the Ethereum Sepolia testnet. Its address, the contract code, and the complete transaction history are accessible on the corresponding Sepolia Etherscan page (https://sepolia.etherscan.io/address/0x2a5a789bebee29c8fc824d76a82d3ae787b6d1f5, accessed on 7 February 2025).

The DataHashStorage contract implements the function storeDataHash(bytes32 _dataHash), which accepts the SHA-256 hash as input and stores it in an internal mapping. This mapping persistently associates each hash with the timestamp of the block in which it was included, consuming some portion of the storage space in the Sepolia network.

We ran extensive experiments to evaluate the transaction fees and the time required to write hashes to the blockchain. In total, we executed 2050 transactions on the Sepolia network, spanning different days of the week and different times of the day. The success rate for executing transactions was 100%.

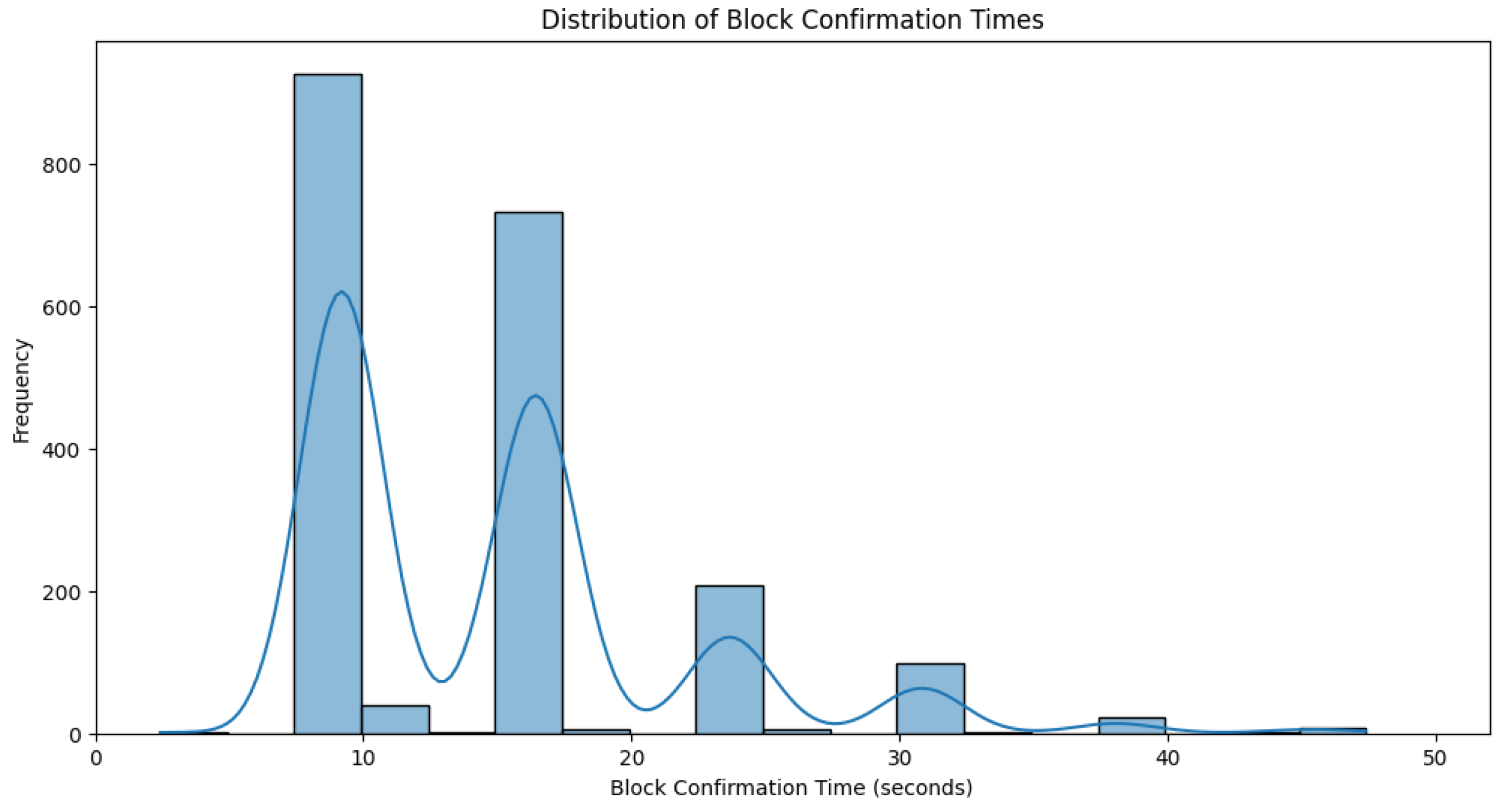

Figure 3 presents a histogram showing the distribution of block confirmation times.

Data distribution analysis revealed that block confirmation times tend to cluster. To identify the time intervals corresponding to each cluster, we used the kernel density estimate (KDE) inferred from the data. Specifically, we extracted the relative minima of the multimodal KDE plot (see Figure 3) and used the corresponding x-axis values as the boundaries for each time cluster.
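The boundary extraction can be sketched with a hand-written Gaussian KDE evaluated on a grid. This is only an illustration of the technique: the bandwidth, the grid step, and the synthetic sample values are assumptions, and the tool actually used to produce the KDE in the experiments is not specified here.

```python
import math


def gaussian_kde(samples, bandwidth: float):
    # Returns a function evaluating the Gaussian kernel density estimate.
    norm = 1.0 / (len(samples) * bandwidth * math.sqrt(2.0 * math.pi))

    def density(x: float) -> float:
        return norm * sum(
            math.exp(-0.5 * ((x - s) / bandwidth) ** 2) for s in samples
        )

    return density


def kde_minima(samples, bandwidth: float, grid_step: float = 0.1) -> list:
    # Evaluate the KDE on a regular grid and keep strict interior local minima.
    density = gaussian_kde(samples, bandwidth)
    lo, hi = min(samples), max(samples)
    steps = int((hi - lo) / grid_step) + 1
    xs = [lo + i * grid_step for i in range(steps)]
    ys = [density(x) for x in xs]
    return [
        xs[i]
        for i in range(1, len(xs) - 1)
        if ys[i] < ys[i - 1] and ys[i] < ys[i + 1]
    ]


# Synthetic confirmation times (seconds) forming two clusters.
times = [9.0, 9.5, 10.0, 10.5, 11.0, 20.0, 20.5, 21.0, 21.5, 22.0]
boundaries = kde_minima(times, bandwidth=1.0)
```

Each returned value separates two adjacent clusters; samples are then assigned to a cluster according to the interval in which they fall.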

Table 4 presents reference metrics and count values for each cluster.

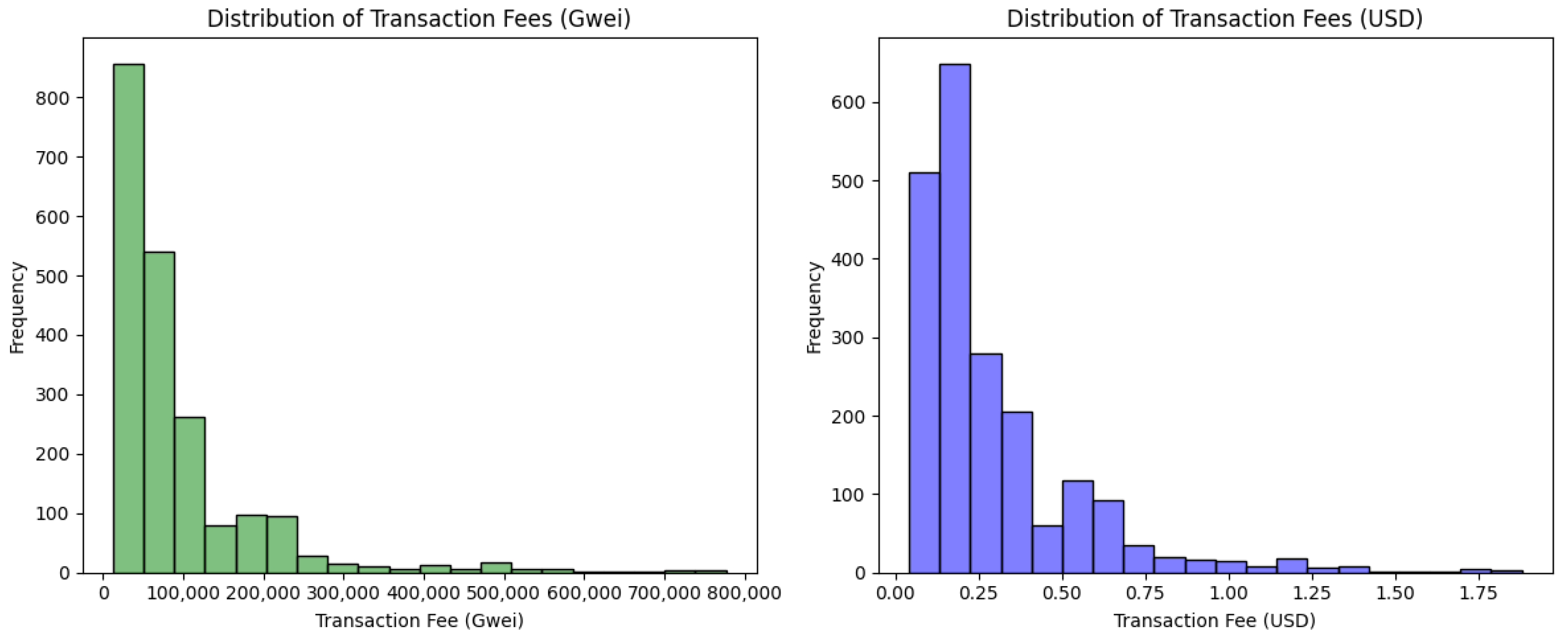

To assess the costs associated with hash writing, we analyzed the transaction receipts and calculated the fees in Gwei (1 Gwei = 10⁻⁹ ETH). The total fee was determined by multiplying the gas price at the time of block creation by the gas units consumed. In our experiments, we set a gas limit of 44,500 units and a maximum priority fee of 0.001 Gwei per transaction. To convert fees from Gwei to USD, we used CoinGecko, a publicly accessible cryptocurrency data aggregator that provides real-time exchange rates, including ETH-USD.
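The fee calculation amounts to a multiplication followed by two unit conversions. The sketch below uses illustrative input values (gas used, gas price, and exchange rate) that are not taken from the experiments; the function names are hypothetical.

```python
from decimal import Decimal

GWEI_PER_ETH = Decimal(10) ** 9  # 1 ETH = 10^9 Gwei


def fee_gwei(gas_used: int, gas_price_gwei: Decimal) -> Decimal:
    # Total fee = gas units consumed x gas price paid per unit.
    return Decimal(gas_used) * gas_price_gwei


def fee_usd(gas_used: int, gas_price_gwei: Decimal, eth_usd: Decimal) -> Decimal:
    # Convert Gwei to ETH, then ETH to USD at the given exchange rate.
    return fee_gwei(gas_used, gas_price_gwei) / GWEI_PER_ETH * eth_usd


# Illustrative values only: 44,000 gas units at 3 Gwei, with ETH at USD 2000.
example_gwei = fee_gwei(44_000, Decimal("3"))
example_usd = fee_usd(44_000, Decimal("3"), Decimal("2000"))
```

Using `Decimal` avoids binary floating-point rounding when small ETH amounts are converted to a fiat currency.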

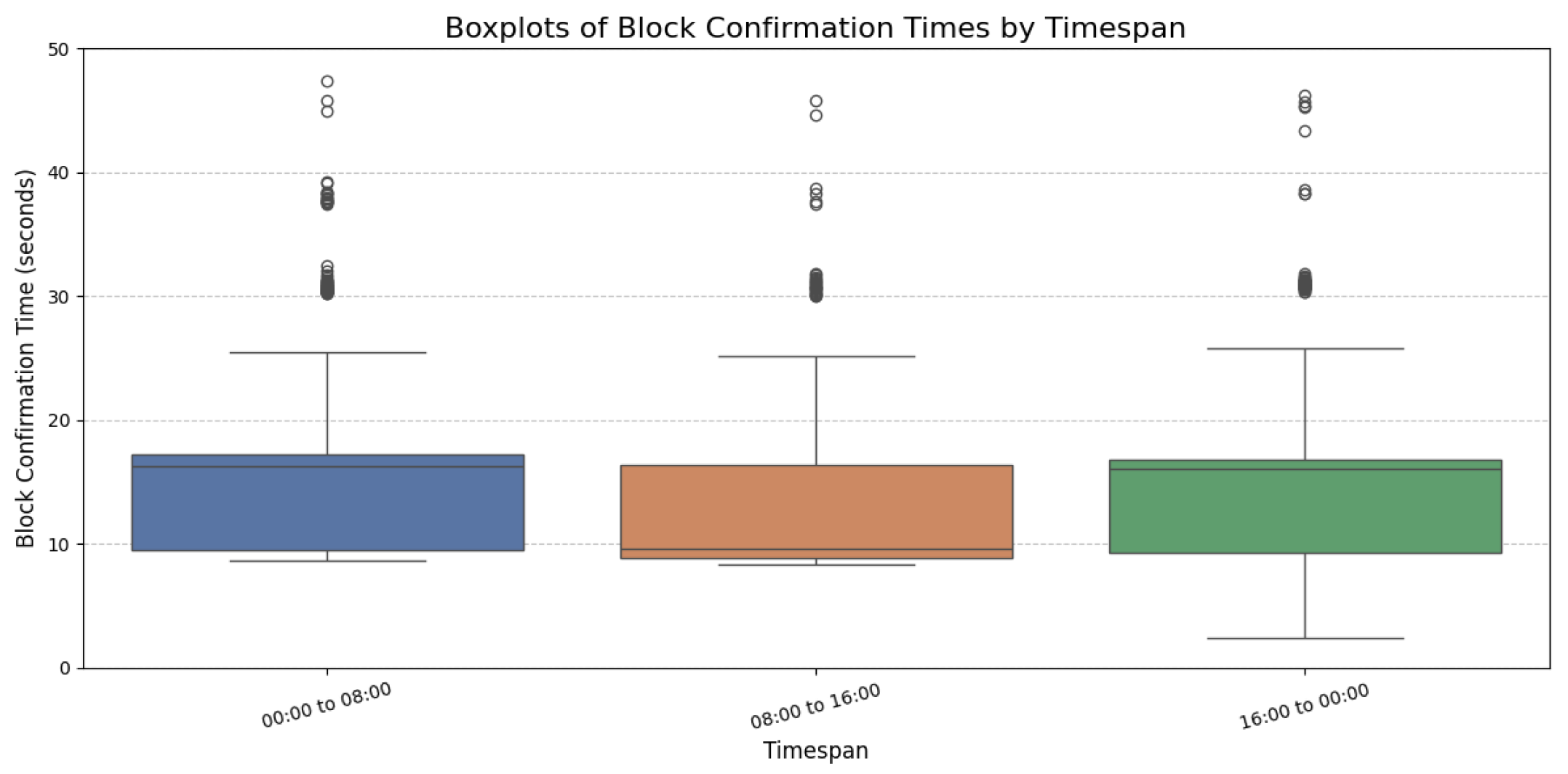

To evaluate the effect of the time of day on block confirmation times, we grouped transactions into three equally sized timespans, each spanning eight hours.

Table 5 reports the metrics for each transaction group.
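The grouping into eight-hour timespans can be sketched as below. The time zone used for grouping is not stated in the text, so the example simply uses the hour carried by each timestamp; the function names and the sample transactions are hypothetical.

```python
from datetime import datetime
from statistics import mean, median


def timespan_label(ts: datetime) -> str:
    # Map an hour of the day to one of three 8-hour timespans.
    start = (ts.hour // 8) * 8
    end = (start + 8) % 24
    return f"{start:02d}:00-{end:02d}:00"


def summarize(transactions) -> dict:
    # transactions: iterable of (timestamp, confirmation time in seconds)
    groups = {}
    for ts, confirmation_s in transactions:
        groups.setdefault(timespan_label(ts), []).append(confirmation_s)
    return {
        label: {"count": len(v), "mean": mean(v), "median": median(v)}
        for label, v in groups.items()
    }


# Hypothetical sample of three transactions.
sample = [
    (datetime(2025, 1, 1, 7, 59), 16.0),
    (datetime(2025, 1, 1, 9, 30), 9.0),
    (datetime(2025, 1, 1, 10, 0), 11.0),
]
summary = summarize(sample)
```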

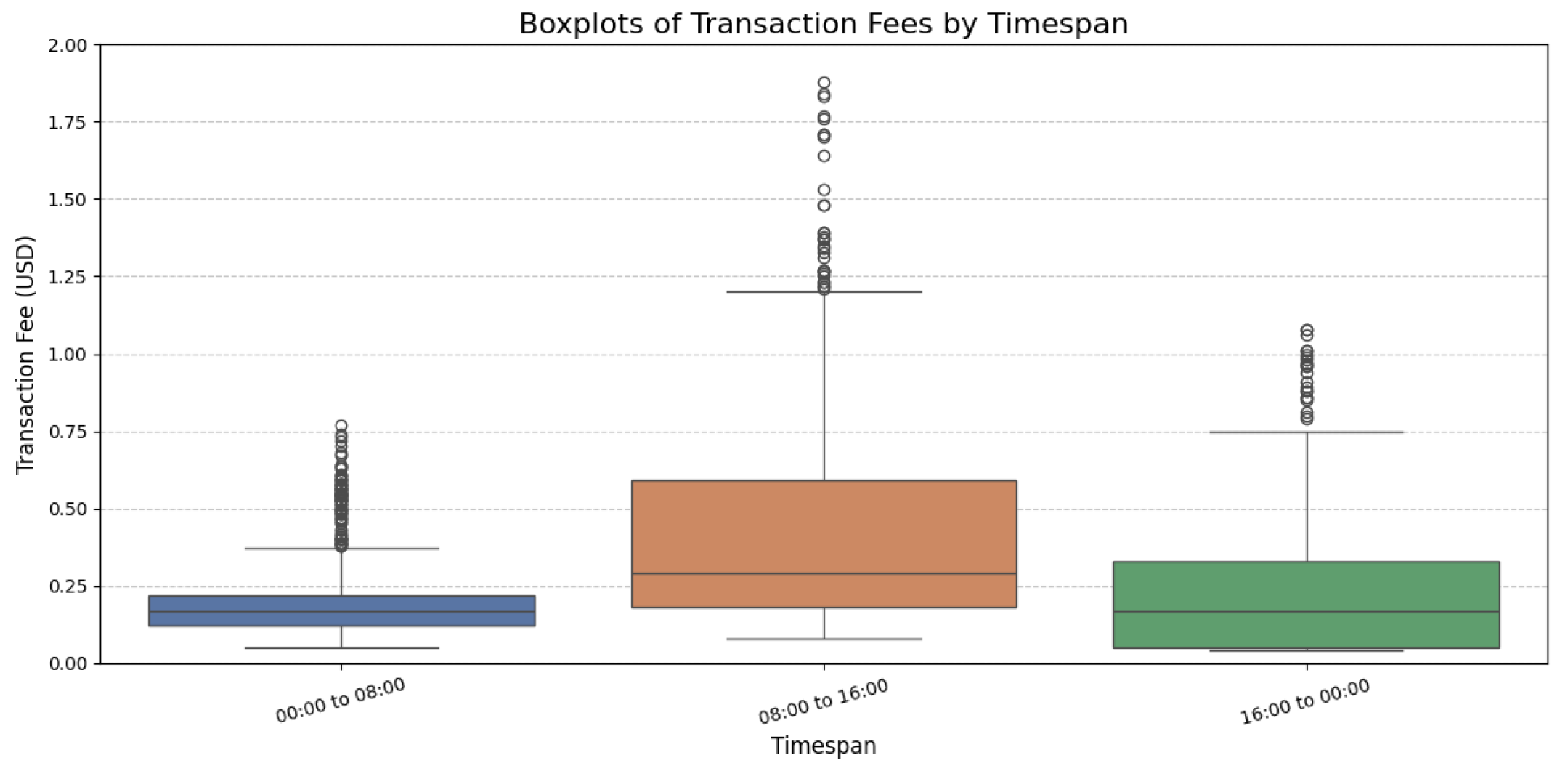

Figure 4 shows the results of this analysis as a plot of the distribution of transaction fees in Gwei and USD.

Figure 5 presents boxplots of transaction execution times, while Figure 6 displays boxplots of transaction fees.

4.2. Threshold-Based Hash Storage

In the threshold-based strategy, the blockchain module selected critical data from its local database to compute a hash only when a predefined threshold for the number of critical data entries was reached. The handling of ordinary data remained unchanged: Every 60 min, complete records of closed incidents were included in the transaction batch.

To determine the frequency of critical events in a real-world scenario, we performed a comprehensive analysis of the Incident Dispatch Data dataset. The dataset was cleaned and filtered as follows: first, records covering the span of one year, from 1 January 2023 to 31 December 2023, were extracted; subsequently, duplicates and incidents marked as cancelled were removed; and finally, entries lacking an incident conclusion timestamp were discarded. The resulting clean dataset consisted of 1,457,802 unique incident entries.

The analysis of the clean dataset revealed consistent daily incident counts throughout the year, with an average of 3994 incidents per day and a median of 4010. For each incident, up to seven critical events are recorded (see Table 2), though not all entries include every critical timestamp. For example, when an incident concludes with patient treatment at the location, the First_To_Hosp_Datetime and First_Hosp_Arrival_Datetime timestamps are not recorded and the incident is subsequently marked as closed.

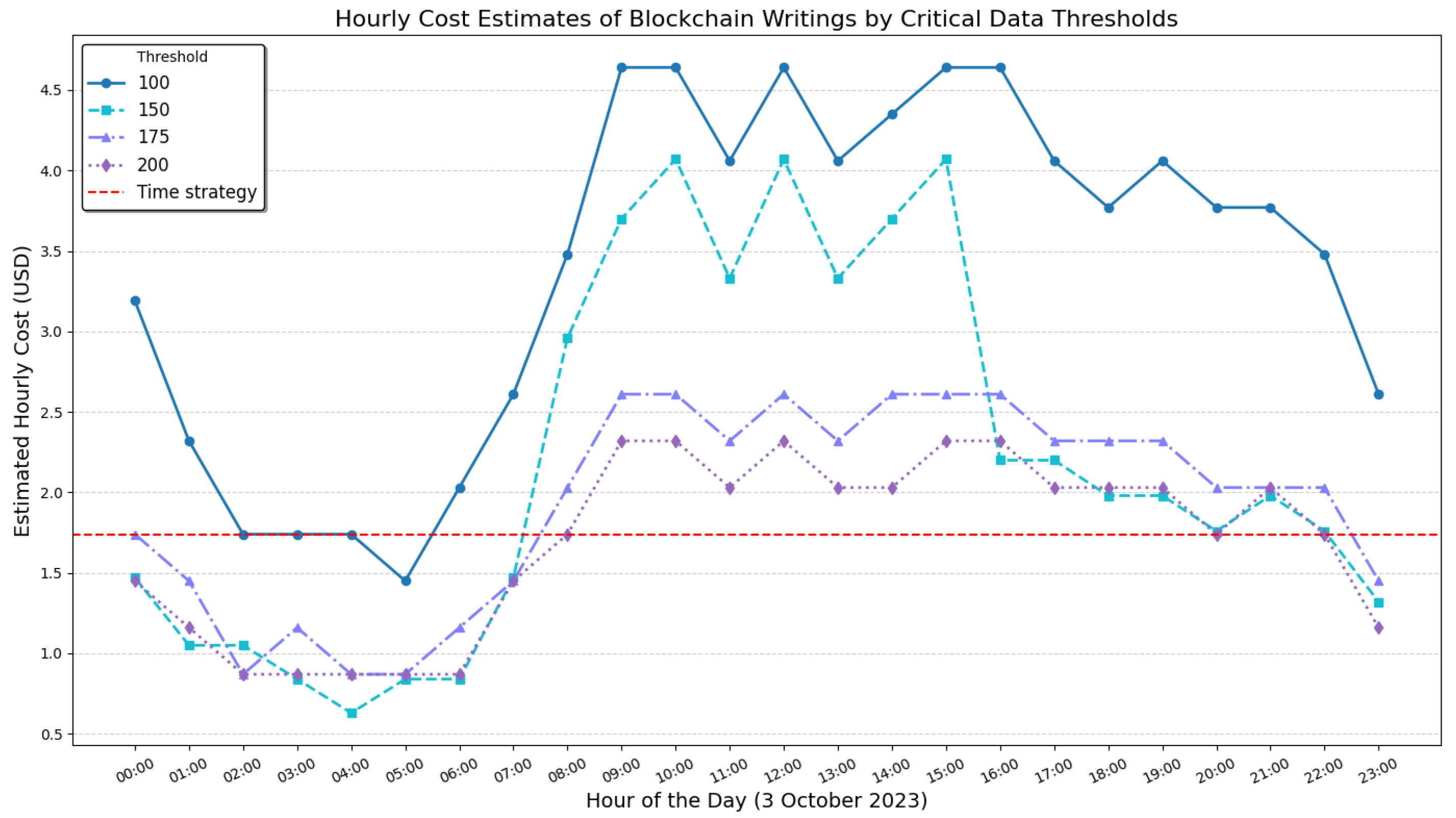

For our experiments, we selected 3 October 2023, the day with the highest recorded number of critical data entries. On this day, 4499 unique incidents were recorded, corresponding to a total of 28,119 critical timestamps. We evaluated the system’s performance for writing data hashes while using four threshold values for critical data: 100, 150, 175, and 200.

Figure 7 illustrates the hourly costs of writing transactions for each threshold, with the mean hourly cost of the time-based strategy included as a reference.

Table 6 gives the total number of hash writing operations and the corresponding estimated costs. The estimates were based on a mean transaction fee of USD 0.29 (see Table 4). For comparison, the total values for the time-based strategy are also included at the bottom of the table.
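A back-of-the-envelope version of this estimate is sketched below. It assumes one write per full batch of `threshold` critical entries and ignores any leftover entries at the end of the day; the actual counts depend on how events are distributed over time. The function name is hypothetical.

```python
from decimal import Decimal

MEAN_FEE_USD = Decimal("0.29")   # mean transaction fee used for the estimates
CRITICAL_TIMESTAMPS = 28_119     # critical timestamps on 3 October 2023


def estimate_daily(threshold: int, total: int = CRITICAL_TIMESTAMPS):
    # Assumption: one write per full batch of `threshold` critical entries.
    writes = total // threshold
    return writes, writes * MEAN_FEE_USD


writes_100, cost_100 = estimate_daily(100)
writes_200, cost_200 = estimate_daily(200)
```

Under this simplification, thresholds of 100 and 200 give 281 and 140 daily writes, costing USD 81.49 and USD 40.60, respectively.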

4.3. Combined Hash Storage Strategy

To provide a more accurate cost assessment and demonstrate the system’s flexibility, we extended our analysis over one month and developed a hash writing strategy that combines both time-based and threshold-based approaches. This strategy defines a minimum and maximum threshold, which allows for an adaptive storage mechanism. At fixed 10-min intervals, the system evaluates the volume of accumulated critical data. If the volume falls below the minimum threshold, hash computation and writing are skipped, reducing unnecessary transactions and optimizing cost efficiency. Conversely, if the maximum threshold is reached before the next scheduled interval, the system immediately performs a hash computation and writes the critical data to the blockchain. This ensures timely data aggregation while maintaining an efficient balance between cost and performance.
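The decision rule of the combined strategy can be sketched as a small policy object. The class and parameter names are hypothetical, and the sketch leaves open whether the 10-min schedule restarts after an early write, since the caller supplies the time elapsed since the last scheduled check.

```python
from dataclasses import dataclass


@dataclass
class CombinedPolicy:
    min_threshold: int
    max_threshold: int
    interval_s: int = 600  # fixed 10-min evaluation interval

    def should_write(self, pending: int, seconds_since_tick: int) -> bool:
        # Maximum threshold reached: write immediately, before the next tick.
        if pending >= self.max_threshold:
            return True
        # Scheduled tick: write only if enough critical data accumulated.
        if seconds_since_tick >= self.interval_s:
            return pending >= self.min_threshold
        return False


policy = CombinedPolicy(min_threshold=145, max_threshold=205)

early_write = policy.should_write(pending=205, seconds_since_tick=120)
tick_write = policy.should_write(pending=150, seconds_since_tick=600)
tick_skip = policy.should_write(pending=100, seconds_since_tick=600)
mid_interval = policy.should_write(pending=150, seconds_since_tick=300)
```

Raising the minimum threshold skips more scheduled writes during quiet periods, while lowering the maximum threshold triggers more early writes during bursts.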

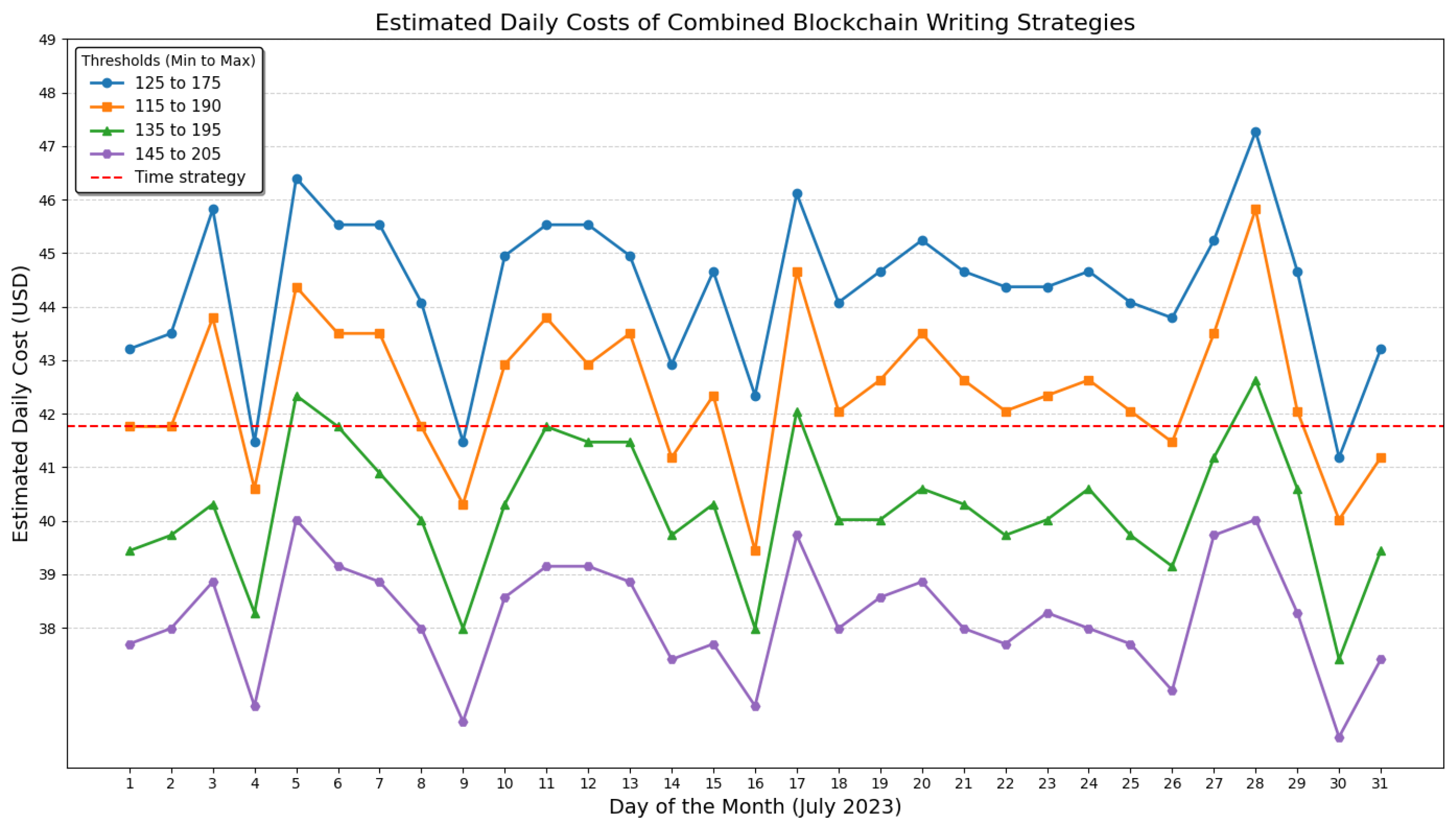

We conducted a detailed cost analysis of the combined strategy using various threshold pairs. The analysis was based on data from July 2023, the month with the highest recorded volume of critical data. In July 2023, 128,296 unique incidents were recorded, corresponding to a total of 799,008 critical timestamps.

Figure 8 illustrates the estimated daily costs for four threshold configurations (125–175, 115–190, 135–195, and 145–205), compared to the time-based strategy.

Table 7 summarizes the total number of hash-writing operations and the estimated costs associated with each threshold pair. These estimates are based on an average transaction fee of USD 0.29 (see Table 4). For comparison, the corresponding values for the time-based strategy are also provided.

5. Discussion

A total of 2050 hash writing requests were confirmed on the Ethereum Sepolia blockchain, spanning different days of the week and different times of the day. The time-based strategy executed 144 daily transactions, whereas the threshold-based strategy ranged from 140 to 281 daily writings, depending on threshold values (see Table 6). Consequently, the experiments covered over two weeks of operation for the time-based strategy and one to two weeks for the threshold-based strategy. This transaction volume, designed to assess the performance, timing, and cost efficiency of blockchain-based hash writing under realistic conditions, provides a sufficient basis for meaningful economic feasibility estimates.

The combined strategy was evaluated using comprehensive data spanning an entire month, ensuring a more extensive analysis of its adaptability and cost efficiency under varying operational conditions. This broader timeframe allowed us to assess long-term performance trends, capturing fluctuations in data volume and evaluating threshold configurations accordingly.

Our experimental results indicate that nearly half (47.17%) of the total transactions were written on the blockchain in less than 13 s (see Table 4); within this group, the mean confirmation time was 9.18 s and the median was 9.16 s. Moreover, the vast majority of transactions (93.61%) were confirmed within 30 s, with overall mean and median confirmation times of 14.83 s and 15.88 s, respectively.

The confirmation time boxplots in Figure 5 show that transactions were confirmed more rapidly between 08:00 and 16:00, with a mean time of 13.29 s and a median of 9.56 s (see Table 5). In contrast, slower confirmation times were observed during the 00:00–08:00 and 16:00–00:00 timespans, with median values of 16.23 s and 16.01 s, respectively. This represents an increase of approximately 67–70% compared to the 08:00–16:00 timespan.

Furthermore, the integration of blockchain technology significantly augments the system by providing a verifiable timeline of events. By permanently recording event hashes on the blockchain, any change to events stored in the database would be detected; hence, a dispute on recorded timestamps would be resolved. A match of the hash computed on recorded timestamps with the blockchain hash would demonstrate that timestamps were unaltered, while a difference would show that they were altered. This is vital for accountability and legal verification in emergency response scenarios. Notably, in our experiments, all blockchain transactions were confirmed, underscoring the reliability of established platforms such as Ethereum for secure data storage. In cases where a transaction is not confirmed, the affected data remain securely stored in the local database and the hash writing request is reissued, leveraging the inherent resilience of blockchain technology to ensure reliable completion. Collectively, these features enhance data integrity and fault tolerance and provide an indisputable record of incident timelines.

In every strategy we tested, the data aggregation performed by the HEA prevents congestion by avoiding overly frequent writing requests on the blockchain. In the transaction fee distributions (Figure 4), we observed that 83.61% of transactions incurred costs below USD 0.50. The difference between the Gwei and USD distributions arises because the fee in Gwei depends on the congestion of the blockchain network at the time of the transaction, whereas its USD value also depends on the ETH/USD exchange rate, which is subject to continuous fluctuations. Several strategies can be employed to handle price fluctuations. For example, a polling mechanism can be activated some minutes before the timeout to monitor the current gas cost and request a transaction whenever the cost falls below a set threshold. Depending on the system requirements, either the transaction cost or the timeout to send the transaction can be prioritized.
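The polling mechanism mentioned above can be sketched as a loop that waits for an acceptable gas price until a deadline. This is an illustration under stated assumptions: `get_gas_price_gwei` is a hypothetical callable supplied by the caller (for instance, a wrapper around a node query), and the price cap, polling period, and deadline are free parameters. The clock and sleep functions are injectable so that the logic can be exercised without waiting.

```python
import time
from typing import Callable


def wait_for_cheap_gas(
    get_gas_price_gwei: Callable[[], float],
    max_price_gwei: float,
    deadline_s: float,
    poll_every_s: float = 15.0,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Return True if the price reached the cap before the deadline.

    False means the deadline passed; the caller then decides whether to
    send the transaction anyway (timeout prioritized over cost).
    """
    while True:
        if get_gas_price_gwei() <= max_price_gwei:
            return True
        remaining = deadline_s - clock()
        if remaining <= 0:
            return False
        sleep(min(poll_every_s, remaining))


# Simulated run with a fake clock: the price drops on the third poll.
prices = iter([5.0, 4.0, 2.0])
fake_now = [0.0]


def fake_sleep(seconds: float) -> None:
    fake_now[0] += seconds


cheap_found = wait_for_cheap_gas(
    lambda: next(prices), 3.0, deadline_s=120.0,
    clock=lambda: fake_now[0], sleep=fake_sleep,
)
```

Returning a boolean rather than sending the transaction keeps the cost-versus-timeout decision with the caller.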

The analysis of the transaction fee boxplots in Figure 6 revealed that the 08:00–16:00 timespan was the most expensive, with a significant number of outliers: the median fee was USD 0.29 and the mean fee was USD 0.41 (see Table 5). Conversely, the 00:00–08:00 and 16:00–00:00 timespans exhibited lower and less variable fees, with median values of USD 0.17 and mean values of USD 0.21 and USD 0.22, respectively.

We estimated the total daily transaction cost using the mean transaction fee of USD 0.29 (see Table 5). In the time-based strategy, 144 daily transactions amounted to an average cost of USD 41.76. In the threshold-based strategy, daily costs ranged from USD 40.60 for a threshold of 200 to USD 81.49 for a threshold of 100 (see Table 6). In the combined strategy, estimated daily costs varied from USD 38.19 for the threshold pair of 145–205 to USD 44.37 for the threshold pair of 125–175 (see Table 7).

The effectiveness of the three strategies can be assessed through their impact on writing frequency and cost efficiency. The time-based strategy enforced a fixed update interval of one hash every 10 min, offering consistency but potentially incurring unnecessary transaction costs during low-activity periods. In contrast, the threshold-based strategy responded dynamically to data flow, increasing the frequency of writing operations when critical data accumulation exceeded predefined thresholds. Even in the worst-case scenario (threshold = 100), the system maintained an average of 12 writings per hour, demonstrating a balance between responsiveness and cost (see Table 6).

The combined strategy leveraged both approaches to optimize cost-effectiveness while ensuring timely data recording. Notably, its daily writing frequency remained closely aligned with that of the time-based approach (see Table 7). The 125–175 threshold pair configuration resulted in 153 average daily writings, peaking at 163, reflecting only moderate increases of 6% and 13%, respectively, relative to the time-based model. Meanwhile, the 145–205 threshold pair maintained a lower transaction volume than the time-based approach, with a maximum of 138 daily writings, compared to 144 in the time-based strategy. This configuration also yielded a mean daily cost of USD 38.19, representing a daily operational cost reduction of USD 3.57 while retaining the combined strategy’s capacity to dynamically adapt to fluctuating data loads.
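The percentages and the cost reduction quoted above follow from simple arithmetic, reproduced in the sketch below using only figures stated in the text. The helper name is hypothetical.

```python
from decimal import Decimal


def relative_increase_pct(value: float, baseline: float) -> float:
    # Percentage increase of `value` over `baseline`.
    return (value - baseline) / baseline * 100


TIME_BASED_WRITES = 144          # daily writes in the time-based strategy
MEAN_FEE_USD = Decimal("0.29")   # mean transaction fee

avg_increase = relative_increase_pct(153, TIME_BASED_WRITES)   # 125–175, average
peak_increase = relative_increase_pct(163, TIME_BASED_WRITES)  # 125–175, peak

time_based_cost = TIME_BASED_WRITES * MEAN_FEE_USD
saving = time_based_cost - Decimal("38.19")                    # 145–205 pair
```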

Our approach significantly mitigates the high costs typically associated with on-chain storage of large data volumes. In our system, only a 32-byte hash is written to the blockchain; hence, recording a fixed-length hash incurs only a small fraction of the cost required for storing complete data records. The experimental results confirm that transaction fees for hash writing operations remain low even under variable gas prices. These findings highlight the inherent trade-offs between predictability, cost-efficiency, and adaptability. The time-based strategy ensures a consistent recording schedule but may result in redundant blockchain interactions. Conversely, the threshold-based approach offers greater adaptability but introduces variability in update timing. The combined strategy, particularly with the 145–205 configuration, demonstrates an optimal balance, achieving cost savings while maintaining responsiveness to fluctuations in critical data volume.

Compared to third-party and commercial timestamp certification systems, our approach leverages the inherent properties of a public blockchain to ensure that the critical function of proving data integrity remains within the system rather than being outsourced to external entities that may impose arbitrary conditions for service access and utilization. By recording data hashes on a public blockchain, the proof of integrity can be independently verified by authorized entities in the event of disputes. This internal verification process enhances system trust and differentiates our approach from conventional timestamping methods, which rely on external certification providers.

We utilized a publicly available dataset of the New York metropolitan area, encompassing approximately eight million residents, and conducted experiments to derive average transaction cost values. These values, derived from analyzing multiple threshold configurations over various time intervals, offer a practical baseline for expense estimation. This baseline, supported by empirical data, allows for the projection of costs over different durations, thereby enabling more informed decisions regarding investment and operational expenses. The results demonstrate that our approach maintains feasible costs even amidst blockchain fee variability. By aggregating data and employing a threshold-triggered strategy, the system avoids unnecessary network congestion and minimizes operational expenses. Consequently, these cost estimates underscore the scalability and applicability of our system in densely populated, data-intensive environments.

6. Conclusions

This paper presented a blockchain-based framework designed to allow healthcare service providers to hold (and, when necessary, exhibit) a proof of the integrity and authenticity of timestamped events in case of disputes. The designed system ensures accurate and blockchain-certified timestamps for critical events, addressing a major limitation of centralized architectures that depend on a single authority. This capability was achieved through blockchain technology, which provides a transparent and verifiable record of events. The proposed framework permanently records event hashes on the blockchain, making the timeline of incidents (stored in the cloud) verifiable. Therefore, tampering with cloud-stored data becomes pointless, as it would be easily detected in case of disputes.

Our experimental evaluation, based on a real-world incident dataset, confirmed that the proposed framework is feasible and effective for the secure management of incidents. The integration of regular, time-based recording with an adaptive threshold strategy enables the system to record and secure critical event data reliably, ensuring that the incident timeline is maintained accurately. This balanced approach ensures that essential information is logged without excessive redundancy, thus reducing resource utilization and maintaining system efficiency.

The dynamic nature of the combined strategy allows the framework to adapt seamlessly to varying operational conditions, ensuring that the level of data capture aligns closely with the actual flow of incident information. Such a continuous adaptation ensures that the data are kept accurate and verifiable, which is essential in scenarios where swift response and strict accountability are paramount. In addition, the insights derived from our study highlight the broader applicability of blockchain technology in incident management systems. By combining server-side timestamping with blockchain-backed verification, the system guarantees the auditable history of emergency events, fostering trust among stakeholders such as emergency responders, healthcare providers, and patients.

Our future work will aim at proposing a solution enabling the exchange of anonymized data, gathered in emergency response systems, by using a blockchain-based storage that acts as an indexing system and a proof for data stored in a cloud-based system.