1. Introduction

Cybersecurity threats are rapidly evolving, creating a pressing need for educational methodologies that can bridge theoretical knowledge and hands-on skills. Despite the numerous artificial intelligence (AI)-driven intelligent tutoring systems (ITSs) found in the literature [1], very few adequately address the multidisciplinary complexity of cybersecurity or incorporate generative AI (GenAI) in ways that are scalable and easy for educators to adopt [2,3]. Some of the primary concerns of educators that AI-enhanced ITSs have attempted to address are evaluation support, personalised adaptive learning, feedback for students [4], and overall governance (including privacy and security). Whilst this is already a complex task, it is even more so for a multidisciplinary field such as cybersecurity. Further, even though “ChatGPT for Educators” [5], test content generation bots, and tutor chatbots exist [6,7,8], current chatbot solutions, although helpful, primarily address isolated instructional or evaluative tasks and lack a comprehensive architecture for scaling to larger, multidisciplinary educational contexts, particularly in cybersecurity graduate programs. They do not attempt to holistically address scalability, generalisability, and educator adoption in the context of graduate cybersecurity programs and the specific difficulties they face. Despite this, following the advances and support for generative AI tools for education, we see potential for their application in institutions [9], provided that appropriate governance is incorporated. However, there is a distinct lack of evidence for a generalisable and scalable solution. As described by Latham (2022) [10], challenges such as scalability and trust remain critical in the adoption of adaptive and personalised intelligent tutoring systems.

To effectively showcase the potential for generative AI in adapting multi-disciplinary subjects for learners in higher cybersecurity education, in this paper, we make three contributions: We present our conceptual design for a scalable and generalisable multi-agent ITS framework that accommodates a wide range of cybersecurity topics, from risk management to Python for security, using both theoretical and practical learning components. In addition, we integrate low-code development platforms (Streamlit, Relevance AI) to reduce the barrier of adoption for educators, thereby emphasising practical deployment over purely theoretical constructs. Finally, we propose a survey-based evaluation approach, adapted from established serious games and usability frameworks, to empirically measure (a) content quality, (b) platform usability, and (c) perceived effectiveness in improving student learning outcomes. This paper proposes a conceptual framework and highlights the qualitative evaluation of its potential applications. Empirical validation remains a task for future research.

In Section 2 of our research, we identify the key features of similar tools in industry and the literature that use GenAI to varying degrees in educational contexts to deliver a personalised learning experience. We identify an appropriate evaluatory framework for the tool and its learning outcomes [3]. In Section 3, we explore the development of the platform while keeping educator adoption in mind, utilising modular development tools such as Relevance AI [11] and Streamlit [12], which allow for a quick development process and improve adoptability [13]. We use multiple agents [14], each personalised for a student or teacher use case, to orchestrate tools designed for ingestion, retrieval, or generation tasks. We map a user experience for educators to design and deploy existing assessment material for students in a personalised and engaging manner. In addition, in Section 3, with the support of existing research and evaluation methods for platform engagement and usability metrics [15,16,17], we extrapolate a basic evaluation methodology in the form of a survey. In Section 4, we discuss the broader theoretical implications of developing and deploying a generalised and scalable GenAI teaching system for higher education in cybersecurity. This is followed by a discussion of some common challenges and the mitigation strategies implemented in the short term, as well as a brief outline of the changes we plan for future work. We aim to further develop this concept as an optimised and deployable tool, further evaluate the applied large language models (LLMs) for efficiency and aptitude, and produce findings from survey respondents. We also benefit from on-site hosting through Streamlit, which allows future implementations with improved security and privacy. We also consider other forms of evaluation, such as web page metrics for performance and responsiveness, as well as LLM performance using its own relevant metrics [18]. In conclusion, we emphasise the improved adoptability of GenAI in intelligent tutoring systems, as well as the applicability of the proposed generalised process for navigating a multidisciplinary field, as seen in the range of graduate cybersecurity topics. We also note the potential for future research and areas for the improved evaluation of the proposed systems.

2. Related Work

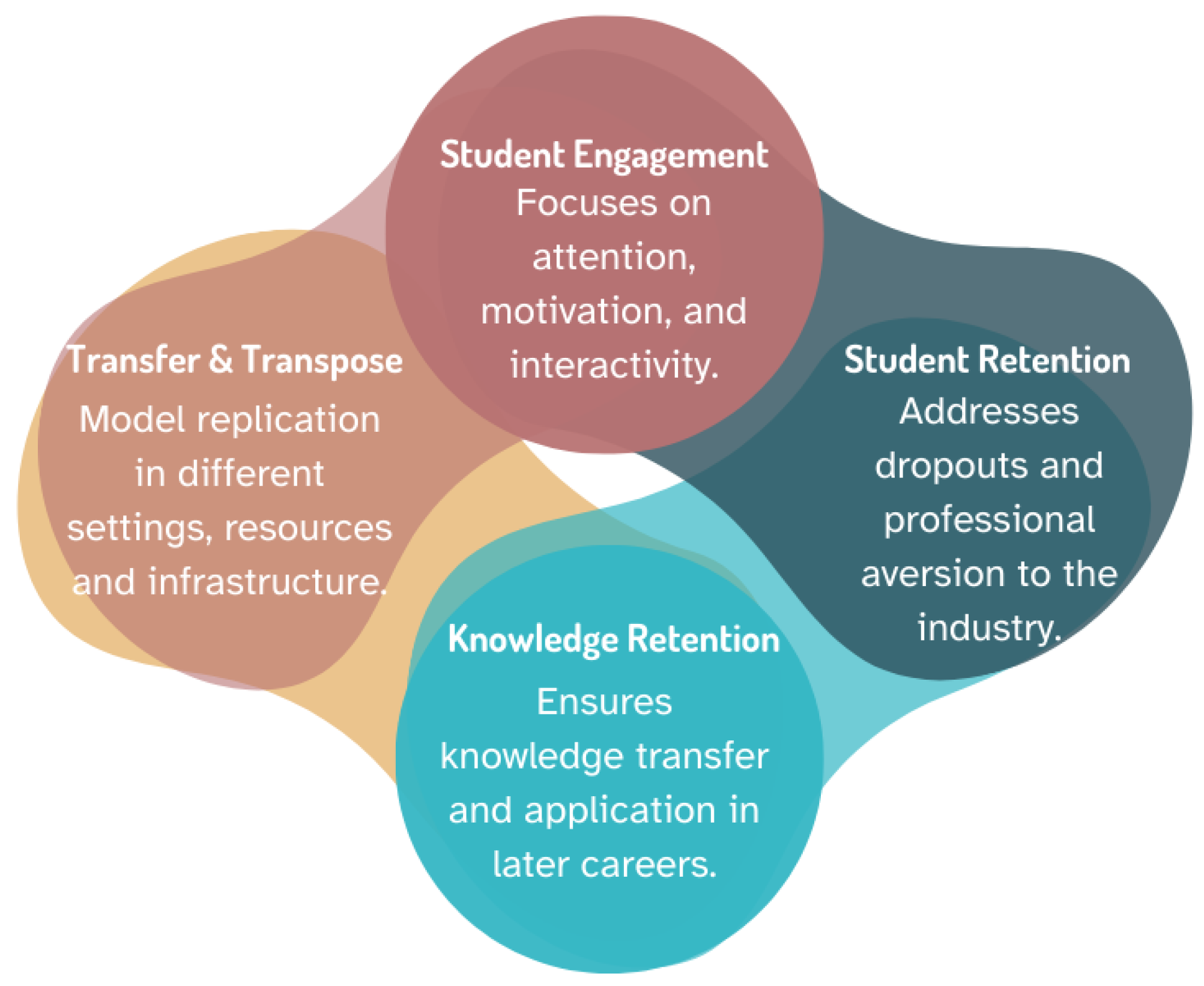

In earlier research, our scope was primarily driven by the prevalent cybersecurity skills gap. We were concerned with the study of existing frameworks and models of cybersecurity education in order to identify key objectives that could be applied across various learning contexts, as seen in Figure 1. In our findings, we identified several potential determinants of the skills gap in industry, including the multidisciplinary nature of cybersecurity, poor student retention and clarity of pathways, and a lack of engagement and hands-on learning.

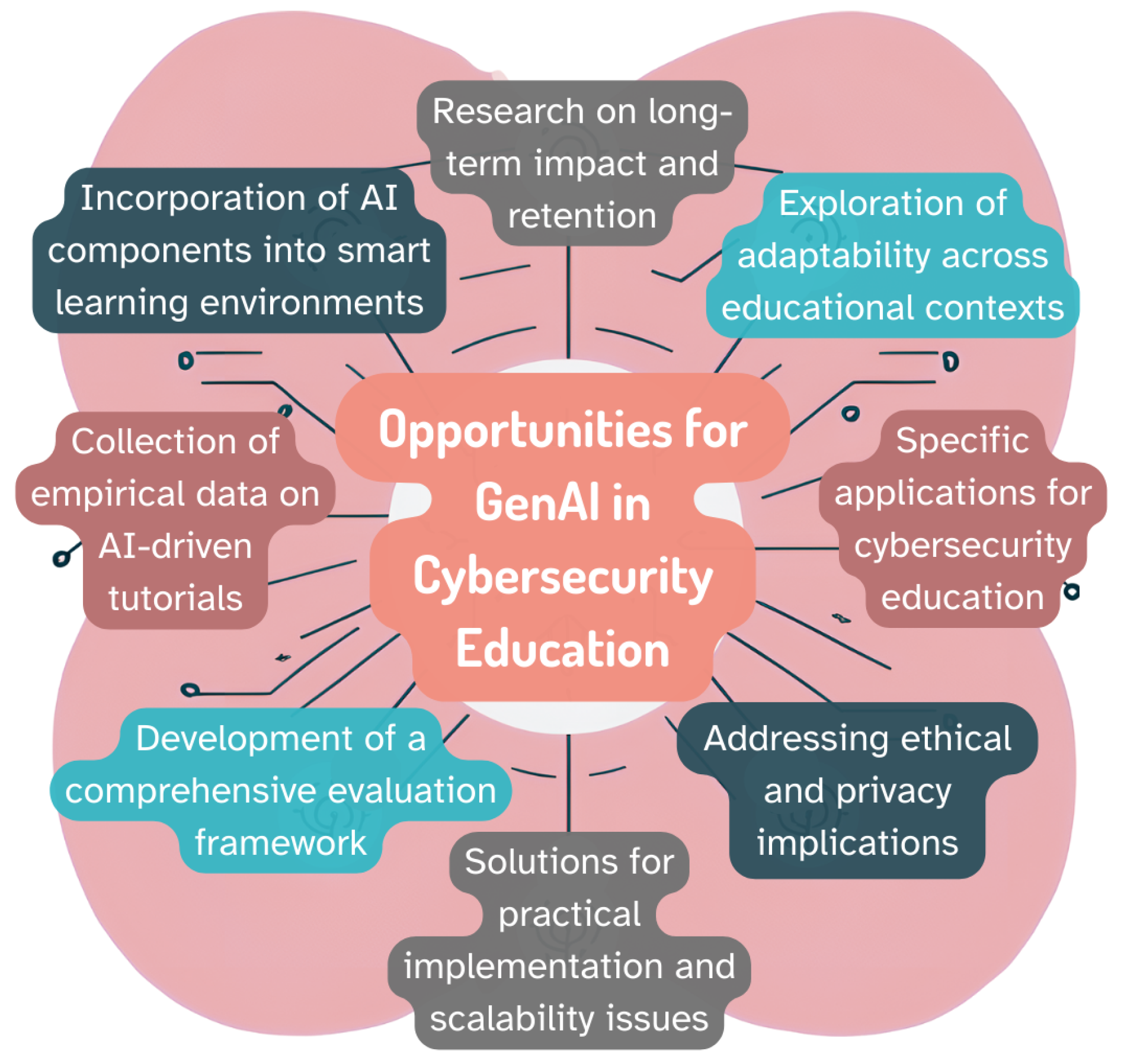

Mukherjee et al. (2024) [3] detailed the opportunities within cybersecurity education for generative AI, incorporating guidelines from globally accepted cybersecurity educational frameworks and national cybersecurity frameworks. In distilling each impetus and extrapolating it to various educational contexts, we find that the opportunities for GenAI in each of these contexts are significant, as detailed in Figure 2. Focusing on solutions for practical implementation and scalability issues, we further outline methods for the incorporation of AI components, the collection of empirical data and evaluation methodology, specific applications, and expectations of adaptability by addressing educator adoptability concerns.

Our search identified a shortfall in translating theoretical knowledge into practical applications; our findings are summarised in Table 1. We distilled findings from the key literature to establish a foundation for understanding how GenAI can be effectively utilised within the Cybersecurity Graduate Certificate programs offered at the University of Wollongong in Australia.

The “Graduate Certificate of Cybersecurity” offered at the university takes a multidisciplinary approach to cybersecurity education, with each subject having a practical component. The basic subject structure is split into theoretical lectures and weekly practical laboratories; some subjects include case studies and major assessments. Briefly, the program comprises four subjects: “Python Programming for Cyber Security” teaches the fundamentals of programming with a focus on safe practices and problem-solving skills. “Web Security” teaches the fundamentals of web programming and techniques for tackling emerging web vulnerabilities. “Cyber Security Risk and Risk Management” details the current corporate threat landscape and the impacts of vulnerabilities, and introduces the principles of risk assessment and cyber hygiene. “Databases and Security” introduces core topics and fundamental concepts in managing data systems and principles of data confidentiality through concepts of liability, security, weaknesses, and privacy in database systems [19].

The utilisation of AI in educational tools and AI-assisted delivery is notably prevalent in the e-learning and training sectors. Mello et al. (2023) [20] discuss the rise of GenAI in educational settings, highlighting its adoption by major platforms such as Khan Academy, Coursera, and Duolingo. These platforms have integrated GenAI to facilitate personalised learning, provide real-time assessment and feedback [21], enhance predictive analytics, and deploy virtual teaching assistants, which aligns with the educational needs of contemporary cybersecurity programs.

In industry applications, as seen in Figure 3, tools like Khanmigo, a GPT-4-powered assistant by Khan Academy, exemplify the practical benefits of GenAI. This assistant enhances learning by offering personalised support and adaptive feedback mechanisms, helping students progress at their own pace while deepening their understanding of complex subjects [22]. Similarly, Duolingo utilises its Birdbrain Long Short-Term Memory (LSTM) AI to tailor learning experiences according to individual user needs, further illustrating the potential of GenAI to create adaptive educational environments [23]. There is also the learning assistant Duolingo Max [24], which signals further investment in GenAI research and development for future learners.

Significant advances in the integration of AI into cybersecurity education highlight its ability to enhance learning outcomes. For instance, Beuran et al. (2022) [18] showcased the application of Natural Language Generation (NLG) by creating dynamic and engaging educational content through the Cyber-Range Organisation and Design (CROND) datasets at the Japan Advanced Institute of Science and Technology (JAIST). The use of NLG on this platform to generate quizzes and educational games represents an innovative approach to increasing student engagement and learning effectiveness. They also presented an evaluation framework for such tutoring platforms. The research presents the strengths of content generation through generative AI; however, it primarily uses a singular dataset to generate all questions and does not include methods for a more nuanced yet more scalable solution.

We use this work to highlight one of the major concerns regarding the limited scope of the application of traditional AI in intelligent tutoring systems. As shown in Beuran et al. (2022) [18], previous models were pre-trained and generated sample Q&A sets from a static library. Our research aims to extend the application and scope of this work using contemporary advances in AI. In addressing these concerns, our approach is also scalable, generalisable, and easy to adopt, responding to the unique challenges of cybersecurity’s multidisciplinarity. We take a holistic approach to studying soft factors related to the skills gap, such as engagement and retention.

Studies by Vykopal et al. (2023) and Diakoumakos (2023) [25,26] emphasise the relevance of adaptive learning environments and AI-assisted gamified systems in cybersecurity education. These approaches not only facilitate personalised learning, but also guide students toward mastering necessary skills, thus enhancing both engagement and educational outcomes that lead to student retention and, in turn, supply industry.

The literature confirms that while GenAI holds significant promise in transforming cybersecurity education by bridging the gap between theory and practice, there are gaps in systematic application and evaluation. This paper builds on these insights by proposing a generalisable and scalable platform as a solution, with methodologies to assess the impact of GenAI on practical skill development and educational outcomes in cybersecurity programs. Through this structured approach, our aim is to contribute to the optimisation of GenAI tutoring systems in higher education, ensuring that they meet educational and industry demands effectively.

To clearly demonstrate the influence of existing GenAI solutions and highlight the specific gaps our research addresses, we summarise the relevant literature in Table 1, explicitly outlining the key characteristics and limitations of existing methods. This comparative analysis directly informed our methodological choices and justified our proposed multi-agent orchestration approach, specifically addressing scalability, generalisability, and adaptability concerns within cybersecurity education.

Table 1. A summary table of reviewed generative AI solutions, their key characteristics, and identified gaps.

| GenAI Solution | Key Characteristics | Identified Gaps |
|---|---|---|
| ChatGPT for Educators [5] | GPT-based platform offering personalised content generation and adaptive evaluations aimed at enhancing educator-driven learning outcomes through tailored, instructional support. | Limited in scalability and generalisability within specialised domains, particularly graduate-level cybersecurity education. The solution’s effectiveness in addressing multidisciplinary contexts requires further validation. |
| CyATP [18] | Uses Natural Language Generation (NLG) with the Cyber-Range Organisation and Design (CROND) dataset at JAIST to generate quizzes and educational games, enhancing student engagement and learning effectiveness. Includes a framework for evaluating tutoring platforms. | Relies on a single static dataset (CROND), limiting scalability and generalisability. Does not adequately address broader multidisciplinary cybersecurity education contexts or dynamic learning content generation. |
| Duolingo LSTM Birdbrain [23] | Employs birdbrain Long Short-Term Memory (LSTM) AI models to tailor personalised learning paths based on individual user needs, creating adaptive educational environments. | The applicability and adaptability to specialised, highly technical fields such as cybersecurity education remain unexplored. |
| Duolingo MAX [24] | Advanced learning assistant using the latest GenAI advancements to enhance personalised education experiences, combining language learning with AI-driven instructional strategies and real-time adaptive feedback. | Limited empirical evidence of effectiveness in graduate-level, multidisciplinary education contexts, particularly for cybersecurity. |
| Diakoumakos’ AI-Driven Gamification [26] | Implements standardised methodologies and gamification using AI co-pilots to deliver personalised exercises and promote active learner engagement, continuous improvement, and tailored educational experiences in cybersecurity training. | Does not fully address scalability, generalisability across multidisciplinary contexts, and the specific challenges of integrating gamification with robust, dynamic, LLM-based content generation frameworks. |

3. Platform Implementation

As discussed in earlier research, there are notable gaps in the application of GenAI ITSs, and their limited scope reduces the realisable advantage of generalisation that comes with advances in today’s pre-trained LLMs. The decision to adopt a multi-agent orchestration approach using specialised generative AI tools, such as GPT-4, Streamlit, and Relevance AI, was driven by previous limitations identified in existing ITS solutions, primarily those employing traditional, pre-trained transformer models. For instance, the CyATP solution, while innovative, utilised a singular, static dataset (CROND), thus limiting its adaptability and scalability. Conversely, tools like ChatGPT for Educators and Duolingo MAX demonstrate the effectiveness of dynamic personalisation and adaptive content generation in educational contexts but have not yet been evaluated extensively in graduate-level cybersecurity education. We aim to address concerns of scalability with a solution generalised enough to be applied across the multidisciplinary field, with the added contribution of holistic alignment to industry concerns (e.g., educator adoption, educational context, cybersecurity skills gap). Below, we detail a proposed platform as a solution, a conceptual user flow, the features and applications aligned with opportunities and objectives, and a method for the evaluation of key criteria identified in earlier works.

3.1. Conceptual User Flow

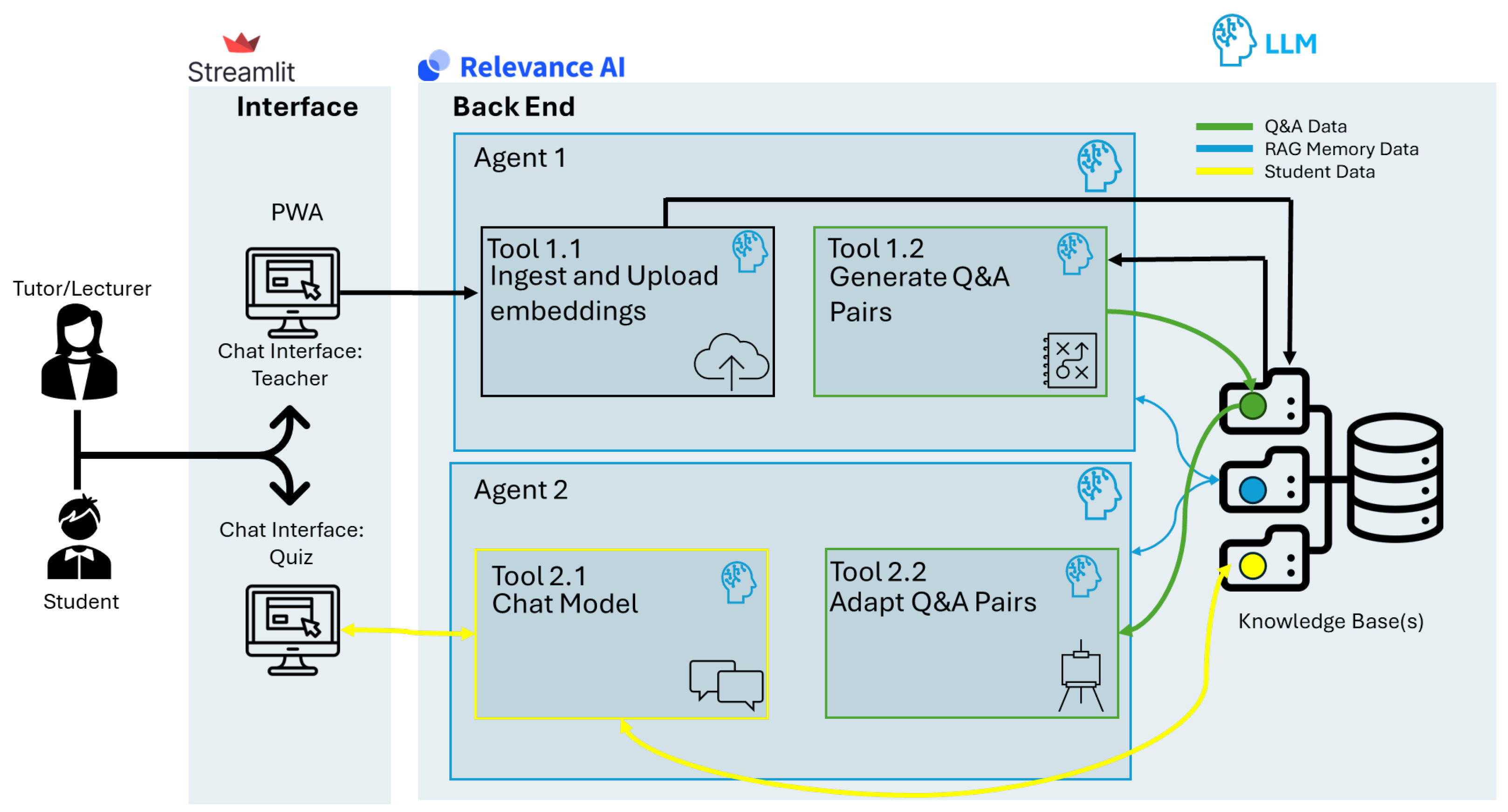

The platform was designed as a Progressive Web Application (PWA) or web-based application for availability purposes. As seen in Figure 4, educators can sign in and begin creating an intelligent tutoring system (ITS) for their quiz assessments. They can input the content and configure the type of test (e.g., essay, multiple choice questions). Further, they can test the system themselves before publishing it to students via their Learning Management System (LMS), either as a hyperlink or as embedded content.

Once students access the link, they are then placed in their designated lab and presented with the relevant testing information. The test content and structure will vary based on the instructor’s pre-configuration prompts. Our design provides adaptive responses based on factors such as language and the presentation of information. If required, it can be extended to also analyse student responses based on deviations from the correct answer and gather additional performance metrics.

Students receive minor or major feedback if they answer correctly or incorrectly to assist them in reflection or arriving at the correct answer. Aligned with the features mentioned above, we designed a progressive web app and divided the orchestration between front-end and back-end.

Front-End: The front-end application tool we used was Streamlit [12], a low-code Python package for quick GUI development. The front-end application is meant to provide a seamless portal for access to the agent system and a more engaging experience.

Back-End: The back-end uses an Australia-based quick-production AI tool called Relevance AI [11] that allows for easy and modular programming and integrations with different OpenAI models and third-party tools.

As seen in Figure 5, we developed a multi-agent architecture in which each agent has its own ingestion and extraction tools, along with two use-specific generation tools. An educator was prompted by “Agent 1” to upload sample test content; in this case, we used a sample quiz assessment from a graduate cybersecurity subject, e.g., “Python Programming for Cyber Security”. The agent fed an ingestion “tool” that structured the uploaded PDF and created embeddings using the selected embeddings model, in this case, ‘text-embedding-3-small/large’ [27].
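The ingestion step described above can be illustrated with a minimal sketch. It is not the Relevance AI tool itself: the function names, the question-numbering rule, and the `toy_embed` stand-in are all assumptions made for illustration. In a deployment, `embed` would instead call the hosted embedding model named above; the hashing embedder here only lets the sketch run offline.

```python
import hashlib
import math
import re
from dataclasses import dataclass
from typing import Callable, List

Vector = List[float]


@dataclass
class Chunk:
    """One unit of ingested quiz material plus its embedding."""
    text: str
    embedding: Vector


def split_into_questions(raw_text: str) -> List[str]:
    """Split extracted quiz text on numbered items such as 'Q1.' or '2)'.

    Assumption: the sample quiz numbers its questions at the start of a line;
    other layouts would need a different splitting rule.
    """
    parts = re.split(r"(?m)^\s*(?:Q?\d+[.)])\s+", raw_text)
    return [p.strip() for p in parts if p.strip()]


def toy_embed(text: str, dims: int = 64) -> Vector:
    """Offline stand-in for a hosted embedding model (hypothetical).

    Hashes each lowercase token into one of `dims` buckets and L2-normalises
    the counts. It captures word overlap only, not meaning.
    """
    vec = [0.0] * dims
    for token in re.findall(r"[a-z0-9_]+", text.lower()):
        bucket = int(hashlib.sha256(token.encode()).hexdigest(), 16) % dims
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def ingest(raw_text: str, embed: Callable[[str], Vector] = toy_embed) -> List[Chunk]:
    """Structure the uploaded text and attach an embedding to every chunk."""
    return [Chunk(text=q, embedding=embed(q)) for q in split_into_questions(raw_text)]
```

Passing the embedding function as a parameter keeps the structuring logic independent of any one model, which is in the spirit of the modular tooling described in this section.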

In the next step, those embeddings were used by the extraction and generation tool, orchestrated by Agent 1, to generate a set of “Core questions and Answer Sets” meant to get at the root of what the original sample query was attempting to test. For example, the question “Why does the following print statement return an error?” could be attempting to help the student understand a specific type of compile error or underlying mechanism leading to that error.

As such, we used this trained LLM tool to generate “Core Questions and Sample Answer Sets” based on the provided sample. These core questions were stored in a vector database or knowledge base and used by “Agent 2”. Agent 2 was primarily used to deliver test content; it was meant to behave more conversationally with the user, provide them with questions, evaluate their answers against the provided sample, provide feedback, and, most importantly, be seemingly adaptive. Agent 2 used an extraction tool to ingest the correct question-and-answer pairs and then utilised another tool driven by GPT-4 to differentiate the content. This adaptiveness was described by two features: adaptiveness to the student’s level of understanding and differentiating the question content for each user instance.
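To make the retrieval step concrete, the following sketch shows one way stored core questions could be looked up by cosine similarity. The `CoreQuestion` and `KnowledgeBase` names are hypothetical, and the two-dimensional embeddings in the usage note are placeholders; the platform's built-in knowledge base may work differently.

```python
import math
from dataclasses import dataclass, field
from typing import List, Sequence, Tuple


@dataclass
class CoreQuestion:
    """A stored core question with its sample answer and embedding."""
    question: str
    sample_answer: str
    embedding: Sequence[float]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


@dataclass
class KnowledgeBase:
    """In-memory stand-in for a vector store (illustrative only)."""
    items: List[CoreQuestion] = field(default_factory=list)

    def add(self, item: CoreQuestion) -> None:
        self.items.append(item)

    def top_k(self, query_embedding: Sequence[float], k: int = 1) -> List[Tuple[float, CoreQuestion]]:
        """Return the k stored questions most similar to the query embedding."""
        scored = [(cosine(query_embedding, it.embedding), it) for it in self.items]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:k]
```

For example, after adding one question with embedding `[1.0, 0.0]` and another with `[0.0, 1.0]`, a query of `[0.9, 0.1]` ranks the first question highest.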

This differentiation was uniquely achieved by using the first tool of Agent 2, extracting the stored question and answer sets, and using an LLM like GPT-4 in another tool orchestrated by Agent 2. Furthermore, using simple “system prompt” engineering techniques and variables, such as “Explain this (concept) to me, like I am…(10 years old/a graduate student/an industry professional…)”, we could improve individualised knowledge delivery.
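The persona-style system prompt mentioned above can be sketched as a small template function. The audience keys, the exact wording of the prompt, and the function names are assumptions for illustration; only the role/content message layout follows the widely used chat-completion format.

```python
from typing import Dict, List

# Hypothetical audience labels mapped to the personas quoted in the text.
AUDIENCES: Dict[str, str] = {
    "child": "10 years old",
    "graduate": "a graduate student",
    "professional": "an industry professional",
}


def build_system_prompt(audience: str = "graduate") -> str:
    """Compose a system prompt that sets the explanation level and asks
    for a differentiated version of each stored question."""
    if audience not in AUDIENCES:
        raise ValueError(f"unknown audience: {audience!r}")
    persona = AUDIENCES[audience]
    return (
        f"You are a quiz tutor. Explain every concept to me like I am {persona}. "
        "Rephrase each question so that its surface details differ from the "
        "stored version while the competency being tested stays the same."
    )


def build_messages(core_question: str, audience: str = "graduate") -> List[Dict[str, str]]:
    """Pair the system prompt with a stored core question as chat messages."""
    return [
        {"role": "system", "content": build_system_prompt(audience)},
        {"role": "user", "content": core_question},
    ]
```

Keeping the persona as a variable means the same stored question can be delivered at different levels without changing the knowledge base.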

In the future, we aim to improve the individuality of each response by providing an updateable knowledge base for each individual student and introducing a way of understanding their performance metrics to adjust and improve ease of understanding.

3.2. Feature and Application Development

As Calo et al. (2024) [13] highlight, one of the many barriers to the widespread adoption of AI-powered ITSs today is the specialised programming and design skills required to create effective systems. While no-code or low-code authoring tools address programming limitations, one cannot assume that educators possess the necessary design skills to create engaging and effective interfaces. Adoptability and generalisability are a major focus of this research. With only a foundational understanding of chaining, tools, and agents as building blocks in modern application development, we implemented a practical application using low-code development tools like Streamlit and Relevance AI. Furthermore, to enhance reproducibility, we used existing endpoints for OpenAI’s APIs for models and chains, such as text-embedding-3, GPT-3.5 Turbo, and GPT-4, and each was used for the task at which it was most efficient, e.g., conversational RAG or creativity/specificity for content generation and embeddings, in no particular order.

Taking inspiration from the EduTech industry and the use cases developed on competing platforms delivering training with the assistance of GenAI [2,5,22,23], we could align the specific, deliverable and testable application features identified in Figure 3 by utilising our knowledge of the drivers of positive student outcomes in cybersecurity higher education, seen in Figure 1.

In this study, we developed features by adapting the user flow in Figure 4 and developed our detailed framework, shown in Figure 5, for which we adopted an agent orchestration approach using multiple LLMs, either for embeddings or for content generation. Considering the key aspects of this application, we developed two agents for specific uses: one supported content ingestion and content generation, while the other was more conversational and orchestrated two primary tools, one that supported Retrieval-Augmented Generation (RAG) and another that supported more adaptive quiz delivery.
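The two-agent arrangement can be pictured as each agent running an ordered set of tools over a shared state. The sketch below is a plain-Python illustration under that assumption; the `Agent` class and all four tool functions are hypothetical placeholders, and the tools return canned values where the platform would call an LLM or a vector store.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Tuple

State = Dict[str, Any]
Tool = Callable[[State], State]


@dataclass
class Agent:
    """Runs its tools in order, merging each tool's output into the state."""
    name: str
    tools: List[Tuple[str, Tool]]

    def run(self, state: State) -> State:
        for _tool_name, tool in self.tools:
            state = {**state, **tool(state)}
        return state


# Placeholder tools: each stands in for an LLM- or storage-backed step.
def ingest_tool(state: State) -> State:
    return {"chunks": [line for line in state["upload"].split("\n") if line]}


def generate_core_qa_tool(state: State) -> State:
    return {"core_qa": [{"question": c, "answer": "<sample answer>"} for c in state["chunks"]]}


def retrieve_tool(state: State) -> State:
    return {"selected": state["core_qa"][0]}


def differentiate_tool(state: State) -> State:
    return {"quiz_item": f"[variant for {state['level']}] {state['selected']['question']}"}


agent_1 = Agent("Agent 1", [("ingest", ingest_tool), ("generate", generate_core_qa_tool)])
agent_2 = Agent("Agent 2", [("retrieve", retrieve_tool), ("differentiate", differentiate_tool)])
```

An educator-side run of `agent_1` produces the `core_qa` entries, and a student-side run of `agent_2` over that state (plus a `level` value) yields one differentiated `quiz_item`.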

Our proposed adaptivity relies primarily on two distinct mechanisms. The first is using prompt engineering techniques, such as adjusting the complexity and depth of generated responses based on predefined user-profiles (e.g., beginner, intermediate, or advanced learners). For instance, our LLMs use varied system prompts like persona creation, e.g., “Explain this concept as if I am a graduate cybersecurity student” to provide tailored responses. The second is enhancing personalisation by leveraging interaction-based data gathered during the student’s engagement with quizzes, where responses indicating an incorrect understanding prompt immediate, adaptive, and tailored explanations. We can include an iterative response template, if required. While the current proof-of-concept does not include persistent storage or user profiles, our future development will incorporate persistent performance tracking, allowing for even deeper personalised adaptivity based on historical student interactions.
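The second, interaction-based mechanism can be sketched as a small in-memory session that reacts to each answer. This is an illustration only: the level names follow the profiles mentioned above, but the two-answer threshold, the streak rule, and the function names are assumptions, and nothing is persisted, in keeping with the current proof of concept.

```python
from dataclasses import dataclass

LEVELS = ["beginner", "intermediate", "advanced"]


@dataclass
class SessionState:
    """Per-session state held in memory only (no persistent profile)."""
    level: str = "intermediate"
    streak: int = 0  # >0: consecutive correct answers, <0: consecutive incorrect


def record_answer(session: SessionState, correct: bool, threshold: int = 2) -> str:
    """Update the session and return the feedback depth for this answer.

    Correct answers get 'minor' feedback and incorrect answers get 'major'
    feedback; `threshold` consecutive results in one direction move the
    explanation level up or down by one step (hypothetical rule).
    """
    if correct:
        session.streak = session.streak + 1 if session.streak > 0 else 1
    else:
        session.streak = session.streak - 1 if session.streak < 0 else -1

    idx = LEVELS.index(session.level)
    if session.streak >= threshold and idx < len(LEVELS) - 1:
        session.level = LEVELS[idx + 1]
        session.streak = 0
    elif session.streak <= -threshold and idx > 0:
        session.level = LEVELS[idx - 1]
        session.streak = 0

    return "minor" if correct else "major"
```

The returned depth and the current `level` could then be passed into the system prompt for the next question, which links this mechanism to the prompt-based one.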

In order to quickly develop a solution that held all the required features while also addressing steep adoption and development curves, we narrowed down the key features of a minimally viable solution. We opted to use Streamlit because it is a free, open-source, and low-code package for quickly developing deployable web apps, with hosting options for a small fee. Alongside this, we needed to identify a viable LLM orchestration and development platform. A relatively new but well-regarded organisation called Relevance AI hosts a customisable, low-code, and free development platform for LLM app developers. It offers the option to use various LLM API service endpoints and in-built storage solutions [11,12].

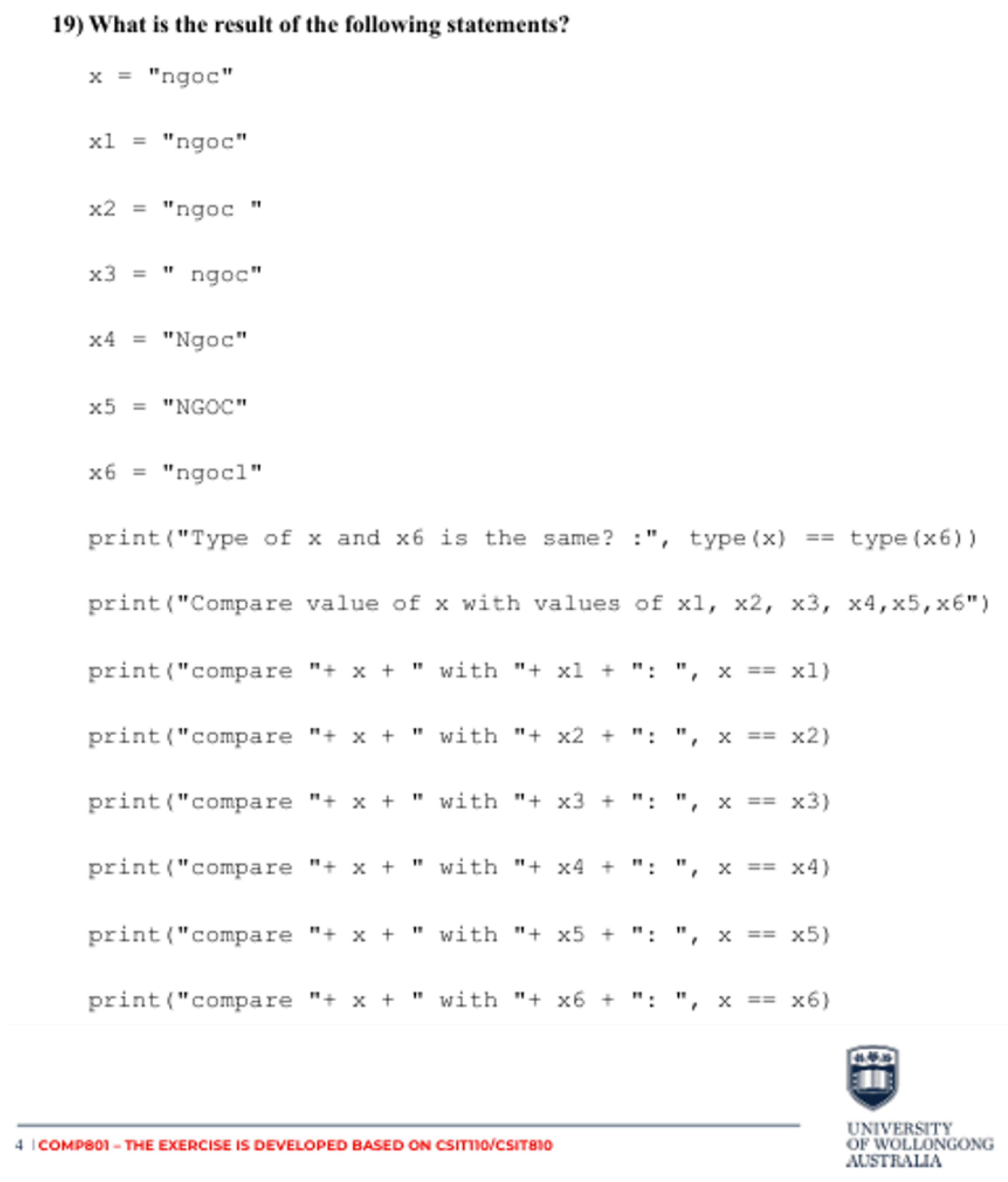

The user guides and resources provide ample support to address adoptability with limited prior experience. The selected tools were able to provide the infrastructure necessary to develop the key features of the proposed solution and form a functional basis for further development. It is important to note that this solution has many limitations, as this study aimed to develop key features only: the selected tools do not offer a simple way to develop with a local LLM, nor do they provide the customisability needed to develop scalable solutions without paying a fee, and even the paid options sacrifice some modularity. However, this method does allow the basic architecture to be created and the developed files to be exported for use in other projects, making it a suitable starting point for an LLM app development project. Streamlit has shown great customisability, scalability, and reliability while remaining easy to adopt; it is a tool we can use to develop this research well into the future.

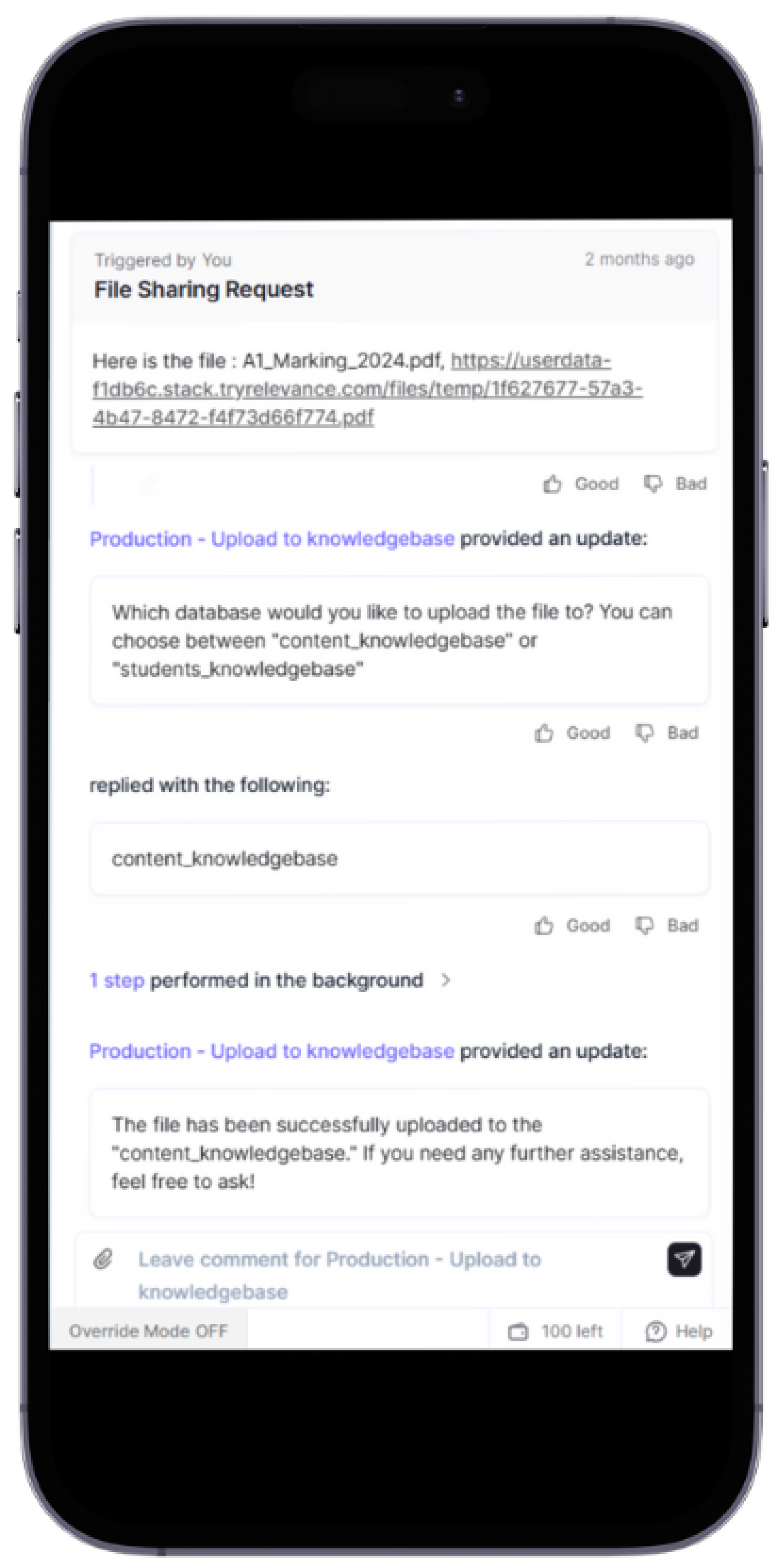

Figure 6 shows a web app interface implemented in Streamlit, with an embedded published workflow built on Relevance AI. Figure 7 shows how a tutor or teacher would use this interface to upload all relevant content, specifically course content and sample quiz material.

The information is processed by the extraction and generation tool of Agent 1 into question-and-answer pairs; this document is then fed into the knowledge base built into the tool’s sandbox environment, which can also be designed to be used as a vector store.

Agent 2 is responsible for delivering test content to a student. The ideal features it requires are the ability to ingest a knowledge base of subject content, differentiate questions, and provide immediate feedback based on user responses to questions. This was achieved by orchestrating an ingestion tool and a differentiation tool; the agent itself was powered by a conversationally trained Chat LLM, like GPT-4(Chat). This feature aimed to enhance the student engagement experience, in addition to adding a layer of differentiation to provide bespoke feedback responses each time.

This feedback response can be individualised to each student in future implementations; for the moment, we have not created a user identification system. However, once instantiated, it can easily be used to track performance and inform the LLM’s responses to each user, further improving their engagement with relevant responses and perhaps retention with “difficulty”-adjusted feedback.

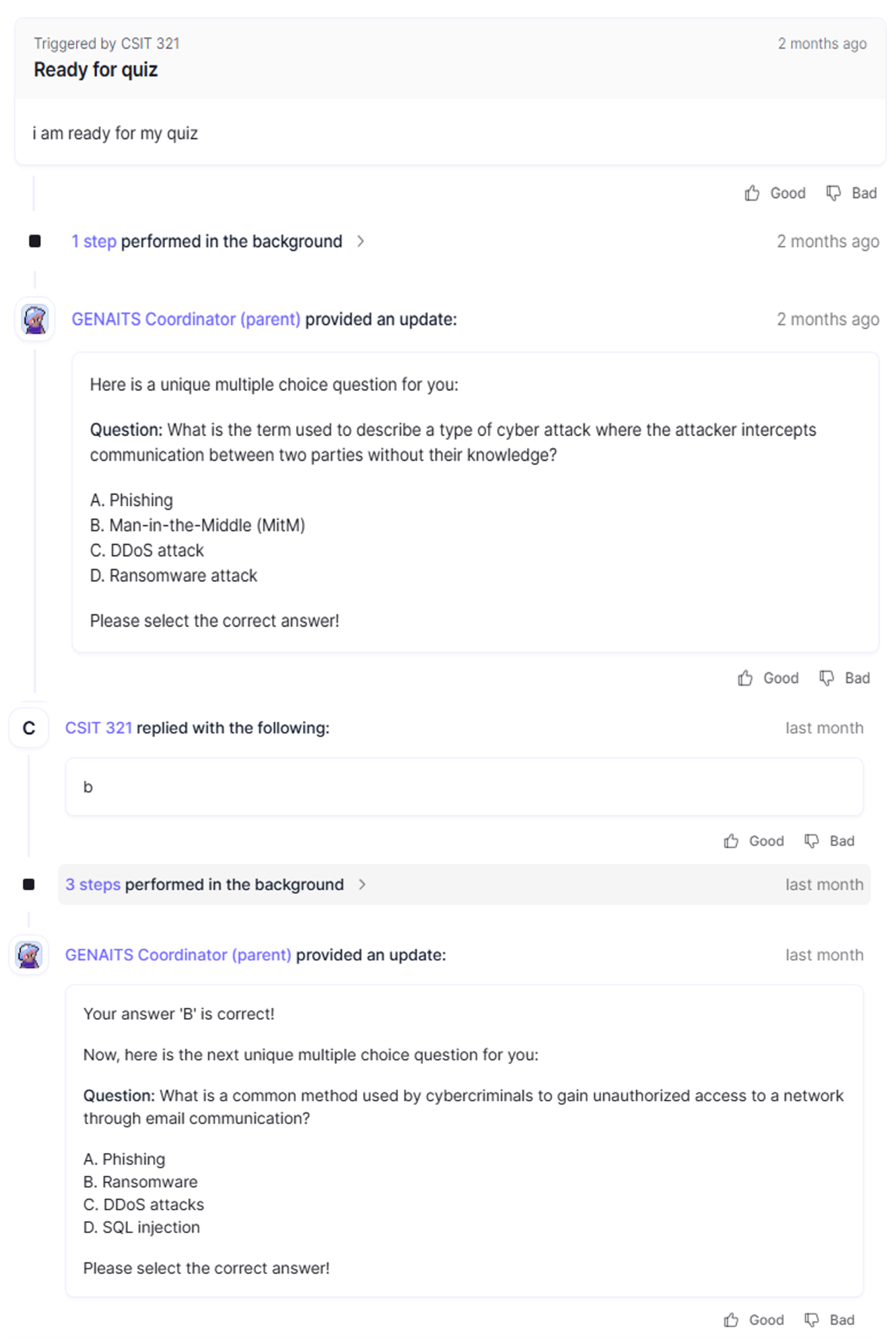

In Figure 8, we see an exchange between a student taking a quiz and Agent 2. It shows a sample programming question differentiated from the original content while keeping the core competency being tested the same. In addition, we see the student provide an incorrect answer and receive immediate feedback with an explanation.

In Figure 9, we see a similar exchange between a student taking a quiz and Agent 2, except we see the student provide a correct answer and receive immediate praise. The figure shows another question differentiated from the original content while keeping the core competency being tested the same, as expected.

3.3. Proposed Evaluatory Method

Our selection of a multi-agent architecture addresses critical gaps observed in earlier solutions, notably, scalability, adaptability, and generalisability within multi-disciplinary cybersecurity education. Compared to prior implementations such as static, single-dataset systems (CyATP) and general-purpose conversational AI systems (ChatGPT for Educators), our solution uniquely offers structured agent roles, allowing for differentiated content creation tailored specifically to educator and student interactions. This approach ensures dynamic content generation and continuous adaptability based on individual learner interactions, improving upon existing methods by enabling real-time differentiation and feedback.

As this research aimed to contribute to a solution for improving a learning experience, this required gathering empirical evidence on specific subjective opinions. Taking inspiration from previous research, we utilised evaluatory frameworks and selected metrics. We aimed to evaluate the GenAI ITS on three aspects: the general effectiveness of the ITS, platform usability, and the quality of the content generated.

Aligned with our identified learning objectives and the relevance of content quality, we created a survey to evaluate three aspects: general effectiveness, learning content quality, and the platform itself, as seen in Table 2. Adapted for relevance, we took inspiration from Beuran et al. (2021) [18], who implemented an adapted version of the Serious Games evaluation scale suggested by Emmanuel Fokides [15]. Although adapted for awareness training, Beuran’s modified scale and content quality evaluation survey was suitable for our purposes as the evaluated subjective criteria aligned with the identified learning objectives, of which many have subjective metrics described in the evaluation scale. The Content Quality section focuses on evaluating the learning material of the learning activity, evaluating the generated test content for errors, and overall satisfaction. Further, we adapted a simple System Usability Scale [16] as well as empirical platform performance metrics such as accessibility, availability, and reliability. This type of approach can be dependent on the deployed platform; for our research, we deployed this POC as a web app and, as such, we used aligned metrics.
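For the usability component, the standard System Usability Scale scoring rule can be written out as a short sketch. It assumes the unmodified ten-item questionnaire on a 1 to 5 scale, with odd-numbered items positively worded and even-numbered items negatively worded; an adapted version of the scale, as used here, may need a different item mapping. The function name is illustrative.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Compute a 0-100 System Usability Scale score from ten 1-5 responses.

    Odd-numbered items contribute (response - 1), even-numbered items
    contribute (5 - response), and the sum is multiplied by 2.5.
    """
    if len(responses) != 10:
        raise ValueError("SUS expects exactly 10 item responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")

    total = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += response - 1  # positively worded item
        else:
            total += 5 - response  # negatively worded item
    return total * 2.5
```

A respondent who answers 3 to every item scores 50.0, which is the midpoint of the scale.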

The final survey consisted of several sections ranging from demographic questions to Likert-scaled and open-ended questions. This was to evaluate three things: the quality of content, the general effectiveness, and platform usability. We go into more detail about the structure of these longitudinal surveys in Table 2.

The intention was to collect samples of results from students in higher education enrolled in a cybersecurity component; this allowed us to gain a baseline understanding of participating students. Following this, they were asked to take part in a simulated tutorial using the proposed solution, allowing us to present another survey with similar questions to analyse any significant variations post-intervention.
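One simple way to compare the baseline and post-intervention responses is a paired summary per survey item, sketched below. This is an assumption about how the analysis could be done, not a description of a completed analysis: the function name and the returned fields are hypothetical, and because Likert responses are ordinal, a non-parametric paired test may be more appropriate than the t statistic shown here.

```python
import math
import statistics
from typing import Dict, Optional, Sequence, Union

Number = Union[int, float]


def paired_change(pre: Sequence[Number], post: Sequence[Number]) -> Dict[str, Optional[float]]:
    """Summarise the per-respondent change between two matched surveys.

    `pre` and `post` hold one score per respondent, in the same order.
    Returns the mean change, its sample standard deviation, and a paired
    t statistic (None when every respondent changed by the same amount).
    """
    if len(pre) != len(post):
        raise ValueError("pre and post must have the same number of respondents")
    if len(pre) < 2:
        raise ValueError("at least two respondents are required")

    diffs = [after - before for before, after in zip(pre, post)]
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)
    t_stat = None if sd_diff == 0 else mean_diff / (sd_diff / math.sqrt(len(diffs)))
    return {
        "n": len(diffs),
        "mean_change": mean_diff,
        "sd_change": sd_diff,
        "t": t_stat,
    }
```

A positive `mean_change` on an item would indicate higher ratings after the simulated tutorial than at baseline for the same respondents.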

By utilising agent-specific orchestration and dynamic content generation, the proposed solution enhances existing methods by improving individual student engagement, the real-time adaptability of quizzes, personalised feedback, and, ultimately, learning retention, addressing key shortfalls in traditional ITS implementations. These features align with the identified gaps from previous works (e.g., CyATP’s limited scalability and ChatGPT’s lack of specialised personalisation), thus directly contributing to measurable improvements in educational outcomes.

4. Implications and Future Directions

Here, we develop the insights gained from the application of the proposed methodologies, discuss the implications of these findings, and address how GenAI can effectively enhance cybersecurity education. This study focused on proposing methodologies and exploring potential outcomes and theoretical implications based on a structured analysis and review of the literature.

By discussing these results and theoretical implications, this section not only highlights the potential benefits of integrating GenAI into cybersecurity education but also addresses the critical considerations for its successful implementation. These insights aim to contribute to the ongoing discourse on enhancing educational practices through innovative technologies and pave the way for future research in this burgeoning field.

4.1. Theoretical Implications

Public opinion highlights the need for greater awareness regarding the benefits and risks of AI in education, suggesting that further public education on these matters is necessary for wider acceptance [28]. Further, the proposed conceptual solution and evaluation methodology were designed to systematically evaluate the impact of generative artificial intelligence (GenAI) on enhancing the practical application of theoretical knowledge in cybersecurity education. This section discusses the potential outcomes of these evaluations and the broader implications for integrating GenAI into cybersecurity educational programs.

As noted by As’ad (2024) [14], the future of AI-enhanced ITSs lies in interdisciplinary efforts that promote both technological innovation and adherence to core educational and ethical principles.

Enhanced Student Engagement: GenAI’s ability to provide personalised learning experiences and adaptive content is expected to significantly increase student engagement. This personalisation allows for a more tailored educational approach that meets individual student needs and learning styles.

Alignment with Educational Theories: The application of GenAI in cybersecurity education aligns with several educational theories, including constructivism, which posits that learners construct knowledge through experiences and reflections. GenAI facilitates these experiences by creating realistic simulations and scenarios where students can apply their theoretical knowledge.

Pedagogical Enhancements: GenAI can also enhance pedagogical strategies by providing instructors with advanced tools to assess student progress in real-time, adjust teaching approaches, and offer immediate feedback. This dynamic adjustment helps in addressing educational context gaps and ensuring that all students can achieve their learning objectives.

4.2. Challenges

Here, we aim to showcase the various nuanced and common challenges with the use of generative AI and other supporting developer tools in the development of an LLM-based ITS. Further, we discuss measures taken to address those common challenges and bolster ethics around future research and the collection of data.

Generative AI Limitations: As with any technology, GenAI is subject to well-known concerns, such as hallucinations, short attention spans, a lack of moral guidance, and filtering. Document ingestion posed considerable challenges, and appropriate parsing proved an integral step in the correct extraction of questions. Concerns like these are common among developers; as such, we have seen an increasing maturity of LLM chains and other libraries meant to standardise development practices and mitigate the general concerns related to generative AI development.

Another major concern we aimed to address in developing LLM applications for education delivery is the steep learning curve of new technologies. As highlighted in [13], the barriers to adopting intelligent tutoring systems include a lack of the specialised skills required to develop efficient systems, a lack of confidence in the technology’s maturity, and individual educators’ shallow understanding of the underlying technology.

Addressing GenAI Limitations: This concept architecture was built using low-code tools and libraries for quick deployment, such as Relevance AI. These tools include in-built vector memory and knowledge databases, knowledge base integration, OpenAI LLM integration, and agent tool calling.

While GenAI presents many opportunities for enhancing education, it also comes with challenges, such as the risk of generating misleading information, biases that lead to hallucinations, and ethical limitations. It is crucial to implement robust validation mechanisms to ensure the accuracy and reliability of GenAI outputs. Simple prompt engineering and system prompts are needed to whitelist appropriate response formats and content. Currently, this can be bolstered by using pre-trained models and chains that handle basic shortfalls of generative AI and assuage most concerns around appropriately filtered outputs. Further, parameters can be tuned to address concerns of attention span and other memory-related issues by, for example, increasing the context window and introducing memory context parameters.
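As a minimal sketch of what such a validation mechanism could look like, the Python snippet below pairs a system prompt that whitelists a response format with a check applied to each model reply before it reaches a student. The JSON layout, the key names, the topic list, and the function `validate_quiz_item` are illustrative assumptions and are not part of the proposed architecture or of any specific tool's API.

```python
import json
from typing import Tuple

# Hypothetical whitelist: the only keys and topics a generated quiz item may use.
ALLOWED_KEYS = {"question", "options", "answer", "topic"}
ALLOWED_TOPICS = {"risk management", "python for security"}

# Hypothetical system prompt that states the whitelisted format to the model.
SYSTEM_PROMPT = (
    "You are a cybersecurity tutor. Reply ONLY with a JSON object with the keys "
    "question, options, answer, topic. The topic must be one of: "
    + ", ".join(sorted(ALLOWED_TOPICS)) + "."
)


def validate_quiz_item(raw: str) -> Tuple[bool, str]:
    """Return (is_valid, reason) for a raw model reply."""
    try:
        item = json.loads(raw)
    except json.JSONDecodeError:
        return False, "not valid JSON"
    if not isinstance(item, dict):
        return False, "not a JSON object"
    if set(item) != ALLOWED_KEYS:
        return False, "unexpected or missing keys"
    topic = item["topic"]
    if not isinstance(topic, str) or topic.lower() not in ALLOWED_TOPICS:
        return False, "topic not whitelisted"
    options = item["options"]
    if not isinstance(options, list) or len(options) < 2:
        return False, "need at least two options"
    if item["answer"] not in options:
        return False, "answer is not one of the options"
    return True, "ok"
```

A reply that fails the check could be regenerated or flagged for educator review rather than shown to the student. The prompt alone does not guarantee compliance, which is why the reply is checked independently.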

Addressing Challenges of Privacy and Security in a University System: The integration of GenAI must also consider ethical implications, including privacy concerns and the transparency of AI-driven decisions. Ensuring that GenAI systems are designed with accountability in mind is essential. As such, a modular and dynamic system can be developed in accordance with an individual university’s data and privacy policies. This can be achieved through the modular development environment, which allows for ease in the implementation of various parameters and policy-driving mechanisms.

As part of our research, we also plan to collect and store anonymised individual survey responses from participants. This requires approval from the University of Wollongong’s Human Research Ethics Committee (HREC), which we have sought and received for our survey design. The process showed us the standard requirements for the collection, storage, and usage of data within a university system.

In this proof of concept (POC), we mitigated specific challenges of data integrity through the siloed nature of the in-built knowledge base within our selected tool. However, to address the privacy concerns of any university or organisation, future implementations will have to integrate with fully managed storage solutions.

This informed our future direction to develop a fully localised GenAI ITS, allowing for ease of integration into any storage solution and reducing the risk from off-site computation and thereby the risk of loss of data privacy and integrity. Cloud-based API integrations offer conveniences such as in-built chaining, constantly updated pre-trained models, and zero dependency on local hardware; nevertheless, this POC indicates that the proposed architecture can be transposed to meet those standards.

4.3. Future Research Directions

The future of GenAI in cybersecurity education presents several promising avenues for research and development. Key areas for future exploration of this work include the following: Rigorous empirical studies are necessary to validate the conceptual framework and to test the real-world effectiveness of a GenAI-driven ITS in improving learning outcomes and practical skills delivery in cybersecurity. These studies should employ a mix of longitudinal analysis and controlled experiments to evaluate how GenAI influences student learning over time.

Our proposed survey will collect empirical data on qualitative and functional areas of the perceived impact of this GenAI ITS. This perception can be measured through the longitudinal application of the survey, once immediately prior to use of the tool and once immediately after, with responses from participants who never use the tool treated as baseline values.
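One way the paired pre-use and post-use Likert responses could be compared is sketched below in Python using an exact two-sided sign test, which only assumes ordinal data. This is an illustrative choice, not the analysis method of this paper; the function name `sign_test` and the example scores are hypothetical and are not collected data.

```python
from math import comb


def sign_test(pre, post):
    """Exact two-sided sign test on paired ordinal (e.g., Likert) scores."""
    if len(pre) != len(post):
        raise ValueError("pre and post must contain one score per participant")
    # Keep only participants whose score changed; ties carry no sign.
    diffs = [after - before for before, after in zip(pre, post) if after != before]
    n = len(diffs)
    if n == 0:
        return {"n": 0, "improved": 0, "declined": 0, "p_value": 1.0}
    improved = sum(1 for d in diffs if d > 0)
    declined = n - improved
    k = min(improved, declined)
    # P(X <= k) under Binomial(n, 0.5), doubled for a two-sided test.
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return {
        "n": n,
        "improved": improved,
        "declined": declined,
        "p_value": min(1.0, 2 * tail),
    }


# Hypothetical 5-point Likert scores for one survey item, one pair per student.
pre_scores = [2, 3, 3, 2, 4, 3, 2, 3]
post_scores = [4, 4, 3, 3, 5, 4, 3, 4]
result = sign_test(pre_scores, post_scores)
```

The same function could be applied to two survey rounds from participants who never used the tool, giving a baseline against which the change in the intervention group can be read.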

We can extend this to include metrics for a comparative analysis of candidate LLMs in terms of output standardisation and efficiency for each aspect of LLM use, e.g., tool-calling, embeddings, and content summarisation.
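A comparison of this kind could record, for each candidate model and task, how often the output matches the expected format and how long generation takes. The Python sketch below shows one possible harness. The `benchmark` function, the stub "models", and the validity check are all hypothetical stand-ins; real model calls would replace the stubs.

```python
import time
from typing import Callable, Dict, List


def benchmark(
    models: Dict[str, Callable[[str], str]],
    prompts: List[str],
    is_valid: Callable[[str], bool],
) -> Dict[str, Dict[str, float]]:
    """Measure format-valid rate and mean generation time per model."""
    if not prompts:
        raise ValueError("at least one prompt is required")
    results = {}
    for name, generate in models.items():
        valid = 0
        total_seconds = 0.0
        for prompt in prompts:
            start = time.perf_counter()
            reply = generate(prompt)  # only the generation call is timed
            total_seconds += time.perf_counter() - start
            if is_valid(reply):
                valid += 1
        results[name] = {
            "valid_rate": valid / len(prompts),
            "mean_seconds": total_seconds / len(prompts),
        }
    return results


# Hypothetical stand-ins for two models on a summarisation task.
stub_models = {
    "model_a": lambda prompt: "SUMMARY: " + prompt[:20],
    "model_b": lambda prompt: prompt[:20],  # ignores the required prefix
}
scores = benchmark(
    stub_models,
    prompts=["Explain phishing.", "Explain risk appetite."],
    is_valid=lambda reply: reply.startswith("SUMMARY: "),
)
```

Running the harness separately per task (tool-calling, embeddings, summarisation) with a task-specific validity check would keep the metrics comparable across models.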

Investigating how GenAI tools can be adapted and scaled to various educational contexts and learning environments is crucial for maximising their impact. Ensuring that these tools can be integrated into different institutional structures and tailored to diverse learner needs will be a key challenge in the coming years.

Future research must also focus on addressing the ethical concerns surrounding AI in education. Establishing ethical frameworks for data usage, transparency, and accountability in AI-driven decisions will be essential for ensuring that these technologies are adopted responsibly.

Another area for future research is the integration of GenAI into broader cybersecurity curricula. This extends beyond specific areas such as cybersecurity risk management and Python programming to the wider curriculum. Expanding the scope of GenAI applications could lead to a more holistic improvement in cybersecurity education.

5. Conclusions

Through our contributions, personalised learning powered by GenAI ITSs can become more accessible to various educational institutions aimed at fostering higher student engagement, improved comprehension of complex cybersecurity topics, and increased retention rates. However, the implementation of GenAI tools also comes with ethical and operational challenges.

Highlighting our contributions, we presented a scalable and generalisable framework showing GenAI’s ability to provide adaptive, real-time feedback and dynamic learning experiences, offering a hands-on solution to one of the most persistent challenges in cybersecurity education: bridging the gap between theoretical concepts and practical experience. Additionally, its potential impact on educator adoption in various educational environments and contexts, and on increasing student engagement and retention, is significant. We implemented a practical solution using this framework, keeping educator adoption in mind. This solution revealed the potential to improve and scale features and abilities using agentic workflows, deploying associated tools as necessary. The use of quick development tools also allowed for higher customisability to educator contexts. Finally, we identified a combination of evaluation methods, proposing a pre-intervention and post-intervention survey, appropriate for a platform as a solution. We designed a way to consolidate human feedback on platform usability, general effectiveness, and content quality, providing a more holistic collection of data and thereby analysis of key indicators.

The methodology proposed in this paper emphasises the importance of qualitative and theoretical analysis to assess GenAI’s impact on education before empirical trials are undertaken. In the future, we will extend this to the empirical analysis of localised or hosted model performance on individual tasks, security assessments, and platform evaluations. The findings suggest that while GenAI offers considerable benefits, there is still much to learn about the long-term impacts and scalability of these technologies.

By continuing to investigate these areas, future research can provide clearer insights into how GenAI technologies can be fine-tuned and optimised to meet the evolving needs of cybersecurity education. Ultimately, GenAI has the potential to transform the way we train the next generation of cybersecurity professionals, but its successful integration will require ongoing evaluation, adaptation, and careful attention to both educational and ethical considerations.