Abstract

With the availability of a virtually unlimited number of text documents in digital format, automatic comparison of textual data is essential for extracting meaningful insights that are difficult to identify manually. Many existing tools, including AI and large language models, struggle to provide precise and explainable insights into textual similarities. In many cases, they determine the similarity between documents as reflected by the text, rather than the similarities between the subjects discussed in these documents. This study addresses these limitations by developing an n-gram analysis framework designed to compare documents automatically and uncover explainable similarities. A scoring formula assigns a weight to each n-gram, where the weight is higher when the n-gram is more frequent in both documents, but is penalized when the n-gram is more frequent in the English language. Visualization tools such as word clouds enhance the representation of these patterns, providing clearer insights. The findings demonstrate that this framework effectively uncovers similarities between text documents, offering explainable insights that are often difficult to identify manually. This non-parametric approach provides a deterministic solution for identifying similarities across various fields, including biographies, scientific literature, historical texts, and more. Code for the method is publicly available.

1. Introduction

In the modern digital landscape, the need for effective methods to analyze, compare, and interpret large textual data is paramount. The ability to detect patterns of similarities between text documents can provide immediate insights that would otherwise be difficult to obtain. For instance, users who study certain political figures can use large amounts of text to identify similarities between them. Researchers in the medical domain can identify similarities or common causes between two clinical conditions by comparing a large corpus of medical records that describe these conditions. Similarly, health records associated with certain drugs or vaccines can be compared to identify common symptoms or side effects, and the same analysis can even be applied to user-generated documents collected from social media. In the humanities domain, comparisons can be used to identify specific similarities between novels, literary styles, or characters. However, despite the potential usefulness of quickly and explainably identifying similarities between documents, such algorithms have attracted relatively little attention.

Automatic analysis of text has been studied intensively for tasks such as automatic document classification [1,2,3,4], automatic translation of documents [5,6], document summarization [7,8,9], and automatic generation of documents, which is often performed through large language models [10].

Text document comparison is a common feature in modern word processors, but its goal is merely to compare and match words and sentences in the body of the text. It cannot be used effectively to compare the specific topics discussed in the documents. Therefore, while automatic document comparison is a commonly used feature, it is irrelevant to the task of identifying specific similarities between two or more entities as they are described in text documents.

One of the most common forms of identifying similarities between documents is automatic plagiarism detection [11,12,13,14,15]. Other research efforts focused on similarities between scientific documents, including similarities in keywords and references [16]. Term extraction was also used to determine document similarities [17]. Domains in which automatic document similarities are commonly used include law [18,19] and accounting [20]. Using machine learning, the task of clustering was applied to text documents to identify the most similar documents inside a text corpus [21]. Similarities between text documents can also be identified using stylistic elements of the writing [22,23].

Document similarity comparison can include string-based methods, knowledge-based methods, corpus-based methods, and hybrid methods that combine different approaches [24]. String-based methods perform a lower-level comparison of the text by comparing two or more sequences of characters. For instance, this can be achieved by computing the minimum number of primitive operations (insertions, deletions, and substitutions) required to transform one string into the other [25,26,27]. The edit-distance approach has also been used extensively outside text comparison, for instance in genetics to compare and identify matching DNA sequences [28].
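As an illustration of the string-based approach, the following minimal Python sketch computes the classic Levenshtein edit distance, in which each insertion, deletion, or substitution costs one operation. It is a generic textbook formulation rather than part of the method proposed in this study, and the function name is illustrative.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, or
    substitutions needed to turn string a into string b."""
    # prev[j] holds the distance between the previous prefix of a and b[:j]
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]  # distance from a[:i] to the empty prefix of b
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete ca
                            curr[j - 1] + 1,      # insert cb
                            prev[j - 1] + cost))  # substitute (or match)
        prev = curr
    return prev[-1]


print(levenshtein("kitten", "sitting"))  # 3
print(levenshtein("string", "strong"))   # 1
```

Because the distance operates on characters, “string” and “strong” are a single edit apart even though the words are unrelated in meaning. This illustrates why such measures say little about the subjects discussed in two documents.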

Knowledge-based methods use semantic networks to extract information and draw conclusions from text. They use predefined ontologies to connect words with their meanings, often within a specific domain. The difference between two pieces of text can be measured by the length of the path on the semantic network that needs to be traversed to reach a match [29,30]. Another form is through collections of common terms or concepts [31,32].
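Path-based measurement on a semantic network can be sketched as a breadth-first search for the shortest path between two concepts, with similarity decreasing as the path grows longer. The toy network below is hypothetical, and the score 1 / (1 + path length) follows the convention of WordNet path similarity (available in NLTK as `Synset.path_similarity`). The cited works may use different networks and scoring functions.

```python
from collections import deque
from typing import Optional


def path_length(graph: dict[str, set[str]], start: str, goal: str) -> Optional[int]:
    """Number of edges on the shortest path between two concepts (BFS)."""
    if start == goal:
        return 0
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        node, dist = queue.popleft()
        for neighbor in graph.get(node, set()):
            if neighbor == goal:
                return dist + 1
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append((neighbor, dist + 1))
    return None  # no path: the concepts are not connected


def path_similarity(graph: dict[str, set[str]], a: str, b: str) -> float:
    """Shorter paths mean more similar concepts: 1 / (1 + path length)."""
    dist = path_length(graph, a, b)
    return 0.0 if dist is None else 1.0 / (1 + dist)


# Hypothetical toy network of "is-a" links, treated as undirected edges.
edges = [("physicist", "scientist"), ("chemist", "scientist"),
         ("scientist", "person"), ("athlete", "person"),
         ("footballer", "athlete")]
graph: dict[str, set[str]] = {}
for u, v in edges:
    graph.setdefault(u, set()).add(v)
    graph.setdefault(v, set()).add(u)

print(path_similarity(graph, "physicist", "chemist"))     # 2 edges: 0.333...
print(path_similarity(graph, "physicist", "footballer"))  # 4 edges: 0.2
```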

Corpus-based similarity of text is based on measuring the similarity between sub-parts of the text, normally sentences, and a defined collection of text documents. Comparing the text to a single yet very large collection of documents makes it possible to find similarities to common sentences that appear in the corpus, which can then be used to identify similarities between documents or to transform them [33,34,35].

Automatic text similarity has been used extensively for document clustering and text mining [36,37,38]. For instance, cosine similarity can be used to compare feature vectors made of the similarities between different components [39]. Association rule mining is another common method for identifying similarities between texts [40,41,42].
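For reference, cosine similarity between two documents is often computed over bag-of-words count vectors. The minimal sketch below assumes whitespace tokenization and raw counts, which is a simplification; practical systems typically use TF-IDF weights or learned features. In the usage example, most of the dot product comes from the shared word “the”. This illustrates how such scores can reflect the wording of the text rather than its subjects.

```python
import math
from collections import Counter


def cosine_similarity(text_a: str, text_b: str) -> float:
    """Cosine of the angle between the bag-of-words count vectors of two texts."""
    va = Counter(text_a.lower().split())
    vb = Counter(text_b.lower().split())
    # Dot product over the words the two texts share
    dot = sum(va[t] * vb[t] for t in va.keys() & vb.keys())
    norm_a = math.sqrt(sum(c * c for c in va.values()))
    norm_b = math.sqrt(sum(c * c for c in vb.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# "the" contributes 4 of the 6 units of the dot product; the result is about 0.75.
print(cosine_similarity("the cat sat on the mat", "the dog sat on the log"))
```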

While these methods have been useful for their designated purposes, they are less applicable to discovering patterns of similarities between entities described in text documents. The similarity scores are used effectively for text data mining, text information retrieval, and document clustering. However, they do not necessarily provide direct insights into the specific elements that help to study similarities between entities. Also, these methods are often based on complex rules, and are therefore not always explainable, in the sense that the user cannot always understand why different patterns express similarities between the documents. Existing AI and large language models can also be used to identify similarities between different entities, but they often provide generalized summaries that do not necessarily capture subtle nuances of similarity.

Therefore, many of these current solutions struggle to deliver explainable insights that are easy for the user to understand. This study seeks to enhance existing natural language processing (NLP) tools by developing an explainable framework that can identify specific similarities between large text documents. Ultimately, these similarities should be communicated to the user in a clear and intuitive manner. The analysis is domain-independent, and can be applied without training a machine-learning system, collecting data, or crafting semantic networks based on existing knowledge.

By focusing on detailed comparisons, this framework aims to facilitate more informative assessments of similarities between pieces of text. The analysis is explainable, and allows the user to understand the meaning of the outcomes and reconstruct the path that led to the results. The proposed approach can therefore work in concert with other approaches such as solutions based on large language models, and provide additional insights that large language models often struggle to identify. Since the algorithm is deterministic and clear, users can easily evaluate and understand the nature of the output.

2. Automatic Similarity Analysis Method

The purpose of the method is to provide a quick overview of topics that are common to the two documents, and might therefore be relevant to similarities between the entities being studied. A simple comparison of words is not effective, since most of the terms shared by the two documents are common words that provide no specific information about similarities between the entities. For that purpose, n-grams are used in combination with several other NLP tools.

2.1. N-Grams

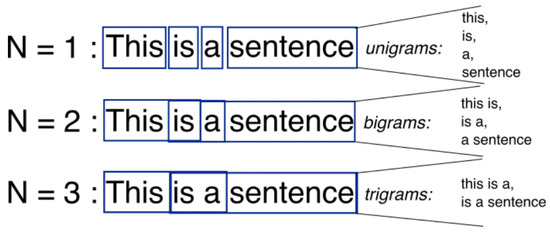

In the context of NLP, n-grams are contiguous sequences of ‘n’ items, typically words [43,44,45,46]. These items can also be characters or syllables, depending on the granularity of the analysis. The value of ‘n’ determines the order of the n-gram. In this study, the n-grams are key to understanding the similarities between texts by focusing on patterns in word sequences. They can be used to pinpoint common phrases and structures, which allows for a more informative comparison between documents. Figure 1 shows an example of 1-grams, 2-grams, and 3-grams.
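A minimal sketch of n-gram extraction follows. It assumes the text has already been tokenized (here, naively, by splitting on whitespace) and uses a hypothetical sentence rather than the example shown in Figure 1.

```python
def ngrams(tokens: list[str], n: int) -> list[tuple[str, ...]]:
    """Return all contiguous sequences of n tokens, in their original order."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


tokens = "Einstein won the Nobel Prize".split()  # naive whitespace tokenization
for n in (1, 2, 3):
    print(f"{n}-grams:", [" ".join(gram) for gram in ngrams(tokens, n)])
# 1-grams: ['Einstein', 'won', 'the', 'Nobel', 'Prize']
# 2-grams: ['Einstein won', 'won the', 'the Nobel', 'Nobel Prize']
# 3-grams: ['Einstein won the', 'won the Nobel', 'the Nobel Prize']
```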

Figure 1.

Examples of 1-grams, 2-grams, and 3-grams.

Analysis through n-grams requires pre-processing of the text data, such as tokenization and lemmatization. That was performed with two commonly used NLP libraries: SpaCy and NLTK. SpaCy is known for its high efficiency and accuracy, making it well suited for real-time applications or tasks that involve the analysis of very large quantities of text [47,48,49]. One of the advantages of SpaCy in the context of this study is that it supports models for named entity recognition (NER) and dependency parsing. On the other hand, SpaCy’s focus on high-level processing means that additional tools are needed for detailed corpus-based analysis.

NLTK is a comprehensive library supporting diverse text processing techniques such as stemming, stopword removal, and part-of-speech tagging. While NLTK may be slower on large datasets, it offers capabilities for detailed analysis and pre-processing. In this study, it is used for part-of-speech tagging and entity identification.

2.2. Weighted n-Gram Analysis for Identification of Similarities

The identification of similarities between text documents is based on identifying the n-grams that appear in both documents and assigning weights to them. The analysis receives two chunks of text data as its input, and identifies the n-grams that are common to both. For that purpose, the words need to be converted to their base form, so that different inflections of the same word are matched. This is achieved through the SpaCy library, which provides mature automatic lemmatization of text.
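The core matching step can be sketched as counting the n-grams of each document and keeping those that occur in both. In this hypothetical example, lowercasing and a simple regular-expression tokenizer stand in for SpaCy lemmatization, where the base form of each token would instead be taken from `token.lemma_`. The shared bigram “the nobel” is found alongside “nobel prize”. This is exactly the kind of uninformative match that the method's weighting is meant to suppress.

```python
import re
from collections import Counter


def ngram_counts(text: str, n: int) -> Counter:
    """Count the n-grams of a text (lowercasing stands in for lemmatization)."""
    tokens = re.findall(r"[a-z0-9']+", text.lower())
    return Counter(" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def common_ngrams(doc1: str, doc2: str, n: int) -> dict[str, tuple[int, int]]:
    """Map each n-gram found in both documents to its (count in doc1, count in doc2)."""
    c1, c2 = ngram_counts(doc1, n), ngram_counts(doc2, n)
    return {gram: (c1[gram], c2[gram]) for gram in c1.keys() & c2.keys()}


doc1 = "Einstein received the Nobel Prize in Physics."
doc2 = "Hawking was never awarded the Nobel Prize, but it was discussed."
print(sorted(common_ngrams(doc1, doc2, 2)))  # ['nobel prize', 'the nobel']
```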

The concept of n-grams is needed since many patterns of similarity cannot be detected through a single word. For instance, in a comparison of two preeminent scientists, a possible pattern of similarity is that both won a Nobel Prize. Since “Nobel Prize” consists of two words, the words “Nobel” and “Prize” will not be associated with each other if each is analyzed as a single word. Another example is the term “climate change”, where each of the two words has a meaning of its own, but together they denote a different concept. The use of n-grams ensures that terms expressed by multiple words are also analyzed and can be detected.

The primary weakness of this technique is that the vast majority of matching n-grams in two documents are irrelevant to the similarities between the subjects discussed in these documents. That is, while the text might be similar due to common words that appear in both documents, that similarity does not provide any useful insights to the user. For instance, simple words or phrases such as “it is” might appear numerous times in both documents, but the fact that they appear in both does not provide any useful insight. In that sense, such matches reflect the similarity of the text, but not the similarity between the subjects being compared. To address that weakness, the analysis must be able to trim n-grams of common words that are frequent in both documents. Such n-grams are not expected to include useful insights, and would overwhelm the user with unimportant data, making it difficult to identify the truly informative insights. That can be achieved by penalizing topics or words that are frequent in the English language. Algorithm 1 provides a high-level description of the method.

Algorithm 1. High-level algorithm for common n-gram analysis.

Arguments:

- doc1_data and doc2_data: n-gram data, noun chunks, and named entity information for each document.

- eng_dict: a dictionary of English word frequencies used for the significance calculation.

The backbone of the method is the weighting of the n-grams according to their ability to reveal useful similarities between two text documents. Such weighting can be conceptualized as the identification of n-grams that are common to the two documents being compared, but are not so common in the general language used in these documents. That is, the weight of an n-gram increases when the n-gram is common in the two documents, but decreases when the n-gram is common in the language of the documents. That provides an explainable method that can identify specific n-grams that indicate similarities between documents.

In its simplest form, the weights can be determined using a basic frequency comparison:

In this comparison, the frequencies of an n-gram in the two documents are compared with its baseline frequency, derived from the large and representative Corpus of Contemporary American English [50]. The weight can therefore be conceptualized as the frequency of the word in the documents in which it appears, compared with its frequency in the English language. Words that are more frequent in the documents are assigned a higher weight, but that weight is reduced when the word is more frequent in the English language in general.
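The exact form of the basic frequency comparison is not reproduced in this text. The sketch below therefore assumes one plausible reading of the description: the average relative frequency of an n-gram in the two documents, divided by its relative frequency in a reference corpus such as COCA. The small floor for n-grams missing from the reference corpus, and all numbers in the example, are hypothetical.

```python
def basic_weight(f1: float, f2: float, f_eng: float, floor: float = 1e-7) -> float:
    """Assumed basic frequency comparison (illustrative form only).

    f1, f2: relative frequencies of the n-gram in documents 1 and 2.
    f_eng:  relative frequency of the n-gram in the English reference corpus.
    floor:  hypothetical lower bound that avoids division by zero for n-grams
            absent from the reference corpus.
    """
    return ((f1 + f2) / 2) / max(f_eng, floor)


# Hypothetical relative frequencies, for illustration only.
print(basic_weight(0.050, 0.045, 0.040))    # a function word such as "the": about 1.19
print(basic_weight(0.002, 0.001, 0.00001))  # a rare term such as "relativity": about 150
```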

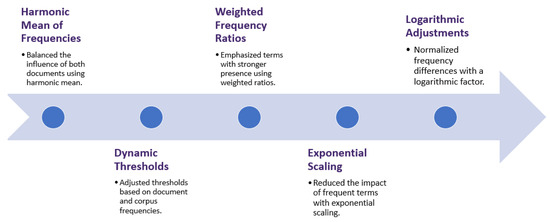

To allow the noise level to be adjusted through the weighting, logarithmic scaling was applied, as shown by Equation (2). The logarithmic scaling provided a smoother weighting that further lowered the weight of n-grams that are common in the English language, thereby reducing the number of similarity insights that reflect the use of language rather than the subjects that are relevant to the user.

The weights can be refined with thresholding based on average frequency. That is, if the weight is smaller than the threshold, the weight is set to zero. The intuition behind the rule is that if a word is more frequent in the English language than it is in either of the documents, that word is unlikely to provide useful insights about these documents. The thresholding is required to eliminate common terms that are not meaningful for distinguishing the documents.
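Under a similar assumption, logarithmic scaling and thresholding can be sketched as follows. The weight is the natural logarithm of the ratio between the average document frequency and the English baseline, and weights smaller than the threshold are set to zero. With the default threshold of 0 (an assumed value), an n-gram whose average frequency in the two documents does not exceed its English frequency receives no weight. This illustrates the described behavior, not the exact Equation (2).

```python
import math


def log_weight(f1: float, f2: float, f_eng: float,
               threshold: float = 0.0, floor: float = 1e-7) -> float:
    """Log-scaled weight with thresholding (assumed form).

    f1, f2:    relative frequencies of the n-gram in documents 1 and 2.
    f_eng:     relative frequency of the n-gram in the English reference corpus.
    threshold: weights smaller than this value are set to zero.
    floor:     hypothetical lower bound for n-grams missing from the corpus.
    """
    avg = (f1 + f2) / 2
    if avg <= 0:
        return 0.0  # the n-gram does not occur in the documents
    w = math.log(avg / max(f_eng, floor))
    return w if w >= threshold else 0.0


print(log_weight(0.050, 0.045, 0.060))    # more frequent in English: 0.0
print(log_weight(0.002, 0.001, 0.00001))  # rare in English: about 5.01
```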

The final formula combines frequency differences, entity presence, and noun chunk recognition for an enriched analysis:

where:

- Base multiplier =

- The two squared-difference terms are the squared differences between the n-gram’s frequency in document 1 and in document 2, respectively, and its frequency in the English corpus.

- Entity presence is 0.5 if the n-gram is a named entity.

- Noun chunk presence is 0.5 if the n-gram is a noun chunk.

This formula synthesizes linguistic features and frequency adjustments to provide a more meaningful representation of textual similarity. If the n-gram contains an entity or noun chunk, the weight assigned to the n-gram is increased. Rather than relying on raw counts alone, the formula makes the comparison meaningful beyond mere frequency matching, allowing the method to prioritize n-grams that are more likely to be meaningful to the user. Figure 2 illustrates the steps of the analysis.
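The terms listed for the final formula can be combined as in the sketch below. The squared differences and the 0.5 bonuses follow the definitions above. The base multiplier is passed in as a parameter because its definition is not reproduced here, and the multiplicative way the terms are combined is an assumption. The `NgramStats` container is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class NgramStats:
    """Hypothetical container for the per-n-gram inputs of the final formula."""
    f1: float            # relative frequency in document 1
    f2: float            # relative frequency in document 2
    f_eng: float         # relative frequency in the English reference corpus
    is_entity: bool      # True if the n-gram is a named entity
    is_noun_chunk: bool  # True if the n-gram is a noun chunk


def enriched_weight(s: NgramStats, base_multiplier: float) -> float:
    """Sketch of the final weighting; the multiplicative combination is assumed."""
    d1 = (s.f1 - s.f_eng) ** 2  # squared difference: document 1 vs. English corpus
    d2 = (s.f2 - s.f_eng) ** 2  # squared difference: document 2 vs. English corpus
    entity_presence = 0.5 if s.is_entity else 0.0
    noun_chunk_presence = 0.5 if s.is_noun_chunk else 0.0
    # Entities and noun chunks scale the weight up; plain n-grams keep a factor of 1.
    return base_multiplier * (d1 + d2) * (1 + entity_presence + noun_chunk_presence)


plain = NgramStats(0.003, 0.002, 0.001, is_entity=False, is_noun_chunk=False)
entity_chunk = NgramStats(0.003, 0.002, 0.001, is_entity=True, is_noun_chunk=True)
# The second weight is twice the first, because both 0.5 bonuses apply.
print(enriched_weight(plain, 1.0), enriched_weight(entity_chunk, 1.0))
```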

Figure 2.

Iteration of the analysis.

The intuition of the method is that it identifies topics that are common to the two text documents, but are not common in the English language. That means that the formula assigns higher weights to words that appear more frequently in both documents, but penalizes words that are frequent in other documents that are not related to the documents or topics that are being compared. The frequency of words in other documents is determined by their frequency in the English language.

For instance, words such as “is” or “the”, which are common to virtually any two English documents, will not be assigned high weights by the method, since these words are also common in the English language in general. As a result, high weights are assigned to topics that are relevant to the specific similarities between the two documents. Words that are less common in the English language but appear in both documents with relatively high frequency are assigned higher weights, and are therefore likely to be identified.

Because the formula is deterministic, and the reason for the weight assigned to each word is clear, the model is explainable. That is different from methods based on deep neural networks such as large language models, where the rules are highly complex and non-intuitive, and users or developers of these methods cannot fully understand how the output is generated. The results are visualized as a word cloud, where the size of each term reflects the weight assigned to it. That provides an intuitive and user-friendly visualization, as demonstrated in Section 3.
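Word-cloud libraries such as the Python `wordcloud` package (through `WordCloud.generate_from_frequencies`) take a mapping from terms to weights and handle sizing and layout internally. The sketch below shows only the sizing idea. It assumes a linear mapping from weight to font size between hypothetical bounds of 10 and 60 points, and drops n-grams with zero weight.

```python
def font_sizes(weights: dict[str, float], min_size: int = 10,
               max_size: int = 60) -> dict[str, int]:
    """Linearly map n-gram weights to font sizes; zero-weight n-grams are dropped."""
    kept = {term: w for term, w in weights.items() if w > 0}
    if not kept:
        return {}
    lo, hi = min(kept.values()), max(kept.values())
    span = (hi - lo) or 1.0  # avoid dividing by zero when all weights are equal
    return {term: round(min_size + (w - lo) / span * (max_size - min_size))
            for term, w in kept.items()}


# Hypothetical weights, for illustration only.
print(font_sizes({"relativity": 5.0, "universe": 3.0, "music": 1.0, "the": 0.0}))
# {'relativity': 60, 'universe': 35, 'music': 10}
```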

3. Experimental Results

The method was tested using a variety of text documents. While the focus was primarily on biographical texts and classical literature for testing, our methodology is not dependent on training or pre-existing knowledge, and therefore flexible enough to work with different types of content. This adaptability helps to handle different writing styles and different topics without the need to re-train the algorithm or adjust its parameters.

The main sources of the documents were Wikipedia articles and classical texts from Project Gutenberg. The biographies included the Wikipedia articles for Michael Jackson (9603 words), Lionel Messi (21,465 words), Cristiano Ronaldo (12,784 words), Stephen Hawking (9119 words), Taylor Swift (9174 words), Jeff Bezos (7279 words), and Albert Einstein (13,116 words). The classical texts included “War and Peace” (534,562 words) and “Anna Karenina” (353,828 words).

The results of the method were compared to the results generated by a large language model implemented by ChatGPT 3.5 [51] as provided by OpenAI. ChatGPT 3.5 is based on deep neural networks implementing large language models trained on very large amounts of text, primarily retrieved from the internet. The proposed method was also compared to the results of the commonly used Doc2Vec algorithm [52]. That was achieved by applying Doc2Vec word similarities to the two documents, with a vector size of 1000 and a window size of 50. The 50 words most similar to the first entity were identified and compared with the 50 words most similar to the second entity, in order to find the words that appear in both lists. Words that are among the most similar words to both entities, as determined by Doc2Vec, can be assumed to be words that are common to both entities.
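The list-comparison step can be sketched independently of Doc2Vec itself. The lists of nearest words could, for example, be obtained with gensim 4 from a model trained with `Doc2Vec(documents, vector_size=1000, window=50)` by calling `model.wv.most_similar(word, topn=50)`. Those calls and the training corpus format are assumptions. The sketch below only intersects two already-computed lists, using hypothetical inputs.

```python
def shared_neighbors(neighbors_a: list[str], neighbors_b: list[str],
                     k: int = 50) -> list[str]:
    """Return the words found in both top-k neighbor lists, in the order of list a."""
    top_b = set(neighbors_b[:k])
    return [w for w in neighbors_a[:k] if w in top_b]


# Hypothetical neighbor lists (normally the top 50 words returned by most_similar).
einstein_like = ["he", "relativity", "physics", "the", "princeton"]
hawking_like = ["black", "the", "physics", "he", "cambridge"]
print(shared_neighbors(einstein_like, hawking_like))  # ['he', 'physics', 'the']
```

Because nothing in this comparison penalizes frequent English words, function words such as “he” and “the” survive the intersection as readily as informative ones.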

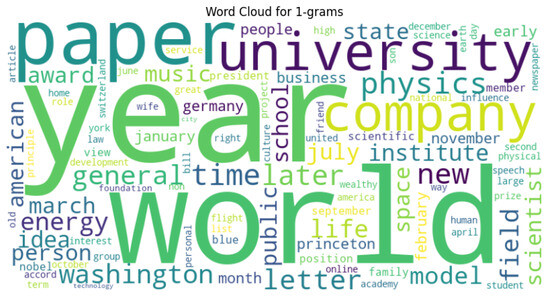

3.1. Comparison of Albert Einstein and Stephen Hawking

This experiment analyzes the linguistic patterns from the biographies of Stephen Hawking and Albert Einstein, sourced from their Wikipedia pages. Hawking’s biography comprises 9919 words, while Einstein’s contains 13,116 words. This comparison aims to highlight the distinct contributions of each physicist and the effectiveness of the n-gram analysis in capturing similarities between their biographies and scientific legacies.

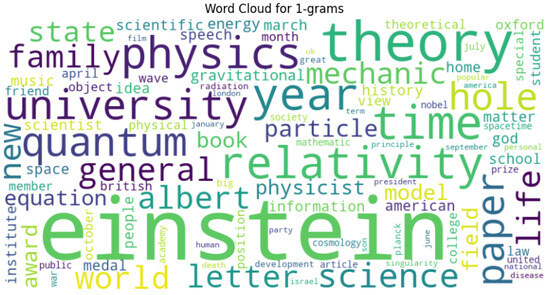

Figure 3 shows the word cloud of the results of the unigram analysis applied to the two documents. The unigram analysis reveals key terms such as “theory”, “universe”, “energy”, and “relativity”, highlighting Einstein’s work on relativity and Hawking’s work, which built on Einstein’s foundations and focused specifically on black holes. Terms such as “paper”, “scientific”, “physics”, and “university” reflect that both were scientists, and specifically physicists. Terms such as “energy” and “time” reveal their specific work related to physics. While these similarities between the two scientists are well known, a person who is not aware of their background can gain quick initial insights from the results. Words such as “divorce” and “music” are less trivial, but provide useful information, since both men went through a divorce, and both were very interested in classical music. The method therefore identifies these similarities automatically, without the need to read and memorize the facts mentioned in the documents and then match the facts that are common to both biographies.

Figure 3.

Word cloud for the results of the unigram analysis of the similarities between Stephen Hawking and Albert Einstein.

The word cloud also shows some terms that do not provide useful insights, such as “state” or “family”. Such terms receive high weights from the formula, but do not provide any useful insight, even for those who are not familiar with these scientists.

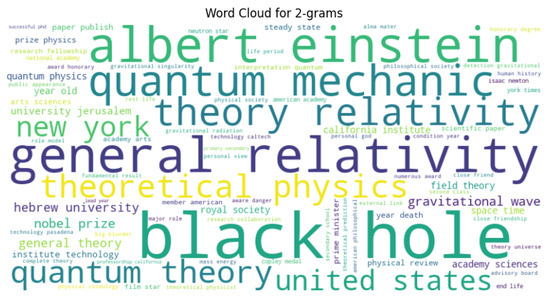

Figure 4 shows the word cloud after applying the bigram analysis. The analysis provides insights such as “general relativity”, “quantum mechanic”, and “black hole”, as these topics were clearly of interest to both scientists. “Nobel Prize” also appears; although Hawking did not win the award, he was mentioned as a candidate. Some academic societies in which both scientists were members are also shown.

Figure 4.

Word cloud for the bigram analysis of the similarities between Stephen Hawking and Albert Einstein.

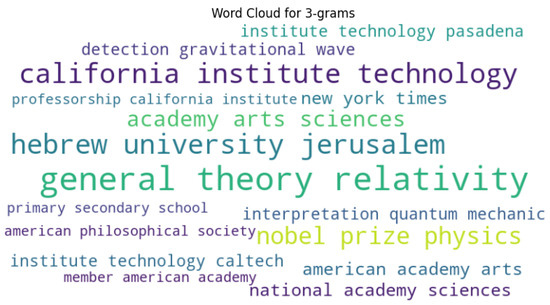

Figure 5 shows the resulting word cloud from the trigram analysis. As the figure shows, it highlights phrases such as “general theory relativity”, and several societies or institutions where the two spent time, such as Caltech or the Hebrew University of Jerusalem. Einstein was a founding father of the Hebrew University of Jerusalem, while Hawking lectured there, including during the university’s 70th anniversary in 2009. Both were elected to the American Philosophical Society (Einstein in 1937 and Hawking in 2008) for their significant scientific contributions. They were also both affiliated with the California Institute of Technology: Einstein was a visiting scholar there in the early 1930s, and Hawking advanced the understanding of cosmic phenomena such as black hole mergers. Caltech was also pivotal in the first detection of gravitational waves, which Einstein had predicted, in 2015.

Figure 5.

Word cloud of the trigram analysis for Stephen Hawking and Albert Einstein.

The results in Figure 3, Figure 4, and Figure 5 can be compared with the response of ChatGPT 3.5 when asked to describe the similarities between Stephen Hawking and Albert Einstein with the question “How do Stephen Hawking and Albert Einstein compare in terms of their contributions to physics?”. The summary produced by the ChatGPT large language model is the following:

“Stephen Hawking and Albert Einstein are famous physicists. Einstein is known for his theory of relativity, and Hawking is known for his work on black holes. They both made important contributions to physics.”

While this summary provides a basic overview that is correct, it mostly summarizes the work of each scientist. ChatGPT therefore can focus on the major similarities, but might struggle to identify specific details of the similarities that can be deduced by very careful reading of their brief biographies. The summary provided by the large language model therefore provides little direction for a user interested in exploring the similarities between the two persons.

When using the most similar words as determined by Doc2Vec, the words among the top 50 most similar words for both Einstein and Hawking are “He”, “his”, “the”, “and”, “from”, “by”, “it”, “new”, “that”, “first”, “physics”, “would”, “including”, “on”, “Jewish”, “were”, “is”, and “with”. That list mostly includes common English words that do not necessarily provide useful information. Since Doc2Vec does not penalize words that are frequent in the English language, the high frequency of these words can lead Doc2Vec to associate them with the entities, and therefore to select them. The word “physics” provides the information that both were physicists. The word “new” might indicate that both were innovative. The word “Jewish” also appears as a common word for both people, although Stephen Hawking was not Jewish. The male pronouns can indicate that both entities were people, and that both were men.

3.2. A Comparison Between Soccer Players Cristiano Ronaldo and Lionel Messi

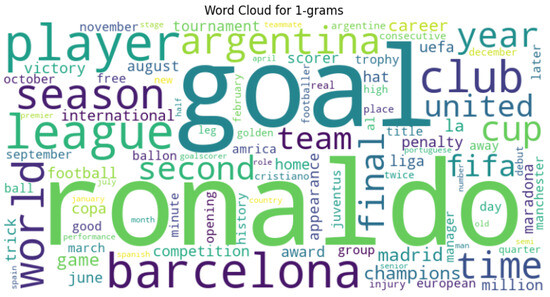

This experiment analyzes the patterns of similarities in the biographies of Cristiano Ronaldo and Lionel Messi, as taken from their Wikipedia articles. Figure 6 shows the word cloud that results from the 1-gram comparison between Messi and Ronaldo. As in the experiment with Einstein and Hawking, the insights gained from the n-gram analysis are also compared with those generated by the large language model provided by ChatGPT.

Figure 6.

Word cloud of the results of the unigram comparison between Cristiano Ronaldo and Lionel Messi.

Figure 6 shows the word cloud of the results of the unigram analysis of the similarities between Ronaldo and Messi. The analysis provides terms such as “goal”, “team”, “league”, “football”, “ball”, and “match”, showing the user that the two are soccer players. The analysis also shows the tournaments the two played in, as well as the countries and teams associated with them and the awards they received. For instance, the term “Ballon” reveals that the two hold records for Ballon d’Or wins and nominations. Naturally, soccer experts might not be surprised by these results, but they demonstrate that the method can identify similarities through terms that lead to insights about the players. The user can use such terms as starting points for obtaining further information about possible similarities they were not initially aware of.

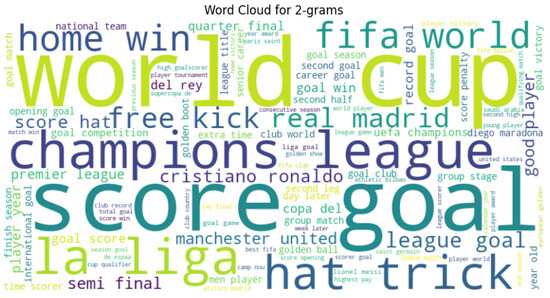

Figure 7 shows the word cloud of the bigram analysis, revealing terms related to soccer, including “Champions League” and “La Liga”, two leagues in which both soccer players played. Terms such as “total goals” and “club record” indicate that both players hold the scoring record for their respective soccer clubs. “World Cup”, “FIFA World”, and “del Rey” indicate some of the tournaments in which both players competed. “Diego Maradona” did not play at the same time as these players, but both were often compared to him. “Saudi Arabia” is mentioned because both players received offers to play in that country, and Ronaldo did play in the Saudi Arabian soccer league. “Golden Boot” is another term that the two players share, as both won that award. Terms such as “Manchester United”, “Real Madrid”, and “FC Barcelona” highlight the clubs for which the two players played, often against each other.

Figure 7.

Word cloud of the results of the bigram comparison between Cristiano Ronaldo and Lionel Messi.

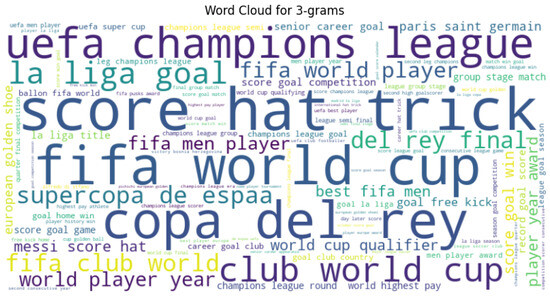

The trigram analysis of the two soccer players is shown in Figure 8. Phrases such as “Golden Boot winner” and “World Cup final” highlight achievements that are common to both players. “Copa del Rey”, “UEFA Champions Leagues”, and “FIFA World Cup” mostly refer to competitions in which both players played. Terms such as “highest paid player” refer to the fact that the two players were the highest-paid players during their careers. For a person not familiar with these two figures, that information can provide useful insights that can serve as a basis for a more thorough investigation.

Figure 8.

Word cloud of the trigram analysis of the similarity between Cristiano Ronaldo and Lionel Messi.

The large language model by ChatGPT was asked to provide a comparison between the two players with the question “How do Lionel Messi and Cristiano Ronaldo compare in terms of their football careers?”. It provided the following basic summary:

“Cristiano Ronaldo and Lionel Messi are two of the greatest football players of all time. Ronaldo is known for his athleticism, while Messi is known for his playmaking and dribbling. They have won numerous awards and broken many records in football.”

This summary provides a basic overview, and a quick summary of each of the two players. Yet, it lacks specific terminology and nuances critical for a comprehensive understanding of the specific achievements and career stages that are common to the two players. The large language model therefore provides a correct overview, but that overview does not provide insights that can be used for further and more detailed understanding of the similarities between the two subjects.

Analysis of the 50 most similar words to each player provided several words that appeared in both Messi’s and Ronaldo’s lists. These included words such as “a”, “on”, “at”, “the”, “number”, “player”, “him”, “in”, “became”, “for”, “by”, “shirt”, “years”, “their”, “with”, “Ronaldo’s”, and “new”. The words that appear in both lists give some indication that the two are athletes. However, since the list is saturated by words that are frequent in the English language and have no informative meaning, it does not provide many useful insights about the similarities between the two players.

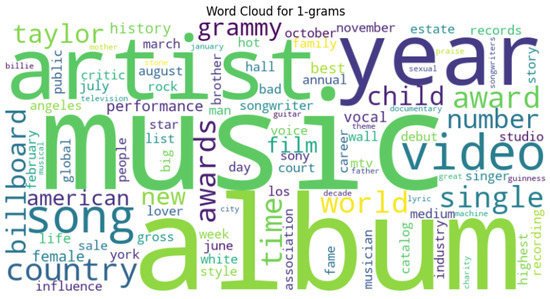

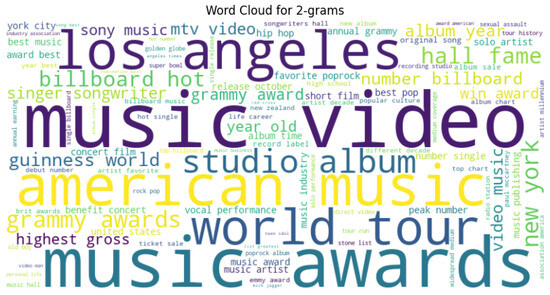

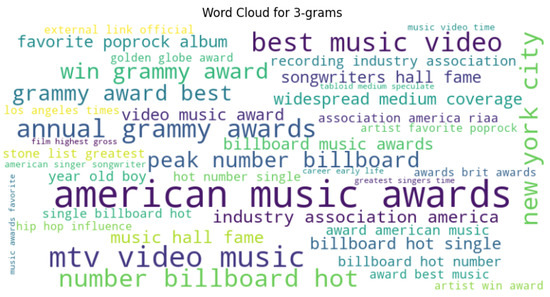

3.3. Comparison Between Pop Icons Michael Jackson and Taylor Swift

Figure 9 shows the word cloud resulting from comparing the Wikipedia articles describing Michael Jackson and Taylor Swift. Terms such as “music”, “singer”, “album”, “song”, “video”, “artist”, “performance”, “records”, “voice”, “studio”, and “mtv” indicate that the two are musicians and singers. Words such as “songwriter” identify another similarity, as both normally wrote their own songs. The word “court” indicates that both musicians were involved in legal affairs. The word “Sony” reveals that both musicians made major deals with Sony. The word “American” indicates that both singers share the same nationality. “Grammy” indicates that both musicians won the Grammy Award, which is another commonality between them. The word “bad” seems incidental, as both musicians had highly popular songs with the word “bad” in them.

Figure 9.

Word cloud of the unigram analysis of the similarity between Michael Jackson and Taylor Swift.

Figure 10 shows the resulting word cloud of the bigram analysis. “Grammy Award” and “Billboard Chart” are naturally common to two such successful musicians, as both won the Grammy Award and both had songs on the Billboard charts. The term “Los Angeles” reveals that the two musicians lived in the same city. The term “Guinness World” is another interesting insight, as both musicians hold numerous Guinness World Records. For instance, Jackson holds the record for being the best-selling artist of all time, while Swift holds, among others, the record for the highest-grossing music tour.

Figure 10.

Word cloud of the bigram analysis of the similarity between Michael Jackson and Taylor Swift.

The trigram analysis is shown in Figure 11. The diagram shows awards that both musicians won, and that are therefore common to both. These awards include the best video award, the Grammy Award, the Golden Globe, and the Songwriters Hall of Fame. These terms show that the analysis can identify similarities between documents in a manner that is non-parametric yet clear.

Figure 11.

Word cloud of the trigram analysis of the similarity between Michael Jackson and Taylor Swift.

When asked to compare the careers of Michael Jackson and Taylor Swift, ChatGPT generated the following basic summary:

“Michael Jackson was a legendary performer known as the King of Pop, and Taylor Swift is a singer-songwriter known for her storytelling. Jackson is famous for his iconic albums and dance moves, while Swift is recognized for her ability to connect with fans through her lyrics.”

While this summary provides a basic overview, it does not directly identify similarities between the two musicians, and in fact highlights their differences. A person who is not familiar with either musician would therefore struggle to begin a comprehensive analysis of their similarities based on this information alone.

Applying Doc2Vec to identify terms similar to both artists produced the following words: “has”, “year”, “a”, “2024”, “time”, and “the”. These generic words provide little information about the similarities between the artists. When applied to each artist separately, Doc2Vec does return relevant terms. For instance, the words most similar to Taylor Swift included “album”, “Nashville”, “woman”, “country”, “songs”, “Billboard”, “songwriter”, “musician”, “singer”, and “pop”. However, none of these words appeared among the top words similar to Michael Jackson in his Wikipedia article, and therefore Doc2Vec did not highlight them as similarities between the two artists.

3.4. Comparison of Albert Einstein and Jeff Bezos

The comparisons above were made between subjects that share known commonalities. To test a case in which the subjects do not share obvious commonalities, we compared Jeff Bezos to Albert Einstein, since a business figure such as Bezos is not expected to have much in common with a scientist such as Einstein. Figure 12 shows the unigram analysis of the similarities between Einstein and Bezos.

Figure 12.

Word cloud generated by the unigram analysis of the comparison between Albert Einstein and Jeff Bezos.

The analysis reveals similarities that are not necessarily well known. For instance, the term “Princeton” reflects that Jeff Bezos earned a Bachelor’s degree from Princeton University, and Einstein taught at that institution. The word “Germany” points to the fact that Einstein lived part of his early life in Germany, while Bezos has substantial business connections with Germany and is even a member of the Logistics Hall of Fame in Germany. The word “space” reflects that Einstein studied space, while Bezos has visited it and has substantial business interests in it. The word “American” identifies their common nationality. The word “music” indicates that Einstein was highly interested in classical music, while several songs have been written about Jeff Bezos, perhaps most notably by the musician Bo Burnham.

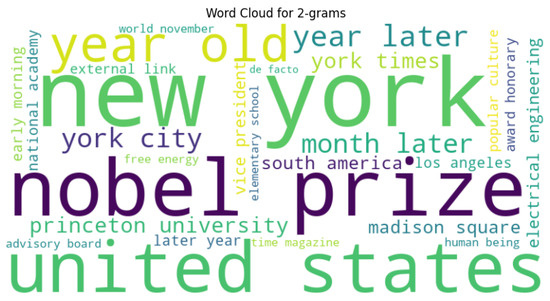

The bigram analysis displayed in Figure 13 shows terms that identify their nationality and places where the two lived, including “United States”, “Los Angeles”, and “New York”. The term “electrical engineering” reflects that Einstein had a revolutionary impact on that field, while Bezos studied in the Department of Electrical Engineering at Princeton University. Similarly, Einstein was awarded a Nobel Prize, while Bezos funded Nobel Prize winner Shinya Yamanaka to study longevity and aging. The term “National Academy” may also seem surprising, yet it points to a genuine similarity: Einstein was a member of the National Academy of Sciences, and Bezos is a member of the National Academy of Engineering.

Figure 13.

Word cloud generated by the bigram analysis of the comparison between Albert Einstein and Jeff Bezos.

To identify the similarities between Albert Einstein and Jeff Bezos, we asked ChatGPT “How do Albert Einstein and Jeff Bezos compare in terms of their careers and achievements?”, and it generated the following basic summary:

“Albert Einstein was a scientist known for his theories, and Jeff Bezos is the founder of Amazon. Einstein won awards for his work in physics, and Bezos is one of the richest people in the world because of Amazon.”

This summary offers only a brief description of each person and does not identify the similarities between them.

3.5. Comparison Between “War and Peace” and “Anna Karenina”

The proposed method can also be applied in the field of digital humanities, which studies the humanities with the aid of digital and computational tools. Beyond informative insights, the method can identify words and phrases that are shared by two different works yet uncommon in general English. This can reveal how the selection of words and phrases shapes the style of a book or an author.

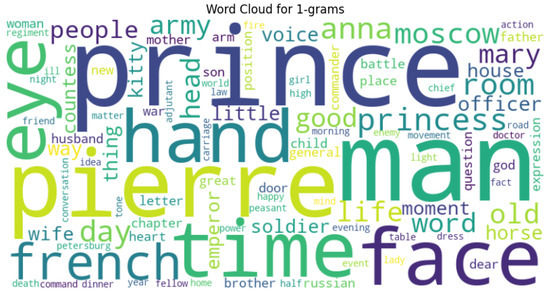

Here, we compared two of Tolstoy’s masterpieces: “War and Peace” and “Anna Karenina”. Figure 14 shows the word cloud of the unigram analysis. The word cloud includes words such as “prince”, which can be explained by characters in the two books: Prince Andrei Nikolayevich Bolkonsky in “War and Peace” and Prince Stepan Arkadyevich Oblonsky in “Anna Karenina”. The word “French” is expected because of the war against France in “War and Peace”, and because Anna Karenina often spoke French to display her social status.

Figure 14.

Word cloud generated by the unigram analysis of the comparison between Tolstoy’s “Anna Karenina” and “War and Peace”.

Some names are also common to both books. The name “Anna” appears in “War and Peace”, referring to the socialite Anna Pavlovna Scherer and to Princess Anna Mikhailovna Drubetskaya. The name “Pierre” refers to Pierre Bezukhov in “War and Peace” and to Petritsky in “Anna Karenina”, who is addressed as “Pierre”. While the shared names are expected, the shared words and phrases reveal similarities in style and in the concepts emphasized in the books, which might be of interest to humanists.

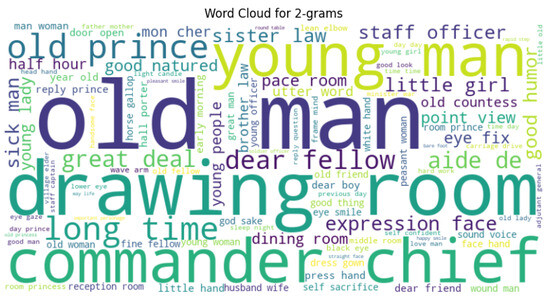

For instance, the bigram word cloud shown in Figure 15 highlights age-related terms such as “old man”, “young man”, “little girl”, “young girl”, “young lady”, “year old”, “young officer”, “old countess”, “old prince”, “young people”, and “old woman”, suggesting a focus on age in Tolstoy’s work. Similarly, the word cloud includes a large number of terms related to family relations or parts of a house, which might be of interest to those studying and profiling Tolstoy’s style.

Figure 15.

Word cloud generated by the bigram analysis of the comparison between Tolstoy’s “Anna Karenina” and “War and Peace”.

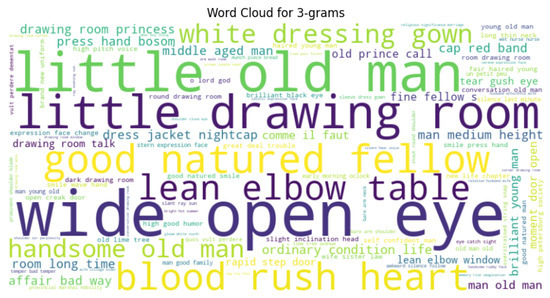

Figure 16 shows the trigram analysis, with word combinations that Tolstoy used in both books. Although these combinations do not necessarily provide information about the content of the two books, they show wording common to both and can reveal aspects of Tolstoy’s distinctive style. Such patterns are difficult to notice through manual reading, since most word combinations are common in English and are not unique to a particular author.

Figure 16.

Word cloud generated by the trigram analysis of the comparison between Tolstoy’s “Anna Karenina” and “War and Peace”.
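Most word combinations shared by two long books are generic. One simple way to discard the least informative candidates is to drop n-grams made up entirely of function words. This is shown here only as an illustrative heuristic, not as a step of the described method, which instead penalizes terms by their frequency in English; the stop-word list and function names are hypothetical.

```python
# Hypothetical, deliberately tiny stop-word list; a real system would use a
# complete list from an NLP toolkit.
STOP_WORDS = {"a", "an", "and", "at", "he", "her", "his", "in", "it", "of", "she", "the", "to", "was"}


def is_content_ngram(ngram, stop_words=STOP_WORDS):
    """Return True if at least one word of the n-gram is not a stop word."""
    return any(word not in stop_words for word in ngram.split())


def filter_ngrams(ngrams, stop_words=STOP_WORDS):
    """Drop n-grams made up entirely of stop words, preserving input order."""
    return [g for g in ngrams if is_content_ngram(g, stop_words)]


shared_trigrams = ["in the room", "at the same", "the old prince", "and it was"]
print(filter_ngrams(shared_trigrams))
# prints ['in the room', 'at the same', 'the old prince']
```

A phrase such as “at the same” survives this filter even though it is generic, which illustrates why a frequency-based penalty against general English is more discriminating than a fixed stop-word list.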

4. Conclusions

The ability to analyze similarities between two topics or subjects of interest can assist in knowledge extraction. Reading and comparing documents is time-consuming, which makes such comparisons difficult for humans to perform without automated assistance. When the documents are large, such comparisons are also limited by the memory of the person performing the task.

Here, we describe a method that performs this task in an explainable and non-parametric manner. It reads directly from the documents without the need to train a machine-learning system, and provides a list of terms that may point to similarities. While the list may include terms that do not reflect genuine similarities between the subjects, it also includes terms that lead to similarities that are difficult to identify manually. The method is general and can be applied to any kind of text.

The solution is based on a defined mathematical formula that assigns higher weight to terms that appear in both documents, but penalizes terms that are common in the English language regardless of the topic. The output of the method is therefore explainable, and the user can understand why each term was selected. This distinguishes it from solutions based on large language models, where the rules are data-driven and highly complex, and a user cannot practically understand why a certain answer is given. It also differs in this respect from Doc2Vec, which relies on a neural network. The proposed method is also distinct from the document comparison features commonly found in word processing applications, which aim to identify textual differences between documents rather than topics or patterns of similarity.
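The published formula is not reproduced in this section. As a hedged illustration of the behavior described above, the sketch below uses an assumed weight w(t) = c_A(t) · c_B(t) / (1 + E(t)), where c_A and c_B are the counts of term t in each document and E(t) is its frequency per million words in a general English reference corpus. The formula, the function name, and all counts and reference frequencies are hypothetical.

```python
from collections import Counter


def score_terms(counts_a, counts_b, english_freq):
    """Rank terms shared by two documents with an illustrative weight.

    Assumed formula (for illustration; not necessarily the published one):
        w(t) = c_a(t) * c_b(t) / (1 + E(t))
    c_a, c_b: counts of t in each document.
    E(t): frequency of t per million words in a general English corpus;
          terms absent from the table get E(t) = 0, i.e. no penalty.
    """
    weights = {}
    for term in counts_a.keys() & counts_b.keys():
        weights[term] = counts_a[term] * counts_b[term] / (1 + english_freq.get(term, 0.0))
    # Deterministic order: highest weight first, ties broken alphabetically.
    return sorted(weights.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical counts and reference frequencies, for illustration only.
counts_a = Counter({"the": 120, "grammy": 6, "sony": 3, "court": 2})
counts_b = Counter({"the": 150, "grammy": 9, "sony": 2, "tour": 5})
english_freq = {"the": 50000.0, "grammy": 2.0, "sony": 5.0}

ranked = score_terms(counts_a, counts_b, english_freq)
print([term for term, _ in ranked])  # prints ['grammy', 'sony', 'the']
```

Although “the” is by far the most frequent shared word, its high frequency in general English pushes it to the bottom of the ranking. Because each weight is a closed-form function of counts, every ranked term can be traced back to its frequencies. Terms missing from the reference table receive no penalty in this sketch, an assumption that favors rare names and technical terms.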

The output of the method is not a readable paragraph, but a set of weighted terms visualized as a word cloud. Each term can be considered a possible insight, which the user can explore further to learn the full nature of the similarity. In that sense, the proposed method is intended to assist in handling large amounts of text.
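Word-cloud tools render each term at a size that grows with its weight. The sketch below assumes a simple linear mapping onto a hypothetical font-size range of 10 to 80 points; the function name and the example weights are illustrative, and actual libraries may scale sizes differently.

```python
def font_sizes(weights, min_size=10, max_size=80):
    """Map term weights linearly onto a font-size range for a word cloud."""
    lo, hi = min(weights.values()), max(weights.values())
    span = hi - lo
    sizes = {}
    for term, weight in weights.items():
        # When all weights are equal, draw every term at the largest size.
        fraction = (weight - lo) / span if span else 1.0
        sizes[term] = round(min_size + fraction * (max_size - min_size))
    return sizes


print(font_sizes({"grammy award": 18.0, "billboard chart": 9.0, "los angeles": 0.0}))
# prints {'grammy award': 80, 'billboard chart': 45, 'los angeles': 10}
```

In Python, the `wordcloud` package can also render such a term-to-weight mapping directly through `WordCloud.generate_from_frequencies`, which applies its own size scaling.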

Large language models can also be used to analyze similarities between documents, but their output differs from the results of the analysis shown here. Large language models produce readable paragraphs, but it is difficult to understand how these models arrive at their output, and therefore difficult to assess how complete or comprehensive their analysis is.

The proposed method is limited to shared terms, and therefore cannot identify more complex patterns that require a deep understanding of the subjects being studied. It therefore cannot fully mimic the way humans identify similarities between subjects. The goal of the proposed method is to provide a tool that aids a person studying the similarities between two topics. In that sense, it can be used in combination with other methods to provide quick and explainable insights to the user. In its present form, the solution is limited to the English language, although it can be adapted to other languages by using similar NLP tools designed for each target language. Another limitation is that the performance of the method depends on NLP tools that perform tasks such as tokenization and lemmatization. These tools are not perfect, and their errors might affect the performance of the proposed method.
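The dependence on lemmatization can be illustrated with a toy example. The sketch below substitutes a crude, hypothetical plural-stripping rule for a real lemmatizer such as the one in spaCy, and shows both effects: normalization lets “songs” and “song” match, while an imperfect rule can create spurious matches. The function names and token lists are illustrative.

```python
def crude_lemma(word):
    """Strip a trailing plural 's' (hypothetical rule, far cruder than a real lemmatizer)."""
    if len(word) > 3 and word.endswith("s") and not word.endswith("ss"):
        return word[:-1]
    return word


def shared_terms(tokens_a, tokens_b, normalize=lambda w: w):
    """Return the sorted terms that occur in both token lists after normalization."""
    return sorted({normalize(w) for w in tokens_a} & {normalize(w) for w in tokens_b})


tokens_a = ["songs", "awards", "album"]
tokens_b = ["song", "award", "albums", "press"]

print(shared_terms(tokens_a, tokens_b))               # prints []
print(shared_terms(tokens_a, tokens_b, crude_lemma))  # prints ['album', 'award', 'song']
# An imperfect rule also errs: "news" becomes "new", which could produce a false match.
print(crude_lemma("news"))                            # prints new
```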

Author Contributions

Conceptualization, A.J., S.T.E. and L.S.; methodology, A.J. and L.S.; software, A.J.; validation, A.J.; formal analysis, A.J. and L.S.; investigation, A.J., S.T.E. and L.S.; resources, A.J., S.T.E. and L.S.; data curation, A.J.; writing—original draft preparation, A.J. and L.S.; writing—review and editing, A.J., S.T.E. and L.S.; visualization, A.J.; supervision, L.S.; project administration, L.S.; funding acquisition, L.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded in part by the USA National Science Foundation grant number 2148878.

Data Availability Statement

The code that implements the method is available at https://github.com/AkhilJoshi15/Language_Pattern_Analyzer (accessed on 24 February 2025).

Acknowledgments

We would like to thank the three knowledgeable reviewers for their insightful comments.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Kowsari, K.; Jafari Meimandi, K.; Heidarysafa, M.; Mendu, S.; Barnes, L.; Brown, D. Text classification algorithms: A survey. Information 2019, 10, 150.

- Gasparetto, A.; Marcuzzo, M.; Zangari, A.; Albarelli, A. A survey on text classification algorithms: From text to predictions. Information 2022, 13, 83.

- Minaee, S.; Kalchbrenner, N.; Cambria, E.; Nikzad, N.; Chenaghlu, M.; Gao, J. Deep learning–based text classification: A comprehensive review. ACM Comput. Surv. (CSUR) 2021, 54, 1–40.

- Yu, B. An evaluation of text classification methods for literary study. Lit. Linguist. Comput. 2008, 23, 327–343.

- Bar-Hillel, Y. The present status of automatic translation of languages. Adv. Comput. 1960, 1, 91–163.

- Ablanedo, J.; Aiken, M.; Vanjani, M. Efficacy of English to Spanish automatic translation. Int. J. Inf. Oper. Manag. Educ. 2007, 2, 194–210.

- Campr, M.; Ježek, K. Comparing semantic models for evaluating automatic document summarization. In Proceedings of the 18th International Conference on Text, Speech, and Dialogue, Pilsen, Czech Republic, 14–17 September 2015; Springer: Berlin/Heidelberg, Germany, 2015; pp. 252–260.

- Carenini, G.; Cheung, J.C.K.; Pauls, A. Multi-document summarization of evaluative text. Comput. Intell. 2013, 29, 545–576.

- Ma, C.; Zhang, W.E.; Guo, M.; Wang, H.; Sheng, Q.Z. Multi-document summarization via deep learning techniques: A survey. ACM Comput. Surv. 2022, 55, 1–37.

- Wu, Y. Large Language Model and Text Generation. In Natural Language Processing in Biomedicine: A Practical Guide; Springer: Berlin/Heidelberg, Germany, 2024; pp. 265–297.

- Parker, A.; Hamblen, J.O. Computer algorithms for plagiarism detection. IEEE Trans. Educ. 1989, 32, 94–99.

- Foltýnek, T.; Meuschke, N.; Gipp, B. Academic plagiarism detection: A systematic literature review. ACM Comput. Surv. (CSUR) 2019, 52, 1–42.

- Naik, R.R.; Landge, M.B.; Mahender, C.N. A review on plagiarism detection tools. Int. J. Comput. Appl. 2015, 125, 16–22.

- Chandere, V.; Satish, S.; Lakshminarayanan, R. Online plagiarism detection tools in the digital age: A review. Ann. Rom. Soc. Cell Biol. 2021, 25, 7110–7119.

- Kulkarni, S.; Govilkar, S.; Amin, D. Analysis of plagiarism detection tools and methods. In Proceedings of the 4th International Conference on Advances in Science & Technology (ICAST2021), Bahir Dar, Ethiopia, 27–29 August 2021.

- Ahlgren, P.; Colliander, C. Document–document similarity approaches and science mapping: Experimental comparison of five approaches. J. Inf. 2009, 3, 49–63.

- Milios, E.; Zhang, Y.; He, B.; Dong, L. Automatic term extraction and document similarity in special text corpora. In Proceedings of the Sixth Conference of the Pacific Association for Computational Linguistics, Sapporo, Japan, 7–12 July 2003; Citeseer: University Park, PA, USA, 2003; pp. 275–284.

- Bhattacharya, P.; Ghosh, K.; Pal, A.; Ghosh, S. Legal case document similarity: You need both network and text. Inf. Process. Manag. 2022, 59, 103069.

- Chen, H.; Wu, L.; Chen, J.; Lu, W.; Ding, J. A comparative study of automated legal text classification using random forests and deep learning. Inf. Process. Manag. 2022, 59, 102798.

- Bochkay, K.; Brown, S.V.; Leone, A.J.; Tucker, J.W. Textual analysis in accounting: What’s next? Contemp. Account. Res. 2023, 40, 765–805.

- Bezdan, T.; Stoean, C.; Naamany, A.A.; Bacanin, N.; Rashid, T.A.; Zivkovic, M.; Venkatachalam, K. Hybrid fruit-fly optimization algorithm with k-means for text document clustering. Mathematics 2021, 9, 1929.

- Shamir, L. UDAT: Compound quantitative analysis of text using machine learning. Digit. Scholarsh. Humanit. 2021, 36, 187–208.

- Swisher, C.; Shamir, L. A data science and machine learning approach to continuous analysis of Shakespeare’s plays. J. Data Min. Digit. Humanit. 2023, 2023, 10829.

- Prasetya, D.D.; Wibawa, A.P.; Hirashima, T. The performance of text similarity algorithms. Int. J. Adv. Intell. Inform. 2018, 4, 63–69.

- Wang, J.; Li, G.; Feng, J. Fast-join: An efficient method for fuzzy token matching based string similarity join. In Proceedings of the IEEE 27th International Conference on Data Engineering, Hannover, Germany, 11–16 April 2011; IEEE: New York, NY, USA, 2011; pp. 458–469.

- Levenshtein, V.I. Binary codes capable of correcting spurious insertions and deletions of ones. Probl. Inf. Transm. 1965, 1, 8–17.

- Damerau, F.J. A technique for computer detection and correction of spelling errors. Commun. ACM 1964, 7, 171–176.

- McGinnis, S.; Madden, T.L. BLAST: At the core of a powerful and diverse set of sequence analysis tools. Nucleic Acids Res. 2004, 32, W20–W25.

- Meng, L.; Huang, R.; Gu, J. A review of semantic similarity measures in wordnet. Int. J. Hybrid Inf. Technol. 2013, 6, 1–12.

- Rada, R.; Mili, H.; Bicknell, E.; Blettner, M. Development and application of a metric on semantic nets. IEEE Trans. Syst. Man Cybern. 1989, 19, 17–30.

- Huedo-Medina, T.B.; Sánchez-Meca, J.; Marín-Martínez, F.; Botella, J. Assessing heterogeneity in meta-analysis: Q statistic or I² index? Psychol. Methods 2006, 11, 193.

- Tversky, A. Features of similarity. Psychol. Rev. 1977, 84, 327.

- Landauer, T.K.; Dumais, S.T. A solution to Plato’s problem: The latent semantic analysis theory of acquisition, induction, and representation of knowledge. Psychol. Rev. 1997, 104, 211.

- Gabrilovich, E.; Markovitch, S. Computing semantic relatedness using Wikipedia-based explicit semantic analysis. In Proceedings of the International Joint Conference on Artificial Intelligence, Hyderabad, India, 6–12 January 2007; Volume 7, pp. 1606–1611.

- Kuipers, P. Empowerment in community-based rehabilitation and disability-inclusive development. Disabil. CBR Incl. Dev. 2013, 24, 24–42.

- Alqahtani, A.; Alhakami, H.; Alsubait, T.; Baz, A. A survey of text matching techniques. Eng. Technol. Appl. Sci. Res. 2021, 11, 6656–6661.

- Xinwu, L. A new text clustering algorithm based on improved K-means. J. Softw. 2012, 7, 95–101.

- Ghaemi, R.; Sulaiman, M.N.; Ibrahim, H.; Mustapha, N. A survey: Clustering ensembles techniques. World Acad. Sci. Eng. Technol. 2009, 50, 636–645.

- Basha, M.J.; Kaliyamurthie, K. An improved similarity matching based clustering framework for short and sentence level text. Int. J. Electr. Comput. Eng. 2017, 7, 551.

- Kulkarni, M.; Kulkarni, S. Knowledge discovery in text mining using association rule extraction. Int. J. Comput. Appl. 2016, 143, 30–35.

- Manimaran, J.; Velmurugan, T. A survey of association rule mining in text applications. In Proceedings of the IEEE International Conference on Computational Intelligence and Computing Research, Enathi, India, 26–28 December 2013; IEEE: New York, NY, USA, 2013; pp. 1–5.

- Oliinyk, A.; Subbotin, S. A stochastic approach for association rule extraction. Pattern Recognit. Image Anal. 2016, 26, 419–426.

- Sidorov, G.; Velasquez, F.; Stamatatos, E.; Gelbukh, A.; Chanona-Hernández, L. Syntactic n-grams as machine learning features for natural language processing. Expert Syst. Appl. 2014, 41, 853–860.

- Robertson, A.M.; Willett, P. Applications of n-grams in textual information systems. J. Doc. 1998, 54, 48–67.

- Damashek, M. Gauging similarity with n-grams: Language-independent categorization of text. Science 1995, 267, 843–848.

- Silva, J.F.; Cunha, J.C. How large corpora sizes influence the distribution of low frequency text n-grams. In Proceedings of the Pacific-Asia Conference on Knowledge Discovery and Data Mining, Taipei, Taiwan, 7–10 May 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 210–222.

- Schmitt, X.; Kubler, S.; Robert, J.; Papadakis, M.; LeTraon, Y. A replicable comparison study of NER software: StanfordNLP, NLTK, OpenNLP, SpaCy, Gate. In Proceedings of the Sixth International Conference on Social Networks Analysis, Management and Security (SNAMS), Granada, Spain, 22–25 October 2019; IEEE: New York, NY, USA, 2019; pp. 338–343.

- Altinok, D. Mastering spaCy: An End-to-End Practical Guide to Implementing NLP Applications Using the Python Ecosystem; Packt Publishing Ltd.: Birmingham, UK, 2021.

- Fantechi, A.; Gnesi, S.; Livi, S.; Semini, L. A spaCy-based tool for extracting variability from NL requirements. In Proceedings of the 25th ACM International Systems and Software Product Line Conference, Leicester, UK, 6–11 September 2021; Volume B, pp. 32–35.

- Davies, M. The Corpus of Contemporary American English as the first reliable monitor corpus of English. Lit. Linguist. Comput. 2010, 25, 447–464.

- OpenAI. ChatGPT (3.5 Version) [Large Language Model]. 2023. Available online: https://chatgpt.com/g/g-F00faAwkE-open-a-i-gpt-3-5 (accessed on 1 December 2024).

- Le, Q.; Mikolov, T. Distributed representations of sentences and documents. In Proceedings of the International Conference on Machine Learning, PMLR, Beijing, China, 21–26 June 2014; pp. 1188–1196.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).