1. Introduction

Multiprocessor systems and data-intensive tasks require scheduling methods that account for both computation and communication. Divisible Load Theory (DLT) provides a linear and closed-form framework to divide workloads into fractions and to optimally distribute them across processors and links [1]. Over three decades, DLT has been applied to parallel and distributed computing, cloud systems, and sensor networks, showing its usefulness as a performance modeling tool. Its strength lies in its ability to offer closed-form analytical results that explicitly capture the effect of communication delay, bandwidth, and load partitioning, making it one of the few theories that describe distributed performance quantitatively [2,3,4].

Parallel speedup laws give another view of scalability. Amdahl’s Law [5] emphasizes the constraint of serial fractions, while Gustafson’s Law [6] shifts the focus to problem scaling with processor count. Juurlink extended Gustafson’s model by including heterogeneity effects [7]. Embedding these laws into DLT creates an integrated framework that links workload distribution with scaling analysis, offering a more realistic picture of parallel performance [8].

Modern multicore architectures add further complexity. Symmetric, asymmetric, and dynamic designs change how workloads are balanced and how theoretical speedups are achieved [9,10,11,12]. Combining DLT with these speedup laws under multicore designs enables the evaluation of scheduling strategies in terms of both efficiency and scalability.

The objective of this study is to develop such integrated models. We analyze how DLT interacts with Gustafson’s and Juurlink’s laws and extend the framework to multicore environments. Results demonstrate how load distribution strategies and processor organization influence achievable speedup, providing insights for scheduling in multicore and cloud systems. Ultimately, this work bridges idealized scaling laws and realistic distributed computation, contributing a unified mathematical perspective for analyzing performance in modern heterogeneous systems.

Our contributions in this work:

We integrate Divisible Load Theory (DLT) with Gustafson’s and Juurlink’s speedup laws, providing a unified framework that links workload distribution with scalability analysis.

We extend DLT to symmetric, asymmetric, and dynamic virtual multicore designs, showing how processor configuration and scheduling strategies affect achievable speedup.

We present numerical evaluations that illustrate how different load distribution models interact with scaling laws, offering practical insights for scheduling in multicore and cloud environments.

2. Related Works

Divisible Load Theory (DLT) has evolved over three decades as a foundational analytical tool for modeling distributed computation and communication delays. Early studies established its linear partitioning framework and explored extensions to heterogeneous systems and multi-installment scheduling [1,2,3,4]. Building on this foundation, Cao, Wu, and Robertazzi [8] demonstrated that Amdahl-like laws can be effectively integrated with DLT to provide more realistic speedup estimations.

Recent research has further diversified its applications: Wang et al. [13] extended DLT from fine-grained to coarse-grained divisible workloads on networked systems, while Kazemi et al. [14] applied DLT to fog computing environments with linear and nonlinear load models to optimize resource allocation. At a more experimental level, Chinnappan et al. [15] validated a multi-installment DLT-based scheduling strategy through synthetic aperture radar (SAR) image reconstruction on distributed clusters, bridging the gap between theoretical analysis and practical deployment.

Together, these studies reinforce DLT’s analytical role in understanding communication-bounded performance and scalability.

Beyond chip-level modeling, recent studies have explored communication–computation overlapping in large-scale numerical solvers [16] and task mapping in heterogeneous architectures for visual systems [17]. In parallel, reliability-aware scheduling and task offloading for distributed edge and UAV-assisted computing continue to highlight the broader challenge of balancing computation and communication under non-ideal conditions [18]. These diverse efforts share the same analytical motivation as the present study: to quantify scalability through explicit modeling of delay, heterogeneity, and architectural design.

3. Divisible Load Theory Models

3.1. Real-World Applicability of Divisible Load Theory

Although Divisible Load Theory (DLT) is an analytical framework, it was originally motivated by the need to model realistic computing and communication processes in distributed systems [4]. It is mathematically tractable yet captures key performance behaviors observed in distributed and parallel systems. Rather than serving as an empirical model, it offers analytical insight into how computation and communication jointly determine system efficiency under various network and processor configurations. Its practical relevance can be summarized in several aspects.

First, DLT inherently captures the combined effects of computation and communication, allowing designers to analyze heterogeneous processors, link speeds, and topological constraints in a unified manner.

Second, the linearity of the model enables closed-form solutions that remain valid under latency, propagation delay, and finite link capacity, providing quantitative insight into performance saturation in congested networks.

Third, DLT has been extended to include time-varying loads and stochastic effects. Previous studies have shown that DLT-based analytical predictions closely match simulation and experimental trends in distributed systems, typically within single-digit percentage deviations [2,3].

Finally, its equivalence with circuit and queuing models makes it suitable for hybrid analysis of networks exhibiting nonlinear transmission characteristics.

Together, these attributes make DLT not only a mathematical abstraction but also a framework that remains robust under real-world constraints such as congestion, link asymmetry, and resource variability.

3.2. Preliminaries

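We consider a single-level tree (star) network consisting of a root processor $p_0$ and m child processors $p_1, \ldots, p_m$, as illustrated in Figure 1.

The total workload is arbitrarily divisible. Processor i receives fraction $\alpha_i$ of the load, with

$$\sum_i \alpha_i = 1.$$

The inverse processing speed of processor i is denoted $w_i$, and the inverse link speed is $z_i$. Constants $T_{cp}$ and $T_{cm}$ scale computation and communication. The computation and communication times are

$$T_i^{\mathrm{comp}} = \alpha_i w_i T_{cp}, \qquad T_i^{\mathrm{comm}} = \alpha_i z_i T_{cm}.$$

The finishing time $T_f$ is when the slowest processor completes. The baseline sequential time $T_{\mathrm{seq}}$ gives the DLT speedup

$$S_{\mathrm{DLT}} = \frac{T_{\mathrm{seq}}}{T_f}.$$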

These expressions follow the classical DLT formulation introduced by Agrawal and Jagadish [1], where workloads are treated as continuously divisible and communication delays are explicitly modeled. The framework has since been extended to heterogeneous and sensor-driven systems [2,3] and formally summarized in recent texts on distributed scheduling [4,19].

DLT incorporates three main distribution strategies.

3.3. Sequential Distribution (SDLT1)

In SDLT1, the root distributes the load to one child at a time; each child starts computing only after receiving its full load fraction. Load balance requires (see [4], Section 5.2.1):

Normalization $\sum_i \alpha_i = 1$ closes the system. The equivalent processing rate is

For a homogeneous system ($w_i = w$, $z_i = z$),
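The sequential-distribution idea can be illustrated numerically. The sketch below is not taken from the paper: it assumes that the root computes its own fraction while distributing, that all processors finish at the same instant, and that the symbols follow the usual DLT convention (`w[i]` and `z[i]` as inverse processor and link speeds, `t_cp` and `t_cm` as computation and communication intensities). The function names and sample values are hypothetical.

```python
def sdlt1_fractions(w, z, t_cp=1.0, t_cm=1.0):
    """Load fractions and speedup for sequential distribution (sketch).

    w[i]: inverse processing speed of processor i (w[0] is the root).
    z[i]: inverse link speed to child i (z[0] is unused).
    Assumed balance recursion (all processors stop together):
        alpha[i+1] * (z[i+1]*t_cm + w[i+1]*t_cp) = alpha[i] * w[i] * t_cp
    """
    raw = [1.0]  # unnormalized fraction of the root
    for i in range(1, len(w)):
        raw.append(raw[-1] * w[i - 1] * t_cp / (z[i] * t_cm + w[i] * t_cp))
    total = sum(raw)
    alpha = [a / total for a in raw]
    finish = alpha[0] * w[0] * t_cp      # the root computes for the whole makespan
    speedup = (w[0] * t_cp) / finish     # baseline: the root processes everything
    return alpha, speedup


def finish_times(alpha, w, z, t_cp=1.0, t_cm=1.0):
    """Finish time of every processor under one-at-a-time distribution."""
    times, sent = [alpha[0] * w[0] * t_cp], 0.0
    for i in range(1, len(w)):
        sent += alpha[i] * z[i] * t_cm   # child i waits for all earlier transfers
        times.append(sent + alpha[i] * w[i] * t_cp)
    return times


if __name__ == "__main__":
    w = [1.0, 1.0, 1.0]   # root plus two children (hypothetical values)
    z = [0.0, 1.0, 1.0]
    alpha, s = sdlt1_fractions(w, z)
    print(alpha, s)
    print(finish_times(alpha, w, z))
```

Under these assumptions and unit parameters, each additional child receives half the fraction of its predecessor, so the speedup stays below 2 no matter how many children are added, which is one way to see the root bottleneck.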

3.4. Simultaneous Distribution, Staggered Start (SDLT2)

In SDLT2, the root sends to all children simultaneously; each child starts computing only after receiving its load. Timing relations are

Defining

the closed-form speedup is

For a homogeneous system,
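A minimal numerical sketch of simultaneous distribution with staggered start follows. It rests on assumptions that are not stated in the text: child i finishes at `alpha[i] * (z[i]*t_cm + w[i]*t_cp)`, the root finishes at `alpha[0] * w[0] * t_cp`, and all finish together. The helper name `sdlt2_speedup` and the parameter values are illustrative only.

```python
def sdlt2_speedup(w, z, t_cp=1.0, t_cm=1.0):
    """Speedup for simultaneous distribution, staggered start (sketch).

    w[0] is the root; z[0] is unused. Each child first receives its
    whole fraction, then computes; transfers to different children
    run concurrently.
    """
    # k[i-1] = alpha[i] / alpha[0] for child i, from equal finish times
    k = [w[0] * t_cp / (z[i] * t_cm + w[i] * t_cp) for i in range(1, len(w))]
    alpha0 = 1.0 / (1.0 + sum(k))
    alpha = [alpha0] + [alpha0 * ki for ki in k]
    return alpha, 1.0 / alpha0   # speedup = (w0*t_cp) / (alpha0*w0*t_cp)


if __name__ == "__main__":
    for m in (1, 2, 4, 8):       # number of children (hypothetical)
        w = [1.0] * (m + 1)
        z = [0.0] + [1.0] * m
        _, s = sdlt2_speedup(w, z)
        print(m, s)              # equals 1 + m/2 for these unit parameters
```

In the homogeneous case every child contributes the same ratio, so under these assumptions the speedup grows linearly in the number of children, with a slope set by the communication-to-computation ratio.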

3.5. Simultaneous Distribution, Simultaneous Start (SDLT3)

In SDLT3, each child processor begins computation as soon as the first portion of its assigned load arrives, allowing full overlap between communication and computation. The load balance equations for this model are

with normalization

. Solving recursively yields

The corresponding speedup for a single-level tree is then

where

. For the homogeneous case (

) and negligible communication delay, this reduces to
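The overlap idea can be sketched as follows. The model used here is an assumption made for illustration, not the paper's formulation: with full overlap, child i is taken to need `alpha[i] * max(z[i]*t_cm, w[i]*t_cp)`, so the slower of its link and its processor sets the pace, and all processors are assumed to finish together. The name `sdlt3_speedup` and the sample values are hypothetical.

```python
def sdlt3_speedup(w, z, t_cp=1.0, t_cm=1.0):
    """Speedup for simultaneous distribution, simultaneous start (sketch).

    w[0] is the root; z[0] is unused. Communication and computation of
    each child are assumed to overlap completely.
    """
    # time per unit of load for each child under full overlap (assumption)
    pace = [max(z[i] * t_cm, w[i] * t_cp) for i in range(1, len(w))]
    k = [w[0] * t_cp / u for u in pace]          # alpha[i] / alpha[0]
    alpha0 = 1.0 / (1.0 + sum(k))
    alpha = [alpha0] + [alpha0 * ki for ki in k]
    return alpha, 1.0 / alpha0


if __name__ == "__main__":
    m = 4                                        # number of children (hypothetical)
    w = [1.0] * (m + 1)
    fast_links = [0.0] + [0.1] * m               # computation-bound children
    slow_links = [0.0] + [2.0] * m               # communication-bound children
    print(sdlt3_speedup(w, fast_links)[1])       # m + 1 = 5.0
    print(sdlt3_speedup(w, slow_links)[1])       # 1 + m/2 = 3.0
```

When links are faster than processors, this sketch gives a speedup of m + 1 in the homogeneous case; slower links reduce it.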

3.6. DLT Summary

The three models differ in their treatment of communication and overlap:

SDLT1 suffers from the root bottleneck and scales poorly.

SDLT2 eliminates sequential transmissions, giving linear scaling in homogeneous systems.

SDLT3 achieves the closest to ideal linear speedup under overlapping assumptions.

All formulas above are complete for both heterogeneous and homogeneous systems, and can be directly used in subsequent integrations with speedup laws.

4. Integration with Classical Speedup Laws

Classical laws, including those of Amdahl, Gustafson, and Juurlink, split a workload into serial and parallel parts and then ask how speedup scales [5,6,7]. The usual input is the processor count p, which implicitly assumes that p processors deliver close to p times the performance of one. In real systems, this is not true: communication, load imbalance, and heterogeneity all cause losses. DLT provides a system-aware speedup that includes these losses. Our approach follows the integration concept proposed by Cao, Wu, and Robertazzi [8], replacing p with the DLT speedup $S_{\mathrm{DLT}}$ so that the laws preserve their intuition but reflect realistic constraints. Similar considerations about practical scalability in multicore design have also been emphasized by Hill and Marty [11,12].

4.1. Amdahl’s Law with DLT

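Amdahl assumes a fixed-size problem with parallel fraction f [5]:

$$S_{\mathrm{A}}(p) = \frac{1}{(1-f) + \dfrac{f}{p}}.$$

We replace p by the effective speedup from DLT, $S_{\mathrm{DLT}}$:

$$S_{\mathrm{A,DLT}} = \frac{1}{(1-f) + \dfrac{f}{S_{\mathrm{DLT}}}}.$$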

This keeps the serial/parallel split but removes the ideal-core assumption, making Amdahl’s framework compatible with realistic distributed and multicore environments.
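As a quick arithmetic illustration of this substitution in Amdahl's formula, take a parallel fraction of 0.9, 16 processors, and a DLT speedup of 10. These values are hypothetical and chosen only to show the size of the effect:

```latex
\begin{align*}
\text{ideal count } (p = 16):\quad
  & \frac{1}{(1 - 0.9) + 0.9/16} = \frac{1}{0.15625} = 6.4, \\
\text{DLT-aware } (\text{effective speedup } 10):\quad
  & \frac{1}{(1 - 0.9) + 0.9/10} = \frac{1}{0.19} \approx 5.26.
\end{align*}
```

The serial term is unchanged; only the parallel term grows, because the 16 processors are assumed to deliver an effective parallelism of 10 rather than 16.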

4.2. Gustafson’s Law with DLT

Gustafson points out that problem size typically scales with resources [6]. The scaled-speedup form follows from fixing the normalized p-core time to 1 and letting the parallel work grow with p, yielding

$$S_{\mathrm{G}}(p) = (1-f) + f\,p,$$

as derived from the ratio $T_1/T_p$ with a scaled parallel term. Substituting $p \to S_{\mathrm{DLT}}$ gives

$$S_{\mathrm{G,DLT}} = (1-f) + f\,S_{\mathrm{DLT}}.$$

This keeps the scaled-workload interpretation but grounds the scaling in measured distribution efficiency rather than a raw core count.

4.3. Juurlink’s General Law (GSEE) with DLT

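Juurlink generalizes the growth of the parallel portion via a scale function $\mathrm{scale}(p)$ with bounds $1 \le \mathrm{scale}(p) \le p$; it serves as a middle ground between Amdahl (constant) and Gustafson (linear) [7]. A common example is $\mathrm{scale}(p) = \sqrt{p}$, which captures diminishing returns. From the same $T_1/T_p$ construction one obtains the generalized scaled speedup equation (GSEE):

$$S_{\mathrm{J}}(p) = \frac{(1-f) + f\,\mathrm{scale}(p)}{(1-f) + \dfrac{f\,\mathrm{scale}(p)}{p}},$$

which reduces to Amdahl for $\mathrm{scale}(p) = 1$ and to Gustafson for $\mathrm{scale}(p) = p$. Replacing p by $S_{\mathrm{DLT}}$ yields the DLT-aware form:

$$S_{\mathrm{J,DLT}} = \frac{(1-f) + f\,\mathrm{scale}(S_{\mathrm{DLT}})}{(1-f) + \dfrac{f\,\mathrm{scale}(S_{\mathrm{DLT}})}{S_{\mathrm{DLT}}}}.$$

This links the shape of scaling (via $\mathrm{scale}(\cdot)$) with the achievable parallelism under communication/heterogeneity (via $S_{\mathrm{DLT}}$).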
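The relationship between the three laws can be checked with a few lines of code. The sketch assumes the usual GSEE form, ((1 - f) + f·scale(p)) / ((1 - f) + f·scale(p)/p), with a square-root scale function as the default. The function names and the numeric values, including the effective speedup `s_dlt`, are hypothetical.

```python
import math


def gsee(f, p, scale=math.sqrt):
    """Generalized scaled speedup for parallel fraction f and (effective) count p."""
    g = scale(p)
    return ((1.0 - f) + f * g) / ((1.0 - f) + f * g / p)


def amdahl(f, p):
    """GSEE with a constant scale function (fixed-size workload)."""
    return gsee(f, p, scale=lambda _: 1.0)


def gustafson(f, p):
    """GSEE with a linear scale function (workload grows with p)."""
    return gsee(f, p, scale=lambda q: q)


if __name__ == "__main__":
    f, p, s_dlt = 0.9, 16.0, 10.0   # hypothetical values
    for name, law in (("Amdahl", amdahl), ("Juurlink", gsee), ("Gustafson", gustafson)):
        # first column: ideal processor count; second: DLT-style effective value
        print(name, law(f, p), law(f, s_dlt))
```

Passing an effective speedup in place of the raw count is all the substitution requires; the law itself is left untouched.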

4.4. Why Replace p?

The reason is practical and direct:

Realism. p implicitly means “about p times the performance,” which is not achievable once communication and imbalance are involved. $S_{\mathrm{DLT}}$ is an attainable speedup that already accounts for distribution cost and overlap.

Model continuity. Different DLT policies (SDLT1–3) give different $S_{\mathrm{DLT}}$; the laws remain the same algebraically, so we can compare policies without changing the law itself.

Parameter transparency. The resulting formulas retain f (serial/parallel mix) while adding measurable system parameters through $S_{\mathrm{DLT}}$.

This substitution is the glue between classical laws and system-level constraints. It retains the clarity of Amdahl/Gustafson/Juurlink while making the predictions match real multicore and network systems.

4.5. Bridging Theoretical and Architectural Limits

This study is divided into two tightly connected parts, each addressing a different layer of performance limitation in multicore systems.

The first part establishes a baseline model by integrating Divisible Load Theory (DLT) with the classical speedup laws of Amdahl, Gustafson, and Juurlink. DLT and the speedup laws approach scalability from two complementary perspectives: DLT quantifies system-level bottlenecks introduced by communication and scheduling, while speedup laws describe computational scaling under idealized assumptions. By embedding DLT into the structure of the classical laws, the model captures both scheduling constraints and parallel scalability within a unified analytical framework. This section therefore defines the theoretical ceiling of multicore performance when both computation and communication are taken into account.

The second part builds directly on this foundation by incorporating architectural constraints through the concept of virtual core design. While the first model represents the global scalability limit of a system, the virtual core model translates that limit into realizable performance under different chip organizations. By modeling symmetric, asymmetric, and dynamic multicore configurations, this section demonstrates how architectural structure determines how close real systems can approach the theoretical bounds established in the first part.

Together, the two parts form a complete and hierarchical methodology: the first defines the upper limit imposed by scheduling and communication, and the second measures the extent to which actual multicore designs can reach that limit. This separation ensures conceptual clarity while maintaining continuity between theoretical scalability and practical architectural realizability, bridging idealized performance models with real-world multicore implementations.

4.6. Introduction to Multicore Speedup Modeling

The classical speedup laws of Amdahl, Gustafson, and Juurlink [5,6,7] provide important insights into the scalability of parallel systems, but they all share a strong simplification: the number of processors p is treated as a direct proxy for performance. In the multicore era, this assumption is no longer valid. Chip design involves fundamental trade-offs in area, power, and complexity, and the simple idea that “p processors yield p times the performance” fails to reflect reality.

Hill and Marty [11] addressed this gap by introducing the concept of Base Core Equivalents (BCE). A base core is the smallest, most efficient unit of computation, and the chip as a whole is constrained by a budget of n such cores. Different design choices (many small cores, a few large cores, or dynamically reconfigurable cores) must all fit within this BCE budget. This framework bridges classical laws with the realities of chip architecture and has since inspired further analytical and design extensions [10,12].

In their model, three design styles are distinguished:

Symmetric multicore, where the chip is partitioned into identical cores;

Asymmetric multicore, where a single large core is combined with many small ones;

Dynamic multicore, where cores can fuse into a wide pipeline for sequential work and split for parallel work.

Each design leads to a distinct formula for speedup, extending Amdahl’s structure into the multicore domain. In the following subsections, we summarize these results and then introduce our own extension by integrating Divisible Load Theory (DLT).

4.7. Symmetric Multicore Design (BCE)

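We adopt the Base Core Equivalents (BCE) framework: a chip has a fixed budget of n BCEs; a core that uses r BCEs delivers sequential performance $\mathrm{perf}(r)$ relative to a single BCE (a common assumption is $\mathrm{perf}(r) = \sqrt{r}$). With r BCEs per core, the chip implements $n/r$ identical cores. The serial and parallel phases do not overlap: one r-BCE core runs the serial part; all $n/r$ cores (each r BCEs) execute the parallel part.

Let f be the parallel fraction. The speedup relative to one BCE (baseline) is

$$S_{\mathrm{sym}}^{\mathrm{A}} = \frac{1}{\dfrac{1-f}{\mathrm{perf}(r)} + \dfrac{f\,r}{\mathrm{perf}(r)\,n}}.$$

Replacing f by $f\,n/r$ for the parallel component (speedup measured w.r.t. one BCE), the scaled speedup is

$$S_{\mathrm{sym}}^{\mathrm{G}} = \frac{(1-f) + f\,\dfrac{n}{r}}{\dfrac{1-f}{\mathrm{perf}(r)} + \dfrac{f}{\mathrm{perf}(r)}}.$$

Multiplying numerator and denominator by $\mathrm{perf}(r)$ gives the compact form

$$S_{\mathrm{sym}}^{\mathrm{G}} = \mathrm{perf}(r)\left[(1-f) + f\,\frac{n}{r}\right].$$

For a growth function $\mathrm{scale}(\cdot)$,

$$S_{\mathrm{sym}}^{\mathrm{J}} = \frac{(1-f) + f\,\mathrm{scale}(n/r)}{\dfrac{1-f}{\mathrm{perf}(r)} + \dfrac{f\,\mathrm{scale}(n/r)\,r}{\mathrm{perf}(r)\,n}}.$$

Equations (20)–(24) capture the trade-off between how many cores ($n/r$) and how strong each core is ($\mathrm{perf}(r)$). Larger r accelerates the serial part (numerator effect via $\mathrm{perf}(r)$), but reduces the number of parallel workers (denominator effect via $n/r$). Amdahl’s form models fixed-size problems; Gustafson’s form models scaled problems; the general law interpolates via $\mathrm{scale}(\cdot)$ between Amdahl ($\mathrm{scale}(n/r) = 1$) and Gustafson ($\mathrm{scale}(n/r) = n/r$).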
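The core-count versus core-strength trade-off can be explored numerically. The sketch below uses the fixed-size symmetric form from the Hill and Marty model, 1 / ((1 - f)/perf(r) + f·r/(perf(r)·n)), with the common square-root assumption for perf(r). The function names, the restriction to power-of-two core sizes, and the BCE budget are illustrative choices, not taken from the paper.

```python
import math


def perf(r):
    """Sequential performance of an r-BCE core (assumed to be sqrt(r))."""
    return math.sqrt(r)


def symmetric_amdahl(f, n, r):
    """Fixed-size speedup of n/r identical r-BCE cores, relative to one BCE."""
    return 1.0 / ((1.0 - f) / perf(r) + f * r / (perf(r) * n))


def best_core_size(f, n):
    """Power-of-two core size r <= n with the highest symmetric speedup."""
    sizes = [2 ** k for k in range(int(math.log2(n)) + 1)]
    return max(sizes, key=lambda r: symmetric_amdahl(f, n, r))


if __name__ == "__main__":
    n = 256  # BCE budget (hypothetical)
    for f in (0.5, 0.9, 0.99):
        r = best_core_size(f, n)
        print(f, r, symmetric_amdahl(f, n, r))
```

A fully parallel workload favors many single-BCE cores, while a workload with a large serial part favors fewer, larger cores; the sweep makes that shift visible for any chosen budget.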

4.8. Integration of DLT into Multicore Models

While the BCE framework of Hill and Marty successfully maps classical speedup laws to chip design constraints, it still assumes that the parallel portion of a workload executes ideally once the number of cores is fixed. In practice, communication and scheduling overheads limit the achievable parallelism. Divisible Load Theory (DLT) provides a systematic way to quantify this effect. By explicitly modeling computation and communication costs, DLT produces an effective speedup, denoted as $S_{\mathrm{DLT}}$, which replaces the idealized core count n in the multicore formulas.

The general mapping is

$$n \;\to\; S_{\mathrm{DLT}}, \qquad \mathrm{scale}(n) \;\to\; \mathrm{scale}(S_{\mathrm{DLT}}),$$

where $\mathrm{scale}(\cdot)$ is the growth function in Juurlink’s generalized law. When communication costs vanish, $S_{\mathrm{DLT}} \to n$, and the formulas reduce to their original forms. When communication dominates, $S_{\mathrm{DLT}} \to 1$, and the speedup collapses to unity.

4.9. Symmetric Multicore with DLT

For the symmetric design, the original BCE-based laws can be extended by substituting n with $S_{\mathrm{DLT}}$. With parallel fraction f and performance of an r-sized core denoted by $\mathrm{perf}(r)$, the results are

These expressions preserve the consistency of the original symmetric multicore laws. They collapse to the baseline formulas when $S_{\mathrm{DLT}} = n$, and they reduce to unity when $S_{\mathrm{DLT}} \to 1$, showing that the framework correctly interpolates between the ideal and communication-limited extremes.

4.10. Asymmetric Multicore with DLT

In the asymmetric design, one large core of size r executes the sequential part, while the parallel part is executed by this large core together with the remaining $n - r$ base-equivalent units. The integration with DLT yields

These formulas capture the heterogeneous trade-off: a large core accelerates the sequential phase, while the remaining BCE budget contributes to parallel execution. DLT ensures that the effective parallelism is limited by communication overhead rather than raw resource count.

4.11. Dynamic Multicore with DLT

In the dynamic design, r base cores can be fused into a large core for the sequential part, and the chip reconfigures into all $n$ units for the parallel part. The DLT-integrated formulas are

Dynamic multicore represents the most flexible design, combining the advantages of symmetric and asymmetric organizations. With DLT integration, the model reflects the fact that even under ideal reconfiguration, communication and distribution costs cap the achievable performance.
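For reference, the baseline asymmetric and dynamic forms (before any DLT substitution) can be sketched as below. These are the commonly quoted fixed-size versions from the Hill and Marty model, with perf(r) = sqrt(r) as an assumption. The DLT-integrated variants discussed above are not reproduced here, and the function names and sample values are illustrative.

```python
import math


def perf(r):
    """Sequential performance of an r-BCE core (assumed to be sqrt(r))."""
    return math.sqrt(r)


def asymmetric_amdahl(f, n, r):
    """One r-BCE core plus (n - r) single-BCE cores, fixed-size workload."""
    return 1.0 / ((1.0 - f) / perf(r) + f / (perf(r) + n - r))


def dynamic_amdahl(f, n, r):
    """r BCEs fused for the serial phase, all n BCEs used in the parallel phase."""
    return 1.0 / ((1.0 - f) / perf(r) + f / n)


if __name__ == "__main__":
    f, n = 0.9, 64                      # hypothetical workload and BCE budget
    for r in (1, 4, 16, 64):
        print(r, asymmetric_amdahl(f, n, r), dynamic_amdahl(f, n, r))
```

Both functions take the budget `n` as a plain argument, so an effective value can be passed in its place when experimenting with communication-limited scenarios.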

4.12. Summary

In summary, the DLT-augmented framework unifies the workload aspects (Amdahl, Gustafson, and Juurlink), architectural design choices (symmetric, asymmetric, dynamic), and system-level realities (communication and scheduling). This provides a more realistic picture of multicore performance than either classical laws or virtual core design models alone.

5. Results

In this section, we evaluate the performance of the proposed DLT-extended multicore speedup models. Following the structure of the classical laws, we first present results for symmetric, asymmetric, and dynamic designs, and then compare their behavior under different workload scenarios (Amdahl, Gustafson, and General). Figures and tables are provided to illustrate trends in speedup as functions of the parallel fraction f, the large-core size r, and the effective parallelism $S_{\mathrm{DLT}}$.

5.1. Algorithmic Framework for SDLT Models

To enhance theoretical operability and reproducibility, Algorithm 1 summarizes a unified computational framework for the three principal models: SDLT1, SDLT2, and SDLT3. Given the inverse processor speeds $w_i$, the inverse link speeds $z_i$, and the computation and communication intensities $T_{cp}$ and $T_{cm}$, the algorithm produces the analytical speedup curves for each scheduling strategy. The pseudocode follows MATLAB-style notation and was implemented and tested in MATLAB R2023a (MathWorks, Natick, MA, USA) for ease of reproducibility.

| Algorithm 1 Unified Computation of SDLT1, SDLT2, and SDLT3 Speedups |

1: Input: processor speeds, link speeds, computation intensity, communication intensity
2: Output: speedups for SDLT1, SDLT2, and SDLT3
3: Initialize
4: for to N do
5:
6:
7:
8:     for to n do
9:
10:
11:         if then
12:
13:             for to i do
14:
15:
16:             end for
17:
18:         end if
19:     end for
20:
21:
22:
23: end for
24: return all SDLT1, SDLT2, and SDLT3 speedup curves

|

The algorithm corresponds to the analytical formulations in Equations (1)–(13) and provides an operational procedure for computing SDLT1, SDLT2, and SDLT3 speedups.

The parameters used in the simulations are summarized in

Table 1. For both heterogeneous and homogeneous configurations, the number of child processors is

, and the parallel fraction is fixed at

unless otherwise stated. All numerical results presented here are derived from deterministic solutions of the proposed analytical equations rather than stochastic or discrete-time simulations, ensuring full reproducibility of the reported outcomes.

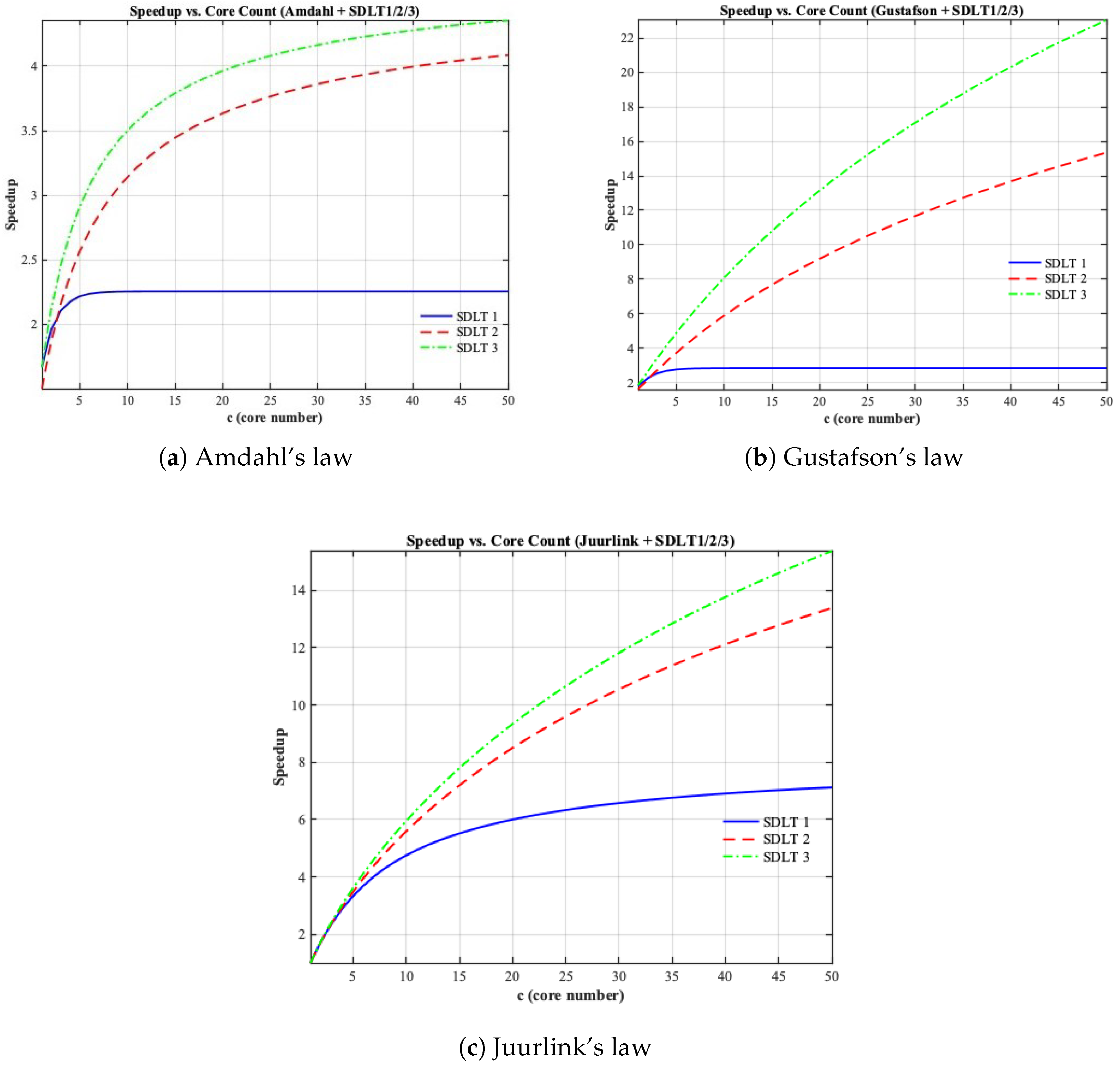

5.2. DLT-Extended Classical Speedup Laws

Before turning to multicore design trade-offs, it is instructive to examine the direct integration of Divisible Load Theory (DLT) with the classical speedup laws of Amdahl, Gustafson, and Juurlink. Figure 2 presents the results for their combinations. In this case, the idealized processor count p is replaced directly by the effective parallelism $S_{\mathrm{DLT}}$, without incorporating the BCE resource model. This produces three DLT-adjusted baselines that illustrate how communication overhead modifies workload semantics.

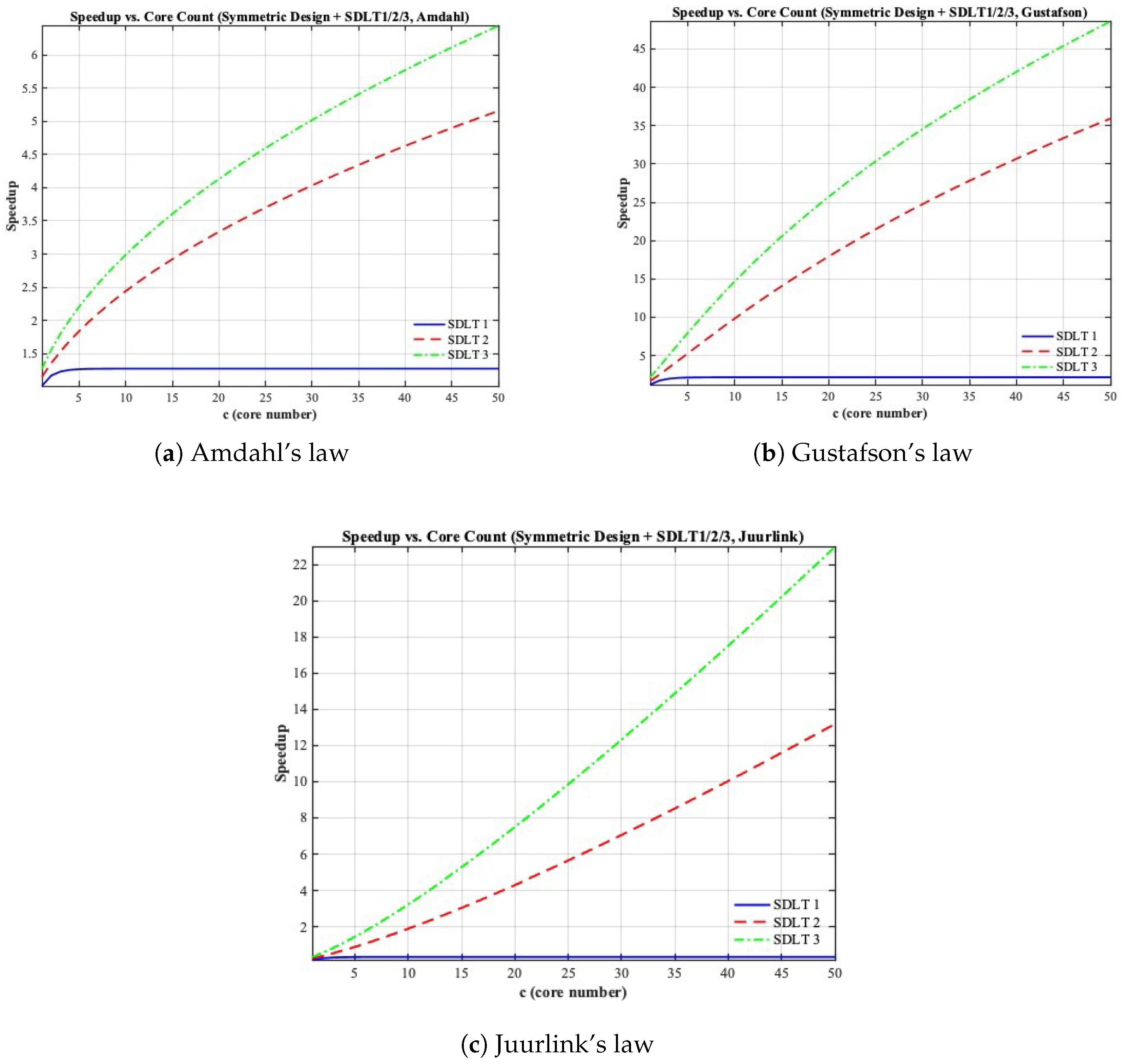

5.3. Symmetric Multicore Results

Figure 3 presents the symmetric multicore results obtained by integrating DLT with three classical scaling laws. The analysis assumes a fixed parallelizable fraction f while varying the number of cores determined by r. Each curve replaces the ideal processor count in the original law with the DLT-based effective speedup $S_{\mathrm{DLT}}$, which reflects both computation and communication effects.

For Amdahl’s law (Figure 3a), speedup increases as r decreases (i.e., more cores) but quickly saturates due to the serial component. When DLT is integrated, the achievable speedup drops further, showing how communication overhead amplifies the limitation of fixed-size workloads.

Under Gustafson’s law (Figure 3b), the speedup grows almost linearly as the workload scales with the number of cores. With DLT integration, the slope becomes smaller, indicating that scalability is partially offset by the communication delay inherent in distributed systems.

Juurlink’s law (Figure 3c) extends both models by introducing a tunable scaling parameter p that transitions between Amdahl’s and Gustafson’s behaviors. The DLT-adjusted curves capture the realistic interaction between workload scalability and communication delay, forming a more accurate reflection of actual multicore performance.

5.4. Asymmetric Multicore Results

Figure 4 shows the performance of the asymmetric multicore design with DLT integration under Amdahl’s, Gustafson’s, and Juurlink’s speedup laws. In this configuration, one large

r-sized core executes the serial phase, while multiple smaller cores handle the parallel portion. Compared with the symmetric design, the asymmetric approach consistently achieves higher speedup for the same

, as the larger core effectively reduces the serial bottleneck.

Under Amdahl’s law (Figure 4a), this configuration extends the saturation point of speedup, showing that heterogeneous cores mitigate the serial limitation more efficiently. For Gustafson’s law (Figure 4b), the speedup grows faster with increasing cores but remains bounded by communication overhead captured in $S_{\mathrm{DLT}}$. Juurlink’s model (Figure 4c) lies between these two extremes, where the combined effects of scalability and DLT constraints yield a balanced and realistic estimate of system performance. Overall, the results confirm that heterogeneous multicore designs maintain higher efficiency under DLT-adjusted scaling laws, especially in communication-limited scenarios.

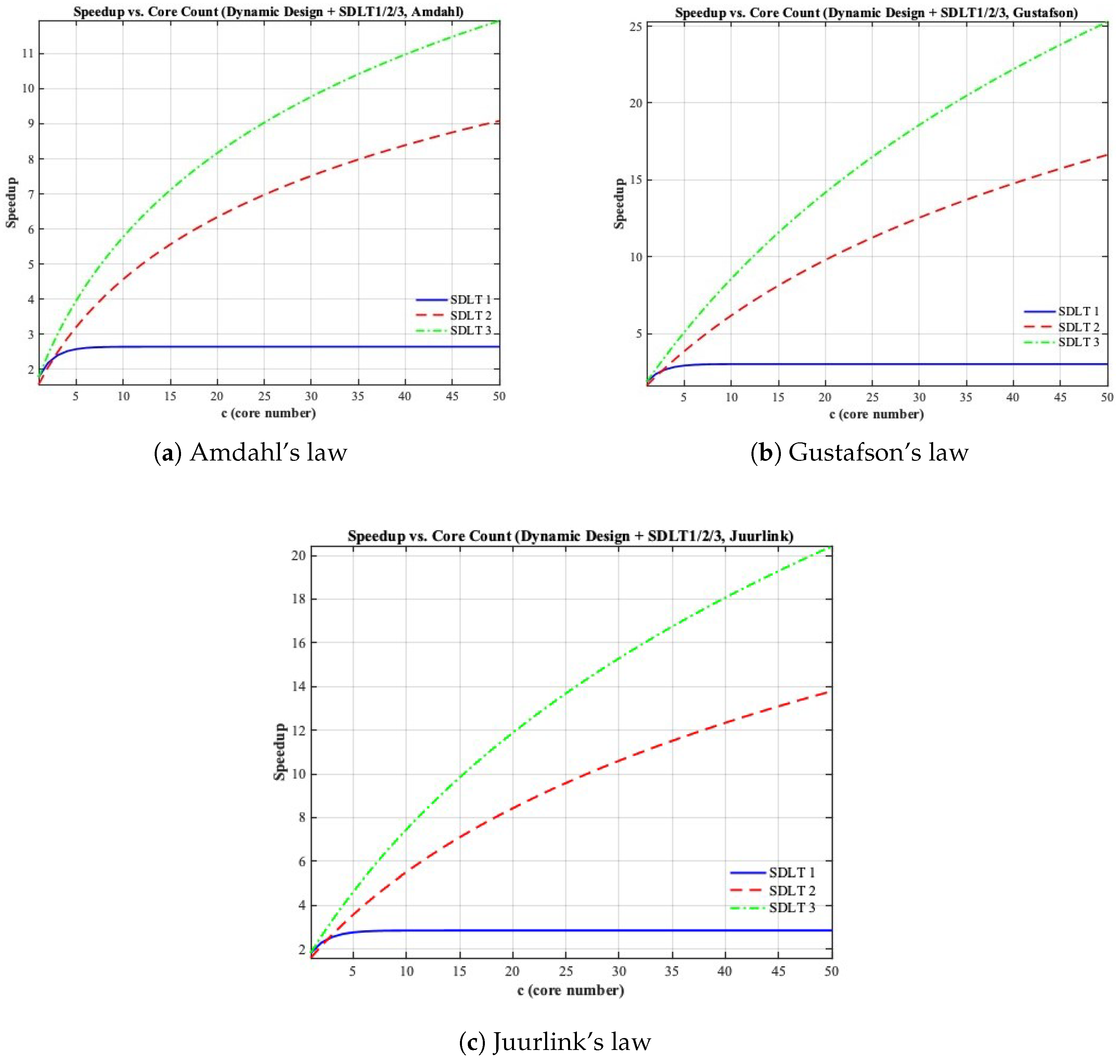

5.5. Dynamic Multicore Results

Figure 5 illustrates the performance of the dynamic multicore design integrated with DLT under Amdahl’s, Gustafson’s, and Juurlink’s laws. This configuration allows the system to dynamically reconfigure its r BCEs: during the serial phase, BCEs are fused into a large core for sequential execution, and during the parallel phase, all $n$ units are redistributed across smaller parallel cores. This flexibility maximizes resource utilization and represents the upper limit of multicore scalability under communication constraints.

For Amdahl’s law (Figure 5a), the dynamic design alleviates the serial bottleneck entirely within the BCE budget, extending the achievable speedup beyond symmetric and asymmetric configurations. Under Gustafson’s law (Figure 5b), the model achieves nearly ideal linear scaling, with DLT integration slightly reducing the slope as communication costs grow. Juurlink’s formulation (Figure 5c) provides a smooth transition between these limits, confirming that dynamic reconfiguration consistently outperforms static organizations across all workload types. Even under DLT-imposed delays, this model remains the most optimistic yet realistic upper bound on multicore performance.

To compare the three virtual core design strategies more directly, Table 2 summarizes their representative speedups under Juurlink’s law with DLT integration. Among the three scaling laws, Juurlink’s formulation is the most representative, as it generalizes both Amdahl’s and Gustafson’s behaviors by incorporating a tunable scalability parameter. It therefore provides a balanced evaluation of realistic multicore performance, capturing both partial workload scalability and communication overheads modeled by $S_{\mathrm{DLT}}$. Across all three architectures, the asymmetric design achieves the highest attainable speedup, followed by the dynamic and symmetric configurations.

6. Discussion

This work integrates Amdahl’s, Gustafson’s, and Juurlink’s scaling laws and their virtual-core design extensions with the Divisible Load Theory (DLT) framework to capture how computation and communication jointly determine achievable performance in multicore systems. Classical laws treat processor count as an ideal performance multiplier, assuming no cost for synchronization or data transfer. In contrast, DLT provides a more precise view of how delays, bandwidth, and load partitioning shape the effective speedup observed in practice.

The combined models show that when communication is explicitly considered, the theoretical boundaries of multicore speedup shift significantly. Across symmetric, asymmetric, and dynamic designs, similar performance trends emerge: communication overhead compresses the difference between architectures, and efficiency becomes a function of how each design manages both computation time and transfer delay. The asymmetric design remains the most adaptable and efficient, while the symmetric configuration is the most constrained under DLT-adjusted scaling.

These results highlight that reduced performance gaps among multicore architectures are not a limitation but rather a reflection of communication-constrained realism. Architectural design choices, though important, become secondary when communication delays dominate system performance—a phenomenon that classical scaling laws fail to expose. This integration thus forms a bridge between idealized scaling theory and the real, delay-bound behavior of modern processors, extending the reach of classic speedup laws into architectures where communication cannot be ignored.