1. Introduction

Speech Emotion Recognition (SER) is the task of classifying emotional states from a speaker’s audio signal. Recent advances in deep learning and Self-Supervised Learning (SSL) frameworks have significantly improved SER performance [1,2]. SSL models leverage large-scale unlabeled audio data to learn generalized acoustic representations, enabling efficient adaptation to downstream tasks using only a limited amount of labeled data [3,4,5]. Among these, models such as wav2vec 2.0, HuBERT, and WavLM are widely adopted as backbone architectures in SER [6,7,8].

Traditional SER systems generate frame-level embeddings using an SSL backbone and then aggregate them into a single utterance-level representation through pooling operations. The resulting utterance-level representation is passed to a classifier for final emotion prediction [9,10]. However, this approach has two key limitations. First, emotional content is often non-uniformly distributed within an utterance, meaning that some frames may express different emotions than others. Since conventional SER models are trained using only utterance-level labels, they struggle to learn consistent intra-class frame representations, leading to a mismatch between frame-level and utterance-level features and causing training instability [11]. Second, traditional pooling methods such as mean or weighted sum cannot explicitly capture relationships between frames within the same emotion class. As a result, even utterances belonging to the same emotion category may produce inconsistent frame embeddings, obscuring decision boundaries and degrading overall performance.

To address these challenges, we propose two auxiliary loss functions: Emotional Attention Loss (EAL) and Frame-to-Utterance Alignment Loss (FUAL). Both are designed to improve representation learning by modeling the relationship between individual frames and the entire utterance, while requiring only utterance-level labels. EAL encourages frames of the same emotion to cluster around a central representation while pushing frames of different classes apart, thereby sharpening class boundaries. FUAL enforces consistency between frame-level and utterance-level predictions, ensuring that each frame reflects the overall emotional structure of the utterance.

To effectively implement these objectives, we employ CLS-based self-attention pooling. The CLS token aggregates information from all frames into a single utterance-level representation through Multi-Head Attention (MHA), while simultaneously affecting the update of frame-level embeddings [12,13,14]. In this process, EAL and FUAL jointly influence the learning procedure, enabling the CLS to capture a concise and representative utterance-level context.

The training process proceeds in two stages. In Stage 1, the SSL backbone is fine-tuned with cross-entropy (CE) loss only, stabilizing frame-level embeddings for downstream tasks [15]. In Stage 2, the model from Stage 1 is used as initialization, and EAL and FUAL are jointly optimized within the CLS-based self-attention pooling framework to refine emotional alignment and improve recognition performance.

Experiments on the IEMOCAP 4-class dataset demonstrate that the proposed method consistently outperforms baseline models in both Unweighted Accuracy (UA) and Weighted Accuracy (WA).

As Speech Emotion Recognition is a key component of affective computing and Human-Centered Artificial Intelligence, the proposed method directly contributes to advancing AI systems capable of understanding and responding to human emotions.

The remainder of this paper is organized as follows. Section 2 reviews related work on Speech Emotion Recognition and Self-Supervised Learning. Section 3 presents the proposed method and training strategy. Section 4 describes the experimental setup and results. Section 5 provides a discussion, and Section 6 concludes the paper.

2. Related Work

2.1. Traditional SER Approaches

Early SER research relied on hand-crafted features such as MFCCs, pitch, energy, and mel spectrograms extracted from audio signals, which were then classified using traditional machine learning algorithms such as Support Vector Machine (SVM) or K-Nearest Neighbor (KNN). However, these features had limitations: they could not sufficiently capture the complex, nonlinear patterns of emotion, and they generalized poorly across datasets [16,17,18].

Subsequent advancements in deep learning enabled the application of Convolutional Neural Network (CNN) and Recurrent Neural Network (RNN)-based models to SER, significantly improving performance [19,20]. While RNN-based models demonstrated particular strength in learning time-series audio information, limitations persisted regarding data scarcity and the ability to learn diverse emotional expressions with precision [21].

To address this, SSL-based models, which leverage large-scale unlabeled audio data to learn powerful representations, have recently become widely used as the backbone for SER.

2.2. Wav2vec 2.0

SSL models learn generalizable frame-level representations from unlabeled audio data, achieving strong performance on diverse downstream audio tasks using only a small amount of labeled data. The representative model, wav2vec 2.0, takes the raw waveform as input, masks spans of the latent representations produced by its feature encoder, and learns rich acoustic representations by identifying the correct quantized latent for each masked position [6].

It uses a convolutional feature encoder to capture local patterns and a Transformer network to model long-range temporal dependencies. Through contrastive learning, wav2vec 2.0 distinguishes true masked segments from negative samples, producing robust and discriminative features. Due to these characteristics, wav2vec 2.0 has become a widely used backbone for SER, offering superior performance to CNN and RNN-based models and reducing reliance on large labeled datasets.
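For reference, the contrastive objective described above can be written as follows. This follows the formulation in the wav2vec 2.0 paper [6]; the symbols are introduced here for illustration and are not used elsewhere in this paper. Here $\mathbf{c}_t$ is the Transformer output at a masked time step $t$, $\mathbf{q}_t$ is the true quantized latent, $\mathbf{Q}_t$ contains $\mathbf{q}_t$ together with the sampled distractors, $\mathrm{sim}(\cdot,\cdot)$ is cosine similarity, and $\kappa$ is a temperature:

$$
\mathcal{L}_m = -\log \frac{\exp\left(\mathrm{sim}(\mathbf{c}_t, \mathbf{q}_t)/\kappa\right)}{\sum_{\tilde{\mathbf{q}} \in \mathbf{Q}_t} \exp\left(\mathrm{sim}(\mathbf{c}_t, \tilde{\mathbf{q}})/\kappa\right)}
$$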

2.3. Pooling Techniques for Utterance-Level Representations

SER models integrate frame-level audio representations to generate a single utterance-level vector, which is then used to classify emotion. Previous studies employed simple techniques such as average pooling or max pooling, but these methods have limitations: average pooling weights all frames equally, whereas max pooling keeps only the extreme activation in each dimension and discards the rest.

To improve this, attentive pooling was proposed, generating speech representations that assign higher weights to important frames through learned weights [22]. However, in this approach, weights are determined only indirectly during model training, making it difficult to guarantee that emotionally significant frames are directly reflected. Furthermore, since the relationships between frames are not explicitly captured, different frames can be mixed. This increases the likelihood of noise and unnecessary information being included, ultimately limiting the final prediction performance.

2.4. Frame Representation Alignment in SER

Existing SER research primarily relies on utterance-level labels, meaning that the roles and relationships of individual frames are not explicitly learned. Attention pooling methods assign higher weights to frames that are relatively more important for classification, based on the relationships between frames, to generate an utterance-level representation. Consequently, even among utterances belonging to the same emotion class, frame representations may fail to align consistently, and the representations of frames sharing the same emotion may be learned in a highly inconsistent manner.

Such inconsistencies blur the boundaries between emotion classes and introduce unnecessary variability into the training process, ultimately degrading the final prediction performance. Therefore, to enhance both the performance and generalization ability of SER models, a new approach is required that enables mutual learning between utterance-level and frame-level representations.

3. Proposed Method

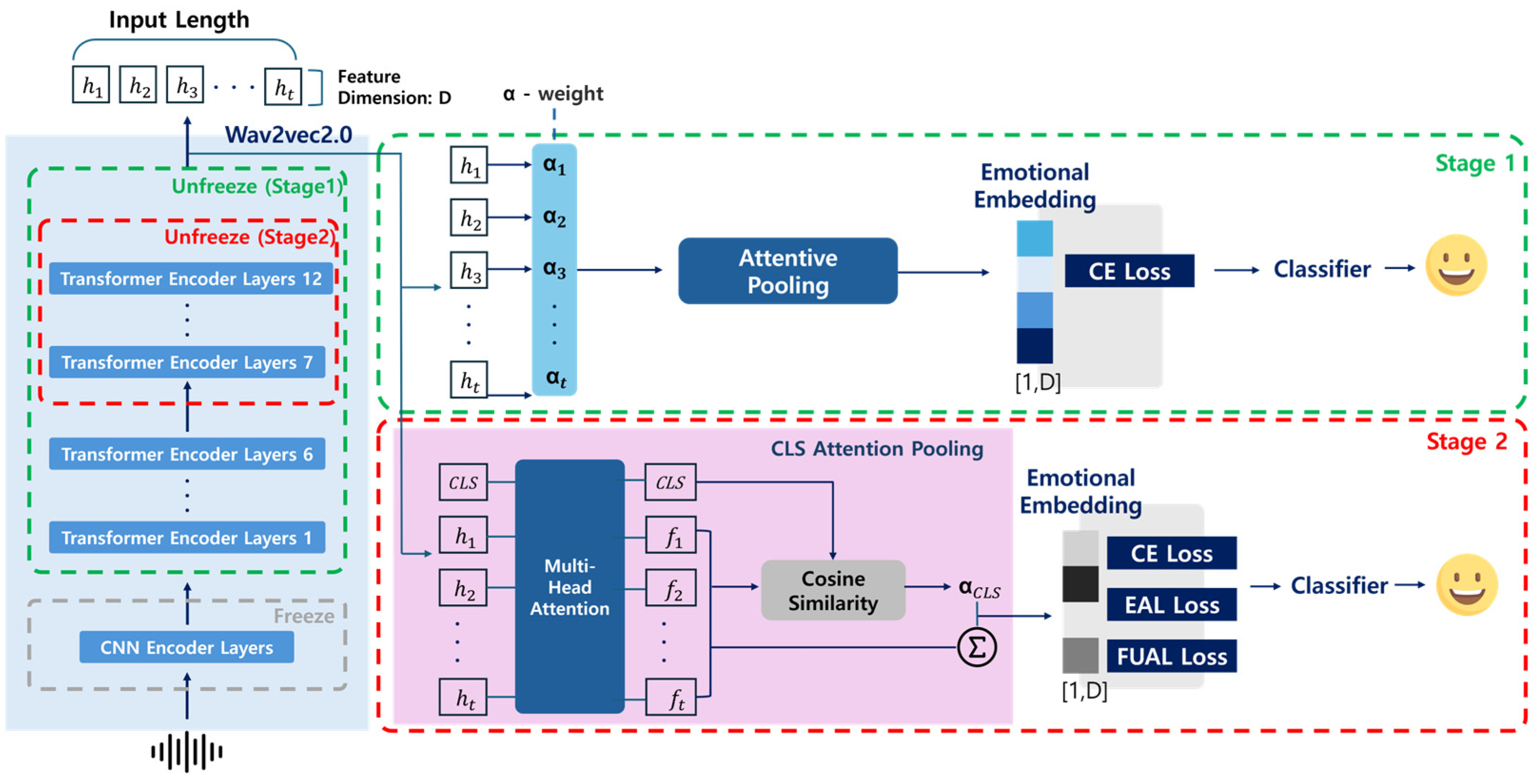

This study utilizes SSL-based audio frame embeddings to learn stable and emotionally consistent utterance-level representations through a two-stage training procedure. The overall two-stage training framework is illustrated in Figure 1.

In stage 1, input audio is fed through the SSL model to extract frame-level embeddings. Subsequently, frame-specific weights are computed via attention pooling, and the frame embeddings are combined by a weighted sum under these weights to generate an utterance-level representation. This utterance representation is trained with CE loss, enabling the SSL model to stably encode emotional information in its frame-level embeddings.

In stage 2, the SSL model trained in stage 1 is used as initialization, and the frame representations that stably retain emotional information are utilized as input. At this stage, a CLS token is added, and information from all frames is integrated into it via MHA, so that it is learned as a global utterance vector. Subsequently, the utterance representation generated via CLS-based self-attention pooling is used for final emotion classification, with CE Loss, EAL, and FUAL applied simultaneously throughout this process.

3.1. Stage 1: SSL Fine-Tuning with Attentive Pooling

We employ wav2vec 2.0 as the SSL backbone to extract frame-level embeddings from the input waveform . These frame embeddings are then integrated using an attentive pooling module to generate an utterance-level representation , which is subsequently passed to the final classifier for emotion prediction.

The frame-wise attention weights

and the utterance-level representation

are computed as follows:
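The display equation is not reproduced in this text. As a hedged reconstruction, one common form of attentive pooling is given below; the notation is assumed here for illustration and may differ from the authors' exact parameterization. Let $\mathbf{h}_t$ denote the frame embedding at frame $t$ (with $T$ frames in total), $\mathbf{w}$ a learnable scoring vector, $\alpha_t$ the attention weight of frame $t$, and $\mathbf{u}$ the utterance-level representation:

$$
\alpha_t = \frac{\exp\left(\mathbf{w}^{\top}\mathbf{h}_t\right)}{\sum_{\tau=1}^{T}\exp\left(\mathbf{w}^{\top}\mathbf{h}_\tau\right)}, \qquad \mathbf{u} = \sum_{t=1}^{T}\alpha_t\,\mathbf{h}_t
$$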

The utterance-level representation

obtained in (1) is trained using CE Loss, defined as:

is the number of emotion classes, is the ground truth as a one-hot vector, and is the softmax probability for class .

Through this process, the frame-level weights are stabilized to accurately reflect emotional information. Thus, stage 1 focuses on learning a stable SSL-based initial model, providing a strong foundation for stage 2. This step ensures that the subsequent application of auxiliary losses in stage 2 is stable and effective, facilitating smooth training and improving overall performance.
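The stage 1 computation can be illustrated with a minimal sketch in plain Python. It assumes a single learnable scoring vector for the attention weights, which is one common choice and not necessarily the authors' parameterization; the function names (`softmax`, `attentive_pool`, `cross_entropy`) are hypothetical, and the classifier that maps the pooled vector to logits is omitted.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attentive_pool(frames, w):
    """Pool frame embeddings into one utterance vector.

    frames: list of T frame embeddings (each a list of d floats).
    w: assumed learnable scoring vector of length d (hypothetical).
    Returns (attention weights, pooled utterance vector).
    """
    scores = [sum(wi * hi for wi, hi in zip(w, h)) for h in frames]
    alphas = softmax(scores)
    dim = len(frames[0])
    pooled = [sum(a * h[d] for a, h in zip(alphas, frames)) for d in range(dim)]
    return alphas, pooled

def cross_entropy(logits, target):
    """CE loss for one utterance: negative log-probability of the true class."""
    probs = softmax(logits)
    return -math.log(probs[target])
```

With a zero scoring vector every frame receives the same weight, so the pooled vector reduces to the mean of the frames; uniform logits over four classes give a CE loss of log 4.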

3.2. Stage 2: CLS-Based Self-Attention Pooling & Joint Objectives

In stage 2, the SSL backbone from stage 1 is used for initialization. A CLS is prepended to the frame sequence and passed through a multi-head attention encoder to produce frame representations and a global utterance-level representation .

These outputs are aggregated to form the final utterance-level vector . At this stage, CE Loss, EAL, and FUAL are jointly optimized to enhance both frame-level alignment and utterance-level consistency.

3.2.1. Self-Attention Pooling with CLS

The frame embedding sequence from the SSL backbone is given by

. A CLS

is prepended to form the input sequence:

The sequence

is then processed by the multi-head attention encoder

:
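The two display equations for this step are not reproduced in this text. A hedged reconstruction, with notation assumed here for illustration, is as follows: $\mathbf{h}_1,\dots,\mathbf{h}_T$ are the frame embeddings from the SSL backbone, $\mathbf{x}_{\mathrm{cls}}$ is the learnable CLS embedding, $\mathbf{z}_{\mathrm{cls}}$ is the CLS output, and $\mathbf{z}_1,\dots,\mathbf{z}_T$ are the final frame-level representations:

$$
\tilde{\mathbf{X}} = \left[\mathbf{x}_{\mathrm{cls}};\,\mathbf{h}_1;\,\dots;\,\mathbf{h}_T\right], \qquad \left[\mathbf{z}_{\mathrm{cls}};\,\mathbf{z}_1;\,\dots;\,\mathbf{z}_T\right] = \mathrm{MHA}\left(\tilde{\mathbf{X}}\right)
$$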

represents the CLS output summarizing the entire utterance, and represents the final frame-level representations. By concentrating the utterance information into , this structure provides a foundation for EAL to guide emotion-specific alignment.

The similarity scores between the CLS and each frame are normalized using a softmax function to produce attention weights . These weights determine the contribution of each frame to the final utterance-level representation, similar to the process described in stage 1.

3.2.2. Emotional Attention Loss (EAL)

EAL encourages frames within the same emotion class to align closely with the CLS while separating frames from different classes.

The cosine similarity between the CLS and each frame,

, is calculated as follows:

To enforce alignment, a softplus-margin loss is applied so that emotionally salient frames maintain a similarity above the margin [23].

The frame-wise loss for each frame is defined as:

The overall EAL is computed as the mean of these losses across all frames:
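The equations for this loss are not reproduced in this text, so the following is only a sketch of one plausible reading: each frame is penalized by softplus(margin − similarity), where the similarity is the cosine between the CLS output and the frame, and the losses are averaged over frames. Any scaling factor, and the way frames of other classes are pushed apart, are not specified here and are left out. The function names are hypothetical; the default margin of 0.8 is the value reported as best in the ablation study.

```python
import math

def cosine(a, b):
    """Cosine similarity between two vectors (small epsilon avoids division by zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b + 1e-12)

def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))

def eal(cls_vec, frames, margin=0.8):
    """Assumed EAL form: mean over frames of softplus(margin - cos(cls, frame))."""
    losses = [softplus(margin - cosine(cls_vec, f)) for f in frames]
    return sum(losses) / len(losses)
```

Under this assumed form, a frame that points in the same direction as the CLS output incurs a smaller loss than an orthogonal one, and the softplus keeps the gradient smooth around the margin instead of cutting it off abruptly as a hinge would.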

While EAL improves class boundary clarity, the softmax function can still amplify small differences in similarity scores, causing attention weights to become overly concentrated on certain frames. The margin mitigates this effect by limiting updates for frames already close to the CLS, but it does not fully resolve the disproportionate allocation of attention.

As a result, EAL alone may not fully prevent this weight concentration, which can make training less stable and reduce the diversity of frame-level representations.

3.2.3. Frame-to-Utterance Alignment Loss (FUAL)

Using only EAL may cause the model to over-focus on a few specific frames, which can lead to unstable representations for other frames. To address this issue, FUAL is introduced to ensure that frame-level predictions are consistent with the utterance-level prediction distribution. Each frame output is passed through a softmax function to generate a probability distribution , and the utterance representation is also passed through a softmax function to produce the utterance-level probability distribution . The frame-level predictions are then aggregated using attention weights to form a weighted average distribution .

Finally, FUAL minimizes the Kullback–Leibler (KL) divergence between these two distributions as follows [24]:

FUAL minimizes this divergence to encourage consistency between frame-level and utterance-level predictions, resulting in more stable and coherent emotional representations across both levels.
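The FUAL computation described above can be sketched as follows. The direction of the KL divergence is not recoverable from this text; the sketch assumes KL(utterance distribution ‖ attention-weighted frame distribution), and the opposite direction would be an equally plausible reading. The function names are hypothetical, and the logits are assumed to come from a classifier applied to the frame outputs and the utterance representation.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))

def fual(frame_logits, utt_logits, attn_weights):
    """Assumed FUAL form: KL between the utterance-level distribution and
    the attention-weighted average of the frame-level distributions."""
    frame_probs = [softmax(logits) for logits in frame_logits]
    num_classes = len(utt_logits)
    avg_probs = [
        sum(a * p[c] for a, p in zip(attn_weights, frame_probs))
        for c in range(num_classes)
    ]
    utt_probs = softmax(utt_logits)
    return kl_divergence(utt_probs, avg_probs)
```

When every frame predicts the same distribution as the utterance, the loss is zero regardless of the attention weights; it grows as the weighted frame predictions drift away from the utterance-level prediction.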

3.2.4. Total Loss

The final loss function combines CE, EAL, and FUAL:

and are weighting factors controlling the influence of the auxiliary losses. By optimizing , the model improves utterance-level classification accuracy while ensuring that frame-level representations remain aligned and consistent with the overall emotional structure.
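The display equation is not reproduced in this text. Based on the description, the combined objective presumably takes the following weighted-sum form, where the symbols $\lambda_{\mathrm{EAL}}$ and $\lambda_{\mathrm{FUAL}}$ are assumed names for the two weighting factors and the CE term is assumed to be unweighted:

$$
\mathcal{L}_{\mathrm{total}} = \mathcal{L}_{\mathrm{CE}} + \lambda_{\mathrm{EAL}}\,\mathcal{L}_{\mathrm{EAL}} + \lambda_{\mathrm{FUAL}}\,\mathcal{L}_{\mathrm{FUAL}}
$$

In the ablation study of Section 4.4.2, a value of 0.05 for each of the two weights gave the best results.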

4. Experiments

In this section, we present the dataset, training configurations, baseline models, and experimental results used to evaluate the performance of the proposed method.

4.1. Dataset

For performance evaluation, this study utilized the IEMOCAP corpus [25], a widely adopted benchmark dataset for SER. The corpus is multi-modal, containing audio, visual, and lexical modalities; however, only the audio modality was used in this work. It comprises five sessions, each consisting of dyadic conversations between one male and one female speaker.

To prevent speaker information leakage, we followed a leave-one-session-out five-fold cross-validation strategy. In this setup, four sessions were used for training, while the data from one speaker in the remaining session served as the validation set, and the data from the other speaker were used as the test set.

We focused on four emotion categories: Happy (including Excited), Sad, Neutral, and Angry. In total, 5531 audio utterances were selected for training and evaluation, and their distribution across the four classes is summarized in Table 1.

Table 1 summarizes the distribution of utterances across the 4 emotion classes.

Within the held-out session, the data were further divided by speaker gender: utterances from the male speaker were used for validation, and those from the female speaker were used for testing.
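The split protocol described in this section can be sketched as a small helper that enumerates the five folds. The function name and the `(session, gender)` tuple encoding of speakers are hypothetical; only the protocol itself (four sessions for training, the held-out session's male speaker for validation and its female speaker for testing) is taken from the text.

```python
def leave_one_session_out(sessions=(1, 2, 3, 4, 5)):
    """Enumerate folds: four sessions train, held-out male -> val, female -> test."""
    folds = []
    for held_out in sessions:
        folds.append({
            # Both speakers of every non-held-out session are used for training.
            "train": [(s, g) for s in sessions if s != held_out for g in ("F", "M")],
            # The held-out session is split by speaker gender.
            "val": [(held_out, "M")],
            "test": [(held_out, "F")],
        })
    return folds
```

Because every speaker appears in exactly one of the three subsets within a fold, no speaker seen during training is used for validation or testing.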

4.2. Evaluation Metrics

The model performance was evaluated using UA and WA. UA accounts for class imbalance by equally weighting all classes, providing a fair assessment of performance across different emotion categories. In contrast, WA measures the overall accuracy based on the total number of samples, reflecting the model’s performance across the entire dataset.

denotes the number of classes, represents the number of correct predictions for class , and indicates the total number of samples in class .
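As an illustration of the two metrics defined above, the following sketch computes UA as the mean of the per-class accuracies (correct predictions for a class divided by the number of samples in that class) and WA as the overall fraction of correct predictions. The function name and the integer label encoding are assumptions made for the example.

```python
def ua_wa(y_true, y_pred, num_classes):
    """Return (UA, WA) for integer class labels in range(num_classes)."""
    correct = [0] * num_classes
    total = [0] * num_classes
    for t, p in zip(y_true, y_pred):
        total[t] += 1
        if t == p:
            correct[t] += 1
    # UA: average per-class accuracy; classes with no samples are skipped.
    per_class = [c / n for c, n in zip(correct, total) if n > 0]
    ua = sum(per_class) / len(per_class)
    # WA: overall accuracy over all samples.
    wa = sum(correct) / sum(total)
    return ua, wa
```

For example, with three samples of class 0 and one of class 1, a model that always predicts class 0 obtains a WA of 0.75 but a UA of only 0.5, which shows why UA is the more informative metric under class imbalance.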

In addition, a Confusion Matrix was used to visually inspect class-wise prediction tendencies, allowing for an intuitive understanding of potential misclassification patterns.

4.3. Implementation Details

All experiments were conducted on a single NVIDIA RTX 5080 GPU using the PyTorch 2.8.0 framework with CUDA 12.8 and Python 3.10.8. The training process consisted of two sequential stages. In stage 1, the SSL backbone was fine-tuned to obtain stable frame-level representations using only CE loss. In stage 2, the model was jointly trained with CE and the proposed auxiliary losses to refine frame-to-utterance emotional alignment. The CNN-based feature extractor remained frozen in both stages to preserve general speech representations. AdamW was used as the optimizer with different learning rates (LR) for the backbone, attention module, and classification head. The pretrained model, facebook/wav2vec2-base, was obtained from the Hugging Face Transformers library.

Table 2 summarizes the core hyperparameter settings for both stages, allowing a direct comparison of key experimental configurations.

In stage 2, CLS-based self-attention pooling was configured with four attention heads, a depth of one, and no dropout. The hyperparameters of both EAL and FUAL were determined experimentally, and further analysis is provided in Section 4.4.2 (Ablation Study).

4.4. Results

4.4.1. Baselines

For comparison, two baseline models were established using the same dataset and the SSL backbone fine-tuned in stage 1 to ensure a fair and consistent evaluation setting. The first baseline employed a conventional self-attention pooling mechanism, while the second utilized a CLS-based self-attention pooling approach, where the CLS serves as a global summary representation of the entire utterance. These baselines were designed to evaluate the effect of incorporating a global anchor token in emotion representation learning.

Building upon the CLS-based self-attention pooling baseline, we further examined the impact of the proposed auxiliary loss functions by integrating them step by step. Specifically, three CLS-based configurations were tested: one with EAL alone, another with FUAL alone, and a third with both EAL and FUAL applied together to form the proposed model. This progressive approach allowed us to systematically analyze the contribution of each individual loss function as well as their combined effect on overall performance.

All experiments were conducted using five-fold cross-validation, and performance was evaluated using UA and WA.

As shown in Table 3, the CLS-based self-attention pooling showed moderate performance improvements compared to conventional self-attention pooling, indicating that incorporating a global summary token can provide a more stable foundation for utterance-level representation. When either EAL or FUAL was added to the CLS-based framework, the model exhibited additional improvements over the CLS baseline, suggesting that each loss plays a supportive role in enhancing frame-level alignment and prediction consistency. When both losses were applied together, the proposed model achieved the best overall performance among all configurations, demonstrating clear performance gains compared to the baseline. These results confirm that the proposed auxiliary losses are effective within the CLS-based framework and provide consistent performance improvements under the given experimental setting.

4.4.2. Ablation Study

To analyze the contribution of each component in the proposed framework, we conducted an ablation study focusing on the auxiliary losses, EAL and FUAL. The experiments were designed to investigate the effect of key hyperparameters that influence alignment learning.

EAL includes two key hyperparameters, the margin and the loss weight . The margin controls the degree of separation between frame-level representations, and we explored three different values, 0.4, 0.6, and 0.8. The loss weight determines how much influence the EAL term has on the overall loss function, and we tested three levels, 0.05, 0.10, and 0.15. Because these parameters can affect both the stability of training and the final recognition performance, we systematically investigated their impact through a structured hyperparameter search.

To conduct this study, we evaluated every possible combination of the margin and the loss weight, resulting in nine different experimental settings. All experiments were performed using five-fold cross-validation in order to provide reliable performance estimates. The results, reported in terms of UA and WA, are presented in Table 4.
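The nine settings of this search can be enumerated with a short sketch. The candidate values are those stated above; the constant names, function name, and dictionary keys are hypothetical.

```python
from itertools import product

# Candidate values stated in the ablation study.
MARGINS = (0.4, 0.6, 0.8)
EAL_WEIGHTS = (0.05, 0.10, 0.15)

def eal_grid():
    """Return every (margin, EAL loss weight) combination as a list of dicts."""
    return [
        {"margin": m, "eal_weight": w}
        for m, w in product(MARGINS, EAL_WEIGHTS)
    ]
```

Each configuration returned by `eal_grid()` would then be trained and evaluated under the same five-fold protocol so that the reported UA and WA are directly comparable.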

As shown in Table 4, the performance of the model varied depending on the specific values of the margin and the loss weight. When the margin was either too low or too high, the improvement in accuracy was less consistent, which suggests that an appropriate balance is needed between encouraging frame clustering and maintaining sufficient separation. A similar trend was observed for the loss weight. When the loss weight was increased beyond a certain level, the performance did not continue to improve and slightly decreased in some cases, indicating that excessive weighting of EAL can interfere with the optimization of other objectives. Among all the tested combinations, the setting with a margin of 0.8 and a loss weight of 0.05 produced the most stable and consistent results.

To further validate the robustness of the proposed framework, an additional ablation study was conducted by varying the FUAL loss weight while fixing the best EAL parameters (margin = 0.8, loss weight = 0.05). The results are summarized in Table 5.

As shown in Table 5, the model achieved the highest performance when the FUAL loss weight was 0.05. Increasing the loss weight beyond this value slightly degraded performance, indicating that an excessive emphasis on FUAL can dominate the optimization and reduce generalization. These findings suggest that moderate weighting of FUAL effectively complements EAL, maintaining stable alignment between frame- and utterance-level representations.

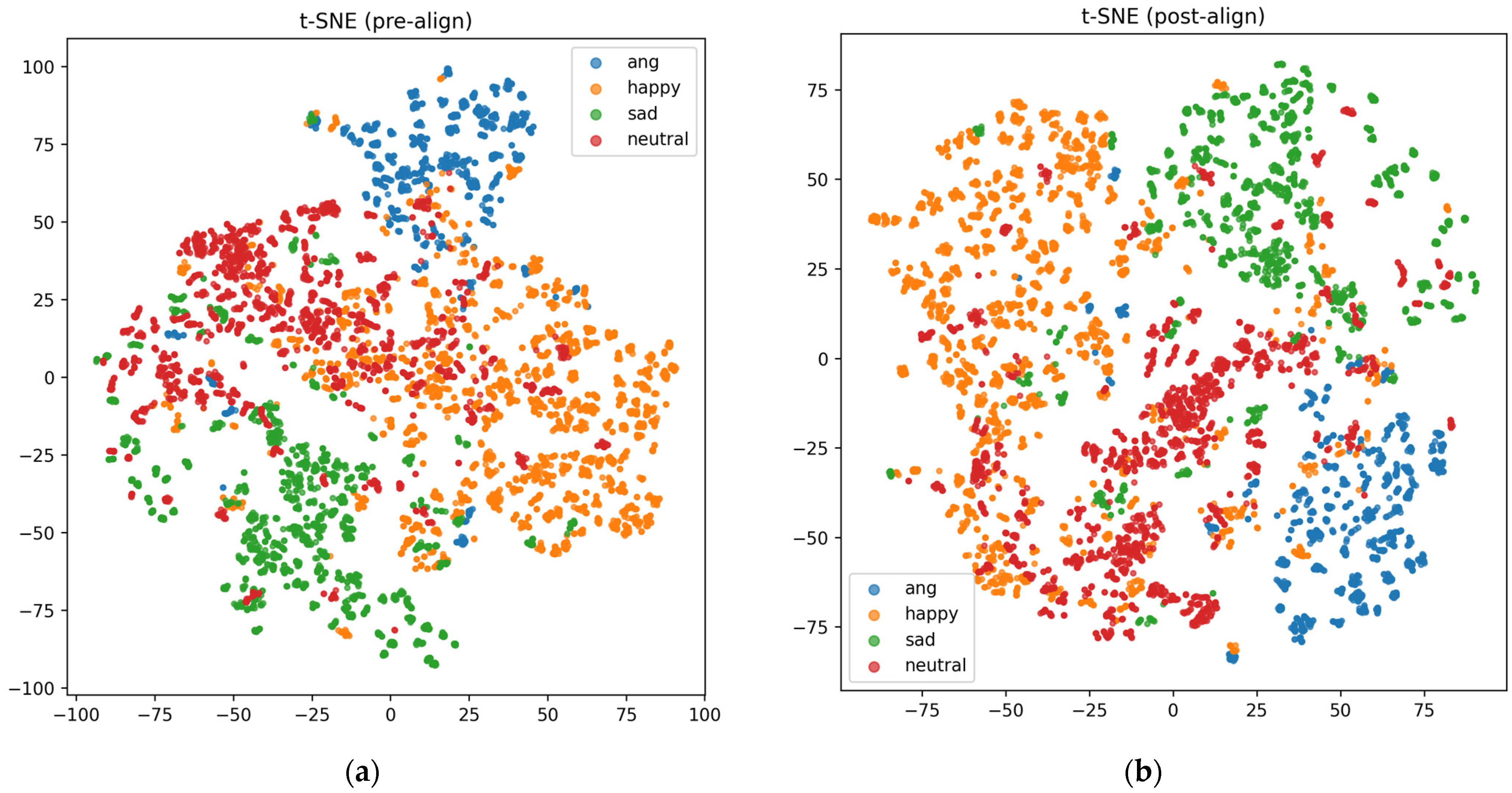

4.4.3. Visualization Analysis

To examine the effect of EAL and FUAL on aligning emotional representations across entire utterances, we visualized the learned embeddings using t-SNE [26].

Comparing Figure 2a,b suggests that in Figure 2b the utterance-level emotion distributions are more clearly differentiated, with a relatively clear boundary around the CLS token. This implies that the proposed loss functions help improve the separation and organization of emotion representations at the utterance level, indicating their potential in structuring the emotional feature space.

4.4.4. Confusion Matrix

Figure 3 shows the confusion matrix of the final proposed model evaluated using 5-fold cross-validation. Each cell indicates the percentage of utterances of a given true emotion class that were assigned to each predicted class, making the model’s tendency to misclassify particular emotions visible. The results indicate that while the model achieves high accuracy for the Angry and Sad classes, it exhibits relatively lower accuracy for the Happy and Neutral classes, showing notable confusion between these two emotion categories.
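A row-normalized confusion matrix of this kind can be computed as in the following sketch, where each row corresponds to a true class and sums to 100%. The function name and the integer label encoding are assumptions made for the example.

```python
def confusion_percent(y_true, y_pred, num_classes):
    """Row-normalized confusion matrix in percent.

    Entry [i][j] is the percentage of samples with true class i
    that were predicted as class j.
    """
    counts = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        counts[t][p] += 1
    rows = []
    for row in counts:
        n = sum(row)
        # Rows for classes without samples are left at zero.
        rows.append([100.0 * c / n if n else 0.0 for c in row])
    return rows
```

With this normalization, the diagonal entries are the per-class accuracies, so their mean equals UA.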

As shown in Table 1, both Happy and Neutral classes contain significantly more utterances than other emotions, which may lead to class imbalance and bias the model toward these categories. In addition, the boundary between Happy and Neutral emotions is often ambiguous in conversational speech, making them difficult to distinguish clearly. These factors together likely contribute to the observed confusion between the two emotions.

4.4.5. Comparison with Other SER Systems

As shown in Table 6, the proposed model achieved a UA of 75.3% and a WA of 74.2%, demonstrating clear performance improvements over existing models such as SMW-CAT and ShiftCNN. Moreover, the proposed method achieved comparable performance to the state-of-the-art FLEA model, highlighting its effectiveness.

Importantly, our approach requires only utterance-level labels for training and does not rely on frame-level annotations. This demonstrates that the model can effectively achieve high SER performance without complex modules or additional labeling efforts, making it both efficient and scalable for practical applications.

4.4.6. Cross-Dataset Evaluation

To further assess the generalizability of the proposed method, additional experiments were conducted on two benchmark datasets, CREMA-D [31] and RAVDESS [32]. The CREMA-D dataset contains 7442 audiovisual clips of six emotions (happy, sad, neutral, anger, disgust, and fear) recorded by 91 actors [31]. The RAVDESS dataset includes 1440 utterances from 24 actors expressing eight emotions (calm, happy, sad, angry, fearful, surprise, disgust, and neutral) [32].

As shown in Table 7, the proposed CLS-based model achieved higher UA and WA scores than the baseline model on both datasets, confirming consistent performance improvements. These results demonstrate that the proposed method maintains stable recognition capability across different emotional corpora, validating its robustness beyond the IEMOCAP dataset.

5. Discussion

This study aimed to address inconsistencies between frame-level and utterance-level representations in SER. To this end, we introduced two auxiliary loss functions: EAL and FUAL. EAL promotes clustering of frames belonging to the same emotion class around the CLS while separating frames of different classes, whereas FUAL encourages consistency between frame-level and utterance-level predictions, preventing the model from over-focusing on a few dominant frames. The CLS also plays a key role in integrating frame information into a coherent utterance-level representation, providing a stable reference point for subsequent alignment learning.

The results in Table 3 show that incorporating the CLS into the pooling mechanism provided modest improvements over conventional self-attention pooling. Adding EAL or FUAL individually led to further gains, while combining both losses achieved the best overall performance, demonstrating their complementary effects. t-SNE visualizations confirmed this by showing clearer and more distinct emotion clusters when both losses were applied, indicating that the proposed framework reshapes the representation space to better capture emotional structures.

The ablation analysis further highlighted the importance of carefully tuning alignment-related hyperparameters such as the margin and loss weights. These parameters influenced training stability and overall recognition performance, emphasizing the need for empirical balance to achieve stable and effective optimization.

The confusion analysis indicated that the Angry and Sad classes were recognized accurately, whereas notable confusion remained between the Happy and Neutral categories, whose boundary is often ambiguous in conversational speech. In addition, comparison with previous methods demonstrated that our approach outperforms prior models such as SMW-CAT and ShiftCNN while reaching performance levels comparable to the state-of-the-art FLEA model. Importantly, this was achieved without requiring frame-level annotations, highlighting the practicality and scalability of our method.

To further validate its robustness, additional experiments were conducted on the CREMA-D and RAVDESS datasets. The proposed method maintained consistent performance across these benchmarks, confirming its generalizability across different emotional speech conditions.

Future studies will aim to further assess adaptability using more diverse and spontaneous speech data.

In summary, integrating EAL and FUAL within a CLS-based framework effectively improves both alignment and representation consistency in SER. This approach provides a simple yet effective way to enhance emotion recognition using only utterance-level labels, supporting its potential for broader practical applications.

6. Conclusions

This study proposed a CLS-based framework that integrates two auxiliary loss functions, EAL and FUAL, to address inconsistencies between frame-level and utterance-level representations in SER. The proposed method leverages a two-stage training strategy, where the wav2vec 2.0 backbone is first fine-tuned to obtain stable frame embeddings, and then EAL and FUAL are jointly optimized within a CLS-based self-attention pooling mechanism.

Through experiments on the IEMOCAP dataset, we demonstrated that the combination of EAL and FUAL effectively improves alignment and representation consistency, leading to stable training and higher recognition accuracy without requiring frame-level annotations. Additional experiments on the CREMA-D and RAVDESS datasets further confirmed that the proposed approach maintains consistent performance across different corpora, supporting its generalizability to varied emotional speech conditions. Furthermore, comparison with existing SER systems showed that the proposed method achieves performance comparable to recent state-of-the-art models while maintaining a simpler and more efficient architecture.

In conclusion, this framework provides a practical and interpretable solution for enhancing SER using only utterance-level labels, achieving a good balance between model simplicity, scalability, and recognition performance. Future work will extend this approach to multimodal emotion recognition by incorporating visual or textual modalities, and will explore its application to more diverse and spontaneous speech data to evaluate adaptability in real-world scenarios.