5.1. Training and Experiment Setup

For performance evaluation, experiments are conducted using the CybORG environment, described in detail in Section 3. CybORG provides a controlled yet realistic setting for adversarial cyber operations, enabling the training and assessment of defensive agents under diverse attack scenarios. The environment incorporates multiple types of agents and network configurations, allowing systematic benchmarking of defensive strategies.

In our framework, PPO is employed for RL-based training, as it has demonstrated superior stability and effectiveness over alternative methods such as Deep Q-Networks when designing defensive cyber agents [18]. For the SL stage, multiple algorithms were explored, including Random Forests, XGBoost, and neural models. Among these, the Random Forest classifier achieved the most consistent performance following hyperparameter optimization. Consequently, the results presented in this section focus on blue agents trained via the proposed RL–SL framework with PPO for RL training and Random Forest for SL distillation.

Evaluation metrics are aligned with the reward structures defined in CybORG, which are designed to quantify the effectiveness of defensive actions in mitigating adversarial activity. Specifically, the blue agent receives favorable rewards for critical defensive outcomes, as summarized in Table 4. Conversely, relatively large negative rewards are assigned when the red team achieves key objectives, such as escalating privileges or compromising operational hosts, as shown in Table 5. Together, these reward mechanisms capture both the benefits of effective defense and the costs of adversarial success, providing a balanced and interpretable basis for evaluating defensive agent performance.

The following subsections present detailed evaluation results for both adversary-specific defensive agents and the generic defensive agent described in Section 4. The following agents are evaluated:

- and : Defensive agents trained using RL to counter the and attacking agents, respectively.
- and : Defensive agents trained using the proposed framework specifically for the and attacking agents.
- and : Defensive agents trained with the proposed framework, where the observation space is augmented with the immediate reward received, tailored for the and attacking agents.
- and : Defensive agents trained using the proposed framework, with the observation space enhanced to include both the reward from the previous action and the action itself, designed for the and attacking agents.
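The two augmented observation variants listed above can be illustrated with a minimal sketch. The function name `augment_observation`, the flat list representation of an observation, and the one-hot encoding of the previous action are all assumptions made for illustration; the excerpt does not specify how the reward and action are encoded.

```python
from typing import List, Optional, Sequence


def augment_observation(
    obs: Sequence[float],
    reward: Optional[float] = None,
    prev_action: Optional[int] = None,
    num_actions: int = 0,
) -> List[float]:
    """Return a copy of obs, optionally extended with a reward and a previous action.

    The previous action is appended as a one-hot vector of length num_actions
    (an assumed encoding, not taken from the paper).
    """
    features = list(obs)
    if reward is not None:
        # Variant with reward augmentation: append the scalar reward.
        features.append(float(reward))
    if prev_action is not None:
        if not 0 <= prev_action < num_actions:
            raise ValueError("prev_action must lie in [0, num_actions)")
        # Variant with reward and action augmentation: append the one-hot action.
        one_hot = [0.0] * num_actions
        one_hot[prev_action] = 1.0
        features.extend(one_hot)
    return features
```

Calling the function with only `obs` leaves the observation unchanged, which corresponds to the non-augmented agents.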

The proposed framework integrates RL with SL, with each trained defensive agent playing against its designated attacking counterpart. During these interactions, the agent records observation–action, observation–reward–action, or observation–previous-action–reward–action tuples to support its learning process. Each defensive agent is trained over 2000 episodes, with each episode spanning 100 steps. To ensure high-quality learning, data is retained from only the top

of the highest-performing episodes, optimizing the SL training process for defensive agents. Defensive agents are trained and evaluated on the network topology shown in Figure 1. Each defensive agent is evaluated against attacking agents for 100 episodes of 100 steps each. The mean total episode reward is used as the performance metric, along with the

confidence interval (CI).
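The episode-selection step described above can be sketched as follows. This is an illustrative sketch only: the names `select_top_episodes` and `to_supervised_pairs`, the `(observation, action, reward)` step layout, and the `top_fraction` parameter are hypothetical, and the fraction actually used by the authors is not reproduced here.

```python
from typing import List, Sequence, Tuple

Step = Tuple[Sequence[float], int, float]  # (observation, action, reward)
Episode = List[Step]


def select_top_episodes(episodes: List[Episode], top_fraction: float) -> List[Episode]:
    """Keep the best-scoring fraction of episodes, ranked by total episode reward."""
    if not 0.0 < top_fraction <= 1.0:
        raise ValueError("top_fraction must lie in (0, 1]")
    ranked = sorted(episodes, key=lambda ep: sum(r for _, _, r in ep), reverse=True)
    keep = max(1, int(len(ranked) * top_fraction))  # always keep at least one episode
    return ranked[:keep]


def to_supervised_pairs(episodes: List[Episode]) -> Tuple[List[List[float]], List[int]]:
    """Flatten the retained episodes into (features, labels) for a classifier."""
    features: List[List[float]] = []
    labels: List[int] = []
    for episode in episodes:
        for obs, action, _reward in episode:
            features.append(list(obs))
            labels.append(action)
    return features, labels
```

The resulting lists could then be passed to any classifier, for example the `fit(X, y)` method of scikit-learn's `RandomForestClassifier`, in line with the Random Forest model named in this section.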

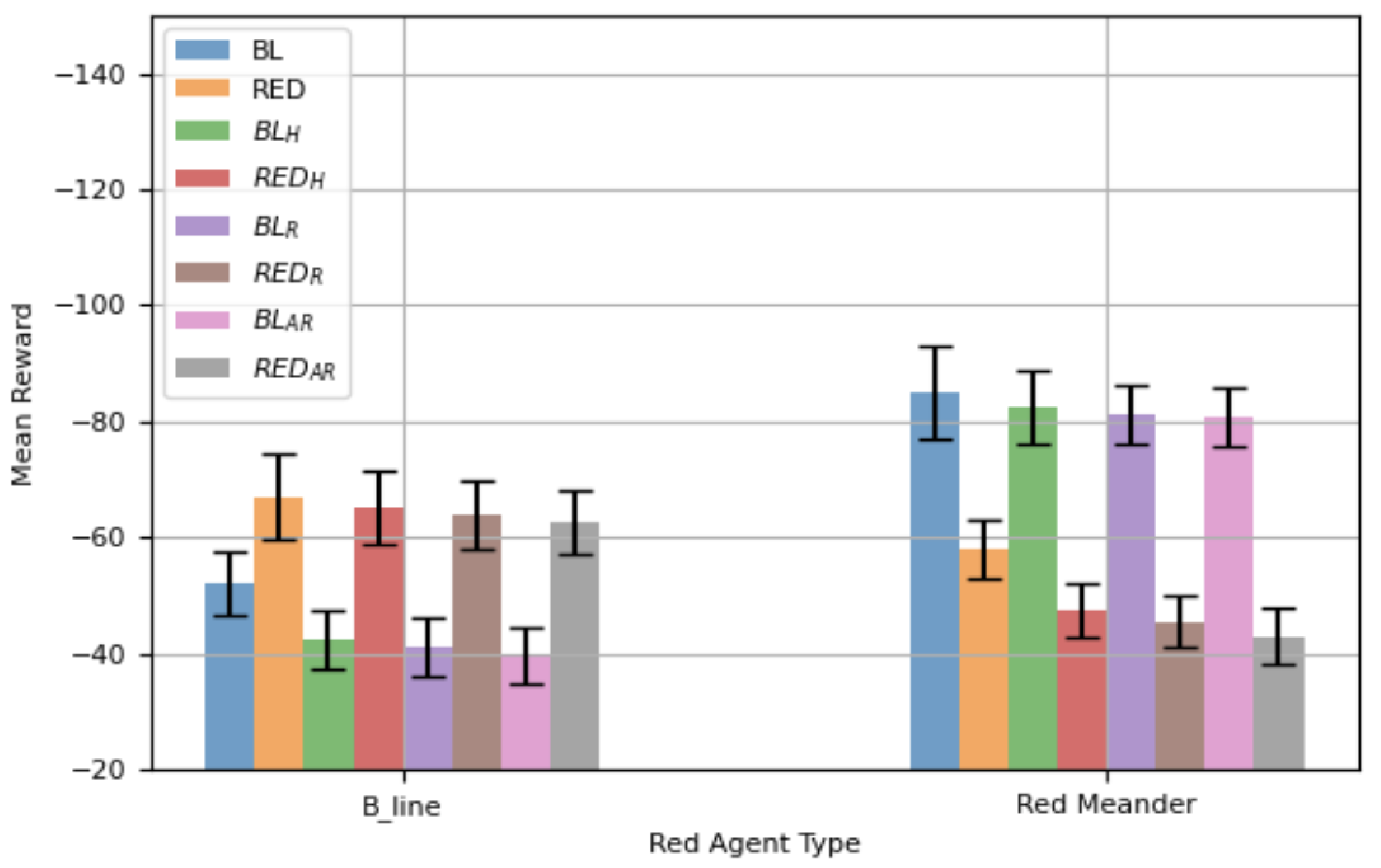

Figure 3 presents the mean total reward along with the

CI for different defensive agents evaluated against

and

. When

is attacking, defensive agents specifically trained against

using the proposed framework achieve a statistically significant improvement in mean reward compared to

(the agent trained solely using RL for

). Additionally, defensive agents trained using the proposed framework with augmented observations also outperform

and demonstrate better performance than

. However, while their improvement over

is evident, it is not statistically significant. Conversely, a defensive agent trained against

performs poorly against

, as it was not trained for that specific attack strategy. Similarly, when the network is attacked by

, defensive agents trained specifically against

using the proposed framework achieve a statistically significant performance boost compared to the RL-trained agent designed for

. However, a defensive agent trained for

struggles against

, reinforcing the importance of training against the specific attack type.

The proposed framework comes with a mechanism for training a generalized defensive agent, here referred to as

(since reward and action augmentation showed similar performance above, results for this agent are reported without augmentation). The performance of

is evaluated against another generalized agent,

, which differs in its approach to SL. While

utilizes data from only the top

of highest-performing episodes,

leverages the entire dataset collected from interactions between defensive and attacking agents. Additionally, for comparison we consider an alternative strategy where a hierarchical agent can accurately predict the attack type and deploy the most appropriate defensive agent accordingly; here, the agent is referred to as

.
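The hierarchical baseline described above can be pictured as a simple dispatcher. The sketch below is an assumption-laden illustration: the class name `HierarchicalDefender`, the `identify_attacker` callable, and the string labels for attack types are hypothetical, and each policy is reduced to a function from observation to action index.

```python
from typing import Callable, Dict, Sequence

Policy = Callable[[Sequence[float]], int]  # maps an observation to an action index


class HierarchicalDefender:
    """Route each observation to the specialist trained for the identified attacker."""

    def __init__(
        self,
        identify_attacker: Callable[[Sequence[float]], str],
        specialists: Dict[str, Policy],
    ) -> None:
        self.identify_attacker = identify_attacker  # assumed to be accurate
        self.specialists = specialists

    def act(self, obs: Sequence[float]) -> int:
        attacker = self.identify_attacker(obs)
        if attacker not in self.specialists:
            raise KeyError(f"no specialist registered for attacker {attacker!r}")
        return self.specialists[attacker](obs)
```

By contrast, a generalized agent is a single policy trained on pooled data, so it needs no attack-type identification at run time.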

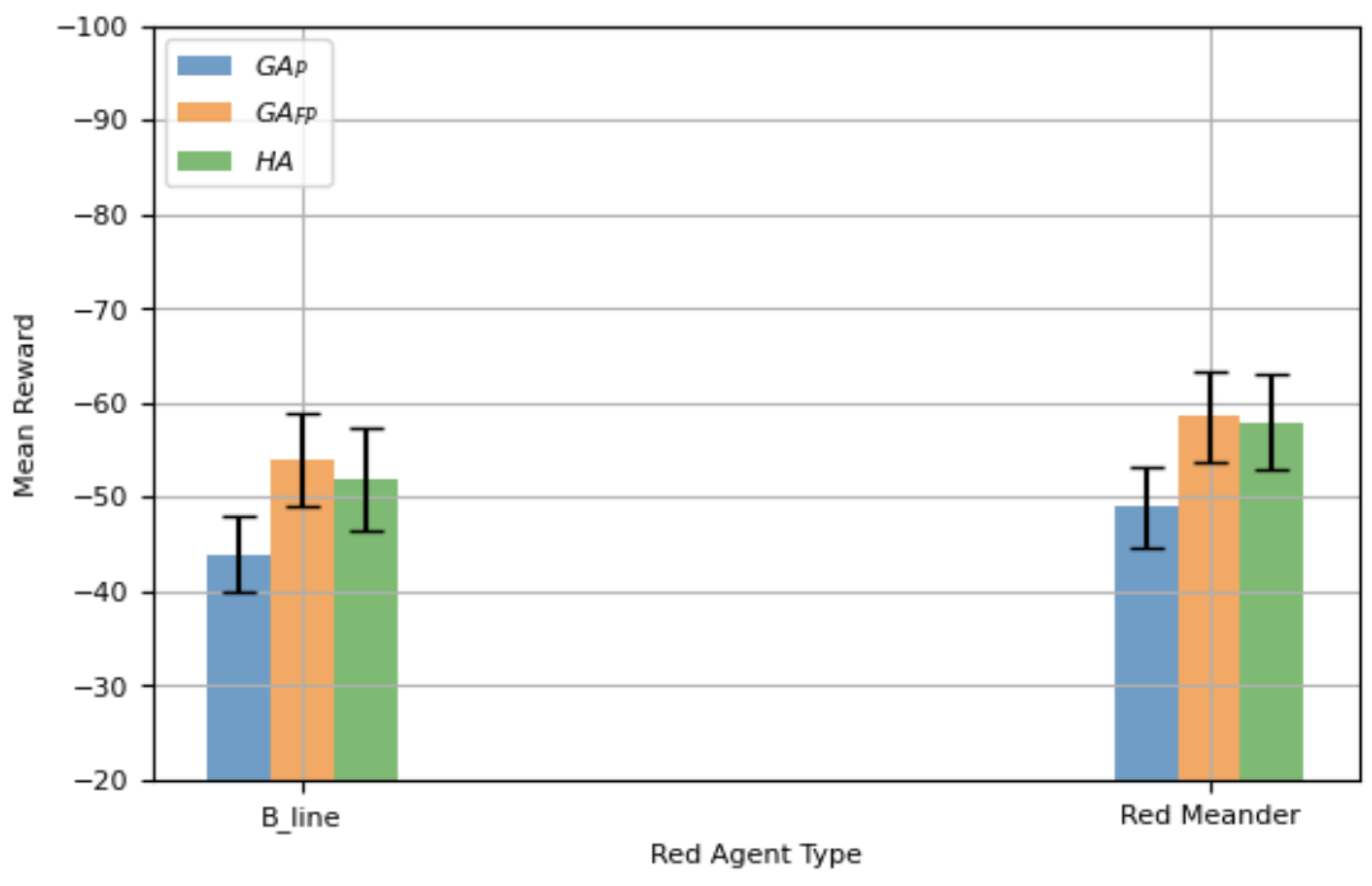

Figure 4 displays the mean total reward of generalized defensive agents with a

CI.

outperforms all competitors, including

which can accurately deploy a specialized agent based on the attack type. In contrast,

exhibits weaker performance due to its reliance on data from both high- and low-performing episodes for SL.

To further validate the robustness of the proposed framework, we repeat the experiments with the episode length reduced from 100 to 50 steps. Halving the episode length lets us assess whether the performance trends observed with longer episodes remain consistent over a shorter temporal horizon. This evaluation is particularly important for scenarios where decision making may need to occur more rapidly, and thus the defensive agent must operate effectively with less information accumulated over an episode.

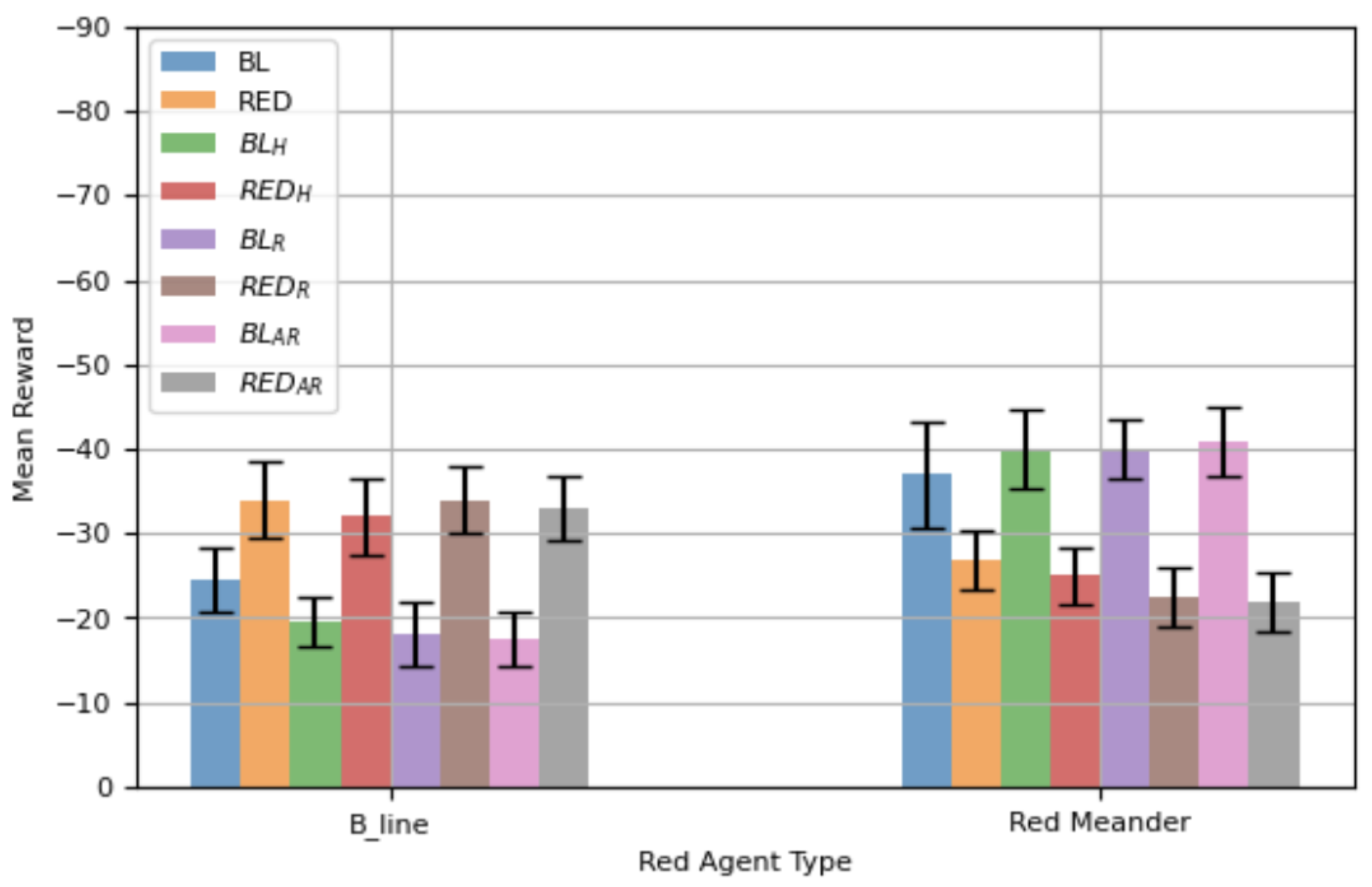

Figure 5 reports the mean total reward with 95% CIs for all variants of the defensive agent when episodes are shortened to 50 steps. When

is attacking, the agents trained through the proposed framework (

,

,

) perform comparably, as their CIs substantially overlap, and all statistically significantly outperform

.

and

achieve slightly better mean rewards compared to

, but these improvements are not statistically significant. In contrast, the RED-based agents (RED,

,

,

) perform noticeably worse against

, with their mean rewards being more negative and CIs not overlapping with those of the BL family. This indicates a statistically significant degradation when defenders trained against red-oriented scenarios are deployed against

.

When the attacker is , the trend reverses. The BL-based agents now perform poorly, while the RED variants trained with the proposed framework (, , and ) achieve substantially better mean rewards. Their CIs do not overlap with those of the BL family, confirming that these improvements are statistically significant. In addition, these framework-trained RED agents also outperform the plain RED agent, which highlights the effectiveness of the proposed training approach under more complex adversarial behavior.
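The CI-based comparison used in these paragraphs can be sketched as follows. The sketch assumes a normal approximation with `z = 1.96` for a 95% interval; the function names `mean_ci` and `intervals_overlap` are hypothetical, and the excerpt does not state how the authors computed their intervals.

```python
import math
import statistics
from typing import Sequence, Tuple

Interval = Tuple[float, float, float]  # (mean, lower bound, upper bound)


def mean_ci(rewards: Sequence[float], z: float = 1.96) -> Interval:
    """Mean total episode reward with a normal-approximation confidence interval."""
    if len(rewards) < 2:
        raise ValueError("at least two episodes are needed to estimate spread")
    mean = statistics.fmean(rewards)
    # Standard error of the mean from the sample standard deviation.
    sem = statistics.stdev(rewards) / math.sqrt(len(rewards))
    return mean, mean - z * sem, mean + z * sem


def intervals_overlap(a: Interval, b: Interval) -> bool:
    """True when the two [lower, upper] ranges share at least one point."""
    return a[1] <= b[2] and b[1] <= a[2]
```

Non-overlapping intervals indicate a significant difference at the chosen level, whereas overlapping intervals alone do not establish that a difference is absent.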

The comparison between Figure 5 and the 100-step evaluation in Figure 3 reveals consistent findings across both temporal horizons. In both cases, agents trained specifically against a given attack using the proposed framework achieve statistically significant improvements on that same attack, while generalization across attack types remains limited. The modest, non-significant advantage of

and

over

at 50 steps mirrors the 100-step results, where observation reward augmentation improves

performance but does not yield statistically significant gains over the best

. The same is true for the agents trained for

. Overall, the consistency of these results across both episode lengths demonstrates the robustness of the proposed framework.

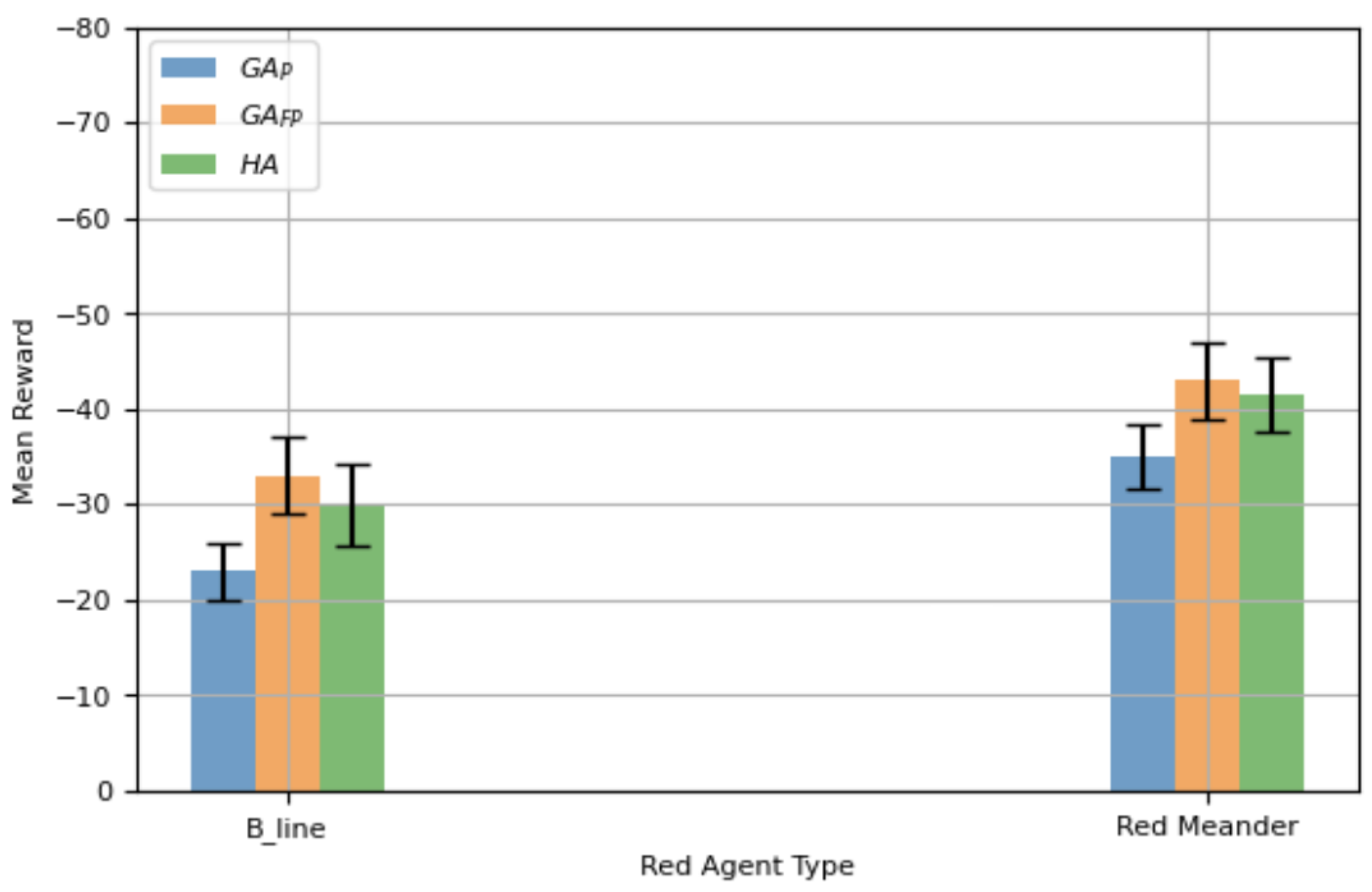

The evaluation of generalized defensive agents is likewise extended to 50-step episodes to examine whether their performance remains consistent over the shorter episode length. Figure 6 presents the mean reward with a 95% CI for the same set of agents. The observed trends closely mirror those reported for the 100-step experiments. Specifically,

continues to outperform both

and

, despite

being able to identify the attack type and deploy a specialized agent accordingly.

again exhibits weaker performance due to its reliance on both high- and low-performing episodes for SL, which dilutes the quality of training data. The consistent superiority of

across both 100-step and 50-step settings demonstrates that the proposed framework is robust to changes in episode length and is effective in training a single generalized agent capable of defending against diverse attack strategies.