To comprehensively evaluate the performance of the proposed framework in terms of catastrophic forgetting mitigation, minority class recognition, and robust learning under label scarcity, we conduct extensive experiments on multiple real-world intrusion detection datasets. The effectiveness of our method is benchmarked against representative baselines across a variety of metrics, including classification accuracy, forgetting rate, robustness to class imbalance, and training efficiency.

4.1. Datasets and Evaluation Metrics

We select three widely used intrusion detection datasets, UNSW-NB15 [34], CIC-IDS2017 [35], and TON_IoT [36], to simulate realistic multi-task, label-incomplete, and heterogeneous network scenarios. Together, these datasets offer a diverse and challenging testbed for evaluating the proposed method’s adaptability and robustness.

UNSW-NB15 dataset [34]: This network intrusion detection benchmark was released by the University of New South Wales, Australia, in 2015 to overcome the limitations of traditional datasets (such as KDD99 and NSL-KDD) in attack types and network environments. It contains about 2.5 million network traffic records that mix real campus network traffic with simulated attack data, covering normal traffic and 9 types of modern network attacks (such as vulnerability exploits, DoS, worms, and Web attacks). It provides 49 fine-grained network traffic features, including basic flow features, traffic statistics features, and content features. Compared with earlier datasets, UNSW-NB15 offers greater diversity of attack types and better data balance, and it better reflects the current network threat landscape. However, some attack categories still have too few samples, which may limit a model’s generalization when detecting those specific attacks.

CIC-IDS2017 dataset [35]: This comprehensive network intrusion detection benchmark was released by the Canadian Institute for Cybersecurity in 2017 and is one of the most advanced benchmarks in current intrusion detection research. It simulates 15 types of modern network attacks (including brute-force cracking, DDoS, Web attacks, penetration attacks, and Heartbleed vulnerability attacks) in a real network environment while collecting normal background traffic, yielding a large-scale dataset of about 2.8 million network flow records. Compared with earlier datasets, CIC-IDS2017 provides richer network traffic features, including flow duration, packet statistics, traffic behavior, and TLS/SSL encryption features, which reflect the characteristics of modern network threats more comprehensively. The dataset is organized chronologically, which supports the study of attack evolution patterns and makes it particularly suitable for deep learning-based intrusion detection methods. Its main challenges are its large size and the imbalanced samples of some attack categories, so computing resource limits and data balance must be considered in practice. CIC-IDS2017 is often used together with datasets such as UNSW-NB15 and provides an important reference for evaluating intrusion detection systems in modern complex network environments.

TON_IoT dataset [36]: This dedicated IoT security dataset was developed by the Cyber Security Team of the University of New South Wales, Australia, and is one of the most advanced benchmarks in IoT threat detection. It builds a real experimental environment containing smart home devices and industrial control systems, simulates a variety of typical attacks against IoT (such as device hijacking, data leakage, and ransomware), and collects about 22 million multimodal security records covering network layer traffic (NetFlow/PCAP) and host layer logs (Windows/Linux event logs). Compared with traditional network security datasets, the main advantage of TON_IoT is that it is designed specifically for IoT security scenarios. It includes protocol-level attacks (such as MQTT spoofing and CoAP flooding) and provides detailed attack stage annotations (reconnaissance, intrusion, and lateral movement), supporting end-to-end attack behavior analysis. Network traffic and host logs are recorded jointly, which provides unique data support for studying cross-layer attack detection methods. Its main challenges are its large size and the imbalanced samples of some attack categories, so computing resource optimization and data balancing must be considered in practice. TON_IoT is often used together with datasets such as Bot-IoT [37], provides an important reference for evaluating intrusion detection systems in IoT environments, and is particularly suitable for developing lightweight security models for resource-limited devices.

For each dataset, a standardized preprocessing pipeline was applied as follows:

Feature Selection and Normalization: We retained relevant numerical and categorical features for traffic statistics and flow behavior. Continuous features were normalized to [0, 1], and categorical features were one-hot encoded to reduce scale variance and stabilize training.

Handling Missing Values: Missing numerical values were replaced with the class mean, and missing categorical values with an “UNK” token to ensure consistent input dimensionality.

Label Harmonization: Attack labels were harmonized across datasets. Synonymous attack types were mapped to unified categories following established taxonomies [34,35] to mitigate labeling discrepancies.

Incremental Task Sequence Construction: Each dataset was partitioned into 5 sequential tasks based on chronological flow order, introducing new attack categories successively. To simulate blurred boundaries, 30–50% of samples were shared between consecutive tasks. Earlier tasks introduced 3–5 categories, while later tasks added 1–2, creating an imbalanced distribution.

Label Scarcity Simulation: We randomly sampled 1%, 5%, 10%, and 20% of each task as labeled data, preserving the class distribution, and treated the remainder as unlabeled.
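The normalization, encoding, and imputation steps above can be illustrated with a minimal, standard-library sketch. The function names, the toy columns, and the fallback to the global mean for a class with no observed values are assumptions made for illustration; they are not taken from the authors' pipeline.

```python
from collections import defaultdict


def impute_class_mean(column, labels):
    """Replace None entries with the mean of observed values of the same class.

    Assumption: a class with no observed value falls back to the global mean.
    """
    sums, counts = defaultdict(float), defaultdict(int)
    for value, label in zip(column, labels):
        if value is not None:
            sums[label] += value
            counts[label] += 1
    observed = [v for v in column if v is not None]
    global_mean = sum(observed) / len(observed)
    filled = []
    for value, label in zip(column, labels):
        if value is not None:
            filled.append(value)
        elif counts[label] > 0:
            filled.append(sums[label] / counts[label])
        else:
            filled.append(global_mean)
    return filled


def min_max_scale(column):
    """Rescale a numeric column to [0, 1]; a constant column maps to 0.0."""
    lo, hi = min(column), max(column)
    span = hi - lo
    return [0.0 if span == 0 else (v - lo) / span for v in column]


def one_hot(column, unk="UNK"):
    """One-hot encode a categorical column, mapping missing values to `unk`."""
    filled = [unk if v is None else v for v in column]
    vocab = sorted(set(filled))
    index = {token: i for i, token in enumerate(vocab)}
    rows = [[1 if index[v] == j else 0 for j in range(len(vocab))] for v in filled]
    return rows, vocab


# Toy example (hypothetical values).
labels = ["dos", "dos", "dos", "normal"]
durations = impute_class_mean([2.0, None, 4.0, 10.0], labels)
scaled = min_max_scale(durations)
encoded, vocab = one_hot(["tcp", None, "udp", "tcp"])
```

Imputing before scaling keeps the filled values inside the [0, 1] range, and the "UNK" token gets its own one-hot column so the input dimensionality stays fixed.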

As shown in Table 1, all three datasets are structured to simulate incremental intrusion detection scenarios, where new attack classes are introduced progressively across task rounds. Each dataset exhibits different characteristics: UNSW-NB15 provides a relatively balanced baseline; CIC-IDS2017 emphasizes temporal sequence and class confusion; and TON_IoT introduces device heterogeneity and distribution shift, making them ideal for evaluating CL under varying real-world conditions.

For each dataset, we define a sequence of incremental tasks, where each task introduces a new subset of attack classes. In the default setting, the labeled data for each task are limited to 10% of the total samples, while the remaining 90% are treated as unlabeled. This setup reflects realistic scenarios where labeled data are scarce and the model must rely on SSL techniques to leverage abundant unlabeled traffic.
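As a rough illustration of this setup, the sketch below groups samples into class-incremental tasks and then draws a class-stratified labeled subset. It is a simplification under stated assumptions: the helper names are hypothetical, at least one labeled sample is kept per class, and the chronological ordering and the sample overlap between consecutive tasks are not modeled.

```python
import random
from collections import defaultdict


def build_class_incremental_tasks(labels, classes_per_task):
    """Return one list of sample indices per task; task t holds classes_per_task[t]."""
    tasks = []
    for classes in classes_per_task:
        wanted = set(classes)
        tasks.append([i for i, y in enumerate(labels) if y in wanted])
    return tasks


def stratified_label_split(labels, ratio, seed=0):
    """Split indices into (labeled, unlabeled), labeling about `ratio` of each class."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for i, y in enumerate(labels):
        by_class[y].append(i)
    labeled = []
    for y in sorted(by_class):
        idx = by_class[y][:]
        rng.shuffle(idx)
        # Assumption: keep at least one labeled sample for every class.
        k = max(1, round(ratio * len(idx)))
        labeled.extend(idx[:k])
    labeled_set = set(labeled)
    unlabeled = [i for i in range(len(labels)) if i not in labeled_set]
    return sorted(labeled), unlabeled


# Toy task with three classes of very different sizes (hypothetical data).
task_labels = ["normal"] * 100 + ["dos"] * 50 + ["worm"] * 10
labeled_idx, unlabeled_idx = stratified_label_split(task_labels, ratio=0.10)
tasks = build_class_incremental_tasks(task_labels, [["normal", "dos"], ["worm"]])
```

With a 10% ratio the toy task yields 10, 5, and 1 labeled samples for the three classes, so the labeled subset preserves the class proportions of the full task.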

Table 2 illustrates the task-wise class distribution for the CIC-IDS2017 dataset under a 5-task incremental setting. Each task introduces a new set of attack types, with some tasks containing multiple classes to simulate realistic class overlap and confusion scenarios. This design allows us to evaluate the model’s ability to adapt to new threats while retaining knowledge of previously learned classes.

For quantitative assessment, we adopt the evaluation metrics listed in Table 3. Average Accuracy (AA) measures overall classification performance across tasks; accuracy is defined as the ratio of correctly predicted samples to total samples, i.e., $\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$, where $TP$, $TN$, $FP$, and $FN$ represent true positives, true negatives, false positives, and false negatives, respectively. Average Precision (AP) measures the precision across tasks; for a specific class, precision is defined as the ratio of true positive predictions to the total predicted positives, i.e., $\mathrm{Precision} = \frac{TP}{TP + FP}$. Average Recall (AR) measures the recall across tasks; for a specific class, recall is defined as the ratio of true positive predictions to the total actual positives, i.e., $\mathrm{Recall} = \frac{TP}{TP + FN}$. F1-Score (F1) balances precision and recall, calculated as the harmonic mean of precision and recall, i.e., $\mathrm{F1} = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$. Forgetting Measure (FM) quantifies the degree of catastrophic forgetting on previous tasks. Backward Transfer (BWT) assesses the influence of learning new tasks on old tasks. Imbalance Robustness (IR) evaluates performance consistency across imbalanced classes. Minority Recall (MR) focuses on the detection rate of minority classes. Top-k Error (Top-k) assesses multi-class confusion detection.
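The text does not spell out how AA, FM, and BWT are computed, so the sketch below assumes the definitions commonly used in the continual learning literature: `acc[i][j]` is the accuracy on task `j` after training on tasks `0..i`, FM averages the drop from the best earlier accuracy to the final accuracy, and BWT averages the change relative to the accuracy right after each task was learned. The matrix values are hypothetical.

```python
def average_accuracy(acc):
    """Mean accuracy over all tasks after training on the final task."""
    final = acc[-1]
    return sum(final) / len(final)


def forgetting_measure(acc):
    """Average drop from the best earlier accuracy to the final accuracy.

    Requires at least two tasks; entries acc[i][j] with i < j are ignored.
    """
    num_tasks = len(acc)
    drops = []
    for j in range(num_tasks - 1):
        best_earlier = max(acc[i][j] for i in range(j, num_tasks - 1))
        drops.append(best_earlier - acc[num_tasks - 1][j])
    return sum(drops) / len(drops)


def backward_transfer(acc):
    """Average change in accuracy on old tasks after learning all tasks.

    Negative values indicate forgetting; requires at least two tasks.
    """
    num_tasks = len(acc)
    deltas = [acc[num_tasks - 1][j] - acc[j][j] for j in range(num_tasks - 1)]
    return sum(deltas) / len(deltas)


# Hypothetical 3-task accuracy matrix (upper triangle unused, set to 0.0).
acc = [
    [0.90, 0.00, 0.00],
    [0.85, 0.88, 0.00],
    [0.80, 0.84, 0.86],
]
aa = average_accuracy(acc)      # about 0.833
fm = forgetting_measure(acc)    # about 0.07
bwt = backward_transfer(acc)    # about -0.07
```

In this toy matrix accuracy on each old task only decreases over time, so FM and BWT have the same magnitude with opposite signs; they differ when an old task's accuracy peaks after later training.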

4.3. Implementation Details

The proposed framework is implemented in PyTorch 1.13.1 with the following key hyperparameters:

Memory size: 5000 samples per task, dynamically adjusted based on the number of classes.

DP-Means clustering threshold: 0.5, controlling cluster granularity.

Uncertainty sampling: the top 20% of unlabeled samples with the highest entropy are selected.

Loss weights: separate coefficients balance the alignment losses and the meta-weighted loss.

Learning rate: 0.001 for the Adam optimizer, with a cosine annealing schedule.

Batch size: 128 for labeled data and 256 for unlabeled data.
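The entropy-based uncertainty sampling rule can be sketched as follows. This is a minimal illustration that assumes the model outputs a softmax probability vector per unlabeled sample; the function names and the toy probabilities are hypothetical.

```python
import math


def entropy(probs):
    """Shannon entropy (natural log) of one predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_most_uncertain(prob_rows, fraction=0.2):
    """Return indices of the top `fraction` of samples ranked by entropy."""
    k = max(1, math.ceil(fraction * len(prob_rows)))
    ranked = sorted(
        range(len(prob_rows)),
        key=lambda i: entropy(prob_rows[i]),
        reverse=True,
    )
    return ranked[:k]


# Hypothetical softmax outputs for five unlabeled flows.
predictions = [
    [0.98, 0.01, 0.01],
    [0.34, 0.33, 0.33],
    [0.70, 0.20, 0.10],
    [0.50, 0.50, 0.00],
    [0.90, 0.05, 0.05],
]
chosen = select_most_uncertain(predictions, fraction=0.2)
```

Here the second sample has a nearly uniform prediction and is the single sample selected at a 20% fraction.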

The model architecture consists of a feature extractor followed by a multi-layer perceptron head for classification. The attention mechanism is implemented using self-attention layers to capture critical fields in network traffic data.

The model is trained for 100 epochs per task, with early stopping based on validation accuracy to prevent overfitting. The memory buffer is updated after each task, retaining the most representative samples according to the DP-Means clustering and uncertainty sampling strategy.
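To make the memory update more concrete, the following is a minimal sketch of DP-Means clustering followed by picking the sample nearest to each centroid. It assumes the threshold (0.5) is applied to the squared Euclidean distance, as in the standard DP-Means formulation, and it omits the entropy-based component; how the authors combine the two criteria is not specified here, so all names and the toy points are illustrative.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def mean(points):
    """Coordinate-wise mean of a non-empty list of vectors."""
    return [sum(col) / len(points) for col in zip(*points)]


def dp_means(points, lam=0.5, max_iter=20):
    """Cluster points; a new cluster opens when the nearest centroid is farther than lam."""
    centroids = [mean(points)]
    assign = [0] * len(points)
    for _ in range(max_iter):
        changed = False
        for i, p in enumerate(points):
            dists = [sq_dist(p, c) for c in centroids]
            j = min(range(len(dists)), key=dists.__getitem__)
            if dists[j] > lam:
                centroids.append(list(p))
                j = len(centroids) - 1
            if assign[i] != j:
                assign[i] = j
                changed = True
        for j in range(len(centroids)):
            members = [points[i] for i in range(len(points)) if assign[i] == j]
            if members:
                centroids[j] = mean(members)
        if not changed:
            break
    # Drop clusters that ended up empty and renumber the rest.
    used = sorted(set(assign))
    remap = {old: new for new, old in enumerate(used)}
    return [centroids[j] for j in used], [remap[j] for j in assign]


def representatives(points, centroids, assign):
    """Index of the sample closest to each centroid (one exemplar per cluster)."""
    reps = []
    for j, c in enumerate(centroids):
        members = [i for i in range(len(points)) if assign[i] == j]
        reps.append(min(members, key=lambda i: sq_dist(points[i], c)))
    return reps


# Two well-separated toy groups (hypothetical feature vectors).
features = [[0.0, 0.0], [0.1, 0.0], [0.0, 0.1], [2.0, 2.0], [2.1, 2.0], [2.0, 2.1]]
centroids, assign = dp_means(features, lam=0.5)
exemplars = representatives(features, centroids, assign)
```

Unlike k-means, the number of clusters is not fixed in advance: a smaller threshold produces more, finer clusters, which matches the role described for the clustering threshold.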

For a fair comparison, we carefully designed the training protocols for all baseline methods and our proposed framework. Specifically, for methods that are originally designed for CL but do not support semi-supervised settings (e.g., iCaRL, IL2M), we followed the same incremental task protocol as our method but only used the labeled subset of each task, which was restricted to 10% of the total training data. This ensures that these methods operate under the same label-scarce condition as our framework.

For SSL methods that are not inherently continual (e.g., FixMatch), we trained the model with both labeled and unlabeled data within each task, but without the incremental extension across tasks. This provides a strong semi-supervised baseline while keeping the comparison fair in terms of data utilization.

For our proposed framework, we integrated both continual and semi-supervised components: Labeled data (10% per task) were used with supervised loss, while the remaining unlabeled data were incorporated via pseudo-labeling and consistency regularization.
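The two unlabeled-data terms can be sketched in a few lines. This is a generic illustration, not the authors' implementation: the 0.95 confidence threshold is a hypothetical value, and the consistency term is written as a mean squared error between predictions on two views of the same sample, which is one common choice among several.

```python
def pseudo_label(prob_rows, threshold=0.95):
    """Return (sample_index, predicted_class) pairs whose confidence meets the threshold."""
    selected = []
    for i, probs in enumerate(prob_rows):
        cls = max(range(len(probs)), key=probs.__getitem__)
        if probs[cls] >= threshold:
            selected.append((i, cls))
    return selected


def consistency_loss(probs_view_a, probs_view_b):
    """Mean squared difference between predictions on two views of one sample."""
    diffs = [(a - b) ** 2 for a, b in zip(probs_view_a, probs_view_b)]
    return sum(diffs) / len(diffs)


# Hypothetical predictions on three unlabeled flows.
unlabeled_probs = [
    [0.97, 0.02, 0.01],
    [0.50, 0.30, 0.20],
    [0.01, 0.96, 0.03],
]
accepted = pseudo_label(unlabeled_probs)                # [(0, 0), (2, 1)]
penalty = consistency_loss([0.9, 0.1], [0.7, 0.3])      # about 0.04
```

Only confident predictions become training targets, so the ambiguous second sample contributes through the consistency term alone.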

4.4. Results and Analysis

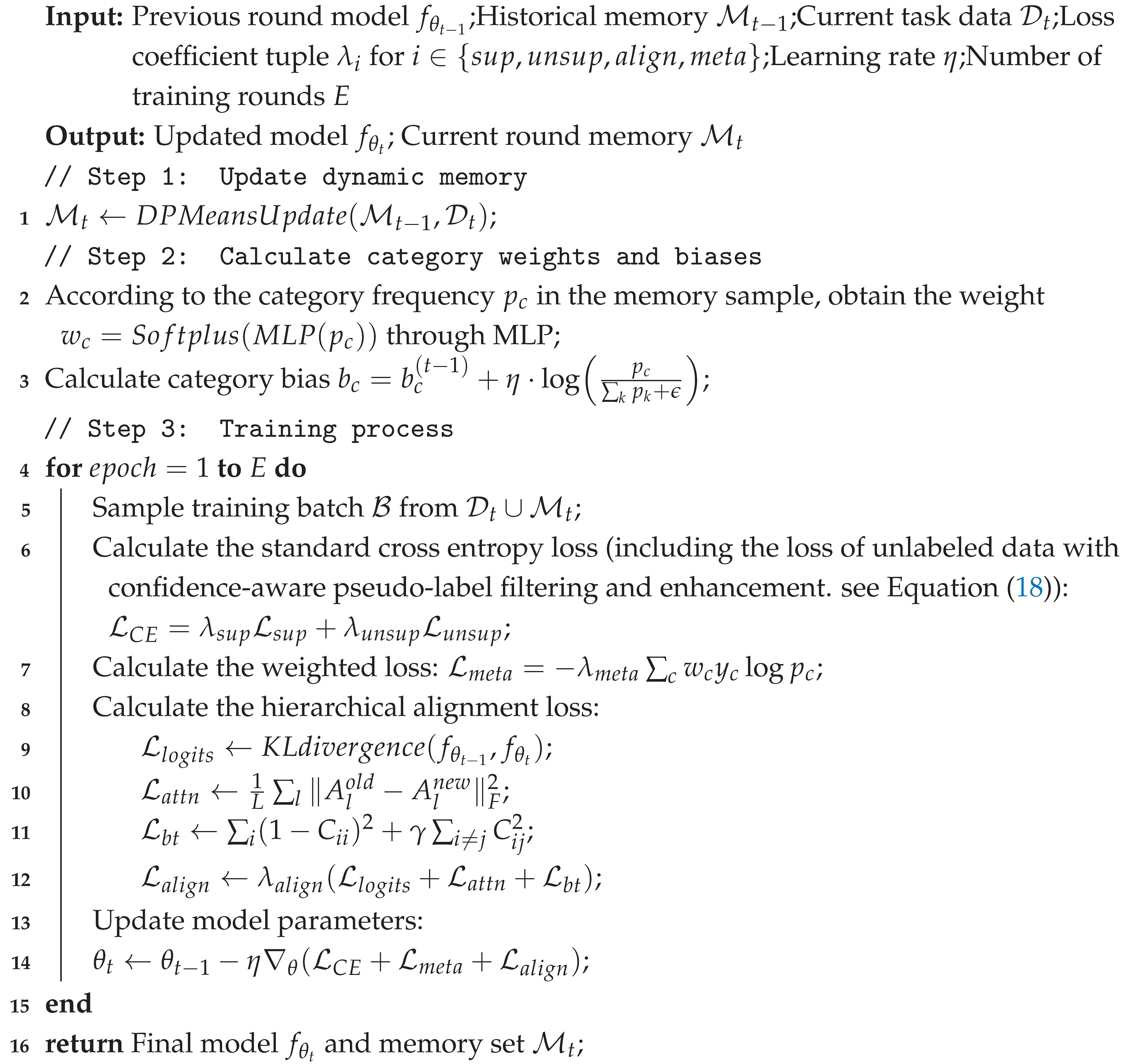

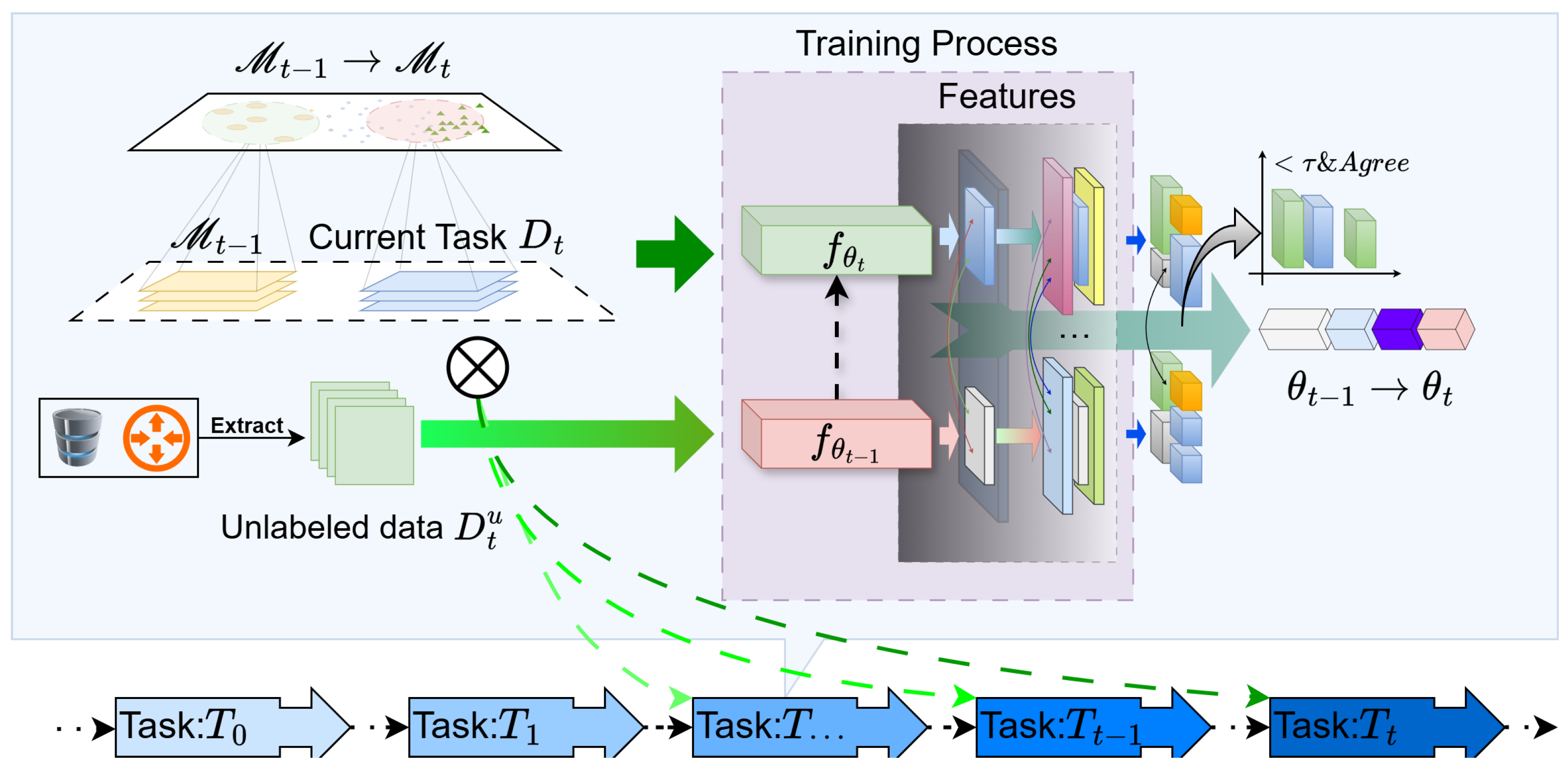

Figure 2 and Table 6 present the detailed experimental results on the UNSW-NB15, CIC-IDS2017, and TON_IoT datasets. The proposed framework achieves the best overall performance on all three benchmark intrusion detection datasets, which demonstrates its adaptability and robustness under realistic challenges, including multi-task CL, label scarcity, and class imbalance. Specifically, our framework attains classification accuracies of 85.6% on UNSW-NB15, 83.4% on CIC-IDS2017, and 81.7% on TON_IoT, each surpassing the strongest baseline method (PLCiL) by more than 5 percentage points. These improvements indicate the framework’s stable learning capability under dynamic task sequences and data distributions. To further illustrate the advantages of our method, we provide a detailed analysis of key metrics below.

AA: Our method consistently outperforms all baselines across datasets, achieving significant gains of 4-5% over the next best method (PLCiL). This highlights its superior ability to learn and retain knowledge across incremental tasks.

FM: The proposed framework exhibits the lowest forgetting rates, indicating effective mitigation of catastrophic forgetting. The multi-layer alignment and meta-weighted loss components contribute to this stability.

MR: Our approach achieves the highest recall on minority classes, demonstrating its robustness in handling class imbalance. The dynamic memory update and uncertainty-based sampling strategies play a crucial role in enhancing minority class detection.

IR: The framework shows superior performance consistency across imbalanced classes, with IR values significantly higher than baselines. This reflects its ability to generalize well even under skewed class distributions.

Top-3: The lowest Top-3 error rates indicate that our method effectively reduces multi-class confusion, which is critical in intrusion detection scenarios with overlapping attack characteristics.
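For reference, a Top-k error can be computed as the fraction of samples whose true class is not among the k highest-scoring predictions. The sketch below assumes that definition, with k = 3 matching the Top-3 results above; the scores and labels are hypothetical.

```python
def top_k_error(score_rows, true_labels, k=3):
    """Fraction of samples whose true class is missing from the k top-scoring classes."""
    errors = 0
    for scores, y in zip(score_rows, true_labels):
        top = sorted(range(len(scores)), key=lambda c: scores[c], reverse=True)[:k]
        if y not in top:
            errors += 1
    return errors / len(true_labels)


# Hypothetical class scores for four flows over five classes.
scores = [
    [0.50, 0.20, 0.10, 0.10, 0.10],
    [0.10, 0.10, 0.20, 0.25, 0.35],
    [0.40, 0.30, 0.20, 0.06, 0.04],
    [0.05, 0.05, 0.10, 0.30, 0.50],
]
truth = [0, 2, 4, 3]
top3 = top_k_error(scores, truth, k=3)
top1 = top_k_error(scores, truth, k=1)
```

In this toy case the Top-1 error is 0.75 while the Top-3 error is 0.25, meaning most misclassified flows still have the correct attack type among the top three candidates.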

Table 6 summarizes the key performance metrics across all datasets. A high Average Precision indicates a low false alarm rate (few benign samples are misclassified as attacks), and a high Average Recall indicates a low missed detection rate (few attacks are misclassified as benign). Our method achieves F1-Scores of 84.3% on UNSW-NB15, 82.1% on CIC-IDS2017, and 80.1% on TON_IoT. Because F1 is the harmonic mean of precision and recall, it is pulled toward the lower of the two, so these scores imply that both precision and recall are high. The high accuracy of our model is therefore not achieved by sacrificing one metric for the other but reflects a balance between minimizing false alarms and missed detections.
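The link between a high F1 and high precision and recall can be made quantitative. For a fixed F1, recall is smallest when precision equals 1 (and vice versa), which gives the lower bound F1 / (2 - F1) on either quantity. The sketch below applies this bound to the reported F1 values; the helper names are illustrative, and the bound follows only from the harmonic-mean definition, not from any additional result in the paper.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def min_precision_or_recall(f1):
    """Smallest value precision or recall can take for a given F1.

    Derived by setting the other quantity to 1 in F1 = 2PR / (P + R).
    """
    return f1 / (2.0 - f1)


reported_f1 = {"UNSW-NB15": 0.843, "CIC-IDS2017": 0.821, "TON_IoT": 0.801}
lower_bounds = {name: min_precision_or_recall(f1) for name, f1 in reported_f1.items()}
# UNSW-NB15: about 0.729, CIC-IDS2017: about 0.696, TON_IoT: about 0.668
```

Under this bound, neither precision nor recall can fall below roughly 0.67 on any of the three datasets.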

Figure 2a presents a detailed breakdown of performance metrics on the UNSW-NB15 dataset. Our framework not only excels in AA but also demonstrates significant reductions in FM and improvements in MR. The BWT metric indicates that learning new tasks has a less detrimental effect on previously learned tasks compared to baselines, showcasing the stability of our approach.

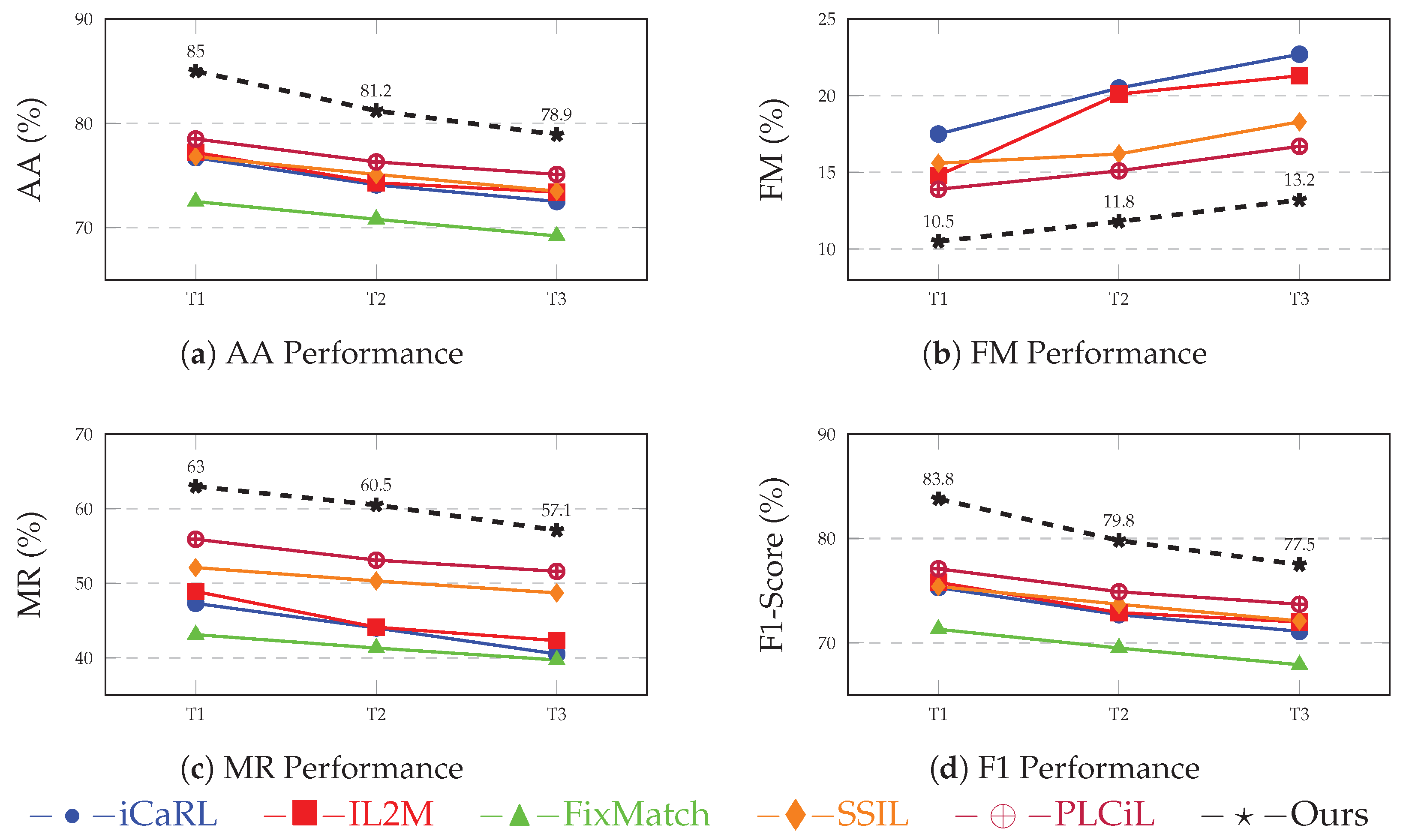

Figure 3a–d illustrate the performance trends of each method across different task sequences on the CIC-IDS2017 dataset. Our proposed framework consistently outperforms all baselines in AA, demonstrating its superior learning and retention capabilities. Additionally, it maintains the lowest FM values, indicating effective mitigation of catastrophic forgetting. The MR metric shows that our method excels in recognizing minority classes, a critical aspect in intrusion detection scenarios. Finally, the F1-Score trends further validate the robustness and reliability of our approach across sequential tasks. The detailed numerical results are provided in Table 7.

To comprehensively assess the stability and adaptability of our framework in diverse and complex deployment scenarios, we design an extended set of experiments along the four axes below.

Catastrophic Forgetting Analysis under Varying Task Sequence Lengths: Evaluate how the model retains prior knowledge as the number of sequential tasks increases, simulating long-term deployment.

Semi-Supervised Robustness under Varying Labeled Sample Ratios: Test the model’s sensitivity to supervision sparsity by adjusting the ratio of labeled samples available per task.

Memory-Efficient Knowledge Retention under Varying Buffer Sizes: Investigate the trade-off between memory constraints and knowledge preservation by altering the replay buffer capacity.

Stress Testing under Extreme Scenarios: Analyze model behavior under challenging conditions (e.g., unbalanced task orders, adversarial class overlap, and label scarcity) designed to simulate worst-case real-world settings.

These extended evaluations offer a holistic perspective on the proposed framework’s real-world readiness, demonstrating not only strong average-case performance but also resilience to domain shift and resource constraints.

Table 8 summarizes the performance variations in each method under different labeled sample ratios. As expected, all models demonstrate improved accuracy and MR with increasing supervision levels. However, the proposed framework consistently maintains strong recognition capability even under extremely limited supervision. Notably, at a 1% label ratio, our framework achieves an MR of 60.1%, significantly outperforming all baseline methods. This result highlights the framework’s ability to effectively leverage unlabeled data through its multi-level alignment and confidence-aware pseudo-labeling mechanisms. Moreover, the model demonstrates superior performance on both MR and IR across all settings, showcasing its resilience to class imbalance and label sparsity—two pervasive challenges in real-world intrusion detection.

These findings suggest that our framework is particularly well-suited for weakly supervised deployment scenarios, such as industrial control systems and IoT environments, where high-quality labels are scarce and costly to obtain.

Table 9 shows the catastrophic forgetting performance of the proposed method and the baseline methods under different memory capacity settings. As the memory capacity increases, the accuracy of all methods improves. Our method, however, maintains a low forgetting rate and a high minority class recall even with a small memory capacity, and it stores more representative class-center samples and boundary information, which makes it suitable for resource-constrained edge device scenarios.

Table 10 presents the performance of our proposed framework under varying degrees of class imbalance on the CIC-IDS2017 dataset. The experiments are designed to simulate real-world scenarios where certain attack types are significantly underrepresented compared to others, posing challenges for effective intrusion detection. In the balanced scenario (10:10:10), our method achieves an AA of 82.3%, demonstrating its ability to maintain high accuracy across all classes. The FM of 12.1 indicates a low level of catastrophic forgetting, while the MR of 62.5% highlights the model’s effectiveness in identifying minority classes. As the class imbalance increases to 10:50:50, the AA slightly decreases to 78.7%, but the model still maintains a robust performance with an FM of 13.4 and an MR of 56.3%. This suggests that our framework can adapt to moderate class imbalance without significant degradation in performance. In the more extreme imbalance scenario of 10:100:100, the AA further decreases to 73.4%, with an FM of 15.2 and an MR of 50.2%. Despite the challenges posed by severe class imbalance, our method continues to outperform baseline approaches, indicating its resilience and adaptability. Finally, in the most severe imbalance scenarios (50:100:100, 100:100:100, and 100:100:500), the AA drops to 61.1%, with corresponding increases in FM and decreases in MR. However, even under these challenging conditions, our framework demonstrates a commendable ability to detect minority classes, as evidenced by the MR of 38.5% in the 100:100:500 scenario. Overall, these results underscore the robustness of our proposed framework in handling class imbalance, making it a viable solution for real-world intrusion detection tasks where data distribution is often skewed.

Table 11 presents the performance of our proposed framework under various extreme scenarios designed to test its robustness and adaptability. Each scenario simulates challenging real-world conditions that an intrusion detection system might encounter. In the ESTE scenario, the model demonstrates strong stability with an AA of 75.8% and a low FM of 7.9, indicating its ability to retain knowledge over prolonged periods of minimal change. The MR of 62.1% further highlights its effectiveness in identifying rare attack types even when task changes are infrequent. In the FTC scenario, the model achieves an AA of 79.7% and an FM of 13.4, showcasing its capacity to quickly adapt to rapidly evolving threats. The MR of 59.9% indicates that while the model adapts quickly, it still maintains a reasonable level of performance on minority classes. The NICD scenario tests the model’s ability to handle uneven data flows, where it achieves an AA of 77.3% and an FM of 12.7. The high MR of 64.2% suggests that the model effectively manages class imbalance, ensuring that minority classes are not overlooked. Finally, in the EFLR scenario, the model maintains an AA of 78.5% and an FM of 12.1, demonstrating resilience to significant label noise. The MR of 60.3% indicates that the model can still identify minority classes despite fluctuations in label quality. Overall, these results underscore the robustness of our proposed framework across a range of challenging conditions, making it well-suited for deployment in dynamic and unpredictable network environments.

4.5. Ablation Study

To evaluate the contribution of each component within the proposed framework, we design a set of systematic ablation experiments. By removing or replacing specific modules, we observe the changes in key performance metrics such as overall accuracy, catastrophic forgetting, category robustness, and minority class recall to analyze the effectiveness and necessity of each sub-module in dynamic intrusion detection. All ablation experiments are conducted on the CIC-IDS2017 dataset. The task sequence is divided into 5 incremental rounds, with a 10% labeled sample ratio and a fixed memory buffer size of 5000. All other hyperparameters remain consistent with those used in the main experiments to ensure a fair comparison. The details of each configuration are introduced below.

Full Model: keep the complete proposed framework, including all components.

w/o Multi-Level Distillation: remove attention alignment and feature-level distillation.

w/o DP-Means Memory: replace the DP-Means + entropy-based memory selection with random sampling (reservoir sampling).

w/o Meta-Weighting: remove the category frequency-driven loss weight and bias adjustment, treating all classes equally.

w/o Semi-Supervised Learning: completely remove the unlabeled data and train only on labeled samples, thus disabling the SSL mechanism.

w/o Pseudo-Labeling: remove the confidence-aware pseudo-labeling mechanism.
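For context on the w/o DP-Means Memory configuration, reservoir sampling keeps a fixed-size buffer in which every item seen so far has the same probability of being retained. The sketch below is the classic single-pass version (Algorithm R); the function name, the seed, and the toy stream are illustrative, and a capacity of 5000 would correspond to the buffer size used in the ablation.

```python
import random


def reservoir_sample(stream, capacity, seed=0):
    """Keep a uniform random sample of `capacity` items from a stream in one pass."""
    rng = random.Random(seed)
    buffer = []
    for n, item in enumerate(stream):
        if n < capacity:
            # Fill the buffer with the first `capacity` items.
            buffer.append(item)
        else:
            # Replace a stored item with probability capacity / (n + 1).
            j = rng.randint(0, n)  # inclusive on both ends
            if j < capacity:
                buffer[j] = item
    return buffer


# Toy stream of 1000 flow identifiers with a buffer of 10 slots.
memory = reservoir_sample(range(1000), capacity=10)
```

Because selection ignores class membership and sample informativeness, rare attack classes are stored only in proportion to their frequency, which is the property this ablation contrasts with the clustering-based selection.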

Table 12 presents the results of the ablation experiments, highlighting the impact of each component on the overall performance of the proposed framework. The full model consistently outperforms all ablated versions across key metrics, demonstrating the effectiveness of each module in enhancing dynamic intrusion detection. Removing the multi-level distillation module results in a significant drop in AA to 79.2% and an increase in FM to 13.7, indicating that knowledge retention across tasks is compromised without this component. The MR also decreases to 59.6%, underscoring the importance of multi-level feature alignment in recognizing rare attack types. The absence of the DP-Means memory selection strategy leads to a further decline in performance, with AA dropping to 78.6% and FM rising to 15.1. This suggests that effective memory management is crucial for maintaining model stability and performance over time. Excluding the meta-weighting mechanism has a pronounced effect on IR, which falls to 0.71, and MR decreases to 52.4%. This highlights the role of class-aware optimization in addressing class imbalance and ensuring equitable learning across categories. The removal of the semi-supervised learning component results in the most substantial performance degradation, with AA falling to 74.9% and FM increasing to 17.4. The MR drops to 49.1%, indicating that the model struggles to learn effectively from limited labeled data without leveraging unlabeled samples. Finally, eliminating the pseudo-labeling mechanism leads to a decrease in AA to 81.3% and an increase in FM to 10.7, demonstrating that confident pseudo-labeling contributes significantly to the model’s learning process.
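To illustrate what frequency-driven class weighting does in general, the sketch below uses simple inverse-frequency weights inside a weighted cross-entropy. This is a stand-in chosen for illustration and is not the paper's meta-weighting mechanism, whose exact form (including the bias adjustment) is not given in this section; the function names and toy data are hypothetical.

```python
import math
from collections import Counter


def inverse_frequency_weights(labels):
    """Weight each class by N / (C * n_c), so rarer classes get larger weights."""
    counts = Counter(labels)
    total, num_classes = len(labels), len(counts)
    return {cls: total / (num_classes * cnt) for cls, cnt in counts.items()}


def weighted_cross_entropy(prob_rows, labels, weights):
    """Class-weighted negative log-likelihood, normalized by the total weight."""
    weighted_sum = sum(weights[y] * -math.log(p[y]) for p, y in zip(prob_rows, labels))
    return weighted_sum / sum(weights[y] for y in labels)


# Toy batch: 8 samples of the majority class (0) and 2 of a minority class (1).
batch_labels = [0] * 8 + [1] * 2
weights = inverse_frequency_weights(batch_labels)   # {0: 0.625, 1: 2.5}
uniform_probs = [[0.5, 0.5]] * len(batch_labels)
loss = weighted_cross_entropy(uniform_probs, batch_labels, weights)
```

With these weights each class contributes equally to the loss in aggregate (8 × 0.625 = 2 × 2.5), whereas treating all classes equally lets the majority class dominate the gradient.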