Assessing Student Engagement: A Machine Learning Approach to Qualitative Analysis of Institutional Effectiveness

Abstract

1. Introduction

- Q1. What does your institution do best to engage students in learning?

- Q2. What could your institution do to improve students’ engagement in learning?

- Q3. What are the positive elements of the online/blended learning experience that you want to keep when on-campus studies resume?

- Q4. In what way(s) could your higher education institution improve its support for you during the current circumstances?

2. Literature Review

3. Methodology

3.1. Topic Modelling

3.1.1. Latent Dirichlet Allocation (LDA)

3.1.2. Bi-Term Topic Modelling

3.1.3. BERTopic

3.2. Named Entity Recognition (NER)

3.3. N-Gram Analysis

4. Experiments

4.1. Dataset

4.2. Data Cleaning and Pre-Processing

- Elimination of Missing Values: A thorough dataset analysis revealed a significant number of missing values (unanswered entries) in both the multiple-choice questions (MCQs) and the open-ended questions. These missing values were therefore removed first.

- Filtering out Non-English Answers: Survey respondents were asked to answer in either English or Irish. Since the number of Irish responses was minimal (1,229 out of 145,970 total answers, about 0.8%), only English answers were retained for this study.

- Text Pre-Processing: Student responses varied in writing style, including case differences, use of non-ASCII characters, punctuation, alphanumeric words, and whitespace. The following steps were taken to normalise these style variations:

  - Case Normalisation

  - Removal of non-ASCII characters

  - Punctuation Removal

  - Removal of Alphanumeric Words

  - Extra Whitespace Removal

  - Lemmatisation

- Misspelling Correction: To identify topic themes reliably, misspelt words in the dataset must be corrected before any analysis. We therefore used the pyspellchecker package for Python (https://pypi.org/project/pyspellchecker/) to identify and correct potentially misspelt words. Certain terms, such as “Sulis”, “Kahoot”, and “Brightspace” (VLE names), and abbreviations like “PSU” (Postgraduate Students’ Union), may be incorrectly flagged as misspellings, necessitating further manual intervention. Additionally, some terms that should be a single word, like “e-tivities”, may be split. Since most of these terms are institution-specific and may not be recognised by the pre-trained models, they were corrected manually using a Python dictionary that maps each misspelt word to its correct spelling.

- Abbreviation Expansion: Further analysis of the dataset showed that, for terms like “Postgraduate Student Union” and “Multiple Choice Questions”, some students used abbreviations while others wrote out the full forms. To ensure consistency and clarity, abbreviations were automatically replaced with their full forms using a dictionary similar to the one used for spelling correction. However, certain institution-specific abbreviations could not be expanded because their accurate full forms were unknown.

- Removal of irrelevant/unusable text: Unusable or irrelevant text refers to responses that are either shorter than three characters or too vague to understand, such as “yes”, “fdgdg”, and “yeahh”. Removing this type of text from the dataset is important to maintain its quality.
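The cleaning steps listed above can be sketched roughly as follows. This is a minimal illustration using only the Python standard library, not the authors' pipeline: the dictionary entries, the `VAGUE` blocklist, and all function names are hypothetical, "alphanumeric words" is assumed to mean tokens that mix letters and digits, and the language filtering, automatic spell checking, and lemmatisation steps are left out because they depend on external packages.

```python
import re
import unicodedata

# Illustrative maps only; the authors' actual dictionaries are not reproduced here.
CORRECTIONS = {"bright space": "brightspace", "e tivities": "etivities"}
ABBREVIATIONS = {
    "psu": "postgraduate students union",
    "mcq": "multiple choice question",
}
# Hypothetical blocklist of vague answers, seeded with the examples in the text.
VAGUE = {"yes", "fdgdg", "yeahh"}


def normalise(text: str) -> str:
    """Apply the style-normalisation steps (lemmatisation omitted)."""
    text = text.lower()  # case normalisation
    # Decompose accents, then drop anything that is still non-ASCII.
    text = unicodedata.normalize("NFKD", text).encode("ascii", "ignore").decode("ascii")
    text = re.sub(r"[^\w\s]|_", " ", text)  # punctuation -> space
    # Assumption: remove tokens that mix letters and digits (e.g. "cs4013").
    text = re.sub(r"\b(?=\w*\d)(?=\w*[a-z])\w+\b", " ", text)
    return re.sub(r"\s+", " ", text).strip()  # collapse extra whitespace


def replace_terms(text: str, mapping: dict) -> str:
    """Replace whole-word occurrences of each key with its mapped value."""
    for source, target in mapping.items():
        text = re.sub(rf"\b{re.escape(source)}\b", target, text)
    return text


def is_usable(text: str) -> bool:
    """Keep responses of at least three characters that are not blocklisted."""
    return len(text) >= 3 and text not in VAGUE


def preprocess(responses):
    """Run the sketched pipeline over an iterable of raw responses."""
    cleaned = []
    for raw in responses:
        if raw is None or not raw.strip():
            continue  # missing value (unanswered entry)
        text = normalise(raw)
        text = replace_terms(text, CORRECTIONS)
        text = replace_terms(text, ABBREVIATIONS)
        if is_usable(text):
            cleaned.append(text)
    return cleaned


if __name__ == "__main__":
    sample = ["The PSU runs  GREAT events!!", None, "yes",
              "Bright Space & e-tivities help"]
    print(preprocess(sample))
```

Keeping the correction and abbreviation maps as plain dictionaries means that institution-specific terms can be added without touching the rest of the pipeline.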

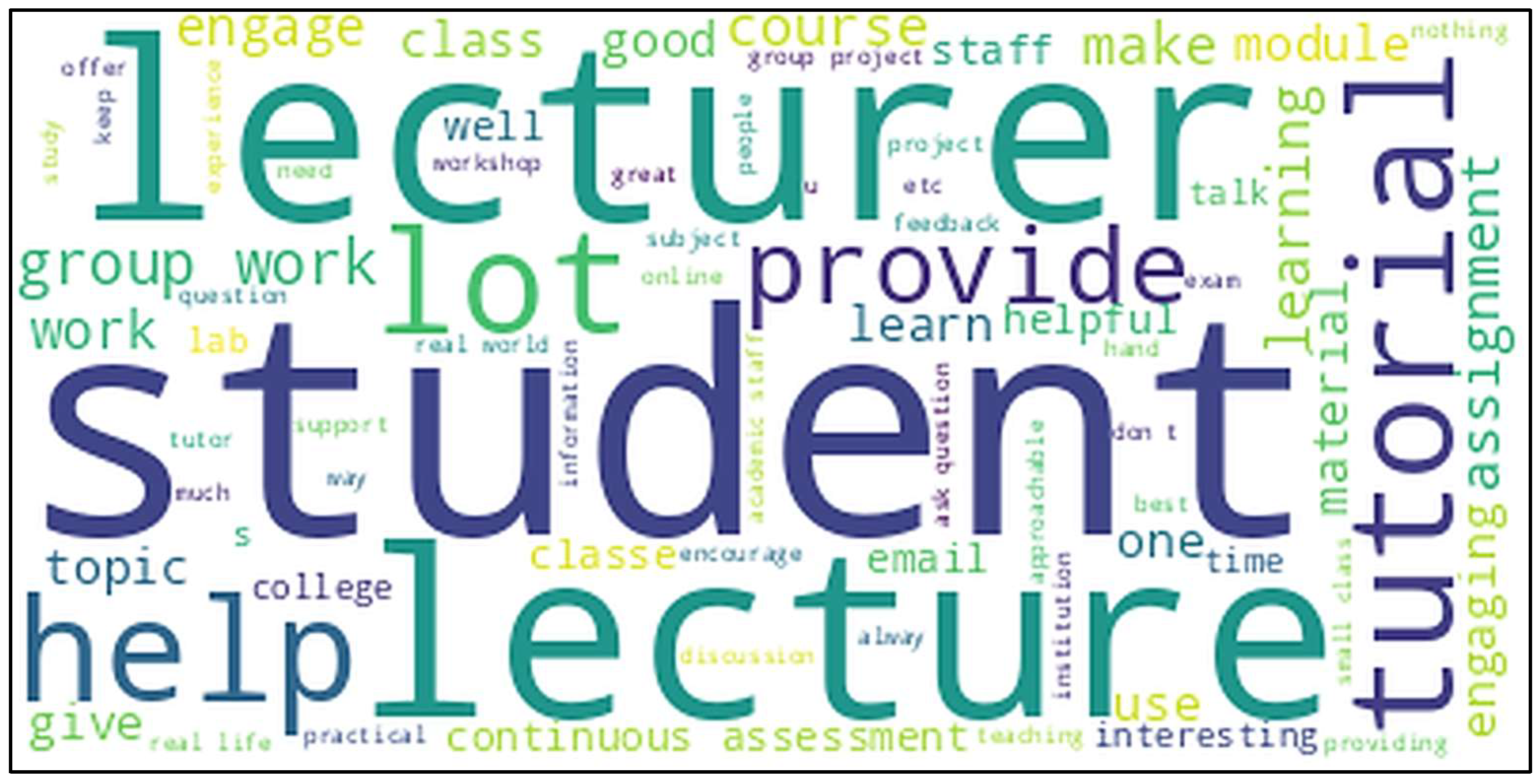

4.3. Experiments for Q1: “What Does Your Institution Do Best to Engage Students in Learning?”

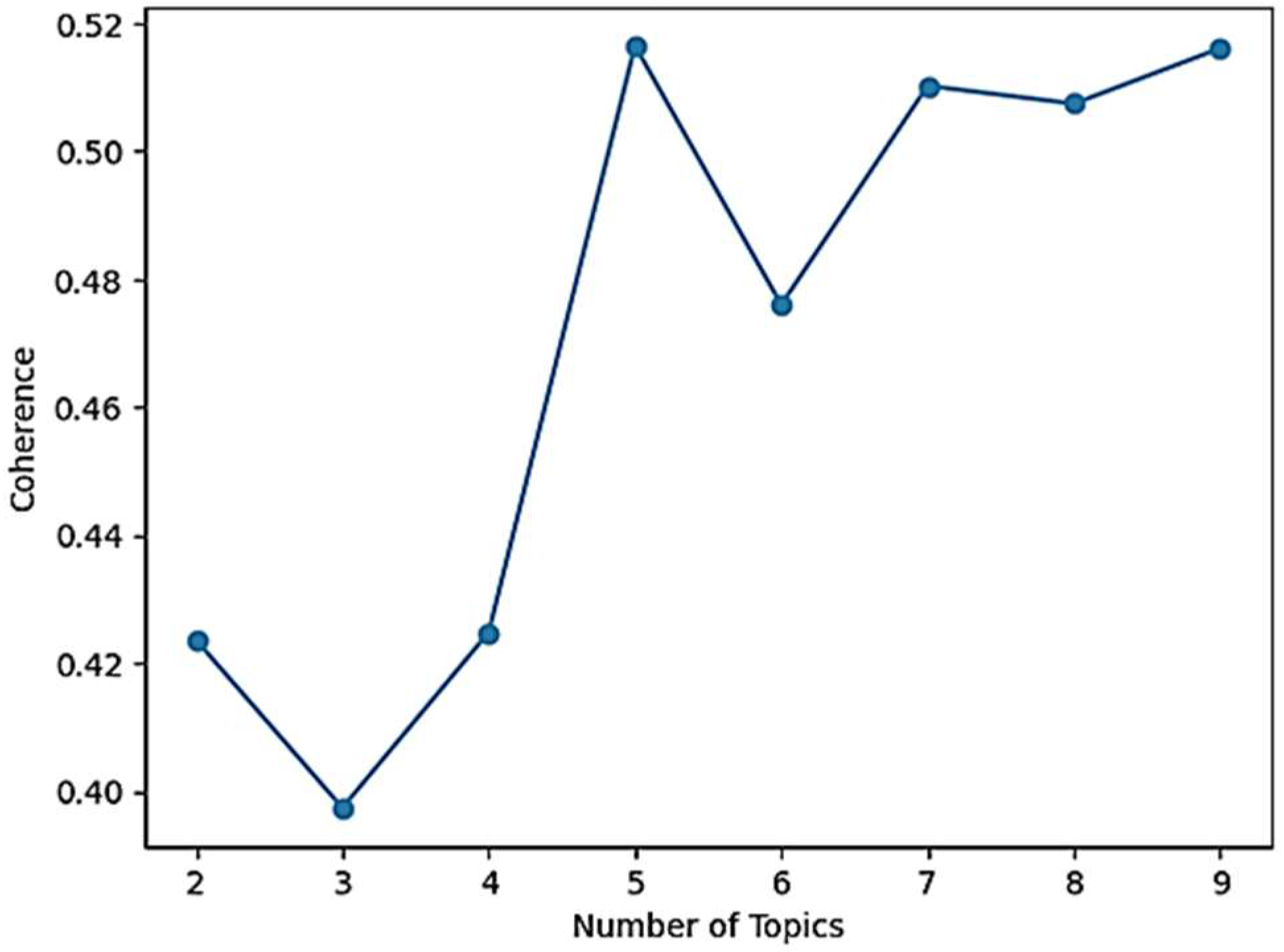

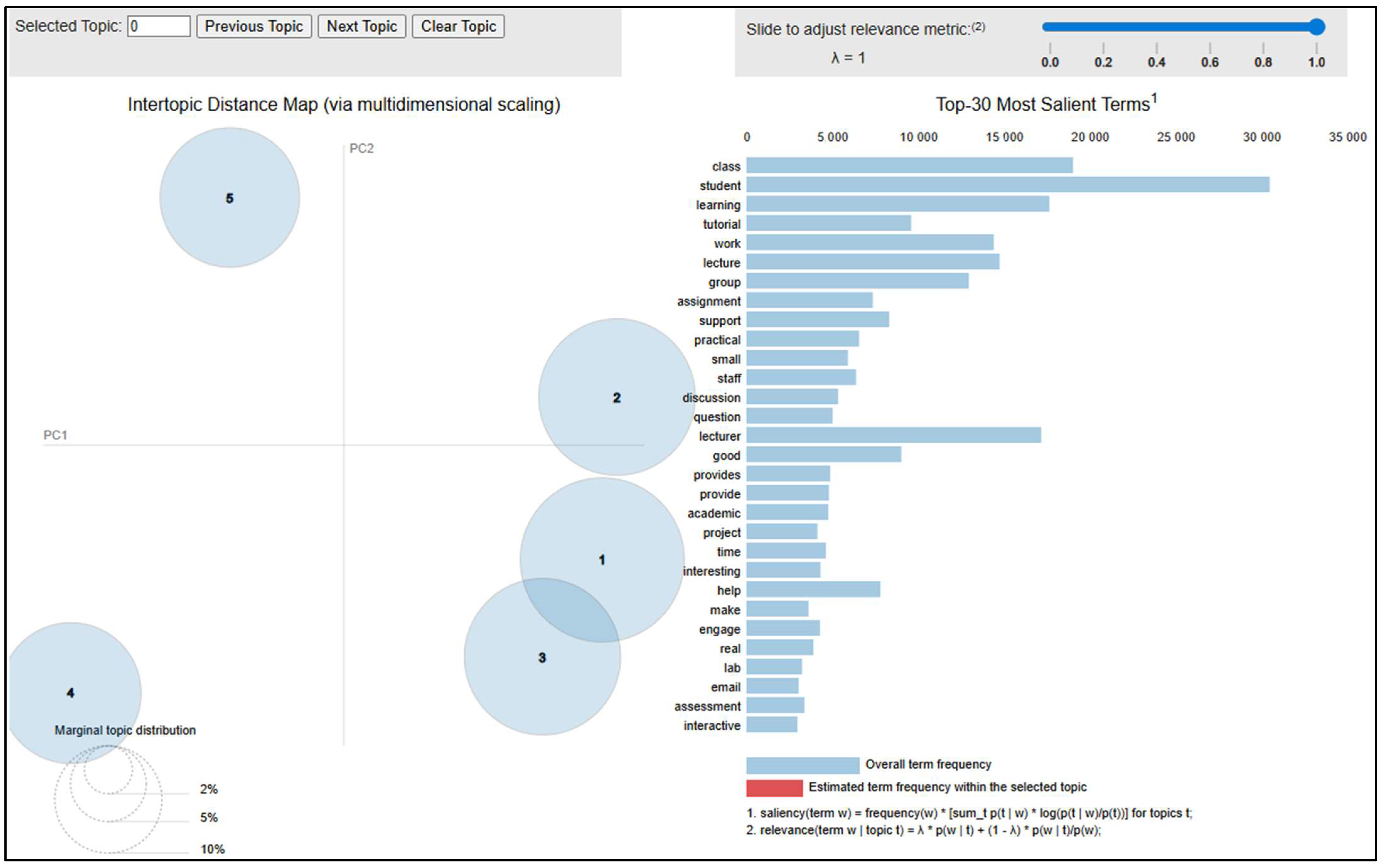

4.3.1. Topic Modelling

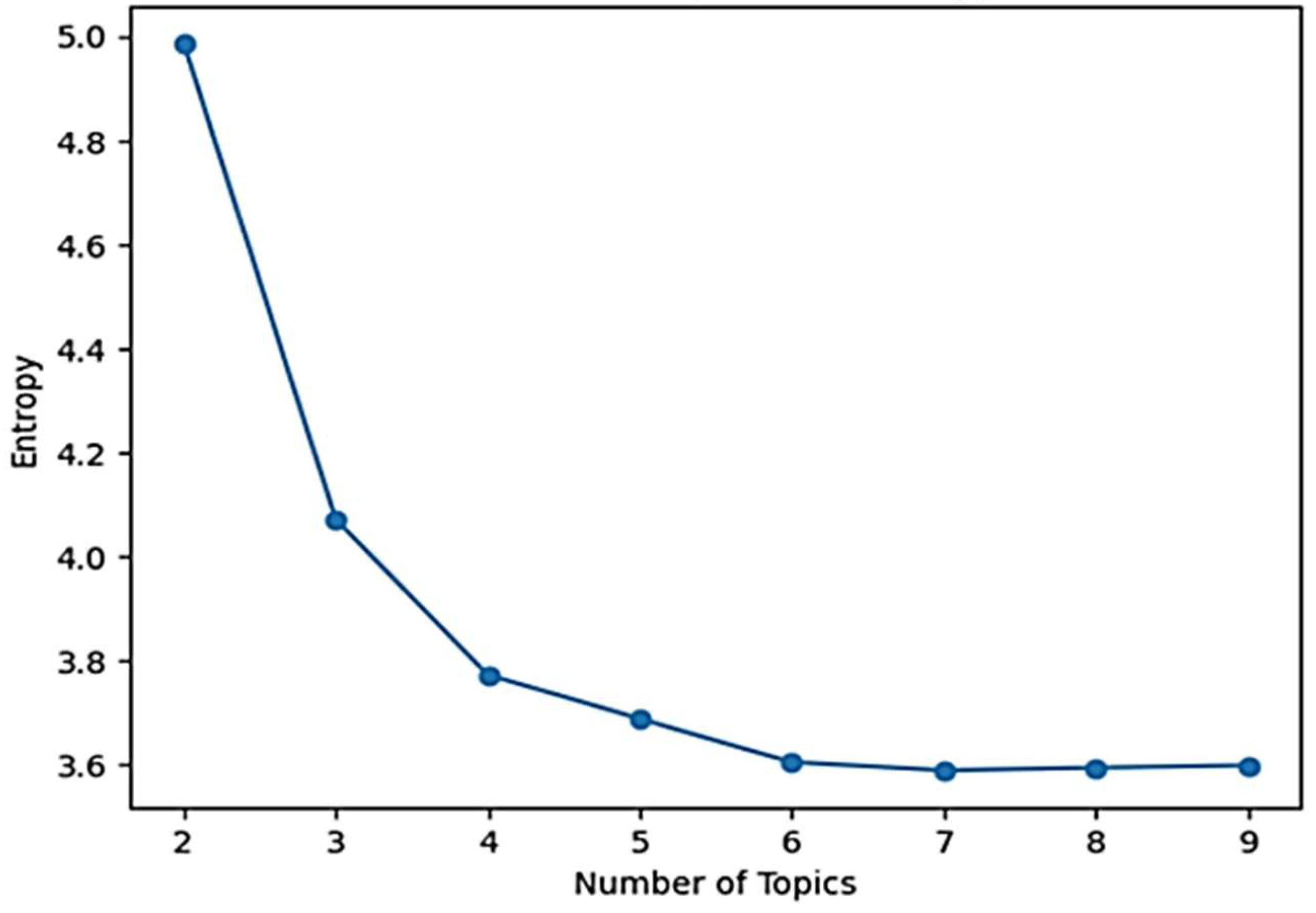

Model 1. Latent Dirichlet Allocation (LDA)

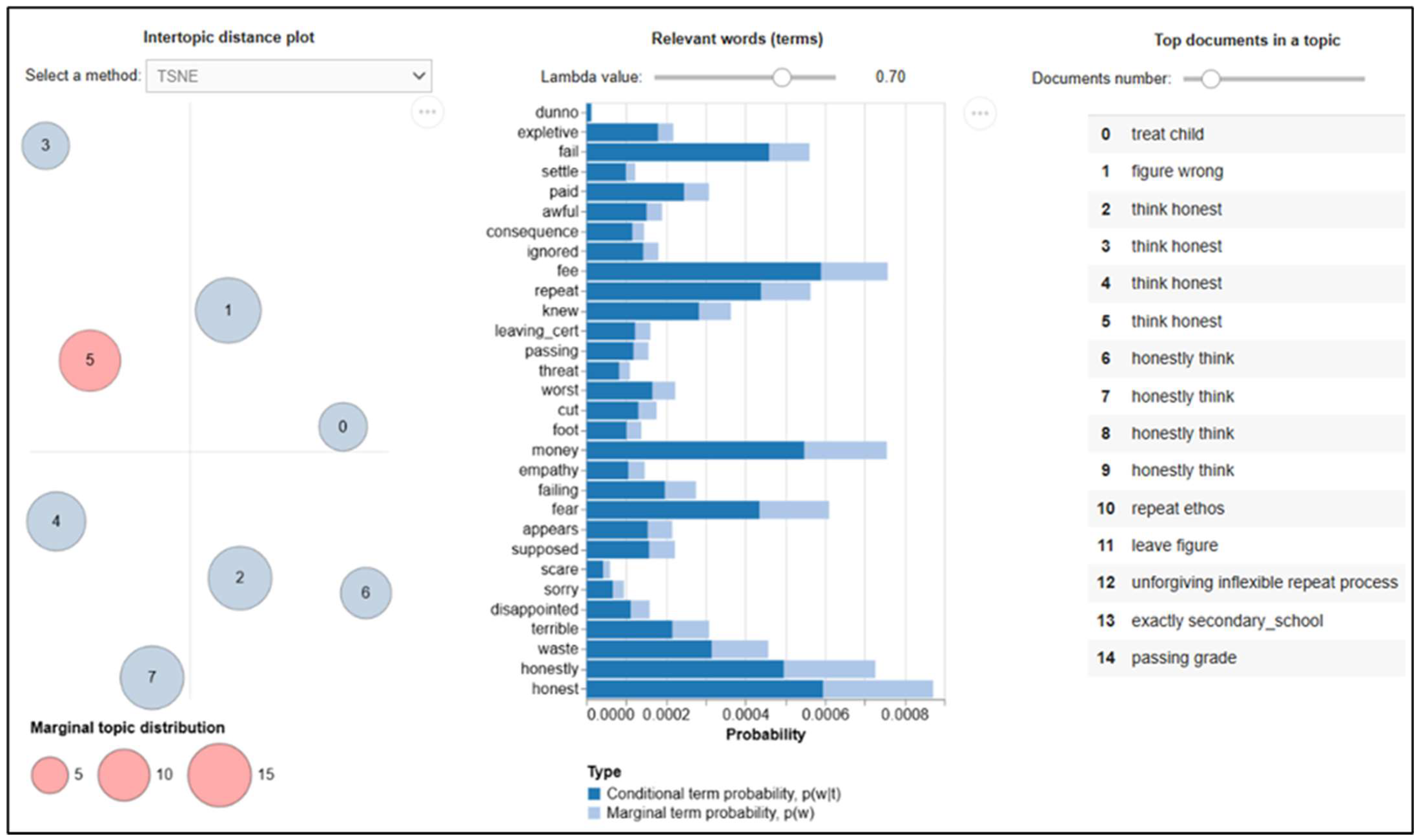

Model 2. Bi-Term Topic Model (BTM)

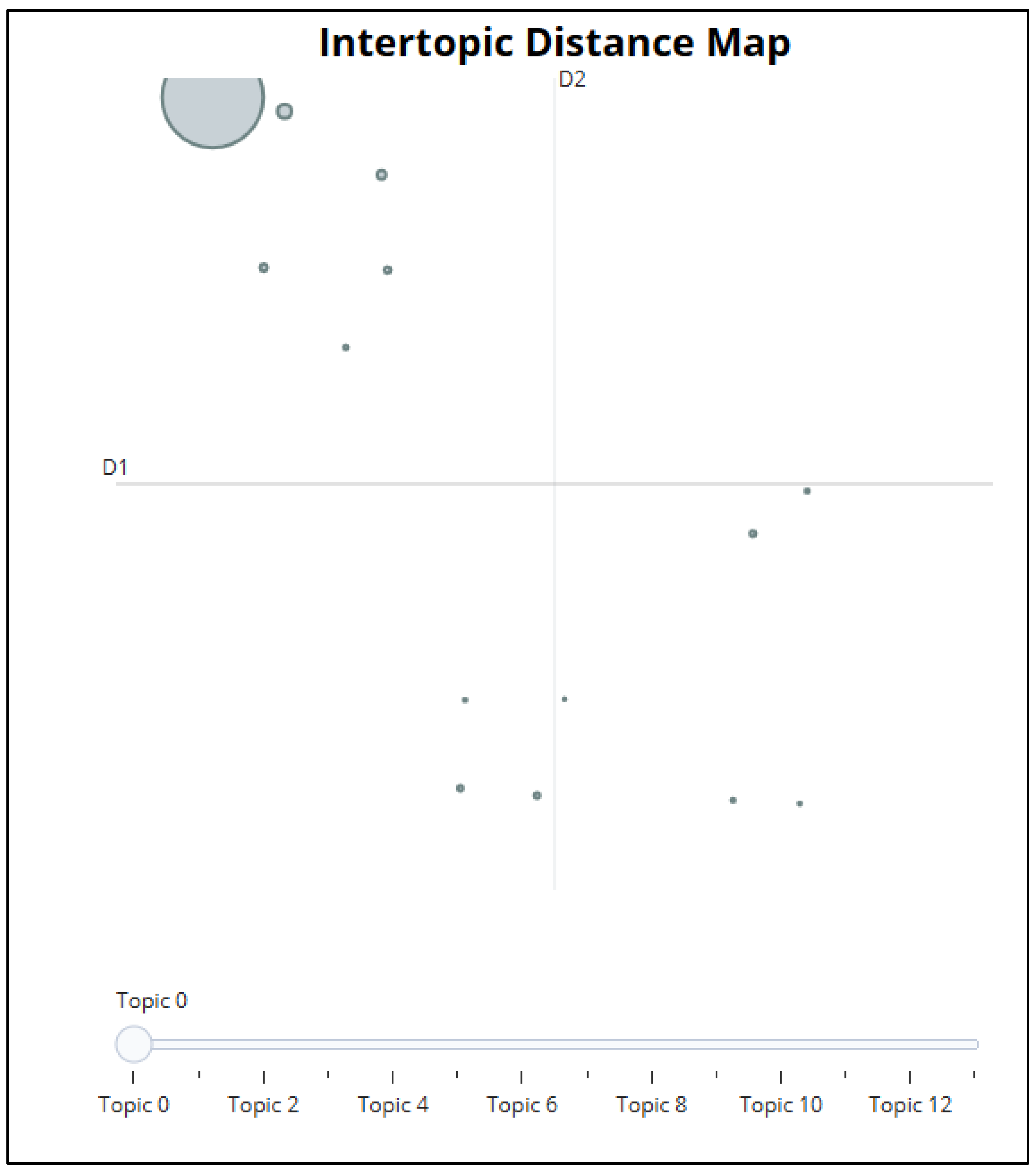

Model 3. BERTopic

4.3.2. Named Entity Recognition (NER) Analysis

4.4. Experiments for Q2: “What Could Your Institution Do to Improve Students’ Engagement in Learning?”

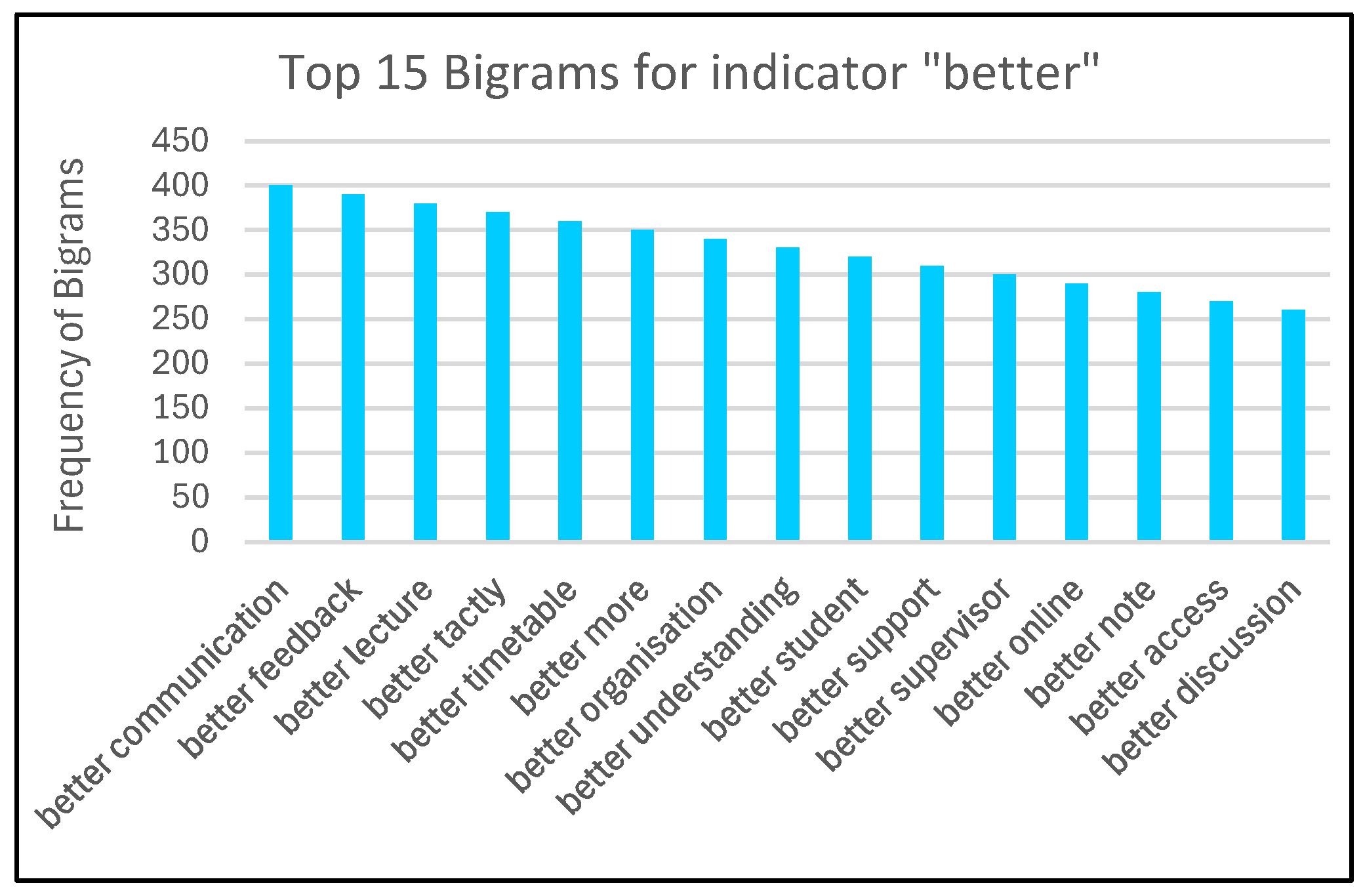

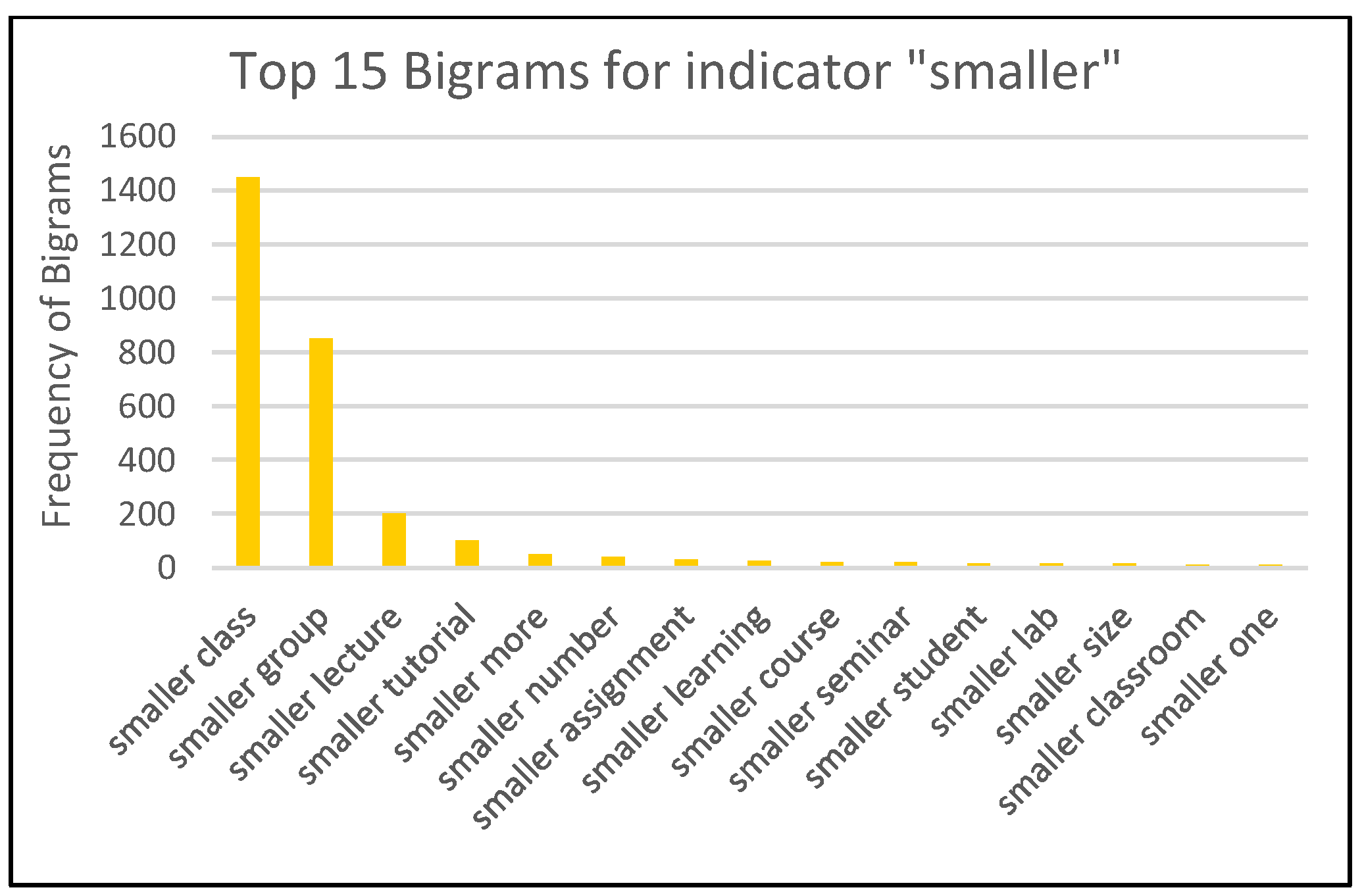

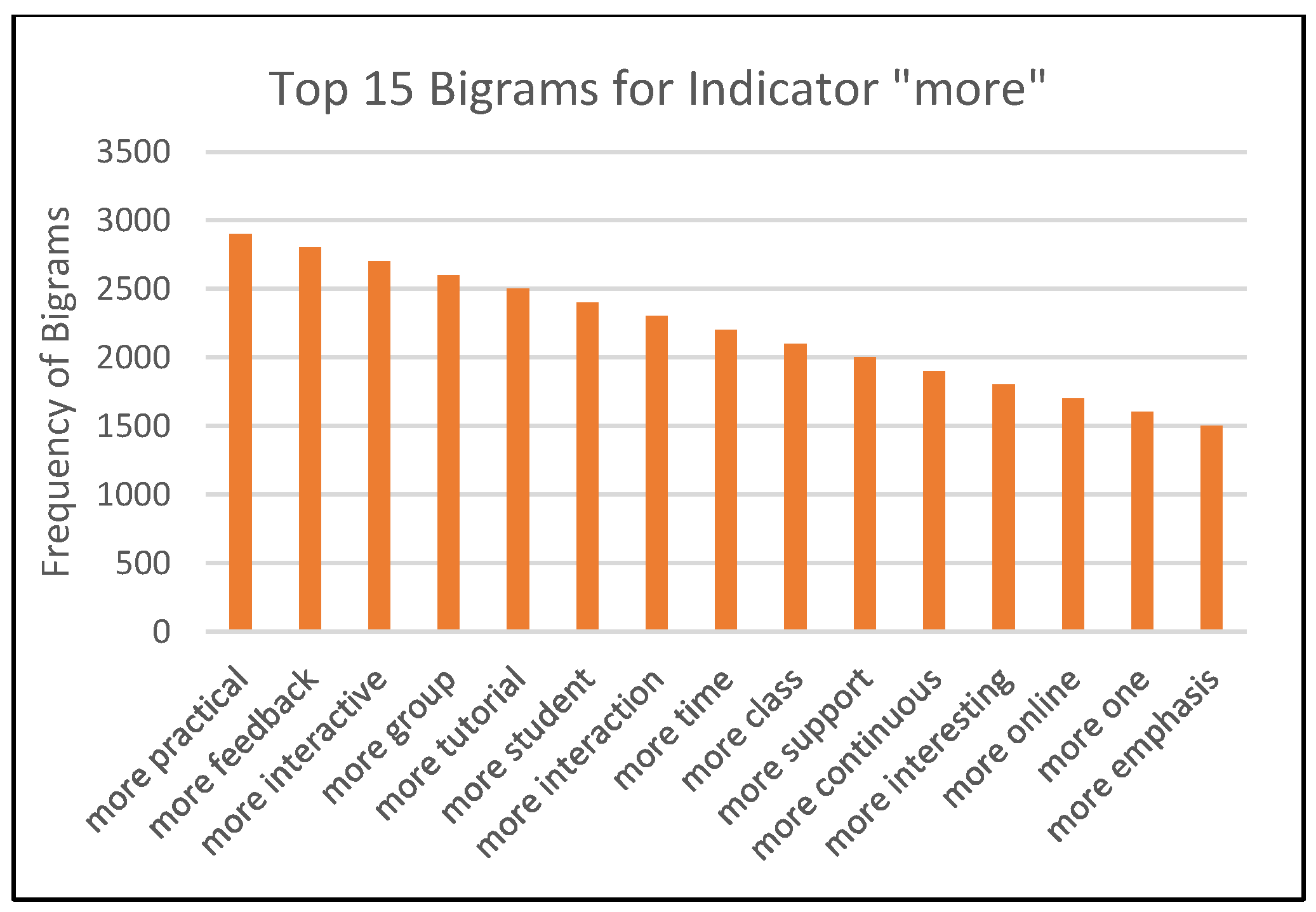

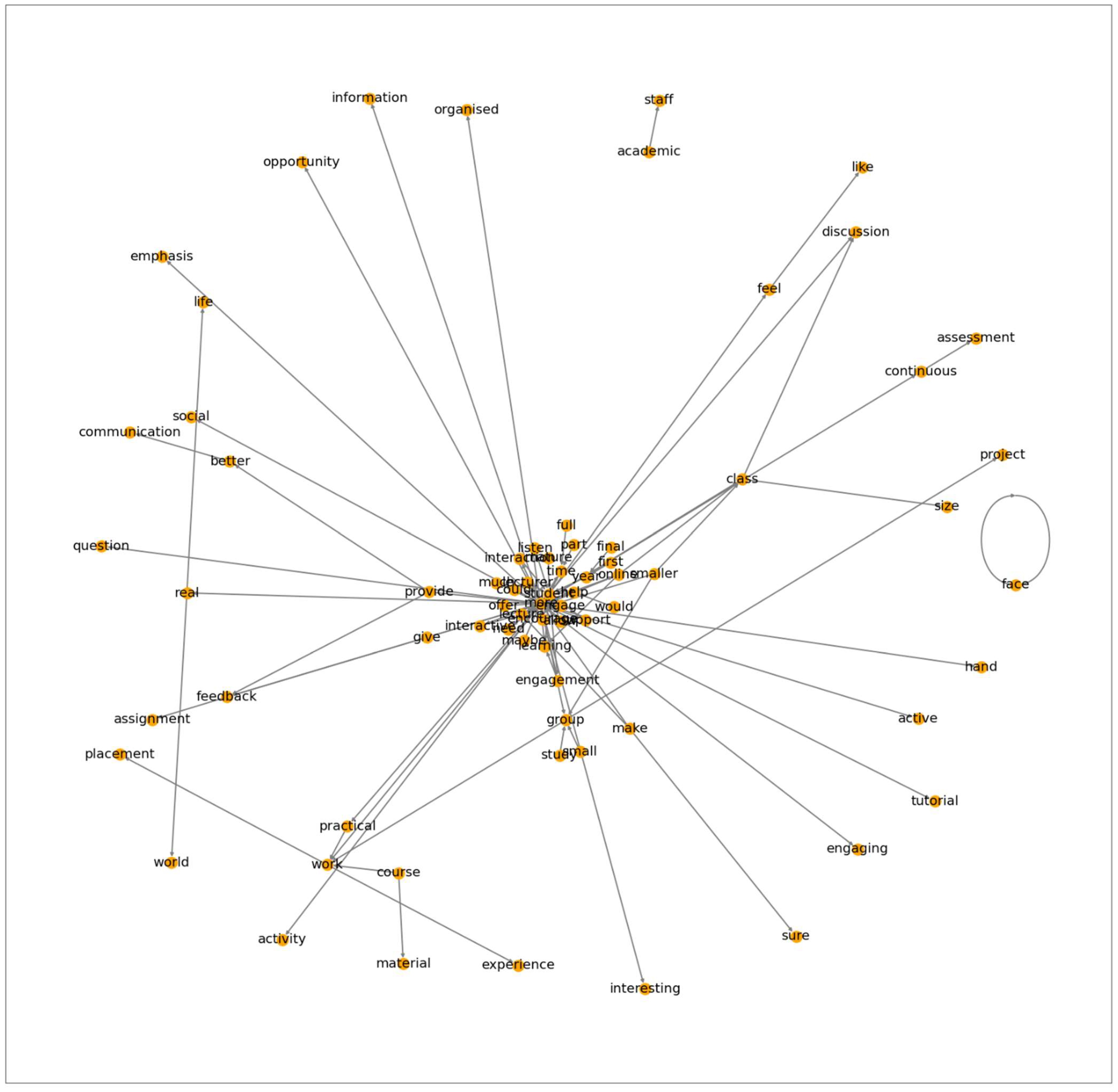

N-Gram Analysis

5. Results and Discussion

5.1. Evaluation of Topic Modelling Techniques

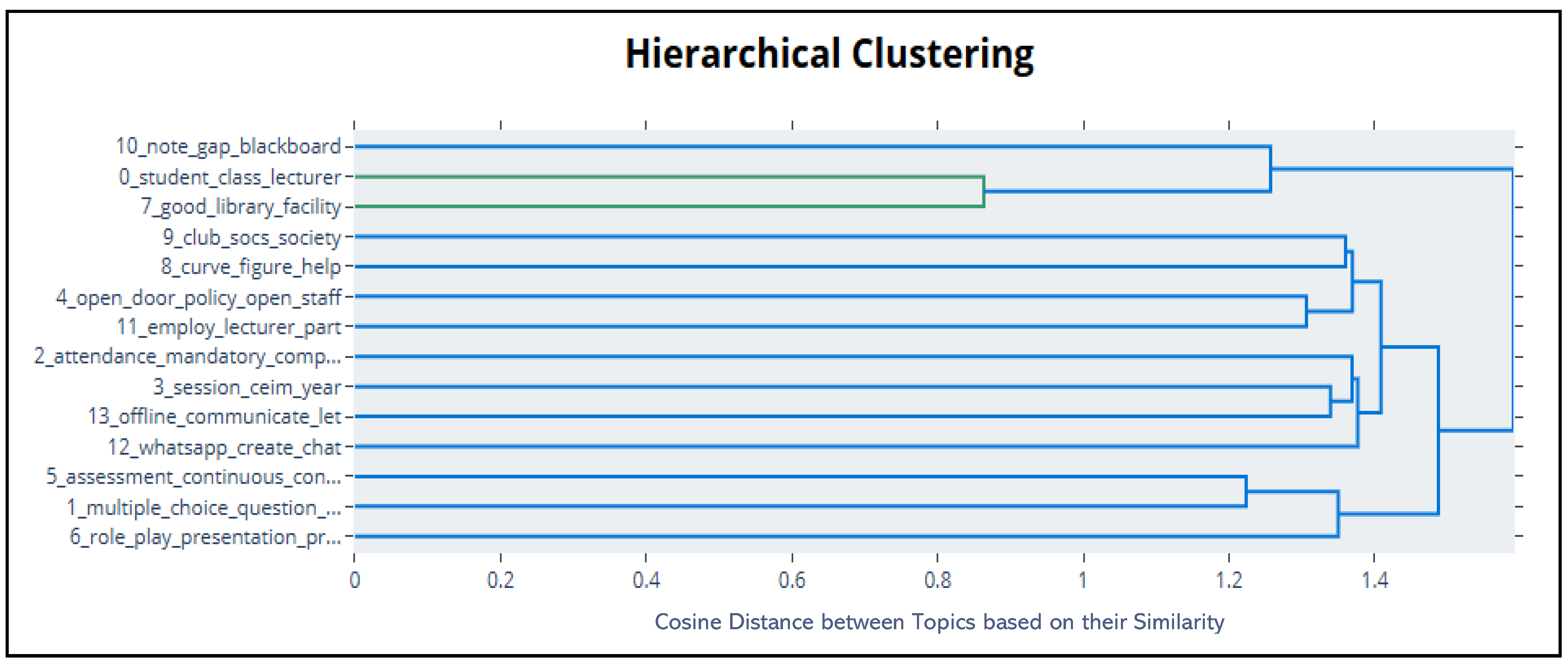

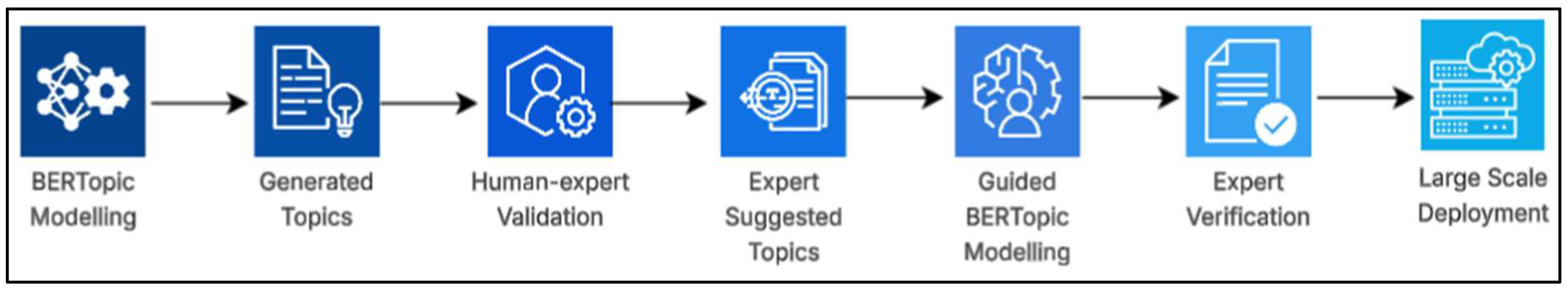

Qualitative Validation of BERTopic Classification

5.2. Evaluation of Named Entity Recognition

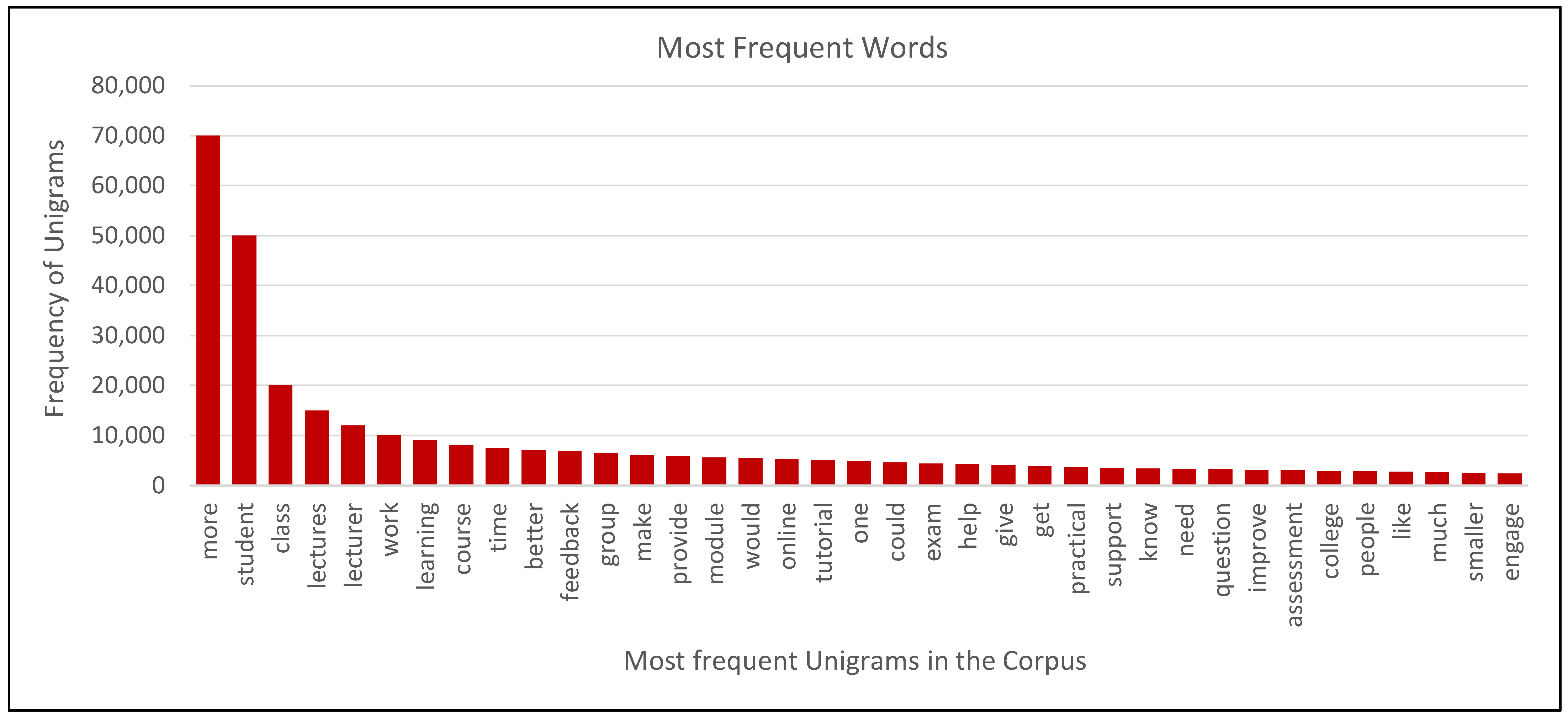

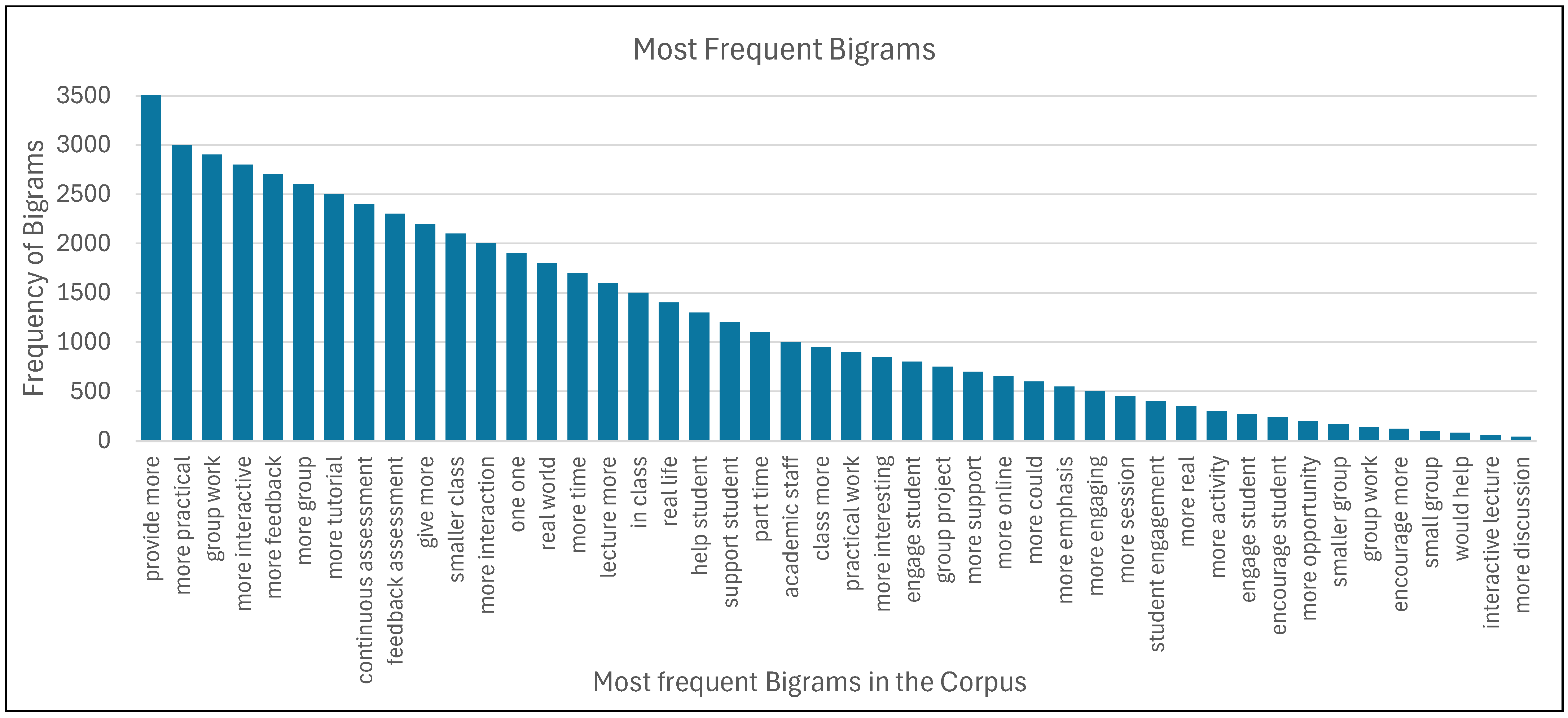

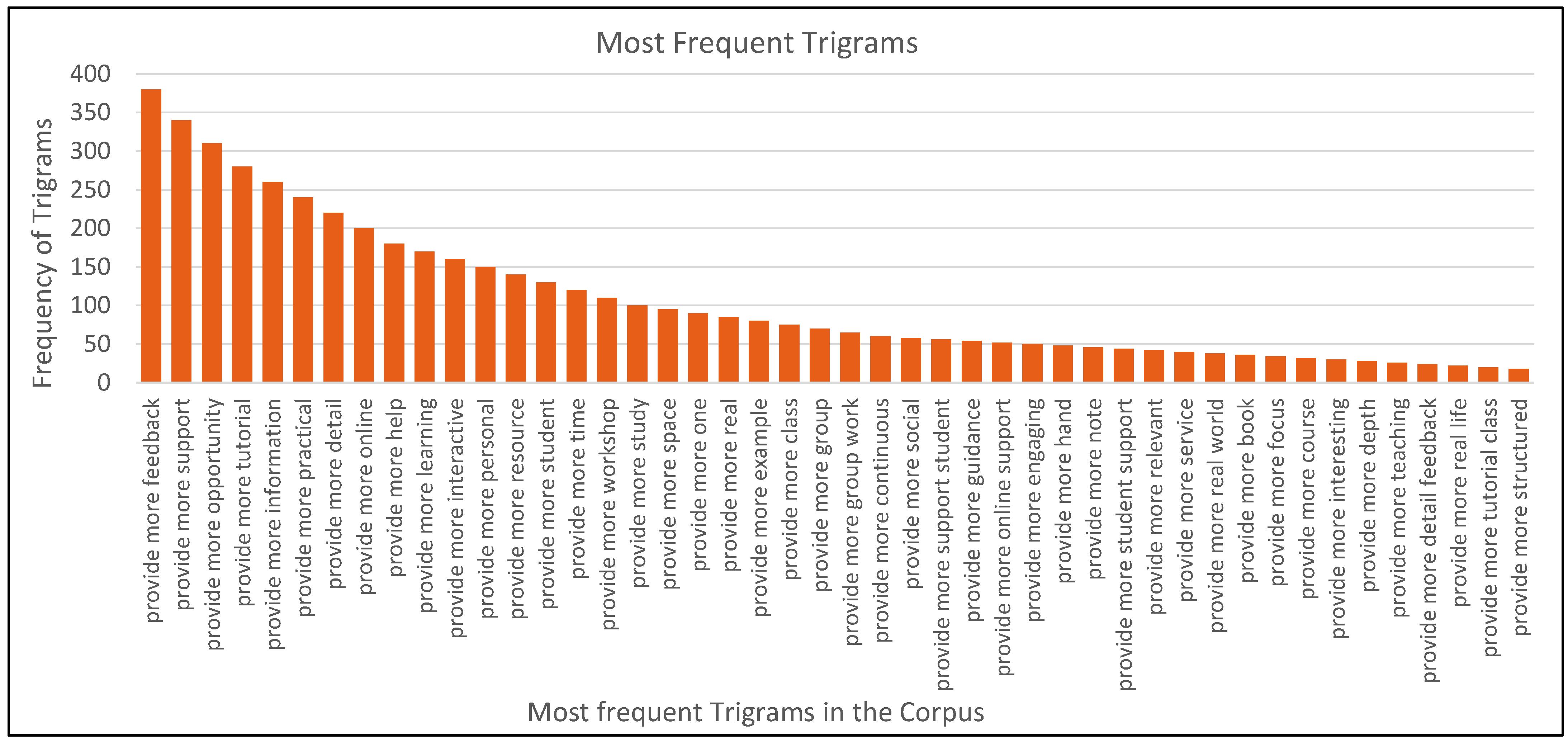

5.3. Evaluation of N-Gram Analysis

5.4. Limitations and Future Work

- Future Work:

6. Conclusions

- Q1: What does your institution do best to engage students in learning?

- Q2: What could your institution do to improve students’ engagement in learning?

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

| Index | Topic 1 | Topic 2 | Topic 3 | Topic 4 | Topic 5 |

|---|---|---|---|---|---|

| 0 | student | support | lecturer | class | learning |

| 1 | time | staff | interesting | tutorial | assignment |

| 2 | engage | provides | real | work | project |

| 3 | college | provide | lecture | practical | make |

| 4 | learn | academic | course | group | |

| 5 | get | learning | assessment | small | work |

| 6 | event | good | engaging | lecture | group |

| 7 | year | help | material | discussion | exam |

| 8 | much | library | life | question | feedback |

| 9 | talk | approachable | example | lab | method |

| 10 | institution | resource | continuous | interactive | presentation |

| 11 | help | available | really | ask | approach |

| 12 | best | student | teaching | size | regular |

| 13 | part | service | note | seminar | problem |

| 14 | lecturer | online | good | smaller | team |

| 15 | also | excellent | topic | interaction | based |

| 16 | speaker | friendly | relevant | hand | room |

| 17 | involved | lecturer | well | answer | active |

| 18 | course | center | world | session | give |

| 19 | attendance | offer | subject | activity | different |

| Index | Topic 0 | Topic 1 | Topic 2 | Topic 3 | Topic 4 | Topic 5 | Topic 6 | Topic 7 |

|---|---|---|---|---|---|---|---|---|

| 0 | informational | microteaching | learnonline | fascinating | hired | dunno | math | calling |

| 1 | | fieldwork | uploading | anecdote | contactable | expletive | centre | smaller |

| 2 | event | role_play | gapped | illustrate | intelligent | fail | center | breakout |

| 3 | campaign | experiential | upload | relatable | empathetic | settle | sum | size |

| 4 | socs | incorporates | bright_space | graph | competent | paid | loan | small |

| 5 | invitation | implementation | absent | real | talented | awful | writing | nervous |

| 6 | newsletter | project | recorded | relate | pleasant | consequence | suite | intimate |

| 7 | social | combining | panopto | illustration | caring | ignored | grind | sized |

| 8 | awareness | clinical | calendar | story | knowledgeable | fee | database | ask |

| 9 | club | portfolio | posted | humor | employ | repeat | alc | asking |

| 10 | society | carrying | uploaded | world | hire | knew | library | microphone |

| 11 | sends | product | recording | example | exception | leaving_cert | opening | camera |

| 12 | conference | placement | mid_term | practicality | qualified | passing | computer | question |

| 13 | sporting | based | cramming | graphic | calm | threat | ran | shy |

| 14 | medium | culinary | summary | image | approachability | worst | printing | intimidating |

| 15 | extracurricular | practical | slack | diagram | exceptional | cut | support | brainstorming |

| 16 | tour | participatory | record | relates | skilled | foot | library | uncomfortable |

| 17 | exhibition | reflection | missed | societal | warm | money | advisory | anonymous |

| 18 | inform | practical | layout | clip | enthusiastic | empathy | laptop | concentrated |

| 19 | informing | client | toe | memorize | friendly | failing | service | larger |

| Sample Responses | Keywords Generated by BERTopic | Expert-Selected Keywords | Suggested Themes |

|---|---|---|---|

| Most of our lecturers were superb and really knew their subjects | student, class, lecturer, learning | lecturer | knowledgeable |

| Approachable lecturers, encouraged to engage, small class size | student, class, lecturer, learning | lecturer | class-size, engagement |

| mixture of practical and theory classes | student, class, lecturer, learning | - | structure |

| Canvas can host a range of materials including extra supporting materials. | note, gap, blackboard, lecture | - | VLE, content |

| Social media updates | whatsapp, create, chat, emailing | - | socials, communication |

| Gets student involved in the learning, discussions, group work, role play etc. | role, play, presentation, practice, theory | practice | engagement, learning |

| Interactive learning is very prominent in my institution which is a great way to get students engaged in learning | student, class, lecturer, learning | learning | engagement |

| Students studying a part time MBA want to be succeed, and therefore will engage as they are in the workplace and have something to contribute. Also the lecturers promote engagement and inclusion of all students in the classes | student, class, lecturer, learning | Student, lecturer | engagement, inclusion |

| Practical assignments without the focus of essay writing followed by more essay writing, this university I found, provided many different ways of examining a student. | assessment, continuous, exam | Assessment, exam | structure |

| Variety of assessment types-more focus on continuous assessment rather than final exams. | assessment, continuous, exam | Assessment, exam | structure |

| Bring guest lecturers from different walks of life, giving us practical experience | role, play, presentation, practice, theory | practice | engagement, learning, lecturer, knowledgeable |

| holds seminars with people already in the workforce | role, play, presentation, practice, theory | practice | structure |

| There are many cafes with low prices on campus so it feels like they’re trying to encourage students to get a coffee and stay a while to work on assignments, readings, etc. | club, socs, society, sport | - | engagement |

| The staff are very approachable and make a point if letting you know they are there to help and guide you if you need it. | open, door, policy, staff, door | - | support, communication, approachability, knowledgeable |

| Patient-centred care labs are an engaging way to learn as they mimic real-world scenarios and help students to become more comfortable speaking with patients about their medicines and health concerns. | good, library, facility, access | - | engagement, practice, communication |

| Some tutors great on line and real enjoyed their interactions and break out rooms. | offline, communicate, let, online | - | engagement, practice, knowledgeable |

| Group team list and provide us the platforms for communication | offline, communicate, let, online | - | communication, collaboration |

| They organize small group chats where by they check on our progress and provide advice | whatsapp, create, chat, emailing | - | support, advice, engagement, collaboration |

| Carrying out continuous assessments with students to get them actively engaging in modules rather than one big exam at the end of term | assessment, continuous, exam | Assessment, exam | engagement |


| Year | Gender: Female | Gender: Male | Age Group: ≤23 yrs | Age Group: ≥24 yrs | Study Group: PGT | Study Group: Y1 | Study Group: YF | Mode of Study: Full-Time | Mode of Study: Part-Time |

|---|---|---|---|---|---|---|---|---|---|

| 2016 | 59% | 41% | 63.24% | 36.62% | 15.24% | 48.25% | 36.51% | 88.82% | 11.18% |

| 2017 | 58.1% | 41.9% | 65.42% | 34.58% | 15.05% | 49.94% | 35.02% | 89.33% | 10.67% |

| 2018 | 59.3% | 40.7% | 65.22% | 34.78% | 17.03% | 48.35% | 34.62% | 87.96% | 12.04% |

| 2019 | 58.8% | 41.2% | 65.85% | 34.15% | 17.38% | 48.22% | 34.40% | 88.49% | 11.51% |

| 2020 | 58.9% | 41.0% | 66.47% | 33.53% | 19.47% | 48.93% | 31.61% | 88.29% | 11.71% |

| 2021 | 60.5% | 39.3% | 63.14% | 36.86% | 20.65% | 48.17% | 31.18% | 85.74% | 14.26% |

| 2022 | 60.8% | 38.7% | 62.17% | 38.22% | 23.81% | 45.57% | 30.63% | 85.77% | 14.23% |

| Year | Best_Aspects | Improve_How |

|---|---|---|

| 2016 | 14,972 | 14,194 |

| 2017 | 18,348 | 17,320 |

| 2018 | 19,010 | 17,968 |

| 2019 | 20,367 | 19,239 |

| 2020 | 25,928 | 24,174 |

| 2021 | 24,163 | 22,944 |

| 2022 | 22,373 | 21,060 |

| Year | Best_Aspects (Answered) | Improve_How (Answered) |

|---|---|---|

| 2016 | 59% | 63.24% |

| 2017 | 58.1% | 65.42% |

| 2018 | 59.3% | 65.22% |

| 2019 | 58.8% | 65.85% |

| 2020 | 58.9% | 66.47% |

| 2021 | 60.5% | 63.14% |

| 2022 | 60.8% | 62.17% |

| Index | Topic 1 | Topic 2 | Topic 3 | Topic 4 | Topic 5 |

|---|---|---|---|---|---|

| 0 | student | support | lecturer | class | learning |

| 1 | time | staff | interesting | tutorial | assignment |

| 2 | engage | provides | real | work | project |

| 3 | college | provide | lecture | practical | make |

| 4 | learn | academic | course | group | |

| 5 | get | learning | assessment | small | work |

| Index | Topic 0 | Topic 1 | Topic 2 | Topic 3 | Topic 4 | Topic 5 | Topic 6 | Topic 7 |

|---|---|---|---|---|---|---|---|---|

| 0 | informational | microteaching | learnonline | fascinating | hired | dunno | math | calling |

| 1 | | fieldwork | uploading | anecdote | contactable | expletive | centre | smaller |

| 2 | event | role_play | gapped | illustrate | intelligent | fail | center | breakout |

| 3 | campaign | experiential | upload | relatable | empathetic | settle | sum | size |

| 4 | socs | incorporates | bright_space | graph | competent | paid | loan | small |

| 5 | invitation | implementation | absent | real | talented | awful | writing | nervous |

| Topic | Count | Name |

|---|---|---|

| −1 | 6 | −1_eispearas_chuireann_initative_goodstart |

| 0 | 121,229 | 0_student_class_lecturer_learning |

| 1 | 434 | 1_multiple_choice_question_test_weekly_quiz |

| 2 | 605 | 2_attendance_mandatory_compulsory_make |

| 3 | 371 | 3_session_ceim_year_first |

| 4 | 161 | 4_open_door_policy_open_staff_door |

| 5 | 881 | 5_assessment_continuous_continous_exam |

| 6 | 164 | 6_role_play_presentation_practice_theory |

| 7 | 2,234 | 7_good_library_facility_access |

| 8 | 84 | 8_curve_figure_help_difficulty |

| 9 | 152 | 9_club_socs_society_sport |

| 10 | 321 | 10_note_gap_blackboard_lecture |

| 11 | 358 | 11_employ_lecturer_part_hire |

| 12 | 93 | 12_whatsapp_create_chat_emailing |

| 13 | 58 | 13_offline_communicate_let_online |
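As a quick reading aid for the topic-size table above, the sketch below totals the counts and computes each topic's share of the assigned responses. The counts are copied from the table; the variable and function names are illustrative. In BERTopic, topic −1 is the outlier topic, so it is kept in the total here only for completeness.

```python
# Topic sizes copied from the BERTopic table (topic id -> number of responses).
topic_counts = {
    -1: 6, 0: 121_229, 1: 434, 2: 605, 3: 371, 4: 161, 5: 881, 6: 164,
    7: 2_234, 8: 84, 9: 152, 10: 321, 11: 358, 12: 93, 13: 58,
}

total = sum(topic_counts.values())


def share(topic_id: int) -> float:
    """Fraction of all counted responses assigned to the given topic."""
    return topic_counts[topic_id] / total


largest = max(topic_counts, key=topic_counts.get)

if __name__ == "__main__":
    print(f"total responses counted: {total}")
    for topic_id, count in sorted(topic_counts.items(), key=lambda kv: -kv[1])[:3]:
        print(f"topic {topic_id}: {count} ({share(topic_id):.1%})")
```

On these figures, the general topic 0 (student, class, lecturer, learning) holds roughly 95% of the counted responses, which is worth bearing in mind when reading the smaller, more specific topics.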

| Size of Training Dataset | Precision | Recall | F-Score |

|---|---|---|---|

| 1000 | 0.66 | 0.50 | 0.57 |

| 2000 | 0.71 | 0.49 | 0.58 |

| 3000 | 0.71 | 0.51 | 0.60 |

| Tag or Label | Explanation | Count of Entities in Training Dataset | Count of Entities in Test Dataset |

|---|---|---|---|

| UNI_PERSON | University Personnel | 521 | 201 |

| ORG | Organisations | 37 | 12 |

| LEARNING_RESOURCES | Teaching and Learning Material | 1,339 | 607 |

| ACTIVITY | Activities and Events | 322 | 198 |

| SUPPORT&FACILITY | Support Services and Facilities | 482 | 292 |

| ONLINE_TOOLS | Online Teaching, Learning and Communicating Tools | 163 | 54 |

| Bigrams | Trigrams |

|---|---|

| ice breaker | multiple choice question |

| marking scheme | extracurricular activity |

| white board | third level education |

| mental health | mental health service |

| comfort zone | power point presentation |

| old fashioned | self directed learning |

| mobile phone | mental health issue |

| curriculum vitae | power point slide |

| guest speaker | real world scenario |
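Frequent bigrams and trigrams such as those in the table above can be obtained by sliding a window of n tokens over each cleaned response and counting the resulting phrases. The following is a minimal sketch with the standard library; the sample responses are invented for illustration, the function names are hypothetical, and whitespace tokenisation is an assumption rather than the authors' documented choice.

```python
from collections import Counter


def ngrams(tokens, n):
    """Return all contiguous n-token phrases in a token list."""
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def top_ngrams(responses, n, k=5):
    """Count n-grams across pre-processed responses and return the k most common."""
    counts = Counter()
    for response in responses:
        counts.update(ngrams(response.split(), n))
    return counts.most_common(k)


if __name__ == "__main__":
    # Invented, already-normalised example responses.
    sample = [
        "mental health service on campus",
        "better mental health service",
        "weekly multiple choice question quiz",
    ]
    print(top_ngrams(sample, 2, k=3))
    print(top_ngrams(sample, 3, k=3))
```

Because n-grams are counted within each response, phrases never span two different students' answers.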

| No. | BERTopic Theme/Topic | BERTopic Keywords | BTM Theme/Topic | BTM Keywords | LDA Theme/Topic | LDA Keywords |

|---|---|---|---|---|---|---|

| 1 | Teaching And Learning | Student, class, lecturer, learning, lecture, work, group, tutorial, help, course | Illustrative Learning | Fascinating, Anecdote, Illustrate, Relatable, Graph, Story, Humor, Graphic, Image, Diagram | Teaching And Learning | Lecturer, Real, Lecture, Course, Assessment, Engaging, Material, Example, Teaching, Topic |

| 2 | Educational Amenities | Good, library, facility, access, resource, computer, lecturer, study, online, learning | Dedicated Student Support | Centre, Loan, Writing, Grind, Database, Library, Printing, Support, Advisory, Service | Student Support | Support, Staff, Provide, Academic, Learning, Help, Library, Approachable, Resource, Available |

| 3 | Teaching and Academic Staff | Employ, lecturer, part, hire, talented, good, excellent, passionate, teaching, staff | Teaching and Academic Staff | Contactable, Intelligent, Empathetic, Competent, Talented, Pleasant, Caring, Knowledgeable, Qualified, Skilled | Students Learning Experience | Student, Time, Engage, College, Learn, Event, Talk, Institution, Help, Lecturer |

| 4 | Extra-Curricular Activities | Club, socs, society, sport, social, student, join, journal, promote, activity | Extra-Curricular Activities | Event, Campaign, Social, Club, Society, Conference, Sporting, Extracurricular, Tour, Exhibition | In-class Activities | class, tutorial, work, practical, group, lecture, discussion, lab, interactive, seminar |

| 5 | Applied and Experiential Learning | Role_play, presentation, practice, theory, placement, discussion, powerpoint, groupwork, bring, group | Applied and Experiential Learning | Microteaching, Fieldwork, Role_Play, Experiential, Practical, Implementation, Project, Participatory, Clinical, Portfolio | Student Engagement | learning, assignment, project, email, exam, feedback, presentation, approach, team, active |

| 6 | Student’s Individual Experience | Curve, figure, help, difficulty, student, learning, bit, difficult, support, experiencing | Student’s Individual Experience | Expletive, Fail, Settle, Paid, Awful, Consequence, Ignored, Fee, Repeat, Leaving_Cert | ||

| 7 | Online Communication Channels | Whatsapp, create, chat, emailing, idea, mentor, set, group, start, done | Interactive Digital Education | Learningonline, Uploading, Upload, Bright_Space, Recorded, Panopto, Posted, Uploaded, Recording, Slack | ||

| 8 | Institutional Obligations | Attendance, mandatory, compulsory, make, lecture, mark, tutorial, class, lab, policy | In-Class Learning Aspects | Calling, Size, Nervous, Intimate, Asking, Microphone, Camera, Question, Shy, Brainstorming | ||

| 9 | Academic Assistance | Session, ceim, year, first, tutorial, learning, peer, leaving-cert, engineering | ||||

| 10 | Educational Resources | Note, gap, blackboard, lecture, slide, online, class, gapped, lecturer, powerpoint | ||||

| 11 | Accessible Support | Open_door_policy, open, staff, door, honestly, drop, student, tutor, lecturer, approachable | ||||

| 12 | Academic Evaluations | Assessment, continuous, multiple_choice_question, test, weekly, exam, continuous, assignment, quiz, regular | ||||

| Sample Responses | Keywords Generated by BERTopic | Expert-Selected Keywords | Suggested Themes |

|---|---|---|---|

| Most of our lecturers were superb and really knew their subjects | student, class, lecturer, learning | lecturer | knowledgeable |

| Approachable lecturers, encouraged to engage, small class size | student, class, lecturer, learning | lecturer | class-size, engagement |

| Variety of assessment types-more focus on continuous assessment rather than final exams. | assessment, continuous, exam | Assessment, exam | structure |

| Canvas can host a range of materials including extra supporting materials. | note, gap, blackboard, lecture | - | VLE, content |

| Social media updates | whatsapp, create, chat, emailing | - | socials, communication |

| Gets student involved in the learning, discussions, group work, role play etc. | role, play, presentation, practice, theory | practice | engagement, learning |

| Entities | Precision | Recall | F1-Score |

|---|---|---|---|

| ONLINE_TOOLS | 0.89 | 0.77 | 0.83 |

| UNI_PERSON | 0.80 | 0.81 | 0.81 |

| ACTIVITY | 0.72 | 0.32 | 0.44 |

| LEARNING_RESOURCES | 0.69 | 0.51 | 0.59 |

| ORG | 0.67 | 0.33 | 0.44 |

| SUPPORT&FACILITY | 0.62 | 0.40 | 0.49 |
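The per-entity scores above can be sanity-checked against the usual definition of the F1-score as the harmonic mean of precision and recall, F1 = 2PR / (P + R). The sketch below assumes that this standard definition is the one behind the table; the values are copied from it. Since the published precision and recall are already rounded to two decimals, a recomputed F1 can differ from the reported one by up to about 0.01.

```python
def f1(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) copied from the table above.
reported = {
    "ONLINE_TOOLS": (0.89, 0.77, 0.83),
    "UNI_PERSON": (0.80, 0.81, 0.81),
    "ACTIVITY": (0.72, 0.32, 0.44),
    "LEARNING_RESOURCES": (0.69, 0.51, 0.59),
    "ORG": (0.67, 0.33, 0.44),
    "SUPPORT&FACILITY": (0.62, 0.40, 0.49),
}

if __name__ == "__main__":
    for label, (p, r, f) in reported.items():
        print(f"{label:20s} reported={f:.2f} recomputed={f1(p, r):.3f}")
```

The gap between precision and recall for ACTIVITY and ORG shows that the low F1 for these labels comes mainly from missed entities rather than from wrong predictions.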

| No. | Category | Keywords (Y1) | Keywords (Y4) | Keywords (PGT) |

|---|---|---|---|---|

| 1 | ONLINE_TOOLS | Blackboard, Brightspace, Canvas, Clickers, Facebook, Interactive Polls, Kahoot, Learnsmart, Loop, MCQ Polls, Menti.Com, Moodle, Online Platforms, Panopto | Loop, Moodle, UCD Connect, Zoom, Blackboard, Brightspace, Canvas, Clicker, Facebook, Instagram, Kahoot, Online Polls, Panopto, Social Media, Sulis | Polls, Aws, Adobe, Blackboard, Brightspace, Canvas, Colab, Connect, Facebook, Instagram, Kahoot, Loop, Moodle, Springboard, Sulis |

| 2 | UNI_PERSON | Lecturers, Academic Adviser(s), Academic Advisor(s), Academic Staff, Academic Tutor(s), Admin, Admin Staff, Administrative Staff, Advisory Staff, Co-Ordinators, Colleagues, Course Administrator, Course Director, Course Leaders, Demonstrators | Academic Staff, Academic Mentors, Academic Supervisors, Co-Ordinator, Course Staff, Experts, Faculty, Head, It Staff, Lecturers, Law Lecturers, Mentors, Moderators, Professor(s) | Academic Advisor(s), Academic Staff, Academic Supervisor(s), Athlone Developers, Lecturer(s), Coordinator, Course Directors, Demonstrators, Experts, Facilitators, Faculty Staff, IT Staff, Professor(s), Tutor(s), Administrative Staff |

| 3 | ACTIVITY | Curricular Activities, Academic Activities, Academic Competitions, Academic Events, Academic Talks, Academic Workshops, Art Competitions, Ball Bashes, Campus Activities, Campus Events, Campus Trips, Class Debates, Class Discussion, Class Parties, Class Trips | Group Activities, Class Discussions, CV Workshops, Campus Events, Class Trips, Clinical Activities, Clubs/Events, Collaborative Projects, Critical Debates, Extra-Curricular Activities, Field Trips, Group Projects, Group Discussions, Guest Lecturers, Guest Speakers | ACM Competitions, Campus Events, Class Debates, Discussion Activities, External Talks, Field Trip, GUEST SPEAKERS, Group Workshops, Industry Talks, Networking, Online Activities, Online Events, Online Webinars, Opening Days, Role Plays. |

| 4 | LEARNING_RESOURCES | Groupwork Assignments, Online Recordings, Group Presentations, Problem Based Learning Worksheets, Lab Tutorials, Problem-Based Learning, Projects, In-Class Exams, Recorded Video, Notes from Lectures, Tutorial Classes, Teaching Material, Clinical Cases, Reading Tasks, Online Homework Assignments | Lectures, Practical Courses, Group Assignments, Reading Material, Notes, Revision Sheets, Virtual Learning, Online Labs, Interactive Classes, Labs, Supporting Material, Videos, Hands-On Activities, Online Sessions | Lecture Content, Online Study Materials, Case Study Examples, Experiential Classes, Labs And Presentations, Blended Lectures, Tutorial Classes, Practical Courses, Articles, Videos, Lecture Notes, Video Lectures, Slides, Online Books, Online Workbooks |

| 5 | ORG | Student Union, Clubs, Societies | ACL, ACM, AIT, CIT, DCU, DIT, IT Carlow, IT Sligo, LIT, Maynooth, NCI, NED, NUI Galway, TCD | ACM, AIT, CIT, DCU, Dublin Science Gallery, IT Carlow, LIT, LYIT, NUI Galway, Sligo IT, TCD, TU Dublin, UCC, UCD |

| 6 | SUPPORT&FACILITY | Writing Support, Help Desk, Curve, Ceim Mentoring, Student Services, Teaching Centres, Math Centres, Career Support, Computer Facilities, Academic Writing, Support Centres, IT Classes, Breakout Rooms, Workshops, Academic Services | Academic Writing Centres, Academic Learning Centre, Placement Opportunities, Student Services, IT Learning Centre, Placement Career Talks, Help Centres, Tutorial Rooms, Disability Office, Counselling Support, Seminars, Space To Study, Electronic Workshops, Placements/Internship, Peer Support Learning Centre | Skill Centre, Free Clubs, Computer Courses, Campus Facilities, Library Room, Drop-in Study Centre, Online Masters, Academic Writing Centres, Medical Centre, Gym, Job Fairs, FITNESS CAMPS, Library, Clubs/Societies, Academic Support Services |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ahmed, A.; Hayes, M.J.; Joorabchi, A. Assessing Student Engagement: A Machine Learning Approach to Qualitative Analysis of Institutional Effectiveness. Future Internet 2025, 17, 453. https://doi.org/10.3390/fi17100453
