Abstract

The rise of 5G networks is driven by increasing deployments of IoT devices and expanding mobile and fixed broadband subscriptions. Concurrently, the deployment of 5G networks has led to a surge in network-related attacks, due to expanded attack surfaces. Machine learning (ML), particularly deep learning (DL), has emerged as a promising tool for addressing these security challenges in 5G networks. To that end, this work proposed an exploratory data analysis (EDA) and DL-based framework designed for 5G network intrusion detection. The approach aimed to better understand the dataset's characteristics, implement a DL-based detection pipeline, and evaluate its performance against existing methodologies. Experimental results on the 5G-NIDD dataset showed that the proposed DL-based models achieved very high intrusion detection and attack identification performance (above 99.5%, outperforming other models from the literature), while maintaining a reasonable prediction time. This highlights their effectiveness and efficiency for such tasks in softwarized 5G environments.

1. Introduction

The rise of 5G networks is driven by the increasing deployment of Internet of Things (IoT) devices and the expansion of mobile and fixed broadband subscriptions [1,2]. This growth has led to the emergence of new services and applications, such as augmented/virtual reality (AR/VR), smart factories, and autonomous vehicles, which are expected to be supported by 5G networks [1,2]. According to the International Telecommunication Union (ITU), these services fall into three main categories: enhanced mobile broadband (eMBB), massive machine-type communication (mMTC), and ultra-reliable low-latency communication (URLLC) [3,4]. Additionally, 5G networks must support existing applications and services from previous generations (2G/3G/4G), such as mobile voice, messaging, and Internet access. The COVID-19 pandemic further increased the demand for these services, with Austria reporting a significant increase in the percentage of individuals using the Internet for audio or video calls [3,4]. Similarly, Spain reported a surge of more than 200% in remote working and video-conferencing applications [5], while Germany observed a 50% increase in video-conferencing traffic [6]. The USA also reported a relative increase of around 25% in average monthly data consumption between 2022 and 2023 [7]. This illustrates the global trend of increasing network connections and data consumption. Therefore, 5G networks are expected to have a highly adaptable architecture across all levels to cope with the increased traffic demand. This is made possible by softwarization and virtualization techniques, in which network functionalities are decoupled from the underlying hardware and implemented as software counterparts, achieving a high degree of flexibility/programmability and reducing the total cost of ownership [8,9].

Coupled with the rise of softwarized 5G network deployment, there has been an increase in network-related attacks, driven by expanding attack surfaces. For example, Forbes reported over 2.9 billion such attack events in 2019, a threefold increase over 2018 [10]. Similarly, a report from SonicWall indicated a 215.7% increase in IoT malware attacks between 2017 and 2018 [10]. While researchers have suggested various methods to safeguard these environments and networks, including software-defined perimeter (SDP) solutions and improved attack detection mechanisms [11,12,13,14], more effective detection mechanisms are still needed to address security concerns in 5G-enabled networks.

Machine learning (ML) in general, and deep learning (DL) specifically, has emerged as a promising solution for detecting security threats in 5G-enabled networks and environments, due to its ability to handle the vast amount of data they generate. ML systems can "learn" without explicit programming, enhancing system flexibility and adaptability [15]. This approach has proven effective and computationally efficient in various applications [16,17,18], making it a strong candidate for intrusion detection mechanisms in 5G networks.

Accordingly, this work proposes a complete exploratory data analysis (EDA) and deep learning (DL) pipeline that comprehensively describes the considered dataset and uses the resulting insights to motivate the choice of ML algorithms. The main contributions of this work are summarized as follows:

- Apply exploratory data analysis techniques to better understand the considered dataset’s characteristics.

- Propose a complete DL pipeline for softwarized 5G network intrusion detection.

- Evaluate the performance of the different algorithms and compare them to other literature works.

It is worth noting that, in this context, softwarized 5G network intrusion detection refers to the process of developing intrusion detection frameworks to be deployed as software-based network functions that can run on generic computing devices, rather than requiring dedicated proprietary computing hardware.

2. Related Work

As attacks on 5G software-based networks and services continue to increase, ensuring the security of these environments has become paramount. In response, several researchers have suggested using ML-based methods and frameworks to identify and address network attacks. The following provides a concise summary of the ML-based security solutions proposed in the existing literature, for both general networks and 5G networks specifically.

2.1. General ML-Based Security Works

Machine learning (ML) has been extensively studied in the context of network intrusion detection systems (IDSs), with various approaches proposed in the literature [19,20,21,22,23,24,25,26,27,28]. One study offered an overview of using ML and deep learning (DL) frameworks, for both network intrusion detection systems and computer vision tasks [19]. Another work provided a comprehensive taxonomy of ML models used in IDSs, with the key metrics used for their evaluation, as well as the strengths and weaknesses of each model [20].

In a different direction, researchers developed an optimized ML-based framework for intrusion detection, specifically focusing on improving the accuracy of the system [24]. Building on this work, they proposed a multi-stage optimized ML-based framework aimed at maintaining high detection performance, while reducing the computational complexity involved.

Additionally, researchers developed a series of tree-based supervised ML models designed to protect software-defined networks (SDN) [28].

Other approaches in the literature combined clustering-based classification techniques with ensemble feature selection methods to effectively identify unknown attacks [29].

2.2. ML-Based 5G Network Security Works

The existing literature has explored various security aspects of 5G and future networks, recently focusing on the applications and potential of ML for 5G network security. AI is seen as a key enabler for these networks [30]. ML algorithms, combined with software-defined networks (SDN) and network function virtualization (NFV), extract data from networks to enhance efficiency. Challenges such as network resource management, security, optimized services, and network traffic management have been discussed in multiple scenarios [31].

One study presented a comprehensive analysis of the threat landscape, core technologies, and existing works in 5G security, categorizing them into availability, authentication, non-repudiation, integrity, and confidentiality [32]. Another work proposed an intelligent ML-based device authentication mechanism for 5G networks, utilizing supervised, unsupervised, and reinforcement-based learning [33]. To counter emerging attacks in 5G networks, an adversarial machine learning method was proposed to identify and defend against attacks in the application layer [34,35,36].

Zero-trust architecture is gaining popularity for preventing attacks exploiting zero-day vulnerabilities, which are particularly relevant to the diverse hardware and software components of 5G networks. ML algorithms can enhance the defense measures in network infrastructure security [37]. Approaches using ML algorithms aim to protect SDN-based 5G networks against DDoS attacks and detect anomalies in network traffic [38].

Intrusion detection systems (IDSs) within 5G architectures are implemented using ML in a software-driven environment [39]. These systems investigate security functions to intelligently identify network intrusions. Privacy concerns in 5G architectures focus on the technologies handling user data, with proposed solutions including GAN-based ML approaches to conceal user location and trajectory information [39]. Differential privacy techniques are also used to cloak user location data in edge devices [40].

3. Proposed Approach

This section outlines the proposed approach by providing a detailed description of each of its stages, starting with the data preprocessing step, through to the feature selection step, all the way to the DL-based intrusion detection step. More specifically, it provides a theoretical description and motivation for the algorithms and models considered as part of the proposed framework. This section also analyzes and discusses the computational complexity of the proposed approach.

3.1. Description

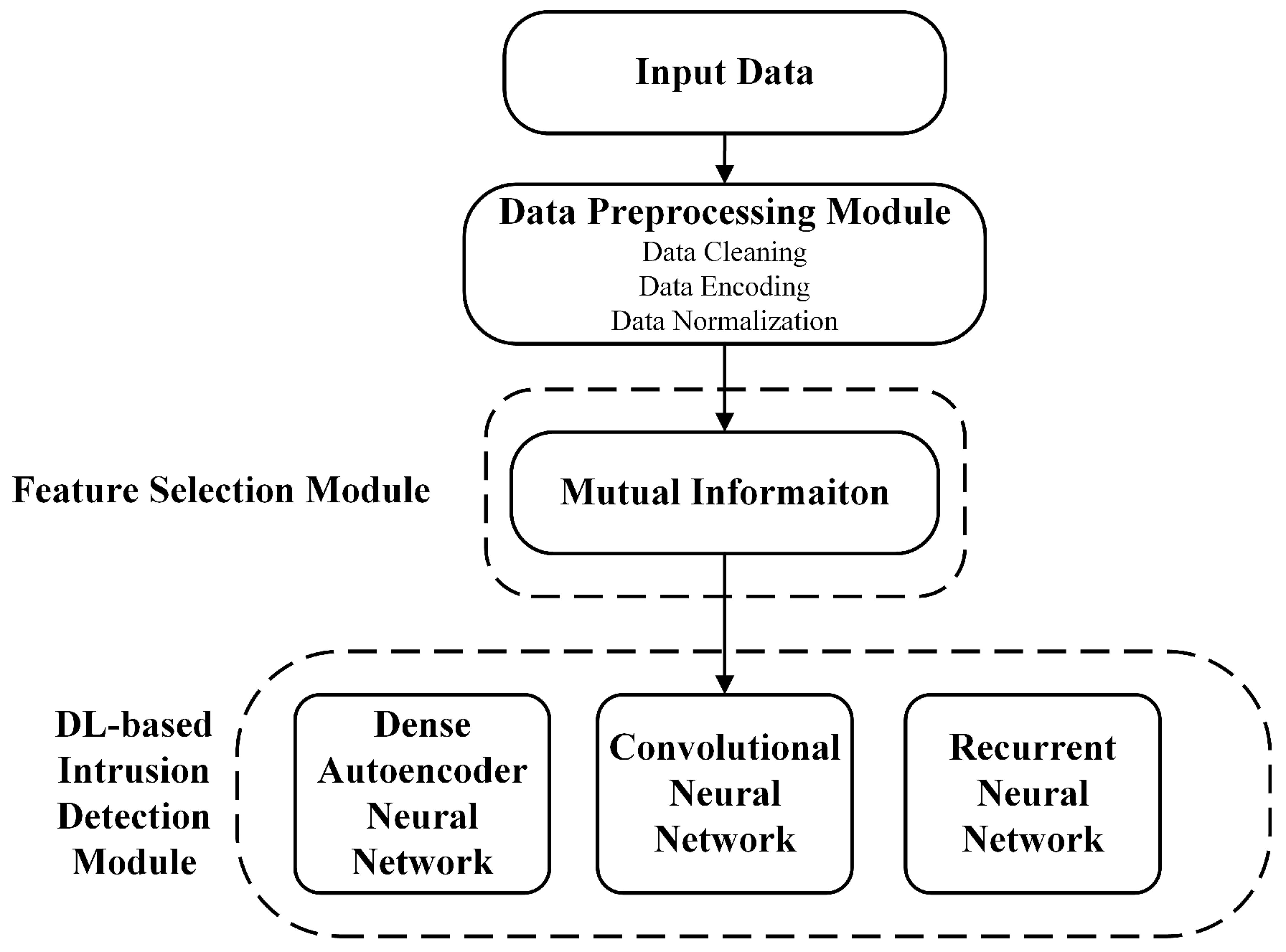

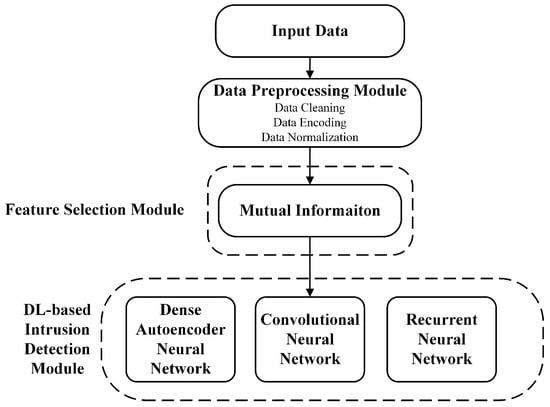

Figure 1 illustrates the proposed DL-based framework for softwarized 5G network intrusion detection. This framework consists of three modules: the “data preprocessing module”, the “feature selection module”, and the “DL-based intrusion detection module”. The “data preprocessing module” prepares the input data in a manner that maximizes the performance of the subsequent modules. Its output is fed into the “feature selection module”, which selects important features to reduce the overall computational complexity of the framework. Finally, the output of that module is fed into the “DL-based intrusion detection module”, which detects potential malicious traffic and attacks using three different DL-based models.

Figure 1.

Proposed EDA and DL framework for softwarized 5G network intrusion detection.

In what follows, a brief description of each of the three modules is provided.

- (1) Data Preprocessing Module: The first module of the framework is the “data preprocessing” module. It aims to adequately prepare the input data in such a manner that it maximizes the performance of the subsequent modules. It consists of three steps, namely “data cleaning”, “data encoding”, and “data normalization”.

- (a) Data Cleaning: The first step of data preprocessing is data cleaning, which refers to the process of “fixing systematic problems or errors in messy data” [41]. The goal is to improve the quality of the data so that the ML and DL models built upon them are also of high quality [42]. This process consists of two main steps: error detection and error repair [41,42]. In this work, two main data cleaning techniques are used, namely feature deletion and mean/mode replacement (a statistical technique) [43]. More specifically, features whose missing values are highly correlated with the output label are deleted, as these are considered extraneous to the analysis. For other features with some missing values, mean/mode replacement is used: missing values of categorical features are replaced with the mode of the feature, whereas missing values of numerical features are replaced with its mean [43]. This is done to ensure that the error repair step does not introduce numerical bias into the data.

- (b) Data Encoding: The second step of data preprocessing is data encoding, which refers to the process of transforming data/features from one format into another. Typically, this is performed to transform categorical features into numerical or integer features. In this work, two different data encoding techniques are used. The first is “one-hot encoding”, which is used for the categorical input features [44]. This technique adds a new feature column for every potential value of an input feature, using a binary indicator (i.e., either 0 or 1) to represent whether the value matches that category [44]. The second technique is “label encoding”, which is used for the categorical output target label [45]. In this case, the target label value is assigned a higher integer if it appears more often, as this reflects the importance and probability of an attack. This combination of encodings therefore ensures that regular categorical input features, in which the order of the values is not meaningful, are represented adequately without leading the underlying ML/DL algorithm to misinterpret their importance (through one-hot encoding), while guaranteeing that order is accounted for in the output target label (through label encoding).

- (c) Data Normalization: The third step of data preprocessing is data normalization, which refers to the process of scaling the features so that they have similar ranges [46]. This is performed to ensure that the trained ML/DL models are not biased towards particular features simply because their values differ in magnitude. To that end, this work proposes a custom hybrid data normalization to ensure consistent scaling of the features and consequently facilitate the convergence of the underlying ML/DL models. More specifically, it uses “min-max normalization” for one-hot encoded features and “Z-score normalization” for regular numerical features (which often follow a Gaussian distribution [47]).

In the case of “min-max normalization”, the scaled features can be calculated as follows [46]:

$$x_i' = \frac{x_i - \min(x_i)}{\max(x_i) - \min(x_i)}$$

where, for data point X, $x_i$ is the original value of feature i, $x_i'$ is the scaled value of the feature, and $\min(x_i)$ and $\max(x_i)$ are the minimum and maximum values of the feature among the data points. As a result, all the min-max normalized features have a unified scale between 0 and 1 [46].

In the case of “Z-score normalization”, the scaled features can be calculated as follows [46]:

$$x_i' = \frac{x_i - \mu_i}{\sigma_i}$$

where, for data point X, $x_i$ is the original value of feature i, $x_i'$ is the scaled value of the feature, $\mu_i$ is the mean, and $\sigma_i$ is the standard deviation of feature i. As a result, all the Z-score normalized features have a unified scale with zero mean and unit standard deviation [46].

- (2) Feature Selection Module: The second module of the framework is the “feature selection” module. It aims to select important features, with the goal of reducing the computational complexity of the overall framework. The importance of this module is amplified by the data encoding step (specifically one-hot encoding) in the previous module, since one-hot encoding adds a new column for every category of each categorical input feature. To that end, the “mutual information” feature selection technique is considered in this work.

- (a) Mutual Information: Mutual information feature selection is the process of selecting the features that provide the most information about the output target label. Mutual information is defined as “the amount of information that a random variable contains in another random variable” [48]. In this case, the mutual information between each input feature and the output target label, denoted $I(X;Y)$, is calculated using the following equation [48]:

$$I(X;Y) = \int_{Y}\int_{X} p(x,y)\,\log\frac{p(x,y)}{p(x)\,p(y)}\,dx\,dy$$

where $p(x,y)$ is the joint probability density function, and $p(x)$ and $p(y)$ are the marginal probability density functions of variables X (in this case, one of the input features) and Y (in this case, the output target label). The benefit of using mutual information feature selection is threefold [49]. First, it is not restricted to real-valued random variables, as it is computed from the joint and marginal distributions [49]. This means it can handle both continuous (numerical) and discrete (numerical or categorical) data [50]. This is crucial here, since all the features are either discrete numerical or categorical, so the mutual information score can be readily computed. Second, it captures both linear and non-linear relationships, which is essential in environments such as this one, where many of the features exhibit non-linear behavior [49]. Third, it is invariant under invertible and differentiable data transformations, which matters given some of the techniques employed, such as data encoding [49]. Thus, mutual information feature selection suits the application and the type of data considered in this work, as none of the observed data characteristics pose difficulties for its implementation.

- (3) DL-based Intrusion Detection Module: The third module of the framework is the “DL-based intrusion detection” module. This module focuses on using three different types of DL models for softwarized 5G network intrusion detection, namely “dense autoencoder neural network”, “convolutional neural network”, and “recurrent neural network” models.

- (a) Dense Autoencoder Neural Network Model: The first DL model is the dense autoencoder neural network model, which uses an autoencoder to enhance a dense neural network (DNN) model. An autoencoder is a type of unsupervised deep learning model that compresses its input and, trained through backpropagation, reconstructs that input as accurately as possible [51,52]. It yields an accurate latent representation of the original features, while minimizing the effects of linearity [51,52]. A DNN, on the other hand, is a fully connected supervised neural network model, in which each neuron in a layer is connected to every neuron in the preceding and following layers [53]. The autoencoder component is used for feature extraction, while the DNN component learns the characteristics needed for accurate prediction. Hence, this model leverages DL to learn complex patterns and representations from the input data while reducing its dimensionality, thereby improving computational efficiency and generalization performance.

- (b) Convolutional Neural Network Model: The second DL model considered is the convolutional neural network (CNN) model. CNNs are a type of feedforward neural network that extracts features using convolution operations [54,55]. A convolution operation is essentially an inner product between the weight vector (kernel) and the vectorized input features of the previous layer in the neural network architecture [56]. CNNs have three advantages over other deep neural network models: local connections, which eliminate the need for a fully connected architecture; weight sharing, which reduces the number of parameters to be learned; and dimensionality reduction through pooling layers, which reduce the amount of data needed while retaining useful information [54,55,56]. This makes them a reliable and attractive option for many applications and fields.

- (c) Recurrent Neural Network Model: The third DL model considered is the recurrent neural network (RNN) model. RNNs extend the feedforward neural network architecture with at least one feedback loop [57,58], with the goal of providing some form of “memory” in the learning process [57,58]. Consequently, RNNs are able to perform “temporal processing” and “learn sequences”, i.e., they can handle time-sequence data and identify patterns that repeat over time [57,58]. This is an advantage over other DL-based models and architectures, as RNNs can learn patterns not just within a data sample but also across several sequential data samples [59]. This makes them suitable for a variety of applications, such as natural language processing, audio and video data processing, stock prediction, and cybersecurity [60].
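The mutual information scoring described in item (2)(a) can be sketched with scikit-learn's `mutual_info_classif`, which estimates $I(X;Y)$ from samples (using a nearest-neighbour estimator for continuous features) rather than evaluating the integral directly. This is a minimal sketch on synthetic data: the six features, the label construction, and the cut-off `k=2` are illustrative assumptions, since the text does not state how many features are retained. Feature 3 is built to have almost no linear correlation with the label but a very different spread per class, illustrating the non-linear dependence that mutual information captures.

```python
from functools import partial

import numpy as np
from sklearn.feature_selection import SelectKBest, mutual_info_classif

# Hypothetical toy data standing in for preprocessed flow features (not 5G-NIDD).
rng = np.random.default_rng(0)
n_samples = 1000
y = rng.integers(0, 2, size=n_samples)  # 0 = benign, 1 = malicious
X = rng.normal(size=(n_samples, 6))      # start with six pure-noise features

# Feature 0 depends linearly on the label (mean shift between classes).
X[:, 0] += 3.0 * y
# Feature 3 depends non-linearly on the label: same mean, very different spread,
# so its Pearson correlation with y is near zero while its mutual information is not.
X[:, 3] = rng.normal(scale=np.where(y == 1, 3.0, 0.2))

# Score every feature by its estimated mutual information with the label and
# keep the k highest-scoring ones (k=2 is an assumption for illustration).
mi_score = partial(mutual_info_classif, random_state=0)
selector = SelectKBest(score_func=mi_score, k=2).fit(X, y)

print(np.round(selector.scores_, 3))
print(selector.get_support(indices=True))
X_reduced = selector.transform(X)  # only the selected columns remain
```

In practice, `k` (or a score threshold) would be tuned against detection performance and prediction time.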

The proposed approach has the attractive feature that it can be adopted in various scenarios. More specifically, it can be deployed in a distributed manner at the network edge or in a centralized manner at the network core, each option having its own advantages and disadvantages. In a distributed edge-level deployment, the DL-based intrusion detection module is deployed at the 5G base station, so that traffic sent by users to the base station is inspected to determine whether any malicious users are initiating attacks. The advantage of such a deployment is that it provides more granular monitoring of network attacks. Additionally, it can offer real-time detection, as the data are analyzed closer to their source. Its main disadvantage is that it may fail to detect distributed attacks, i.e., attacks performed jointly by multiple users rather than by a single user. In contrast, in a centralized core-level deployment, the DL-based intrusion detection module is deployed at the core network, whose servers collect data from multiple base stations and analyze them to detect attacks. The main advantage of this deployment is its more global view of the data traffic, which makes it better at detecting distributed attacks. Its main disadvantage is that it may take longer to detect attacks (i.e., it cannot offer real-time detection), due to the larger amount of data to be processed/analyzed and the transmission delays from the edge back to the core. In this work, the proposed approach is assumed to be deployed at the edge level, specifically at 5G base stations, to offer more granular intrusion detection capabilities. Note that the models can still be trained at the core and then deployed at the edge.

3.2. Computational Complexity

To determine the computational complexity of the proposed framework, each module’s computational complexity is explored. To that end, assume that the dataset consists of N training samples, M features, and L labels.

Starting with the data preprocessing module, the complexity of the data cleaning step is $O(NM)$, since all the samples of all the features need to be inspected to identify and replace missing values. The complexity of the data encoding step is also $O(NM)$, since all the samples of all the features must be inspected and potentially encoded. Similarly, the data normalization step has a complexity of $O(NM)$, since all the samples of all the features must be normalized. Thus, the data preprocessing module has an overall computational complexity of $O(NM)$.

The computational complexity of the feature selection module is that of the mutual information feature selection process. The complexity of the mutual information technique is estimated to be , since it involves sorting the features based on the computation of the mutual information score [61].

Finally, to determine the computational complexity of the DL-based intrusion detection module, the complexity of each of the DL models needs to be explored. The complexity of the dense autoencoder neural network is , since it is a fully connected neural network architecture [62]. However, this can be reduced to using sparse connections and other mathematical operations [62]. In contrast, the computational complexity of a CNN architecture is , where d is the kernel size for the convolution layer within the architecture [63]. Since d and L are typically much smaller than N, we can see that CNNs can be more computationally efficient compared to DNNs. Lastly, the computational complexity of RNNs can be estimated as , where is the number of hidden layers and r is the number of recurrent units [64].

Thus, the overall complexity of the framework ranges between (in the case of dense autoencoder neural networks and CNN models) and (in the case of RNN models).

4. Dataset Description, Exploration, and Discussion

This section provides a detailed description of the dataset, along with all the steps implemented to explore it and preprocess it. The exploratory data analysis process provides insights into the nature and distribution of the dataset. It also explains the need for different data preprocessing steps such as data cleaning, data encoding, and data normalization. It then presents and discusses in detail the insights observed about the nature of the dataset and the impact this has on the choice of the DL models considered as part of the proposed DL-based intrusion detection framework.

4.1. General Description

For the purpose of this research, the 5G Network Intrusion Detection Dataset (5G-NIDD), which contains concatenated data encompassing different attack scenarios, is utilized [65]. This dataset provides a comprehensive representation of network traffic data, including both malicious and benign activities. This dataset was collected from a 5G wireless network in Oulu, Finland, using the 5G Test Network (5GTN) Finland, an open-source ecosystem dedicated to 5G and beyond research [65,66]. Additional equipment used to facilitate data collection included Nokia Flexi Zone Indoor Pico Base Stations, Huawei modems, Raspberry Pi 4 Model B (as the attacker node), an HP laptop running Ubuntu (as the victim server), and a Dell switch [65]. This setup created realistic benign and malicious data flows.

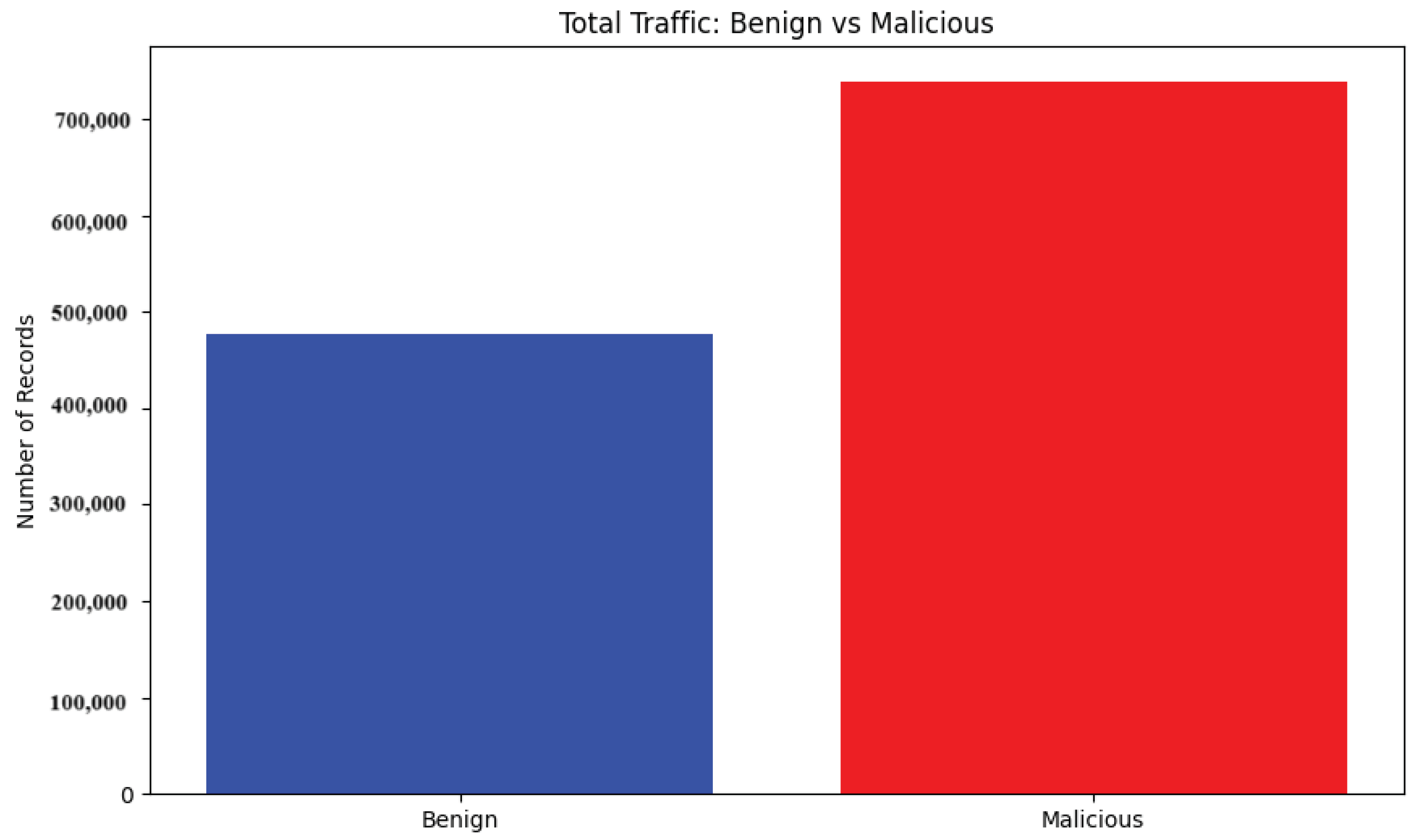

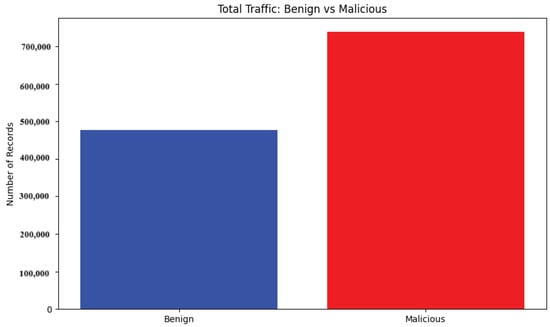

The dataset comprises 1,215,890 data points, with 477,737 representing benign activities and 738,153 representing various malicious activities, as shown in Figure 2. This indicates that the dataset is fairly balanced. This is in contrast to typical intrusion detection datasets, which are often imbalanced, as they have many more normal/benign data points compared to malicious/attack data points [67,68]. Each data point includes 112 collected and encoded features. The malicious activities encompass various attack types, including denial-of-service (DoS) attacks (e.g., ICMP flood, SYN flood, HTTP flood) and port scan attacks (TCP scan and UDP scan).
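As a quick check on the balance claim, the class shares implied by the counts above can be computed directly; this is plain arithmetic on the reported numbers (roughly 39% benign versus 61% malicious), not a re-analysis of the dataset.

```python
# Class counts reported for 5G-NIDD in the text above.
benign = 477_737
malicious = 738_153
total = benign + malicious

print(total)  # 1215890
print(f"benign:    {benign / total:.1%}")
print(f"malicious: {malicious / total:.1%}")
print(f"malicious-to-benign ratio: {malicious / benign:.2f}")
```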

Figure 2.

Number of benign and malicious attack instances—overview.

Each data point represents a data flow rather than a single packet. A flow is a collection of packets that are transmitted from one source (i.e., one source IP address and one source port) to one destination (one destination IP address and one destination port) using the same protocol [65]. This format provides a high-level description of the features shared across transmissions. More specifically, using this format, packet-level features (such as the source IP, source port number, destination IP, destination port number, protocol, etc.) and flow-level features (such as the duration, runtime, total number of bytes, total number of packets, etc.) are collected. As a result, both port scanning attacks (such as UDP scan, SYN scan, etc.) and denial-of-service attacks (such as UDP flood, SYN flood, TCP flood, etc.) can be readily captured. Thus, the dataset can describe what happens in the network over time, without explicitly being a time-series dataset. For more details on the dataset, its generation process, and the collected features, refer to the original source [65].
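The flow abstraction can be illustrated by grouping packets on the 5-tuple described above. The sketch below is a toy example under stated assumptions: the packet table, its column names (`src_ip`, `ts`, `bytes`, etc.), and the aggregated features are hypothetical and do not reflect the 5G-NIDD schema or the tool used to generate it. Real flow meters also apply idle/active timeouts that split long-lived flows, which this sketch omits.

```python
import pandas as pd

# Hypothetical packet capture; column names are illustrative, not 5G-NIDD's schema.
packets = pd.DataFrame({
    "src_ip":   ["10.0.0.5", "10.0.0.5", "10.0.0.5", "10.0.0.7"],
    "src_port": [40000, 40000, 40001, 53],
    "dst_ip":   ["10.0.0.1", "10.0.0.1", "10.0.0.1", "10.0.0.1"],
    "dst_port": [80, 80, 80, 5353],
    "proto":    ["tcp", "tcp", "tcp", "udp"],
    "ts":       [0.00, 0.25, 0.30, 1.00],  # packet timestamps in seconds
    "bytes":    [60, 1500, 60, 120],
})

# A flow = all packets sharing the same 5-tuple
# (source IP/port, destination IP/port, protocol).
five_tuple = ["src_ip", "src_port", "dst_ip", "dst_port", "proto"]
flows = (
    packets.groupby(five_tuple, as_index=False)
    .agg(start=("ts", "min"), end=("ts", "max"),
         tot_bytes=("bytes", "sum"), tot_pkts=("bytes", "count"))
)
# Flow-level feature derived from the aggregated timestamps.
flows["duration"] = flows["end"] - flows["start"]
print(flows)
```

Each row of `flows` then plays the role of one data point: the packet-level key fields plus flow-level statistics such as duration, total bytes, and packet count.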

As mentioned earlier, although such a high proportion of attack instances is not typical in real environments, it serves two purposes. The first is to have fairly balanced data, so that ML or DL intrusion detection frameworks are not biased towards the majority class and no oversampling is required. The second is to provide an ample number and variety of attack instances for the DL models to learn from. As a result, multiple performance evaluation metrics can be used, including accuracy, since its value is not distorted by a small number of attack samples.

4.2. Data Pre-Processing

To ensure the input dataset was suitable for the DL models considered in this work, various data pre-processing stages were applied. This included data cleaning (tackling the issue of missing values), data encoding, and data normalization.

4.2.1. Data Cleaning

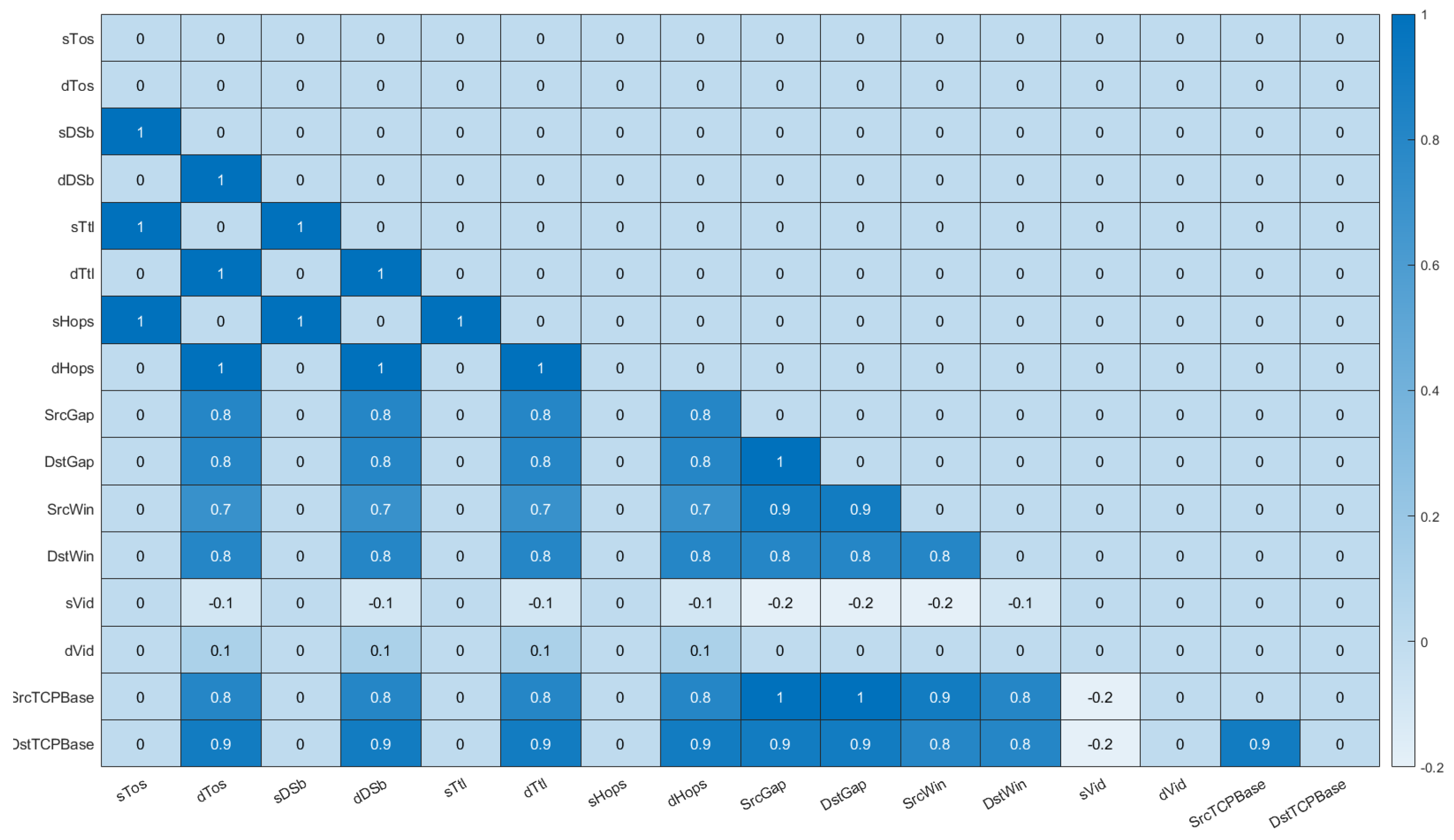

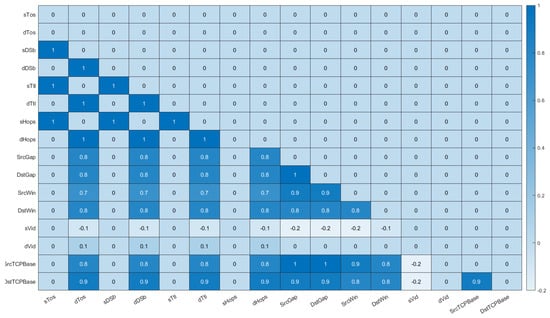

An initial data cleaning process was conducted, in which duplicate entries were removed. In addition, a few rows belonging to the benign class were dropped due to a significant number of missing values across various columns, and certain columns (sVid and dVid) were removed, as they were deemed extraneous to the analysis. The remaining missing values in each row were replaced with the most frequent value (mode) within each group, as determined by the attack type column, using a custom function. The mode was selected as the imputation method because each of these columns had a low number of unique values (often only 6–7), indicating that the data were categorical or ordinal in nature. Using the mode in this instance preserved the categorical nature of the data, while avoiding the introduction of values that may not be representative of the original data distribution. For a subset of columns, an iterative imputer (MICE) was employed to handle any remaining missing data.

Figure 3 depicts the degree of correlation between columns with missing values; more specifically, it shows how often pairs of features have missing values at the same time. For example, the feature sTos had a correlation value of 1 with sDSb, sTtl, and sHops, meaning that every time sTos had a missing value, sDSb, sTtl, and sHops were all missing as well. This was expected, since all these features relate to the data traffic source: if the system was unable to collect a value for one of them, it would not be able to collect a value for any of them. Similarly, the feature dTos had a correlation value of 1 with dDSb, dTtl, and dHops, meaning that every time dTos had a missing value, dDSb, dTtl, and dHops were all missing as well. This gave us a better understanding of the relationships between the collected features and helped us better select features in the “feature selection module”.
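The two cleaning ideas above, checking which columns go missing together and filling gaps with the per-class mode, can be sketched with pandas. This is a minimal illustration under assumptions: the miniature table is hypothetical (only the column names sTos, sTtl, dTos and the attack-type grouping come from the text), and `fill_with_group_mode` is a hypothetical stand-in for the authors' custom function, whose code is not given. The nullity correlation computed here is the Pearson correlation between missing-value indicators, the quantity typically shown in a missingness heatmap such as Figure 3.

```python
import numpy as np
import pandas as pd

# Hypothetical miniature of the flow table: sTos and sTtl go missing together,
# dTos goes missing on its own; "Attack Type" is the grouping column.
df = pd.DataFrame({
    "Attack Type": ["Benign", "Benign", "Benign", "UDPFlood", "UDPFlood", "UDPFlood"],
    "sTos": [0.0, np.nan, 0.0, 2.0, np.nan, 2.0],
    "sTtl": [64.0, np.nan, 64.0, 128.0, np.nan, 128.0],
    "dTos": [0.0, 0.0, np.nan, 0.0, 0.0, 0.0],
})
features = ["sTos", "sTtl", "dTos"]

# Nullity correlation: Pearson correlation between the 0/1 missing-value indicators.
# A value of 1 means the two columns are always missing at the same time.
nullity_corr = df[features].isna().astype(int).corr()
print(nullity_corr.round(2))


def fill_with_group_mode(frame, group_col, cols):
    """Hypothetical helper: fill each column's gaps with the most frequent
    value observed within the same group (e.g., the same attack type)."""
    out = frame.copy()
    for col in cols:
        out[col] = out.groupby(group_col)[col].transform(
            # mode() ignores NaN; skip groups where the column is entirely missing
            lambda s: s.fillna(s.mode().iloc[0]) if s.notna().any() else s
        )
    return out


cleaned = fill_with_group_mode(df, "Attack Type", features)
print(cleaned)
```

Filling per attack type, rather than with a global mode, keeps imputed values consistent with the class each row belongs to; columns that remain incomplete could then be passed to an iterative imputer.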

Figure 3.

Missing value analysis—the shade of blue represents the degree of correlation of missing values between columns.

4.2.2. Data Encoding

For the data encoding step, two distinct data encoding techniques were employed. The first was one-hot encoding, which was applied to the input features. This technique was used because converting categorical variables into binary dummy variables makes them easier to use with ML and DL methods. For each categorical feature, one dummy column was dropped, since its value is implied by the remaining dummies and would otherwise be redundant. On the other hand, label encoding using the LabelEncoder class from scikit-learn was applied to the output target label [69]. In this case, the output target labels were transformed into integers to facilitate the work of the underlying DL models.
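The two encodings can be sketched as follows, assuming pandas' `get_dummies` for the one-hot step (the text does not name the one-hot tool) and scikit-learn's `LabelEncoder` for the target. The toy `Proto`/`Attack Type` data are hypothetical. Note that `LabelEncoder` assigns integers in sorted (alphabetical) order of the class names rather than by class frequency; the last lines show a hypothetical custom mapping that ranks classes by frequency, in case the frequency-based ordering described in Section 3.1 is intended.

```python
import pandas as pd
from sklearn.preprocessing import LabelEncoder

# Hypothetical flows with one categorical input feature and a multi-class target.
df = pd.DataFrame({
    "Proto": ["tcp", "udp", "icmp", "udp", "tcp", "udp"],
    "Attack Type": ["Benign", "UDPFlood", "ICMPFlood", "UDPFlood", "Benign", "UDPFlood"],
})

# One-hot encode the input feature; drop_first=True removes one dummy per feature,
# since an all-zeros row already identifies the dropped category ("icmp" here).
X = pd.get_dummies(df[["Proto"]], drop_first=True, dtype=int)
print(X.columns.tolist())    # ['Proto_tcp', 'Proto_udp']

# LabelEncoder maps each class to an integer in sorted order of the class names.
le = LabelEncoder()
y = le.fit_transform(df["Attack Type"])
print(le.classes_.tolist())  # ['Benign', 'ICMPFlood', 'UDPFlood']
print(y.tolist())            # [0, 2, 1, 2, 0, 2]

# Hypothetical frequency-ranked alternative: more frequent classes get higher integers.
counts = df["Attack Type"].value_counts(ascending=True)
freq_rank = {label: rank for rank, label in enumerate(counts.index)}
y_freq = df["Attack Type"].map(freq_rank)
print(y_freq.tolist())       # [1, 2, 0, 2, 1, 2]
```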

4.2.3. Data Normalization

In this work, a custom hybrid data normalization process was used to ensure consistent scaling and facilitate the convergence of the considered models. More specifically, min-max normalization was applied to the one-hot encoded features, while Z-score normalization was applied to the numerical features [46]. This was done because the dummy variables created by one-hot encoding are always either 0 or 1. Hence, these features do not have a normal-like distribution and could yield potentially biased data if other normalization techniques (such as Z-score normalization) were applied. In contrast, the input features that are already numerical exhibit a normal-like distribution. Because Z-score normalization is a linear transformation, applying it to them preserves the shape of their original distribution while placing them on a common scale. As a result, the input features become more consistent and are better suited to being fed to the underlying DL models.
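A minimal sketch of the hybrid scheme, assuming scikit-learn's `ColumnTransformer` to route one-hot columns to `MinMaxScaler` and numerical columns to `StandardScaler` (the text does not state which tools were used); the column names and values are hypothetical. The last lines recompute both scalings from the min-max and Z-score formulas in Section 3.1 to confirm they agree. `StandardScaler` uses the population standard deviation (`ddof=0`), so the manual version does the same.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.preprocessing import MinMaxScaler, StandardScaler

# Hypothetical inputs: two one-hot dummy columns and two numerical flow features.
X = pd.DataFrame({
    "Proto_tcp": [1, 0, 0, 1],
    "Proto_udp": [0, 1, 0, 0],
    "Dur":       [0.5, 1.5, 2.5, 3.5],
    "TotBytes":  [100.0, 300.0, 500.0, 700.0],
})
onehot_cols = ["Proto_tcp", "Proto_udp"]
numeric_cols = ["Dur", "TotBytes"]

# Min-max scaling for the dummies, Z-score scaling for the numerical features.
hybrid = ColumnTransformer([
    ("minmax", MinMaxScaler(), onehot_cols),
    ("zscore", StandardScaler(), numeric_cols),
])
X_scaled = hybrid.fit_transform(X)

# The same result computed directly from the two normalization formulas.
oh = X[onehot_cols]
mm = (oh - oh.min()) / (oh.max() - oh.min())
num = X[numeric_cols]
z = (num - num.mean()) / num.std(ddof=0)
manual = np.hstack([mm.to_numpy(), z.to_numpy()])
print(np.allclose(X_scaled, manual))  # True
```

For a dummy column that contains both 0 and 1, min-max scaling leaves the values unchanged, so the dummies stay binary while the numerical features are centred and rescaled.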

4.3. Exploratory Data Analysis Discussion

Exploratory data analysis (EDA) can be defined as the process of “using descriptive statistics and graphical tools to better understand data” [70]. Its goal is to gain additional insights into the nature of a dataset and to facilitate the detection of any outliers and anomalies within it [70]. Beyond visualizing the data, EDA can help formulate hypotheses that can later be tested [71]. That is why EDA is considered a crucial first step in developing any data mining or ML/DL framework, as it can guide the process and the choice of models within the framework [71]. It is worth noting that the EDA acronym in this context (AI/ML applications and frameworks) should not be confused with the same acronym commonly used in electronic design, where it stands for electronic design automation. Here, it refers specifically to the process of exploring and analyzing the data to uncover its underlying patterns.

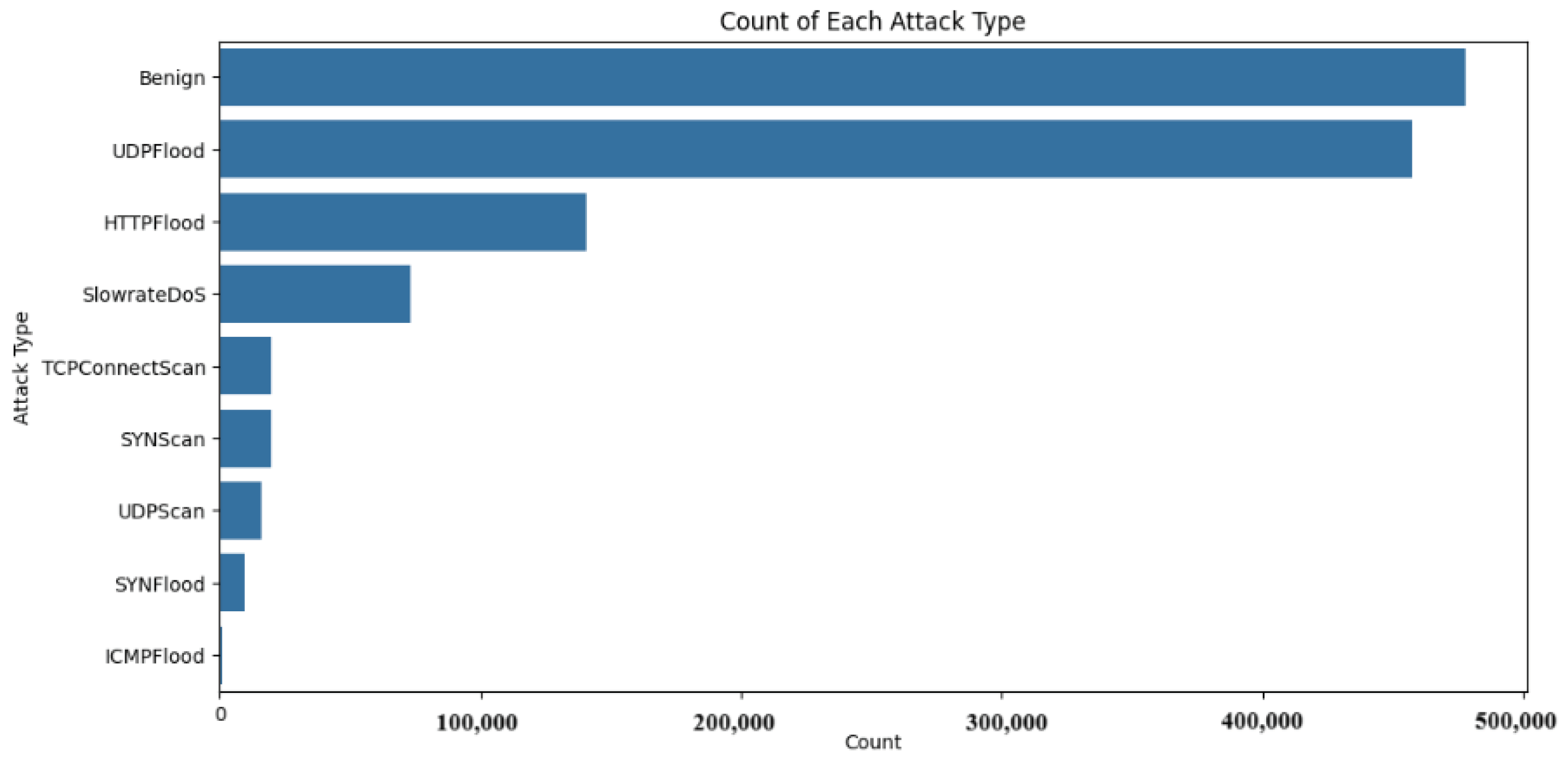

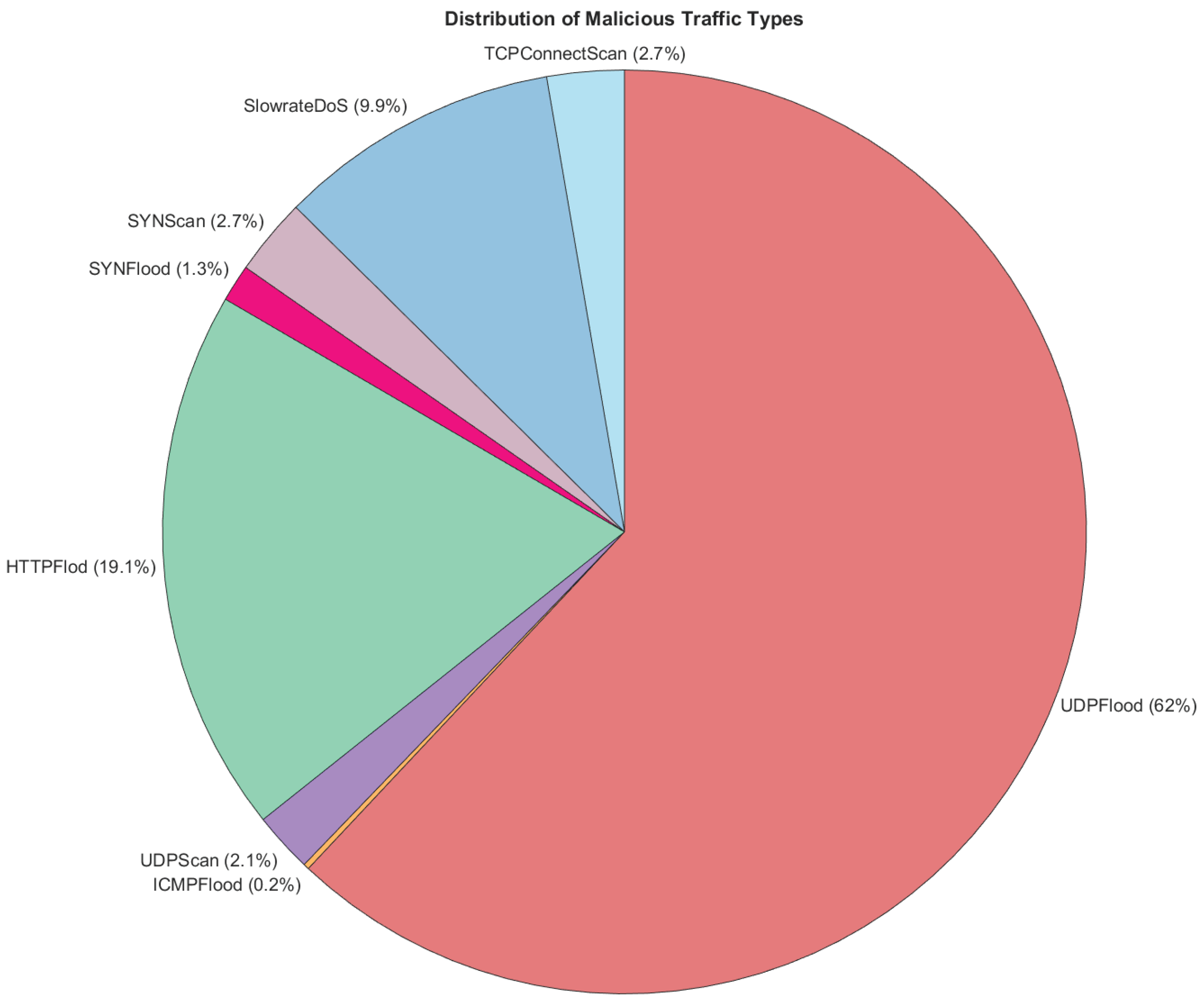

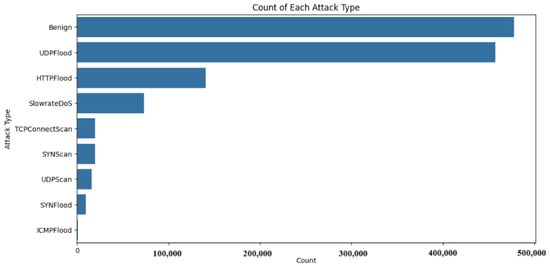

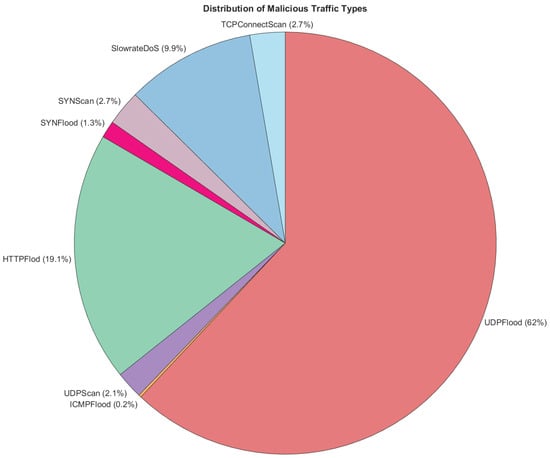

As part of the exploratory data analysis process, several steps were taken. Starting with a simple count, Figure 4 and Figure 5 show the number and percentage distribution of instances per attack type, respectively. The top attack types were UDPFlood and HTTPFlood, which are common distributed denial-of-service (DDoS) attacks. This mirrors real-life attack patterns: a recent report by QRATOR Labs indicated that UDP flood attacks constituted 60.2% of DDoS attacks in 2023 [72]. Similarly, the report stated that other flooding attack patterns, such as IP flood (similar to HTTP flood) and SYN flood, accounted for roughly 23% of attacks. This reinforces the realistic nature of the 5G-NIDD dataset generated in [65].
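The counts and percentages behind plots such as Figure 4 and Figure 5 can be obtained with a frequency table. The sketch below uses hypothetical toy labels, not the actual 5G-NIDD counts.

```python
from collections import Counter

def class_distribution(labels):
    """Return (label, count, percentage) tuples sorted by descending count."""
    counts = Counter(labels)
    total = sum(counts.values())
    return [(label, n, 100.0 * n / total) for label, n in counts.most_common()]

# Hypothetical toy labels.
labels = ["UDPFlood"] * 5 + ["HTTPFlood"] * 3 + ["SYNFlood"] * 2
dist = class_distribution(labels)
```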

Figure 4.

Number of benign and malicious attack instances—categorized per attack type.

Figure 5.

Attack type distribution.

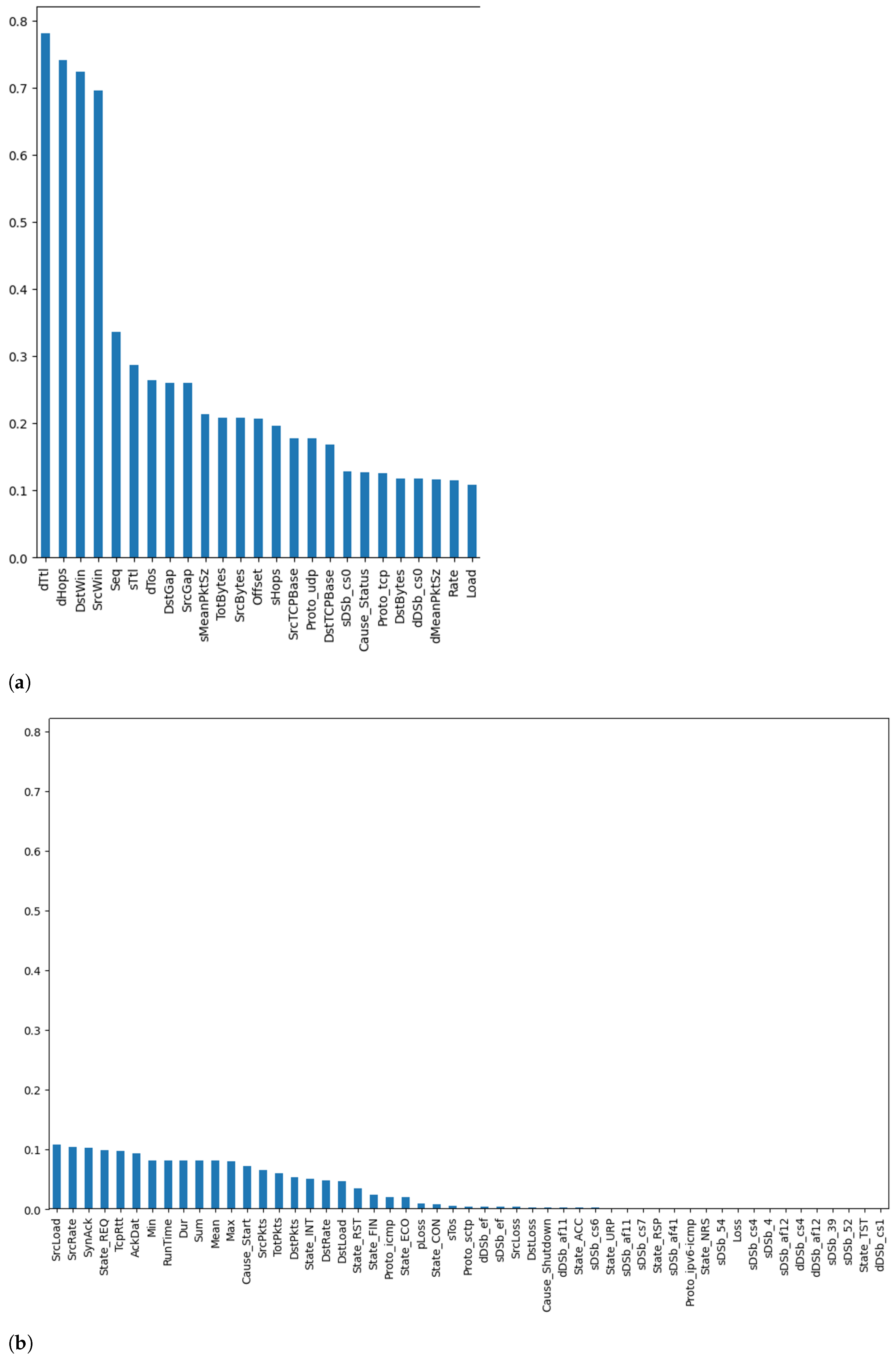

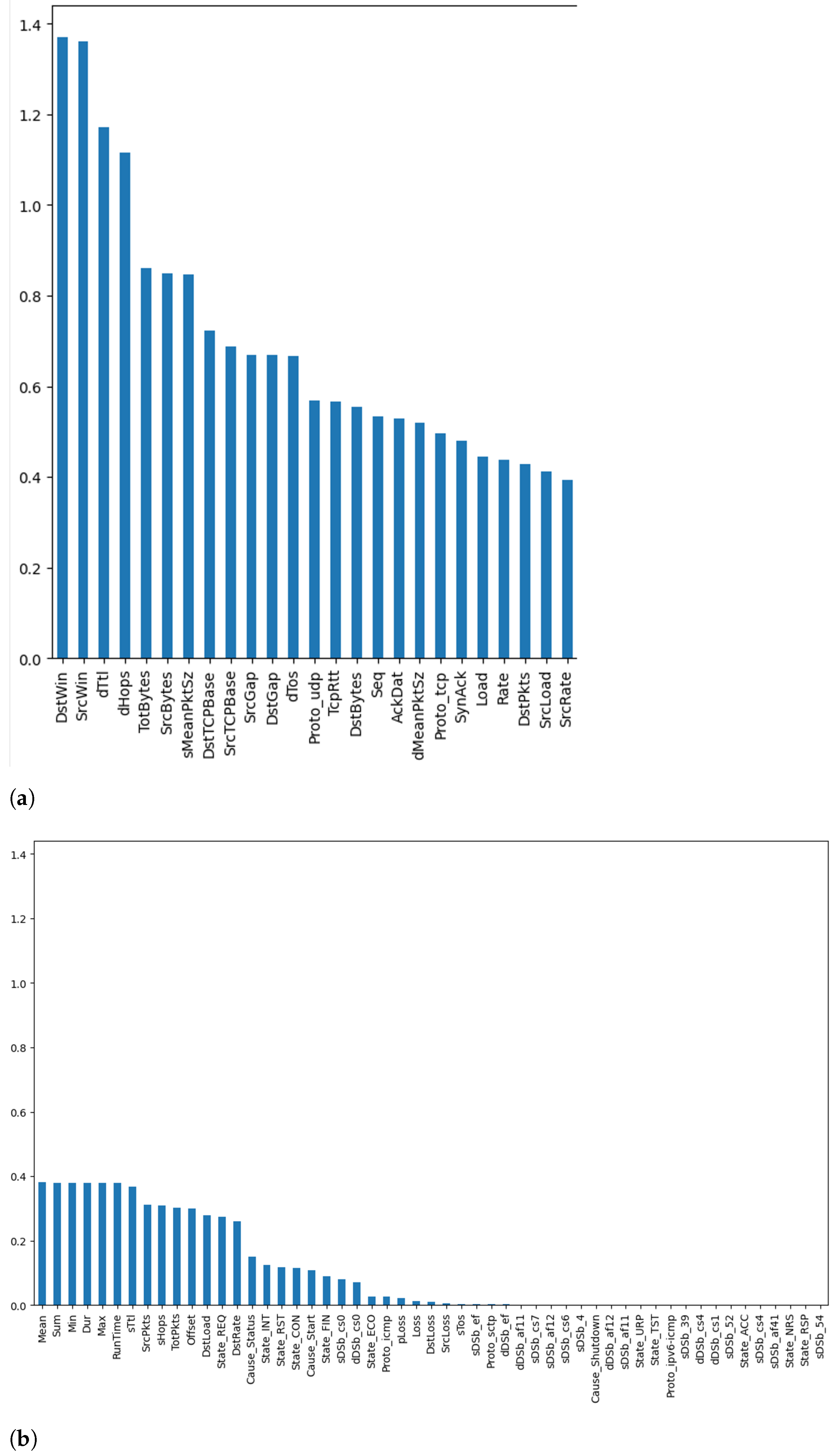

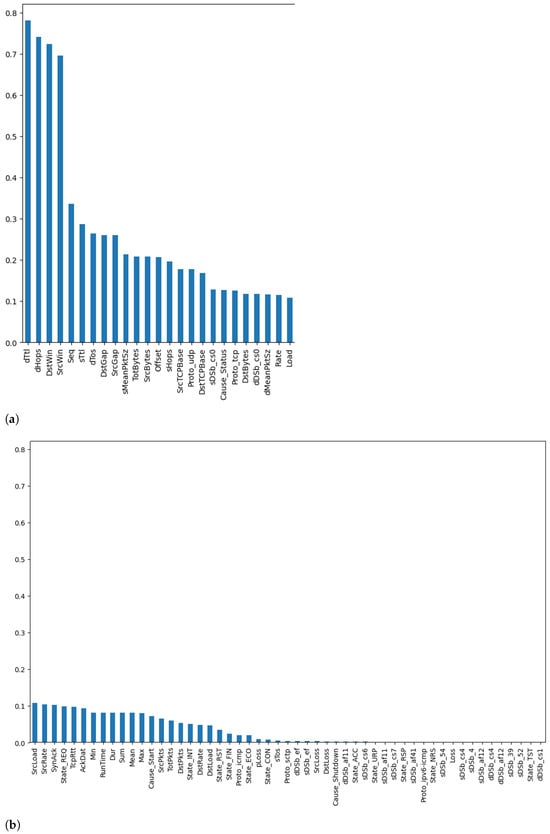

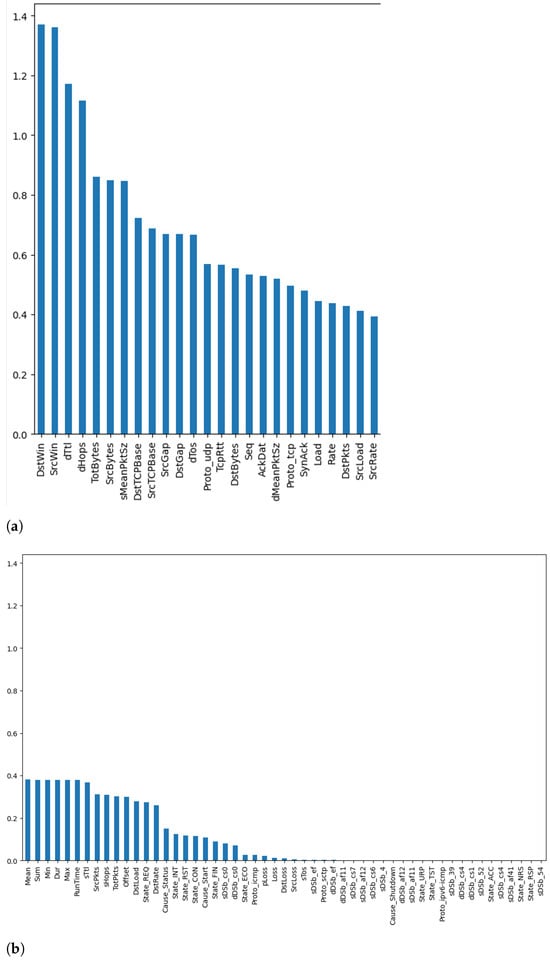

The next step was calculating the mutual information score of the different features with respect to the class label. Figure 6 and Figure 7 plot the mutual information scores for the binary (i.e., benign or malicious) and multi-class (i.e., categorized by attack type) cases, respectively. More specifically, Figure 6a and Figure 7a show the top 25 features, while Figure 6b and Figure 7b show the remaining features for the binary and multi-class cases, respectively. These figures show that the top features in both cases were destination time-to-live (dTtl), number of hops to destination (dHops), destination window (DstWin), and source window (SrcWin), the only difference being their order. This implies that features such as dTtl and dHops were more informative about whether an instance was an attack or not. However, when trying to determine the specific attack type, features such as DstWin and SrcWin were more informative. These visualizations give insights into the potential impact of the different features on the overall performance of the underlying DL models used for intrusion detection and attack classification.
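For discrete features, mutual information can be estimated directly from empirical frequencies as I(X;Y) = Σ p(x,y) log(p(x,y) / (p(x)p(y))). The sketch below is this plug-in estimator for two discrete sequences; the estimator used in this work is not stated (scikit-learn's mutual_info_classif, for instance, uses a nearest-neighbour estimator unless features are flagged via its discrete_features parameter), and the toy values are hypothetical.

```python
import math
from collections import Counter

def mutual_information(x, y):
    """Plug-in estimate of I(X;Y) in nats from two equal-length discrete sequences."""
    n = len(x)
    joint = Counter(zip(x, y))
    px = Counter(x)
    py = Counter(y)
    mi = 0.0
    for (a, b), c in joint.items():
        # p(a,b) * log(p(a,b) / (p(a) p(b))) written in terms of raw counts.
        mi += (c / n) * math.log(c * n / (px[a] * py[b]))
    return mi

# Hypothetical: a feature that fully determines a balanced binary label gives log(2);
# a feature independent of the label gives 0.
mi_informative = mutual_information([64, 64, 128, 128], [1, 1, 0, 0])
mi_independent = mutual_information([1, 2, 1, 2], [0, 0, 1, 1])
```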

Figure 6.

Mutual information—binary case: (a) Top 25 Features, (b) Remaining Features.

Figure 7.

Mutual information—multi-class case: (a) Top 25 Features, (b) Remaining Features.

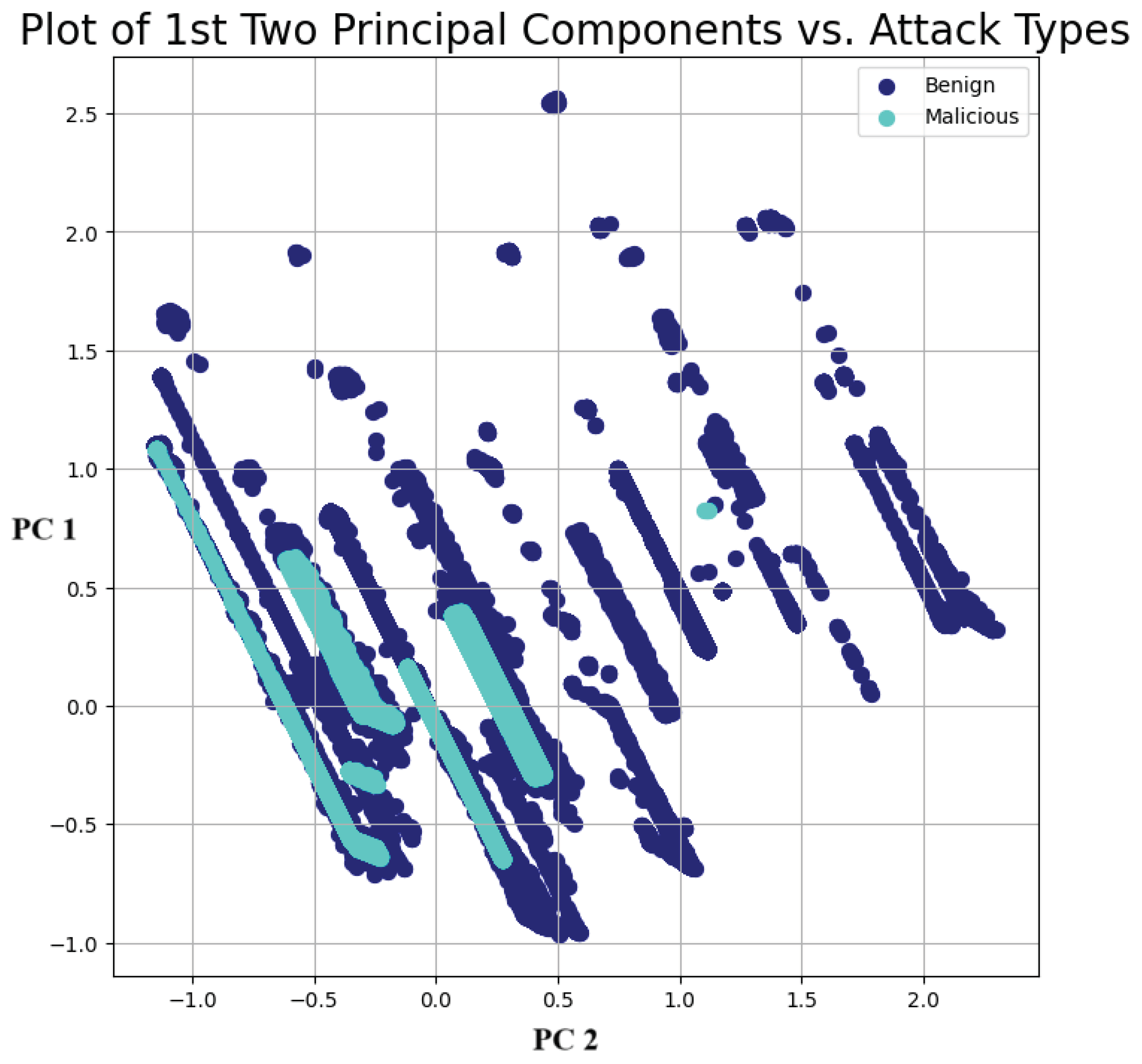

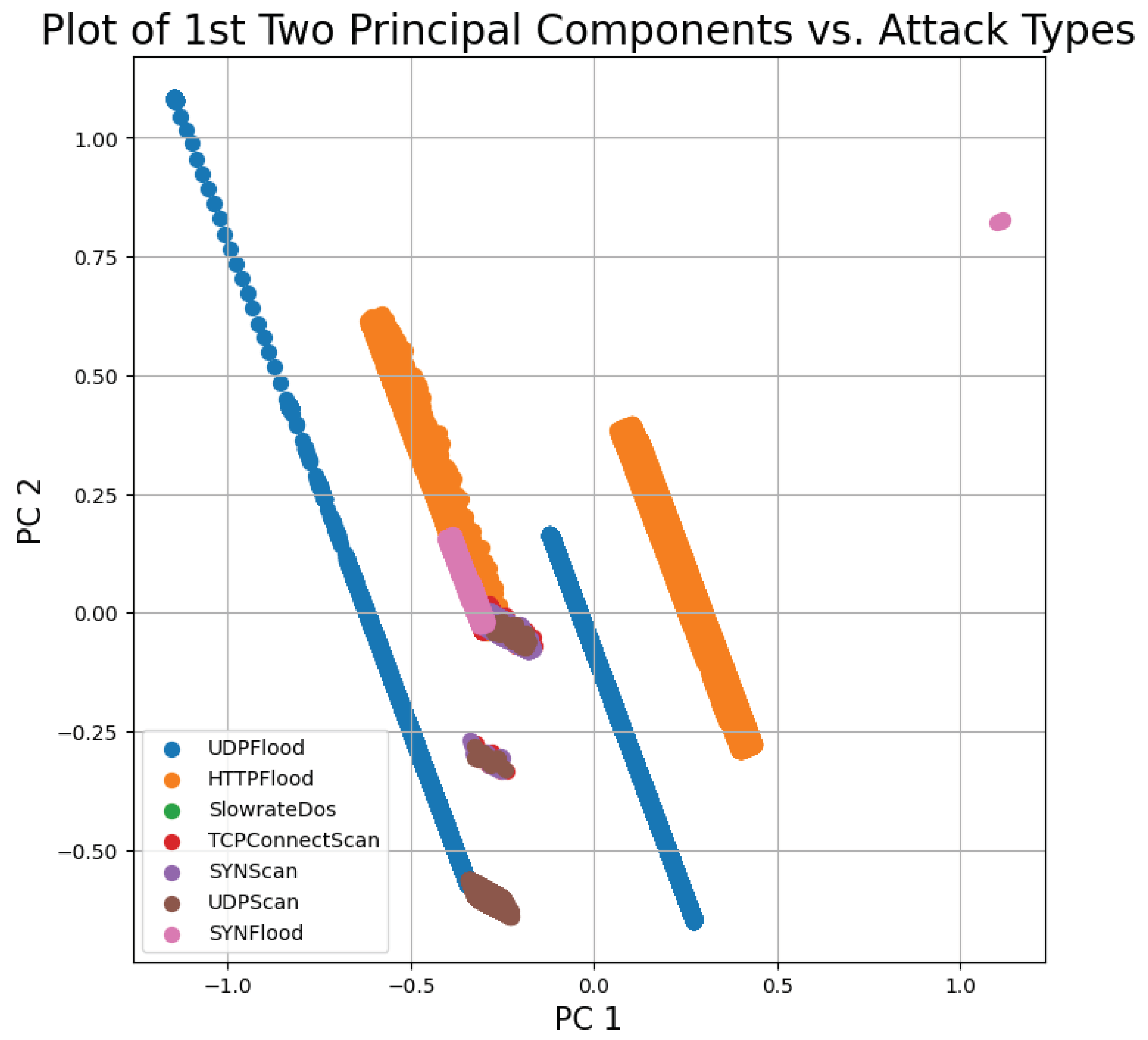

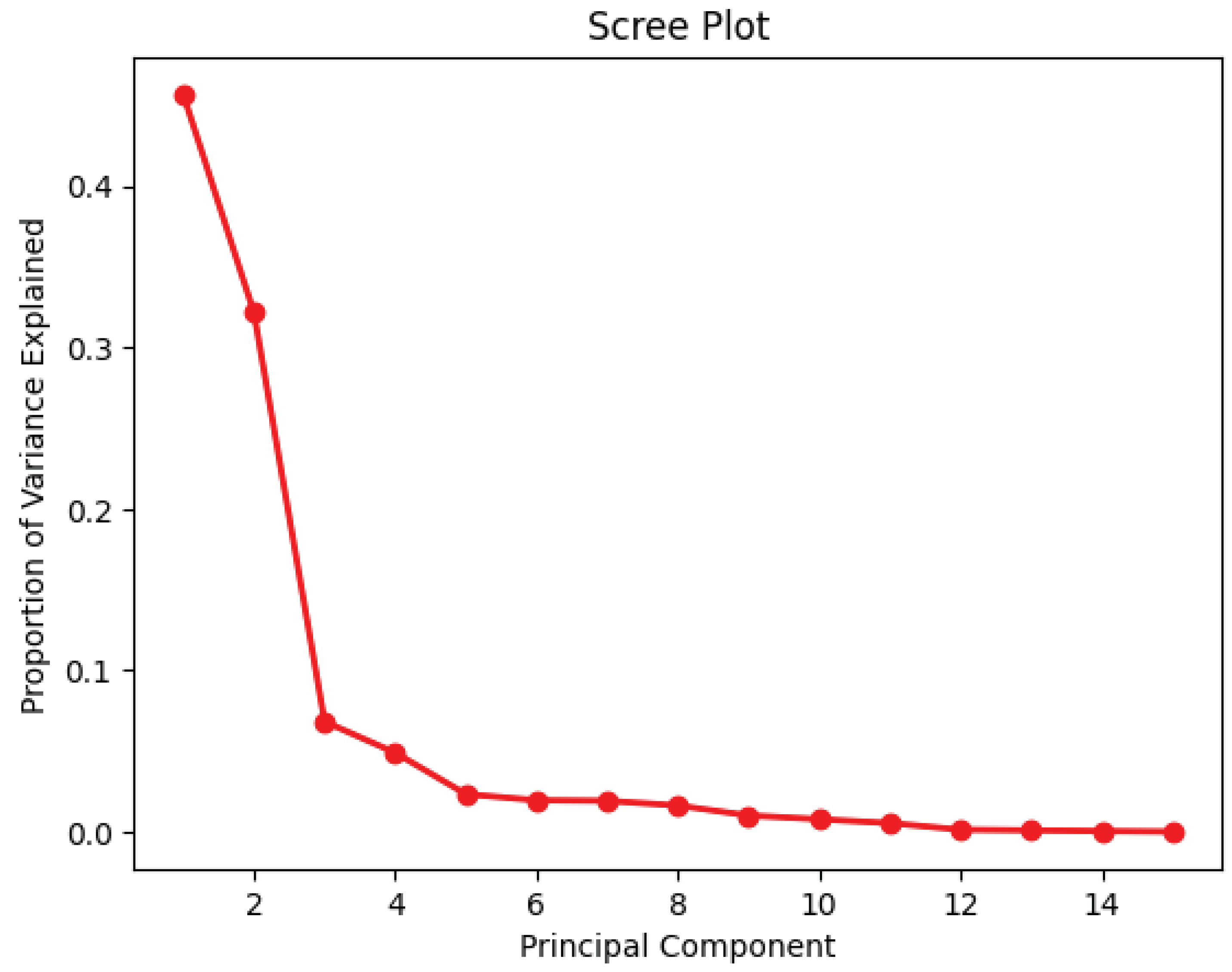

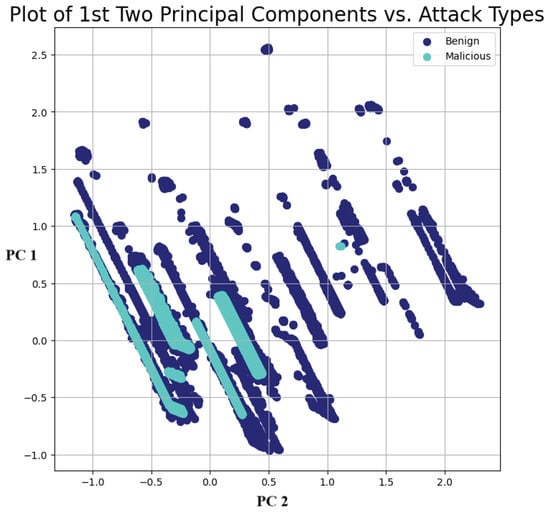

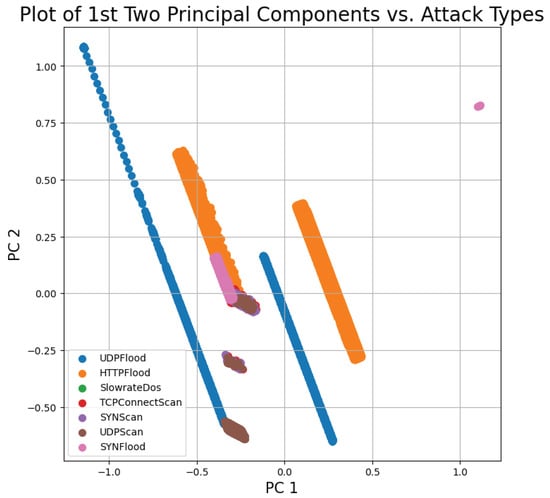

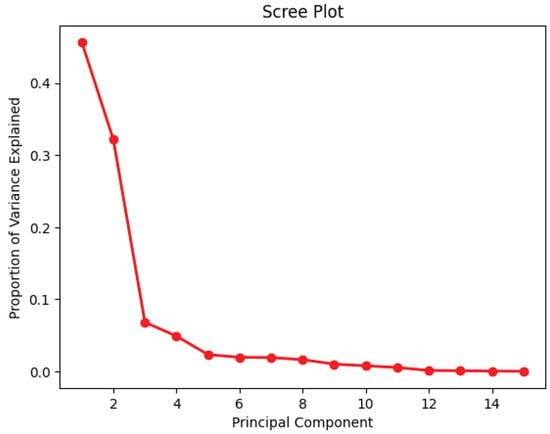

To further explore the data, a principal component analysis (PCA) was conducted to obtain insights into the overall distribution of the data per class. Figure 8 and Figure 9 plot the first and second principal components for the binary and multi-class cases, respectively, while Figure 10 plots the importance of each principal component. As can be seen from Figure 8 and Figure 9, the classes were not linearly separable in the space of the first two principal components, indicating that the data were highly non-linear. This was expected, since network traffic data often exhibit non-linear trends, as observed in multiple previous works [15,16,17,27]. This further emphasizes the need for ML and DL models that can effectively and efficiently deal with non-linear data, and thus justifies the choice of DL models such as DNNs with autoencoders, CNNs, and RNNs. Additionally, plotting only the first two principal components was justified by the results shown in Figure 10, which illustrate that these two components explained nearly 80% of the variance within the data.
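The projection and the per-component importance can be computed from a singular value decomposition of the centred data. The sketch below uses synthetic data with one dominant direction; the real feature matrix, and whether it was standardized before PCA, are assumptions outside this example.

```python
import numpy as np

def pca(X, n_components=2):
    """Return the projection onto the top principal components and the
    explained variance ratio of every component (sorted in decreasing order)."""
    Xc = X - X.mean(axis=0)
    # Rows of Vt are the principal axes, ordered by decreasing singular value.
    _, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    var = S**2 / (X.shape[0] - 1)
    ratio = var / var.sum()
    scores = Xc @ Vt[:n_components].T
    return scores, ratio

# Synthetic data: the second column is almost a multiple of the first.
rng = np.random.default_rng(0)
z = rng.normal(size=(500, 1))
X = np.hstack([z, 2 * z + 0.1 * rng.normal(size=(500, 1)), 0.1 * rng.normal(size=(500, 1))])
scores, ratio = pca(X)
cumulative_two = np.cumsum(ratio)[1]   # share of variance kept by the first two components
```

The value of cumulative_two is the quantity that a figure like Figure 10 summarizes; a value close to 0.8 would correspond to the "nearly 80%" reported above.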

Figure 8.

Principal component analysis—binary case.

Figure 9.

Principal component analysis—multi-class case.

Figure 10.

Principal component analysis—importance.

5. Experimental Results and Discussion

This section presents the experiment setup, along with the performance metrics used as part of the evaluation. It also describes the architecture implementation details of the different DL-based models proposed. Additionally, it presents and discusses the results achieved for both the binary and multi-class scenarios.

5.1. Experiment Setup

The experiments were carried out on the Sol supercomputer at Arizona State University, using NVIDIA A100 GPUs. Each node was equipped with 15 GB of memory, providing substantial computational resources for a thorough evaluation of the proposed DL models. The models were trained and tested on this setup, and the resulting performance metrics were analyzed to assess their effectiveness in identifying various cyber threats in softwarized 5G networks.

5.2. Performance Metrics

The performance of the proposed DL-based intrusion detection framework was evaluated using several metrics, namely testing accuracy, precision, recall, and F1-score, calculated using their standard definitions [16,73].
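In terms of the numbers of true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN), these metrics are defined as follows. For the multi-class case, precision, recall, and F1-score are computed per class; how the per-class values were averaged is not stated in this section.

$$
\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}, \qquad
\text{Precision} = \frac{TP}{TP + FP},
$$

$$
\text{Recall} = \frac{TP}{TP + FN}, \qquad
F_1 = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}.
$$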

Additionally, the training and testing times (the latter also referred to as prediction time) were evaluated. The training time was used to quantify the computational complexity of each model, and thus to confirm the theoretical computational complexity analysis conducted earlier. The testing/prediction time, on the other hand, provided insights into how quickly the models can detect attacks, and hence into their suitability for real-time or near real-time deployment. Accordingly, the considered metrics provided valuable insights into both the capabilities and constraints of the models.
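Wall-clock training or prediction time can be measured with a monotonic high-resolution clock. The sketch below wraps an arbitrary callable; the predict function is a hypothetical stand-in for model inference, and the timing procedure actually used in this work is not described.

```python
import time

def timed(fn, *args, repeats=1):
    """Call fn(*args) `repeats` times; return (last result, mean seconds per call).

    time.perf_counter is monotonic and high-resolution, which makes it suitable
    for measuring elapsed wall-clock time.
    """
    start = time.perf_counter()
    result = None
    for _ in range(repeats):
        result = fn(*args)
    return result, (time.perf_counter() - start) / repeats

# Hypothetical stand-in for a model's prediction step.
def predict(batch):
    return [sum(x) > 0 for x in batch]

preds, seconds = timed(predict, [[1, -2], [3, 4]], repeats=3)
```

When timing GPU inference (for example, on A100 GPUs with PyTorch), kernel launches are asynchronous, so it is common to synchronize the device (e.g., torch.cuda.synchronize()) before reading the clock; otherwise the measured time can be too short.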

5.3. DL Architecture Implementations

The implementation details of each of the considered DL models are provided below.

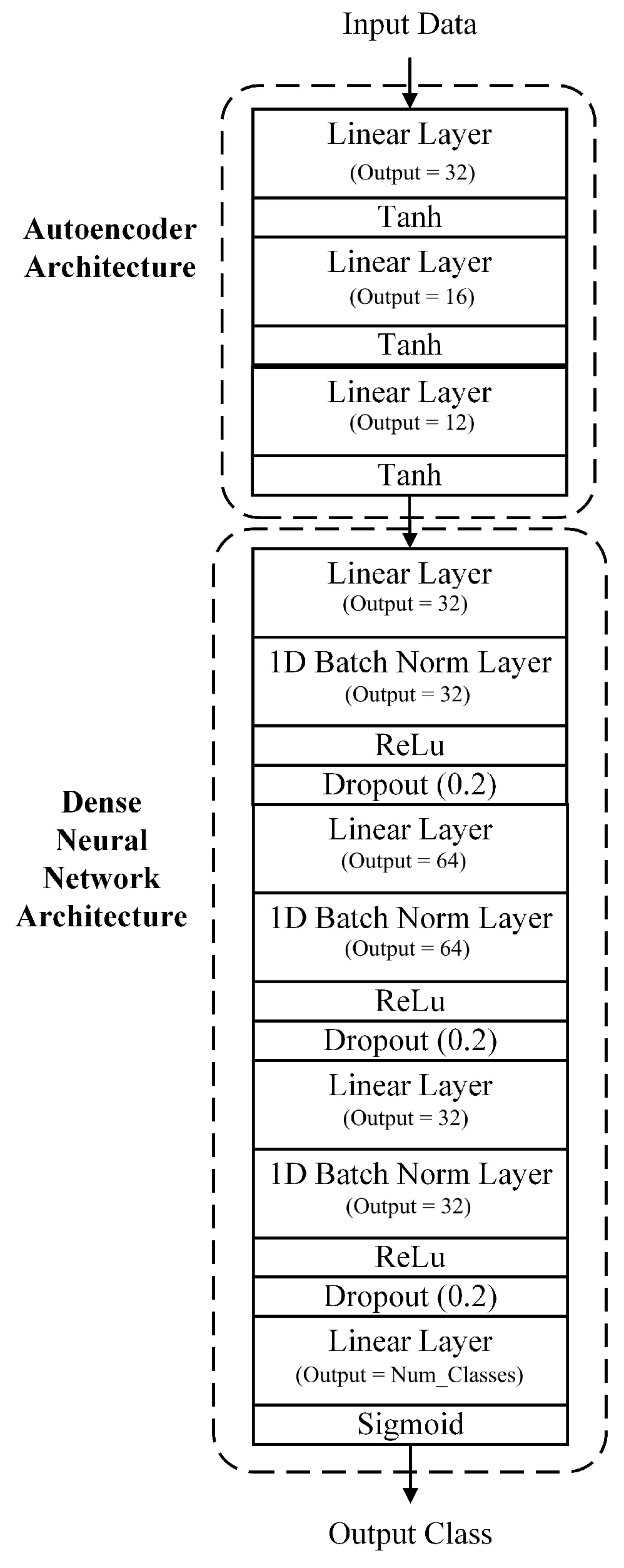

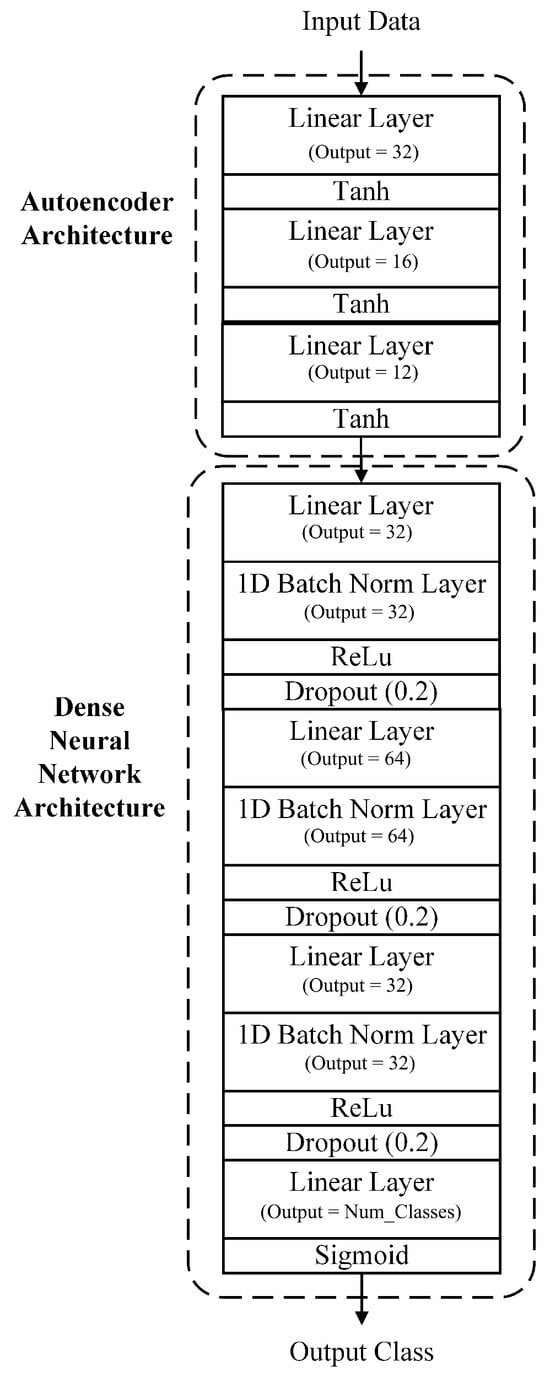

5.3.1. Dense Autoencoder Neural Network Architecture

The considered dense autoencoder neural network model consisted of two main portions, namely the autoencoder and the dense neural network portions. The autoencoder portion consisted of three blocks, each made up of one linear layer followed by a tanh activation layer, with each linear layer having a different output size. More specifically, the first linear layer had an input size of 112 (the size of the input feature set) and an output size of 32. The second linear layer had an input size of 32 and an output size of 16. Finally, the third linear layer had an input size of 16 and an output size of 12. In the autoencoder portion, combining linear layers with tanh activations allowed accurate models to be developed while handling any negative values that might arise during feature extraction, since tanh outputs values in the range (-1, 1).

The output of the autoencoder portion was then fed into the dense neural network portion, which consisted of multiple blocks, each made up of a linear layer, a batch normalization layer, a ReLU activation layer, and a dropout layer. More specifically, the first linear layer had an input size of 12 (the size of the output of the autoencoder portion) and an output size of 32. The second linear layer had an input size of 32 and an output size of 64, while the third linear layer had an input size of 64 and an output size of 32. Finally, the last linear layer had an input size of 32 and an output size equal to the number of output classes (two in the binary case and eight in the multi-class case). At the end of the architecture, a sigmoid activation layer was used to produce the predicted output class. It is worth noting that the combination of linear layers and ReLU activations allowed the model to efficiently capture non-linear relationships between the features extracted by the autoencoder portion, particularly since the ReLU activation function mitigates the vanishing gradient problem; the resulting model achieved excellent accuracy. The dense autoencoder neural network architecture is shown in Figure 11.

Figure 11.

Dense autoencoder neural network architecture.
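The layer sizes and activations described above can be traced with a small NumPy forward pass. This is a minimal inference-only sketch: the weights are random placeholders, batch normalization uses PyTorch-style default statistics, and dropout is omitted because it is the identity at inference time (its rate is not stated). In PyTorch the same stack would be built from nn.Linear, nn.Tanh, nn.BatchNorm1d, nn.ReLU, nn.Dropout, and nn.Sigmoid.

```python
import numpy as np

rng = np.random.default_rng(0)

# Layer widths taken from the text; all weights below are random placeholders.
ENCODER_SIZES = [112, 32, 16, 12]      # Linear + tanh blocks
CLASSIFIER_SIZES = [12, 32, 64, 32]    # Linear + BatchNorm + ReLU + Dropout blocks

def init_linear(n_in, n_out):
    W = rng.normal(0.0, 1.0 / np.sqrt(n_in), size=(n_in, n_out))
    return W, np.zeros(n_out)

def init_batchnorm(n):
    # Same defaults as PyTorch's BatchNorm1d: running mean 0, running var 1, gamma 1, beta 0.
    return {"mean": np.zeros(n), "var": np.ones(n), "gamma": np.ones(n), "beta": np.zeros(n)}

def batchnorm_eval(h, bn, eps=1e-5):
    # Inference-mode batch normalization uses the stored running statistics.
    return bn["gamma"] * (h - bn["mean"]) / np.sqrt(bn["var"] + eps) + bn["beta"]

def build_model(n_classes):
    encoder = [init_linear(i, o) for i, o in zip(ENCODER_SIZES[:-1], ENCODER_SIZES[1:])]
    classifier = [(init_linear(i, o), init_batchnorm(o))
                  for i, o in zip(CLASSIFIER_SIZES[:-1], CLASSIFIER_SIZES[1:])]
    head = init_linear(CLASSIFIER_SIZES[-1], n_classes)
    return encoder, classifier, head

def forward(model, x):
    """x: (batch, 112) normalized features -> (batch, n_classes) scores in (0, 1)."""
    encoder, classifier, head = model
    h = x
    for W, b in encoder:
        h = np.tanh(h @ W + b)
    for (W, b), bn in classifier:
        # Dropout is the identity at inference time, so it does not appear here.
        h = np.maximum(batchnorm_eval(h @ W + b, bn), 0.0)
    W, b = head
    return 1.0 / (1.0 + np.exp(-(h @ W + b)))   # element-wise sigmoid, as in the text

model = build_model(n_classes=8)
scores = forward(model, rng.normal(size=(4, 112)))
predicted_class = scores.argmax(axis=1)
```

Taking the argmax of the sigmoid outputs is one way to turn them into a single predicted class; the text does not specify the decision rule.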

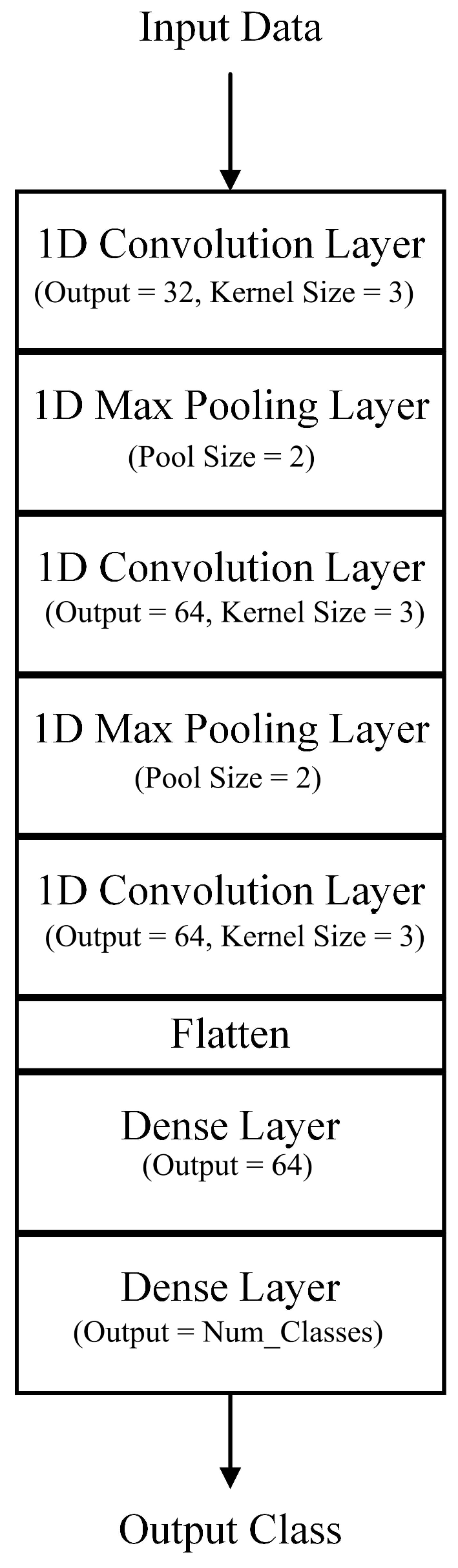

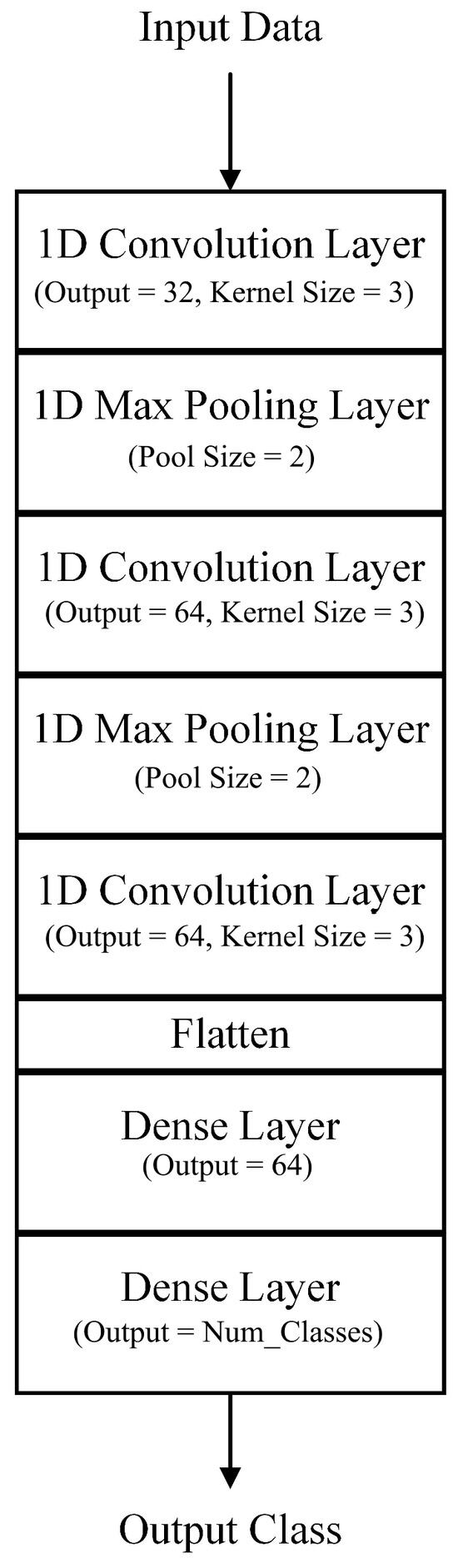

5.3.2. Convolutional Neural Network Architecture

The considered CNN model consisted of three one-dimensional convolution layers, two one-dimensional pooling layers, a flattening layer, and two fully connected dense layers. The convolution layers acted as filters, efficiently building feature maps from their input according to the kernel size used. The pooling layers downsampled the feature maps by creating lower-resolution versions that still retained their critical elements. In this case, max pooling was used, which keeps the largest value within each patch of the feature map. Finally, the flattening layer converted the output of the preceding convolutional and max pooling layers into a one-dimensional array suitable for the subsequent dense layers, which mimicked a traditional artificial neural network.

The convolution layers had different output sizes, ranging from 32 to 64, while the pooling layers always used a size of 2. The output sizes of the two dense layers were 64 and the number of classes (2 for the binary case and 8 for the multi-class case), respectively. The CNN architecture is shown in Figure 12.

Figure 12.

Convolutional neural network architecture.
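Because the kernel size and layer order are not given, the sketch below traces tensor shapes through one hypothetical arrangement: conv, pool, conv, pool, conv with 32, 64, and 64 output channels, kernel size 3, stride 1, and no padding. All of these choices are assumptions; the length formulas are the standard ones used by 1-D convolution and pooling layers such as PyTorch's nn.Conv1d and nn.MaxPool1d.

```python
def conv1d_len(n, kernel, stride=1, padding=0, dilation=1):
    # Standard output-length formula for a 1-D convolution.
    return (n + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1

def pool1d_len(n, pool=2):
    # Max pooling with window = stride = `pool` and no padding.
    return (n - pool) // pool + 1

def trace_cnn(n_features=112, n_classes=8, kernel=3):
    """Return the shape after each layer of a hypothetical conv/pool stack."""
    plan = [("conv", 32), ("pool", None), ("conv", 64), ("pool", None), ("conv", 64)]
    channels, length = 1, n_features      # each sample treated as a 1-channel sequence
    shapes = []
    for kind, out_channels in plan:
        if kind == "conv":
            channels, length = out_channels, conv1d_len(length, kernel)
        else:
            length = pool1d_len(length)
        shapes.append((kind, channels, length))
    shapes.append(("flatten", channels * length))
    shapes.append(("dense", 64))
    shapes.append(("dense", n_classes))
    return shapes

shapes = trace_cnn()
```

Under these assumptions the 112 input features shrink to a length of 24 with 64 channels, so the flattening layer feeds 1536 values into the first dense layer; different kernel sizes or padding would change these numbers.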

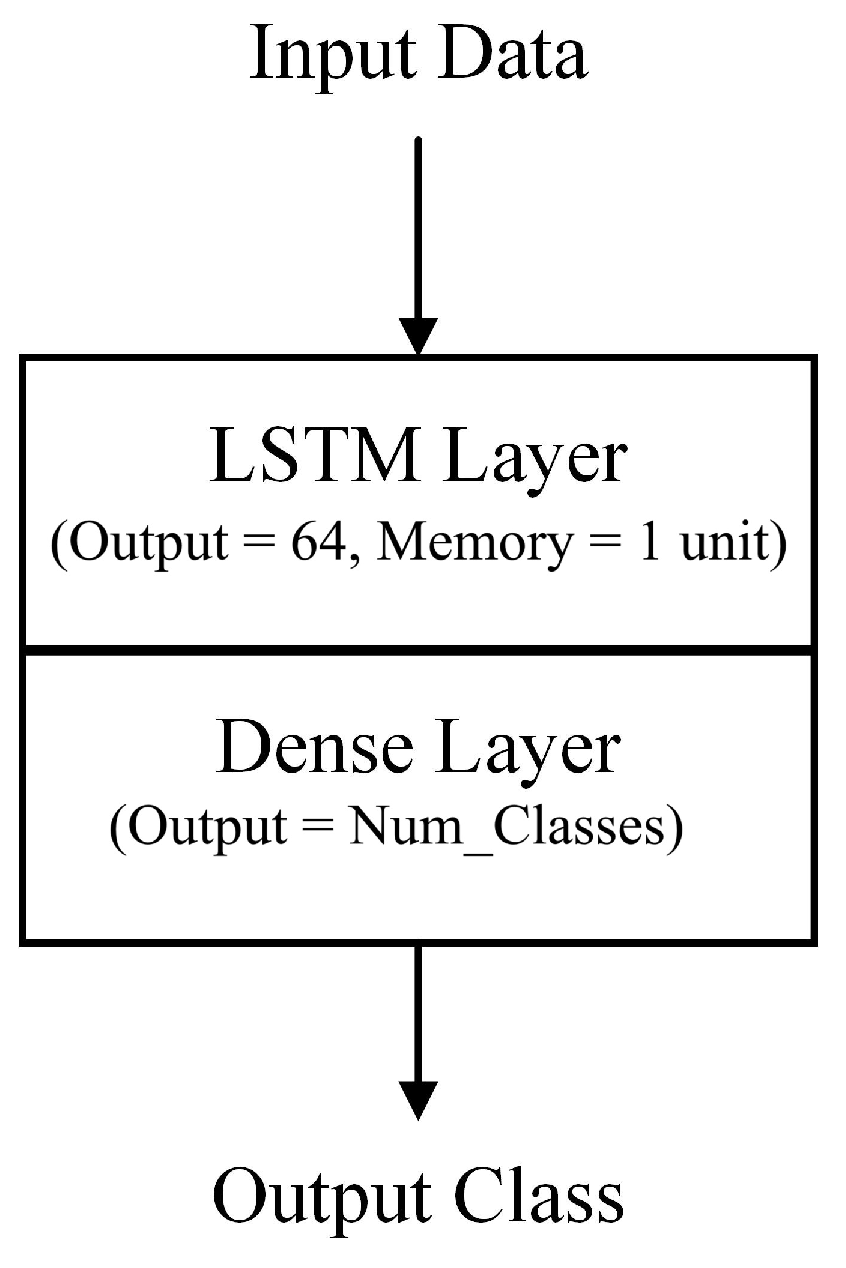

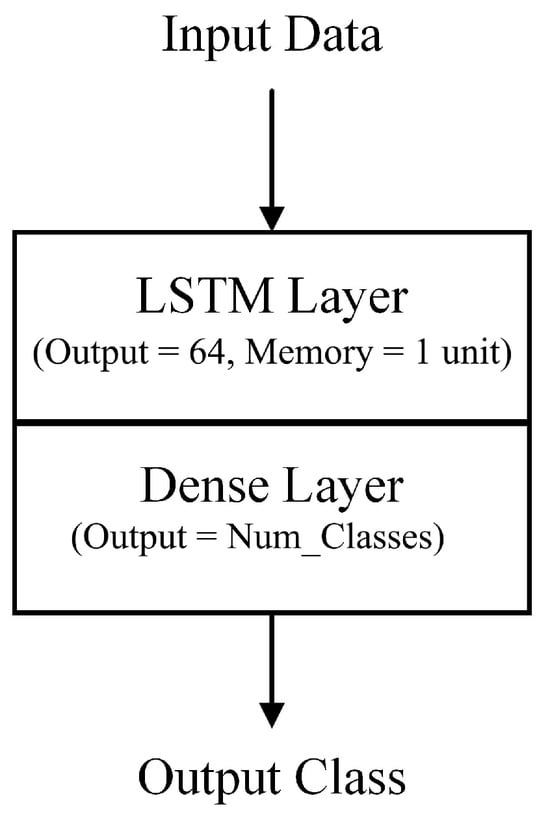

5.3.3. Recurrent Neural Network Architecture

The considered RNN model included a single long short-term memory (LSTM) layer, followed by a dense layer that used softmax activation for multi-class classification. The LSTM layer had an input size of 112 (size of the input feature set) and an output size of 64, while the dense layer had an input size of 64 and an output size of the number of classes (2 for the binary case and 8 for the multi-class case). The LSTM layer enabled the model to preserve and learn from historical data within the sequence, enabling it to effectively identify subtle patterns and correlations. It was designed to exploit the sequential nature of network flow data within softwarized 5G networks to detect intrusions.

Unlike CNNs, which are effective in capturing spatial relationships in images, RNNs excel in capturing temporal dependencies in sequential data, thus making them well-suited for analyzing evolving network traffic patterns. The RNN architecture is shown in Figure 13.

Figure 13.

Recurrent neural network architecture.
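The recurrence inside the LSTM layer can be written out in a few lines of NumPy. The sketch below uses the sizes from the text (112 inputs, 64 hidden units, 8 classes) with random placeholder weights; the sequence length T is a hypothetical choice, since how flow records were arranged into sequences is not stated. The gate order (input, forget, candidate, output) follows the convention of PyTorch's nn.LSTM.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_final_hidden(x_seq, W, U, b):
    """Run a single-layer LSTM over x_seq of shape (T, n_in); return the last hidden state.

    W: (n_in, 4H), U: (H, 4H), b: (4H,), gate blocks ordered input, forget,
    candidate, output.
    """
    H = U.shape[0]
    h = np.zeros(H)
    c = np.zeros(H)
    for x_t in x_seq:
        z = x_t @ W + h @ U + b            # pre-activations of all four gates
        i = sigmoid(z[:H])                 # input gate
        f = sigmoid(z[H:2 * H])            # forget gate
        g = np.tanh(z[2 * H:3 * H])        # candidate cell state
        o = sigmoid(z[3 * H:])             # output gate
        c = f * c + i * g                  # cell state carries information across steps
        h = o * np.tanh(c)
    return h

def softmax(z):
    e = np.exp(z - z.max())                # subtract the max for numerical stability
    return e / e.sum()

N_IN, HIDDEN, N_CLASSES = 112, 64, 8       # sizes from the text (multi-class case)
T = 5                                      # hypothetical sequence length

rng = np.random.default_rng(0)
W = rng.normal(0, 0.1, size=(N_IN, 4 * HIDDEN))
U = rng.normal(0, 0.1, size=(HIDDEN, 4 * HIDDEN))
b = np.zeros(4 * HIDDEN)
W_out = rng.normal(0, 0.1, size=(HIDDEN, N_CLASSES))
b_out = np.zeros(N_CLASSES)

h_T = lstm_final_hidden(rng.normal(size=(T, N_IN)), W, U, b)
probs = softmax(h_T @ W_out + b_out)       # dense layer + softmax over the classes
```

The forget gate f controls how much of the previous cell state survives each step, which is what lets the layer retain information from earlier elements of the sequence.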

5.4. DL-Based Framework’s Results and Discussion

To evaluate the performance of the proposed DL-based framework for intrusion detection in softwarized 5G networks, the considered dataset was split into 80% for training and 20% for testing. In what follows, the performance results for the binary classification and multi-class classification scenarios are provided.
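The text does not say whether the 80/20 split was stratified. Given the class imbalance visible in Figure 5, a stratified split, which keeps each class's proportion the same in both subsets, is a common choice; scikit-learn's train_test_split offers this via its stratify parameter. A minimal NumPy sketch with hypothetical labels and seed:

```python
import numpy as np

def stratified_split(labels, test_fraction=0.2, seed=42):
    """Return sorted (train_idx, test_idx) so that each class contributes
    roughly `test_fraction` of its samples to the test set."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    train_parts, test_parts = [], []
    for cls in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == cls))
        n_test = int(round(test_fraction * len(idx)))
        test_parts.append(idx[:n_test])
        train_parts.append(idx[n_test:])
    return np.sort(np.concatenate(train_parts)), np.sort(np.concatenate(test_parts))

# Hypothetical imbalanced labels.
y = np.array(["Benign"] * 50 + ["UDPFlood"] * 30 + ["SYNFlood"] * 20)
train_idx, test_idx = stratified_split(y)
```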

5.4.1. Binary Classification Scenario

Starting off with the binary classification scenario, Table 1 summarizes the different metrics for the three DL models considered, as well as for two other models from the literature, namely the K-nearest neighbors (KNN) and random forest models from [65]. Several observations can be made. First, all three DL models were effective in detecting malicious attacks, with the accuracy, precision, recall, and F1-score all above 99%, which is comparable to previous models from the literature. More specifically, the dense autoencoder neural network outperformed classical models previously proposed in the literature, such as KNN and random forest [65], in terms of detection accuracy, while the convolutional neural network and recurrent neural network models lagged by less than 0.1%. This reiterates the capability of such models to detect attacks in softwarized 5G networks. Second, training such models carried a relatively high computational cost, as highlighted by training times in the range of hundreds of seconds. This is in contrast to some previous models from the literature, which typically have lower training times, as illustrated by the KNN and random forest models. The dense autoencoder neural network and convolutional neural network models had fairly similar training times, differing by only around 4%. In contrast, the recurrent neural network model took longer to train, roughly 11% longer than the dense autoencoder neural network model and 16% longer than the convolutional neural network model. As expected, this matched the computational complexity analysis conducted previously. However, it is worth noting that the testing/prediction time was fairly low (around 1.5 min).
This highlights the models’ capability to quickly detect the presence of an attack, particularly given that many network attacks can last several hours [72]. Hence, detecting an attack within a few minutes represents a significant achievement and again emphasizes the effectiveness of the proposed framework.

Table 1.

Testing performance evaluation—binary classification scenario.

5.4.2. Multi-Class Classification Scenario

The performance of the proposed framework was also evaluated for a multi-class classification scenario, to further investigate the framework’s capabilities. Table 2 provides an overview of the framework’s performance, while Table 3 provides a more detailed view of the performance per attack type. Observations similar to those of the binary scenario can be made regarding the detection capability of the different DL models: all of them achieved an accuracy, precision, recall, and F1-score above 99%, outperforming the KNN and random forest models proposed in [65]. More specifically, the proposed DL-based models outperformed the classical models from previous works (e.g., in [65]) by up to 1% in terms of detection accuracy, which highlights their capabilities. As in the binary case, the dense autoencoder neural network and convolutional neural network models required comparable training times, while the recurrent neural network model required significantly more. Compared to models from previous works, the training time was in fact improved, with models such as KNN requiring almost double the training time of the dense autoencoder neural network model. This again reiterates the value of adopting such models. It was further observed that the testing/prediction time was longer (in the region of 3–4 min) than in the binary classification scenario. This was attributed to the fact that, in this case, the goal was not merely to detect the attack but also to identify its type, which involves further analysis. However, this testing/prediction time is still considered acceptable in a real-world scenario, as it would allow network providers to quickly take countermeasures to stop or mitigate the attacks.
More specifically, attacks such as UDP Flood and SYN Flood can last anywhere from one to several days (around 72 h in the case of UDP Flood attacks) [72]. Therefore, detecting such attacks within minutes would allow network providers to deploy mechanisms to stop or mitigate their negative impacts.

Table 2.

Testing performance evaluation—multi-class classification scenario.

Table 3.

Testing performance evaluation per class—multi-class classification scenario.

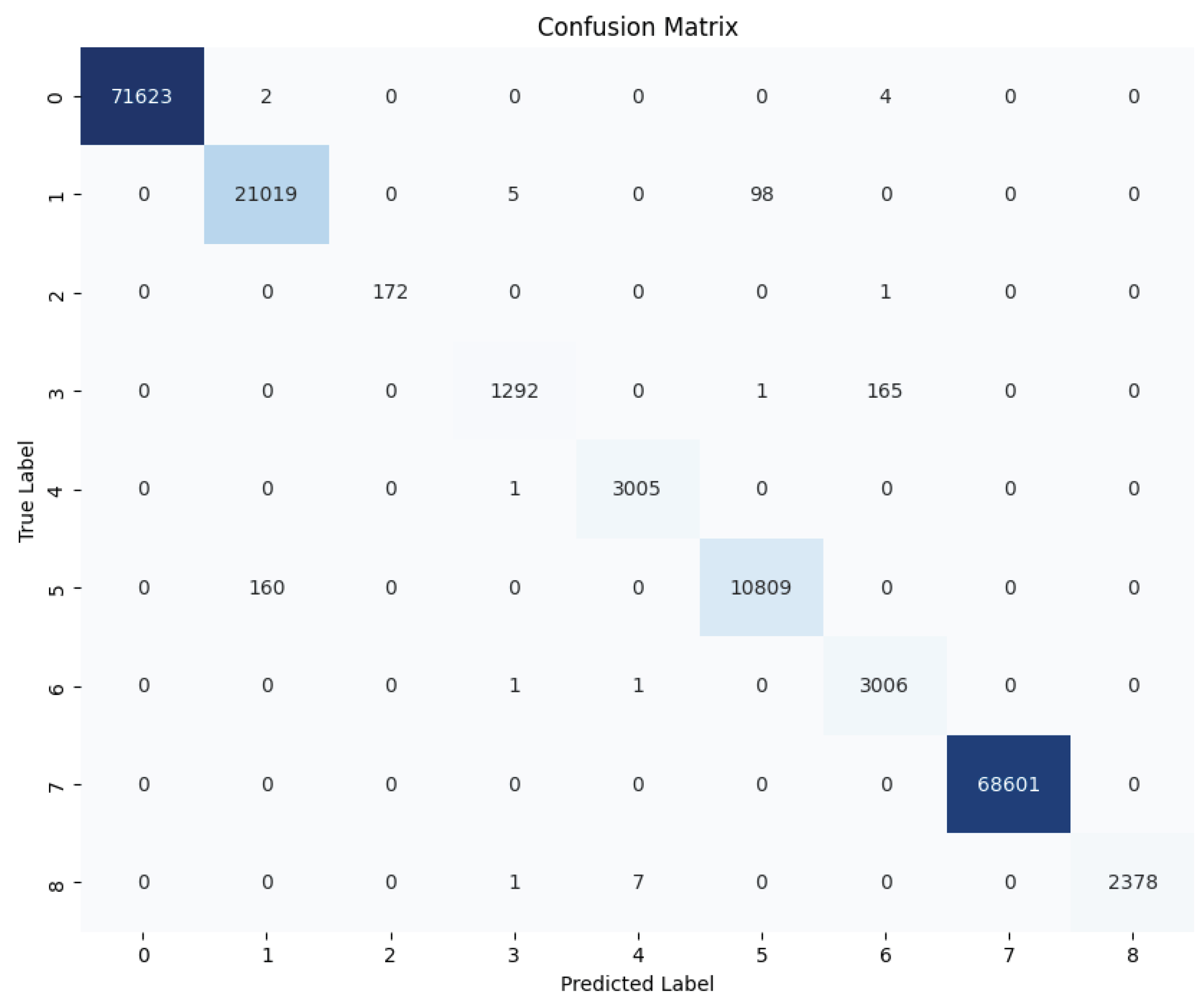

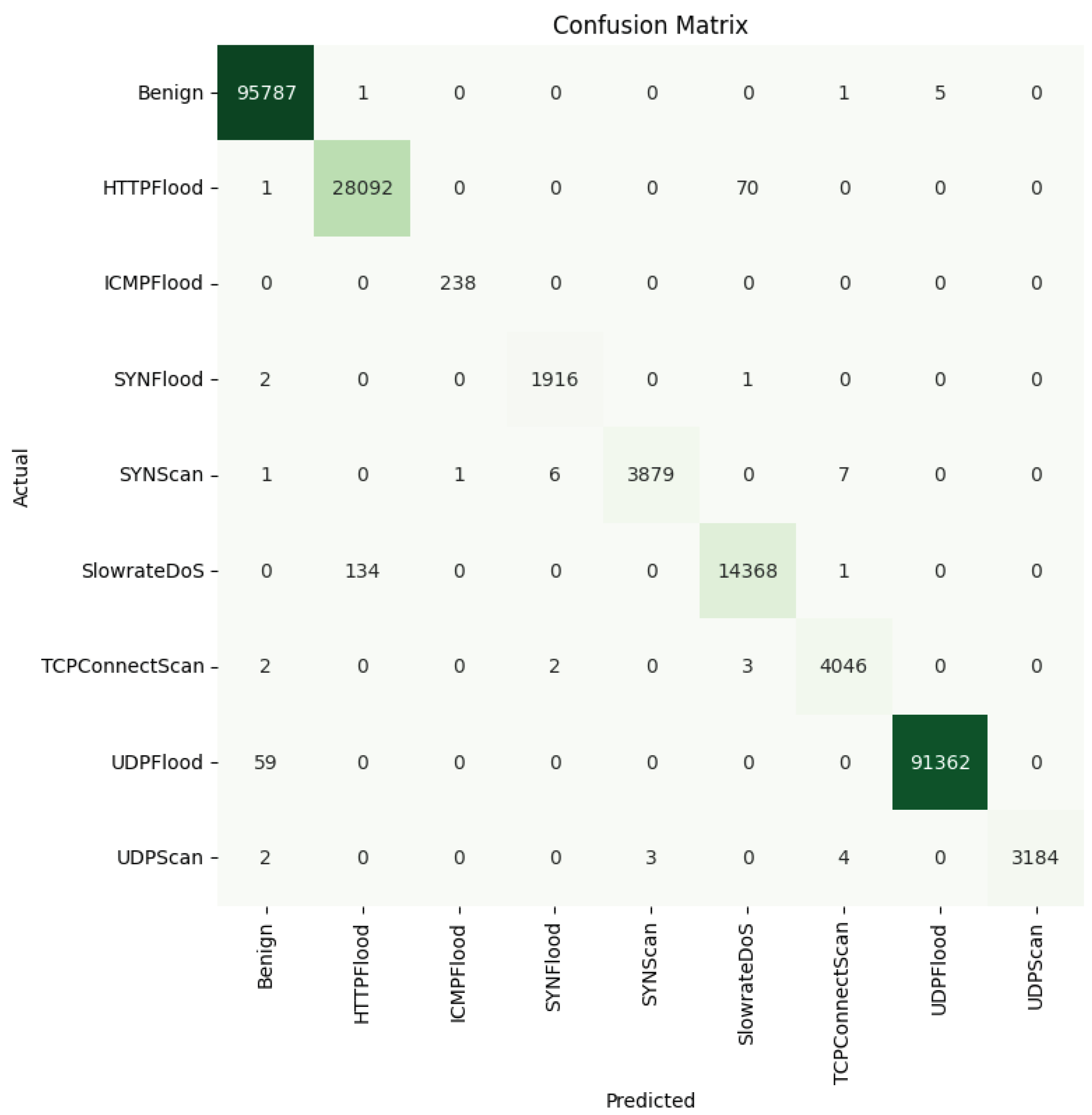

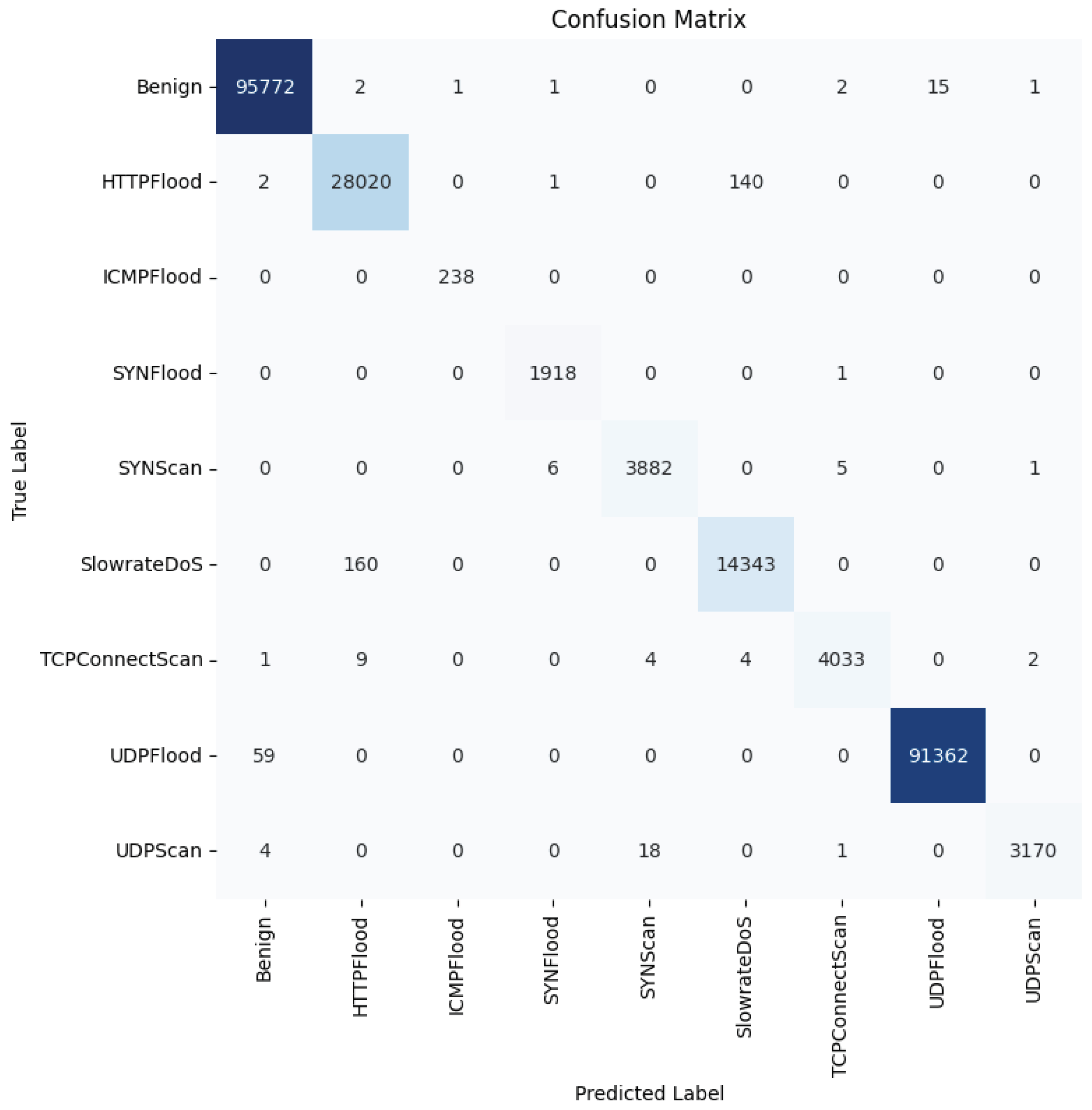

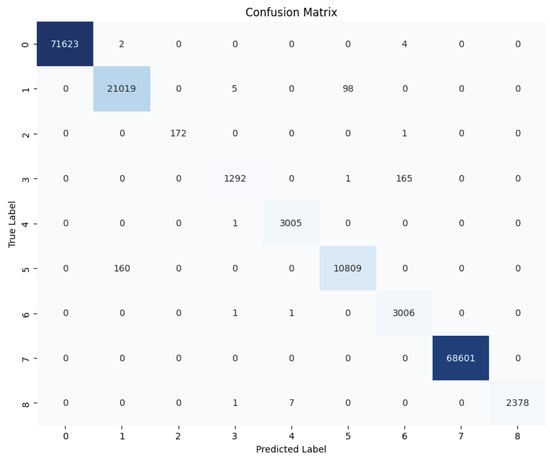

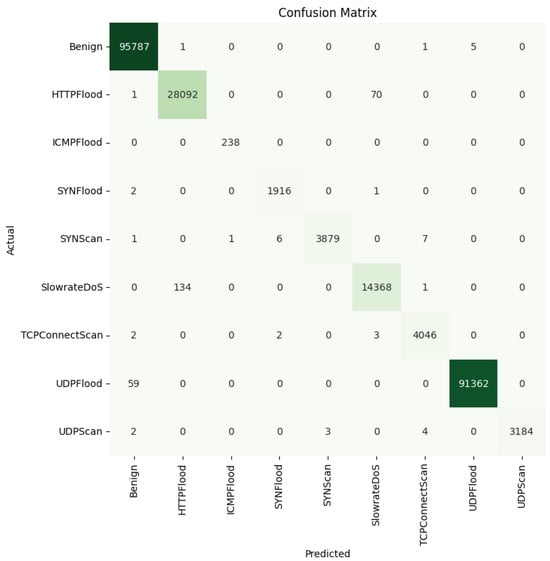

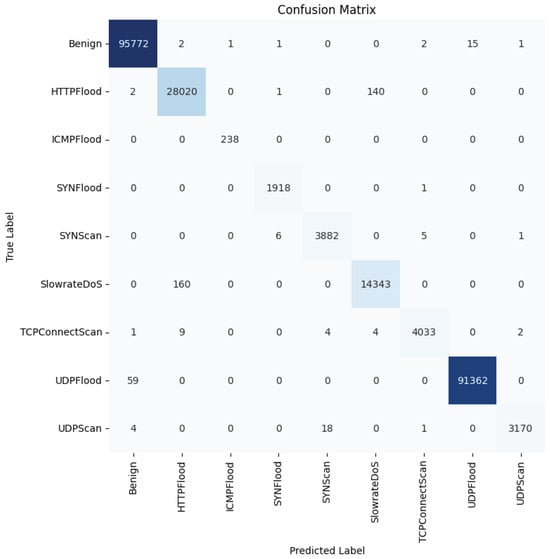

To further study the capabilities of the DL models in detecting the different attacks, Figure 14, Figure 15 and Figure 16 provide the confusion matrices for the dense autoencoder neural network, convolutional neural network, and recurrent neural network models, respectively. These figures show how well the DL models detect specific attacks. Most of the misclassifications occurred between specific pairs of attack types that exhibit similar characteristics. For instance, the HTTPFlood attack mimics human behavior by generating a large volume of seemingly legitimate requests, which can lead to confusion with other attack types. Similarly, in SlowRateDOS attacks, a large volume of malicious activity occurs, but at a slower pace, and this subtle behavior can also cause misclassification. Additionally, confusion can arise between SYNFlood and TCPConnectScan attacks because of their request patterns. In SYNFlood attacks, the third step of the TCP three-way handshake (the final ACK) is typically skipped, whereas it is sent in TCPConnectScan. Consequently, the first two steps (SYN and SYN-ACK) of both attack types may exhibit similar characteristics, leading to misclassification. These nuances highlight the challenges inherent in accurately distinguishing between attack types and underscore the need for further refinements in feature extraction and model architecture to enhance classification accuracy in 5G networks.

Figure 14.

Dense autoencoder neural network confusion matrix—multi-class classification scenario.

Figure 15.

Convolutional neural network confusion matrix—multi-class classification scenario.

Figure 16.

Recurrent neural network confusion matrix—multi-class classification scenario.
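Per-class precision, recall, and F1-score (as reported in Table 3) can all be read off a confusion matrix. The sketch below builds one from integer labels and derives the metrics; the three classes and the predictions are hypothetical, with one SYNFlood sample mislabelled as TCPConnectScan to echo the confusion discussed above.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, n_classes):
    """Rows index the true class, columns the predicted class."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm

def per_class_metrics(cm):
    tp = np.diag(cm).astype(float)
    fp = cm.sum(axis=0) - tp   # predicted as class k but actually another class
    fn = cm.sum(axis=1) - tp   # actually class k but predicted as another class
    with np.errstate(invalid="ignore", divide="ignore"):
        precision = np.where(tp + fp > 0, tp / (tp + fp), 0.0)
        recall = np.where(tp + fn > 0, tp / (tp + fn), 0.0)
        f1 = np.where(precision + recall > 0,
                      2 * precision * recall / (precision + recall), 0.0)
    accuracy = tp.sum() / cm.sum()
    return accuracy, precision, recall, f1

# Hypothetical classes: 0 = SYNFlood, 1 = TCPConnectScan, 2 = UDPFlood.
y_true = [0, 0, 0, 1, 1, 2, 2, 2]
y_pred = [0, 0, 1, 1, 1, 2, 2, 2]
cm = confusion_matrix(y_true, y_pred, n_classes=3)
acc, prec, rec, f1 = per_class_metrics(cm)
macro_f1 = f1.mean()
```

Averaging the per-class values with equal weight gives macro-averaged scores; weighting them by class support instead gives weighted averages, which can differ noticeably on imbalanced data.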

6. Conclusions and Future Works

The rise of 5G networks is driven by increasing deployments of IoT devices and expanding mobile and fixed broadband subscriptions. This growth supports new applications like AR/VR, smart factories, and autonomous vehicles. Concurrently, the deployment of 5G networks has led to a surge in network-related attacks, due to expanded attack surfaces. Reports have highlighted a significant increase in attacks, with IoT malware incidents rising sharply. Machine learning (ML), particularly deep learning (DL), has emerged as a promising tool for addressing these security challenges in 5G networks. Such systems can efficiently analyze vast amounts of data, enabling effective intrusion detection, without explicit programming. This approach has shown success in various applications and positions ML and DL as key technologies for enhancing security in 5G environments.

To that end, this work proposed a research initiative that adopted an exploratory data analysis (EDA) and DL pipeline designed for 5G network intrusion detection. This approach aimed to better understand dataset characteristics, implement a DL-based detection pipeline, and evaluate its performance against existing methodologies, thereby contributing to advancements in securing 5G networks. The experimental results using the 5G-NIDD dataset showed that the proposed DL-based models had extremely high intrusion detection and attack identification capabilities (above 99.5% and outperforming other models from the literature), while having a reasonable prediction time. This highlighted their effectiveness and efficiency for such tasks in softwarized 5G environments.

Several future research directions could be followed to build on this work. One future endeavor would be to investigate the impact of different hyperparameter tuning algorithms on the performance of the different models. This has the potential to further improve the detection effectiveness of the framework. A second endeavor worth exploring would be studying the impact of hybrid feature selection algorithms to reduce the computational complexity, and thus the training time, required for such models, without sacrificing their intrusion detection and attack identification capabilities.

Funding

This research received no external funding.

Data Availability Statement

Dataset is publicly available at https://dx.doi.org/10.21227/xtep-hv36 (accessed on 30 July 2024).

Conflicts of Interest

The author declares no conflicts of interest.

References

- Moubayed, A.; Javadtalab, A.; Hemmati, M.; You, Y.; Shami, A. Traffic-Aware OTN-over-WDM Optimization in 5G Networks. In Proceedings of the 2022 IEEE International Mediterranean Conference on Communications and Networking (MeditCom), Athens, Greece, 5–8 September 2022; pp. 184–190. [Google Scholar] [CrossRef]

- Moubayed, A.; Manias, D.M.; Javadtalab, A.; Hemmati, M.; You, Y.; Shami, A. OTN-over-WDM optimization in 5G networks: Key challenges and innovation opportunities. Photonic Netw. Commun. 2023, 45, 49–66. [Google Scholar] [CrossRef]

- International Telecommunication Union. Measuring Digital Development: Facts and Figures 2020; Technical report; International Telecommunication Union: Geneva, Switzerland, 2020. [Google Scholar]

- International Telecommunication Union. Setting the Scene for 5G: Opportunities & Challenges; Technical report; International Telecommunication Union: Geneva, Switzerland, 2018. [Google Scholar]

- Feldmann, A.; Gasser, O.; Lichtblau, F.; Pujol, E.; Poese, I.; Dietzel, C.; Wagner, D.; Wichtlhuber, M.; Tapiador, J.; Vallina-Rodriguez, N.; et al. A year in lockdown: How the waves of COVID-19 impact internet traffic. Commun. ACM 2021, 64, 101–108. [Google Scholar] [CrossRef]

- The World Bank. How COVID-19 Increased Data Consumption and Highlighted the Digital Divide; Technical report; The World Bank: Washington, DC, USA, 2021. [Google Scholar]

- Taylor, P. Monthly Internet Traffic in the U.S. 2018–2023; Technical report; Statista: New York, NY, USA, 2023. [Google Scholar]

- Condoluci, M.; Mahmoodi, T. Softwarization and virtualization in 5G mobile networks: Benefits, trends and challenges. Comput. Netw. 2018, 146, 65–84. [Google Scholar] [CrossRef]

- Lake, D.; Wang, N.; Tafazolli, R.; Samuel, L. Softwarization of 5G networks–Implications to open platforms and standardizations. IEEE Access 2021, 9, 88902–88930. [Google Scholar] [CrossRef]

- Doffman, Z. Cyberattacks on IOT Devices Surge 300% in 2019, ‘Measured in Billions’ Report Claims. Forbes, 14 September 2019. [Google Scholar]

- Lefebvre, M.; Nair, S.; Engels, D.W.; Horne, D. Building a Software Defined Perimeter (SDP) for Network Introspection. In Proceedings of the 2021 IEEE Conference on Network Function Virtualization and Software Defined Networks (NFV-SDN), Heraklion, Greece, 9–11 November 2021; pp. 91–95. [Google Scholar] [CrossRef]

- Hindy, H.; Brosset, D.; Bayne, E.; Seeam, A.K.; Tachtatzis, C.; Atkinson, R.; Bellekens, X. A Taxonomy of Network Threats and the Effect of Current Datasets on Intrusion Detection Systems. IEEE Access 2020, 8, 104650–104675. [Google Scholar] [CrossRef]

- Nti, I.K.; Narko-Boateng, O.; Adekoya, A.F.; Somanathan, A.R. Stacknet Based Decision Fusion Classifier for Network Intrusion Detection. Int. Arab. J. Inf. Technol. 2022, 19, 478–490. [Google Scholar]

- Muthiya, D.E.R. Design and Implementation of Crypt Analysis of Cloud Data Intrusion Management System. Int. Arab. J. Inf. Technol. 2020, 17, 895–905. [Google Scholar]

- Ghazal, T.M.; Hasan, M.K.; Abdullah, S.N.H.S.; Bakar, K.A.A.; Al-Dmour, N.A.; Said, R.A.; Abdellatif, T.M.; Moubayed, A.; Alzoubi, H.M.; Alshurideh, M.; et al. Machine Learning-Based Intrusion Detection Approaches for Secured Internet of Things. In The Effect of Information Technology on Business and Marketing Intelligence Systems; Alshurideh, M., Al Kurdi, B.H., Masa’deh, R., Alzoubi, H.M., Salloum, S., Eds.; Springer International Publishing: Cham, Switzerland, 2023; pp. 2013–2036. [Google Scholar] [CrossRef]

- Moubayed, A.; Aqeeli, E.; Shami, A. Detecting DNS Typo-Squatting Using Ensemble-Based Feature Selection Classification Models. IEEE Can. J. Electr. Comput. Eng. 2021, 44, 456–466. [Google Scholar] [CrossRef]

- Yang, L.; Moubayed, A.; Shami, A.; Boukhtouta, A.; Heidari, P.; Preda, S.; Brunner, R.; Migault, D.; Larabi, A. Forensic Data Analytics for Anomaly Detection in Evolving Networks. In Innovations in Digital Forensics; World Scientific: Singapore, 2023; pp. 99–137. [Google Scholar]

- Aburakhia, S.; Tayeh, T.; Myers, R.; Shami, A. A Transfer Learning Framework for Anomaly Detection Using Model of Normality. In Proceedings of the 2020 11th IEEE Annual Information Technology, Electronics and Mobile Communication Conference (IEMCON), Vancouver, BC, Canada, 4–7 November 2020; pp. 55–61. [Google Scholar] [CrossRef]

- He, K.; Kim, D.D.; Asghar, M.R. Adversarial Machine Learning for Network Intrusion Detection Systems: A Comprehensive Survey. IEEE Commun. Surv. Tutor. 2023, 25, 538–566. [Google Scholar] [CrossRef]

- Vanin, P.; Newe, T.; Dhirani, L.L.; O’Connell, E.; O’Shea, D.; Lee, B.; Rao, M. A Study of Network Intrusion Detection Systems Using Artificial Intelligence/Machine Learning. Appl. Sci. 2022, 12, 1752. [Google Scholar] [CrossRef]

- Manderna, A.; Kumar, S.; Dohare, U.; Aljaidi, M.; Kaiwartya, O.; Lloret, J. Vehicular network intrusion detection using a cascaded deep learning approach with multi-variant metaheuristic. Sensors 2023, 23, 8772. [Google Scholar] [CrossRef] [PubMed]

- Maseer, Z.K.; Yusof, R.; Bahaman, N.; Mostafa, S.A.; Foozy, C.F.M. Benchmarking of Machine Learning for Anomaly Based Intrusion Detection Systems in the CICIDS2017 Dataset. IEEE Access 2021, 9, 22351–22370. [Google Scholar] [CrossRef]

- Alamleh, A.; Albahri, O.; Zaidan, A.; Albahri, A.; Alamoodi, A.; Zaidan, B.; Qahtan, S.; Alsatar, H.; Al-Samarraay, M.S.; Jasim, A.N. Federated learning for IoMT applications: A standardization and benchmarking framework of intrusion detection systems. IEEE J. Biomed. Health Inform. 2022, 27, 878–887. [Google Scholar] [CrossRef] [PubMed]

- Injadat, M.; Salo, F.; Nassif, A.B.; Essex, A.; Shami, A. Bayesian Optimization with Machine Learning Algorithms Towards Anomaly Detection. In Proceedings of the 2018 IEEE Global Communications Conference (GLOBECOM), Abu Dhabi, United Arab Emirates, 9–13 December 2018; pp. 1–6. [Google Scholar] [CrossRef]

- Ahmad, Z.; Shahid Khan, A.; Wai Shiang, C.; Abdullah, J.; Ahmad, F. Network intrusion detection system: A systematic study of machine learning and deep learning approaches. Trans. Emerg. Telecommun. Technol. 2021, 32, e4150. [Google Scholar] [CrossRef]

- Musa, U.S.; Chhabra, M.; Ali, A.; Kaur, M. Intrusion Detection System using Machine Learning Techniques: A Review. In Proceedings of the 2020 International Conference on Smart Electronics and Communication (ICOSEC), Trichy, India, 10–12 September 2020; pp. 149–155. [Google Scholar] [CrossRef]

- Yang, L.; Moubayed, A.; Shami, A.; Heidari, P.; Boukhtouta, A.; Larabi, A.; Brunner, R.; Preda, S.; Migault, D. Multi-Perspective Content Delivery Networks Security Framework Using Optimized Unsupervised Anomaly Detection. IEEE Trans. Netw. Serv. Manag. 2022, 19, 686–705. [Google Scholar] [CrossRef]

- Alzahrani, A.O.; Alenazi, M.J. Designing a network intrusion detection system based on machine learning for software defined networks. Future Internet 2021, 13, 111. [Google Scholar] [CrossRef]

- Salo, F.; Injadat, M.; Moubayed, A.; Nassif, A.B.; Essex, A. Clustering Enabled Classification using Ensemble Feature Selection for Intrusion Detection. In Proceedings of the 2019 International Conference on Computing, Networking and Communications (ICNC), Honolulu, HI, USA, 18–21 February 2019; pp. 276–281. [Google Scholar]

- You, X.; Zhang, C.; Tan, X.; Jin, S.; Wu, H. AI for 5G: Research directions and paradigms. Sci. China Inf. Sci. 2019, 62, 1–13. [Google Scholar] [CrossRef]

- Afaq, A.; Haider, N.; Baig, M.Z.; Khan, K.S.; Imran, M.; Razzak, I. Machine learning for 5G security: Architecture, recent advances, and challenges. Ad Hoc Netw. 2021, 123, 102667. [Google Scholar] [CrossRef]

- Park, J.H.; Rathore, S.; Singh, S.K.; Salim, M.M.; Azzaoui, A.; Kim, T.W.; Pan, Y.; Park, J.H. A comprehensive survey on core technologies and services for 5G security: Taxonomies, issues, and solutions. Hum.-Centric Comput. Inf. Sci 2021, 11, 2–22. [Google Scholar]

- Fang, H.; Wang, X.; Tomasin, S. Machine learning for intelligent authentication in 5G and beyond wireless networks. IEEE Wirel. Commun. 2019, 26, 55–61. [Google Scholar] [CrossRef]

- Sagduyu, Y.E.; Erpek, T.; Shi, Y. Adversarial machine learning for 5G communications security. Game Theory Mach. Learn. Cyber Secur. 2021, 270–288. [Google Scholar]

- Usama, M.; Ilahi, I.; Qadir, J.; Mitra, R.N.; Marina, M.K. Examining machine learning for 5G and beyond through an adversarial lens. IEEE Internet Comput. 2021, 25, 26–34. [Google Scholar] [CrossRef]

- Suomalainen, J.; Juhola, A.; Shahabuddin, S.; Mämmelä, A.; Ahmad, I. Machine learning threatens 5G security. IEEE Access 2020, 8, 190822–190842. [Google Scholar] [CrossRef]

- Ramezanpour, K.; Jagannath, J. Intelligent zero trust architecture for 5G/6G networks: Principles, challenges, and the role of machine learning in the context of O-RAN. Comput. Netw. 2022, 217, 109358. [Google Scholar] [CrossRef]

- Alamri, H.A.; Thayananthan, V.; Yazdani, J. Machine Learning for Securing SDN based 5G network. Int. J. Comput. Appl. 2021, 174, 9–16.

- Li, J.; Zhao, Z.; Li, R. Machine learning-based IDS for software-defined 5G network. IET Networks 2018, 7, 53–60.

- Qu, Y.; Zhang, J.; Li, R.; Zhang, X.; Zhai, X.; Yu, S. Generative adversarial networks enhanced location privacy in 5G networks. Sci. China Inf. Sci. 2020, 63, 1–12.

- Brownlee, J. Data Preparation for Machine Learning: Data Cleaning, Feature Selection, and Data Transforms in Python; Machine Learning Mastery: Vermont, VIC, Australia, 2020.

- Li, P.; Rao, X.; Blase, J.; Zhang, Y.; Chu, X.; Zhang, C. CleanML: A Study for Evaluating the Impact of Data Cleaning on ML Classification Tasks. In Proceedings of the 2021 IEEE 37th International Conference on Data Engineering (ICDE), Chania, Greece, 19–22 April 2021; pp. 13–24.

- Lin, W.C.; Tsai, C.F. Missing value imputation: A review and analysis of the literature (2006–2017). Artif. Intell. Rev. 2020, 53, 1487–1509.

- Al-Shehari, T.; Alsowail, R.A. An insider data leakage detection using one-hot encoding, synthetic minority oversampling and machine learning techniques. Entropy 2021, 23, 1258.

- Dahouda, M.K.; Joe, I. A Deep-Learned Embedding Technique for Categorical Features Encoding. IEEE Access 2021, 9, 114381–114391.

- Singh, D.; Singh, B. Investigating the impact of data normalization on classification performance. Appl. Soft Comput. 2020, 97, 105524.

- Chen, J.; Wu, D.; Zhao, Y.; Sharma, N.; Blumenstein, M.; Yu, S. Fooling intrusion detection systems using adversarially autoencoder. Digit. Commun. Netw. 2021, 7, 453–460.

- Zhou, H.; Wang, X.; Zhu, R. Feature selection based on mutual information with correlation coefficient. Appl. Intell. 2022, 52, 5457–5474.

- Cheng, J.; Sun, J.; Yao, K.; Xu, M.; Cao, Y. A variable selection method based on mutual information and variance inflation factor. Spectrochim. Acta Part A Mol. Biomol. Spectrosc. 2022, 268, 120652.

- Rupak, B.R., II. Mutual Information Score—Feature Selection; Medium: San Francisco, CA, USA, 2022.

- Rezvy, S.; Petridis, M.; Lasebae, A.; Zebin, T. Intrusion detection and classification with autoencoded deep neural network. In Proceedings of the International Conference on Security for Information Technology and Communications; Springer: Cham, Switzerland, 2018; pp. 142–156.

- Hernandez-Suarez, A.; Sanchez-Perez, G.; Toscano-Medina, L.K.; Olivares-Mercado, J.; Portillo-Portillo, J.; Avalos, J.G.; Garcia Villalba, L.J. Detecting cryptojacking web threats: An approach with autoencoders and deep dense neural networks. Appl. Sci. 2022, 12, 3234.

- Mudadla, S. Deep Neural Networks vs Dense Neural Networks; Medium: San Francisco, CA, USA, 2023.

- Li, Z.; Liu, F.; Yang, W.; Peng, S.; Zhou, J. A Survey of Convolutional Neural Networks: Analysis, Applications, and Prospects. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 6999–7019.

- Ketkar, N.; Moolayil, J. Convolutional neural networks. In Deep Learning with Python: Learn Best Practices of Deep Learning Models with PyTorch; Apress: New York, NY, USA, 2021; pp. 197–242.

- Ghiasi-Shirazi, K. Generalizing the convolution operator in convolutional neural networks. Neural Process. Lett. 2019, 50, 2627–2646.

- Bullinaria, J.A. Recurrent neural networks. Neural Comput. Lect. 2013, 12, 1–20. Available online: https://www.cs.bham.ac.uk/~jxb/INC/l12.pdf (accessed on 30 July 2024).

- Sherstinsky, A. Fundamentals of recurrent neural network (RNN) and long short-term memory (LSTM) network. Phys. D Nonlinear Phenom. 2020, 404, 132306.

- Wang, Y.; Wu, H.; Zhang, J.; Gao, Z.; Wang, J.; Yu, P.S.; Long, M. PredRNN: A Recurrent Neural Network for Spatiotemporal Predictive Learning. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 2208–2225.

- Tyagi, A.K.; Abraham, A. Recurrent Neural Networks: Concepts and Applications; CRC Press: Boca Raton, FL, USA, 2022.

- Estevez, P.A.; Tesmer, M.; Perez, C.A.; Zurada, J.M. Normalized Mutual Information Feature Selection. IEEE Trans. Neural Netw. 2009, 20, 189–201.

- Hosseini, M.; Horton, M.; Paneliya, H.; Kallakuri, U.; Homayoun, H.; Mohsenin, T. On the complexity reduction of dense layers from O(n²) to O(n log n) with cyclic sparsely connected layers. In Proceedings of the 56th Annual Design Automation Conference 2019, Las Vegas, NV, USA, 2–6 June 2019; pp. 1–6.

- Habib, G.; Qureshi, S. Optimization and acceleration of convolutional neural networks: A survey. J. King Saud Univ.-Comput. Inf. Sci. 2022, 34, 4244–4268.

- Akpinar, N.J.; Kratzwald, B.; Feuerriegel, S. Sample complexity bounds for recurrent neural networks with application to combinatorial graph problems. arXiv 2019, arXiv:1901.10289.

- Samarakoon, S.; Siriwardhana, Y.; Porambage, P.; Liyanage, M.; Chang, S.Y.; Kim, J.; Kim, J.; Ylianttila, M. 5G-NIDD: A Comprehensive Network Intrusion Detection Dataset Generated over 5G Wireless Network. arXiv 2022, arXiv:2212.01298.

- 5GTN. Available online: https://5gtnf.fi/ (accessed on 30 March 2024).

- Bagui, S.; Li, K. Resampling imbalanced data for network intrusion detection datasets. J. Big Data 2021, 8, 6.

- Balla, A.; Habaebi, M.H.; Elsheikh, E.A.A.; Islam, M.R.; Suliman, F.M. The Effect of Dataset Imbalance on the Performance of SCADA Intrusion Detection Systems. Sensors 2023, 23, 758.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

- Camizuli, E.; Carranza, E.J. Exploratory data analysis (EDA). In The Encyclopedia of Archaeological Sciences; John Wiley & Sons: Hoboken, NJ, USA, 2018; pp. 1–7.

- Mukhiya, S.K.; Ahmed, U. Hands-On Exploratory Data Analysis with Python: Perform EDA Techniques to Understand, Summarize, and Investigate Your Data; Packt Publishing Ltd.: Birmingham, UK, 2020.

- QRATOR Labs. 2023 DDoS Attacks Statistics and Observations. 20 May 2024. Available online: https://qrator.net/blog/details/2023-ddos-attacks-statistics-and-observations (accessed on 30 July 2024).

- Sharma, A.; Rani, R. Classification of Cancerous Profiles Using Machine Learning. In Proceedings of the International Conference on Machine Learning and Data Science (MLDS’17), Noida, India, 14–15 December 2017; pp. 31–36.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).