Abstract

Survey data play a crucial role in various research fields, including economics, education, and healthcare, by providing insights into human behavior and opinions. However, item non-response, where respondents fail to answer specific questions, presents a significant challenge by creating incomplete datasets that undermine data integrity and can hinder or even prevent accurate analysis. Traditional methods for addressing missing data, such as statistical imputation techniques and deep learning models, often fall short when dealing with the rich linguistic content of survey data. These approaches are also hampered by high time complexity for training and the need for extensive preprocessing or feature selection. In this paper, we introduce an approach that leverages Large Language Models (LLMs) through prompt engineering for predicting item non-responses in survey data. Our method combines the strengths of both traditional imputation techniques and deep learning methods with the advanced linguistic understanding of LLMs. By integrating respondent similarities, question relevance, and linguistic semantics, our approach enhances the accuracy and comprehensiveness of survey data analysis. The proposed method bypasses the need for complex preprocessing and additional training, making it adaptable, scalable, and capable of generating explainable predictions in natural language. We evaluated the effectiveness of our LLM-based approach through a series of experiments, demonstrating its competitive performance against established methods such as Multivariate Imputation by Chained Equations (MICE), MissForest, and deep learning models like TabTransformer. The results show that our approach not only matches but, in some cases, exceeds the performance of these methods while significantly reducing the time required for data processing.

1. Introduction

In many research fields, including economics [1], education [2], and healthcare [3], survey data are a critical resource for understanding human behavior and opinions. However, surveys often face the challenge of item non-response, where respondents skip certain questions, leading to incomplete data [4]. This not only compromises data integrity but also reduces the accuracy of subsequent analyses. In particular, when responses to key questions are missing, precise analysis can become difficult or even impossible. Traditional approaches to this issue have predominantly relied on statistical imputation methods, such as mean imputation, KNN imputation [5], and multiple imputation [6,7,8]. These methods are well suited to structured, tabular data in which relationships between variables can be modeled statistically, and they robustly preserve the dataset's overall structure and trends. To achieve better performance, these statistical methods are often combined with machine learning techniques such as Logistic Regression [9], Random Forest [10], and LightGBM [11].

As data complexity increased, deep learning models designed specifically for tabular data learning and classification, such as DeepGBM [12], TabTransformer [13], and SAINT [14], were introduced to capture complex patterns in tabular datasets. They represent a significant advance because they can model non-linear relationships and feature interactions that imputation methods might overlook. Among these methods, TabTransformer is particularly suitable for the item non-response prediction problem because it handles missing values more effectively than other models.

However, existing imputation models suffer from high time complexity and require manual selection of key features. Deep learning models for tabular data classification must learn each feature individually, which makes them less generalizable and increasingly time-consuming as model size grows. Moreover, both traditional imputation methods and advanced machine learning models require complex preprocessing, and their performance depends heavily on the quality of that preprocessing. These models also face significant limitations when applied to survey data, which often contain rich linguistic content. Survey questions and responses are not merely numbers or simple categories; they encapsulate nuanced meanings, context, and subjective interpretations that purely numerical imputation or tabular classification approaches may fail to capture. As a result, valuable information may be lost, leading to suboptimal predictions. From the design of a survey to its analysis, linguistic content plays a crucial role in shaping the insights drawn from the data [15]. Ignoring semantic content therefore means overlooking a wealth of information that could improve prediction accuracy and provide deeper analytical insights.

With the advent of Large Language Models (LLMs), which exhibit remarkable in-context learning [16] and reasoning abilities [17] in natural language processing, a new opportunity has emerged. LLMs can reason using only the information provided in the prompt, without additional training. This capability enables rapid prediction of item non-responses while bypassing complex preprocessing and extensive training. Moreover, LLMs can capture the intricate nuances of language, including contextual subtleties and underlying intent, which allows semantic information to be incorporated directly into item non-response prediction. In light of this, we introduce an approach that harnesses the similarities among respondents, the relevance among questions, and the semantic power of LLMs to predict missing survey data robustly, without complex preprocessing or a lengthy training process.

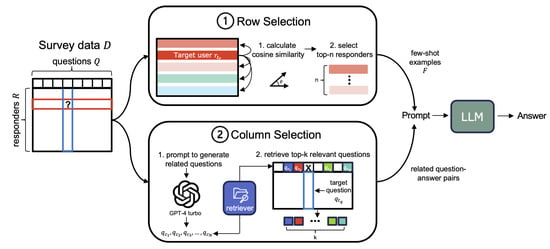

Our approach combines the statistical strengths of traditional imputation and tabular data classification methods with the advanced linguistic understanding of LLMs, accessed through prompt engineering. By integrating three dimensions (respondent similarities, question relevance, and linguistic semantics), we improve the overall accuracy and comprehensiveness of survey data analysis. This combined methodology is particularly effective in complex and nuanced scenarios in which the interrelationships among features and rows, as well as the linguistic context, play crucial roles. Specifically, our approach constructs the prompt for the LLM in two main steps:

- Row Selection: This step selects relevant respondents as few-shot examples by computing the cosine similarity between the responses of the current target user and those of other respondents. The most similar respondents are selected separately for each answer option, so that the few-shot examples cover all possible answer categories of the target question in a balanced way.

- Column Selection: This step includes only the context most relevant to answering the target question. First, the LLM is prompted to generate a diverse set of questions designed to elicit responses pertinent to the target question. Then, a retriever extracts the question–answer pairs corresponding to these generated questions from the existing dataset.

The LLM then uses the generated prompt, which contains both the context from the target user and the selected few-shot examples, to predict the current respondent's answer to the target question. A comprehensive overview of our approach is illustrated in Figure 1. Our contributions are summarized as follows:

Figure 1.

An overall illustration of our method. Our method consists of two main steps for predicting item non-responses in survey data using an LLM: (1) Row Selection: respondents similar to the target user are identified based on their responses. Cosine similarity is calculated between the target user and other respondents, and the most similar respondents are selected as few-shot examples to be included in the prompt. (2) Column Selection: GPT-4 Turbo generates a set of questions related to the target question. These questions are then used to retrieve the corresponding question–answer pairs from the dataset, and the most relevant pairs are included in the prompt. Finally, the prompt, containing both the selected respondents' examples and the relevant question–answer pairs, is fed into the LLM to predict the missing answer for the target user.

- Our approach consistently achieves high performance across various types of survey questions, effectively leveraging diverse information sources while maintaining robust results.

- We propose a generalized LLM-based method for item non-response prediction that enables rapid inference without requiring complex preprocessing or additional training steps.

- Our method is capable of handling scenarios where existing questions have very few respondents and can address new questions with no prior responses—capabilities that previous methods could not achieve.

- Finally, our approach is scalable, maintaining effectiveness even as new respondents or data points are added in real time.

To evaluate the effectiveness of our LLM-based approach for predicting item non-responses, we present experimental results comparing its performance with that of traditional methods using the F1-score. Our model was compared with two imputation methods, Multivariate Imputation by Chained Equations (MICE) [7] and MissForest [8], as well as with the deep learning model TabTransformer [13]. The results indicate that our model performs competitively with these established methods. Furthermore, we conducted an ablation study to search for optimal hyperparameters, providing insights into the model's performance. Finally, because our approach operates without extensive preprocessing and training phases, it delivers results more quickly than previous methods.

2. Related Work

2.1. LLM with Personalized Data

The use of LLMs with personalized data has been a significant focus of recent AI research. Studies such as [18] have demonstrated the potential of leveraging user data (e.g., reviews, watch history, and ratings) to generate tailored recommendations with LLMs. For instance, the authors of PALR [19] used review data to predict future purchases, highlighting the model's ability to understand and predict user preferences from historical data. Similarly, LaMP [20] applied LLMs to various personalized tasks, such as personalized movie tagging, product rating, and tweet paraphrasing. These approaches underscore the importance of personalized context for model accuracy, a principle that also underlies our method of leveraging contextual information to predict item non-responses in surveys. However, these methods are limited by the need to fine-tune the LLM to generate personalized responses.

2.2. Retrieval-Augmented Generation (RAG) for In-Context Learning

Retrieval-Augmented Generation (RAG) improves LLM outputs by retrieving external knowledge that the model did not acquire during pre-training [21,22]. However, RAG performance varies widely depending on several factors, including context length, search query formulation, and prompting. Recent research has therefore focused on controlling these variables efficiently to improve RAG performance. The study in [23] shows that the accuracy of the results does not increase in proportion to the top-k size, even when the LLM has a sufficiently large context window. HyDE [24] improved performance by modifying the query to more closely resemble documents in the knowledge base, thus retrieving more relevant results. Other studies improved performance by learning to remove documents that are not directly relevant to the query or by using another model to refine the retrieved results [25,26]. However, when predicting item non-response in surveys, the ground-truth answers to unanswered questions cannot be retrieved, which makes existing methodologies difficult to apply. Furthermore, topics vary widely across surveys, which limits the effectiveness of fine-tuning. Although RAG has been studied extensively, it has not yet been explored as a broadly applicable method for personalized data. Our approach seeks to bridge this gap by leveraging the contextual information inherent in survey responses to predict non-responses without relying on fine-tuning or an additional model.

2.3. Predicting Survey Data Using LLMs

The application of LLMs to predict public or subpopulation opinions from survey data has shown promising results. Ref. [27] studied how to fine-tune LLMs on repeated cross-sectional surveys to predict missing or unasked opinions in social science research. Ref. [28] evaluated how LLM-based subpopulation representative models generalize from empirical data, revealing that the benefits of in-context learning vary across demographics, which poses challenges for equitable implementation. However, because of the imbalance and diversity of the survey data in these studies, extensive preprocessing is essential. In addition, the need for survey-specific fine-tuning is a major limitation that makes existing methodologies difficult to apply to small surveys with relatively few respondents. To address these limitations, our approach leverages the contextual information within survey responses to predict item non-responses without extensive fine-tuning, thus offering a more scalable solution.

3. Methods

In this section, we detail our approach. First, in Section 3.1, we present the formulation of the problem and define the necessary equations. Next, in Section 3.2, we introduce the method for constructing the simplest possible prompt to input into the LLM. Then, we describe the Row Selection process for selecting the most relevant and diverse respondents for few-shot examples in Section 3.3.1. Next, we explain the Column Selection process, which selects the most relevant question–answer pairs for the target question, in Section 3.3.2. Finally, we discuss the prompt generation and answer extraction process in Section 3.3.3.

3.1. Problem Definition and Formalization

Consider a survey dataset D, represented as an $M \times N$ table, where M and N represent the number of respondents and the number of questions, respectively. The columns are denoted by $c_1, \dots, c_N$, indicating the question codes for each of the N questions, and the rows of D are denoted by $\mathbf{r}_1, \dots, \mathbf{r}_M$, representing the vector of response values for each respondent. Additionally, each $c_j$ has a set of categorical options $O_j$, and each response vector $\mathbf{r}_i = (r_{i,1}, \dots, r_{i,N})$ comprises elements $r_{i,j} \in O_j$, where each $r_{i,j}$ denotes a specific response value within the vector. Note that some values may be missing (i.e., $r_{i,j} = \mathrm{NaN}$).

The survey data include not only the values in the table but also the actual text mapped to these values. Specifically, each $c_j$ can be translated into its corresponding survey question text $T(c_j)$, and each $r_{i,j}$ can be converted into its corresponding textual response $T(r_{i,j})$, where $T(\cdot)$ denotes the mapping function from values to text.

In traditional methods, missing values are filled using a statistical imputation approach for each $c_j$ or tabular data prediction methods that learn correlations between the columns. However, these methods fail to consider the linguistic semantics inherent in the survey questions $T(c_j)$ and responses $T(r_{i,j})$, which carry information distinct from correlations or statistical properties. In this work, our goal is to predict the missing value $r_{t,s}$ for a specific question $c_s$ and a specific respondent $\mathbf{r}_t$ in the survey dataset D using the linguistic semantic information $T(c_j)$ and $T(r_{i,j})$, where s is the index of the target question and t is the index of the target respondent.

To leverage the linguistic semantic information present in the survey data D, we can use a language model to compute the probability of an answer as follows:

$$P\Big(\mathbf{a} \,\Big|\, T(c_s),\ \{T(c_x), T(r_{t,x})\}_{x \in X},\ \{T(c_j), T(r_{y,j})\}_{y \in Y,\ 1 \le j \le N}\Big) \qquad (1)$$

where $\mathbf{a}$ denotes the set of tokens in the output generated by the LLM. Here, x and y denote elements of the index sets X and Y, respectively, with $X = \{1, \dots, N\} \setminus \{s\}$ representing the set of question indices excluding s and $Y = \{1, \dots, M\} \setminus \{t\}$ representing the set of respondent indices excluding t.

This approach uses all linguistic information obtainable from the survey data D within the LM to generate an accurate response to the target question $c_s$ for the target respondent $\mathbf{r}_t$. However, it can increase computational costs and may degrade performance due to the inclusion of irrelevant information. To address these challenges, we first select appropriate contexts and few-shot examples and then apply a prompt-construction function $f(\cdot)$ based on prompt engineering techniques for LLMs. The correct answer can then be generated using the following probability:

$$P\Big(\mathbf{a} \,\Big|\, f\big(T(c_s),\ \{T(c_{x'}), T(r_{t,x'})\}_{x' \in X'},\ \{T(c_j), T(r_{y',j})\}_{y' \in Y',\ j \in X' \cup \{s\}}\big)\Big) \qquad (2)$$

where $x'$ is an element of $X'$ and $y'$ is an element of $Y'$, with $X' \subseteq X$ representing the set of indices of questions related to the target question $c_s$ and $Y' \subseteq Y$ representing the set of indices of respondents related to the target respondent $\mathbf{r}_t$.
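To make the notation concrete, the following Python sketch shows one possible in-memory form of the survey table: coded answers stored row by row with NaN marking an item non-response, plus a lookup that plays the role of the value-to-text mapping. The question codes, question texts, answer options, and the helper name `to_text` are hypothetical placeholders, not items or code from the dataset and implementation used in this paper.

```python
import math
from typing import Dict, List, Optional, Tuple

# Hypothetical question codes (columns of D), their question text, and answer options
# (numeric code -> answer text). These are illustrative placeholders only.
COLUMNS: List[str] = ["Q1", "Q2"]
QUESTION_TEXT: Dict[str, str] = {
    "Q1": "How would you rate economic conditions in the country today?",
    "Q2": "Do you approve or disapprove of the way the president is handling his job?",
}
OPTIONS: Dict[str, Dict[int, str]] = {
    "Q1": {1: "Excellent", 2: "Good", 3: "Only fair", 4: "Poor"},
    "Q2": {1: "Approve", 2: "Disapprove"},
}

# Survey table D: one response vector per respondent; math.nan marks a missing answer.
D: List[List[float]] = [
    [2.0, 1.0],
    [4.0, math.nan],  # item non-response on Q2
]


def to_text(i: int, j: int) -> Optional[Tuple[str, str]]:
    """Map the coded answer of respondent i to question j onto (question text, answer text).

    Returns None when the answer is missing, since a missing value has no text form.
    """
    value = D[i][j]
    if math.isnan(value):
        return None
    code = COLUMNS[j]
    return QUESTION_TEXT[code], OPTIONS[code][int(value)]


print(to_text(1, 0))  # -> ('How would you rate economic conditions in the country today?', 'Poor')
print(to_text(1, 1))  # -> None
```

Keeping the coded table and the text mapping separate mirrors the distinction drawn above: the numeric values feed similarity computations, while the text form is what ends up in the prompt.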

3.2. Naive Prompt: Methodology and Its Limitations

Building on the reasoning capabilities of LLMs [29,30], we assume that incorporating non-target question–response pairs into the prompt can significantly improve the accuracy of predictions for a target question. To exploit these reasoning abilities, we include non-target question–response pairs as contextual information. Inspired by the Naive Retrieval-Augmented Generation (Naive RAG) approach described in [31,32], we rank the respondent's question–response text pairs $(T(c_j), T(r_{t,j}))$ based solely on the cosine similarity between $E(T(c_s))$ and $E(T(c_j))$ in the dataset D, where $E(\cdot)$ denotes the function that converts word tokens into embeddings.
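A minimal Python sketch of this naive ranking step follows, assuming the question embeddings have already been produced by the embedding function and are available as plain lists of floats. The embedding model itself is not specified here, the two-dimensional vectors are made up for illustration, and `naive_context` is a hypothetical helper name.

```python
import math
from typing import List, Optional, Sequence, Tuple


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Plain cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u > 0 and norm_v > 0 else 0.0


def naive_context(
    target_emb: Sequence[float],
    pairs: List[Tuple[str, str, Sequence[float]]],
    top_k: Optional[int] = None,
) -> List[Tuple[str, str]]:
    """Rank the respondent's answered (question, answer, question_embedding) triples by the
    similarity of each question embedding to the target-question embedding, most similar
    first, and keep the first top_k (all of them if top_k is None)."""
    ranked = sorted(pairs, key=lambda p: cosine(target_emb, p[2]), reverse=True)
    return [(question, answer) for question, answer, _ in ranked[:top_k]]


target = [1.0, 0.0]
pairs = [
    ("Question A", "Answer A", [0.0, 1.0]),
    ("Question B", "Answer B", [1.0, 0.1]),
    ("Question C", "Answer C", [1.0, 1.0]),
]
print(naive_context(target, pairs, top_k=2))  # -> [('Question B', 'Answer B'), ('Question C', 'Answer C')]
```

Note that only the question side of each pair is compared with the target question; the respondent's answers play no role in the ranking.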

While the Naive Prompt approach offers simplicity and ease of implementation, it suffers from several critical limitations:

1. Vulnerability to Irrelevant Context: The Naive Prompt approach simply selects the user's question–response pairs that are similar to the target question as context. Because it relies solely on cosine similarity, it is prone to introducing context that is not semantically relevant to the current target question. This may hinder the performance of LLMs, as unnecessary or off-topic context can lead to lower-quality generation [33].

2. Inability to Handle Complex Queries: Complex or nuanced queries often require a more sophisticated understanding of context than simple cosine similarity can capture. The Naive Prompt approach struggles in such scenarios, because the absence of a refined selection mechanism leads to suboptimal context assembly.

3. Underutilization of Survey Data: Because the search is based only on similarity to the target question, the context is assembled without fully understanding or utilizing the available survey data. As a result, this method cannot leverage the full potential of survey data for inference.

The Naive Prompt approach offers a simple way to capture and utilize the linguistic features of survey data, but it remains suboptimal due to the limitations discussed above. Therefore, to improve data utilization and construct more relevant contexts that enhance the reasoning capabilities of LLMs, we introduce a more effective method in Section 3.3.

3.3. Our Methods

In this section, we present our approach for predicting item non-responses in survey data using LLMs. Our method is built on two core strategies: Row Selection and Column Selection, which together enhance the relevance and accuracy of the model’s predictions.

First, Row Selection identifies respondents in the dataset D whose response patterns closely resemble the target respondent vector $\mathbf{r}_t$. The selected respondents' vectors serve as few-shot examples that guide the LLM toward informed predictions. However, relying solely on response similarity risks choosing homogeneous examples, which may bias the prompt. To mitigate this, we rank the response vectors by cosine similarity and include in the prompt the p most similar respondents for each answer option. This helps the model generalize more effectively and capture the broader context needed for accurate inferences [34].

Next, Column Selection identifies the most relevant question–answer pairs in the dataset. This step compensates for the limitations of relying solely on response similarity by introducing diverse and contextually rich information into the prompt, further enhancing the model's reasoning.

To effectively leverage these strategies, we incorporate in-context learning (ICL) [16], which allows the LLM to generalize from the selected examples and context. This combination of Row Selection and Column Selection, guided by ICL, leads to more accurate and contextually appropriate predictions.

The overall structure of our method is illustrated in Figure 1.

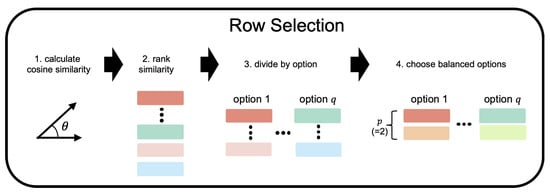

3.3.1. Row Selection

The Row Selection process is designed to strategically select few-shot examples that closely resemble the current respondent, thereby improving the model's performance in predicting missing data. The selection is based on the cosine similarity between the response vector $\mathbf{r}_t$ of the target respondent and the vectors $\mathbf{r}_i$ of the other respondents in the dataset D. The detailed procedure for Row Selection, illustrated in Figure 2, is as follows:

Figure 2.

A detailed illustration of the Row Selection. The Row Selection process involves four key steps to select the most relevant few-shot examples for predicting missing survey responses: (1) Calculate Cosine Similarity: measure the similarity between the target respondent and others based on their responses. (2) Rank Similarity: rank all respondents by their similarity scores to the target respondent. (3) Divide by Option: group the top-ranked respondents according to the different answer options for the target question. (4) Choose Balanced Options: select a balanced number of respondents from each answer option to form a few-shot dataset, thereby enhancing diversity and reducing bias in the predictions. The Row Selection process is crucial for constructing a few-shot dataset that is both diverse and unbiased, resulting in enhanced predictive performance of the model.

1. Cosine Similarity Calculation: The cosine similarity between the target respondent $\mathbf{r}_t$ and another respondent $\mathbf{r}_i$ is calculated as follows:

$$\mathrm{sim}(\mathbf{r}_t, \mathbf{r}_i) = \frac{\sum_{j \in \mathcal{J}_{t,i}} r_{t,j}\, r_{i,j}}{\sqrt{\sum_{j \in \mathcal{J}_{t,i}} r_{t,j}^{2}}\ \sqrt{\sum_{j \in \mathcal{J}_{t,i}} r_{i,j}^{2}}} \qquad (3)$$

where $\mathcal{J}_{t,i}$ is the set of columns in which both $\mathbf{r}_t$ and $\mathbf{r}_i$ have non-NaN values. Using Equation (3) as the scoring function ensures that similarity is computed only over commonly answered questions, which addresses the issue of missing data.

2. Ranking Based on Similarity: After the cosine similarity scores are calculated for all other respondents $\mathbf{r}_i$ ($i \neq t$), the respondents are ranked in descending order of $\mathrm{sim}(\mathbf{r}_t, \mathbf{r}_i)$. This ranking identifies the respondents most similar to the target respondent.

3. Option-Wise Selection: From the top-ranked respondents, p respondents are selected for each answer option in $O_s$ of the target question $c_s$. This step ensures that the few-shot examples include a balanced representation of all possible answer options for the target question.

4. Balanced Few-Shot Example Selection: Finally, the respondents selected for each answer option are combined to form a balanced few-shot dataset with $p \times q$ examples, where $q = |O_s|$ is the number of answer options for the target question $c_s$. This balanced dataset then provides context for imputing the missing response of the target respondent.
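The four steps above can be sketched in Python as follows. This is an illustrative sketch under stated assumptions, not the authors' implementation: answers are assumed to be numerically coded with `math.nan` for missing values, only respondents who answered the target question are considered as few-shot candidates, and ties in similarity keep the original row order. `masked_cosine` implements Equation (3); `select_rows` is a hypothetical helper name.

```python
import math
from typing import Dict, List, Sequence


def masked_cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Equation (3): cosine similarity over the columns where both vectors are non-NaN."""
    common = [j for j in range(len(u)) if not math.isnan(u[j]) and not math.isnan(v[j])]
    dot = sum(u[j] * v[j] for j in common)
    norm_u = math.sqrt(sum(u[j] ** 2 for j in common))
    norm_v = math.sqrt(sum(v[j] ** 2 for j in common))
    return dot / (norm_u * norm_v) if norm_u > 0 and norm_v > 0 else 0.0


def select_rows(D: List[List[float]], t: int, s: int, options: Sequence[int], p: int) -> List[int]:
    """Return row indices of a balanced few-shot set: the p respondents most similar to
    target respondent t for each answer option of target question s."""
    # Steps 1-2: score every other respondent who answered question s, then rank descending.
    candidates = [i for i in range(len(D)) if i != t and not math.isnan(D[i][s])]
    ranked = sorted(candidates, key=lambda i: masked_cosine(D[t], D[i]), reverse=True)
    # Step 3: walk down the ranking and fill up to p slots per answer option.
    per_option: Dict[int, List[int]] = {o: [] for o in options}
    for i in ranked:
        option = int(D[i][s])
        if option in per_option and len(per_option[option]) < p:
            per_option[option].append(i)
    # Step 4: concatenate the option groups into at most p * len(options) examples.
    return [i for o in options for i in per_option[o]]


nan = math.nan
D = [
    [1, 1, nan],  # target respondent (row 0), missing answer to question 2
    [1, 1, 1],
    [1, 2, 2],
    [2, 1, 1],
    [1, 3, 2],
    [nan, 1, 2],
]
print(select_rows(D, t=0, s=2, options=[1, 2], p=2))  # -> [1, 3, 5, 2]
```

Walking down a single global ranking and filling per-option slots is one simple way to realize steps 3 and 4; if an option has fewer than p answering respondents, this sketch simply returns fewer examples for that option.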

Through this process, we can select respondents who are most similar to the target respondent as few-shot examples. Additionally, by selecting p respondents from each answer category of the target question, we ensure that the few-shot examples are not biased and provide a balanced representation of all possible answers.

3.3.2. Column Selection

The Column Selection process is designed to identify the question–answer pairs in the dataset D that provide the most valuable context for answering the target question $c_s$ for the target respondent $\mathbf{r}_t$. This step addresses the limitations of the Naive Prompt approach by selectively using only the most relevant information. The procedure consists of the following steps:

1. Prompting for Related Questions for Enhanced Retrieval: To identify the questions most relevant to the target question $c_s$, we prompt the LLM to produce alternative phrasings, expansions, or related inquiries that capture different aspects of $T(c_s)$. Specifically, we use GPT-4 Turbo to generate a set of candidate questions $G = \{g_1, \dots, g_L\}$ that are semantically related to $T(c_s)$. This allows us to uncover questions that are conceptually related to the target question even when their wording is not directly similar, thereby enriching the pool of potential context for the LLM. Using these generated questions as retrieval queries captures information that might be missed by querying with the target question directly, as discussed in Section 3.2.

2. Embedding with Dense Retriever: Once the candidate questions are generated, each candidate question and each actual question are embedded using a dense retriever model. Specifically, the retriever maps each actual question text $T(c_j)$ to an embedding $\mathbf{e}_j = E(T(c_j))$ by taking the [CLS] token of the last hidden representation over the tokens in $T(c_j)$. At query time, the same encoder is applied to each candidate question $g_l$ to obtain a candidate question embedding $\mathbf{h}_l = E(g_l)$. This embedding step converts the textual content of the questions into dense vectors that capture their semantic meaning, enabling more accurate similarity calculations.

3. Retrieving Similar Questions: After embedding the candidate questions G and the actual questions with the dense retriever, we calculate the cosine similarity between the embeddings $\mathbf{h}_l$ and $\mathbf{e}_j$. For each candidate question $g_l$, we select the actual question with the highest cosine similarity:

$$\hat{j}_l = \operatorname*{arg\,max}_{j \neq s} \frac{\mathbf{h}_l \cdot \mathbf{e}_j}{\lVert \mathbf{h}_l \rVert\, \lVert \mathbf{e}_j \rVert} \qquad (4)$$

This process yields a set of retrieved questions $\hat{Q} = \{c_{\hat{j}_1}, \dots, c_{\hat{j}_L}\}$, where each $c_{\hat{j}_l}$, selected through Equation (4), is the question most similar to the corresponding candidate question $g_l$. To ensure that the questions in $\hat{Q}$ do not overlap, each must be unique: if a question has already been selected for an earlier candidate, it is excluded from the pool for subsequent selections, and the next most similar question is chosen instead. This guarantees that all questions in $\hat{Q}$ are distinct.

4. Final Selection of Question–Answer Pairs: From the retrieved question–answer pairs $\{(T(c_{\hat{j}_l}), T(r_{t,\hat{j}_l}))\}_{l=1}^{L}$, we select the top-k pairs with the highest relevance to the target question $c_s$. Relevance is determined by the order of the candidate questions $g_1, \dots, g_L$, which GPT-4 Turbo fixes when generating them for each target question. This ensures that the selected pairs provide the most informative context, enabling the LLM to generate more accurate predictions. Additionally, the few-shot respondents selected through Row Selection (Section 3.3.1) undergo the same extraction of relevant question–answer pairs, so that row and column selections are aligned to support the LLM's decision-making process.
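Steps 3 and 4 can be sketched as follows, assuming the candidate-question embeddings (kept in the order in which GPT-4 Turbo generated the questions) and the actual-question embeddings have already been computed by a dense retriever. The retriever call itself is omitted, the toy vectors are invented for illustration, and `retrieve_unique` is a hypothetical helper that applies Equation (4) greedily with the uniqueness constraint and keeps the first top-k results.

```python
import math
from typing import List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Plain cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u > 0 and norm_v > 0 else 0.0


def retrieve_unique(
    candidate_embs: List[Sequence[float]],
    question_embs: List[Sequence[float]],
    allowed: List[int],
    top_k: int,
) -> List[int]:
    """For each candidate question, in generation order, pick the most similar actual
    question (Equation (4)) among those not selected yet, so every retrieved question is
    distinct; return the indices of the first top_k retrieved questions."""
    selected: List[int] = []
    for h in candidate_embs:
        pool = [j for j in allowed if j not in selected]
        if not pool:  # every allowed question has already been used
            break
        selected.append(max(pool, key=lambda j: cosine(h, question_embs[j])))
    return selected[:top_k]


question_embs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [1.0, -1.0]]
candidate_embs = [[1.0, 0.1], [0.9, 0.2], [0.0, 1.0]]
print(retrieve_unique(candidate_embs, question_embs, allowed=[0, 1, 2, 3], top_k=3))  # -> [0, 2, 1]
```

In this toy run, the second candidate is actually closest to question 0, but because question 0 was already taken by the first candidate, it falls through to question 2. The `allowed` list is where the target question (and, as an assumption, questions the respondent left unanswered) would be excluded.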

By refining the selection of both questions and responses, Column Selection addresses the shortcomings of the Naive Prompt approach and provides the LLM with contextually appropriate and semantically relevant information. This method not only improves the relevance of the context used for prediction but also makes fuller use of the survey data, leading to more accurate and reliable predictions. Table A1 shows the prompt used to generate related questions and provides examples of the extracted questions.

3.3.3. Prompt Generation and Answer Extraction

Finally, we generate the prompt used by the LLM to predict the answer to the target question. The final prompt integrates the results of Row Selection and Column Selection, combining the context from the target respondent with the selected few-shot examples. This prompt is input into the LLM, and the resulting output is processed with regular expressions (regex) to extract the final predicted answer. The final prompt and example answers are shown in Table A2.
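Because the actual prompt is given only in Table A2, the template below is a hypothetical stand-in. The sketch mainly illustrates how the selected rows and columns can be assembled into one prompt and how a regular expression can map the model's free-text reply back to one of the answer options. The reply format `Answer: <option>`, the toy question texts, and the helper names `build_prompt` and `extract_answer` are assumptions.

```python
import re
from typing import List, Optional, Sequence, Tuple

Pair = Tuple[str, str]  # (question text, answer text)


def build_prompt(
    question: str,
    options: Sequence[str],
    few_shots: List[Tuple[List[Pair], str]],
    target_context: List[Pair],
) -> str:
    """Assemble a prompt from few-shot respondents (their selected Q-A pairs plus their answer
    to the target question) followed by the target respondent's selected Q-A pairs."""
    lines: List[str] = []
    for context, answer in few_shots:
        lines.append("### Respondent")
        lines += [f"Q: {q}\nA: {a}" for q, a in context]
        lines.append(f"Q: {question}\nA: {answer}")
    lines.append("### Target respondent")
    lines += [f"Q: {q}\nA: {a}" for q, a in target_context]
    lines.append(f"Q: {question}")
    lines.append("Options: " + "; ".join(options))
    lines.append("Reply in the form 'Answer: <option>'.")
    return "\n".join(lines)


def extract_answer(output: str, options: Sequence[str]) -> Optional[str]:
    """Return the option named after 'Answer:' in the LLM output, or None if none matches."""
    match = re.search(r"Answer:\s*(.+)", output, flags=re.IGNORECASE)
    if match is None:
        return None
    reply = match.group(1).strip().rstrip(".").lower()
    # Check longer options first so that an option that is a prefix of another cannot win.
    for option in sorted(options, key=len, reverse=True):
        if reply.startswith(option.lower()):
            return option
    return None


options = ["Satisfied", "Dissatisfied"]
prompt = build_prompt(
    "Are you satisfied with the way things are going?",
    options,
    few_shots=[([("Do you follow politics?", "Often")], "Dissatisfied")],
    target_context=[("Do you follow politics?", "Rarely")],
)
print(extract_answer("The respondent seems unhappy. Answer: Dissatisfied.", options))  # -> Dissatisfied
```

Returning None instead of guessing when no option matches leaves the handling of unparseable replies (for example, re-prompting) as a separate decision.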

4. Experiments

In this section, we provide a comprehensive overview of the experimental setup and methodology. All experiments were conducted using an NVIDIA GeForce RTX 3090 Ti GPU, manufactured in Santa Clara, California, USA. We begin by describing the dataset and preprocessing steps in Section 4.1. The baseline models and their hyperparameter configurations are detailed in Section 4.2. Our proposed method’s configurations are presented in Section 4.3, followed by an interpretation of the results in Section 4.4, where we offer insights into the strengths and limitations of our approach. Finally, Section 4.5 presents ablation studies that analyze the impact of various hyperparameter settings on our method’s performance.

4.1. Dataset

For our experiments, we utilized the American Trends Panel (ATP) dataset from Pew Research Center, specifically focusing on the ATP W116 dataset, which covers the survey conducted between 10 October and 16 October 2022. The dataset consists of approximately 150 questions and responses from 5098 participants. This dataset was chosen for its relevance, as it captures timely and topical questions related to politics during that period.

Within the ATP W116 dataset, we selected a subset of questions with minimal missing data, specifically focusing on the SATIS, POL10, POL1JB, DRLEAD, PERSFNCB, and ECON1B questions in Table 1. These questions were chosen because they not only have a low incidence of missing responses, which helps ensure the reliability of our predictions, but also cover a diverse range of political topics, providing a robust test bed for our method.

Table 1.

Summary of survey questions used in the experiment, including the question codes, the full text of each question, and the corresponding answer options. Each question targets a specific aspect of respondents’ opinions on political and economic topics, with multiple-choice answers reflecting the possible range of responses.

We excluded columns that are not necessary for predicting missing values, such as QKEY, INTERVIEW_START, INTERVIEW_END, WEIGHT, LANG, FORM, DEVICE_TYPE, XW78NONRESP, and XW91NONRESP. These fields represent the respondent’s key ID, interview start time, interview end time, weight, language used, respondent form, device type used by the respondent, and response status for waves 78 and 91, respectively.

Finally, we selected 100 participants as evaluation data and used the remaining participants as row selection data. To prevent a model that always predicts the most frequent answer option from obtaining a misleadingly high F1 score, we balanced the responses for each question evenly. This allows us to evaluate the model's performance rigorously across response types rather than rewarding a bias toward the most frequent answers. Additionally, unanswered or noisy responses, such as Don't Know (DK) and Refused (RF), were excluded from the examples used for row and column selection as well as from the evaluation data.
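A rough Python sketch of these preprocessing choices follows, under several assumptions: rows are dictionaries keyed by column name, DK and RF answers appear as the hypothetical text labels shown (the raw file may instead use numeric codes), and the balanced evaluation set is drawn separately for each target question by sampling an equal number of respondents per answer option. The exact sampling procedure used in the paper is not specified here, and `clean` and `balanced_eval_split` are hypothetical helper names.

```python
import random
from typing import Dict, List, Optional, Tuple

# Metadata columns excluded from prediction (listed in the text above).
META_COLUMNS = {
    "QKEY", "INTERVIEW_START", "INTERVIEW_END", "WEIGHT", "LANG",
    "FORM", "DEVICE_TYPE", "XW78NONRESP", "XW91NONRESP",
}
# Hypothetical text labels for Don't Know (DK) and Refused (RF) answers.
NOISY_ANSWERS = {"Don't know", "Refused"}

Row = Dict[str, Optional[str]]


def clean(rows: List[Row]) -> List[Row]:
    """Drop metadata columns and treat DK/RF answers as missing (None)."""
    return [
        {k: (None if v in NOISY_ANSWERS else v) for k, v in row.items() if k not in META_COLUMNS}
        for row in rows
    ]


def balanced_eval_split(
    rows: List[Row], target: str, n_eval: int, seed: int = 0
) -> Tuple[List[int], List[int]]:
    """Sample n_eval // (number of options) respondents per answer option of `target` as
    evaluation rows; all other rows form the pool used for row selection."""
    rng = random.Random(seed)
    by_option: Dict[str, List[int]] = {}
    for idx, row in enumerate(rows):
        answer = row.get(target)
        if answer is not None:
            by_option.setdefault(answer, []).append(idx)
    per_option = n_eval // len(by_option)
    eval_idx: List[int] = []
    for idxs in by_option.values():
        eval_idx += rng.sample(idxs, per_option)  # raises ValueError if an option is too rare
    chosen = set(eval_idx)
    rest = [i for i in range(len(rows)) if i not in chosen]
    return sorted(eval_idx), rest


rows = [
    {"QKEY": "1", "Q1": "Satisfied"},
    {"QKEY": "2", "Q1": "Dissatisfied"},
    {"QKEY": "3", "Q1": "Refused"},
    {"QKEY": "4", "Q1": "Satisfied"},
    {"QKEY": "5", "Q1": "Dissatisfied"},
]
cleaned = clean(rows)
eval_idx, pool = balanced_eval_split(cleaned, "Q1", n_eval=2)
print(len(eval_idx), len(pool))  # -> 2 3
```

In this sketch the respondent who refused stays in the row-selection pool, but with a missing value for that question, so it cannot serve as a few-shot example for it.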

4.2. Baselines

1. MICE: Multivariate Imputation by Chained Equations (MICE) [7] is one of the most widely used methods for imputing missing data. It imputes each incomplete variable in turn from the other variables, and these conditional models can be parametric or nonparametric. In our study, we configured MICE to use a nonparametric method, with the number of imputed datasets set to 2 and the number of iterations also set to 2.

2. MissForest: MissForest [8] is an iterative imputation method that uses random forests to predict and fill in missing values. It can handle mixed-type data containing both categorical and continuous variables. We configured the random forest with the default settings of the scikit-learn library [35] and ran 5 iterations.

3. TabTransformer: TabTransformer [13] is a tabular data modeling architecture that adapts Transformer layers to the tabular domain by applying multi-head attention to parametric embeddings, which allows it to cope with missing values. We used the default hyperparameters reported in the original paper, except for the number of epochs, the learning rate, and the batch size, which were set to 10, 0.0001, and 1, respectively. All missing values were replaced with 0.

4. Naive Prompt: In addition to these established methods, we implemented a Naive Prompt baseline. It is inspired by the Naive RAG [31] approach and simplifies the selection of relevant questions, which lets us assess the impact of our more advanced techniques. In the Naive Prompt approach, column selection does not ask the LLM to rewrite or refine relevant questions. Instead, the user’s non-target question–response pairs are selected by their cosine similarity to the target question and added to the context in descending order of similarity.
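The chained-equations idea shared by MICE and MissForest can be illustrated with a minimal, dependency-free sketch for categorical survey answers. It is not the configuration used in our experiments. MICE would fit a nonparametric conditional model for each variable, and MissForest would fit a random forest. This sketch deliberately simplifies that step by re-predicting each column with a k-nearest-neighbour majority vote. The name `chained_impute` and its parameters are illustrative.

```python
from collections import Counter


def chained_impute(rows, n_iter=2, k=3):
    """Impute missing categorical cells (None) by cycling over columns.

    rows: list of equal-length lists; None marks a missing answer.
    Each pass re-predicts every originally missing cell in column j from the
    current values of the other columns. A k-nearest-neighbour majority vote
    (Hamming distance) stands in for the per-variable models that MICE or
    MissForest would fit.
    """
    n_cols = len(rows[0])
    data = [list(row) for row in rows]  # never mutate the caller's rows
    missing = [(i, j) for i, row in enumerate(rows) for j in range(n_cols) if row[j] is None]

    # Initialisation: fill each column with its most frequent observed value.
    for j in range(n_cols):
        mode = Counter(row[j] for row in rows if row[j] is not None).most_common(1)[0][0]
        for i, j2 in missing:
            if j2 == j:
                data[i][j] = mode

    for _ in range(n_iter):
        for j in range(n_cols):
            # Only rows where column j was actually observed act as training data.
            train = [t for t, row in enumerate(rows) if row[j] is not None]
            for i, j2 in missing:
                if j2 != j:
                    continue
                neighbours = sorted(
                    train,
                    key=lambda t: sum(data[i][c] != data[t][c] for c in range(n_cols) if c != j),
                )[:k]
                data[i][j] = Counter(data[t][j] for t in neighbours).most_common(1)[0][0]
    return data
```

As in MICE, the imputations from one column immediately feed the predictions for the next column. In this sketch, `n_iter` plays the role of the iteration count.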

4.3. Our Methods

In our experiments, we evaluated three language models: LLaMA 3-8B, GPT-4o-mini, and GPT-4-turbo. The latter two were queried through their API. For retrieval, we used the BGE-m3 [36] model, which identifies relevant user responses based on the similarity between the target question and the user’s non-target responses. To capture the context of each response, every question–answer pair was formatted as a single string with the structure “Q: {question} A: {response}”. Similarity is therefore computed over both the question and the user’s specific answer. To make results reproducible, we used greedy decoding with the temperature set to 0. To validate our method across different survey settings, we also ran two configurations. The Full Context Method incorporates both row selection and column selection. The Non-Row Selection Method omits row selection. Comparing the two isolates the impact of row selection on model performance.
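The retrieval step can be illustrated with the short sketch below. It formats each answered item as “Q: {question} A: {response}”, scores it against the target question by cosine similarity, and keeps the top-k strings in descending order of similarity. The Naive Prompt baseline also uses this ordering. To stay dependency-free, the sketch substitutes a bag-of-words count vector for BGE-m3 embeddings. That substitution is an assumption for illustration only, and the helper names are hypothetical.

```python
import math
import re
from collections import Counter


def format_pair(question, response):
    # The "Q: {question} A: {response}" string format described in the text.
    return f"Q: {question} A: {response}"


def embed(text):
    # Placeholder encoder: lower-cased bag-of-words counts.
    # A real pipeline would use a dense BGE-m3 embedding here.
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(u, v):
    dot = sum(count * v[token] for token, count in u.items())
    norm_u = math.sqrt(sum(c * c for c in u.values()))
    norm_v = math.sqrt(sum(c * c for c in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def top_k_pairs(target_question, answered, k):
    """Return the k formatted (question, response) strings most similar to the
    target question, ordered by descending cosine similarity."""
    query = embed(target_question)
    pairs = [format_pair(q, a) for q, a in answered]
    ranked = sorted(pairs, key=lambda p: cosine(query, embed(p)), reverse=True)
    return ranked[:k]
```

Because the answer text is embedded together with the question, two respondents who answered the same item differently can receive slightly different similarity scores.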

4.3.1. Full Context Method

Our primary method, referred to as the Full Context Method, incorporates both row selection (selecting relevant respondents) and column selection (selecting relevant questions). This approach provides the model with a rich and comprehensive context, enhancing its ability to generalize and predict accurately across diverse survey questions.

For this experiment, we fixed the number of few-shot examples (n-shot) to 1 and the number of top relevant questions (top-k) to 25. While these parameters can vary for optimal performance depending on the specific question, we found that these settings provided consistently strong results on average across different questions. A more detailed analysis of the impact of n-shot and top-k is presented in Section 4.5.

Comparing the Full Context Method with the baselines in Table 2 yields several observations. With GPT-4-turbo, the Full Context Method achieved the highest F1-score on the SATIS question (0.84). Similarly, with GPT-4o-mini it excelled at predicting DRLEAD and ECON1B, reaching F1-scores of 0.73 and 0.63, respectively, and again surpassed both the baselines and the Non-Row Selection Method.

Table 2.

F1-scores of various methods across six different survey questions in Table 1. Bold numbers indicate the best performance achieved by each method. Asterisks (*) denote challenging questions with three options that may impact the model’s performance. The table provides a comparative overview of traditional baselines, Naive prompt methods (NP), and our Full Context (FC) and Non-Row Selection (NRS) Methods across multiple tasks.
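This section does not restate which F1 averaging scheme Table 2 uses. The sketch below assumes macro-averaging over answer options, which is a natural choice for the class-balanced evaluation set described in Section 4.1. It computes per-option F1 from counts and takes the unweighted mean over all options that appear in either the true or the predicted labels.

```python
def macro_f1(y_true, y_pred):
    """Unweighted mean of per-option F1 over options seen in y_true or y_pred."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for label in labels:
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        scores.append(f1)
    return sum(scores) / len(scores)
```

On a balanced two-option set, always predicting a single option scores only 1/3. For example, `macro_f1(["S", "S", "D", "D"], ["D"] * 4)` gets F1 = 0 for "S" and 2/3 for "D". This illustrates why the balanced design guards against majority-class guessing.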

4.3.2. Non-Row Selection Method

The Non-Row Selection Method is a variant of the Full Context Method that omits the row selection step. This setting tests whether the model can generalize without examples from similar respondents. It is therefore particularly relevant for small surveys or for settings where respondent data are sparse (e.g., when the missing rate is high). Because it relies solely on column selection, it also probes the method’s robustness in predicting responses to completely new questions. We did not evaluate the complementary Non-Column Selection Method. Excluding the user’s own survey responses entirely would contradict the goal of the experiment, which is to exploit the model’s linguistic reasoning over those responses.

This method emphasizes the questions themselves, without the additional context provided by similar respondents. As Table 2 shows, the Non-Row Selection Method performs well in some cases, such as an F1-score of 0.91 on POL1JB with GPT-4-turbo. However, it generally underperforms the Full Context Method on more complex questions such as DRLEAD and ECON1B. This highlights the value of adding respondent-specific context through row selection.

4.4. Results and Discussion

Table 2 presents the performance of our methods compared to various baselines across different survey questions. From these results, several key findings emerge:

1. Significant Performance Improvement: The Full Context Method outperforms the baseline models on most survey questions. Specifically, GPT-4-turbo outperformed TabTransformer on the SATIS question by a margin of 0.36. Likewise, GPT-4o-mini achieved F1-scores of 0.73 and 0.60 on DRLEAD and ECON1B, respectively, well above the baselines. The Non-Row Selection Method also performed well: GPT-4-turbo achieved the highest F1-scores of 0.91 and 0.65 on the POL1JB question.

2. Robustness Across Different Question Types: The proposed method was robust across question types, including the more complex ones. For example, on the DRLEAD question, which requires a deeper understanding of leadership dynamics, GPT-4o-mini achieved an F1-score of 0.73. This outperformed both the Non-Row Selection Method and all baseline models, highlighting the method’s ability to handle complex and nuanced survey questions.

3. Practical Implications and Scalability: The results also underscore the practical benefits of our approach for survey data analysis. A key advantage is that it outperforms traditional baselines without complex additional tuning, which can be time-consuming and resource-intensive in large-scale survey analysis. MICE and MissForest have high computational complexity and require considerable time on large datasets: they can take between 4 h 18 min and 5 h 54 min to complete imputation. TabTransformer requires approximately 4 h of training per column to reach the performance observed in our experiments. In contrast, our LLM-based method is far more efficient: both the LLaMA 3 and GPT-based variants take only about 4 to 6 min to predict responses for a single question. This reduction in processing time makes our approach more practical for real-time or large-scale survey analysis and suggests that it scales across survey sizes and complexities.

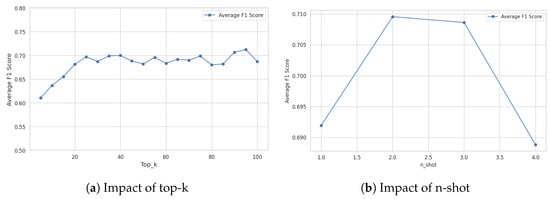

4.5. Ablation Study

In this section, we examine how varying the number of top-k responses and n-shot respondents affects the predictive performance of our methods. All ablation studies were conducted with GPT-4o-mini, which showed the strongest overall performance in our experiments. Figure 3 reports the corresponding average F1-scores.

4.5.1. The Impact of Top-k Responses

The selection of the top-k responses is crucial for optimizing the predictive performance of our methods, yet it is highly dependent on the specific question being analyzed. The optimal top-k setting can vary significantly due to factors such as the number of answer choices and the complexity of the question. For instance, more complex questions or those with a greater number of answer choices may require a higher top-k to capture the necessary context for accurate predictions.

Our experiments indicate that, on average, F1-scores increase as top-k approaches 25. Beyond this point, the scores fluctuate but generally plateau without significant improvement. This suggests that increasing top-k initially helps the model capture relevant information. Beyond a threshold, however, additional context yields diminishing returns.

Figure 3a shows the trend of F1-scores across top-k values, with a clear plateau once top-k exceeds 25. Given this trend, we recommend starting with a top-k of 5 and increasing it incrementally until the improvement in F1-score becomes negligible. Once performance stabilizes, top-k can be fixed as a hyperparameter. This avoids an exhaustive search and balances computational cost against predictive accuracy.

Figure 3.

The impact of top-k responses and n-shot respondents on the model’s performance, as measured by F1-Score. (a) The average F1-Score trend across different top-k values (ranging from 5 to 100) shows that performance generally increases until top-k reaches 25, after which the scores plateau with minimal improvements. (b) The average F1-Score trend across different n-shot values (ranging from 1 to 4) indicates that the highest performance is achieved at 2-shot, with scores slightly declining as n-shot increases beyond this point.

Future research could focus on developing methods to automatically determine the optimal top-k for different types of questions, potentially leading to even more refined and efficient survey analysis models.
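The incremental top-k procedure recommended earlier in this subsection can be written as a small search loop. In this sketch, the step size, the upper bound, the tolerance that defines a “negligible” gain, and the `evaluate` callable are all assumptions. `evaluate` is a hypothetical function that maps a top-k value to a validation F1-score.

```python
def choose_top_k(evaluate, start=5, step=5, max_k=100, tol=0.005):
    """Increase top-k until one step improves F1 by less than tol.

    evaluate: callable mapping a top-k value to a validation F1-score.
    Returns the chosen k and every score observed along the way.
    """
    history = {start: evaluate(start)}
    k = start
    while k + step <= max_k:
        nxt = k + step
        history[nxt] = evaluate(nxt)
        if history[nxt] - history[k] < tol:
            break  # negligible gain: keep the smaller, cheaper k
        k = nxt
    return k, history
```

Because the curve in Figure 3a fluctuates after the plateau, a single small step can stop the search early. A patience counter (stopping only after several consecutive small gains) is one possible refinement.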

4.5.2. The Impact of n-Shot Respondents

The number of n-shot respondents, i.e., the number of relevant examples provided to the model, plays a critical role in the overall performance of our method. To assess its impact, we fixed top-k at 25, since our earlier results showed that this value consistently yields strong performance across questions, and varied n-shot from 1 to 4.

We limited our exploration to a maximum of 4-shot respondents for several reasons. First, increasing the n-shot significantly adds to the computational burden, as each additional example increases the context length that the model must process. This, in turn, can lead to longer inference times and greater resource consumption, which may not be practical in large-scale survey analysis. Additionally, empirical observations from our experiments showed that beyond 4-shot, the marginal gains in F1-Score tend to diminish, with little to no improvement in prediction accuracy. Thus, a cap of 4 was chosen to balance performance with computational efficiency.

Across most questions, we observed that setting n-shot to 2 typically resulted in the best performance. As shown in Figure 3b, the average F1-Scores generally peaked at 2-shot before stabilizing or slightly declining with higher values. This finding suggests that, in many cases, providing two well-chosen examples strikes an optimal balance, offering the model enough context to effectively generalize without overwhelming it with excessive information. When combined with a robust column selection process, this 2-shot setting appears to enhance the model’s ability to capture the nuances of the survey questions, leading to more accurate and reliable predictions.

5. Limitations and Future Works

5.1. Limitations

While our proposed method demonstrates significant improvements in predicting item non-responses in survey data, there are several limitations that should be acknowledged:

1. Challenges with Questions Having Numerous Options: The predictive accuracy of our method decreases for survey questions with many answer choices. As the number of options increases, the complexity and ambiguity involved in distinguishing between them make it harder to predict the correct response. This is particularly evident in questions whose options are nuanced or overlap in meaning.

2. Requirement for Hyperparameter Tuning: The optimal values of hyperparameters such as top-k (the number of top relevant questions) and n-shot (the number of few-shot examples) can vary between survey questions. Each question may need its own hyperparameter settings to achieve the best performance, which requires a search process to identify them.

3. Dependence on Linguistic Relevance: The effectiveness of our method relies heavily on the linguistic relatedness of the survey questions. Some questions are not semantically rich or lack clear connections to other items in the survey (for example, “How did you access this survey?”). For such questions, our method may struggle to provide accurate predictions, because the lack of relevant contextual information hinders the model’s ability to infer missing responses.

4. Limitations with Smaller Language Models and Long Contexts: With language models that have fewer parameters, such as LLaMA 3-8B, performance degrades more noticeably as the context length increases. These smaller models are more prone to reduced accuracy and more frequent hallucinations when processing long prompts.

5.2. Future Works

Building upon the findings of this study, there are several directions for future research that could enhance and extend our proposed method. Due to limitations in computational resources and the high inference cost of large language models, our experiments were conducted using only a single dataset. Expanding this work to include multiple datasets with diverse characteristics would allow for a more comprehensive evaluation of the method’s generalizability and robustness.

We focused on three LLMs and three conventional baseline methods, but exploring a broader range of models and techniques may yield further insights and improvements. More advanced techniques for column and row selection could also strengthen our approach. Drawing on prior research on demonstration selection, ordering, and formatting, we could refine the selection process so that the model receives better examples.

From a retrieval perspective, there is an opportunity to apply fine-tuning techniques to the retriever to better align it with our dataset. Although our focus was on developing a method that can be easily and efficiently employed without the need for additional training, future work could explore the benefits of retriever fine-tuning on performance.

Addressing these areas in future studies could lead to a more robust, efficient, and accurate method for predicting item non-responses in survey data. Such advancements would not only contribute to the academic field but also have practical implications for enhancing data analysis in various real-world applications.

6. Conclusions

In this paper, we introduced an LLM-based approach for predicting item non-responses in survey data. Traditional approaches instead rely on statistical methods or train deep neural networks from scratch. Our method builds prompts from the most relevant question–answer pairs of other respondents together with the target respondent’s own question–answer pairs and feeds them to the LLM. With these prompts, it outperformed the traditional methods on item non-response. F1-score improvements ranged from 5.8% to 52%, highlighting the effectiveness of our Row and Column Selection techniques. This approach offers a more efficient and accessible solution for addressing item non-response in survey data, helping users extract valuable insights more quickly and easily.

Author Contributions

Conceptualization, J.J. and J.K.; methodology, J.J. and J.K.; software, J.J. and J.K.; validation, J.J. and J.K.; formal analysis, J.J. and J.K.; investigation, J.J. and J.K.; resources, J.J. and J.K.; data curation, J.J. and J.K.; writing—original draft preparation, J.J. and J.K.; writing—review and editing, J.J., J.K., and Y.K.; visualization, J.J. and J.K.; supervision, Y.K.; project administration, Y.K.; funding acquisition, Y.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research was financially supported by the Ministry of Trade, Industry and Energy (MOTIE) and Korea Institute for Advancement of Technology (KIAT) through the International Cooperative R&D program (Project No. P0025661). This work was supported by Institute of Information & communications Technology Planning & Evaluation (IITP) grant funded by the Korea government (MSIT) (No. RS-2022-00155885, Artificial Intelligence Convergence Innovation Human Resources Development (Hanyang University ERICA)).

Data Availability Statement

The data used in this study are available at https://www.pewresearch.org/dataset/american-trends-panel-wave-116/ and were downloaded on 5 July 2024. The scripts used are available at https://github.com/jiogenes/predicting_missing_value.

Acknowledgments

We sincerely thank the anonymous reviewers for their insightful and constructive comments, which greatly contributed to enhancing the content and quality of this paper.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Full Prompts

This appendix presents the full prompts used in our method. Each prompt has three components: the system prompt, the few-shot prompt, and the target prompt (target question or target user). Each component combines fixed plain text with variables enclosed in {} brackets. Examples of the variables are given below each prompt in the corresponding table.

Appendix A.1. Prompt to Generate Candidate Questions

Table A1.

Full prompt used to generate candidate questions. After the designated variables are filled with the appropriate text, this prompt is given to the LLM to extract questions relevant to the target question. "**" marks key categories in the prompt, helping the language model recognize the structure of the input.


| Component | Field | Content |
| --- | --- | --- |
| System | Prompt | Your task is to generate a list of questions based on the provided user input from a survey. Assume the user has completed a survey with various questions, but only one question is provided to you. Here is a description of the survey: {description of the survey} Key sections of the survey include: {key sections} |
| System | Example: description of the survey | The ATP W116 survey, conducted by Pew Research Center, is a comprehensive pre-election questionnaire targeting a wide array of political and social issues. It was fielded from 10 October to 16 October 2022. The survey includes questions designed to gauge respondents’ satisfaction with the current state of the country, approval ratings of President Joe Biden, opinions on various institutions, and perspectives on upcoming congressional elections. |
| System | Example: key sections | 1. **Political Approval and Satisfaction**: Respondents are asked about their satisfaction with the country’s direction and their approval or disapproval of President Biden’s performance, including the strength of their opinions. … 10. **Personal and Employment Situations**: The survey includes sections on respondents’ current work status, pressures felt in their personal and professional lives, and their perceptions of economic issues affecting the nation and themselves personally. |
| Few-shot | Prompt | The example of the provided question is: {question} Then you would generate additional questions such as: {additional questions} |
| Few-shot | Example: question | Thinking about the state of the country these days, would you say you feel... |
| Few-shot | Example: additional questions | How satisfied are you with the current direction of the country? Do you approve or disapprove of President Biden’s performance? How strongly do you feel about your approval or disapproval of President Biden? … How important is the issue of racial equality in influencing your vote in the upcoming elections? |
| Target Question | Prompt | Now, generate {number of questions} useful questions for the following question. Provided question is: {provided question} Generate the additional survey questions: |
| Target Question | Example: provided question | All in all, are you satisfied or dissatisfied with the way things are going in this country today? |
| Target Question | Example: number of questions | 20 |
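The sketch below shows how the Table A1 templates might be filled and how the model’s reply might be split into candidate questions. The template strings are copied from Table A1. Several choices are assumptions rather than details confirmed by the source: joining the three parts with blank lines, the single-pass `fill` helper, and the heuristic in `parse_questions` that keeps only lines ending in “?” after stripping list markers. All function names are hypothetical.

```python
import re

SYSTEM_A1 = (
    "Your task is to generate a list of questions based on the provided user input from a survey. "
    "Assume the user has completed a survey with various questions, but only one question is "
    "provided to you. Here is a description of the survey: {description of the survey} "
    "Key sections of the survey include: {key sections}"
)
FEW_SHOT_A1 = (
    "The example of the provided question is: {question} "
    "Then you would generate additional questions such as: {additional questions}"
)
TARGET_A1 = (
    "Now, generate {number of questions} useful questions for the following question. "
    "Provided question is: {provided question} Generate the additional survey questions:"
)


def fill(template, variables):
    """Substitute every {name} placeholder in one pass; a missing name raises KeyError."""
    return re.sub(r"\{([^{}]+)\}", lambda m: str(variables[m.group(1)]), template)


def candidate_question_prompt(description, sections, example_q, example_extra, target_q, n=20):
    return "\n\n".join([
        fill(SYSTEM_A1, {"description of the survey": description, "key sections": sections}),
        fill(FEW_SHOT_A1, {"question": example_q, "additional questions": example_extra}),
        fill(TARGET_A1, {"number of questions": n, "provided question": target_q}),
    ])


def parse_questions(reply):
    """Split an LLM reply into questions, dropping list markers such as '1.' or '-'."""
    questions = []
    for line in reply.splitlines():
        line = re.sub(r"^\s*(?:\d+[.)]|[-*])\s*", "", line).strip()
        if line.endswith("?"):
            questions.append(line)
    return questions
```

Raising `KeyError` on an unfilled placeholder is a deliberate choice in this sketch: a silently unfilled `{key sections}` would otherwise reach the LLM unnoticed.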

Appendix A.2. Prompt to Extract Response

Table A2.

Full prompt used to extract the response. Once the designated variables are filled with the appropriate text, this prompt serves as the final input to the LLM.


| Component | Field | Content |
| --- | --- | --- |
| System | Prompt | You are tasked with predicting responses to targeted user survey questions through given user survey questions-responses. Read the provided user survey questions-responses and use it to select the most appropriate response from the given options to the target question. Ensure that your output includes only the selected response and does not include any additional comments, explanations, or questions. Choose the appropriate answer to the following target question from the following options. Target question: {question} Options: {options} |
| System | Example: question | All in all, are you satisfied or dissatisfied with the way things are going in this country today? |
| System | Example: options | Satisfied Dissatisfied |
| Few-shot | Prompt | Here are examples of respondents similar to this user: User n’s survey responses: {responses} Answer: {answer} |
| Few-shot | Example: responses | Q: How satisfied are you with the choice of candidates for Congress in your district this November? A: Somewhat satisfied … Q: Thinking about the nation’s economy…How would you rate economic conditions in this country today?…A: Only fair |
| Few-shot | Example: answer | Satisfied |
| Target User | Prompt | Now, read the following target user survey responses and query, and select the most appropriate response from the given options based on the other responses. Refer to the answers provided by respondents similar to the user provided above. Ensure that your output includes only in Options: User survey responses: {user response} Answer: |
| Target User | Example: user responses | Q: How satisfied are you with the choice of candidates for Congress in your district this November? A: Not too satisfied … Q: Thinking about the nation’s economy… How would you rate economic conditions in this country today?…A: Only fair |
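The Table A2 templates can be assembled into chat messages as in the sketch below. Template text is copied from Table A2. The following details are assumptions and are not confirmed by the source. Options are joined with newlines. “User n” is read as a 1-based example index. The system component goes in a `system` message and the rest in a `user` message, following the common chat role/content convention. An empty `shots` list, which drops the few-shot block, approximates the Non-Row Selection configuration. `parse_answer` is a hypothetical post-processing step that accepts only an exact option match.

```python
import re

SYSTEM_A2 = (
    "You are tasked with predicting responses to targeted user survey questions through given user "
    "survey questions-responses. Read the provided user survey questions-responses and use it to "
    "select the most appropriate response from the given options to the target question. Ensure that "
    "your output includes only the selected response and does not include any additional comments, "
    "explanations, or questions. Choose the appropriate answer to the following target question from "
    "the following options. Target question: {question} Options: {options}"
)
FEW_SHOT_A2 = "User {n}'s survey responses: {responses} Answer: {answer}"
TARGET_A2 = (
    "Now, read the following target user survey responses and query, and select the most appropriate "
    "response from the given options based on the other responses. Refer to the answers provided by "
    "respondents similar to the user provided above. Ensure that your output includes only in Options: "
    "User survey responses: {user response} Answer:"
)


def fill(template, variables):
    """Substitute every {name} placeholder in one pass; a missing name raises KeyError."""
    return re.sub(r"\{([^{}]+)\}", lambda m: str(variables[m.group(1)]), template)


def build_messages(question, options, shots, user_pairs):
    """shots: list of (qa_strings, answer) from similar respondents; [] skips row selection.
    user_pairs: the target user's retrieved "Q: ... A: ..." strings (top-k)."""
    system = fill(SYSTEM_A2, {"question": question, "options": "\n".join(options)})
    parts = []
    if shots:
        parts.append("Here are examples of respondents similar to this user:")
        for i, (pairs, answer) in enumerate(shots, start=1):
            parts.append(fill(FEW_SHOT_A2, {"n": i, "responses": " ".join(pairs), "answer": answer}))
    parts.append(fill(TARGET_A2, {"user response": " ".join(user_pairs)}))
    return [{"role": "system", "content": system}, {"role": "user", "content": "\n\n".join(parts)}]


def parse_answer(reply, options):
    """Map the model's reply to one of the options, or None if it matches none exactly."""
    cleaned = reply.strip().strip('."')
    for option in options:
        if cleaned.lower() == option.lower():
            return option
    return None
```

Returning `None` for a non-matching reply, instead of guessing the closest option, makes format violations visible. This may matter especially for the smaller models discussed in Section 5.1.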

References

1. De Wit, J.R.; Lisciandra, C. Measuring norms using social survey data. Econ. Philos. 2021, 37, 188–221.
2. Delfino, A. Student engagement and academic performance of students of partido state university. Asian J. Univ. Educ. 2019, 15, 42–55.
3. Khoi Quan, N.; Liamputtong, P. Social Surveys and Public Health. In Handbook of Social Sciences and Global Public Health; Liamputtong, P., Ed.; Springer International Publishing: Cham, Switzerland, 2023; pp. 1–19.
4. Brick, J.M.; Kalton, G. Handling missing data in survey research. Stat. Methods Med. Res. 1996, 5, 215–238.
5. Chen, J.; Shao, J. Nearest neighbor imputation for survey data. J. Off. Stat. 2000, 16, 113.
6. Honaker, J.; King, G.; Blackwell, M. Amelia II: A Program for Missing Data. J. Stat. Softw. 2011, 45, 1–47.
7. van Buuren, S.; Groothuis-Oudshoorn, K. mice: Multivariate Imputation by Chained Equations in R. J. Stat. Softw. 2011, 45, 1–67.
8. Stekhoven, D.J.; Bühlmann, P. MissForest—Non-parametric missing value imputation for mixed-type data. Bioinformatics 2011, 28, 112–118.
9. Lever, J.; Krzywinski, M.; Altman, N. Points of Significance: Logistic regression. Nat. Methods 2016, 13, 541–542.
10. Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.
11. Ke, G.; Meng, Q.; Finley, T.; Wang, T.; Chen, W.; Ma, W.; Ye, Q.; Liu, T.Y. LightGBM: A Highly Efficient Gradient Boosting Decision Tree. In Proceedings of the Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017.
12. Ke, G.; Xu, Z.; Zhang, J.; Bian, J.; Liu, T.Y. DeepGBM: A Deep Learning Framework Distilled by GBDT for Online Prediction Tasks. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, New York, NY, USA, 4–8 August 2019; pp. 384–394.
13. Huang, X.; Khetan, A.; Cvitkovic, M.; Karnin, Z. TabTransformer: Tabular Data Modeling Using Contextual Embeddings. arXiv 2020, arXiv:2012.06678.
14. Somepalli, G.; Goldblum, M.; Schwarzschild, A.; Bruss, C.B.; Goldstein, T. SAINT: Improved Neural Networks for Tabular Data via Row Attention and Contrastive Pre-Training. arXiv 2021, arXiv:2106.01342.
15. Moser, C.; Kalton, G. Question wording. In Research Design; Routledge: Abingdon, UK, 2017; pp. 140–155.
16. Brown, T.B.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models are Few-Shot Learners. arXiv 2020, arXiv:2005.14165.
17. Kojima, T.; Gu, S.S.; Reid, M.; Matsuo, Y.; Iwasawa, Y. Large Language Models are Zero-Shot Reasoners. arXiv 2023, arXiv:2205.11916.
18. Liu, J.; Liu, C.; Zhou, P.; Lv, R.; Zhou, K.; Zhang, Y. Is ChatGPT a Good Recommender? A Preliminary Study. arXiv 2023, arXiv:2304.10149.
19. Yang, F.; Chen, Z.; Jiang, Z.; Cho, E.; Huang, X.; Lu, Y. PALR: Personalization Aware LLMs for Recommendation. arXiv 2023, arXiv:2305.07622.
20. Salemi, A.; Mysore, S.; Bendersky, M.; Zamani, H. LaMP: When Large Language Models Meet Personalization. arXiv 2024, arXiv:2304.11406.
21. Lewis, P.; Perez, E.; Piktus, A.; Petroni, F.; Karpukhin, V.; Goyal, N.; Küttler, H.; Lewis, M.; tau Yih, W.; Rocktäschel, T.; et al. Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks. arXiv 2021, arXiv:2005.11401.
22. Izacard, G.; Grave, E. Leveraging Passage Retrieval with Generative Models for Open Domain Question Answering. arXiv 2021, arXiv:2007.01282.
23. Xu, P.; Ping, W.; Wu, X.; McAfee, L.; Zhu, C.; Liu, Z.; Subramanian, S.; Bakhturina, E.; Shoeybi, M.; Catanzaro, B. Retrieval meets Long Context Large Language Models. arXiv 2024, arXiv:2310.03025.
24. Gao, L.; Ma, X.; Lin, J.; Callan, J. Precise Zero-Shot Dense Retrieval without Relevance Labels. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers); Rogers, A., Boyd-Graber, J., Okazaki, N., Eds.; Association for Computational Linguistics: Toronto, ON, Canada, 2023; pp. 1762–1777.
25. Asai, A.; Wu, Z.; Wang, Y.; Sil, A.; Hajishirzi, H. Self-RAG: Learning to Retrieve, Generate, and Critique through Self-Reflection. arXiv 2023, arXiv:2310.11511.
26. Yan, S.Q.; Gu, J.C.; Zhu, Y.; Ling, Z.H. Corrective Retrieval Augmented Generation. arXiv 2024, arXiv:2401.15884.
27. Kim, J.; Lee, B. AI-Augmented Surveys: Leveraging Large Language Models and Surveys for Opinion Prediction. arXiv 2024, arXiv:2305.09620.
28. Simmons, G.; Savinov, V. Assessing Generalization for Subpopulation Representative Modeling via In-Context Learning. arXiv 2024, arXiv:2402.07368.
29. Plaat, A.; Wong, A.; Verberne, S.; Broekens, J.; van Stein, N.; Back, T. Reasoning with Large Language Models, a Survey. arXiv 2024, arXiv:2407.11511.
30. Huang, J.; Chang, K.C.C. Towards Reasoning in Large Language Models: A Survey. arXiv 2023, arXiv:2212.10403.
31. Gao, Y.; Xiong, Y.; Gao, X.; Jia, K.; Pan, J.; Bi, Y.; Dai, Y.; Sun, J.; Wang, M.; Wang, H. Retrieval-Augmented Generation for Large Language Models: A Survey. arXiv 2024, arXiv:2312.10997.
32. Ram, O.; Levine, Y.; Dalmedigos, I.; Muhlgay, D.; Shashua, A.; Leyton-Brown, K.; Shoham, Y. In-Context Retrieval-Augmented Language Models. arXiv 2023, arXiv:2302.00083.
33. Shi, F.; Chen, X.; Misra, K.; Scales, N.; Dohan, D.; Chi, E.H.; Schärli, N.; Zhou, D. Large Language Models Can Be Easily Distracted by Irrelevant Context. In Proceedings of the 40th International Conference on Machine Learning, Honolulu, HI, USA, 23–29 July 2023; Krause, A., Brunskill, E., Cho, K., Engelhardt, B., Sabato, S., Scarlett, J., Eds.; PMLR: Birmingham, UK, 2023; Volume 202, pp. 31210–31227.
34. Liu, J.; Shen, D.; Zhang, Y.; Dolan, B.; Carin, L.; Chen, W. What Makes Good In-Context Examples for GPT-3? arXiv 2021, arXiv:2101.06804.
35. Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.
36. Chen, J.; Xiao, S.; Zhang, P.; Luo, K.; Lian, D.; Liu, Z. BGE M3-Embedding: Multi-Lingual, Multi-Functionality, Multi-Granularity Text Embeddings Through Self-Knowledge Distillation. arXiv 2024, arXiv:2402.03216.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).