Abstract

The rise of social media has transformed the landscape of news dissemination, presenting new challenges in combating the spread of fake news. This study addresses the automated detection of misinformation within written content, a task that has prompted extensive research efforts across various methodologies. We evaluate existing benchmarks, introduce a novel hybrid word embedding model, and implement a web framework for text classification. Our approach integrates traditional term frequency–inverse document frequency (TF–IDF) methods with sophisticated feature extraction techniques, considering linguistic, psychological, morphological, and grammatical aspects of the text. Through a series of experiments on diverse datasets, applying transfer and incremental learning techniques, we demonstrate the effectiveness of our hybrid model in surpassing benchmarks and outperforming alternative experimental setups. Furthermore, our findings emphasize the importance of dataset alignment and balance in transfer learning, as well as the utility of incremental learning in maintaining high detection performance while reducing runtime. This research offers promising avenues for further advancements in fake news detection methodologies, with implications for future research and development in this critical domain.

1. Introduction

In recent years, the proliferation of fake news facilitated by the internet and social media has emerged as a pressing societal concern, exerting influence on public opinion, political decisions, and even national security [1,2]. The escalating volume of online content underscores the urgent need for effective automated fake news detection, where machine learning plays a pivotal role. Despite the availability of numerous machine learning libraries and tools, selecting the optimal algorithm and fine-tuning parameters remains daunting, requiring extensive experimentation, evaluation, and a nuanced understanding of algorithmic principles [3]. Additionally, tasks such as dataset creation, preprocessing, and building machine learning classifiers to discern between fake and real news entail their own challenges and complexities. While several studies analyze different facets of the fake news ecosystem, our work focuses on two key aspects: the efficacy of preprocessing techniques and the evaluation of machine learning algorithms.

Our analysis of the existing literature on fake news detection using machine learning (ML) models reveals several underexplored research areas [4,5,6,7]. The first major gap concerns comparative studies of different ML approaches, specifically those using term frequency–inverse document frequency (TF–IDF), Empath, and a hybrid model that combines the two [4,5]. In addition to comparing different ML models, there is a gap in exploring transfer learning applications with the Bernoulli naive Bayes (BNB) classifier in the context of fake news detection [6,7]. Lastly, applying incremental learning with the multinomial naive Bayes (MNB) and BNB classifiers for fake news detection has not received adequate scholarly attention [6,7].

Hence, our research contributes novel insights in three main areas: First, we evaluate and compare the accuracy and performance of various machine learning algorithms. Second, we propose a novel hybrid word embedding technique that enhances news label prediction by integrating lexical, linguistic, and morphological features with vectorized text. Finally, we develop a web framework that facilitates a systematic and replicable approach for text classification, emphasizing dataset alignment and balance and the utility of transfer and incremental learning techniques.

To guide this research and ensure its focus and relevance, several research questions have been identified, each addressing a crucial aspect of the broader objective:

- What is the comparative effectiveness of different machine learning models and feature extraction methods in detecting fake news?

- What is the potential of transfer and incremental learning in improving the efficiency of fake news detection?

These research questions aim to address various technical issues related to using machine learning for fake news detection.

Our novel hybrid approach, which combines TF–IDF word vectorization and feature extraction, surpassed benchmarks by 4% (±0.5%), offering a potential state-of-the-art solution for future research in fake news detection. This study’s contributions lie in its multi-dataset approach, comprehensive performance evaluations, and open-source framework designed for continuous updates in the detection of misinformation.

The remainder of this paper is structured as follows: Section 2 reviews the related literature, Section 3 presents our methodology, Section 4 details the implementation, Section 5 discusses experimental results, and Section 6 offers concluding remarks.

Materials and Methods

During the preparation of this work, the author(s) used ChatGPT and Grammarly to edit and polish the writing of the final manuscript. After using these tools, the author(s) reviewed and edited the content as needed and take(s) full responsibility for the content of the publication.

2. Related Works

This section presents our findings from reviewing the existing literature on applying machine learning methods for detecting fake news.

2.1. Works on Comparing ML Models

Khan et al. [4] conducted a comprehensive benchmark study on fake news detection, evaluating various machine learning approaches on diverse datasets. They explore traditional machine learning and deep learning models, including advanced pre-trained language models like BERT, RoBERTa, and ELECTRA. Their study addresses the issue of dataset bias by assessing models on different types of news articles. In comparison, our work focuses on the automated detection of misinformation within written content through a novel hybrid word embedding model. We integrate traditional TF–IDF methods with advanced feature extraction techniques, considering the text’s linguistic, psychological, morphological, and grammatical aspects.

Ahmad et al. [8] proposed a machine learning ensemble approach for the automated classification of news articles. They conducted extensive experiments on four real-world datasets, including the ISOT fake news dataset, two datasets from Kaggle, and a combined dataset. The proposed framework involved training various machine learning algorithms, including logistic regression, support vector machine, multilayer perceptron, and k-nearest neighbors. Ensemble techniques such as bagging, boosting, and voting classifiers were employed to enhance classification accuracy further.

While both works explored a wide range of machine learning models on multiple datasets, our study emphasizes the effectiveness of the hybrid model in surpassing benchmarks and outperforming alternative experimental setups. Additionally, our work provides a systematic and replicable approach for text classification, emphasizing dataset alignment and balance and the utility of transfer and incremental learning techniques.

Albahr et al. [5] compared four machine learning algorithms using NLP techniques on a public dataset. Their study, while insightful, does not leverage a dedicated framework for comparing the performance of the models. This difference in approaches underscores the unique contribution of our work in offering a more structured and comprehensive assessment of machine learning models for fake news detection.

Barua et al. [9] employed an ensemble of advanced recurrent neural networks, specifically long short-term memory (LSTM) and gated recurrent unit (GRU) networks, to discern the veracity of news articles. To enhance accessibility and real-world applicability, the researchers implemented an Android application that can be used to authenticate news articles from various sources on the internet. Our work differs in several key aspects. We adopted a broader range of machine learning models, including some innovative techniques not explored in their study. Also, while their research relied on a single custom dataset and some standard ones, our work engaged with multiple datasets to mitigate potential biases and enhance the generalizability of our findings.

2.2. The Use of Naive Bayes Classifiers for Fake News Detection

Hassan et al. [6] provided a taxonomy for fake news detection approaches. Because the work does not include any experiments or results, it does not compare the performance of different machine learning models, nor does it discuss the impact of various feature extraction methods or the potential of transfer and incremental learning.

Mandical et al. [7] proposed a system for reliable fake news classification using machine learning algorithms, including multinomial naive Bayes, passive aggressive classifier, and deep neural networks. The system’s performance is assessed across eight datasets from diverse sources. The paper details the analysis and results of each model, highlighting the potential to simplify fake news detection with appropriately selected models and tools. In comparison to this work, our study provides a wider range of ML models. Additionally, they solely focused on investigating the impact of ML and deep learning models with TF–IDF, whereas we explore the effects of TF–IDF, Empath, and the hybrid approach. Furthermore, Mandical et al. did not delve into the analysis of transfer learning or incremental learning with any of these models.

2.3. Hybrid Word Embedding Techniques in Fake News Detection

The study by Nasir et al. [10] explored the challenges of fake news detection in the context of social media’s rapid information dissemination. The authors proposed a novel hybrid model that integrates convolutional and recurrent neural networks for effective fake news classification, demonstrating superior performance compared to non-hybrid methods across two prominent datasets (ISOT and FA-KES).

The research conducted by Ajao et al. [11] also addressed the dissemination of fake news on social media platforms, in particular the Twitter platform. Their proposed solution is based on combining both convolutional neural networks and long short-term memory networks to detect and classify this news. The testing of their hybrid framework resulted in achieving an accuracy of 82%. Perhaps the key finding of this approach was demonstrating the ability to intuitively identify relevant features in the fake news articles without prior domain knowledge.

Other interesting research includes human interaction merged with mainstream fake news detection mechanisms. Ruchansky et al. [12] proposed a model that considers three essential characteristics for a more accurate and automated prediction of fake news. The model considers the behavior of both users and articles and the group behavior of those who propagate fake news. Motivated by these three characteristics, the authors introduced the CSI model, which consists of three modules: capture, score, and integrate. Based on the response and text, the first module employs a recurrent neural network to capture the temporal pattern of user activity on a given article. The second module focuses on learning the source characteristic based on user behavior, and these two are combined in the third module to classify an article as fake or not. The results of experiments conducted on real-world data demonstrate that the CSI model achieves higher accuracy than existing models and extracts meaningful latent representations of users and articles.

As can be seen, numerous studies have used hybrid approaches in several steps of fake news detection. However, most of these studies focus on combining similar methodologies (e.g., merging two deep learning models like CNN and RNN), which can be computationally expensive and may require large labeled datasets. Furthermore, these approaches often do not address the evolving nature of misinformation or the challenges posed by dataset bias and scalability. In our work, we demonstrate the benefits of merging two distinct embedding techniques, text vectorization (e.g., TF–IDF, GloVe) and feature extraction (morphological, lexical, psychological), to create a more efficient and interpretable solution. By integrating transfer learning and incremental learning techniques, we also address the limitations of previous research in handling dynamic and large-scale datasets, ensuring that our model remains adaptive and scalable for real-world applications. This allows us to contribute a more comprehensive and robust framework that fills the existing gaps in the literature.

3. Methodology

This section outlines the methodology employed in this work, covering key steps from dataset selection to model evaluation. It begins with an overview of the chosen datasets, followed by a discussion of preprocessing techniques used to prepare the data. The section then outlines the feature extraction methods applied to transform the data for machine learning analysis. Subsequently, the chosen machine learning models and their characteristics are described, along with the exploration of transfer and incremental learning possibilities. Finally, the evaluation metrics utilized to assess model performance are explained, providing insights into their selection and interpretation.

3.1. Datasets

This section outlines the datasets utilized in the research. Each dataset offers unique characteristics and contributes to evaluating our novel approach and various machine learning algorithms. The criteria for dataset selection were based on the need for diversity in news sources, formats, and topics. The selected datasets represent a broad spectrum of media outlets and varying topics, allowing for robust testing of the model’s generalization capabilities. Our approach can be extended to other datasets by adjusting for linguistic and cultural contexts, making it applicable to a wide range of misinformation detection scenarios.

3.1.1. FA-KES: A Fake News Dataset around the Syrian War

The FA-KES dataset focuses on the Syrian conflict, presenting a departure from typical fake news datasets [13]. It comprises articles from diverse media outlets, covering various aspects of the conflict. It was generated using a semi-supervised fact-checking approach that combines human contributors and crowd-sourcing techniques to match articles against a ‘ground truth’ reference from the Syrian Violations Documentation Center. With 804 articles labeled as true or fake, this dataset is invaluable for training machine learning models in credibility assessment. Its construction method offers a generalized framework for creating fake news datasets in other conflict-related domains.

3.1.2. Simplified Version of the Fake and Real Dataset

A simplified, labeled version [14] of the fake and real dataset [15] is used. Around 10,000 articles are included in this dataset. This dataset will be referred to as the Merged dataset in the Experiment section.

3.1.3. The WELFake Dataset

The WELFake dataset, consisting of 72,134 news articles (35,028 real, 37,106 fake), merges four popular news datasets (Kaggle, McIntire, Reuters, BuzzFeed Political) to prevent classifier overfitting and provide more training data.

3.1.4. Datasets from Kaggle

We utilized three distinct datasets from Kaggle: The first dataset, comprising approximately 50,000 unique news articles, is meticulously scraped from various online sources [16]. It will be referred to as the Scraped dataset. The second dataset, from a Kaggle competition, contains 20,387 unique news articles with attributes including ‘title’, ‘author’, ‘text’, and ‘label’. It will be referred to as the Kaggle 1 dataset. The last dataset contains 3352 unique news articles, structured with attributes such as ‘URLs’, ‘Headline’, ‘Body’, and ‘Label’. The ‘Body’ section will be used for feature extraction. It will be referred to as the Kaggle 2 dataset.

3.1.5. Processed Datasets

To replicate real-world conditions for incremental learning, we prepared trimmed versions of the WELFake, Scraped, and Kaggle 1 datasets, each containing 3000 rows. We wrote a Python script that shuffles the original dataset and then extracts the first 3000 rows. The script also displays the counts of 1s and 0s in the resulting subset. If unsatisfied with the resulting dataset, users can rerun the script until a balanced dataset meeting their criteria is obtained. Additionally, the script preserves the original columns while creating a manageable subset. The resulting trimmed datasets are labeled as trimmed-WELFake, trimmed-Scraped, and trimmed-Kaggle1, respectively.
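The trimming procedure described above can be sketched as follows. This is a minimal illustration and not the script used in this work: the function name `trim_dataset`, the `label` column name, and the toy DataFrame are assumptions made for the example.

```python
import pandas as pd


def trim_dataset(df, n_rows=3000, label_col="label", seed=None):
    """Shuffle the rows, keep the first n_rows, and report the label balance.

    Hypothetical helper: the name, signature, and default column name are
    assumptions for illustration only.
    """
    # frac=1 returns every row in random order; all original columns are kept.
    shuffled = df.sample(frac=1, random_state=seed).reset_index(drop=True)
    trimmed = shuffled.head(n_rows)
    # Counts of 1s and 0s, so the user can decide whether to rerun.
    counts = trimmed[label_col].value_counts().to_dict()
    return trimmed, counts


# Toy stand-in for a full dataset (made-up rows, not real data).
full = pd.DataFrame({
    "title": [f"title {i}" for i in range(10)],
    "text": [f"article body {i}" for i in range(10)],
    "label": [0, 1] * 5,
})

trimmed, counts = trim_dataset(full, n_rows=6, seed=42)
print(counts)
```

Leaving `seed=None` gives a different shuffle on every run, which matches the rerun-until-balanced workflow; fixing the seed makes a chosen subset reproducible.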

These processed datasets will be utilized for initial model training. The remaining larger datasets serve as a testing ground for the model’s incremental learning capabilities, employing a naive Bayes classifier. This approach enables testing of the model’s scalability and adaptability in handling larger data volumes, simulating real-world conditions.

3.2. Preprocessing Techniques

The preprocessing stage in this work involves several crucial steps to refine raw datasets for model training and testing.

- Data Loading and Decoding: Initial steps include ingesting datasets of various formats to ensure compatibility and ease of use.

- Feature Extraction: Utilizing lexical features, the Empath tool conducts a comprehensive analysis of text for discernible linguistic patterns.

- Feature Selection: Employing a feature selection process, ANOVA F-value calculation is utilized for provided features, selecting attributes based on statistical significance to reduce dataset dimensionality.

- Data Cleaning and Preprocessing: Steps are taken to ensure dataset quality, including removing instances with missing values, excluding non-English text if necessary, and randomizing DataFrame rows. While non-English text exclusion was not necessary for this study, the framework supports it for multilingual datasets.

- Text Preprocessing: Crucial for natural language processing, this involves removing punctuation and stopwords, and word stemming to standardize text data, facilitating more effective machine learning.

- Additional Operations: Label assignment and selective column retention are conducted to streamline the dataset for machine learning algorithms, followed by merging the dataset and labels to form a unified structure.

- Text Vectorization: The final preprocessing stage transforms processed text into a numerical format suitable for machine learning algorithms. TF–IDF vectorization is employed, assigning each word a score based on how often it occurs in a document and how rare it is across the corpus, effectively transforming textual data into a mathematical representation.
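The feature selection step in the list above can be illustrated with scikit-learn's `SelectKBest` and `f_classif`, which rank features by their ANOVA F-value. The synthetic data, the choice of `k=2`, and the use of these particular classes are assumptions for this sketch; the source does not state how the selection is implemented.

```python
import numpy as np
from sklearn.feature_selection import SelectKBest, f_classif

rng = np.random.default_rng(0)
n_samples = 200
y = rng.integers(0, 2, size=n_samples)

# Column 0 tracks the label closely; columns 1-3 are pure noise.
informative = y + rng.normal(0.0, 0.3, size=n_samples)
noise = rng.normal(0.0, 1.0, size=(n_samples, 3))
X = np.column_stack([informative, noise])

# Keep the k features with the highest ANOVA F-value.
selector = SelectKBest(score_func=f_classif, k=2)
X_reduced = selector.fit_transform(X, y)

kept = selector.get_support(indices=True)
print("F-values:", selector.scores_)
print("kept feature indices:", kept)
```

The informative column receives by far the largest F-value and is therefore always retained, while the dimensionality drops from four features to two.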

The outcome of these steps, the vectorization result, is saved to a single pickle file, vectorizer.sav. Reusing this file keeps data preprocessing and feature extraction consistent and reproducible across the transfer and incremental learning experiments.
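A minimal sketch of the text preprocessing, vectorization, and persistence steps is given below. It assumes that vectorizer.sav holds the fitted scikit-learn `TfidfVectorizer` object, which the text does not state explicitly; the `clean` helper and the example sentences are hypothetical, and stemming is omitted to keep the example dependency-free.

```python
import pickle
import string
import tempfile
from pathlib import Path

from sklearn.feature_extraction.text import TfidfVectorizer


def clean(text):
    """Lowercase and strip punctuation (hypothetical helper; no stemming)."""
    return text.lower().translate(str.maketrans("", "", string.punctuation))


docs = [
    "Breaking: Officials confirm the report!",
    "You won't BELIEVE what officials are hiding...",
    "The report was published on Monday.",
]
cleaned = [clean(d) for d in docs]

# Stopword removal is delegated to the vectorizer's built-in English list.
vectorizer = TfidfVectorizer(stop_words="english")
X = vectorizer.fit_transform(cleaned)

# Persist the fitted vectorizer; a temporary directory is used here.
path = Path(tempfile.gettempdir()) / "vectorizer.sav"
with open(path, "wb") as fh:
    pickle.dump(vectorizer, fh)

# Later (e.g., before fine-tuning): reload and reuse the same vocabulary.
with open(path, "rb") as fh:
    restored = pickle.load(fh)

X_new = restored.transform([clean("Officials published a new report.")])
print(X.shape, X_new.shape)
```

Calling `transform` rather than `fit_transform` on the reloaded object keeps the feature columns identical to those seen during initial training, which is what allows a saved model to be updated later.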

3.3. The Novel Hybrid Approach

Our hybrid approach combines the strengths of TF–IDF and Empath to create a more robust set of features for text analysis. This section provides an overview of both techniques before detailing our approach.

3.3.1. TF–IDF

TF–IDF (term frequency–inverse document frequency) is a widely used text vectorization technique that assigns weights to words in a document based on their frequency in that document and their inverse frequency in the entire document corpus. It helps to understand the importance of a word in a document [17].

The term frequency (TF) is calculated as the number of times a word appears in a document divided by the total number of words in the document. The inverse document frequency (IDF) is calculated as the logarithm of the total number of documents divided by the number of documents containing the term. The TF–IDF value is the product of these two quantities:

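$$\text{TF-IDF}(t, d, D) = \text{TF}(t, d) \times \text{IDF}(t, D)$$

where:

$$\text{TF}(t, d) = \frac{f_{t,d}}{\sum_{t' \in d} f_{t',d}}$$

and

$$\text{IDF}(t, D) = \log \frac{N}{\left|\{d \in D : t \in d\}\right|}$$

Here, $f_{t,d}$ is the number of times term $t$ appears in document $d$, and $N = |D|$ is the total number of documents in the corpus $D$.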
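As a worked illustration of the definitions in this subsection, the following sketch computes TF, IDF, and their product directly in plain Python. The three-document corpus is made up, and the natural logarithm is an assumption, since the base of the logarithm is not specified in the text. Library implementations such as scikit-learn's `TfidfVectorizer` apply a smoothed IDF and row normalization by default, so their values differ from this textbook form.

```python
import math
from collections import Counter


def tf(term, doc_tokens):
    """Occurrences of term in the document divided by the document length."""
    return Counter(doc_tokens)[term] / len(doc_tokens)


def idf(term, corpus):
    """Log of (number of documents / number of documents containing term).

    Raises ZeroDivisionError if the term appears in no document.
    """
    n_containing = sum(1 for doc in corpus if term in doc)
    return math.log(len(corpus) / n_containing)


def tf_idf(term, doc_tokens, corpus):
    return tf(term, doc_tokens) * idf(term, corpus)


corpus = [
    "the senator denied the claim".split(),
    "the claim went viral".split(),
    "the weather was mild".split(),
]

# "the" occurs in all three documents, so IDF = log(3/3) = 0.
print(tf_idf("the", corpus[0], corpus))
# "senator" occurs once among five tokens and in one of three documents,
# so the score is (1/5) * ln(3).
print(tf_idf("senator", corpus[0], corpus))
```

The example shows why TF–IDF downweights ubiquitous words: a term that is frequent within a document but present in every document still receives a score of zero.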

3.3.2. Empath

Empath is a powerful text analysis library that extracts a variety of features from text, including over 200 categories of human-centric lexicon such as emotions, attitudes, values, demographics, physical states, and behaviors. It also recognizes morphological and grammatical features, capturing syntactic and semantic patterns in text [18].

3.3.3. The Hybrid Approach

Our hybrid approach integrates TF–IDF and Empath features to enhance text analysis. By combining the quantitative measures of TF–IDF with the rich semantic understanding provided by Empath, we aim to achieve more nuanced and accurate analysis of textual data. This hybridization allows us to leverage both the statistical properties of TF–IDF and the contextual insights of Empath, resulting in a more comprehensive approach to text analysis. Our process is outlined step-by-step below:

- Step 1: Calculate TF–IDF Vectors: Begin by analyzing a collection of documents. For each document, compute the TF–IDF score for every word. This process yields a vector representation for each document, with TF–IDF scores as elements.

- Step 2: Extract Empath Features: Utilize Empath to identify relevant features from the same set of documents. This step generates another vector representation for each document, with Empath feature values as elements.

- Step 3: Combine Vectors: Merge the TF–IDF vector and the Empath feature vector for each document. This fusion results in a hybrid vector containing both TF–IDF and Empath features.
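The three steps above can be sketched as follows. To keep the example self-contained, a tiny hand-made lexicon (`TOY_LEXICON`, hypothetical) stands in for Empath; with the actual library, Step 2 would presumably be a call such as `Empath().analyze(text, normalize=True)`, which returns per-category scores. The horizontal concatenation with `scipy.sparse.hstack` is likewise an assumption about how the vectors are merged.

```python
import numpy as np
from scipy.sparse import csr_matrix, hstack
from sklearn.feature_extraction.text import TfidfVectorizer

# Hypothetical stand-in for Empath categories (NOT the real Empath lexicon).
TOY_LEXICON = {
    "negative_emotion": {"shocking", "outrage", "terrible"},
    "government": {"senate", "officials", "minister"},
}


def lexicon_features(text):
    """Fraction of tokens that fall into each toy category."""
    tokens = text.lower().split()
    return [
        sum(tok in words for tok in tokens) / max(len(tokens), 1)
        for words in TOY_LEXICON.values()
    ]


docs = [
    "Shocking outrage as officials hide the truth",
    "The senate passed the budget on Tuesday",
    "Minister announces new transport plan",
]

# Step 1: TF-IDF vector per document (sparse matrix).
tfidf = TfidfVectorizer().fit_transform(docs)

# Step 2: lexicon-based feature vector per document.
lex = csr_matrix(np.array([lexicon_features(d) for d in docs]))

# Step 3: concatenate column-wise into one hybrid vector per document.
hybrid = hstack([tfidf, lex]).tocsr()
print(tfidf.shape, lex.shape, hybrid.shape)
```

Keeping the result sparse avoids materializing the full TF–IDF matrix in memory. Note that the two feature groups are on different scales (TF–IDF rows are L2-normalized by default), so rescaling the lexicon features may be worth considering depending on the classifier.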

3.4. Overview of Machine Learning Algorithms and Libraries

This section provides an overview of the machine learning algorithms and libraries used in our web framework, emphasizing their relevance to fake news detection. The source code primarily utilizes Python, leveraging well-established machine learning libraries.

Logistic Regression (LR) serves as a fundamental classification algorithm, often employed as a benchmark for binary classification tasks. Our implementation utilizes the LogisticRegression module from the sklearn library, allowing customization of parameters such as solver, C, penalty, and max_iter to optimize model performance.

Decision Trees (DT) offer a simple yet powerful method for classification tasks. Our project adopts the DecisionTreeClassifier from the sklearn library, allowing customization of parameters like criterion, min_samples_split, splitter, and max_depth for effective model tuning.

Nearest Neighbors: The k-nearest neighbors (KNN) algorithm is a versatile instance-based learning technique. We employ the KNeighborsClassifier module from sklearn, enabling parameter adjustments such as algorithm, n_neighbors, weights, and p to optimize model performance for fake news detection.

Gradient Boosting Classifiers (GBC) are robust ensemble learning algorithms. Our project harnesses the GradientBoostingClassifier from sklearn, facilitating customization of parameters like criterion, loss, learning_rate, max_depth, and min_samples_split for enhanced model effectiveness.

Naive Bayes Classifiers are probabilistic models widely used for text classification tasks, including fake news detection. These classifiers are based on Bayes’s Theorem, making probabilistic predictions about the class of a sample given its features. Specifically, we focus on two variants: multinomial naive Bayes (MNB) and Bernoulli naive Bayes (BNB), both available in the scikit-learn library.
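The classifiers described in this section can be instantiated as in the sketch below. The parameter values are arbitrary illustrations rather than the settings used in our experiments, the toy count matrix is synthetic, and parameters named in the text but not shown here (such as penalty and loss) are left at their library defaults.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.naive_bayes import BernoulliNB, MultinomialNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier

# Illustrative settings only; not the tuned values from the experiments.
models = {
    "LR": LogisticRegression(solver="liblinear", C=1.0, max_iter=200),
    "DT": DecisionTreeClassifier(criterion="gini", splitter="best",
                                 max_depth=5, min_samples_split=2),
    "KNN": KNeighborsClassifier(n_neighbors=3, weights="uniform",
                                algorithm="auto", p=2),
    "GBC": GradientBoostingClassifier(learning_rate=0.1, max_depth=3,
                                      min_samples_split=2),
    "MNB": MultinomialNB(),
    "BNB": BernoulliNB(),
}

# Synthetic non-negative "word count" features (MNB requires X >= 0).
rng = np.random.default_rng(0)
X = rng.integers(0, 5, size=(60, 8))
y = (X[:, 0] > X[:, 1]).astype(int)

# Fit every model and record its training accuracy.
scores = {name: model.fit(X, y).score(X, y) for name, model in models.items()}
for name, acc in scores.items():
    print(f"{name}: training accuracy = {acc:.2f}")
```

Because all six estimators share the same `fit`/`score` interface, a framework can loop over them uniformly, which simplifies side-by-side comparison.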

3.5. Customization of Naive Bayes Classifier

In this section, we delve into the customization of naive Bayes classifiers tailored specifically for fake news detection. We enable users to adjust three key parameters: ‘alpha’, ‘fit_prior’, and ‘class_prior’, offering flexibility and control over model performance.

The Value of ‘alpha’: ‘alpha’ is a smoothing parameter used to address zero probabilities and enhance model robustness, particularly crucial for handling unseen words in fake news detection tasks. By applying Laplace smoothing, we ensure non-zero probabilities for all words, mitigating overfitting and increasing model generalization.

The Impact of ‘fit_prior’: ‘fit_prior’ determines whether the model learns class prior probabilities from the data or uses a uniform prior. This parameter is essential for adapting to imbalanced class distributions, a common scenario in fake news datasets. Adjusting ‘fit_prior’ based on the dataset’s specific distribution optimizes the classifier’s predictive ability.

The Influence of ‘class_prior’: ‘class_prior’ allows users to specify prior probabilities of classes, offering flexibility in scenarios where domain knowledge diverges from the training set distribution. This parameter empowers users to incorporate external information about class distributions, enhancing the model’s adaptability and performance.

In summary, by customizing these parameters, we empower users to tailor the naive Bayes classifier to suit the unique challenges of fake news detection. This flexibility enables the development of more effective and reliable models, contributing to the ongoing efforts to combat misinformation.
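The effect of the three parameters can be seen on a toy example. The word-count matrix and the prior values below are made up for illustration; only the scikit-learn `MultinomialNB` parameters themselves come from the text.

```python
import numpy as np
from sklearn.naive_bayes import MultinomialNB

# Toy word-count matrix: three real (0) documents and one fake (1) document.
# Word 1 never occurs in class 0.
X = np.array([[2, 0, 1],
              [1, 0, 2],
              [3, 0, 0],
              [0, 2, 1]])
y = np.array([0, 0, 0, 1])

learned = MultinomialNB(alpha=1.0, fit_prior=True).fit(X, y)
uniform = MultinomialNB(alpha=1.0, fit_prior=False).fit(X, y)
custom = MultinomialNB(alpha=1.0, class_prior=[0.3, 0.7]).fit(X, y)

# fit_prior=True: priors follow the training distribution (0.75 vs 0.25).
print(np.exp(learned.class_log_prior_))
# fit_prior=False: uniform priors (0.5 vs 0.5).
print(np.exp(uniform.class_log_prior_))
# class_prior: user-supplied priors override the data (0.3 vs 0.7).
print(np.exp(custom.class_log_prior_))

# alpha=1 (Laplace smoothing): the unseen word still gets a non-zero
# probability in class 0, namely (0 + 1) / (9 + 3) = 1/12.
print(np.exp(learned.feature_log_prob_[0, 1]))
```

Without smoothing, a single word absent from one class would drive that class's likelihood to zero for any document containing it; the smoothed estimate avoids this.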

3.6. Enhancing Naive Bayes Models with Transfer Learning and Incremental Learning

Harnessing the power of transfer learning and incremental learning offers a promising avenue for improving the effectiveness of naive Bayes models in detecting fake news. These advanced learning techniques hold significant potential in tackling the evolving challenges of information dissemination, where the ability to adapt quickly to new patterns is crucial.

Transfer learning involves leveraging existing knowledge from one domain to expedite and refine learning in a related domain. In the realm of fake news detection, this could entail applying insights gleaned from general text classification to distinguish between genuine and counterfeit news, thus reducing the need for extensive labeled training data specific to fake news [19].

Conversely, incremental learning continuously updates the model with new data, facilitating real-time learning and adaptation. This approach is particularly effective for handling large-scale data or situations with changing data distributions, characteristics often observed in news and social media contexts [20]. By continuously adapting to new information, incremental learning ensures that the naive Bayes model remains effective against the evolving strategies employed in fake news generation.

To fully explore the potential of these learning paradigms in the context of naive Bayes-based fake news detection, it is essential to delve into the advantages and limitations of each and examine their unique contributions to addressing the contemporary challenge of fake news.

3.6.1. Transfer Learning

Transfer learning, a methodology widely used in machine learning, involves leveraging knowledge gained from one task or domain to solve another task or problem in a different domain [19]. It provides an efficient approach to model training, particularly when the target task lacks sufficient labeled data or computational resources, and time is limited.

The significance of transfer learning can be understood from the following perspectives:

- Efficiency in Learning: Transfer learning allows the utilization of pre-trained models, reducing the need to train models from scratch and enhancing the speed and efficiency of model training [21].

- Addressing Data Scarcity: Many real-world problems suffer from a scarcity of labeled data. Transfer learning leverages pre-trained models on large-scale datasets, enabling model fine-tuning with a smaller amount of labeled data from the target task [22].

- Enhancing Model Performance: Transfer learning can improve model performance, especially when the target task’s dataset is small or noisy [23].

To illustrate transfer learning, consider the analogy of developing a deep learning model to recognize a specific breed of cat, such as the Maine Coon. Starting from scratch would require collecting and labeling a large number of Maine Coon images and training a deep learning model, a time-consuming and challenging process. However, with transfer learning, a pre-trained model, already trained on a large-scale dataset like ImageNet, can be fine-tuned with a smaller dataset of Maine Coon images. This approach leverages the knowledge encapsulated in the pre-trained model, resulting in a high-performing Maine Coon recognition model with less labeled data and training time.

Applying this concept to fake news detection, the complex problem can benefit from knowledge learned in related tasks. For instance, a pre-trained model on a large-scale text corpus would have learned language nuances, grammar, and context, which can be transferred to a fake news detection model. By fine-tuning the model with a smaller labeled dataset of fake and real news, its ability to detect subtle cues and patterns indicative of fake news can be improved, leading to enhanced performance.

In this work, the naive Bayes models used to identify fake news support transfer learning through the partial_fit function. This functionality enables a previously trained model to adapt and refine its parameters in real time as data from a new dataset becomes available, accommodating the dynamic nature of news distribution.

3.6.2. Incremental Learning Overview

Incremental learning, also referred to as online learning or out-of-core learning, is a machine learning paradigm that progressively learns from data over time, updating the model with each new piece of data. This approach is particularly effective when dealing with large datasets that cannot be loaded into memory simultaneously [24]. Additionally, it proves advantageous in scenarios involving data streams where data is continuously generated [25].

Incremental learning differs from batch learning, where the model is trained on the entire dataset at once [26]. Instead, with incremental learning, the model can be trained and updated as new data arrives, making it more adaptable to changes in data distribution over time, a phenomenon known as concept drift.

In this research, both naive Bayes models utilized for fake news detection support incremental learning through the partial_fit function. This function allows the model to update its parameters on the fly as new labeled data becomes available, providing a real-time response to the evolving landscape of news dissemination [27].
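A minimal sketch of batch-wise updating with `partial_fit` is shown below. The synthetic count data and the batch size of 100 are assumptions for the example; the requirement to pass `classes` on the first call is part of the scikit-learn API.

```python
import numpy as np
from sklearn.naive_bayes import BernoulliNB, MultinomialNB

# Synthetic non-negative count features standing in for vectorized articles.
rng = np.random.default_rng(0)
X = rng.integers(0, 4, size=(300, 10))
y = (X[:, 0] + X[:, 1] > X[:, 2] + X[:, 3]).astype(int)
classes = np.array([0, 1])

models = {"MNB": MultinomialNB(), "BNB": BernoulliNB()}
batch_size = 100

for name, model in models.items():
    for start in range(0, len(X), batch_size):
        batch = slice(start, start + batch_size)
        # All class labels must be declared on the first call, because an
        # early batch might not contain every class.
        model.partial_fit(X[batch], y[batch], classes=classes)
    print(name, "samples seen per class:", model.class_count_)

# For comparison: one model trained on the full data in a single pass.
batch_mnb = MultinomialNB().fit(X, y)
print(np.allclose(models["MNB"].feature_count_, batch_mnb.feature_count_))
```

Because naive Bayes only accumulates per-class counts, feeding the data in batches yields the same sufficient statistics as a single pass over the full dataset, while only one batch needs to be held in memory at a time.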

Advantages of Incremental Learning: The following highlight the key advantages of incremental learning:

- Efficient Large-Data Handling: Incremental learning excels at processing vast datasets that exceed memory capacity constraints [28]. Learning iteratively from data in discrete segments effectively addresses challenges posed by large-scale data [24].

- Dynamic Adaptability: A primary advantage of incremental learning is its ability to adapt to changing data patterns over time [20]. This dynamic adaptability becomes particularly crucial when data distribution exhibits temporal variations, ensuring sustained model performance through continuous updates [29].

- Real-time Learning Capability: Incremental learning embodies the capacity to learn instantaneously from newly arriving data [20]. This real-time learning attribute is indispensable when models need to assimilate and respond to fresh information rapidly [25].

Limitations of Incremental Learning: Below, we discuss some of the limitations of incremental learning:

- Risk of Forgetting: A notable challenge in incremental learning is the risk of obliterating previously learned information [28]. This phenomenon may occur when newly introduced data significantly differs from or contradicts past data.

- The Stability–Plasticity Dilemma: Achieving a balance between integrating new information (plasticity) and preserving prior knowledge (stability) is critical in incremental learning [29]. Overemphasis on adapting to new data could lead to overfitting recent trends, while underemphasis may render the model inert to novel changes.

- Sensitivity to Noise: Incremental learning, by updating model parameters with every incoming data point, might exhibit heightened sensitivity to noisy or aberrant data points [30]. This sensitivity could potentially undermine model performance by overadjusting to the noise.

In essence, incremental learning is a delicate balance between accommodating new data and maintaining the integrity of previously acquired knowledge. Each new data point presents an opportunity for learning but also a risk of disruption. Therefore, successful incremental learning necessitates careful management of these conflicting pressures [25].

In this work, transfer learning and incremental learning will be implemented by saving a pre-trained model into a pickle file alongside the vectorization outputs, and then reloading these files for fine-tuning the model using the partial_fit function provided by scikit-learn.
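
The save, reload, and fine-tune cycle described above could look roughly like the following sketch. The toy corpora, the dictionary layout of the pickled object, and the use of an in-memory byte string instead of a file on disk are assumptions made for illustration; they are not the framework's actual code.

```python
import pickle

from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.naive_bayes import MultinomialNB

# Hypothetical pre-training corpus (0 = real, 1 = fake).
pretrain_texts = [
    "government announces new budget plan",
    "scientists publish peer reviewed climate study",
    "shocking miracle cure doctors hate",
    "celebrity secretly replaced by clone",
]
pretrain_labels = [0, 0, 1, 1]

# 1) Pre-train: fit the vectorizer and the classifier, then serialize both.
vectorizer = TfidfVectorizer()
X_pre = vectorizer.fit_transform(pretrain_texts)
model = MultinomialNB()
model.partial_fit(X_pre, pretrain_labels, classes=[0, 1])
blob = pickle.dumps({"vectorizer": vectorizer, "model": model})
# (pickle.dump(obj, open_file) would write the same bytes to a pickle file.)

# 2) Later: reload both objects and fine-tune on a slice of the target data.
saved = pickle.loads(blob)
vectorizer, model = saved["vectorizer"], saved["model"]
finetune_texts = [
    "miracle budget cure shocks government",
    "new study on climate plan",
]
finetune_labels = [1, 0]
# transform (not fit_transform) keeps the feature space the model was trained
# on; words unseen during pre-training are simply ignored.
X_ft = vectorizer.transform(finetune_texts)
model.partial_fit(X_ft, finetune_labels)

prediction = model.predict(vectorizer.transform(["shocking miracle cure"]))
print(prediction)
```

Reusing the saved vectorizer is the important design point: refitting it on the new data would change the column order and size, and the reloaded model's parameters would no longer line up with the features.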

3.6.3. Comparison: Transfer Learning vs. Incremental Learning

While both transfer learning and incremental learning aim to leverage prior knowledge, they employ distinct approaches and applications. Transfer learning entails applying knowledge acquired from one task to enhance learning in a related but distinct task. It serves as a strategy to improve learning efficiency or performance, particularly when there are similarities between the source and target tasks or domains.

Conversely, incremental learning focuses on adapting to new data over time within the same task. It serves as a method to handle large-scale or streaming data and cope with changes in data distribution. Unlike transfer learning, incremental learning does not necessarily involve learning from a different task or domain but instead emphasizes continual learning from an ever-growing dataset.

In the context of fake news detection, transfer learning may involve applying knowledge from a model trained on general text classification tasks to the specific task of classifying news as fake or real. In contrast, incremental learning for this task would entail updating the fake news detection model as new news articles become available, enabling the model to stay current with the latest trends and tactics used in fake news creation [31].


3.7. Evaluation Metrics

We evaluate the performance of our classifiers using common metrics: accuracy, precision, recall, and F1 score. These metrics offer insights into the effectiveness of our classification models. Accuracy measures overall correctness, precision assesses exactness, recall gauges completeness, and F1 score balances precision and recall. These metrics, derived from true positive (TP), true negative (TN), false positive (FP), and false negative (FN), provide a comprehensive evaluation of classifier performance.
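
For concreteness, the four metrics can be computed from the confusion-matrix counts as in the sketch below. The function name and the example counts are illustrative and do not correspond to any result reported in this work.

```python
def classification_metrics(tp, tn, fp, fn):
    """Compute accuracy, precision, recall, and F1 from confusion-matrix counts."""
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    # Precision: share of predicted positives that are truly positive.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    # Recall: share of actual positives that were found.
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1: harmonic mean of precision and recall.
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical counts for a 100-article test set.
metrics = classification_metrics(tp=40, tn=45, fp=5, fn=10)
print(metrics)
```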

4. Implementation

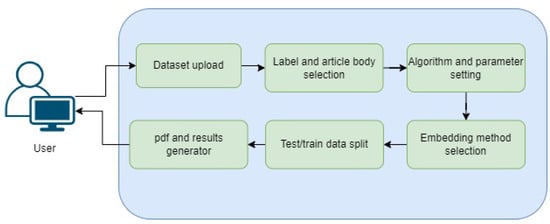

Figure 1 depicts our web framework’s architectural diagram. Designed to facilitate user-friendly and efficient machine learning prediction for selected datasets, the framework comprises predefined architectures complemented by frontend implementation for usability and technical efficiency. The framework’s workflow is straightforward:

Figure 1.

Overview of our fake news detection framework.

- Users upload their desired dataset.

- Users select algorithms and parameters for the classification process.

- Users specify the column needed for classification, filtering out unnecessary columns like date, URL, and ID.

- Users define the test and training data split.

- After classification performance is displayed, users can download the results and parameters.

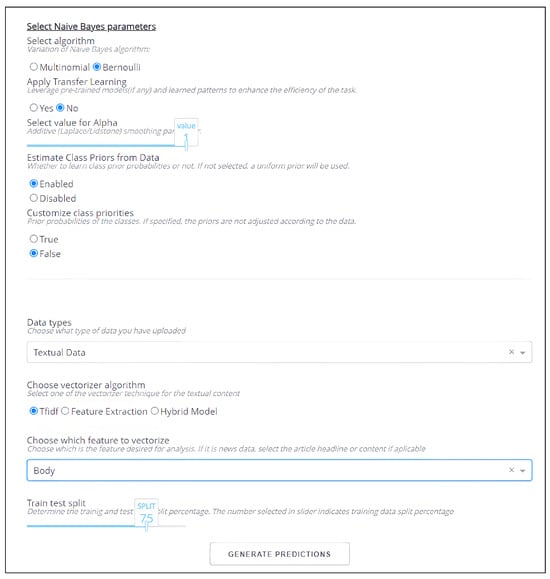

Figure 2 provides snapshots of the first page of our framework. The framework, constructed using Python’s Dash library 2.18.0 [32], offers an intuitive interface for building web-based analytical applications. Dash, often utilized alongside libraries like scikit-learn and TensorFlow, simplifies the creation of interactive visualizations and dashboards, facilitating the deployment and interaction of machine learning models via a web interface.

Figure 2.

The first page of our framework, in which users are prompted to select the database, the machine learning algorithms, and other parameters.

Appendix A, Appendix B, Appendix C and Appendix D contain selected excerpts and snapshots illustrating our framework’s internal implementation and output. Readers are encouraged to refer to the appendix for a more detailed view of our work. The source code and datasets utilized in this work will be made publicly available upon paper acceptance.

5. Experiments and Results

In this work, a total of 24 experiments were conducted to evaluate the performance of various machine learning (ML) models using different settings, datasets, and paradigms.

The first set of twelve experiments compared the performance of all six ML models across four distinct datasets, employing three feature extraction methods on each. The remaining 12 experiments focused on assessing the potential of transfer and incremental learning using multinomial and Bernoulli naive Bayes classifiers across various datasets.

It is crucial to remember that the overarching objective of these experiments is to highlight the significance of employing the hybrid feature extraction technique.

5.1. Experimental Setup

Each experiment will maintain a standardized setup to ensure a fair and consistent comparison across different models and feature extraction methods.

Dataset Selection: The Merged, Kaggle 1, Kaggle 2, and FA-KES datasets will be used for the first 12 experiments. Kaggle 2 and Trimmed-Kaggle 1 will be used to train the pre-trained model for transfer learning, and all the trimmed datasets will be used to train the pre-trained models for incremental learning. These pre-trained models will be tested on larger datasets, namely WELFake, Kaggle 1, and Scraped. The Kaggle 2 dataset and all the trimmed datasets will also be referred to as the “pre-training” datasets in the following paragraphs. For a complete description of these datasets and the corresponding references, please refer to Section 3.1.

Train–Test Split: The data within each dataset will undergo a train–test split, employing a 90-10 ratio for the initial 12 experiments. Additionally, 10-fold cross-validation will be executed, with the mean value of each metric calculated. In the subsequent 12 experiments, 95% of the pre-training datasets will be allocated for training the pre-trained models, while 15% of the testing datasets will be utilized for fine-tuning these models.
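
A 90-10 hold-out split and a 10-fold cross-validation with averaged metrics can be sketched with scikit-learn as follows. The toy corpus, the stratified split, the fixed random seed, and the choice to wrap the vectorizer and classifier in one pipeline are assumptions made for this illustration rather than details confirmed by the experimental setup.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_validate, train_test_split
from sklearn.pipeline import make_pipeline

# Hypothetical toy corpus: 20 "real" and 20 "fake" headlines (0 = real, 1 = fake).
real = [f"ministry publishes official report number {i}" for i in range(20)]
fake = [f"shocking secret cure exposed number {i}" for i in range(20)]
texts = real + fake
labels = [0] * 20 + [1] * 20

# 90-10 hold-out split; stratify keeps the class ratio equal in both parts.
X_train, X_test, y_train, y_test = train_test_split(
    texts, labels, test_size=0.10, stratify=labels, random_state=0
)

# Keeping the vectorizer inside the pipeline means it is refit on the
# training portion of every fold and never sees that fold's test articles.
pipeline = make_pipeline(TfidfVectorizer(), LogisticRegression())

metric_names = ["accuracy", "precision", "recall", "f1"]
scores = cross_validate(pipeline, texts, labels, cv=10, scoring=metric_names)

# Mean of each metric over the 10 folds.
mean_scores = {name: scores[f"test_{name}"].mean() for name in metric_names}
print(len(X_train), len(X_test), mean_scores)
```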

Feature Extraction: Three distinct feature extraction methods will be employed across the first twelve experiments, namely, TF–IDF, Empath, and the novel hybrid approach. The TF–IDF method will be exclusively utilized for the remaining experiments.
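
One plausible way to combine TF–IDF vectors with lexicon-based category scores is to concatenate the two feature blocks column-wise, as sketched below. This is only an assumption about the general shape of a hybrid representation: the `CATEGORIES` word lists are a hypothetical stand-in for a lexicon tool such as Empath, and `category_scores` is an illustrative helper, not the method implemented in our framework.

```python
from scipy.sparse import csr_matrix, hstack
from sklearn.feature_extraction.text import TfidfVectorizer

# Hypothetical lexicon: category name -> words that signal the category.
CATEGORIES = {
    "emotion": {"shocking", "outrage", "unbelievable"},
    "authority": {"official", "ministry", "report"},
}


def category_scores(text):
    """Return, per category, the fraction of tokens that belong to it."""
    tokens = text.lower().split()
    n_tokens = max(len(tokens), 1)  # avoid division by zero on empty text
    return [sum(tok in words for tok in tokens) / n_tokens
            for words in CATEGORIES.values()]


texts = [
    "shocking outrage over secret cure",
    "ministry publishes official report",
    "unbelievable claim about official figures",
]

# Block 1: sparse TF-IDF term weights.
tfidf = TfidfVectorizer()
X_tfidf = tfidf.fit_transform(texts)

# Block 2: dense lexicon scores, converted to sparse for concatenation.
X_lex = csr_matrix([category_scores(t) for t in texts])

# Hybrid matrix: one row per document, TF-IDF columns followed by lexicon columns.
X_hybrid = hstack([X_tfidf, X_lex]).tocsr()
print(X_hybrid.shape)
```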

Timing: For the first 12 experiments, the Runtime value indicates the time for vectorization and training the models, as we are also comparing the performance between different feature extraction techniques. In the remaining experiments, the Runtime value indicates the time to train (or pre-train and fine-tune) the models.

5.2. Comparing ML Models across Feature Extraction Methods

We explore three feature extraction techniques: TF–IDF, Empath, and our innovative hybrid approach, each paired with six models. Our findings reveal that models utilizing TF–IDF and the hybrid method consistently outperform others (see Table 1 for results on the Kaggle 1 dataset). Notably, logistic regression shines with an impressive accuracy of 0.96.

Table 1. Results of testing six ML models using different feature extraction methods on Kaggle 1 dataset.

Regarding efficiency, the gradient boosting model with the hybrid approach exhibits the longest runtime, clocking in at 256.1 s. Conversely, multinomial naive Bayes (MNB) and Bernoulli naive Bayes (BNB) models leveraging TF–IDF demonstrate the shortest runtimes, both averaging around 11 s.

Furthermore, MNB consistently outperforms BNB across TF–IDF and the hybrid approach, showcasing higher accuracy alongside shorter runtimes.

Like the results from the Kaggle 1 dataset, all models tend to perform better with TF–IDF and the hybrid approach, especially the novel hybrid approach, which yields 99% and 98% accuracy for gradient boosting and logistic regression (see Table 2 for results on the Kaggle 2 dataset).

Table 2. Results of testing six ML models using different feature extraction methods on Kaggle 2 dataset.

Regarding runtime, MNB and BNB remain the fastest of the six models. This time, however, BNB outperforms MNB on all four metrics, although MNB is still the faster of the two.

All models perform exceptionally well with TF–IDF, particularly decision trees and gradient boosting, achieving near-perfect accuracy and F1 scores (see Table 3 for results on the Merged dataset). This indicates that almost all predictions are correct. However, KNN lags slightly behind, with accuracy and F1 scores around 0.83. This could be attributed to KNN’s less effective handling of high-dimensional features. BNB and MNB also exhibit strong performance with accuracy and F1 scores in the 0.93–0.94 range.

Table 3. Results of testing six ML models using different feature extraction methods on Merged dataset.

Except for KNN, all models perform exceedingly well with the hybrid method. Decision trees and gradient boosting models achieve near-perfect accuracy and F1 scores. Logistic regression, BNB, and MNB also show strong performance, with accuracy and F1 scores in the 0.94–0.98 range. Yet, as with the TF–IDF results, KNN is slightly weaker, with accuracy and F1 scores around 0.87.

Meanwhile, Empath is outperformed by the other two feature extraction techniques as in the previous six experiments.

In terms of runtime, gradient boosting takes the longest in all scenarios, particularly with the hybrid feature extraction method, requiring nearly 400 s. This is likely due to its iterative nature, requiring more time to improve its predictions gradually. Conversely, BNB and MNB consistently take the least time across all scenarios, possibly due to their simplicity as models based on naive Bayes theory, with lower computational demands.

The performance of the models generally declines on the FA-KES dataset, which is smaller in size compared to the first two datasets (see Table 4 for results on the FA-KES dataset). Regardless of the feature extraction method used, all models’ accuracy lies between 0.45 and 0.57, significantly lower than on the first two datasets. This might be due to the smaller dataset size, making it harder for the models to learn sufficient patterns for accurate prediction. That is one major reason to consider these results unreliable [17].

Table 4. Results of testing six ML models using different feature extraction methods on FA-KES dataset.

Another point worth noting here is that the MNB model achieved 100% recall with TF–IDF and Empath, meaning that the model recognizes all the news articles as fake. The same happened with LR using Empath. This can also be attributed to the lack of data.
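
To see why perfect recall alone is not a sign of a good classifier, consider a model that labels every article as fake. The sketch below uses a made-up test set of 20 articles (11 fake, 9 real); the numbers are illustrative and are not taken from Table 4.

```python
def evaluate(y_true, y_pred, positive=1):
    """Return (accuracy, precision, recall) for the given positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return accuracy, precision, recall


# Hypothetical test set: 11 fake (1) and 9 real (0) articles.
y_true = [1] * 11 + [0] * 9
# Degenerate classifier: every article is predicted to be fake.
y_pred = [1] * len(y_true)

accuracy, precision, recall = evaluate(y_true, y_pred)
print(accuracy, precision, recall)
```

No fake article is missed, so recall is 1.0, yet accuracy and precision both equal the share of fake articles in the test set (0.55 here), which is no better than always guessing the majority class.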

Overall Analysis

Overall, it is evident that logistic regression consistently achieves the highest accuracy across all three datasets, positioning it as the most reliable model for prediction. When time efficiency is a priority, the multinomial naive Bayes (MNB) and Bernoulli naive Bayes (BNB) models stand out, clocking in the shortest runtime.

In terms of feature extraction methods, the hybrid approach invariably enhances all models’ performance. However, this comes with the trade-off of a considerably longer runtime. The TF–IDF method, on the other hand, provides a favorable balance between performance and speed, yielding respectable accuracy in a relatively short time.

An interesting dynamic is seen between MNB and BNB models. MNB shows superior performance when dealing with larger datasets, while BNB takes the lead in the context of smaller data volumes; ref. [33] demonstrates the importance of choosing the right model based on the specific characteristics of the dataset.

5.3. Results and Discussion on Transfer Learning

5.3.1. Pre-Training on Kaggle 2 for Testing on Kaggle 1

In Table 5 and Table 6, we show the results we obtained from training our models on the Kaggle 2 database and then testing on the Kaggle 1 database. It is worth noting that the time taken for training the pre-trained model was 0.0083 s (BNB) and 0.0059 s (MNB). While both models exhibited a minor dip in accuracy, fine-tuning the pre-trained models proved significantly more time-efficient than training a new model from the ground up. Specifically, the Bernoulli naive Bayes (BNB) model was seven times faster, while the multinomial naive Bayes (MNB) model was six times faster. This represents a considerable advantage, underscoring the potential benefits of leveraging pre-trained models in terms of computational efficiency [22].

Table 5. Results of Kaggle 1 dataset without transfer learning.

Table 6. Results of Kaggle 1 dataset with transfer learning.

In order to demonstrate the significance of transfer learning, we conducted a one-time experiment wherein we trained a model and saved it to a pickle file. We then directly applied this pre-trained model to a new dataset without utilizing transfer learning (Table 7).

Table 7. Results of applying Kaggle 2 model on Kaggle 1 without transfer learning.

From these results, it can be noted that the performance of the models was notably diminished when applied to a new dataset without transfer learning. These results underpin the importance of transfer learning when applying pre-trained models to new datasets, highlighting its role in maintaining and potentially improving model performance.

It is important to note that this process was carried out only once for illustrative purposes and will not be repeated for each experiment in the project. The key takeaway from this experiment is the crucial role of transfer learning in ensuring the generalization capability of machine learning models.

5.3.2. Pre-Training on Kaggle 2 for Testing on FA-KES

In Table 8 and Table 9, we show the results we obtained from training our models on the Kaggle 2 database and then testing on the FA-KES dataset.

Table 8. Results of FA-KES dataset without transfer learning.

Table 9. Results of FA-KES dataset with transfer learning.

From the results tables, it is clear that the application of transfer learning to the FA-KES dataset did not lead to any significant improvements. Notably, the recall for the MNB model remains at 1 in both scenarios, indicating that the model has classified every news article in the dataset as fake. The previous experiment also observed this phenomenon, leading to concerns about the model’s validity. As such, these results have been deemed unreliable, prompting us to disregard them in our analysis.

5.3.3. Pre-Training on Trimmed-Kaggle 1 for Testing on Kaggle 2

In Table 10 and Table 11, we show the results we obtained from training our models on the Trimmed-Kaggle 1 dataset and then testing on the Kaggle 2 dataset. It is worth noting that the time taken for training the pre-trained model was 0.014 s (BNB) and 0.006 s (MNB).

Table 10. Results of Kaggle 2 dataset without transfer learning.

Table 11. Results of Kaggle 2 dataset with transfer learning.

Based on the results we have obtained, the application of transfer learning on the Kaggle 2 dataset has actually decreased model performance rather than enhancing it. This significant reduction in performance indicates that the transfer learning experiment was not successful in this context. It is possible that the pre-trained model did not align well with the Kaggle 2 dataset. Therefore, this experiment can be deemed unsuccessful, highlighting the importance of ensuring compatibility between the pre-training and target tasks in a transfer learning scenario [23].

5.3.4. Overall Analysis

The results from these experiments demonstrated that while transfer learning significantly enhances computational efficiency, its effect on model performance varies with the dataset used. In our case, fine-tuning for the Kaggle 1 dataset cut runtime at the cost of only a minor dip in accuracy, whereas the application of transfer learning led to a marked reduction in performance for the Kaggle 2 dataset and yielded unreliable results on the FA-KES dataset.

These findings highlight the importance of dataset compatibility in transfer learning applications. It also underscores the need to consider the specific characteristics of the datasets when designing machine learning systems, as the success of transfer learning appears to be highly dependent on the similarity between the pre-training and target tasks.

5.4. Results and Discussion on Incremental Learning

In this section, we delve into the results of three experiments centered around incremental learning.

5.4.1. Pre-Training on Trimmed-Kaggle 1 for Testing on Kaggle 1

In Table 12 and Table 13, we present the results obtained with and without incremental learning. Notably, the pre-trained model required 0.014 s for training in the case of BNB and 0.006 s for MNB.

Table 12. Results of Kaggle 1 dataset without incremental learning.

Table 13. Results of Kaggle 1 dataset with incremental learning.

Both models utilizing incremental learning techniques exhibited superior performance compared to those trained from scratch. Despite requiring a similar time investment for fine-tuning as transfer learning, they demonstrated significantly higher accuracy. This improvement can be attributed to the alignment between the pre-training dataset and the final testing dataset. Leveraging this alignment allowed the models to more effectively learn and generalize, resulting in enhanced accuracy. This observation is supported by the findings of Schlimmer (1986) [30].

5.4.2. Pre-Training on Trimmed-WELFake for Testing on WELFake

In Table 14 and Table 15, we present the results obtained with and without incremental learning. Notably, the pre-trained model required 0.009 s for training in the case of BNB and 0.004 s for MNB.

Table 14. Results of WELFake dataset without incremental learning.

Table 15. Results of WELFake dataset with incremental learning.

The results demonstrate that implementing incremental learning on the WELFake dataset with both the BNB and MNB models leads to a decrease in runtime without a substantial compromise in performance. Prior to incremental learning, the BNB and MNB models achieved accuracy scores of 0.90 and 0.86, respectively, but incurred relatively higher runtimes of 0.14 s and 0.06 s.

Upon implementing incremental learning, there was a slight reduction in accuracy, yet the runtime dropped to 0.028 s and 0.01 s, respectively. This underscores the advantage of incremental learning in enhancing computational efficiency, which is particularly valuable when computational resources or time are constrained.

5.4.3. Pre-Training on Trimmed-Scraped for Testing on Scraped

In Table 16 and Table 17, we present the results obtained with and without incremental learning. Notably, the pre-trained model required 0.008 s for training in the case of BNB and 0.003 s for MNB.

Table 16. Results of Scraped dataset without incremental learning.

Table 17. Results of Scraped dataset with incremental learning.

The experimentation conducted with the Scraped dataset yielded mixed outcomes upon the implementation of incremental learning. While there was a notable improvement in runtime for both the BNB and MNB models, the performance metrics displayed significant fluctuations.

To elaborate, for the Bernoulli naive Bayes (BNB) model, accuracy rose from 0.77 to 0.82 while runtime fell from 0.035 s to 0.0114 s. Although these changes are relatively minor, they suggest that incremental learning may offer advantages for this specific model.

Conversely, the multinomial naive Bayes (MNB) model experienced a substantial decrease in performance, with accuracy plummeting from 0.76 to 0.58. This emphasizes that the benefits of incremental learning may not be consistent across all models or datasets.

The discrepancy in performance is further underscored by the MNB model’s high precision but low recall score post incremental learning. This suggests that while the model correctly identifies positive instances (high precision), it fails to identify all positive instances (low recall), particularly regarding the classification of fake news. This inclination toward conservative labeling, where news articles are labeled as false only when the model is highly certain, may contribute to the observed decrease in accuracy.

One plausible explanation for these findings is rooted in the essence of incremental learning. This approach operates under the assumption that the model receives regular updates with new data. However, if the incoming data deviates from the overall distribution of the dataset or introduces novel concepts unfamiliar to the model, classification accuracy may suffer.

This observation aligns with the Scraped dataset’s characteristics, comprising news articles sourced from diverse web sources through web crawlers. Given this diversity, it is conceivable that the incoming data may not align closely with the distribution or features of the initial data used for model pre-training.

The overall analysis of the experiments with incremental learning highlights its potential to enhance computational efficiency, evidenced by reduced runtimes across all cases. However, its impact on model performance varied depending on the dataset and the model under consideration.

Incremental learning yielded improved accuracy for both models when tested on the Kaggle 1 dataset, attributed to the robust alignment between the pre-training and final testing datasets. Conversely, its application to the WELFake dataset led to a slight decrease in performance despite the observed enhancement in runtime efficiency.

Of particular interest is the disparate impact of incremental learning on the two models when applied to the Scraped dataset. While the Bernoulli naive Bayes (BNB) model showed marginal accuracy improvements, the multinomial naive Bayes (MNB) model experienced a notable decline in performance. This discrepancy could be attributed to inconsistencies between the data used for pre-training the model and the subsequent fine-tuning data.

These findings underscore that the benefits of incremental learning are not universally applicable and necessitate careful evaluation based on the specific characteristics of the models and datasets involved. Particularly for diverse datasets like Scraped, the advantages of incremental learning may be constrained due to the heterogeneous nature of incoming data.

6. Conclusions

In this study, we investigated various machine learning approaches for detecting fake news articles, leveraging diverse datasets from open platforms. We conducted evaluation experiments on six English news datasets, assessing performance using metrics including accuracy, precision, recall, and F1 score.

A significant focus was placed on identifying optimal machine learning algorithm parameters and word embedding techniques tailored to specific datasets. Our novel hybrid approach, which combines TF–IDF word vectorization and feature extraction, showed promising results, surpassing benchmarks by 4% (±0.5%) and representing a potential state-of-the-art method for future fake news detection studies.

Moreover, our experiments revealed that combining word embeddings such as TF–IDF and CountVectorizer with the logistic regression algorithm yielded superior performance across multiple metrics, while also offering faster processing times.

Our study highlights the importance of cross-dataset evaluation to assess method robustness and identify suitable algorithms based on dataset characteristics.

Lastly, we developed a web application framework to streamline evaluation metric generation and reporting for uploaded datasets. This open-source tool allows users to specify algorithm parameters, word embedding methods, and data splits for classification, enhancing accessibility to machine learning tools for fake news detection.

As part of future work, we plan to broaden the dataset scope, refine classification strategies, and address computational limitations to further enhance the robustness and scalability of the proposed solution.

Limitations and Future Directions

Despite the contributions this work made to our understanding of fake news detection, there are several limitations to note as follows:

- Language Limitations: Currently, the framework primarily supports datasets in the English language. Its functionality and performance may not translate well to datasets in other languages due to differences in linguistic structures, syntax, semantics, and cultural contexts.

- Binary Classification: At present, the framework operates on a binary classification model, differentiating only between ‘fake’ and ‘not-fake’ news articles. This approach oversimplifies the multifaceted nature of misinformation, which exists on a spectrum and includes elements like satire, misinformation, disinformation, and propaganda.

- Lack of Dataset Diversity: The datasets used in this work may not represent the full diversity and complexity of fake news. Fake news varies by source, topic, and stylistic features. If the training data does not reflect this variety, the models’ generalizability could be impacted.

- Runtime and Computational Resources: Despite improvements, the computational resources required for the novel hybrid approach when applied to large datasets may still be significant. This can limit the accessibility and scalability of the framework, particularly for users with less powerful computational resources.

Moving forward, there are several promising directions for enhancing the framework’s capabilities and addressing the current limitations:

- Refining Transfer Learning: The potential of transfer learning demonstrated in this work, despite some setbacks, suggests that it remains a promising avenue for further investigation. Future research could involve implementing transfer learning with other machine learning models and integrating transfer learning with the novel hybrid approach.

- Refining Incremental Learning: Our results suggest that incremental learning can reduce runtime with a slight trade-off in performance. Future work could involve combining this approach with the novel hybrid feature extraction method, potentially enhancing performance while maintaining efficiency.

- Addition of New ML Models and Deep Learning Models: The framework’s functionality could be broadened further by incorporating a wider array of machine learning models. The addition of deep learning models, in particular, could offer enhanced capabilities, given their well-documented efficacy in identifying intricate patterns within large datasets.

Author Contributions

Conceptualization, B.C., H.A. and F.M.; methodology, B.C. and F.M.; software, B.C. and K.W.; validation, B.C., F.M. and K.W.; data curation, B.C.; writing—original draft preparation, F.M., B.C. and K.W.; writing—review and editing, F.M., H.A. and D.K.; supervision, F.M. and H.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data and code presented in this study are available at https://github.com/fmohsen/Fake-news-res.

Acknowledgments

The authors would like to acknowledge the use of ChatGPT GPT-4o mini and Grammarly to edit and polish the writing of the final manuscript.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Output

Figure A1. Snapshot of a PDF report produced by our framework.

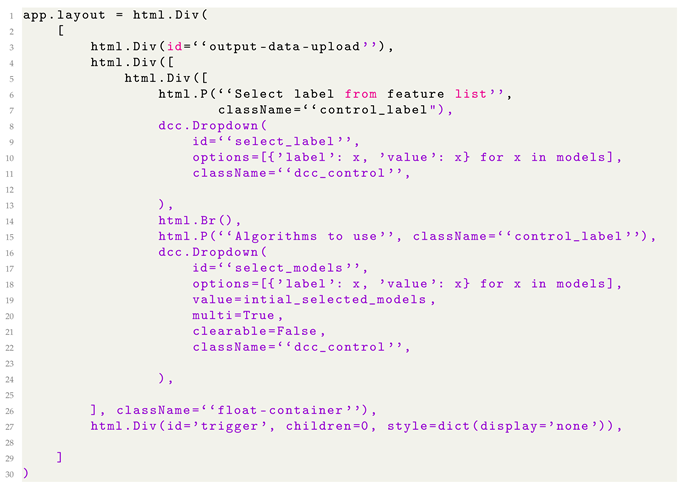

Appendix B. Application of Main Code Listings and Callbacks

Listing A1. Example of implementing the Dash library in Python.

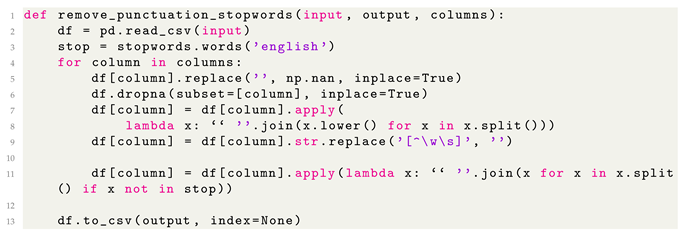

Appendix C. Data Preprocessing and Word Embedding Listings

Listing A2. Internal implementation for stopword and punctuation removal.

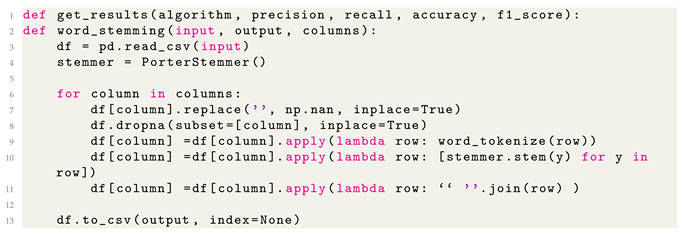

Listing A3. Stemming and tokenization method.

Appendix D. Feature Extraction and Selection Implementation

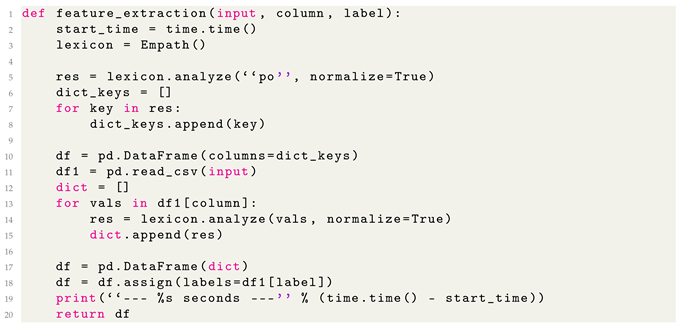

Listing A4. Feature extraction method from input dataset.

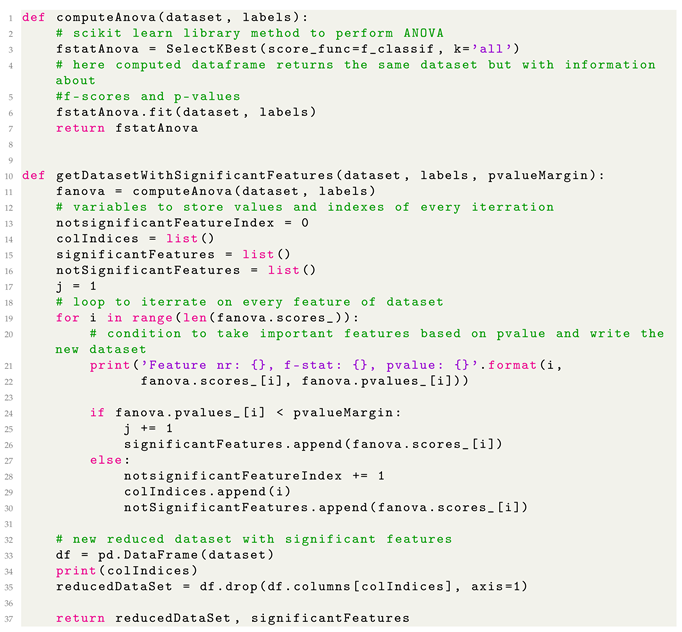

Listing A5. Methods for ANOVA computation and selection of the most significant features.

References

- Vosoughi, S.; Roy, D.; Aral, S. The spread of true and false news online. Science 2018, 359, 1146–1151. [Google Scholar] [CrossRef] [PubMed]

- Zhang, X.; Ghorbani, A.A. An overview of online fake news: Characterization, detection, and discussion. Inf. Process. Manag. 2020, 57, 102025. [Google Scholar] [CrossRef]

- Manzoor, S.I.; Singla, J. Fake news detection using machine learning approaches: A systematic review. In Proceedings of the 2019 3rd international conference on trends in electronics and informatics (ICOEI), Tirunelveli, India, 23–25 April 2019; IEEE: New York, NY, USA, 2019; pp. 230–234. [Google Scholar]

- Khan, J.Y.; Khondaker, M.T.I.; Afroz, S.; Uddin, G.; Iqbal, A. A benchmark study of machine learning models for online fake news detection. Mach. Learn. Appl. 2021, 4, 100032. [Google Scholar] [CrossRef]

- Albahr, A.; Albahar, M. An empirical comparison of fake news detection using different machine learning algorithms. Int. J. Adv. Comput. Sci. Appl. 2020, 11, 146–152. [Google Scholar] [CrossRef]

- Hassan, E.A.; Meziane, F. A Survey on Automatic Fake News Identification Techniques for Online and Socially Produced Data. In Proceedings of the 2019 International Conference on Computer, Control, Electrical, and Electronics Engineering (ICCCEEE), Khartoum, Sudan, 21–23 September 2019; pp. 1–6. [Google Scholar] [CrossRef]

- Mandical, R.R.; Mamatha, N.; Shivakumar, N.; Monica, R.; Krishna, A.N. Identification of Fake News Using Machine Learning. In Proceedings of the 2020 IEEE International Conference on Electronics, Computing and Communication Technologies (CONECCT), Bangalore, India, 2–4 July 2020; IEEE: New York, NY, USA, 2020; pp. 1–6. [Google Scholar] [CrossRef]

- Ahmad, I.; Yousaf, M.; Yousaf, S.; Ahmad, M.O. Fake News Detection Using Machine Learning Ensemble Methods. Complexity 2020, 2020, 8885861. [Google Scholar] [CrossRef]

- Barua, R.; Maity, R.; Minj, D.; Barua, T.; Layek, A.K. F-NAD: An Application for Fake News Article Detection using Machine Learning Techniques. In Proceedings of the 2019 IEEE Bombay Section Signature Conference (IBSSC), Mumbai, India, 26–28 July 2019; IEEE: New York, NY, USA, 2019; pp. 1–6. [Google Scholar] [CrossRef]

- Nasir, J.A.; Khan, O.S.; Varlamis, I. Fake news detection: A hybrid CNN-RNN based deep learning approach. Int. J. Inf. Manag. Data Insights 2021, 1, 100007. [Google Scholar] [CrossRef]

- Ajao, O.; Bhowmik, D.; Zargari, S. Fake News Identification on Twitter with Hybrid CNN and RNN Models. In Proceedings of the 9th International Conference on Social Media and Society, Copenhagen, Denmark, 18–20 July2018; ACM: New York, NY, USA, 2018. SMSociety ‘18. pp. 226–230. [Google Scholar] [CrossRef]

- Ruchansky, N.; Seo, S.; Liu, Y. CSI: A Hybrid Deep Model for Fake News Detection. In Proceedings of the 2017 ACM on Conference on Information and Knowledge Management, Singapore, 6–10 November 2017; ACM: New York, NY, USA, 2017. CIKM ‘17. pp. 797–806. [Google Scholar] [CrossRef]

- Abu Salem, F.K.; Al Feel, R.; Elbassuoni, S.; Jaber, M.; Farah, M. FA-KES: A Fake News Dataset around the Syrian War. In Proceedings of the International AAAI Conference on Web and Social Media, Munich, Germany, 11–14 June 2019; Volume 13, pp. 573–582.

- AMI. Simplified Fake News Dataset. Available online: https://www.kaggle.com/datasets/fanbyprinciple/simplified-fake-news-dataset?select=test.csv (accessed on 20 May 2023).

- Bisaillon, C. Fake and Real News. Available online: https://www.kaggle.com/datasets/clmentbisaillon/fake-and-real-news-dataset (accessed on 5 July 2023).

- Singh, S.V. Fake News Scraped. Available online: https://www.kaggle.com/datasets/shashankvikramsingh/fake-news-scraped (accessed on 7 July 2023).

- Joachims, T. A Probabilistic Analysis of the Rocchio Algorithm with TFIDF for Text Categorization. In Proceedings of the Fourteenth International Conference on Machine Learning, San Francisco, CA, USA, 8–12 July 1997; ACM: San Francisco, CA, USA, 1997. ICML ’97. pp. 143–151.

- Fast, E.; Chen, B.; Bernstein, M.S. Empath: Understanding Topic Signals in Large-Scale Text. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, San Jose, CA, USA, 7–12 May 2016; ACM: New York, NY, USA, 2016. CHI ’16. pp. 4647–4657.

- Weiss, K.; Khoshgoftaar, T.M.; Wang, D. A survey of transfer learning. J. Big Data 2016, 3, 9.

- Joshi, P.; Kulkarni, P. Incremental learning: Areas and methods—A survey. Int. J. Data Min. Knowl. Manag. Process 2012, 2, 43.

- Pan, S.J.; Yang, Q. A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 2010, 22, 1345–1359.

- Rosenstein, M.T.; Marx, Z.; Kaelbling, L.P.; Dietterich, T.G. To transfer or not to transfer. In Proceedings of the NIPS 2005 Workshop on Transfer Learning, Vancouver, BC, Canada, 5–8 December 2005; Semantic Scholar. Volume 898.

- Agarwal, N.; Sondhi, A.; Chopra, K.; Singh, G. Transfer learning: Survey and classification. In Smart Innovations in Communication and Computational Sciences: Proceedings of ICSICCS 2020; Tiwari, S., Trivedi, M., Kumar, K., Misra, A.K., Kumar, K., Suryani, E., Eds.; Dr. RML Avadh University: Faizabad, India, 2021; pp. 145–155.

- Ade, R.; Deshmukh, P. Methods for incremental learning: A survey. Int. J. Data Min. Knowl. Manag. Process 2013, 3, 119.

- Giraud-Carrier, C. A note on the utility of incremental learning. AI Commun. 2000, 13, 215–223.

- van de Ven, G.M.; Tuytelaars, T.; Tolias, A.S. Three types of incremental learning. Nat. Mach. Intell. 2022, 4, 1185–1197.

- Roure Alcobé, J. Incremental Learning of Tree Augmented Naive Bayes Classifiers. In Proceedings of the Advances in Artificial Intelligence—IBERAMIA 2002, Seville, Spain, 12–15 November 2002; Garijo, F.J., Riquelme, J.C., Toro, M., Eds.; Springer: Berlin/Heidelberg, Germany, 2002; pp. 32–41.

- Yang, Q.; Gu, Y.; Wu, D. Survey of incremental learning. In Proceedings of the 2019 Chinese Control And Decision Conference (CCDC), Nanchang, China, 3–5 June 2019; IEEE: New York, NY, USA, 2019; pp. 399–404.

- Zhao, B.; Xiao, X.; Gan, G.; Zhang, B.; Xia, S.T. Maintaining Discrimination and Fairness in Class Incremental Learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; IEEE: New Orleans, LA, USA, 2020.

- Schlimmer, J.C.; Granger, R.H. Incremental learning from noisy data. Mach. Learn. 1986, 1, 317–354.

- You, C.; Xiang, J.; Su, K.; Zhang, X.; Dong, S.; Onofrey, J.; Staib, L.; Duncan, J.S. Incremental Learning Meets Transfer Learning: Application to Multi-site Prostate MRI Segmentation. In Proceedings of the Distributed, Collaborative, and Federated Learning, and Affordable AI and Healthcare for Resource Diverse Global Health; Albarqouni, S., Bakas, S., Bano, S., Cardoso, M.J., Khanal, B., Landman, B., Li, X., Qin, C., Rekik, I., Rieke, N., et al., Eds.; Springer: Cham, Switzerland, 2022; pp. 3–16.

- Plotly: The Front End for ML and Data Science Models. Available online: https://plotly.com/dash/ (accessed on 2 August 2023).

- Singh, G.; Kumar, B.; Gaur, L.; Tyagi, A. Comparison between multinomial and Bernoulli naïve Bayes for text classification. In Proceedings of the 2019 International Conference on Automation, Computational and Technology Management (ICACTM), London, UK, 24–26 April 2019; IEEE: New York, NY, USA, 2019; pp. 593–596.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).