Abstract

Modern computing environments, thanks to the advent of enabling technologies such as Multi-access Edge Computing (MEC), effectively represent a Cloud Continuum, a capillary network of computing resources that extends from the Edge of the network to the Cloud and enables a dynamic and adaptive service fabric. Efficiently coordinating resource allocation, exploitation, and management in the Cloud Continuum is a considerable challenge, which has stimulated researchers to investigate innovative solutions based on smart techniques such as Reinforcement Learning and Computational Intelligence. In this paper, we compare different optimization algorithms and present a first investigation of how they perform in this kind of scenario. Specifically, the comparison includes the Deep Q-Network, Proximal Policy Optimization, Genetic Algorithms, Particle Swarm Optimization, Quantum-inspired Particle Swarm Optimization, Multi-Swarm Particle Optimization, and the Grey-Wolf Optimizer. We demonstrate that all approaches can solve the service management problem with similar performance, albeit with different sample efficiency, if a high number of samples can be evaluated for training and optimization. Finally, we show that, if the scenario conditions change, Deep-Reinforcement-Learning-based approaches can exploit the experience built during training to adapt service allocation according to the modified conditions.

1. Introduction

In the last few years, the research community has observed a reshaping of network infrastructures, with a growing interest in and adoption of the Cloud Continuum (CC) paradigm. The CC brings together an assortment of computational and storage resources, spreading them across different layers, resulting in the establishment of a unified ecosystem [1]. In this way, users can rely not only on the resources available at the Edge, but can also exploit computing resources provided by Fog or Cloud providers. Consequently, the CC opens new possibilities for distributing the load of computationally intensive services such as Machine Learning applications, online gaming, Big Data management, and so on [2,3]. It is important to note that all these innovative options and the inclusion of a plethora of new devices in the ecosystem create a large number of potential threats, which require the adoption of new effective strategies and countermeasures [4]. In a distributed and heterogeneous environment such as the CC, effective service management becomes crucial to ensure seamless service delivery and resource optimization.

However, efficiently coordinating resource allocation, exploitation, and management in the CC represents quite a challenge [5]. There is a need for service fabric management solutions capable of efficiently allocating multiple service components using a limited pool of devices distributed throughout the CC, also considering the peculiar characteristics of the resources available at each layer. In addition, the significant dynamicity of the CC scenario calls for adaptive/intelligent orchestrators that can autonomously learn the best allocation for services.

The increasing use of Artificial Intelligence (AI) techniques has led to different and potentially very promising approaches [6], including self-learning ones. Among those, Reinforcement Learning (RL) has arguably attracted the most attention in the research community [7]. RL is a promising Machine Learning area that has gained popularity in a wide range of research fields such as Network Slicing [8], Network Function Virtualization [9], and resource allocation [10]. More specifically, Deep Reinforcement Learning (DRL) has recently emerged as a compelling technique increasingly proposed in service management research [11]. DRL approaches aim to extensively train an intelligent orchestrator to make it capable of effective service management decision-making in various conditions.

Computational Intelligence (CI) solutions represent another promising approach, leveraging smart and gradient-free optimization techniques that can explore a relatively large solution space efficiently [12]. With CI, it is possible to realize orchestrators that explore even large solution spaces relatively quickly and can thus operate effectively in dynamic conditions, reacting to changes with minimal re-evaluation lag. Advanced Computational Intelligence solutions seem to be well suited for expensive [13] and dynamic optimization problems [14,15].

Although some metaheuristic performance analyses in Fog environments have been conducted in recent times [16], establishing which of these solutions represents the most-suitable one for service management in the Cloud Continuum is still an open research question. In this paper, we aimed to investigate that question and, towards that goal, compared two DRL techniques, namely the Deep Q-Network (DQN) and Proximal Policy Optimization (PPO), with five Computational Intelligence techniques, namely Genetic Algorithms (GAs), Particle Swarm Optimization (PSO), Quantum-inspired Particle Swarm Optimization (QPSO), Multi-Swarm PSO (MPSO), and the Grey-Wolf Optimizer (GWO), to evaluate their performance for service management purposes.

First, we conducted simulations to train and test these techniques in a realistic Cloud Continuum scenario characterized by limited computing resources at the Edge and Fog layers and unlimited resources at the Cloud. Then, we evaluated the same techniques with a what-if scenario analysis that simulated the outage of Cloud resources. To find a new service allocation, we exploited the experience of the DRL agents without retraining, while for the CI approaches, we performed another optimization run. The results highlighted that PPO was capable of dealing with the modified scenario by distributing the service instances to the Edge and the Fog layer, without retraining the agent, while the DQN was not capable of achieving comparable results. On the other hand, CI algorithms require a cold restart to find an optimal relocation for service component instances.

The remainder of the paper is structured as follows. Section 2 lays out relevant efforts. Section 3 discusses service management in the Cloud Continuum. Then, in Section 4, we present the CI and DRL algorithms that we selected for the comparison outlined in this work. Section 5 describes the methodologies used in this manuscript to solve the service management problem. Then, Section 6 presents the experimental evaluation in which we compared the selected CI and DRL algorithms. Finally, Section 7 concludes the manuscript and outlines potential future works.

2. Related Work

Services and resource management in the Cloud Continuum comprise a challenging research topic that calls for innovative solutions capable of managing the multiple layers of computing resources. The work presented in [17] proposes a resource orchestration framework called ROMA to manage micro-service-based applications in a multi-tier computing and network environment that can save network and computing resources when compared to static deployment approaches. In [18], Pereira et al. propose a hierarchical and analytical model to overcome the resource availability problem in Cloud Continuum scenarios. They present multiple use cases to demonstrate how their model can improve the availability and scalability in Edge–Cloud environments. Moreover, the authors in [19] describe a model-based approach to automatically assign multiple software deployment plans to hundreds of Edge gateways and connected Internet of Things (IoT) devices in a continuously changing cyber–physical context.

In recent years, the advent of the Cloud Continuum paradigm has called for new resource management proposals and methodologies to evaluate them. Currently, simulation is one of the most-adopted evaluation techniques in the literature for evaluating the performance of different techniques in Edge–Fog–Cloud scenarios. The authors in [20] propose a simulation approach at different scales to evaluate their Quantum-inspired solution to optimize task allocation in an Edge–Fog scenario. Specifically, they use the iFogSim simulation toolbox [21] for their experiments and make a comparison between their concept and state-of-the-art strategies, showing improvement in prediction efficiency and error reduction. In [22] Qafzezi et al. present an integrated system called Integrated Fuzzy-based System for Coordination and Management of Resources (IFS-CMR), evaluating it by simulation. Thanks to its three subsystems, it integrates Cloud–Fog–Edge computing in Software-Defined Vehicular Ad hoc Networks with flexible and efficient management of the abundance of resources available. The authors in [23] suggest STEP-ONE, a set of simulation tools to manage IoT systems. Specifically, it relies on the Business Process Model and Notation standards to handle IoT applications, defined as a plethora of processes executed between different resources. After an in-depth analysis of the most-relevant simulators in the Edge–Fog scenario and an accurate description of each component of STEP-ONE, they define a hypothetical smart city scenario to evaluate its capabilities at choosing the right process placement strategy. In [24], Tran-Dang et al. present Fog-Resource-aware Adaptive Task Offloading (FRATO), a framework to select the best offloading policy adaptively and collaboratively based on the system circumstances, represented by the number of resources available. 
In their experiments, they conduct an extensive simulation analysis adopting FRATO to compare several offloading strategies and determine their performance by measuring the service provisioning delay in different scenarios.

Computational Intelligence and RL approaches have been widely adopted to solve service and network management problems. In [25], the authors propose ETA-GA, a Genetic-Algorithm-based Efficient Task Allocation technique, which aims at efficiently allocating computing tasks—according to their data size—among a pool of virtual machines in accordance with the Cloud environment. Furthermore, in [26], Nguyen et al. present a model of a Fog–Cloud environment and apply different metaheuristics to optimize several constraints, such as power consumption and service latency. Through simulation, they legitimize their modeling and prove that approaches based on GAs and PSO are more effective than the traditional ones in finding the best solution to their constraints. The authors in [27] use PSO to solve a joint resource allocation and a computation offloading decision strategy that minimizes the total computing overhead, completion time, and energy consumption. In [28], Li et al. propose a custom PSO algorithm to decide on a computing offloading strategy that aims at reducing system delay and energy consumption. Lan et al. adopt a GA to solve a task caching optimization problem for Fog computing networks in [29]. The authors in [30] propose DeepCord, a model-free DRL approach to Coordinate network traffic processing. They define their problem as a partially observable Markov decision process to exploit their RL approach and demonstrate that it performs much better than other state-of-the-art heuristics in a testbed created using real-world network topologies and realistic traffic patterns. In [31], Sindhu et al. design an RL approach to overcome the shortcomings of Task-Scheduling Container-Based Algorithms (CBTSAs) applied in the Cloud–Fog paradigm to decide the scheduling workloads. 
By exploiting the Q-Learning, State–Action–Reward–State–Action (SARSA) [32], and Expected SARSA (E-SARSA) [33] schemes, they show that their enhanced version of the CBTSA with intelligent resource allocation achieves a better balance between cost savings and schedule length than previous ones. In [34], the authors present an RL approach influenced by evolution strategies to optimize real-time task assignment in Fog computing infrastructures. Thanks to its ability to avoid incorrect convergence to local optima and the parallelizable implementation, the algorithm proposed outperforms greedy approaches and other conventional optimization techniques. To better visualize the contributions of related efforts, we report a summary in Table 1.

Table 1.

Summary of related efforts.

Although there are many other different works that analyze several CI and RL approaches in depth, there is still a lack of comparative studies that highlight their main differences, advantages, and disadvantages. This work aimed to address this gap by using a simulation-driven approach that brings together both the CI and RL methodologies to find the best solution of a resource optimization problem in a CC scenario.

3. Cloud Continuum

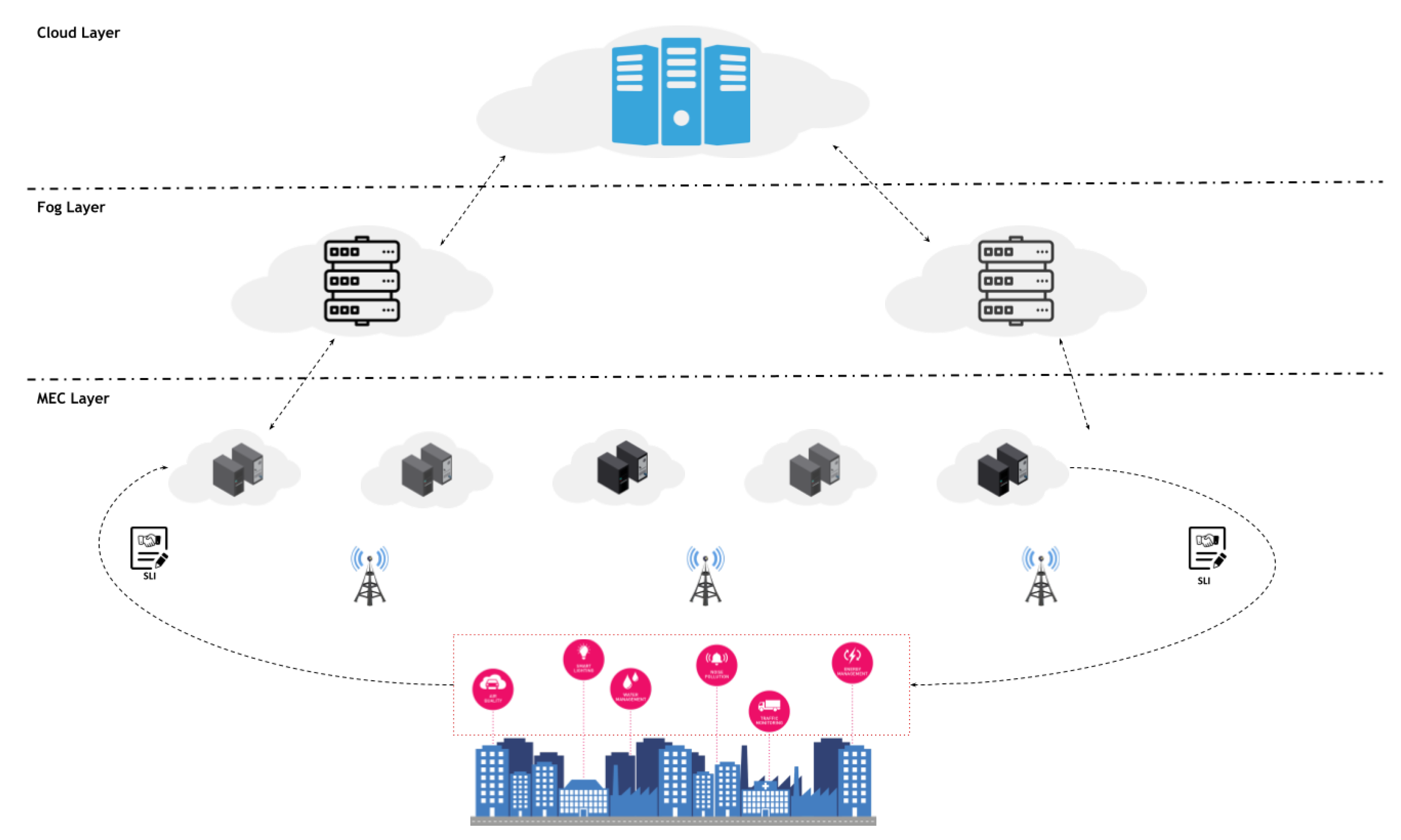

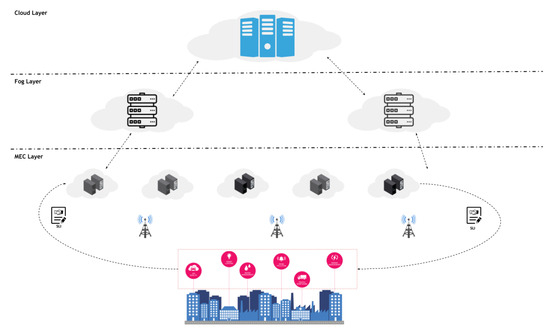

The Cloud Continuum is a term to indicate a plethora of interconnected computing resources deployed at the Edge, Fog, and Cloud computing layer, such as the one shown in Figure 1. Specifically, at the Edge layer, computing resources are mainly represented by MEC servers at Base Stations (BSs), which are responsible for connecting users to the MEC server and the rest of the core network. MEC servers are the closest resources to users that can reach them in a short communication time, i.e., 1–10 ms, thus making them the most-suitable resources for running latency-sensitive applications. On top, the Fog layer can provide higher computing capabilities at a slightly increased communication latency. Finally, at the top layer of the Cloud Continuum, the Cloud layer provides a possibly unlimited amount of resources to run batch processing or applications that do not mandate strict latency requirements.

Figure 1.

A Cloud Continuum scenario showing computing resources deployed at the three layers.

Concerning the service management perspective, we assumed that there could be multiple service providers that need to install one or more MEC applications using the resources available in the Cloud Continuum. Service providers would ask an infrastructure provider to deploy and manage their applications. Therefore, the infrastructure provider needs to accommodate all provisioning requests using a pool of computing resources distributed throughout the Cloud Continuum. To do so, the infrastructure provider needs to find a proper allocation that can accommodate as many services as possible considering the current conditions, e.g., the resource availability, and the heterogeneity of the computing resources. In addition, the infrastructure provider should also find an allocation that can maximize the performance of the given services.

This is a challenging problem that requires considering the different characteristics of the computing layers, e.g., latency requirements of mission-critical applications, and the limited resources available at the Edge.

The Computational Intelligence and Reinforcement Learning Approaches to Cloud Continuum Optimization

CI and RL are widely used techniques for solving complex service management problems. CI techniques, such as metaheuristics, represent a common solution to explore the search space of NP-Hard problems. Within the resource-management field, these methods have accomplished remarkable results thanks to their capability of efficiently exploring large and complex spaces [35,36]. In particular, CI can provide feasible and robust solutions that are particularly advantageous compared to more-traditional ones [37], which have been effective in some cases but often struggle with barriers such as scalability.

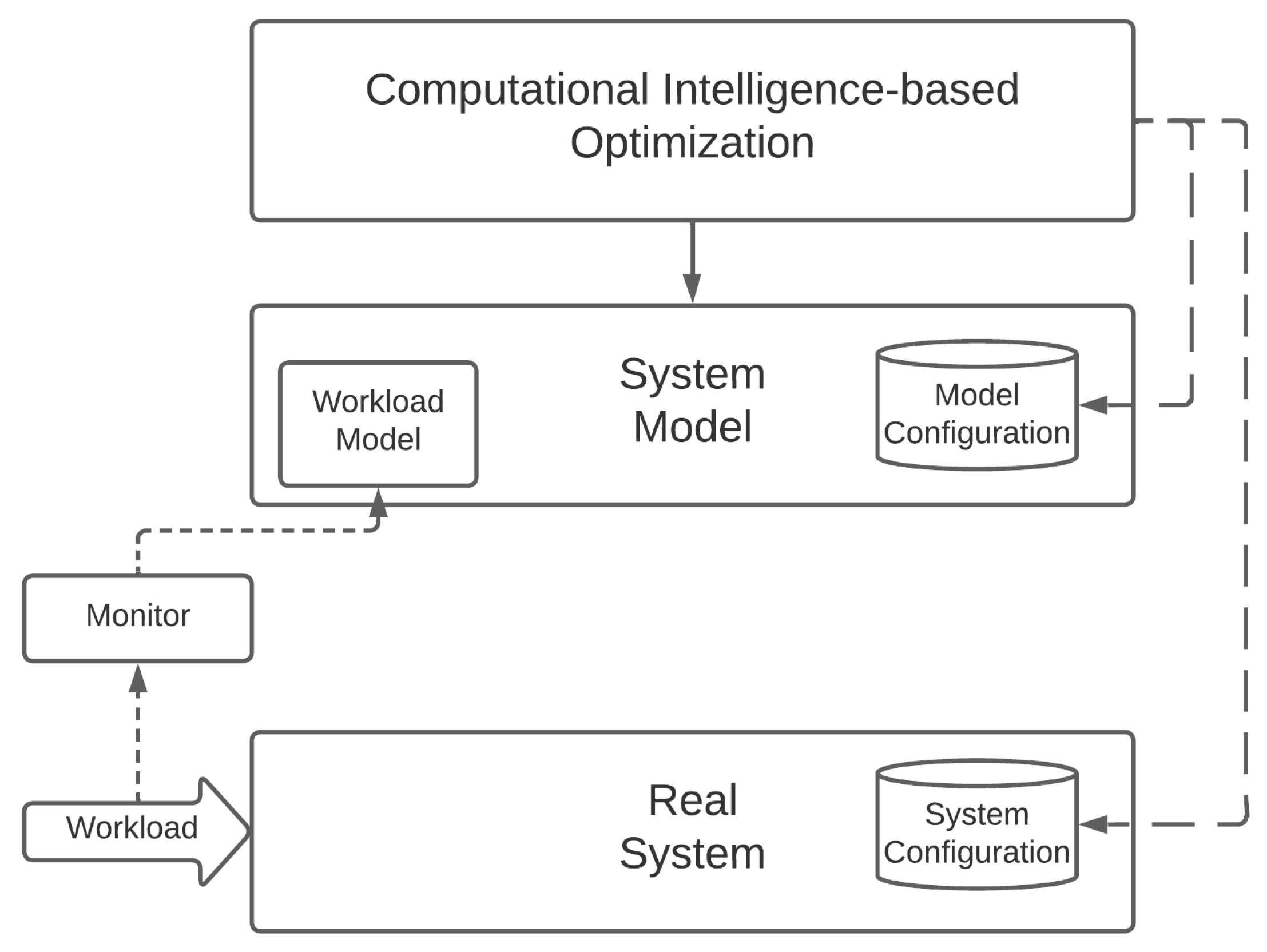

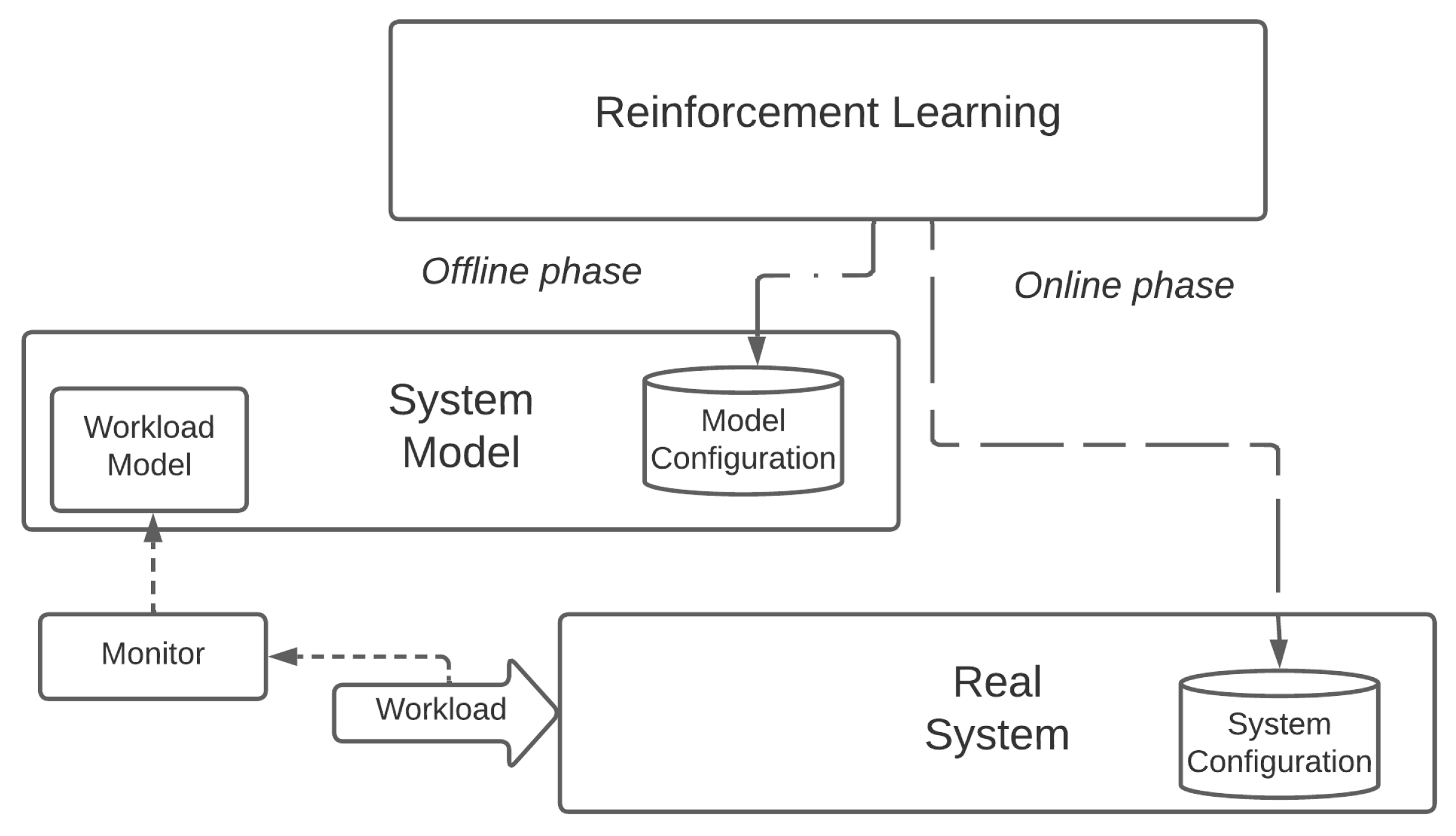

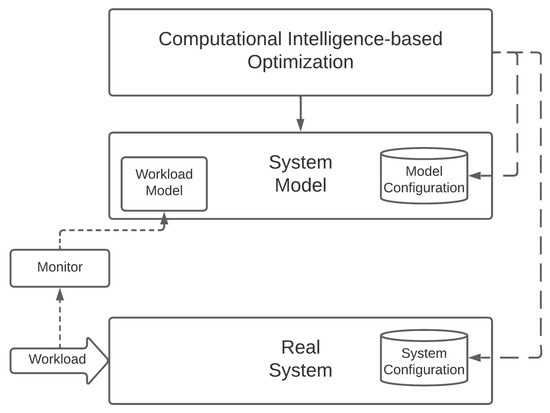

CI represents gradient-free (or black-box) optimization solutions, which evaluate a relatively large number of samples across a wide portion of the search space to identify the global optimum. As a result, they cannot be directly applied to a real system, but instead, require some sort of a system model that can be used for evaluation. This is depicted in Figure 2, which provides a model of how CI solutions could be applied to optimize a Cloud Continuum system. As one can see, the real system is paired with a system model, which plays the role of the objective (or target) function to be provided to the CI method.

Figure 2.

Optimizing a real system with a Computational-Intelligence-based approach.

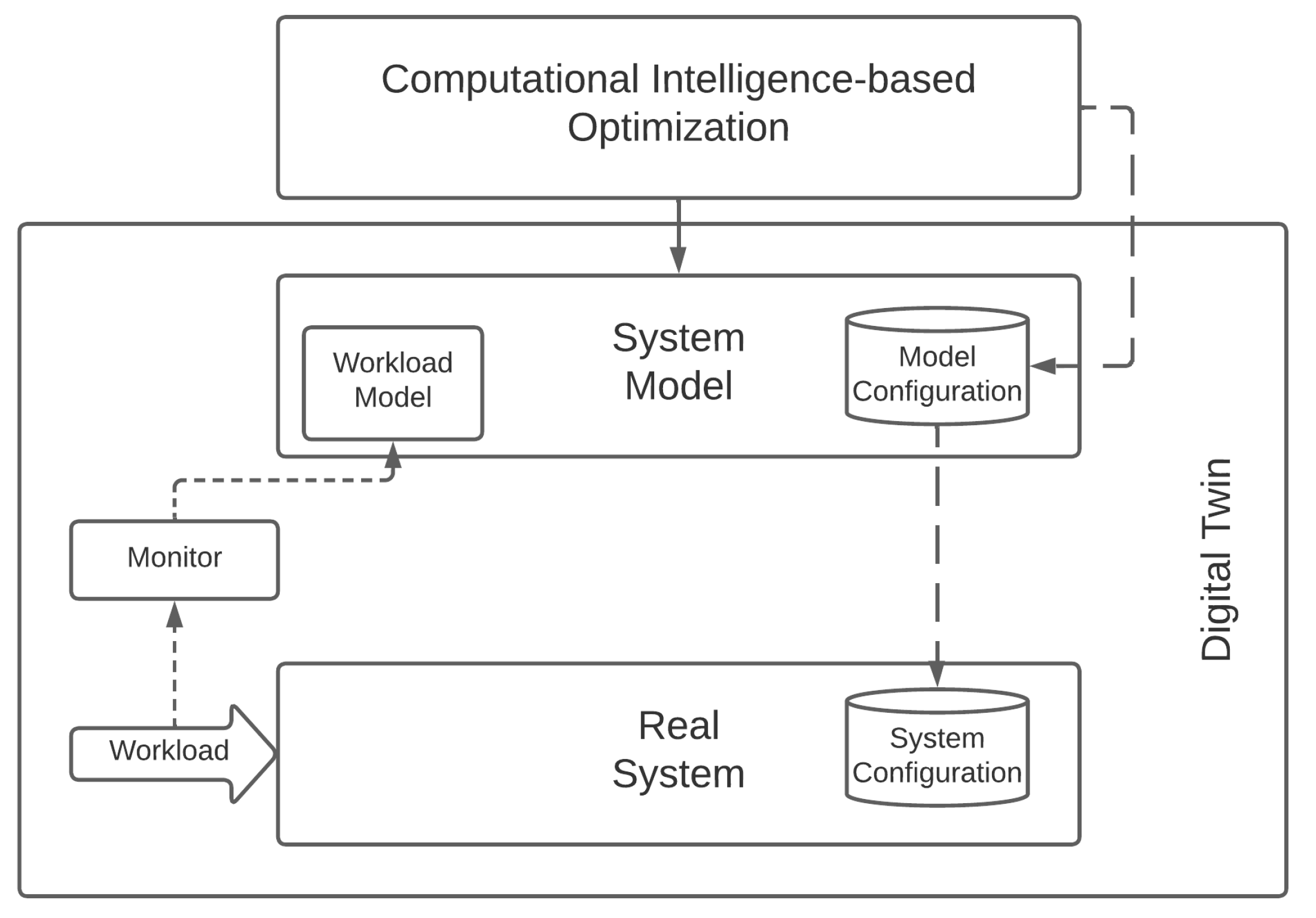

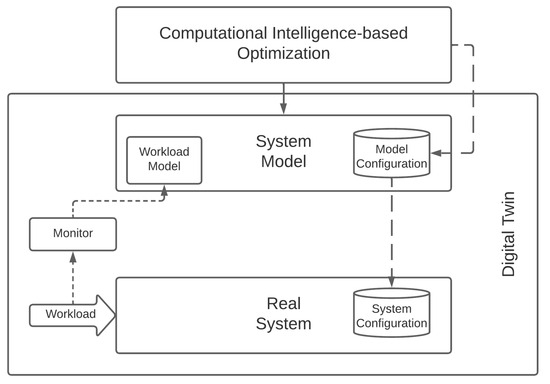

Let us note that CI solutions play particularly well with the Digital Twin concept and its application, which researchers and practitioners are increasingly turning their attention to [39], effectively enabling a variant of the model described above, which we depict in Figure 3 [40]. (In this manuscript, we adopted the definition by Minerva et al. [38], which uses the term Digital Twin to refer to the ensemble of the Physical Object (PO), the logical object(s), and the relationship between them.) In these cases, the Digital Twin plays the dual role of providing an accurate virtual representation of a physical system and of automatically reconfiguring the real system to operate in the optimal configuration found by the CI solution.

Figure 3.

Optimizing a real system with a Computational-Intelligence-based approach and a Digital Twin.

CI solutions essentially build the knowledge of the target function by collecting the outcomes of their sampling in a memory pool that evolves over time to explore the most-promising parts of the search space, usually through algorithms that leverage metaphors from the evolutionary and biological world. This scheme has proven very effective in the optimization of static systems, but requires some additional attention in systems with strong dynamical components, as is often the case in the Cloud Continuum [36,41]. In fact, applying CI solutions to solve dynamic and expensive optimization problems opens the problem of which parts (if any) of the memory need to be invalidated and discarded. This is an open problem that is receiving major attention in the scientific literature [14,15,42].

RL instead takes a significantly different approach. It implements a trial-and-error training phase in which a software agent learns how to improve its behavior by interacting with an environment through a series of actions and receiving a reward that measures how much the decision made by the agent is beneficial to attaining the final goal. Each environment is described as a set of possible states, which reflect all the observations of the agent.

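The purpose of RL is to find the optimal policy to be used by the agent for selecting which action to take in each given state. More specifically, we want to learn the set of parameters $\theta$ for a policy $\pi_{\theta}$ that maximizes the expected reward, which in its standard form reads:

$$\theta^{\star} = \arg\max_{\theta} \, \mathbb{E}_{\tau \sim \pi_{\theta}} \left[ \sum_{t} r(s_t, a_t) \right] \qquad (1)$$

where $\tau$ denotes a trajectory of states $s_t$ and actions $a_t$ generated by following $\pi_{\theta}$, and $r(s_t, a_t)$ is the reward received at step $t$.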

As a result, the reward model is central to the performance of RL solutions, and its correct definition represents a key challenge. Needless to say, there is an interesting ongoing discussion about how to design effective reward models [7,43].

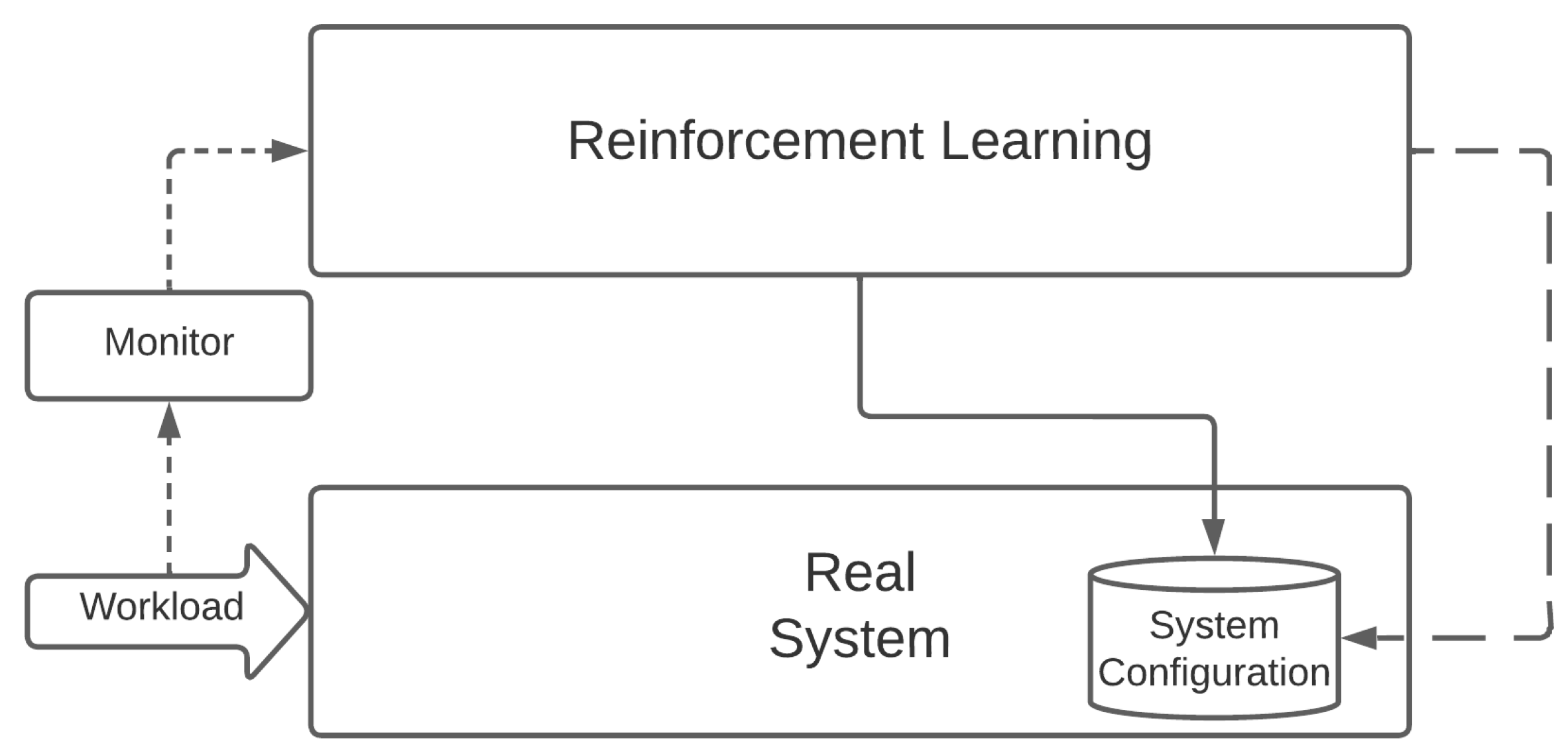

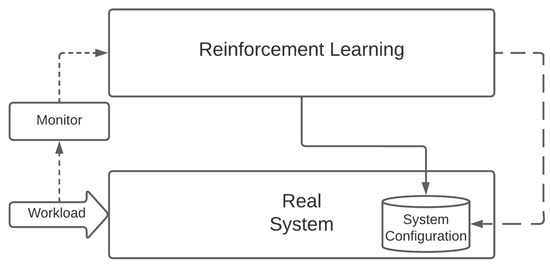

While it is not an optimization solution per se, RL is a rather flexible framework that can be adapted to several different situations. It was developed by design to consider changing environments and does not present the same memory invalidation issues of CI methods in dynamic scenarios. The premise is that policies produced by robust and well-trained RL solutions are applicable to a wide range of conditions. In case of changes in the behavior of the real system, a simple re-evaluation of the policy in an updated state should identify the actions that can be taken to optimize the system configuration—without the need to invalidate (large portions or the entirety of) the acquired knowledge base, as might be the case with CI solutions. RL also has the desirable property of being applicable to directly interact with—and learn from—a real system, as depicted in Figure 4.

Figure 4.

Optimizing a real system with Reinforcement Learning.

However, RL is notoriously considered to be sample-inefficient. In addition, traditional RL algorithms, such as SARSA and Q-Learning, also present scalability issues. They are particularly difficult to adopt in reasonably complex (and realistic) use cases, because of their tabular representation of the functions that map the effectiveness of each possible action in each possible state—which requires a very large amount of experience to be effectively exploited.
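To make the scalability issue concrete, the following minimal sketch shows a tabular Q-Learning update. The state and action labels, the learning rate `alpha`, the discount factor `gamma`, and the helper `table_size` are illustrative assumptions and are not taken from the experiments in this paper. The table stores one value per state-action pair, so its size grows with the product of the number of states and the number of actions.

```python
from collections import defaultdict


def q_learning_update(q_table, state, action, reward, next_state, actions,
                      alpha=0.1, gamma=0.9):
    """Apply one tabular Q-Learning update in place and return the new value."""
    # Greedy estimate of the value of the next state (off-policy target).
    best_next = max(q_table[(next_state, a)] for a in actions)
    td_target = reward + gamma * best_next
    # Move the stored value a fraction alpha towards the target.
    q_table[(state, action)] += alpha * (td_target - q_table[(state, action)])
    return q_table[(state, action)]


def table_size(num_states, num_actions):
    """Number of entries a full table needs: one per state-action pair."""
    return num_states * num_actions


# Hypothetical toy usage: three placement actions, unseen entries default to 0.
q = defaultdict(float)
actions = ["edge", "fog", "cloud"]
new_value = q_learning_update(q, "s0", "edge", reward=1.0,
                              next_state="s1", actions=actions)
```

Even with only three actions, a system with one million distinguishable states would need three million table entries, each of which must be visited repeatedly before its estimate becomes reliable.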

Modern Deep Reinforcement Learning (DRL) solutions were proposed to address the scalability issues of traditional RL. With the introduction of neural networks, DRL is capable of effectively dealing with high-dimensional or dynamic problems that are common in most real-world scenarios. More specifically, neural networks act as approximators of the policy (or, more precisely, of the state-value function V or of the action-value function Q that underlies the definition of an RL policy) and have been demonstrated to be very effective in many approaches introduced in the last few years, such as Deep Q-Networks (DQNs) [44] and Trust Region Policy Optimization (TRPO) [45].

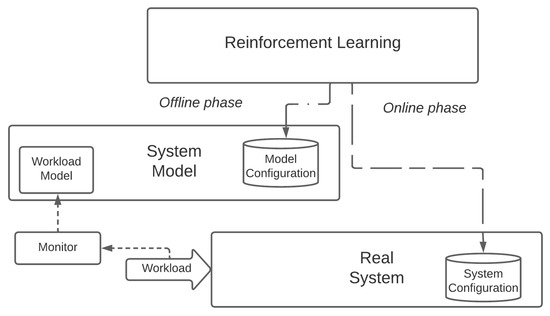

Finally, let us note that the research community is paying ever-increasing attention to offline Reinforcement Learning [46,47]. Offline RL represents a promising approach that aims at increasing the sample efficiency of RL through the adoption of a two-phase learning process. First, the RL solution learns in offline mode either from an existing knowledge base of past experiences (such as a trace log) or from a system model (or a Digital Twin). The offline learning phase generates a reasonably effective policy that represents a good starting point to be later fine-tuned during an online training phase in which the RL solution interacts directly with a real system, as depicted in Figure 5. Offline RL is outside the scope of this paper because it is still maturing and the choice of the knowledge base to adopt for a fair and meaningful evaluation, especially in comparison with CI-based approaches, represents a non-trivial issue per se. Nevertheless, we are seriously considering the evaluation of offline RL solutions in Cloud Continuum contexts for future work.

Figure 5.

Optimizing a real system with offline Reinforcement Learning.

4. Selection of Computational Intelligence and Reinforcement Learning Solutions

There are many CI- and RL-based solutions in the literature, with different characteristics and suitability to specific applications. In this section, we motivate and discuss the selection of seven different methods. More specifically, we will focus our investigation on five CI-based solutions, namely GA, PSO, QPSO (a variant of PSO), MPSO, and GWO, and two DRL-based approaches, namely DQN and PPO.

The GA represents a robust and flexible optimization solution that can be applied to a wide range of problems, including service management ones [25,48]. While their relatively slow convergence rate in some cases has made them less popular than other CI solutions in recent years, we chose to consider GAs as they represent an important baseline—a gold standard, so to speak. PSO is a simple CI solution that has proven incredibly effective in a wide range of problems, despite requiring careful attention to the parameter setting to facilitate convergence [49]. QPSO is a heavily revised version of PSO inspired by Quantum mechanics, which in our experience has consistently demonstrated solid performance [36,50]. We decided to include PSO and QPSO as relatively simple, robust, and fast-converging CI solutions.

Also, due to the popularity of these approaches within the related literature, we decided to consider the DQN and PPO as representative algorithms for the off-policy and on-policy approaches of RL, respectively. Considering both approaches allows a comprehensive evaluation of RL and could provide valuable insights with respect to different aspects, such as sample efficiency. In Section 6, we will give further details about the specific implementations of the algorithms that we used.

4.1. Genetic Algorithms

The GA is a metaheuristic inspired by biological evolution. The GA considers a population of individuals, which represent candidate solutions for the optimization problem. Each individual has a genotype, which represents its specific coordinate on the search space, and a phenotype, i.e., the evaluation of the objective function in the corresponding coordinate, which represents a “fitness” value capturing how well the individual adapts to the current environment. By evolving populations through selection and recombination, which generate new individuals with better fitness values, the GA naturally explores the search space in an attempt to discover global optima.

More specifically, as the pseudo-code in Algorithm 1 illustrates, the GA implements several phases, beginning with the initial setup of a population. Then, the population is evolved through a number of generations—each one created through a process that involves the selection of individuals for mating and the recombination and mutation of genetic material.

| Algorithm 1 Genetic Algorithm. |

|

Newer generations have a different genetic material, which has the potential to create fitter individuals. With an exchange or recombination of different genes, the evolutionary processes implemented by the GA promote the generation of improved solutions, and their propagation to future generations, at the same time introducing new genes, maintaining a certain degree of diversity in the population—thus preventing premature convergence. This enables the exploration of the search space in a relatively robust and efficient fashion.

The GA presents many customization and tuning opportunities. In fact, the genotype of individuals can be represented in many different ways, such as bitstrings, integers, and real values, and the choice of chromosome encoding represents a crucial aspect of the search performance and convergence speed of the GA [48]. In addition, the GA can use different selection, recombination, and mutation operators. The binary tournament is the most-used selection scheme due to its easy implementation, minimal computational overhead, and resilience to excessively exploitative behaviors, which other selection operators often exhibit [51]. Popular recombination operators include 1-point, 2-point, and uniform crossover, and a plethora of random mutation operators have been proposed in the literature, ranging from bit flip mutation to geometrically distributed displacements with hypermutation [35].
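As an illustration of how these operators fit together, the following minimal sketch combines binary tournament selection, 1-point crossover, and bit flip mutation on a bitstring genotype. It is a sketch under stated assumptions rather than the configuration used in our experiments: the function names, the population size, the mutation rate, and the toy objective (counting the ones in the bitstring) are all hypothetical.

```python
import random


def binary_tournament(population, fitness, rng):
    """Pick two distinct individuals at random and return the fitter one."""
    a, b = rng.sample(range(len(population)), 2)
    return population[a] if fitness[a] >= fitness[b] else population[b]


def one_point_crossover(parent_a, parent_b, rng):
    """Swap the tails of two parents at a random cut point."""
    cut = rng.randint(1, len(parent_a) - 1)
    return parent_a[:cut] + parent_b[cut:], parent_b[:cut] + parent_a[cut:]


def bit_flip(individual, rate, rng):
    """Flip each bit independently with probability `rate`."""
    return [1 - g if rng.random() < rate else g for g in individual]


def run_ga(objective, n_bits=20, pop_size=30, generations=40,
           mutation_rate=0.05, seed=0):
    """Maximize `objective` over bitstrings; returns the best individual seen."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(n_bits)]
                  for _ in range(pop_size)]
    best = max(population, key=objective)
    for _ in range(generations):
        fitness = [objective(ind) for ind in population]
        offspring = []
        while len(offspring) < pop_size:
            p1 = binary_tournament(population, fitness, rng)
            p2 = binary_tournament(population, fitness, rng)
            c1, c2 = one_point_crossover(p1, p2, rng)
            offspring.append(bit_flip(c1, mutation_rate, rng))
            offspring.append(bit_flip(c2, mutation_rate, rng))
        population = offspring[:pop_size]
        generation_best = max(population, key=objective)
        if objective(generation_best) > objective(best):
            best = generation_best
    return best


# Toy objective (an assumption): the fitness is the number of ones.
best = run_ga(objective=sum)
```

Raising `mutation_rate` makes this sketch more explorative, whereas a larger tournament or a lower mutation rate would make it more exploitative.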

By choosing the specific operators and parameters that control the selection and mutation processes, it is possible to easily modulate the behavior of GAs, making them more explorative or exploitative. Both choices also depend on the problem domain and the representation of the chromosomes. The final goal of the GA is to converge to an optimal population, i.e., one that is no longer able to produce new offspring notably different from the previous generation.

4.2. Particle Swarm Optimization and Quantum-Inspired Particle Swarm Optimization

PSO is another metaheuristic consisting of a particle swarm that moves in the search space of an optimization problem and is attracted by global optima. PSO evaluates the objective function at each particle’s current location in a process known as Swarm Intelligence. Each member of the swarm can be a candidate solution, and the way to aggregate the information between all members is the key to better guiding the search in the subsequent steps [52]. Through the years, PSO has drawn much attention thanks to its ease of implementation and the relatively small number of parameters that have to be tuned to obtain a very good balance between exploration and exploitation. However, finding the proper value for each parameter is not a trivial task, and many research efforts have been made to reach the current state-of-the-art [53,54].

More specifically, PSO is a relatively simple algorithm, inspired by the behavior of bird flocks [50], based on the exploration of the search space by a swarm of $M$ interacting particles. At each iteration $t$, each particle $i$ has:

- A current position vector $\mathbf{x}_i(t)$, whose components represent the decision variables of the problem;

- A velocity vector $\mathbf{v}_i(t)$, which captures the movement of the particles;

- A particle attractor $\mathbf{p}_i(t)$, representing the “highest” (best) position that the particle has encountered so far.

The particle movement is, therefore, governed by the following equations:

$$\mathbf{v}_i(t+1) = \mathbf{v}_i(t) + c_1 \mathbf{r}_1 \odot \left(\mathbf{p}_i(t) - \mathbf{x}_i(t)\right) + c_2 \mathbf{r}_2 \odot \left(\mathbf{g}(t) - \mathbf{x}_i(t)\right)$$

$$\mathbf{x}_i(t+1) = \mathbf{x}_i(t) + \mathbf{v}_i(t+1) \qquad (2)$$

in which $\mathbf{g}(t)$ is the swarm attractor, representing the “highest” (best) position that the entire swarm has encountered so far, $\mathbf{r}_1$ and $\mathbf{r}_2$ are random sequences uniformly sampled in $[0, 1]$, and $c_1$ and $c_2$ are constants.
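The particle dynamics described above can be sketched in a few lines of Python. This is a minimal illustration and not the implementation used in our experiments: it assumes the basic update without an inertia weight, adds velocity clamping through a hypothetical `v_max` parameter to keep the swarm bounded, and maximizes a toy fitness function, in line with the “highest” position convention used above. The names `pso_maximize`, `c1`, and `c2`, as well as their default values, are illustrative.

```python
import random


def pso_maximize(fitness, dim, bounds, n_particles=20, iterations=100,
                 c1=1.5, c2=1.5, v_max=1.0, seed=0):
    """Basic PSO; returns the swarm attractor and its fitness."""
    rng = random.Random(seed)
    lo, hi = bounds
    xs = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vs = [[0.0] * dim for _ in range(n_particles)]
    pbest = [x[:] for x in xs]                    # particle attractors
    pbest_fit = [fitness(x) for x in xs]
    g = max(range(n_particles), key=pbest_fit.__getitem__)
    gbest, gbest_fit = pbest[g][:], pbest_fit[g]  # swarm attractor
    for _ in range(iterations):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                v = (vs[i][d]
                     + c1 * r1 * (pbest[i][d] - xs[i][d])
                     + c2 * r2 * (gbest[d] - xs[i][d]))
                vs[i][d] = max(-v_max, min(v_max, v))            # clamp velocity
                xs[i][d] = max(lo, min(hi, xs[i][d] + vs[i][d]))  # stay in bounds
            fit = fitness(xs[i])
            if fit > pbest_fit[i]:
                pbest[i], pbest_fit[i] = xs[i][:], fit
                if fit > gbest_fit:
                    gbest, gbest_fit = xs[i][:], fit
    return gbest, gbest_fit


def toy_fitness(x):
    """Toy fitness (an assumption): negative sphere, maximal at the origin."""
    return -sum(v * v for v in x)


best, best_fit = pso_maximize(toy_fitness, dim=2, bounds=(-5.0, 5.0))
```

Because the attractors are only replaced by strictly better positions, the fitness of the swarm attractor never decreases from one iteration to the next.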

A well-known evolution of PSO is represented by QPSO. In QPSO, all particles have a quantum behavior instead of the classical Newtonian random walks considered by classical PSO. QPSO significantly simplifies the configuration process of PSO, reducing the number of configuration parameters required to only one, i.e., the contraction–expansion parameter [55], which represents a single “knob” that enables adjusting the balance between the local and global search of the algorithm. Furthermore, QPSO has been demonstrated to overcome, on several benchmarks [56], the weaknesses of PSO, which instead tends to become stuck in local minima. The steps required to implement QPSO are illustrated in Algorithm 2.

| Algorithm 2 Quantum-inspired Particle Swarm Optimization. |

|
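As a rough illustration of quantum-inspired dynamics, the sketch below follows the commonly used QPSO position update, in which each coordinate is resampled around a local attractor with a spread proportional to its distance from the mean of the particle attractors. It is not meant to reproduce Algorithm 2 line by line. The function name `qpso_maximize`, the value chosen for the contraction–expansion parameter `beta`, and the toy fitness function are assumptions made for the example.

```python
import math
import random


def qpso_maximize(fitness, dim, bounds, n_particles=20, iterations=100,
                  beta=0.75, seed=0):
    """QPSO sketch with a single tunable knob, `beta`."""
    rng = random.Random(seed)
    lo, hi = bounds
    xs = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    pbest = [x[:] for x in xs]
    pbest_fit = [fitness(x) for x in xs]
    g = max(range(n_particles), key=pbest_fit.__getitem__)
    gbest, gbest_fit = pbest[g][:], pbest_fit[g]
    for _ in range(iterations):
        # Mean of all particle attractors, computed once per iteration.
        mbest = [sum(p[d] for p in pbest) / n_particles for d in range(dim)]
        for i in range(n_particles):
            for d in range(dim):
                phi = rng.random()
                # Local attractor between the particle and swarm attractors.
                attractor = phi * pbest[i][d] + (1.0 - phi) * gbest[d]
                u = 1.0 - rng.random()  # in (0, 1], avoids log(1 / 0)
                step = beta * abs(mbest[d] - xs[i][d]) * math.log(1.0 / u)
                new_x = attractor + step if rng.random() < 0.5 else attractor - step
                xs[i][d] = max(lo, min(hi, new_x))
            fit = fitness(xs[i])
            if fit > pbest_fit[i]:
                pbest[i], pbest_fit[i] = xs[i][:], fit
                if fit > gbest_fit:
                    gbest, gbest_fit = xs[i][:], fit
    return gbest, gbest_fit


def toy_fitness(x):
    """Toy fitness (an assumption): negative sphere, maximal at the origin."""
    return -sum(v * v for v in x)


best, best_fit = qpso_maximize(toy_fitness, dim=2, bounds=(-5.0, 5.0))
```

Note that no velocity vector is kept: larger values of `beta` widen the sampling around the attractor and favor global search, while smaller values concentrate the search locally.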

4.3. Multi-Swarm Particle Optimization

While PSO has been successfully applied in a wide range of applications, it has proven to be less effective in dynamic environments, where it suffers from outdated memory and lack of diversity issues [57]. To address these shortcomings, researchers have started investigating Multi-Swarm PSO (MPSO) constructions [58].

More specifically, MPSO exploits two principal mechanisms for maintaining diversification in the optimization process (and, as a result, avoiding premature convergence and implementing effective exploration throughout the entire search space): multiple populations and repulsion. Instantiating more than one swarm allows MPSO to simultaneously explore different portions of the search space, thus allowing it to achieve very good performance in the case of multiple optima, i.e., for so-called multi-peak target functions. In addition, MPSO adopts two layers of repulsion: among particles belonging to the same swarm and among all the swarms, to maintain diversity in the exploration process and to avoid premature convergence.

In this context, a particularly successful multi-swarm PSO construction has proven to be the one based on the atom analogy [57]. Taking loose inspiration from the structure of an atom, each swarm is divided into a relatively compact nucleus of neutral (or positively charged) particles and a loose nebula of negatively charged particles that float around the nucleus. This is obtained by moving the neutral portion of the particles according to classical PSO dynamics, i.e., as defined in Equation (2), and the negatively charged rest of the swarm according to quantum-inspired dynamics, i.e., as defined in Lines 17–23 of Algorithm 2. (Some versions of MPSO use Coulomb-force-inspired repulsion between charged particles, but QPSO dynamics has proven to be computationally simpler and at least just as effective [58].)

In addition, the diversity of the swarms is preserved by two mechanisms: exclusion and anti-convergence, which lead to a continuous birth-and-death process for swarms. Exclusion implements local diversity, preventing swarms from converging to the same optimum (or peak). If a swarm comes closer than a predefined exclusion radius to another swarm, it is killed and a new randomly initialized swarm takes its place. Anti-convergence, instead, implements global diversity by ensuring that at least one swarm is “free”, i.e., patrolling the search space instead of converging to an optimum. Towards that goal, MPSO monitors the diameter of each swarm, and if all of them fall below a threshold (which is typically dynamically estimated to suit the characteristics of the optimization problem), it kills and replaces one swarm.
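The two diversity mechanisms can be sketched as follows. This is a simplified illustration built on several assumptions: each swarm is a plain dictionary with hypothetical keys `positions`, `attractor`, and `fitness`; the distance between swarms is measured between their attractors; the swarm with the lower fitness is the one that is killed; and the diameter is taken as the largest pairwise Euclidean distance between particles. The `respawn` callable, which returns a freshly initialized swarm, is also hypothetical.

```python
import math
from itertools import combinations


def diameter(positions):
    """Largest pairwise Euclidean distance between the particles of a swarm."""
    return max((math.dist(a, b) for a, b in combinations(positions, 2)),
               default=0.0)


def apply_exclusion(swarms, exclusion_radius, respawn):
    """Replace the worse of any two swarms whose attractors are too close."""
    for i, j in combinations(range(len(swarms)), 2):
        gap = math.dist(swarms[i]["attractor"], swarms[j]["attractor"])
        if gap < exclusion_radius:
            loser = i if swarms[i]["fitness"] < swarms[j]["fitness"] else j
            swarms[loser] = respawn()
    return swarms


def apply_anti_convergence(swarms, convergence_threshold, respawn):
    """If every swarm has converged, replace the worst one with a free swarm."""
    if all(diameter(s["positions"]) < convergence_threshold for s in swarms):
        worst = min(range(len(swarms)), key=lambda k: swarms[k]["fitness"])
        swarms[worst] = respawn()
    return swarms


def fixed_respawn():
    """Hypothetical stand-in for random re-initialization (fixed for clarity)."""
    return {"positions": [[9.0, 9.0], [5.0, 5.0]],
            "attractor": [9.0, 9.0],
            "fitness": float("-inf")}


swarms = [
    {"positions": [[0.0, 0.0], [0.1, 0.0]], "attractor": [0.0, 0.0], "fitness": 1.0},
    {"positions": [[0.2, 0.0], [0.2, 0.1]], "attractor": [0.2, 0.0], "fitness": 2.0},
]
# The two attractors are 0.2 apart, so the weaker swarm (index 0) is replaced.
swarms = apply_exclusion(swarms, exclusion_radius=0.5, respawn=fixed_respawn)
```

In a full optimizer, both checks would typically run once per iteration after the particle updates, so that swarms are continuously born and killed as the search progresses.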

Since they have been specifically designed for dynamic optimization problems and are currently considered state-of-the-art solutions in this context [14], MPSO techniques represent a particularly promising candidate for our investigation.

4.4. Grey-Wolf Optimization

GWO is another nature-inspired optimization algorithm based on the leadership hierarchy and hunting mechanism of grey wolves in the wild [59]. The algorithm simulates the social hierarchy and hunting behavior of grey wolves when searching for prey. In GWO, the search agents are grey wolves, which are categorized into four groups: alpha, beta, delta, and omega, which help to guide the pack’s movements.

From a mathematical perspective, the algorithm starts by initializing a population of grey wolves, where each wolf represents a potential solution in the search space. The fitness of each solution is evaluated using a problem-specific objective function. Based on their fitness, the three best solutions are selected as the leading wolves: alpha is the best solution, while beta and delta are the second- and the third-best ones, respectively.

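All other candidate solutions are considered as omega, and they will follow the leading wolves in their hunting process. Their position update equations are as follows:

$$\vec{D}_\alpha = \left| \vec{C}_1 \cdot \vec{X}_\alpha - \vec{X} \right|, \qquad \vec{X}_1 = \vec{X}_\alpha - \vec{A}_1 \cdot \vec{D}_\alpha$$

where $\vec{X}$ is the current position of the wolf, $\vec{X}_\alpha$ is the position of the alpha wolf, and $\vec{A}_1 = 2a\,\vec{r}_1 - a$ and $\vec{C}_1 = 2\,\vec{r}_2$ are coefficient vectors built from random vectors $\vec{r}_1$ and $\vec{r}_2$ with components in $[0, 1]$ and from a parameter $a$ that decreases linearly from 2 to 0 over the iterations [59]. Similar equations are used to calculate $\vec{X}_2$ and $\vec{X}_3$ based on the positions of the beta and delta wolves, respectively. The new position of each wolf is then updated as follows:

$$\vec{X}(t+1) = \frac{\vec{X}_1 + \vec{X}_2 + \vec{X}_3}{3}$$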

Conceptually, the leading wolves delineate the boundaries of the search space, which typically is defined as a circle. However, the same considerations are still valid if we consider an n-dimensional search space, with a hyper-cube or hyper-sphere instead of a circle. A and C are the components that encourage the wolves to search for fitter prey, helping them avoid becoming stuck in local solutions. Different from A, C does not have any component that decreases linearly, so it can emphasize ($C > 1$) or de-emphasize ($C < 1$) the exploration of each wolf throughout the whole run and not only during the initial iterations. The algorithm repeats the hunting process for a predefined number of iterations or until convergence is achieved. The alpha wolf represents the best solution found by the GWO algorithm. Readers can find a description of the above steps in Algorithm 3.

Similar to PSO, this metaheuristic has no direct relationships between the search agents and the fitness function [59]. This means that penalty mechanisms can be adopted effectively for modeling constraints, thus making GWO particularly suitable for this investigation.
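The hunting process described in this section can be sketched in a few lines of Python. This is a minimal sketch of the standard GWO scheme from [59], written for a maximization problem; the function name, the clamping of positions to the bounds, and the toy fitness function are assumptions for illustration. The default population size, bounds, and iteration count mirror the GWO configuration reported in Section 6.2.

```python
import random


def gwo_maximize(fitness, dim, lower, upper, wolves=30, iterations=50, seed=0):
    """Minimal Grey-Wolf Optimizer sketch for a maximization problem."""
    rng = random.Random(seed)
    pack = [[rng.uniform(lower, upper) for _ in range(dim)] for _ in range(wolves)]

    for it in range(iterations):
        # Rank the pack: the three fittest wolves lead the hunt.
        ranked = sorted(pack, key=fitness, reverse=True)
        leaders = [list(w) for w in ranked[:3]]  # alpha, beta, delta

        # 'a' decreases linearly from 2 towards 0 across the iterations.
        a = 2.0 * (1.0 - it / iterations)

        new_pack = []
        for wolf in pack:
            position = []
            for d in range(dim):
                candidates = []
                for leader in leaders:
                    A = 2.0 * a * rng.random() - a  # shrinks as 'a' decreases
                    C = 2.0 * rng.random()          # random weight in [0, 2]
                    D = abs(C * leader[d] - wolf[d])
                    candidates.append(leader[d] - A * D)
                # Average of the three leader-guided moves, clamped to bounds.
                x = sum(candidates) / 3.0
                position.append(min(max(x, lower), upper))
            new_pack.append(position)
        pack = new_pack

    return max(pack, key=fitness)


# Toy usage: search for the maximum of a concave function in [0, 15]^2.
best = gwo_maximize(lambda x: -sum((v - 3.0) ** 2 for v in x),
                    dim=2, lower=0.0, upper=15.0)
```

Because the fitness function is only ever called on complete candidate positions, a penalty term for constraint violations can be folded into `fitness` without touching the update rules.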

Algorithm 3. Grey-Wolf Optimization (GWO).

4.5. Deep Q-Networks

Traditionally, the Q-Learning algorithm builds a memory table, known as the Q-Table, to store Q-Values for all possible state–action pairs. Each Q-Value represents the return obtained by executing an action at the time step t, which may differ from the one indicated by the current policy, and then following the policy from the next state onward. After each iteration, the algorithm updates the table using the Bellman Equation:

$$Q(S_t, A_t) \leftarrow Q(S_t, A_t) + \alpha \left[ R_{t+1} + \gamma \max_{a} Q(S_{t+1}, a) - Q(S_t, A_t) \right] \tag{5}$$

where $S_t$ and $A_t$ are, respectively, the state (or observation) and the action that the agent takes at the time step t, $R_{t+1}$ is the reward received by the agent by taking the action, $\alpha$ is the learning rate, and $\gamma$ is the discount factor, which weights immediate rewards more heavily than future ones [60]. Basically, the agent updates the current Q-Value with the best estimated future reward, which assumes that the agent takes the best currently known action. Although this algorithm is simple and quite powerful for building an RL agent, it struggles in dealing with complex problems composed of thousands of actions and states. A simple Q-Table would not suffice to reliably manage thousands of Q-Values, especially regarding memory requirements.
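The tabular update can be sketched as follows. This is the standard Q-Learning rule; the function name, the dictionary-based Q-Table, and the toy states and actions are assumptions for illustration.

```python
from collections import defaultdict


def q_learning_update(q_table, state, action, reward, next_state, actions,
                      alpha=0.1, gamma=0.99):
    """Apply one tabular Q-Learning update and return the new Q-Value."""
    # Best estimated future value, assuming the greedy action is taken next.
    best_next = max(q_table[(next_state, a)] for a in actions)
    td_target = reward + gamma * best_next
    q_table[(state, action)] += alpha * (td_target - q_table[(state, action)])
    return q_table[(state, action)]


q = defaultdict(float)        # unseen state-action pairs default to 0.0
q[("s1", "right")] = 2.0      # pretend this value was learned earlier
new_value = q_learning_update(q, "s0", "right", reward=1.0, next_state="s1",
                              actions=["left", "right"], alpha=0.5, gamma=0.9)
```

The table holds one entry per visited state–action pair, which makes the memory issue discussed above visible: the number of entries grows with the product of the number of states and actions.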

Deep Q-Learning overcomes the limitations of Q-Learning by approximating the Q-Table with a deep neural network, which optimizes memory usage and gives a solution to the curse-of-dimensionality problem, forming a more-advanced agent called the Deep Q-Network (DQN) [44]. Specifically, the deep neural network receives a representation of the current state as the input, approximates the value of the Q-Value function, and finally, generates the Q-Value for all possible actions as the output. After each iteration, the agent updates the network weights through the Bellman Equation (5). Towards that goal, the DQN first calculates the loss between the optimal and predicted actions:

where is the learning rate parameter. The policy parameters are then updated through backpropagation:

Several types of loss functions have been proposed in the literature, including the mean-squared error and cross-entropy loss.

The trial-and-error mechanism of RL could make off-policy algorithms such as the DQN relatively slow in the training process. To address this problem, the DQN usually relies on experience replay, i.e., a replay buffer where it stores experience from the past [61]. This buffer collects the most recent experiences gathered by the agent through its actions in the previous time steps. After each training iteration, the agent randomly samples one or more batches of data from the experience replay buffer, thus making the training process more stable and more likely to converge.
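A uniform experience replay buffer can be sketched with the Python standard library alone. The class and field names are assumptions for illustration and do not reproduce the Stable Baselines3 implementation.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size buffer that keeps only the most recent transitions."""

    def __init__(self, capacity, seed=None):
        self.memory = deque(maxlen=capacity)  # oldest items are evicted first
        self.rng = random.Random(seed)

    def push(self, state, action, reward, next_state, done):
        self.memory.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        # Uniform sampling breaks the temporal correlation between
        # consecutive transitions.
        return self.rng.sample(list(self.memory), batch_size)

    def __len__(self):
        return len(self.memory)


buffer = ReplayBuffer(capacity=3, seed=42)
for step in range(5):
    buffer.push(state=step, action=0, reward=0.0, next_state=step + 1, done=False)
batch = buffer.sample(batch_size=2)
```

With a capacity of 3, only the transitions generated at steps 2, 3, and 4 survive, so every sampled batch is drawn from recent experience.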

Several optimizations have been proposed for the DQN. To stabilize training, DQN solutions often leverage a separate “target” network to evaluate the best actions to take. The target network is updated less frequently than the policy network Q, and often through soft (Polyak) updates. Equally often, DQN solutions adopt prioritized experience replay, which, when sampling from the experience replay buffer, assigns higher probabilities to the transitions from which the agent can learn the most, typically those with a large temporal-difference error. Finally, different DQN variants have been proposed to mitigate the reward overestimation tendencies of the algorithm [62], such as the Dueling-DQN [63] and Double-DQN [64].
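A soft (Polyak) update blends the policy network weights into the target network weights at every step instead of copying them periodically. The sketch below operates on plain lists of floats; the function name and the value of `tau` are assumptions for illustration.

```python
def polyak_update(policy_weights, target_weights, tau=0.005):
    """Move each target weight a fraction tau towards the policy weight."""
    return [tau * p + (1.0 - tau) * t
            for p, t in zip(policy_weights, target_weights)]


target = polyak_update(policy_weights=[1.0, 2.0],
                       target_weights=[0.0, 0.0], tau=0.1)
```

A small `tau` keeps the target network slowly varying, which is what stabilizes the bootstrapped Q-Value targets.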

Despite the DQN being one of the first Deep Q-Learning approaches, it is still very widely proposed and highly regarded in the scientific literature, as it has consistently demonstrated a remarkable effectiveness in solving a large number of problems at a reduced implementation complexity.

4.6. Trust Region Policy Optimization and Proximal Policy Optimization

Different from the off-policy nature that characterizes the DQN, Trust Region Policy Optimization (TRPO) is an on-policy RL algorithm that aims to identify the optimal step size in policy gradient descent for convergence speed and robustness purposes [45]. Specifically, TRPO searches for the best way to improve the policy by satisfying a constraint (called the Trust Region), which defines the highest accepted distance between the updated policy and the old one, thus tackling the problems of performance collapse and sample inefficiency typical of policy gradient RL algorithms. To do so, it defines the following surrogate objective:

$$L_{\theta_{old}}(\theta) = \hat{\mathbb{E}}_t\left[ \frac{\pi_\theta(a_t \mid s_t)}{\pi_{\theta_{old}}(a_t \mid s_t)}\, \hat{A}_t \right] \tag{6}$$

where $\theta_{old}$ represents the vector of policy parameters before the update and $\hat{A}_t$ is an estimator of the advantage function from the older policy $\pi_{\theta_{old}}$. It is possible to prove that applying specific constraints to this equation, i.e., bounding the Kullback–Leibler divergence between $\pi_{\theta_{old}}$ and $\pi_\theta$, guarantees a monotonic policy improvement and allows the reuse of off-policy data in the training process, making TRPO more stable and sample-efficient than previous policy-gradient-based RL algorithms [65].

Unfortunately, since TRPO requires a constrained optimization at every update, it could become too complex and computationally expensive (Theoretically, it is possible to transform the constrained optimization step into an unconstrained optimization one through a penalty-based approach. However, in turn, this raises the need to identify a proper penalty coefficient to consider—which is very difficult in practice). These drawbacks call for simpler and more-effective methods for policy gradient descent. Towards that goal, Schulman et al. in [66] introduce the Proximal Policy Optimization (PPO) algorithm. There are two main variants of PPO: PPO-Penalty and PPO-Clip [66,67,68]. PPO-Penalty optimizes a regularized version of Equation (6), introducing an adaptive regularization parameter that depends on . On the other hand, PPO-Clip calculates a clipped version of the term:

and considers as a learning objective the minimum between the clipped and the unclipped versions. This ensures that the update from to remains controllable, preventing excessively large parameter updates, which could cause massive changes to the current policy, resulting in a performance collapse. More specifically, PPO-Clip leverages a modified version of the surrogate function in Equation (6) as follows:

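$$L^{CLIP}(\theta) = \hat{\mathbb{E}}_t\left[ \min\left( r_t(\theta)\,\hat{A}_t,\ \mathrm{clip}\left(r_t(\theta), 1 - \epsilon, 1 + \epsilon\right) \hat{A}_t \right) \right]$$

where $r_t(\theta) = \pi_\theta(a_t \mid s_t) / \pi_{\theta_{old}}(a_t \mid s_t)$ is the probability ratio between the updated and the old policy, $\hat{A}_t$ is the advantage estimate, and the function $\mathrm{clip}(x, 1 - \epsilon, 1 + \epsilon)$ clips x within the interval $[1 - \epsilon, 1 + \epsilon]$, with $\epsilon$ being a hyperparameter that defines the clipping neighborhood.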
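The effect of clipping is easiest to see on a single sample. The sketch below computes the per-sample PPO-Clip term from [66] for a given probability ratio and advantage; the function name and the default value of `epsilon` are assumptions for illustration.

```python
def ppo_clip_term(ratio, advantage, epsilon=0.2):
    """Per-sample PPO-Clip objective: min(r * A, clip(r, 1-eps, 1+eps) * A)."""
    clipped_ratio = max(1.0 - epsilon, min(ratio, 1.0 + epsilon))
    return min(ratio * advantage, clipped_ratio * advantage)


# A large ratio with a positive advantage is capped at (1 + epsilon) * A.
capped_gain = ppo_clip_term(ratio=1.5, advantage=1.0)
# A small ratio with a negative advantage is capped at (1 - epsilon) * A.
capped_loss = ppo_clip_term(ratio=0.5, advantage=-1.0)
```

In both cases the objective stops rewarding further movement of the ratio away from 1, which removes the incentive for excessively large policy updates.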

Thanks to a more elegant and computationally efficient behavior than TRPO, PPO is a particularly interesting solution for deep RL applications. PPO-Clip is arguably the most interesting variant of PPO: it has proven to be remarkably simple and stable and to work consistently well in a wide range of scenarios, outperforming other algorithms, such as Advantage Actor–Critic [66]. It, thus, represents a very solid candidate to consider in our investigation. For simplicity, in the rest of the manuscript, we use the term PPO to refer to the PPO-Clip algorithm.

5. Service Management in the Cloud Continuum

To address service management in the Cloud Continuum, we describe an optimization problem that aims at finding the proper deployment for a pool of services with different importance. Specifically, we need to activate multiple instances of these services on the resources available throughout the Cloud Continuum. Once these instances are activated on a proper device, they will start processing requests. To evaluate the performance of a given deployment configuration, this work adopted the Percentage of Satisfied Requests (PSR), i.e., the ratio between the number of users’ requests that were successfully executed and the total number of requests that were generated in a given time window t.

Specifically, is the set of application components that must be allocated by the infrastructure provider on the Cloud Continuum. Each application component has fixed resource requirements measured as the number of CPU or GPU cores that should be available for processing on servers. For simplicity, this work assumed that resource requirements for each application are immutable. This assumption is consistent with the related literature [69] and also with modern container-based orchestration techniques, which allow users to specify the number of CPU and GPU cores to assign to each container.

We modeled each application instance as an independent M/M/1 First-Come First-Served (FCFS) queue that processes requests in a sequential fashion. In addition, we considered that queues would have a maximum buffer size, i.e., queues can buffer up to a maximum number of requests. As soon as the buffer is full, the queue will start dropping incoming requests.
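With a finite buffer, each instance behaves as an M/M/1/K queue in Kendall notation, for which the probability of dropping a request has a closed form. The sketch below is an analytical illustration only, under the assumption of Poisson arrivals and exponential service times for a single isolated instance; the function names are hypothetical, and the simulator used in this work measures the PSR empirically.

```python
def blocking_probability(arrival_rate, service_rate, capacity):
    """Probability that an arriving request finds an M/M/1/K queue full.

    `capacity` (K) counts every request in the system, i.e., the one being
    served plus those waiting in the buffer.
    """
    rho = arrival_rate / service_rate
    if rho == 1.0:
        return 1.0 / (capacity + 1)
    return (1.0 - rho) * rho ** capacity / (1.0 - rho ** (capacity + 1))


def expected_psr(arrival_rate, service_rate, capacity):
    """Long-run fraction of requests that are accepted (not dropped)."""
    return 1.0 - blocking_probability(arrival_rate, service_rate, capacity)
```

For an overloaded instance (arrival rate above the service rate), a larger buffer barely helps, which is why activating additional instances is the main lever for raising the PSR.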

We define the computing resources with the set of devices , where each has an associated type to describe the server’s characteristics and location. In addition, each device is assigned with resources, where represents the number of computing CPU or GPU cores. Moreover, we assumed that servers at the same computing layer would have an equal computing capacity, while servers at the upper computing layers would have higher capacity. At a given time t, the number of resources requested by applications allocated on servers cannot exceed the servers’ capacity .

5.1. Problem Formulation

In this work, we followed the infrastructure provider perspective, which needs to find a deployment for the application components that maximizes their performance. The infrastructure provider needs to solve the following optimization problem:

It is worth specifying that it is the responsibility of the infrastructure provider to select proper values for the components, which represent the utility that an infrastructure provider gains for running a particular application component of type . Although it is a relatively simple approach, we believe that it could be reasonably effective to treat several service components with different priorities. Specifically, we envision the values to be fixed at a certain time t. To maximize the value of (9), the infrastructure provider needs to improve the current service deployment configuration in a way that the percentage of satisfied requests of the most-important services is prioritized.
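As a rough illustration of how utility values steer the optimization towards the most important services, the sketch below assumes an objective shaped as a weighted sum of per-application PSR values. This form, the function names, and all the numbers are assumptions for illustration and are not taken from (9).

```python
def psr(satisfied, generated):
    """Satisfied requests over generated requests, as a ratio in [0, 1]."""
    return satisfied / generated if generated else 0.0


def weighted_objective(weights, satisfied, generated):
    """Hypothetical objective: sum over application types of weight * PSR."""
    return sum(weights[app] * psr(satisfied[app], generated[app])
               for app in weights)


weights = {"healthcare": 3.0, "audio": 1.0}   # illustrative values only
generated = {"healthcare": 100, "audio": 200}
satisfied = {"healthcare": 90, "audio": 100}
value = weighted_objective(weights, satisfied, generated)
```

Under such an objective, recovering ten percentage points of PSR for a high-weight application is worth three times as much as the same gain for a low-weight one.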

To solve the above service management problem, we define a representation that describes how the instances of applications are deployed on device . Therefore, we propose an array-like service configuration with integer values, which extends the one presented in [70] as follows:

where the value of the element describes the number of application components of type that are allocated on device for processing requests’ application , n is the number of devices, and k the . Finally, to improve the readability of the problem formulation, we show in Table 2 a summary of the notation used.

Table 2.

Summary of notation used for the service management problem.
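The array-like service configuration can be pictured as a flat list of integers with one element per (device, application) pair. The sketch below assumes a row-major layout and uses the 13 devices and 6 applications of the use case in Section 6.1; the helper names and the capacity values are hypothetical.

```python
N_DEVICES = 13   # devices in the use case of Section 6.1
N_APPS = 6       # application types in the use case of Section 6.1


def index_of(device, app, n_apps=N_APPS):
    """Position of the (device, app) pair in the flat deployment array."""
    return device * n_apps + app


def is_feasible(deployment, cores_per_instance, capacity, n_apps=N_APPS):
    """Check that no device hosts more cores than it owns."""
    for device, available in enumerate(capacity):
        used = sum(deployment[index_of(device, app, n_apps)] * cores_per_instance[app]
                   for app in range(n_apps))
        if used > available:
            return False
    return True


deployment = [0] * (N_DEVICES * N_APPS)      # 78 integer elements
deployment[index_of(device=2, app=4)] = 3    # 3 instances of app 4 on device 2
```

An allocation that fails `is_feasible` corresponds to what the rest of the paper calls an infeasible allocation.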

5.2. Markov Decision Process for Reinforcement Learning Algorithms

Concerning the application of PPO and the DQN to the service management problem, we need to formulate a decision-making problem using the Markov Decision Process (MDP) framework [71]. Specifically, we define two slightly different MDP problems, one specific to the DQN and the other one to PPO. The difference between the two MDPs is related to the definition of possible actions, which can be more elaborate for PPO. Both MDPs define a set of states S, each one defined as the above deployment array (10) to represent the allocation of service components on devices , and a reward function R, which is the immediate reward received after performing an action in a state s.

The main difference between the MDPs is related to how the deployment array in (10) is analyzed and, therefore, the actions that the agent can perform. For the DQN MDP, the agent has to analyze each element of the deployment array sequentially. Specifically, at each timestamp , the DQN agent analyzes an element of the deployment array , starting from the beginning to the end. When analyzing , the agent performs an action on the element to modify the active instances for application components on , the value of . To do so, the agent can either (i) do nothing, (ii) activate up to two new instances, or (iii) deactivate up to two instances. Let us note that we encoded these actions as integer values in .

Instead, for PPO, A has the shape of a multi-discrete action space, i.e., a vector that extends the discrete action space over a space of independent discrete actions [72]. Our proposal consists of two vectors of choices: to perform the first choice, the agent picks an element of C, corresponding to the number of active instances for service component on device . Then, for the second choice, the agent modifies the number of active instances to improve the current allocation according to the three actions described for the DQN. Therefore, according to this formulation, the PPO agent does not scan the deployment array in a sequential fashion; instead, it can learn a smarter way to improve the overall value of (9).
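The two action models can be contrasted with a short sketch. The decoding of the five integer actions into changes between −2 and +2, the clamping bounds, and the function names are assumptions for illustration.

```python
DELTAS = (-2, -1, 0, 1, 2)   # assumed decoding of the five integer actions
MAX_INSTANCES = 15           # illustrative upper bound, not part of the MDP


def apply_delta(deployment, position, action):
    """Return a copy of the deployment with one element changed by the action."""
    updated = list(deployment)
    updated[position] = min(max(updated[position] + DELTAS[action], 0),
                            MAX_INSTANCES)
    return updated


def dqn_step(deployment, t, action):
    """DQN-style step: the element to edit is dictated by the time step."""
    return apply_delta(deployment, t % len(deployment), action)


def ppo_step(deployment, action):
    """PPO-style step: a multi-discrete action (position, delta code)."""
    position, delta_code = action
    return apply_delta(deployment, position, delta_code)
```

In the DQN formulation the agent only chooses how to change the element it is currently visiting, whereas in the PPO formulation it also chooses which element to change.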

Concerning the reward definition, we modeled the reward obtained by performing an action a in state S, which leads to a new state , i.e., , as the difference in (9) calculated between two consecutive time steps. Specifically, with this reward function, we want to verify whether a specific action improves the value of (9).

Specifically, and are defined as:

where and are the objective functions calculated, respectively, in states and S and is the penalty quantified in the number of accumulated infeasible allocations during the simulation multiplied by a factor , which we set to . If there is an improvement from one time step to the next one (), the agent receives an additional bonus of as compensation for its profitable move. Otherwise, the action taken is registered as a wrong pass since it does not improve the objective function calculated previously. If the agent accumulates 150 wrong passes, the training episode terminates immediately and the environment is reset to the initial state.
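The reward logic described above can be sketched as follows. The class name, the way the penalty is subtracted from the objective, and the penalty factor and bonus values used in any call are assumptions for illustration; only the limit of 150 wrong passes comes from the text.

```python
class RewardTracker:
    """Hypothetical reward bookkeeping for one training episode."""

    def __init__(self, penalty_factor, bonus, max_wrong_passes=150):
        self.penalty_factor = penalty_factor   # weight of infeasible allocations
        self.bonus = bonus                     # extra reward for an improvement
        self.max_wrong_passes = max_wrong_passes
        self.wrong_passes = 0

    def value(self, objective, infeasible_allocations):
        """Objective function minus the penalty for infeasible allocations."""
        return objective - self.penalty_factor * infeasible_allocations

    def step(self, previous_value, new_value):
        """Return (reward, episode_terminated) for one transition."""
        reward = new_value - previous_value
        if reward > 0:
            reward += self.bonus          # profitable move
        else:
            self.wrong_passes += 1        # register a wrong pass
        return reward, self.wrong_passes >= self.max_wrong_passes
```

Terminating the episode after too many wrong passes keeps the agent from spending a whole episode in a region of the state space where no action improves the deployment.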

5.3. Target Function Formulation for Computational Intelligence Algorithms

CI techniques require their adopters to define a “fitness function” or “evaluation function” to drive the optimization process. One of the advantages of these techniques is that it is possible to use the objective function directly as the fitness function. However, it is common to add additional components (e.g., a penalty component) to guide the optimization process to better solutions.

For this work, we adopted two different configurations for the Computational Intelligence techniques. Specifically, we used a baseline configuration, namely “GA”, “PSO”, “QPSO”, “MPSO”, and “GWO”, which uses as the fitness function the objective function , and another configuration called Enforced ConstrainT (ECT), namely “GA-ECT”, “PSO-ECT”, “QPSO-ECT”, “MPSO-ECT”, and “GWO-ECT”, which instead takes into account the infeasible allocations generated at a given iteration as a penalty in the fitness function :

where is the target function (i.e., the problem objective) and is the penalty component visible in Equation (13). Different from the baseline configuration, the ECT configuration would also minimize the number of infeasible allocations while maximizing the PSR. Finally, let us note that the ECT approach reconstructs the operating conditions of the RL algorithms and forces the metaheuristics to respond to the same challenge, thus enabling a fair comparison with the DQN and PPO.
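The difference between the two configurations can be shown on two candidate solutions. The function names, the linear penalty, and the numbers are assumptions for illustration.

```python
def baseline_fitness(objective_value):
    """Baseline configuration: the fitness is the objective function itself."""
    return objective_value


def ect_fitness(objective_value, infeasible_allocations, penalty_factor):
    """ECT configuration: subtract a penalty for infeasible allocations."""
    return objective_value - penalty_factor * infeasible_allocations


# Candidate A scores higher but relies on 6 infeasible allocations;
# candidate B scores slightly lower and is fully feasible.
a_baseline, b_baseline = baseline_fitness(10.0), baseline_fitness(9.0)
a_ect = ect_fitness(10.0, infeasible_allocations=6, penalty_factor=0.5)
b_ect = ect_fitness(9.0, infeasible_allocations=0, penalty_factor=0.5)
```

The baseline ranks A above B, while the ECT fitness reverses the ranking, which is how the penalty pushes the search towards feasible deployments.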

6. Experiments

In this section, we compare the RL and Computational Intelligence approaches in finding the best resource management solution in our proposed scenario. As mentioned before, we argue that metaheuristic approaches could be very effective tools in exploring the parameters’ search space and providing near-optimal configuration solutions. Furthermore, these approaches are less prone to sample inefficiency. RL methodologies instead provide autonomous learning and adaptation at the expense of a lower sample efficiency. In return, they do a better job in scenarios characterized by high dynamicity and sudden variations, which often degrade the performance of metaheuristics and force them to run a new optimization phase.

To compare the CI and RL approaches for service management, we define a use case for a simulator capable of reenacting services running in a Cloud Continuum scenario. We built this simulator by extending the Phileas simulator [73] (https://github.com/DSG-UniFE/phileas (accessed on 21 September 2023)), a discrete event simulator that we designed to reenact Value-of-Information (VoI)-based services in Fog computing environments. Even though this work does not consider the VoI-based management of services, Phileas provides a convenient platform to evaluate different optimization approaches for service management in the Cloud Continuum. In fact, Phileas allows us to accurately simulate the processing of service requests on top of a plethora of computing devices with heterogeneous resources and to model the communication latency between the parties involved in the simulation, i.e., from users to computing devices and vice versa.

To make this comparison, we evaluated the quality of the best solutions generated with respect to the objective function (9), but also in terms of sample efficiency, i.e., the number of evaluations of the objective function. Finally, we evaluated whether these approaches can work properly when environmental conditions change, e.g., the sudden disconnection of computing resources.

6.1. Use Case

For this comparison, we present a scenario to be simulated in Phileas that describes a smart city that provides several applications to its citizens. Specifically, the use case contains the description of a total of 13 devices distributed among the Cloud Continuum: 10 Edge devices, 2 Fog devices, and a Cloud device with unlimited resources to simulate unlimited scalability. Along with the devices’ description, the use case defines 6 different smart city applications, namely: healthcare, pollution-monitoring, traffic-monitoring, video-processing, safety, and audio-processing applications, whose importance is described by the parameters shown in Table 3.

Table 3.

Weight parameters used for the objective function.

To simulate a workload for the described applications, we reenacted 10 different groups of users located at the Edge that generate requests according to the configuration values illustrated in Table 4. It is worth specifying that the request generation is a stochastic process that we modeled using 10 different random variables with an exponential distribution, i.e., one for each user group. Furthermore, Table 4 also reports the compute latency for each service type. As with the time between request generations, we modeled the processing time of a task by sampling from a random variable with an exponential distribution. Let us also note that the “compute latency” value does not include queuing time, i.e., the time that a request spends waiting before being processed. Finally, the resource occupancy indicates the number of cores that each service instance requires to be allocated on a computing device.

Table 4.

Service description: time between request generation and compute latency modeled as exponential random variables with rate parameter for each service type and resource occupancy.
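Request generation with exponentially distributed inter-arrival times can be sketched with the Python standard library. The per-group rate below is a placeholder chosen so that ten identical groups produce roughly 133 requests per second in total; it is an assumption for illustration and does not reproduce the values of Table 4.

```python
import random


def arrival_times(rate, horizon, rng):
    """Request timestamps with exponential inter-arrival times (rate in req/s)."""
    times, now = [], rng.expovariate(rate)
    while now < horizon:
        times.append(now)
        now += rng.expovariate(rate)
    return times


rng = random.Random(7)
# Ten user groups; the rates are placeholders, not the values of Table 4.
group_rates = [13.3] * 10
requests = [arrival_times(rate, horizon=10.0, rng=rng) for rate in group_rates]
total_rate = sum(len(r) for r in requests) / 10.0
```

The same sampling call, with a different rate, can be used to draw the processing time of each request.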

We set the simulation to be 10 s long, including 1 s of warmup, to simulate the processing of approximately 133 requests per second. Finally, we report the inter-layer communication model in Table 5, where each element is the configuration for a normal random variable that we used to simulate the transfer time between the different layers of the Cloud Continuum.

Table 5.

Communication latency configuration for the Cloud Continuum use case.

Concerning the optimization approaches, we compared the open-source and state-of-the-art DQN and PPO algorithms provided by Stable Baselines3 (https://github.com/DLR-RM/stable-baselines3 (accessed on 21 September 2023)) with the GA, PSO, QPSO, MPSO, and GWO. For the metaheuristics, we used the implementations of a Ruby metaheuristic library called ruby-mhl, which is available on GitHub (https://github.com/mtortonesi/ruby-mhl (accessed on 21 September 2023)).

6.2. Algorithms Configurations

To collect statistically significant results from the evaluation of the CI approaches, we collected the logs of 30 optimization runs. Each optimization run consisted of 50 iterations of the metaheuristic algorithm. This was to ensure the interpretability of the results and to verify whether the use of different seeds can significantly change the outcome of the optimization process. At the end of the 30 optimization runs, we measured the average best value found by each approach to verify which one performed better in terms of the value of the objective function and sample efficiency.

Delving into the configuration details of each metaheuristic, for the GA, we used a population of 128 randomly initialized individuals, an integer genotype space, a mutation operator implemented as an independent perturbation of all the individual’s chromosomes sampled from a geometric distribution random variable with a probability of success of 50%, and an extended intermediate recombination operator controlled by a random variable with a uniform distribution in [74]. Moreover, we set a lower and an upper bound of 0 and 15, respectively. At each iteration, the GA generates the new population using a binary tournament selection mechanism, in which we applied the configured mutation and recombination. For PSO, we set a swarm size of 40 individuals randomly initialized in the float search space and the acceleration coefficients C1 and C2 to 2.05. Then, for QPSO, we configured a swarm of 40 individuals randomly initialized in and a contraction–expansion coefficient of 0.75. These particular parameter configurations have shown very promising results in different analyses made in the past [36,75], so we decided to keep them also for this one.

For GWO, we set the population size to 30 individuals and the same lower and upper bounds of 0 and 15. A different configuration was used for MPSO, where we set the initial number of swarms to 4, each one with 50 individuals, and the maximum number of non-converging swarms to 3. Table 6 summarizes the configuration of each CI algorithm.

Table 6.

CI algorithms configuration for the experiments.

Regarding the DRL algorithms, we implemented two different environments to address the different MDP formulations described above. Specifically, the DQN scans the entire allocation array sequentially twice, for a total of 156 time steps, while PPO uses a maximum of 200 time steps, which correspond to their respective episode lengths. Since the DQN implementation of Stable Baselines3 does not support the multi-discrete action space as PPO does, we chose this training model to ensure the training conditions were as similar as possible for both algorithms. As previously mentioned, during a training episode, the agent modifies how service instances are allocated on top of the Cloud Continuum resources. Concerning the DRL configurations, we followed the guidelines of Stable Baselines3 for one-dimensional observation spaces to define a neural network architecture with 2 fully connected layers with 64 units each and a Rectified Linear Unit (ReLU) activation function for the DQN and PPO. Furthermore, we set for both the DQN and PPO a training period of 100,000 time steps. Then, to collect statistically significant results comparable to the ones of the CI algorithms, we tested the trained models 30 times.

6.3. Results

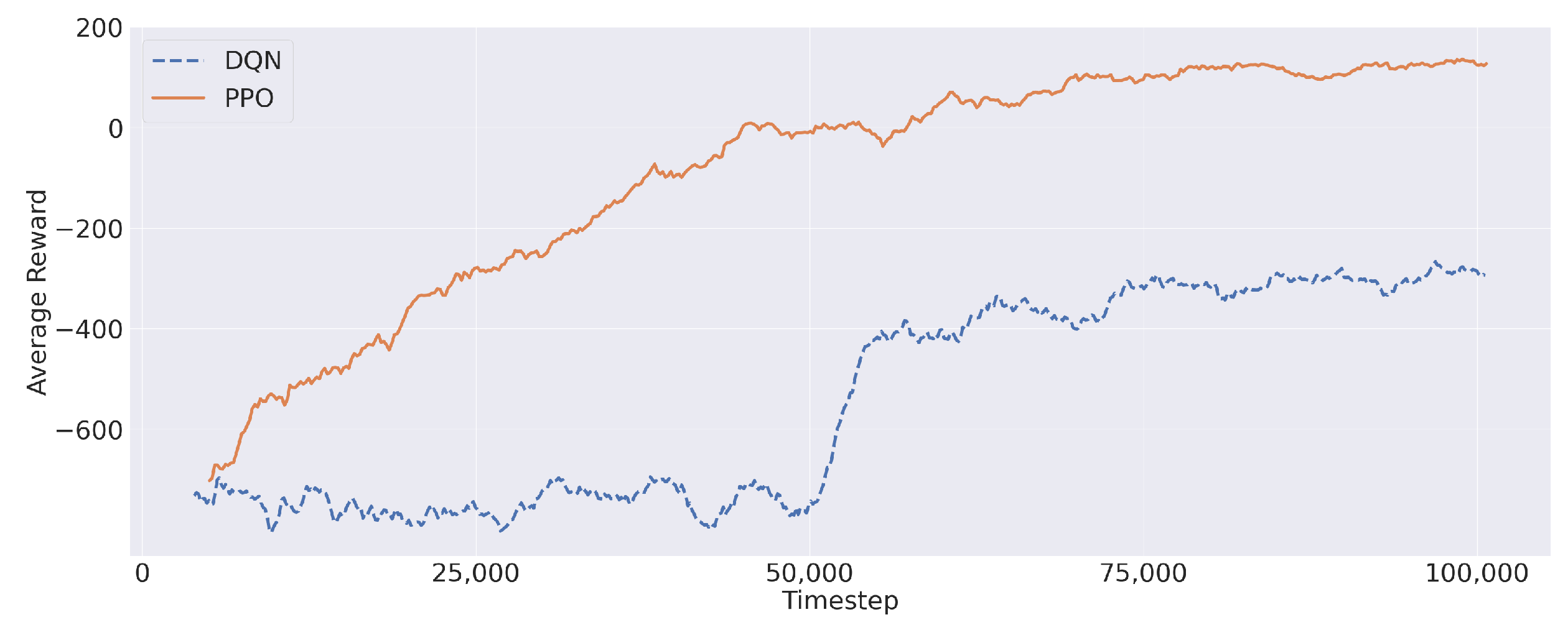

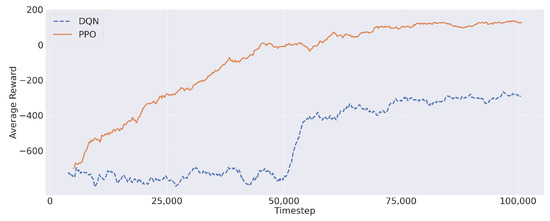

For each optimization algorithm, we took note of the solution that maximized the value of (9), also paying particular attention to the percentage of satisfied requests and service latency. Firstly, let us report the average reward during the training process obtained by PPO and the DQN in Figure 6. Both algorithms showed a progression, in terms of average reward, during the training process. Furthermore, Figure 6 shows that the average reward converged to a stable value before the end of the training process, thus confirming the validity of the reward structure presented in Section 5.2. In this regard, PPO showed a better reward improvement, reaching a maximum near 200. In contrast, the DQN remained stuck in negative values despite a rapid reward increase (around Iteration 50,000) during the training session.

Figure 6.

The DQN and PPO mean reward during the training process.

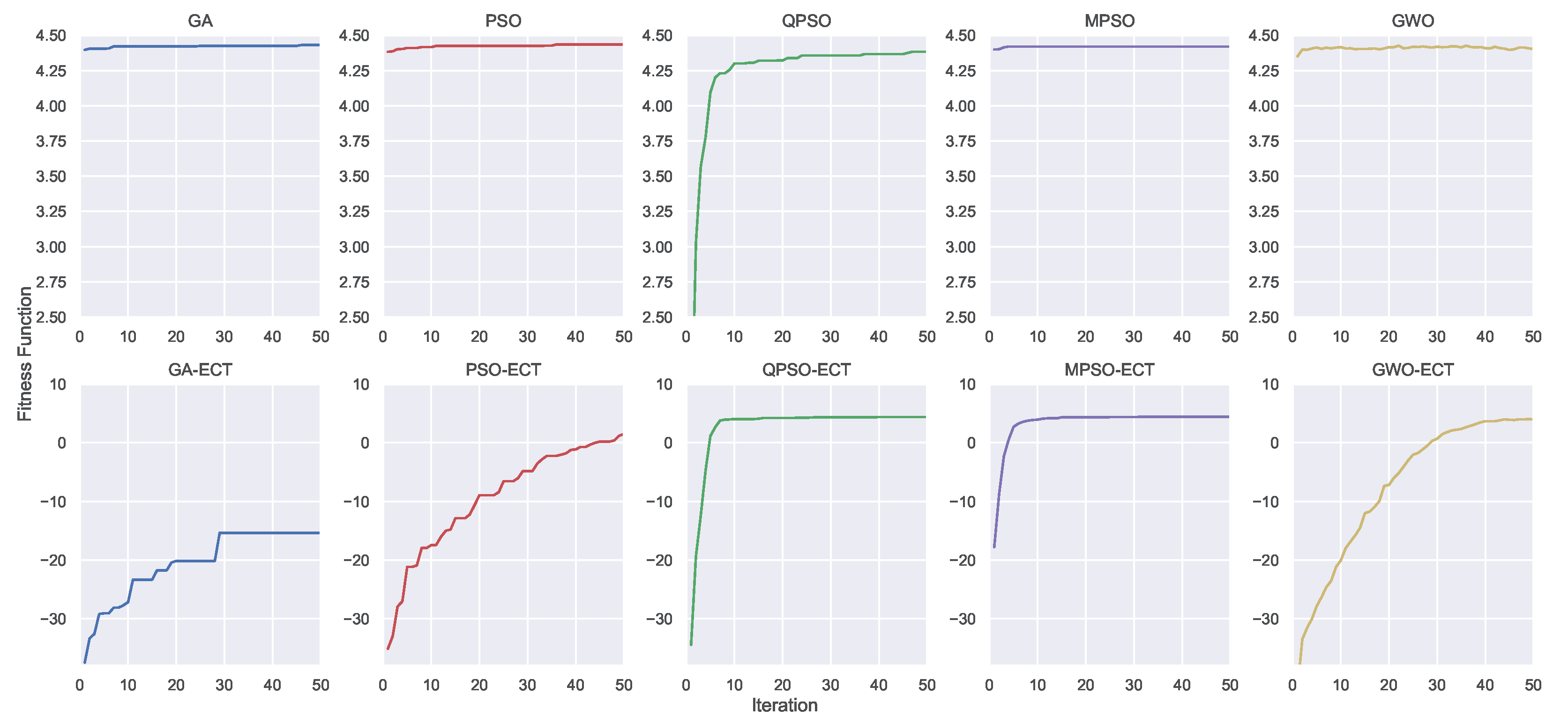

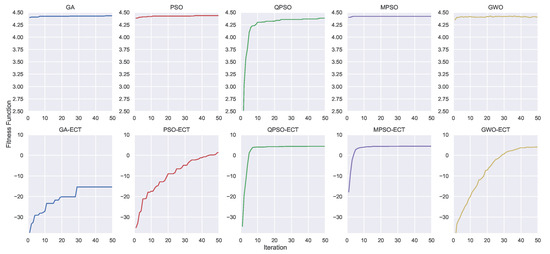

Aside from the DRL training, let us show the convergence process of the CI algorithms in Figure 7, which is an illustrative snapshot of the progress of the optimization process. Specifically, Figure 7 shows the convergence process of one of the 30 optimization runs and visualizes on the top the GA, PSO, QPSO, MPSO, and GWO, while on the bottom their constrained versions the GA-ECT, PSO-ECT, QPSO-ECT, MPSO-ECT, and GWO-ECT. For all metaheuristics displayed in the top row, it is easy to note how they can converge very quickly except for QPSO, which showed an increasing trend throughout all 50 iterations. Instead, considering their ECT variants, the GA, PSO, and GWO struggled a bit in dealing with their imposed constraints. In contrast, QPSO-ECT and MPSO-ECT demonstrated very similar performance compared to the previous case. Notably, these two algorithms did not appear to be negatively affected by the introduction of the penalty factor, and they were still able to find the best solution overall without significant difficulty. In this regard, it is worth noting that MPSO makes use of four large swarms of 50 particles, i.e., at each iteration, the number of evaluations of the objective function was even larger than the GA, which was configured with 128 individuals.

Figure 7.

An illustrative snapshot of the optimization process using CI. The GA, PSO, QPSO, MPSO, GWO (on the top) and their constrained (ECT) versions (on the bottom). The constrained versions take into account a penalty component in the fitness function.

To give a complete summary of the performance of the adopted methodologies (CI and DRL), we report in Table 7 the average and the standard deviation we collected along the 30 optimization runs. More specifically, Table 7 reports the results for the objective function, the number of generations needed to find the best objective function, and the average number of infeasible allocations associated with the best solutions found. Let us specify that, for the CI algorithms, the “Sample efficiency” column in Table 7 represents the average number of samples that were evaluated in order to find the best solution. Specifically, this was approximated by multiplying the average number of generations by the number of samples evaluated at each generation, e.g., the number of samples at each generation for the GA would be 128 (readers can find this information in Table 6). Instead, for the DRL algorithms, the “Sample efficiency” column represents the average number of steps (each step corresponds to one evaluation of the objective function) that the agent took to achieve the best result, in terms of the objective function, during the 30 episodes.

Table 7.

Comparison of the average best solutions and the sample efficiency for the chosen algorithms.
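The approximation used for the CI algorithms amounts to a single multiplication. The sketch below uses the population sizes of Section 6.2 and assumes that MPSO keeps its initial number of swarms; the function name and the generation count in the example are hypothetical.

```python
def samples_to_best(avg_generations, evaluations_per_generation):
    """Approximate number of objective evaluations needed to find the best solution."""
    return avg_generations * evaluations_per_generation


ga_per_generation = 128          # population size of the GA
mpso_per_generation = 4 * 50     # four swarms of 50 particles each

# With the same (hypothetical) number of generations, MPSO evaluates more samples.
ga_samples = samples_to_best(10, ga_per_generation)
mpso_samples = samples_to_best(10, mpso_per_generation)
```

This explains why an algorithm that converges in few generations can still rank poorly on sample efficiency when each generation is expensive.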

With regard to the objective function, Table 7 shows that MPSO was the algorithm that achieved the highest average score for this specific experiment, as opposed to the GA-ECT, which confirmed the poor trend shown in Figure 7. However, the two versions of MPSO required the highest number of samples to achieve high-quality solutions. It is important to note that all ECT algorithms delivered great solutions in terms of balancing the objective function against the number of infeasible allocations. This trend highlights that using a penalty component to guide the optimization process of CI algorithms can provide higher efficiency in exploring the search space.

On the other hand, the RL algorithms exhibited competitive results compared to the CI algorithms in terms of maximizing the objective function. Specifically, PPO was the quickest to reach its best result, with a great objective function alongside a particularly low number of infeasible allocations (the lowest if we exclude the ECT variants). The DQN proved less effective than PPO. Despite requiring the second-fewest generations to find its best solution, all metaheuristic implementations in this analysis outperformed it in both the objective function value and total infeasible allocations. In our opinion, the main reason behind this lies in the implementation of the DQN provided by Stable Baselines3, which does not integrate any prioritized experience replay or improved versions like the Double-DQN. Consequently, it was not capable of dealing with more complex action spaces, such as the multi-discrete action space of PPO. With a sophisticated problem like the one we are dealing with in this manuscript, the Stable Baselines3 DQN implementation appeared to lack the tools to reach the same performance as PPO.

The last step of this experimentation was to analyze the performance of the best solutions presented previously, thus showing how the different solutions perform in terms of the PSR and average latency. Even if the problem formulation does not take into account latency minimization, it is still interesting to analyze how the various algorithms can distribute the service load across the Cloud Continuum and to see which offers the highest-quality solution. It is expected that solutions that make use of Edge and Fog computing devices should be capable of reducing the overall latency. However, given the limited computing resources, there is a need to exploit the Cloud layer for deploying service instances.

Specifically, for each algorithm and each measure, we report both the average and the standard deviation of the best solutions found during the 30 optimization runs, grouped by service, in Table 8. From these data, it is easy to note that most of the metaheuristic approaches can find an allocation that nearly maximizes the PSR of the mission-critical services (identified as healthcare, video, and safety, as mentioned in Table 3) and of the other services as well. In contrast, neither DRL approach reached a PSR as competitive as that of the CI methodologies. This aspect explains why their objective function values in Table 7 ranked among the lowest. Nevertheless, both PPO and the DQN provided very good outcomes in terms of the average latency for each micro-service. Despite certain shortcomings in the PSR of specific services, particularly Audio and Video, PPO consistently outperformed most of the other approaches in terms of latency.
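The per-service aggregation described above can be sketched as follows. The data layout (one dictionary per run, keyed by service name) and the use of the sample standard deviation are assumptions made for illustration; the numbers are placeholders and not measured results.

```python
from statistics import mean, stdev
from typing import Dict, List, Tuple


def summarize_runs(runs: List[Dict[str, float]]) -> Dict[str, Tuple[float, float]]:
    """Return (average, sample standard deviation) of a metric for each service.

    Each element of runs holds the metric of the best solution of one
    optimization run, keyed by service name. At least two runs are required.
    """
    summary = {}
    for service in runs[0]:
        values = [run[service] for run in runs]
        summary[service] = (mean(values), stdev(values))
    return summary


# Placeholder PSR values for three hypothetical runs.
example_runs = [
    {"video": 0.9, "safety": 1.0},
    {"video": 1.0, "safety": 1.0},
    {"video": 0.8, "safety": 1.0},
]
summary = summarize_runs(example_runs)
```

The same function applies unchanged to latency values, since it only assumes one number per service per run.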

Table 8.

Comparison of the average PSR and latency of the best solutions; the standard deviation is enclosed in ().

On the other hand, looking at the average service latency of the other approaches, Table 8 shows that the algorithms performed quite differently. Indeed, the ECT methodologies consistently outperformed their counterparts in the majority of cases. Among them, QPSO-ECT emerged as the most efficient overall in minimizing the average latency, particularly for mission-critical services. Specifically, QPSO-ECT outperformed the GA-ECT by an average of 50%, PSO-ECT by 37%, GWO-ECT by 48%, and MPSO-ECT by 19% for these services. Conversely, both variants of the GA registered the worst performance, with a significant number of micro-services registering a latency between 100 and 200 ms.

However, let us specify that minimizing the average service latency was not within the scope of this manuscript, which instead aimed at maximizing the PSR, as is visible in (9). To conclude, we can suggest that PPO emerged as the best DRL algorithm. It can find a competitive value in terms of the objective function along with the best results in terms of sample efficiency and latency, at the price of a longer training procedure when compared to the CI algorithms. While the ECT variants of the metaheuristics included in this comparison demonstrated great performance as well, they require many more samples to find the best solution.

6.4. What-If Scenario

To verify the effectiveness of DRL algorithms in dynamic environments, we conducted a what-if scenario analysis in which the Cloud Computing layer is suddenly deactivated. Therefore, the service instances that were previously running in the Cloud need to be reallocated on the other available devices, provided that enough resources are available.

To generate a different service component allocation that takes into account the modified availability of computing resources, for the DQN and PPO we leveraged the same models trained on the previous scenario, without any re-training, and tested them for 30 episodes. Instead, for the CI algorithms, we used a cold restart technique, consisting of running another 30 optimization runs, each one with 50 iterations. This was to ensure the statistical significance of these experiments. After the additional optimization runs, all CI algorithms should be capable of finding optimized allocations that consider the different availability of computing resources in the modified scenario, i.e., exploiting only the Edge and Fog layers.
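The cold restart procedure can be sketched as follows. For brevity, a simple stochastic local search stands in for the CI algorithms, and the objective is a toy function; both are assumptions made only to show the structure of 30 independent runs of 50 iterations each, every run starting from a fresh random allocation restricted to the devices that remain available.

```python
import random
from typing import Callable, List, Tuple

Allocation = List[int]  # allocation[i] = index of the device hosting component i


def cold_restart(
    objective: Callable[[Allocation], float],
    n_components: int,
    n_devices: int,
    runs: int = 30,
    iterations: int = 50,
    seed: int = 0,
) -> List[Tuple[float, Allocation]]:
    """Re-optimize from scratch: no state is carried over between runs."""
    rng = random.Random(seed)
    results = []
    for _ in range(runs):
        best = [rng.randrange(n_devices) for _ in range(n_components)]
        best_score = objective(best)
        for _ in range(iterations):
            # Move one randomly chosen component to a randomly chosen device.
            candidate = best.copy()
            candidate[rng.randrange(n_components)] = rng.randrange(n_devices)
            score = objective(candidate)
            if score > best_score:
                best, best_score = candidate, score
        results.append((best_score, best))
    return results


def toy_objective(allocation: Allocation) -> float:
    """Toy stand-in: rewards spreading components over distinct devices."""
    return len(set(allocation)) / len(allocation)


# Hypothetical what-if setting: only 4 Edge/Fog devices remain (indices 0-3).
results = cold_restart(toy_objective, n_components=4, n_devices=4)
```

By contrast, the DRL models are only queried for actions in the modified environment, so no optimization loop of this kind is executed for them.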

As for the previous experiment, we report the statistics collected during the optimization runs to compare the best values of the objective function (9) and the PSR of services in Table 9 and Table 10. Looking at Table 9, it is easy to note how PPO can still find service component allocations that achieve an objective function score close to 4, without re-training the model. This seems to confirm the good performance of PPO for the service management problem discussed in this manuscript. Moreover, PPO can achieve this result after an average of 36 steps, each one corresponding to an evaluation of the objective function. This was the result of the longer training procedures that on-policy DRL algorithms require. On the other hand, as is visible in Table 7, the DQN showed a strong performance degradation, as the best solutions found during the 30 test episodes had an average of 1.60. Therefore, the DQN demonstrated lower adaptability when compared to PPO in solving the problem discussed in this work.

Table 9.

Comparison of the average best solutions and the sample efficiency for the chosen algorithms in the what-if scenario.

Table 10.

Comparison of the average PSR and latency for different services across approaches; the standard deviation is enclosed in ().

With regard to the CI algorithms, the GA was the worst in terms of the average value of the objective function, while all the other algorithms achieved average scores higher than 4.0. As for the average number of infeasible allocations, the constrained versions achieved remarkable results, especially QPSO-ECT and MPSO-ECT, for which the number of infeasible allocations was zero, and GWO-ECT, for which it was close to zero. In contrast, the GA-ECT and PSO-ECT could not reduce the number of infeasible allocations to zero. Overall, MPSO was the algorithm that achieved the highest score, at the cost of a higher number of iterations.

From a sample efficiency perspective, Table 9 shows that QPSO was the CI algorithm that achieved the best result in terms of the number of evaluations of the objective function. At the same time, MPSO-ECT found its best solution with an average of 1493 steps, which was considerably lower than the 7432 steps required by MPSO, which, in turn, found the best average solution overall. Finally, the GA and GA-ECT were the CI algorithms that required the largest number of steps to find their best solutions.
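Sample efficiency in this sense can be measured by wrapping the objective function so that every call is counted and the step at which the best value first appeared is recorded. The class below is a minimal sketch with hypothetical names, not the instrumentation used in our experiments.

```python
from typing import Any, Callable, Optional


class EvaluationCounter:
    """Wrap an objective to count evaluations and track the step of the best value."""

    def __init__(self, objective: Callable[[Any], float]) -> None:
        self._objective = objective
        self.evaluations = 0
        self.best_value: Optional[float] = None
        self.best_step: Optional[int] = None

    def __call__(self, solution: Any) -> float:
        self.evaluations += 1
        value = self._objective(solution)
        # Only a strict improvement updates the record, so best_step is the
        # first evaluation at which the best value was reached.
        if self.best_value is None or value > self.best_value:
            self.best_value = value
            self.best_step = self.evaluations
        return value


# Toy objective with its maximum at x = 3, evaluated on a fixed sequence.
counted = EvaluationCounter(lambda x: -((x - 3) ** 2))
for x in [0, 1, 3, 5, 3]:
    counted(x)
```

Passing the wrapped objective to any optimizer makes the reported number of steps comparable across algorithms, because it counts objective evaluations rather than algorithm-specific iterations.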

Furthermore, looking at Table 10, we can see the reasons for the poor performance of the DQN: four out of six services had a PSR less than 50%. More specifically, the PSR for the safety service was 0%, and the one for healthcare was 5%. Even PPO was not great in terms of the PSR in the what-if scenario. On the other hand, all the CI algorithms, excluding the GA and GA-ECT, recorded PSR values above 90% for all services, thus demonstrating that the cold restart technique was effective at exploring the optimal solutions in the modified search space.

Finally, we can conclude that PPO proved effective in exploiting the experience built during the previous training even in the modified computing scenario. In contrast, the DQN did not seem to be as effective as PPO in reallocating service instances in the what-if experiment. On the other hand, the training of CI algorithms does not create a knowledge base that these algorithms can exploit when the scenario changes remarkably. However, the cold restart technique was effective in re-optimizing the allocation of service instances.

7. Conclusions and Future Works

In this work, we presented a service-management problem in which an infrastructure provider needs to manage a pool of services in the Cloud Continuum by maximizing the percentage of satisfied requests considering the criticality of the different services. We solved this service management problem by comparing the performance of CI algorithms (GA, PSO, QPSO, MPSO, GWO, and their variations) with DRL algorithms (DQN and PPO).

To solve the service-management problem using DRL algorithms, we proposed an MDP in which an agent learns how to distribute service instances throughout the Cloud Continuum by using a custom reward that takes into account the percentage of satisfied requests and a penalty for infeasible allocations. To compare the metaheuristics and DRL algorithms, we ran the comparison in a simulated Cloud Continuum scenario. The experimental results showed that, given an adequate number of training steps, all approaches can find good-quality solutions in terms of the objective function. Furthermore, the adoption of a penalty component in the fitness function of the CI algorithms was an effective methodology to drive the convergence of the CI algorithms and to improve the overall results.

Then, we conducted a what-if experiment in which we simulated the sudden disconnection of the Cloud layer. Here, PPO retained its effectiveness even without performing another training round, presenting consistency with the first experimentation, while the DQN was not capable of achieving good results. Among the CI approaches, both versions of the GA had a significant drop in the maximization of the objective function. On the other hand, all variants of PSO found competitive solutions compared with the DRL algorithms. However, all of them needed a new training phase to achieve that performance, making them more costly and less adaptive in highly dynamic scenarios. In future works, we will aim to improve this study with the introduction of other RL algorithms, such as Multi-Agent Reinforcement Learning (MARL), for coordinating multiple orchestration entities and offline RL. Finally, adding features such as device mobility could add remarkable value to this analysis, making the environment more realistic and challenging for both metaheuristics and RL solutions.

Author Contributions

Conceptualization, F.P. and M.T.; methodology, F.P. and M.T.; software, F.P., M.T. and M.Z.; validation, F.P. and M.T.; formal analysis, F.P.; investigation, F.P.; resources, C.S.; data curation, M.Z.; writing—original draft preparation, F.P., M.T. and M.Z.; writing—review and editing, F.P., C.S. and M.T.; visualization, F.P. and M.Z.; supervision, C.S. and M.T.; project administration, C.S. and M.T. All authors have read and agreed to the published version of the manuscript.

Funding

This work has been partially supported by the Spoke 1 “FutureHPC & BigData” of the Italian Research Center on High-Performance Computing, Big Data and Quantum Computing (ICSC) funded by MUR Missione 4—Next Generation EU (NGEU).

Data Availability Statement

The data presented in this study are contained within the article.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| AI | Artificial Intelligence |
| API | Application Programming Interface |
| CI | Computational Intelligence |
| DOAJ | Directory of Open Access Journals |
| DQN | Deep Q-Network |
| DRL | Deep Reinforcement Learning |
| E-SARSA | Expected SARSA |
| FCFS | First-Come First-Served |
| GA | Genetic Algorithm |
| GA-ECT | Genetic Algorithm-Enforced ConstrainT |
| GWO | Grey-Wolf Optimizer |
| GWO-ECT | Grey-Wolf Optimizer-Enforced ConstrainT |
| IoT | Internet-of-Things |
| LD | Linear Dichroism |
| MARL | Multi-Agent Reinforcement Learning |
| MDP | Markov Decision Process |
| MEC | Multi-Access Edge Computing |
| MPSO | Multi-Swarm PSO |
| MPSO-ECT | MPSO-Enforced ConstrainT |
| PPO | Proximal Policy Optimization |
| PSO | Particle Swarm Optimization |
| PSO-ECT | Particle Swarm Optimization-Enforced ConstrainT |
| PSR | Percentage of Satisfied Requests |
| QPSO | Quantum-inspired Particle Swarm Optimization |
| QPSO-ECT | Quantum-inspired Particle Swarm Optimization-Enforced ConstrainT |
| ReLU | Rectified Linear Unit |
| RL | Reinforcement Learning |
| VoI | Value of Information |
| SAC | Soft Actor–Critic |
| TRPO | Trust Region Policy Optimization |
| SARSA | State–Action–Reward–State–Action |
