Federated Learning and Its Role in the Privacy Preservation of IoT Devices

Abstract

1. Introduction

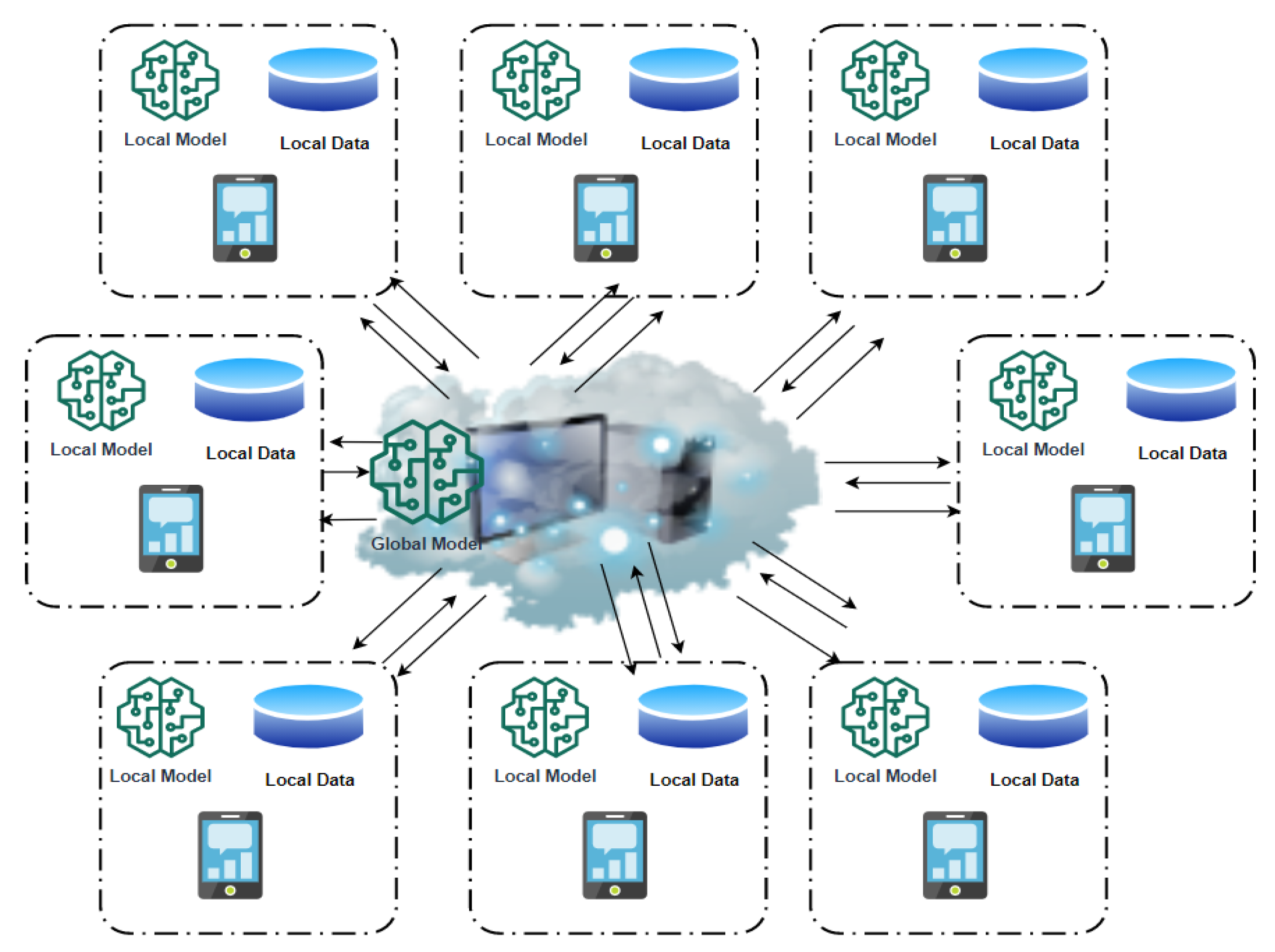

1.1. FL Basics

- Running the training algorithm locally on each device.

- Aggregating the learning results from all devices.

- Updating the global model.

- Sending the improved global model back to the devices and preparing for the next training round.
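The four steps above correspond to one communication round of Federated Averaging (FedAvg) [1]. The sketch below is a minimal illustration under stated assumptions, not the authors' implementation: it assumes a one-feature linear model trained with plain SGD, and the helper names (`local_train`, `aggregate`, `federated_round`) and the synthetic device data are hypothetical. Only model parameters and sample counts leave each simulated device; the raw `(x, y)` pairs stay local.

```python
import random
from typing import List, Tuple

Model = Tuple[float, float]          # (w, b) for the hypothetical model y ~ w*x + b
Dataset = List[Tuple[float, float]]  # private (x, y) pairs held by one device


def local_train(model: Model, data: Dataset, lr: float = 0.05, epochs: int = 5) -> Model:
    """Step 1: run SGD on squared error using only this device's data."""
    w, b = model
    for _ in range(epochs):
        for x, y in data:
            err = (w * x + b) - y
            w -= lr * err * x
            b -= lr * err
    return w, b


def aggregate(updates: List[Tuple[float, float, int]]) -> Model:
    """Steps 2-3: average the device models, weighting each by its sample count."""
    total = sum(n for _, _, n in updates)
    w = sum(w_k * n for w_k, _, n in updates) / total
    b = sum(b_k * n for _, b_k, n in updates) / total
    return w, b


def federated_round(global_model: Model, devices: List[Dataset]) -> Model:
    """One round: broadcast, train locally, aggregate (step 4 is the next broadcast)."""
    updates = []
    for data in devices:
        w_k, b_k = local_train(global_model, data)
        updates.append((w_k, b_k, len(data)))  # only parameters and counts are shared
    return aggregate(updates)


if __name__ == "__main__":
    rng = random.Random(0)
    # Three hypothetical devices of different sizes, all sampling y = 2x + 1.
    devices = [[(x, 2 * x + 1) for x in [rng.random() for _ in range(n)]] for n in (10, 20, 40)]
    model: Model = (0.0, 0.0)
    for _ in range(50):
        model = federated_round(model, devices)
    print(model)  # should approach (2.0, 1.0)
```

Weighting by sample count means a device with more data pulls the global model harder, which is the FedAvg convention; a deployment could substitute other weights without changing the round structure.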

1.2. Roles of FL Applications

1.3. Importance of FL

1.4. Challenges

- i. Heterogeneity between local datasets: each node may have some bias with respect to the general population, and the size of the datasets may vary significantly.

- ii. Temporal heterogeneity: the distribution of each local dataset may vary over time.

- iii. Interoperability of each node's dataset is a prerequisite.

- iv. Each node's dataset may require regular curation.

- v. Hiding the training data may allow attackers to inject backdoors into the global model.

- vi. Without access to global training data, it is harder to identify unwanted biases entering the training, such as those related to age and gender.

- vii. Partial or total loss of model updates due to node failures can affect the global model.
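Challenge i (heterogeneous local data) is commonly reproduced in FL experiments by splitting a labelled dataset across clients with per-class proportions drawn from a symmetric Dirichlet distribution: a small concentration parameter `alpha` concentrates each class on a few clients, while a large one approaches an even split. This is a widely used benchmarking convention rather than a method described in this paper; the function names and parameters below are hypothetical, and only the Python standard library is assumed.

```python
import random
from collections import Counter
from typing import Dict, List


def dirichlet(alpha: float, k: int, rng: random.Random) -> List[float]:
    """Draw one k-dimensional symmetric Dirichlet(alpha) sample via normalized Gamma draws."""
    draws = [rng.gammavariate(alpha, 1.0) for _ in range(k)]
    total = sum(draws)
    return [d / total for d in draws]


def label_skew_split(labels: List[int], num_clients: int, alpha: float,
                     seed: int = 0) -> Dict[int, List[int]]:
    """Assign every sample index to exactly one client with Dirichlet label skew."""
    rng = random.Random(seed)
    by_class: Dict[int, List[int]] = {}
    for idx, y in enumerate(labels):
        by_class.setdefault(y, []).append(idx)

    clients: Dict[int, List[int]] = {c: [] for c in range(num_clients)}
    for idxs in by_class.values():
        rng.shuffle(idxs)
        props = dirichlet(alpha, num_clients, rng)
        start = 0
        for c, p in enumerate(props):
            # The last client takes the remainder so rounding never drops a sample.
            if c == num_clients - 1:
                end = len(idxs)
            else:
                end = min(start + round(p * len(idxs)), len(idxs))
            clients[c].extend(idxs[start:end])
            start = end
    return clients


def class_histogram(labels: List[int], idxs: List[int]) -> Dict[int, int]:
    """Count how many samples of each class a client received."""
    return dict(Counter(labels[i] for i in idxs))


if __name__ == "__main__":
    labels = [i % 4 for i in range(400)]  # toy dataset: 4 balanced classes
    for alpha in (0.5, 100.0):
        parts = label_skew_split(labels, num_clients=5, alpha=alpha, seed=1)
        print(alpha, [class_histogram(labels, idxs) for idxs in parts.values()])
```

With `alpha=0.5`, some clients will typically receive only one or two of the four classes, whereas `alpha=100.0` spreads every class fairly evenly; sweeping `alpha` is one way to measure how sensitive an aggregation scheme is to non-identical local data.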

1.5. Contributions

1.6. Organization of Paper

2. Related Works

2.1. Introduce the Term FL

2.2. Improve the Learning Capabilities

2.3. Privacy-Preserving

2.4. FL Developments

2.5. FL Development Issues

- Distribution of FL

- Surprising FL Collection

- FL security

2.6. FL Applications

- Self-driving vehicles

- Medicine: a digital existence

- Protecting the sensitive data

3. From Federated Database to FL

3.1. Independence

3.2. Differentiation

3.3. Federated Cloud Computing

3.4. Multi-Resource Scheduling

4. Methods

4.1. Asynchronous Communication

4.2. Device Sensing

4.3. Fault Tolerance Process

4.4. Model Heterogeneity

5. Roles of FL in Privacy-Preserving

5.1. Threat Model and Attacks

5.2. Single Attack

5.3. Attacks during Training Phase

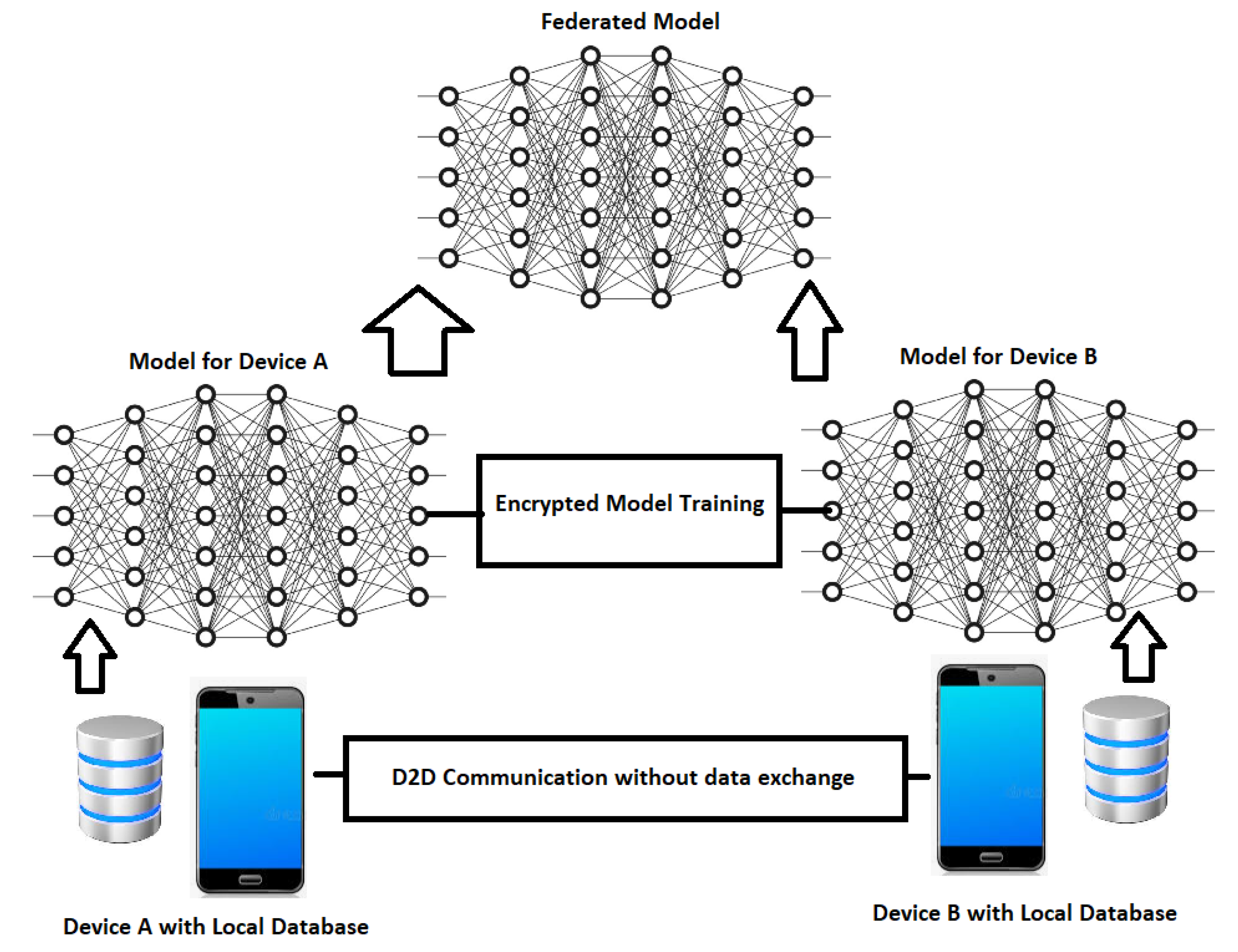

5.4. FL Structure for Effective Interaction and Privacy Safety

5.5. Blockchain FL

5.6. Learning at the Edge with Federated Computing

6. Discussion

7. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- McMahan, H.B.; Moore, E.; Ramage, D.; Hampson, S.; Agüera y Arcas, B. Communication-efficient learning of deep networks from decentralized data. arXiv 2016, arXiv:1602.05629.

- Qi, Y.; Hossain, M.S.; Nie, J.; Li, X. Privacy-preserving blockchain-based Federated Learning for traffic flow prediction. Future Gener. Comput. Syst. 2021, 117, 328–337.

- Xu, G.; Li, H.; Liu, S.; Yang, K.; Lin, X. VerifyNet: Secure and Verifiable Federated Learning. IEEE Trans. Inf. Forensics Secur. 2020, 15, 911–926.

- Zhao, Y.; Zhao, J.; Jiang, L.; Tan, R.; Niyato, D.; Li, Z.; Lyu, L.; Liu, Y. Privacy-Preserving Blockchain-Based Federated Learning for IoT Devices. IEEE Internet Things J. 2020, 8, 1817–1829.

- Qu, X.; Wang, S.; Hu, Q.; Cheng, X. Proof of Federated Learning: A novel energy-recycling consensus algorithm. arXiv 2019, arXiv:1912.11745.

- Yang, Q.; Liu, Y.; Cheng, Y.; Kang, Y.; Chen, T.; Yu, H. Federated learning. Synth. Lect. Artif. Intell. Mach. Learn. 2019, 13, 1–207.

- Lim, W.Y.B.; Luong, N.C.; Hoang, D.T.; Jiao, Y.; Liang, Y.-C.; Yang, Q.; Niyato, D.; Miao, C. Federated Learning in Mobile Edge Networks: A Comprehensive Survey. IEEE Commun. Surv. Tutor. 2020, 22, 2031–2063.

- Google Trends. Available online: https://trends.google.com/trends/explore?date=2016-07-01%202022-08-01&q=%2Fg%2F11hyd49kls (accessed on 1 August 2022).

- Maheswaran, J.; Jackowitz, D.; Zhai, E.; Wolinsky, D.I.; Ford, B. Building privacy-preserving cryptographic credentials from federated online identities. In Proceedings of the Sixth ACM Conference on Data and Application Security and Privacy, New Orleans, LA, USA, 9–11 March 2016; pp. 3–13.

- Alam, T.; Benaida, M. CICS: Cloud–Internet Communication Security Framework for the Internet of Smart Devices. Int. J. Interact. Mob. Technol. (iJIM) 2018, 12, 74–84.

- Zhang, H.; Bosch, J.; Olsson, H.H. Engineering Federated Learning Systems: A Literature Review. In International Conference on Software Business; Springer: Cham, Switzerland, 2020; pp. 210–218.

- Lyu, L.; Yu, H.; Zhao, J.; Yang, Q. Threats to federated learning. In Federated Learning; Springer: Cham, Switzerland, 2020; pp. 3–16.

- Mothukuri, V.; Parizi, R.M.; Pouriyeh, S.; Huang, Y.; Dehghantanha, A.; Srivastava, G. A survey on security and privacy of federated learning. Future Gener. Comput. Syst. 2021, 115, 619–640.

- Hao, M.; Li, H.; Luo, X.; Xu, G.; Yang, H.; Liu, S. Efficient and Privacy-Enhanced Federated Learning for Industrial Artificial Intelligence. IEEE Trans. Ind. Inform. 2019, 16, 6532–6542.

- Wei, K.; Li, J.; Ding, M.; Ma, C.; Yang, H.H.; Farokhi, F.; Jin, S.; Quek, T.Q.S.; Poor, H.V. Federated Learning with Differential Privacy: Algorithms and Performance Analysis. IEEE Trans. Inf. Forensics Secur. 2020, 15, 3454–3469.

- Rodríguez-Barroso, N.; Stipcich, G.; Jiménez-López, D.; Ruiz-Millán, J.A.; Martínez-Cámara, E.; González-Seco, G.; Luzón, M.V.; Veganzones, M.A.; Herrera, F. Federated Learning and Differential Privacy: Software tools analysis, the Sherpa.ai Federated Learning framework and methodological guidelines for preserving data privacy. Inf. Fusion 2020, 64, 270–292.

- Machine Learning Market by Vertical (BFSI, Healthcare and Life Sciences, Retail, Telecommunication, Government and Defense, Manufacturing, Energy and Utilities), Deployment Mode, Service, Organization Size, and Region—Global Forecast to 2022. Available online: https://www.researchandmarkets.com/research/c4gp8n/global_machine?w=4 (accessed on 13 August 2022).

- Qu, Y.; Pokhrel, S.R.; Garg, S.; Gao, L.; Xiang, Y. A Blockchained Federated Learning Framework for Cognitive Computing in Industry 4.0 Networks. IEEE Trans. Ind. Inform. 2020, 17, 2964–2973.

- Isaksson, M.; Norrman, K. Secure Federated Learning in 5G mobile networks. arXiv 2020, arXiv:2004.06700.

- Zhang, C.; Xie, Y.; Bai, H.; Yu, B.; Li, W.; Gao, Y. A survey on federated learning. Knowl. Based Syst. 2021, 216, 106775.

- Li, Q.; Wen, Z.; Wu, Z.; Hu, S.; Wang, N.; He, B. A survey on Federated Learning systems: Vision, hype and reality for data privacy and protection. arXiv 2019, arXiv:1907.09693.

- Aledhari, M.; Razzak, R.; Parizi, R.M.; Saeed, F. Federated Learning: A Survey on Enabling Technologies, Protocols, and Applications. IEEE Access 2020, 8, 140699–140725.

- Kulkarni, V.; Kulkarni, M.; Pant, A. Survey of personalization techniques for Federated Learning. In Proceedings of the 2020 Fourth World Conference on Smart Trends in Systems, Security and Sustainability (WorldS4), London, UK, 27–28 July 2020; IEEE: Pittsburgh, PA, USA, 2020; pp. 794–797.

- Khan, L.U.; Pandey, S.R.; Tran, N.H.; Saad, W.; Han, Z.; Nguyen, M.N.; Hong, C.S. Federated Learning for edge networks: Resource optimization and incentive mechanism. IEEE Commun. Mag. 2020, 58, 88–93.

- Wang, S.; Tuor, T.; Salonidis, T.; Leung, K.K.; Makaya, C.; He, T.; Chan, K. Adaptive Federated Learning in Resource Constrained Edge Computing Systems. IEEE J. Sel. Areas Commun. 2019, 37, 1205–1221.

- Li, L.; Fan, Y.; Lin, K.Y. A Survey on Federated Learning. In Proceedings of the 2020 IEEE 16th International Conference on Control & Automation (ICCA), Singapore, 9–11 October 2020; IEEE: Pittsburgh, PA, USA, 2020; pp. 791–796.

- Zhan, Y.; Zhang, J.; Hong, Z.; Wu, L.; Li, P.; Guo, S. A Survey of Incentive Mechanism Design for Federated Learning. IEEE Trans. Emerg. Top. Comput. 2021, 10, 1.

- Li, L.; Fan, Y.; Tse, M.; Lin, K.-Y. A review of applications in Federated Learning. Comput. Ind. Eng. 2020, 149, 106854.

- Zhu, H.; Zhang, H.; Jin, Y. From Federated Learning to federated neural architecture search: A survey. Complex Intell. Syst. 2021, 7, 639–657.

- Kolias, C.; Kambourakis, G. TermID: A distributed swarm intelligence-based approach for wireless intrusion detection. Int. J. Inf. Secur. 2017, 16, 401–416.

- Pham, Q.-V.; Zeng, M.; Huynh-The, T.; Han, Z.; Hwang, W.-J. Aerial Access Networks for Federated Learning: Applications and Challenges. IEEE Netw. 2022, 36, 159–166.

- Ghimire, B.; Rawat, D.B. Recent Advances on Federated Learning for Cybersecurity and Cybersecurity for Federated Learning for Internet of Things. IEEE Internet Things J. 2022, 9, 8229–8249.

- Zhang, T.; Gao, L.; He, C.; Zhang, M.; Krishnamachari, B.; Avestimehr, A.S. Federated Learning for the Internet of Things: Applications, Challenges, and Opportunities. IEEE Internet Things Mag. 2022, 5, 24–29.

- Liu, Y.; Zhang, X.; Wang, L. Asymmetrically vertical Federated Learning. arXiv 2020, arXiv:2004.07427.

- Junxu, L.; Xiaofeng, M. Survey on privacy-preserving machine learning. J. Comput. Res. Dev. 2020, 57, 346.

- Yuan, B.; Ge, S.; Xing, W. A Federated Learning framework for healthcare IoT devices. arXiv 2020, arXiv:2005.05083.

- Truex, S.; Baracaldo, N.; Anwar, A.; Steinke, T.; Ludwig, H.; Zhang, R.; Zhou, Y. A hybrid approach to privacy-preserving Federated Learning. In Proceedings of the 12th ACM Workshop on Artificial Intelligence and Security, London, UK, 15 November 2019; pp. 1–11.

- Yang, Z.; Chen, M.; Wong, K.K.; Poor, H.V.; Cui, S. Federated Learning for 6G: Applications, Challenges, and Opportunities. arXiv 2021, arXiv:2101.01338.

- Yang, H.H.; Liu, Z.; Quek, T.Q.; Poor, H.V. Scheduling policies for FL in wireless networks. IEEE Trans. Commun. 2019, 68, 317–333.

- Mammen, P.M. Federated Learning: Opportunities and Challenges. arXiv 2021, arXiv:2101.05428.

- Cheng, Y.; Liu, Y.; Chen, T.; Yang, Q. Federated Learning for privacy-preserving AI. Commun. ACM 2020, 63, 33–36.

- Lyu, L.; Xu, X.; Wang, Q.; Yu, H. Collaborative fairness in Federated Learning. In Federated Learning; Springer: Cham, Switzerland, 2020; pp. 189–204.

- Ghosh, A.; Hong, J.; Yin, D.; Ramchandran, K. Robust Federated Learning in a heterogeneous environment. arXiv 2019, arXiv:1906.06629.

- Nishio, T.; Yonetani, R. Client selection for Federated Learning with heterogeneous resources in mobile edge. In Proceedings of the ICC 2019–2019 IEEE International Conference on Communications (ICC), Shanghai, China, 20–24 May 2019; IEEE: Pittsburgh, PA, USA, 2019; pp. 1–7.

- Choudhury, O.; Gkoulalas-Divanis, A.; Salonidis, T.; Sylla, I.; Park, Y.; Hsu, G.; Das, A. Anonymizing data for privacy-preserving Federated Learning. arXiv 2020, arXiv:2002.09096.

- Huang, Y.; Chu, L.; Zhou, Z.; Wang, L.; Liu, J.; Pei, J.; Zhang, Y. Personalized Federated Learning: An attentive collaboration approach. arXiv 2020, arXiv:2007.03797.

- Wang, K.; Mathews, R.; Kiddon, C.; Eichner, H.; Beaufays, F.; Ramage, D. Federated evaluation of on-device personalization. arXiv 2019, arXiv:1910.10252.

- Geyer, R.C.; Klein, T.; Nabi, M. Differentially private Federated Learning: A client level perspective. arXiv 2017, arXiv:1712.07557.

- Bui, D.; Malik, K.; Goetz, J.; Liu, H.; Moon, S.; Kumar, A.; Shin, K.G. Federated user representation learning. arXiv 2019, arXiv:1909.12535.

- Tran, N.H.; Bao, W.; Zomaya, A.; Nguyen, M.N.; Hong, C.S. Federated Learning over wireless networks: Optimization model design and analysis. In Proceedings of the IEEE INFOCOM 2019-IEEE Conference on Computer Communications, Paris, France, 29 April 2019–2 May 2019; IEEE: Pittsburgh, PA, USA, 2019; pp. 1387–1395.

- Peterson, D.; Kanani, P.; Marathe, V.J. Private Federated Learning with domain adaptation. arXiv 2019, arXiv:1912.06733.

- Yu, F.; Rawat, A.S.; Menon, A.; Kumar, S. Federated Learning with only positive labels. In Proceedings of the International Conference on Machine Learning, Virtual Event, 13–18 July 2020; pp. 10946–10956.

- Wang, L.; Xu, S.; Wang, X.; Zhu, Q. Towards Class Imbalance in Federated Learning. arXiv 2020, arXiv:2008.06217.

- Li, A.; Wang, S.; Li, W.; Liu, S.; Zhang, S. Predicting Human Mobility with Federated Learning. In Proceedings of the 28th International Conference on Advances in Geographic Information Systems, Seattle, WA, USA, 13 November 2020; pp. 441–444.

- Guler, B.; Yener, A. Sustainable Federated Learning. arXiv 2021, arXiv:2102.11274.

- Pokhrel, S.R. WITHDRAWN: Towards efficient and reliable Federated Learning using Blockchain for autonomous vehicles. Comput. Netw. 2020, 107431.

- Qian, Y.; Hu, L.; Chen, J.; Guan, X.; Hassan, M.M.; Alelaiwi, A. Privacy-aware service placement for mobile edge computing via Federated Learning. Inf. Sci. 2019, 505, 562–570.

- Hu, L.; Yan, H.; Li, L.; Pan, Z.; Liu, X.; Zhang, Z. MHAT: An efficient model-heterogenous aggregation training scheme for Federated Learning. Inf. Sci. 2021, 560, 493–503.

- Doku, R.; Rawat, D.B.; Liu, C. Towards Federated Learning approach to determine data relevance in big data. In Proceedings of the 2019 IEEE 20th International Conference on Information Reuse and Integration for Data Science (IRI), Los Angeles, CA, USA, 30 July 2019–1 August 2019; IEEE: Pittsburgh, PA, USA, 2019; pp. 184–192.

- Sharghi, H.; Ma, W.; Sartipi, K. Federated service-based authentication provisioning for distributed diagnostic imaging systems. In Proceedings of the 2015 IEEE 28th International Symposium on Computer-Based Medical Systems, Sao Carlos, Brazil, 22–25 June 2015; IEEE: Pittsburgh, PA, USA, 2015; pp. 344–347.

- Ge, S.; Wu, F.; Wu, C.; Qi, T.; Huang, Y.; Xie, X. FedNER: Privacy-preserving medical named entity recognition with Federated Learning. arXiv 2020, arXiv:2003.09288.

- Jiang, Y.; Konečný, J.; Rush, K.; Kannan, S. Improving Federated Learning personalization via model agnostic meta learning. arXiv 2019, arXiv:1909.12488.

- Liu, Y.; Ai, Z.; Sun, S.; Zhang, S.; Liu, Z.; Yu, H. Fedcoin: A peer-to-peer payment system for Federated Learning. In Federated Learning; Springer: Cham, Switzerland, 2020; pp. 125–138.

- Zhan, Y.; Li, P.; Qu, Z.; Zeng, D.; Guo, S. A Learning-Based Incentive Mechanism for Federated Learning. IEEE Internet Things J. 2020, 7, 6360–6368.

- Kang, J.; Xiong, Z.; Niyato, D.; Xie, S.; Zhang, J. Incentive Mechanism for Reliable Federated Learning: A Joint Optimization Approach to Combining Reputation and Contract Theory. IEEE Internet Things J. 2019, 6, 10700–10714.

- Tuor, T.; Wang, S.; Ko, B.J.; Liu, C.; Leung, K.K. Data selection for Federated Learning with relevant and irrelevant data at clients. arXiv 2020, arXiv:2001.08300.

- Chen, F.; Luo, M.; Dong, Z.; Li, Z.; He, X. Federated meta-learning with fast convergence and efficient communication. arXiv 2018, arXiv:1802.07876.

- Zhuo, H.H.; Feng, W.; Xu, Q.; Yang, Q.; Lin, Y. Federated reinforcement learning. arXiv 2019, arXiv:1901.08277.

- Jiao, Y.; Wang, P.; Niyato, D.; Lin, B.; Kim, D.I. Toward an Automated Auction Framework for Wireless Federated Learning Services Market. IEEE Trans. Mob. Comput. 2020, 20, 3034–3048.

- Yao, X.; Huang, T.; Wu, C.; Zhang, R.; Sun, L. Towards faster and better Federated Learning: A feature fusion approach. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; IEEE: Pittsburgh, PA, USA, 2019; pp. 175–179.

- Kim, Y.J.; Hong, C.S. Blockchain-based node-aware dynamic weighting methods for improving Federated Learning performance. In Proceedings of the 2019 20th Asia-Pacific Network Operations and Management Symposium (APNOMS), Matsue, Japan, 18–20 September 2019; IEEE: Pittsburgh, PA, USA, 2019; pp. 1–4.

- Nilsson, A.; Smith, S.; Ulm, G.; Gustavsson, E.; Jirstrand, M. A performance evaluation of Federated Learning algorithms. In Proceedings of the Second Workshop on Distributed Infrastructures for Deep Learning, Rennes, France, 10 December 2018; pp. 1–8.

- Yurochkin, M.; Agarwal, M.; Ghosh, S.; Greenewald, K.; Hoang, N.; Khazaeni, Y. Bayesian nonparametric Federated Learning of neural networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 7252–7261.

- Van Berlo, B.; Saeed, A.; Ozcelebi, T. Towards federated unsupervised representation learning. In Proceedings of the Third ACM International Workshop on Edge Systems, Analytics and Networking, Heraklion, Greece, 27 April 2020; pp. 31–36.

- Chandiramani, K.; Garg, D.; Maheswari, N. Performance Analysis of Distributed and Federated Learning Models on Private Data. Procedia Comput. Sci. 2019, 165, 349–355.

- Sahu, A.K.; Li, T.; Sanjabi, M.; Zaheer, M.; Talwalkar, A.; Smith, V. On the convergence of federated optimization in heterogeneous networks. arXiv 2018, arXiv:1812.06127.

- Sheth, A.P.; Larson, J.A. Federated database systems for managing distributed, heterogeneous, and autonomous databases. ACM Comput. Surv. 1990, 22, 183–236.

- Anelli, V.W.; Deldjoo, Y.; Di Noia, T.; Ferrara, A. Towards effective device-aware Federated Learning. In International Conference of the Italian Association for Artificial Intelligence; Springer: Cham, Switzerland, 2019; pp. 477–491.

- Konečný, J.; McMahan, H.B.; Yu, F.X.; Richtárik, P.; Suresh, A.T.; Bacon, D. Federated Learning: Strategies for improving communication efficiency. arXiv 2016, arXiv:1610.05492.

- Kairouz, P.; McMahan, H.B.; Avent, B.; Bellet, A.; Bennis, M.; Bhagoji, A.N.; Bonawitz, K.; Charles, Z.; Cormode, G.; Zhao, S.; et al. Advances and open problems in Federated Learning. arXiv 2019, arXiv:1912.04977.

- Lu, Y.; Huang, X.; Dai, Y.; Maharjan, S.; Zhang, Y. Blockchain and Federated Learning for Privacy-Preserved Data Sharing in Industrial IoT. IEEE Trans. Ind. Inform. 2019, 16, 4177–4186.

- Lalitha, A.; Kilinc, O.C.; Javidi, T.; Koushanfar, F. Peer-to-peer Federated Learning on graphs. arXiv 2019, arXiv:1901.11173.

- Song, M.; Wang, Z.; Zhang, Z.; Song, Y.; Wang, Q.; Ren, J.; Qi, H. Analyzing User-Level Privacy Attack Against Federated Learning. IEEE J. Sel. Areas Commun. 2020, 38, 2430–2444.

- Liu, Y.; Peng, J.; Kang, J.; Iliyasu, A.M.; Niyato, D.; El-Latif, A.A.A. A Secure Federated Learning Framework for 5G Networks. IEEE Wirel. Commun. 2020, 27, 24–31.

- Lim, H.-K.; Kim, J.-B.; Heo, J.-S.; Han, Y.-H. Federated Reinforcement Learning for Training Control Policies on Multiple IoT Devices. Sensors 2020, 20, 1359.

- Wu, Q.; He, K.; Chen, X. Personalized Federated Learning for Intelligent IoT Applications: A Cloud-Edge Based Framework. IEEE Open J. Comput. Soc. 2020, 1, 35–44.

- Chen, Y.; Ning, Y.; Rangwala, H. Asynchronous online Federated Learning for edge devices. arXiv 2019, arXiv:1911.02134.

- Hardy, S.; Henecka, W.; Ivey-Law, H.; Nock, R.; Patrini, G.; Smith, G.; Thorne, B. Private Federated Learning on vertically partitioned data via entity resolution and additively homomorphic encryption. arXiv 2017, arXiv:1711.10677.

- Cheng, K.; Fan, T.; Jin, Y.; Liu, Y.; Chen, T.; Yang, Q. Secureboost: A lossless Federated Learning framework. arXiv 2019, arXiv:1901.08755.

- Amiri, M.M.; Gündüz, D. Federated Learning over wireless fading channels. IEEE Trans. Wirel. Commun. 2020, 19, 3546–3557.

- Pandey, S.R.; Tran, N.H.; Bennis, M.; Tun, Y.K.; Manzoor, A.; Hong, C.S. A Crowdsourcing Framework for On-Device Federated Learning. IEEE Trans. Wirel. Commun. 2020, 19, 3241–3256.

- Qin, Z.; Li, G.Y.; Ye, H. Federated Learning and wireless communications. arXiv 2020, arXiv:2005.05265.

- Savazzi, S.; Nicoli, M.; Rampa, V. Federated Learning with Cooperating Devices: A Consensus Approach for Massive IoT Networks. IEEE Internet Things J. 2020, 7, 4641–4654.

- Lalitha, A.; Shekhar, S.; Javidi, T.; Koushanfar, F. Fully decentralized Federated Learning. In Proceedings of the Third Workshop on Bayesian Deep Learning (NeurIPS), Montréal, QC, Canada, 7 December 2018.

- Mills, J.; Hu, J.; Min, G. Communication-Efficient Federated Learning for Wireless Edge Intelligence in IoT. IEEE Internet Things J. 2019, 7, 5986–5994.

- Du, Z.; Wu, C.; Yoshinaga, T.; Yau, K.-L.A.; Ji, Y.; Li, J. Federated Learning for Vehicular Internet of Things: Recent Advances and Open Issues. IEEE Open J. Comput. Soc. 2020, 1, 45–61.

- Lu, Y.; Huang, X.; Zhang, K.; Maharjan, S.; Zhang, Y. Blockchain Empowered Asynchronous Federated Learning for Secure Data Sharing in Internet of Vehicles. IEEE Trans. Veh. Technol. 2020, 69, 4298–4311.

- Zhou, C.; Fu, A.; Yu, S.; Yang, W.; Wang, H.; Zhang, Y. Privacy-Preserving Federated Learning in Fog Computing. IEEE Internet Things J. 2020, 7, 10782–10793.

- Jiang, J.C.; Kantarci, B.; Oktug, S.; Soyata, T. Federated Learning in Smart City Sensing: Challenges and Opportunities. Sensors 2020, 20, 6230.

- Alam, T. Federated Learning approach for privacy-preserving on the D2D communication in IoT. In International Conference on Emerging Technologies and Intelligent Systems; Springer: Cham, Switzerland, 2021; pp. 369–380.

- Li, T.; Sahu, A.K.; Talwalkar, A.; Smith, V. Federated Learning: Challenges, methods, and future directions. IEEE Signal Process. Mag. 2020, 37, 50–60.

- Li, Z.; Sharma, V.; Mohanty, S.P. Preserving Data Privacy via Federated Learning: Challenges and Solutions. IEEE Consum. Electron. Mag. 2020, 9, 8–16.

- Nguyen, T.D.; Marchal, S.; Miettinen, M.; Fereidooni, H.; Asokan, N.; Sadeghi, A.R. DÏoT: A federated self-learning anomaly detection system for IoT. In Proceedings of the 2019 IEEE 39th International Conference on Distributed Computing Systems (ICDCS), Dallas, TX, USA, 7–10 July 2019; IEEE: Pittsburgh, PA, USA, 2019; pp. 756–767.

- Wang, S.; Chen, M.; Yin, C.; Saad, W.; Hong, C.S.; Cui, S.; Poor, H.V. Federated Learning for task and resource allocation in wireless high altitude balloon networks. arXiv 2020, arXiv:2003.09375.

- Chen, D.; Xie, L.J.; Kim, B.; Wang, L.; Hong, C.S.; Wang, L.C.; Han, Z. Federated Learning based mobile edge computing for augmented reality applications. In Proceedings of the 2020 International Conference on Computing, Networking and Communications (ICNC), Big Island, HI, USA, 17–20 February 2020; IEEE: Pittsburgh, PA, USA, 2020; pp. 767–773.

- Feng, J.; Rong, C.; Sun, F.; Guo, D.; Li, Y. PMF: A privacy-preserving human mobility prediction framework via Federated Learning. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2020, 4, 1–21.

- Bakopoulou, E.; Tillman, B.; Markopoulou, A. A Federated Learning approach for mobile packet classification. arXiv 2019, arXiv:1907.13113.

- Choudhury, O.; Gkoulalas-Divanis, A.; Salonidis, T.; Sylla, I.; Park, Y.; Hsu, G.; Das, A. Differential privacy-enabled Federated Learning for sensitive health data. arXiv 2019, arXiv:1910.02578.

- Ye, D.; Yu, R.; Pan, M.; Han, Z. Federated Learning in vehicular edge computing: A selective model aggregation approach. IEEE Access 2020, 8, 23920–23935.

- Saputra, Y.M.; Nguyen, D.N.; Hoang, D.T.; Vu, T.X.; Dutkiewicz, E.; Chatzinotas, S. Federated Learning Meets Contract Theory: Energy-Efficient Framework for Electric Vehicle Networks. arXiv 2020, arXiv:2004.01828.

- Liu, Y.; Yu, J.J.Q.; Kang, J.; Niyato, D.; Zhang, S. Privacy-Preserving Traffic Flow Prediction: A Federated Learning Approach. IEEE Internet Things J. 2020, 7, 7751–7763.

- Gursoy, M.E.; Inan, A.; Nergiz, M.E.; Saygin, Y. Privacy-Preserving Learning Analytics: Challenges and Techniques. IEEE Trans. Learn. Technol. 2016, 10, 68–81.

- Rieke, N.; Hancox, J.; Li, W.; Milletarì, F.; Roth, H.R.; Albarqouni, S.; Bakas, S.; Galtier, M.N.; Landman, B.A.; Maier-Hein, K.; et al. The future of digital health with Federated Learning. NPJ Digit. Med. 2020, 3, 1–7.

- Rahman, S.A.; Tout, H.; Ould-Slimane, H.; Mourad, A.; Talhi, C.; Guizani, M. A Survey on Federated Learning: The Journey from Centralized to Distributed On-Site Learning and Beyond. IEEE Internet Things J. 2020, 8, 5476–5497.

- Zheng, Z.; Zhou, Y.; Sun, Y.; Wang, Z.; Liu, B.; Li, K. Federated Learning in Smart Cities: A Comprehensive Survey. arXiv 2021, arXiv:2102.01375.

- Khan, L.U.; Saad, W.; Han, Z.; Hossain, E.; Hong, C.S. Federated Learning for internet of things: Recent advances, taxonomy, and open challenges. arXiv 2020, arXiv:2009.13012.

- Briggs, C.; Fan, Z.; Andras, P. A Review of Privacy-preserving Federated Learning for the Internet-of-Things. arXiv 2020, arXiv:2004.11794.

- Fantacci, R.; Picano, B. Federated Learning framework for mobile edge computing networks. CAAI Trans. Intell. Technol. 2020, 5, 15–21.

- Abdel-Basset, M.; Moustafa, N.; Hawash, H.; Ding, W. Federated Learning for Privacy-Preserving Internet of Things. In Deep Learning Techniques for IoT Security and Privacy; Springer: Cham, Switzerland, 2022; pp. 215–228.

- Alam, T.; Ullah, A.; Benaida, M. Deep reinforcement learning approach for computation offloading in blockchain-enabled communications systems. J. Ambient Intell. Humaniz. Comput. 2022, 1–14.

- Alam, T. Blockchain-Enabled Deep Reinforcement Learning Approach for Performance Optimization on the Internet of Things. Wirel. Pers. Commun. 2022, 1–17.

- Gupta, R.; Alam, T. Survey on Federated-Learning Approaches in Distributed Environment. Wirel. Pers. Commun. 2022, 125, 1631–1652.

| Abbreviation | Meaning |
|---|---|
| FL | Federated Learning |
| IoT | Internet of Things |
| ML | Machine Learning |
| AI | Artificial Intelligence |
| DL | Deep Learning |
| CAGR | Compound annual growth rate |
| BFSI | Banking, financial services, and insurance |
| SBN | Static Batch Normalization |
| FC | Federated cloud |
| HeteroFL | Heterogeneous Federated Learning |
| SGD | Stochastic gradient descent |
| FDBS | Federated database systems |
| PRLC | Pulling Reduction with Local Compensation |
| FedAvg | Federated Averaging |
| BlockFL | Blockchain-based federated learning |
| MEC | Mobile edge computing |
| TCP CUBIC | Transmission Control Protocol with CUBIC congestion control (a cubic-growth variant of Binary Increase Congestion control) |

| Ref. No. | Authors | Year | Title/Topic |
|---|---|---|---|
| [7] | Lim, Wei Yang Bryan, et al. | 2020 | FL in mobile edge networks |
| [8] | Chamikara, M. A. P., et al. | 2021 | Privacy preservation in FL |
| [11] | Zhang, H., et al. | 2020 | Engineering FL systems |
| [13] | Mothukuri, V., et al. | 2021 | Security and privacy in FL |
| [20] | Zhang, C., et al. | 2021 | A survey on FL |
| [21] | Li, Q., et al. | 2019 | FL systems: vision, hype, and reality for data privacy and protection |
| [22] | Aledhari, M., et al. | 2020 | FL enabling technologies, protocols, and applications |
| [23] | Kulkarni, V., et al. | 2020 | Personalization techniques for FL |
| [26] | Li, L., et al. | 2020 | A survey on FL |
| [27] | Zhan, Y., et al. | 2021 | Incentive mechanism design for FL |
| [28] | Li, L., et al. | 2020 | Applications in FL |
| [29] | Zhu, H., et al. | 2021 | From FL to federated neural architecture search |
| [30] | Kolias, C., et al. | 2017 | Wireless intrusion detection |
| [31] | Pham, Q. V., et al. | 2022 | Aerial access networks for FL |
| [32] | Ghimire, B., and Rawat, D. B. | 2022 | FL for cybersecurity |
| [33] | Zhang, T., et al. | 2022 | FL for the Internet of Things |

| Year | Ref | Contribution |
|---|---|---|
| 2016 | [1] | Introduced the term FL |
| 2016 | [77] | Improved global-model performance while reducing communication load |
| 2017 | [48,78] | Studied privacy attacks |
| 2018 | [67,72,76,79,80] | Developed resource allocation strategies |
| 2019 | [5,71,81] | Proof-of-FL consensus and blockchain-based FL |
| 2019 | [14,37] | Improved privacy using FL |
| 2019 | [25,44] | Resource allocation strategies |
| 2019 | [39,43,50,57] | Applied FL to wireless communications at the mobile edge |
| 2019 | [47,49,51] | Applied FL to on-device personalization |
| 2019 | [59,62,82] | Applied FL to data privacy in big data |
| 2020 | [3] | VerifyNet for secure and verifiable FL |
| 2020 | [4,18,56,83] | Privacy-preserving blockchain-based FL |
| 2020 | [19,84] | FL in 5G mobile networks |
| 2020 | [24] | Resource optimization for FL |
| 2020 | [36,61] | FL implementation in healthcare |
| 2020 | [54] | Human mobility prediction using FL |
| 2020 | [63] | FedCoin payment system |
| 2020 | [85,86,87] | Applied FL on IoT devices |
| 2020 | [88] | FL in smart city sensing |
| 2021 | [2] | FL for traffic flow prediction |
| 2021 | [8] | FL-based distributed machine learning |
| 2021 | [38] | FL for 6G |
| 2021 | [58] | MHAT: FL-based model aggregation training scheme |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Alam, T.; Gupta, R. Federated Learning and Its Role in the Privacy Preservation of IoT Devices. Future Internet 2022, 14, 246. https://doi.org/10.3390/fi14090246
