Evaluation of Contextual and Game-Based Training for Phishing Detection

Abstract

1. Introduction

To what extent can the two methods, game-based training and CBMT, support users in accurately differentiating between phishing and legitimate emails?

2. Information Security Awareness Training

3. Materials and Methods

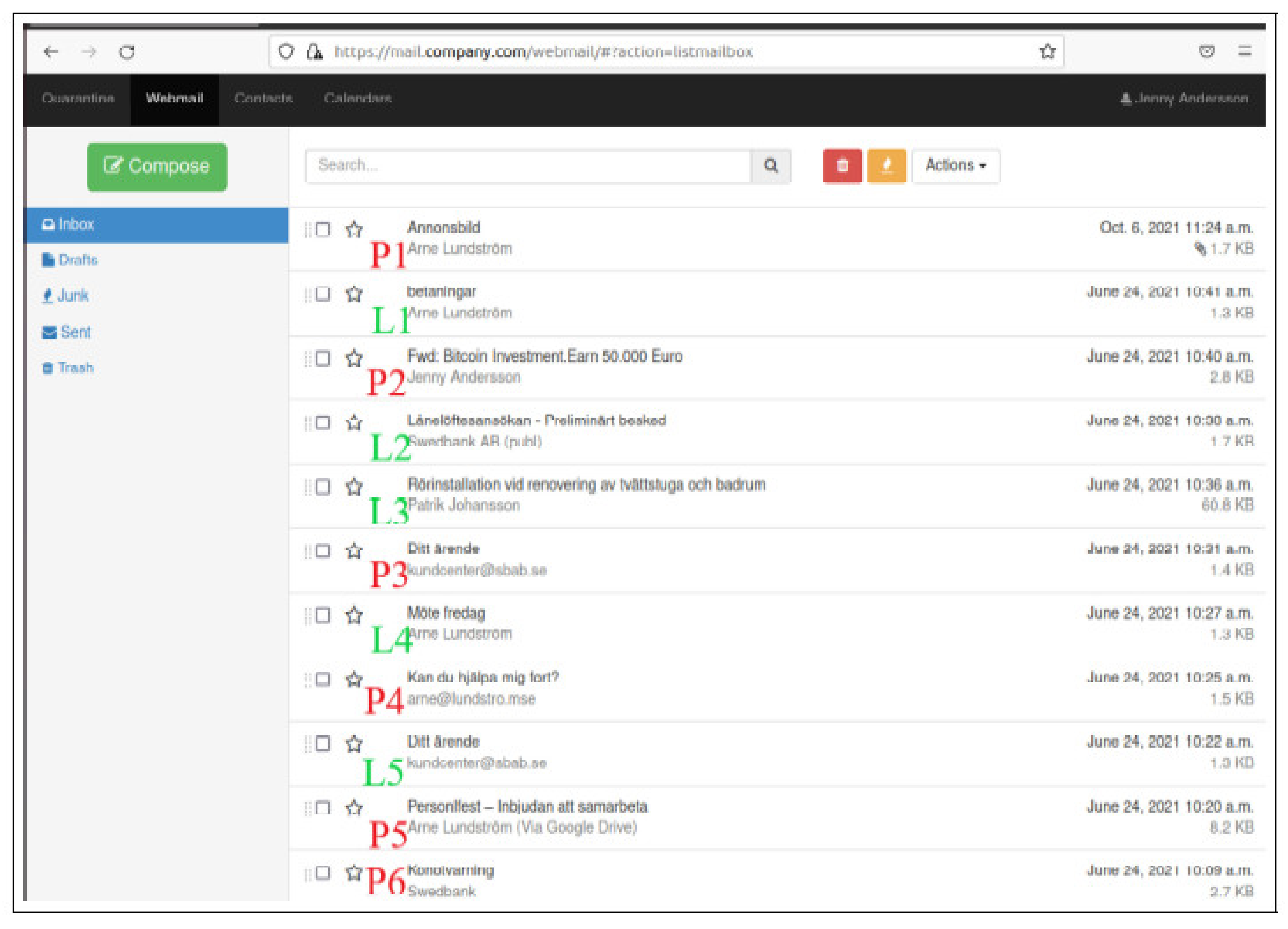

3.1. Experiment Environment

- Legitimate emails from service providers such as Google and banks.

- Phishing emails imitating those sent from hijacked sender accounts.

- Phishing emails from domains made up to look similar to real domains, for instance, lundstro.mse instead of lundstrom.se.
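The lookalike domain in the last item differs from the real one only in where a dot is placed. As a hedged illustration, the Python sketch below flags this specific pattern, assuming the trusted domain is known in advance. The function names are hypothetical, and the check does not catch other lookalike tricks such as swapped or substituted characters.

```python
def sender_domain(address: str) -> str:
    """Return the part of an email address after the last '@', lower-cased."""
    return address.rsplit("@", 1)[-1].lower()


def is_dot_shifted_lookalike(candidate: str, trusted: str) -> bool:
    """True if `candidate` uses exactly the characters of `trusted` but with the
    dots in different places, e.g. 'lundstro.mse' versus 'lundstrom.se'."""
    candidate, trusted = candidate.lower(), trusted.lower()
    return candidate != trusted and candidate.replace(".", "") == trusted.replace(".", "")


print(is_dot_shifted_lookalike(sender_domain("arne@lundstro.mse"), "lundstrom.se"))  # True
print(is_dot_shifted_lookalike(sender_domain("arne@lundstrom.se"), "lundstrom.se"))  # False
```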

Jenny is 34 years old and works as an accountant at a small company (Lundström AB), where her manager is Arne Lundström. She lives with her husband and kids in a small town in Sweden. Your email address is jenny@lundstrom.se. You use the banks SBAB and Swedbank and are interested in investing in Bitcoin. You are about to remodel your home and have applied for loans at several banks to finance it. You shop a fair bit online and are registered at several e-stores without really remembering which ones. You are currently about to remodel your bathroom. Ask the experiment supervisor if you need additional information about Jenny or the workplace during the experiment.

- Incorrect sender address, where the attacker may use an arbitrary incorrect sender address, attempt to create an address that resembles that of the true sender, or use a sender name to hide the actual sender address.

- Malicious attachments, where the attacker attempts to make the recipient download an attachment with malicious content. The attachment may be disguised by a modified file extension.

- Malicious links, which are commonly disguised so that the user needs to hover over them to see the true link target.

- Persuasive tone, where an attacker attempts to pressure the victim to act rapidly.

- Poor spelling and grammar, which may indicate that a text is machine translated or not written professionally.

- The first phishing email came from the manager’s real address and mimicked a spear-phishing attempt, featuring a malicious attachment and a hijacked sender address. The attachment was a zip file named “annons.jpeg.zip” (English: “advertisement.jpeg.zip”). The text body prompted the recipient to open the attached file. In addition to the suspicious file extension, the email signature differed from the signature in other emails sent by the manager.

- The second phishing email came from Jenny’s own address and prompted the recipient to click a link that supposedly led to information about Bitcoin. The email could be identified as phishing by the strange addressing and the fact that the tone in the email was very persuasive.

- The third phishing email appeared to be a request from the bank SBAB. It prompted the user to reply with her bank account number and deposit a small sum of money into another account before a loan request could be processed. It could be identified by improper grammar, an incorrect sender address (that was masked behind a reasonable sender name), and the request itself.

- The fourth phishing email was designed to appear to come from Jenny’s manager. It prompted Jenny to quickly deposit a large sum of money into a bank account. It could be identified by the request itself and by the sender address, which was arne@lundstro.mse instead of arne@lundstrom.se.

- The fifth phishing email mimicked a request from Google Drive. It could be identified by examining the targets of the included links, which led to the address xcom.se instead of a Google domain.

- The sixth phishing email appeared to be from the bank Swedbank and asked the recipient to go to a web page and log in to prove ownership of an account. It could be identified as phishing by examining the link target, by the sender address, which was hidden behind a sender name, and by its several spelling errors.

- The first legitimate email was a request from Jenny’s manager Arne. The request prompted Jenny to review a file on a shared folder.

- The second legitimate email was a notification from a Swedish bank. It prompted Jenny to go to the bank website and log in. It did not contain any link.

- The third legitimate email was an offer from a plumber. Although it contained some spelling errors, it did not prompt Jenny to take any potentially harmful action.

- The fourth legitimate email was a meeting request from Jenny’s manager Arne.

- The fifth legitimate email was a notification from a Swedish bank. It prompted the user to go to the bank website and log in. It did not contain any greeting or any signature with an address.
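Several of the identifiers listed earlier can be approximated mechanically, which helps show what a careful reader is checking for in the emails above. The Python sketch below is a hypothetical illustration only. The address book, the urgency word list and the set of risky extensions are assumptions, and poor spelling is not covered. As the first phishing email shows, a message from a hijacked account passes the sender check, so the other cues still matter.

```python
from dataclasses import dataclass, field
from urllib.parse import urlparse

# Hypothetical address book: display name -> the address that contact really uses.
KNOWN_CONTACTS = {"Arne Lundström": "arne@lundstrom.se"}
# Hypothetical urgency cues; detecting a persuasive tone reliably needs far more than this.
URGENCY_WORDS = ("urgent", "immediately", "quickly", "right away")
# Hypothetical outer extensions that can hide a payload behind a harmless-looking inner one.
RISKY_OUTER_EXTENSIONS = {"zip", "exe", "js", "scr"}


@dataclass
class Email:
    display_name: str
    sender: str
    body: str
    attachments: list[str] = field(default_factory=list)
    links: list[tuple[str, str]] = field(default_factory=list)  # (visible text, href)


def mismatched_sender(mail: Email) -> bool:
    """The display name belongs to a known contact, but the real address differs."""
    expected = KNOWN_CONTACTS.get(mail.display_name)
    return expected is not None and expected != mail.sender.lower()


def double_extension(filename: str) -> bool:
    """Flags names such as 'annons.jpeg.zip' whose last extension is risky."""
    parts = filename.lower().split(".")
    return len(parts) > 2 and parts[-1] in RISKY_OUTER_EXTENSIONS


def mismatched_link(text: str, href: str) -> bool:
    """Visible text that looks like a domain but points to a different host."""
    if " " in text or "." not in text:
        return False  # plain-language text: only hovering reveals the target
    shown = urlparse(text if "://" in text else "https://" + text).hostname
    return shown != urlparse(href).hostname


def flags(mail: Email) -> list[str]:
    """Return the identifiers that this email triggers."""
    found = []
    if mismatched_sender(mail):
        found.append("sender")
    if any(double_extension(name) for name in mail.attachments):
        found.append("attachment")
    if any(mismatched_link(text, href) for text, href in mail.links):
        found.append("link")
    if any(word in mail.body.lower() for word in URGENCY_WORDS):
        found.append("tone")
    return found


lookalike = Email("Arne Lundström", "arne@lundstro.mse", "Please transfer the money quickly.")
hijacked = Email("Arne Lundström", "arne@lundstrom.se", "See the attached file.",
                 attachments=["annons.jpeg.zip"])
print(flags(lookalike))  # ['sender', 'tone']
print(flags(hijacked))   # ['attachment']
```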

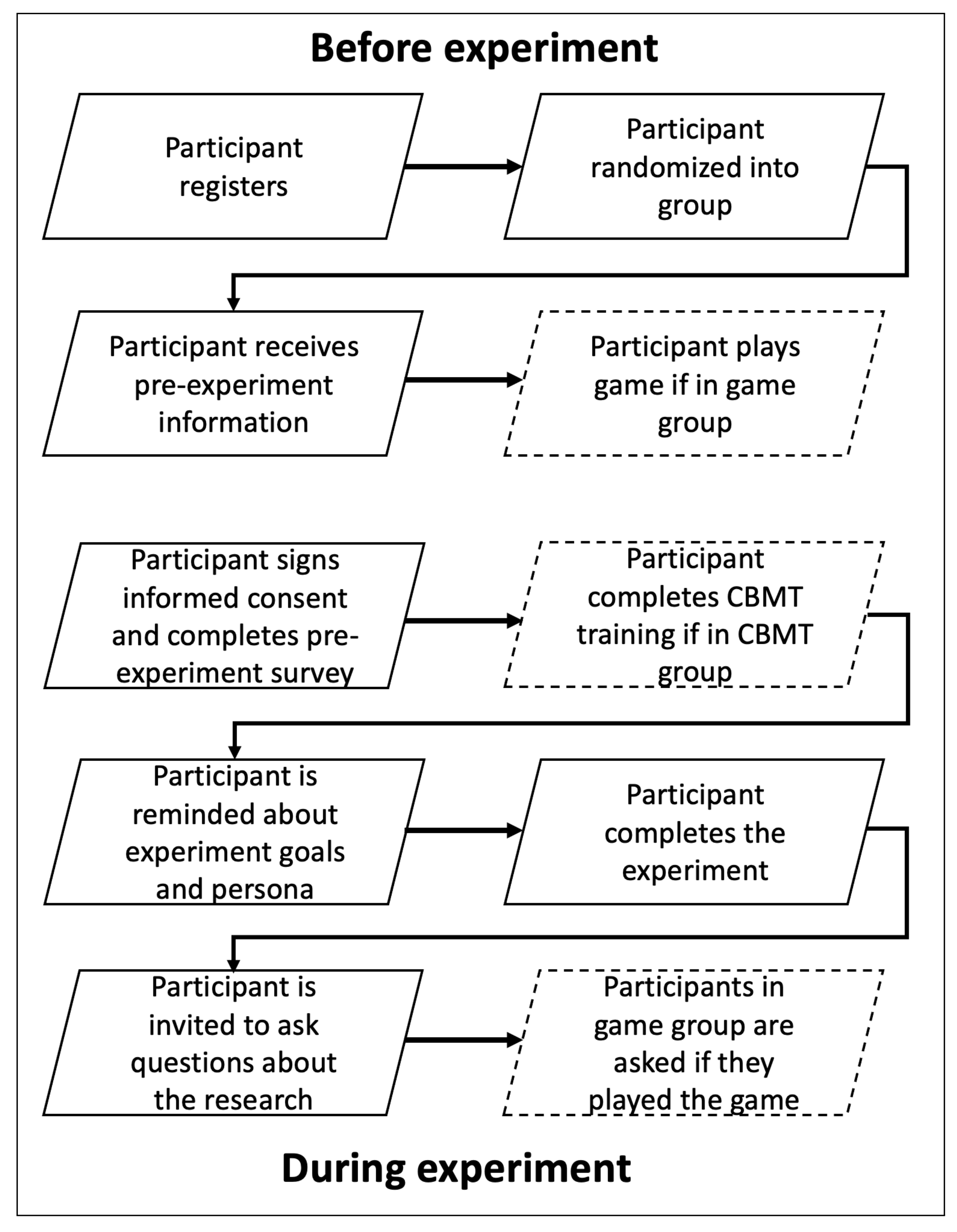

3.2. Participant Recruitment

- Game: Participants in this group were asked to play an educational game before arriving for the experiment. The game is called Jigsaw (https://phishingquiz.withgoogle.com/) (accessed on 6 March 2022) and was developed by Google. It is an example of game-based training implemented as a quiz and was selected for this research because it is readily available to users. It also covers all the phishing identifiers described previously. Jigsaw takes about five minutes to complete.

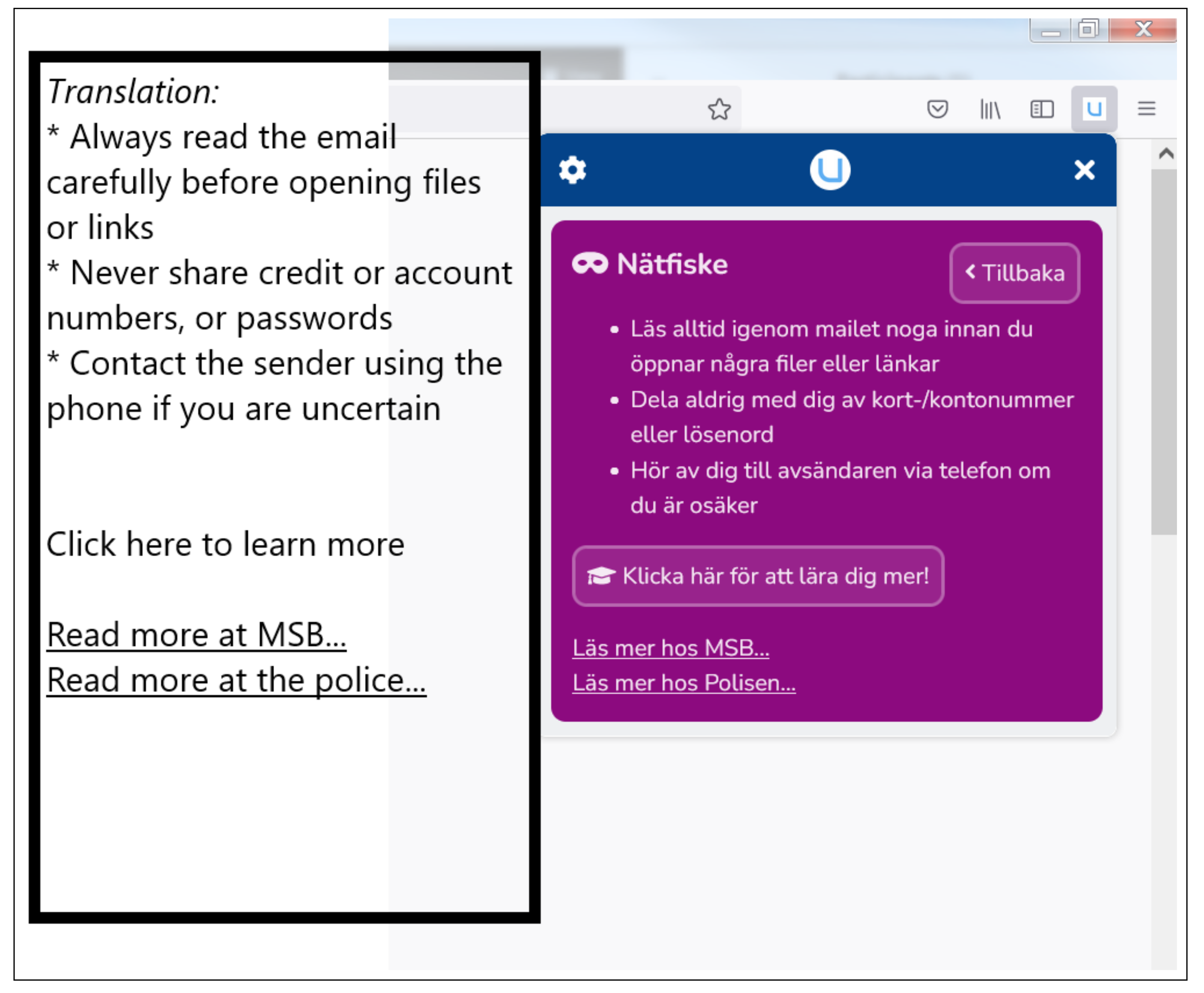

- CBMT: Participants in this group received computerized training developed by the research team according to the specifications of CBMT. The training consisted of written information that appeared when participants opened Jenny’s inbox, as demonstrated in Figure 3. Participants were presented with a few tips and prompted to take part in further training, which opened a text-based slide show in a separate window. The training takes about five minutes to complete.

- CONTROL: This group completed the experiment without any intervention.
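In the CBMT condition, training is delivered at the moment the user enters a relevant context, here the opening of Jenny’s inbox. The Python sketch below illustrates that event-driven pattern. It is not the research team’s implementation. The class, the event name `inbox_opened`, the tip text and the rule that each participant sees the tips only once are all hypothetical.

```python
from typing import Callable


class ContextTrigger:
    """Hypothetical sketch: show micro-training when a user first enters a context."""

    def __init__(self, context: str, show: Callable[[str], None], tips: str) -> None:
        self.context = context
        self.show = show              # e.g. a function that renders a pop-up
        self.tips = tips
        self.already_shown: set[str] = set()

    def on_event(self, user: str, event: str) -> bool:
        """Show the tips if `event` is the target context; return whether they were shown."""
        if event != self.context or user in self.already_shown:
            return False
        self.already_shown.add(user)  # assumption: show once per participant
        self.show(self.tips)
        return True


shown: list[str] = []
trigger = ContextTrigger("inbox_opened", shown.append, "Hover over links before clicking.")
trigger.on_event("participant_01", "inbox_opened")  # tips shown
trigger.on_event("participant_01", "inbox_opened")  # not shown again
print(shown)
```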

3.3. Experiment Procedure

3.4. Collected Variables

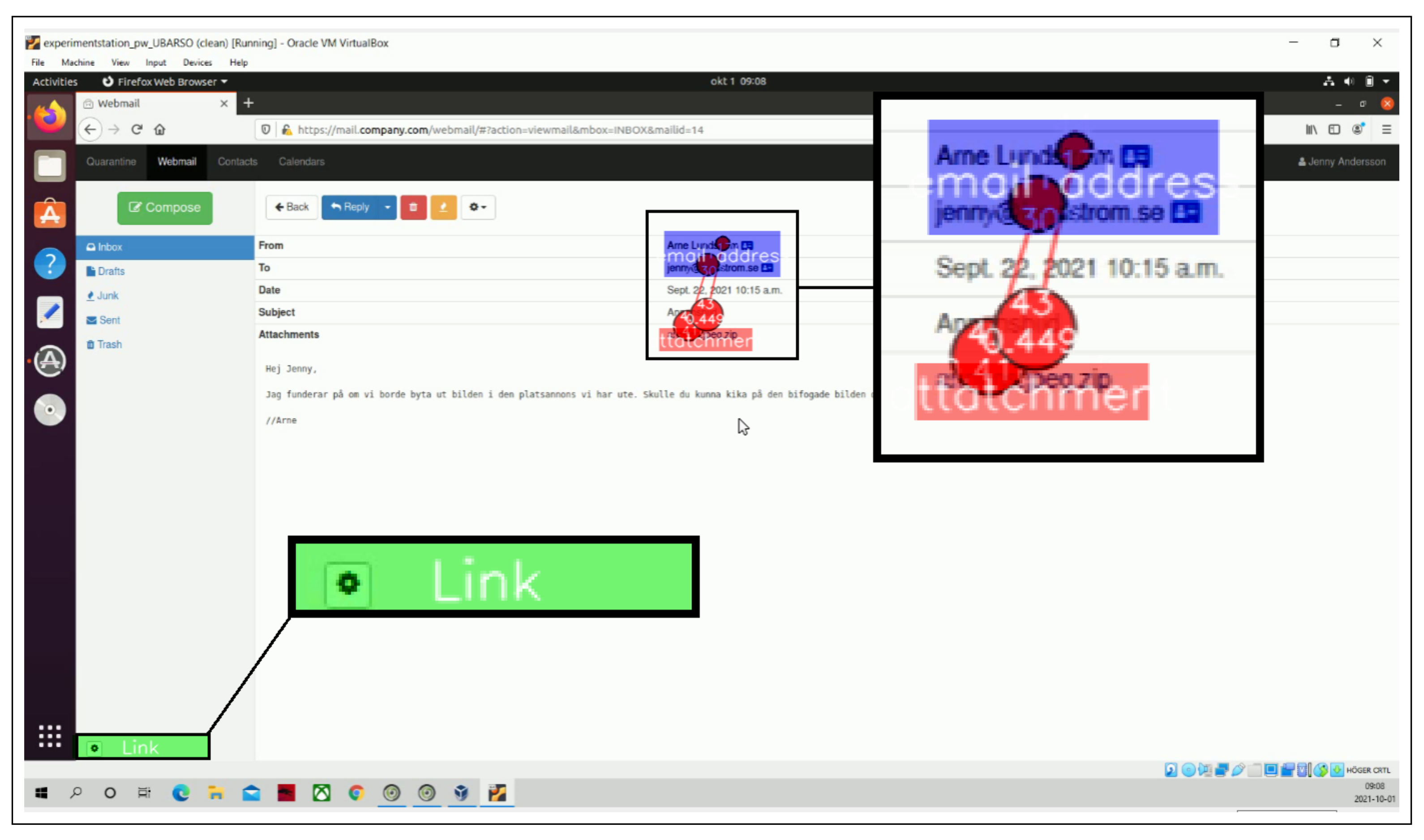

- Evaluated the sender address by hovering over the displayed name to see the real sender address.

- Evaluated attachments by acknowledging their existence and describing them as suspicious or legitimate.

- Evaluated links by hovering over them or verifying the link destination in some other way.

- Evaluated if the tone in the email was suspiciously persuasive.

- Evaluated if spelling and grammar made the email suspicious.

- Address, which covered the area holding the sender and recipient addresses.

- Attachment, which covered the area where email attachments are visible.

- Link, which covered the area where the true link destination appears.
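The results report behavior_tracked out of 3, which matches the three areas of interest (AOIs) above. The Python sketch below assumes that this variable counts how many of the three AOIs received at least one eye-tracking fixation. That is an interpretation, not something the text states. The rectangle coordinates and the fixation points are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AOI:
    """Rectangular area of interest in normalized screen coordinates (0..1)."""
    name: str
    x0: float
    y0: float
    x1: float
    y1: float

    def contains(self, x: float, y: float) -> bool:
        return self.x0 <= x <= self.x1 and self.y0 <= y <= self.y1


def aois_visited(fixations: list[tuple[float, float]], aois: list[AOI]) -> set[str]:
    """Names of the AOIs that received at least one fixation."""
    return {aoi.name for aoi in aois for x, y in fixations if aoi.contains(x, y)}


# Hypothetical layout: address header, attachment bar, status bar showing link targets.
LAYOUT = [
    AOI("address", 0.0, 0.00, 1.0, 0.10),
    AOI("attachment", 0.0, 0.12, 1.0, 0.18),
    AOI("link", 0.0, 0.95, 1.0, 1.00),
]
fixations = [(0.40, 0.05), (0.50, 0.50), (0.10, 0.97)]
print(sorted(aois_visited(fixations, LAYOUT)))  # ['address', 'link']
```

Under this assumption, the participant in the example would receive a behavior_tracked value of `len(aois_visited(fixations, LAYOUT))`, which is 2.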

3.5. Data Analysis

4. Results

4.1. Data Overview

4.2. The Effect of Training

5. Discussion

5.1. Limitations

5.2. Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ISAT | Information Security Awareness Training |
| CBMT | Context-Based Micro-Training |
| SETA | Security Education, Training, and Awareness |
| KAB | Knowledge, Attitude, and Behaviour |
| SPSS | Statistical Package for the Social Sciences |
| ANOVA | Analysis of variance |


| Method | Description |
|---|---|
| Classroom training | Typically provided on-site as a lecture attended at a specific point in time. |
| Broadcasted online training | Typically brief training broadcast to large user groups via e-mail or social networks. |
| E-learning | ISAT typically delivered through an online platform that users can access on demand. |
| Simulated or contextual training | Training delivered to users during a real or simulated event. |
| Gamified training | ISAT material developed using gamification. |

| Variable | Group | Mean | Median | Normal Distribution |
|---|---|---|---|---|
| SCORE (out of 11) | Control (n = 11) | 8.82 | 9 | YES |
| | CBMT (n = 14) | 10 | 10 | NO |
| | Game (n = 14) | 8.86 (9.09) | 9 (9) | NO |
| | Total (n = 39) | 9.26 | 9 | NO |
| behavior_manual (out of 5) | Control (n = 11) | 3 | 3 | NO |
| | CBMT (n = 14) | 4.57 | 5 | NO |
| | Game (n = 14) | 3.64 (3.82) | 3.5 (4) | NO |
| | Total (n = 39) | 3.79 | 4 | NO |
| behavior_tracked (out of 3) | Control (n = 10) | 1.9 | 2 | NO |
| | CBMT (n = 12) | 2.5 | 3 | NO |
| | Game (n = 14) | 2.29 (2.55) | 2 (3) | NO |
| | Total (n = 36) | 2.25 | 2 | NO |
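The Total means in this table equal the group means weighted by group size, using the unparenthesized Game values. Two of the three variables are worked through below:

```latex
\begin{aligned}
\bar{x}_{\text{Total}} &= \frac{\sum_g n_g \bar{x}_g}{\sum_g n_g}\\
\text{SCORE:}\quad \bar{x}_{\text{Total}} &= \frac{11 \cdot 8.82 + 14 \cdot 10 + 14 \cdot 8.86}{39} = \frac{361.06}{39} \approx 9.26\\
\text{behavior\_tracked:}\quad \bar{x}_{\text{Total}} &= \frac{10 \cdot 1.9 + 12 \cdot 2.5 + 14 \cdot 2.29}{36} = \frac{81.06}{36} \approx 2.25
\end{aligned}
```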

| Variable | Group | Perfect Scores |
|---|---|---|
| SCORE | Control (n = 11) | 0% |
| | CBMT (n = 14) | 21.4% |
| | Game (n = 14) | 0% (0%) |
| | Total (n = 39) | 7.7% (8.3%) |
| behavior_manual | Control (n = 11) | 0% |
| | CBMT (n = 14) | 64.3% |
| | Game (n = 14) | 14.3% (18.2%) |
| | Total (n = 39) | 28.2% (30.6%) |
| behavior_tracked | Control (n = 10) | 9.1% |
| | CBMT (n = 12) | 57.1% |
| | Game (n = 14) | 42.9% (54.5%) |
| | Total (n = 36) | 38.5% (45.5%) |
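A perfect score is the share of participants who reached the maximum value of a variable. For example, 21.4% for CBMT on SCORE corresponds to 3 of 14 participants. The Python sketch below assumes that SCORE counts correctly classified emails out of the eleven used (six phishing, five legitimate). The email labels are hypothetical, not identifiers from the study.

```python
# Ground truth for the eleven emails in the experiment: True = phishing.
# The keys are hypothetical labels, not identifiers used in the study.
GROUND_TRUTH = {f"phish_{i}": True for i in range(1, 7)}
GROUND_TRUTH.update({f"legit_{i}": False for i in range(1, 6)})


def score(answers: dict[str, bool]) -> int:
    """Number of emails classified correctly; unanswered emails count as wrong."""
    return sum(answers.get(mail) == is_phishing for mail, is_phishing in GROUND_TRUTH.items())


def perfect_share(scores: list[int], maximum: int = len(GROUND_TRUTH)) -> float:
    """Percentage of participants who reached the maximum score."""
    return 100 * sum(s == maximum for s in scores) / len(scores)


everything_phishing = {mail: True for mail in GROUND_TRUTH}
print(score(everything_phishing))                 # 6
print(round(perfect_share([11, 10, 11, 9]), 1))  # 50.0
```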

| Variable | Kruskal–Wallis H | p-Value |
|---|---|---|
| SCORE | 13.965 (12.531) | 0.001 (0.002) |
| behavior_manual | 16.270 (15.434) | 0.000 (0.000) |
| behavior_tracked | 5.569 (7.332) | 0.062 (0.026) |
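Most variables were not normally distributed, which is why a rank-based test such as Kruskal–Wallis fits here. The abbreviations suggest that the analysis was run in SPSS. The stdlib Python sketch below computes the standard tie-corrected H statistic for illustration only and is not the authors' code. With three groups the test has 2 degrees of freedom, and the chi-square survival function then reduces to exp(−H/2). The reported p-values agree with this, for example exp(−13.965/2) ≈ 0.001 and exp(−5.569/2) ≈ 0.062.

```python
import math
from collections import Counter


def average_ranks(values: list[float]) -> list[float]:
    """Rank values from 1 to N, giving tied values the mean of the ranks they share."""
    first_position, counts = {}, Counter(values)
    for position, value in enumerate(sorted(values), start=1):
        first_position.setdefault(value, position)
    return [first_position[v] + (counts[v] - 1) / 2 for v in values]


def kruskal_wallis_h(*groups: list[float]) -> float:
    """Tie-corrected Kruskal-Wallis H statistic (undefined if all values are equal)."""
    pooled = [v for group in groups for v in group]
    n = len(pooled)
    ranks = average_ranks(pooled)
    rank_term, start = 0.0, 0
    for group in groups:
        rank_sum = sum(ranks[start:start + len(group)])
        rank_term += rank_sum ** 2 / len(group)
        start += len(group)
    h = 12 / (n * (n + 1)) * rank_term - 3 * (n + 1)
    ties = sum(t ** 3 - t for t in Counter(pooled).values())
    return h / (1 - ties / (n ** 3 - n))


def p_value_three_groups(h: float) -> float:
    """Chi-square approximation; exp(-H/2) is exact only for 2 degrees of freedom."""
    return math.exp(-h / 2)


h = kruskal_wallis_h([1, 2, 3], [4, 5, 6], [7, 8, 9])
print(round(h, 3), round(p_value_three_groups(h), 4))  # 7.2 0.0273
```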

| Variable | Groups | p-Value |
|---|---|---|
| SCORE | Control-Game | 1.000 (1.000) |
| | Control-CBMT | 0.005 (0.003) |
| | Game-CBMT | 0.003 (0.023) |
| behavior_manual | Control-Game | 0.502 (0.277) |
| | Control-CBMT | 0.000 (0.000) |
| | Game-CBMT | 0.021 (0.102) |
| behavior_tracked | Control-Game | X (0.083) |
| | Control-CBMT | X (0.036) |
| | Game-CBMT | X (1.000) |
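The table does not name the post hoc procedure behind the pairwise p-values. However, values of exactly 1.000 are typical of Bonferroni-adjusted p-values capped at 1. The Python sketch below shows one common follow-up to Kruskal–Wallis: Dunn's test with tie correction and a Bonferroni adjustment. It assumes, without confirmation from the source, that the reported comparisons resemble this procedure. The group data in the example are invented.

```python
import math
from collections import Counter
from itertools import combinations


def average_ranks(values: list[float]) -> list[float]:
    """Rank values from 1 to N, giving tied values the mean of the ranks they share."""
    first_position, counts = {}, Counter(values)
    for position, value in enumerate(sorted(values), start=1):
        first_position.setdefault(value, position)
    return [first_position[v] + (counts[v] - 1) / 2 for v in values]


def dunn_bonferroni(groups: dict[str, list[float]]) -> dict[tuple[str, str], float]:
    """Bonferroni-adjusted two-sided p-values of Dunn's test for every pair of groups."""
    names = list(groups)
    pooled = [v for name in names for v in groups[name]]
    n = len(pooled)
    ranks = average_ranks(pooled)
    mean_rank, start = {}, 0
    for name in names:
        size = len(groups[name])
        mean_rank[name] = sum(ranks[start:start + size]) / size
        start += size
    ties = sum(t ** 3 - t for t in Counter(pooled).values())
    variance = n * (n + 1) / 12 - ties / (12 * (n - 1))  # tie-corrected rank variance
    pairs = list(combinations(names, 2))
    adjusted = {}
    for a, b in pairs:
        se = math.sqrt(variance * (1 / len(groups[a]) + 1 / len(groups[b])))
        z = (mean_rank[a] - mean_rank[b]) / se
        p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal p-value
        adjusted[(a, b)] = min(1.0, p * len(pairs))  # Bonferroni, capped at 1
    return adjusted


result = dunn_bonferroni({"A": [1, 2, 3], "B": [4, 5, 6], "C": [7, 8, 9]})
for pair, p in result.items():
    print(pair, round(p, 3))
```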

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kävrestad, J.; Hagberg, A.; Nohlberg, M.; Rambusch, J.; Roos, R.; Furnell, S. Evaluation of Contextual and Game-Based Training for Phishing Detection. Future Internet 2022, 14, 104. https://doi.org/10.3390/fi14040104