Abstract

Misinformation posting and spreading on social media is ignited by personal decisions on the truthfulness of news, which may cause wide and deep cascades at a large scale in a matter of minutes. When individuals are exposed to information, they usually take a few seconds to decide if the content (or the source) is reliable and whether to share it. Although the opportunity to verify the rumour is often just one click away, many users fail to make a correct evaluation. We studied this phenomenon with a web-based questionnaire that was completed by 7298 different volunteers, where the participants were asked to mark 20 news items as true or false. Interestingly, false news is correctly identified more frequently than true news, but showing the full article instead of just the title, surprisingly, does not increase general accuracy. Additionally, displaying the original source of the news may contribute to misleading the user in some cases, while the genuine wisdom of the crowd can positively assist individuals’ ability to classify news correctly. Finally, participants whose browsing activity suggests a parallel fact-checking activity show better performance and declare themselves as young adults. This work highlights a series of pitfalls that can influence human annotators when building false news datasets, which in turn can fuel the research on automated fake news detection; furthermore, these findings challenge the common rationale of AI that suggests users read the full article before re-sharing.

1. Introduction

Information disorder [1,2], a general term that includes different ways of describing how the information environment is polluted, is a reason of concern for many national and international institutions; in fact, incorrect beliefs propagated by malicious actors are supposed to have important consequences on politics, finance and public health. The European Commission, for instance, called out a mix of pervasive and polluted information that has been spread online during the COVID-19 pandemic as an “infodemic” [3], which risks jeopardising the efficacy of interventions against the outbreak. In particular, social media users are continuously flooded by information shared or liked by accounts they follow, and news that is diffused is not necessarily controlled by editorial boards that, in professional journalism, are in charge of ruling out poor, inaccurate or unwanted reporting. However, the emergence of novel news consumption behaviours is not negligible: according to research from the Pew Research Center [4], 23% of United States citizens stated that they receive their information from social media often, while 53% stated they receive information from social media at least sometimes. Unfortunately, even if social media may actually increase media pluralism in principle, the novelty comes with some drawbacks, such as misinformation and disinformation, which are spreading at an amazingly high rate and scale [5,6,7,8].

If the reliability of the source is an important issue to be addressed, the other side of the coin is an individual’s intentionality of spreading low quality information; in fact, although the distinction between misinformation and disinformation might seem blurry, the main difference between the two concepts lies in the individual’s intention, since misinformation is false or inaccurate information deceiving its recipients that could spread it even unintentionally, while disinformation refers to a piece of manipulative information that has been diffused purposely to mislead and deceive. Focusing on misinformation, we may wonder how and why users can be deceived and pushed to share inaccurate information; the reasons for such behaviour are usually explained in terms of the inherent attractiveness of fake news, which is more novel and emotionally captivating than regular news [7], repeated exposure to the same information, amplified by filter bubbles and echo chambers [9], or the ways all of us are affected by many psycho-social and cognitive biases, e.g., the bandwagon effect [10], confirmation bias [11], and the vanishing hyper-correction effect [12], which apparently makes debunking a very difficult task. However, much remains to be understood on how a single user decides that some news is true or false, how many individuals fact-check information before making such a decision, how the interaction design of the Web site publishing the news can contribute to assisting or deceiving the user, and to what extent social influence and peer-pressure mechanisms can push a person into believing something against all the evidence supporting the contrary.

Our main objective is to investigate the latent cognitive and device-induced processes that may prevent us from distinguishing true from false news. To this purpose, we created an artificial online news outlet where 7298 volunteers were asked to review 20 different news items and to decide if they were true or false. The aims of the experiment are manifold: we aim to assess if individuals make these kinds of decisions independently, or if the “wisdom of the crowd” may play a role; if decisions made as a function of limited information (the headline and a short excerpt of the article) lead to better or worse results than choices made upon richer data (i.e., the full text, the source of the article); and if users’ better familiarity with the Web or other self-provided information can be linked to better annotations. The results of the experiment and their relationships with related work are described in the rest of the paper.

We think that understanding the basic mechanisms that lead us to make such choices may help us to identify, and possibly fix, deceiving factors, to improve the design of forthcoming social media web-based platforms, and to increase the accuracy of fake news detection systems, which are traditionally trained on datasets where news has been previously annotated by humans as true or false, since human annotators’ biases may leak into the corpora that fuel the research on fake news detection. Furthermore, such systems often rely on features such as the credibility attributed to the source of an article [13,14], or how the news article is perceived by human readers [15,16,17]; we show in this work how these features can be misleading. Last, AI-generated messages suggest that users online should read an article entirely before sharing it, a counter-measure to impulsive re-sharing that we show to be ineffective.

2. Related Works

Telling which news is true or false is somehow a forced simplification of a multi-faceted problem, since we must encompass a substantial number of different bad information practices, ranging from fake news to propaganda, lies, conspiracies, rumours, hoaxes, hyper-partisan content, falsehoods or manipulated media. Information pollution is therefore not entirely made of totally fabricated contents, but rather of many shades of truth stretches. This complexity adds to the variety of styles and reputation of the writing sources, making it hard to tell misleading contents from accurate news. Given that users’ time and attention are limited [18], and an extensive fact-checking and source comparison is impossible on large scale, we must rely on heuristics to quickly evaluate a news piece even before reading it. Karnowski et al. [19] showed that users online decide on the credibility of a news article by evaluating contextual clues. An important factor is the source of the article, as pointed out by Sterrett et al. [20], who found that both the source (i.e., the website an article is taken from) and the author of the social media post that shares the article have a heavy impact on readers’ judgement of the news. Additionally, Pennycook et al. [21] investigated the role of the website that news is taken from, finding that most people are capable of telling a mainstream news website from a low-credibility one just by looking at them. Attribution of credibility by the source is a well-documented practice in scientific literature. Due to the impossibility of fact-checking every news article, researchers have often relied on blacklists of low-credibility websites, curated by debunking organisations, in order to classify disinformation content [22,23,24,25]. Lazer et al. [5] state that they “advocate focusing on the original sources—the publishers—rather than individual stories, because we view the defining element of fake news to be the intent and processes of the publisher”. 
In fact, the malicious intentionality of the publisher is a distinctive characteristic of disinformation activities [1]. The perception of the source can also depend on personal biases: Carr et al. [26] showed that sceptical and cynical users tended to attribute more credibility to citizen journalism websites than to mainstream news websites. Go et al. [27] also pointed out that the credibility of an article is influenced by the believed expertise of the source, which in turn is affected by previous psychological biases of the reader, such as in-group identity and the bandwagon effect. In fact, other people’s judgement has also been shown to produce an observable effect on individual perception of a news item [28,29]. Lee et al. [30] showed that people’s judgement can be influenced by other readers’ reactions to news, and that others’ comments significantly affected one’s personal opinion. Colliander et al. [31] found that posts with negative comments from other people discourage users from sharing them. As noted by Winter et al. [32], while negative comments can make an article appear less convincing to the readers, the same does not apply for a high or low number of likes alone. For an up-to-date review of the existing literature on ‘fake news’-related research problems, see [33].

Our contribution: In our experiment, we aimed to capture how the reader’s decision process on the credibility of online news is modified by some of the above-mentioned contextual features, which of them were more effective on readers’ minds, and on whom. We launched Fakenewslab (available at http://www.fakenewslab.it accessed on 29 August 2022), an artificial online interactive news outlet designed to test different scenarios of credibility evaluation. We presented a sequence of 20 articles to volunteers, showing the articles to each user in one of five distinct ways. Some users found only a title and a short description to simulate the textual information that would appear in the preview of the same article on social media. Additional information was presented to other users: some could read the full text of the article, others could see the source (i.e., a reference to the media outlet of the news), and others were told the percentage of other users that classified the news as true. Additionally, to better assess the significance of this last information, we showed a randomly generated percentage to a fifth group of users. The view mode was selected randomly for each volunteer when they entered the platform and started the survey. Everyone was asked to mark every single news item as true or false, and this feature guided us in the preliminary selection of the articles, filtering out all those news items for which such a rigid distinction could not have been made.

Similarly, several other works asked online users to rate true or false news [34,35,36], but, to the best of our knowledge, none with the aim of quantifying how much a small twist in the interface of an online post can impact people’s evaluation of a news article. Our platform is inspired by MusicLab by Salganik et al. [37], an artificial cultural market that was used to study success unpredictability and inequality dynamics and that marked a milestone in assessing the impact of social influence on individuals’ choices. In particular, we adopted the idea of dispatching users to parallel virtual rooms, where it is possible to control the kind and the presentation of the information they could read, in order to compare different behavioural patterns that may show high variability, even for identical articles’ presentation modes.

Our results can have implications on the way we design social media interfaces, on how we should conduct news annotation tasks intended to train machine learning models whose aim is to detect “fake news”, and also on forthcoming debunking strategies. Interestingly, false news are correctly identified more frequently than true news, but showing the full article instead of just the title, surprisingly, does not increase general accuracy. Additionally, displaying the original source of the news may contribute to misleading the user in some cases, while social influence can positively assist individuals’ ability to classify correctly, even if the wisdom of the crowd can be a slippery slope. Better performances were observed for those participants that autonomously opened an additional browser tab while compiling the survey, suggesting that searching the Web for debunking actually helps. Finally, users that declare themselves as young adults are also those who open a new tab more often, suggesting more familiarity with the Web.

3. Data and Methodology

Fakenewslab was presented as a test accessible via Web, where volunteers were exposed to 20 news articles that had actually been published online in Italian news outlets; for each news item, users were asked to tell whether it was true or false. Every user read the same 20 news items in a random order, and they were randomly redirected to one out of five different experimental environments, which we call “virtual rooms”, in which the news was presented differently:

- Room 1 showed, for every news item, the bare headline and a short excerpt as they would appear on social media platforms, but was devoid of any contextual clues.

- Room 2 showed the full text of the articles, as they were presented on the original website, again without any clear references to the source.

- Room 3 showed the headline with a short excerpt and the source of the article, as it would appear on social media but devoid of social features. The article source was clickable, and the article could be read at its original source.

- Room 4 showed the headline with a short excerpt, as well as the percentages of users that classified the news as true or false. We will refer to this information as “social ratings” from now on.

- Room 5 was similar to Room 4, but with randomly generated percentages. We will refer to these ratings as “random ratings” from now on.

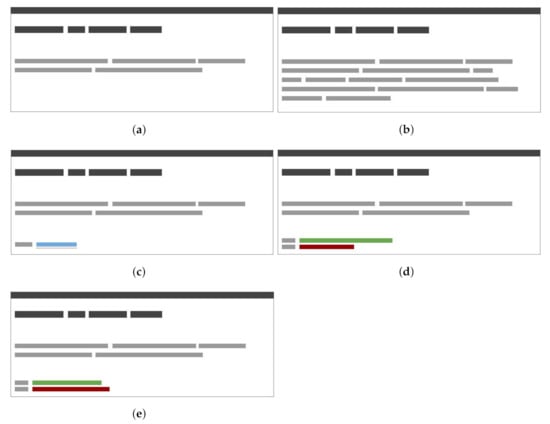

All the rooms were just variations of Room 1, which was designed as an empirical baseline for comparative purposes. Additionally, Room 5 was introduced as a “null model” to evaluate the social influence effect in comparison with Room 4, which displays actual values. Figure 1 displays a graphical render of what a news item looked like in each room.

Figure 1.

Graphical representation of the information displayed for each news in each room. (a) Room 1: News headline and short excerpt. (b) Room 2: News headline and full text. (c) Room 3: News headline and a clickable link to the source. (d) Room 4: News headline and other users’ ratings. (e) Room 5: News headline and other users’ ratings (random values).
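The assignment procedure described above can be illustrated with a minimal sketch. It assumes that a room is drawn uniformly at random per session, that the 20 items are shuffled, and that Room 5 draws its displayed percentage uniformly between 0 and 100; the function names and the 50% fallback used in Room 4 before any vote exists are hypothetical choices made for illustration, not details taken from the platform.

```python
import random

ROOMS = (1, 2, 3, 4, 5)
N_ITEMS = 20


def start_session(rng):
    """Draw one room uniformly at random and shuffle the 20 item ids."""
    room = rng.choice(ROOMS)
    order = list(range(1, N_ITEMS + 1))
    rng.shuffle(order)
    return {"room": room, "order": order}


def displayed_ratings(room, true_votes, total_votes, rng):
    """Return the (% true, % false) pair shown with an item, or None.

    Room 4 shows the actual share of earlier users who marked the item
    as true; Room 5 shows a random percentage. Other rooms show nothing.
    """
    if room == 4:
        # Hypothetical fallback: show 50/50 when nobody has voted yet.
        pct_true = round(100 * true_votes / total_votes) if total_votes else 50
    elif room == 5:
        pct_true = rng.randint(0, 100)  # inclusive on both ends
    else:
        return None
    return pct_true, 100 - pct_true
```

Passing an explicit `random.Random` instance keeps the sketch reproducible, which is convenient when simulating the five rooms side by side.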

During the test, we monitored some activities in order to have better insight into the news evaluation process. We collected data such as the timestamp of each answer (which allowed us to reconstruct how much time each answer took), what room the user was redirected to, and what social ratings or random ratings they were seeing in Room 4 and Room 5. We also measured if users left the test tab of the browser to open another tab during the test, an activity we interpreted as a signal of attempted fact checking: searching online in order to assess the veracity of an article was neither explicitly encouraged nor forbidden by the test rules, and we left to the users the chance to behave as they would have during everyday web browsing. We are aware that the mindset of a test respondent may have discouraged many users from fact checking the articles, as it may have been perceived to be cheating the test, but our argument is that the very same mindset led the users to pay attention to news they would not have noticed otherwise, thus raising an alert on possible deception hiding in each news item: explicitly allowing for web searches outside the test would have probably led to disproportionate, unrealistic fact-checking activity. We noted that eventually, 18.3% of users fact-checked the news at least once on their own initiative, a percentage in accordance with existing literature [38,39]. However, estimating how many users would have fact-checked the articles is outside the goals of this experiment: we only looked at those users who possibly did it, and at how fact-checking impacted their perception and rating of the news. Along with information related to the test, we asked participants to fill in an optional questionnaire about demographics and personal information. We collected users’ gender, age, job, education, political alignment, and time and number of daily newspapers read, each of them on an optional basis.
A total of 64% of users responded to at least one of the previous questions. This personal information helped us to link many relevant patterns in news judgement with demographic segments of the population. Details about such relationships between test scores and some demographic information are discussed in Appendix A.2. All the users’ data was anonymous: we neither asked about nor collected family and given names, email addresses, or any other information that could be used to identify the person that participated in the task. We used session cookies to prevent returning users from taking the test more than once. However, the test participants were informed about the kind of data we were collecting and why (see Appendix A.1). The test was taken more than 10,000 times, but not everyone completed the task of classifying the 20 articles; in fact, although users were redirected to each room with a uniform probability, we observed ex post that some of them abandoned the test before the end, especially in Room 2 and partially in Room 5, as reported in Table 1. The higher abandonment rate in these two rooms could be explained by the length of articles in Room 2, which may have burdened the reader more than the short excerpts in other settings, and by the unreliable variety of “other users’ ratings” in Room 5 that were actually random percentages, which may have induced some users to question the test integrity. It should also be noted that users that were redirected to Room 1 finished the test with the highest completion rate.

Table 1.

Test completion percentages grouped by room. Users are distributed randomly following a uniform distribution in the five rooms, but completed tests in Room 2 are only 17.42% of the total. Users in Room 2 left the test unfinished almost 10% more often than users in other rooms.
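As an illustration of the two quantities mentioned in the caption, the following sketch computes, for each room, the completion rate and the share of all completed tests. The input layout (one `(room, answers_given)` pair per session) and the function name are assumptions made for this example; the actual session logs may be organised differently.

```python
from collections import Counter


def completion_by_room(sessions, n_items=20):
    """Per-room completion statistics.

    sessions: iterable of (room, answers_given) pairs, one per session.
    A session counts as completed when all n_items answers were given.
    """
    started = Counter()
    completed = Counter()
    for room, answers_given in sessions:
        started[room] += 1
        if answers_given >= n_items:
            completed[room] += 1

    total_completed = sum(completed.values())
    stats = {}
    for room in sorted(started):
        stats[room] = {
            # Percentage of sessions started in this room that were finished.
            "completion_rate": 100 * completed[room] / started[room],
            # Percentage of all finished tests that come from this room.
            "share_of_completed": (
                100 * completed[room] / total_completed
                if total_completed else 0.0
            ),
        }
    return stats
```

With a uniform assignment, each room would be expected to hold about 20% of completed tests, so a share such as 17.42% signals a higher abandonment rate in that room.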

After some necessary data cleaning (i.e., leaving out aborted sessions and second attempts from the same user), we ended up with 7298 unique participants that completed the 20-question test (145,960 answers total). The answers were collected within a two-week period in June 2020 by Italian-speaking users. Fakenewslab was advertised by ourselves through our personal pages on social networks such as Facebook and Twitter; it was subsequently advertised via word of mouth by the respondents that took the test.

The test was followed by an ex post Delphi survey, carried out by 10 selected experts on media, who responded to our questions about the main features that can have a role in the perception of credibility of news online. We designed the questions in order to provide qualitative support to the main findings of our quantitative analysis. Results will be discussed in Section 4, while the methodological details and the list of questions are available in Appendix A.4.

3.1. News Selection

As mentioned in Section 2, the information disorders spectrum is wide, and it includes mis-/dis-/malinformation practices, which means that the reduction of the general problem to a “true or false” game is much too simplistic. In fact, professional fact checkers debunk a wide variety of malicious pseudo-journalism activities, flagging news articles in many different ways: reported news include not only those entirely fabricated but also those that cherry-picked verifiable information to nudge a malicious narrative, or those that omitted or twisted an important detail, thus telling a story substantially different from reality.

For our experimental setting, we selected 20 articles that we were able to divide into two equally sized groups of true news (identified from now on with a number from 1 to 10) and false news (from 11 to 20); see Appendix A.3 for the complete list and additional information. Such division was straightforward in the majority of cases: we classified news as true when it was not falsified by any professional debunking site, and we also confirmed its veracity on our own. To challenge the user a little bit, we selected lesser known facts, sometimes exploited by some outlets as click-bait, and sometimes with a poor writing style, with the exception of news 9 and 10, which we used as control questions. Marking a news item as false can be much more ambiguous. However, we selected 10 stories that were dismissed in at least one debunking site. In particular, article n. 14 was especially difficult to classify as false for the reasons explained in Appendix A.3, but we kept it anyhow: in fact, when evaluating accuracy scores of Fakenewslab users, we are not calling out the gullibility of some of them, but we are only monitoring their activities and reactions, which may be dependent on the environmental setting they were subject to. Thus, it is important to stress here once again that we are more interested in the observed differences between rooms’ outcomes than in assessing people’s ability to guess the veracity of a news item. Hence, even if in the following sections we will make use of terms such as “correct”, “wrong”, “false/true positives”, or “false/true negatives” for the sake of simplicity, we must recall that we are measuring the alignment of users’ decisions with our own classification just to assess those differences with respect to some given baseline.
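The notion of score used in the rest of the paper, i.e., the number of answers that agree with our own classification, can be sketched as follows. The only information taken from the text is the numbering convention (items 1 to 10 labelled true, 11 to 20 labelled false); the data layout and names are hypothetical.

```python
# Items 1-10 are labelled true and items 11-20 false, as stated in the text.
GROUND_TRUTH = {item: (item <= 10) for item in range(1, 21)}


def score(answers):
    """Count the answers that agree with the reference classification.

    answers: dict mapping item id -> bool (True means "marked as true").
    """
    return sum(
        1 for item, marked_true in answers.items()
        if GROUND_TRUTH[item] == marked_true
    )
```

A user who marks every item as false would score 10 out of 20 under this definition, which is a useful reference point when reading the score distributions.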

4. Results

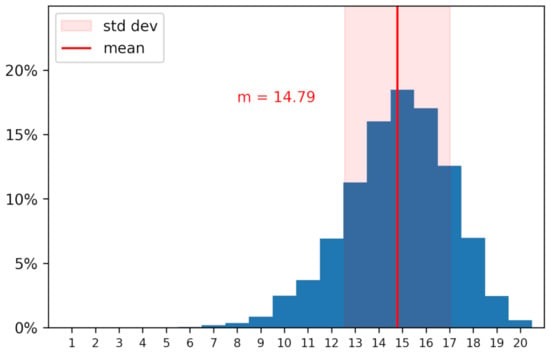

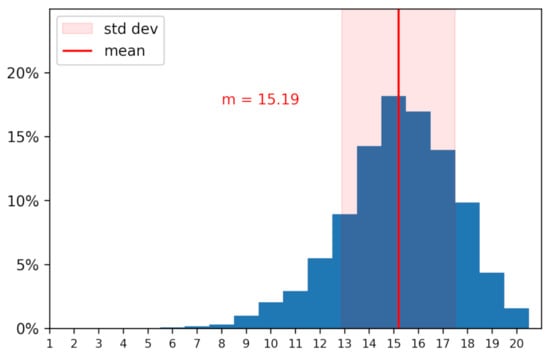

Overall, users that completed Fakenewslab showed a very suspicious attitude. Figure 2 and Figure 3 plot, respectively, the score distributions and the confusion matrix of all accomplished tests, regardless of the setting (room). Users marked news in agreement with our own classification on average 14.79 times out of 20 questions, with a standard deviation of 2.23.

Figure 2.

Scores’ distribution regardless of the test settings. Users marked news in agreement with our own classification on average 14.79 times out of 20 questions. The most common score was 15/20.

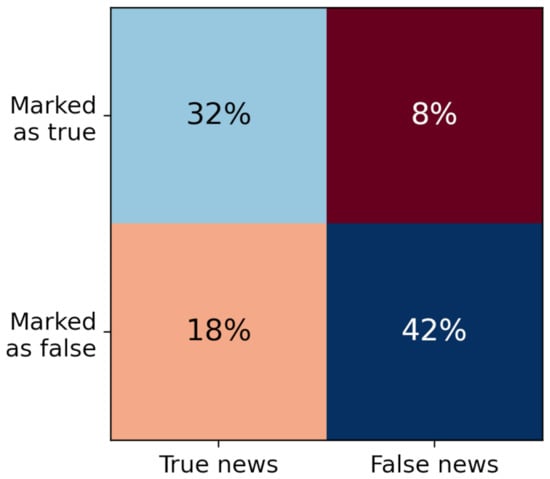

Figure 3.

Users’ ratings confusion matrix. Users rated news as false 60% of the time, i.e., marking even some true items as false. True news has often been mistaken for false.

This generally “good” performance is unbalanced: 60% of news items were rated false, despite the fact that the selected true and false news were actually equally distributed. False news, on the contrary, was hardly ever mistaken for true. False negatives were therefore the most common kind of error (see Figure 3). It looks like users’ high sensitivity during the test led to overall results similar to a random guessing game with the probability for news to be false of around three times out of four: in fact, a Mann–Whitney test does not reject the hypothesis that the overall distribution of correct decisions (i.e., accurate ratings of news) may be a binomial with probability .
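To make the terminology concrete, the sketch below tallies a confusion matrix and computes the mean and standard deviation of a binomial score over 20 questions. It assumes that “positive” denotes a true news item, which is the reading under which true items marked as false are false negatives; the success probability `p` is left as a parameter, and all names are hypothetical.

```python
import math


def confusion(pairs):
    """Tally a confusion matrix, taking 'positive' to mean a true news item.

    pairs: iterable of (is_true, marked_true) booleans, one per answer.
    """
    counts = {"TP": 0, "FN": 0, "FP": 0, "TN": 0}
    for is_true, marked_true in pairs:
        if is_true:
            counts["TP" if marked_true else "FN"] += 1
        else:
            counts["FP" if marked_true else "TN"] += 1
    return counts


def binomial_mean_std(n, p):
    """Mean and standard deviation of a Binomial(n, p) score."""
    return n * p, math.sqrt(n * p * (1 - p))
```

For instance, `binomial_mean_std(20, p)` gives the score distribution that a purely random respondent with per-question success probability `p` would produce, which can then be compared with the observed mean and standard deviation.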

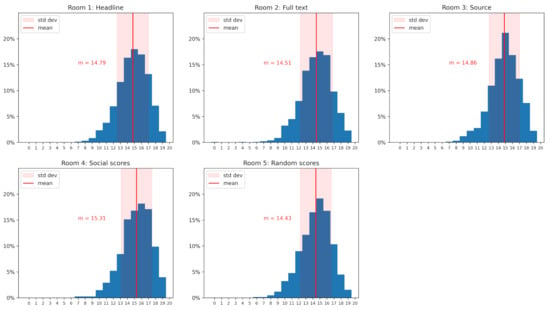

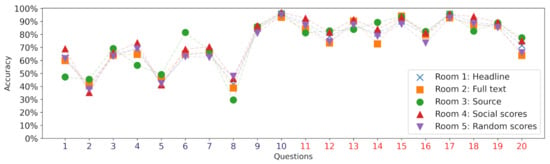

Interestingly, there are differences depending on what kind of information the users were exposed to. Figure 4 shows the scores’ distributions by room. Users that saw the article’s headline and its short excerpt (Room 1) scored an average of 14.79 correct attempts, matching the overall average exactly.

Figure 4.

Scores’ distribution by room. Social influence is the feature with the highest positive/negative impact on users’ scores: it can support the reader’s judgement (Room 4), but it can also deceive it (Room 5).

At first sight, all the other distributions and their characterising averages are similar to each other. Even so, if we keep Room 1 as a baseline, a Mann–Whitney test rejects the hypothesis that the distributions for Rooms 2, 4, and 5 are equivalent to Room 1’s (i.e., they are statistically significantly diverse, with a p-value ), while the same hypothesis for Room 3 could not be rejected. Regarding deeper insight, we observed that the article’s source impacted the most on users’ judgement; in fact, if we compute the average absolute difference between the users’ decisions on each question between Room 3 and the other rooms, Room 3 is the one that produced the greatest gap. This effect was sometimes a positive and sometimes a negative contribution to users’ decisions, depending on the specific news and source, making Room 1 and 3 score distributions equivalent at the end.
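The comparison between rooms can be illustrated with a small, dependency-free version of the Mann–Whitney U test. This is only a sketch: it uses the normal approximation without tie or continuity correction, which is a simplification for integer scores with many ties, and it is not necessarily the exact procedure used in our analysis. A statistics library would normally be preferred in practice.

```python
import math


def mann_whitney_u(x, y):
    """Two-sided Mann-Whitney U test via the normal approximation.

    Simplified sketch: no tie correction and no continuity correction.
    Returns (U statistic for x, approximate two-sided p-value).
    """
    n1, n2 = len(x), len(y)
    # U counts the pairs in which x beats y; a tie counts as one half.
    u1 = sum((a > b) + 0.5 * (a == b) for a in x for b in y)
    mean_u = n1 * n2 / 2
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u1 - mean_u) / sd_u
    # Two-sided tail probability of a standard normal variable.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return u1, p_value
```

Here `x` and `y` would be the lists of per-user scores in two rooms, e.g., Room 1 and Room 4; the pairwise loop is quadratic, which remains manageable for a few thousand users per room.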

This effect is shown in Figure 5, which displays how many times users made a correct decision on each news item, grouped by room. News items are sorted here with true news first (blue X-axis labels) and false news after (red X-axis labels) to improve readability. While other users’ ratings were the most helpful feature (Room 4; see Section 4.1), Room 3’s user decisions are the ones that diverge the most from the others, especially for questions 1, 4, 6 and 8. Quite interestingly, articles 1, 4 and 8 reported true news, but the presentation had a click-bait tendency and was, moreover, taken from online blogs and pseudo-newspapers (see Table A2 in Appendix A.3), which probably induced many users to believe them false. Question 6, instead, reported a case of an aggression toward a policeman committed in a Roma camp, a narrative usually nudged by racist and chauvinist online pseudo-newspapers. The news was actually true, and the source mainstream (Il Messaggero is the eighth best-selling newspaper in Italy (Source: https://www.adsnotizie.it, accessed on 29 April 2021)): users that read the source attributed to the news a much higher chance of being true. Rooms 2, 4 and 5 deserve a deeper discussion in the next subsections.

Figure 5.

Accuracy for each question, grouped by room. The source of the news (Room 3) is the feature that can influence users’ judgement the most on the single question, either improving or worsening their scores, especially on questions 1, 4, 6 and 8.
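One possible reading of the “average absolute difference” between a room and the others is sketched below: for every question, the accuracy in the reference room is compared with the accuracy in each other room, and the absolute gaps are averaged. This interpretation, the input layout, and the function name are assumptions made for illustration.

```python
def mean_abs_gap(accuracy, room):
    """Average absolute per-question accuracy gap between one room and the rest.

    accuracy: dict mapping room -> list of per-question accuracies,
              with the questions in the same order for every room.
    """
    gaps = [
        abs(a - b)
        for other, values in accuracy.items() if other != room
        for a, b in zip(accuracy[room], values)
    ]
    return sum(gaps) / len(gaps)
```

Computing `mean_abs_gap(accuracy, r)` for each room `r` and taking the largest value identifies the room whose per-question outcomes diverge most from the others, even when its average score is close to the baseline.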

4.1. Individual Decisions and Social Influence

The aim of Rooms 4 and 5 is to evaluate the impact of the so-called “Wisdom of the Crowd” in users’ decisions. Room 4 shows actual percentages of users marking every single news as true or false: here we observed the highest average score of 15.31. Room 5 was created for validation purposes, since it displays percentages corresponding to alleged decisions by other users that are actually random. This led to the observation of the lowest average score of 14.43 (see Figure 4).

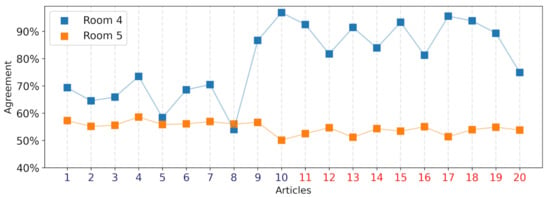

Our hypothesis on this important difference is that random ratings were sometimes highly inconsistent with the content of the news, maybe suggesting that an overwhelming quota of readers had voted some blatantly false news as true, or the contrary. This inconsistency triggered suspicion on the test integrity, which may be the primary cause for the slightly higher abandonment rate we observed in Room 5 (see Table 1). When users did not abandon the test, they still were likely to keep a suspicious attitude. In line with our argument, Figure 6 shows the agreement of users with the ratings they saw for both Room 4 and Room 5. Overall, Room 4 users conform with the social ratings 79.28% of the time, while Room 5 users agreed with the random ratings only 54.66% of the time. This low value is still slightly higher than 50%, i.e., a setting where users conform with the crowd randomly. As a result, there is a clear signal that their judgement was deceived as they performed worse than the users in other rooms. Users that saw the random ratings but did not follow the crowd performed exactly like users in Room 1, that is, our baseline setting.

Figure 6.

Agreement of users with the social ratings (Room 4, blue) and random ratings (Room 5, orange). Users conform to social ratings much more than random, inconsistent ones. Random ratings are likely trusted slightly more over true news, where users are probably more hesitant, than over false news. Similarly, social ratings were likely more helpful for false news than true ones.

It should also be observed that randomly generated percentages displayed with true news were taken more into account (55.8% of the time) than random percentages shown with false news (53.5% of the time), probably because we selected true news articles that were more deceptive due to their style and borderline content (see Appendix A.3). Room 5 users may have followed the crowd when insecure about the answer and taken a decision independently otherwise, if the percentages contradicted their own previous beliefs. On the other hand, users in Room 4 that saw real decisions from other users about true news may have followed the crowd much less than they did when deciding on false news (70.8% against 87.7%). Our hypothesis is that social ratings were perfectly consistent with strong personal opinions over false news, while the signal from other users over true news was a little bit noisy to their judgement.
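The agreement figures above can be illustrated with a minimal sketch. It assumes that a user “agrees” with the displayed ratings when the answer matches the majority option shown, and that a 50/50 display carries no majority and is skipped; both conventions, as well as the names, are hypothetical.

```python
def agreement_rate(records):
    """Percentage of answers that match the displayed majority.

    records: iterable of (pct_true_shown, marked_true) pairs, where
             pct_true_shown is the percentage of "true" votes displayed.
    """
    agree = total = 0
    for pct_true_shown, marked_true in records:
        if pct_true_shown == 50:
            continue  # no majority displayed; skipped in this sketch
        total += 1
        if (pct_true_shown > 50) == marked_true:
            agree += 1
    return 100 * agree / total if total else float("nan")
```

Since true and false items are equally numerous, the overall agreement should be close to the mean of the two per-class values: (55.8 + 53.5) / 2 = 54.65 for Room 5 and (70.8 + 87.7) / 2 = 79.25 for Room 4, in line with the reported 54.66% and 79.28% up to rounding.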

It is worth noting that the majority of respondents answered wrongly to questions 2, 5 and 8 (see Figure 5), thus probably posing a dilemma to other users: whether they should follow the crowd that was consistent and helpful at other times, even if they disagreed with the decision of the majority. For questions 5 and 8, the agreement with the social ratings is similar to that in Room 5, with random ratings (see Figure 6), while the majority’s behaviour on question 2 was accepted 64.5% of the time, even if the related decisions were wrong.

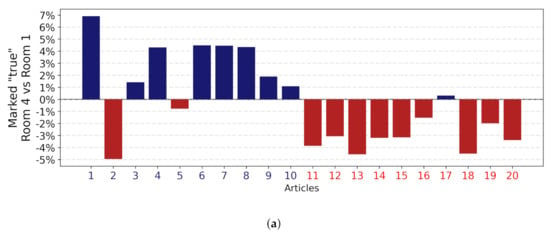

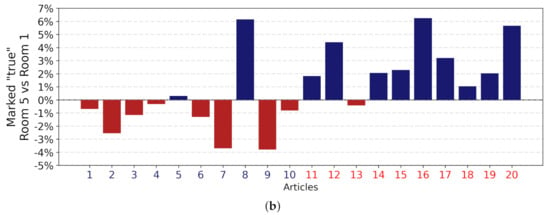

Figure 7 shows the percentage of users that rated news as true in Room 1 vs. Room 4 (top) and in Room 1 vs. Room 5 (bottom). Users with no additional information tended to rate true news correctly fewer times and rated false news as true more times than users in Room 4. On the contrary, users in Room 5 were led to take true news as false and vice versa. Social ratings and random ratings had a strong impact on individuals’ judgement. This impact may be explained by a combination of the bandwagon effect [10,40], which suggests that the rate of individual adoption of a belief increases proportionally to the number of individuals that adopted such a belief, and the social bias known as normative social influence [31,41,42], which suggests that users tend to conform to the collective behaviour, seeking social acceptance even when they intimately disagree. Fakenewslab’s users, when redirected to Rooms 4 and 5, could have interpreted the social ratings and random ratings as a genuine form of wisdom of the crowd [43] and conformed to them, while users in Room 1 simply answered according to their personal judgement.

Figure 7.

Comparison of percentages of users that labelled news as “true” in Room 1 vs. Room 4 (a) and Room 1 vs. Room 5 (b). (a) Social ratings in Room 4 likely led users to correct decisions, compared to those who did not have any additional information to use (Room 1). (b) Random ratings in Room 5 probably affected users’ judgement in a negative way, often deceiving them compared to those in Room 1.

4.2. More Information Is Not Better Information

Articles’ headlines and short excerpts are designed to catch the readers’ attention, but the full details of the story can only be found by delving deep into the body of the article. The body of an article is supposed to report details and elements that bring information to the story: location, persons involved, dates, related events, and commentaries. However, when the users could read the full text of the news in Room 2 instead of merely a short headline, their judgement was not helped by more details, but instead hindered: average scores in Room 2 are lower than the overall case, with 14.51 correct decisions per user (see Figure 4). Fatigue could be an explanation. Table 1 reports lower completion percentages for users in Room 2, suggesting that many of them could have been annoyed while reading longer stories, thus abandoning the test prematurely. Additionally, users that completed the test usually took less time to answer compared with the other rooms: the median answering time is about one second less than the overall median answering time.

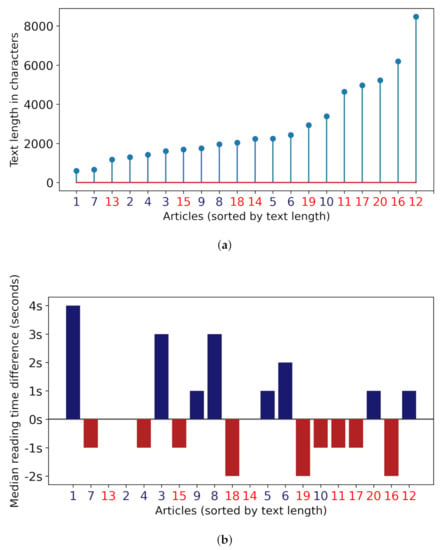

In fact, reading time does not depend on the length of the text, as shown in Figure 8: on the top, we show the article length in characters, which ranges from a few hundred to more than 8000. The longest articles are many times longer than the shortest ones, and they would require much more time to be read entirely. However, this was not observed. In the bar plot on the bottom of Figure 8, we plot the difference between the median answering time in Room 2 and in all the rooms taken together, grouped by question. The bar is blue when the difference is positive, i.e., users in Room 2 took more time to answer than the other users, while it is red otherwise. Questions in Figure 8 are sorted from the shortest to the longest. Answering time does not appear to be related to the text length but rather to the news itself. However, from the 14th position onwards, bars are more likely to be red (negative difference): users decided more quickly with longer texts when they had the opportunity to read them, suggesting that they did not read the texts entirely, possibly because they had already made up their minds from the headline, or because the writing style of the full article confirmed their preliminary guesses.

Figure 8.

Articles’ full body length is not correlated with longer answering times. (a) Length of texts in Room 2. Articles’ bodies are sorted from the shortest to the longest. (b) Difference between the median answering time taken by users in Room 2 and all the other rooms on each article, with articles sorted by length. Apparently, there is no linear dependency of answering times on text length. Additionally, the decision on the longest articles shown in Room 2 took less time in 5 cases out of 7.
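The comparison plotted in Figure 8b can be sketched in a few lines of Python. The snippet below is a minimal illustration and not the analysis code used for the study: the record layout `(room, question, seconds)` and all the values are invented for the example. For each question, it computes the median answering time in Room 2 minus the median over all rooms taken together, so a negative value would correspond to a red bar.

```python
from collections import defaultdict
from statistics import median

# Hypothetical answer log: (room, question_id, answering_time_in_seconds).
# The layout and the numbers are illustrative assumptions, not study data.
answers = [
    (1, "q1", 6.0), (2, "q1", 9.0), (3, "q1", 7.0),
    (1, "q2", 8.0), (2, "q2", 5.0), (4, "q2", 9.0),
]

def median_time_difference(records, room=2):
    """Per question: median time in `room` minus median time over all rooms."""
    overall = defaultdict(list)
    in_room = defaultdict(list)
    for r, question, seconds in records:
        overall[question].append(seconds)
        if r == room:
            in_room[question].append(seconds)
    return {
        q: median(in_room[q]) - median(overall[q])
        for q in overall
        if in_room[q]  # skip questions never answered in the room
    }

differences = median_time_difference(answers)
```

Sorting the resulting dictionary by article length would then give the ordering used on the horizontal axis of the figure.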

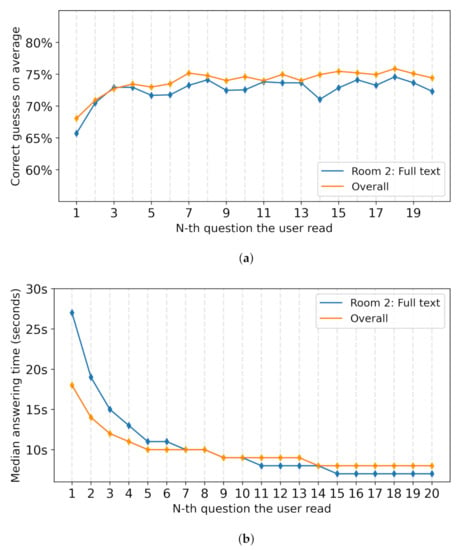

Moreover, users in Room 2 tended to answer more quickly in the second half of the test, compared with users that only saw a shorter text. In Figure 9, we report the median answering time in both Room 2 and overall, with questions ordered as the articles were actually presented to the reader. On the first questions of the test, users in Room 2 took more time to answer, apparently reading the long texts. However, their attention collapsed quickly, and from the 7th question onwards, they spent even less time reading the articles than the users in other rooms. The relationship between the users’ fatigue and the average scores is not clear, however. Figure 9a shows the average accuracy of users by question, where questions are ordered according to the reader’s perspective. If cumulative fatigue were burdening the users of Room 2 more than the others, average accuracy in the last questions would be expected to fall progressively. In Figure 9a, this effect is not evident, even if a slight degradation in accuracy is visible. The interplay between fatigue, text length and users’ behaviour must be properly addressed in a dedicated experiment, but these preliminary findings suggest that the toll required by a long read in terms of attention may discourage users from actually reading and processing more informative texts, and this may lead to even worse decisions. The length of an article has been shown to be an informative feature for artificial classifiers that discriminate between true and false news [44,45]; our results suggest that this may not be the case for human evaluators. As a consequence, the design of any AI whose purpose is to automatically classify news should take such a limitation into account.

Figure 9.

Average accuracy (a) and median answering times (b) of users for questions in order of presentation to the users. Values in Room 2 are compared to their corresponding values in all the rooms taken together. (a) Accuracy in Room 2 does not drop progressively. However, it is constantly lower than overall accuracy. (b) Users in Room 2 start reading the full text on the first questions, but they quickly get annoyed, and the reading time soon drops. During the second half of the test, Room 2 users answer even more rapidly than in the other rooms.

4.3. Web Familiarity, Fact Checking and Young Users

One common way to assess the veracity of a piece of news is online fact-checking, i.e., searching for external sources to confirm or disprove the news. In Room 3, the users could see the sources of the articles, and a link to the original article was displayed: we observed that 26.5% of the users clicked at least once on such a link during the test. However, fact-checking activity requires the user to check news against external sources; retrieving the original web article could only confirm its existence and give some clue about the publisher (for instance, by reading other news from the same news outlet, or by technological and stylistic features of the website [46]). True fact-checking would have required the user to temporarily leave the test and browse other websites in search of confirmation [47]. As anticipated in Section 3, we captured information about the users that left the test for a while, coming back to it a few seconds later, by monitoring when the test tab in the users’ browser was no longer the active one. It is worth recalling that we had no way to see what the users were searching for in other tabs, or whether they were actively fact-checking the test articles or just taking a break from the test. Additionally, in our guidelines, we did not explicitly mention the possibility of searching for the answers online.

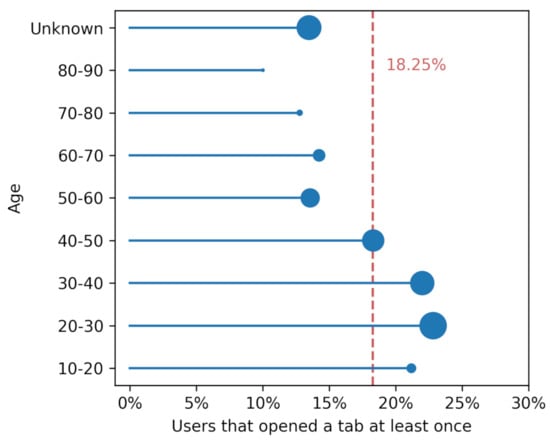

However, estimating how many users fact-check online news is outside of the scope of this work, and it is a research problem that must be addressed properly. We only acknowledge that opening a new tab and coming back to the test later could be a hint of external research; that we neither encouraged nor discouraged such activity; and that, in the end, 18.25% of the users did open another tab at least once during the test.
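As an illustration of how such a signal could be derived, the sketch below scans a log of tab-visibility events and returns the users who left the test tab and later came back to it at least once. The event names (`hidden`, `visible`), the tuple layout and the sample values are assumptions made for this example; the actual logging format of Fakenewslab is not described here.

```python
# Hypothetical visibility log: (user_id, timestamp_in_seconds, event).
# Event names and values are illustrative assumptions, not study data.
events = [
    ("u1", 10.0, "hidden"), ("u1", 25.0, "visible"),
    ("u2", 12.0, "hidden"),   # left the tab and never came back
    ("u3", 5.0, "visible"),   # never left the tab
]

def users_who_left_and_returned(log):
    """Return the set of users with at least one hidden -> visible transition."""
    away = set()      # users whose test tab is currently not the active one
    returned = set()
    for user, _timestamp, event in sorted(log, key=lambda e: e[1]):
        if event == "hidden":
            away.add(user)
        elif event == "visible" and user in away:
            away.discard(user)
            returned.add(user)
    return returned

returned = users_who_left_and_returned(events)
# Share of users who left and came back, over all users seen in the log.
share = len(returned) / len({user for user, _, _ in events})
```

A log of this kind only records that the tab lost and regained focus; it carries no information about what the user did in the meantime.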

Users who opened a tab answered correctly 78.23% of the time, against 73.82% for those who did not. Figure 10 reports the distribution of users’ scores, only for those who opened a tab at least once. The mean of the distribution (15.19 correct guesses out of 20) is close to the one in Room 4 and significantly higher than in Room 1. Opening a tab to search for an answer online is an activity that we therefore correlate with possible fact-checking due to the positive effects it has on users’ tests. Additionally, it is an activity that correlates with the age of the respondents. Figure 11 shows the percentage of users that opened another tab in the browser by age. Each age category is represented by a lollipop whose length is proportional to the tab opening rate and whose size reflects the number of respondents in that age category. The vertical dashed red bar marks the percentage of users who opened a tab, regardless of their age. Young adults and adults in the age ranges of 20–30 through 50–60 are the most represented in the data, but their tab opening rates differ. Users in the age groups 20–30 and 30–40 showed a higher propensity to open another browser tab, while users in the 50–60 age group did so significantly less than average. Other categories are under-represented, but they still confirm the general trend: overall, the chances for users to open a new tab while responding to the test are higher for teenagers and young adults, and they decrease substantially with age. Incidentally, young adults and teenagers are the users that are often considered to show more familiarity with technologies such as web browsers [48], and they were the ones that could more easily think of starting a parallel search in another tab. Wineburg et al. [47] noted that the most experienced users regarding online media, when called to tell reliable online news outlets from unreliable ones in test conditions, tended to open a new tab.
This result suggests that users with higher familiarity with the medium are more prone to fact-check dubious information online, carrying out simultaneous and independent research on the topic on their own, even when not encouraged to do it. Young adults are also the age group with the highest average scores.
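The comparison between users who opened a tab and those who did not boils down to the pooled accuracy of each group. The following is a minimal sketch, assuming each user is summarised by a hypothetical record `(opened_tab, correct_answers, answered_questions)`; the sample values are made up and do not reproduce the 78.23% and 73.82% reported above.

```python
# Hypothetical per-user summaries: (opened_tab, correct, answered).
# The layout and the numbers are illustrative assumptions, not study data.
users = [
    (True, 16, 20), (True, 15, 20),
    (False, 14, 20), (False, 15, 20), (False, 13, 20),
]

def accuracy_by_group(records):
    """Pooled share of correct answers for tab openers (True) and the rest (False)."""
    totals = {True: [0, 0], False: [0, 0]}  # group -> [correct, answered]
    for opened_tab, correct, answered in records:
        totals[opened_tab][0] += correct
        totals[opened_tab][1] += answered
    return {
        group: correct / answered
        for group, (correct, answered) in totals.items()
        if answered > 0  # omit groups with no answers at all
    }

accuracy = accuracy_by_group(users)
```

Such a difference is purely descriptive: it shows that the two groups scored differently, not that opening a tab caused the higher score.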

Figure 10.

Distribution of scores for those users that opened another tab during the test at least once and returned to the test a while later. The average score is higher here than in the overall outcomes (see Figure 10). Tab opening could be an indicator of online fact-checking.

Figure 11.

Percentage of users that opened a tab at least once, grouped by age. Lollipop size is proportional to the subgroup population in our dataset. On average, 18.25% of users opened another tab at least once. Teenagers and young adults in the age groups 10–20, 20–30, and 30–40 opened a tab more often than the others.

5. Conclusions

Via Fakenewslab, volunteers were asked to mark 20 news items as true or false; these news items were previously selected from some online news outlets. We monitored users’ activities under five different test environments, each showing articles with distinct information. Users could see the news piece in one of the following forms: a bare headline with a short excerpt of the article; the headline and the full article; the headline with a short excerpt and the original source the article was taken from; how other users rated the article (social scores); a randomly generated rating, presented in the same way as the ratings of other users (random scores). We also asked 10 media experts to qualitatively answer 10 ex post questions that would help us validate our findings.

The attribution of credibility to news based on the perceived credibility of its source is a well-documented practice in the literature [26,27,49]. This is especially true for political content produced by partisan news outlets, which are perceived to be more or less credible depending on whether they are ideologically congruent with prior political beliefs [50,51]. Experts that responded to our Delphi survey (see Appendix A.4) also rated the source of an article as a highly informative feature. However, participants able to see the news source did not classify articles better than those who only saw the headlines; rather, the source misled many users, probably because users stopped focusing on the news itself and were distracted by the (good or bad) reputation of the outlet. In the case of borderline or divisive news, such as in our test, the reputation of the venue may have obscured the inherent quality of the news article.

Although longer texts could be more informative than shorter ones, our findings suggest that reading an entire article instead of a short preview does not help in distinguishing false from true news. Common sense would suggest that in the full text, the reader could find hints and details to better support their decision; this expectation was also expressed by the panellists we consulted in our Delphi survey (see Appendix A.4) and supported by experimental results [52]. However, users that could read the full text performed even worse. A possible explanation for this phenomenon, supported by our analyses, may lie in the fatigue of reading numerous long texts.

Volunteers exposed to others’ decisions were helped by the “wisdom of the crowd”, though this could be a slippery slope: in fact, manipulated random feedback induced a negative herding effect, leading to more misclassifications. Peer influence is acknowledged in the literature as capable of impacting individual evaluations of the credibility of news articles [49], especially in the form of bad comments [53,54]. Interestingly, the panel of experts that responded to our survey was divided about the effectiveness of peer influence on one user’s judgement (see Appendix A.4): 30% of them rated other users’ influence as non-relevant to their own evaluation.

Lastly, young adults were prone to momentarily interrupt the test, coming back to it with more accurate answers, a possible hint of an underlying fact-checking activity. This is due, in our opinion, to higher media literacy and web tool expertise among young generations. Millennials and Generation Z users could be more inclined to carry out “lateral reading”, i.e., searching the web for relevant information to validate news articles they come across, either because of early exposure to the pitfalls of disinformation on the internet or because of their education [55,56]. The relationship between users’ young age and their propensity for lateral reading was also acknowledged by 60% of the expert panellists that responded to the ex post survey. Quite interestingly, 30% of them did not believe that age could be a discriminant in reading and fact-checking habits, while 20% indicated Generation X (born between 1965 and 1980) as the generation of users more likely to fact-check a piece of news. Experts also underestimated the percentage of users that would carry out parallel research when reading news (see Appendix A.4).

The present study has some limitations that we wish to point out. The test was first advertised through the authors’ personal pages on social networks (Facebook, Twitter), then in large public groups and via sponsored advertisement. As discussed in Appendix A.2, respondents may show significant biases in the distribution of age, education, gender and political leaning, reflecting the demographics of our acquaintances instead of a neutral sample. Another limitation lies in the way we monitored users’ activity. We broadly discussed in the methods section the impossibility of checking on users’ activity when they left the test and returned to it later. We neither encouraged nor discouraged users to fact-check the news, yet we speculate that some participants searched for some news on the web: we could verify that many of them left the test and returned to it later, and those who did so performed significantly better than others.

Despite these caveats, the results reported here may support drawing up forthcoming guidelines for annotation tasks to train fake news classification systems, and for properly designing web and social media platforms. Such findings challenge the widespread source-based and crowd-based approaches for automatically distinguishing false from true news. Although low-credibility online articles posted on social media are often singled out as responsible for the spreading of mis- and disinformation, high-reputation news outlets are accountable, too, because their inaccurate publications can have a greater impact on readers’ minds. Furthermore, our findings indicate that prompting users to read an article in its entirety, as currently done by AI-generated messages on social network platforms, can backfire because of the heavy cognitive toll demanded of readers.

Further research is needed; e.g., the correlation between reading long texts and poor decisions has to be better addressed. Social influence is an important asset, but we are also aware that informational cascades are vulnerable to manipulation: studying the underlying mechanisms on how users evaluate news online, individually or as influenced by other factors, is crucial in fighting information disorders online.

Author Contributions

Conceptualization, G.R.; Data curation, A.S.; Formal analysis, A.S.; Investigation, G.R.; Methodology, G.R. and A.S.; Project administration, G.R.; Software, A.S.; Visualization, A.S.; Writing—original draft, A.S.; Writing—review & editing, G.R. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Not Applicable, the study does not report any data.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Appendix A.1. Ethical and Reproducibility Statement

All the collected data were shared by users on a voluntary basis on the clear premise that they would be used for research purposes only. Users were not pressured into taking the test in any way. The motivation for the experiment was explained before the test. The existence of different test settings and the monitoring of the users’ activity, instead, were disclosed to respondents only after the test, as disclosing them earlier would have severely affected the results. Users filled out the questionnaire on their personal information on a voluntary basis: they were told that they could skip the questionnaire and immediately access the results of the test. All collected information is anonymous, as we did not store personal information such as family or given names, email or IP addresses, or any other data that could be used to identify the person that participated in the task. We set a cookie in users’ browsers, to which they consented, in order to detect a second attempt at the test, which we ignored in our analyses. Lastly, it was clarified that data would never be shared with other subjects, and that it would be used for research purposes only. All the collected information will be distributed in aggregated form in a dedicated GitHub repository for reproducibility purposes.

Appendix A.2. Demography of Users

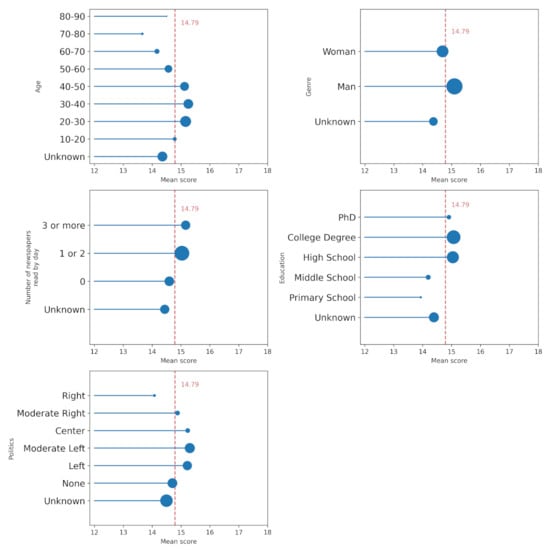

Along with their evaluation of news, we asked Fakenewslab users to respond to an optional questionnaire about their personal information. This questionnaire was taken by 64% of users. In Figure A1, we report the average scores of users depending on some details they declared, such as their age, gender, the number of newspapers they read per day, their education and their political leaning. Population segments are represented by lollipops. Each lollipop’s length is proportional to the accuracy of users in that segment, while its size is proportional to their number. Due to the word-of-mouth spreading process of Fakenewslab on social networks, the population shows some biases, as men are over-represented in comparison to women, and the same applies to college degrees (or even PhDs) over high school (and lower) diplomas and to left and moderate left voters over moderate right and right. Even with these limitations, it is worth noting that mean scores are higher for young adults and adults from 20 to 50 years old; for users that declared that they read at least three newspapers per day, over those who read one, two or none; and for users with high school diplomas and degrees. Although they are scarcely represented, it is interesting that users that declared to have pursued a PhD performed slightly worse than users that declared to have a college degree or a high school diploma. Conservative political attitude has been demonstrated as a factor correlated to believing and sharing disinformation content [8,57]. Right voters in this study exhibited an average score significantly lower than left voters, yet the heavily imbalanced distribution of the two sub-populations makes it impossible to substantiate this claim, as we could not properly stratify segments of users based on politics and gender or age.

Figure A1.

Score distributions by four personal attributes that users reported in the optional questionnaire. Each lollipop is sized proportionally to the population.

Appendix A.3. News

We presented 20 news items to Fakenewslab users and asked them to decide whether the news items were true or false. However, as described in Section 3.1, the “true or false” framing is an oversimplification of a multifaceted problem. News flagged by fact-checkers includes click-bait, fabricated news, news with signs of cherry-picking, crucial omissions that frame a story into a new (malicious) narrative, and other subtle stretches of the truth. On the other hand, true news can also be borderline, open to a broader discussion. In order to offer a complex landscape of debatable information, we did not choose only plausible news from famously reliable sources, which would probably have been easier to tell apart from false news, but we also selected a few borderline news items, true news taken from blogs, and some with even a slight tendency to click-bait. The final sample thus included some borderline and ambiguous pieces of information to process. In Table A1, we list the 20 news items proposed in Fakenewslab, and in Table A2, we add some information on the source of the debunking.

Every news item shown in Table A1 has been fact-checked by at least one outlet among the debunking sites and mainstream newspapers in the following list: bufale.net, butac.it, ilpost.it, and open.online. According to the tags adopted by fact-checkers, we classified news items 11 to 19 as false (see Table A2). News n. 20 is somewhat of an exception: we found it on a health-focused website that openly opposes 5G technology. Apparently, the news did not become viral, and it has been ignored by mainstream journalism and also by debunkers. The article suggests that the European Union has not assessed the health risks associated with 5G technology, which is clearly false (e.g., see the “Health impact on 5G” review supported by the European Parliament https://www.europarl.europa.eu/RegData/etudes/STUD/2021/690012/EPRS_STU(2021)690012_EN.pdf accessed on 29 August 2022), and it is full of misunderstandings, to say the least, about how the European Commission funding processes really work.

Table A1.

News headlines’ list. In our experiment, we adopted 20 articles corresponding to 10 true and 10 false news items. They were actually published in Italian online outlets, mainstream or not. In all the rooms, we reported headlines and short excerpts in full, but the full articles were available only in Room 2. In this table, we show the (translated) headlines.

| | Id | Headline |
|---|---|---|
| True news | 1 | A “too violent arrest”: policeman sentenced on appeal trial to compensate the criminal |
| | 2 | The Islamic headscarf will be part of the Scottish police uniform |
| | 3 | Savona, drunk and drug addict policeman runs over and kills an elderly man |
| | 4 | Erdogan: The West is more concerned about gay rights than Syria |
| | 5 | #Cop20: the ancient Nazca lines damaged by Greenpeace activists |
| | 6 | Rome, policemen attacked and stoned in the Roma camp |
| | 7 | Thief tries to break into a house but the dog bites him: he asks for damages |
| | 8 | These flowers killed my kitty - don’t keep them indoors if you have them |
| | 9 | Climate strike, Friday for future Italy launches fundraising |
| | 10 | Paris, big fire devastates Notre-Dame: roof and spire collapsed \| The firefighters: “The structure is safe” |
| False news | 11 | Carola Rackete: “The German government ordered me to bring migrants to Italy” |
| | 12 | With the agreement of Caen Gentiloni sells Italian waters (and oil) to France |
| | 13 | Vinegar eliminated from school canteens because prohibited by the Koran |
| | 14 | He kills an elderly Jewish woman at the cry of Allah Akbar: acquitted because he was drugged |
| | 15 | The measles virus defeats cancer. But we persist in defeating the measles virus! |
| | 16 | 193 million from the EU to free children from the stereotypes of father and mother |
| | 17 | Astonishing: parliament passes the law to check our Facebook profiles |
| | 18 | Italy. The first illegal immigrant mayor elected: “This is how I will change Italian politics” |
| | 19 | INPS: 60,000 IMMIGRANTS IN RETIREMENT WITHOUT HAVING EVER WORKED |
| | 20 | EU: 700 million on 5G, but no risk controls |

It should be noted that the most controversial classification, among the “false” news, is probably article n. 14. The source, “La Voce del Patriota”, is a nationalist blog. The article refers to a factual event: a drugged Muslim man killed an elderly Jewish woman, and the reported homicide is mainly framed as racial, serving a broader narrative that criminalises immigration. When it was published, the trial was only in its first stage, and the acquittal was temporary, waiting for a rebuttal. However, a correction of the news was available at the time of our experiment on some debunking sites; in fact, further investigations dismissed the racial aggravating factors, stressing the drug-altered state of the murderer. This is an example of how difficult it can be to answer a simple “true or false” question. The fact was true, but it was reported incorrectly. Nevertheless, we should recall here that our objective is not to judge people’s ability to tell truth from falsehood but to understand how a social media platform’s contextual information may influence us in making such a decision. Hence, the results we observed comparing users’ activities in different rooms are the core of our motivations.

Table A2.

Additional information regarding the articles. We show the sources for each article of Table A1; in fact, even if the same news could have been reported by several different news outlets, in Room 3, we showed only the source that published the article as it was presented to our volunteers. Moreover, we give here some more information, such as the type of the news outlet (i.e., mainstream, online newspaper, blog, or blacklisted by some debunking sites) and the tags used by fact-checkers upon correction. Such information was not explicitly disclosed to the users.

| | Id | Source | Type | Tagged as |
|---|---|---|---|---|
| True news | 1 | Sostenitori delle Forze dell’Ordine | blacklisted | |
| | 2 | Il Giornale | mainstream | |
| | 3 | Today | mainstream | |
| | 4 | L’antidiplomatico | online newspaper | |
| | 5 | Greenme | online newspaper | |
| | 6 | Il Messaggero | online newspaper | |
| | 7 | CorriereAdriatico | mainstream | |
| | 8 | PostVirale | blog | |
| | 9 | Adnkronos | mainstream | |
| | 10 | TgCom | mainstream | |
| False news | 11 | IlGiornale | mainstream | Wrong Translation–Pseudo-Journalism |
| | 12 | Diario Del Web | online newspaper | Hoax–Alarmism |
| | 13 | ImolaOggi | blacklisted | Hoax |
| | 14 | La Voce del Patriota | blog | Clarifications Needed |
| | 15 | Il Sapere è Potere | blacklisted | Disinformation |
| | 16 | Jeda News | blacklisted | Well Poisoning |
| | 17 | Italiano Sveglia | blacklisted | Hoax–Disinformation |
| | 18 | Il Fatto Quotidaino | blacklisted | Hoax |
| | 19 | VoxNews | blacklisted | Unsubstantiated–Disinformation |
| | 20 | Oasi Sana | blog | Well Poisoning–Pseudo-Journalism |

Appendix A.4. Qualitative Assessment of Biases about Perception of Credibility

Aiming to pair the quantitative analysis we performed in Section 4 with qualitative feedback, we deployed an ex post survey, asking 10 media experts to share their experience and their beliefs about how they perceive news articles online, depending on the auxiliary information they can read. The methodology we used was the Delphi method [58]: it consists of several rounds of surveys (typically two or three) in which domain experts are called to answer questions anonymously, which prevents any expert from influencing their peers through their authority. Between rounds, a facilitator collects and summarises the participants’ answers, which are then reported back to them. In the subsequent round, the experts can be asked to revise their answers or to answer more in-depth questions. The process terminates after a given number of rounds or once convergence is reached. In its original formulation, a Delphi survey was a tool especially suitable for collective forecasting of a given phenomenon based on expert opinions.

For our study, we asked 10 social media and Web experts to answer a few questions designed after the main findings of our analysis. Unlike in a traditional Delphi survey, the goal of this analysis was to collect thoughtful answers from a panel of experts, rather than to reach convergence. Briefly, we asked how the credibility of news can be affected by contextual hints, such as the source of an article, the informative richness of the article’s preview on social networks, and what other users thought of the article; we also asked how often users rely on external fact-checking, and which age groups are more likely to fact-check news. Round 1 questions were designed as open questions, which let participants express their opinion with no constraints; Round 2 questions were designed as multiple choice questions, specifically targeted at the main topics and themes that emerged after Round 1. In the following, we report Round 1’s questions:

- Q1: What are, based on your experience and competencies, the main indicators of the credibility of a news article on social networks?
  Participants spontaneously converged on the source of the article (9 out of 10), followed at a distance by the sources cited by the article, the coverage of the news across diverse sources, and who the social network users are that broke the news.

- Q2: In your experience, what makes you think a news piece could be false?
  Participants pointed out mainly the poor quality of the news (8 out of 10), but also an unreliable publisher (5 out of 10) and a lack of support in other news outlets (3 out of 10).

- Q3: Based on your experience, how do you rate the quality and quantity of information reported in the news previews on social networks?
  Participants found the quality of news previews to be poor (7 out of 10), with a slight tendency to clickbait, regardless of the news publisher. Additionally, the quantity of information was pointed out as insufficient (4 out of 10).

- Q4: Does there exist, according to you, a relationship between news credibility and the response of users on social networks? If you think it exists, could you explain what you think it is?
  A total of 6 out of 10 participants believed that bold claims, especially from false news publishers, result in higher arousal among readers, which can also foster news diffusion. However, 4 out of 10 participants did not acknowledge any direct relationship between credibility and public response.

- Q5: How do you make sure a piece of news is credible when you are in doubt, in your experience as a user?
  Participants unanimously indicated a parallel search on other news sources. Additionally, 4 out of 10 participants mentioned the need for checking on reliable sources, such as debunking websites.

From Round 1, it emerged that the source of a news article can influence its credibility; that text in news previews can be insufficient; that 6 out of 10 experts think that users’ response on social networks can be influenced by false, bold claims; and that the most reasonable method for debunking a piece of news is to search for it on other reliable sources. The Round 2 questions, reported below, address these points more specifically:

- Q1: Based on your knowledge of the domain, do you think that the source a news article is taken from is an important feature for assessing news credibility? Most experts agreed that news outlets play a role in the perception of credibility.

- Q2: From Round 1, it emerged that information contained in news previews on social networks is often poor in terms of quality. Do you think that reading the entirety of an article can bring more useful information to decide whether the article is credible or not? A total of 6 out of 10 experts strongly agreed, while the remaining 4 mildly agreed.

- Q3: From the previous round, a relationship emerged between the credibility of news and users’ response. Based on your knowledge of the domain, do you think that an interface that shows the opinion of the majority of users about the credibility of the news piece can be effective in influencing the opinion of an individual? Experts were divided, as 3 out of 10 answered “Not much”, while 3 out of 10 answered “Very much”.

- Q4: From the previous round, agreement emerged about the need to check the coverage of a news piece on other sources before deciding about its credibility. What do you think is the percentage of users that search for the credibility of some news before making up their minds on it? A total of 4 out of 10 participants indicated “5% to 10%”, while the remaining participants were equally divided between “10% to 20%” and “20% to 30%”.

- Q5: Considering a given percentage of users that check for the credibility of news on other sources, to which one (or more) of the following generations do you think the majority of them belong? Overall, 60% of answers indicated “Millennials (1981–1996)” as the generation more likely to fact-check news online, while only a minority indicated older generations, such as “Gen X (1965–1980)”, or younger ones, such as “Gen Z (1997–2012)” (both at 20%). For almost one-third of respondents, there was no relationship between age and the propensity to search for the same news on different outlets.

Round 2 answers are partly at odds with the quantitative findings of our analysis. The source of news was acknowledged as a very informative feature, confirming our findings (see Section 4). Respondents to the survey agreed that reading an article in its entirety could help determine its credibility; however, results reported in Section 4.2 show that this may be a misconception: users who took the Fakenewslab test performed worse, in terms of accuracy, when confronted with long texts, possibly due to the fatigue of reading excessive information. The survey panellists were divided about the effectiveness of peer pressure on individual judgement, a behaviour well documented in the literature and further confirmed by our analysis. They also underestimated the percentage of users that would search for an article on other venues, which in our test was estimated at around 18%. It must be emphasised, though, that it is impossible to determine whether users who left the browser’s tab and came back later actually fact-checked the news; this finding must therefore be interpreted with caution. Lastly, the panellists correctly indicated young adults as the most likely to carry out a parallel search for the same article on other news sources; one-third of respondents, however, did not acknowledge any relationship between age and propensity to fact-check.

Coupled with the quantitative analysis discussed in Section 4, this qualitative feedback sheds light on the most common misconceptions about how users assess the credibility of news articles online, revealing some interesting asymmetries between what experts think is important and what users actually considered important when taking the Fakenewslab test.
