Author Contributions

Conceptualization, A.D. and H.M.; methodology, A.D.; software, A.D. and H.M.; validation, A.D. and H.M.; formal analysis, A.D. and S.S.; investigation, A.D., H.M. and K.R.; resources, A.D.; data curation, A.D.; writing—original draft preparation, A.D., H.M. and K.R.; writing—review and editing, A.D. and S.S.; visualization, A.D. and M.O.S.; supervision, A.B., T.G. and K.R.; project administration, A.D., T.G. and K.R. All authors have read and agreed to the published version of the manuscript.

Figure 1.

The methodology of the proposed work.

Figure 1.

The methodology of the proposed work.

Figure 2.

The sample text written by Bankim Chandra Chattopadhyay [3].

Figure 3.

The sample text written by Rabindranath Tagore [3].

Figure 4.

The sample text written by Suchitra Bhattacharya [3].

Figure 5.

The sample text written by Sunil Gangopadhyay [3].

Figure 6.

The feature visualizations of one document from each of the four authors using line charts. The x-axis represents the 17 features considered in the experiment, and the y-axis represents the value obtained for each feature. The line chart uses the default color for the feature values.

Figure 7.

The feature visualizations of one document from each of the four authors using imagesc charts. The x-axis represents the 17 features considered in the experiment, and the y-axis represents the value obtained for each feature. The “jet” colormap is used to distinguish the feature values from one another.
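The imagesc charts depend on colour scaling: in MATLAB and Octave, `imagesc` maps the smallest value in the data to the first colormap entry and the largest value to the last. The following Python sketch reproduces that linear scaling for a feature vector, assuming the charts were produced this way. The function name `scale_to_colormap_indices`, the 256-entry colormap, and the example values are illustrative assumptions; drawing the result (for example with a `jet` colormap) is left to a plotting library.

```python
def scale_to_colormap_indices(values, n_colors=256):
    """Map values linearly onto 0-based colormap indices, imagesc-style.

    The minimum value maps to index 0 and the maximum to n_colors - 1.
    n_colors=256 is an assumed colormap length, not taken from the paper.
    """
    lo, hi = min(values), max(values)
    if hi == lo:
        # Constant data: put every value on the first colour (our own choice).
        return [0] * len(values)
    span = hi - lo
    # (v - lo) / span is never negative here, so int() acts as a floor.
    # The maximum would land on n_colors, so clamp it to the last index.
    return [min(n_colors - 1, int((v - lo) / span * n_colors)) for v in values]


# Hypothetical 17-feature vector for one document (not values from the paper).
features = [0.12, 0.40, 0.33, 0.05, 0.91, 0.27, 0.64, 0.18, 0.50,
            0.72, 0.09, 0.36, 0.81, 0.22, 0.58, 0.47, 0.15]
indices = scale_to_colormap_indices(features)
print(indices)
```

Each index then selects one colour from the chosen colormap, so the relative ordering of the feature values is preserved as a colour gradient.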

Figure 8.

The feature visualizations of one document from each of the four authors using pie charts. Each slice represents the value of one of the 17 features used in the experiment. The “hsv” colormap is used to distinguish the feature values from one another.
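In a full pie chart, each feature receives a slice whose angle is proportional to its share of the summed feature values. The sketch below computes those angles and gives each slice an evenly spaced hue, which is the idea behind an hsv-style colormap. The helper names and example values are hypothetical, and the sketch assumes non-negative feature values drawn as a full (not partial) pie.

```python
import colorsys


def pie_slice_angles(values):
    """Angle in degrees of each slice, proportional to its share of the total."""
    if any(v < 0 for v in values):
        raise ValueError("pie charts need non-negative values")
    total = sum(values)
    if total == 0:
        raise ValueError("at least one value must be positive")
    return [360.0 * v / total for v in values]


def evenly_spaced_hues(n):
    """One fully saturated RGB colour per slice, hues spread evenly around the wheel."""
    return [colorsys.hsv_to_rgb(i / n, 1.0, 1.0) for i in range(n)]


# Hypothetical 17-feature vector (illustrative values only).
features = [3, 1, 4, 1, 5, 9, 2, 6, 5, 3, 5, 8, 9, 7, 9, 3, 2]
angles = pie_slice_angles(features)
colours = evenly_spaced_hues(len(features))
for angle, rgb in zip(angles, colours):
    print(f"{angle:6.2f} deg  rgb={tuple(round(c, 2) for c in rgb)}")
```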

Figure 9.

The architecture of the proposed network.

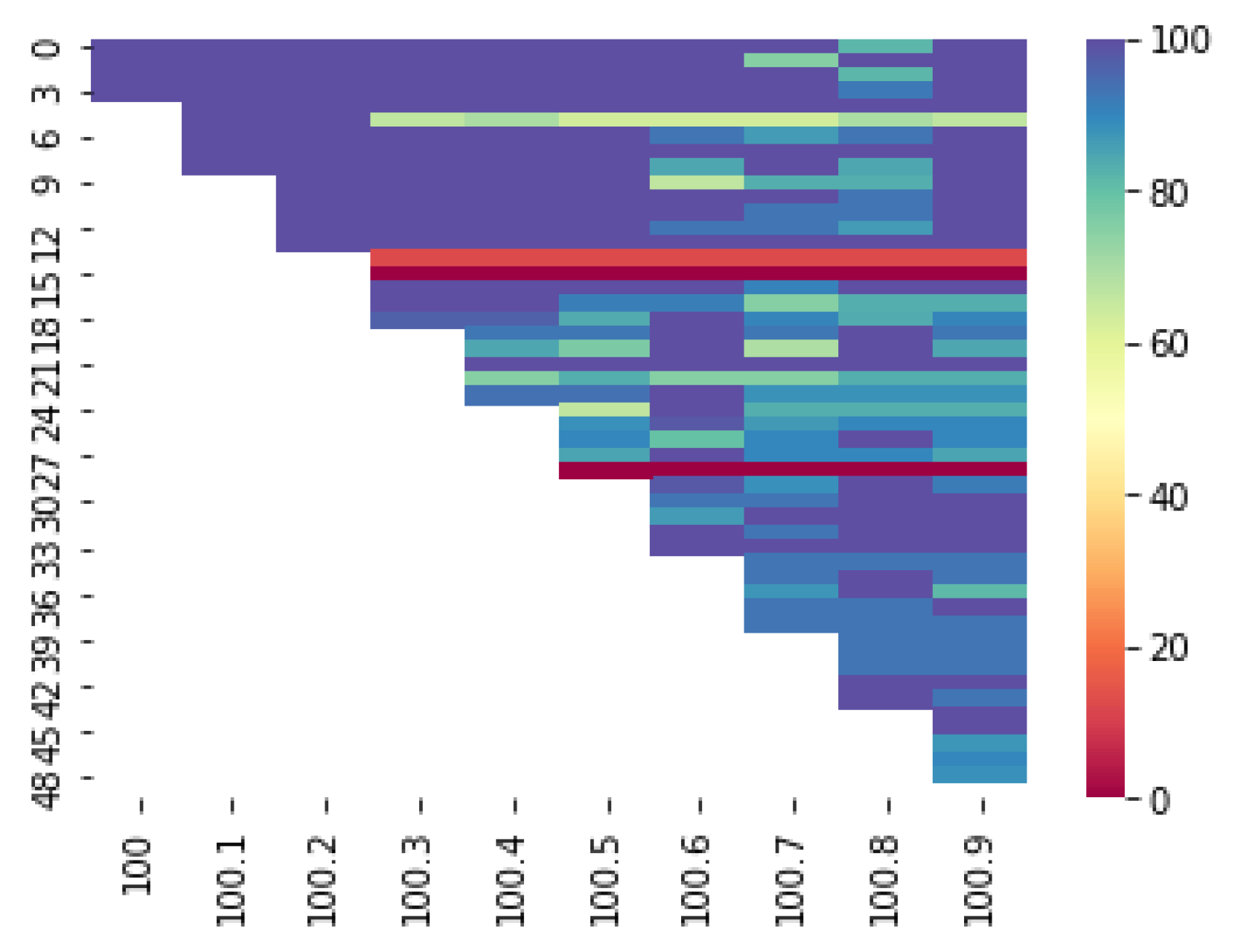

Figure 10.

The heat map obtained for the proposed approach.

Figure 11.

The inter- and intraclass similarity analysis. Four instances from each of four authors are shown: (a)–(d) from Bankim Chandra Chattopadhyay, (e)–(h) from Rabindranath Tagore, (i)–(l) from Suchitra Bhattacharya, and (m)–(p) from Sunil Gangopadhyay. The interclass similarity among the authors is high: instances from different authors, such as (d) and (h) (orange boxes), (j) and (n) (red boxes), and (l) and (p) (green boxes), look very similar, which leads to confusion. Intraclass differences add a further challenge: the pairs (c)–(d), (e)–(f), (i)–(j), and (n)–(o) each come from the same author yet differ noticeably, which further increases the chance of misclassification.

Figure 12.

The relative differences in accuracy as the number of authors increases.

Figure 13.

Heat map obtained for datasets of disparate sizes.

Figure 14.

The heat maps obtained on the Bangla and English datasets. Each heat map is shown for the model that obtained the higher accuracy on that dataset among the two alternative CNN models (Inception for the English dataset and MobileNet for the Bangla dataset).

Figure 15.

Performance of different popular classifiers.

Table 1.

A brief analysis of the literature.

| Reference | Approach | Accuracy (%) |
|---|---|---|
| Qian et al. [4] | Deep learning | 89.20 |
| Mohsen et al. [5] | Deep learning | 95.12 |
| Zhang et al. [6] | Semantic relationship | 95.30 |
| Benzebouchi et al. [7] | Word embeddings and MLP | 95.83 |
| Anwar et al. [9] | LDA model with n-grams and cosine similarity | 93.17 |
| Rexha et al. [10] | Content and stylometric features | 72.00 (confidence) |
| Nirkhi et al. [12] | Unigram and SVM | 88.00 |
| López-Monroy et al. [13] | Bag-of-words model with SVM | 80.80 |
| Sarwar and Hassan [16] | Stylometric features | 94.03 |
| Chakraborty and Choudhury [17] | Graph-based algorithm | 94.98 |
| Digamberrao and Prasad [18] | SMO with J48 algorithm | 80.00 |
| Rakshit et al. [19] | Stylistic feature and SVM | 92.30 |
| Anisuzzaman and Salam [20] | n-gram and NB | 95.00 |

Table 2.

The partitioned dataset illustrating the number of articles for each set.

| No. of Authors | No. of Articles |
|---|---|
| 5 | 55 |
| 10 | 160 |
| 15 | 273 |
| 20 | 368 |
| 25 | 468 |
| 30 | 572 |
| 35 | 753 |
| 40 | 905 |
| 45 | 1055 |
| 50 | 1200 |

Table 3.

The statistical analysis of the data after preprocessing.

| Analysis per Document | Number of Words |
|---|---|
| Maximum | 3753 |
| Minimum | 462 |
| Average | 2882 |
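The statistics in Table 3 are per-document word counts. A minimal sketch of how such figures can be computed is shown below, assuming that words are whitespace-separated tokens after preprocessing. The tokenization rule and the helper name `word_count_stats` are assumptions; the exact counting procedure is not specified here.

```python
from statistics import mean


def word_count_stats(documents):
    """Maximum, minimum and average word count over a collection of documents.

    Words are counted as whitespace-separated tokens (an assumed tokenizer).
    """
    counts = [len(doc.split()) for doc in documents]
    return {"maximum": max(counts), "minimum": min(counts), "average": mean(counts)}


# Toy Bangla "documents" for illustration; the real corpus has 1200 articles.
docs = ["আমার সোনার বাংলা", "আমি", "তোমায় ভালোবাসি"]
stats = word_count_stats(docs)
print(stats)
```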

Table 4.

The number of parameters for different layers of the network.

| Layers | Parameters |
|---|---|
| Convolution1 | 2432 |
| Convolution2 | 4624 |
| Dense1 | 3,936,512 |
| Dense2 | 25,700 |
| Dense3 | 5050 |
| Total | 3,974,318 |
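Each entry in Table 4 can be checked with the standard trainable-parameter formulas. A convolution layer has kernel height × kernel width × input channels × filters weights plus one bias per filter. A dense layer has inputs × units weights plus one bias per unit. Pooling and dropout layers add no parameters. The layer shapes used below are not given in this section: 5 × 5 and 3 × 3 kernels, 32 and 16 filters, a 3-channel input, a 31 × 31 × 16 flattened feature map, 256- and 100-unit hidden layers, and 50 outputs. They are one hypothetical configuration that reproduces every row of the table, so treat them as assumptions rather than the network's documented design.

```python
def conv2d_params(kernel_h, kernel_w, in_channels, filters):
    # One kernel_h x kernel_w x in_channels weight tensor per filter, plus one bias each.
    return kernel_h * kernel_w * in_channels * filters + filters


def dense_params(inputs, units):
    # Full inputs x units weight matrix, plus one bias per unit.
    return inputs * units + units


# Hypothetical layer shapes chosen to match Table 4 (assumptions, not from the paper).
layers = {
    "Convolution1": conv2d_params(5, 5, 3, 32),   # assumed 5x5 kernels on a 3-channel image
    "Convolution2": conv2d_params(3, 3, 32, 16),  # assumed 3x3 kernels, 16 filters
    "Dense1": dense_params(31 * 31 * 16, 256),    # assumed 31x31x16 flattened feature map
    "Dense2": dense_params(256, 100),
    "Dense3": dense_params(100, 50),              # assumed one output per author (50 authors)
}
total = sum(layers.values())

for name, count in layers.items():
    print(f"{name:<13}{count:>12,}")
print(f"{'Total':<13}{total:>12,}")
```

The dense layer fed by the flattened feature map dominates the count, which is why the total is far below the Inception and MobileNet figures in Table 11 despite that single large layer.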

Table 5.

Results obtained for three charts using three feature sets.

| Types of Charts | Statistical Features, Accuracy (%) | Internal Features, Accuracy (%) | Combination of Both, Accuracy (%) |
|---|---|---|---|
| line | 91.92 | 86.67 | 93.58 |
| imagesc | 86.50 | 81.67 | 89.92 |
| pie | 91.75 | 88.42 | 92.42 |

Table 6.

Results obtained for different batch sizes, keeping the image size and number of epochs at 100 and the dropout at 0.5.

| Batch size | 50 | 100 | 150 | 200 |
|---|---|---|---|---|
| Accuracy (in %) | 92.75 | 93.58 | 93.25 | 92.92 |

Table 7.

Results obtained for different image sizes, keeping the batch size and number of epochs at 100 and the dropout at 0.5.

| Image size | 50 | 100 | 150 | 200 |
|---|---|---|---|---|
| Accuracy (in %) | 93.25 | 93.58 | 89.75 | 92.42 |

Table 8.

Results obtained for different numbers of epochs, keeping the batch size and image size at 100 and the dropout at 0.5.

| Epochs | 100 | 150 | 200 |
|---|---|---|---|
| Accuracy (in %) | 93.17 | 93.58 | 93.25 |

Table 9.

Results obtained for different dropout values, keeping the batch size, image size, and number of epochs at 100, 100, and 150, respectively.

| Dropout | 0.1 | 0.2 | 0.3 | 0.4 | 0.5 | 0.6 | 0.7 | 0.8 | 0.9 |
|---|---|---|---|---|---|---|---|---|---|
| Accuracy (in %) | 91.00 | 90.67 | 92.50 | 92.83 | 93.58 | 92.58 | 93.17 | 93.50 | 93.25 |
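Tables 6 to 9 vary one hyperparameter at a time while holding the others fixed. The sketch below reads the reported accuracies and picks the best value from each sweep. It does not retrain anything, and the names `SWEEPS` and `best_setting` are hypothetical. Choosing each hyperparameter independently assumes they do not interact strongly, which a one-at-a-time sweep cannot confirm.

```python
# Accuracies (%) copied from Tables 6 to 9.
SWEEPS = {
    "batch_size": {50: 92.75, 100: 93.58, 150: 93.25, 200: 92.92},
    "image_size": {50: 93.25, 100: 93.58, 150: 89.75, 200: 92.42},
    "epochs": {100: 93.17, 150: 93.58, 200: 93.25},
    "dropout": {0.1: 91.00, 0.2: 90.67, 0.3: 92.50, 0.4: 92.83, 0.5: 93.58,
                0.6: 92.58, 0.7: 93.17, 0.8: 93.50, 0.9: 93.25},
}


def best_setting(results):
    # (value, accuracy) of the highest-accuracy entry; max() keeps the first on ties.
    return max(results.items(), key=lambda item: item[1])


best = {name: best_setting(results) for name, results in SWEEPS.items()}
for name, (value, accuracy) in best.items():
    print(f"{name}: {value} ({accuracy:.2f}%)")
```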

Table 10.

The results obtained for the partitioned datasets.

| No. of Authors | No. of Articles | Accuracy (in %) | Deviation from Previous Accuracy (percentage points) |
|---|---|---|---|
| 5 | 55 | 100.00 | n/a |
| 10 | 160 | 100.00 | 0.00 |
| 15 | 273 | 100.00 | 0.00 |
| 20 | 368 | 94.84 | −5.16 |
| 25 | 468 | 94.44 | −0.40 |
| 30 | 572 | 91.44 | −3.00 |
| 35 | 753 | 94.29 | +2.85 |
| 40 | 905 | 90.50 | −3.79 |
| 45 | 1055 | 93.46 | +2.96 |
| 50 | 1200 | 93.58 | +0.12 |
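The last column of Table 10 is the change in accuracy from the previous (smaller) partition, in percentage points. The sketch below recomputes that column from the reported accuracies as a quick consistency check. Rounding to two decimals matches how the values are printed, and the helper name `deviations` is hypothetical.

```python
# (number of authors, accuracy in %) as reported in Table 10.
ACCURACY_BY_AUTHORS = [
    (5, 100.00), (10, 100.00), (15, 100.00), (20, 94.84), (25, 94.44),
    (30, 91.44), (35, 94.29), (40, 90.50), (45, 93.46), (50, 93.58),
]


def deviations(rows):
    """Accuracy change versus the previous partition, rounded to two decimals.

    The first partition has no predecessor, so its entry is None.
    """
    out = [None]
    for (_, prev), (_, curr) in zip(rows, rows[1:]):
        out.append(round(curr - prev, 2))
    return out


for (authors, acc), dev in zip(ACCURACY_BY_AUTHORS, deviations(ACCURACY_BY_AUTHORS)):
    print(authors, acc, "n/a" if dev is None else f"{dev:+.2f}")
```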

Table 11.

Results obtained for two other CNN models (Inception and MobileNet), compared with the proposed model.

| Model | Parameters | Accuracy (%), English Dataset | Accuracy (%), Bangla Dataset |
|---|---|---|---|
| Inception | 26,524,202 | 84.36 | 77.58 |
| MobileNet | 7,425,994 | 82.96 | 81.58 |
| Proposed | 3,974,318 | 93.52 | 93.58 |

Table 12.

The accuracies obtained by the existing systems on our dataset.

| Reference | Approach | Accuracy (%) |
|---|---|---|
| Rakshit et al. | Semantic and stylistic features + SVM | 90.67 |
| Anisuzzaman and Salam | N-gram + NB | 84.28 |
| Our approach | Image-based features + CNN | 93.58 |

Table 13.

The results obtained on the C50 dataset using the proposed approach.

| Reference | Accuracy (%) |
|---|---|
| Gupta et al. (2019) [44] | 78.10 |
| Nirkhi et al. (2015) [45] | 68.76 |
| Our method | 93.52 |