Abstract

Modern AI technologies rely on statistical learners that follow an empiricist logic and, unlike human minds, use learned non-symbolic representations. Nevertheless, this does not seem to be the right way to make progress in AI. The structure of symbols (the operations by which the intellectual solution is realized) and the search for strategic reference points raise important issues in the analysis of AI. Studying how knowledge can be represented through methods of theoretical generalization and empirical observation is only the latest step in a long evolutionary process. For many years, humans, seeing language as innate, developed symbolic theories. Everything seems to have changed with the advent of Machine Learning. In this paper, after a long analysis of history and of the rule-based and learning-based visions, we investigate syntax as a possible meeting point between the different learning theories. Finally, we propose a new vision of knowledge in AI models based on a combination of rules, learning, and human knowledge.

1. Introduction

Human language arises from biological evolution, individual learning, and transmission. For centuries, humanist scholars have contributed to the preservation of memory and knowledge of the past [1,2] by analyzing symbolic documents with symbolic minds and, today, they are seeking the help of artificial intelligence (AI) to speed up their analyses. Currently, AI “minds” are dominated by non-symbolic, opaque statistical learners that have eliminated symbols from their control strategies. Humanist scholars can hardly steer these statistical models with their knowledge, which is expressed through symbols.

There are thus two theories of how knowledge is handled: the current empiricist trend in AI and the nativist theory of Chomsky [3,4,5]. Empiricist models seem to pay no attention to form, such as the difference between a noun and a verb, because they have no built-in symbolic base structure. Nativists follow a different philosophy: they emphasize form in order to appreciate substance, and they claim that innate structures are present from the moment of birth.

The current trend in AI seems to side with the empiricists, who are building high-performing models. Transformers-models [6] are an example of the empiricist approach: they have no specific a priori knowledge about space, time, or objects other than what is represented in the training corpus. This basic idea is the exact antithesis of the position of Chomsky, who, through universal grammar [3], provided an explanation for the phenomenon of innate linguistic competence.

Moreover, because they represent thoughts and sentences as flat, meaningless vectors rather than as complex symbolic structures such as syntactic trees [3], Transformers-models are a very good test bed for the empiricist hypothesis.

Recently, many works have shown that knowledge acquired only from experience, as in Transformers-models, is superficial and unreliable [7,8], and that these models latch onto shallow heuristics that emerge from their statistical nature [9]. This is nothing new: it had already been pointed out by Lake and Baroni [10]. They argued that Recurrent Neural Networks (RNNs), which are after all the ancestors of Transformers-models, generalize fairly well when the differences between the training and test sets are small, but fail spectacularly when generalization requires systematic compositional skills. Rather than supporting the empiricist view, Transformers-models seem to provide incidental counter-evidence to it. In short, this is not great news for the symbol-free thought-vector view [11]. Vector-based systems can predict word categories, but they do not embody thoughts in a sufficiently reliable way, and the underlying symbolic structures seem to be captured only fairly and imperfectly [12,13]. Transformers-models are a triumph for empiricism, especially in light of the massive resources of data and computation that have been poured into them. However, their shortcomings are a clear sign that it is time to dust off old approaches.

With the aim of studying these phenomena, we propose the KERMIT system [14] (Kernel-inspired Encoder with Recursive Mechanism for Interpretable Trees) as a meeting point between the two theories of knowledge. We propose this architecture with the aim of embedding the long symbolic-syntactic tradition in the modern Transformer architecture. To investigate the origin of knowledge and the achievable performance, we offer a long reflection on the role of knowledge in artificial minds, and we use network science [15] as a means of analyzing human minds.

The contributions of our paper are as follows. First, we try to give “form” to the origins and development of artificial intelligence (Section 2). After this historical investigation, the KERMIT system is introduced as a unit of investigation (Section 3), and KERMIT ⋈ Transformers is tested on classification tasks in different configurations (Section 4). Finally, limitations, weaknesses, and future developments are highlighted in a wide-ranging discussion (Section 5).

2. Related Works

In the last few years, there has been increasing interest in the digitization of many activities previously carried out by humans. Many public and private digitization campaigns have made billions of documents accessible through online tools. This has given rise to new tools for the end users of these data, in which artificial intelligence (AI) plays a key role. Indeed, the volume and size of textual data raise critical issues [1], the most important of which concerns knowledge extraction and inference. To address this problem, Machine Learning (ML) offers an opportunity to improve the processing of information from archives, as proposed by Fiorucci in [2]. Although very accurate and high-performing, ML-based models do not carry with them the long history of linguistic theory, and symbols become merely meaningless numbers. To preserve the richness and uniqueness of the symbols transmitted, it is important to trace the path and ideas behind symbolic minds and data abstraction. Modern currents of thought have classical origins, with both nativist and empiricist theories going back to Plato and Aristotle.

Plato, the father of nativism, originated the idea of innateness, and many of his works deal with the theory of inborn knowledge. More specifically, the Athenian philosopher presented his thinking in the dialogue Meno. By posing a mathematical puzzle to a slave who did not know the principles of geometry, in the discussion known as The Learner’s Paradox, Plato argued that concepts and ideas are present in the human mind before birth. Therefore, “seeking and learning is in fact nothing but recollection”. Plato tried to explain the innateness of knowledge in the human brain by defining it as a “receptacle of all that comes to be” [16]. In this space, matter takes form and symbols take on meaning thanks to the ideas and thoughts innately embedded in the human brain.

Centuries later, Noam Chomsky, studying symbols in the form of linguistic phenomena and following nativist theories, argued that human children could not acquire human language unless they were born with a “language acquisition device” [3], or what Steven Pinker [17] called a “language instinct”. From this school of thought derive the theories of symbolic syntactic interpretation, namely Chomsky grammars [4,5]. Over the years, theories confirming the universal structural basis of language [18] have evolved and produced increasingly advanced models, such as rule-based and statistical parsers [19,20]. Until a few years ago, these were the only means of working with language. These tools required a great deal of manpower: tokenization, lemmatization, and a series of repetitive, low-value additional operations performed by humans. These approaches were successful, but they require careful modeling and a great deal of time and resources [21].

On the other side of the coin, Aristotle in the “Physics” revised the ideas of his mentor Plato on the difference between “matter” and “form” [22]. Aristotle broke with the theories of innatism and in later works defined theories based on experience. In Aristotle’s “Logic” [23] we find ideas close to those of today’s AI, because he held that the study of thought is the basis of knowledge. In fact, Aristotle, reflecting on the processes of forming concrete proofs, developed a non-formalized system of syllogisms and used them in the design of proof procedures. Aristotle’s ideas were the founding pillars of the formal axiomatization of logical reasoning which, combined with “tabula-rasa” knowledge, allows human beings to think; this view of thinking as a physical system can be seen as a precursor of ML. In this philosophy of thought, matter takes shape through experience, so symbols take on meaning after a process of acquisition. These ideas have been handed down over the centuries through the thought of Roger Bacon, Thomas Aquinas, John Locke, and Immanuel Kant and, in modern times, through the developmental psychologist Elizabeth Bates [24] and the cognitive scientist Jeff Elman [25]. What unites them is something like a “tabula-rasa” view, according to which our knowledge comes from experience provided through the senses, an argument reminiscent of the ML approach. To make text computable, [26] proposed encoding text by counting word occurrences. This approach turned out to be very superficial, and later works [27,28,29] used purpose-built neural networks and large corpora to build distributed representations of text. Recently, these representations have been built with Transformers-models [6]. 
Architectures such as Bidirectional Encoder Representations from Transformers (BERT) [30] and Generative Pre-trained Transformer (GPT) [31] are based on stacks of Transformer encoder or decoder layers, respectively. BERT appears to have suitable mechanisms for learning universal, task-independent linguistic representations. Although these models achieve extraordinary results, knowledge from experience alone does not seem to be enough. Statistical learners are very good students as long as the tasks are superficial and “simple”. However, when the bar is raised and the task becomes more difficult, their limitations emerge [9]. Moreover, the knowledge acquired by Transformers-models seems to be superficial and unreliable [7,8].

To briefly summarise, there seems to be a huge gap between nativist and empiricist theory. This gap is also reflected in how the world is represented: the former make heavy use of symbols, while the latter use dense vectors. In the field of representation, this gap is nothing new: Zanzotto et al. [32] also describe a clear division between the symbols used in older representations and the numbers and vectors used in newer ones.

However, as we will see, this is not entirely true, because the gap is not so huge. Human beings seem to have an innate mechanism that is ready to adapt (Section 3.3); consequently, representations are not so radical and rigid either, as several representations can coexist at the same time.

To test this hypothesis, we propose the KERMIT system [14]. This architecture can be used to analyze two important aspects of the two great visions of language development and knowledge. In Section 3, after an extensive review of the theories on the origin of knowledge, we propose a view of knowledge as a function (Section 3.4) and study the KERMIT system as a unit of investigation (Section 3.5) for hybrid knowledge between symbols and experience.

3. Our Point of View

Digitization and word embedding techniques are degrading the uniqueness of textual data. Although ongoing research on new technologies for representing textual language seems to yield more accurate models, these models do not extend to all conditions, and the uniqueness of symbols does not always seem to be preserved. Before the advent of Artificial Intelligence (AI), the only means of study was the human mind, dominated by symbols, whereas modern AI technologies have canceled symbols out. To investigate the origins and future developments of Machine Learning and Natural Language Processing, in the following sections we examine the nativist and empiricist viewpoints, the representations through which these theories manifest themselves, and a point of intersection between them.

3.1. Innateness

There is much evidence suggesting the presence of innateness. Although languages vary, they share many universal structural properties [3,18]. For this reason, we ask whether AI is heading in the right direction or whether innate mechanisms are needed. To answer this question, we dust off the ancient linguistic theories. Theories of innateness were started, implicitly, by Plato and transmitted through the centuries to the present day with the studies of Chomsky [3,4]. Chomsky claimed that there is a universal grammar common to everyone [3] and that humans are endowed at birth with a kind of innate machine that is already initialized [4]. To soften this strong view of innateness, one can say that humans, unlike animals, are predisposed to learn, starting from the innate mechanism with which they are endowed from birth. Even if experience plays a part in the processing of linguistic knowledge, the underlying structures can be defined by recurrent and universal patterns [33].

In the early 2000s, these theories were advanced by Cristianini et al. [34], Moschitti [35], and Collins et al. [36]. To understand the relationships between underlying structures, they worked on kernel functions that measure the similarity between structures by counting their common subtrees. Long-standing studies on syntactic theories had produced very good representations that are quite light from a computational point of view but, unfortunately, not directly usable by learning models because they are not computable. In the following years, a number of methodologies were developed to make syntactic structures computable [37,38,39].
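To make the idea of counting common subtrees concrete, the following is a minimal sketch of a subset-tree kernel in the style of Collins and Duffy [36]. Trees are encoded as nested tuples `(label, child, ...)` with words as plain strings; this encoding, the helper names, and the decay factor `lam` (a penalizing factor that down-weights large fragments) are assumptions for illustration, not the implementation used in the cited works.

```python
from itertools import product


def nodes(tree):
    """Yield every internal node (a tuple) of a tree; words are plain strings."""
    yield tree
    for child in tree[1:]:
        if isinstance(child, tuple):
            yield from nodes(child)


def production(node):
    """Return the grammar production at a node, e.g. ('NP', ('D', 'N'))."""
    return node[0], tuple(c if isinstance(c, str) else c[0] for c in node[1:])


def is_preterminal(node):
    """A preterminal node dominates only words, e.g. ('D', 'the')."""
    return all(isinstance(c, str) for c in node[1:])


def delta(n1, n2, lam):
    """Weighted number of common fragments rooted at both n1 and n2."""
    if production(n1) != production(n2):
        return 0.0
    if is_preterminal(n1):
        return lam
    result = lam
    for c1, c2 in zip(n1[1:], n2[1:]):
        if isinstance(c1, str):  # identical words (productions match): factor 1
            continue
        result *= 1.0 + delta(c1, c2, lam)
    return result


def tree_kernel(t1, t2, lam=1.0):
    """Sum delta over all node pairs; with lam=1 this counts shared subtrees."""
    return sum(delta(n1, n2, lam) for n1, n2 in product(nodes(t1), nodes(t2)))


# Two noun phrases sharing the NP production and the fragment (N dog).
t1 = ("NP", ("D", "the"), ("N", "dog"))
t2 = ("NP", ("D", "a"), ("N", "dog"))
print(tree_kernel(t1, t1))  # 6.0: every fragment of t1 matches itself
print(tree_kernel(t1, t2))  # 3.0
```

The count for `t1` against itself is 6 because the tree has four fragments rooted at NP plus the two preterminals; representations of this kind are exactly what later methods tried to make computable as vectors.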

3.2. No-Innateness

On the other side of the coin, there is the theory derived from the thoughts of Aristotle, who can be classed as a “tabula rasa” empiricist, since he rejects the claim that we have innate ideas or principles of reasoning. These principles are widely shared by supporters of statistical learners.

Today we can see this at work in Transformers-models [6]: they are based only on experience and seem to achieve state-of-the-art results in many downstream tasks. Transformers-models rely only on the knowledge derived from the experience they gain on a huge corpus. This way of working follows the empiricist theories widely studied since Aristotle. Thus, Transformers-models are taken as evidence that these theories can work, as they suggest that knowledge can be constructed from experience alone. All this is fine if, and only if, things work out.

Unfortunately, this is not always the case, since these architectures, although very good learners, tend to adopt overly shallow heuristics [9]. The knowledge they learn is very superficial [7,8]: in hostile contexts, where a shift in word sense or a negation can completely change the meaning of a sentence, they fail, and they perform well on long contexts only when many resources are available [40]. This stems from the fact that, for these models, the only thing that matters is optimizing a cost function. Thus, Transformers-models provide a representation ready to be used by neural networks that seems to encode syntactic and semantic information [13,41], but in unclear ways and at a very high computational cost.

3.3. The Truth Lies in the Middle

Thinkers of every era would recognize that innate talent and experience are not separate but work together. Like nativists, empiricists would not doubt that we are born with specific biological machinery that enables us to learn. Indeed, Chomsky’s famous “Language Acquisition Device” [3] can, from another viewpoint, be seen precisely as an innate learning mechanism. Ultimately, several authors have supported the stance that a significant part of the innate knowledge provided to humans consists of learning mechanisms, that is, a form of innateness that enables learning [17,42,43]. These insights show how closely the two theories are intertwined in human minds.

An interesting question relates to the basic argument of this article: do artificially intelligent systems need to be equipped with significant amounts of innate machinery, or is it sufficient, given the powerful Machine Learning systems recently developed, for such systems to work in “tabula-rasa” mode? This question has been investigated for years by researchers in various fields, from psychology and neuroscience to traditional linguistics and today’s natural language processing.

Some studies, such as those of Spelke [44], claim that children are endowed early in life with a “knowledge base”. Indeed, children have some ability to track and reason about objects, which is unlikely to arise through associative learning. The brain of an 8-month-old child seems able to learn and identify abstract syntactic rules after only a few minutes of exposure to artificial grammars [45]. Other work has suggested that deaf children can invent language without any direct model [46], and that language can be selectively impaired even in children with normal cognitive function [47].

Humans are not precise machines, so many questions remain unanswered. We do not know whether at birth we are equipped with Chomsky’s machine or with a machine set up for learning language. Moreover, even if we could demonstrate innatism, it would not mean that there is no learning.

Most likely, innateness means that any learning takes place against a background of mechanisms that precede learning. This raises the question: can such “innate machinery” be an ingredient for a human-like artificial intelligence?

3.4. Knowledge as Function

There is evidence supporting both empiricism and nativism, but there is also evidence that each is only a starting point for the development of knowledge and that there is a middle way between the two streams of thought, as discussed in Section 3.3.

To understand its ingredients in depth, we can define knowledge as a function:

$$K = f(k, e, r, t, m, i) \qquad (1)$$

In Equation (1), k denotes a kind of background knowledge, called initialization knowledge, while e denotes the knowledge derived from experience. The symbols r and t denote the representation of the innate part and the representation of the non-innate part. Additionally, m denotes the mechanisms and underlying algorithms used to arrive at K. Finally, i denotes interpretability, a very important component because it acts as a hook that allows human or artificial brains to explain K.

In a radical tabula rasa scenario, one would set k, i, t, and r to zero, set m to some extremely minimal value (e.g., an operation for adjusting weights relative to reinforcement signals), and leave the rest to experience. This very radical view is also held by one of the fathers of deep learning in LeCun et al. [11] and is applied in Transformers [6]. The same argument, with the roles of the variables exchanged, could be made following Chomsky’s ideas; in this view, the variables r and e are almost zero.

What AI researchers in general think about the values of k, e, r, m, i, and t is largely unknown, in part because few researchers ever discuss the question explicitly, probably because, as Marcus argues [43], people get wrapped up in the practicalities of their immediate research and do not really tend to think about nativism at all.

Many researchers believe that methods for incorporating prior knowledge in the form of symbolic rules are too cumbersome and, while very useful from an engineering point of view, do not contribute to a plausible theory of general intelligence. However, some conditions delineate the boundary. The “No Free Lunch” theorem [48,49] effectively shows that the above variables cannot be exactly zero: every system generalizes in a different way depending on which initial algorithm is specified, and no algorithm is best across all problems. This ties in with Section 3.3: although on paper a scenario may look radical enough to set some variables of Equation (1) to zero, in practice this is not exactly the case. Yet, although no variable seems to be truly zero, it is rare to find an architecture in which all these variables coexist.

3.5. KERMIT as a Meeting Point

For this reason, it would be interesting to understand and experiment with all these variables combined in one system. A suitable environment for testing this proposal is an artificial brain, because it makes it possible to measure the weight of each variable quantitatively. Furthermore, this artificial brain must make it possible to investigate what has happened, rather than functioning as a black box.

An answer can be found in KERMIT ⋈ Transformers, that is, the Kernel-inspired Encoder with Recursive Mechanism for Interpretable Trees (KERMIT) [14] combined (⋈) with the powerful Transformer architecture [6].

This system is a trade-off between the long tradition of linguistic theory and modern universal sentence embedding representations. Using this framework, we try to combine knowledge derived from universal syntactic interpretations with knowledge derived from the experience encoded in universal sentence embeddings. These two components recall the two theories on the origin of linguistic knowledge. All the variables of Equation (1) are present, and they are given the same weight. Basic knowledge, denoted by k, is provided by the symbolic part. Experience, denoted by e, comes from the Transformer part. The representation, denoted by r, comes directly from the encoding of experience, while the symbolic representation is obtained through the underlying mechanisms that encode the tree representation t in a computable form. Finally, interpretability, denoted by i, is also guaranteed: the framework provides a heat parse tree, which is discussed below.

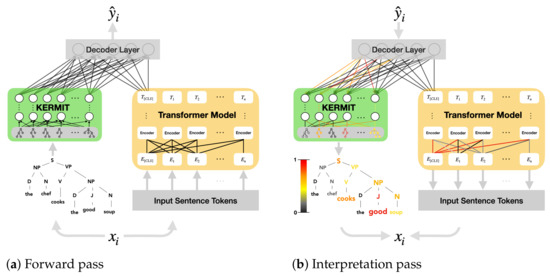

Figure 1 shows how KERMIT ⋈ Transformers works. Figure 1a shows the input of KERMIT ⋈ Transformers in its symbolic form and in its Transformers-models form; the two inputs are then joined in a special decoder layer. To help the human observer understand the framework, Figure 1b shows a second mechanism, which highlights the most activated parts of the symbolic input, that is, the parts that contributed most to the classifier’s final decision. This part is very important because it allows KERMIT’s predictions to be investigated. The tool for investigating predictions is KERMITviz [50]. Using Layer-wise Relevance Propagation (LRP) [51], an important feature-importance explanation technique, KERMITviz draws weighted trees, displaying the weights with a color scale ranging from red to black according to the importance of each feature.

Figure 1.

The KERMIT ⋈ Transformers architecture. During the interpretation pass KERMITviz is used to produce heat parse trees, while a transformer’s activation visualizer is used for the remainder of the network.
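The relevance scores behind KERMITviz’s heat parse trees come from LRP [51]. As a rough illustration, the following sketch applies the standard LRP-ε rule to a single linear layer, redistributing the relevance of each output back to the inputs in proportion to their contributions a_i·w_ij. It is a generic textbook version of the rule, not KERMITviz’s code; the function name, the ε value, and the toy weights are assumptions.

```python
def lrp_epsilon(activations, weights, biases, relevance_out, eps=1e-6):
    """Propagate relevance through one linear layer z_j = sum_i a_i * w[i][j] + b_j.

    LRP-epsilon rule: R_i = sum_j a_i * w[i][j] / (z_j + eps * sign(z_j)) * R_j.
    """
    n_in, n_out = len(activations), len(biases)
    # Forward pass: pre-activations of the layer.
    z = [sum(activations[i] * weights[i][j] for i in range(n_in)) + biases[j]
         for j in range(n_out)]
    relevance_in = [0.0] * n_in
    for j in range(n_out):
        # The epsilon stabilizer pushes the denominator away from zero.
        denom = z[j] + (eps if z[j] >= 0 else -eps)
        for i in range(n_in):
            relevance_in[i] += activations[i] * weights[i][j] / denom * relevance_out[j]
    return relevance_in


# Toy example: 3 input features (e.g. tree fragments), 2 output classes.
a = [1.0, 0.5, 0.0]
w = [[0.8, -0.2],
     [0.4, 0.6],
     [0.9, 0.1]]
b = [0.0, 0.0]
# Explain class 0 only: its whole score becomes the relevance to redistribute.
z0 = sum(a[i] * w[i][0] for i in range(3))
r = lrp_epsilon(a, w, b, [z0, 0.0])
print([round(x, 3) for x in r])  # → [0.8, 0.2, 0.0]
```

Because the bias is zero here, the input relevances sum (up to ε) to the explained score; the inactive third feature receives no relevance. Mapping each relevance value to a color scale gives the kind of heat map that KERMITviz draws over tree fragments.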

To support the hypothesis that the two kinds of knowledge can be combined, we can look at the results obtained in the original KERMIT paper [14], where KERMIT ⋈ Transformers was tested in different configurations using BERT [30] as the Transformers-models part.

In addition, we strengthened some scenarios to observe the knowledge gained from experience. To this end, we exploit BERT more fully: it is used not only as an encoder but is also trained during the learning phase. Finally, the two parts are merged into a single layer that selects the most important features. These experiments are introduced in Section 4.

With KERMIT ⋈ Transformers, we can test how the nativist and empiricist aspects work together to produce new, holistic, and enriched forms of knowledge. In Section 4, we analyze this hypothesis and try to understand how much knowledge an AI requires, how much must already be encoded, and how much must be left to be learned.

4. Experiments

To investigate whether KERMIT ⋈ Transformers can be used as a meeting point between empiricist and nativist theory, we exploited the potential of Transformers-models.

We used the KERMIT ⋈ Transformers architecture to answer the following research questions: (1) Can innate symbolic knowledge encoded in neural networks be used as a means of enriching knowledge? (2) Does symbolic innate knowledge encode different knowledge from that learned by experience and embedded in vectors? (3) Is it easier to understand the learning process of statistical learners through symbolic knowledge, governed by known structures, than through embedded vectors?

To answer the above questions, we experimented with our KERMIT ⋈ Transformers architecture using the state-of-the-art pre-trained Transformer BERT [30]. We used the code provided with KERMIT [14], which is publicly available (https://github.com/ART-Group-it/KERMIT, accessed on 16 October 2021). We repeated the same experiments as in KERMIT and added further data and experiments, as follows.

As in KERMIT, we tested the architecture in two different settings: (1) a completely universal setting, in which KERMIT and BERT are trained only in the last decision layer; (2) a task-adapted setting, in which KERMIT is equipped with deeper multi-layer perceptrons and the Transformers parameters are learned in different layers. We experimented with four public datasets in which we believe syntactic information is relevant to the task.

The rest of this section describes the experimental set-up and the quantitative experimental results.

4.1. Experimental Set-Up

The following subsections describe the KERMIT, BERT, and KERMIT ⋈ Transformers parameters and configurations, followed by the datasets used and the preprocessing performed.

4.1.1. KERMIT

In this work, KERMIT is used as an encoder of syntactic information which, as discussed above, symbolizes the innate part. This encoder stems from tree kernels [36] and distributed tree kernels [52]. The basic idea is to represent parse trees in low-dimensional vector spaces that embed the huge space of subtrees $\mathbb{R}^n$, where n is the (huge) number of different subtrees.

The encoder is based on an embedding layer for the tree node labels and on a recursive encoding function built on the shuffled circular convolution introduced by Zanzotto and Dell’Arciprete [52]. The embedding layer contains an untrained encoding function that maps the one-hot vectors of the tree nodes into random vectors, so that each subtree obtains a unique vector. Once the encodings of all the components of the input are defined, the subtree representations are combined using dynamic programming [14].
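A minimal sketch of the idea, assuming the distributed tree recursion of [52] in simplified form: node labels get fixed random vectors, ⊗ is a circular convolution whose operands are first permuted by two fixed random permutations (which makes it non-commutative), and each node contributes $s(n) = \sqrt{\lambda}\, n \otimes (c_1 + s(c_1)) \otimes \dots \otimes (c_k + s(c_k))$, with words contributing zero. The class name, the dimension, λ = 0.4, and the seeds are illustrative assumptions, not KERMIT’s actual parameters or code.

```python
import math
import random


def circular_convolution(a, b):
    """c[k] = sum_j a[j] * b[(k - j) mod d]; O(d^2), fine for a toy dimension."""
    d = len(a)
    return [sum(a[j] * b[(k - j) % d] for j in range(d)) for k in range(d)]


class DistributedTreeEncoder:
    """Hypothetical toy encoder illustrating distributed trees (not the KERMIT code)."""

    def __init__(self, dim=64, lam=0.4, seed=0):
        self.dim = dim
        self.sqrt_lam = math.sqrt(lam)  # illustrative penalizing factor
        self.rng = random.Random(seed)
        # Two fixed random permutations turn plain convolution into a shuffled one.
        self.perm1 = self.rng.sample(range(dim), dim)
        self.perm2 = self.rng.sample(range(dim), dim)
        self.label_vectors = {}

    def vector(self, label):
        """Untrained embedding: one fixed random vector (norm close to 1) per label."""
        if label not in self.label_vectors:
            sd = 1.0 / math.sqrt(self.dim)
            self.label_vectors[label] = [self.rng.gauss(0.0, sd) for _ in range(self.dim)]
        return self.label_vectors[label]

    def shuffled_conv(self, a, b):
        """a (x) b = conv(P1 a, P2 b): non-commutative, unlike plain convolution."""
        return circular_convolution([a[i] for i in self.perm1],
                                    [b[i] for i in self.perm2])

    def encode(self, tree):
        """Sum of s(n) over internal nodes, computed in one bottom-up pass."""
        total = [0.0] * self.dim

        def s(node):
            nonlocal total
            if isinstance(node, str):  # words contribute no fragment of their own
                return [0.0] * self.dim
            acc = self.vector(node[0])
            for child in node[1:]:
                label = child if isinstance(child, str) else child[0]
                # s(child) is computed once and serves both the parent and the total.
                term = [x + y for x, y in zip(self.vector(label), s(child))]
                acc = self.shuffled_conv(acc, term)
            s_node = [self.sqrt_lam * x for x in acc]
            total = [x + y for x, y in zip(total, s_node)]
            return s_node

        s(tree)
        return total


# Example: encode a small parse tree into a 64-dimensional vector.
encoder = DistributedTreeEncoder(dim=64, lam=0.4, seed=1)
tree = ("NP", ("D", "the"), ("N", "dog"))
vec = encoder.encode(tree)
print(len(vec))  # 64
```

Whatever the size of the tree, the output has a fixed dimension, which is what makes the symbolic input usable by the rest of a neural network; reusing each child’s s-value inside a single recursive pass plays the role of the dynamic-programming step.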

The KERMIT encoder was tested on a distributed representation space, with the penalizing factor set to the value estimated as best in [35]. The symbolic representations, in this case the syntactic trees that constitute the input of the KERMIT encoder, were obtained using the Stanford constituency parser, more precisely the probabilistic context-free grammar parser included in CoreNLP [53].

4.1.2. BERT

For the empiricist part, we used the best-known Transformers-models architecture, BERT [30], which allows the use of pre-trained weights.

The pre-training phase is long and requires a lot of computational power, but it can be avoided by using the pre-trained versions provided by Huggingface [54]. To solve a text classification task, it is sufficient to use a pre-trained version as an encoder, in transfer-learning mode. The architecture produces one embedding vector per token; the one of interest is associated with the special [CLS] token. This 768-dimensional [CLS] vector is then used as input to a custom classifier, which outputs a vector whose size equals the number of classes in the classification task.

In our work, the following pre-trained Transformers-models weights were used: (1) BERT, in the uncased setting with the pre-trained English model; (2) BERT, with the same settings as the first. The models come from the Huggingface library [54]. The input text for both models was preprocessed and tokenized as specified in Devlin et al. [30].

4.1.3. KERMIT ⋈ Transformers

Having described KERMIT and BERT individually, we now describe KERMIT ⋈ Transformers and its configurations. The decoder layer of our KERMIT ⋈ Transformers architecture is a fully connected layer with a softmax activation function, applied to the concatenation of the KERMIT output and the final [CLS] token representation of the selected Transformers model. Finally, the optimizer used to train the whole architecture is AdamW [55] with the learning rate set to .
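The decoder layer described above can be sketched in plain Python as follows: the KERMIT vector and the [CLS] vector are concatenated, passed through one fully connected layer, and normalized with softmax. The tiny dimensions and weights are placeholders (in the paper the [CLS] vector has 768 dimensions), and the function names are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def joint_decoder(kermit_vec, cls_vec, weights, biases):
    """Fully connected layer + softmax over the concatenation [kermit; cls].

    `weights` has one row per class, each of length len(kermit_vec) + len(cls_vec).
    """
    x = list(kermit_vec) + list(cls_vec)  # concatenation of the two views
    for row in weights:
        if len(row) != len(x):
            raise ValueError("weight rows must match the concatenated input size")
    logits = [sum(w_i * x_i for w_i, x_i in zip(row, x)) + bias
              for row, bias in zip(weights, biases)]
    return softmax(logits)


# Toy sizes: 3-dim KERMIT output, 2-dim [CLS] vector, 2 classes.
kermit_vec = [0.5, -1.0, 0.25]
cls_vec = [1.0, 0.0]
W = [[0.2, 0.1, 0.0, 0.5, -0.3],
     [-0.1, 0.4, 0.2, 0.0, 0.1]]
b = [0.0, 0.0]
probs = joint_decoder(kermit_vec, cls_vec, W, b)
print(probs)
```

Concatenation keeps the symbolic and the experiential views side by side, so the learned weights of this single layer decide how much each view contributes to every class score.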

In the completely universal setting, KERMIT is composed only of the first lightweight encoder layer, that is, the grey layer in the green block in Figure 1. To study universality, the Transformers’ weights are frozen to prevent the representation from drifting toward the data distribution of the task.

KERMIT ⋈ Transformers is trained with a batch size of 125 for 50 epochs. Each experiment was repeated 5 times with 5 different fixed seeds, and the results were averaged. This approach is designed to assess whether the symbolic knowledge provided by the KERMIT part adds information different from that learned through experience. Moreover, we want to understand whether symbolic-syntactic interpretations are a viable way to make statistical learners such as neural networks more accurate.

In the first setting, we used two different BERT architectures: BERT, as also proposed in the KERMIT paper [14], and, in addition, BERT. We trained different layers of these architectures, so that BERT can adapt the knowledge embedded in its vectors, also called universal sentence embeddings, to include task-specific information. This information, learned during the training phase, is a surplus. Our goal is to understand how much influence a double configuration of BERT, that is, BERT, could have.

The KERMIT side was also extended and adapted, using two different multi-layer perceptrons: (1) a funnel MLP with two linear layers that brings the 4000 units of the KERMIT encoder down to 200 units through an intermediate layer of 300 units (KERMIT); (2) a diamond MLP with four linear layers forming a diamond shape of 4000, 5000, 8000, 5000, and finally 4000 units (KERMIT). Both variants use ReLU [56] activation functions and dropout [57] set to 0.25 for each layer.
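To give a sense of scale, the following sketch counts the weights and biases of the two MLP heads from the layer widths given above, assuming standard fully connected layers with one bias per output unit (ReLU and dropout add no parameters). The helper names are hypothetical.

```python
def mlp_shapes(widths):
    """Return (in_features, out_features) for each linear layer in a stack."""
    return list(zip(widths[:-1], widths[1:]))


def mlp_parameters(widths):
    """Weights plus biases of fully connected layers with the given widths."""
    return sum(n_in * n_out + n_out for n_in, n_out in mlp_shapes(widths))


funnel = [4000, 300, 200]                  # two linear layers
diamond = [4000, 5000, 8000, 5000, 4000]   # four linear layers

print(mlp_shapes(funnel))        # [(4000, 300), (300, 200)]
print(mlp_parameters(funnel))    # 1260500
print(mlp_parameters(diamond))   # 120022000
```

Under these assumptions the diamond head alone has about 120 million parameters, on the order of BERT-base (about 110 million), whereas the funnel head has about 1.3 million; this difference is worth keeping in mind when comparing the two task-adapted variants.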

We trained the overall model in two settings: a one-epoch training session and a normal training session. Apart from possible overfitting issues, this choice is motivated by the desire to test how accurate the model’s predictions can be under minimal learning conditions, and thus how important the part of KERMIT we assumed to be innate could be. In the normal training session, we trained the architecture for 5 epochs with batches of 32. The evaluation metric is accuracy, defined as the ratio of the number of correct predictions to the total number of inputs.
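The evaluation protocol, accuracy averaged over runs with different fixed seeds, can be sketched as below. The `run_experiment` function and its simulated predictions are hypothetical placeholders for an actual training and testing run; only the metric and the averaging are meant literally.

```python
import random
from statistics import mean, stdev


def accuracy(predictions, labels):
    """Number of correct predictions divided by the total number of inputs."""
    if len(predictions) != len(labels) or not labels:
        raise ValueError("predictions and labels must be non-empty and aligned")
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


def run_experiment(seed, labels):
    """Hypothetical stand-in for training + testing: noisy predictions per seed."""
    rng = random.Random(seed)
    # Keep the true label 80% of the time, otherwise guess among 4 classes.
    return [y if rng.random() < 0.8 else rng.randrange(4) for y in labels]


labels = [i % 4 for i in range(1000)]   # toy 4-class test set
seeds = [0, 1, 2, 3, 4]                 # five fixed seeds
scores = [accuracy(run_experiment(s, labels), labels) for s in seeds]
print(f"accuracy: {mean(scores):.3f} +/- {stdev(scores):.3f}")
```

Fixing the seeds makes each run reproducible, while averaging over several of them reduces the influence of any single lucky or unlucky initialization.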

We ran the experiments on two machines: an older desktop with a 4-core Intel Xeon E3-1230 CPU, 62 GB of RAM and one Nvidia 1070 GPU with 8 GB of onboard memory; and a dedicated server with a 32-core IBM PowerPC CPU, 256 GB of RAM and two Nvidia V100 GPUs with 32 GB of onboard memory each.

4.1.4. Datasets

To verify our model, we experimented with four classification tasks [58] (http://goo.gl/JyCnZq, accessed on 16 October 2021) that should be sensitive to syntactic information, using the versions available on huggingface.co. The tasks are: (1) AGNews, a news classification task with 4 target classes; (2) DBPedia, a classification task over Wikipedia with 14 classes; (3) Yelp Polarity, a binary sentiment classification task on Yelp reviews; and (4) Yelp Review, a sentiment classification task with 5 classes. Given the computational constraints of the lighter system setting, we created smaller versions of the original training datasets by randomly sampling 11% of the examples while keeping the class balance of the original versions. In this scenario, accuracy seemed to be the most appropriate metric for estimating performance. The number of examples and their distribution across classes are shown in Table 1.
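Sampling 11% of the training examples while keeping the original class balance corresponds to stratified sampling per class. A minimal sketch, not the authors' script: the function name, the per-class rounding rule (round, but keep at least one example per class) and the fixed seed are assumptions.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Example = Tuple[str, int]  # (text, label)


def stratified_sample(
    examples: Sequence[Example], fraction: float = 0.11, seed: int = 0
) -> List[Example]:
    """Sample `fraction` of the examples from each class, preserving class proportions."""
    by_label: Dict[int, List[Example]] = defaultdict(list)
    for example in examples:
        by_label[example[1]].append(example)

    rng = random.Random(seed)
    sample: List[Example] = []
    for label in sorted(by_label):
        group = by_label[label]
        k = max(1, round(len(group) * fraction))  # at least one example per class
        sample.extend(rng.sample(group, k))
    rng.shuffle(sample)  # avoid class-ordered batches
    return sample


if __name__ == "__main__":
    # Toy balanced dataset with 4 classes of 1000 examples each (as in a 4-class task)
    data = [(f"text {i}", i % 4) for i in range(4000)]
    subset = stratified_sample(data)
    print(len(subset))
```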

Table 1.

Number of examples, number of classes, average number of examples per class, and variance of the distribution of examples across classes in the training and test datasets.

4.2. Results

The results suggest that innate knowledge derived from universal syntactic interpretations can help complement the knowledge derived from experience, that is, from universal sentence embeddings.

This conclusion is based on the following observations on Table 2, which reports the accuracy, that is, the number of correct predictions out of total predictions, of the different models on the different datasets. Indeed, in three out of four cases, the KERMIT ⋈ Transformers model seems to perform better than KERMIT and BERT alone.

Table 2.

Universal Setting—Average accuracy and standard deviation on four text classification tasks. Results derive from 5 runs. ☆, ⋄, † and * indicate a statistically significant difference between two results with a 95% confidence level with the sign test.
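The sign test mentioned in the caption compares two systems on the same test items. A minimal sketch, assuming the common variant that ignores ties (items where both systems are right or both are wrong) and computes an exact two-sided binomial p-value; the paper does not state which variant was used, and the function names are hypothetical.

```python
from math import comb
from typing import Sequence


def sign_test_p_value(wins_a: int, wins_b: int) -> float:
    """Exact two-sided sign test p-value for wins_a vs. wins_b (ties excluded)."""
    n = wins_a + wins_b
    if n == 0:
        return 1.0
    k = min(wins_a, wins_b)
    # P(X <= k) for X ~ Binomial(n, 0.5), doubled for a two-sided test
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


def compare_systems(pred_a: Sequence[int], pred_b: Sequence[int], gold: Sequence[int]) -> float:
    """Count items where exactly one system is correct and apply the sign test."""
    wins_a = sum(a == g and b != g for a, b, g in zip(pred_a, pred_b, gold))
    wins_b = sum(b == g and a != g for a, b, g in zip(pred_a, pred_b, gold))
    return sign_test_p_value(wins_a, wins_b)


if __name__ == "__main__":
    p = sign_test_p_value(10, 0)
    print(p, p < 0.05)  # p < 0.05: significant at the 95% confidence level
```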

Moreover, KERMIT alone outperforms BERT in two tests, Yelp Review and Yelp Polarity, where KERMIT ⋈ Transformers obtains the best results.

To understand whether syntactic or structural information is important for the specific task, we also experimented with two variants: BERT-Reverse, that is, BERT with inverted text as input, and BERT-Random, that is, BERT with randomly shuffled text as input. These further results show, in three out of four tests, that changing the order of the input words affects the performance of the model. From these tests, we can conclude that the symbolic-syntactic knowledge provided by KERMIT influences the predictions of the model and enriches the knowledge of the neural network. This argument does not always hold, because KERMIT performed worse than BERT on DBPedia. This may be because BERT is pre-trained on Wikipedia, so its universal sentence embeddings are already adapted to this dataset.
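The two control inputs can be produced with simple word-level perturbations. A minimal sketch, assuming whitespace tokenization and a fixed shuffling seed; the paper does not specify how the text was split or shuffled, and the function names are hypothetical.

```python
import random
from typing import List


def reverse_words(text: str) -> str:
    """BERT-Reverse input: the same words in inverted order."""
    return " ".join(reversed(text.split()))


def shuffle_words(text: str, seed: int = 0) -> str:
    """BERT-Random input: the same words in a random (seeded) order."""
    words: List[str] = text.split()
    random.Random(seed).shuffle(words)
    return " ".join(words)


if __name__ == "__main__":
    sentence = "the food was not good at all"
    print(reverse_words(sentence))  # all at good not was food the
    print(shuffle_words(sentence))
```

Both perturbations keep the bag of words intact and destroy only word order, which is what isolates the contribution of sentence structure.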

Where syntactic information is relevant, in three cases (Yelp Review, Yelp Polarity, and DBPedia), the complete KERMIT ⋈ Transformers model outperforms the model based only on the corresponding Transformer, and the difference is statistically significant. Notably, even on DBPedia, where the Transformer's embeddings were pre-trained on Wikipedia, KERMIT ⋈ Transformers outperforms the model based only on the Transformer.

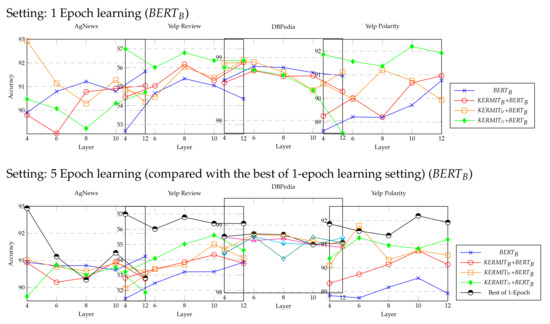

A more in-depth analysis was carried out in the task-adapted setting. This setting shows that the symbolic-syntactic knowledge provided by KERMIT is still useful even when universal sentence embeddings are adapted to the specific task. The results confirm the findings of Jawahar et al. [59] that universal sentence embeddings capture syntactic phenomena better when the intermediate layers of BERT are learned on the task. The results can be observed in Figure 2, where the accuracy of the system is plotted against the number of learned layers of BERT, counted from the output layer.

Figure 2.

Comparison between KERMIT ⋈ Transformers and BERT when training layers in BERT: Accuracy vs. Learned Layers in two different learning configurations—1-Epoch and 5-Epoch training.

Indeed, different BERT layers seem to encode different kinds of information (Jawahar et al. [59]). Hence, learning different layers in a specific setting means adapting that kind of information. As mentioned earlier, we experimented under a computationally lighter setting, where training lasts only one epoch, and a more expensive setting, where training lasts five epochs.

Our results in the task-adapted setting, where the learning component is reinforced, indicate that BERT adapts the universal sentence embeddings to include a better syntactic model when the weights of its different layers are trained on the specific corpus.

When symbolic knowledge matters and affects accuracy, as observed in Yelp Review and Yelp Polarity, KERMIT, endowed with innate knowledge, realizes its potential by exploiting universal syntactic interpretations. This makes it possible to compensate for the syntactic knowledge missing from the task-adapted sentence embeddings of a trained BERT.

In fact, KERMIT ⋈ Transformers outperforms a trained BERT in both the 1-epoch and 5-epoch settings for any number of trained layers (see Figure 2).

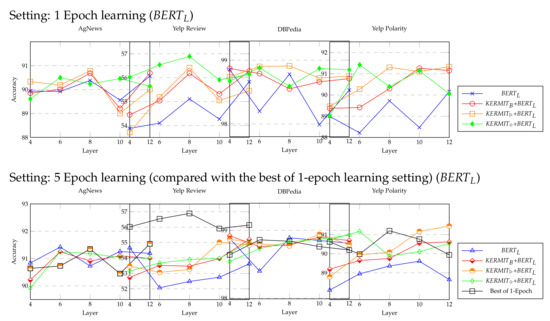

For a complete overview, we reproduced the same KERMIT configurations while changing the BERT component. In the previous experiments, we used the standard version of BERT, called BERT-Base. In these experiments, we used BERT-Large, which has twice as many layers as the base version. Figure 3 shows the same phenomena described above. The main difference lies in the variance of the accuracies: with BERT-Base the variances are minimal, while with BERT-Large they increase.

Figure 3.

Comparison between KERMIT ⋈ Transformers and BERT when training layers in BERT: Accuracy vs. Learned Layers in two different learning configurations—1-Epoch and 5-Epoch training.

Furthermore, with the large version, the symbolic part of KERMIT is almost totally absorbed. A clear example is AGNews, a syntax-sensitive dataset, where the BERT-Large configuration trained for only one epoch obtains the best results. As the number of training epochs increases and the configuration changes from base to large, the Transformer models clearly predominate, and therefore the performance decreases.

4.3. Discussion

Examining the results and comments in Section 4.2, we investigate the prior experience of the Transformer models, that is, their pre-training. In our work, the BERT Transformer was used in two pre-trained versions, BERT-Base and BERT-Large, as explained in Section 4.1.2. Although the two versions differ in size (BERT-Large has twice as many layers as BERT-Base), they are pre-trained on the same data: an English corpus of 3300 M words consisting of books [60] and Wikipedia. Therefore, the Transformer may already have seen some of the data in our datasets during pre-training. This phenomenon can be noticed in the DBPedia dataset, on which BERT's performance is very high. A careful investigation of the lexicon in some datasets, such as DBPedia and the Yelp datasets, could clarify many obscure aspects. In addition, uncommon data from outside the ordinary web could be used to test this hypothesis and, thus, the true capabilities of the Transformer and of BERT.

4.4. Measuring Knowledge

After defining the basic ingredients of knowledge through Equation (1), and after identifying KERMIT ⋈ Transformers as a possible complete and balanced embodiment of that function, important questions arose: how much knowledge must be present, how much must be entered by humans, and how much must be left for the system to learn?

A first answer can be found in the experiments in Section 4. Indeed, it seems that syntactic-symbolic knowledge can be encoded and used by statistical learners with good results. However, large architectures offering universal sentence embeddings trained in a purely empiricist way seem to perform better on general tasks (see Figure 2 and Figure 3). The results suggest that syntax, and therefore sentence structure, is important, from which we argue that Chomsky's theories of universal grammar, like theories of innatism, cannot be ignored.

A second answer opens up new frontiers in the study of the required knowledge. To answer these questions, we propose two different approaches: (1) an empirical investigation of theories of knowledge, language acquisition and learning; (2) determining how much innateness might be required for AI.

An answer to the first question could come from the approaches collected and proposed by Siew [15], whose work belongs to Cognitive Network Science (CNS), a field concerned not only with producing empirical tests but also with interpreting and visualizing the results in more familiar contexts such as social media [61]. In this way, the empirical evidence could be made interpretable. Indeed, this line of work seems to support associative learning in language acquisition. Such a shift would make it possible to further investigate the connections between concepts rather than the latent features of individual concepts. It also involves a readjustment of K, which would be built not only on syntax but also on other kinds of semantic features.

While the field of CNS is well defined, the field of the second question is not. Here the approach is more empirical: after setting the inputs of Equation (1), we would create synthetic agents that perform difficult tasks with some initial degree of innateness and achieve state-of-the-art performance on those tasks, and then iterate, reducing innateness as much as possible and ultimately converging on some minimal amount of innate machinery. This strategy is close to the one proposed by Silver et al. [62] and taken up by Marcus [43].
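The iterative reduction of innateness can be sketched as a greedy ablation over innate components: start from an agent with all of them, then repeatedly drop one whenever performance stays close to the full-innateness baseline. This is a hypothetical sketch under stated assumptions: the component names, the `evaluate` callable and the tolerance are placeholders, and greedy removal is only one possible search strategy, not a procedure described in the paper.

```python
from typing import Callable, FrozenSet, Iterable


def minimize_innateness(
    components: Iterable[str],
    evaluate: Callable[[FrozenSet[str]], float],
    tolerance: float = 0.01,
) -> FrozenSet[str]:
    """Greedily remove innate components while the score stays within
    `tolerance` of the score obtained with all components."""
    current = frozenset(components)
    reference = evaluate(current)  # performance with full innateness
    changed = True
    while changed:
        changed = False
        for component in sorted(current):
            candidate = current - {component}
            if evaluate(candidate) >= reference - tolerance:
                current = candidate  # this component is not needed
                changed = True
                break
    return current


if __name__ == "__main__":
    # Toy evaluation: only the (hypothetical) "syntax" prior actually matters.
    def toy_evaluate(active: FrozenSet[str]) -> float:
        return 0.90 if "syntax" in active else 0.70

    print(minimize_innateness({"syntax", "lexicon", "morphology"}, toy_evaluate))
```

Comparing against the full-innateness score, rather than against the previous step, prevents small losses from accumulating across iterations.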

To carry out this test, we need to build machines that are only partially innate and therefore able to learn from experience, as in the machine learning paradigm, while at the same time being guided by the human hand. Such an architecture should be similar to Pat-in-the-Loop [63], a system that allows humans to input rules into a neural network. The results must be interpretable, so that humans can understand the decisions made by the system. To guarantee explainability, the interpretation pass of the KERMIT architecture introduced in the previous section (see Figure 1b) could be used.

5. Conclusions

Over the centuries, symbolic documents have been extensively studied; their interpretation and study were at the heart of social processes that have now been overcome by statistical learners. Unfortunately, current AI technologies sometimes use non-symbolic representations learned in obscure and complex ways.

After a long overview of the computational applications of nativist and empiricist theories, we tried to define knowledge in the form of a function, from which we proposed KERMIT [14] as a point of intersection between symbolic theories and recent statistical learning. In addition, we tested the potential of KERMIT on downstream tasks. The results suggest that universal syntactic interpretations can enrich the knowledge of universal sentence embeddings, which is sometimes insufficient.

Finally, we concluded this work by proposing an innateness test. The aim we would like to pursue with this test is to enable researchers to investigate the amount of innateness needed to perform better on a task.

The point of this paper is twofold: on the one hand, the issue is difficult to solve and therefore requires in-depth study; on the other hand, the balance between the two approaches is broken, because machine learning seems to be getting the better of us, erasing many positive achievements of the past.

Author Contributions

Data curation, L.R.; Formal analysis, F.F.; Funding acquisition, F.M.Z.; Investigation, L.R.; Methodology, L.R.; Project administration, L.R. and F.M.Z.; Validation, F.F. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partially funded by 2019 BRIC INAIL ID32/2019 SfidaNow project.

Data Availability Statement

Not applicable; the study does not report any data.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Condorelli, F.; Rinaudo, F.; Salvadore, F.; Tagliaventi, S. A Neural Networks Approach to Detecting Lost Heritage in Historical Video. ISPRS Int. J. Geo-Inf. 2020, 9, 297.

- Fiorucci, M.; Khoroshiltseva, M.; Pontil, M.; Traviglia, A.; Del Bue, A.; James, S. Machine Learning for Cultural Heritage: A Survey. Pattern Recognit. Lett. 2020, 133, 102–108.

- Chomsky, N. Aspects of the Theory of Syntax; The MIT Press: Cambridge, MA, USA, 1965.

- Chomsky, N. On certain formal properties of grammars. Inf. Control 1959, 2, 137–167.

- Chomsky, N. Syntactic Structures; Mouton: The Hague, The Netherlands, 1957.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. 2017. Available online: http://xxx.lanl.gov/abs/1706.03762 (accessed on 16 October 2021).

- Sinha, K.; Sodhani, S.; Dong, J.; Pineau, J.; Hamilton, W.L. CLUTRR: A Diagnostic Benchmark for Inductive Reasoning from Text. 2019. Available online: http://xxx.lanl.gov/abs/1908.06177 (accessed on 16 October 2021).

- Talmor, A.; Elazar, Y.; Goldberg, Y.; Berant, J. oLMpics—On what Language Model Pre-training Captures. 2020. Available online: http://xxx.lanl.gov/abs/1912.13283 (accessed on 16 October 2021).

- McCoy, T.; Pavlick, E.; Linzen, T. Right for the Wrong Reasons: Diagnosing Syntactic Heuristics in Natural Language Inference. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 3428–3448.

- Lake, B.M.; Baroni, M. Generalization without Systematicity: On the Compositional Skills of Sequence-To-Sequence Recurrent Networks. 2018. Available online: http://xxx.lanl.gov/abs/1711.00350 (accessed on 16 October 2021).

- LeCun, Y.; Bengio, Y.; Hinton, G.E. Deep learning. Nature 2015, 521, 436–444.

- Goldberg, Y. Assessing BERT’s Syntactic Abilities. 2019. Available online: http://xxx.lanl.gov/abs/1901.05287 (accessed on 16 October 2021).

- Hewitt, J.; Manning, C.D. A Structural Probe for Finding Syntax in Word Representations. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 2–7 June 2019; pp. 4129–4138.

- Zanzotto, F.M.; Santilli, A.; Ranaldi, L.; Onorati, D.; Tommasino, P.; Fallucchi, F. KERMIT: Complementing Transformer Architectures with Encoders of Explicit Syntactic Interpretations. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; pp. 256–267.

- Siew, C.S. Using network science to analyze concept maps of psychology undergraduates. Appl. Cogn. Psychol. 2019, 33, 662–668.

- Zeyl, D.; Sattler, B. Plato’s Timaeus. In The Stanford Encyclopedia of Philosophy, Summer 2019 ed.; Zalta, E.N., Ed.; Metaphysics Research Lab, Stanford University: Redwood City, CA, USA, 2019.

- Pinker, S.; Jackendoff, R. The faculty of language: What’s special about it? Cognition 2005, 95, 201–236.

- Newmeyer, F.J. Explaining language universals. J. Linguist. 1990, 26, 203–222.

- Manning, C.D.; Schütze, H. Foundations of Statistical Natural Language Processing; The MIT Press: Cambridge, MA, USA, 1999.

- Collins, M. Head-Driven Statistical Models for Natural Language Parsing. Comput. Linguist. 2003, 29, 589–637.

- Settles, B.; Craven, M.; Friedland, L.A. Active Learning with Real Annotation Costs. 2008. Available online: http://burrsettles.com/pub/settles.nips08ws.pdf (accessed on 16 October 2021).

- Bodnar, I. Aristotle’s Natural Philosophy. In The Stanford Encyclopedia of Philosophy, Spring 2018 ed.; Zalta, E.N., Ed.; Metaphysics Research Lab, Stanford University: Redwood City, CA, USA, 2018.

- Smith, R. Aristotle’s Logic. In The Stanford Encyclopedia of Philosophy, Fall 2020 ed.; Zalta, E.N., Ed.; Metaphysics Research Lab, Stanford University: Redwood City, CA, USA, 2020.

- Bates, E.; Thal, D.; Finlay, B.; Clancy, B. Early Language Development And Its Neural Correlates. Handb. Neuropsychol. 1970, 6, 69.

- Elman, J.L.; Bates, E.A.; Johnson, M.H.; Karmiloff-Smith, A.; Parisi, D.; Plunkett, K. Rethinking Innateness: A Connectionist Perspective on Development; MIT Press: Cambridge, MA, USA, 1996.

- Salton, G. Automatic Text Processing: The Transformation, Analysis, and Retrieval of Information by Computer; Addison-Wesley: Reading, MA, USA, 1989, 169. Available online: http://www.iro.umontreal.ca/~nie/IFT6255/Introduction.pdf (accessed on 16 October 2021).

- Mikolov, T.; Chen, K.; Corrado, G.; Dean, J. Efficient Estimation of Word Representations in Vector Space. arXiv 2013, arXiv:1301.3781.

- Pennington, J.; Socher, R.; Manning, C. GloVe: Global Vectors for Word Representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 1532–1543.

- Bojanowski, P.; Grave, E.; Joulin, A.; Mikolov, T. Enriching Word Vectors with Subword Information. arXiv 2016, arXiv:1607.04606.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv 2019, arXiv:1810.04805.

- Brown, T.B.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models are Few-Shot Learners. arXiv 2020, arXiv:2005.14165.

- Ferrone, L.; Zanzotto, F.M. Symbolic, Distributed, and Distributional Representations for Natural Language Processing in the Era of Deep Learning: A Survey. Front. Robot. AI 2020, 6, 153.

- White, L. Second Language Acquisition and Universal Grammar. Stud. Second. Lang. Acquis. 1990, 12, 121–133.

- Cristianini, N.; Shawe-Taylor, J. An Introduction to Support Vector Machines and Other Kernel-based Learning Methods; Cambridge University Press: Cambridge, UK, 2000.

- Moschitti, A. Making Tree Kernels practical for Natural Language Learning. In Proceedings of the EACL’06, Trento, Italy, 3–7 April 2006.

- Collins, M.; Duffy, N. New Ranking Algorithms for Parsing and Tagging: Kernels over Discrete Structures, and the Voted Perceptron. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, Philadelphia, PA, USA, 7–12 July 2002.

- Culotta, A.; Sorensen, J. Dependency Tree Kernels for Relation Extraction. In Proceedings of the 42nd Annual Meeting on Association for Computational Linguistics, Barcelona, Spain, 21–26 July 2004; pp. 423–429.

- Pighin, D.; Moschitti, A. On Reverse Feature Engineering of Syntactic Tree Kernels. In Proceedings of the Fourteenth Conference on Computational Natural Language Learning, Uppsala, Sweden, 15–16 July 2010; pp. 223–233.

- Zanzotto, F.M.; Dell’Arciprete, L. Distributed Tree Kernels. arXiv 2012, arXiv:1206.4607.

- Dai, Z.; Yang, Z.; Yang, Y.; Carbonell, J.; Le, Q.V.; Salakhutdinov, R. Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context. arXiv 2019, arXiv:1901.02860.

- Puccetti, G.; Miaschi, A.; Dell’Orletta, F. How Do BERT Embeddings Organize Linguistic Knowledge? In Proceedings of the Deep Learning Inside Out (DeeLIO): The 2nd Workshop on Knowledge Extraction and Integration for Deep Learning Architectures, Online, 10 June 2021; pp. 48–57.

- Marler, P. Innateness and the instinct to learn. An. Acad. Bras. Ciênc. 2004, 76, 189–200.

- Marcus, G. Innateness, AlphaZero, and Artificial Intelligence. arXiv 2018, arXiv:1801.05667.

- Spelke, E.S.; Kinzler, K.D. Core knowledge. Dev. Sci. 2007, 10, 89–96.

- Gervain, J.; Berent, I.; Werker, J.F. Binding at Birth: The Newborn Brain Detects Identity Relations and Sequential Position in Speech. J. Cogn. Neurosci. 2012, 24, 564–574.

- Senghas, A.; Kita, S.; Özyürek, A. Children Creating Core Properties of Language: Evidence from an Emerging Sign Language in Nicaragua. Science 2004, 305, 1779–1782.

- van der Lely, H.; Pinker, S. The biological basis of language: Insight from developmental grammatical impairments. Trends Cogn. Sci. 2014, 18, 586–595.

- Geman, S.; Bienenstock, E.; Doursat, R. Neural Networks and the Bias/Variance Dilemma. Neural Comput. 1992, 4, 1–58.

- Wolpert, D.H. The Lack of a Priori Distinctions between Learning Algorithms. Neural Comput. 1996, 8, 1341–1390.

- Ranaldi, L.; Fallucchi, F.; Zanzotto, F.M. KERMITviz: Visualizing Neural Network Activations on Syntactic Trees. In Proceedings of the 15th International Conference on Metadata and Semantics Research (MTSR’21), Madrid, Spain, 29 November–3 December 2021; Volume 1.

- Bach, S.; Binder, A.; Montavon, G.; Klauschen, F.; Müller, K.R.; Samek, W. On pixel-wise explanations for non-linear classifier decisions by layer-wise relevance propagation. PLoS ONE 2015, 10, 1–46.

- Zanzotto, F.M.; Dell’Arciprete, L. Distributed tree kernels. In Proceedings of the 29th International Conference on Machine Learning, ICML, Edinburgh, Scotland, 26 June–1 July 2012; Volume 1, pp. 193–200.

- Manning, C.; Surdeanu, M.; Bauer, J.; Finkel, J.; Bethard, S.; McClosky, D. The Stanford CoreNLP natural language processing toolkit. In Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics: System Demonstrations, Baltimore, MD, USA, 23–24 June 2014; pp. 55–60.

- Wolf, T.; Debut, L.; Sanh, V.; Chaumond, J.; Delangue, C.; Moi, A.; Cistac, P.; Rault, T.; Louf, R.; Funtowicz, M.; et al. HuggingFace’s Transformers: State-of-the-art Natural Language Processing. arXiv 2019, arXiv:1910.03771.

- Loshchilov, I.; Hutter, F. Decoupled weight decay regularization. In Proceedings of the 7th International Conference on Learning Representations, New Orleans, LA, USA, 6–9 May 2019.

- Agarap, A.F. Deep Learning using Rectified Linear Units (ReLU). arXiv 2018, arXiv:1803.08375.

- Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A Simple Way to Prevent Neural Networks from Overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

- Zhang, X.; Zhao, J.; LeCun, Y. Character-level Convolutional Networks for Text Classification. Adv. Neural Inf. Process. Syst. 2015, 28, 649–657.

- Jawahar, G.; Sagot, B.; Seddah, D. What Does BERT Learn about the Structure of Language? In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 3651–3657.

- Zhu, Y.; Kiros, R.; Zemel, R.; Salakhutdinov, R.; Urtasun, R.; Torralba, A.; Fidler, S. Aligning Books and Movies: Towards Story-Like Visual Explanations by Watching Movies and Reading Books. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 19–27.

- Stella, M.; Vitevitch, M.S.; Botta, F. Cognitive networks identify the content of English and Italian popular posts about COVID-19 vaccines: Anticipation, logistics, conspiracy and loss of trust. arXiv 2021, arXiv:2103.15909.

- Silver, D.; Schrittwieser, J.; Simonyan, K.; Antonoglou, I.; Huang, A.; Guez, A.; Hubert, T.; Baker, L.; Lai, M.; Bolton, A.; et al. Mastering the game of Go without human knowledge. Nature 2017, 550, 354–359.

- Zanzotto, F.M.; Onorati, D.; Tommasino, P.; Ranaldi, L.; Fallucchi, F. Pat-in-the-Loop: Declarative Knowledge for Controlling Neural Networks. Future Internet 2020, 12, 218.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).