Language-Independent Fake News Detection: English, Portuguese, and Spanish Mutual Features

Abstract

1. Introduction

2. Definitions and Related Work

3. Material and Methods

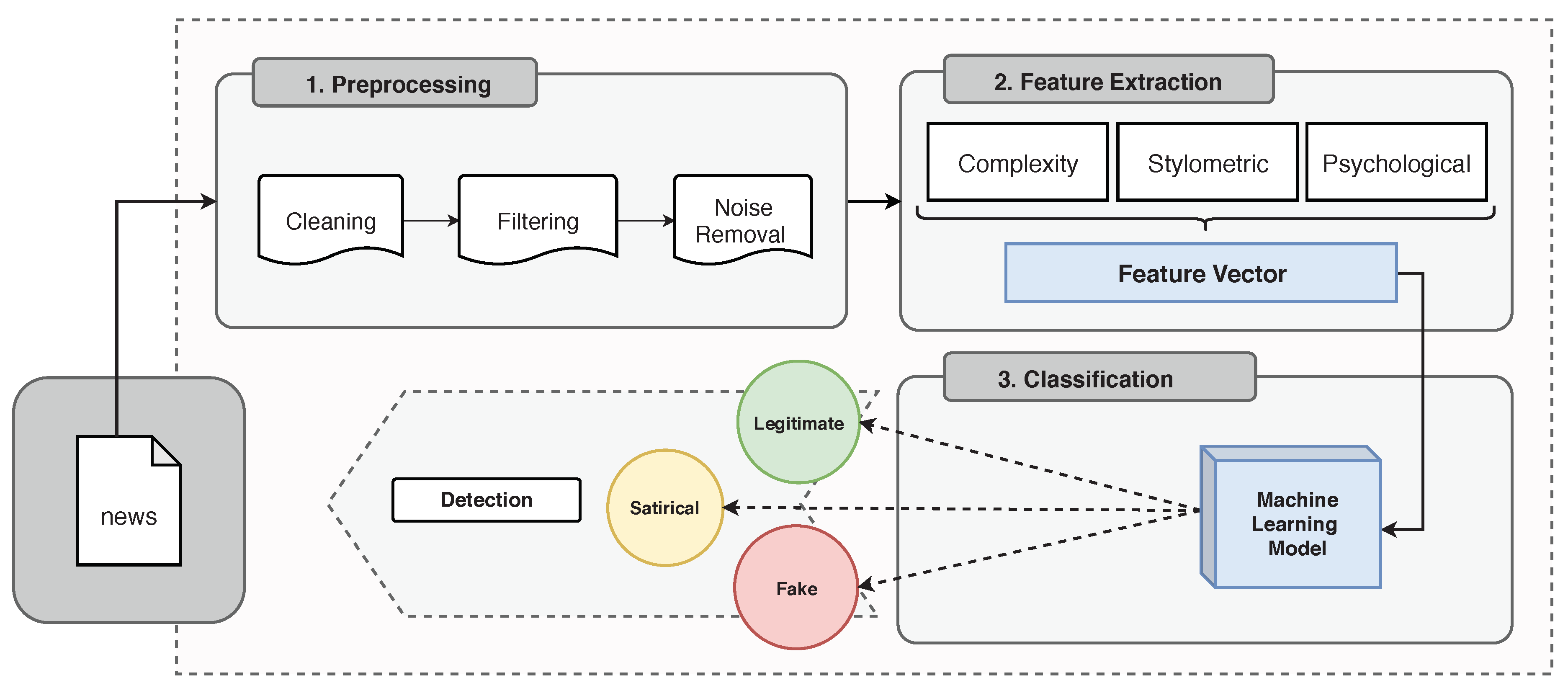

3.1. Text Processing Pipeline

3.2. News Datasets

3.3. Classification Algorithms

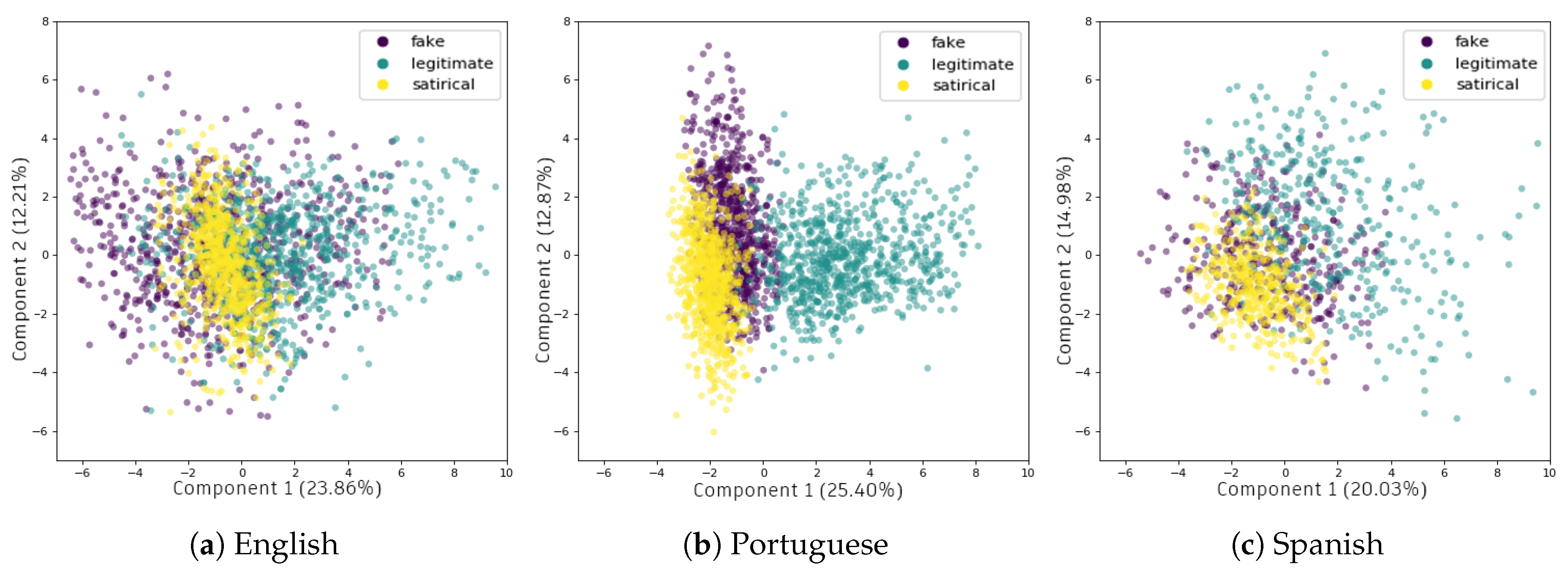

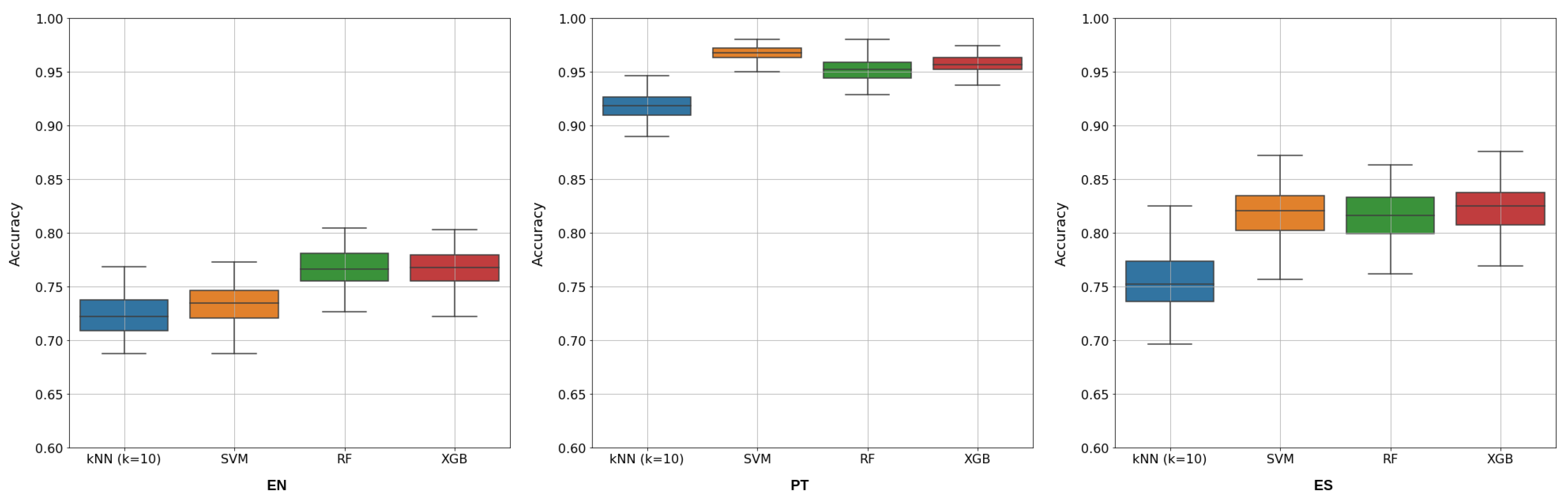

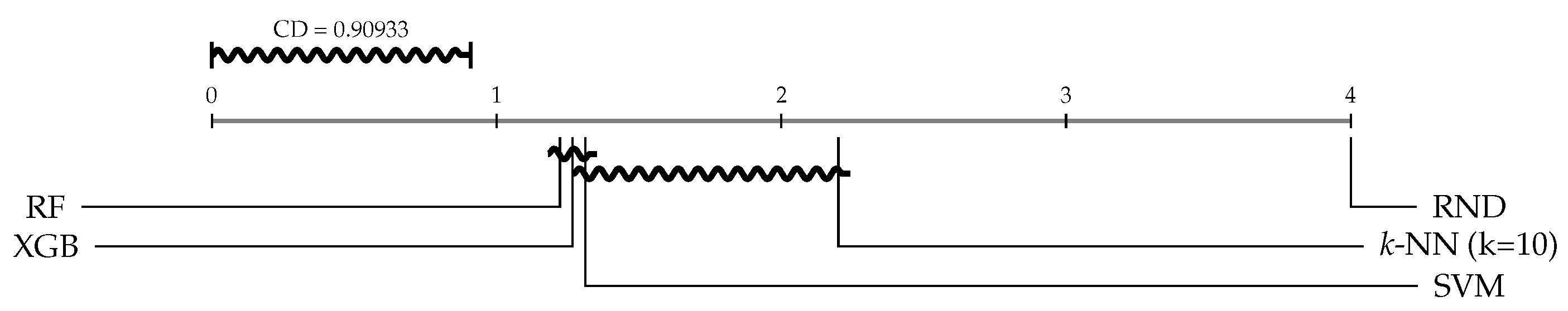

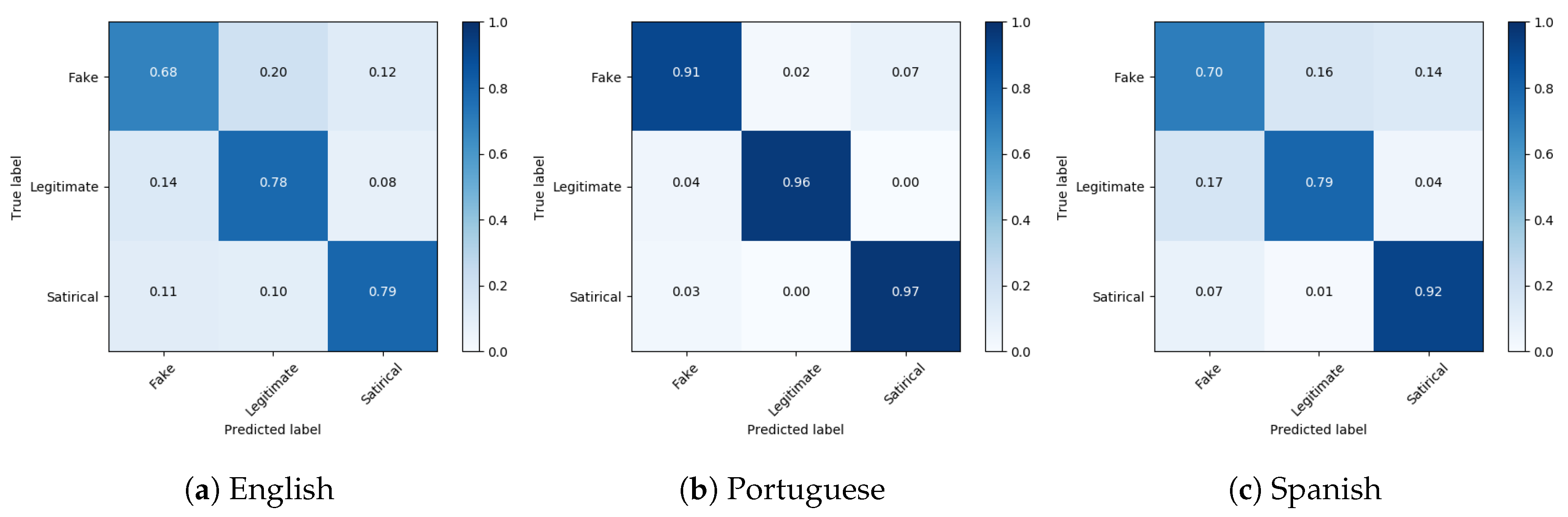

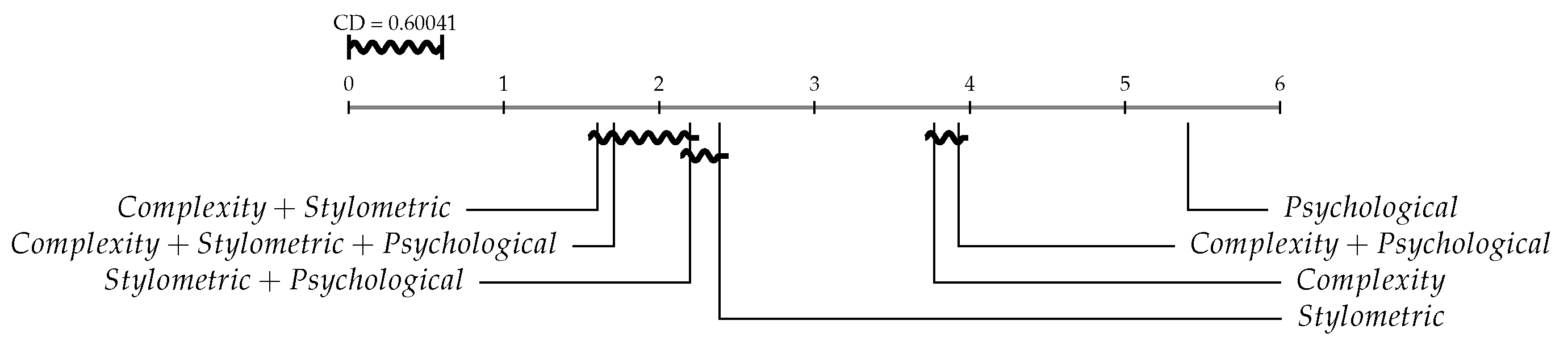

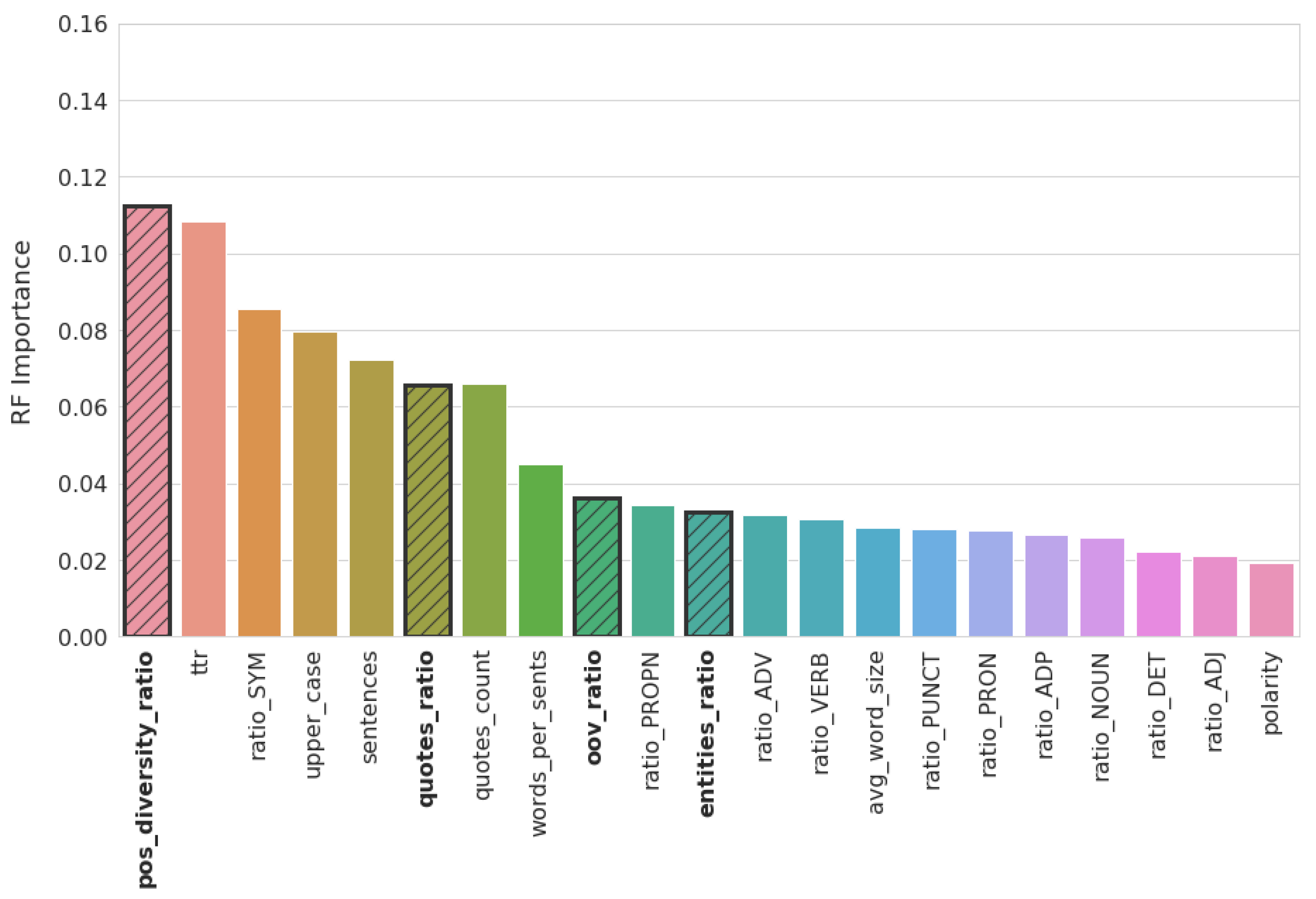

4. Analysis and Discussions

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Baccarella, C.V.; Wagner, T.F.; Kietzmann, J.H.; McCarthy, I.P. Social media? It’s serious! Understanding the dark side of social media. Eur. Manag. J. 2018, 36, 431–438.

2. Ahuja, R.; Singhal, V.; Banga, A. Using Hierarchies in Online Social Networks to Determine Link Prediction. In Soft Computing and Signal Processing; Springer: Berlin/Heidelberg, Germany, 2019; pp. 67–76.

3. Correa, T.; Bachmann, I.; Hinsley, A.W.; de Zúñiga, H.G. Personality and social media use. In Organizations and Social Networking: Utilizing Social Media to Engage Consumers; IGI Global: Hershey, PA, USA, 2013; pp. 41–61.

4. Lazer, D.M.; Baum, M.A.; Benkler, Y.; Berinsky, A.J.; Greenhill, K.M.; Menczer, F.; Metzger, M.J.; Nyhan, B.; Pennycook, G.; Rothschild, D.; et al. The science of fake news. Science 2018, 359, 1094–1096.

5. Shao, C.; Ciampaglia, G.L.; Varol, O.; Yang, K.C.; Flammini, A.; Menczer, F. The spread of low-credibility content by social bots. Nat. Commun. 2018, 9, 4787.

6. Allcott, H.; Gentzkow, M. Social media and fake news in the 2016 election. J. Econ. Perspect. 2017, 31, 211–236.

7. Gelfert, A. Fake news: A definition. Informal Log. 2018, 38, 84–117.

8. Shu, K.; Sliva, A.; Wang, S.; Tang, J.; Liu, H. Fake news detection on social media: A data mining perspective. ACM SIGKDD Explor. Newsl. 2017, 19, 22–36.

9. Rubin, V.L.; Chen, Y.; Conroy, N.J. Deception Detection for News: Three Types of Fakes. In Proceedings of the 78th ASIS&T Annual Meeting: Information Science with Impact: Research in and for the Community, St. Louis, MO, USA, 15 November 2015; pp. 83:1–83:4.

10. Rubin, V.; Conroy, N.; Chen, Y.; Cornwell, S. Fake news or truth? Using satirical cues to detect potentially misleading news. In Proceedings of the Second Workshop on Computational Approaches to Deception Detection, San Diego, CA, USA, 17 June 2016; pp. 7–17.

11. del Pilar Salas-Zárate, M.; Paredes-Valverde, M.A.; Rodriguez-Garcia, M.A.; Valencia-García, R.; Alor-Hernández, G. Automatic detection of satire in Twitter: A psycholinguistic-based approach. Knowl.-Based Syst. 2017, 128, 20–33.

12. Thu, P.P.; New, N. Impact analysis of emotion in figurative language. In Proceedings of the 2017 IEEE/ACIS 16th International Conference on Computer and Information Science (ICIS), Wuhan, China, 24–26 May 2017; pp. 209–214.

13. Conroy, N.J.; Rubin, V.L.; Chen, Y. Automatic deception detection: Methods for finding fake news. In Proceedings of the 78th ASIS&T Annual Meeting: Information Science with Impact: Research in and for the Community, St. Louis, MO, USA, 15 November 2015; p. 82.

14. Bajaj, S. “The Pope Has a New Baby!” Fake News Detection Using Deep Learning. Available online: https://pdfs.semanticscholar.org/19ed/b6aa318d70cd727b3cdb006a782556ba657a.pdf (accessed on 5 May 2020).

15. Van Hee, C.; Lefever, E.; Hoste, V. LT3: Sentiment analysis of figurative tweets: Piece of cake #NotReally. In Proceedings of the 9th International Workshop on Semantic Evaluation (SemEval 2015), Denver, CO, USA, 4–5 June 2015; pp. 684–688.

16. Rashkin, H.; Choi, E.; Jang, J.Y.; Volkova, S.; Choi, Y. Truth of varying shades: Analyzing language in fake news and political fact-checking. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, Copenhagen, Denmark, 9–11 September 2017; pp. 2931–2937.

17. Zhu, Y.; Gao, X.; Zhang, W.; Liu, S.; Zhang, Y. A Bi-Directional LSTM-CNN Model with Attention for Aspect-Level Text Classification. Future Internet 2018, 10, 116.

18. Kincl, T.; Novák, M.; Přibil, J. Improving sentiment analysis performance on morphologically rich languages: Language and domain independent approach. Comput. Speech Lang. 2019, 56, 36–51.

19. Wang, W.Y. “Liar, Liar Pants on Fire”: A New Benchmark Dataset for Fake News Detection. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers), Vancouver, BC, Canada, 30 July–4 August 2017; pp. 422–426.

20. Ferreira, W.; Vlachos, A. Emergent: A novel data-set for stance classification. In Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, San Diego, CA, USA, 12–17 June 2016; pp. 1163–1168.

21. Hanselowski, A.; PVS, A.; Schiller, B.; Caspelherr, F.; Chaudhuri, D.; Meyer, C.M.; Gurevych, I. A Retrospective Analysis of the Fake News Challenge Stance Detection Task. arXiv 2018, arXiv:1806.05180. Available online: https://arxiv.org/abs/1806.05180 (accessed on 5 May 2020).

22. Horne, B.D.; Adali, S. This just in: Fake news packs a lot in title, uses simpler, repetitive content in text body, more similar to satire than real news. In Proceedings of the Eleventh International AAAI Conference on Web and Social Media, Montreal, QC, Canada, 15–18 May 2017.

23. Zhou, X.; Jain, A.; Phoha, V.V.; Zafarani, R. Fake news early detection: A theory-driven model. arXiv 2019, arXiv:1904.11679.

24. Monteiro, R.A.; Santos, R.L.; Pardo, T.A.; de Almeida, T.A.; Ruiz, E.E.; Vale, O.A. Contributions to the Study of Fake News in Portuguese: New Corpus and Automatic Detection Results. In Proceedings of the International Conference on Computational Processing of the Portuguese Language, Canela, Brazil, 24–26 September 2018; pp. 324–334.

25. Posadas-Durán, J.; Gómez-Adorno, H.; Sidorov, G.; Moreno, J. Detection of Fake News in a New Corpus for the Spanish Language. J. Intell. Fuzzy Syst. 2018, in press.

26. de Morais, J.I.; Abonizio, H.Q.; Tavares, G.M.; da Fonseca, A.A.; Barbon, S., Jr. Deciding among Fake, Satirical, Objective and Legitimate news: A multi-label classification system. In Proceedings of the XV Brazilian Symposium on Information Systems, Aracaju, Brazil, 20–24 May 2019; pp. 1–8.

27. Krishnan, S.; Chen, M. Identifying Tweets with Fake News. In Proceedings of the 2018 IEEE International Conference on Information Reuse and Integration (IRI), Salt Lake City, UT, USA, 6–9 July 2018; pp. 460–464.

28. de Sousa Pereira Amorim, B.; Alves, A.L.F.; de Oliveira, M.G.; de Souza Baptista, C. Using Supervised Classification to Detect Political Tweets with Political Content. In Proceedings of the 24th Brazilian Symposium on Multimedia and the Web, Salvador, Brazil, 16–19 October 2018; pp. 245–252.

29. Gruppi, M.; Horne, B.D.; Adali, S. An Exploration of Unreliable News Classification in Brazil and The U.S. arXiv 2018, arXiv:1806.02875.

30. Guibon, G.; Ermakova, L.; Seffih, H.; Firsov, A.; Le Noé-Bienvenu, G. Multilingual Fake News Detection with Satire. In Proceedings of the CICLing: International Conference on Computational Linguistics and Intelligent Text Processing, La Rochelle, France, 7–13 April 2019.

31. Taboada, M.; Brooke, J.; Tofiloski, M.; Voll, K.D.; Stede, M. Lexicon-Based Methods for Sentiment Analysis. Comput. Linguist. 2011, 37, 267–307.

32. Lynch, G.; Vogel, C. The translator’s visibility: Detecting translatorial fingerprints in contemporaneous parallel translations. Comput. Speech Lang. 2018, 52, 79–104.

33. Chen, Y.; Conroy, N.J.; Rubin, V.L. Misleading online content: Recognizing clickbait as false news. In Proceedings of the 2015 ACM on Workshop on Multimodal Deception Detection, Seattle, WA, USA, 9–13 November 2015; pp. 15–19.

34. Qin, T.; Burgoon, J.K.; Blair, J.P.; Nunamaker, J.F. Modality effects in deception detection and applications in automatic-deception-detection. In Proceedings of the 38th Annual Hawaii International Conference on System Sciences, Big Island, HI, USA, 6 January 2005; p. 23b.

35. Zhou, L.; Burgoon, J.K.; Nunamaker, J.F.; Twitchell, D. Automating Linguistics-Based Cues for Detecting Deception in Text-Based Asynchronous Computer-Mediated Communications. Group Decis. Negot. 2004, 13, 81–106.

36. Castillo, C.; Mendoza, M.; Poblete, B. Predicting information credibility in time-sensitive social media. Internet Res. 2013, 23, 560–588.

37. McLaughlin, G.H. SMOG grading: A new readability formula. J. Read. 1969, 12, 639–646.

38. Smith, E.A.; Kincaid, J.P. Derivation and Validation of the Automated Readability Index for Use with Technical Materials. Hum. Factors 1970, 12, 457–464.

39. Al-Rfou, R.; Kulkarni, V.; Perozzi, B.; Skiena, S. Polyglot-NER: Massive Multilingual Named Entity Recognition. In Proceedings of the 2015 SIAM International Conference on Data Mining, Vancouver, BC, Canada, 30 April–2 May 2015.

40. Al-Rfou, R.; Perozzi, B.; Skiena, S. Polyglot: Distributed Word Representations for Multilingual NLP. In Proceedings of the Seventeenth Conference on Computational Natural Language Learning, Sofia, Bulgaria, 8–9 August 2013; pp. 183–192.

41. Chen, Y.; Skiena, S. Building sentiment lexicons for all major languages. In Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics (Short Papers), Baltimore, MD, USA, 23–25 June 2014; pp. 383–389.

42. Brown, R. Non-linear mapping for improved identification of 1300+ languages. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 627–632.

43. Japkowicz, N. The Class Imbalance Problem: Significance and Strategies. Available online: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.35.1693&rep=rep1&type=pdf (accessed on 5 May 2020).

44. Chawla, N.V. Data mining for imbalanced datasets: An overview. In Data Mining and Knowledge Discovery Handbook; Springer: Berlin/Heidelberg, Germany, 2009; pp. 875–886.

45. Aha, D.W.; Kibler, D.; Albert, M.K. Instance-based learning algorithms. Mach. Learn. 1991, 6, 37–66.

46. Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

47. Chen, T.; Guestrin, C. XGBoost: A Scalable Tree Boosting System. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794.

48. Afroz, S.; Brennan, M.; Greenstadt, R. Detecting hoaxes, frauds, and deception in writing style online. In Proceedings of the 2012 IEEE Symposium on Security and Privacy, San Francisco, CA, USA, 20–23 May 2012; pp. 461–475.

49. Potthast, M.; Kiesel, J.; Reinartz, K.; Bevendorff, J.; Stein, B. A Stylometric Inquiry into Hyperpartisan and Fake News. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Melbourne, Australia, 15–20 July 2018; pp. 231–240.

50. Tosik, M.; Mallia, A.; Gangopadhyay, K. Debunking Fake News One Feature at a Time. arXiv 2018, arXiv:1808.02831.

51. Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

52. Bergstra, J.S.; Bardenet, R.; Bengio, Y.; Kégl, B. Algorithms for Hyper-Parameter Optimization. In Advances in Neural Information Processing Systems 24; Shawe-Taylor, J., Zemel, R.S., Bartlett, P.L., Pereira, F., Weinberger, K.Q., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2011; pp. 2546–2554.

53. James, G.; Witten, D.; Hastie, T.; Tibshirani, R. An Introduction to Statistical Learning; Springer: Berlin/Heidelberg, Germany, 2013; Volume 112.

54. Tipping, M.E.; Bishop, C.M. Probabilistic principal component analysis. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 1999, 61, 611–622.

55. Demšar, J. Statistical Comparisons of Classifiers over Multiple Data Sets. J. Mach. Learn. Res. 2006, 7, 1–30.

56. Lin, Y.; Yang, S.; Stoyanov, V.; Ji, H. A multi-lingual multi-task architecture for low-resource sequence labeling. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Melbourne, Australia, 15–20 July 2018; pp. 799–809.

57. Dinu, A.; Dinu, L.P. On the Syllabic Similarities of Romance Languages. In Computational Linguistics and Intelligent Text Processing; Gelbukh, A., Ed.; Springer: Berlin/Heidelberg, Germany, 2005; pp. 785–788.

| No | Name | Description | Reference |
|---|---|---|---|
| 1 | words_per_sents | Average words per sentence | [22,32] |
| 2 | avg_word_size | Average word length (characters) | [32,33] |
| 3 | sentences | Count of sentences | [34] |
| 4 | ttr | Type-Token Ratio (lexical diversity) | [32,35] |
| 5 | pos_diversity_ratio | POS-tag diversity | Proposed |
| 6 | entities_ratio | Ratio of named entities to text size | Proposed |
| 7 | upper_case | Count of uppercase letters | [36] |
| 8 | oov_ratio | Frequency of out-of-vocabulary (OOV) words | Proposed |
| 9 | quotes_count | Count of quotation marks | [22] |
| 10 | quotes_ratio | Ratio of quotation marks to text size | Proposed |
| 11 | ratio_ADJ | Frequency of ADJ (adjective) tags | [22] |
| 12 | ratio_ADP | Frequency of ADP (adposition) tags | [22,32] |
| 13 | ratio_ADV | Frequency of ADV (adverb) tags | [22] |
| 14 | ratio_DET | Frequency of DET (determiner) tags | [22] |
| 15 | ratio_NOUN | Frequency of NOUN (noun) tags | [22,32] |
| 16 | ratio_PRON | Frequency of PRON (pronoun) tags | [22,32] |
| 17 | ratio_PROPN | Frequency of PROPN (proper noun) tags | [22] |
| 18 | ratio_PUNCT | Frequency of PUNCT (punctuation) tags | [22] |
| 19 | ratio_SYM | Frequency of SYM (symbol) tags | [22] |
| 20 | ratio_VERB | Frequency of VERB (verb) tags | [22,32] |
| 21 | polarity | Sentiment polarity | [22,36] |
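
Most of the feature definitions above can be illustrated with a short Python sketch. It is not the authors' pipeline: it assumes a naive regex tokenizer and sentence splitter, and it expects POS tags (Universal POS names), named-entity counts, and out-of-vocabulary counts to come from an external multilingual tagger. "Text size" is read here as the number of characters, which is an assumption. pos_diversity_ratio and polarity are left out because their exact formula and lexicon are not given in this section. The helper names `surface_features` and `tag_features` are hypothetical.

```python
import re
from collections import Counter

# Assumption: naive, language-agnostic segmentation; a real pipeline would
# use a multilingual tokenizer, POS tagger, and NER model instead.
SENTENCE_BOUNDARY = re.compile(r"(?<=[.!?])\s+")
WORD = re.compile(r"\w+")  # \w is Unicode-aware for str patterns in Python 3
QUOTE_CHARS = set('"\u201c\u201d\u00ab\u00bb')  # straight, curly, and angle quotes

UPOS_TAGS = ("ADJ", "ADP", "ADV", "DET", "NOUN", "PRON", "PROPN", "PUNCT", "SYM", "VERB")


def surface_features(text: str) -> dict:
    """Features 1-4, 7, 9 and 10 computed from raw text (hypothetical helper)."""
    sentences = [s for s in SENTENCE_BOUNDARY.split(text.strip()) if s]
    words = WORD.findall(text)
    n_words = max(len(words), 1)  # guard against empty input
    n_chars = max(len(text), 1)   # "text size" assumed to be characters
    quotes = sum(ch in QUOTE_CHARS for ch in text)
    return {
        "words_per_sents": len(words) / max(len(sentences), 1),
        "avg_word_size": sum(len(w) for w in words) / n_words,
        "sentences": len(sentences),
        "ttr": len({w.lower() for w in words}) / n_words,
        "upper_case": sum(ch.isupper() for ch in text),
        "quotes_count": quotes,
        "quotes_ratio": quotes / n_chars,
    }


def tag_features(tags: list, n_entities: int, n_oov: int, n_chars: int) -> dict:
    """Features 6, 8 and 11-20 from externally produced annotations (hypothetical helper).

    tags: one Universal POS tag per token; n_entities: named entities found;
    n_oov: tokens missing from the tagger's vocabulary; n_chars: text length.
    """
    n_tokens = max(len(tags), 1)
    counts = Counter(tags)  # missing tags count as 0
    features = {f"ratio_{tag}": counts[tag] / n_tokens for tag in UPOS_TAGS}
    features["entities_ratio"] = n_entities / max(n_chars, 1)
    features["oov_ratio"] = n_oov / n_tokens
    return features


text = 'He said "yes". She left!'
print(surface_features(text))
print(tag_features(["PRON", "VERB", "PUNCT", "INTJ", "PUNCT", "PUNCT", "PRON", "VERB", "PUNCT"],
                   n_entities=0, n_oov=0, n_chars=len(text)))
```

Because every feature is a count or a ratio over tokens, tags, or characters, none of them depends on a language-specific vocabulary; the language enters only through the external tagger.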

| Corpus | Samples | Samples per Class | Tokens | Unique Tokens | References |
|---|---|---|---|---|---|
| English (EN) | 6,129 | 2,043 | 4,432,906 | 94,496 | [22], FNC |
| Portuguese (PT) | 2,538 | 846 | 1,246,924 | 58,129 | [24], Sensacionalista, Diário Pernambucano |
| Spanish (ES) | 1,263 | 421 | 459,406 | 40,891 | [25], FNC |
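
Each corpus in the table above contains three equally sized classes (for example, 6,129 = 3 × 2,043). How that balance was reached is not described in this section; random undersampling of every class to the size of the smallest one is one standard way to obtain it. The Python sketch below assumes each news item is a hypothetical `(text, label)` pair and uses a fixed seed for reproducibility.

```python
import random
from collections import defaultdict


def undersample(samples, seed=0):
    """Randomly trim every class to the size of the smallest one.

    samples: list of (text, label) pairs. Returns a shuffled, balanced list.
    """
    by_label = defaultdict(list)
    for text, label in samples:
        by_label[label].append((text, label))

    n_min = min(len(items) for items in by_label.values())
    rng = random.Random(seed)  # local RNG so the global random state is untouched
    balanced = []
    for label in sorted(by_label):  # sorted for a deterministic draw order
        balanced.extend(rng.sample(by_label[label], n_min))
    rng.shuffle(balanced)
    return balanced


corpus = [("a", "fake")] * 5 + [("b", "legitimate")] * 3 + [("c", "satirical")] * 4
print(len(undersample(corpus)))  # 9: three classes of three items each
```

Undersampling discards items from the larger classes; oversampling or class weighting are alternatives that keep every item at the cost of repeated or reweighted samples.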

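| Language | Class | Content | English translation |
|---|---|---|---|
| EN | fake | “Voters on the right have been waiting for this for a long time! Police have finally raided a Democratic strategic headquarters, and the results are devastating! (…)” | |
| EN | legitimate | “The search warrant that authorized the FBI to examine a laptop in connection with Hillary Clinton’s use of a private email (…)” | |
| EN | satirical | “NEW YORK (The Borowitz Report) Speaking to reporters late Friday night, President-elect Donald Trump revealed that he had Googled Obamacare for the first time earlier in the day. (…)” | |
| ES | fake | “La Universidad de Oxford da más tiempo a las mujeres para hacer los exámenes (…)” | “The University of Oxford gives women more time to sit exams (…)” |
| ES | legitimate | “El PSOE reactiva el debate sobre la eutanasia El partido del Gobierno llevará su propuesta para regularla al Pleno la próxima semana y cree que saldrá adelante (…)” | “The PSOE revives the euthanasia debate. The governing party will take its proposal to regulate it to the plenary session next week and believes it will pass (…)” |
| ES | satirical | “Mucha euforia ha generado el gran lanzamiento del nuevo reality colombiano "Yo me abro" que se estrena hoy y en el que los participantes demostrarán sus habilidades para escapar de la justicia colombiana. (…)” | “The big launch of the new Colombian reality show "Yo me abro", premiering today, in which participants will demonstrate their skills at escaping Colombian justice, has generated great euphoria. (…)” |
| PT | fake | “Ministro que pediu demissão do governo Temer explica o motivo: “Não faço maracutaias. Não tenho rabo preso” (…)” | “Minister who resigned from the Temer government explains why: “I don't do shady deals. I'm not beholden to anyone” (…)” |
| PT | legitimate | “Governo federal decide decretar intervenção na segurança pública do RJ. Decreto será publicado nesta sexta-feira (16), segundo o presidente do Senado, Eunício Oliveira. Decisão foi tomada em meio à escalada de violência na capital carioca. (…)” | “Federal government decides to decree an intervention in the public security of RJ. The decree will be published this Friday (16), according to the President of the Senate, Eunício Oliveira. The decision was made amid escalating violence in the capital of Rio de Janeiro. (…)” |
| PT | satirical | “A senadora Kátia Abreu é uma mulher que não tira o corpo fora de polêmicas. Ela dá uma tora de árvore para não entrar numa briga mas derruba uma floresta inteira pelo prazer de não sair. (…)” | “Senator Kátia Abreu is a woman who does not shy away from controversy. She would give a tree log to avoid getting into a fight, but would fell an entire forest for the pleasure of not leaving it. (…)” |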

| Feature | EN Fake | EN Legitimate | EN Satire | PT Fake | PT Legitimate | PT Satire | ES Fake | ES Legitimate | ES Satire |
|---|---|---|---|---|---|---|---|---|---|
| avg_word_size | 4.40 | 4.30 | 4.18 | 4.14 | 4.28 | 4.38 | 4.30 | 4.35 | 4.40 |
| entities_ratio | 0.007 | 0.008 | 0.009 | 0.013 | 0.011 | 0.009 | 0.008 | 0.009 | 0.005 |
| oov_ratio | 0.100 | 0.094 | 0.009 | 0.062 | 0.050 | 0.079 | 0.023 | 0.019 | 0.036 |
| polarity | 0.011 | −0.075 | 0.010 | −0.262 | −0.303 | −0.245 | −0.411 | −0.388 | −0.299 |
| pos_diversity_ratio | 0.09 | 0.05 | 0.07 | 0.44 | 0.22 | 0.50 | 0.21 | 0.15 | 0.20 |
| quotes_count | 8.14 | 23.43 | 7.26 | 5.00 | 6.80 | 6.06 | 5.29 | 8.90 | 0.01 |
| quotes_ratio | 0.002 | 0.004 | 0.003 | 0.003 | 0.002 | 0.003 | 0.005 | 0.001 | 0.000 |
| ratio_ADJ | 0.078 | 0.082 | 0.081 | 0.055 | 0.057 | 0.065 | 0.041 | 0.044 | 0.045 |
| ratio_ADP | 0.101 | 0.112 | 0.110 | 0.121 | 0.140 | 0.128 | 0.144 | 0.155 | 0.153 |
| ratio_ADV | 0.042 | 0.045 | 0.049 | 0.039 | 0.028 | 0.036 | 0.038 | 0.037 | 0.051 |
| ratio_DET | 0.078 | 0.082 | 0.089 | 0.101 | 0.105 | 0.111 | 0.123 | 0.125 | 0.130 |
| ratio_NOUN | 0.185 | 0.178 | 0.175 | 0.168 | 0.174 | 0.193 | 0.164 | 0.180 | 0.173 |
| ratio_PRON | 0.028 | 0.029 | 0.031 | 0.053 | 0.039 | 0.053 | 0.034 | 0.034 | 0.041 |
| ratio_PROPN | 0.11 | 0.10 | 0.10 | 0.11 | 0.10 | 0.09 | 0.08 | 0.11 | 0.06 |
| ratio_PUNCT | 0.124 | 0.128 | 0.109 | 0.102 | 0.101 | 0.103 | 0.149 | 0.137 | 0.132 |
| ratio_SYM | 0.003 | 0.001 | 0.004 | 0.011 | 0.012 | 0.011 | 0.012 | 0.026 | 0.001 |
| ratio_VERB | 0.149 | 0.152 | 0.160 | 0.095 | 0.078 | 0.088 | 0.126 | 0.117 | 0.139 |
| sentences | 34.0 | 49.9 | 25.1 | 10.3 | 17.2 | 12.0 | 13.2 | 67.3 | 8.1 |
| ttr | 0.522 | 0.439 | 0.491 | 0.596 | 0.412 | 0.669 | 0.532 | 0.457 | 0.562 |
| upper_case | 132 | 199 | 90 | 66 | 126 | 31 | 41 | 212 | 28 |
| words_per_sents | 19.7 | 25.1 | 22.7 | 17.9 | 20.6 | 27.5 | 37.9 | 36.6 | 31.0 |
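
The per-class values above (apparently averages per language and class) make the idea of language-independent "mutual" features checkable by hand. The Python sketch below copies eight rows from the table and keeps the features whose fake-minus-legitimate difference has the same sign in English, Portuguese, and Spanish. It is a descriptive check on the reported values only: it ignores variance, is not a statistical test, and is not the feature-selection procedure used in the paper. The names `TABLE` and `consistent_features` are hypothetical.

```python
# Values copied from the table above, as (fake, legitimate, satire) per language.
# Only 8 of the 21 features are included to keep the example short.
TABLE = {
    "avg_word_size":       {"EN": (4.40, 4.30, 4.18), "PT": (4.14, 4.28, 4.38), "ES": (4.30, 4.35, 4.40)},
    "polarity":            {"EN": (0.011, -0.075, 0.010), "PT": (-0.262, -0.303, -0.245), "ES": (-0.411, -0.388, -0.299)},
    "pos_diversity_ratio": {"EN": (0.09, 0.05, 0.07), "PT": (0.44, 0.22, 0.50), "ES": (0.21, 0.15, 0.20)},
    "quotes_count":        {"EN": (8.14, 23.43, 7.26), "PT": (5.00, 6.80, 6.06), "ES": (5.29, 8.90, 0.01)},
    "sentences":           {"EN": (34.0, 49.9, 25.1), "PT": (10.3, 17.2, 12.0), "ES": (13.2, 67.3, 8.1)},
    "ttr":                 {"EN": (0.522, 0.439, 0.491), "PT": (0.596, 0.412, 0.669), "ES": (0.532, 0.457, 0.562)},
    "upper_case":          {"EN": (132, 199, 90), "PT": (66, 126, 31), "ES": (41, 212, 28)},
    "words_per_sents":     {"EN": (19.7, 25.1, 22.7), "PT": (17.9, 20.6, 27.5), "ES": (37.9, 36.6, 31.0)},
}
FAKE, LEGIT, SATIRE = 0, 1, 2  # positions inside each tuple


def direction(a, b):
    """+1 if a > b, -1 if a < b, 0 if equal."""
    return (a > b) - (a < b)


def consistent_features(table, cls_a=FAKE, cls_b=LEGIT):
    """Return {feature: sign} for features whose class difference keeps one sign in every language."""
    result = {}
    for name, per_lang in table.items():
        signs = {direction(values[cls_a], values[cls_b]) for values in per_lang.values()}
        if len(signs) == 1 and 0 not in signs:
            result[name] = signs.pop()
    return result


print(consistent_features(TABLE))
```

With these eight rows, pos_diversity_ratio and ttr are higher for fake than for legitimate news in all three languages, and quotes_count, sentences and upper_case are lower in all three, whereas avg_word_size, polarity and words_per_sents change direction between languages. Passing `cls_b=SATIRE` runs the same check for fake versus satirical news.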

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Abonizio, H.Q.; de Morais, J.I.; Tavares, G.M.; Barbon Junior, S. Language-Independent Fake News Detection: English, Portuguese, and Spanish Mutual Features. Future Internet 2020, 12, 87. https://doi.org/10.3390/fi12050087
