Paranoid Transformer: Reading Narrative of Madness as Computational Approach to Creativity †

Abstract

1. Introduction

2. Related Work

3. Model and Experiments

3.1. Generator Subsystem

- a collection of Crypto Texts (Crypto Anarchist Manifesto, Cyphernomicon, etc.);

- a collection of fiction books from such cyberpunk authors as Dick, Gibson, and others;

- non-cyberpunk authors with a particular affinity for fringe mental prose, for example, Kafka and Rumi;

- transcripts and subtitles from some cyberpunk movies and series such as Blade Runner; and

- several thousand quotes and fortune cookie messages collected from different sources.

- QUOTE for any short quote or fortune, LONG for others; and

- CYBER for cyber-themed texts and OTHER for others.
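The two tag pairs above can be attached to each training text as a short prefix. The following is a minimal sketch of one way to do that, not the authors' implementation: the corpus keys, the tag assigned to each corpus, and the space-separated prefix format are all assumptions made for illustration; only the tag names QUOTE, LONG, CYBER, and OTHER come from the text.

```python
# Hypothetical corpus names mapped to (length tag, theme tag).
# The tag names come from the text; the assignments are assumptions.
CORPUS_TAGS = {
    "crypto_texts": ("LONG", "CYBER"),
    "cyberpunk_fiction": ("LONG", "CYBER"),
    "fringe_prose": ("LONG", "OTHER"),
    "cyberpunk_subtitles": ("LONG", "CYBER"),
    "quotes_and_fortunes": ("QUOTE", "OTHER"),
}


def tag_text(corpus, text):
    """Prefix a training text with its length tag and theme tag."""
    length_tag, theme_tag = CORPUS_TAGS[corpus]
    return f"{length_tag} {theme_tag} {text.strip()}"


if __name__ == "__main__":
    print(tag_text("quotes_and_fortunes", " be bold "))
    print(tag_text("cyberpunk_fiction", "the sky above the port"))
```

With a prefix of this kind, a generator could in principle be prompted with a chosen tag pair at sampling time to steer the length and theme of the output.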

let painting melt away every other shred of reason and pain, just lew the paint to move thoughts away from blizzes in death. let it dry out, and turn to cosmic delights, to laugh on the big charms and saxophones and fudatron steames of the sales titanium. we are god’s friends, the golden hands on the shoulders of our fears. do you knock my cleaning table over? i snap awake at some dawn. The patrons researching the blues instructor’s theories around me, then give me a glass of jim beam. boom!

3.2. Heuristic Filters

- reject the creation of new, non-existing words;

- reject phrases with two unconnected verbs in a row;

- reject phrases with several duplicated words; and

- reject phrases with no punctuation or with too many punctuation marks.
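The four rejection rules above can be sketched as simple predicates. This is an illustrative approximation under stated assumptions, not the filters actually used: the vocabulary and verb list are passed in by the caller, any two adjacent verbs are treated as "unconnected" (a real check would likely need part-of-speech tagging), and the thresholds `max_repeats` and `max_ratio` are hypothetical values.

```python
import re
from collections import Counter

WORD_RE = re.compile(r"[a-z]+(?:'[a-z]+)?")
PUNCT_RE = re.compile(r"[.,;:!?]")


def tokenize(phrase):
    """Lowercase the phrase and return its words."""
    return WORD_RE.findall(phrase.lower().replace("\u2019", "'"))


def has_unknown_word(words, vocabulary):
    """Rule 1: the phrase contains a word outside the known vocabulary."""
    return any(w not in vocabulary for w in words)


def has_adjacent_verbs(words, verbs):
    """Rule 2 (approximation): two verbs appear directly next to each other."""
    return any(a in verbs and b in verbs for a, b in zip(words, words[1:]))


def has_many_duplicates(words, max_repeats=2):
    """Rule 3: some longer word is repeated more than max_repeats times."""
    counts = Counter(w for w in words if len(w) > 3)
    return any(c > max_repeats for c in counts.values())


def has_bad_punctuation(phrase, max_ratio=0.3):
    """Rule 4: no punctuation at all, or too many marks per word."""
    marks = len(PUNCT_RE.findall(phrase))
    n_words = max(len(phrase.split()), 1)
    return marks == 0 or marks / n_words > max_ratio


def accept(phrase, vocabulary, verbs):
    """Return True only if the phrase passes all four filters."""
    words = tokenize(phrase)
    return not (
        has_unknown_word(words, vocabulary)
        or has_adjacent_verbs(words, verbs)
        or has_many_duplicates(words)
        or has_bad_punctuation(phrase)
    )
```

Each rule is a separate function so that individual filters can be tuned or switched off independently of the others.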

a slave has no more say in his language but he has to speak out!the doll has a variety of languages, so its feelings have to fill up some time of the day - to - day journals.The doll is used only when he remains private. and it is always effective.leave him with his monk - like body.a little of technique on can be helpful.

3.3. Critic Subsystem

a sudden feeling of austin lemons, a gentle stab of disgust. i’m what i’mhumans whirl in night and distance.we shall never suffer this. if the human race came along tomorrow, none of us would be as wise as they already would have been. there is a beginning and an end.both of our grandparents and brothers are overdue. he either can not agree or he can look for someone to blame for his death.he has reappeared from the world of revenge, revenge, separation, hatred. he has ceased all who have offended him.and i don’t want the truth. not for an hour.

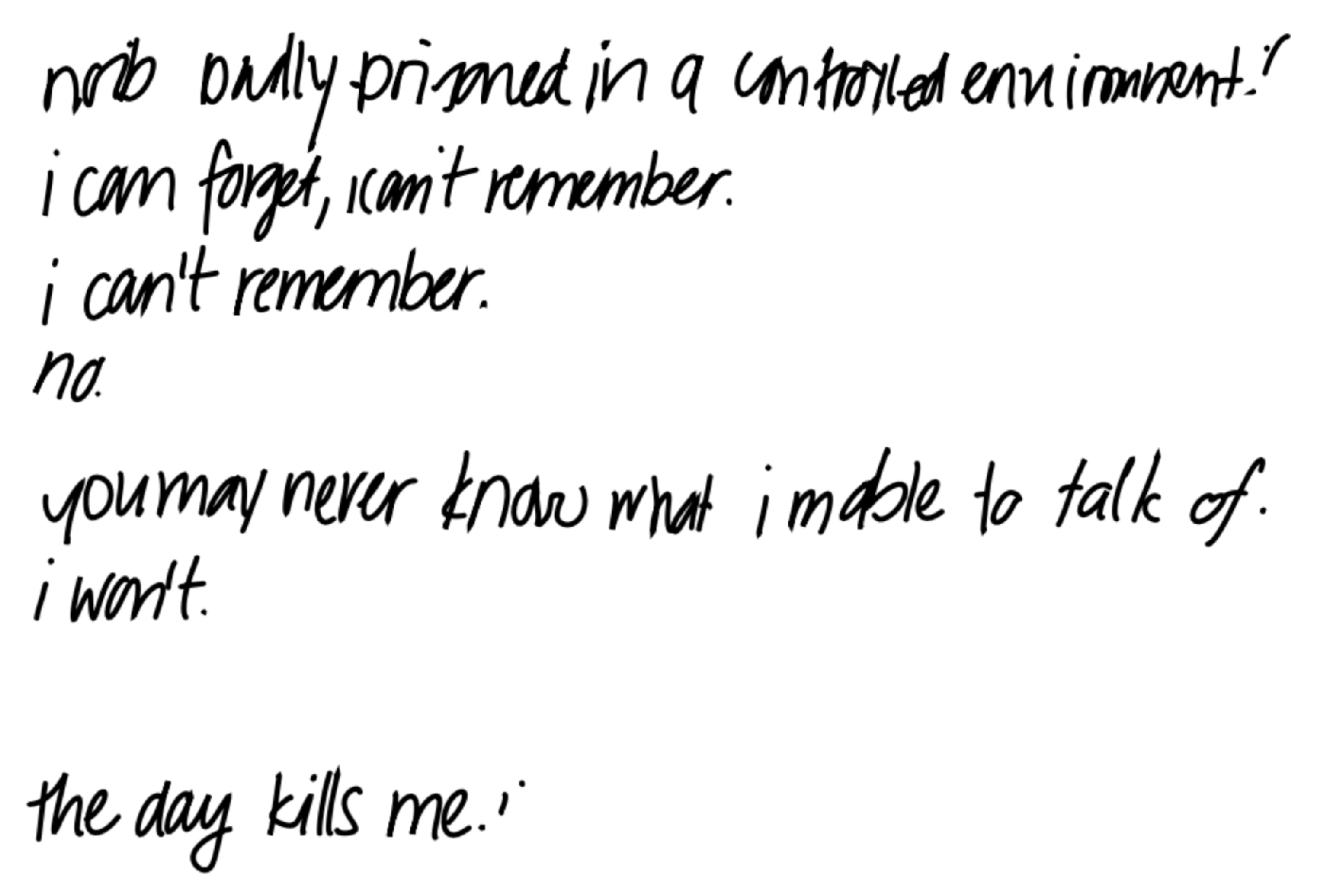

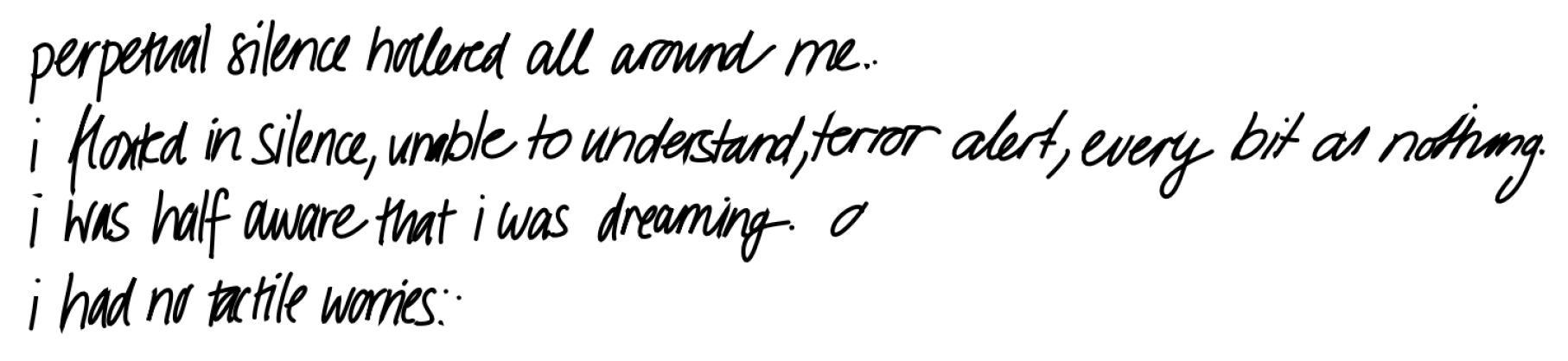

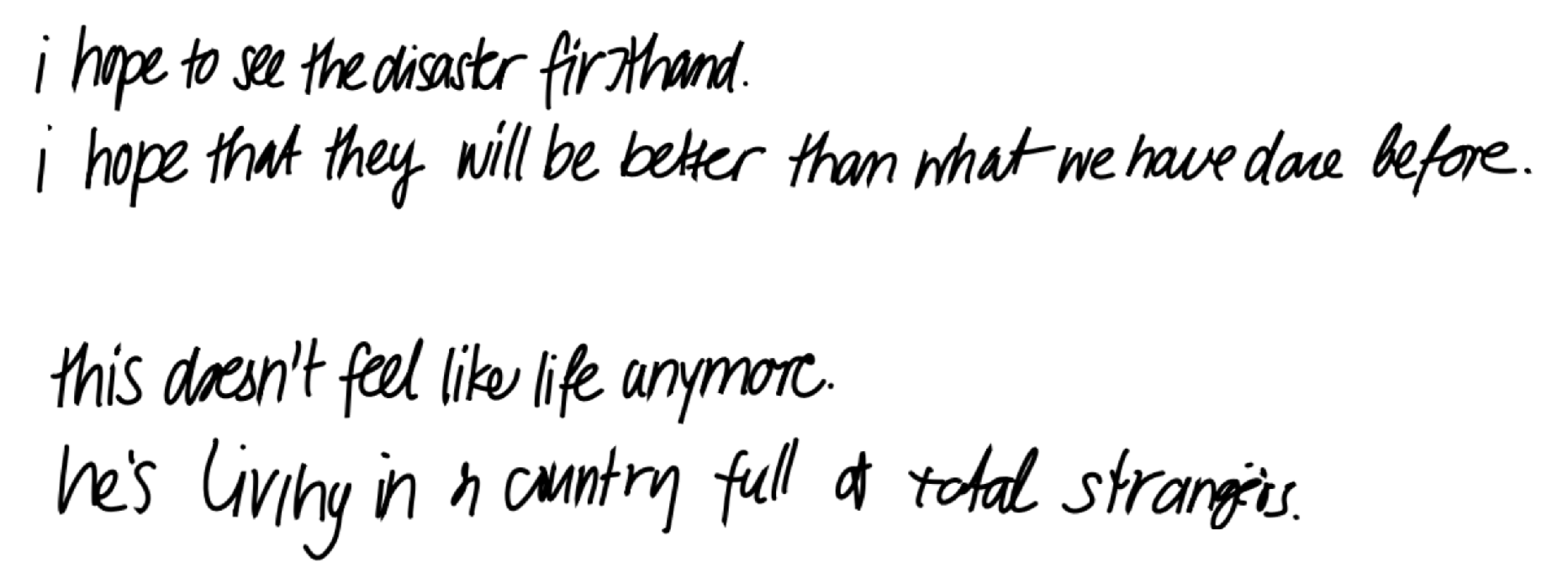

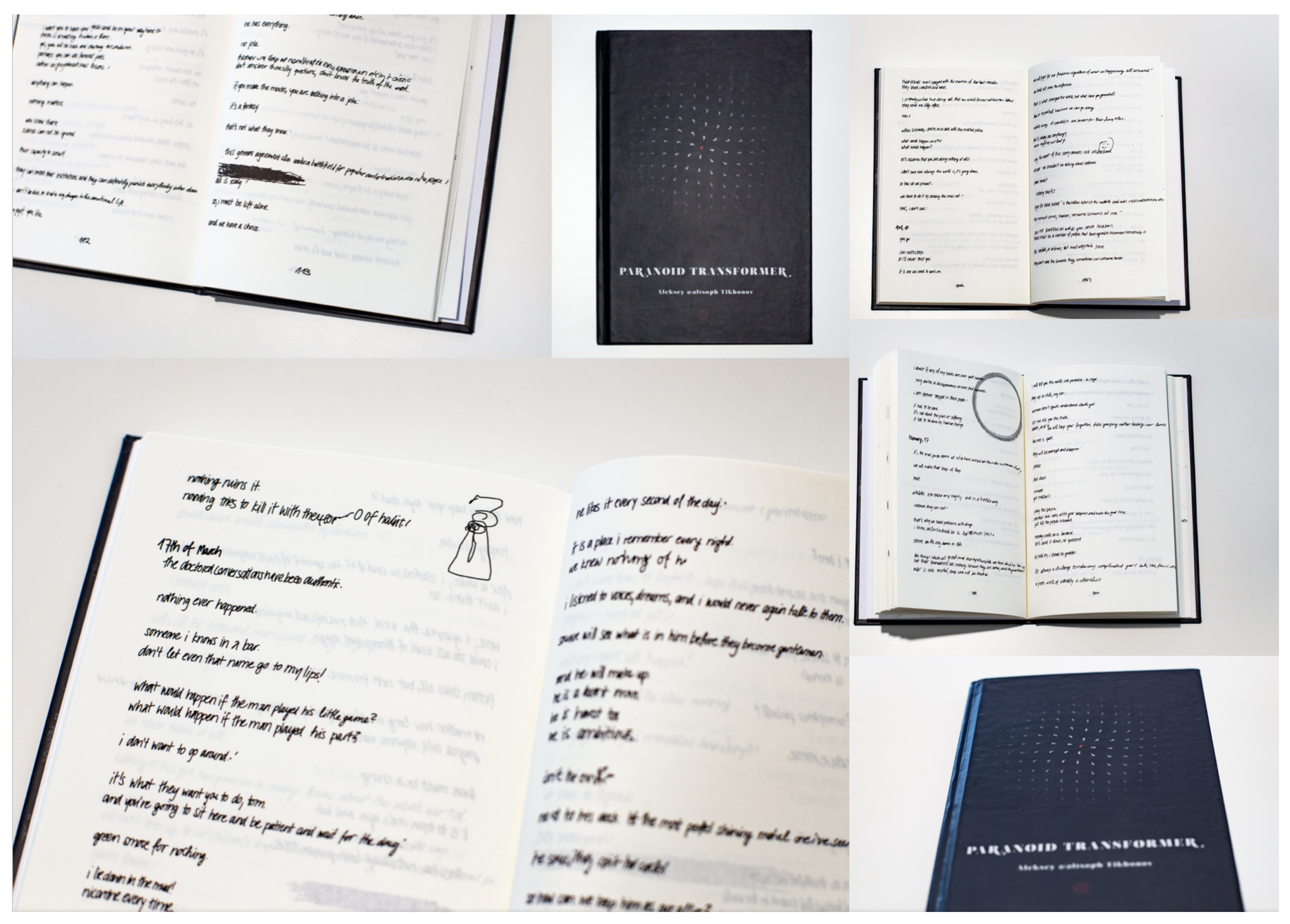

3.4. Nervous Handwriting

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Model | Polarity | Subjectivity | Unique Words per Text Piece |
|---|---|---|---|
| Generated Sample | 13.29% ± 0.8% | 27.34% ± 1.8% | 11.5 ± 0.7 |
| Heuristically Filtered Sample | 14.23% ± 0.9% | 32.26% ± 2.1% | 14.7 ± 0.9 |
| BERT Filtered Sample | 15.65% ± 0.9% | 30.66% ± 1.3% | 15.6 ± 7.1 |
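The last column of the table counts distinct words per text piece. A minimal sketch of how such a figure could be computed is given below. The tokenization rule is an assumption, and so is the reading of "±" as the standard error of the mean; the table itself does not state which spread measure is reported.

```python
import math
import re
import statistics

WORD_RE = re.compile(r"[a-z]+(?:'[a-z]+)?")


def unique_word_count(text):
    """Number of distinct lowercase words in one text piece."""
    return len(set(WORD_RE.findall(text.lower())))


def mean_and_stderr(values):
    """Mean and standard error of the mean (assumed meaning of the ± term)."""
    mean = statistics.mean(values)
    stderr = statistics.stdev(values) / math.sqrt(len(values))
    return mean, stderr


if __name__ == "__main__":
    pieces = [
        "we shall never suffer this.",
        "there is a beginning and an end.",
        "and it is always effective.",
    ]
    counts = [unique_word_count(p) for p in pieces]
    print(mean_and_stderr(counts))
```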

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Agafonova, Y.; Tikhonov, A.; Yamshchikov, I.P. Paranoid Transformer: Reading Narrative of Madness as Computational Approach to Creativity. Future Internet 2020, 12, 182. https://doi.org/10.3390/fi12110182
