A Knowledge-Driven Multimedia Retrieval System Based on Semantics and Deep Features

Abstract

1. Introduction

2. Related Work

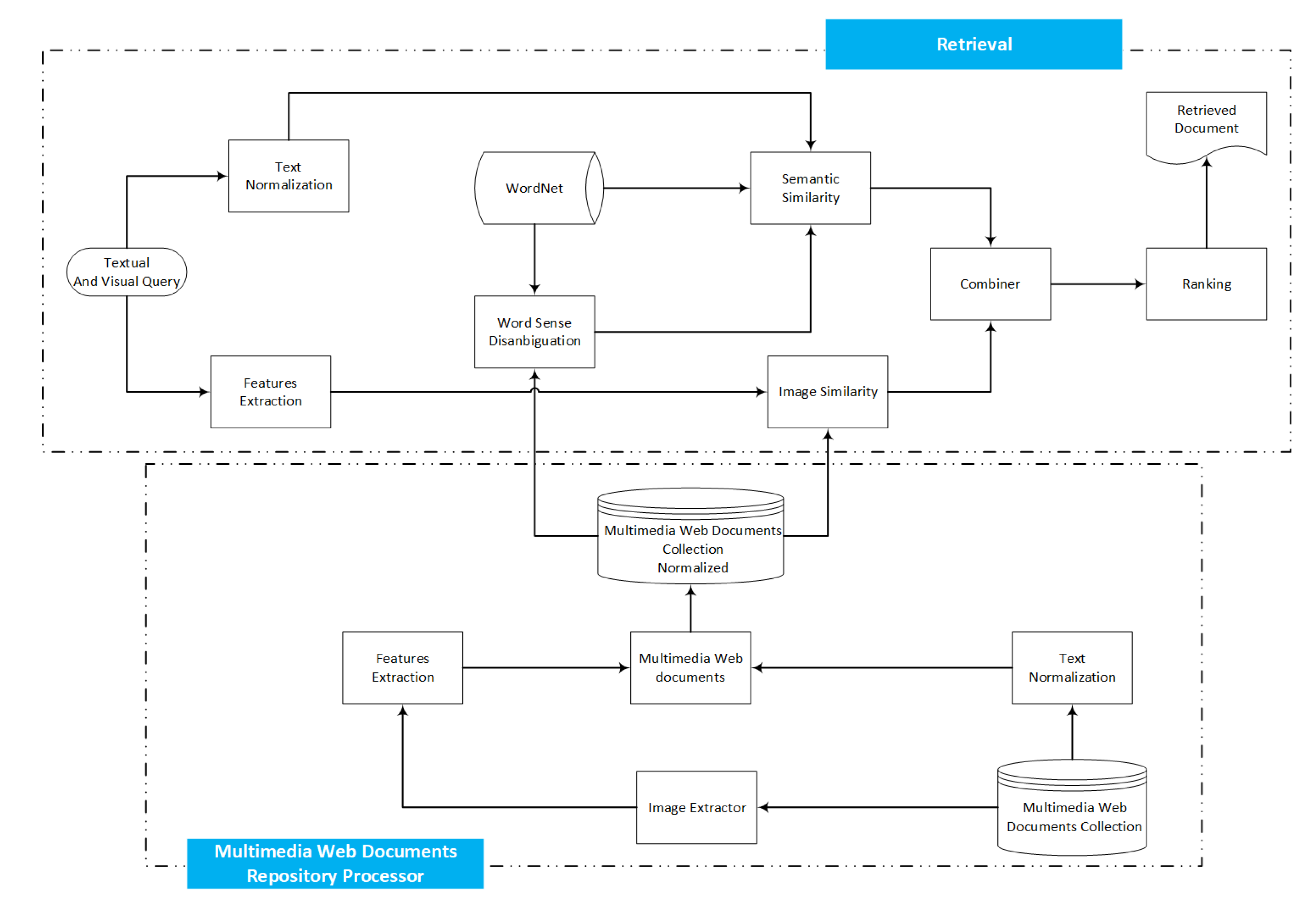

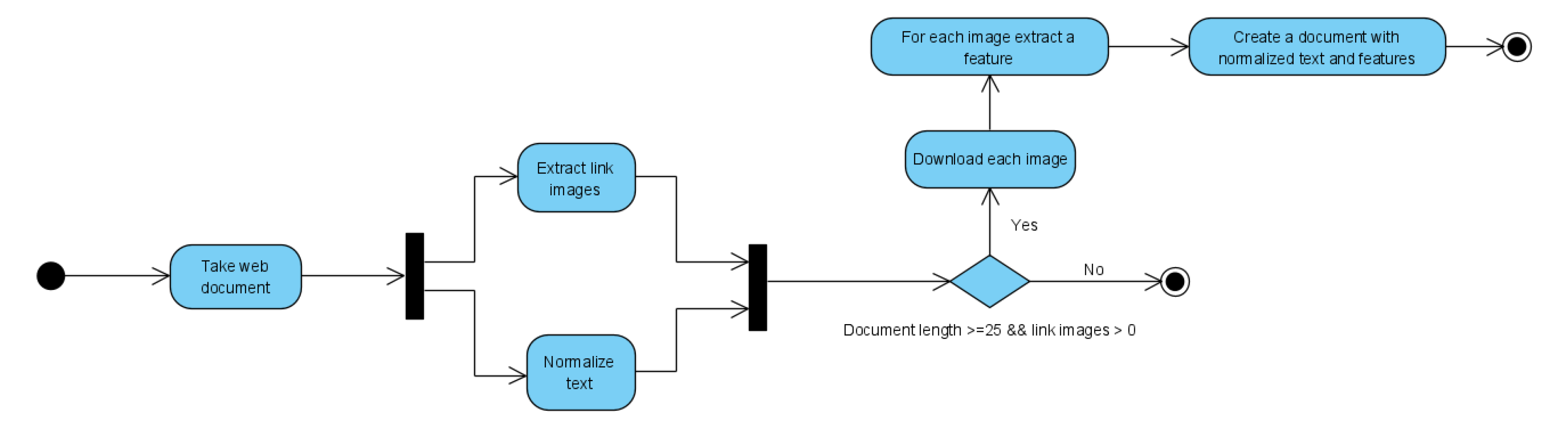

3. The Proposed System

- Shortest Path based Measure: this measure only takes into account the length of the shortest path between two concepts in the taxonomy. It assumes that the similarity between two concepts depends on how close they are in the taxonomy, so the similarity between two terms decreases as the number of edges between them grows.

- Wu & Palmer’s Measure: a scaled measure that considers the position of concepts c1 and c2 in the taxonomy relative to the position of their most specific common concept. It assumes that the similarity between two concepts is a function of both path length and depth.

- Leacock & Chodorow’s Measure: it scales the shortest path length between the two considered concepts by the maximum depth of the taxonomy.

- Resnik’s Measure: it assumes that, for two given concepts, similarity depends on the information content of the most specific concept that subsumes both of them in the taxonomy.

- Jiang’s Measure: it uses both the amount of information needed to state what the two concepts share and the information needed to fully describe each of them. The resulting value is a semantic distance between the two concepts; semantic similarity is the inverse of this distance.
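The five measures above can be compared on a small example. The following is a minimal Python sketch over a hypothetical toy taxonomy with hypothetical corpus counts; the system itself works on WordNet, and the concept names and counts here are illustrative only. Depth counts nodes from the root, and the +1 offsets in the path and Leacock & Chodorow formulas follow the convention used by NLTK's WordNet interface; other conventions exist.

```python
import math

# Hypothetical toy IS-A taxonomy (child -> parent); "entity" is the root.
PARENT = {
    "animal": "entity", "vehicle": "entity",
    "dog": "animal", "cat": "animal",
    "car": "vehicle", "bicycle": "vehicle",
}
# Hypothetical corpus counts per concept, used only to estimate information content.
COUNTS = {"entity": 0, "animal": 10, "vehicle": 10,
          "dog": 30, "cat": 20, "car": 25, "bicycle": 5}


def ancestors(c):
    """Return [c, parent(c), ..., root]."""
    chain = [c]
    while chain[-1] in PARENT:
        chain.append(PARENT[chain[-1]])
    return chain


def depth(c):
    """Number of nodes from the root down to c (the root has depth 1)."""
    return len(ancestors(c))


def lcs(c1, c2):
    """Most specific common subsumer of c1 and c2."""
    shared = set(ancestors(c2))
    return next(a for a in ancestors(c1) if a in shared)


def path_len(c1, c2):
    """Number of edges on the shortest path between c1 and c2 (via their subsumer)."""
    return depth(c1) + depth(c2) - 2 * depth(lcs(c1, c2))


MAX_DEPTH = max(depth(c) for c in COUNTS)
TOTAL = sum(COUNTS.values())


def ic(c):
    """Information content log(1 / p(c)); p(c) counts c and all of its descendants."""
    subtree = sum(n for x, n in COUNTS.items() if c in ancestors(x))
    return math.log(TOTAL / subtree)


def sim_path(c1, c2):    # shortest path: fewer edges -> higher similarity
    return 1.0 / (1 + path_len(c1, c2))


def sim_wup(c1, c2):     # Wu & Palmer: depth of the subsumer vs. depths of the concepts
    return 2.0 * depth(lcs(c1, c2)) / (depth(c1) + depth(c2))


def sim_lch(c1, c2):     # Leacock & Chodorow: path length scaled by the maximum depth
    return -math.log((path_len(c1, c2) + 1) / (2.0 * MAX_DEPTH))


def sim_resnik(c1, c2):  # Resnik: information content of the common subsumer
    return ic(lcs(c1, c2))


def sim_jcn(c1, c2):     # Jiang & Conrath: inverse of an IC-based distance
    dist = ic(c1) + ic(c2) - 2 * ic(lcs(c1, c2))
    return float("inf") if dist == 0 else 1.0 / dist


if __name__ == "__main__":
    for a, b in [("dog", "cat"), ("dog", "car")]:
        scores = [f(a, b) for f in (sim_path, sim_wup, sim_lch, sim_resnik, sim_jcn)]
        print(a, b, ["%.3f" % s for s in scores])
```

On this toy data every measure rates "dog" and "cat" (which share the subsumer "animal") as more similar than "dog" and "car" (which only share the root), while Resnik's measure gives exactly zero for the latter pair because the root carries no information.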

- Name: web site name;

- Url: web site URL;

- Images: array-like structure, each element of which is a JSON-like structure (see Figure 4) with the following fields:

  - Image name;

  - Image path: image storage path;

  - PHOG: PHOG feature;

  - ORB: 2-D array of ORB key points;

  - Deep Descriptor: array of deep descriptors.
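The record described above maps naturally onto a JSON document. The following Python sketch builds one such record with the standard `json` module. The key names, the URL and all numeric values are hypothetical placeholders, and real descriptors would be much longer: in OpenCV, for example, `cv2.ORB_create().detectAndCompute(image, None)` returns the key points together with an N × 32 `uint8` descriptor array, which can be stored via `.tolist()`.

```python
import json

# Hypothetical key names; the text lists the fields but not their exact spelling.
record = {
    "name": "Example site",                  # web site name
    "url": "http://www.example.com/",        # web site URL
    "images": [                              # one JSON-like element per image
        {
            "image_name": "img_001.jpg",
            "image_path": "/data/images/img_001.jpg",   # hypothetical storage path
            "phog": [0.12, 0.05, 0.33],                  # PHOG histogram (toy, truncated)
            "orb": [[12, 200, 7], [45, 3, 91]],          # one row per ORB key point (toy, truncated)
            "deep_descriptor": [0.8, 0.1, 0.0, 0.6],     # pooled CNN features (toy, truncated)
        }
    ],
}

serialized = json.dumps(record, indent=2)   # text form that would be persisted
restored = json.loads(serialized)           # round trip back to Python objects
print(len(restored["images"]), "image(s) stored for", restored["url"])
```

Keeping every image, with all of its features, nested inside the site record means one lookup returns everything needed to score that site's images.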

- S: the set of signs;

- P: the set of properties used to relate signs to concepts;

- C: the set of constraints on the set P.
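The three sets S, P and C can be pictured as a small data structure. The Python sketch below is an illustrative assumption, not the authors' implementation. The sign kinds ("text", "image"), the property names `hasLexicalSign` and `hasVisualSign`, and the example constraint are all hypothetical. Constraints are modelled as predicates over the set P.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Set


@dataclass(frozen=True)
class Sign:
    kind: str    # modality of the sign, e.g. "text" or "image" (hypothetical labels)
    value: str   # the word, or a reference to the image file


@dataclass(frozen=True)
class Property:
    name: str      # hypothetical property name, e.g. "hasVisualSign"
    sign: Sign
    concept: str   # the concept the sign is related to


Constraint = Callable[[Set[Property]], bool]   # a predicate over the set P


@dataclass
class Model:
    signs: Set[Sign] = field(default_factory=set)                 # S
    properties: Set[Property] = field(default_factory=set)        # P
    constraints: List[Constraint] = field(default_factory=list)   # C

    def violations(self) -> List[Constraint]:
        """Return the constraints in C that the current set P does not satisfy."""
        return [c for c in self.constraints if not c(self.properties)]


def visual_links_use_images(props: Set[Property]) -> bool:
    """Hypothetical constraint: 'hasVisualSign' may only relate image signs."""
    return all(p.sign.kind == "image" for p in props if p.name == "hasVisualSign")


dog_word, dog_img = Sign("text", "dog"), Sign("image", "dog_001.jpg")
model = Model(
    signs={dog_word, dog_img},
    properties={Property("hasLexicalSign", dog_word, "dog"),
                Property("hasVisualSign", dog_img, "dog")},
    constraints=[visual_links_use_images],
)
print(len(model.violations()))
```

Because a constraint inspects the whole set P, it can express rules that involve several properties at once, not only checks on a single link.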

4. Experimental Results

- VGG-16 with global max pooling and global average pooling;

- ResNet-50 with global max pooling and global average pooling;

- Inception V3 with global max pooling and global average pooling;

- MobileNet V2 with global max pooling and global average pooling.
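For each of the networks listed above, global max pooling and global average pooling both reduce the last convolutional feature map to one value per channel. For MobileNet V2 with a 224 × 224 input, that map is 7 × 7 × 1280. The minimal NumPy sketch below assumes an H × W × C feature map has already been extracted. The final L2 normalization is a common choice for cosine-based retrieval and is an assumption here, since this section does not state it. In Keras, `tf.keras.applications.MobileNetV2(include_top=False, pooling="avg")` (or `pooling="max"`) returns the pooled vector directly.

```python
import numpy as np


def pooled_descriptor(feature_map, mode="avg"):
    """Collapse an H x W x C feature map into a C-dimensional, L2-normalized
    descriptor using global average pooling ("avg") or global max pooling ("max")."""
    if mode == "avg":
        desc = feature_map.mean(axis=(0, 1))
    elif mode == "max":
        desc = feature_map.max(axis=(0, 1))
    else:
        raise ValueError("mode must be 'avg' or 'max'")
    norm = np.linalg.norm(desc)
    return desc / norm if norm > 0 else desc


# Toy 2 x 2 x 3 feature map standing in for the last convolutional block of a CNN.
fmap = np.array([[[1.0, 0.0, 2.0], [3.0, 0.0, 2.0]],
                 [[1.0, 4.0, 2.0], [3.0, 0.0, 2.0]]])
print(pooled_descriptor(fmap, "avg"))
print(pooled_descriptor(fmap, "max"))
```

Average pooling summarizes how strongly each channel fires across the whole image. Max pooling keeps only the single strongest response per channel, so it is more sensitive to small, distinctive regions.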

- Case A: text query, using:

  - Path similarity;

  - Resnik similarity;

- Case B: visual query, using the deep descriptor obtained with MobileNet V2 with global average pooling;

- Case C: visual and text query, using:

  - Path similarity and deep descriptor;

  - Resnik similarity and deep descriptor.
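The three cases above differ only in which scores feed the final ranking. This section does not state how the textual and visual scores are combined in Case C. The Python sketch below therefore assumes a simple weighted sum (a form of the sum rule from the classifier-combination literature) with a hypothetical weight `alpha`. It also assumes the semantic score has already been rescaled to [0, 1], which matters for Resnik similarity because that measure is unbounded. Descriptors and text scores are toy values.

```python
import numpy as np


def cosine(a, b):
    """Cosine similarity between two descriptor vectors."""
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def rank(query_desc, items, alpha=0.5):
    """Rank items by alpha * visual score + (1 - alpha) * textual score.

    items: (item_id, deep_descriptor, text_score) tuples, where text_score is a
    semantic similarity to the query already rescaled to [0, 1] (hypothetical).
    alpha = 1.0 ranks on the visual descriptor only (as in Case B),
    alpha = 0.0 on the textual score only (as in Case A).
    """
    scored = [(alpha * cosine(query_desc, desc) + (1 - alpha) * text_score, item_id)
              for item_id, desc, text_score in items]
    return [item_id for _, item_id in sorted(scored, reverse=True)]


# Toy descriptors and text scores, for illustration only.
items = [
    ("img_a", [0.9, 0.1, 0.0], 0.2),
    ("img_b", [0.1, 0.9, 0.0], 0.9),
    ("img_c", [0.7, 0.7, 0.0], 0.6),
]
query = [1.0, 0.0, 0.0]
print(rank(query, items, alpha=1.0))   # -> ['img_a', 'img_c', 'img_b']
print(rank(query, items, alpha=0.5))   # -> ['img_c', 'img_a', 'img_b']
```

In the toy data, "img_a" is the closest match visually. Once the text score is mixed in, "img_c" moves ahead because it is both visually close and semantically relevant. This is the kind of shift a combined visual and text query is meant to capture.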

5. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

References

- Baeza-Yates, R.; Ribeiro-Neto, B. Modern Information Retrieval: The Concepts and Technology Behind Search, 2nd ed.; Addison-Wesley Publishing Company: Boston, MA, USA, 2011.

- Rinaldi, A.M. An ontology-driven approach for semantic information retrieval on the web. ACM Trans. Internet Technol. (TOIT) 2009, 9, 10.

- Saracevic, T. Relevance: A review of and a framework for the thinking on the notion in information science. J. Am. Soc. Inf. Sci. 1975, 26, 321–343.

- Swanson, D.R. Subjective versus objective relevance in bibliographic retrieval systems. Libr. Q. 1986, 56, 389–398.

- Harter, S.P. Psychological relevance and information science. J. Am. Soc. Inf. Sci. 1992, 43, 602–615.

- Barry, C.L. Document representations and clues to document relevance. J. Am. Soc. Inf. Sci. 1998, 49, 1293–1303.

- Park, T.K. The nature of relevance in information retrieval: An empirical study. Libr. Q. 1993, 63, 318–351.

- Vakkari, P.; Hakala, N. Changes in relevance criteria and problem stages in task performance. J. Doc. 2000, 56, 540–562.

- Saracevic, T. Relevance reconsidered. In Proceedings of the Second Conference on Conceptions of Library and Information Science (CoLIS 2), Seattle, WA, USA, 13–16 October 1996; ACM: New York, NY, USA, 1996; pp. 201–218.

- Miller, K. Communication Theories; McGraw-Hill: New York, NY, USA, 2005.

- Danesi, M.; Perron, P. Analyzing Cultures: An Introduction and Handbook; Indiana University Press: Bloomington, IN, USA, 1999.

- Rinaldi, A.M.; Russo, C. User-centered information retrieval using semantic multimedia big data. In Proceedings of the 2018 IEEE International Conference on Big Data (Big Data), Seattle, WA, USA, 10–13 December 2018; pp. 2304–2313.

- Smeulders, A.W.; Worring, M.; Santini, S.; Gupta, A.; Jain, R. Content-based image retrieval at the end of the early years. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1349–1380.

- Chen, Y.; Wang, J.Z.; Krovetz, R. An unsupervised learning approach to content-based image retrieval. In Proceedings of the Seventh International Symposium on Signal Processing and Its Applications, Paris, France, 4 July 2003; Volume 1, pp. 197–200.

- Rui, Y.; Huang, T.S.; Chang, S.F. Image retrieval: Current techniques, promising directions, and open issues. J. Vis. Commun. Image Represent. 1999, 10, 39–62.

- Liu, Y.; Zhang, D.; Lu, G.; Ma, W.Y. A survey of content-based image retrieval with high-level semantics. Pattern Recognit. 2007, 40, 262–282.

- Eakins, J.; Graham, M. Content-Based Image Retrieval. 1999. Available online: http://www.leeds.ac.uk/educol/documents/00001240.htm (accessed on 2 September 2020).

- Meng, L.; Huang, R.; Gu, J. A review of semantic similarity measures in WordNet. Int. J. Hybrid Inf. Technol. 2013, 6, 1–12.

- Wang, S.; Han, K.; Jin, J. Review of image low-level feature extraction methods for content-based image retrieval. Sens. Rev. 2019, 39, 783–809.

- Zheng, L.; Yang, Y.; Tian, Q. SIFT meets CNN: A decade survey of instance retrieval. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 1224–1244.

- Bosch, A.; Zisserman, A.; Munoz, X. Representing shape with a spatial pyramid kernel. In Proceedings of the 6th ACM International Conference on Image and Video Retrieval, Amsterdam, The Netherlands, 9–11 July 2007; pp. 401–408.

- Mikolajczyk, K.; Tuytelaars, T. Local Image Features. In Encyclopedia of Biometrics; Li, S.Z., Jain, A.K., Eds.; Springer: Boston, MA, USA, 2015; pp. 1100–1105.

- Introduction to SIFT (Scale-Invariant Feature Transform). Available online: https://docs.opencv.org/master/da/df5/tutorial_py_sift_intro.html (accessed on 1 September 2020).

- Introduction to SURF (Speeded-Up Robust Features). Available online: https://opencv-python-tutroals.readthedocs.io/en/latest/py_tutorials/py_feature2d/py_surf_intro/py_surf_intro.html (accessed on 1 September 2020).

- ORB (Oriented FAST and Rotated BRIEF). Available online: https://docs.opencv.org/3.4/d1/d89/tutorial_py_orb.html (accessed on 1 September 2020).

- Karami, E.; Prasad, S.; Shehata, M. Image matching using SIFT, SURF, BRIEF and ORB: Performance comparison for distorted images. arXiv 2017, arXiv:1710.02726.

- Alom, M.Z.; Taha, T.M.; Yakopcic, C.; Westberg, S.; Sidike, P.; Nasrin, M.S.; Hasan, M.; Van Essen, B.C.; Awwal, A.A.; Asari, V.K. A state-of-the-art survey on deep learning theory and architectures. Electronics 2019, 8, 292.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4510–4520.

- Wan, J.; Wang, D.; Hoi, S.C.H.; Wu, P.; Zhu, J.; Zhang, Y.; Li, J. Deep learning for content-based image retrieval: A comprehensive study. In Proceedings of the 22nd ACM International Conference on Multimedia, Mountain View, CA, USA, 18–19 June 2014; pp. 157–166.

- Leng, C.; Zhang, H.; Li, B.; Cai, G.; Pei, Z.; He, L. Local Feature Descriptor for Image Matching: A Survey. IEEE Access 2019, 7, 6424–6434.

- Chang, E.; Goh, K.; Sychay, G.; Wu, G. CBSA: Content-based soft annotation for multimodal image retrieval using Bayes point machines. IEEE Trans. Circuits Syst. Video Technol. 2003, 13, 26–38.

- Zhao, R.; Grosky, W.I. Narrowing the semantic gap-improved text-based web document retrieval using visual features. IEEE Trans. Multimed. 2002, 4, 189–200.

- Wang, X.J.; Ma, W.Y.; Xue, G.R.; Li, X. Multi-model similarity propagation and its application for web image retrieval. In Proceedings of the 12th Annual ACM International Conference on Multimedia, New York, NY, USA, 10–16 October 2004; pp. 944–951.

- Clinchant, S.; Ah-Pine, J.; Csurka, G. Semantic combination of textual and visual information in multimedia retrieval. In Proceedings of the 1st ACM International Conference on Multimedia Retrieval, Trento, Italy, 18–20 April 2011; pp. 1–8.

- Giordano, D.; Kavasidis, I.; Pino, C.; Spampinato, C. A semantic-based and adaptive architecture for automatic multimedia retrieval composition. In Proceedings of the 2011 9th International Workshop on Content-Based Multimedia Indexing (CBMI), Madrid, Spain, 13–15 June 2011; pp. 181–186.

- Buscaldi, D.; Zargayouna, H. Yasemir: Yet another semantic information retrieval system. In Proceedings of the Sixth International Workshop on Exploiting Semantic Annotations in Information Retrieval, San Francisco, CA, USA, 28 October 2013; pp. 13–16.

- Kannan, P.; Bala, P.S.; Aghila, G. A comparative study of multimedia retrieval using ontology for semantic web. In Proceedings of the IEEE-International Conference on Advances in Engineering, Science and Management (ICAESM-2012), Nagapattinam, Tamil Nadu, India, 30–31 March 2012; pp. 400–405.

- Moscato, V.; Picariello, A.; Rinaldi, A.M. Towards a user based recommendation strategy for digital ecosystems. Knowl.-Based Syst. 2013, 37, 165–175.

- Cao, J.; Huang, Z.; Shen, H.T. Local deep descriptors in bag-of-words for image retrieval. In Proceedings of the on Thematic Workshops of ACM Multimedia, Mountain View, CA, USA, 23–27 October 2017; pp. 52–58.

- Boer, M.H.D.; Lu, Y.J.; Zhang, H.; Schutte, K.; Ngo, C.W.; Kraaij, W. Semantic reasoning in zero example video event retrieval. ACM Trans. Multimed. Comput. Commun. Appl. (TOMM) 2017, 13, 1–17.

- Habibian, A.; Mensink, T.; Snoek, C.G. VideoStory: A new multimedia embedding for few-example recognition and translation of events. In Proceedings of the 22nd ACM International Conference on Multimedia, Mountain View, CA, USA, 18–19 June 2014; pp. 17–26.

- Miller, G.A. WordNet: A Lexical Database for English. Commun. ACM 1995, 38, 39–41.

- Purificato, E.; Rinaldi, A.M. Multimedia and geographic data integration for cultural heritage information retrieval. Multimed. Tools Appl. 2018, 77, 27447–27469.

- Rinaldi, A. A multimedia ontology model based on linguistic properties and audio-visual features. Inf. Sci. 2014, 277, 234–246.

- Rinaldi, A.M.; Russo, C. A semantic-based model to represent multimedia big data. In Proceedings of the 10th International Conference on Management of Digital EcoSystems, Tokyo, Japan, 25–28 September 2018; pp. 31–38.

- Web Ontology Language. Available online: https://www.w3.org/OWL/ (accessed on 1 September 2020).

- ImageNet. Available online: http://www.image-net.org/ (accessed on 1 September 2020).

- Lesk, M. Automatic sense disambiguation using machine readable dictionaries: How to tell a pine cone from an ice cream cone. In Proceedings of the 5th Annual International Conference on Systems Documentation, Toronto, ON, Canada, 8–11 June 1986; pp. 24–26.

- Vasilescu, F.; Langlais, P.; Lapalme, G. Evaluating Variants of the Lesk Approach for Disambiguating Words. Available online: http://www.iro.umontreal.ca/~felipe/Papers/paper-lrec-2004.pdf (accessed on 27 October 2020).

- Tolias, G.; Sicre, R.; Jégou, H. Particular object retrieval with integral max-pooling of CNN activations. arXiv 2015, arXiv:1511.05879.

- Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

- Kittler, J. Combining classifiers: A theoretical framework. Pattern Anal. Appl. 1998, 1, 18–27.

- 20 Newsgroups Scikit-Learn. Available online: https://scikit-learn.org/0.15/datasets/twenty_newsgroups.html (accessed on 1 September 2020).

- Visual Object Classes Challenge 2012 (VOC2012). Available online: http://host.robots.ox.ac.uk/pascal/VOC/voc2012/ (accessed on 1 September 2020).

- DMOZ Website. Available online: https://dmoz-odp.org/ (accessed on 1 September 2020).

- Caldarola, E.; Rinaldi, A. A multi-strategy approach for ontology reuse through matching and integration techniques. Adv. Intell. Syst. Comput. 2018, 561, 63–90.

- Rinaldi, A.M.; Russo, C. A matching framework for multimedia data integration using semantics and ontologies. In Proceedings of the 2018 IEEE 12th International Conference on Semantic Computing (ICSC), Laguna Hills, CA, USA, 31 January–2 February 2018; pp. 363–368.

| Topic | Documents |
|---|---|
| alt.atheism | 799 |
| comp.graphics | 973 |
| comp.os.ms-windows.misc | 985 |
| comp.sys.ibm.pc.hardware | 982 |
| comp.sys.mac.hardware | 961 |
| comp.windows.x | 980 |
| misc.forsale | 972 |
| rec.autos | 990 |
| rec.motorcycles | 994 |
| rec.sport.baseball | 994 |
| rec.sport.hockey | 999 |
| sci.crypt | 991 |
| sci.electronics | 981 |
| sci.med | 990 |
| sci.space | 987 |
| soc.religion.christian | 997 |
| talk.politics.guns | 910 |
| talk.politics.mideast | 940 |
| talk.politics.misc | 775 |
| talk.religion.misc | 628 |
| Total | 18,828 |

| Object | Images |
|---|---|
| Aeroplane | 1340 |
| Bicycle | 1104 |
| Bird | 1530 |
| Boat | 1016 |
| Bottle | 1412 |
| Bus | 842 |
| Car | 2322 |
| Cat | 2160 |
| Chair | 2238 |
| Cow | 606 |
| Diningtable | 1176 |
| Dog | 2572 |
| Horse | 964 |
| Motorbike | 1052 |
| Person | 8174 |
| Pottedplant | 1054 |
| Sheep | 650 |
| Sofa | 1014 |
| Train | 1088 |
| Tvmonitor | 1150 |
| Total | 23,080 |

| Top Category | Num. |
|---|---|
| Sports | 1087 |
| Society | 824 |
| Computers | 758 |
| Shopping | 1537 |
| Arts | 785 |
| Business | 1895 |
| Health | 1025 |
| Games | 385 |
| News | 459 |
| Science | 509 |
| Total | 9264 |

| Level | Num. |
|---|---|
| I | 2 |
| II | 54 |
| III | 1023 |
| IV | 2353 |
| V | 2485 |
| VI | 1699 |
| VII | 918 |
| VIII | 641 |
| IX | 81 |
| X | 8 |
| Total | 9264 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Rinaldi, A.M.; Russo, C.; Tommasino, C. A Knowledge-Driven Multimedia Retrieval System Based on Semantics and Deep Features. Future Internet 2020, 12, 183. https://doi.org/10.3390/fi12110183

Rinaldi AM, Russo C, Tommasino C. A Knowledge-Driven Multimedia Retrieval System Based on Semantics and Deep Features. Future Internet. 2020; 12(11):183. https://doi.org/10.3390/fi12110183

Chicago/Turabian Style: Rinaldi, Antonio Maria, Cristiano Russo, and Cristian Tommasino. 2020. "A Knowledge-Driven Multimedia Retrieval System Based on Semantics and Deep Features" Future Internet 12, no. 11: 183. https://doi.org/10.3390/fi12110183

APA Style: Rinaldi, A. M., Russo, C., & Tommasino, C. (2020). A Knowledge-Driven Multimedia Retrieval System Based on Semantics and Deep Features. Future Internet, 12(11), 183. https://doi.org/10.3390/fi12110183