Efficient Tensor Sensing for RF Tomographic Imaging on GPUs

Abstract

1. Introduction

2. Related Work

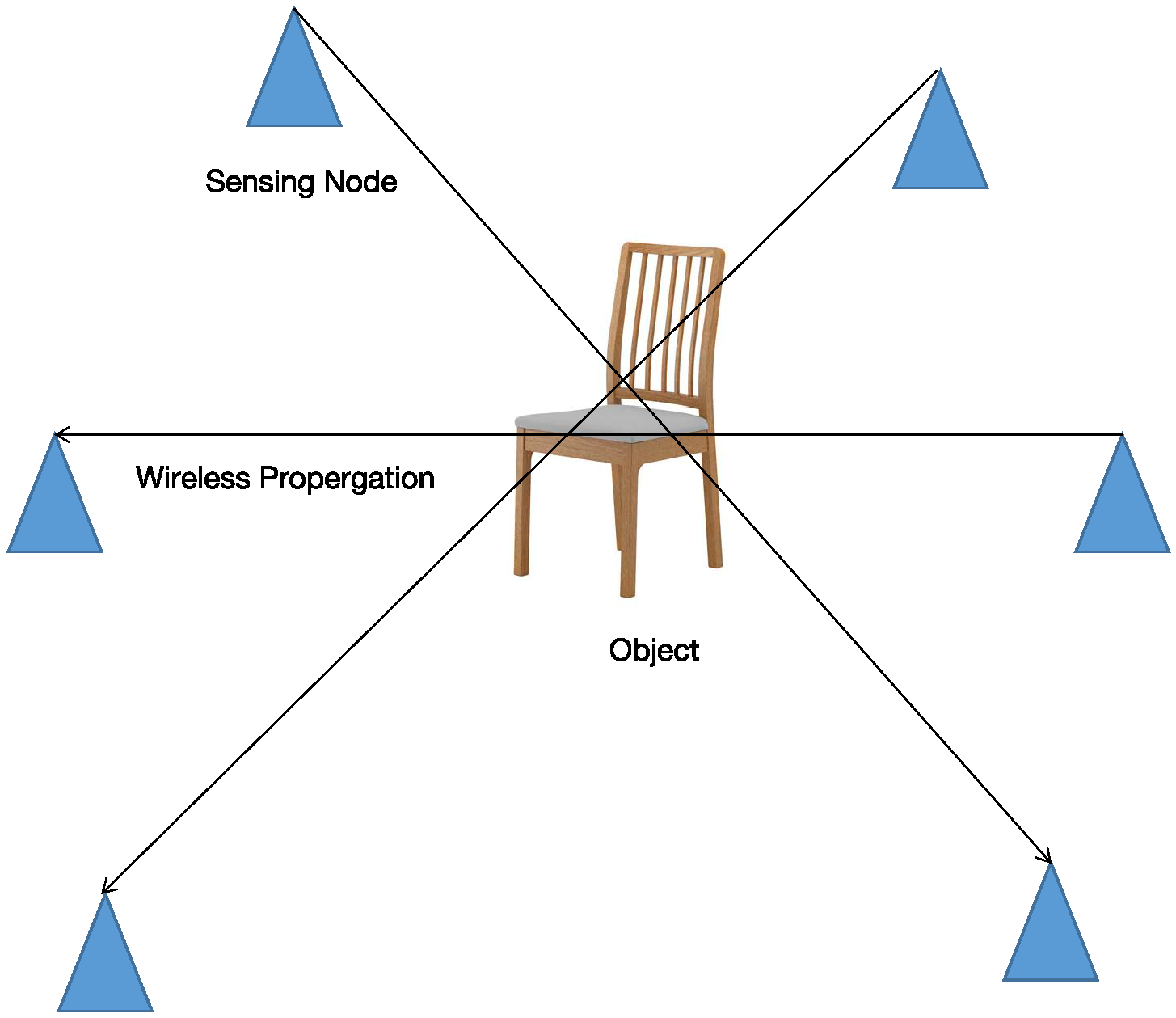

3. Description of the Tensor Sensing Problem

3.1. Alt-Min Algorithm

| Algorithm 1 Alt-Min algorithm of the tensor sensing. |

| Input: linear map matrix , measurement vector , iteration number L. |

| Output: squeezed : |

| Output: Pair of tensors (). |

3.2. Implementation of the Tensor Sensing on CPU

| Algorithm 2 Implementation of the tensor sensing on CPU. |

| Input: Randomly-initialized matrix , measurement vector , linear map matrix |

| Output: |

4. The Implementation and Optimization of Efficient GPU Tensor Sensing

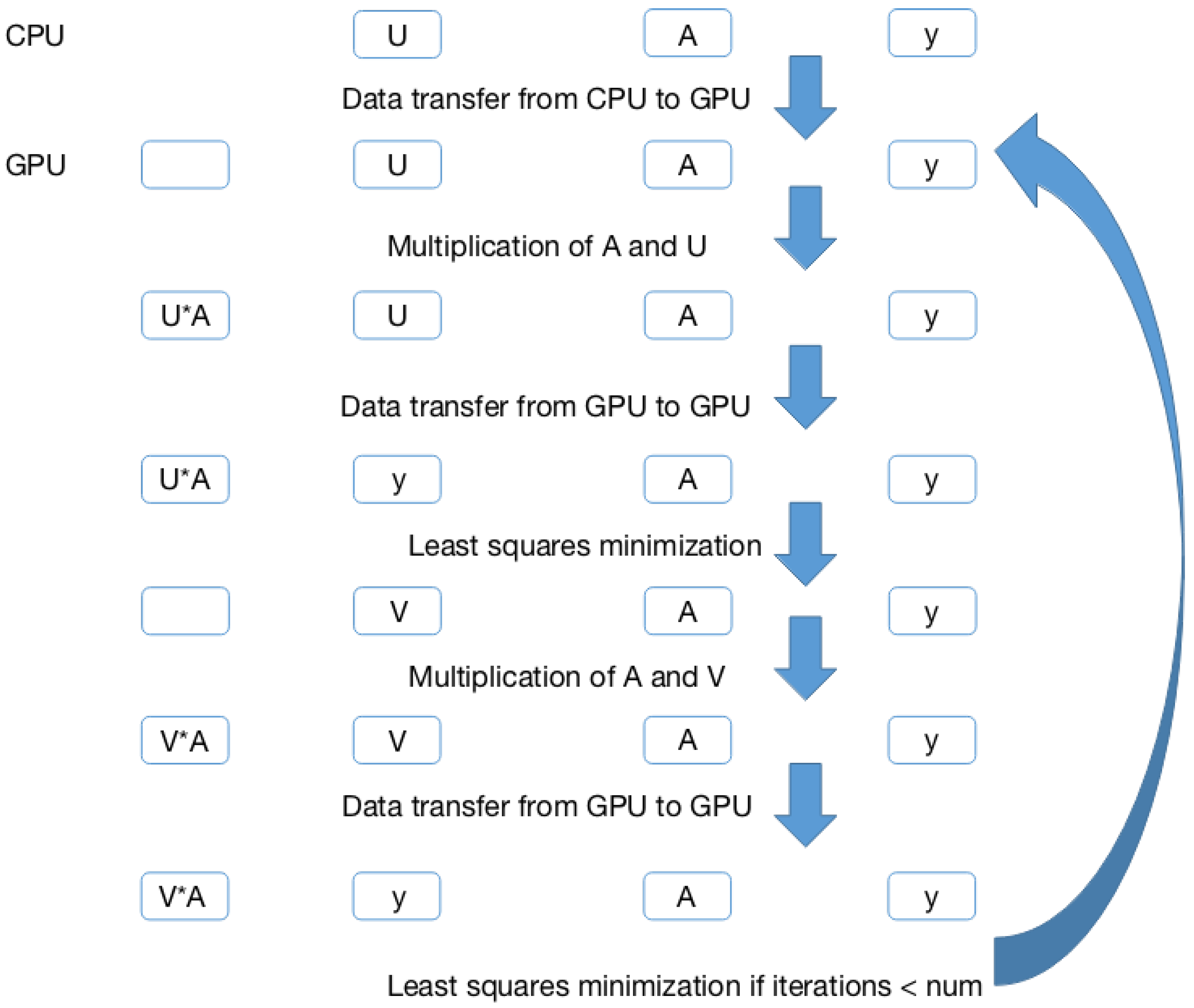

4.1. Design and Implementation of the GPU Tensor Sensing

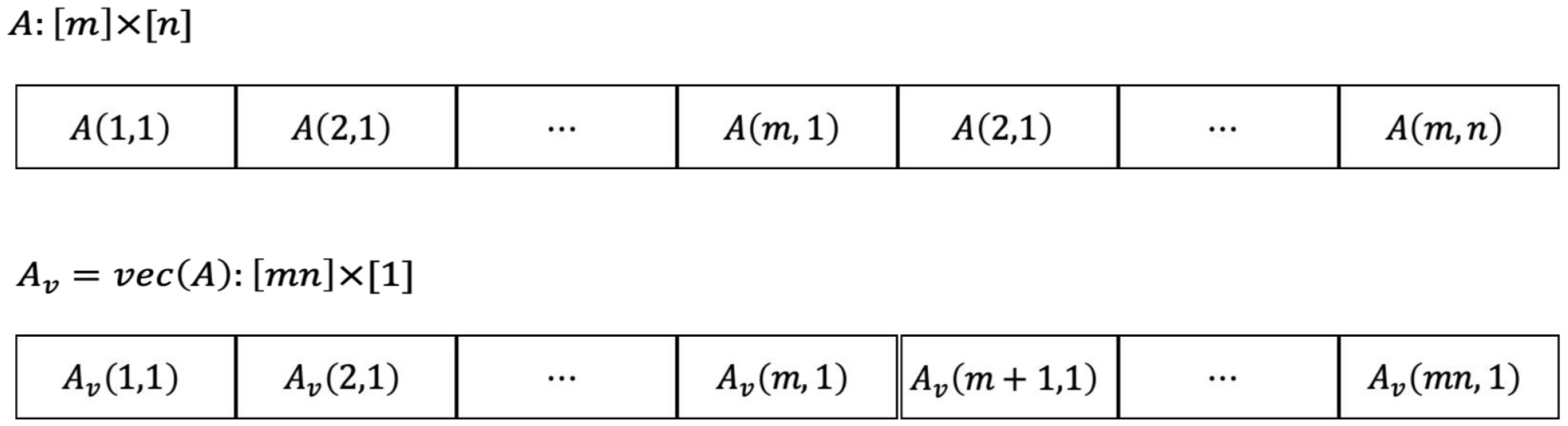

4.1.1. Data Structure

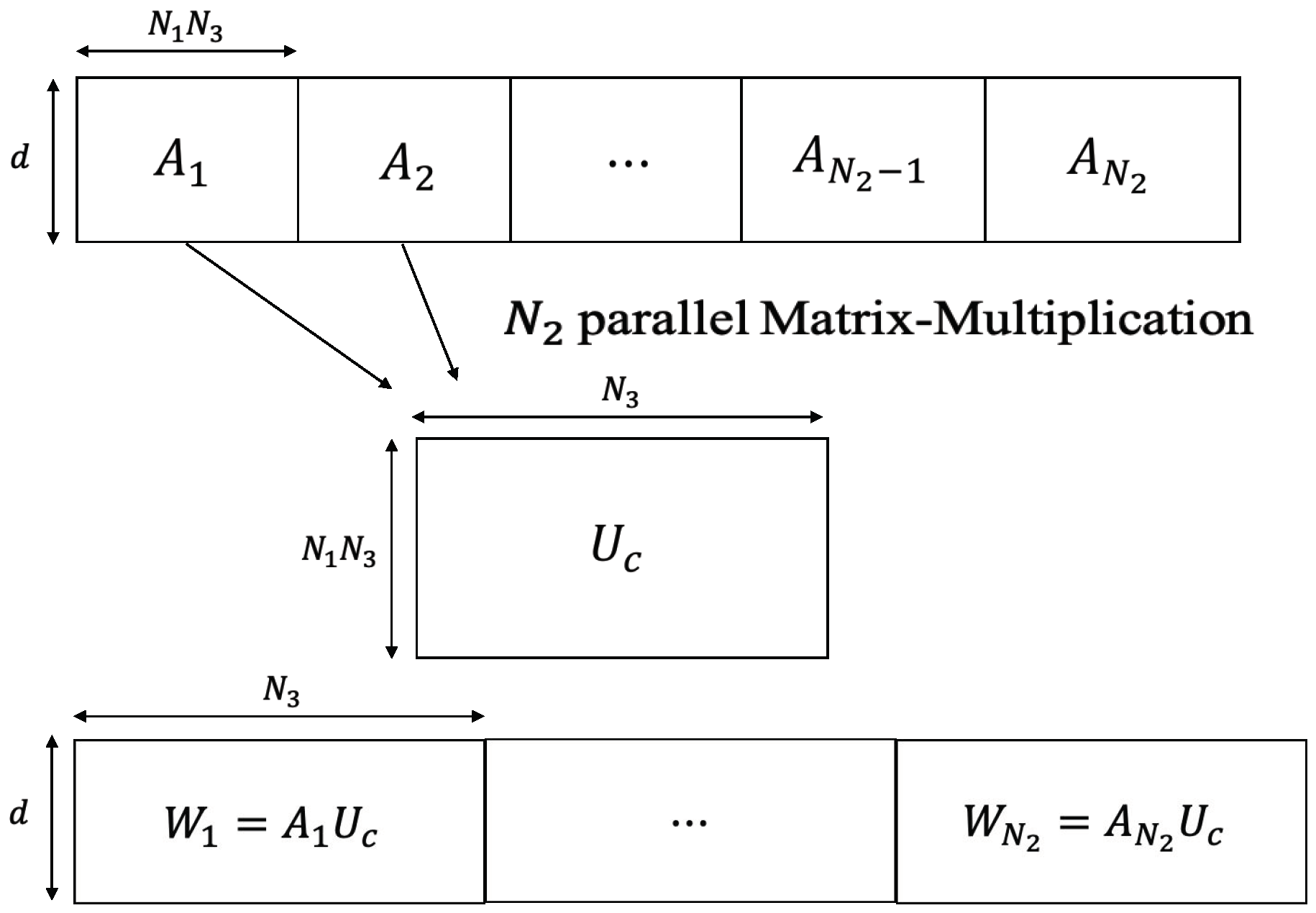

4.1.2. Multiplication of Block Diagonal Matrices

4.1.3. Eliminating Explicit Transpose Operations

4.1.4. Least Squares Minimization

4.2. Optimizations of the GPU Tensor Sensing

| Algorithm 3 Computation flow of the tensor sensing on the GPU. |

| Input: Data on CPU memory: randomly-initialized , measurement vector , matrix converted from M sensing tensors |

| Output: |

5. Experiment Methodology

5.1. Hardware and Software Platform

5.2. Testing Data

5.3. Testing Process

5.4. Comparison Metrics

- Running time: Varying the tensor size while fixing the other parameters, we measured the execution time of the CPU tensor sensing, the unoptimized GPU tensor sensing, and the optimized GPU tensor sensing. We then calculated each speedup as the running time of the CPU tensor sensing divided by the running time of the corresponding GPU tensor sensing.

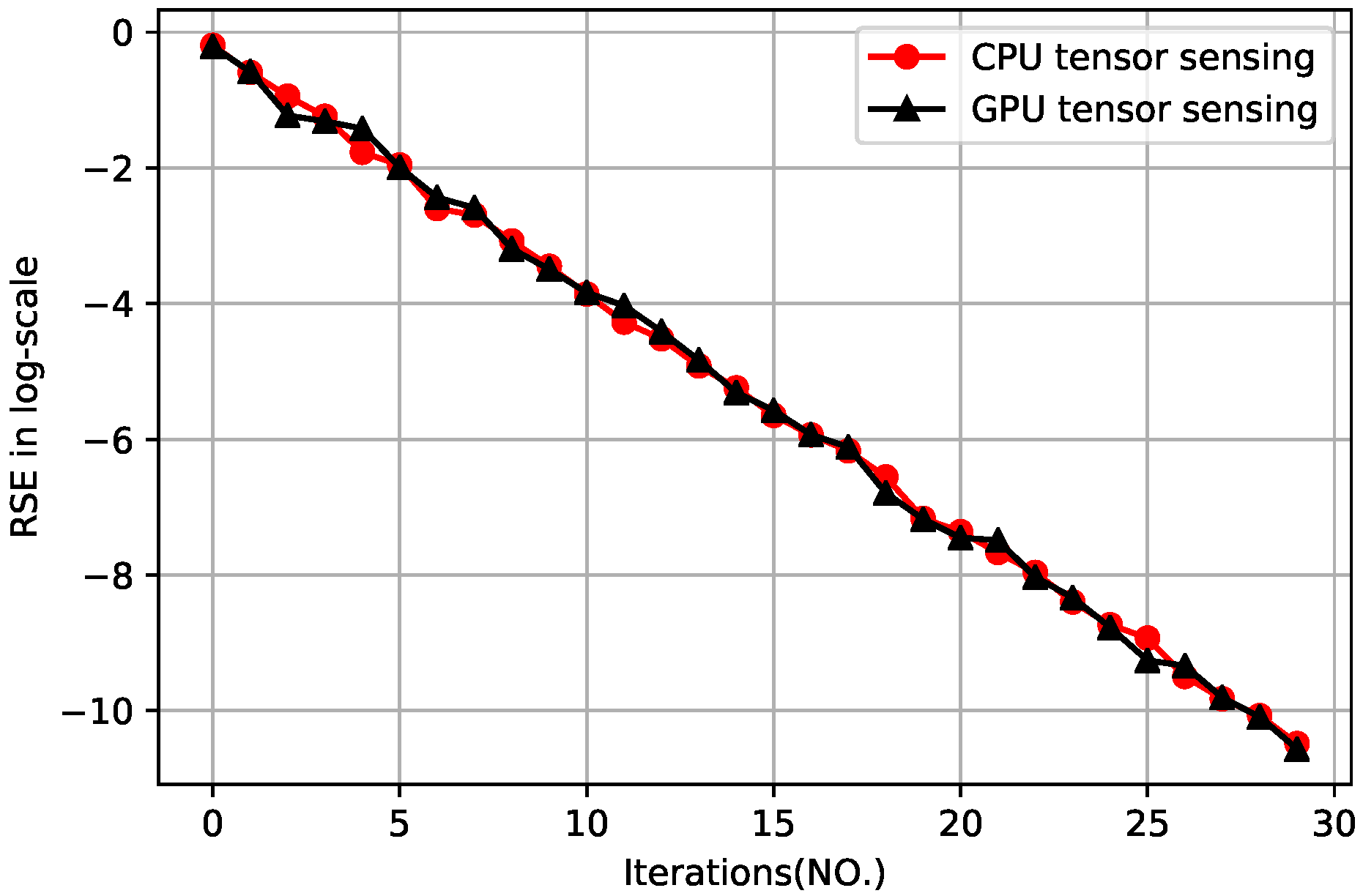

- Error rate: We adopted the relative square error (RSE) as the error metric.
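The two metrics above can be sketched in a few lines of Python. The speedup follows the definition given in the text. The relative square error is assumed here to take its common form, the Frobenius norm of the difference between the recovered and ground-truth tensors divided by the Frobenius norm of the ground truth; that formula, and the function names `speedup` and `relative_square_error`, are illustrative assumptions rather than the authors' code.

```python
import math


def speedup(cpu_seconds, gpu_seconds):
    """Speedup = CPU running time / GPU running time."""
    if gpu_seconds <= 0:
        raise ValueError("GPU running time must be positive")
    return cpu_seconds / gpu_seconds


def relative_square_error(recovered, truth):
    """Assumed definition: ||recovered - truth||_F / ||truth||_F.

    Both arguments are flat sequences holding the tensor entries in the
    same order, so the Frobenius norm is a plain Euclidean norm.
    """
    if len(recovered) != len(truth):
        raise ValueError("tensors must have the same number of entries")
    diff_norm = math.sqrt(sum((r - t) ** 2 for r, t in zip(recovered, truth)))
    truth_norm = math.sqrt(sum(t ** 2 for t in truth))
    if truth_norm == 0:
        raise ValueError("ground-truth tensor must be non-zero")
    return diff_norm / truth_norm


# Running times for n = 40 from the running-time table (seconds).
print(round(speedup(3.07, 0.44), 2))  # optimized GPU vs. CPU

# Toy two-entry "tensor": the second entry is off by 0.5.
print(relative_square_error([3.0, 4.5], [3.0, 4.0]))
```

A lower RSE means a more accurate recovery, and a speedup below 1 means the GPU version was slower than the CPU version for that tensor size.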

6. Results and Analysis

6.1. Running Time of Synthetic Data

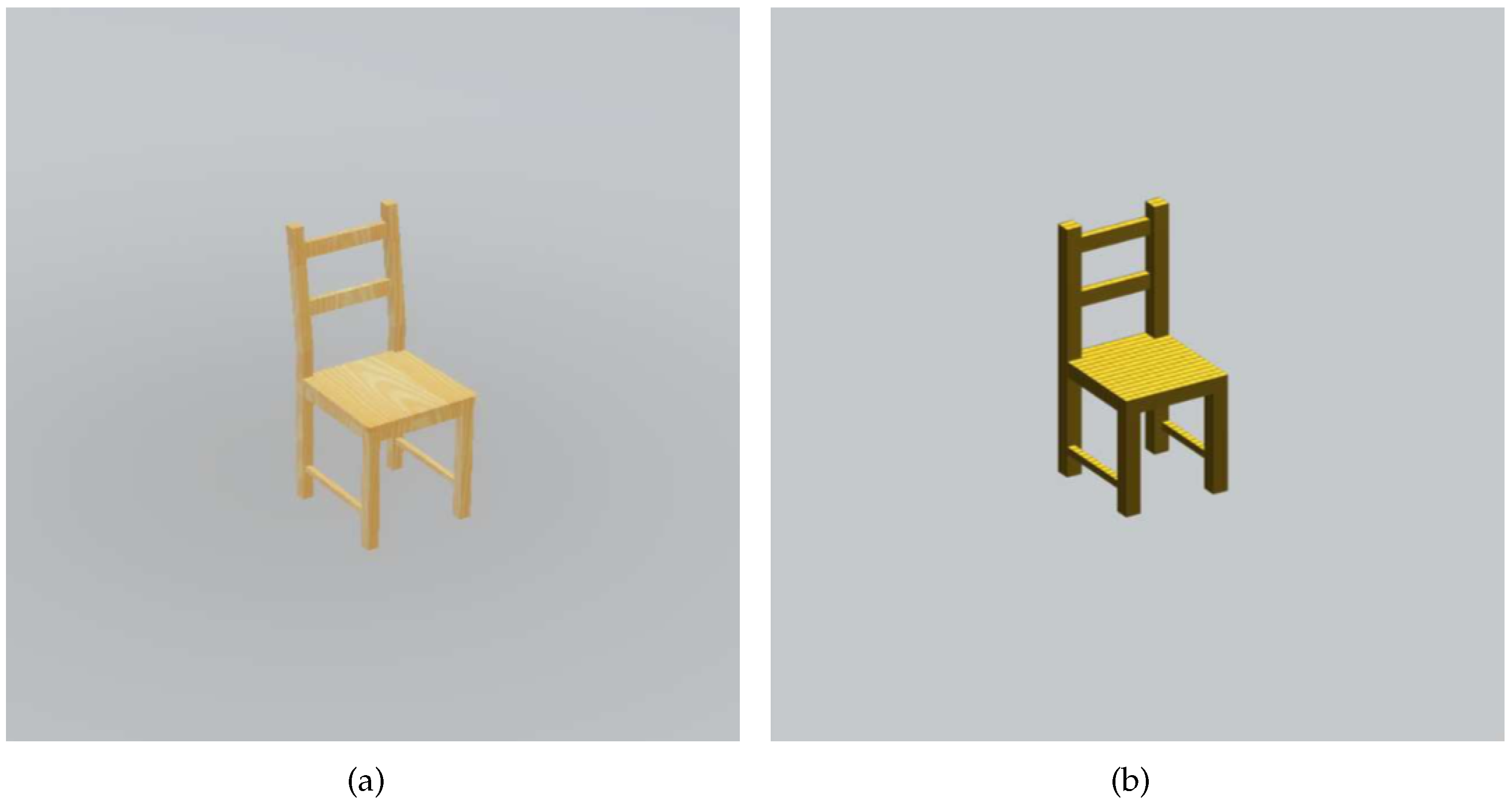

6.2. Error Rate and Running Time of IKEA Model Data

7. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Parameter | Meaning | Value |
|---|---|---|
| transA | operation op($A$): non-transpose or transpose | non-transpose |
| transU | operation op($U$): non-transpose or transpose | transpose |
| $A$ | pointer to the matrix $A$ corresponding to the first instance of the batch | |
| $U$ | pointer to the matrix $U$ | |
| $W$ | pointer to the matrix $W$ | |
| strideA | the address offset between $A_i$ and $A_{i+1}$ | |
| strideU | the address offset between $U_i$ and $U_{i+1}$ | 0 |
| strideW | the address offset between $W_i$ and $W_{i+1}$ | |
| batchNum | number of matrix multiplications to perform in the batch | |
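The parameters in this table resemble those of a strided batched matrix multiplication such as cuBLAS `cublas<t>gemmStridedBatched`. Assuming that reading, the following pure-Python reference loop (not GPU code, and not the authors' implementation) illustrates what the stride and transpose settings mean: a stride of 0 for $U$ makes every batch instance reuse the same matrix, and a transpose flag on $U$ reads it transposed in place, without a separate transpose step. The function name `gemm_strided_batched`, the leading-dimension arguments, and the toy data are hypothetical; scaling factors are fixed to 1 and 0 for brevity.

```python
def gemm_strided_batched(trans_a, trans_u, m, n, k,
                         A, lda, stride_a,
                         U, ldu, stride_u,
                         W, ldw, stride_w,
                         batch_num):
    """Compute W_i = op(A_i) * op(U_i) for i in range(batch_num).

    All matrices live in flat, column-major buffers. Instance i of a
    matrix starts at offset i * stride in its buffer, so stride 0 means
    every instance aliases the same matrix.
    """
    def element(buf, base, ld, row, col, trans):
        # Entry (row, col) of op(X); a transposed read just swaps indices.
        if trans:
            row, col = col, row
        return buf[base + row + col * ld]

    for b in range(batch_num):
        a0, u0, w0 = b * stride_a, b * stride_u, b * stride_w
        for j in range(n):
            for i in range(m):
                acc = 0.0
                for p in range(k):
                    acc += (element(A, a0, lda, i, p, trans_a)
                            * element(U, u0, ldu, p, j, trans_u))
                W[w0 + i + j * ldw] = acc


# Two 2x2 instances of A stored back to back (column-major), so strideA = 4.
A = [1, 3, 2, 4,   # A_0 = [[1, 2], [3, 4]]
     0, 1, 1, 0]   # A_1 = [[0, 1], [1, 0]]
# One shared 2x2 matrix U = [[1, 0], [2, 1]], reused via strideU = 0.
U = [1, 2, 0, 1]
W = [0.0] * 8      # room for W_0 and W_1, strideW = 4

gemm_strided_batched(False, True, 2, 2, 2,
                     A, 2, 4,
                     U, 2, 0,
                     W, 2, 4,
                     batch_num=2)
print(W)  # W_0 = A_0 * U^T and W_1 = A_1 * U^T, column-major
```

On a GPU the outer loop over the batch would run in parallel inside a single library call, which is the main attraction of the batched form over launching one multiplication per instance.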

| n | 40 | 60 | 80 | 100 | 120 |
|---|---|---|---|---|---|
| CPU tensor sensing time (s) | 3.07 | 13.65 | 50.43 | 118.84 | 251.54 |
| Unoptimized GPU tensor sensing time (s) | 9.50 | 11.40 | 12.15 | 20.70 | 25.74 |
| Optimized GPU tensor sensing time (s) | 0.44 | 0.63 | 0.98 | 2.01 | 2.97 |
| Speedup, unoptimized GPU over CPU | 0.32 | 1.20 | 4.15 | 5.74 | 9.77 |
| Speedup, optimized GPU over CPU | 6.98 | 21.67 | 51.46 | 59.12 | 84.70 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Xu, D.; Zhang, T. Efficient Tensor Sensing for RF Tomographic Imaging on GPUs. Future Internet 2019, 11, 46. https://doi.org/10.3390/fi11020046
