1. Introduction

Forests are a critical natural resource, providing abundant forestry products and maintaining ecological balance, climate regulation, air purification, and soil conservation. Among forest disturbances, wildfires are among the most devastating threats.

China has widely distributed forest resources, with wildfire risks across all provinces and seasons, showing distinct seasonal variations [1]. Spring is the peak wildfire season, mainly due to agricultural activities (e.g., slash-and-burn farming). Autumn is the second-highest-risk period, primarily due to drought and ignition sources. Winter wildfires mostly result from human activities (e.g., ritual burning), while summer wildfires are mainly triggered by lightning.

Forest fires spread rapidly and are highly destructive, making timely identification of potential fire zones critical for prevention. Various detection systems have been proposed. Kumar, S.K. et al. [2] developed an intelligent forest fire detection system using LoRaWAN sensors and machine/deep learning for early warning in remote areas. Gobinda Prasad Acharya et al. [3] proposed a Forest Protection System (FPS) integrating IoT and LoRa communication to detect intrusions and fires. Nahar, A. et al. [4] introduced an IoT system combining Smart EcoHydrant Stations (SEHS), UAVs, and blockchain for wildfire monitoring and deforestation control.

The effectiveness of a monitoring system depends largely on its early fire detection algorithm. Remote sensing and machine vision are increasingly used for forest fire monitoring. Ghaderpour, E. et al. [5], Jang, E. et al. [6], and Marsha, A. L. et al. [7] used remote sensing for near-real-time surveillance, but these methods incur significant infrastructure and maintenance costs.

Recently, drone-based monitoring with machine vision has emerged as a promising approach [8]. The accurate identification of potential fire zones in forest imagery is essential. Early forest fire image recognition relied on manually extracted color features. Pathare et al. [9] analyzed the R-component intensity of flame pixels in the RGB space, while C. Hossain, F. M. A. et al. [10] used the YUV space for flame detection. Yuan, C. et al. [11] developed a color-based fire detection method for drone patrols.

The success of deep learning in image processing has spurred research into early forest fire detection [12]. Current approaches face three challenges.

First, models lack generalization. Forest environments vary in vegetation, terrain, and weather, requiring adaptable models. Marques, E.Q. et al. [13] studied vegetation effects, and Mambile, C. et al. [14] explored weather impacts, but generalization across regions and seasons remains understudied [15]. Huang, J. et al. [16] showed that detection performance degrades significantly under low-light dawn/dusk scenarios and dense fog. Environmental sensitivity, caused by seasonal color shifts, varying humidity, and ambient temperature, remains one of the most cited limitations in current deep-learning fire detection systems [17].

Second, the subtle features of early-stage forest fires and partial occlusion often lead to false negatives or misclassifications. To address this, Lee, Y. et al. [18] improved accuracy by integrating global and local features in Faster R-CNN. Xu, R. et al. [19] combined YOLOv5 and EfficientDet with a classifier for verification. Recent advancements have further improved forest fire detection accuracy through optimizations to the YOLO algorithm, including backbone network enhancements [20,21], attention mechanism integration [20,22], Neck structure modifications [21,22], and loss function refinements [22,23]. Wang, F. [24] enhanced small-target detection with attention mechanisms. Zhu et al. [25] replaced full convolution with partial convolution in YOLOv8’s C2f module, reducing complexity. Wang, H. et al. [26] proposed DSS-YOLO to address missed detections and real-time issues for small flames and smoke.

Third, near-real-time monitoring requires faster inference. Model lightweighting and hardware acceleration improve efficiency. Honglin Wang et al. [27] used depthwise separable convolutions to simplify YOLOv5. Lin et al. [28] introduced a lightweight self-attention detection head (SADH) for YOLOv8. Jin, L. et al. [29] applied RepViT and SimAM for lightweighting. Briley, A. A. et al. [30] achieved faster inference via hardware acceleration.

A key challenge is the lack of public, high-quality datasets for early fire detection [31]. Forest fires’ unpredictability and regional heterogeneity hinder data collection. Currently, researchers predominantly rely on data augmentation techniques to expand and diversify forest fire image datasets, facilitating model training and optimization [32]. To address the lack of real forest fire data, Soliman [33] proposed collecting data by simulating small-scale forest fires to approximate large-scale wildfire scenarios.

To address the low accuracy of existing models in identifying suspicious regions of forest fire, which stems from limited dataset size, dispersed sample features, and small target sizes, this paper builds on prior research and makes the following contributions:

- (1) Designed the suspicious regions of forest fire dataset (SRFFD), incorporating diverse early-fire images across seasons, times of day, and weather conditions.

- (2) Introduced an embedding layer that integrates seasonal (S) and temporal (T) information as additional input channels, improving adaptability to environmental variations.

- (3) Replaced the standard convolutions in YOLOv8’s C2f module with dilated convolutions, enlarging the receptive field without increasing the number of parameters.

- (4) Incorporated the convolutional block attention module (CBAM) into the Neck to strengthen the focus on small targets and critical regions.

- (5) Modified feature fusion by linking the Backbone outputs (P2, P4, and SPPF) to the Neck, improving multi-scale detection.

These innovations collectively address challenges in small-target detection, generalization, and real-time processing, advancing forest fire monitoring systems.

2. Materials and Methods

2.1. Dataset Construction

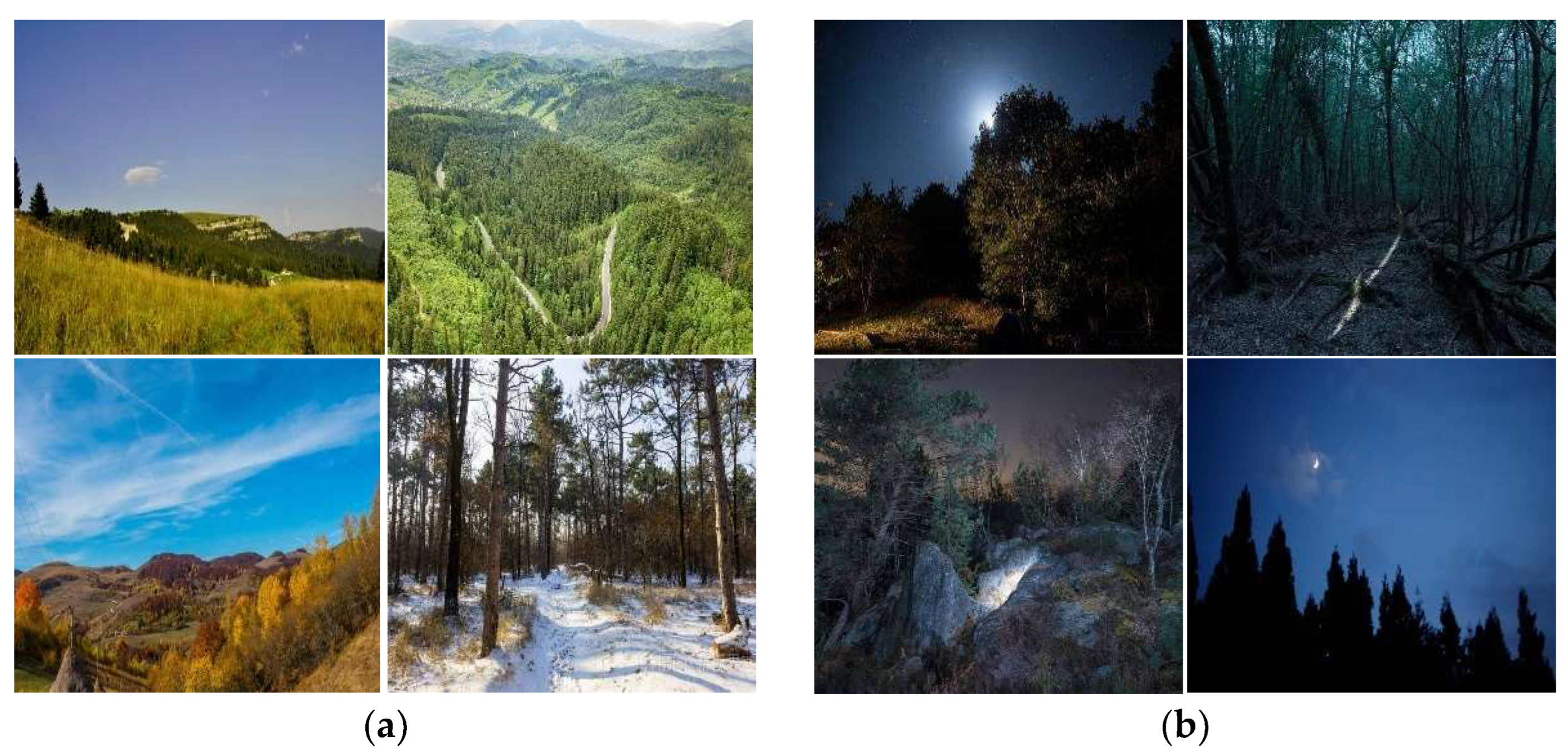

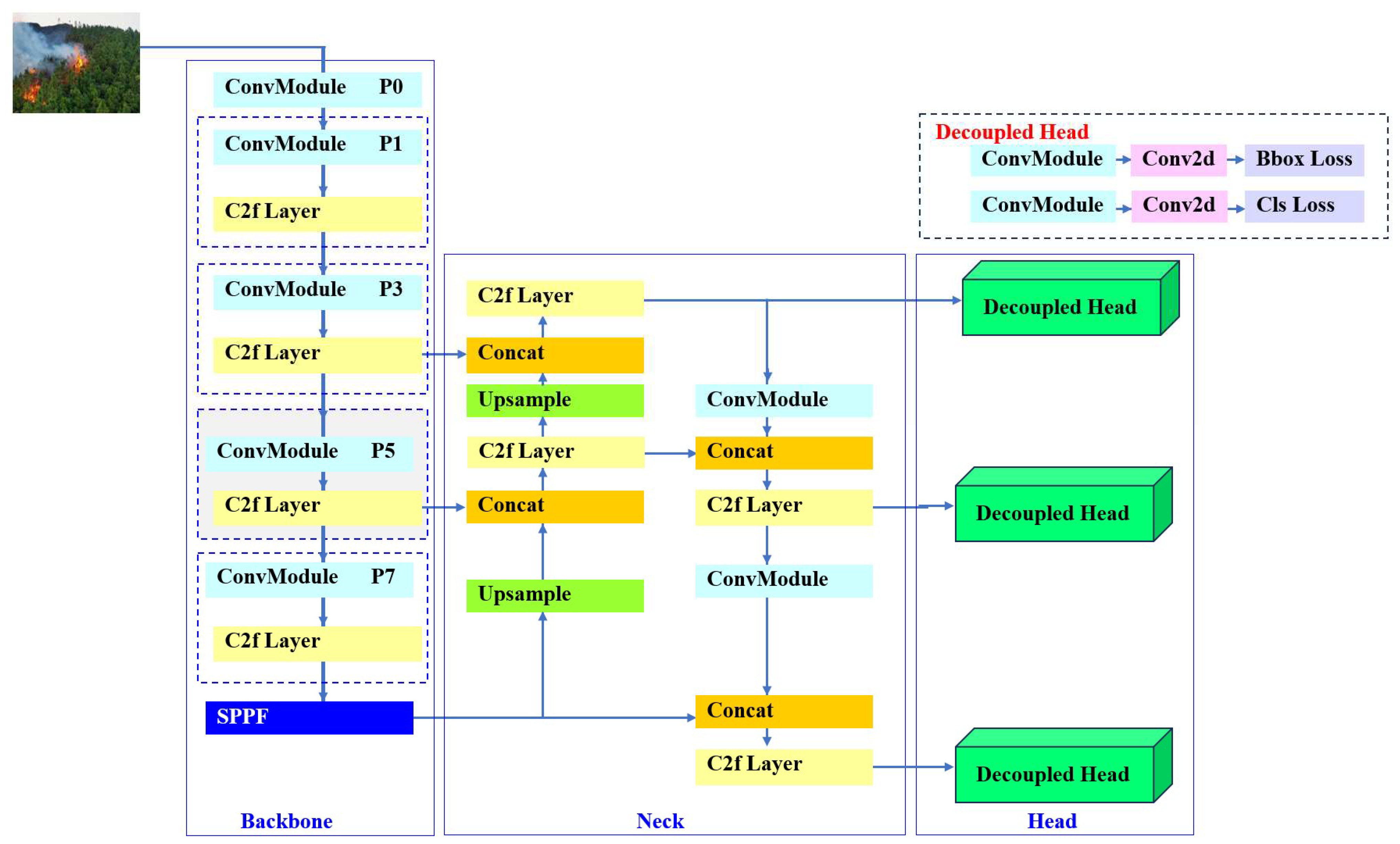

To ensure the diversity and representativeness of the data, we built an initial dataset of wildfire images from early forest fire videos and images sourced from the internet, together with the Corsican Fire Database [34], the Dataset for Fire and Smoke detection (DFS) [35], the FLAME dataset from Northern Arizona University [36], and the VISFIRE dataset of Bilkent University. The initial dataset covers forest environments in several typical regions, including Mediterranean, North American, and Asian forests. During image selection, fire scenarios under seasonal variations (spring, summer, autumn, and winter) and diurnal differences (day/night) were considered, along with various weather conditions such as clear, cloudy, overcast, and light rain, to reflect the complex meteorological environments encountered in real-world fire detection. The selected images include the characteristic features of early-stage fires (e.g., small flames), and some also capture mid-to-late-stage fire conditions (e.g., large-scale burning). These fire images serve as positive samples, while non-fire images with similar scene characteristics, collected from the internet, serve as negative samples.

The initial dataset contains 2392 images, comprising early forest fire images and their corresponding negative samples. All images were resized to 480 × 480 pixels. Positive samples were labeled “Yes” and negative samples “No”. For positive samples, LabelImg (https://github.com/HumanSignal/labelImg (accessed on 8 January 2025)) was used to annotate the suspected fire areas.

Subsequently, we applied three image augmentation techniques to the initial dataset to obtain the SRFFD: affine transformation, HSV random enhancement, and copy-paste augmentation. Together they generate diverse training images by altering image geometry (e.g., angle) and color and by pasting fire regions into other scenes. The SRFFD comprises 64,584 samples, including 36,180 positive samples and 28,404 negative samples. The distribution of samples in the SRFFD is shown in Table 1.

Representative images of positive and negative samples in the SRFFD are displayed in Figure 1 and Figure 2.
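HSV random enhancement is only named above. As an illustration, here is a minimal sketch of one common form of it, assuming pixels are RGB triples in [0, 1] and that hue, saturation, and value are each scaled by a single random gain per image. The function name `hsv_jitter` and its gain ranges are hypothetical and are not the settings used to build the SRFFD; the sketch relies only on Python's standard `colorsys` module.

```python
import colorsys
import random


def hsv_jitter(pixels, h_gain=0.015, s_gain=0.7, v_gain=0.4, rng=None):
    """Randomly rescale the hue, saturation and value of RGB pixels.

    `pixels` is a list of (r, g, b) tuples with components in [0, 1].
    The gain ranges are hypothetical defaults, not the SRFFD settings.
    One random gain per channel is drawn for the whole image.
    """
    rng = rng or random.Random()
    gh = 1 + rng.uniform(-h_gain, h_gain)
    gs = 1 + rng.uniform(-s_gain, s_gain)
    gv = 1 + rng.uniform(-v_gain, v_gain)
    out = []
    for r, g, b in pixels:
        h, s, v = colorsys.rgb_to_hsv(r, g, b)
        h = (h * gh) % 1.0              # hue wraps around the color wheel
        s = min(max(s * gs, 0.0), 1.0)  # clip saturation to [0, 1]
        v = min(max(v * gv, 0.0), 1.0)  # clip brightness to [0, 1]
        out.append(colorsys.hsv_to_rgb(h, s, v))
    return out


# Usage: two flame-coloured pixels, seeded for reproducibility.
flame = [(0.9, 0.4, 0.1), (1.0, 0.6, 0.2)]
augmented = hsv_jitter(flame, rng=random.Random(0))
```

Drawing one gain per image, rather than per pixel, shifts the whole scene's color balance consistently, which mimics changes in illumination or camera response rather than adding pixel noise.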

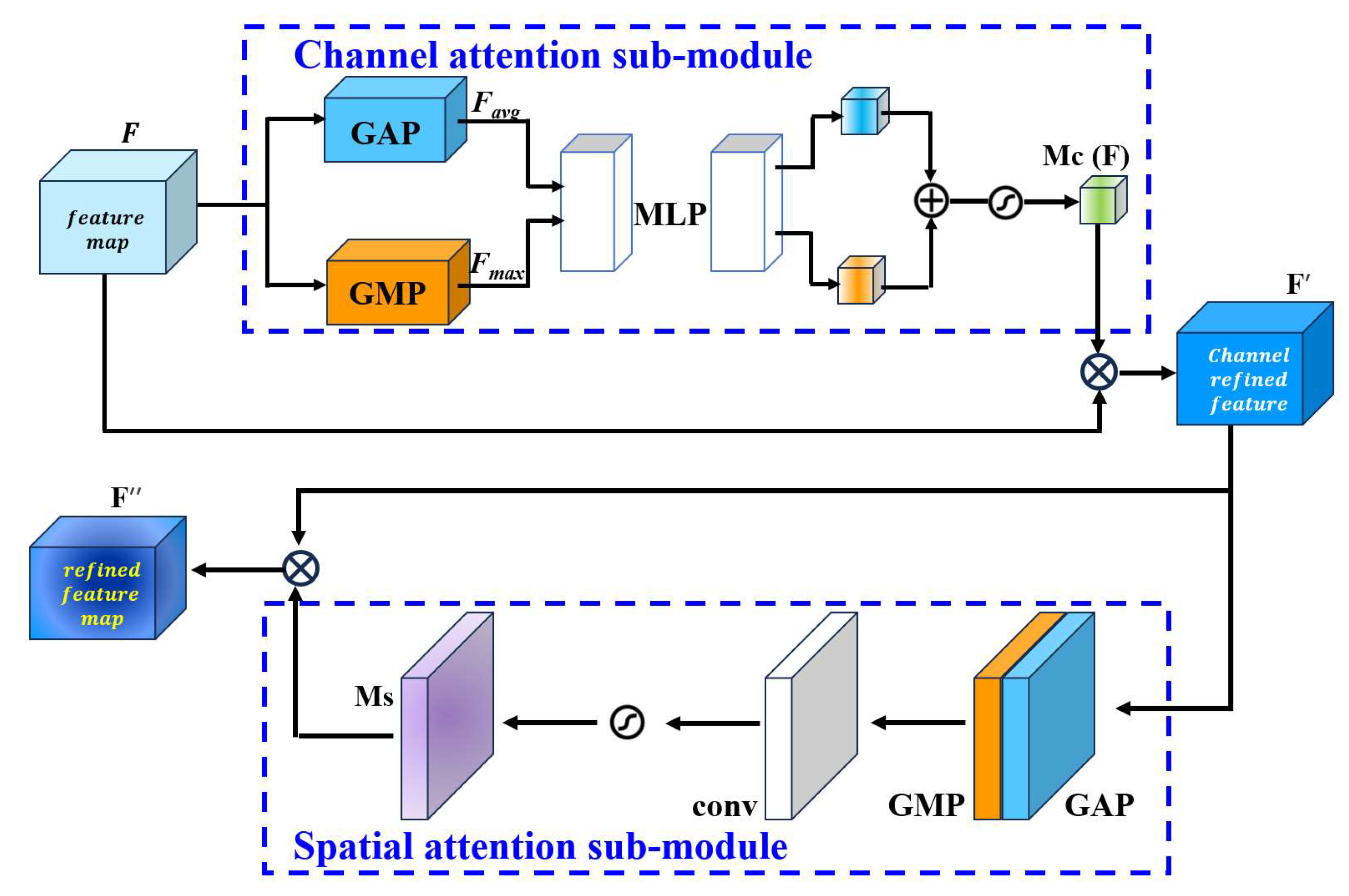

2.2. YOLOv8

You Only Look Once (YOLO) is a family of real-time object detection algorithms known for its efficiency and accuracy. Its primary strength is speed, which enables real-time detection while maintaining high precision. YOLOv8 was developed and released by the Ultralytics team in 2023 (https://docs.ultralytics.com/zh/models/yolov8 (accessed on 2 December 2024)). The architecture of YOLOv8 consists primarily of a Backbone, Neck, and Head, as illustrated in Figure 3.

It can be observed that the C2fLayer, ConvModule, and Spatial Pyramid Pooling Fast (SPPF) are the main modules in the YOLOv8. The C2fLayer in the YOLOv8 is an enhanced feature extraction module that integrates the Cross-Stage Partial (CSP) structure and multi-branch convolution to improve the model’s feature extraction capability and computational efficiency. The SPPF module in the YOLOv8 is an improved spatial pyramid pooling structure designed to extract multi-scale features for object detection tasks. Compared to the traditional SPP module, the SPPF reduces computational overhead by sequentially stacking pooling layers (e.g., repeatedly applying 5 × 5 max pooling) while retaining the effectiveness of multi-scale feature extraction. This design significantly enhances computational efficiency.
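The claim that sequential 5 × 5 pooling retains the effectiveness of multi-scale extraction can be checked directly: two stacked stride-1 5 × 5 max-pooling operations equal a single 9 × 9 pooling, and three equal a 13 × 13 pooling, which are the kernel sizes of the classic SPP block. The pure-Python sketch below (single channel only; function names are illustrative) verifies this on a toy map. The real SPPF module also applies 1 × 1 convolutions and concatenates the branches along the channel axis, which is omitted here.

```python
NEG_INF = float("-inf")


def max_pool2d_same(x, k):
    """Stride-1 k x k max pooling with -inf padding (output size = input size)."""
    h, w, p = len(x), len(x[0]), k // 2
    out = [[NEG_INF] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            for di in range(-p, p + 1):
                for dj in range(-p, p + 1):
                    ii, jj = i + di, j + dj
                    if 0 <= ii < h and 0 <= jj < w:  # out-of-range acts as -inf
                        out[i][j] = max(out[i][j], x[ii][jj])
    return out


def sppf_branches(x, k=5):
    """Apply the same k x k pooling three times in sequence, SPPF-style.

    Returns [x, y1, y2, y3]; the real module concatenates these along the
    channel axis and wraps them with 1 x 1 convolutions (omitted here).
    """
    y1 = max_pool2d_same(x, k)
    y2 = max_pool2d_same(y1, k)
    y3 = max_pool2d_same(y2, k)
    return [x, y1, y2, y3]


# Usage on a small deterministic single-channel map.
grid = [[(i * 7 + j * 3) % 11 for j in range(8)] for i in range(8)]
branches = sppf_branches(grid)
```

Because each 5 × 5 pass only reads 25 neighbours, the three sequential passes cost far less than computing 5 × 5, 9 × 9, and 13 × 13 windows independently, while producing the same pooled maps.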

YOLOv8 retains the efficiency characteristic of the YOLO series while achieving higher detection accuracy and speed through structural optimizations and technical enhancements. Notably, YOLOv8 exhibits distinct advantages in lightweight design and small object detection [37,38,39].

2.3. Dilated Convolution

Dilated convolution is a convolutional operation that expands the receptive field by introducing a dilation rate into the standard convolution kernel [40].

The core idea is to enlarge the convolution kernel’s receptive field without additional parameters, thereby capturing broader contextual information. This is achieved by inserting “holes” (zeros) between the kernel weights of a standard convolution. The dilation rate r is the key parameter of dilated convolution: it determines the spacing between the kernel weights and thus the degree of kernel expansion. When r = 1, dilated convolution is equivalent to standard convolution. When r > 1, (r − 1) zeros are inserted between adjacent kernel weights, effectively expanding the receptive field. For an input feature map I and kernel K, the output O of the dilated convolution can be expressed by Equation (1) [40]:

$$O(m,n) = \sum_{i}\sum_{j} I(m + r \cdot i,\ n + r \cdot j)\, K(i,j) \qquad (1)$$

where (m,n) indicates a position on the output feature map, (i,j) are the kernel indices, and r specifies the dilation rate.
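Equation (1) can be made concrete with a direct, unoptimized implementation. The sketch below assumes "valid" (unpadded) convolution and kernel indices starting at 0; like most deep-learning libraries, it does not flip the kernel, so it is strictly a cross-correlation. The function name is illustrative.

```python
def dilated_conv2d(image, kernel, r=1):
    """Unpadded ("valid") 2-D dilated convolution following Eq. (1).

    O(m, n) = sum_i sum_j I(m + r*i, n + r*j) * K(i, j), with kernel indices
    starting at 0. As in deep-learning libraries, the kernel is not flipped.
    """
    kh, kw = len(kernel), len(kernel[0])
    # A k-tap kernel with dilation r spans r*(k - 1) + 1 input pixels per axis.
    out_h = len(image) - (r * (kh - 1) + 1) + 1
    out_w = len(image[0]) - (r * (kw - 1) + 1) + 1
    out = [[0.0] * out_w for _ in range(out_h)]
    for m in range(out_h):
        for n in range(out_w):
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    acc += image[m + r * i][n + r * j] * kernel[i][j]
            out[m][n] = acc
    return out


# Usage: a single bright pixel in a 7 x 7 map, probed by a 3 x 3 kernel of ones.
impulse = [[0.0] * 7 for _ in range(7)]
impulse[3][3] = 1.0
ones = [[1.0] * 3 for _ in range(3)]
outputs = {r: dilated_conv2d(impulse, ones, r) for r in (1, 2, 3)}
```

With r = 3, the same nine weights already reach across the whole 7 × 7 map, so the output shrinks to a single value that still "sees" the bright pixel; the number of weights never changes.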

The receptive field (RF) of a dilated convolution is given by Equation (2) [40]:

$$RF = k + (k - 1)(r - 1) \qquad (2)$$

where k is the kernel size and r denotes the dilation rate.

For a 3 × 3 kernel, when r = 1, the kernel covers a 3 × 3 region, giving a 3 × 3 receptive field. When r = 2, the kernel spans a 5 × 5 region while sampling only 9 pixels, giving a 5 × 5 receptive field. At r = 3, the kernel spans a 7 × 7 region with a 7 × 7 receptive field. Standard convolution samples the input feature map densely, whereas dilated convolution samples it sparsely through the inserted holes, thereby covering a larger area. This enlarges the receptive field without increasing the number of parameters, which facilitates the capture of broader contextual information. Furthermore, with appropriate padding, dilated convolution preserves the feature map resolution, making it well suited to tasks requiring high-resolution outputs. At a computational cost comparable to that of standard convolution, dilated convolution thus provides a significantly larger receptive field.
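Equation (2) and the resolution claim can both be checked with two one-line helpers. The output-size formula is the standard one given, for example, in the PyTorch `Conv2d` documentation; choosing padding p = r to keep the size unchanged for a stride-1 3 × 3 kernel is our assumption, since the padding used in the model is not stated.

```python
def receptive_field(k, r):
    """Eq. (2): side length covered by a k x k kernel with dilation rate r."""
    return k + (k - 1) * (r - 1)


def conv_output_size(size, k, r=1, padding=0, stride=1):
    """Output length along one axis for a (dilated) convolution."""
    return (size + 2 * padding - r * (k - 1) - 1) // stride + 1


# Receptive fields quoted in the text for a 3 x 3 kernel.
fields = [receptive_field(3, r) for r in (1, 2, 3)]
# With padding p = r, a stride-1 3 x 3 dilated convolution keeps a 480-pixel map at 480.
same_size = conv_output_size(480, 3, r=2, padding=2)
```

Without that padding, the same layer would trim r(k − 1) = 4 pixels from each 480-pixel axis.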

Dilated convolution expands the receptive field without increasing the number of parameters by introducing a dilation rate, while maintaining resolution and computational efficiency.

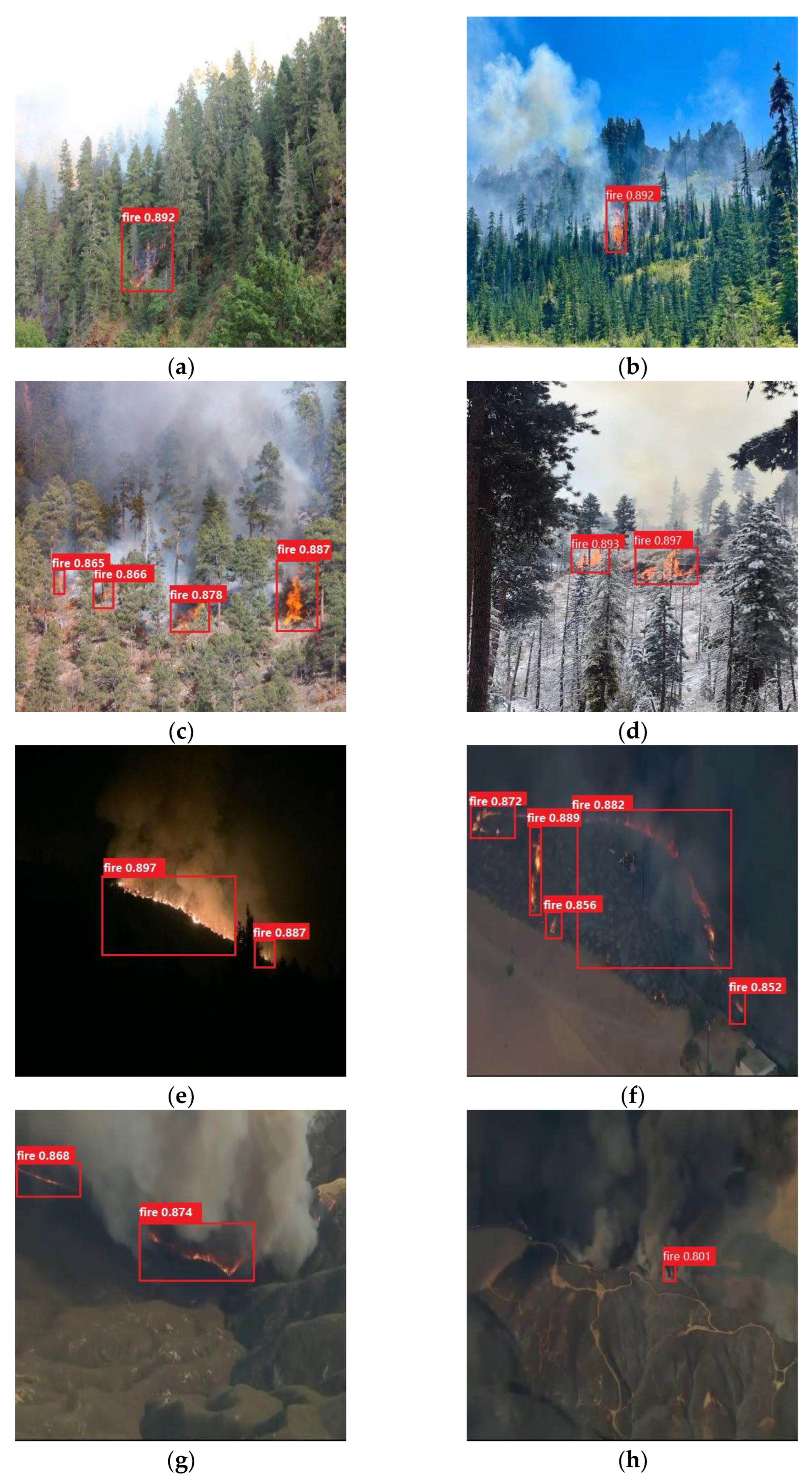

2.4. Convolutional Block Attention Module (CBAM)

The CBAM [41] is a simple yet effective attention module for feedforward convolutional neural networks. By incorporating an attention mechanism, the CBAM enhances the model’s representation capability, which can help the model focus on regions containing small targets. The CBAM comprises two sequentially connected sub-modules: a channel attention sub-module and a spatial attention sub-module. Figure 4 illustrates the structure of the CBAM.

The input of the CBAM is a feature map F with dimensions C × H × W, where C denotes the number of channels, H the height of the feature map, and W its width.

In the channel attention sub-module, Global Average Pooling (GAP) and Global Max Pooling (GMP) are first applied to the input feature map to generate two 1D vectors. For each channel, the average value across all spatial positions is computed, yielding a vector Favg with dimensions C × 1 × 1, as expressed in Equation (3) [41]:

$$F_{avg}(c) = \frac{1}{H \times W}\sum_{i=1}^{H}\sum_{j=1}^{W} F(c,i,j) \qquad (3)$$

Here, c represents the channel index, while i and j denote spatial positions.

For each channel, the maximum value across all spatial positions is computed, resulting in a vector Fmax with dimensions C × 1 × 1, as expressed in Equation (4) [41]:

$$F_{max}(c) = \max_{1 \le i \le H,\ 1 \le j \le W} F(c,i,j) \qquad (4)$$

The pooling operations capture the global average and the most salient features of each channel, respectively. The resulting vectors, Favg and Fmax, are then processed by a shared two-layer MultiLayer Perceptron (MLP) to generate channel attention weights. The MLP outputs are combined through element-wise addition, followed by a sigmoid activation function to produce the channel attention weights Mc(F) with dimensions C × 1 × 1. Finally, the channel refined feature map F′ is obtained by multiplying the input feature map F with the attention weights Mc(F) in a channel-wise manner.
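The channel attention steps described above (GAP, GMP, shared MLP, element-wise addition, sigmoid, and channel-wise rescaling) can be traced in the pure-Python sketch below. It assumes a bias-free MLP with a ReLU between its two layers and an arbitrary reduction ratio of 2; the function names and random weights are illustrative only, not trained CBAM parameters.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def shared_mlp(v, w1, w2):
    """Two-layer MLP C -> C/ratio -> C with ReLU in between (no biases)."""
    hidden = [max(0.0, sum(w * x for w, x in zip(row, v))) for row in w1]
    return [sum(w * h for w, h in zip(row, hidden)) for row in w2]


def channel_attention(f, w1, w2):
    """CBAM channel attention on a C x H x W map f[c][i][j].

    Returns (F', Mc), where Mc holds one weight per channel.
    """
    f_avg = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in f]  # Eq. (3)
    f_max = [max(map(max, ch)) for ch in f]                            # Eq. (4)
    # The same MLP weights process both descriptors; the outputs are added.
    logits = [a + b for a, b in zip(shared_mlp(f_avg, w1, w2),
                                    shared_mlp(f_max, w1, w2))]
    mc = [sigmoid(z) for z in logits]
    # Scale every spatial position of channel c by mc[c].
    refined = [[[mc[c] * x for x in row] for row in ch] for c, ch in enumerate(f)]
    return refined, mc


# Usage: C = 4 channels of size 3 x 3, reduction ratio 2, random weights.
rng = random.Random(0)
feat = [[[rng.random() for _ in range(3)] for _ in range(3)] for _ in range(4)]
w1 = [[rng.gauss(0, 0.5) for _ in range(4)] for _ in range(2)]  # C -> C/2
w2 = [[rng.gauss(0, 0.5) for _ in range(2)] for _ in range(4)]  # C/2 -> C
refined, mc = channel_attention(feat, w1, w2)
```

Sharing one MLP between the average and max descriptors keeps the parameter cost of the sub-module independent of the spatial size of the feature map.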

The CBAM spatial attention sub-module processes the channel-refined feature map to generate a spatially weighted feature map. First, the input feature map F′ undergoes average pooling and max pooling along the channel dimension, yielding two 2D feature maps, F′avg and F′max. These two maps are concatenated along the channel dimension to form a two-channel feature map. A convolution (7 × 7 in the original CBAM design) is then applied to this concatenated map, and the result is normalized with the sigmoid function to produce the spatial attention weights Ms(F′). Finally, these weights are multiplied element-wise with F′ at every spatial position, resulting in the spatially weighted feature map F″. This mechanism enhances salient spatial regions while suppressing less important ones, thereby refining the feature representation for downstream tasks.
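The spatial attention steps can likewise be sketched in pure Python. To keep the toy example small, it uses a hand-picked 3 × 3 kernel on a 4 × 4 map instead of the 7 × 7 kernel of the original CBAM design, and assumes zero padding so that the H × W size is preserved; the function name and weights are illustrative.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def spatial_attention(f, kernel, bias=0.0):
    """CBAM spatial attention on a C x H x W map f[c][i][j] (the F' of the text).

    kernel[k][a][b] weights the two pooled maps (k = 0: average, k = 1: max);
    zero padding keeps the H x W size. Returns (F'', Ms).
    """
    c, h, w = len(f), len(f[0]), len(f[0][0])
    # Pool along the channel axis -> two H x W maps, stacked as a 2-channel input.
    avg = [[sum(f[k][i][j] for k in range(c)) / c for j in range(w)] for i in range(h)]
    mx = [[max(f[k][i][j] for k in range(c)) for j in range(w)] for i in range(h)]
    pooled = [avg, mx]
    ks = len(kernel[0])
    p = ks // 2
    ms = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = bias
            for k in range(2):
                for a in range(ks):
                    for b in range(ks):
                        ii, jj = i + a - p, j + b - p
                        if 0 <= ii < h and 0 <= jj < w:  # zero padding
                            acc += kernel[k][a][b] * pooled[k][ii][jj]
            ms[i][j] = sigmoid(acc)
    # Every channel is rescaled by the same spatial weight map.
    refined = [[[ms[i][j] * f[k][i][j] for j in range(w)] for i in range(h)]
               for k in range(c)]
    return refined, ms


# Usage: one "hot spot" in channel 0; a 3 x 3 kernel that reads only the max map.
feat = [[[0.0] * 4 for _ in range(4)] for _ in range(2)]
feat[0][2][1] = 5.0
kernel = [[[0.0] * 3 for _ in range(3)],
          [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]]
refined, ms = spatial_attention(feat, kernel)
```

In the usage example, the position holding the strong activation receives a weight close to 1, while empty background positions stay at the neutral value 0.5.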

The CBAM is an efficient attention mechanism that refines feature maps by combining channel and spatial attention along both dimensions. Being lightweight and highly versatile, it can be easily integrated into existing architectures, serving as a powerful tool to enhance the performance of CNNs in various computer vision tasks.

2.5. Our Improved Model

Considering the unique characteristics of forest fire detection, we propose an identification model for suspicious regions of forest fire based on YOLOv8, named SRoFF-Yolover.

The architecture of SRoFF-Yolover is illustrated in Figure 5.

The SRoFF-Yolover takes 480 × 480 color images as input and outputs the bounding box coordinates along with classification results for suspicious regions of forest fire.

Through the analysis of images in the SRFFD, we observe that suspicious regions of forest fire exhibit distinct characteristics compared to the background across different seasons and time periods. To enhance the model’s adaptability to temporal variations and improve its generalization capability across seasons, we introduce an embedding layer that integrates seasonal and temporal information into the image representation.

The embedding layer augments the existing three-channel color image with two additional channels dedicated to seasonal (S) and temporal (T) information, as formalized in Equation (5):

$$EX = \mathrm{Concat}(X, S, T) \qquad (5)$$

where X is the input of SRoFF-Yolover, a color image of dimensions 480 × 480 × 3; S is a seasonal information matrix of dimensions 480 × 480 × 1; and T is a temporal information matrix of dimensions 480 × 480 × 1.

The matrices S and T are implemented as learnable parameters initialized with random values sampled from a normal distribution (mean = 0, std = 0.01). During training, these matrices are optimized via backpropagation alongside other model parameters. The seasonal matrix S is designed to capture recurring patterns (e.g., dry vs. wet seasons), while T encodes time-of-day variations (e.g., daylight vs. nighttime). To ensure meaningful learning, we constrain the values of S and T to the range [−1, 1] using a tanh activation function. This prevents extreme values from dominating the original RGB features.

Both S and T are implemented as learnable information matrices, with S encoding seasonal patterns and T capturing temporal variations. The three matrices are concatenated along the last dimension to form a new matrix EX with dimensions 480 × 480 × 5. The first three channels of EX retain the RGB color information of the original image, the fourth channel encapsulates seasonal information, and the fifth channel embodies temporal information. In this way, the embedding layer introduces seasonal and temporal information into SRoFF-Yolover as prior knowledge.
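The data flow of Equation (5) can be sketched in pure Python on a small grid. This is a forward-pass illustration only, under stated assumptions: in the trainable model, S and T would be framework parameters updated by backpropagation, which plain lists do not provide, and since the per-image selection of S and T is not specified, the sketch follows the literal description of one shared pair. The helper names are hypothetical.

```python
import math
import random


def init_matrix(h, w, rng, std=0.01):
    """H x W x 1 matrix drawn from N(0, std^2), the initialisation described for S and T."""
    return [[[rng.gauss(0.0, std)] for _ in range(w)] for _ in range(h)]


def embed(x, s, t):
    """Eq. (5): EX = Concat(X, S, T) along the last (channel) axis.

    x is H x W x 3 (RGB); s and t are H x W x 1. tanh bounds the two extra
    channels to [-1, 1] so they cannot dominate the RGB values.
    """
    return [[px + [math.tanh(sv[0]), math.tanh(tv[0])]
             for px, sv, tv in zip(x_row, s_row, t_row)]
            for x_row, s_row, t_row in zip(x, s, t)]


# Usage on a 4 x 4 toy image (the model itself uses 480 x 480).
rng = random.Random(0)
image = [[[0.5, 0.2, 0.1] for _ in range(4)] for _ in range(4)]
S, T = init_matrix(4, 4, rng), init_matrix(4, 4, rng)
ex = embed(image, S, T)
```

The first convolution of the Backbone then has to accept five input channels instead of three; everything downstream is unchanged.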

To improve SRoFF-Yolover’s accuracy in detecting small targets while reducing its complexity, we replaced the C2fLayer in the Backbone with the DCC2fLayer, which is obtained by substituting the standard convolutions in the C2fLayer with dilated convolutions. The standard convolution kernels (e.g., 3 × 3) used in the C2fLayer have a relatively limited receptive field. Moreover, standard convolutions are computationally costly on high-resolution inputs, which may affect inference speed. In contrast, dilated convolution captures multi-scale contextual information over a larger area without increasing the parameters or per-layer computation, which enhances the detection capability for small targets. Therefore, the DCC2fLayer is used to improve small-target detection performance. A hybrid strategy is used to set the dilation rates so that the sampling points of the convolutional kernels cover continuous spatial regions, avoiding the feature discontinuity (gridding) caused by a fixed large dilation rate. Experiments showed that a dilation rate of r = 2 in the DCC2fLayer gives the best balance between preserving local details and moderately expanding the receptive field.
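The feature discontinuity that the hybrid strategy avoids can be seen in one dimension by listing which input offsets reach a single output after stacking 3-tap dilated convolutions. Only r = 2 is stated for the DCC2fLayer, so the rate sequences [2, 2] and [1, 2] below are illustrative assumptions, not the model's actual schedule.

```python
from itertools import product


def sampled_offsets(rates, k=3):
    """1-D input offsets that reach one output after stacking k-tap convolutions.

    Each layer with dilation rate r samples offsets r * (-(k // 2) .. k // 2).
    """
    offsets = {0}
    for r in rates:
        taps = [r * (t - k // 2) for t in range(k)]  # e.g. r = 2 -> -2, 0, 2
        offsets = {o + d for o, d in product(offsets, taps)}
    return sorted(offsets)


def has_gaps(offsets):
    """True if some position inside the covered span is never sampled."""
    return len(offsets) != offsets[-1] - offsets[0] + 1


fixed = sampled_offsets([2, 2])   # same rate in both layers
hybrid = sampled_offsets([1, 2])  # rates mixed across layers
```

With r = 2 in both layers, odd offsets are never sampled, leaving a checkerboard of blind spots; mixing in a layer with r = 1 fills them while still covering a wider span than two standard convolutions.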

To improve multi-scale target adaptation, we enhanced the Backbone-to-Neck linkage. The feature map at the P2 level exhibits a higher resolution, enabling it to capture more detailed information and exhibit greater sensitivity to small targets, particularly in the recognition of small objects within complex backgrounds. The SPPF module integrates multi-scale feature information, and its connection further enhances the model’s capability for multi-scale target detection. Therefore, in the SRoFF-Yolover, the linkage between the Backbone and Neck is implemented using the P2, P4, and SPPF outputs from the Backbone.
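The resolution argument for P2 can be quantified for a 480 × 480 input. The sketch assumes the common YOLO convention that pyramid level Pk has a stride of 2^k (this convention is an assumption here), and uses a hypothetical 16 × 16-pixel flame to show how many feature-map cells such a target occupies at different levels.

```python
def pyramid_sizes(input_size=480, levels=(2, 3, 4, 5)):
    """Side length of each pyramid level, assuming level Pk has stride 2**k."""
    return {f"P{k}": input_size // 2 ** k for k in levels}


def cells_covered(object_px, stride):
    """Approximate number of feature-map cells a square object spans at a given stride."""
    return (object_px / stride) ** 2


sizes = pyramid_sizes()
# A hypothetical 16 x 16-pixel flame seen at P2 (stride 4) and P5 (stride 32).
at_p2, at_p5 = cells_covered(16, 4), cells_covered(16, 32)
```

Under these assumptions, the flame spans a 4 × 4 block of cells at P2 but only a quarter of one cell at P5, which is why a direct P2 link preserves more small-target detail.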

By integrating the CBAM before the C2fLayer in the YOLOv8’s Neck, we aim to enhance the SRoFF-Yolover’s capacity to focus on crucial channels and spatial regions, which is particularly advantageous for small object detection. Small targets typically occupy only a minor proportion of an image. The primary reason for the low accuracy in small target detection lies in the fact that convolutional networks processing images with extensive background areas tend to accumulate substantial redundant information. This adaptation enables the SRoFF-Yolover to preferentially emphasize and amplify relevant features for small targets during both feature extraction and fusion stages.

2.6. Experimental Settings

The SRFFD was split into training, validation, and test sets at an 8:1:1 ratio. Further specifics regarding the SRFFD are provided in Table 2. The training set was used for model training, the validation set for hyperparameter tuning, and the test set for evaluating model performance. The experimental conditions in this paper are shown in Table 3. The hyperparameters were initially set based on theoretical principles and then fine-tuned experimentally. The hyperparameter configurations are detailed in Table 4.
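A reproducible 8:1:1 split can be sketched as a single seeded shuffle followed by two cuts. The function name and seed are illustrative, and the per-split counts in Table 2 may differ from this sketch, because the rounding rule and any stratification by class are not stated.

```python
import random


def split_811(items, seed=0):
    """Shuffle once with a fixed seed, then cut 80% / 10% / 10% (train / val / test)."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_train = int(0.8 * len(items))
    n_val = int(0.1 * len(items))
    return items[:n_train], items[n_train:n_train + n_val], items[n_train + n_val:]


# Usage with the SRFFD sample count; indices stand in for image files.
train_set, val_set, test_set = split_811(range(64584))
```

Here the remainder left over by integer truncation goes to the test set, so the three subsets always add up to the full dataset.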

2.7. Evaluation Metrics

The models were assessed on two criteria: complexity and predictive performance. Model complexity encompasses space complexity, time complexity, and forward inference time. The metrics used to evaluate complexity are as follows: (1) #Params (K): the total number of model parameters, which is positively correlated with the model’s space complexity. (2) Giga Floating-Point Operations (GFLOPs): a measure of the computational complexity of a deep learning model, i.e., the number of floating-point operations (in billions) required for a single forward pass. GFLOPs provide insight into a model’s computational demands, facilitating performance comparison and optimization; for models with similar architectures, a lower GFLOP count generally indicates lower computational cost and faster inference on the same hardware. (3) Frames Per Second (FPS): a key metric of the inference speed of object detection models, representing the number of image frames a model can process per second. Higher FPS values indicate better real-time performance.
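The three complexity metrics can be illustrated for a single convolution layer. The layer shape (3 × 3, 64 to 64 channels, 120 × 120 output) is hypothetical, FLOPs here count each multiply-add as two operations (some tools report multiply-accumulates instead, which halves the number), and the FPS helper simply times a placeholder callable rather than a real detector.

```python
import time


def conv_params(k, c_in, c_out, bias=False):
    """Weights of a k x k convolution; the dilation rate does not appear."""
    return k * k * c_in * c_out + (c_out if bias else 0)


def conv_flops(k, c_in, c_out, h_out, w_out):
    """FLOPs of one convolution layer, counting each multiply-add as 2 operations."""
    return 2 * k * k * c_in * c_out * h_out * w_out


def measure_fps(infer, frames, warmup=2):
    """Frames per second of the callable `infer` over a list of frames."""
    for frame in frames[:warmup]:
        infer(frame)  # warm-up calls are not timed
    start = time.perf_counter()
    for frame in frames:
        infer(frame)
    return len(frames) / (time.perf_counter() - start)


# A hypothetical 3 x 3, 64 -> 64 layer on a 120 x 120 output map.
params = conv_params(3, 64, 64)  # identical for r = 1 or r = 2
gflops = conv_flops(3, 64, 64, 120, 120) / 1e9
fps = measure_fps(lambda frame: sum(range(1000)), list(range(50)))
```

Because each output of a dilated convolution still reads k × k taps, changing r alters neither the parameter count nor the per-output FLOPs; only the spacing of the taps changes.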

In this study, we evaluate the model’s predictive performance using the PASCAL VOC assessment criteria, a widely recognized standard in object detection research. Under these criteria, performance is quantified by the mean average precision (mAP) [42] at an Intersection over Union (IoU) threshold of 0.5, denoted mAP@0.5. Calculating mAP@0.5 requires precision (P) and recall (R), which are computed with the following formulas [42]:

$$P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}$$

$$AP = \int_{0}^{1} P(r)\,dr, \qquad mAP = \frac{1}{n}\sum_{i=1}^{n} AP_i$$

In these formulas, TP, FP, and FN denote the numbers of true positives, false positives, and false negatives; a detection counts as a true positive when its IoU with a ground-truth box is at least 0.5. P(r) denotes precision at a specific recall value r, i.e., a point on the precision-recall curve, with precision on the y-axis and recall on the x-axis. Here, n indicates the total number of categories. Average Precision (AP) is defined as the area under the precision-recall curve, and mAP is the mean of the AP values across all categories.
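The definitions of AP and mAP given here leave the computation of the area implicit. One common way to evaluate it is all-point interpolation, as used by PASCAL VOC from 2010 onward; the sketch below assumes each detection has already been labelled as a true or false positive by IoU ≥ 0.5 matching, and the `iou` helper shows that test. Function names are illustrative, and detection frameworks may use a different interpolation scheme.

```python
def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(scores, is_tp, n_gt):
    """All-point interpolated AP: area under the precision-recall curve.

    scores: confidence of each detection; is_tp: whether it matched an unused
    ground-truth box with IoU >= 0.5; n_gt: number of ground-truth boxes.
    """
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    tp = fp = 0
    recall, precision = [], []
    for i in order:
        tp, fp = (tp + 1, fp) if is_tp[i] else (tp, fp + 1)
        recall.append(tp / n_gt)          # R = TP / (TP + FN)
        precision.append(tp / (tp + fp))  # P = TP / (TP + FP)
    mrec = [0.0] + recall + [1.0]
    mpre = [0.0] + precision + [0.0]
    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum rectangle areas wherever recall changes.
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1]
               for i in range(len(mrec) - 1) if mrec[i + 1] != mrec[i])


def mean_ap(ap_per_class):
    """mAP: the mean of per-class AP values (n classes)."""
    return sum(ap_per_class) / len(ap_per_class)


# Usage: 3 detections of the single "fire" class against 2 ground-truth boxes.
ap_fire = average_precision([0.9, 0.8, 0.7], [True, False, True], n_gt=2)
```

In the usage example, the second-ranked detection is a false positive, so precision dips to 0.5 before recovering to 2/3; the envelope step credits the later, higher precision, giving AP = 0.5 × 1 + 0.5 × 2/3 = 5/6.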

3. Results

3.1. Ablation Experiments

The experimental procedure for each ablation experiment is as follows. First, we configure the training hyperparameters according to Table 4 and train the suspicious regions of forest fire detection models on the training set. Subsequently, we evaluate the trained models on the test set to assess key performance metrics, including mAP@0.5, FPS, number of parameters (#Params), and GFLOPs.

The ablation experiment results are summarized in Table 5.

To systematically evaluate the efficacy of our proposed enhancements, we applied four separate modifications (Experiments 2–5) to the baseline model (YOLOv8s). Experiment 2 replaced the C2fLayer in the Backbone with the DCC2fLayer to make the model lighter; Experiment 3 incorporated a CBAM attention module before the C2fLayer in the Neck to enhance accuracy; Experiment 4 modified the Backbone-Neck linkage via P2, P4, and SPPF to improve detection performance; and Experiment 5 added an embedding layer to YOLOv8s to boost accuracy. The results of Experiments 2 to 5 show the specific contribution of each module (all percentages below are relative to the baseline). In Experiment 2, the lightweight module (DCC2f) reduces parameters by 9.5% (6.3 MB→5.7 MB), decreases GFLOPs by 9.0% (7.8→7.1), and improves mAP@0.5 by 4.4% (0.764→0.798), indicating that DCC2f effectively reduces computation but has a limited effect on accuracy. The CBAM (Experiment 3) achieves the largest single-module mAP@0.5 gain (+10.5%, 0.764→0.844), albeit with more parameters and computation, indicating that it substantially improves small-target detection accuracy. The multi-scale feature reconstruction through P2/P4/SPPF optimization (Experiment 4) increases mAP@0.5 by 5.8% and FPS by 4%, validating the effectiveness of multi-scale feature fusion. The spatiotemporal embedding layer designed for seasonal/diurnal variations (Experiment 5) improves mAP@0.5 by 7.6% (0.764→0.822), enhancing detection robustness in winter nighttime scenarios. These results demonstrate that all four improvements enhance the model for detecting suspicious regions of forest fire in terms of accuracy, inference speed, and model lightweighting to varying degrees.
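The percentages in the ablation discussion are relative changes with respect to the YOLOv8s baseline. The short check below recomputes several of them from the reported (rounded) values; the dictionary labels are our own.

```python
def rel_change(before, after):
    """Relative change in percent: 100 * (after - before) / before."""
    return 100.0 * (after - before) / before


# (baseline, modified) pairs reported for the ablation study.
ablation = {
    "DCC2f #Params (MB)": (6.3, 5.7),
    "DCC2f GFLOPs": (7.8, 7.1),
    "CBAM mAP@0.5": (0.764, 0.844),
    "Embedding mAP@0.5": (0.764, 0.822),
    "DCC2f + CBAM mAP@0.5": (0.764, 0.862),
    "Full model mAP@0.5": (0.764, 0.902),
}
changes = {name: round(rel_change(b, a), 1) for name, (b, a) in ablation.items()}
```

Expressed as absolute differences instead, the full model's gain of 0.138 in mAP@0.5 corresponds to 13.8 percentage points.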

In Experiments 6–8, we progressively integrated the aforementioned four improvements. The results demonstrate that the detection accuracy (mAP@0.5) shows a continuous improvement, as all four modifications contribute to enhancing detection performance to varying degrees. The DCC2f + CBAM combination (Experiment 6) achieves 12.8% mAP@0.5 improvement (0.764→0.862), exceeding the individual module gains, demonstrating synergistic effects. The model in Experiment 7 achieves the lowest parameter count and the fastest inference speed. The complete model (Experiment 8) maintains real-time performance (113 FPS) while achieving 0.902 mAP@0.5, an 18.1% improvement over baseline, with fewer parameters (6.1 MB) than the original YOLOv8s (6.3 MB). These results confirm that the SRoFF-Yolover delivers superior comprehensive detection performance in forest fire detection.

The curves of mAP@0.5 versus the number of training iterations for the different structural improvements are presented in Figure 6.

Figure 6 shows the trend of mAP@0.5 during training for the YOLOv8s model (Experiment 1), the model equipped with the DCC2f (Experiment 2), the model integrated with the CBAM (Experiment 3), the model with the modified Backbone-Neck linkage (Experiment 4), and the model with the embedding layer (Experiment 5). As training progresses, the mAP@0.5 of every model increases, with different growth rates at different stages. The models from Experiments 2 to 5 all improve mAP@0.5 over the baseline. Among them, the CBAM-based model in Experiment 3 shows the largest improvement, indicating that the attention mechanism introduced by the CBAM effectively guides the model to learn key features and thereby substantially improves detection performance. By around 300 training iterations, the mAP@0.5 values of all models stabilize, indicating that the models have essentially converged.

The trained SRoFF-Yolover was used to identify suspicious regions of forest fire in the test set. Representative results are shown in Figure 7.

Nighttime detection (Figure 7e–h) generally outperforms daytime detection (Figure 7a–d), likely because flames are more distinct against dark backgrounds at night. Winter scenes (Figure 7d,h) show the lowest false detection rates, possibly because sparse vegetation reduces interference. In contrast, summer scenes (Figure 7b,f) show lower detection accuracy, likely because of noise associated with high temperatures.

3.2. The Comparative Experiments

Considering that suspicious regions of forest fire typically consist of scattered small targets with significant seasonal variations, we selected the YOLO series (v5/v8s/v10) and Faster R-CNN [43] as comparison models to validate the applicability of our proposed model. As a classic two-stage detector, Faster R-CNN preserves more detailed information through its Region Proposal Network (RPN), and the RoIAlign operation used in many modern implementations mitigates feature misalignment, making it effective for small-target detection. However, it suffers from slower inference and higher computational cost. In contrast, the single-stage YOLO models infer faster. YOLOv5 achieves multi-scale feature fusion through its FPN + PAN architecture, providing some small-target detection capability. YOLOv8s enhances feature propagation for small targets by optimizing the FPN structure and cross-stage connections. The more recent YOLOv10 [44] incorporates a lightweight Transformer module and was included to examine performance on scattered small targets.

To ensure a fair comparison, all competing models (YOLOv5, YOLOv8s, and YOLOv10) were fine-tuned on the SRFFD under identical training protocols, including data augmentation, optimizer settings (AdamW), and learning rate schedules. Default anchor boxes (for the anchor-based YOLOv5; YOLOv8 and YOLOv10 are anchor-free) and hyperparameters were adjusted to match the scale of forest fire detection tasks, and all models were trained until convergence. The comparative experiment results are shown in Table 6.

Our proposed model (SRoFF-Yolover) achieved the highest mAP@0.5 (0.902) among all the compared models, while the YOLOv10 demonstrated the fastest inference speed (128 FPS).

Compared with Faster R-CNN, SRoFF-Yolover improved mAP@0.5 by 8.1 percentage points (a 9.9% relative improvement; 0.902 vs. 0.821) and ran 4.2× faster (113 FPS vs. 27 FPS), highlighting the speed advantage of single-stage detectors. Against the YOLO series, SRoFF-Yolover kept a comparable model size (6.1 MB vs. YOLOv10’s 5.6 MB) while outperforming YOLOv10 by 3.3% in mAP@0.5 (0.902 vs. 0.873), demonstrating the efficacy of its customized design.
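Because mAP@0.5 is a fraction, an improvement can be stated either in percentage points (absolute difference) or as a relative percentage, and the two differ noticeably here. The check below recomputes both from the reported values, together with the speed ratio; the helper names are our own.

```python
def rel_change(before, after):
    """Relative change in percent."""
    return 100.0 * (after - before) / before


def pp_change(before, after):
    """Absolute change in percentage points for metrics stored as fractions."""
    return 100.0 * (after - before)


# Reported values: Faster R-CNN vs. SRoFF-Yolover, and YOLOv10 vs. SRoFF-Yolover.
vs_frcnn = (round(pp_change(0.821, 0.902), 1), round(rel_change(0.821, 0.902), 1))
speedup = round(113 / 27, 1)
vs_yolov10 = round(rel_change(0.873, 0.902), 1)
```

The gain over Faster R-CNN is thus 8.1 points in absolute terms and 9.9% in relative terms, and the 3.3% gain over YOLOv10 is a relative figure (2.9 points).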

Furthermore, we randomly selected 10 images from four typical scenarios in the test set: winter night, autumn night, summer day, and spring day. Each of the five models was used for prediction on these 10 images. The average prediction results (mAP@0.5) are recorded in Table 7.

The results show that the SRoFF-Yolover maintains comprehensive superiority (average mAP@0.5: 0.872), while the YOLOv5 performs the worst. The best-performing scenario is the winter night, whereas the most challenging scenario is the summer day. Further analysis indicates that winter achieves the highest detection performance due to simpler backgrounds, while the summer day suffers from decreased detection accuracy due to increased flame occlusion caused by dense vegetation.

Although the comparative models—including the YOLOv5, YOLOv8s, YOLOv10, and Faster R-CNN—achieved relatively high accuracy in target detection, they are primarily designed for general-purpose object detection tasks and lack specialized modeling for the unique characteristics of early-stage forest fires. In contrast, the SRoFF-Yolover is not merely an accuracy-oriented improvement over the YOLOv8 but a task-specific innovation that directly addresses key challenges in forest fire detection, such as early occurrence, small and scattered targets, and significant seasonal variability. First, the SRoFF-Yolover is tailored for early-stage forest fire detection. It incorporates a novel embedding layer to integrate seasonal and temporal information into image features, significantly enhancing detection robustness across different seasons and time periods—an aspect overlooked by most baseline models. Second, the model applies dilated convolutions in the backbone network to expand the receptive field, which strengthens its capability to detect small, dispersed fire sources—common in early fire stages—and compensates for the limitations of models like the YOLOv5 and the YOLOv8s in this regard. Third, a CBAM attention module is integrated before the C2fLayer in the neck, which increases the model’s sensitivity to critical fire regions, and effectively reduces false positives caused by environmental noise—this mechanism is not included in the baseline models. Finally, the SRoFF-Yolover features a redesigned backbone-to-neck connection using P2, P4, and SPPF layers, optimizing the transmission and fusion of multiscale features while maintaining high computational efficiency. In summary, the SRoFF-Yolover demonstrates not only competitive mAP performance but also significant structural innovations and task adaptability, offering a more practical and effective solution for early forest fire detection in real-world scenarios.

4. Discussion

We systematically evaluated several image augmentation techniques, focusing on their impact on the performance of both the YOLOv8s and the SRoFF-Yolover. Through comparative experiments, we identified the dataset augmentation methods best suited to improving the detection of suspicious regions of forest fire, providing a basis for subsequent data augmentation. We further investigated the SRoFF-Yolover’s detection performance for suspicious regions of forest fire across seasons to assess its seasonal adaptability.

4.1. The Effects of Data Augmentation Techniques on Model Detection Performance

Applying various forms of image augmentation to the dataset increases the quantity and diversity of samples, mitigates overfitting, and improves the model’s generalization ability. We employed three data augmentation techniques (affine transformation, HSV random enhancement, and copy-paste augmentation) to augment the dataset and form the SRFFD, and we conducted experiments to validate the effect of each technique on the detection performance of the YOLOv8s and the SRoFF-Yolover. Starting from the initial image dataset (2392 images), we designed the following scheme. In Experiments 1–3, each of the three augmentation methods was applied individually to expand the initial dataset sixfold (to 14,352 images); in Experiments 4–6, pairwise combinations of the three methods were used to expand the initial dataset sixfold in the same way; in Experiment 7, all three methods were applied together, again expanding the initial dataset sixfold. Using these datasets, we trained the YOLOv8s and the SRoFF-Yolover according to Table 3 and Table 4, respectively. The mAP@0.5 obtained by testing each trained model on the test set is listed in Table 8.

In Table 8, “Yes” indicates that the corresponding augmentation technique was applied, and “No” that it was not.
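The seven-run scheme is every non-empty subset of the three augmentation methods, with each run expanding the 2392 initial images sixfold to 2392 × 6 = 14,352. The sketch below enumerates the configurations together with Yes/No flags in the style of Table 8; the method identifiers and the order in which runs are numbered within each group are assumptions, since the exact assignment of experiment numbers is not restated here.

```python
from itertools import combinations

METHODS = ("affine", "hsv", "copy_paste")  # hypothetical identifiers
INITIAL_IMAGES = 2392
EXPANSION = 6  # every configuration expands the initial set sixfold


def experiment_configs():
    """All non-empty method subsets: singles, then pairs, then all three."""
    configs = []
    for r in range(1, len(METHODS) + 1):
        configs.extend(combinations(METHODS, r))
    return configs


configs = experiment_configs()
for i, cfg in enumerate(configs, start=1):
    flags = {m: ("Yes" if m in cfg else "No") for m in METHODS}
    print(f"Experiment {i}: {flags} -> {INITIAL_IMAGES * EXPANSION} images")
```

Holding the dataset size fixed across all seven runs means differences in mAP@0.5 reflect the choice of augmentation rather than the amount of training data.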

The experimental results show that data augmentation plays a critical role in improving model performance, particularly for complex tasks such as forest fire detection. Among the individual augmentation methods, HSV random enhancement performs best, indicating that color features are central to forest fire detection. Flame colors (red, orange, and yellow) are clearly separable in HSV space, and random adjustments to hue (H), saturation (S), and value (V) simulate flame appearance under varying lighting conditions, making the model more robust to color variation. In addition, HSV augmentation may better represent the diversity of forest backgrounds (e.g., green leaves and dry wood), reducing false detections. Copy-paste augmentation, while increasing the number of samples, is slightly less effective than HSV enhancement, possibly because simple copy-paste operations do not fully reproduce realistic interactions between flames and their backgrounds. Affine transformations (e.g., rotation, scaling, and translation) perform worst when used alone, likely because flame shapes and orientations follow natural patterns that excessive transformations can disrupt.
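A minimal sketch of HSV random enhancement follows. It assumes images already converted to HSV in OpenCV's 8-bit convention (hue in [0, 180), saturation and value in [0, 255]). The gain values match the defaults of the Ultralytics YOLOv8 trainer and are only illustrative, not the settings used in this study; rotating the hue additively (rather than scaling it) is our own choice.

```python
from typing import Optional

import numpy as np


def random_hsv(hsv: np.ndarray, h_gain: float = 0.015, s_gain: float = 0.7,
               v_gain: float = 0.4,
               rng: Optional[np.random.Generator] = None) -> np.ndarray:
    """Randomly jitter an (H, W, 3) uint8 HSV image (OpenCV 8-bit convention)."""
    rng = rng if rng is not None else np.random.default_rng()
    h, s, v = (hsv[..., i].astype(np.float32) for i in range(3))
    # Hue is circular: shift it, round to whole units, and wrap modulo 180
    # so that reds near 0 and near 179 stay neighbours.
    h = np.round(h + rng.uniform(-1, 1) * h_gain * 180.0) % 180.0
    # Saturation and value are scaled multiplicatively and clipped to 8 bits,
    # mimicking washed-out or dimmed flames under different lighting.
    s = np.clip(s * (1.0 + rng.uniform(-1, 1) * s_gain), 0, 255)
    v = np.clip(v * (1.0 + rng.uniform(-1, 1) * v_gain), 0, 255)
    return np.stack([h, s, v], axis=-1).astype(np.uint8)
```

Bounding boxes are unaffected by this transform, so labels can be reused unchanged; that makes HSV enhancement the cheapest of the three methods to apply.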

Combining multiple augmentation techniques yields the best model performance: the “HSV + copy-paste + affine” combination achieves the highest mAP@0.5 (0.902) on the SRoFF-Yolover. This suggests a synergistic effect among the strategies: color enhancement enriches the diversity of flame appearance, copy-paste increases the number of fire samples, and affine transformations improve adaptability to geometric variation. This combined strategy provides a useful reference for data augmentation in similarly complex scenarios.
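To make the copy-paste step concrete, the sketch below pastes a labeled fire patch from one image into another at a random position that does not overlap existing boxes and appends the new box to the labels. It is a hard-paste variant with no edge blending, which is exactly the limitation noted above (the pasted flame does not interact realistically with its new background). The (x1, y1, x2, y2) pixel box format, the function names, and the retry limit are hypothetical.

```python
import numpy as np


def _overlaps(a, b):
    """True if boxes a and b, given as (x1, y1, x2, y2), intersect."""
    return a[0] < b[2] and b[0] < a[2] and a[1] < b[3] and b[1] < a[3]


def copy_paste(src, src_box, dst, dst_boxes, rng=None, max_tries=20):
    """Paste src[y1:y2, x1:x2] into dst at a random non-overlapping spot.

    Returns (new_image, new_boxes). If no free position is found within
    max_tries attempts (or the patch is larger than dst), dst is returned
    unchanged, as a copy.
    """
    rng = rng if rng is not None else np.random.default_rng()
    x1, y1, x2, y2 = src_box
    patch = src[y1:y2, x1:x2]
    ph, pw = patch.shape[:2]
    h, w = dst.shape[:2]
    if ph > h or pw > w:
        return dst.copy(), list(dst_boxes)
    for _ in range(max_tries):
        # Random top-left corner keeping the patch fully inside dst.
        nx = int(rng.integers(0, w - pw + 1))
        ny = int(rng.integers(0, h - ph + 1))
        new_box = (nx, ny, nx + pw, ny + ph)
        # Reject positions that would occlude an already-labeled fire.
        if not any(_overlaps(new_box, b) for b in dst_boxes):
            out = dst.copy()
            out[ny:ny + ph, nx:nx + pw] = patch  # hard paste, no blending
            return out, list(dst_boxes) + [new_box]
    return dst.copy(), list(dst_boxes)
```

Replacing the hard paste with alpha or Poisson blending is a natural refinement if the pasted edges turn out to be an easy shortcut cue for the detector.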

4.2. Results of Suspicious Regions of Forest Fire Detection

Forest fires can occur in any season and at any time of day. To validate the adaptability of the SRoFF-Yolover across seasons and times of day, we conducted a series of experiments. As presented in Table 9, the SRoFF-Yolover performs robustly in detecting suspicious regions of forest fire under varying temporal conditions.

Seasonal and temporal adaptability is a critical issue for practical forest fire detection. The experimental results indicate that the SRoFF-Yolover maintains stable performance across times of day and seasons.

First, the SRoFF-Yolover shows a clear day-night difference in performance, with consistently better detection at night. This can be attributed to two factors: (1) in low light, flames become prominent light sources with high contrast against the background, which makes them easier to detect; (2) fewer interfering factors at night (e.g., sunlight reflections and cloud cover) lower the false alarm rate.

Second, the SRoFF-Yolover performs best in winter, particularly at night (mAP@0.5: 0.951). Our analysis identifies several influencing factors. (1) Environmental factors: sparse winter vegetation greatly reduces background complexity, making flame features easier to identify. In contrast, autumn shows relatively lower performance (daytime mAP@0.5: 0.836), mainly because fallen leaves and dry vegetation increase background complexity. (2) Spectral characteristics: in the HSV color space, winter flames exhibit a stable hue (H) distribution concentrated in the red range, whereas summer flames show a more dispersed hue distribution. This difference directly affects detection difficulty and is consistent with our HSV-based data augmentation improving winter detection performance. (3) Combustion properties: owing to colder temperatures, winter flames burn more steadily with lower flicker frequencies, which facilitates feature capture; summer flames vary more dynamically at high temperatures, potentially increasing miss rates. (4) Data balance: the seasonally and diurnally balanced dataset supports the model’s temporal adaptability. (5) Model architecture: the SRoFF-Yolover’s multi-scale feature extraction captures flame features at various distances and sizes, improving the model’s adaptability to lighting variation and, in particular, its temporal adaptability.
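The contrast between "stable" and "dispersed" hue distributions in (2) can be quantified. One option, which is our assumption and not the measure used in this study, is circular statistics: hue wraps around, so red flame pixels fall near both 0 and 179 in OpenCV's 8-bit convention, and an ordinary standard deviation would wrongly report such pixels as widely spread. The mean resultant length R is close to 1 for a tightly concentrated hue and close to 0 for hues spread around the color circle.

```python
import numpy as np


def hue_concentration(hue) -> float:
    """Mean resultant length of OpenCV-style hue values (0-179).

    Returns a value in [0, 1]: 1 means all hues identical, values near 0
    mean the hues are spread evenly around the colour circle.
    """
    # Map the 180-unit hue scale onto a full circle of 2*pi radians.
    angles = np.asarray(hue, dtype=np.float64) * (2.0 * np.pi / 180.0)
    return float(np.hypot(np.cos(angles).mean(), np.sin(angles).mean()))


# Red hues straddling the wrap-around point are still "concentrated".
print(round(hue_concentration([178, 179, 0, 1, 2]), 3))  # 0.999
```

Applied to the flame pixels of each seasonal subset, a higher R would correspond to the stable winter hue distribution described above and a lower R to the dispersed summer one.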

Based on the flame pixel area in the test set, we classified the samples into three groups: (1) small targets (<32 × 32 pixels, 68% of samples, average mAP@0.5 = 0.894), (2) medium targets (32 × 32 to 64 × 64 pixels, 23% of samples, average mAP@0.5 = 0.899), and (3) large targets (>64 × 64 pixels, 9% of samples, average mAP@0.5 = 0.911).
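The size groups above resemble the COCO small/medium/large split, except that the upper threshold here is 64 × 64 rather than COCO's 96 × 96. The sketch below assigns a box to a group by its area and computes group shares; it assumes that "flame pixel area" means bounding-box area (width × height), and the function names and box format are hypothetical.

```python
from collections import Counter


def size_group(box, small=32, large=64):
    """Classify an (x1, y1, x2, y2) box by area using the thresholds above."""
    x1, y1, x2, y2 = box
    area = (x2 - x1) * (y2 - y1)
    if area < small * small:   # below 32 x 32
        return "small"
    if area <= large * large:  # 32 x 32 up to 64 x 64 inclusive
        return "medium"
    return "large"             # above 64 x 64


def group_shares(boxes):
    """Fraction of boxes falling into each size group."""
    counts = Counter(size_group(b) for b in boxes)
    n = len(boxes)
    return {g: counts[g] / n for g in ("small", "medium", "large")}
```

Evaluating mAP@0.5 separately on each group then yields per-size figures of the kind reported above.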

The results show that the CBAM-equipped SRoFF-Yolover performs similarly on small and medium targets and slightly better on large targets. This suggests that the CBAM adapts to different target scales, which can be explained by two factors: (1) channel attention weights features by importance independently of absolute scale, and (2) spatial attention uses dual-path (average and max) pooling to capture multi-scale features.
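For reference, the two CBAM stages discussed above can be written as a NumPy forward pass: channel attention from average- and max-pooled descriptors passed through a shared two-layer MLP, followed by spatial attention from channel-wise average and max maps passed through a k × k convolution (7 × 7 in the standard design). The sketch operates on a single (C, H, W) feature map with random, untrained weights and no biases; it illustrates the data flow of the module only, not the trained layer in the SRoFF-Yolover.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(x, w1, w2):
    """x: (C, H, W); w1: (C // r, C); w2: (C, C // r). Returns (C,) weights."""
    def mlp(v):
        # Shared two-layer MLP with a ReLU bottleneck.
        return w2 @ np.maximum(w1 @ v, 0.0)
    avg = x.mean(axis=(1, 2))  # global average pooling per channel
    mx = x.max(axis=(1, 2))    # global max pooling per channel
    return sigmoid(mlp(avg) + mlp(mx))


def spatial_attention(x, kernel):
    """kernel: (2, k, k). Returns (H, W) weights from a 'same' convolution."""
    # Dual-path pooling along the channel axis: average map and max map.
    pooled = np.stack([x.mean(axis=0), x.max(axis=0)])  # (2, H, W)
    k = kernel.shape[-1]
    pad = k // 2
    padded = np.pad(pooled, ((0, 0), (pad, pad), (pad, pad)))  # zero padding
    h, w = x.shape[1:]
    out = np.zeros((h, w))
    for i in range(h):
        for j in range(w):
            # Cross-correlation, as in deep-learning conv layers.
            out[i, j] = np.sum(padded[:, i:i + k, j:j + k] * kernel)
    return sigmoid(out)


def cbam(x, w1, w2, kernel):
    """Channel refinement first, then spatial refinement."""
    x = x * channel_attention(x, w1, w2)[:, None, None]
    return x * spatial_attention(x, kernel)[None, :, :]
```

Because both attention maps lie in (0, 1), the module can only re-weight features, emphasizing flame regions and suppressing background responses; it never amplifies activations beyond their input magnitude.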

We also compared our work with related studies. Both the SRoFF-Yolover and the FEDS-YOLOv11n [45] use attention mechanisms to improve the detection of obscured or concealed fire sources, but their focuses differ. The SRoFF-Yolover performs exceptionally well in severely obscured scenarios, such as winter nights; it employs the CBAM, which is better suited to low-visibility conditions (e.g., nighttime). The FEDS-YOLOv11n adopts the SEAM attention module, which improves the detection of obscured targets while keeping the model lightweight; its balance between accuracy and efficiency makes it more suitable for real-time detection. The YOLOGX [46] employs a GD mechanism and Focal-SIoU to address environmental sensitivity, achieving better performance in complex fire recognition scenarios. The SRoFF-Yolover is specifically optimized for early fire detection (small flames and occluded fires) and performs better in winter nighttime scenarios than in daytime detection, an improvement that stems from its seasonal embedding layer, which enhances temporal sensitivity. While the YOLOGX is more innovative in its loss function and feature fusion mechanism, the SRoFF-Yolover provides more refined data augmentation and temporal information embedding.
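How the seasonal embedding layer fuses season and time-of-day information with image features is not restated in this section. Purely as an illustration, one simple scheme is to look up a learnable vector for the season and one for day or night, and to add their sum to every spatial position of a feature map. The table sizes, the additive fusion, the random initialization standing in for training, and all names below are hypothetical.

```python
import numpy as np

SEASONS = ("spring", "summer", "autumn", "winter")
PERIODS = ("day", "night")


class TemporalEmbedding:
    """Hypothetical additive season/time-of-day embedding for (C, H, W) maps."""

    def __init__(self, channels, rng=None):
        rng = rng if rng is not None else np.random.default_rng()
        # One row per category; small random values stand in for learned ones.
        self.season_table = rng.normal(0.0, 0.02, size=(len(SEASONS), channels))
        self.period_table = rng.normal(0.0, 0.02, size=(len(PERIODS), channels))

    def __call__(self, features, season, period):
        vec = (self.season_table[SEASONS.index(season)]
               + self.period_table[PERIODS.index(period)])  # (C,)
        # Broadcast the same conditioning vector over all spatial positions.
        return features + vec[:, None, None]
```

An additive shift like this lets the downstream layers learn season-specific feature offsets (for example, compensating for denser summer vegetation) at negligible cost; multiplicative (FiLM-style) conditioning would be an alternative design.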

This study systematically analyzed the impact of data augmentation and temporal conditions on forest fire detection. Combining color, copy-paste, and geometric augmentation yields the best model performance, while nighttime and winter conditions favor detection. These findings provide a scientific basis for optimizing forest fire monitoring systems and a reference for similar detection tasks.

5. Conclusions

We addressed key challenges in detecting suspicious regions of forest fire, including small-target detection, real-time performance, and generalization (particularly seasonal adaptability), from two perspectives: dataset construction and model optimization. We developed the SRFFD to address the lack of diverse early-stage fire imagery, and we proposed the SRoFF-Yolover, an enhanced YOLOv8-based model for detecting suspicious regions of forest fire that aims to combine accurate detection with a lightweight design.

The initial dataset comprises early-stage forest fire videos and images gathered from online sources, supplemented by several public datasets. The SRFFD was generated by expanding the initial dataset with three data augmentation techniques: affine transformation, HSV random enhancement, and copy-paste augmentation. The SRoFF-Yolover, based on the YOLOv8, achieves its performance gains through four key modifications: an added embedding layer, a modified Backbone, a modified connection between the Backbone and Neck, and a modified Neck. Compared with the baseline model (the YOLOv8s), the SRoFF-Yolover achieves an 18.1% improvement in mAP@0.5, a 4.6% increase in FPS, a 2.6% reduction in GFLOPs, and a 3.2% decrease in #Params. Within the same season, the SRoFF-Yolover achieves a higher detection rate for suspicious regions of forest fire at night than during the day. Across seasons, it achieves the highest detection accuracy in winter and the lowest in autumn.

This study still has several limitations. First, the lack of dataset diversity restricts further improvements in model performance, particularly due to insufficient image samples under extreme lighting conditions (e.g., intense midday sunlight in the summer) and adverse weather (e.g., heavy rain, dense fog, and other low-visibility scenarios). Additionally, negative samples from easily confusable scenes (e.g., sunset vs. fire, welding sparks vs. flames) are underrepresented. Second, the SRoFF-Yolover’s computational complexity remains high, making efficient deployment on resource-constrained edge devices (e.g., mobile chips or embedded systems) challenging.

To address these issues, future work could explore generative AI techniques, such as Generative Adversarial Networks (GANs) or diffusion models, to synthesize diverse training samples and expand dataset coverage. Furthermore, model compression techniques, including network pruning, quantization, and knowledge distillation, could be used to optimize the model architecture, reduce computational overhead, and improve compatibility with edge computing devices. Finally, this study relies on visible-light images, and the model still produces some missed detections and false alarms in summer daytime scenarios; incorporating thermal infrared images for multimodal fusion may improve detection accuracy under such conditions.