Developing a Novel Method for Vegetation Mapping in Temperate Forests Using Airborne LiDAR and Hyperspectral Imaging

Abstract

1. Introduction

- (a) Can the synergistic integration of high-resolution HSI and LiDAR data effectively delineate and classify individual tree species within complex temperate mixed forests?

- (b) To what extent can this integrated approach improve the accuracy of vegetation community mapping compared to traditional methods in temperate mixed forests?

- (c) Can machine learning-based clustering reliably delineate community boundaries and identify dominant species for vegetation and forest type mapping in this complex ecosystem?

2. Materials and Methods

2.1. Study Site and Data Acquisition

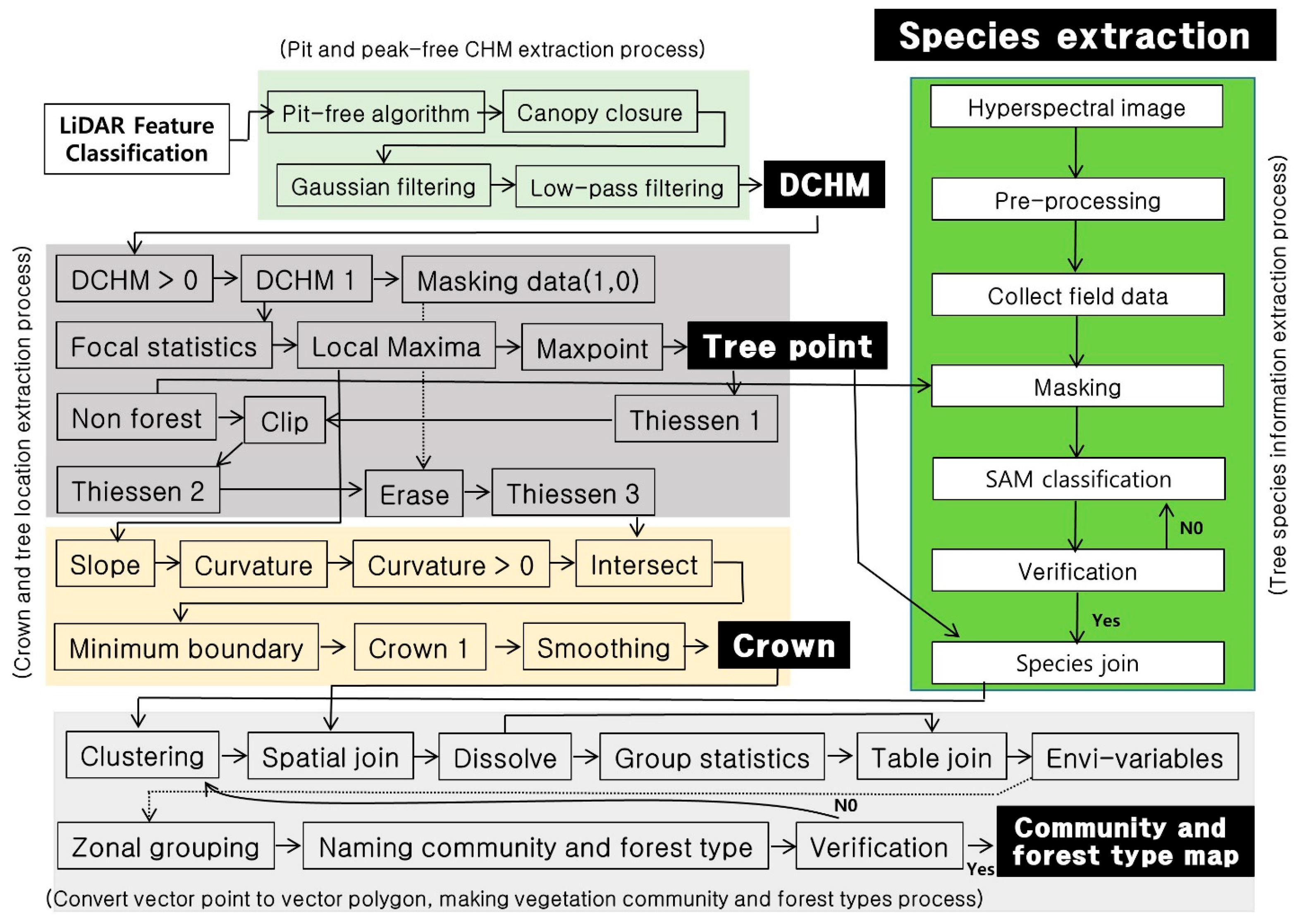

2.2. Digital Canopy Height Model Extraction

2.3. Crown Extraction with DCHM

2.4. Extracting Species Information

2.5. Physiognomic Community and Forest Type Mapping

2.6. Species Distribution

3. Results

3.1. Accuracy Assessment of Supervised Classification in Vegetation Mapping

3.2. Species Mapping and Classification Accuracy Using Multi-Sensor Clustering Techniques

3.3. Field Validation and Accuracy Assessment of Vegetation and Forest Mapping

4. Discussion

4.1. Methodological Contributions and Implementation Scope

4.2. Methodological Limitations and Future Improvements

4.3. Policy Applications and Strategic Relevance

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Supervised Code | Scientific Name |
|---|---|
| 1, 4, 6, 8, 10, 11, 14, 15, 16, 17, 19 | Quercus acutissima |
| 3 | Castanea crenata |
| 2, 9 | Pinus rigida |
| 5 | Prunus sargentii |
| 7 | Larix kaempferi |
| 12 | Larix kaempferi |
| 13 | Pinus koraiensis |
| 18 | Platanus occidentalis |
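The grouping in this table amounts to a many-to-one lookup from supervised class codes to species. The following is a minimal Python sketch of that lookup, built only from the rows as listed here. The names `CODE_ROWS`, `CODE_TO_SPECIES`, and `species_for_code` are illustrative assumptions and do not come from the source.

```python
# Rows transcribed from the table: (supervised class codes, scientific name).
CODE_ROWS = [
    ([1, 4, 6, 8, 10, 11, 14, 15, 16, 17, 19], "Quercus acutissima"),
    ([3], "Castanea crenata"),
    ([2, 9], "Pinus rigida"),
    ([5], "Prunus sargentii"),
    ([7], "Larix kaempferi"),
    ([12], "Larix kaempferi"),
    ([13], "Pinus koraiensis"),
    ([18], "Platanus occidentalis"),
]

# Flatten to a code -> species dictionary, refusing duplicate codes.
CODE_TO_SPECIES = {}
for codes, species in CODE_ROWS:
    for code in codes:
        if code in CODE_TO_SPECIES:
            raise ValueError(f"code {code} assigned twice")
        CODE_TO_SPECIES[code] = species


def species_for_code(code):
    """Return the species name for a supervised class code (hypothetical helper)."""
    return CODE_TO_SPECIES[code]


if __name__ == "__main__":
    # Every code from 1 to 19 should be assigned exactly once.
    print(sorted(CODE_TO_SPECIES) == list(range(1, 20)))
    print(species_for_code(9))
```

Keeping the rows as a list of pairs, rather than a dictionary keyed by species, preserves the two separate Larix kaempferi rows exactly as they appear in the table.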

| Scientific Name | Number of Individuals | Accuracy (%) |
|---|---|---|
| Castanea crenata | 1 | 100 |
| Larix kaempferi | 4 | 100 |
| Pinus densiflora | 3 | 100 |
| Pinus koraiensis | 2 | 100 |
| Pinus rigida | 7 | 100 |
| Platanus occidentalis | 1 | 100 |
| Prunus sargentii | 1 | 100 |
| Quercus acutissima | 17 | 100 |

| Scientific Name | Total Trees | Mapped Accuracy (%) | Errors | Misidentified Species |
|---|---|---|---|---|
| Castanea crenata | 2 | 100 | | |
| Larix kaempferi | 11 | 100 | | |
| Pinus densiflora | 12 | 92 | 1 | Platanus occidentalis |
| Pinus koraiensis | 6 | 100 | | |
| Pinus rigida | 22 | 95 | 1 | Pinus koraiensis |
| Platanus occidentalis | 1 | 100 | | |
| Prunus sargentii | 1 | 100 | | |
| Quercus acutissima | 35 | 100 | | |
| Total/Average | 90 | 98.4 (Average) | 2 | |
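The per-species percentages and the 98.4 average in this table can be reproduced from the tree counts and error counts alone. The sketch below, in Python, recomputes them and also shows the pooled accuracy over all 90 trees, which differs from the unweighted species average. The variable and function names are illustrative assumptions, not part of the source.

```python
# (species, total trees, errors) transcribed from the table.
ROWS = [
    ("Castanea crenata", 2, 0),
    ("Larix kaempferi", 11, 0),
    ("Pinus densiflora", 12, 1),
    ("Pinus koraiensis", 6, 0),
    ("Pinus rigida", 22, 1),
    ("Platanus occidentalis", 1, 0),
    ("Prunus sargentii", 1, 0),
    ("Quercus acutissima", 35, 0),
]


def accuracy(total, errors):
    """Percentage of correctly mapped trees."""
    return 100.0 * (total - errors) / total


# Per-species accuracy, rounded to whole percent as in the table.
per_species = {name: round(accuracy(n, e)) for name, n, e in ROWS}

# Unweighted mean of the per-species accuracies (each species counts once).
macro_average = round(sum(accuracy(n, e) for _, n, e in ROWS) / len(ROWS), 1)

# Pooled accuracy over all trees (each tree counts once).
total_trees = sum(n for _, n, _ in ROWS)
total_errors = sum(e for _, _, e in ROWS)
pooled = round(accuracy(total_trees, total_errors), 1)

if __name__ == "__main__":
    print(per_species["Pinus densiflora"], per_species["Pinus rigida"])
    print(total_trees, total_errors, macro_average, pooled)
```

The unweighted average gives 98.4, matching the Total/Average row, while pooling the 2 errors over 90 trees gives 97.8.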

| Contents | Total Count | Verification (%) | Notes |
|---|---|---|---|
| Tree point | 560,339 | - | - |
| Crown | 560,339 | - | - |
| Species | 8 | - | Castanea crenata, Larix kaempferi, etc. |
| 1st Verification | - | 100 | No errors in 90 points |
| 2nd Verification | - | 97.8 | 2 errors in 90 points |
| Boundary polygons | 3199 | - | - |
| Communities | 55 | - | Groups in 3199 forest patches |
| Forest types | 8 | - | Groups in 3199 forest patches |
| Verification of communities | - | 93.1 | 12 inconsistencies in 174 points |
| Verification of forest types | - | 97.7 | 4 inconsistencies in 174 points |
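Each verification percentage in this table follows from the number of checked points and the number of errors or inconsistencies given in the Notes column. As a minimal sketch under that reading, the Python function below reproduces the four reported values. The function name `verification_rate` is a hypothetical helper, not something defined in the source.

```python
def verification_rate(n_points, n_inconsistent):
    """Share of checked points that agree with the field survey, in percent."""
    if n_points <= 0:
        raise ValueError("n_points must be positive")
    return round(100.0 * (n_points - n_inconsistent) / n_points, 1)


# (label, checked points, errors or inconsistencies) from the Notes column.
CHECKS = [
    ("1st Verification", 90, 0),
    ("2nd Verification", 90, 2),
    ("Verification of communities", 174, 12),
    ("Verification of forest types", 174, 4),
]

if __name__ == "__main__":
    for label, n_points, n_bad in CHECKS:
        print(label, verification_rate(n_points, n_bad))
```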

| ID | Hyperspectral LiDAR-Derived Community | Field Survey Observation | Community Type | Dominant Species Accuracy | Notes |
|---|---|---|---|---|---|
| 1 | Quercus acutissima–Pinus rigida | Pinus densiflora–Castanea crenata | Mixed | Incorrect | C.M. |
| 2 | Quercus acutissima–Pinus rigida | Robinia pseudoacacia–Quercus acutissima | Mixed | Correct | C.I. |
| 3 | Quercus acutissima–Pinus rigida | Quercus acutissima–Castanea crenata | Mixed | Correct | C.I. |
| 4 | Quercus acutissima–Pinus rigida | Quercus acutissima–Castanea crenata | Mixed | Correct | C.I. |
| 5 | Quercus acutissima–Pinus rigida | Metasequoia glyptostroboides–Castanea crenata | Mixed | Incorrect | C.M. |
| 6 | Platanus occidentalis–Quercus acutissima | Pinus rigida | Single | Incorrect | C.M. |
| 7 | Quercus acutissima–Prunus sargentii | Castanea crenata | Single | Incorrect | C.M. |
| 8 | Quercus acutissima–Larix kaempferi | Castanea crenata | Single | Incorrect | C.M. |
| 9 | Quercus acutissima–Castanea crenata | Pinus densiflora | Mixed | Incorrect | C.M. |
| 10 | Quercus acutissima–Larix kaempferi | Quercus acutissima–Quercus serrata | Mixed | Correct | C.I. |
| 11 | Quercus acutissima–Platanus occidentalis | Larix kaempferi | Single | Incorrect | C.M. |
| 12 | Quercus acutissima–Pinus rigida | Quercus acutissima–Castanea crenata | Mixed | Correct | C.I. |

| ID | Hyperspectral LiDAR-Derived Community | Field Survey Observation |
|---|---|---|
| 1 | Platanus occidentalis | Pinus rigida |
| 2 | Quercus acutissima | Castanea crenata |
| 3 | Quercus acutissima | Pinus densiflora |
| 4 | Quercus acutissima | Larix kaempferi |

| Community | Forest Type | Area (Canopy) (m²) | Height (m) / Age (yr) | Density (%) / Diameter (cm) | Individuals / Species (count) |
|---|---|---|---|---|---|
| Quercus acutissima–Pinus rigida | Quercus acutissima | 318 (212) | 5.5/31.2 | 66.7/17.5 | 10/2 |
| Quercus acutissima | Quercus acutissima | 235 (156.6) | 11.5/30.6 | 66.5/17.2 | 8/2 |
| Quercus acutissima–Pinus rigida | Quercus acutissima | 22,208 (15,342.9) | 10.1/34.5 | 69.1/19.4 | 646/8 |
| Quercus acutissima | Quercus acutissima | 88 (51.9) | 8.7/32.3 | 59.2/18.2 | 3/1 |
| Quercus acutissima–Pinus densiflora | Quercus acutissima | 11,588 (7049) | 13.6/35.9 | 60.8/20.3 | 304/8 |
| Pinus rigida–Quercus acutissima | Pinus rigida | 91 (54) | 2.2/33 | 59.4/18.7 | 2/2 |
| Quercus acutissima–Pinus rigida | Quercus acutissima | 67,200 (38,249.4) | 17.2/36.8 | 56.9/20.7 | 1432/8 |
| Quercus acutissima–Pinus rigida | Quercus acutissima | 847 (357.3) | 9.4/34.5 | 42.2/19.5 | 17/5 |
| Quercus acutissima–Larix kaempferi | Quercus acutissima | 51,119 (37,710.5) | 16.7/36.2 | 73.8/20.4 | 1477/8 |
| Quercus acutissima–Pinus rigida | Quercus acutissima | 13,071 (10,220.5) | 19.1/37.1 | 78.2/20.9 | 391/8 |
| Quercus acutissima–Pinus densiflora | Quercus acutissima | 28,469 (21,396.7) | 17.1/38.3 | 75.2/21.6 | 836/8 |
| Quercus acutissima–Platanus occidentalis | Quercus acutissima | 177 (71.7) | 16.4/38.5 | 40.6/21.6 | 2/2 |
| Pinus koraiensis–Larix kaempferi | Pinus koraiensis | 154 (112.3) | 13.1/45.3 | 73.1/25.5 | 3/3 |
| Prunus sargentii | Prunus sargentii | 80 (12.6) | 5.6/27 | 15.8/15.3 | 1/1 |
| Quercus acutissima–Prunus sargentii | Quercus acutissima | 197 (109.1) | 6.5/28.3 | 55.2/15.9 | 7/3 |
| Quercus acutissima–Prunus sargentii | Quercus acutissima | 2034 (887.7) | 3.7/25.8 | 43.6/14.5 | 81/5 |
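The density (%) values in this table appear consistent with canopy area divided by patch area. This relationship is an inference from the numbers, not a definition given in the source. The Python sketch below checks it against every row; the names `PATCHES` and `canopy_density` are illustrative assumptions.

```python
# (patch area m2, canopy area m2, reported density %) transcribed row by row.
PATCHES = [
    (318, 212, 66.7),
    (235, 156.6, 66.5),
    (22208, 15342.9, 69.1),
    (88, 51.9, 59.2),
    (11588, 7049, 60.8),
    (91, 54, 59.4),
    (67200, 38249.4, 56.9),
    (847, 357.3, 42.2),
    (51119, 37710.5, 73.8),
    (13071, 10220.5, 78.2),
    (28469, 21396.7, 75.2),
    (177, 71.7, 40.6),
    (154, 112.3, 73.1),
    (80, 12.6, 15.8),
    (197, 109.1, 55.2),
    (2034, 887.7, 43.6),
]


def canopy_density(area_m2, canopy_m2):
    """Canopy cover as a percentage of patch area (assumed definition)."""
    return 100.0 * canopy_m2 / area_m2


# Absolute gap between the recomputed and the reported density for each row.
gaps = [abs(canopy_density(a, c) - d) for a, c, d in PATCHES]
max_gap = max(gaps)

if __name__ == "__main__":
    print(f"largest gap: {max_gap:.2f} percentage points")
```

Under this assumption the recomputed values stay within about a quarter of a percentage point of the reported ones, which would be consistent with the areas having been rounded before tabulation.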

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kim, N.S.; Lim, C.H. Developing a Novel Method for Vegetation Mapping in Temperate Forests Using Airborne LiDAR and Hyperspectral Imaging. Forests 2025, 16, 1158. https://doi.org/10.3390/f16071158
