Research on Protective Forest Change Detection in Aral City Based on Deep Learning

Abstract

1. Introduction

- (1) Explore the potential of GF-2 remote sensing data for monitoring protective forest change, focusing on its ability to capture subtle variations in high-resolution imagery. The aim is to exploit the high spatial resolution of GF-2 data to improve the detection of fine-scale changes in forest cover, which is critical for understanding the dynamics of protective forests in arid regions.

- (2) Evaluate the effectiveness of the STANet-based deep learning approach for detecting protective forest changes under complex environmental conditions.

- (3) Analyze the spatial characteristics of the protective forests in Aral City to provide decision-making support for regional ecological restoration and forest land management.

2. Research Area and Method

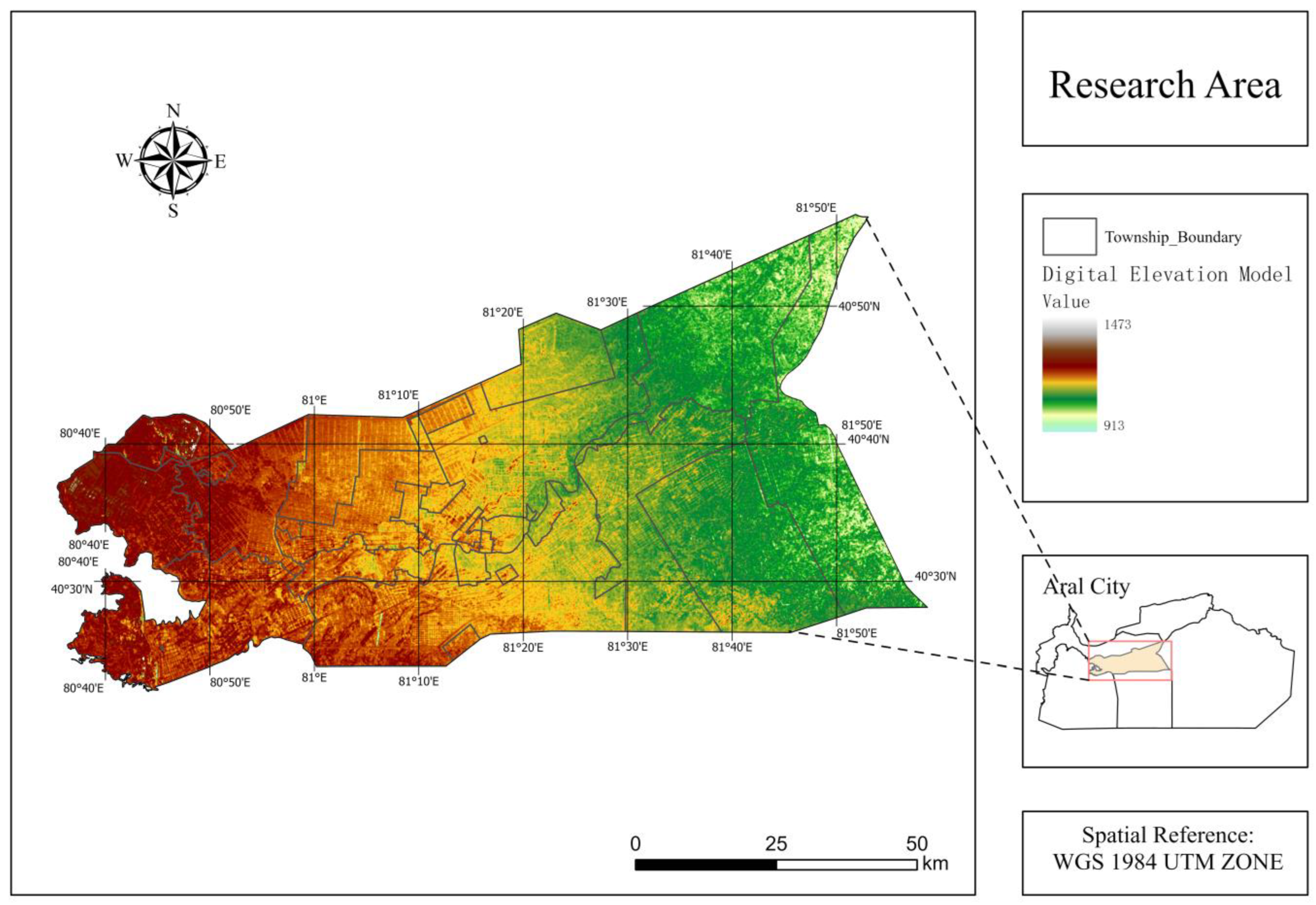

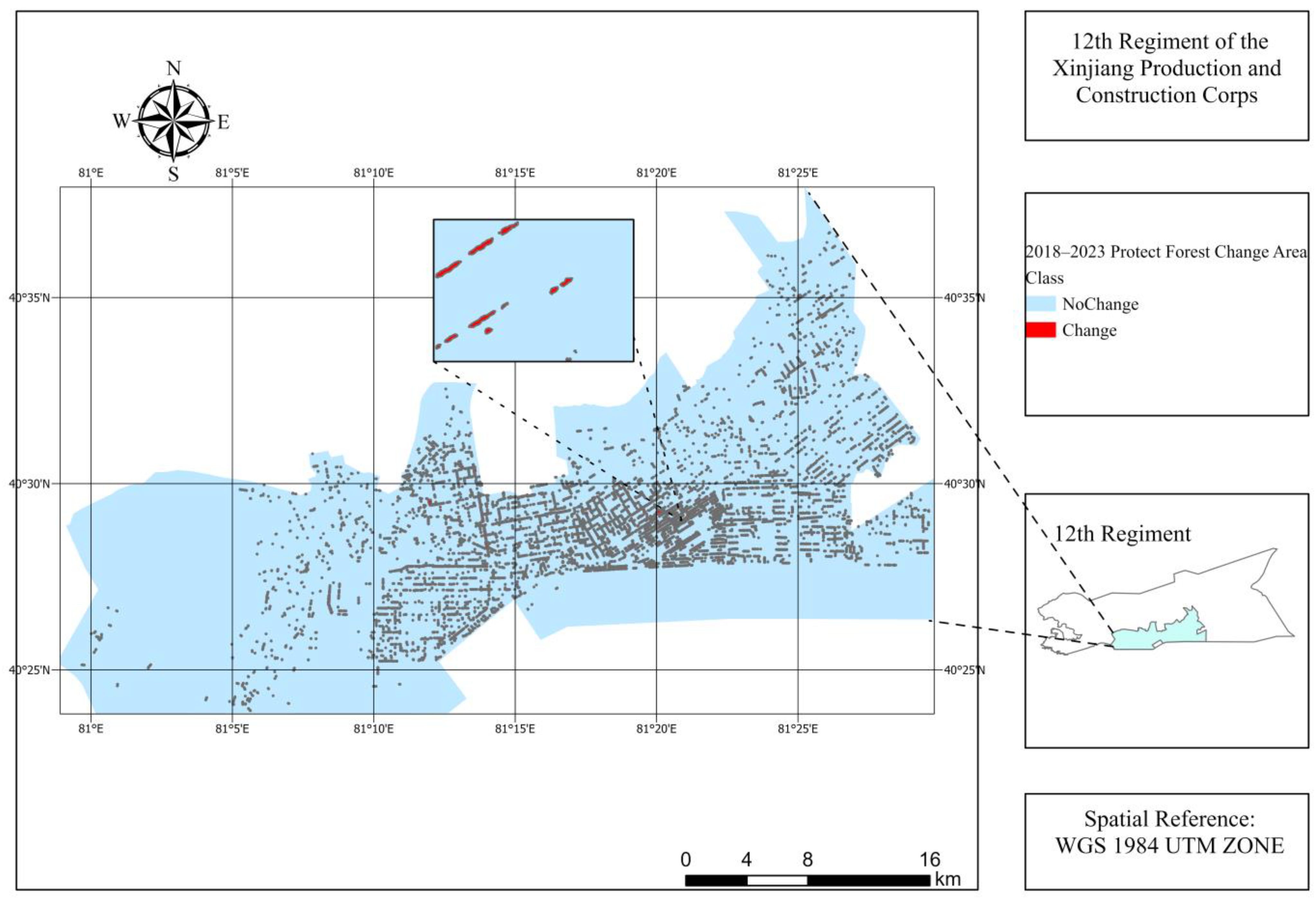

2.1. Research Area

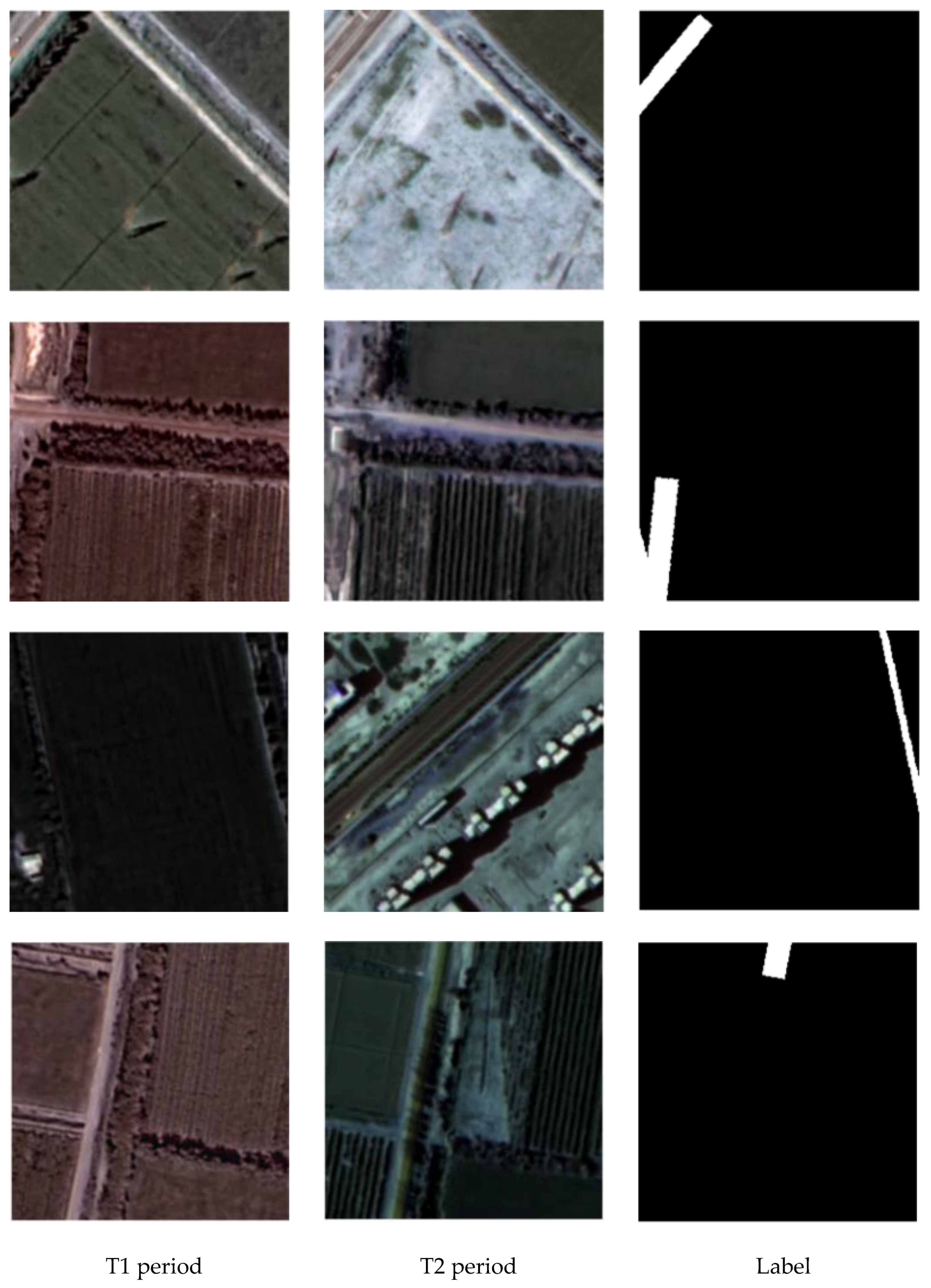

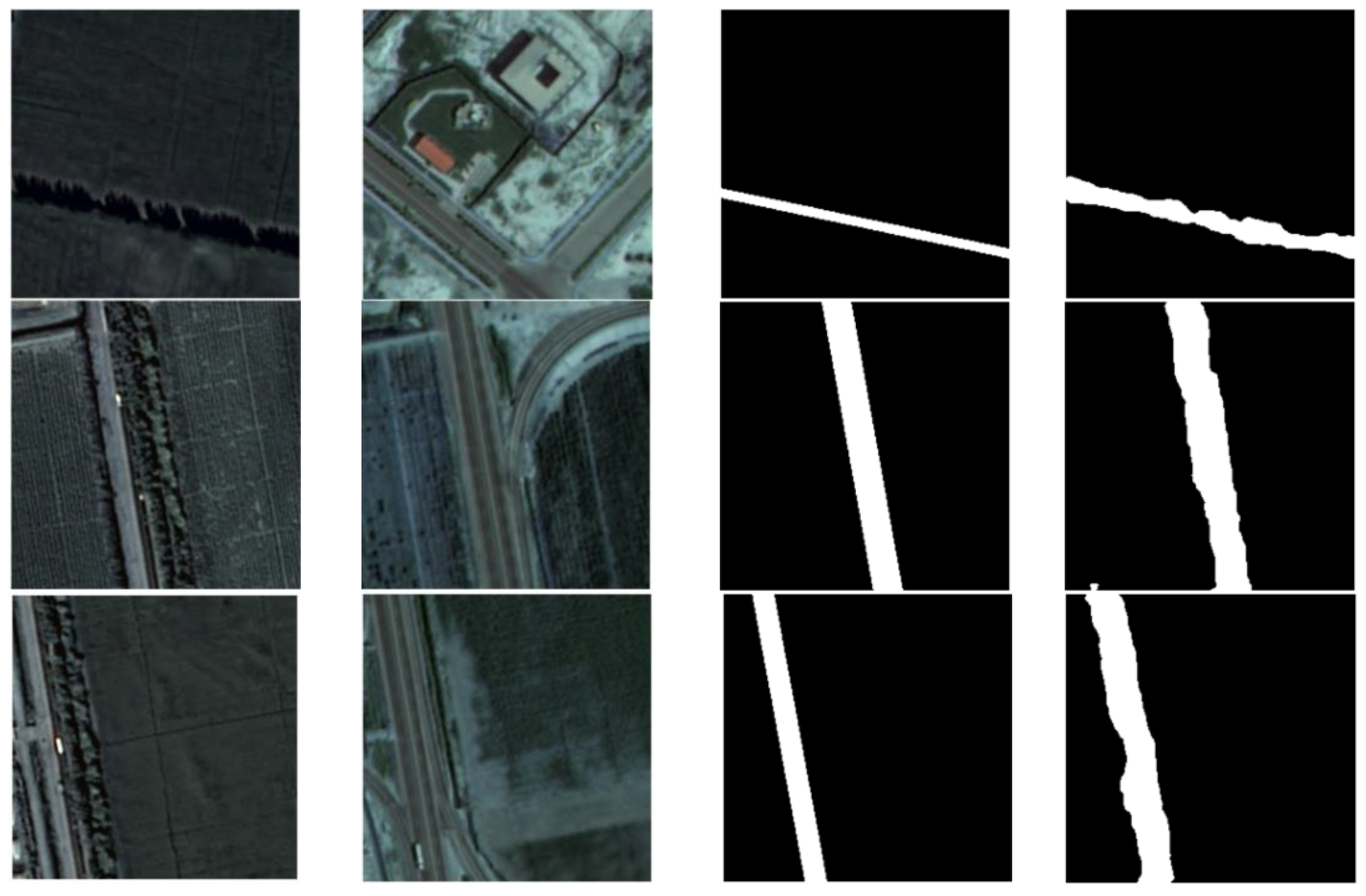

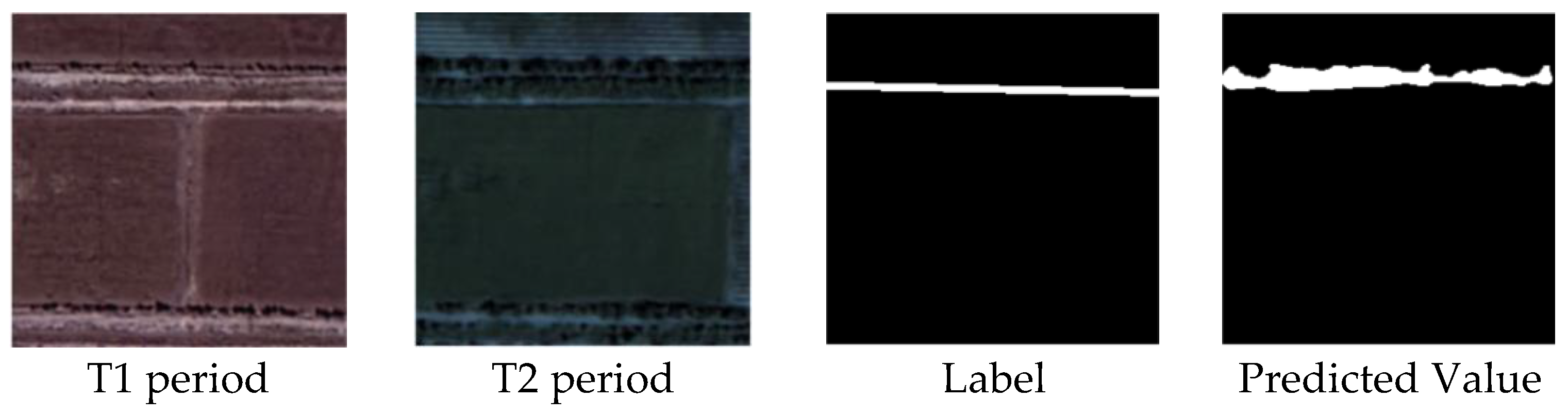

2.2. Dataset

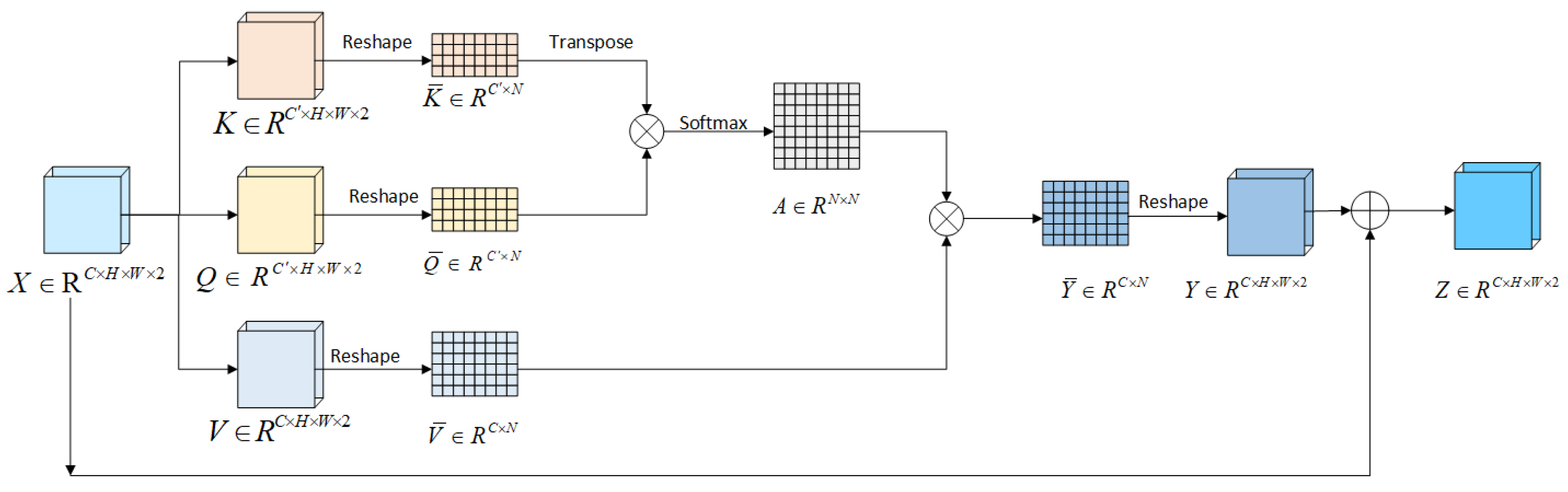

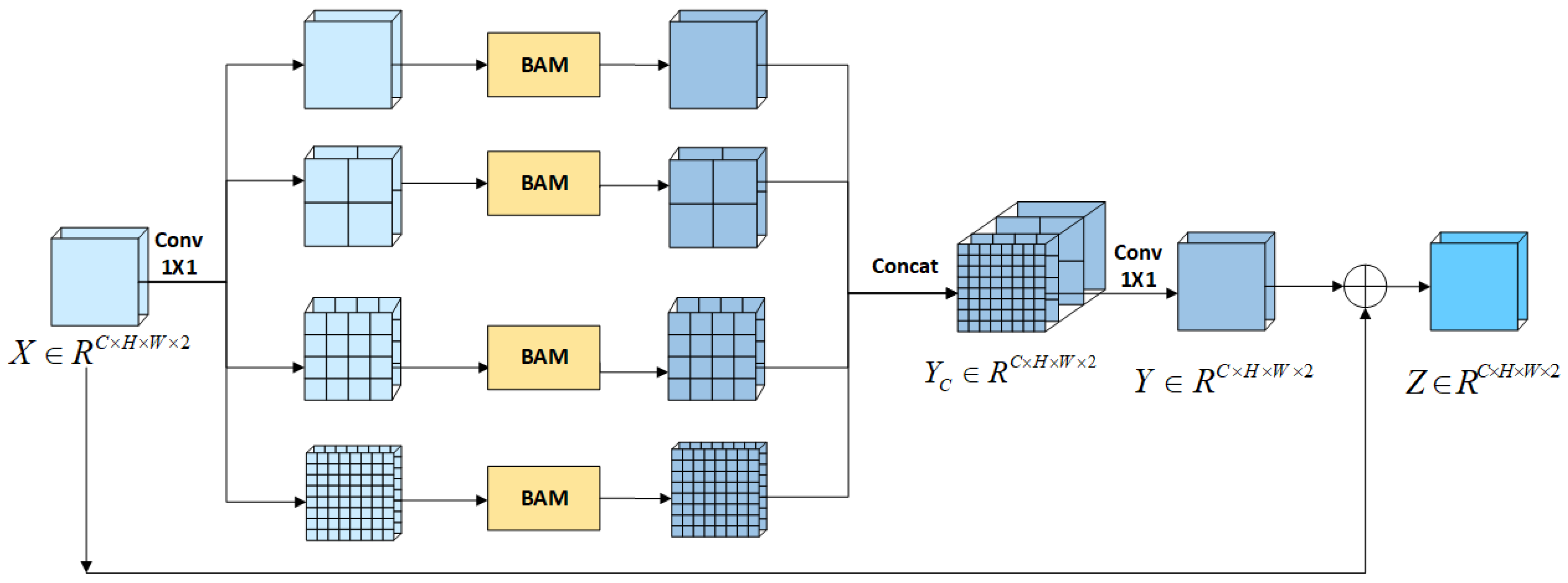

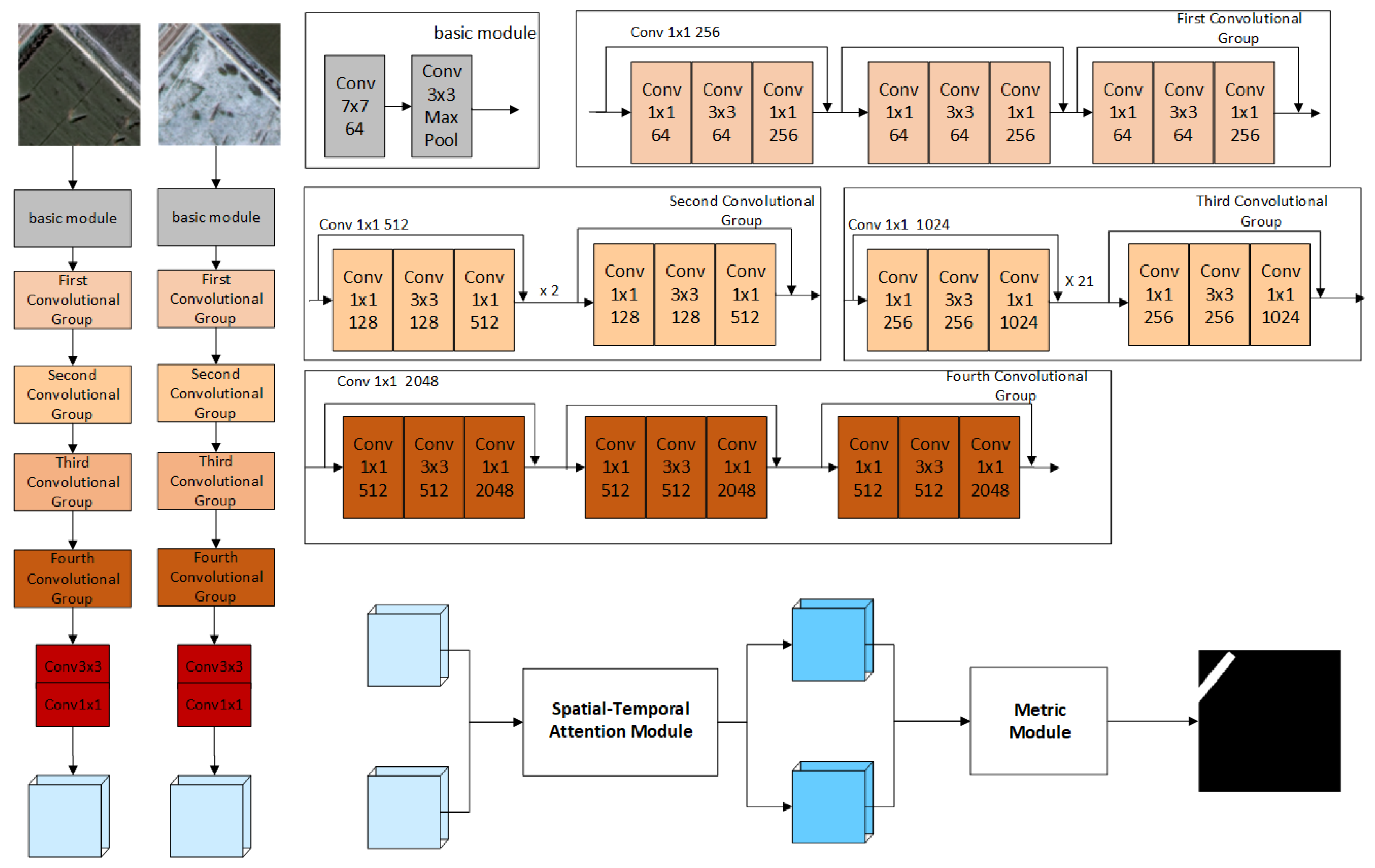

2.3. Deep Learning Model

2.4. Spatial Feature Analysis Method

2.4.1. Spatial Correlation Analysis

2.4.2. High–Low Clustering

3. Experimental Results and Analysis

3.1. Evaluation Metrics

3.2. Experimental Environment and Results

3.3. Spatial Feature Analysis of Change Detection

3.3.1. Spatial Correlation Analysis

3.3.2. High–Low Clustering

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Metric | No Change | Change |
|---|---|---|
| Precision | 0.995375 | 0.785219 |
| Recall | 0.939136 | 0.894787 |
| F1-score | 0.966438 | 0.836430 |
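The table reports per-class precision, recall and F1-score. Because F1 is the harmonic mean of precision and recall, the F1 row can be recomputed from the two rows above it as a consistency check. The minimal sketch below does that; the helper names (`f1_from_pr`, `per_class_metrics`) are hypothetical, and `per_class_metrics` assumes the reference and predicted change maps are available as label arrays. It is not the authors' evaluation code.

```python
import numpy as np


def f1_from_pr(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def per_class_metrics(y_true, y_pred, positive) -> tuple[float, float, float]:
    """Precision, recall and F1 for one class, treating `positive` as the target label."""
    t = np.asarray(y_true) == positive   # pixels that truly belong to the class
    p = np.asarray(y_pred) == positive   # pixels predicted as the class
    tp = int(np.sum(t & p))
    fp = int(np.sum(~t & p))
    fn = int(np.sum(t & ~p))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall, f1_from_pr(precision, recall)


# Recompute the F1 row of the table from its precision and recall values
for name, p, r in [("No Change", 0.995375, 0.939136), ("Change", 0.785219, 0.894787)]:
    print(f"{name}: F1 = {f1_from_pr(p, r):.6f}")
```

Computing the metrics separately for each class, rather than only for the Change class, shows why the two columns differ so much: the No Change class dominates the scene, so its precision stays high even when some changed pixels are missed.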

| OID | Value | Class | Red | Green | Blue | Count |
|---|---|---|---|---|---|---|
| 1 | 0 | No Change | 0 | 0 | 0 | 1,395,979,110 |
| 2 | 255 | Change | 255 | 255 | 255 | 2,773,666 |
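The attribute table lists pixel counts for the two classes of the change map (Value 0 = No Change, Value 255 = Change). Their ratio is a quick check on how much of the mapped area was flagged as change: with these counts, the Change class accounts for roughly 0.2% of all pixels. The sketch below assumes square pixels; `change_share` and its `pixel_size_m` parameter are hypothetical, since the ground sampling distance of the classified mosaic is not stated in this section.

```python
from typing import Optional, Tuple


def change_share(no_change_pixels: int, change_pixels: int,
                 pixel_size_m: Optional[float] = None) -> Tuple[int, float, Optional[float]]:
    """Return (total pixels, fraction of changed pixels, changed area in hectares or None)."""
    total = no_change_pixels + change_pixels
    share = change_pixels / total
    # Area is computed only when a pixel size is supplied (square pixels assumed; 1 ha = 10,000 m^2)
    area_ha = None if pixel_size_m is None else change_pixels * pixel_size_m ** 2 / 10_000
    return total, share, area_ha


# Counts taken from the attribute table (Value 0 and Value 255)
total, share, _ = change_share(1_395_979_110, 2_773_666)
print(f"{total:,} pixels, {share:.4%} classified as change")
```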

| Moran’s I Index | Z-Score | p-Value |
|---|---|---|
| 0.045686 | 11.809507 | 0.000000 |
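Global Moran’s I measures whether similar values sit next to each other: values toward +1 indicate clustering, values near the expectation −1/(n − 1) indicate a random pattern, and negative values indicate dispersion. The index reported here is small, but its Z-score of about 11.8 indicates that the observed clustering is very unlikely to be random. The minimal NumPy sketch below assumes a binary change raster (0 = no change, 1 = change), binary rook-contiguity weights, and a Z-score under the normality assumption. The spatial units, weighting scheme and variance assumption used in the study are not stated in this section, so the sketch is not expected to reproduce the tabulated values. The dense n × n weight matrix only suits small test grids, and a constant raster (zero variance) is undefined.

```python
import numpy as np


def rook_weights(rows: int, cols: int) -> np.ndarray:
    """Binary rook-contiguity weights (shared edge = neighbour) for a rows x cols raster."""
    n = rows * cols
    w = np.zeros((n, n))
    for r in range(rows):
        for c in range(cols):
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                rr, cc = r + dr, c + dc
                if 0 <= rr < rows and 0 <= cc < cols:
                    w[r * cols + c, rr * cols + cc] = 1.0
    return w


def global_morans_i(grid: np.ndarray) -> tuple[float, float]:
    """Return (Moran's I, z-score under the normality assumption) for a 2-D raster."""
    x = np.asarray(grid, dtype=float).ravel()
    n = x.size
    w = rook_weights(*np.shape(grid))
    z = x - x.mean()                                  # deviations from the mean
    s0 = w.sum()                                      # sum of all weights
    i_stat = (n / s0) * (z @ w @ z) / (z @ z)
    # Moments of I under the normality assumption
    s1 = 0.5 * ((w + w.T) ** 2).sum()
    s2 = ((w.sum(axis=0) + w.sum(axis=1)) ** 2).sum()
    expected = -1.0 / (n - 1)
    variance = (n**2 * s1 - n * s2 + 3 * s0**2) / ((n**2 - 1) * s0**2) - expected**2
    return float(i_stat), float((i_stat - expected) / np.sqrt(variance))


checker = np.indices((4, 4)).sum(axis=0) % 2   # perfectly dispersed pattern
halves = np.zeros((4, 4))
halves[:, 2:] = 1                               # two homogeneous blocks: clustered
print(global_morans_i(checker))
print(global_morans_i(halves))
```

The checkerboard gives I = −1 (every neighbour differs), while the two-block raster gives a positive I and a positive Z-score, mirroring the sign of the result in the table.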

| General G | Z-Score | p-Value |
|---|---|---|
| 0.000145 | 7.900724 | 0.000000 |
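The table reports the Getis–Ord General G statistic, which divides the weighted sum of products of neighbouring values by the sum of products over all pairs of locations. An observed G above its expectation S0/(n(n − 1)) with a significant positive Z-score, as reported here, is conventionally read as clustering of high values. The sketch below computes observed and expected G for non-negative values and a user-supplied weight matrix. It omits the Z-score, whose variance formula is considerably longer, and the toy line layout (`path_weights`) and values are hypothetical, not the study's data.

```python
import numpy as np


def general_g(values, weights) -> tuple[float, float]:
    """Return (observed General G, expected G under no clustering) for non-negative values."""
    x = np.asarray(values, dtype=float)
    w = np.asarray(weights, dtype=float).copy()
    np.fill_diagonal(w, 0.0)                      # G excludes i == j pairs
    n = x.size
    numerator = x @ w @ x                         # sum_i sum_{j != i} w_ij x_i x_j
    denominator = x.sum() ** 2 - (x ** 2).sum()   # sum_i sum_{j != i} x_i x_j
    expected = w.sum() / (n * (n - 1))            # E[G] = S0 / (n (n - 1))
    return float(numerator / denominator), float(expected)


def path_weights(n: int) -> np.ndarray:
    """Binary adjacency for n locations on a line (hypothetical toy layout)."""
    w = np.zeros((n, n))
    idx = np.arange(n - 1)
    w[idx, idx + 1] = w[idx + 1, idx] = 1.0
    return w


w = path_weights(4)
print(general_g([10, 10, 1, 1], w))   # high values adjacent: G above its expectation
print(general_g([10, 1, 10, 1], w))   # high values separated: G below its expectation
```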


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, P.; Yin, X.; Ding, M.; Pan, S. Research on Protective Forest Change Detection in Aral City Based on Deep Learning. Forests 2025, 16, 775. https://doi.org/10.3390/f16050775

Liu P, Yin X, Ding M, Pan S. Research on Protective Forest Change Detection in Aral City Based on Deep Learning. Forests. 2025; 16(5):775. https://doi.org/10.3390/f16050775

Chicago/Turabian Style: Liu, Pengshuai, Xiaojun Yin, Mingrui Ding, and Shaoliang Pan. 2025. "Research on Protective Forest Change Detection in Aral City Based on Deep Learning" Forests 16, no. 5: 775. https://doi.org/10.3390/f16050775

APA Style: Liu, P., Yin, X., Ding, M., & Pan, S. (2025). Research on Protective Forest Change Detection in Aral City Based on Deep Learning. Forests, 16(5), 775. https://doi.org/10.3390/f16050775