Preliminary Machine Learning-Based Classification of Ink Disease in Chestnut Orchards Using High-Resolution Multispectral Imagery from Unmanned Aerial Vehicles: A Comparison of Vegetation Indices and Classifiers

Abstract

1. Introduction

- Physiological sensitivity to stress symptoms: Given that Phytophthora spp. infection often manifests initially through subtle changes in chlorophyll content, leaf structure, and canopy density, we selected indices known for their ability to detect vegetation stress and physiological deterioration [40,41];

- Diversity of spectral characteristics and mathematical formulations: The indices span a range of spectral regions (visible, near-infrared, and red-edge) and incorporate various correction mechanisms (e.g., for soil background or atmospheric effects), allowing us to test and compare the relative performance of VIs under different spectral sensitivities;

- NDVI remains the most widely used VI for assessing general vegetation vigor and health [42]; GnDVI replaces the red band with the green band, improving sensitivity to chlorophyll content [43]; RdNDVI leverages the red-edge band, which has shown superior performance in detecting subtle physiological stress responses in plant canopies [43,44]; MCARI emphasizes chlorophyll absorption and is particularly well-suited for detecting changes in leaf pigment concentration [45]; SAVI and EVI/EVI2 offer soil and atmospheric correction capabilities that help minimize non-canopy signal contamination, a particularly important property when ground exposure varies due to canopy thinning [46,47,48]; ExGreenRed was included to evaluate the performance of simpler spectral combinations in vegetation detection.
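Most of the indices listed above share one algebraic form, so a short sketch may help make the band substitutions concrete. The Python snippet below uses the commonly published formulations (NDVI, its green and red-edge variants, SAVI with L = 0.5, and EVI2). The function names, the `soil_factor` argument, and the sample reflectance values are illustrative assumptions, and the exact formulas and coefficients used in this study may differ.

```python
import numpy as np


def normalized_difference(a, b):
    """Return (a - b) / (a + b), with NaN where the denominator is zero."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    denom = a + b
    out = np.full(denom.shape, np.nan)
    np.divide(a - b, denom, out=out, where=denom != 0)
    return out


def vegetation_indices(g560, r668, re717, nir840, soil_factor=0.5):
    """Compute a few per-pixel VIs from reflectance arrays (values in 0..1).

    Assumption: standard literature formulas, not necessarily the exact
    variants applied by the authors.
    """
    r = np.asarray(r668, dtype=float)
    nir = np.asarray(nir840, dtype=float)
    return {
        "NDVI": normalized_difference(nir, r),          # red vs. near-infrared
        "GnDVI": normalized_difference(nir, g560),      # green replaces red
        "RdNDVI": normalized_difference(nir, re717),    # red edge replaces red
        "SAVI": (1 + soil_factor) * (nir - r) / (nir + r + soil_factor),
        "EVI2": 2.5 * (nir - r) / (nir + 2.4 * r + 1.0),
    }


# Hypothetical reflectances for a dense canopy pixel and a sparse canopy pixel.
demo = vegetation_indices(
    g560=[0.08, 0.12], r668=[0.04, 0.15], re717=[0.20, 0.25], nir840=[0.50, 0.30]
)
for name, values in demo.items():
    print(name, np.round(values, 3))
```

The helper masks zero denominators with NaN so that empty or shadowed pixels do not raise division warnings when the same function is applied to whole rasters.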

2. Materials and Methods

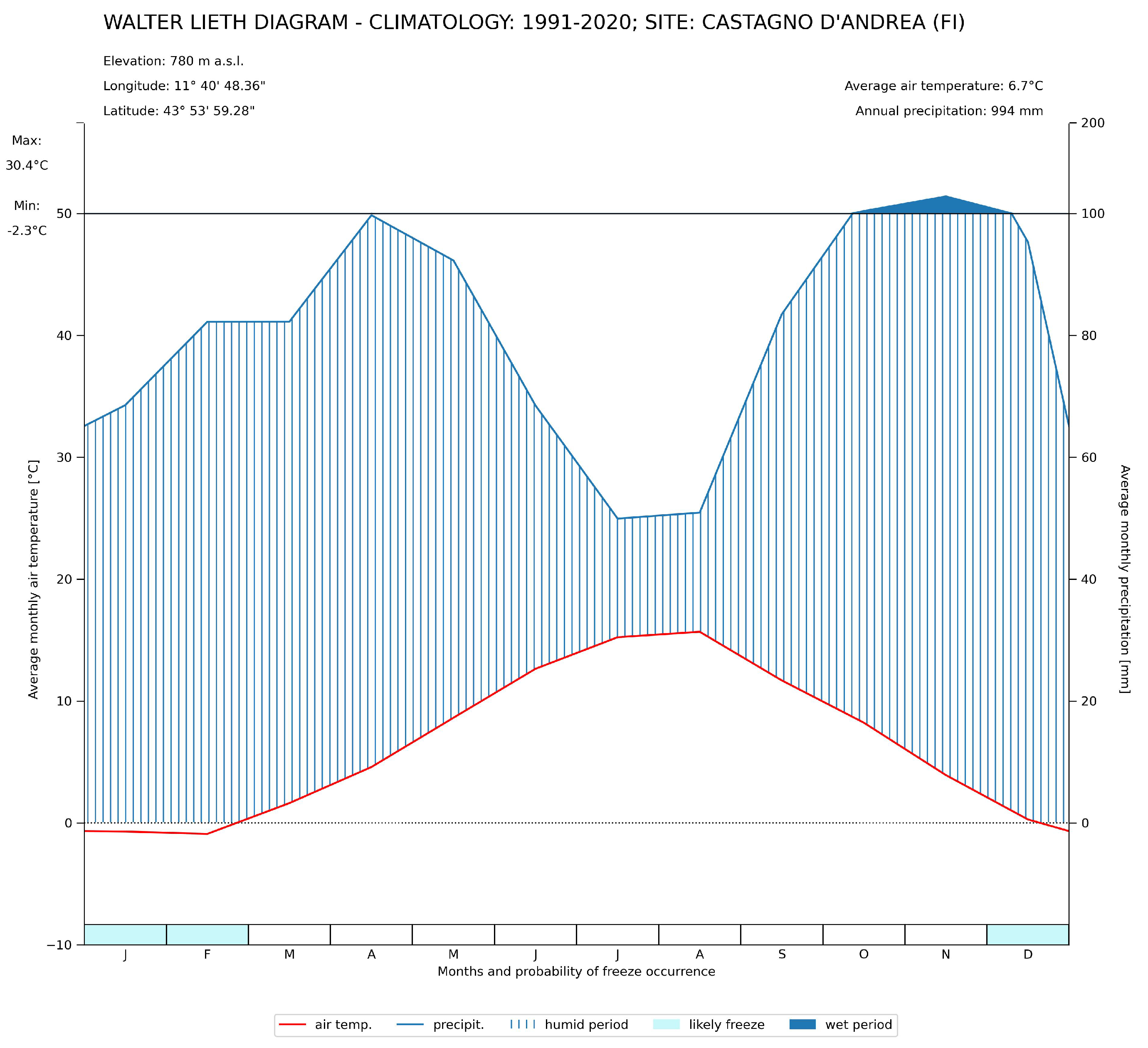

2.1. Site Description, Dendrometric Measurement, and Health Evaluation of Trees

2.2. Surveyed Area and Data Acquisition

2.3. Image Processing, Maps Production, and Extraction of VIs

2.4. Pixel Extraction

2.5. Data Analysis and Modeling the Classifiers

- Support Vector Machine (SVM) Classifier

- The SVM classifier is a machine learning algorithm widely employed for both classification and regression tasks. It operates by finding the hyperplane that best separates data points belonging to different classes in the feature space. Its main strength lies in its ability to handle both linear and non-linear relationships in the data, making it a versatile choice for a wide range of applications, including image classification [84,85,86]. In this work, the C-Support Vector Classification algorithm based on LIBSVM [87] and integrated into the Scikit-Learn Python module [88] was used. The combination of hyperparameters used by the GridSearchCV function for this classifier was as follows: Kernel type [rbf, linear]; Parameter C [1, 10, 100, 1000]; Parameter gamma [0.0003, 0.0004].
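As a rough illustration of the grid search described above, the sketch below passes the stated kernel, C, and gamma values to scikit-learn's `GridSearchCV` around `SVC`. The synthetic one-feature data set, the five-fold cross-validation, and the accuracy scoring are assumptions made only so the example runs; they do not reproduce the study's pixel data or validation scheme.

```python
import numpy as np
from sklearn.model_selection import GridSearchCV
from sklearn.svm import SVC

# Synthetic stand-in for per-pixel VI values (assumption, not study data):
# class 0 = asymptomatic (higher VI), class 1 = symptomatic (lower VI).
rng = np.random.default_rng(0)
X = np.concatenate(
    [rng.normal(0.85, 0.03, 40), rng.normal(0.55, 0.05, 40)]
).reshape(-1, 1)
y = np.array([0] * 40 + [1] * 40)

# Hyperparameter grid as listed in the text.
param_grid = {
    "kernel": ["rbf", "linear"],
    "C": [1, 10, 100, 1000],
    "gamma": [0.0003, 0.0004],  # ignored by the linear kernel
}

search = GridSearchCV(SVC(), param_grid, cv=5, scoring="accuracy")
search.fit(X, y)

print("best parameters:", search.best_params_)
print("mean CV accuracy:", round(search.best_score_, 3))
```

Because gamma has no effect on the linear kernel, half of the linear-kernel candidates are duplicates; this is harmless but means the effective grid is smaller than its nominal size.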

- Gaussian Naive Bayes (GNB) Classifier

- The GNB classifier is a probabilistic machine learning algorithm well suited to classification tasks in which an input data point must be assigned to one of several predefined classes based on its features. It uses conditional probability to make predictions, estimating the probability of each class given the observed features of an input. Despite its ‘naive’ assumption of independence among features, which is often unrealistic in real-world scenarios, the GNB classifier has proven effective in various applications, especially with continuous, normally distributed data, for which the Gaussian distribution simplifies the estimation of the probability density function. In this context, the assumption that the features within each class are normally distributed allows for efficient parameter estimation with limited training data. The classifier calculates the class posterior probabilities for a given input and assigns the data point to the class with the highest probability [89]. A multi-parameter grid search was not applied to this classifier because it has no kernel or regularization hyperparameters to tune; instead, we varied the portion of the largest variance of all features that is added to the variances for calculation stability over 100 logarithmically spaced (base 10) values from 10^0 to 10^−9. Hence, within the selected algorithms, we performed systematic parameter optimization to ensure that each classifier uses the best set of hyperparameters.
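The variance-smoothing sweep described above can be sketched with scikit-learn's `GaussianNB`, whose `var_smoothing` parameter is the portion of the largest feature variance added to all variances. The snippet assumes the 100 values were generated with `np.logspace(0, -9, num=100)`; the synthetic data and the five-fold cross-validation are placeholders rather than the study's setup.

```python
import numpy as np
from sklearn.model_selection import GridSearchCV
from sklearn.naive_bayes import GaussianNB

# Synthetic one-feature data standing in for VI values (assumption).
rng = np.random.default_rng(0)
X = np.concatenate(
    [rng.normal(0.85, 0.03, 40), rng.normal(0.55, 0.05, 40)]
).reshape(-1, 1)
y = np.array([0] * 40 + [1] * 40)

# 100 base-10 log-spaced values from 10^0 down to 10^-9.
smoothing_grid = np.logspace(0, -9, num=100)

search = GridSearchCV(GaussianNB(), {"var_smoothing": smoothing_grid}, cv=5)
search.fit(X, y)

print("best var_smoothing:", search.best_params_["var_smoothing"])
print("mean CV accuracy:", round(search.best_score_, 3))
```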

- Logistic (Log) Classifier

- The logistic classifier is a statistical method widely used in machine learning and statistical modeling for binary classification problems. It is particularly well-suited for scenarios where the dependent variable is categorical and binary, meaning it has only two possible outcomes. The logistic classifier is employed to predict the probability that an instance belongs to a particular class.

- Unlike linear regression, which predicts continuous outcomes, logistic regression models the probability of an event occurring.

- The logistic model utilizes the logistic function [90], also known as the sigmoid function, to map any real-valued number into a range between 0 and 1. The combination of hyperparameters used by the GridSearchCV function for this classifier was as follows: Solver type [newton-cg, lbfgs, liblinear]; Parameter penalty [none, l1, l2, elasticnet]; Parameter C [1, 10, 100, 1000]; Parameter class_weight [balanced].
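To make the mapping from a real-valued score to a probability concrete, the following minimal sketch implements the logistic (sigmoid) function and applies it to a linear score of a single feature. The weight and bias values are hypothetical numbers chosen for illustration, not coefficients fitted in this study.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def predict_probability(x: float, weight: float, bias: float) -> float:
    """Probability of the positive class for a one-feature logistic model."""
    return sigmoid(weight * x + bias)


# Hypothetical coefficients: lower VI values push the probability of the
# symptomatic class towards 1, higher values towards 0.
WEIGHT, BIAS = -20.0, 14.0
for vi in (0.55, 0.70, 0.85):
    print(vi, round(predict_probability(vi, WEIGHT, BIAS), 3))
```

The linear term `weight * x + bias` is the log-odds of the positive class, which is why the decision boundary of the model is linear in the feature space.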

2.6. Training and Testing Data

2.7. Model Evaluation

- True positive (TP): represents the number of symptomatic trees correctly classified as symptomatic;

- True negative (TN): represents the number of asymptomatic trees correctly classified as asymptomatic;

- False positive (FP): represents the number of asymptomatic trees incorrectly classified as symptomatic;

- False negative (FN): represents the number of symptomatic trees incorrectly classified as asymptomatic.

- Accuracy (ACC): represents the proportion of correctly classified instances to the total number of classifications

- Precision (P): represents the ratio of correctly predicted positive instances to the total predicted positive instances

- Recall or detection rate (R): represents the ratio of correctly predicted positive instances to the overall number of actual positive instances

- F1Score (F1s): represents the harmonic mean of precision and recall values and can be used as a single measure of performance of the test for the positive class
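The four metrics follow directly from the confusion-matrix counts, as the short sketch below shows. The function name and the example counts are illustrative assumptions and are not results from this study; F1 is computed as the harmonic mean of precision and recall.

```python
def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> dict:
    """Accuracy, precision, recall, and F1 from confusion-matrix counts."""
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total
    # Guard against empty denominators (no predicted or no actual positives).
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    return {"ACC": accuracy, "P": precision, "R": recall, "F1s": f1}


# Hypothetical counts: 9 symptomatic trees detected, 1 missed,
# 10 asymptomatic trees correctly identified, no false alarms.
print(classification_metrics(tp=9, tn=10, fp=0, fn=1))
```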

3. Results

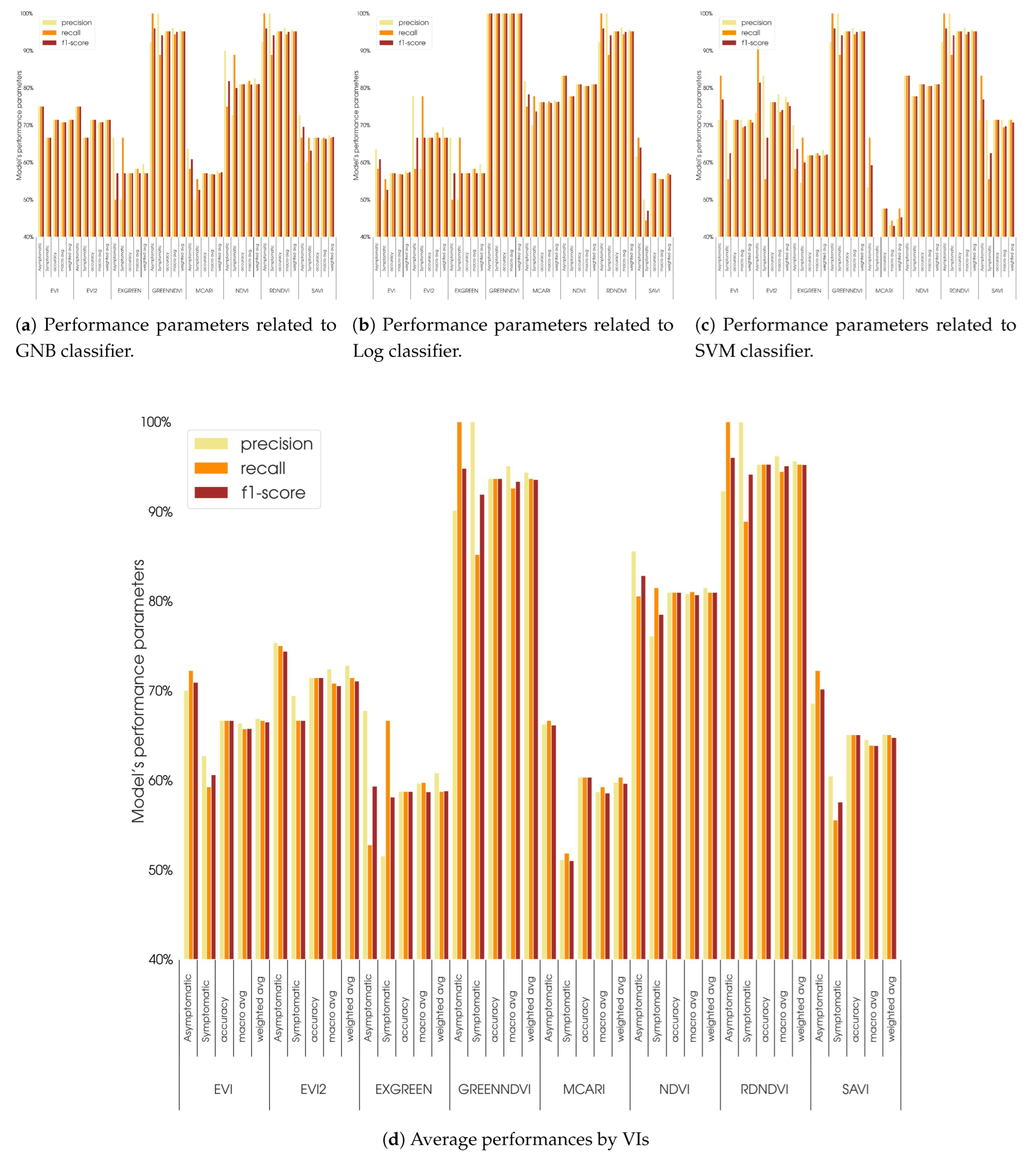

Classification Approach Performances

4. Discussion

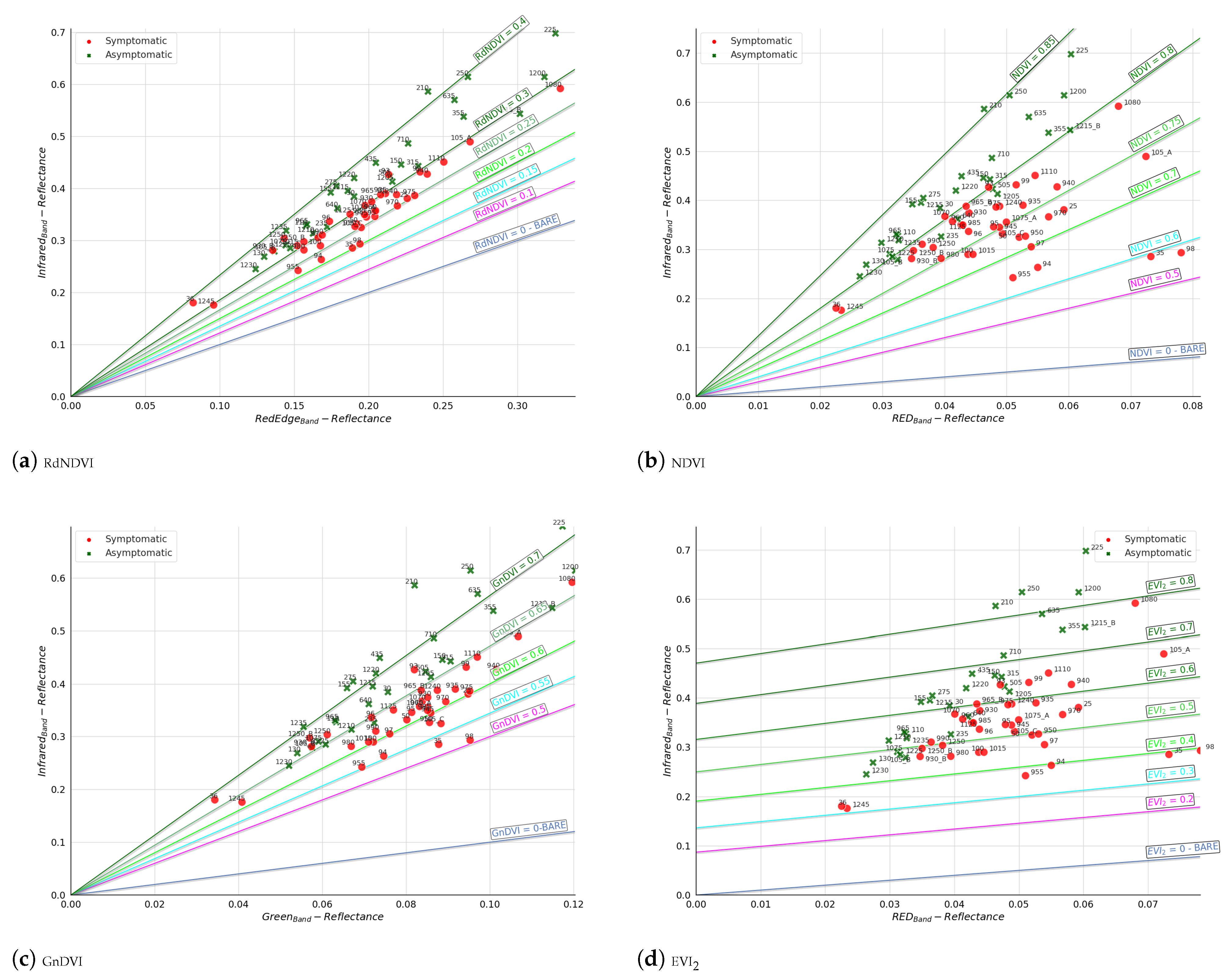

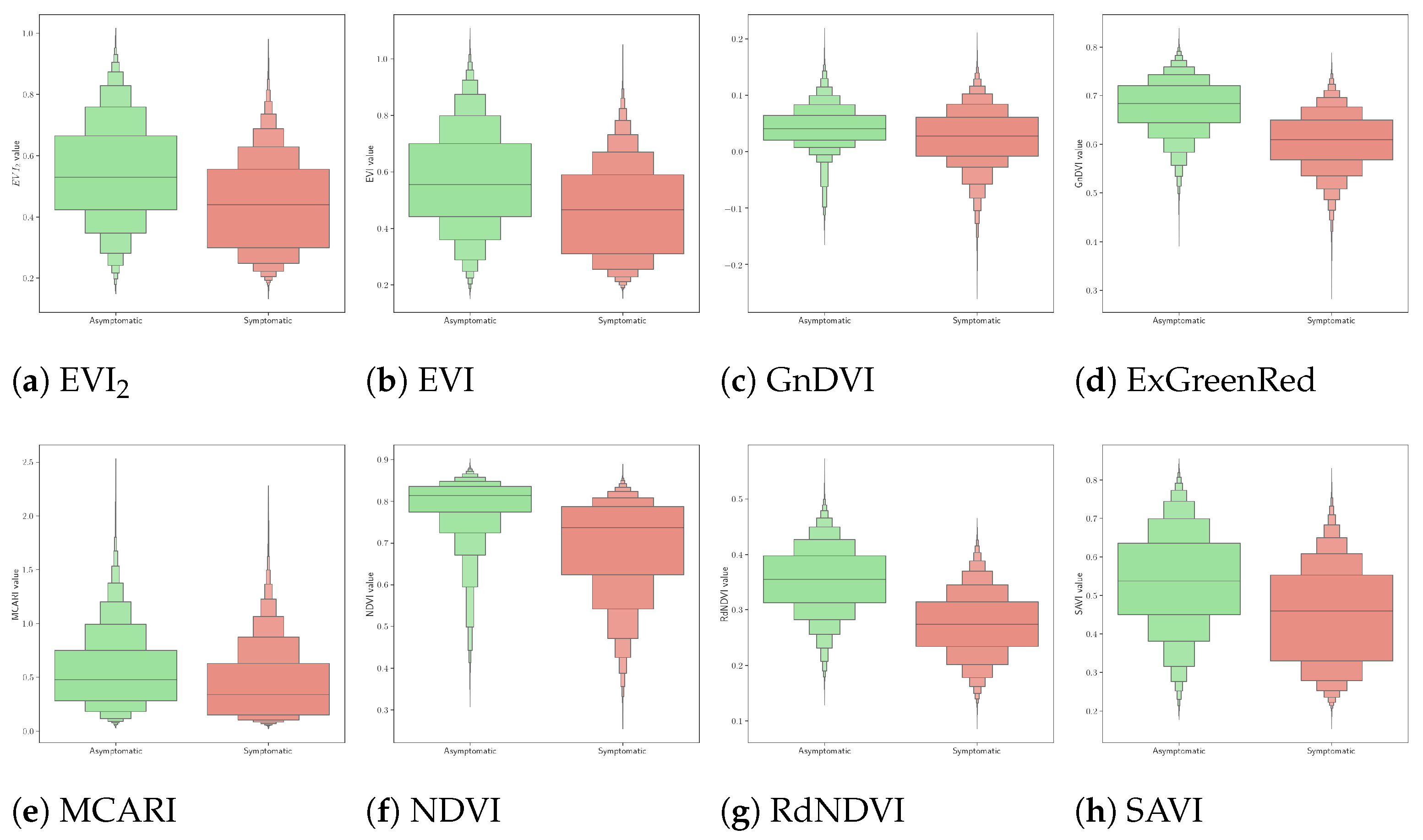

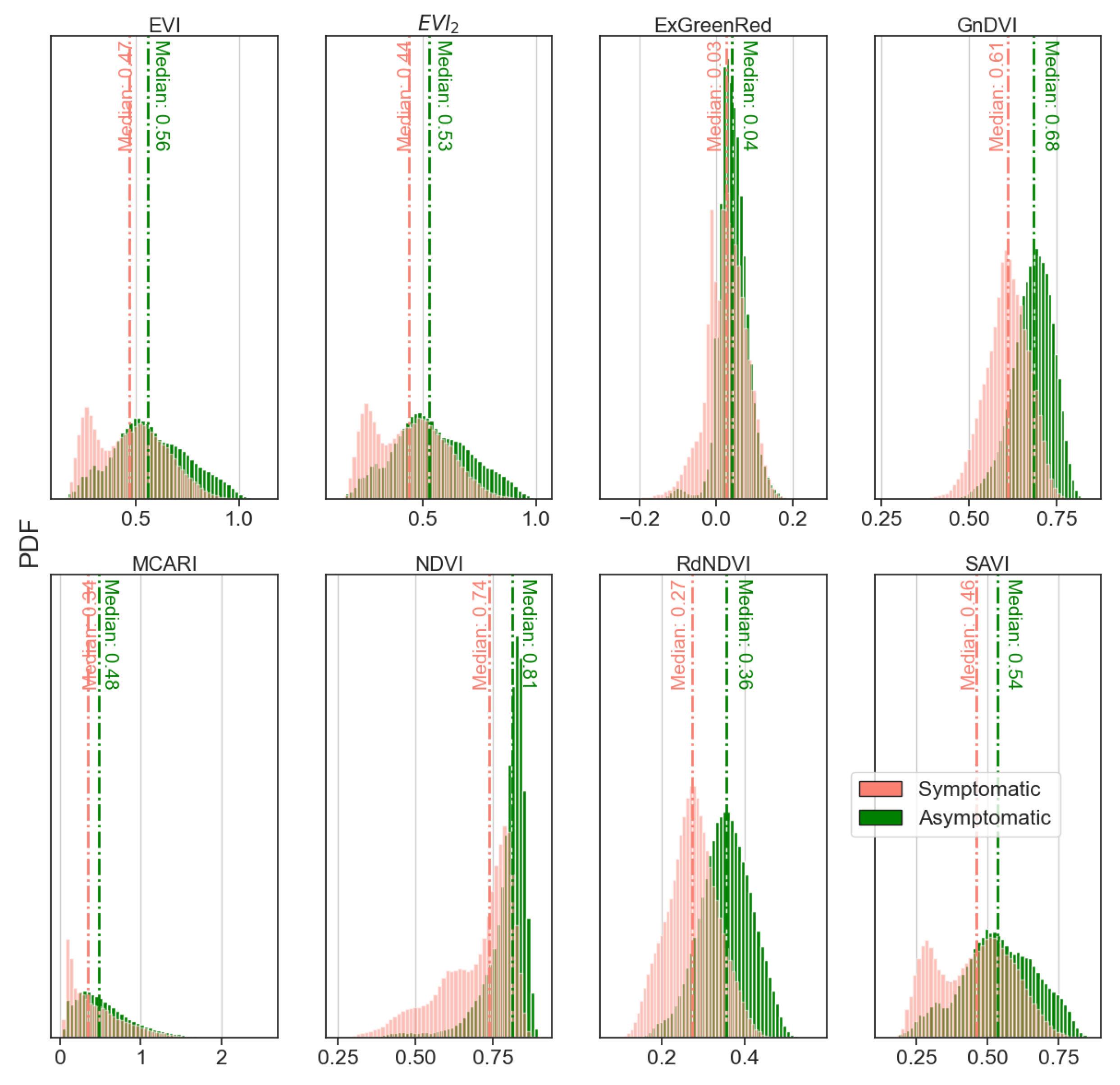

- The VIs used (e.g., GnDVI and RdNDVI) demonstrated strong discriminatory power between symptomatic and asymptomatic classes. As shown in our isocline and distribution plots (Figure 6), these VIs produced relatively linearly separable or well-clustered data distributions;

- GNB assumes feature independence and Gaussian-distributed data, which is not strictly true for most real-world datasets. However, in our case, the VI values—especially at crown level—approximated unimodal and symmetric distributions, fulfilling GNB’s assumptions reasonably well;

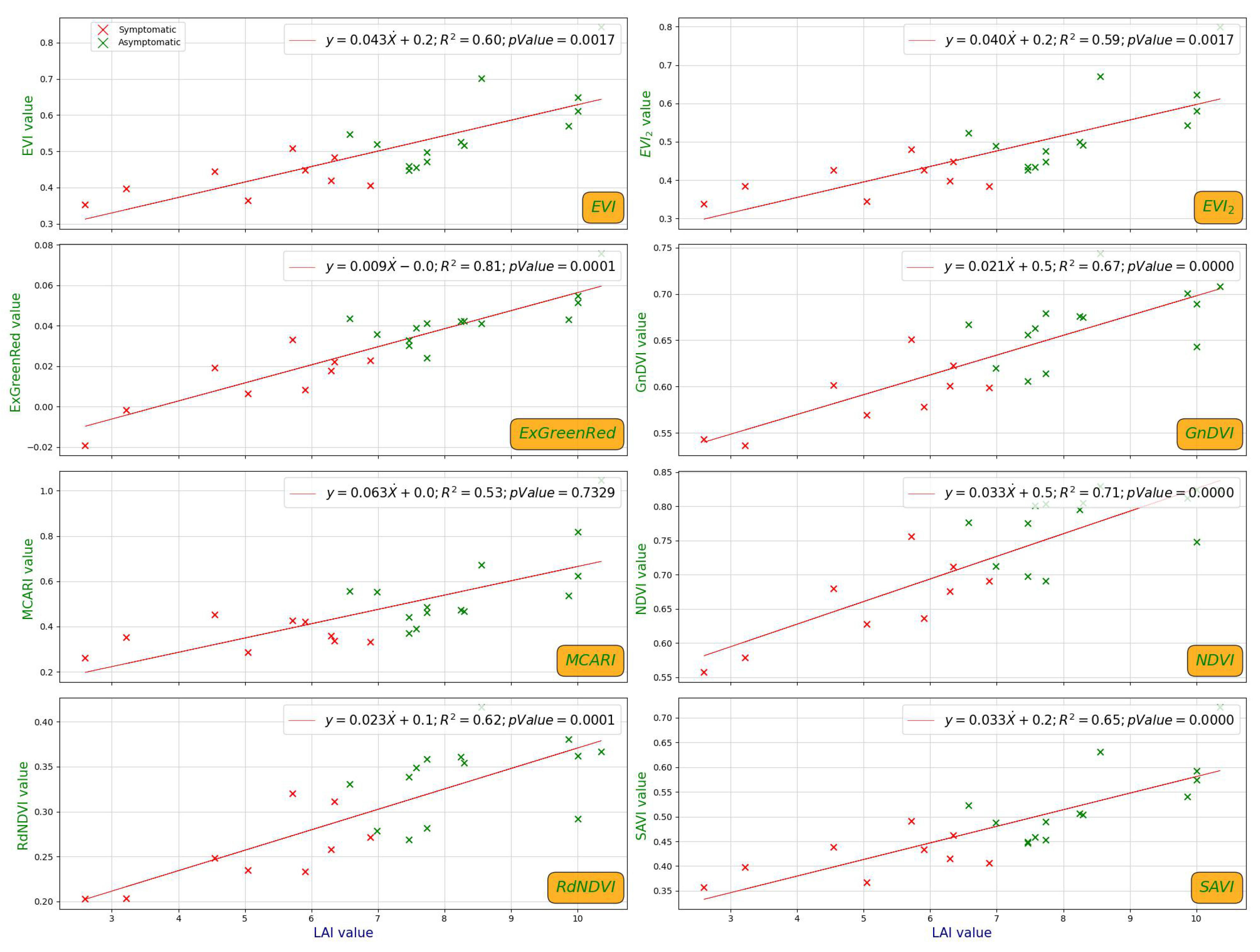

- The logistic classifier models a linear decision boundary in log-odds space, which works effectively when features correlate linearly with class probability. Our VIs, particularly those built on normalized differences (e.g., NDVI), exhibited such relationships, as corroborated by strong correlations with LAI and clear VI value ranges across health classes;

- The SVM, especially with linear or RBF kernels, is robust in both linearly and non-linearly separable contexts, but when the data are already separable (as in our case), its decision surface closely aligns with that of logistic regression and even GNB.
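The argument that the three classifiers converge when the classes are already well separated can be illustrated with a small experiment. The sketch below trains all three models on synthetic, clearly separated one-feature data and compares cross-validated accuracy; the data, the fixed hyperparameters, and the five-fold scheme are assumptions for illustration and say nothing about the study's actual scores.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.naive_bayes import GaussianNB
from sklearn.svm import SVC

# Synthetic, well-separated VI-like feature (assumption, not study data).
rng = np.random.default_rng(42)
X = np.concatenate(
    [rng.normal(0.85, 0.03, 50), rng.normal(0.55, 0.05, 50)]
).reshape(-1, 1)
y = np.array([0] * 50 + [1] * 50)

models = {
    "SVM": SVC(kernel="linear", C=10),
    "GNB": GaussianNB(),
    "Log": LogisticRegression(C=100),
}

# Mean 5-fold cross-validated accuracy per classifier.
scores = {
    name: cross_val_score(model, X, y, cv=5).mean()
    for name, model in models.items()
}
for name, score in scores.items():
    print(f"{name}: {score:.3f}")
```

On data like these, all three decision rules reduce to a threshold on the single feature, so their accuracies are expected to be nearly identical.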

5. Conclusions

- The spectral indices GnDVI and RdNDVI proved the most effective, outperforming NDVI, and achieved classification accuracies of up to 95.2% when combined with the SVM and GNB classifiers. These indices effectively captured physiological changes associated with ink disease symptoms, underscoring the potential of high-resolution UAV imagery for accurate tree health assessment;

- Significant correlations (p < 0.001) were observed between LAI and most vegetation indices, confirming LAI’s value as a reliable physiological proxy for validating spectral assessments of chestnut tree health;

- Limitations of certain vegetation indices: indices such as MCARI and SAVI showed comparatively limited discriminatory power, highlighting the need for the careful selection of vegetation indices that are specifically tailored to reflect subtle physiological changes due to disease.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ACC | accuracy |
| AGL | above ground level |
| B475 | blue 475 nm wavelength |
| C3S | Copernicus Climate Change Service |
| DSM | digital surface model |
| DTM | digital terrain model |
| ECMWF | European Centre for Medium-Range Weather Forecasts |
| EPSG | European Petroleum Survey Group |
| EU | European Union |
| EVI | enhanced vegetation index |
| EVI2 | two-band enhanced vegetation index |
| ExGreenRed | excess green-excess red index |
| F1s | F1Score |
| FN | false negative |
| FP | false positive |
| G560 | green 560 nm wavelength |
| GNB | Gaussian Naive Bayes |
| GnDVI | green normalized difference vegetation index |
| GNSS | Global Navigation Satellite System |
| GSD | ground sample distance |
| INFC | National Inventory of Forests and Forest Carbon Pools |
| LAI | leaf area index |
| Log | logistic |
| MCARI | modified chlorophyll absorption in reflectance index |
| ML | machine learning |
| NDVI | normalized difference vegetation index |
| NiR840 | near-infrared 840 nm wavelength |
| P | precision |
| PDF | probability density function |
| R | recall (detection rate) |
| R668 | red 668 nm wavelength |
| RdNDVI | red-edge normalized difference vegetation index |
| RE717 | red-edge 717 nm wavelength |
| RTK | real-time kinematic positioning |
| SAR | synthetic aperture radar |
| SAVI | soil-adjusted vegetation index |
| SfM | structure from motion |
| SVM | support vector machine |
| TN | true negative |
| TP | true positive |
| UAV | unmanned aerial vehicle |
| UID | unique identifier number |
| VIs | vegetation indices |
| VTOL | vertical take-off and landing |

References

- Vettraino, A.M.; Morel, O.; Perlerou, C.; Robin, C.; Diamandis, S.; Vannini, A. Occurrence and distribution of Phytophthora species in European chestnut stands, and their association with Ink Disease and crown decline. Eur. J. Plant Pathol. 2005, 111, 169–180. [Google Scholar] [CrossRef]

- Turchetti, T.; Maresi, G. Biological Control and Management of Chestnut Diseases; Springer: Dordrecht, The Netherlands, 2008; pp. 85–118. [Google Scholar] [CrossRef]

- Conedera, M.; Krebs, P.; Gehring, E.; Wunder, J.; Hülsmann, L.; Abegg, M.; Maringer, J. How future-proof is Sweet chestnut (Castanea sativa) in a global change context? For. Ecol. Manag. 2021, 494, 119320. [Google Scholar] [CrossRef]

- Di Renzo, L.; Bianchi, A.; De Lorenzo, A. Chestnut Fruits: Nutritional Value and New Products. In Proceedings of the Chestnut (Castanea sativa): A Multipurpose European Tree, Bruxelles, Belgium, 30 September 2010. [Google Scholar]

- Bruzzese, S.; Blanc, S.; Brun, F. The Chestnut tree: A resource for the socio-economic revival of inland areas in a bio-economy perspective. In Proceedings of the Social and Ecological Value Added of Small-Scale Forestry to the Bio-Economy, Bolzano, Italy, 7–8 October 2020; p. 1. [Google Scholar] [CrossRef]

- Vannini, A.; Vettraino, A.M. Ink disease in chestnuts: Impact on the European chestnut. For. Snow Landsc. Res. 2001, 76, 345–350. [Google Scholar]

- Vettraino, A.M.; Natili, G.; Anselmi, N.; Vannini, A. Recovery and pathogenicity of Phytophthora species associated with a resurgence of ink disease in Castanea sativa in Italy. Plant Pathol. 2001, 50, 90–96. [Google Scholar] [CrossRef]

- Jung, T.; Durán, A.; von Stowasser, E.S.; Schena, L.; Mosca, S.; Fajardo, S.; González, M.; Ortega, A.D.N.; Bakonyi, J.; Seress, D.; et al. Diversity of Phytophthora species in Valdivian rainforests and association with severe dieback symptoms. For. Pathol. 2018, 48, e12443. [Google Scholar] [CrossRef]

- Robin, C.; Morel, O.; Vettraino, A.M.; Perlerou, C.; Diamandis, S.; Vannini, A. Genetic variation in susceptibility to Phytophthora cambivora in European chestnut (Castanea sativa). For. Ecol. Manag. 2006, 226, 199–207. [Google Scholar] [CrossRef]

- Şimşek, S.A.; Katırcıoğlu, Y.Z.; Maden, S. Phytophthora Root Rot Diseases Occurring on Forest, Parks, and Nurseries in Turkey and Their Control Measures. Turk. J. Agric.-Food Sci. Technol. 2018, 6, 770–782. [Google Scholar] [CrossRef] [Green Version]

- Frascella, A.; Sarrocco, S.; Mello, A.; Venice, F.; Salvatici, C.; Danti, R.; Emiliani, G.; Barberini, S.; Rocca, G.D. Biocontrol of Phytophthora ×cambivora on Castanea sativa: Selection of Local Trichoderma spp. Isolates for the Management of Ink Disease. Forests 2022, 13, 1065. [Google Scholar] [CrossRef]

- Carratore, R.D.; Aghayeva, D.N.; Ali-zade, V.M.; Bartolini, P.; Rocca, G.D.; Emiliani, G.; Pepori, A.; Podda, A.; Maserti, B. Detection of Cryphonectria hypovirus 1 in Cryphonectria parasitica isolates from Azerbaijan. For. Pathol. 2021, 51, e12718. [Google Scholar] [CrossRef]

- Prospero, S.; Rigling, D. Invasion genetics of the chestnut blight fungus Cryphonectria parasitica in Switzerland. Phytopathology 2012, 102, 73–82. [Google Scholar] [CrossRef]

- Venice, F.; Vizzini, A.; Frascella, A.; Emiliani, G.; Danti, R.; Rocca, G.D.; Mello, A. Localized reshaping of the fungal community in response to a forest fungal pathogen reveals resilience of Mediterranean mycobiota. Sci. Total Environ. 2021, 800, 149582. [Google Scholar] [CrossRef]

- Erwin, D.C.; Ribeiro, O.K. Phytophthora Diseases Worldwide; American Phytopathological Society (APS Press): St. Paul, MN, USA, 1996. [Google Scholar]

- Vannini, A.; Vettraino, A. Phytophthora cambivora. Forest Phytophthoras 2011, 1. [Google Scholar] [CrossRef]

- Blom, J.M.; Vannini, A.; Vettraino, A.M.; Hale, M.D.; Godbold, D.L. Ectomycorrhizal community structure in a healthy and a Phytophthora-infected chestnut (Castanea sativa Mill.) stand in central Italy. Mycorrhiza 2009, 20, 25–38. [Google Scholar] [CrossRef] [PubMed]

- Jakubauskas, M.E.; Legates, D.R.; Kastens, J.H. Crop identification using harmonic analysis of time-series AVHRR NDVI data. Comput. Electron. Agric. 2003, 37, 127–139. [Google Scholar] [CrossRef]

- Prospero, S.; Heinz, M.; Augustiny, E.; Chen, Y.; Engelbrecht, J.; Fonti, M.; Hoste, A.; Ruffner, B.; Sigrist, R.; Van Den Berg, N.; et al. Distribution, causal agents, and infection dynamic of emerging ink disease of sweet chestnut in Southern Switzerland. Environ. Microbiol. 2023, 25, 2250–2265. [Google Scholar] [CrossRef]

- Marzocchi, G.; Maresi, G.; Luchi, N.; Pecori, F.; Gionni, A.; Longa, C.M.O.; Pezzi, G.; Ferretti, F. 85 years counteracting an invasion: Chestnut ecosystems and landscapes survival against ink disease. Biol. Invasions 2024, 26, 2049–2062. [Google Scholar] [CrossRef]

- Șandric, I.; Irimia, R.; Petropoulos, G.P.; Anand, A.; Srivastava, P.K.; Pleșoianu, A.; Faraslis, I.; Stateras, D.; Kalivas, D. Tree’s detection & health’s assessment from ultra-high resolution UAV imagery and deep learning. Geocarto Int. 2022, 37, 10459–10479. [Google Scholar] [CrossRef]

- Iost Filho, F.H.; Heldens, W.B.; Kong, Z.; De Lange, E.S. Drones: Innovative Technology for Use in Precision Pest Management. J. Econ. Entomol. 2020, 113, 1–25. [Google Scholar] [CrossRef]

- Ecke, S.; Stehr, F.; Frey, J.; Tiede, D.; Dempewolf, J.; Klemmt, H.J.; Endres, E.; Seifert, T. Towards operational UAV-based forest health monitoring: Species identification and crown condition assessment by means of deep learning. Comput. Electron. Agric. 2024, 219, 108785. [Google Scholar] [CrossRef]

- De Luca, G.; Modica, G.; Silva, J.M.N.; Praticò, S.; Pereira, J.M. Assessing tree crown fire damage integrating linear spectral mixture analysis and supervised machine learning on Sentinel-2 imagery. Int. J. Digit. Earth 2023, 16, 3162–3198. [Google Scholar] [CrossRef]

- Widjaja Putra, B.T.; Soni, P. Evaluating NIR-Red and NIR-Red edge external filters with digital cameras for assessing vegetation indices under different illumination. Infrared Phys. Technol. 2017, 81, 148–156. [Google Scholar] [CrossRef]

- Fraser, B.T.; Congalton, R.G. Monitoring Fine-Scale Forest Health Using Unmanned Aerial Systems (UAS) Multispectral Models. Remote Sens. 2021, 13, 4873. [Google Scholar] [CrossRef]

- Ollinger, S.V. Sources of variability in canopy reflectance and the convergent properties of plants. New Phytol. 2011, 189, 375–394. [Google Scholar] [CrossRef] [PubMed]

- Duarte, A.; Borralho, N.; Cabral, P.; Caetano, M. Recent advances in forest insect pests and diseases monitoring using UAV-based data: A systematic review. Forests 2022, 13, 911. [Google Scholar] [CrossRef]

- Cotrozzi, L. Spectroscopic detection of forest diseases: A review (1970–2020). J. For. Res. 2022, 33, 21–38. [Google Scholar] [CrossRef]

- Stone, C.; Mohammed, C. Application of remote sensing technologies for assessing planted forests damaged by insect pests and fungal pathogens: A review. Curr. For. Rep. 2017, 3, 75–92. [Google Scholar] [CrossRef]

- Yin, D.; Cai, Y.; Li, Y.; Yuan, W.; Zhao, Z. Assessment of the Health Status of Old Trees of Platycladus orientalis L. Using UAV Multispectral Imagery. Drones 2024, 8, 91. [Google Scholar] [CrossRef]

- Abdulridha, J.; Ehsani, R.; Castro, A.D. Detection and differentiation between laurel wilt disease, phytophthora disease, and salinity damage using a hyperspectral sensing technique. Agriculture 2016, 6, 56. [Google Scholar] [CrossRef]

- Sandino, J.; Pegg, G.; Gonzalez, F.; Smith, G. Aerial mapping of forests affected by pathogens using UAVs, hyperspectral sensors, and artificial intelligence. Sensors 2018, 18, 944. [Google Scholar] [CrossRef]

- Gold, K.M.; Townsend, P.A.; Chlus, A.; Herrmann, I.; Couture, J.J.; Larson, E.R.; Gevens, A.J. Hyperspectral measurements enable pre-symptomatic detection and differentiation of contrasting physiological effects of late blight and early blight in potato. Remote Sens. 2020, 12, 286. [Google Scholar] [CrossRef]

- Appeltans, S.; Pieters, J.G.; Mouazen, A.M. Potential of laboratory hyperspectral data for in-field detection of Phytophthora infestans on potato. Precis. Agric. 2022, 23, 876–893. [Google Scholar] [CrossRef]

- Wavrek, M.T.; Carr, E.; Jean-Philippe, S.; McKinney, M.L. Drone remote sensing in urban forest management: A case study. Urban For. Urban Green. 2023, 86, 127978. [Google Scholar] [CrossRef]

- Newby, Z.; Murphy, R.J.; Guest, D.I.; Ramp, D.; Liew, E.C.Y. Detecting symptoms of Phytophthora cinnamomi infection in Australian native vegetation using reflectance spectrometry: Complex effects of water stress and species susceptibility. Australas. Plant Pathol. 2019, 48, 409–424. [Google Scholar] [CrossRef]

- Hornero, A.; Zarco-Tejada, P.J.; Quero, J.L.; North, P.R.J.; Ruiz-Gómez, F.J.; Sánchez-Cuesta, R.; Hernández-Clemente, R. Modelling hyperspectral-and thermal-based plant traits for the early detection of Phytophthora-induced symptoms in oak decline. Remote Sens. Environ. 2021, 263, 112570. [Google Scholar] [CrossRef]

- Croeser, L.; Admiraal, R.; Barber, P.; Burgess, T.I.; Hardy, G.E.S.J. Reflectance spectroscopy to characterize the response of Corymbia calophylla to Phytophthora root rot and waterlogging stress. Forestry 2022, 95, 312–330. [Google Scholar] [CrossRef]

- Gomes-Laranjo, J.; Araújo-Alves, J.; Ferreira-Cardoso, J.; Pimentel-Pereira, M.; Abreu, C.; Torres-Pereira, J. Effect of chestnut ink disease on photosynthetic performance. J. Phytopathol. 2004, 152, 138–144. [Google Scholar] [CrossRef]

- Dinis, L.T.; Peixoto, F.; Zhang, C.; Martins, L.; Costa, R.; Gomes-Laranjo, J. Physiological and biochemical changes in resistant and sensitive chestnut (Castanea) plantlets after inoculation with Phytophthora cinnamomi. Physiol. Mol. Plant Pathol. 2011, 75, 146–156. [Google Scholar] [CrossRef]

- Li, S.; Xu, L.; Jing, Y.; Yin, H.; Li, X.; Guan, X. High-quality vegetation index product generation: A review of NDVI time series reconstruction techniques. Int. J. Appl. Earth Obs. Geoinf. 2021, 105, 102640. [Google Scholar] [CrossRef]

- Gitelson, A.A.; Kaufman, Y.J.; Merzlyak, M.N. Use of a green channel in remote sensing of global vegetation from EOS-MODIS. Remote Sens. Environ. 1996, 58, 289–298. [Google Scholar] [CrossRef]

- Gao, S.; Yan, K.; Liu, J.; Pu, J.; Zou, D.; Qi, J.; Mu, X.; Yan, G. Assessment of remote-sensed vegetation indices for estimating forest chlorophyll concentration. Ecol. Indic. 2024, 162, 112001. [Google Scholar] [CrossRef]

- Daughtry, C. Estimating Corn Leaf Chlorophyll Concentration from Leaf and Canopy Reflectance. Remote Sens. Environ. 2000, 74, 229–239. [Google Scholar] [CrossRef]

- Huete, A. A soil-adjusted vegetation index (SAVI). Remote Sens. Environ. 1988, 25, 295–309. [Google Scholar] [CrossRef]

- Huete, A.; Didan, K.; Miura, T.; Rodriguez, E.; Gao, X.; Ferreira, L. Overview of the radiometric and biophysical performance of the MODIS vegetation indices. Remote Sens. Environ. 2002, 83, 195–213. [Google Scholar] [CrossRef]

- Jiang, Z.; Huete, A.; Didan, K.; Miura, T. Development of a two-band enhanced vegetation index without a blue band. Remote Sens. Environ. 2008, 112, 3833–3845. [Google Scholar] [CrossRef]

- Gill, K.S.; Anand, V.; Chauhan, R.; Verma, G.; Gupta, R. Agricultural Pests Classification Using Deep Convolutional Neural Networks and Transfer Learning. In Proceedings of the 2023 2nd International Conference on Futuristic Technologies (INCOFT), Belagavi, Karnataka, India, 24–26 November 2023; pp. 1–6. [Google Scholar] [CrossRef]

- Sun, Y.; Hao, Z.; Guo, Z.; Liu, Z.; Huang, J. Detection and Mapping of Chestnut Using Deep Learning from High-Resolution UAV-Based RGB Imagery. Remote Sens. 2023, 15, 4923. [Google Scholar] [CrossRef]

- Kamal, M.; Phinn, S.; Johansen, K. Object-Based Approach for Multi-Scale Mangrove Composition Mapping Using Multi-Resolution Image Datasets. Remote Sens. 2015, 7, 4753–4783. [Google Scholar] [CrossRef]

- Srivastava, P.K.; Malhi, R.K.M.; Pandey, P.C.; Anand, A.; Singh, P.; Pandey, M.K.; Gupta, A. Revisiting hyperspectral remote sensing: Origin, processing, applications and way forward. In Hyperspectral Remote Sensing; Elsevier: Amsterdam, The Netherlands, 2020; pp. 3–21. [Google Scholar] [CrossRef]

- Gallardo-Salazar, J.L.; Pompa-García, M. Detecting Individual Tree Attributes and Multispectral Indices Using Unmanned Aerial Vehicles: Applications in a Pine Clonal Orchard. Remote Sens. 2020, 12, 4144. [Google Scholar] [CrossRef]

- Vannini, A.; Vettraino, A.M.; Belli, C.; Montaghi, A.; Natili, G. New Technologies of Remote Sensing in Phytosanitary Monitoring: Application to Ink Disease of Chestnut [Castanea sativa Mill.; Latium]; Italus Hortus: Sesto Fiorentino, Italy, 2005. [Google Scholar]

- Pádua, L.; Marques, P.; Martins, L.; Sousa, A.; Peres, E.; Sousa, J.J. Monitoring of Chestnut Trees Using Machine Learning Techniques Applied to UAV-Based Multispectral Data. Remote Sens. 2020, 12, 3032. [Google Scholar] [CrossRef]

- Pisner, D.A.; Schnyer, D.M. Support vector machine. In Machine Learning; Elsevier: Amsterdam, The Netherlands, 2020; pp. 101–121. [Google Scholar] [CrossRef]

- Hearst, M.; Dumais, S.; Osuna, E.; Platt, J.; Scholkopf, B. Support vector machines. IEEE Intell. Syst. Their Appl. 1998, 13, 18–28. [Google Scholar] [CrossRef]

- Suthaharan, S. Machine Learning Models and Algorithms for Big Data Classification: Thinking with Examples for Effective Learning; Integrated Series in Information Systems; Springer: Boston, MA, USA, 2016; Volume 36. [Google Scholar] [CrossRef]

- Rish, I. An empirical study of the naive Bayes classifier. In Proceedings of the IJCAI 2001 Workshop on Empirical Methods in Artificial Intelligence, Seattle, WA, USA, 4 August 2001. [Google Scholar]

- Xu, S. Bayesian Naïve Bayes classifiers to text classification. J. Inf. Sci. 2018, 44, 48–59. [Google Scholar] [CrossRef]

- Kazakeviciute, A.; Olivo, M. A study of logistic classifier: Uniform consistency in finite-dimensional linear spaces. J. Math. Oper. Res. 2016, 3, 1–7. [Google Scholar]

- Sebastiani, A.; Bertozzi, M.; Vannini, A.; Morales-Rodriguez, C.; Calfapietra, C.; Vaglio Laurin, G. Monitoring ink disease epidemics in chestnut and cork oak forests in central Italy with remote sensing. Remote Sens. Appl. Soc. Environ. 2024, 36, 101329. [Google Scholar] [CrossRef]

- USDA-NRCS. Keys to Soil Taxonomy, 13th ed.; Technical Report; USDA Natural Resources Conservation Service: Washington, DC, USA, 2022. [Google Scholar]

- IUSS Working Group WRB. World Reference Base for Soil Resources, 2006: A Framework for International Classification, Correlation and Communication; Technical Report; FAO: Rome, Italy, 2006. [Google Scholar]

- Muñoz-Sabater, J.; Dutra, E.; Agustí-Panareda, A.; Albergel, C.; Arduini, G.; Balsamo, G.; Boussetta, S.; Choulga, M.; Harrigan, S.; Hersbach, H.; et al. ERA5-Land: A state-of-the-art global reanalysis dataset for land applications. Earth Syst. Sci. Data 2021, 13, 4349–4383. [Google Scholar] [CrossRef]

- Vannini, A.; Natili, G.; Anselmi, N.; Montaghi, A.; Vettraino, A.M. Distribution and gradient analysis of Ink disease in chestnut forests. For. Pathol. 2010, 40, 73–86. [Google Scholar] [CrossRef]

- Maresi, G.; Turchetti, T. Management of diseases in chestnut orchards and stands: A significant prospect. Adv. Hortic. Sci. 2006, 20, 33–39. [Google Scholar]

- Schomaker, M. Crown-Condition Classification: A Guide to Data Collection and Analysis; US Department of Agriculture, Forest Service, Southern Research Station: Asheville, NC, USA, 2007. [Google Scholar]

- Bosshard, W. Sanasilva—Kronenbilder; Eidgenossische Anstalt fur das Forstliche Versuchswesen: Birmensdorf, Switzerland, 1986. [Google Scholar]

- Topcon-Positioning-Systems Inc. HiPer HR Operator’s Manual; Technical Report; Topcon Positioning Systems Inc.: Livermore, CA, USA, 2016. [Google Scholar]

- Topcon-Positioning-Systems Inc. MAGNET Field Layout Help; Technical Report; Topcon Positioning Systems Inc.: Livermore, CA, USA, 2015. [Google Scholar]

- Cutini, A. New management options in chestnut coppices: An evaluation on ecological bases. For. Ecol. Manag. 2001, 141, 165–174. [Google Scholar] [CrossRef]

- Prada, M.; Cabo, C.; Hernández-Clemente, R.; Hornero, A.; Majada, J.; Martínez-Alonso, C. Assessing Canopy Responses to Thinnings for Sweet Chestnut Coppice with Time-Series Vegetation Indices Derived from Landsat-8 and Sentinel-2 Imagery. Remote Sens. 2020, 12, 3068. [Google Scholar] [CrossRef]

- MicaSense-Inc. RedEdge 3 User Manual MicaSense RedEdge 3 Multispectral Camera User Manual; Technical Report; MicaSense-Inc.: Seattle, WA, USA, 2015. [Google Scholar]

- Iglhaut, J.; Cabo, C.; Puliti, S.; Piermattei, L.; O’Connor, J.; Rosette, J. Structure from Motion Photogrammetry in Forestry: A Review. Curr. For. Rep. 2019, 5, 155–168. [Google Scholar] [CrossRef]

- Aldrich, R.C. Detecting Disturbances in a Forest Environment. Photogramm. Eng. Remote Sens. 1975, 41.1, 39–48. [Google Scholar]

- Coppin, P.; Jonckheere, I.; Nackaerts, K.; Muys, B.; Lambin, E. Digital change detection methods in ecosystem monitoring: A review. Int. J. Remote Sens. 2004, 25, 1565–1596. [Google Scholar] [CrossRef]

- Rouse, J.W.; Haas, R.H.; Schell, J.A.; Deering, D.W.; Freden, S.C.; Mercanti, E.P.; Becker, M.A. Monitoring vegetation systems in the Great Plains with ERTS. In Proceedings of the Third ERTS Symposium, Washington DC, USA, 10–14 December 1973; Volume I, pp. 309–317. [Google Scholar] [CrossRef]

- Woebbecke, D.; Meyer, G.; Von Bargen, K.; Mortensen, D. Shape features for identifying young weeds using image analysis. Trans. ASAE 1995, 38, 271–281. [Google Scholar] [CrossRef]

- Gillies, S. Rasterio: Geospatial raster I/O for Python programmers. 2013. Available online: https://github.com/rasterio/rasterio (accessed on 4 March 2024).

- Harris, C.R.; Millman, K.J.; van der Walt, S.J.; Gommers, R.; Virtanen, P.; Cournapeau, D.; Wieser, E.; Taylor, J.; Berg, S.; Smith, N.J.; et al. Array programming with NumPy. Nature 2020, 585, 357–362. [Google Scholar] [CrossRef]

- ESRI, Redlands, CA: Environmental Systems Research Institute, ArcGIS Pro: Release 3.3.0. 2024.

- Anaconda Software Distribution, Anaconda Documentation. 2020. Available online: https://docs.anaconda.com/ (accessed on 5 July 2023).

- Boser, B.E.; Guyon, I.M.; Vapnik, V.N. A training algorithm for optimal margin classifiers. In Proceedings of the Fifth Annual Workshop on Computational Learning Theory, New York, NY, USA, 27–29 July 1992; COLT’92. pp. 144–152. [Google Scholar] [CrossRef]

- Cortes, C.; Vapnik, V.; Saitta, L. Support-Vector Networks. Mach. Learn. 1995, 20, 273–297. [Google Scholar] [CrossRef]

- Platt, J.C. Probabilistic Outputs for Support Vector Machines and Comparisons to Regularized Likelihood Methods; MIT Press: Cambridge, MA, USA, 1999. [Google Scholar]

- Chang, C.C.; Lin, C.J. LIBSVM: A Library for Support Vector Machines; Association for Computing Machinery: New York, NY, USA, 2001. [Google Scholar]

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830. [Google Scholar]

- Chan, T.F.; Golub, G.H.; Leveque, R.J. Updating Formulae and a Pairwise Algorithm for Computing Sample Variances; Association for Computing Machinery: New York, NY, USA, 1979. [Google Scholar] [CrossRef]

- Raschka, S. MLxtend: Providing machine learning and data science utilities and extensions to Python’s scientific computing stack. J. Open Source Softw. 2018, 3, 638. [Google Scholar] [CrossRef]

- Buitinck, L.; Louppe, G.; Blondel, M.; Pedregosa, F.; Mueller, A.; Grisel, O.; Niculae, V.; Prettenhofer, P.; Gramfort, A.; Grobler, J.; et al. API design for machine learning software: Experiences from the scikit-learn project. In Proceedings of the ECML PKDD Workshop: Languages for Data Mining and Machine Learning, Prague, Czech Republic, 23–27 September 2013; pp. 108–122. [Google Scholar] [CrossRef]

- Pasta, S.; Sala, G.; La Mantia, T.; Bondì, C.; Tinner, W. The past distribution of Abies nebrodensis (Lojac.) Mattei: Results of a multidisciplinary study. Veg. Hist. Archaeobotany 2020, 29, 357–371. [Google Scholar] [CrossRef]

- Padua, L.; Marques, P.; Martins, L.; Sousa, A.; Peres, E.; Sousa, J.J. Estimation of Leaf Area Index in Chestnut Trees using Multispectral Data from an Unmanned Aerial Vehicle. In Proceedings of the IGARSS 2020—2020 IEEE International Geoscience and Remote Sensing Symposium, Waikoloa, HI, USA, 26 September–2 October 2020; pp. 6503–6506. [Google Scholar] [CrossRef]

- Pádua, L.; Chiroque-Solano, P.M.; Marques, P.; Sousa, J.J.; Peres, E. Mapping the Leaf Area Index of Castanea sativa Miller Using UAV-Based Multispectral and Geometrical Data. Drones 2022, 6, 422. [Google Scholar] [CrossRef]

- Mahlein, A.K.; Steiner, U.; Dehne, H.W.; Oerke, E.C. Spectral signatures of sugar beet leaves for the detection and differentiation of diseases. Precis. Agric. 2010, 11, 413–431. [Google Scholar] [CrossRef]

- Pinter, P.J., Jr.; Hatfield, J.L.; Schepers, J.S.; Barnes, E.M.; Moran, M.S.; Daughtry, C.S.; Upchurch, D.R. Remote Sensing for Crop Management. Photogramm. Eng. Remote Sens. 2003, 69, 647–664. [Google Scholar] [CrossRef]

- Praticò, S.; Solano, F.; Di Fazio, S.; Modica, G. Machine Learning Classification of Mediterranean Forest Habitats in Google Earth Engine Based on Seasonal Sentinel-2 Time-Series and Input Image Composition Optimisation. Remote Sens. 2021, 13, 586. [Google Scholar] [CrossRef]

| Plant UID | Circumference (cm) | Height (m) | Crown Width (m) | Symptomatic (S)/Asymptomatic (A) | Crown Mortality (%) | LAI |
|---|---|---|---|---|---|---|
| 30 | 328 | 15.2 | 14.3 | A | 0–10 | 8.25 |
| 105 | 270 | 14.5 | 12.0 | A | 0–10 | 7.58 |
| 110 | 255 | 16.9 | 11.5 | A | 0–10 | 8.30 |
| 130 | 225 | 12.4 | 9.3 | A | 0–10 | 7.58 |
| 155 | 300 | 18.3 | 13.0 | A | 0–10 | 9.87 |
| 210 | 540 | 19.6 | 15.0 | A | 0–10 | 8.56 |
| 225 | 270 | 21 | 15.0 | A | 0–10 | 10.36 |
| 315 | 245 | 15.7 | 12.0 | A | 0–10 | 6.58 |
| 965 | 350 | 12.7 | 13.0 | A | 0–10 | 7.74 |
| 1075 | 230 | 16.1 | 11.8 | A | 0–10 | 7.47 |
| Average for A group | 297.7 | 15.4 | 12.5 | | 0–10 | 8.27 |
| 25 | 350 | 16.5 | 10.0 | S | 25–50 | 5.91 |
| 35 | 255 | 18.4 | 7.2 | S | 50–99 | 2.60 |
| 50 | 330 | 13.6 | 10.3 | S | 50–99 | 6.30 |
| 94 | 290 | 15.8 | 9.5 | S | 25–50 | 5.05 |
| 95 | 325 | 10.4 | 10.4 | S | 10–25 | 4.55 |
| 96 | 200 | 17.6 | 9.9 | S | 25–50 | 5.72 |
| 97 | 180 | 16.2 | 8.6 | S | 50–99 | 6.35 |
| 98 | 160 | 13.4 | 4.0 | S | 50–99 | 3.22 |
| 99 | 210 | 10.5 | 9.5 | S | 0–10 | 6.99 |
| 105 | 200 | 14 | 8.5 | S | 25–50 | 5.91 |
| Average for S group | 248.4 | 14.7 | 8.6 | | 50–80 | 5.25 |

| Sensor | Central Wavelength (nm) | Filter Bandwidth (nm) |
|---|---|---|
| Blue (band 1) | 475 (B475) | 20 |
| Green (band 2) | 560 (G560) | 20 |
| Red (band 3) | 668 (R668) | 10 |
| Near-IR (band 4) | 840 (NiR840) | 40 |
| Red-Edge (band 5) | 717 (RE717) | 10 |

| MicaSense RedEdge-MX | |
|---|---|
| Pixel size | 3.75 µm |
| Resolution | 1280 × 960 (1.2 MP × 5 imagers) |
| Aspect ratio | 4:3 |
| Sensor size | 4.8 mm × 3.6 mm |
| Focal length | 5.5 mm |
| Field of view | 47.2 degrees horizontal; 35.4 degrees vertical |
| Output bit depth | 12-bit |
| GSD @ 120 m (∼400 ft) | 8 cm/pixel per band |
| GSD @ 60 m (∼200 ft) | 4 cm/pixel per band |
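
The two ground sample distance (GSD) values in the specification table can be checked against the listed pixel size and focal length. The sketch below assumes a nadir-looking pinhole camera and reads the pixel size as 3.75 µm (4.8 mm sensor width divided by 1280 pixels); the function and constant names are illustrative and not taken from the authors' workflow.

```python
def ground_sample_distance(pixel_size_m: float, focal_length_m: float, altitude_m: float) -> float:
    """Ground footprint of one pixel (in metres) for a nadir-looking pinhole camera."""
    return pixel_size_m * altitude_m / focal_length_m


PIXEL_SIZE_M = 3.75e-6    # 3.75 micrometres (assumed reading of the pixel-size row)
FOCAL_LENGTH_M = 5.5e-3   # 5.5 mm, from the specification table

for altitude_m in (120, 60):
    gsd_cm = ground_sample_distance(PIXEL_SIZE_M, FOCAL_LENGTH_M, altitude_m) * 100
    print(f"GSD at {altitude_m} m: {gsd_cm:.1f} cm/pixel")
```

Under these assumptions the computed values round to the 8 cm and 4 cm per pixel quoted for flights at 120 m and 60 m.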

| Index | Brief Explanation | Formula | Range | Reference |
|---|---|---|---|---|
| NDVI | Normalized difference vegetation index. Most commonly used to estimate vegetation health and biomass. | | −1 to +1 | [78] |
| GnDVI | Green NDVI. Uses green reflectance instead of red, often more sensitive to chlorophyll content. | | −1 to +1 | [43] |
| ExGreenRed | Excess green minus excess red. A color-based index (RGB) used in vegetation detection from standard cameras. | | −∞ to +∞ | [79] |
| RdNDVI | Similar to NDVI but uses the red-edge band instead of red, improving sensitivity to changes in canopy structure. | | −1 to +1 | [43] |
| SAVI | Soil adjusted vegetation index. Reduces soil background reflectance using a factor L. | | −1 to +1 | [46,47] |
| EVI | Enhanced vegetation index. Optimized to enhance vegetation signals in high-biomass areas. | | −1 to +1 | [47] |
| EVI2 | A two-band version of EVI that removes the need for a blue band. | | 0 to +2 | [48] |
| MCARI | Modified chlorophyll absorption in reflectance index. Emphasizes chlorophyll absorption in the red region. | | 0 to 1 | [45] |
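
As a rough illustration of how the eight indices relate to the five sensor bands, the sketch below uses the commonly published definitions of each index. These are assumptions, not formulas confirmed by this table: the coefficients (soil factor L = 0.5, the EVI and EVI2 constants, the 1.4 weight in excess red), the chromatic normalisation used for ExGreenRed, and the use of the 717 nm red-edge band in place of the 700 nm band in MCARI may all differ from what the authors applied. The function names are hypothetical.

```python
def normalized_difference(a, b):
    """Return (a - b) / (a + b), the form shared by NDVI, GnDVI and RdNDVI."""
    return (a - b) / (a + b)


def vegetation_indices(blue, green, red, red_edge, nir, soil_factor=0.5):
    """Compute the eight VIs from band reflectances (floats or NumPy arrays)."""
    # Chromatic coordinates for the colour-based index (assumed normalisation).
    total = red + green + blue
    r, g, b = red / total, green / total, blue / total
    excess_green = 2 * g - r - b
    excess_red = 1.4 * r - g
    return {
        "NDVI": normalized_difference(nir, red),
        "GnDVI": normalized_difference(nir, green),
        "RdNDVI": normalized_difference(nir, red_edge),
        "SAVI": (1 + soil_factor) * (nir - red) / (nir + red + soil_factor),
        "EVI": 2.5 * (nir - red) / (nir + 6 * red - 7.5 * blue + 1),
        "EVI2": 2.5 * (nir - red) / (nir + 2.4 * red + 1),
        # MCARI is defined on ~700, 670 and 550 nm; RE717 stands in for 700 nm here.
        "MCARI": ((red_edge - red) - 0.2 * (red_edge - green)) * (red_edge / red),
        "ExGreenRed": excess_green - excess_red,
    }


# Hypothetical reflectances for a healthy canopy pixel (not measured data).
sample = vegetation_indices(blue=0.03, green=0.08, red=0.05, red_edge=0.25, nir=0.45)
for name, value in sample.items():
    print(f"{name}: {value:.3f}")
```

Because the function uses only arithmetic operators, the same code should work per pixel on reflectance rasters loaded as arrays.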

| | Predicted records | |
|---|---|---|
| Actual records | TP | FP |
| | FN | TN |
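
The four confusion-matrix cells are the inputs to the precision, recall, F1-score, and accuracy values reported in the classification tables. A minimal sketch of the standard definitions follows; the counts in the example are illustrative (chosen for a test set of 9 symptomatic and 12 asymptomatic trees) and are not taken from the authors' data.

```python
def binary_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Standard metrics for the positive class of a 2 x 2 confusion matrix."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)) if (precision + recall) else 0.0
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    return {"precision": precision, "recall": recall, "f1": f1, "accuracy": accuracy}


# Illustrative counts with "symptomatic" as the positive class:
# 8 of 9 symptomatic trees detected, no asymptomatic tree flagged.
metrics = binary_metrics(tp=8, fp=0, fn=1, tn=12)
for name, value in metrics.items():
    print(f"{name}: {value:.1%}")
```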

| VI | Parameter | SVM Precision | SVM Recall | SVM F1-Score | SVM Support | Log Precision | Log Recall | Log F1-Score | Log Support | GNB Precision | GNB Recall | GNB F1-Score | GNB Support | Average Precision | Average Recall | Average F1-Score | Average Support |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| EVI | Asymptomatic | 71.4% | 83.3% | 76.9% | 12 | 63.6% | 58.3% | 60.9% | 12 | 75.0% | 75.0% | 75.0% | 12 | 70.0% | 72.2% | 70.9% | 12 |
| EVI | Symptomatic | 71.4% | 55.6% | 62.5% | 9 | 50.0% | 55.6% | 52.6% | 9 | 66.7% | 66.7% | 66.7% | 9 | 62.7% | 59.3% | 60.6% | 9 |
| EVI | Accuracy | 71.4% | 71.4% | 71.4% | 71.4% | 57.1% | 57.1% | 57.1% | 57.1% | 71.4% | 71.4% | 71.4% | 71.4% | 66.7% | 66.7% | 66.7% | 66.7% |
| EVI | Macro avg | 71.4% | 69.4% | 69.7% | 21 | 56.8% | 56.9% | 56.8% | 21 | 70.8% | 70.8% | 70.8% | 21 | 66.4% | 65.7% | 65.8% | 21 |
| EVI | Weighted avg | 71.4% | 71.4% | 70.7% | 21 | 57.8% | 57.1% | 57.3% | 21 | 71.4% | 71.4% | 71.4% | 21 | 66.9% | 66.7% | 66.5% | 21 |
| EVI2 | Asymptomatic | 73.3% | 91.7% | 81.5% | 12 | 77.8% | 58.3% | 66.7% | 12 | 75.0% | 75.0% | 75.0% | 12 | 75.4% | 75.0% | 74.4% | 12 |
| EVI2 | Symptomatic | 83.3% | 55.6% | 66.7% | 9 | 58.3% | 77.8% | 66.7% | 9 | 66.7% | 66.7% | 66.7% | 9 | 69.4% | 66.7% | 66.7% | 9 |
| EVI2 | Accuracy | 76.2% | 76.2% | 76.2% | 76.2% | 66.7% | 66.7% | 66.7% | 66.7% | 71.4% | 71.4% | 71.4% | 71.4% | 71.4% | 71.4% | 71.4% | 71.4% |
| EVI2 | Macro avg | 78.3% | 73.6% | 74.1% | 21 | 68.1% | 68.1% | 66.7% | 21 | 70.8% | 70.8% | 70.8% | 21 | 72.4% | 70.8% | 70.5% | 21 |
| EVI2 | Weighted avg | 77.6% | 76.2% | 75.1% | 21 | 69.4% | 66.7% | 66.7% | 21 | 71.4% | 71.4% | 71.4% | 21 | 72.8% | 71.4% | 71.1% | 21 |
| ExGreenRed | Asymptomatic | 70.0% | 58.3% | 63.6% | 12 | 66.7% | 50.0% | 57.1% | 12 | 66.7% | 50.0% | 57.1% | 12 | 67.8% | 52.8% | 59.3% | 12 |
| ExGreenRed | Symptomatic | 54.5% | 66.7% | 60.0% | 9 | 50.0% | 66.7% | 57.1% | 9 | 50.0% | 66.7% | 57.1% | 9 | 51.5% | 66.7% | 58.1% | 9 |
| ExGreenRed | Accuracy | 61.9% | 61.9% | 61.9% | 61.9% | 57.1% | 57.1% | 57.1% | 57.1% | 57.1% | 57.1% | 57.1% | 57.1% | 58.7% | 58.7% | 58.7% | 58.7% |
| ExGreenRed | Macro avg | 62.3% | 62.5% | 61.8% | 21 | 58.3% | 58.3% | 57.1% | 21 | 58.3% | 58.3% | 57.1% | 21 | 59.6% | 59.7% | 58.7% | 21 |
| ExGreenRed | Weighted avg | 63.4% | 61.9% | 62.1% | 21 | 59.5% | 57.1% | 57.1% | 21 | 59.5% | 57.1% | 57.1% | 21 | 60.8% | 58.7% | 58.8% | 21 |
| GnDVI | Asymptomatic | 92.3% | 100.0% | 96.0% | 12 | 85.7% | 100.0% | 92.3% | 12 | 92.3% | 100.0% | 96.0% | 12 | 90.1% | 100.0% | 94.8% | 12 |
| GnDVI | Symptomatic | 100.0% | 88.9% | 94.1% | 9 | 100.0% | 77.8% | 87.5% | 9 | 100.0% | 88.9% | 94.1% | 9 | 100.0% | 85.2% | 91.9% | 9 |
| GnDVI | Accuracy | 95.2% | 95.2% | 95.2% | 95.2% | 90.5% | 90.5% | 90.5% | 90.5% | 95.2% | 95.2% | 95.2% | 95.2% | 93.7% | 93.7% | 93.7% | 93.7% |
| GnDVI | Macro avg | 96.2% | 94.4% | 95.1% | 21 | 92.9% | 88.9% | 89.9% | 21 | 96.2% | 94.4% | 95.1% | 21 | 95.1% | 92.6% | 93.3% | 21 |
| GnDVI | Weighted avg | 95.6% | 95.2% | 95.2% | 21 | 91.8% | 90.5% | 90.2% | 21 | 95.6% | 95.2% | 95.2% | 21 | 94.3% | 93.7% | 93.5% | 21 |
| MCARI | Asymptomatic | 53.3% | 66.7% | 59.3% | 12 | 81.8% | 75.0% | 78.3% | 12 | 63.6% | 58.3% | 60.9% | 12 | 66.3% | 66.7% | 66.1% | 12 |
| MCARI | Symptomatic | 33.3% | 22.2% | 26.7% | 9 | 70.0% | 77.8% | 73.7% | 9 | 50.0% | 55.6% | 52.6% | 9 | 51.1% | 51.9% | 51.0% | 9 |
| MCARI | Accuracy | 47.6% | 47.6% | 47.6% | 47.6% | 76.2% | 76.2% | 76.2% | 76.2% | 57.1% | 57.1% | 57.1% | 57.1% | 60.3% | 60.3% | 60.3% | 60.3% |
| MCARI | Macro avg | 43.3% | 44.4% | 43.0% | 21 | 75.9% | 76.4% | 76.0% | 21 | 56.8% | 56.9% | 56.8% | 21 | 58.7% | 59.3% | 58.6% | 21 |
| MCARI | Weighted avg | 44.8% | 47.6% | 45.3% | 21 | 76.8% | 76.2% | 76.3% | 21 | 57.8% | 57.1% | 57.3% | 21 | 59.8% | 60.3% | 59.6% | 21 |
| NDVI | Asymptomatic | 83.3% | 83.3% | 83.3% | 12 | 83.3% | 83.3% | 83.3% | 12 | 90.0% | 75.0% | 81.8% | 12 | 85.6% | 80.6% | 82.8% | 12 |
| NDVI | Symptomatic | 77.8% | 77.8% | 77.8% | 9 | 77.8% | 77.8% | 77.8% | 9 | 72.7% | 88.9% | 80.0% | 9 | 76.1% | 81.5% | 78.5% | 9 |
| NDVI | Accuracy | 81.0% | 81.0% | 81.0% | 81.0% | 81.0% | 81.0% | 81.0% | 81.0% | 81.0% | 81.0% | 81.0% | 81.0% | 81.0% | 81.0% | 81.0% | 81.0% |
| NDVI | Macro avg | 80.6% | 80.6% | 80.6% | 21 | 80.6% | 80.6% | 80.6% | 21 | 81.4% | 81.9% | 80.9% | 21 | 80.8% | 81.0% | 80.7% | 21 |
| NDVI | Weighted avg | 81.0% | 81.0% | 81.0% | 21 | 81.0% | 81.0% | 81.0% | 21 | 82.6% | 81.0% | 81.0% | 21 | 81.5% | 81.0% | 81.0% | 21 |
| RdNDVI | Asymptomatic | 92.3% | 100.0% | 96.0% | 12 | 92.3% | 100.0% | 96.0% | 12 | 92.3% | 100.0% | 96.0% | 12 | 92.3% | 100.0% | 96.0% | 12 |
| RdNDVI | Symptomatic | 100.0% | 88.9% | 94.1% | 9 | 100.0% | 88.9% | 94.1% | 9 | 100.0% | 88.9% | 94.1% | 9 | 100.0% | 88.9% | 94.1% | 9 |
| RdNDVI | Accuracy | 95.2% | 95.2% | 95.2% | 95.2% | 95.2% | 95.2% | 95.2% | 95.2% | 95.2% | 95.2% | 95.2% | 95.2% | 95.2% | 95.2% | 95.2% | 95.2% |
| RdNDVI | Macro avg | 96.2% | 94.4% | 95.1% | 21 | 96.2% | 94.4% | 95.1% | 21 | 96.2% | 94.4% | 95.1% | 21 | 96.2% | 94.4% | 95.1% | 21 |
| RdNDVI | Weighted avg | 95.6% | 95.2% | 95.2% | 21 | 95.6% | 95.2% | 95.2% | 21 | 95.6% | 95.2% | 95.2% | 21 | 95.6% | 95.2% | 95.2% | 21 |
| SAVI | Asymptomatic | 71.4% | 83.3% | 76.9% | 12 | 61.5% | 66.7% | 64.0% | 12 | 72.7% | 66.7% | 69.6% | 12 | 68.6% | 72.2% | 70.2% | 12 |
| SAVI | Symptomatic | 71.4% | 55.6% | 62.5% | 9 | 50.0% | 44.4% | 47.1% | 9 | 60.0% | 66.7% | 63.2% | 9 | 60.5% | 55.6% | 57.6% | 9 |
| SAVI | Accuracy | 71.4% | 71.4% | 71.4% | 71.4% | 57.1% | 57.1% | 57.1% | 57.1% | 66.7% | 66.7% | 66.7% | 66.7% | 65.1% | 65.1% | 65.1% | 65.1% |
| SAVI | Macro avg | 71.4% | 69.4% | 69.7% | 21 | 55.8% | 55.6% | 55.5% | 21 | 66.4% | 66.7% | 66.4% | 21 | 64.5% | 63.9% | 63.9% | 21 |
| SAVI | Weighted avg | 71.4% | 71.4% | 70.7% | 21 | 56.6% | 57.1% | 56.7% | 21 | 67.3% | 66.7% | 66.8% | 21 | 65.1% | 65.1% | 64.8% | 21 |
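
The "Macro avg" and "Weighted avg" rows appear to follow the usual conventions: the macro average is the unweighted mean of the two per-class scores, and the weighted average weights each class by its support (12 asymptomatic, 9 symptomatic). The sketch below checks this reading against the GnDVI/SVM values from the table; the helper names are hypothetical, and small differences are expected because the inputs are already rounded to one decimal.

```python
def macro_average(scores):
    """Unweighted mean of per-class scores."""
    return sum(scores) / len(scores)


def weighted_average(scores, supports):
    """Mean of per-class scores weighted by the number of samples per class."""
    return sum(s * n for s, n in zip(scores, supports)) / sum(supports)


# GnDVI / SVM per-class values (percent), in the order asymptomatic, symptomatic.
supports = [12, 9]
per_class = {
    "precision": [92.3, 100.0],
    "recall": [100.0, 88.9],
    "f1": [96.0, 94.1],
}

for metric, scores in per_class.items():
    macro = macro_average(scores)
    weighted = weighted_average(scores, supports)
    print(f"{metric}: macro {macro:.1f}%, weighted {weighted:.1f}%")
```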

| Classifier | Parameter | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|---|
| GNB | Asymptomatic | 78.5% | 75.0% | 76.4% | 12 |
| GNB | Symptomatic | 70.8% | 73.6% | 71.8% | 9 |
| GNB | Accuracy | 74.4% | 74.4% | 74.4% | 74.4% |
| GNB | Macro avg | 74.6% | 74.3% | 74.1% | 21 |
| GNB | Weighted avg | 75.2% | 74.4% | 74.4% | 21 |
| Log | Asymptomatic | 76.6% | 74.0% | 74.8% | 12 |
| Log | Symptomatic | 69.5% | 70.8% | 69.6% | 9 |
| Log | Accuracy | 72.6% | 72.6% | 72.6% | 72.6% |
| Log | Macro avg | 73.1% | 72.4% | 72.2% | 21 |
| Log | Weighted avg | 73.6% | 72.6% | 72.6% | 21 |
| SVM | Asymptomatic | 75.9% | 83.3% | 79.2% | 12 |
| SVM | Symptomatic | 74.0% | 63.9% | 68.0% | 9 |
| SVM | Accuracy | 75.0% | 75.0% | 75.0% | 75.0% |
| SVM | Macro avg | 75.0% | 73.6% | 73.6% | 21 |
| SVM | Weighted avg | 75.1% | 75.0% | 74.4% | 21 |
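
The per-classifier summary appears to be the arithmetic mean of each metric over the eight VIs. As a check of that reading, the sketch below averages the asymptomatic-class precision values reported per VI (in the order EVI, EVI2, ExGreenRed, GnDVI, MCARI, NDVI, RdNDVI, SAVI) and compares them with the summary figures; the variable names are illustrative.

```python
from statistics import mean

# Asymptomatic-class precision (percent) per VI, copied from the per-VI table.
asymptomatic_precision = {
    "SVM": [71.4, 73.3, 70.0, 92.3, 53.3, 83.3, 92.3, 71.4],
    "Log": [63.6, 77.8, 66.7, 85.7, 81.8, 83.3, 92.3, 61.5],
    "GNB": [75.0, 75.0, 66.7, 92.3, 63.6, 90.0, 92.3, 72.7],
}

# Values reported in the per-classifier summary table.
reported = {"SVM": 75.9, "Log": 76.6, "GNB": 78.5}

for classifier, values in asymptomatic_precision.items():
    averaged = mean(values)
    print(f"{classifier}: mean over VIs {averaged:.2f}%, reported {reported[classifier]}%")
```

The means agree with the reported values to within rounding, which supports interpreting the summary as a simple average across indices rather than a pooled re-evaluation.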

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation: Arcidiaco, L.; Danti, R.; Corongiu, M.; Emiliani, G.; Frascella, A.; Mello, A.; Bonora, L.; Barberini, S.; Pellegrini, D.; Sabatini, N.; Della Rocca, G. Preliminary Machine Learning-Based Classification of Ink Disease in Chestnut Orchards Using High-Resolution Multispectral Imagery from Unmanned Aerial Vehicles: A Comparison of Vegetation Indices and Classifiers. Forests 2025, 16, 754. https://doi.org/10.3390/f16050754