Abstract

Forests are critical ecosystems, supporting biodiversity, economic resources, and climate regulation. Traditional forestry segmentation techniques based on RGB images struggle in challenging conditions, such as fluctuating lighting, occlusions, and densely overlapping structures, which results in imprecise tree detection and categorization. Despite their effectiveness, semantic segmentation models have difficulty distinguishing trees from background objects in cluttered surroundings. To overcome these limitations, this study advances forestry management by integrating depth information into the YOLOv8 segmentation model using the FinnForest dataset. Results show significant improvements in segmentation accuracy, particularly for spruce trees, where mAP50 increased from 0.778 to 0.848 and mAP50-95 from 0.472 to 0.523. These findings demonstrate the potential of depth-enhanced models to overcome the limitations of traditional RGB-based segmentation, particularly in complex forest environments with overlapping structures. Depth-enhanced semantic segmentation can enable precise mapping of tree species, health, and spatial arrangements, which is critical for habitat analysis, wildfire risk assessment, and sustainable resource management. By addressing the challenges of size, distance, and lighting variations, this approach supports accurate forest monitoring, improved resource conservation, and automated decision-making in forestry. This research highlights the transformative potential of depth integration in segmentation models, laying a foundation for broader applications in forestry and environmental conservation. Future studies could expand dataset diversity, explore alternative depth technologies such as LiDAR, and benchmark against other architectures to further enhance performance and adaptability.

1. Introduction

Forestry management and environmental monitoring are important for maintaining ecological balance, promoting biodiversity, and enhancing the sustainable use of resources. Accurate tree recognition and segmentation in dense forest environments underpin applications such as biodiversity assessment, carbon stock measurement, and forest health monitoring. They also facilitate accurate forest mapping, which provides the knowledge required to exploit forest resources profitably and to increase resilience against wildfires [1]. Traditional forest monitoring relies on manual surveys and aerial imagery. These methods are time-consuming, costly, and error-prone because most forest environments are complex and cluttered. Computer vision and deep learning techniques can address many of these issues and have significantly advanced remote sensing and forest monitoring [2].

Object detection models such as the You Only Look Once (YOLO) series have been highly successful because they detect objects in real time with high accuracy [3]. This combination of speed and accuracy makes them well suited to applications that require fast processing of large datasets. In forestry applications, these models typically rely on RGB images, that is, digital images that combine red, green, and blue light to represent a wide spectrum of colors [4]. A main limitation of RGB images in forestry is that they cannot capture all the necessary detail in highly cluttered and occluded environments. Forests are characterized by varying lighting conditions, dense undergrowth, overlapping branches, and shadows. These factors can obscure important parts of trees and even cause false positives. For example, a tree partially hidden by undergrowth or by the branches of another tree may be difficult to distinguish from the surrounding vegetation [5], which can lead to missed detections and inaccurate segmentation. In addition, shadows cast by the canopy or the terrain change pixel intensities, which complicates the identification of tree boundaries. Together, these challenges make it difficult for RGB-based models to separate foreground from background objects and to recognize partially occluded trees, lowering detection and segmentation accuracy [6]. Because RGB images do not encode depth or the spatial relationships between objects, these models struggle to interpret complex forest scenes in which the spatial arrangement of objects is needed for accurate identification.

A promising approach to overcoming the limitations of RGB images is to supplement traditional RGB data with depth information. Depth provides the spatial cues needed to separate foreground objects from the background [7]. This can improve a YOLO model’s ability to recognize and segment objects in complex scenes such as dense forests, where depth captures the scene structure in more detail than RGB alone. Because the integration of depth information into object detection and segmentation models remains considerably underexplored, this study evaluates its applicability in forest environments [8].

Depth matters in forestry because it can substantially change segmentation, offering several advantages over conventional RGB-based approaches. Depth-enhanced segmentation has emerged as a cost-effective alternative to LiDAR, making advanced segmentation techniques more accessible. It helps models adapt to varying lighting conditions, reducing noise and the blurred boundaries associated with RGB-only models. Precise tree identification and measurement optimize resource use during timber harvesting, reducing waste and improving operational efficiency. Depth data also improve boundary and size estimates, making it possible to distinguish individual trees in densely populated forests. The technology further supports preservation and risk prevention by aiding disease detection, wildfire vulnerability assessment, and overall tree health monitoring. In dense forests with limited visibility, depth information is also crucial for collision avoidance by forestry equipment.

Practical applications of depth-enhanced segmentation are extensive. Automated timber harvesting benefits from precise evaluations enabled by depth data, identifying mature trees ready for harvest and facilitating selective targeting to reduce loss and promote sustainability. This automation significantly expedites processes traditionally reliant on field inspections [9]. In remote forest monitoring, drones equipped with depth-enhanced segmentation provide three-dimensional representations of forests, enabling teams to assess growth, density, and health without manual intervention. This capability is particularly valuable in inaccessible or hazardous locations. Three-dimensional maps generated in real time further support decision-making by identifying areas prone to natural disasters, diseases, or pest infestations, offering a comprehensive tool for proactive forest management [10].

Although prior work on Depth Anything V2 [11,12] demonstrates the feasibility of single-image depth estimation, its real-world error rates can vary. In the Discussion (Section 5, Section 6 and Section 7), we further address the potential limitations of using AI-based, non-metric (relative) depth for forestry applications and compare it with LiDAR, which is more precise but costlier.

1.1. Literature Review

The YOLO family of models has been studied and applied in many fields, including autonomous driving, security surveillance, and medical imaging [13]. Its adoption is motivated by the favorable balance between speed and accuracy. Redmon [14] introduced the first YOLO model, which advanced real-time object detection by framing detection as a single regression problem [15]. Subsequent versions, such as YOLOv3 and YOLOv4, brought major improvements in accuracy and efficiency, making them more suitable for complex tasks [14,16]. More recent versions, including YOLOv8, YOLOv9 [17], and the latest, YOLOv11 [18], have continued to improve performance through refined architectures and detection heads [19]. This research focuses on YOLOv8 because empirical testing showed that it performed best in forestry applications [20].

In computer vision, depth information, often obtained as point clouds from sensors such as LiDAR, has been crucial for improving model performance, especially in complex scenes with heavy occlusion. Although such sources provide valuable depth information, we did not have access to such data, so our research uses AI algorithms to estimate depth instead. This AI-based approach also broadens applicability across use cases. Researchers have explored various ways of incorporating depth into convolutional neural networks (CNNs) for object detection, semantic segmentation, and scene understanding. According to Eigen [21], depth maps can significantly improve the accuracy of semantic segmentation models by providing additional context about the spatial arrangement of objects in a scene. Other studies have shown that integrating depth into CNNs can improve the detection and segmentation of objects in cluttered environments, including dense urban and forest landscapes [22,23].

Forestry monitoring benefits significantly from computer vision techniques. However, forest environments pose particular challenges, including varying lighting conditions, dense foliage, and the presence of non-tree objects, which can lead to false positives and missed detections [24]. The use of depth information in forestry is still at an early stage, although preliminary studies suggest that it holds significant promise for improving tree detection and segmentation in densely forested areas [25].

When comparing LiDAR with depth estimation, choosing a cost-effective option requires weighing their respective advantages and limitations. Although LiDAR provides exceptional accuracy for depth mapping, it has drawbacks that limit its practicality in certain contexts: synchronizing and calibrating it with other sensor systems can be complex, and its high upfront and ongoing costs make it less feasible for large-scale forestry operations [26].

Machine learning-based depth models, such as Depth Anything V2 [11], which was used in this research, offer a more affordable and flexible alternative. They are less precise than LiDAR: LiDAR provides absolute depth measurements (in meters), whereas Depth Anything V2 provides only relative depth (no metric values). Nevertheless, these models offer significant advantages. Because they derive depth directly from RGB images, they simplify setup, integration, and maintenance. They scale across a wide range of platforms and devices, including drones and mobile systems, without specialized LiDAR-compatible infrastructure. They are also substantially more cost-effective, since they use RGB data from standard recording equipment. Unlike LiDAR sensors, which can cost tens of thousands of dollars to purchase and operate, these algorithms provide an accessible and economical solution for forestry applications, as demonstrated in this study [12].

1.2. Problem Statement

Despite major advances in YOLO models and the recognized potential of depth information, a significant gap remains in their application to forestry. Current object detection models are mainly trained and optimized for urban and indoor settings, where the challenges differ from those in forests. Forests have a complex structure of overlapping trees, dense branches, and undergrowth, which obstructs accurate object detection and segmentation and lowers the performance of models that rely solely on RGB images. YOLO models emerged as alternatives to region-based object detection methods because of their focus on real-time performance [27], and integrating depth information into them offers a way to address these challenges by providing additional spatial context that helps separate objects in cluttered forest scenes. However, this approach has not been explored in depth for forestry applications. Further research is therefore needed to evaluate the effectiveness of incorporating depth information into YOLO models and its impact on tree recognition and segmentation.

1.3. Objectives

This study aims to address the identified gap in forestry object detection and segmentation by integrating depth information into the YOLOv8 model. The primary objectives of this research are as follows:

- i. To develop and implement a methodology for incorporating depth information into the YOLOv8 segmentation model for the forestry environment.
- ii. To evaluate the performance of the depth-enhanced YOLOv8 model on forestry datasets, with a focus on tree recognition and segmentation accuracy in complex forest scenes.
- iii. To compare the performance of the depth-enhanced YOLOv8 model with a baseline YOLOv8 model that uses only RGB images, in order to quantify the impact of depth information on segmentation accuracy in forestry applications.

2. Methods

This section outlines the methodology and experimental design for evaluating a modified YOLOv8x-seg model on the FinnForest dataset. The dataset was preprocessed, its annotations were converted, and it was split into training and validation sets. The YOLOv8x-seg architecture was extended with depth information to improve object detection and segmentation using three-dimensional cues. Training involved data augmentation and fine-tuning for up to 1000 epochs with early stopping. Model performance was assessed with the mAP50 and mAP50-95 metrics, comparing a baseline model without depth data against the depth-enhanced version to measure the impact of depth information on accuracy and robustness.

2.1. Dataset

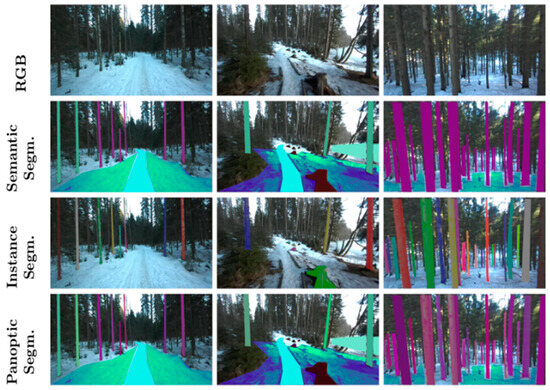

The FinnForest [12] dataset is a collection of images intended for forestry and environmental analysis, gathered from various sources and organized into several categories. The full FinnForest dataset contains more data than its FinnWoodlands subset, which consists of RGB stereo images, point clouds, and sparse depth maps, together with manual ground-truth annotations for semantic, instance, and panoptic segmentation. We emphasize that only the RGB images and their annotations were used. Table 1 summarizes the dataset, and Figure 1 shows example images with their annotations. Several preprocessing steps were applied to make the images suitable for training.

Table 1.

Summary of the FinnWoodlands dataset, including available data types, sample counts, and formats.

Figure 1.

Example of RGB images and corresponding semantic, instance, and panoptic segmentation from the FinnForest dataset.

The FinnForest dataset is designed for forestry research, particularly tree detection and segmentation using RGB and LiDAR data. Figure 1 illustrates the different segmentation annotations on sample images, highlighting how trees and pathways are labeled, and Table 1 summarizes the available data formats, including RGB images, LiDAR point clouds, and depth maps. These resources support advanced forest mapping and analysis for robotics and remote sensing applications.

For this study, the data were organized into training and validation sets. The COCO (Common Objects in Context) annotations were converted into a YOLO-compatible format [28], and a YAML (YAML Ain’t Markup Language) file was created to specify the dataset configuration. This preprocessing ensured that the data were correctly labeled and formatted for effective model training.
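The conversion step above can be sketched as follows. This is a minimal illustration, not the authors’ script: it assumes polygon-format COCO `segmentation` entries, a user-supplied mapping from COCO category IDs to YOLO class indices (hypothetical here), and it skips RLE-encoded crowd annotations. YOLO segmentation labels store one object per line as a class index followed by polygon vertices normalized by image width and height; the YAML helper writes the standard Ultralytics dataset keys with hypothetical paths and class names.

```python
def polygon_to_yolo_line(class_index, polygon, img_w, img_h):
    """Turn a flat COCO polygon [x1, y1, x2, y2, ...] in pixels into one YOLO label line."""
    if len(polygon) < 6 or len(polygon) % 2 != 0:
        raise ValueError("a polygon needs at least three (x, y) pairs")
    coords = []
    for i in range(0, len(polygon), 2):
        # YOLO expects coordinates normalized to [0, 1] by image width and height.
        x = min(max(polygon[i] / img_w, 0.0), 1.0)
        y = min(max(polygon[i + 1] / img_h, 0.0), 1.0)
        coords.append(f"{x:.6f} {y:.6f}")
    return f"{class_index} " + " ".join(coords)


def coco_to_yolo(coco, category_to_index):
    """Return {image file name: [YOLO label lines]} for an already-loaded COCO dict."""
    images = {img["id"]: img for img in coco["images"]}
    labels = {img["file_name"]: [] for img in coco["images"]}
    for ann in coco["annotations"]:
        if ann.get("iscrowd", 0):
            continue  # RLE crowd masks are not handled in this sketch
        img = images[ann["image_id"]]
        cls = category_to_index[ann["category_id"]]
        for polygon in ann["segmentation"]:
            labels[img["file_name"]].append(
                polygon_to_yolo_line(cls, polygon, img["width"], img["height"]))
    return labels


def dataset_yaml(root, names):
    """Build an Ultralytics-style dataset YAML string (paths and names are hypothetical)."""
    lines = [f"path: {root}", "train: images/train", "val: images/val", "names:"]
    lines += [f"  {i}: {name}" for i, name in enumerate(names)]
    return "\n".join(lines) + "\n"


# Tiny made-up example: one 100 x 50 image with one triangular annotation.
example = {
    "images": [{"id": 1, "file_name": "frame_0001.png", "width": 100, "height": 50}],
    "annotations": [{"image_id": 1, "category_id": 7, "iscrowd": 0,
                     "segmentation": [[10, 5, 90, 5, 50, 45]]}],
}
labels = coco_to_yolo(example, {7: 0})
print(labels["frame_0001.png"][0])  # 0 0.100000 0.100000 0.900000 0.100000 0.500000 0.900000
print(dataset_yaml("datasets/finnforest", ["spruce", "ground"]))
```

Each label file (one per image, same stem, `.txt` extension) would then contain these lines; the class list in the YAML must follow the same index order as `category_to_index`.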

Although our approach relies on single-image depth estimation, we tested the final model on a real-world forest set of 67 images to assess its performance under actual field conditions. These images came from the same forest region but were taken from different viewpoints, which provides a more practical evaluation than a single illustrative image.

2.2. Model Architecture

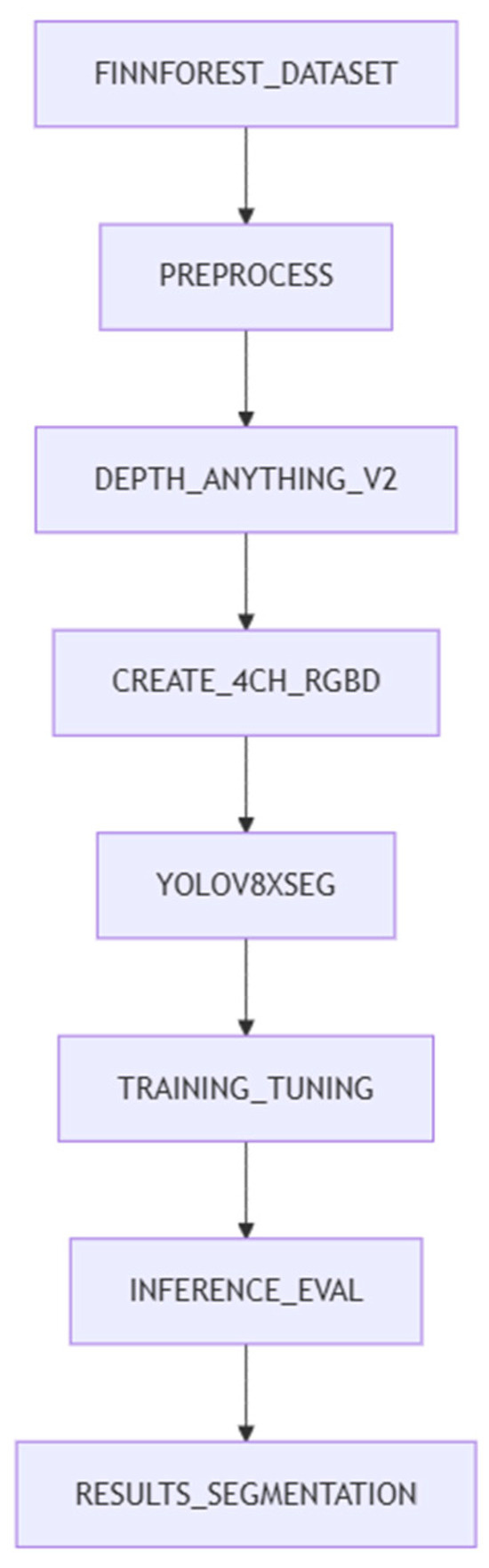

The largest variant, YOLOv8x-seg, was employed in this study. YOLOv8 uses a CSP-DarkNet53 backbone for feature extraction, whose CSP (Cross-Stage Partial Network) blocks improve gradient flow and reduce computation. It incorporates PANet (Path Aggregation Network) to fuse feature maps from different layers, improving object localization. Its anchor-free detection simplifies the detection pipeline and improves performance on complex backgrounds such as forests. YOLOv8x-seg adds a mask prediction branch that applies convolutional layers to generate segmentation masks for detected objects. The modified model, built with the Ultralytics4Channel repository [11], takes 4-channel RGB-D input, where the additional depth channel provides spatial information that improves tree segmentation. The Depth Anything V2 model was used to generate the depth maps that turn standard RGB images into RGB-D inputs. The model uses a specialized loss function to optimize segmentation accuracy, incorporating a weighted depth-based loss that emphasizes depth-aware object boundaries [29]. This improves the model’s ability to detect and categorize objects using three-dimensional cues [30]. The simplified solution architecture is shown in Figure 2.
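Accepting a fourth input channel mainly changes the first convolution, whose pretrained kernel has shape (out, 3, kh, kw). The text does not say how Ultralytics4Channel initializes the extra channel, so the NumPy sketch below shows one common technique under that assumption: "inflating" the kernel by appending a depth slice that is either the mean of the R, G, and B slices or all zeros. The zero option makes the 4-channel layer initially reproduce the RGB model exactly, while the mean option lets depth contribute from the first step.

```python
import numpy as np


def inflate_first_conv(weight_rgb, depth_init="mean"):
    """Extend a pretrained RGB kernel of shape (out, 3, kh, kw) to (out, 4, kh, kw).

    depth_init="mean":  depth kernel = average of the R, G, B kernels.
    depth_init="zeros": depth kernel = 0, so the layer initially ignores depth.
    """
    w = np.asarray(weight_rgb, dtype=np.float32)
    if w.ndim != 4 or w.shape[1] != 3:
        raise ValueError("expected a kernel of shape (out, 3, kh, kw)")
    if depth_init == "mean":
        depth = w.mean(axis=1, keepdims=True)
    elif depth_init == "zeros":
        depth = np.zeros_like(w[:, :1])
    else:
        raise ValueError("depth_init must be 'mean' or 'zeros'")
    return np.concatenate([w, depth], axis=1)


rng = np.random.default_rng(0)
w_rgb = rng.normal(size=(16, 3, 3, 3)).astype(np.float32)  # stand-in for pretrained weights
w_rgbd = inflate_first_conv(w_rgb)
print(w_rgbd.shape)  # (16, 4, 3, 3)

# With zero initialization, the response to an RGB-D patch equals the RGB-only response.
patch = rng.normal(size=(4, 3, 3))
w_zero = inflate_first_conv(w_rgb, "zeros")
rgbd_response = np.tensordot(w_zero, patch, axes=([1, 2, 3], [0, 1, 2]))
rgb_response = np.tensordot(w_rgb, patch[:3], axes=([1, 2, 3], [0, 1, 2]))
print(np.allclose(rgbd_response, rgb_response))  # True
```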

Figure 2.

Solution diagram.

2.3. Training Procedure

Training focused on optimizing model performance through fine-tuning on the FinnForest dataset with the following settings. A batch size of 16 was used for stability and computational efficiency. The learning rate was initially set to 0.001 and lowered dynamically with cosine annealing decay. Weight decay was set to 0.0005 to reduce overfitting, and momentum was 0.9 for convergence stability. The AdamW optimizer was used instead of SGD to improve performance on the segmentation task. Input images were standardized to 640 × 640 pixels [31].
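For reproduction, the settings above map naturally onto the argument names of the standard Ultralytics trainer. The dictionary below is a hedged sketch: the key names are those of the upstream `ultralytics` package, the dataset file name is hypothetical, and we have not verified that the Ultralytics4Channel fork accepts exactly the same keys.

```python
# Reported training settings expressed with upstream Ultralytics argument names.
train_args = {
    "epochs": 1000,          # upper bound; early stopping usually ends training sooner
    "patience": 100,         # stop after 100 epochs without validation improvement
    "batch": 16,
    "imgsz": 640,            # 640 x 640 input images
    "optimizer": "AdamW",
    "lr0": 0.001,            # initial learning rate
    "cos_lr": True,          # cosine learning-rate decay
    "weight_decay": 0.0005,
    "momentum": 0.9,         # to our understanding, used as beta1 for Adam-type optimizers
    "warmup_epochs": 10,     # warm-up length described in this section
}

# With the standard package, training would be launched roughly like this
# ("finnforest.yaml" is a hypothetical dataset file):
# from ultralytics import YOLO
# YOLO("yolov8x-seg.pt").train(data="finnforest.yaml", **train_args)
print(sorted(train_args))
```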

Several data augmentation methods, including random cropping, flipping, Gaussian noise, and brightness fluctuations, were used to improve the model’s ability to generalize. The FinnForest dataset was divided into training and validation subsets, with the larger portion reserved for training. The model was fine-tuned for up to 1000 epochs, with early stopping triggered if performance did not improve for 100 epochs. To evaluate its effect on model performance, depth information was added to the RGB training images as an additional channel.
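With 4-channel inputs, geometric augmentations (cropping, flipping) must be applied identically to the RGB channels, the depth channel, and the labels, or depth and masks drift out of alignment. The NumPy sketch below illustrates this; restricting the photometric changes (brightness, Gaussian noise) to the RGB channels is our assumption, since the text does not say whether depth was perturbed, and the crop size and noise parameters are hypothetical.

```python
import numpy as np


def augment_rgbd(rgbd, mask, rng, crop_hw=(512, 512), noise_std=5.0, max_brightness=0.2):
    """Randomly crop and flip an (H, W, 4) RGB-D image together with its (H, W) mask,
    then apply brightness scaling and Gaussian noise to the RGB channels only."""
    h, w, c = rgbd.shape
    ch, cw = crop_hw
    if c != 4 or mask.shape != (h, w) or ch > h or cw > w:
        raise ValueError("expected (H, W, 4) image, matching (H, W) mask, crop <= image")
    top = int(rng.integers(0, h - ch + 1))
    left = int(rng.integers(0, w - cw + 1))
    img = rgbd[top:top + ch, left:left + cw].astype(np.float32)
    msk = mask[top:top + ch, left:left + cw]
    if rng.random() < 0.5:  # horizontal flip applied to every channel and to the mask
        img, msk = img[:, ::-1], msk[:, ::-1]
    # Photometric changes on RGB only (assumption: depth is left untouched).
    rgb = img[..., :3] * (1.0 + rng.uniform(-max_brightness, max_brightness))
    rgb = rgb + rng.normal(0.0, noise_std, size=rgb.shape)
    out = np.concatenate([rgb, img[..., 3:]], axis=-1)
    return np.clip(out, 0, 255).astype(np.uint8), np.ascontiguousarray(msk)


rng = np.random.default_rng(0)
cols = (np.arange(700) % 256).astype(np.uint8)
rgbd = np.zeros((600, 700, 4), dtype=np.uint8)
rgbd[..., 3] = cols              # depth varies along x
mask = np.tile(cols, (600, 1))   # mask uses the same pattern so alignment can be checked
aug, aug_mask = augment_rgbd(rgbd, mask, rng)
print(aug.shape, np.array_equal(aug[..., 3], aug_mask))  # (512, 512, 4) True
```

For polygon labels in YOLO format, the same crop offset and flip would have to be applied to the vertex coordinates instead of a mask array.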

The model was optimized with the following loss functions: Complete IoU (CIoU) bounding-box regression loss for accurate object localization, Focal Loss to alleviate class imbalance, and Dice loss to improve segmentation mask accuracy. An 80/20 training/validation split was used to assess the model’s performance [32].
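The two mask-related losses can be written compactly. The NumPy sketch below shows the standard soft Dice loss and binary focal loss on per-pixel probabilities; the defaults alpha = 0.25 and gamma = 2 are the common choices from the original focal loss formulation and are assumptions here, since the text does not report the values used.

```python
import numpy as np


def dice_loss(pred_prob, target, eps=1e-6):
    """Soft Dice loss for one mask: 1 - 2|P ∩ T| / (|P| + |T|)."""
    p = np.asarray(pred_prob, dtype=float)
    t = np.asarray(target, dtype=float)
    inter = np.sum(p * t)
    return float(1.0 - (2.0 * inter + eps) / (np.sum(p) + np.sum(t) + eps))


def focal_loss(pred_prob, target, alpha=0.25, gamma=2.0, eps=1e-7):
    """Binary focal loss: mean of -alpha_t * (1 - p_t)^gamma * log(p_t)."""
    p = np.clip(np.asarray(pred_prob, dtype=float), eps, 1.0 - eps)
    t = np.asarray(target)
    p_t = np.where(t == 1, p, 1.0 - p)            # probability assigned to the true class
    alpha_t = np.where(t == 1, alpha, 1.0 - alpha)
    return float(np.mean(-alpha_t * (1.0 - p_t) ** gamma * np.log(p_t)))


target = np.array([1, 0, 1, 0])
print(dice_loss(target, target))                 # perfect mask gives a loss near 0
print(focal_loss([0.9, 0.1, 0.9, 0.1], target),  # confident and correct: small loss
      focal_loss([0.6, 0.4, 0.6, 0.4], target))  # uncertain: larger loss
```

The (1 - p_t)^gamma factor is what down-weights easy, well-classified pixels, so the many trivially correct background pixels contribute little and the rarer classes dominate the gradient.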

To avoid instability, a warm-up technique gradually raised the learning rate during the first ten epochs. Thereafter, the cosine annealing schedule gradually lowered the learning rate, which helps generalization and avoids overshooting [33].
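The combined schedule can be sketched per epoch as a linear warm-up to the initial rate of 0.001 over 10 epochs, followed by cosine decay over the remaining epochs. The final fraction `lrf` (here 0.01, so the rate ends at 1e-5) is an assumption, as the text does not report it, and real trainers often warm up per iteration rather than per epoch. Because early stopping with a patience of 100 may end training long before epoch 1000, the cosine curve is usually not followed to its floor.

```python
import math


def lr_at_epoch(epoch, total_epochs=1000, lr0=1e-3, warmup_epochs=10, lrf=0.01):
    """Linear warm-up to lr0, then cosine decay to lr0 * lrf (lrf is an assumption)."""
    if epoch < warmup_epochs:
        return lr0 * (epoch + 1) / warmup_epochs
    progress = min(1.0, (epoch - warmup_epochs) / max(1, total_epochs - warmup_epochs))
    return lr0 * (lrf + (1.0 - lrf) * 0.5 * (1.0 + math.cos(math.pi * progress)))


for e in (0, 5, 9, 10, 505, 1000):
    print(e, f"{lr_at_epoch(e):.2e}")
```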

2.4. Evaluation Metrics

Two metrics were used to evaluate the YOLO models: mean average precision at an intersection over union (IoU) threshold of 50% (mAP50) and mean average precision averaged over IoU thresholds from 50% to 95% (mAP50-95). mAP50 measures how accurately the model detects objects whose predicted masks overlap the ground-truth masks by at least 50%, while mAP50-95 gives a stricter assessment by averaging precision across multiple overlap thresholds. The calculation of mAP is broken down below.

Precision and Recall Calculations:

Precision (P): The ratio of correctly predicted objects to all predicted objects.

$$P = \frac{TP}{TP + FP}$$

Recall (R): The ratio of correctly predicted objects to total ground-truth objects.

$$R = \frac{TP}{TP + FN}$$

where

TP (True Positives) are the correctly detected objects;

FP (False Positives) are the incorrectly detected objects;

FN (False Negatives) are missed detections.

Intersection over Union (IoU):

To determine true positives, an IoU threshold was applied between the predictions and the ground-truth annotations.

IoU measures the overlap between the predicted mask ($B_p$) and the ground-truth mask ($B_g$):

$$\mathrm{IoU} = \frac{|B_p \cap B_g|}{|B_p \cup B_g|}$$

where $|B_p \cap B_g|$ is the intersection of the predicted and ground-truth masks and $|B_p \cup B_g|$ is their union.
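For binary masks, the IoU definition reduces to pixel counting. The NumPy sketch below computes it for one pair of masks and, for matching, as a full prediction-by-ground-truth matrix; returning 0 when both masks are empty is a convention chosen here, not taken from the text.

```python
import numpy as np


def mask_iou(pred_mask, gt_mask):
    """IoU of two binary masks of equal shape: |Bp ∩ Bg| / |Bp ∪ Bg|."""
    pred = np.asarray(pred_mask, dtype=bool)
    gt = np.asarray(gt_mask, dtype=bool)
    if pred.shape != gt.shape:
        raise ValueError("masks must have the same shape")
    union = np.logical_or(pred, gt).sum()
    if union == 0:
        return 0.0  # convention chosen here for two empty masks
    return float(np.logical_and(pred, gt).sum() / union)


def pairwise_mask_iou(pred_masks, gt_masks):
    """(P, H, W) predictions vs (G, H, W) ground truth -> (P, G) IoU matrix."""
    pm = np.asarray(pred_masks, dtype=bool)
    gm = np.asarray(gt_masks, dtype=bool)
    if pm.ndim != 3 or gm.ndim != 3 or pm.shape[1:] != gm.shape[1:]:
        raise ValueError("expected (N, H, W) mask stacks with equal H and W")
    hw = pm.shape[1] * pm.shape[2]
    p = pm.reshape(pm.shape[0], hw).astype(np.float64)
    g = gm.reshape(gm.shape[0], hw).astype(np.float64)
    inter = p @ g.T                                   # shared pixel counts
    union = p.sum(axis=1)[:, None] + g.sum(axis=1)[None, :] - inter
    return np.divide(inter, union, out=np.zeros_like(inter), where=union > 0)


pred = np.zeros((4, 4), dtype=bool)
pred[:, :2] = True   # left half: 8 pixels
gt = np.zeros((4, 4), dtype=bool)
gt[:2, :] = True     # top half: 8 pixels
print(mask_iou(pred, gt))  # 4 shared pixels out of 12 in the union
print(pairwise_mask_iou(np.stack([pred]), np.stack([gt, pred])))
```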

Applying IoU Thresholds:

mAP@50 (mAP50): Uses an IoU threshold of 0.50;

mAP@50-95 (mAP50-95): Averaged over IoU thresholds from 0.50 to 0.95 in increments of 0.05.

Computing Average Precision (AP):

Once precision and recall values were obtained, the precision–recall (PR) curve was plotted for each class.

AP (Average Precision) is the area under the precision–recall curve:

$$AP = \int_0^1 p(R)\, dR$$

where $p(R)$ is the precision as a function of recall.
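In practice, the area under the precision–recall curve is computed from a finite list of points, and the interpolation rule affects the result slightly. The NumPy sketch below contrasts two widely used variants: all-point interpolation (area under the monotone precision envelope) and COCO-style 101-point interpolation. Which variant produced the reported numbers is not stated in the text; the detection sequence in the example is made up.

```python
import numpy as np


def ap_all_point(recall, precision):
    """Area under the precision envelope (all-point interpolation)."""
    r = np.concatenate(([0.0], np.asarray(recall, dtype=float), [1.0]))
    p = np.concatenate(([1.0], np.asarray(precision, dtype=float), [0.0]))
    p = np.maximum.accumulate(p[::-1])[::-1]    # make precision non-increasing in recall
    step = np.where(r[1:] != r[:-1])[0]         # indices where recall changes
    return float(np.sum((r[step + 1] - r[step]) * p[step + 1]))


def ap_101_point(recall, precision):
    """COCO-style AP: mean interpolated precision at recall = 0.00, 0.01, ..., 1.00."""
    rec = np.asarray(recall, dtype=float)
    env = np.maximum.accumulate(np.asarray(precision, dtype=float)[::-1])[::-1]
    thresholds = np.linspace(0.0, 1.0, 101)
    idx = np.searchsorted(rec, thresholds, side="left")  # first point reaching each recall
    q = np.zeros_like(thresholds)
    reached = idx < len(rec)                             # unreached recall levels score 0
    q[reached] = env[idx[reached]]
    return float(q.mean())


# Made-up detections sorted by confidence for 3 ground-truth objects: TP, FP, TP, TP.
recall = [1 / 3, 1 / 3, 2 / 3, 1.0]
precision = [1.0, 1 / 2, 2 / 3, 3 / 4]
print(ap_all_point(recall, precision), ap_101_point(recall, precision))
```

Here the all-point AP is 2.5/3 ≈ 0.833 and the 101-point AP is 84.25/101 ≈ 0.834, so the choice matters only in the third decimal place for this example.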

To ensure a fair evaluation, the AP scores were computed at multiple IoU thresholds:

AP@50 (AP50): IoU threshold fixed at 0.50;

AP@50-95 (AP50-95): Averaged across IoU values from 0.50 to 0.95 in increments of 0.05.

Mean Average Precision (mAP):

The final model performance was assessed by computing the mean average precision (mAP) across all classes:

mAP (Mean Average Precision) is the mean of the AP scores across all classes:

$$mAP = \frac{1}{N} \sum_{i=1}^{N} AP_i$$

where $N$ is the number of classes and $AP_i$ is the average precision of class $i$.

The trained YOLOv8x-seg model was evaluated on the FinnForest dataset as follows. For every image, the model produced segmentation masks and bounding boxes with confidence scores. The IoU threshold was set to 0.50 for mAP50 and ranged from 0.50 to 0.95 in steps of 0.05 for mAP50-95. IoU values were used to count true positives, false positives, and false negatives, and precision and recall were computed at multiple confidence thresholds. A precision–recall curve was plotted for each class, and the area under it gave the class AP. The final mAP score was the average of the per-class AP scores. For consistency, only predictions with confidence scores ≥ 0.5 were considered in the evaluation [34].
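The evaluation steps above can be tied together in a short sketch for one class: predictions are filtered at confidence 0.5, sorted by confidence, and greedily matched to unmatched ground-truth masks (COCO-style matching, assumed here rather than taken from the text). The resulting TP flags give the precision–recall curve and AP, and averaging AP over the ten IoU thresholds gives mAP50-95; mAP is then the mean over classes. Over a whole dataset, TP flags and scores from all images would be pooled and re-sorted by confidence before computing AP. Note that filtering at 0.5 cuts the curve at the recall reached by the retained predictions, which can lower AP compared with evaluating all predictions. The IoU values below are made up.

```python
import numpy as np


def match_predictions(scores, ious, iou_thr, conf_thr=0.5):
    """Greedy matching for one class in one image.

    scores: (P,) confidence of each predicted mask.
    ious:   (P, G) IoU between each prediction and each ground-truth mask.
    Returns TP flags in order of decreasing confidence and the number of GT objects.
    """
    scores = np.asarray(scores, dtype=float)
    ious = np.asarray(ious, dtype=float)
    keep = scores >= conf_thr                       # confidence filter from the text
    scores, ious = scores[keep], ious[keep]
    order = np.argsort(-scores, kind="stable")
    n_gt = ious.shape[1]
    matched = np.zeros(n_gt, dtype=bool)
    tp = np.zeros(len(order), dtype=bool)
    for rank, i in enumerate(order):
        if n_gt == 0:
            break
        cand = np.where(matched, -1.0, ious[i])     # each GT object can be matched once
        j = int(np.argmax(cand))
        if cand[j] >= iou_thr:
            matched[j] = True
            tp[rank] = True
    return tp, n_gt


def ap_from_tp(tp, n_gt):
    """All-point interpolated AP from confidence-ordered TP flags."""
    if n_gt == 0:
        return 0.0
    ctp, cfp = np.cumsum(tp), np.cumsum(~tp)
    r = np.concatenate(([0.0], ctp / n_gt, [1.0]))
    p = np.concatenate(([1.0], ctp / np.maximum(ctp + cfp, 1), [0.0]))
    p = np.maximum.accumulate(p[::-1])[::-1]
    step = np.where(r[1:] != r[:-1])[0]
    return float(np.sum((r[step + 1] - r[step]) * p[step + 1]))


def map50_95(scores, ious):
    """Average AP over IoU thresholds 0.50, 0.55, ..., 0.95."""
    thresholds = np.linspace(0.5, 0.95, 10)
    return float(np.mean([ap_from_tp(*match_predictions(scores, ious, t)) for t in thresholds]))


scores = np.array([0.9, 0.8, 0.6, 0.3])
ious = np.array([[0.88, 0.10],
                 [0.20, 0.57],
                 [0.70, 0.00],
                 [0.00, 0.90]])  # made-up IoUs; the 0.3-confidence prediction is filtered out
print(ap_from_tp(*match_predictions(scores, ious, 0.5)))  # 1.0
print(map50_95(scores, ious))                             # 0.5
```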

2.5. Experiment Design

As discussed in Section 1.1, AI-based depth estimation was chosen over LiDAR because LiDAR, although highly accurate, is costly and complex to synchronize and calibrate with other sensor systems [35]. Machine learning-based depth models such as Depth Anything V2 are less precise, but they derive depth directly from standard RGB images, which makes them cheaper, easier to maintain, and deployable on platforms such as drones and mobile systems.

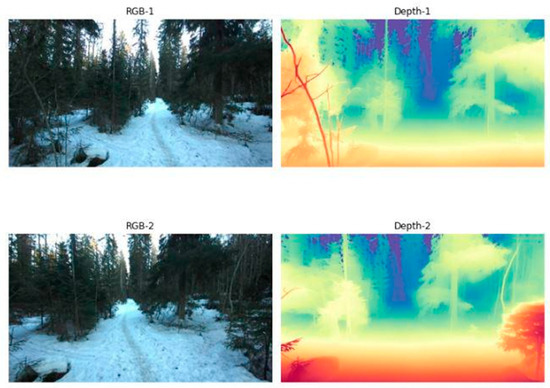

In this research, the incorporation of depth information relied on the Depth Anything V2 model and the Ultralytics4Channel (https://github.com/g-h-anna/ultralytics4channel (accessed 25 February 2025)) [15] repository, a specialized adaptation of YOLOv8 architecture designed for RGB-D data [16]. Depth Anything V2 was employed to extract the relative depth information from RGB images, providing additional spatial context, such as tree sizes and distances, which enhanced segmentation accuracy significantly. By combining the extracted depth data with RGB imagery to form 4-channel (RGB-D) inputs, the Ultralytics4Channel library facilitated seamless processing and training for depth-enhanced segmentation tasks. A sample is presented in Figure 3.
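Forming the 4-channel input amounts to scaling the relative depth map to the same 8-bit range as the RGB channels and stacking it as a fourth channel. The NumPy sketch below assumes per-image min-max scaling; the text does not say how depth was normalized. Per-image scaling suits relative depth because only the ordering of values within one image is meaningful, but it also means that a given depth value is not comparable across images.

```python
import numpy as np


def to_rgbd(rgb, relative_depth):
    """Stack an (H, W, 3) uint8 image with an (H, W) relative depth map into (H, W, 4).

    Depth is min-max scaled per image to 0-255 (an assumption: relative depth has
    no metric unit, so only its ordering within the image is meaningful).
    """
    rgb = np.asarray(rgb)
    d = np.asarray(relative_depth, dtype=np.float64)
    if rgb.ndim != 3 or rgb.shape[2] != 3 or rgb.shape[:2] != d.shape:
        raise ValueError("expected (H, W, 3) RGB and (H, W) depth of the same size")
    lo, hi = float(d.min()), float(d.max())
    scaled = (d - lo) / (hi - lo) if hi > lo else np.zeros_like(d)
    depth_u8 = np.round(scaled * 255.0).astype(np.uint8)
    return np.dstack([rgb.astype(np.uint8), depth_u8])


# The depth map would come from a monocular model such as Depth Anything V2
# (for example, run through the Hugging Face transformers "depth-estimation"
# pipeline); a synthetic map stands in for it here.
rgb = np.zeros((2, 3, 3), dtype=np.uint8)
depth = np.array([[0.0, 1.0, 2.0], [3.0, 4.0, 5.0]])
rgbd = to_rgbd(rgb, depth)
print(rgbd.shape)       # (2, 3, 4)
print(rgbd[..., 3])
```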

Figure 3.

Examples of the RGB images vs. the corresponding relative depth images based on Depth Anything V2.

The experimental design involved comparing a baseline YOLOv8x-seg model trained on RGB images without depth information and an enhanced version of the YOLOv8x-seg model that incorporated depth data into RGB images. The baseline model served as a reference to evaluate the efficacy of depth information in improving object recognition capabilities. Performance metrics, including mAP50 and mAP50-95, were analyzed to determine the impact of depth information on detection and segmentation accuracy and model performance. By leveraging both Depth Anything V2 and Ultralytics4Channel, this study effectively demonstrated the advantages of incorporating depth data in forestry segmentation tasks, providing a cost-effective and robust alternative to LiDAR-based systems.

3. Results

The performance of the YOLOv8 models was assessed using two metrics to evaluate their effectiveness in object segmentation. These included the mAP50 and the mAP50-95, presented in Table 2. We also note that while Depth Anything V2 produces a relative depth scale rather than an absolute metric distance, prior evaluations [11,12] indicate it can still reliably differentiate near from far objects, which aids segmentation.

Table 2.

Model Performance Comparison for Spruce vs. Ground Segmentation Based on Masks.

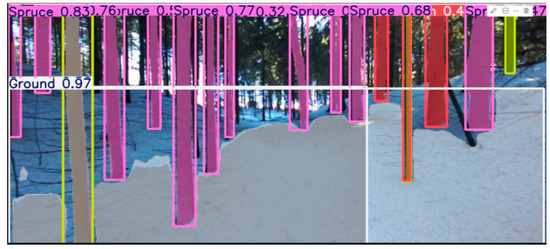

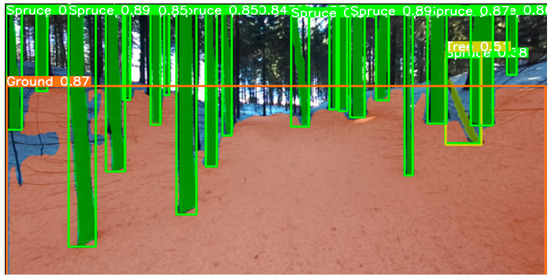

The comparison between the baseline and depth-enhanced YOLOv8 models revealed a clear difference. For spruce segmentation, the baseline YOLOv8x-seg model achieved a mask mAP50 of 0.778 and a mAP50-95 of 0.472. With depth information, mAP50 improved to 0.848 (+0.070) and mAP50-95 to 0.523 (+0.051), a substantial gain in segmentation accuracy. For ground segmentation, the baseline model achieved a mAP50 of 0.750 and a mAP50-95 of 0.460. With depth information, performance increased slightly, to 0.770 (+0.020) for mAP50 and 0.485 (+0.025) for mAP50-95, a more modest improvement than for spruce. Sample results are shown in Figure 4 and Figure 5. Note that the annotations in the FinnForest dataset are not very accurate; for this reason, the ground segmentation score in Figure 4 is higher than in Figure 5, even though the ground segmentation looks more accurate in Figure 5.
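For reference, the absolute gains in Table 2 can also be expressed relative to the baseline scores. The following is simple arithmetic on the reported values, not an additional result:

$$
\begin{aligned}
\text{Spruce, mAP50:}\quad & 0.848 - 0.778 = 0.070, \qquad 0.070 / 0.778 \approx 9.0\% \\
\text{Spruce, mAP50-95:}\quad & 0.523 - 0.472 = 0.051, \qquad 0.051 / 0.472 \approx 10.8\% \\
\text{Ground, mAP50:}\quad & 0.770 - 0.750 = 0.020, \qquad 0.020 / 0.750 \approx 2.7\% \\
\text{Ground, mAP50-95:}\quad & 0.485 - 0.460 = 0.025, \qquad 0.025 / 0.460 \approx 5.4\%
\end{aligned}
$$

The spruce gains are therefore larger than the ground gains in relative terms as well as in absolute terms.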

Figure 4.

Spruce and Ground segmentation with no depth information.

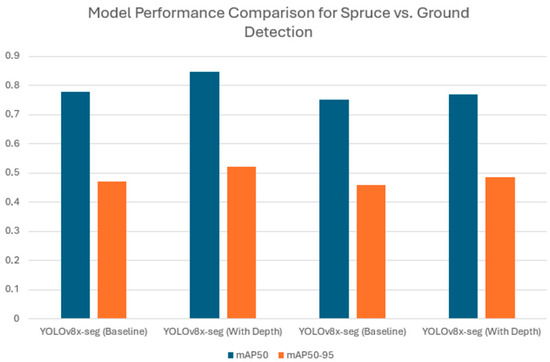

Overall, integrating depth information from Depth Anything V2 improved the tree recognition capabilities of the YOLOv8x-seg model on the FinnForest dataset. The improvement in Spruce segmentation, with mAP50 increasing from 0.778 to 0.848 and mAP50-95 from 0.472 to 0.523, shows the importance of depth information for refining segmentation accuracy. Ground segmentation also benefited from depth information, but its improvements were less pronounced than those for Spruce. This comparison is presented graphically in Figure 5.

Figure 5.

Spruce and Ground segmentation with depth information.

This improvement (see Figure 6) reflects the value of depth information for refining segmentation accuracy in forested environments. The difference in improvement between Spruce and Ground segmentation reflects the particular advantage of depth information for identifying vertical structures such as trees.

Figure 6.

Model Performance Comparison for Spruce vs. Ground Segmentation.

Analysis

Adding depth information to the YOLOv8x-seg model significantly improves object detection performance in complex forest environments, particularly in dense scenes with overlapping boundaries, such as tree trunks and foliage. Depth provides additional spatial context that enables the model to separate closely positioned or partially occluded objects. A direct comparison with LiDAR was not fully achievable, and, as Figure 3 and Figure 4 show, the detection is not yet optimal. Although real LiDAR data were used, a one-to-one comparison was not possible because the tested images did not come from the same scans. Nevertheless, we observed that occlusion by foreground objects made distant objects difficult to distinguish even in LiDAR scans. A slight offset between the RGB images and the LiDAR scans also affects their alignment.

It is important to stress that our main goal was to operate the UAV within the forest and collect scans at different depths and positions. To increase detection precision, this method concentrates on foreground objects, which are of greatest relevance because they are more visible and captured at higher resolution. The improvement is especially evident for vertical structures such as trees, where depth data allow precise identification and segmentation.

Notable performance gains were observed in specific classes such as “Spruce”, where mAP50 increased from 0.778 to 0.848 and mAP50-95 from 0.472 to 0.523. These results emphasize the importance of depth information for recognizing objects with pronounced three-dimensional structure. Ground detection also improved, with mAP50 rising from 0.750 to 0.770 and mAP50-95 from 0.460 to 0.485, but these gains were more modest, suggesting that depth data have a greater impact on vertical structures than on flat surfaces. We attribute the improvement to the model’s ability to exploit even subtle variations in object depth, which reinforces the value of depth information in forestry applications.

Depth-enhanced segmentation offers several practical benefits, particularly for forestry operations. It enables accurate tree sizing, allowing for precise height and diameter estimations critical to resource management. Additionally, depth data improve robustness against lighting challenges, such as shadows and light variations, where RGB-based accuracy often diminishes. By enhancing boundary definition, it enables better distinction between overlapping logs and trees, a key requirement in dense forest environments.
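
Tree sizing of this kind rests on the pinhole camera model: an object spanning h pixels at distance Z from a camera with focal length f (in pixels) has a real extent of roughly h·Z/f. The sketch below assumes metric depth, intrinsics known from calibration, and a tree roughly parallel to the image plane; a relative-depth output such as that of Depth Anything V2 would first have to be converted to metres. The class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PinholeCamera:
    fx: float  # focal length along x, in pixels (assumed known from calibration)
    fy: float  # focal length along y, in pixels


def metric_extent(pixel_extent: float, depth_m: float, focal_px: float) -> float:
    """Similar triangles: real size = pixel size * distance / focal length.

    Assumes the object lies roughly in a plane parallel to the image plane at depth_m.
    """
    if depth_m <= 0 or focal_px <= 0:
        raise ValueError("depth and focal length must be positive")
    return pixel_extent * depth_m / focal_px


def tree_size(cam: PinholeCamera, mask_height_px: float,
              trunk_width_px: float, depth_m: float) -> tuple[float, float]:
    """Return (height_m, diameter_m) for a segmented tree at a metric depth."""
    height_m = metric_extent(mask_height_px, depth_m, cam.fy)  # vertical extent uses fy
    diameter_m = metric_extent(trunk_width_px, depth_m, cam.fx)  # horizontal extent uses fx
    return height_m, diameter_m


if __name__ == "__main__":
    cam = PinholeCamera(fx=1000.0, fy=1000.0)
    print(tree_size(cam, mask_height_px=1200.0, trunk_width_px=30.0, depth_m=10.0))
```

With these illustrative numbers, a mask 1200 px tall and a trunk 30 px wide at 10 m give a height of 12 m and a diameter of 0.3 m. Any error in depth scales both estimates proportionally, which is why metric accuracy of the depth source matters for this use.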

Overall, incorporating depth information into the YOLOv8x-seg model not only enhances its accuracy and robustness but also offers transformative advantages for practical applications. These include improved tree identification, better segmentation in challenging environments, and reliable performance in scenarios where RGB data alone may fail. This demonstrates the critical role of depth information in advancing forestry segmentation technologies. Although the Depth Anything V2 model offers a fresh approach, further study is needed to establish its accuracy and practicality. The observed rise in mAP indicates its efficacy, even though a direct comparison with LiDAR is not possible owing to the shortage of available LiDAR data. LiDAR is nevertheless expected to provide more precise depth measurements, so depth taken directly from LiDAR would probably yield more accurate results, even though AI-based depth estimation is a practical alternative.

4. Discussion

The integration of depth information into object detection and segmentation models leads to a significant improvement in accuracy, as this study demonstrates with results that exceed those of most previous studies. For instance, the mAP50 of 0.848 achieved with the depth-enhanced YOLOv8 model for spruce detection is considerably higher than the results reported in several studies that used traditional RGB-only models, such as the study by Redmon et al. [15], which achieved a mAP50 of 0.731 using YOLOv3, and the study by Bochkovskiy et al. [16], which reached a mAP50 of 0.734 using YOLOv4. Because these studies used different datasets and tasks, such comparisons are indicative rather than direct. The results nevertheless underline the effectiveness of depth data in improving detection accuracy, especially for vertical structures such as trees, as evidenced by the larger improvement in Spruce detection than in Ground detection. Depth provides spatial information that cannot be captured in RGB images alone [15,16].

Similarly, the improved mAP50-95 score of 0.523 with the depth-enhanced model appears to surpass the performance of models in previous studies. For instance, Zhang et al. [36] achieved a mAP50-95 of 0.430 using Faster R-CNN with multi-scale feature fusion. This suggests that incorporating depth information facilitates the extraction of more distinguishable features and more accurate localization of objects. Similar effects have been observed before; Liu et al. [37], for example, found that depth maps improved object detection in cluttered environments. For forest monitoring specifically, these advancements align with the findings of several studies, including those by Indiran et al. [38] and Lechner et al. [39], which showed that depth sensors improve the detection of vegetation and trees more than traditional methods do. These studies complement the present findings, in which depth-enhanced models significantly improved the accuracy of tree species classification and forest structure analysis. The benefits of the depth-enhanced YOLOv8 model are further supported by Xie et al. [40] and Wang et al. [41], who report similar improvements in object detection and segmentation through the integration of depth information.

5. Implications

The findings of this study have significant practical implications for forest monitoring, environmental conservation, and sustainable resource management. By providing accurate assessments of forest structure, depth-enhanced models facilitate more effective forest management strategies and biodiversity evaluations while supporting the development of automated systems for monitoring extensive forest areas.

In conservation efforts, the ability to accurately differentiate between tree species and analyze forest structures aids in the implementation of targeted conservation measures. This precision supports habitat protection initiatives and informs conservation priorities. Process automation enables more comprehensive and timely forest assessments, which are essential for proactive environmental conservation [42].

The real-world applications of depth-enhanced segmentation extend to several critical domains. In carbon accounting, the technology supports accurate biomass and carbon stock estimation, aiding green initiatives aimed at reducing emissions and building climate resilience. Mapping tree sizes and forest areas with high precision facilitates strategic planning for sustainable forestry and climate change adaptation. Depth-enhanced segmentation also plays a key role in fire risk assessment by enabling continuous monitoring of vegetation, moisture levels, and other wildfire risk indicators [43].
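
Carbon accounting typically converts tree dimensions into biomass through species-specific allometric equations, which is where depth-derived diameters would enter. The sketch assumes the generic power-law form AGB = a·DBH^b with placeholder coefficients `a` and `b` (real values must come from published equations for the species and region), a carbon fraction of 0.5 of dry biomass as a commonly used approximation, and the molar-mass ratio 44/12 to convert carbon to CO2. The function names are hypothetical.

```python
def aboveground_biomass_kg(dbh_cm: float, a: float, b: float) -> float:
    """Generic power-law allometry: AGB = a * DBH**b.

    a and b are species- and region-specific; the values in the example below are
    placeholders, not calibrated coefficients.
    """
    if dbh_cm <= 0:
        raise ValueError("diameter must be positive")
    return a * dbh_cm ** b


def co2_equivalent_kg(biomass_kg: float, carbon_fraction: float = 0.5) -> float:
    """Dry biomass -> carbon (biomass * fraction) -> CO2 (carbon * 44/12)."""
    carbon_kg = biomass_kg * carbon_fraction
    return carbon_kg * 44.0 / 12.0


if __name__ == "__main__":
    dbh_cm = 0.30 * 100.0  # a diameter in metres converted to centimetres
    agb = aboveground_biomass_kg(dbh_cm, a=0.1, b=2.5)  # placeholder coefficients
    print(f"AGB ~ {agb:.1f} kg, CO2e ~ {co2_equivalent_kg(agb):.1f} kg")
```

Because the exponent b is typically well above 1, a small relative error in the estimated diameter grows into a larger relative error in biomass, so the accuracy of the depth-derived diameter directly bounds the accuracy of the carbon estimate.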

For semi-autonomous and autonomous machinery, depth data ensure safer navigation in dense and irregular forest environments, contributing to more secure and efficient forest management practices. These advancements highlight the transformative potential of depth-enhanced segmentation in addressing critical environmental challenges and advancing sustainable forestry solutions.

6. Limitations

One of the most notable limitations of the study is the size, diversity, and accuracy of the FinnForest dataset, which may affect the generalizability of the results. Processing and integrating depth data also required additional computational resources, which can significantly limit the model's real-time processing capabilities [39]. Further limitations stem from constraints of the YOLOv8 model architecture. Future work could explore more sophisticated depth-sensing technologies and hybrid models that combine depth with other features to further enhance model performance [43,44].

Additionally, while Depth Anything V2 has been shown to provide reliable relative depth in lab tests [11,12], real-world performance in heavily occluded forests may deviate. Benchmarking against a physically measured ground truth could further validate absolute error rates.
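
Benchmarking a relative-depth estimator against LiDAR usually requires first fitting the unknown scale and shift by least squares and then computing an error metric such as the absolute relative error (AbsRel). A minimal sketch, assuming `pred` and `target` are pixel-aligned arrays in the same space (both depth or both inverse depth, which must be checked for the specific estimator), with zero marking pixels that have no LiDAR return. The function names are illustrative.

```python
import numpy as np


def align_scale_shift(pred: np.ndarray, target: np.ndarray, valid: np.ndarray):
    """Fit s, t minimizing ||s * pred + t - target||^2 over valid pixels.

    Returns the aligned prediction (for every pixel) together with s and t.
    """
    p = pred[valid].astype(np.float64)
    g = target[valid].astype(np.float64)
    A = np.stack([p, np.ones_like(p)], axis=1)  # design matrix [pred, 1]
    (s, t), *_ = np.linalg.lstsq(A, g, rcond=None)
    return s * pred + t, float(s), float(t)


def abs_rel(pred: np.ndarray, target: np.ndarray, valid: np.ndarray) -> float:
    """Mean of |pred - target| / target over valid pixels (target must be > 0 there)."""
    p = pred[valid].astype(np.float64)
    g = target[valid].astype(np.float64)
    return float(np.mean(np.abs(p - g) / g))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    lidar = rng.uniform(2.0, 30.0, size=(4, 4))
    lidar[rng.random((4, 4)) < 0.5] = 0.0          # sparse LiDAR: many empty pixels
    relative = 0.1 * lidar + 0.5 + rng.normal(0.0, 0.05, size=lidar.shape)
    mask = lidar > 0
    aligned, s, t = align_scale_shift(relative, lidar, mask)
    print(f"scale={s:.2f} shift={t:.2f} AbsRel={abs_rel(aligned, lidar, mask):.3f}")
```

Only the aligned error is meaningful for a relative-depth model; reporting AbsRel on unaligned outputs would mostly measure the arbitrary scale of the prediction.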

7. Business Implications

The integration of advanced technologies into forestry practices has various business implications, chiefly in smart forestry, ecological sustainability, and cost optimization. The application of technologies like Geographic Information Systems (GIS), remote sensing techniques, and the Internet of Things (IoT) in forestry is revolutionizing the management and use of forest resources [45]. These technologies enable real-time monitoring of forest conditions, optimization of harvest schedules, and improvement of resource management. Smart sensors, for instance, can track tree health and growth, supporting more informed decisions about when and where to harvest. This reduces waste and enhances productivity [46].

Technological advancements have also contributed to ecological sustainability by enabling greater precision in forestry practices. More precise practices help align forest management with conservation goals, ultimately reducing environmental impact and better supporting biodiversity [47].

Cost optimization is the other key implication. Emerging technologies lower the cost of forestry operations by streamlining processes and reducing manual labor. For instance, automated machinery such as harvesters and forwarders is increasingly equipped with advanced sensors that increase operational efficiency and decrease overall operational costs. Additionally, predictive analytics can help forecast demand and supply trends, further assisting strategic planning and cost management [48].

8. Conclusions

Integrating depth information into the YOLOv8x-seg model offers significant advantages for forestry applications, revolutionizing object recognition and segmentation in complex forest environments where traditional RGB-only models often fall short. This study demonstrates that incorporating depth data enhances the accuracy of tree identification, forest health monitoring, and ecosystem analysis. By improving data precision, this approach contributes to more effective forest management strategies, better responses to environmental challenges, and innovations in remote sensing and computer vision aimed at supporting sustainable forestry practices globally.

The implications of depth-enhanced segmentation extend beyond tree detection itself. The approach supports applications ranging from timber harvest optimization to habitat health monitoring and wildfire risk assessment. By leveraging depth data, these models operate more reliably in complex environments, reducing the risk of accidents and enhancing safety in forestry operations.

The results of the study demonstrate how depth-enhanced segmentation can be used practically in forestry to improve resource management, conservation initiatives, and forest monitoring. By facilitating accurate assessments of forest structures, the method supports climate-resilience initiatives such as carbon stock estimation and wildfire risk assessment, as well as automated monitoring and biodiversity evaluation.

Notwithstanding its benefits, the study is limited by the size of the dataset and the computational requirements of depth integration, which have an impact on real-time processing. To improve effectiveness and applicability in various forestry contexts, future research needs to explore hybrid models and modern depth-sensing technologies.

Intelligent utilization of resources, conservation of the environment, and cost optimization are all facilitated by the incorporation of modern technology in forestry. Such technology streamlines procedures and lowers operating costs, while innovations like GIS, IoT, and predictive analytics increase productivity, reduce environmental impact, and improve decision-making.

Depth-enhanced segmentation facilitates thorough environmental assessments, aids in biodiversity preservation, and supports wildfire risk evaluation by enabling more accurate mapping of forest structures and conditions. This approach strengthens the capacity to address environmental challenges while promoting sustainable practices.

Further validation of Depth Anything V2 or similar single-image depth estimators against physically measured references in real forest plots would strengthen claims of real-world applicability, especially for critical tasks like calculating tree distances, diameters, and heights.

Future research could further enhance these findings by expanding datasets to include a broader range of forest types and conditions, improving the robustness and generalizability of the model. Additionally, exploring the integration of alternative depth-sensing technologies, such as LiDAR combined with RGB-depth data, may yield even greater advancements in detection accuracy, segmentation performance, and processing efficiency.
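
Fusing LiDAR with RGB-D data, and checking the RGB-LiDAR misalignment noted in the results, both start from projecting LiDAR points into the camera image. A minimal sketch under the standard pinhole model, assuming calibrated extrinsics `R`, `t` (LiDAR to camera frame) and intrinsics `K`; the function name and the convention that 0 marks empty pixels are illustrative choices. Any error in `R` and `t` appears directly as the kind of shift between modalities described earlier.

```python
import numpy as np


def project_lidar_to_depth(points_lidar: np.ndarray, R: np.ndarray, t: np.ndarray,
                           K: np.ndarray, height: int, width: int) -> np.ndarray:
    """Project N x 3 LiDAR points into an H x W sparse depth map (0 = no return)."""
    pts_cam = points_lidar @ R.T + t          # LiDAR frame -> camera frame
    in_front = pts_cam[:, 2] > 0              # drop points behind the camera
    pts_cam = pts_cam[in_front]
    z = pts_cam[:, 2]

    uvw = pts_cam @ K.T                       # homogeneous pixel coordinates
    u = np.floor(uvw[:, 0] / uvw[:, 2]).astype(int)
    v = np.floor(uvw[:, 1] / uvw[:, 2]).astype(int)
    inside = (u >= 0) & (u < width) & (v >= 0) & (v < height)
    u, v, z = u[inside], v[inside], z[inside]

    depth = np.full((height, width), np.inf)
    np.minimum.at(depth, (v, u), z)           # keep the nearest return per pixel
    depth[np.isinf(depth)] = 0.0              # pixels without any return
    return depth


if __name__ == "__main__":
    K = np.array([[100.0, 0.0, 50.0], [0.0, 100.0, 50.0], [0.0, 0.0, 1.0]])
    pts = np.array([[0.0, 0.0, 10.0], [0.0, 0.0, 5.0], [1.0, 0.0, 10.0]])
    d = project_lidar_to_depth(pts, np.eye(3), np.zeros(3), K, 100, 100)
    print(np.argwhere(d > 0), d[d > 0])
```

`np.minimum.at` is used instead of plain fancy-index assignment because it handles several points landing on the same pixel deterministically, so the nearest (unoccluded) return is kept.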

Author Contributions

Conceptualization, K.W. and J.N.; methodology, K.W.; validation, M.S.T.; formal analysis, M.S.T.; investigation, K.W. and M.S.T.; resources, K.W. and M.S.T.; data curation, K.W. and M.S.T.; writing—original draft preparation, K.W. and M.K.; writing—review and editing, K.W. and J.N.; visualization, M.K.; supervision, O.Ż. All authors have read and agreed to the published version of the manuscript.

Funding

This research was developed as part of the Agrarsense Project under Grant Agreement No. 101095835. The project is supported by the Chips Joint Undertaking and its members, including top-up funding from Sweden, Czechia, Finland, Ireland, Italy, Latvia, the Netherlands, Norway, Poland, and Spain, including the National Centre for Research and Development of Poland.

Data Availability Statement

The data are contained within the article.

Conflicts of Interest

Authors Krzysztof Wołk, Jacek Niklewski, Marek S. Tatara, Michał Kopczyński and Oleg Żero were employed by the company DAC.Digital SA. All authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Chehreh, B.; Moutinho, A.; Viegas, C. Latest trends on tree classification and segmentation using UAV data—A review of agroforestry applications. Remote Sens. 2023, 15, 2263.

- Wołk, K.; Tatara, M.S. A Review of Semantic Segmentation and Instance Segmentation Techniques in Forestry Using LiDAR and Imagery Data. Electronics 2024, 13, 4139.

- Terven, J.; Córdova-Esparza, D.-M.; Romero-González, J.-A. A comprehensive review of YOLO architectures in computer vision: From YOLOv1 to YOLOv8 and YOLO-NAS. Mach. Learn. Knowl. Extr. 2023, 5, 1680–1716.

- Rasi, D.; AntoBennet, M.; Renjith, P.N.; Arun, M.R.; Vanathi, D. YOLO based deep learning model for segmenting the color images. Int. J. Electr. Electron. Res. (IJEER) 2023, 11, 359–370.

- Martinho, V.J.P.D.; Ferreira, A.J.D. Forest resources management and sustainability: The specific case of european union countries. Sustainability 2020, 13, 58.

- Ülkü, İ.; Akagündüz, E.; Ghamisi, P. Deep semantic segmentation of trees using multispectral images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 7589–7604.

- Liang, F.; Duan, L.; Ma, W.; Qiao, Y.; Miao, J.; Ye, Q. Context-aware network for RGB-D salient object detection. Pattern Recognit. 2021, 111, 107630.

- Ma, K.; Chen, Z.; Fu, L.; Tian, W.; Jiang, F.; Yi, J.; Sun, H. Performance and sensitivity of individual tree segmentation methods for UAV-LiDAR in multiple forest types. Remote Sens. 2022, 14, 298.

- Wang, R.; Chen, L.; Huang, Z.; Zhang, W.; Wu, S. A Review on the High-Efficiency Detection and Precision Positioning Technology Application of Agricultural Robots. Processes 2024, 12, 1833.

- Braunschweiger, D.; Ohmura, T.; Schweier, J.; Olschewski, R.; Schulz, T. Preferences for proactive and reactive climate-adaptive forest management and the role of public financial support. For. Policy Econ. 2024, 169, 103348.

- Yang, L.; Kang, B.; Huang, Z.; Zhao, Z.; Xu, X.; Feng, J.; Zhao, H. Depth Anything V2. arXiv 2024, arXiv:2406.09414.

- Yang, L.; Kang, B.; Huang, Z.; Xu, X.; Feng, J.; Zhao, H. Depth anything: Unleashing the power of large-scale unlabeled data. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 10371–10381.

- Park, S.S.; Tran, V.T.; Lee, D.E. Application of various yolo models for computer vision-based real-time pothole detection. Appl. Sci. 2021, 11, 11229.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788. Available online: https://ieeexplore.ieee.org/document/7780460 (accessed on 10 August 2024).

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Hussain, M. YOLO-v1 to YOLO-v8, the rise of YOLO and its complementary nature toward digital manufacturing and industrial defect detection. Machines 2023, 11, 677.

- Jegham, N.; Koh, C.Y.; Abdelatti, M.; Hendawi, A. Evaluating the Evolution of YOLO (You Only Look Once) Models: A Comprehensive Benchmark Study of YOLO11 and Its Predecessors. arXiv 2024, arXiv:2411.00201.

- Swathi, Y.; Challa, M. YOLOv8: Advancements and innovations in object detection. In Smart Trends in Computing and Communications, Proceedings of SmartCom 2024, Pune, India, 12–13 January 2024; Springer: Singapore, 2024; pp. 1–13.

- Zhong, H.; Zhang, Z.; Liu, H.; Wu, J.; Lin, W. Individual Tree Species Identification for Complex Coniferous and Broad-Leaved Mixed Forests Based on Deep Learning Combined with UAV LiDAR Data and RGB Images. Forests 2024, 15, 293.

- Eigen, D.; Puhrsch, C.; Fergus, R. Depth Map Prediction from a Single Image using a Multi-Scale Deep Network. In Proceedings of the Advances in Neural Information Processing Systems 27: Annual Conference on Neural Information Processing Systems 2014 (NIPS), Montreal, QC, Canada, 8–13 December 2014; pp. 2366–2374.

- Couprie, C.; Farabet, C.; Najman, L.; LeCun, Y. Indoor Semantic Segmentation using Depth Information. In Proceedings of the International Conference on Learning Representations (ICLR), Kigali, Rwanda, 1–5 May 2023.

- Gupta, S.; Girshick, R.; Arbeláez, P.; Malik, J. Learning Rich Features from RGB-D Images for Object Detection and Segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Zurich, Switzerland, 6–12 September 2014; pp. 345–360.

- Li, W.; Fu, H.; Yu, L.; Cracknell, A.; Gong, W. Deep Learning-Based Tree Recognition in Forestry Environments. Remote Sens. 2015, 7, 8115–8132.

- Yamazaki, F.; Liu, W.; Maruyama, Y. Tree Detection and Segmentation in Urban Areas using Aerial LiDAR Data and Deep Learning. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, 4, 463–470.

- Wang, Y.; Chao, W.L.; Garg, D.; Hariharan, B.; Campbell, M.; Weinberger, K.Q. Pseudo-lidar from visual depth estimation: Bridging the gap in 3D object detection for autonomous driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 8445–8453.

- Hosain, M.T.; Zaman, A.; Abir, M.; Akter, S. Synchronizing Object Detection: Applications, Advancements and Existing Challenges. IEEE Access 2024, 12, 54129–54167.

- Lagos, J.; Lempiö, U.; Rahtu, E. FinnWoodlands Dataset. In Image Analysis; Gade, R., Felsberg, M., Kämäräinen, J.-K., Eds.; Springer Nature: Cham, Switzerland; pp. 95–110.

- Lim, C.H.; Connie, T.; Goh, K.O.M. Vehicle Detection Based on Improved YOLOv8. In Proceedings of the 2024 IEEE International Conference on Artificial Intelligence in Engineering and Technology (IICAIET), Kota Kinabalu, Malaysia, 26–28 August 2024; pp. 100–105.

- Loshchilov, I.; Hutter, F. SGDR: Stochastic gradient descent with warm restarts. In Proceedings of the International Conference on Learning Representations (ICLR), Toulon, France, 24–26 April 2017.

- Smith, L.N. Cyclical learning rates for training neural networks. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision (WACV), Santa Rosa, CA, USA, 24–31 March 2017; pp. 464–472.

- Howard, J.; Gugger, S. Deep Learning for Coders with Fastai and PyTorch; O’Reilly Media: Sebastopol, CA, USA, 2020; Available online: https://www.oreilly.com/library/view/deep-learning-for/9781492045519/ (accessed on 23 February 2025).

- Tilley, B.K.; Munn, I.A.; Evans, D.L.; Parker, R.C.; Roberts, S.D. Cost considerations of using LiDAR for timber inventory. In Proceedings of the Southern Forest Economics Workshop (SOFEW), St. Augustine, FL, USA, 14–16 March 2004.

- Gelencsér-Horváth, A. Modify Ultralytics 8.2.5 to Accept 4-Channel Images for Training and Prediction. GitHub Repository. 2024. Available online: https://github.com/g-h-anna/ultralytics4channel (accessed on 23 February 2025).

- Shaikh, M.B.; Chai, D. RGB-D data-based action recognition: A review. Sensors 2021, 21, 4246.

- Zhang, X.; Zhang, L.; Li, J. Faster R-CNN with Multi-Scale Feature Fusion for Object Detection. J. Comput. Sci. Technol. 2019, 34, 595–609.

- Liu, Z.; Liu, J.; Yu, H. Depth-aware Object Detection in Cluttered Environments. IEEE Trans. Image Process. 2021, 30, 1650–1662.

- Indiran, A.P.; Manikandan, N.; Kulkarni, A.V.; Ramadoss, S.; Rathinavelu, R. Novel Integration of Depth Mapping & Object Detection for Tree Biomass Estimation in Eucalyptus camaldulensis L. SSRN. 2024. Available online: https://ssrn.com/abstract=4932479 (accessed on 23 February 2025).

- Lechner, A.M.; Foody, G.M.; Boyd, D.S. Application of Depth Sensors in Forest Monitoring: A Review. For. Ecol. Manag. 2021, 482, 118772.

- Sujoy, M.I.M.; Rahman, M.A.; Dhruba, U.S.; Prattoy, S.E. Depth-Aware Object Detection and Region Filtering for Autonomous Vehicles: A Monocular Camera-Based Novel Integration of MiDaS and YOLO for Complex Road Scenarios with Irregular Traffic. Ph.D. Dissertation, Brac University, Dhaka, Bangladesh, 2024. Available online: http://hdl.handle.net/10361/25318 (accessed on 23 February 2025).

- Clamens, T.; Rodriguez, J.; Delamare, M.; Lew-Yan-Voon, L.; Fauvet, E.; Fofi, D. YOLO-based Multi-Modal Analysis of Vineyards using RGB-D Detections. In Proceedings of the International Conference on Advances in Signal Processing and Artificial Intelligence (ASPAI’2023), Tenerife, Spain, 7–9 June 2023.

- Michelini, D.; Dalponte, M.; Carriero, A.; Kutchartt, E.; Pappalardo, S.E.; De Marchi, M.; Pirotti, F. Hyperspectral and LiDAR data for the prediction via machine learning of tree species, volume and biomass: A contribution for updating forest management plans. In Geomatics for Green and Digital Transition, Proceedings of the 25th Italian Conference, ASITA 2022, Genova, Italy, 20–24 June 2022; Springer International Publishing: Cham, Switzerland, 2022; pp. 235–250.

- Abad-Segura, E.; González-Zamar, M.D.; Vázquez-Cano, E.; López-Meneses, E. Remote sensing applied in forest management to optimize ecosystem services: Advances in research. Forests 2020, 11, 969.

- Raihan, A. Artificial intelligence and machine learning applications in forest management and biodiversity conservation. Nat. Resour. Conserv. Res. 2023, 6, 3825.

- Akhtar, M.N.; Ansari, E.; Alhady, S.S.N.; Abu Bakar, E. Leveraging on advanced remote sensing-and artificial intelligence-based technologies to manage palm oil plantation for current global scenario: A review. Agriculture 2023, 13, 504.

- Holzinger, A.; Saranti, A.; Angerschmid, A.; Retzlaff, C.O.; Gronauer, A.; Pejakovic, V.; Medel-Jimenez, F.; Krexner, T.; Gollob, C.; Stampfer, K. Digital transformation in smart farm and forest operations needs human-centered AI: Challenges and future directions. Sensors 2022, 22, 3043.

- Gustafson, D.I. Emerging technologies in precision forestry: Implications for efficiency and cost optimization. J. For. Eng. 2022, 31, 102–117.

- Peng, W.; Sadaghiani, O.K. Enhancement of quality and quantity of woody biomass produced in forests using machine learning algorithms. Biomass Bioenergy 2023, 175, 106884.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).