Extracting Individual Tree Positions in Closed-Canopy Stands Using a Multi-Source Local Maxima Method

Abstract

1. Introduction

2. Materials and Methods

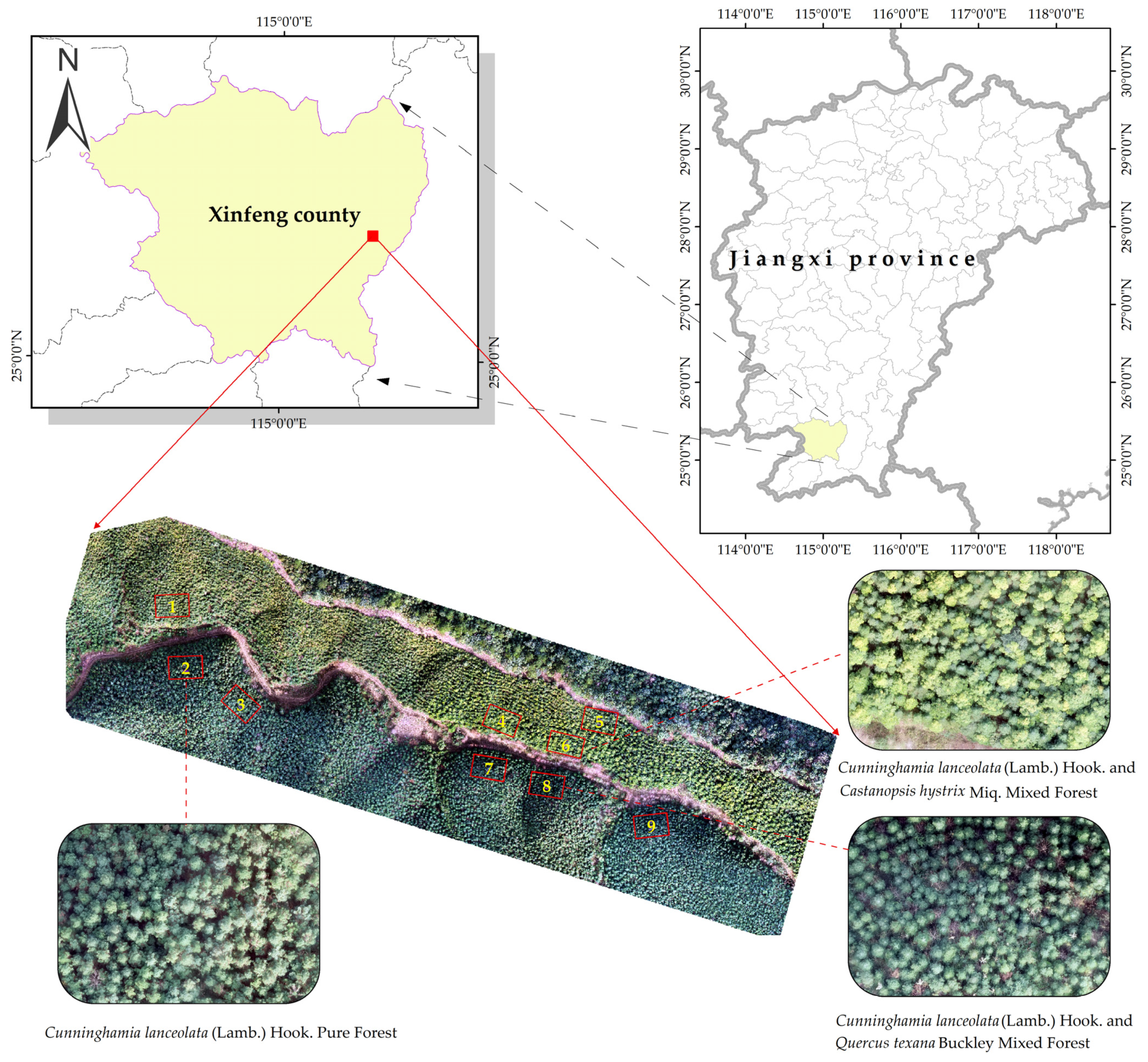

2.1. Overview of the Study Area

2.2. Study Data

2.2.1. Sample Plot Survey Data

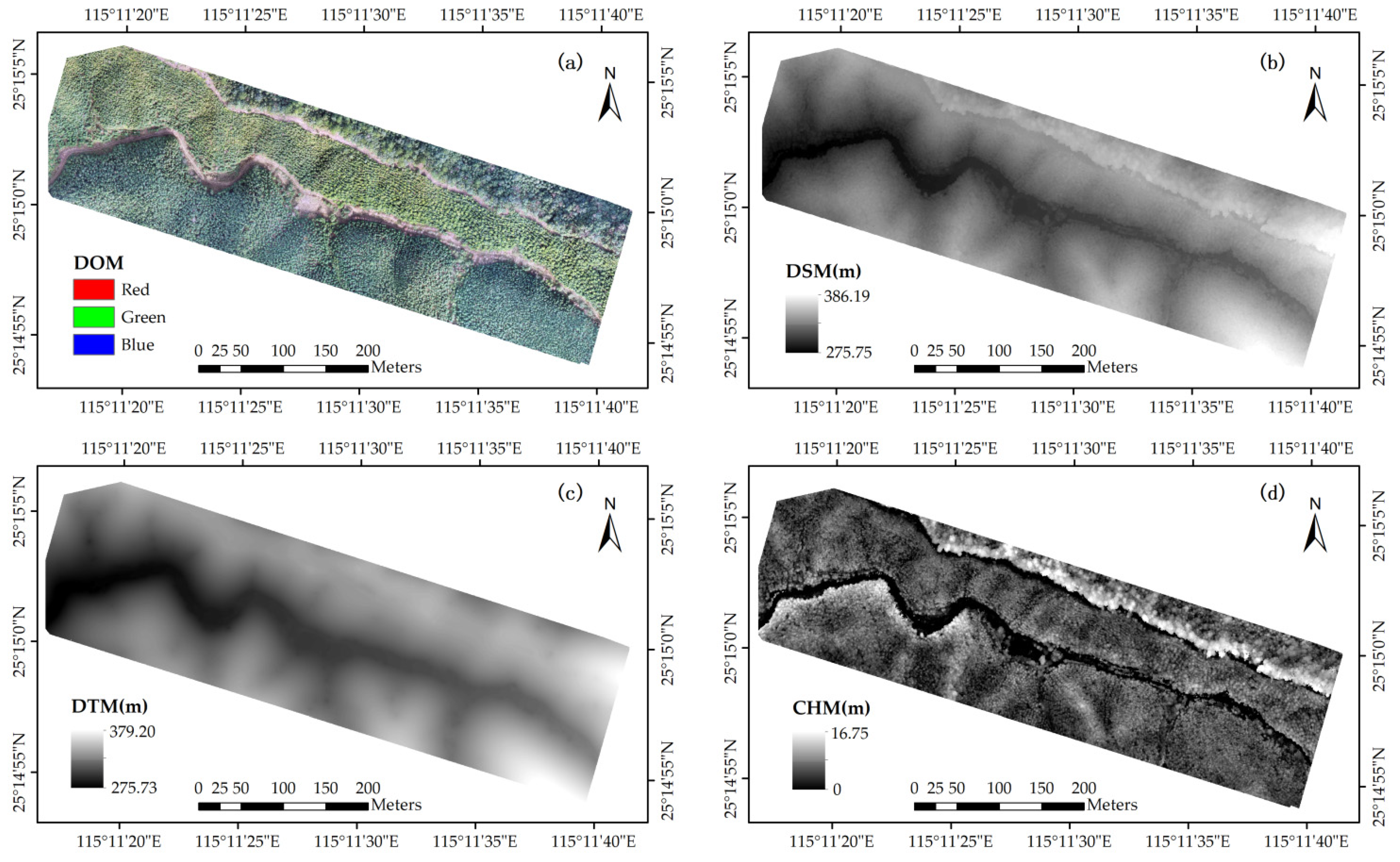

2.2.2. UAV Image Data

- Image Preprocessing: Aerial images were first screened to exclude those with color distortion, focus failure, or improper exposure. This step kept brightness, saturation, and hue consistent across the dataset and provided reliable input for subsequent processing.

- Feature Point Extraction and Matching: The software automatically extracted relevant image and camera parameter information. Ground Control Points were added to aid feature matching and tracking.

- Aerial Triangulation: Multi-view image bundle adjustment and aerial triangulation were performed. These steps extracted and matched image feature points, generating a sparse 3D point cloud.

- Dense Point Cloud Generation: Based on the sparse point cloud, a dense 3D point cloud was produced using multi-view stereo matching algorithms.

- DSM and DOM Generation: The dense point cloud was rasterized to generate a digital surface model (DSM) with a resolution of 2.3 cm per pixel. Each DSM pixel stores the elevation of the surface feature at that location, including structures and vegetation. The original images were geometrically and radiometrically corrected to produce a digital orthophoto map (DOM) at the same resolution of 2.3 cm per pixel. DOM pixel values reflect the spectral reflectance of the corresponding ground features, stored as RGB values.
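The rasterization step in the last bullet can be illustrated with a minimal sketch. It assumes a simple "highest point per cell" rule; the photogrammetry software used in the study is not named here, and its gridding and interpolation may differ. The function name `rasterize_dsm` and the sample coordinates are hypothetical.

```python
import math

def rasterize_dsm(points, cell_size):
    """Grid (x, y, z) points into a DSM, keeping the highest z per cell.

    Assumption: "highest point wins" is one common rasterization rule.
    Cells that receive no point stay NaN (no gap filling is attempted).
    """
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    x_min, y_max = min(xs), max(ys)
    n_cols = int((max(xs) - x_min) / cell_size) + 1
    n_rows = int((y_max - min(ys)) / cell_size) + 1
    dsm = [[math.nan] * n_cols for _ in range(n_rows)]
    for x, y, z in points:
        col = int((x - x_min) / cell_size)
        row = int((y_max - y) / cell_size)  # row 0 is the northern edge
        current = dsm[row][col]
        if math.isnan(current) or z > current:
            dsm[row][col] = z
    return dsm

# Hypothetical points in metres, gridded at the 2.3 cm resolution quoted above.
sample_points = [
    (0.00, 0.000, 10.0),
    (0.01, 0.005, 12.5),  # same cell as the first point, but higher
    (0.03, 0.000, 11.0),
    (0.00, 0.030, 9.0),
]
dsm = rasterize_dsm(sample_points, cell_size=0.023)
```

Keeping the maximum elevation per cell suits canopy work because the DSM should describe the top surface; empty cells are left as NaN here so that any gap filling remains an explicit, separate choice.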

2.2.3. Canopy Height Model

2.3. Study Methods

2.3.1. Multi-Source Local Maxima Method

1. Data Preprocessing

2. Identification of Potential Tree Crown Apices

3. Determination of Final Tree Positions

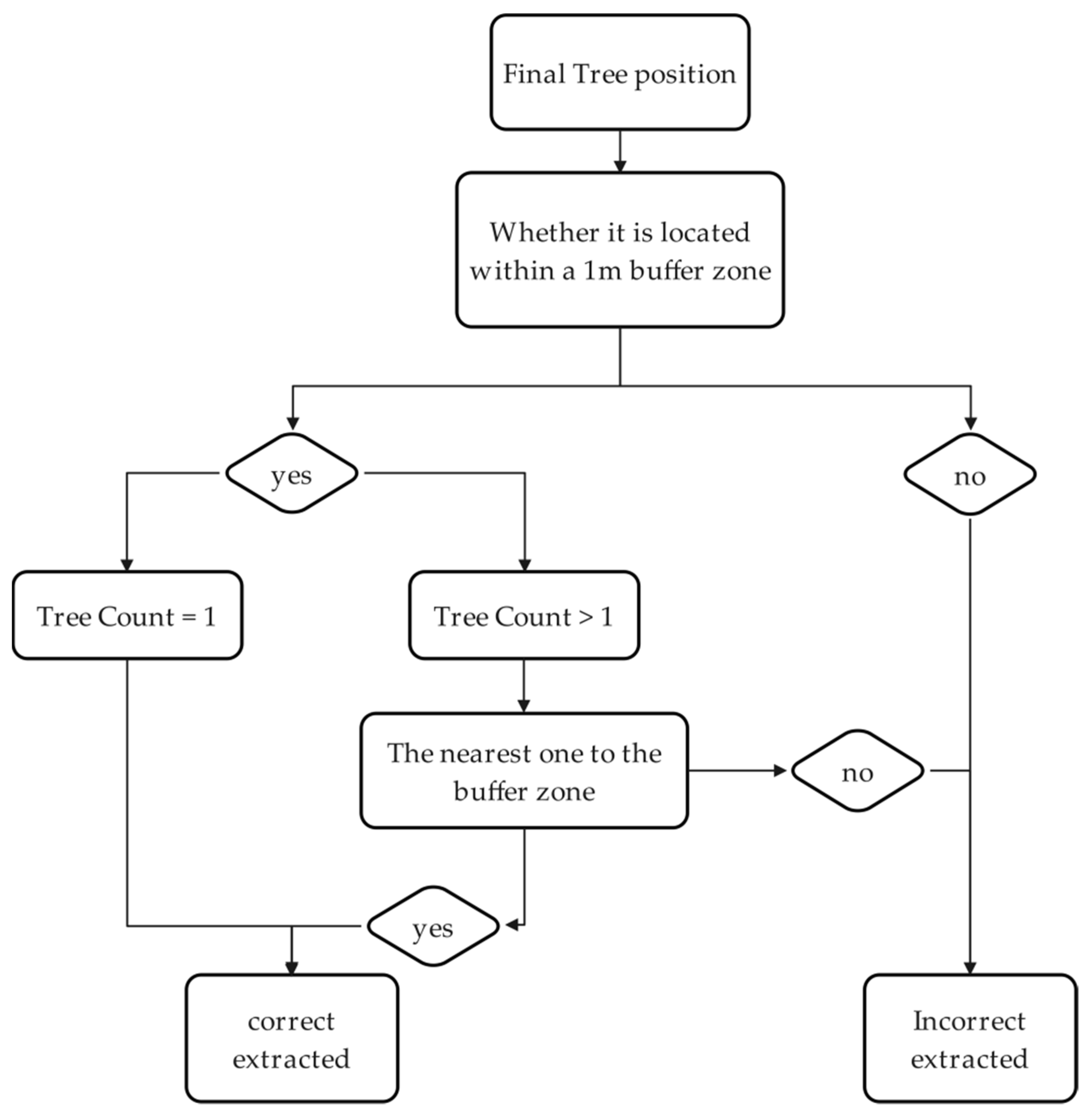

2.3.2. Individual Tree Position Extraction Accuracy Evaluation

3. Results

3.1. Individual Tree Position Extraction Results

3.2. Individual Tree Position Extraction Accuracy

3.3. Impact of Forest Type on Extraction Accuracy

4. Discussion

4.1. Parameter Settings of the MSLM Method

4.2. Performance Advantages of the MSLM Method

4.3. Limitations of the MSLM Method

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Sample Plot | Tree Count | Density (trees/ha) | Type | Mixed Ratio | Slope Direction | Slope (°) |
|---|---|---|---|---|---|---|
| 1 | 137 | 2283 | CLPF | 1 | Southeast | 37 |
| 2 | 121 | 2016 | CLPF | 1 | Northwest | 42 |
| 3 | 128 | 2133 | CLPF | 1 | Northeast | 40 |
| 4 | 128 | 2133 | CL-CHMF | 6:4 | South | 36 |
| 5 | 146 | 2433 | CL-CHMF | 7:3 | South | 30 |
| 6 | 148 | 2466 | CL-CHMF | 7:3 | South | 40 |
| 7 | 141 | 2350 | CL-QTMF | 8:2 | North | 36 |
| 8 | 130 | 2166 | CL-QTMF | 8:2 | North | 35 |
| 9 | 136 | 2266 | CL-QTMF | 8:2 | Northwest | 37 |

| Sample Plot | Measured | Extracted (MSLM/RLM) | Correctly Extracted (MSLM/RLM) | Incorrectly Extracted (MSLM/RLM) | Omission (MSLM/RLM) |
|---|---|---|---|---|---|
| 1 | 137 | 144/130 * | 121/113 * | 23/17 * | 16/24 * |
| 2 | 121 | 133/112 * | 113/98 * | 20/14 * | 8/23 * |
| 3 | 128 | 132/116 * | 104/97 * | 28/19 * | 24/31 * |
| 4 | 128 | 128/104 * | 112/90 * | 16/14 * | 16/38 * |
| 5 | 146 | 137/90 * | 120/83 * | 17/7 * | 26/63 * |
| 6 | 148 | 139/106 * | 123/98 * | 16/8 * | 25/50 * |
| 7 | 141 | 140/118 * | 114/110 * | 26/8 * | 27/31 * |
| 8 | 130 | 134/121 * | 115/106 * | 19/15 * | 15/24 * |
| 9 | 136 | 139/128 * | 118/110 * | 21/18 * | 18/26 * |
| All | 1215 | 1226/1025 * | 1040/905 * | 186/120 * | 175/310 * |

| Sample Plot | AR (MSLM/RLM) | OE (MSLM/RLM) | CE (MSLM/RLM) | OA (MSLM/RLM) | F1_c (MSLM/RLM) |
|---|---|---|---|---|---|
| 1 | 88.32%/82.48% | 11.68%/17.52% | 16.79%/12.41% | 94.89%/94.89% | 86.12%/84.64% |
| 2 | 93.39%/81.82% | 6.61%/18.18% | 16.53%/10.74% | 90.08%/92.56% | 88.98%/84.98% |
| 3 | 81.25%/76.56% | 18.75%/23.44% | 21.88%/14.06% | 96.88%/90.63% | 80.00%/80.33% |
| 4 | 87.50%/71.88% | 12.50%/28.13% | 12.50%/9.38% | 100.00%/81.25% | 87.50%/79.31% |
| 5 | 82.19%/56.16% | 17.81%/43.84% | 11.64%/5.48% | 93.84%/61.64% | 84.80%/69.49% |
| 6 | 83.11%/66.22% | 16.89%/33.78% | 10.81%/5.41% | 93.92%/71.62% | 85.71%/77.17% |
| 7 | 80.85%/78.01% | 19.15%/21.99% | 18.44%/5.67% | 99.29%/83.69% | 81.14%/84.94% |
| 8 | 88.46%/83.85% | 11.54%/16.15% | 14.62%/9.23% | 96.92%/93.08% | 87.12%/86.85% |
| 9 | 86.76%/80.88% | 13.24%/19.12% | 15.44%/13.24% | 97.79%/94.12% | 85.82%/83.33% |
| All | 85.59%/74.98% | 14.40%/25.02% | 15.31%/9.38% | 99.09%/84.36% | 85.21%/81.34% |
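The percentages in the accuracy table above can be related to the tree counts in the preceding table with a short calculation. The sketch below is only an illustration: the formulas are inferred from the tabulated values rather than quoted from Section 2.3.2, so they should be read as assumptions, and a few tabulated entries may differ slightly in the last digits. The function name `accuracy_metrics` is hypothetical.

```python
def accuracy_metrics(measured, extracted, correct):
    """Return AR, OE, CE, OA and F1_c as fractions (multiply by 100 for %).

    Assumed definitions, inferred from the tables (not quoted from the text):
      AR   = correct / measured
      OE   = omitted / measured,    omitted   = measured - correct
      CE   = incorrect / measured,  incorrect = extracted - correct
      OA   = 1 - |extracted - measured| / measured
      F1_c = 2 * correct / (extracted + measured)
    """
    omitted = measured - correct
    incorrect = extracted - correct
    return {
        "AR": correct / measured,
        "OE": omitted / measured,
        "CE": incorrect / measured,
        "OA": 1 - abs(extracted - measured) / measured,
        "F1_c": 2 * correct / (extracted + measured),
    }

# Sample plot 4, MSLM method: 128 measured, 128 extracted, 112 correct.
plot4 = accuracy_metrics(measured=128, extracted=128, correct=112)
for name, value in plot4.items():
    print(f"{name}: {value:.2%}")
# AR: 87.50%, OE: 12.50%, CE: 12.50%, OA: 100.00%, F1_c: 87.50%
```

Under these assumed definitions, OA depends only on the total number of extracted trees, so a plot can reach 100% OA while still containing both omitted and incorrectly extracted trees, as plot 4 does.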

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lai, G.; Cao, M.; Zhou, C.; Liu, L.; Zhong, X.; Guo, Z.; Ouyang, X. Extracting Individual Tree Positions in Closed-Canopy Stands Using a Multi-Source Local Maxima Method. Forests 2025, 16, 262. https://doi.org/10.3390/f16020262
