Real-Time Detection of Smoke and Fire in the Wild Using Unmanned Aerial Vehicle Remote Sensing Imagery

Abstract

1. Introduction

2. Materials and Methods

2.1. Dataset and Preprocessing

2.2. Methods

2.2.1. Mixed Data Augmentation

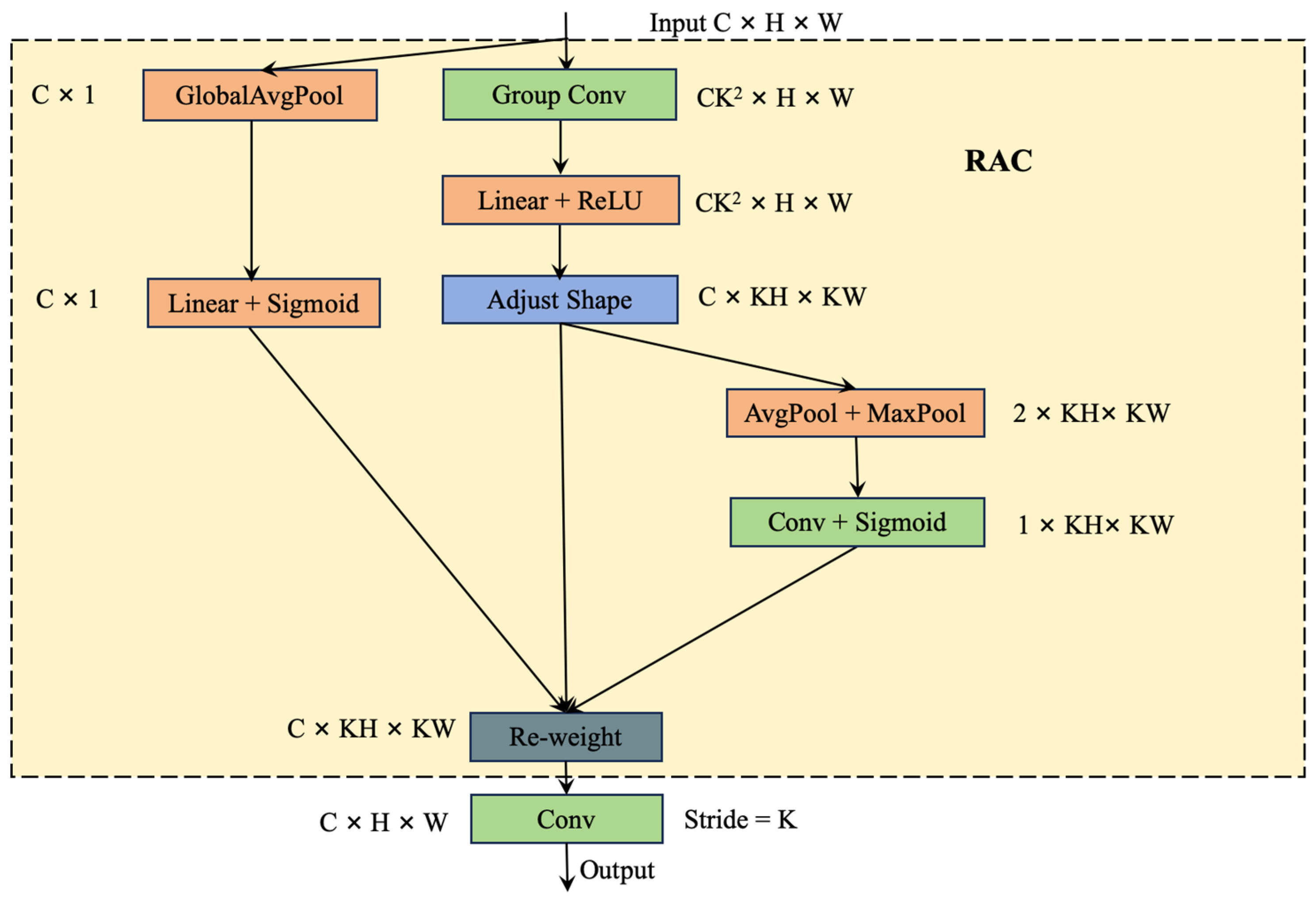

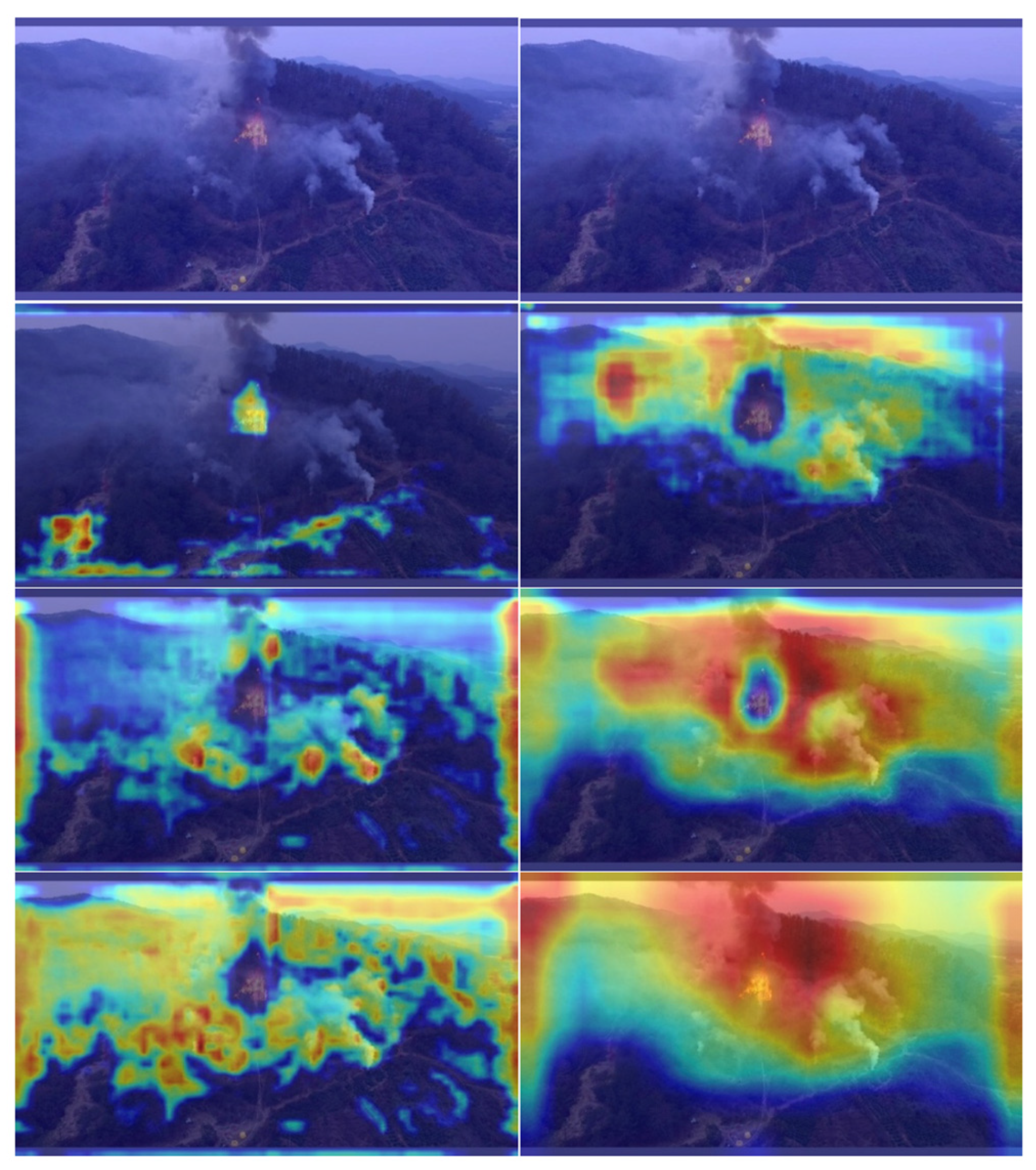
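The ablation results list a "+Mosaic9" configuration. Mosaic9 is commonly understood as a nine-image extension of the four-image Mosaic augmentation described for YOLOv4. The sketch below is a minimal, simplified illustration of how box labels move when nine images are stitched into one training sample. It assumes a fixed 3 × 3 grid in which every image is resized to a square tile of side `tile`. It does not use the random mosaic centre or the cropping of typical implementations, and the function name `mosaic9_boxes` is hypothetical. It is not the authors' implementation.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def mosaic9_boxes(
    image_sizes: Sequence[Tuple[int, int]],
    boxes_per_image: Sequence[Sequence[Box]],
    tile: int = 640,
) -> List[Box]:
    """Map boxes from nine source images onto a (3*tile) x (3*tile) mosaic.

    Simplifying assumption (hypothetical layout): each image of size (w, h)
    is stretched to a tile x tile square and placed in row-major order.
    """
    if len(image_sizes) != 9 or len(boxes_per_image) != 9:
        raise ValueError("Mosaic9 needs exactly nine images")
    out: List[Box] = []
    for idx, ((w, h), boxes) in enumerate(zip(image_sizes, boxes_per_image)):
        row, col = divmod(idx, 3)
        ox, oy = col * tile, row * tile  # top-left corner of this tile
        sx, sy = tile / w, tile / h      # per-axis resize factors
        for x1, y1, x2, y2 in boxes:
            # Scale the box into tile coordinates, then shift by the tile offset.
            out.append((x1 * sx + ox, y1 * sy + oy, x2 * sx + ox, y2 * sy + oy))
    return out
```

For example, a box covering the top-left quarter of a 1280 × 1280 image placed in the centre tile (index 4) ends up at (640, 640, 960, 960) on a 1920 × 1920 canvas when `tile=640`. A nine-image mosaic packs more small targets, such as distant smoke plumes, into each sample, and this is the usual motivation for such augmentations.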

2.2.2. Vision Feature Enhancement

2.2.3. Loss Function
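The ablation results include a "+Shape IoU" configuration, and the reference list cites Shape-IoU (Zhang and Zhang, 2023). That paper defines the loss as L = 1 − IoU + distance^shape + 0.5 · Ω^shape. It weights the centre distance and the width/height mismatch by shape factors computed from the ground-truth box and uses θ = 4. The sketch below is a scalar version of that published form for a single pair of boxes. The (cx, cy, w, h) box format, the function name `shape_iou_loss`, and the default `scale=0.0` are assumptions made for illustration. The authors' exact implementation is not given in this section.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (cx, cy, w, h), assumed format


def shape_iou_loss(pred: Box, gt: Box, scale: float = 0.0,
                   theta: float = 4.0, eps: float = 1e-7) -> float:
    """Shape-IoU loss for one predicted box against one ground-truth box."""
    px, py, pw, ph = pred
    gx, gy, gw, gh = gt

    # Corner coordinates of both boxes.
    p_x1, p_y1, p_x2, p_y2 = px - pw / 2, py - ph / 2, px + pw / 2, py + ph / 2
    g_x1, g_y1, g_x2, g_y2 = gx - gw / 2, gy - gh / 2, gx + gw / 2, gy + gh / 2

    # Standard IoU term.
    inter_w = max(0.0, min(p_x2, g_x2) - max(p_x1, g_x1))
    inter_h = max(0.0, min(p_y2, g_y2) - max(p_y1, g_y1))
    inter = inter_w * inter_h
    union = pw * ph + gw * gh - inter
    iou = inter / (union + eps)

    # Shape weights derived from the ground-truth box only.
    gw_s, gh_s = gw ** scale, gh ** scale
    ww = 2 * gw_s / (gw_s + gh_s)
    hh = 2 * gh_s / (gw_s + gh_s)

    # Squared diagonal of the smallest box enclosing both boxes.
    cw = max(p_x2, g_x2) - min(p_x1, g_x1)
    ch = max(p_y2, g_y2) - min(p_y1, g_y1)
    c2 = cw ** 2 + ch ** 2 + eps

    # Shape-weighted centre-distance penalty.
    distance = hh * (px - gx) ** 2 / c2 + ww * (py - gy) ** 2 / c2

    # Shape penalty for width and height mismatch.
    omega_w = hh * abs(pw - gw) / max(pw, gw)
    omega_h = ww * abs(ph - gh) / max(ph, gh)
    shape_cost = (1 - math.exp(-omega_w)) ** theta + (1 - math.exp(-omega_h)) ** theta

    return 1 - iou + distance + 0.5 * shape_cost
```

Identical boxes give a loss of (almost exactly) zero. Two equal 2 × 2 boxes whose centres are 10 pixels apart give 1 + 100/148, because the IoU is zero and the squared enclosing diagonal is 12² + 2² = 148.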

3. Experimental Results and Analysis

3.1. Experimental Setting

3.2. Evaluation Metric
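The result tables report precision, recall, mAP and F1, but this section does not state how mAP is computed. A minimal sketch follows. It assumes the common all-point interpolated average precision (as used in PASCAL VOC 2010 and later) and assumes detections have already been matched to ground-truth boxes, for example at an IoU threshold of 0.5. That threshold is an assumption. Under this reading, mAP would be the mean of the per-class APs, presumably for the smoke and fire classes. The helper names `pr_curve` and `average_precision` are hypothetical.

```python
from typing import List, Sequence, Tuple


def pr_curve(detections: Sequence[Tuple[float, bool]],
             num_gt: int) -> Tuple[List[float], List[float]]:
    """Cumulative recall and precision for one class.

    `detections` holds (confidence, is_true_positive) pairs; matching
    detections to ground truth (e.g. IoU >= 0.5) is assumed already done.
    """
    if num_gt <= 0:
        raise ValueError("num_gt must be positive")
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    recalls: List[float] = []
    precisions: List[float] = []
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    return recalls, precisions


def average_precision(recalls: Sequence[float], precisions: Sequence[float]) -> float:
    """All-point interpolated AP: area under the monotone precision envelope."""
    mrec = [0.0] + list(recalls) + [1.0]
    mpre = [0.0] + list(precisions) + [0.0]
    # Make precision non-increasing from right to left (the envelope).
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum rectangle areas; steps where recall does not change add zero.
    return sum((mrec[i] - mrec[i - 1]) * mpre[i] for i in range(1, len(mrec)))
```

With three ranked detections (hit, miss, hit) against two ground-truth objects, the envelope gives AP = 0.5 · 1 + 0.5 · 2/3 ≈ 0.833.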

3.3. Comparative Experiments and Analysis

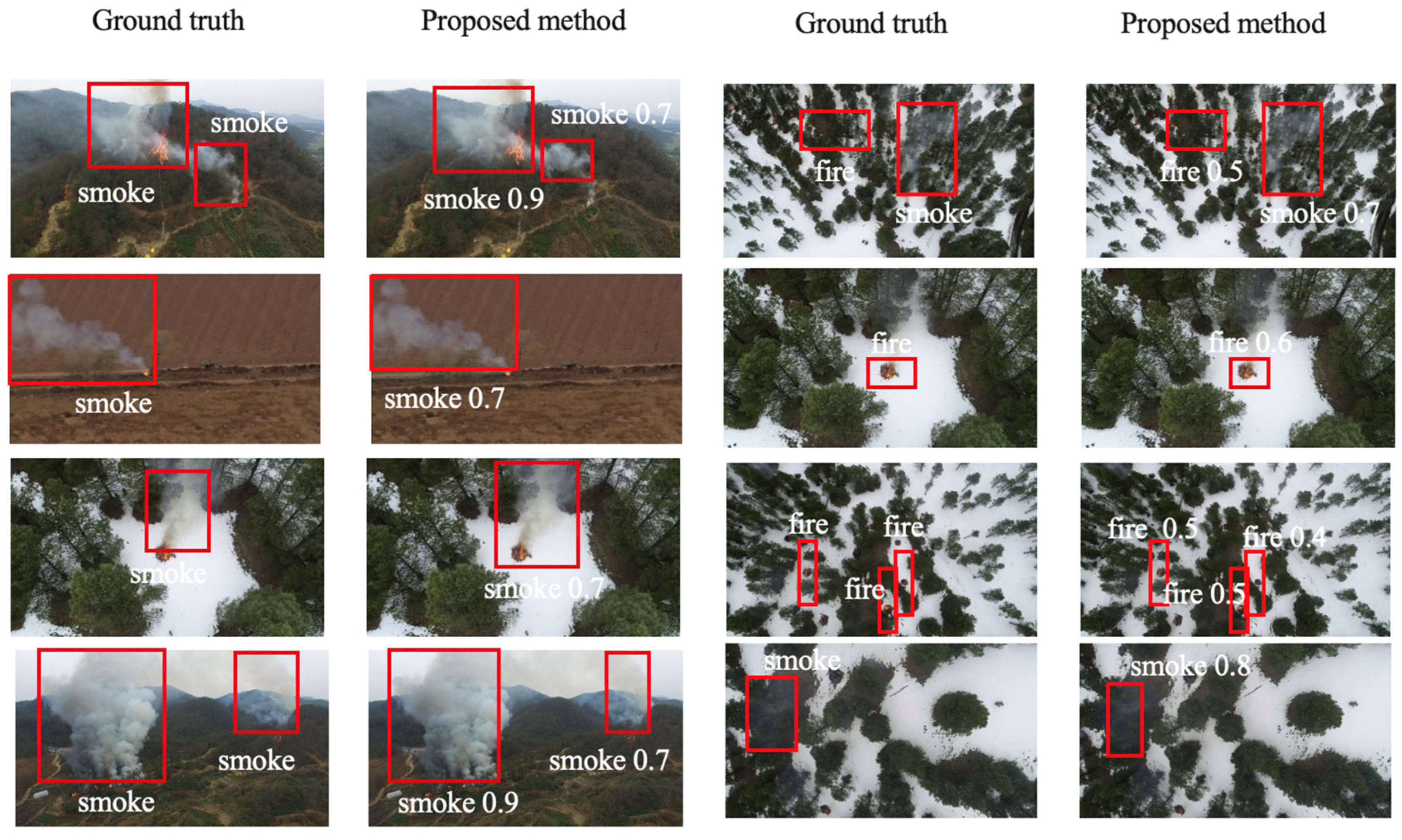

3.4. Ablation Study

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Lambrou, N.; Crystal, K.; Anastasia, L.S.; Erica, A.; Charisma, A. Social drivers of vulnerability to wildfire disasters: A review of the literature. Landsc. Urban Plan. 2023, 237, 104797.

- Yang, W.; Jiang, X.L. Review on Remote Sensing Information Extraction and Application of the Burned Forest Areas. Sci. Silvae Sin. 2018, 54, 135–142.

- Sousa, M.J.; Alexandra, M.; Miguel, A. Wildfire detection using transfer learning on augmented datasets. Expert Syst. Appl. 2020, 142, 112975.

- Rashkovetsky, D.; Florian, M.; Martin, L.; Michael, S. Wildfire detection from multisensor satellite imagery using deep semantic segmentation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 7001–7016.

- Barmpoutis, P.; Periklis, P.; Kosmas, D.; Nikos, G. A review on early forest fire detection systems using optical remote sensing. Sensors 2020, 20, 6442.

- Spadoni, G.L.; Moris, J.V.; Vacchiano, G.; Elia, M.; Garbarino, M.; Sibona, E.; Tomao, A.; Barbati, A.; Sallustio, L.; Salvati, L.; et al. Active governance of agro-pastoral, forest and protected areas mitigates wildfire impacts in Italy. Sci. Total Environ. 2023, 890, 164281.

- Maestas, J.D.; Joseph, T.S.; Brady, W.A.; David, E.N.; Matthew, O.J.; Casey, O.C.; Chad, S.B.; Kirk, W.D.; Michele, R.C.; Andrew, C.O. Using dynamic, fuels-based fire probability maps to reduce large wildfires in the Great Basin. Rangel. Ecol. Manag. 2022, 89, 33–41.

- Mohapatra, A.; Timothy, T. Early Wildfire Detection Technologies in Practice—A Review. Sustainability 2022, 14, 12270.

- Kasyap, V.L.; Sumathi, D.; Alluri, K.; Reddy Ch, P.; Thilakarathne, N.; Shafi, R.M. Early Detection of Forest Fire Using Mixed Learning Techniques and UAV. Comput. Intell. Neurosci. 2022, 1, 3170244.

- Yang, X.; Chen, R.; Zhang, F.; Zhang, L.; Fan, X.; Ye, Q.; Fu, L. Pixel-level automatic annotation for forest fire image. Eng. Appl. Artif. Intell. 2021, 104, 104353.

- Celik, T.; Demirel, H. Fire detection in video sequences using a generic color model. Fire Saf. J. 2009, 44, 147–158.

- Amal, B.H.; Chokri, B.A.; Yasser, A. A New Color Model for Fire Pixels Detection in PJF Color Space. Intell. Autom. Soft Comput. 2022, 33, 1607–1621.

- Dimitropoulos, K.; Barmpoutis, P.; Grammalidis, N. Higher order linear dynamical systems for smoke detection in video surveillance applications. IEEE Trans. Circuits Syst. Video Technol. 2016, 27, 1143–1154.

- Prema, C.E.; Vinsley, S.S.; Suresh, S. Efficient flame detection based on static and dynamic texture analysis in forest fire detection. Fire Technol. 2018, 54, 255–288.

- Lu, X.; Wang, R.; Zhang, H.; Zhou, J.; Yun, T. PosE-Enhanced Point Transformer with Local Surface Features (LSF) for Wood–Leaf Separation. Forests 2024, 15, 2244.

- Wang, Q.; Fan, X.; Zhuang, Z.; Tjahjadi, T.; Jin, S.; Huan, H.; Ye, Q. One to All: Toward a Unified Model for Counting Cereal Crop Heads Based on Few-Shot Learning. Plant Phenomics 2024, 6, 0271.

- Wu, X.; Fan, X.; Luo, P.; Choudhury, S.D.; Tjahjadi, T.; Hu, C. From laboratory to field: Unsupervised domain adaptation for plant disease recognition in the wild. Plant Phenomics 2023, 5, 0038.

- Wang, J.; Fan, X.; Yang, X.; Tjahjadi, T.; Wang, Y. Semi-Supervised Learning for Forest Fire Segmentation Using UAV Imagery. Forests 2022, 13, 1573.

- Shamta, I.; Demir, B.E. Development of a Deep Learning-Based Surveillance System for Forest Fire Detection and Monitoring Using UAV. PLoS ONE 2024, 19, e0299058.

- Srinivas, K.; Mohit, D. Fog computing and deep CNN based efficient approach to early forest fire detection with unmanned aerial vehicles. In Inventive Computation Technologies 4; Springer: Berlin/Heidelberg, Germany, 2020; pp. 646–652.

- Govil, K.; Morgan, L.W.; J-Timothy, B.; Carlton, R.P. Preliminary results from a wildfire detection system using deep learning on remote camera images. Remote Sens. 2020, 12, 166.

- Lee, W.; Kim, S.; Lee, Y.T.; Lee, H.W.; Choi, M. Deep Neural Networks for Wildfire Detection with Unmanned Aerial Vehicle. In Proceedings of the 2017 IEEE International Conference on Consumer Electronics, Las Vegas, NV, USA, 8–10 January 2017.

- Barmpoutis, P.; Tania, S.; Kosmas, D.; Nikos, G. Early fire detection based on aerial 360-degree sensors, deep convolution neural networks and exploitation of fire dynamic textures. Remote Sens. 2020, 12, 3177.

- Goyal, S.; Shagill, M.; Kaur, A.; Vohra, H.; Singh, A. A YOLO based technique for early forest fire detection. Int. J. Innov. Technol. Explor. Eng. 2020, 9, 1357–1362.

- Wang, Y.F.; Hua, C.C.; Ding, W.L.; Wu, R.N. Real-time detection of flame and smoke using an improved YOLOv4 network. Signal Image Video Process. 2022, 16, 1109–1116.

- Gaur, A.; Singh, A.; Kumar, A.; Kumar, A.; Kapoor, K. Video flame and smoke based fire detection algorithms: A literature review. Fire Technol. 2020, 56, 1943–1980.

- Sathishkumar, V.E.; Cho, J.; Subramanian, M.; Naren, O.S. Forest fire and smoke detection using deep learning-based learning without forgetting. Fire Ecol. 2023, 19, 9.

- Mamadaliev, D.; Touko, P.L.M.; Kim, J.-H.; Kim, S.-C. ESFD-YOLOv8n: Early Smoke and Fire Detection Method Based on an Improved YOLOv8n Model. Fire 2024, 7, 303.

- Shamsoshoara, A.; Afghah, F.; Razi, A.; Zheng, L.; Fulé, P.Z.; Blasch, E. Aerial imagery pile burn detection using deep learning: The FLAME dataset. Comput. Netw. 2021, 193, 108001.

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-time end-to-end object detection. arXiv 2024, arXiv:2405.14458.

- Dai, Y.; Gieseke, F.; Oehmcke, S.; Wu, Y.; Barnard, K. Attentional feature fusion. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Virtual, 5–9 January 2021; pp. 3560–3569.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

- Hou, Q.; Zhou, D.; Feng, J. Coordinate attention for efficient mobile network design. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13713–13722.

- Zhang, H.; Zhang, S. Shape-IoU: More accurate metric considering bounding box shape and scale. arXiv 2023, arXiv:2312.17663.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Ge, Z. YOLOX: Exceeding YOLO series in 2021. arXiv 2021, arXiv:2107.08430.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

| Model | Input Size (px) | Precision (%) | Recall (%) | mAP (%) | F1 (%) | FPS |
|---|---|---|---|---|---|---|
| YOLOv4 | 1280 × 1280 | 64.22 | 71.71 | 71.33 | 67.72 | 11.8 |
| YOLOv5 | 1280 × 1280 | 69.31 | 68.97 | 74.52 | 69.12 | 10.6 |
| YOLOv7 | 1280 × 1280 | 85.14 | 63.41 | 69.23 | 72.77 | 16.9 |
| YOLOX | 1280 × 1280 | 60.4 | 71.9 | 71.9 | 65.7 | 20.1 |
| YOLOv10 | 1280 × 1280 | 80.59 | 68.75 | 75.01 | 74.20 | 8.82 |
| Proposed | 1280 × 1280 | 76.73 | 75.56 | 79.28 | 76.14 | 8.98 |
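The F1 column is the harmonic mean of precision and recall, F1 = 2PR / (P + R). The short sketch below recomputes it from the table's own precision and recall values. The helper name `f1_score` is hypothetical, and the sketch assumes F1 is computed from the pooled precision and recall.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (both given in percent)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision %, recall %, reported F1 %) copied from the comparison table.
rows = {
    "YOLOv10": (80.59, 68.75, 74.20),
    "Proposed": (76.73, 75.56, 76.14),
}
for name, (p, r, reported) in rows.items():
    print(f"{name}: recomputed F1 = {f1_score(p, r):.2f}, reported = {reported:.2f}")
```

For YOLOv10 and the proposed model, the recomputed values (74.20 and 76.14) match the table. For some other rows they do not: YOLOv7 recomputes to about 72.69 against the reported 72.77. This would be consistent with F1 being averaged per class rather than derived from the pooled precision and recall, but the table alone does not say which convention was used.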

| Model | Input Size (px) | Precision (%) | Recall (%) | mAP (%) | F1 (%) | FPS |
|---|---|---|---|---|---|---|
| YOLOv10 | 1280 × 1280 | 80.59 | 68.75 | 75.01 | 74.20 | 8.82 |
| +Mosaic9 | 1280 × 1280 | 83.18 | 65.09 | 76.03 | 73.03 | 8.72 |
| +Shape IoU | 1280 × 1280 | 81.5 | 70.01 | 76.90 | 75.31 | 8.92 |
| +RAC | 1280 × 1280 | 75.73 | 69.22 | 75.61 | 72.33 | 7.14 |
| +CAFR | 1280 × 1280 | 83.18 | 65.09 | 76.23 | 73.03 | 11.76 |
| Proposed | 1280 × 1280 | 76.73 | 75.56 | 79.28 | 76.14 | 8.98 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Fan, X.; Lei, F.; Yang, K. Real-Time Detection of Smoke and Fire in the Wild Using Unmanned Aerial Vehicle Remote Sensing Imagery. Forests 2025, 16, 201. https://doi.org/10.3390/f16020201
