Remote Sensing Identification of Picea schrenkiana var. tianschanica in GF-1 Images Based on a Multiple Mixed Attention U-Net Model

Abstract

1. Introduction

2. Materials and Methods

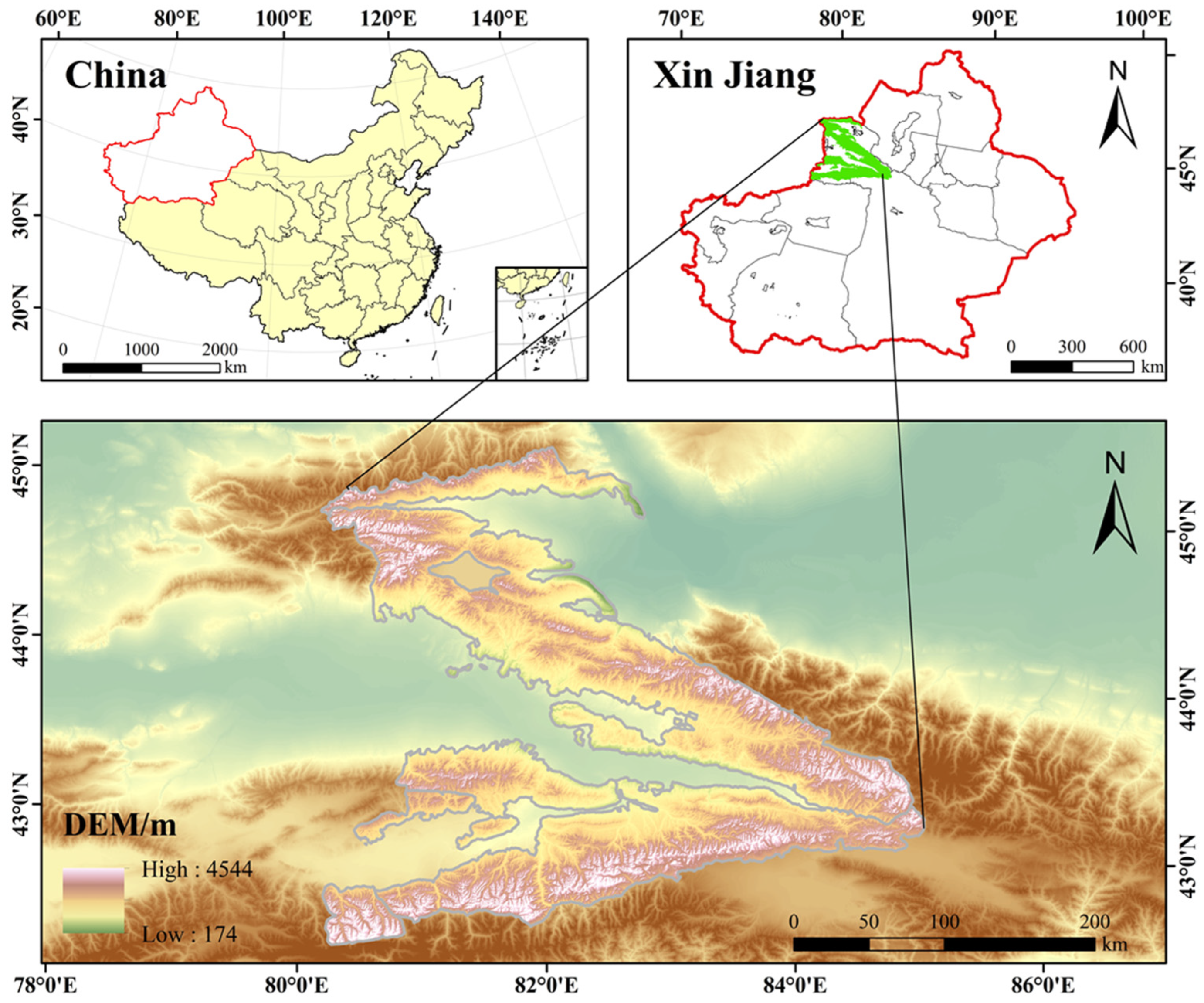

2.1. Study Area

2.2. Data Sources

2.3. Data Preprocessing

2.4. Research Methodology

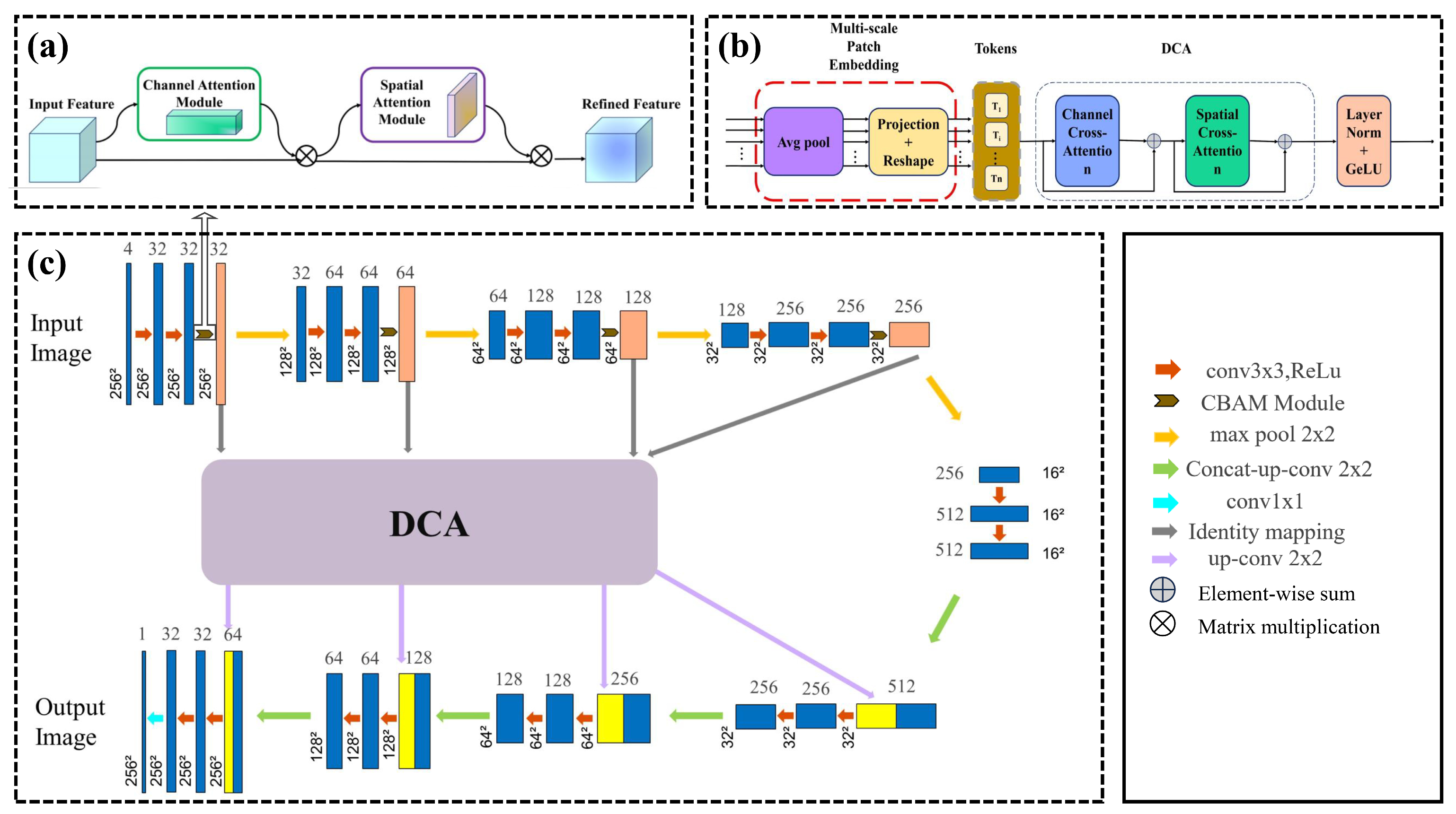

2.4.1. Convolutional Block Attention Module

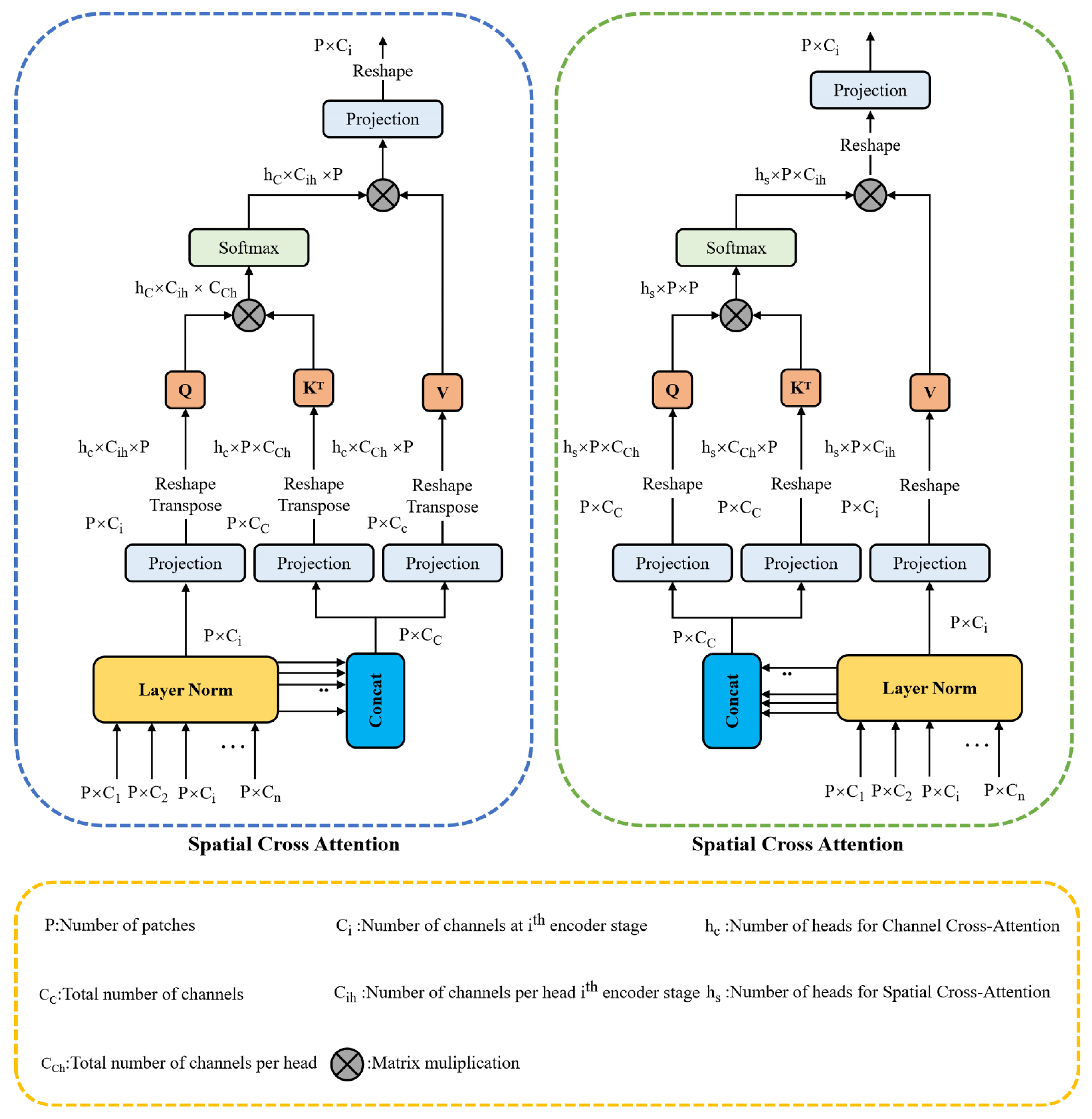

2.4.2. Dual Cross-Attention

2.4.3. U-Net Model

2.4.4. Multiple Mixed Attention U-Net

2.4.5. Accuracy Evaluation Metrics

2.4.6. Spatial Distribution Analysis of Picea schrenkiana var. tianschanica

3. Results

3.1. Experimental Parameter Setting

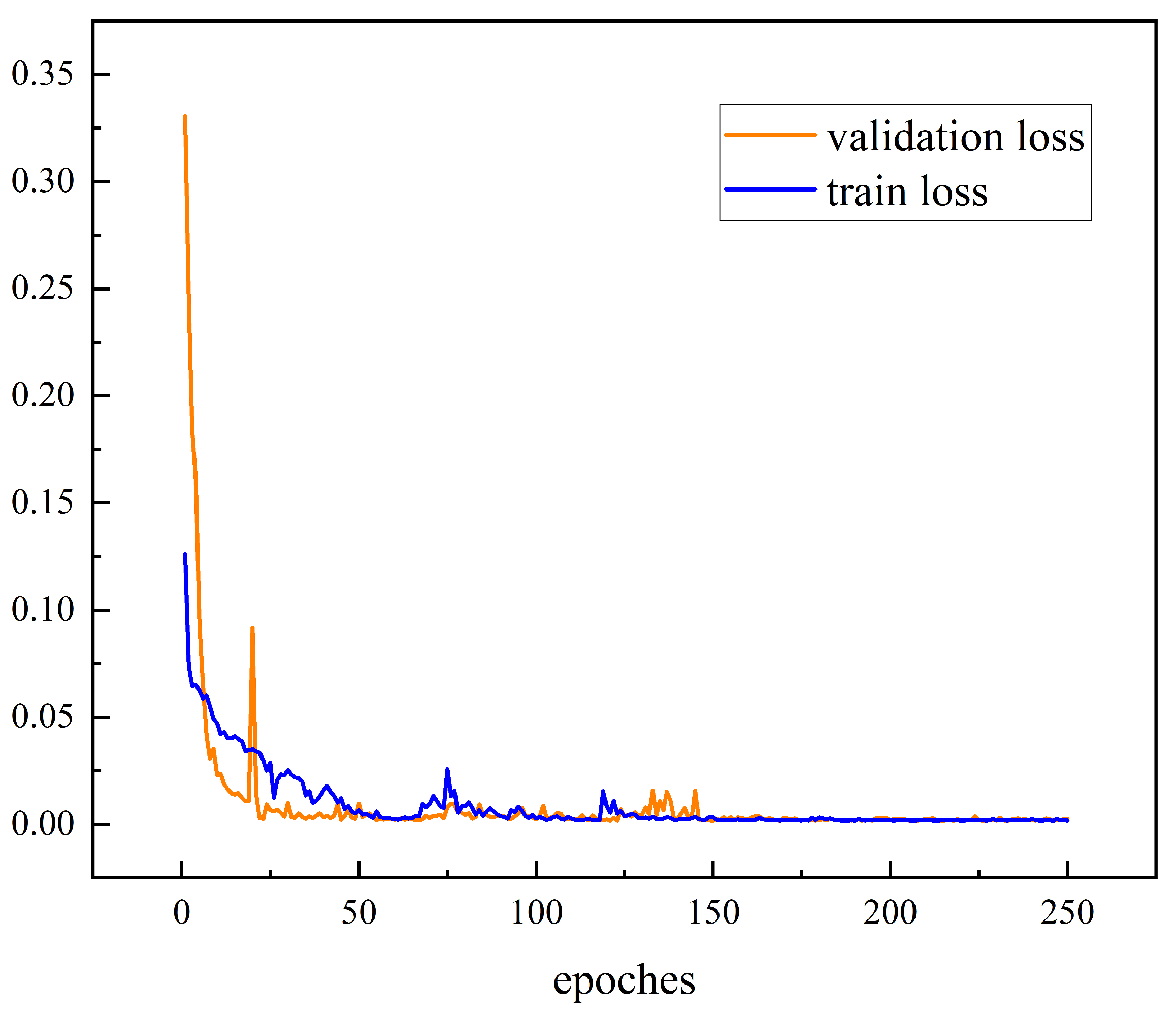

3.2. Loss Assessment

3.3. Ablation Experiment

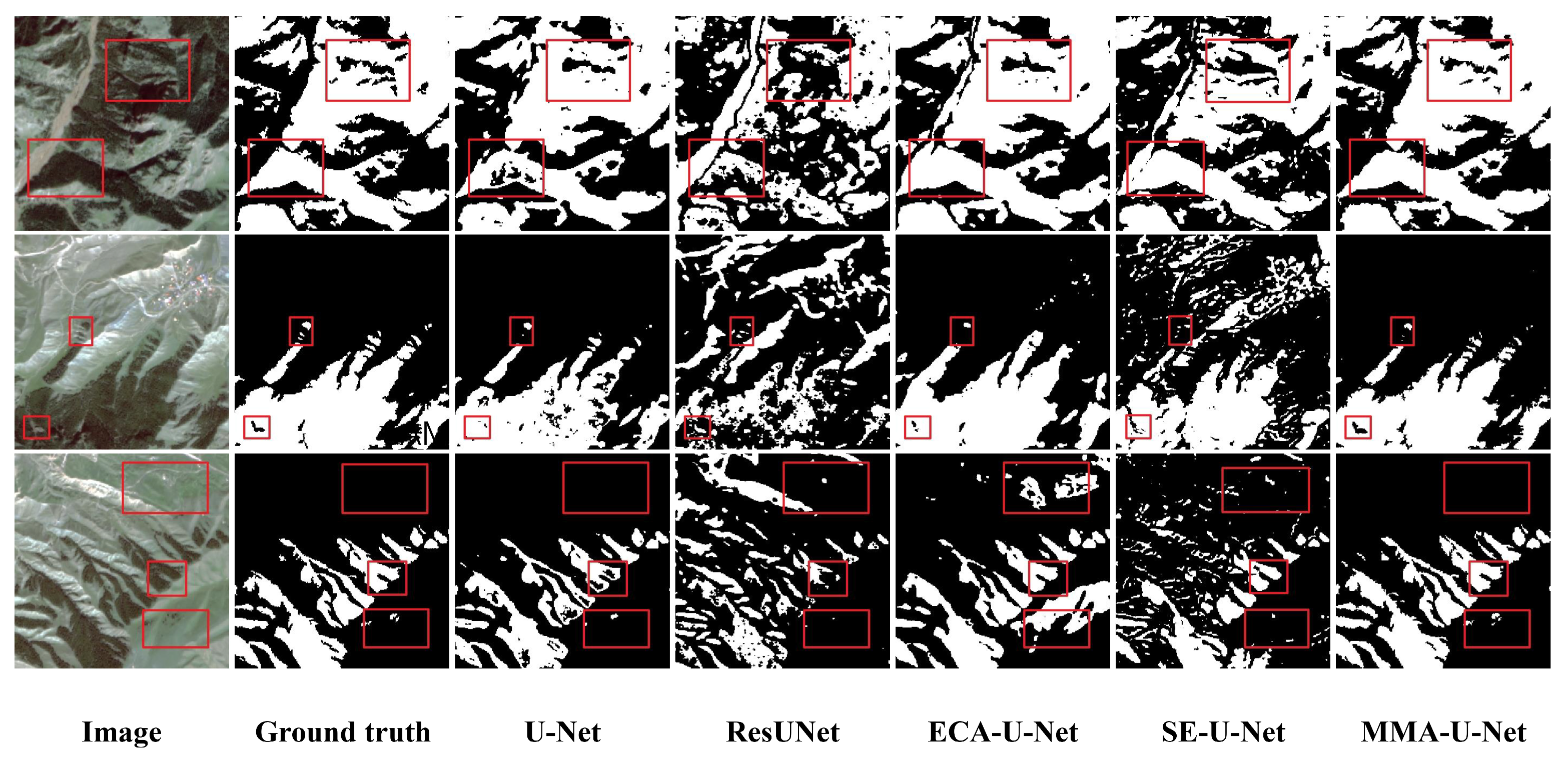

3.4. Comparison of Different Methods

3.5. Picea schrenkiana var. tianschanica Distribution

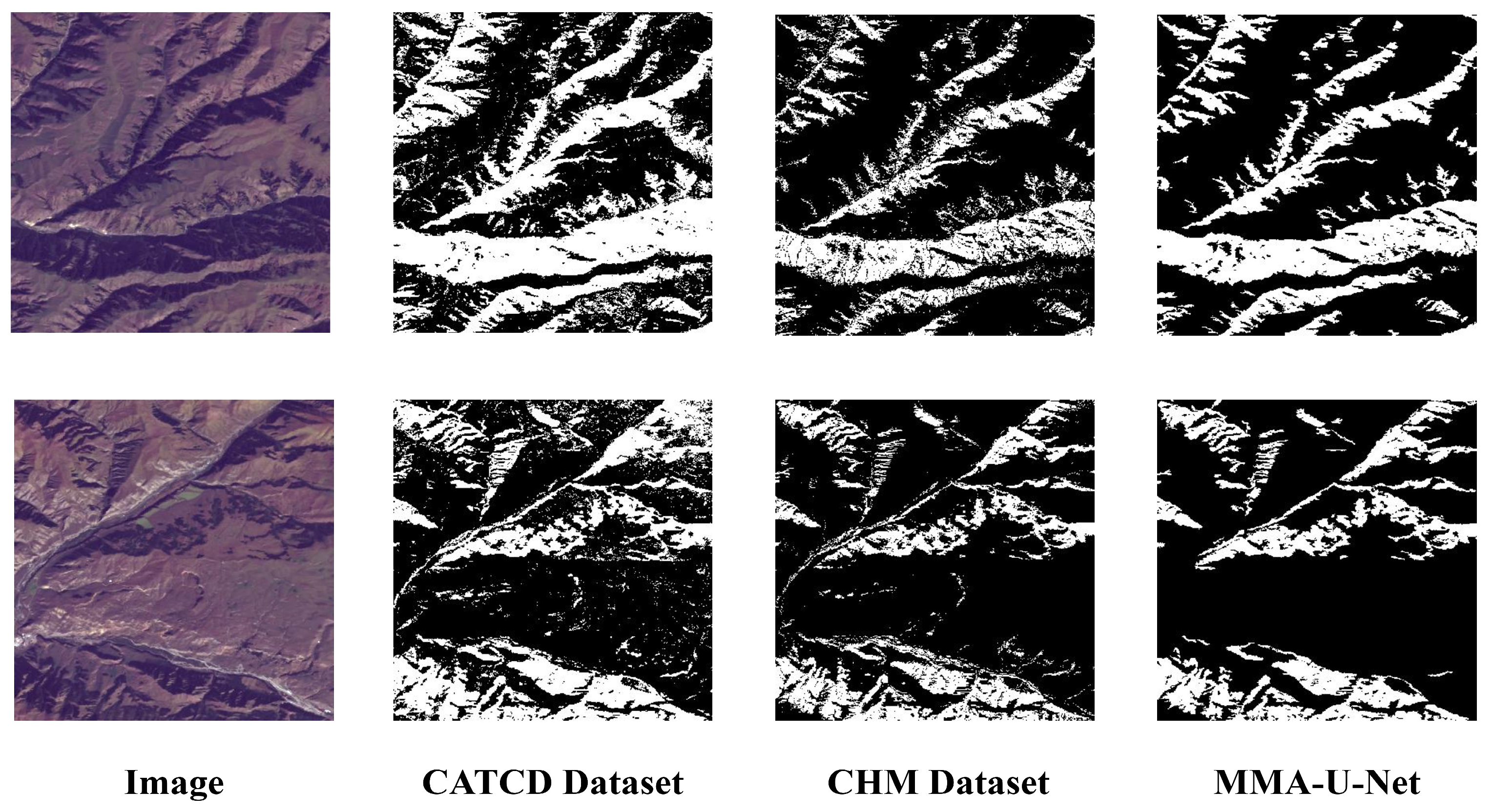

4. Discussion

4.1. Model Evaluations

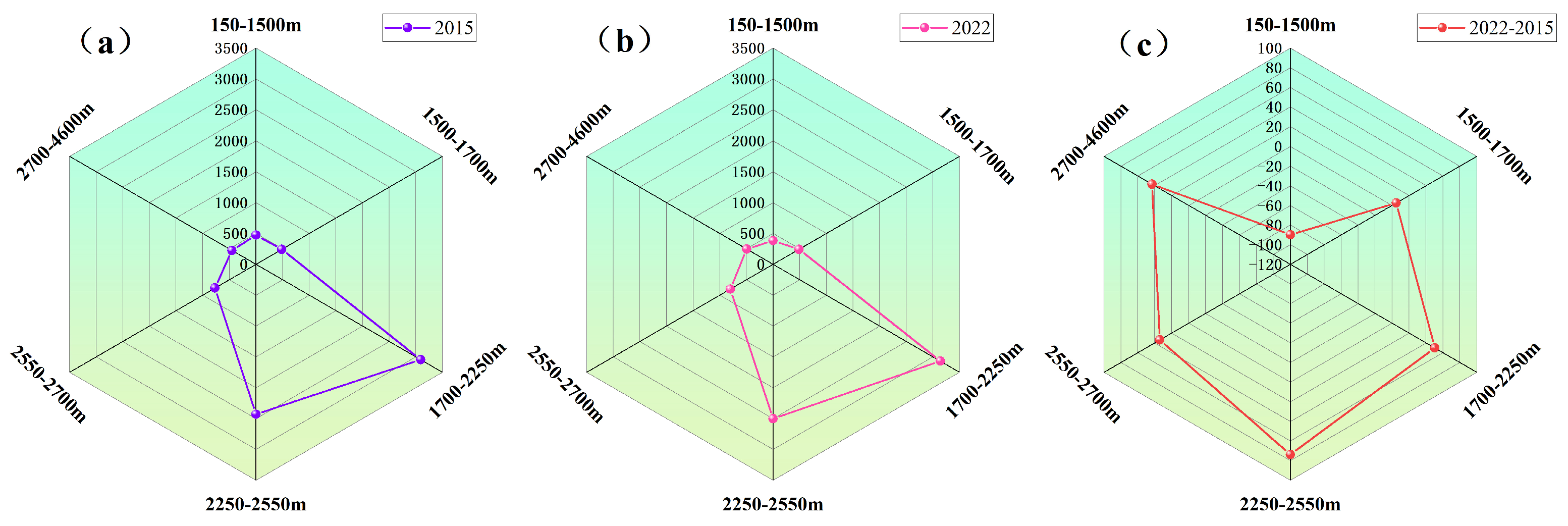

4.2. Analysis of Spatial Distribution and Change in Picea schrenkiana var. tianschanica

4.3. Deficiencies and Prospects

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| CBAM | DCA | Accuracy/% | Recall/% | Precision/% | F1 Score/% | mIOU/% |
|---|---|---|---|---|---|---|
| × | × | 81.61 | 79.80 | 77.80 | 77.69 | 70.8 |
| √ | × | 87.98 | 88.44 | 88.56 | 88.57 | 72.6 |
| × | √ | 88.18 | 88.18 | 88.11 | 88.06 | 72.4 |
| √ | √ | 93.06 | 92.22 | 93.26 | 93.89 | 81.8 |
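The ablation rows above can be read as gains over the plain U-Net baseline (the row with neither CBAM nor DCA). The following is a minimal sketch of that arithmetic in Python, using only the accuracy and mIOU values copied from the table; the dictionary layout and the function name `gains_over_baseline` are illustrative assumptions, not part of the original study.

```python
# Accuracy and mIOU (in %) copied from the ablation table above.
# Keys are (uses_cbam, uses_dca); this layout is an illustrative assumption.
ABLATION = {
    (False, False): {"accuracy": 81.61, "miou": 70.8},
    (True, False): {"accuracy": 87.98, "miou": 72.6},
    (False, True): {"accuracy": 88.18, "miou": 72.4},
    (True, True): {"accuracy": 93.06, "miou": 81.8},
}


def gains_over_baseline(table):
    """Return per-configuration gains, in percentage points, over the baseline row."""
    baseline = table[(False, False)]
    gains = {}
    for config, scores in table.items():
        if config == (False, False):
            continue  # the baseline has no gain over itself
        gains[config] = {
            name: round(value - baseline[name], 2) for name, value in scores.items()
        }
    return gains


GAINS = gains_over_baseline(ABLATION)
for (cbam, dca), delta in GAINS.items():
    print(
        f"CBAM={cbam!s:5} DCA={dca!s:5} "
        f"accuracy +{delta['accuracy']:.2f} pp, mIOU +{delta['miou']:.2f} pp"
    )
```

Read this way, adding both modules raises accuracy by 11.45 points, compared with 6.37 (CBAM only) and 6.57 (DCA only). For mIOU the combined gain of 11.0 points exceeds the sum of the individual gains (1.8 + 1.6), whereas for accuracy it falls slightly below the sum.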

| Model | Accuracy/% | Recall/% | Precision/% | F1 Score/% | mIOU/% | Speed/(it·s⁻¹) |
|---|---|---|---|---|---|---|
| U-Net | 81.61 | 79.80 | 77.80 | 77.69 | 71.8 | 7.01 |
| SE-U-Net | 82.77 | 81.50 | 80.97 | 83.76 | 72.9 | 6.86 |
| ResU-Net | 73.22 | 72.14 | 70.61 | 73.54 | 72.7 | 6.98 |
| ECA-U-Net | 87.64 | 80.49 | 83.29 | 81.92 | 72.8 | 4.98 |
| MMA-U-Net | 93.06 | 92.22 | 93.26 | 93.89 | 81.8 | 1.28 |
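The metric columns in the tables above (accuracy, recall, precision, F1 score, mIOU) are standard pixel-wise segmentation scores. As a hedged illustration, the sketch below computes them from a binary confusion matrix in plain Python. It assumes a two-class setting (target species versus background), flattened 0/1 masks, and mIOU taken as the mean of the two per-class IoU values; the paper's exact formulas and averaging scheme are not reproduced here, and all function names are hypothetical.

```python
from typing import Dict, Sequence


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, int]:
    """Count TP, FP, FN, TN for flattened binary masks (assumes labels are 0 or 1)."""
    if len(y_true) != len(y_pred):
        raise ValueError("masks must contain the same number of pixels")
    counts = {"tp": 0, "fp": 0, "fn": 0, "tn": 0}
    for truth, pred in zip(y_true, y_pred):
        if truth == 1 and pred == 1:
            counts["tp"] += 1
        elif truth == 0 and pred == 1:
            counts["fp"] += 1
        elif truth == 1 and pred == 0:
            counts["fn"] += 1
        else:
            counts["tn"] += 1
    return counts


def _ratio(numerator: float, denominator: float) -> float:
    """Safe division that returns 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def segmentation_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Standard pixel-wise scores as fractions in [0, 1]; multiply by 100 for %."""
    c = confusion_counts(y_true, y_pred)
    tp, fp, fn, tn = c["tp"], c["fp"], c["fn"], c["tn"]
    precision = _ratio(tp, tp + fp)
    recall = _ratio(tp, tp + fn)
    iou_target = _ratio(tp, tp + fp + fn)
    iou_background = _ratio(tn, tn + fp + fn)
    return {
        "accuracy": _ratio(tp + tn, tp + fp + fn + tn),
        "precision": precision,
        "recall": recall,
        "f1": _ratio(2 * precision * recall, precision + recall),
        # Assumption: mIOU is the unweighted mean over the two classes.
        "miou": (iou_target + iou_background) / 2,
    }


# Toy example: 8 pixels, 1 = target class, 0 = background.
truth_mask = [1, 1, 1, 0, 0, 0, 0, 1]
predicted_mask = [1, 1, 0, 0, 0, 1, 0, 1]
METRICS = segmentation_metrics(truth_mask, predicted_mask)
print({name: round(value, 3) for name, value in METRICS.items()})
```

In the toy example there are 3 true positives, 1 false positive, 1 false negative and 3 true negatives, so accuracy, precision, recall and F1 all come out at 0.75 and mIOU at 0.6.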


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zheng, J.; Chen, D.; Zhang, H.; Zhang, G.; Zhen, Q.; Liu, S.; Zhang, N.; Zhao, H. Remote Sensing Identification of Picea schrenkiana var. tianschanica in GF-1 Images Based on a Multiple Mixed Attention U-Net Model. Forests 2024, 15, 2039. https://doi.org/10.3390/f15112039
